Abstract

A significant challenge for many visually impaired people is they cannot be entirely independent and are restricted by their vision. They face problems with such actions and object detection should be an essential feature they can rely on a regular basis. Object detection is applied to discover objects in the real world from an image of the world, like chairs, bicycles, tables, or doors, that are normal in the scenes of a blind predicated on their places. Computer vision (CV) involves the automated extraction, understanding, and analysis of valuable information from a sequence of images or a single image. Machine learning (ML) and deep learning (DL) are significant and robust learning architectures broadly established, especially for CV applications. This study proposes a novel Advanced Object Detection for Smart Accessibility using the Marine Predator Algorithm to aid visually challenged people (AODSA-MPAVCP) model. The main intention of the AODSA-MPAVCP model is to enhance object detection techniques using advanced models for disabled people. Initially, the image pre-processing stage applies adaptive bilateral filtering (ABF) to eliminate the unwanted noise in input image data. Furthermore, the proposed AODSA-MPAVCP model utilizes the YOLOv10 model for object detection. Moreover, the feature extraction process employs the VGG19 method to transform raw data into meaningful and informative features. The deep belief network (DBN) technique is used for the classification process. Finally, the marine predator algorithm (MPA)-based hyperparameter selection process is performed to optimize the classification results of the DBN technique. The experimental evaluation of the AODSA-MPAVCP approach is examined under the Indoor object detection dataset. The performance validation of the AODSA-MPAVCP approach portrayed a superior accuracy value of 99.63% over existing models.

Similar content being viewed by others

Introduction

Globally, there are 285 million individuals who have vision impairment. Visually impaired people (VIPs) generally have complications walking and avoiding difficulties in their everyday lives1. Conventionally, such individuals utilize white cane to identify complications in front of them. Consequently, VIPs are unable to precisely recognize what kind of impediments are in front of them and should only based on guide canes and experiences to walk carefully and in the required path2. Moreover, in unknown settings, VIPs frequently need support in the form of volunteers to instruct them on the environmental conditions. Based on a guide cane, VIPs cannot become usual with their settings or react promptly to unforeseen conditions3. Thus, making novel solutions is vital to help VIPs execute these jobs effectively and quickly and stimulate them to be involved with their social setting. However, technological progressions have permitted the aid of those living in tragic conditions4. Therefore, VIPs can now accomplish everyday routines like walking over the streets and navigating buildings. Owing to novel technological progressions and its wide variety of applications, object detection (OD) has continuously gained more attention over recent years5. Amongst the multiple components and projects that have accelerated the development of OD models, the formation of deep convolution neural networks (DCNN) and GPU processing power deserve unique detection6. The OD method operates exactly as a child could learn in school. Children are skilled by learning the terms of diverse shapes and objects in school. He can recognize each relevant object he has already learned once he has conquered each object7. As a child learns, the machine should be entirely skilled in utilizing various Machine Learning (ML) models by inserting each object name. An effectual object identification method will accurately and quickly yield the object’s name. Recently, a few approaches have been proposed to help VIPs find objects in their settings8. Multiple investigation models are utilized, like Deep Learning (DL), Artificial Intelligence (AI), and ML models, to assist VIPs in recognizing their settings and avoiding complications9. Over the past years, with the progress in ML and DL, further investigators have explored the domain of smart assistive solutions for individuals with blindness or visual impairment10.

This study proposes a novel Advanced Object Detection for Smart Accessibility using the Marine Predator Algorithm to aid visually challenged people (AODSA-MPAVCP) model. The main intention of the AODSA-MPAVCP model is to enhance object detection techniques using advanced models for disabled people. Initially, the image pre-processing stage applies adaptive bilateral filtering (ABF) to eliminate the unwanted noise in input image data. Furthermore, the proposed AODSA-MPAVCP model utilizes the YOLOv10 model for object detection. Moreover, the feature extraction process employs the VGG19 method to transform raw data into meaningful and informative features. The deep belief network (DBN) technique is used for the classification process. Finally, the marine predator algorithm (MPA)-based hyperparameter selection process is performed to optimize the classification results of the DBN technique. The experimental evaluation of the AODSA-MPAVCP approach is examined under the Indoor object detection dataset.

Literature review

Kadam et al.11 developed a real-world edge-based risky object detection (OD) method that utilizes a lightweight DL technique for categorizing objects acquired by a camera. The method can substantially enhance their independence and safety by alerting and identifying visually impaired people to risky objects in their settings. Furthermore, the investigation supports the development of studies on DL-based OD methods that can change multiple domains over aiding technology. Arifando et al.12 developed an exact and lightweight bus recognition technique depending on an upgraded version of the YOLO-v5 technique. Incorporating the C3Ghost and GhostConv Modules into the YOLO-v5 technique is presented to mitigate the number of floating-point operations per second (FLOPs) and parameters, safeguarding recognition precision while decreasing the parameter models. Eventually, a slim-scale recognition technique will be introduced by altering the novel YOLO-v5 framework to make the method faster and more effective, which is crucial for real-world OD applications. In13, a technique is recommended as an intellectual method that can provide a blind individual with the capability to guide in an unknown setting, both externally and internally, by focusing on sound. These IoT-based smart techniques aim to develop a self-determined gadget utilizing an evolution board and a few sensors to offer full navigational assistance to the individual. The smart techniques are employed as a single-shot multiple-object detection (SSD) model for real-world OD. Alahmadi et al.14 developed an updated YOLO-v4 Resnet101 as backbone systems experienced on several object modules to aid VIPs in navigating their setting. Even though the Darknet, with a backbone employed in YOLO-v4, the ResNet-101 backbone in YOLOv4 Resnet101 provides a deeper and more potent feature extraction system. The ResNet-101’s better ability allows the best performance of complex visual patterns that raise the OD precision. Bhalekar and Bedekar15 developed an image captioning method that creates specified captions and eliminates text from an image. To remove the image features, the projected technique utilizes CNN, succeeded by LSTM, and makes equivalent sentences depending on the learned features of an image. Moreover, the text extraction component, which integrates the image captions and description, is demonstrated in audio form. In16, an enhanced OD approach depending on DNN is developed that utilizes a structure similar to the SSD. In the projected approach, an upgraded ResNet-50 technique is employed to improve the feature extractor system’s data transmission and expression ability. Simultaneously, the MCIE method is used to eliminate the contextual data of the indoor setting to enhance the indoor OD consequence. Wang et al.17 intend to attain a deep vision of the challenges and performance of 3D OD approaches under challenging sequences for AD. The complicated driving settings and the perception limitations of mainstream sensors (camera and LIDAR) are examined. Then, the challenges and performance of single-modality three-dimensional OD models are investigated. Consequently, to enhance the robustness and accuracy of three-dimensional OD approaches in a few complicated AD environments, the fusion of L-C (LIDAR-camera) is suggested and systematically examined.

Materials and methods

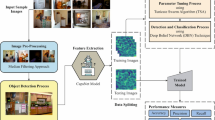

In this study, a novel AODSA-MPAVCP model is proposed. The main intention of the proposed AODSA-MPAVCP model is to enhance object detection techniques using advanced models for disabled people. It involved image pre-processing, object detection, backbone feature extraction, classification, and parameter selection. Figure 1 exemplifies the overall flow of the AODSA-MPAVCP approach.

Image pre-processing

At first, the image pre-processing stage applies ABF to eliminate the unwanted noise in input image data. ABF is a successful pre-processing model for object detection, as it enhances the robustness and accuracy of detection methods by decreasing noise while maintaining important image details such as edges18. Object detection particularly smooths areas with related intensity or colour, guaranteeing that object boundaries endure sharper and more clearly defined. This is important for precisely localizing and identifying objects, particularly in noisy or lower-contrast settings. Adjusting the filtering method derived from local image possessions improves the detection procedure, making it stronger for lighting and background noise changes. Therefore, ABF aids enhance the performance of object detection methods, resulting in more reliable and accurate object detection.

YOLOv10 model

Afterwards, the proposed AODSA-MPAVCP model designs the YOLOv10 model for the object detection process. YOLO-V10 symbolizes an essential development in the You Only Look Once (YOLO) series, recognized for its end-to-end, real-world object detection abilities19. Like the newer form in the family of YOLO, YOLO-V10 relies on the achievement of prior versions (V1-V9), presenting new improvements which enhance either efficiency or accuracy, making it mainly appropriate for applications like improving availability for visually impaired people. The structure of YOLO-V10 combines a unified dual allocation approach for NMS (Non‐Maximum Suppression)‐free training that minimizes computation efficiency and enhances the inference speed without cooperating recognition qualities. These are main advances over earlier YOLO variants, as they remove the requirement for NMS throughout inference that might present latency YOLO-v10’s concentrate on dropping inference time, whereas preserving higher precision makes it incredibly efficient for real‐world applications. The pipeline detector in YOLO-V10 starts by handling the input image over a progressive network of the backbone, which removes feature representations. The neck unit combines These characteristics at numerous scales, successfully combining the information before passing it to the head unit. The head unit makes numerous class predictions and bounding boxes for all objects. Unlike previous versions of YOLO, YOLO-V10 carries out object detection without NMS throughout inference, which aids in decreasing computing time and enhances real‐world performance.

An essential structural progress in YOLO-V10 is its usage of an improved version of the CSPNet (Cross-Stage Partial Network) that enhances gradient propagation and decreases computational redundancies. These outcomes result in higher extraction of the feature, which assists the method in preserving computation complexity while improving performance. The neck unit in YOLO-V10 also incorporates a PANet (Path Aggregation Network) layer that assists efficient multiple‐scale feature fusion, enhancing detection precision through objects of different dimensions. The YOLO-V10 head module contains dual configurations: a pair of multi‐heads and single‐heads throughout inference. The multi-head configuration makes numerous predictions for all objects in training, giving complete supervisory signals and enhancing learning precision. On the other hand, the single-head configuration provides a single optimum prediction per object in inference, eliminating the requirement for NMS and lessening latency, thus improving the complete efficacy of the method. For classification tasks, YOLO-V10 is adjusted to utilize only the backbone network, which is directly associated with classification heads. This efficient model uses the backbone’s progressive abilities of feature extraction, neglecting the detection and neck head that decreases computational complexity and preserves the real‐world implementation benefits of YOLO-V10. These flexibilities in the structure make YOLO-V10 extremely effective for object detection and flexible for other tasks such as classification. Figure 2 depicts the architecture of YOLOv10.

Feature extraction process

The feature extraction process is followed by the VGG19 method to transform raw data into a set of meaningful and informative features. VGG-19 contains 19 layers, 16 convolutional layers, and three fully connected (FC) layers20. All neural layers of this general image classification model utilize \(\:3\text{x}3\) filters. The VGG-19 method, pre-trained on the ImageNet dataset, acts as the backbone for feature extraction in this presented method. Numerous modifications were applied for tailoring the technique:

-

1.

Input layer adjustment: The VGG19 method’s input layer is adjusted to take resized images of \(\:224\text{x}224\) pixels, guaranteeing consistency with the dataset while preserving the reliability of the new architecture.

-

2.

Refinement of the convolutional layers: Although the convolutional layers are initialized with weights from the pre-trained method, these layers are adjusted. This procedure includes unfreezing the past fewer convolutional layers to permit the technique to learn particular features that might vary from overall image classifications in the ImageNet dataset.

-

3.

Custom classification head: It is added to the VGG-19 method, substituting the new FC layers. This head contains a sequence of dense layers accompanied by a softmax activation function tailored to output possibilities for the particular classes in the dataset (for example, benign, malignant, and normal). The last layer’s number of neurons equals the number of groups in this classification task.

-

4.

Dropout regularization: Dropout layers were presented between the dense layers of the traditional classification head to alleviate overfitting. This version enhances the model’s generalizability by arbitrarily neutralizing a neuron’s fraction in training.

-

5.

Modified loss function: A definite cross-entropy loss function appropriate for multiple class classification tasks is utilized. This selection permits improved processing of the unbalanced dataset while enhancing the model’s performance.

These particular versions of the pre-trained VGG-19 method allow it to learn successfully, utilizing its deep feature extraction abilities while aligning it with the requests of the presented application. Reducing the dimensions to the CNN method is required to calculate the main parameters throughout feature extraction.

Classification using the DBN model

The DBN technique is deployed for the classification process. DBN is fundamentally a probability-based generative method, a multi-layer perceptive network stacked with RBM (Restricted Boltzmann Machine), focusing on a layer-by-layer greedy learning model21. The DBN training is separated into dual stages: An unsupervised pre-training stage is initially accompanied by a supervised backward adjustment stage observed as a BP session. The RBM is divided into a hidden layer (HL) and a visible layer (VL); there are omnidirectional relationships between the nodes in the HL and VL, and the nodes inside the layers are separate. In training, nodes inside the VL attain features from the dataset and are distributed to the HL. Formerly, the nodes inside the HL were multiplied by the weights to get the bias and add it to the outcome. The output is handled by the activation function and given to the following HL again. This hierarchic learning model enhances DBN’s learning efficacy and improves the method’s multi-feature extraction ability.

The function of likelihood distribution \(\:p(V,\:H)\) during the pre-training stage is presented in (1).

Whereas \(\:E\left(V,\:H\right)\) denotes energy function, and \(\:N\) signifies normalization constant.

The energy function \(\:E\left(V,\:H\right)\) of the hidden and visible layer nodes is.

Here, \(\:{a}_{i}\) and \(\:{b}_{j}\) refer to the deviation of the VL and HL, \(\:{V}_{i}\) and \(\:{H}_{j}\:\)denote the node of the VL and HL, and \(\:{W}_{ij}\) is the joining weight amongst the hidden and visible layers.

The normalization constant \(\:N\) represents each node’s energy amount amongst the hidden and visible layers.

During this unsupervised learning process, the\(\:\:\text{l}\text{o}\text{g}\:\)-log-probability function is the main of the method and considers the learning capability of the model.

Whereas \(\:l\) signifies the amount of each training data.

In the training process of RBM, the CD (Contrastive Divergence) model is applied to upgrade the weights \(\:{W}_{ij}\) and deviations \(\:{a}_{i},\) \(\:{b}_{j}\) of the hidden and visible layers. The CD model is exposed in Eqs. (5)-(7).

Whereas \(\:\theta\:\) signifies the learning rate, \(\:(\cdot\:{)}_{Label}\) represents the real data value, and \(\:(\cdot\:{)}_{Predici}\) represents the predicted data value.

When the pre-training procedure, the reverse fine-tuning starts. During this method, the model is upgraded regularly depending on the weights \(\:{W}_{ij}\), the node bias \(\:{b}_{j}\) of the HL, and the loss function \(\:{E}_{Loss}\). The equation is presented in Eqs. (8) and (9).

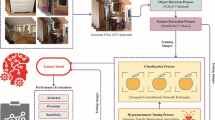

Hyperparameter tuning using MPA

Eventually, the MPA-based hyperparameter selection process is performed to optimize the classification results of the DBN model. The newly made MPA optimizer is the basis for the optional model process22. The MPA is a modern approach stimulated by the effective marine predator’s movements, though they hunt for prey. One phenomenal characteristic of MPA is that either prey or predators are considered searching members. The standard approaches to determine the movement of the predator approach are Brownian and Levy-based movements. The speed ratio of prey-to‐predator is an essential feature in the MPA model, transitioning the optimizer procedure among stages. The basic stages in the MPA are as demonstrated:

-

1)

Initialization procedure: Prey and Elite are two mathematical methods. The prey method utilizes a random variable location uniformly distributed above the presented region. Meanwhile, the placement vector by the better fitness function is iterated by Elite mathematical models.

-

2)

Stage no. 1 (the initial one-third of the repetitions): During the sample, the predator rests even, whereas the prey moves using the Brownian model, where the prey follows the following relationships to upgrade their positions using Eqs. (10) and (11):

$$\:{S}_{i}={R}_{B}\times\:\left(Elit{e}_{i}-{R}_{B}\times\:{Z}_{i}\right),i=\text{1,2},\dots\:,n$$(10)$$\:{Z}_{i}={Z}_{i}+0.5R\times\:{S}_{i}$$(11)Here, \(\:{S}_{i}\) signifies step size, while \(\:{R}_{B}\) represents the Brownian motion vector, and \(\:R\) is a randomly generated number vector amongst \(\:\left[\text{0,1}\right].\)

-

3)

Stage no.2 (the second one-third of repetitions): The predator uses Brown’s motion while the prey utilizes Levy’s. Dual sub-sections, the primary of which utilizes (12) and the next that utilizes (13), represent the population currently. Conversely, the succeeding locations are upgraded in the following subset utilizing (14) and (15). During this, the concepts of MPA and its process are offered utilizing mathematic methods:

$$\:{S}_{i}={R}_{L}\times\:\left(Elit{e}_{i}-{R}_{L}\times\:{Z}_{i}\right),i=\text{1,2},\dots\:,\frac{n}{2}$$(12)$$\:{Z}_{i}={Z}_{i}+0.5R\times\:{S}_{i}$$(13)Meanwhile, \(\:{R}_{L}\) refers to indiscriminate values based on Lévy’s motion distribution.

$$\:{S}_{i}={R}_{B}\times\:\left({R}_{B}\times\:Elit{e}_{i}-{Z}_{i}\right),i=\text{1,2},\dots\:,\frac{n}{2}$$(14)$$\:{Z}_{i}=Elit{e}_{i}+0.5CF\times\:{S}_{i}$$(15)Whereas,

$$\:CF=(1-\frac{t}{{t}_{\text{m}\text{a}\text{x}}}{)}^{2\frac{t}{{t}_{\text{m}\text{a}\text{x}}}}$$(16)Here, \(\:CF\) is predator step size, while \(\:t\) and \(\:{t}_{\text{m}\text{a}\text{x}}\) represent present and maximal values for repetitions.

-

4)

Stage no.3 (final one-third of the repetitions): During this phase, the predator applies Levy’s motion, and (17) and (18) are used to upgrade the prey.

$$\:{S}_{i}={R}_{L}\times\:\left({R}_{L}\times\:Elit{e}_{i}-{Z}_{i}\right),i=\text{1,2},\dots\:,n$$(17)$$\:{Z}_{i}=Elit{e}_{i}+0.5CF\times\:{S}_{i}$$(18) -

5)

Finishing procedure: Finally, in all repetitions, the desirable location is reserved in the Elite method, which offers the desirable location at the end.

The MPA generates a fitness function (FF) that improves classification performance by optimizing candidate solutions. It minimizes the classification error rate to achieve the best outcome. Its mathematical formulation is represented in Eq. (19).

$$\:fitness\left({x}_{i}\right)=ClassifierErrorRate\left({x}_{i}\right)=\frac{number\:of\:misclassified\:samples}{Total\:number\:of\:samples}\times\:100$$(19)

Experimental validation

In this part, the investigational validation of the AODSA-MPAVCP methodology is examined under the Indoor object detection dataset23. The dataset contains 6642 counts below ten class labels, as exposed in Table 1. Figure 3 portrays the sample images.

Figure 4 established the classifier results of the AODSA-MPAVCP methodology. Figure 4a and b displays the confusion matrix with correct recognition and classification of each class under 70%TRPH and 30%TSPH. Figure 4c exhibits the PR values, demonstrating superior performance over every class label. Simultaneously, Fig. 4d demonstrates the ROC values, establishing proficient results with better values of ROC for different class labels.

Table 2; Fig. 5 represent the object detection of the AODSA-MPAVCP approach below 70%TRPH and 30%TSPH. The outcomes imply that the AODSA-MPAVCP approach accurately identified the samples. With 70%TRPH, the AODSA-MPAVCP technique presents an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l},\:\) \(\:{F}_{measure}\) and \(\:AU{C}_{score}\:\)of 99.49%, 87.56%, 81.62%, 83.23%, and 90.63%, correspondingly. Besides, with 30%TSPH, the AODSA-MPAVCP technique presents an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l},\:\) \(\:{F}_{measure}\) and \(\:AU{C}_{score}\:\)of 99.63%, 95.31%, 89.96%, 92.15%, and 94.85%, respectively.

Figure 6 illustrates the training (TRA) \(\:acc{u}_{y}\) and validation (VAL) \(\:acc{u}_{y}\) analysis of the AODSA-MPAVCP approach. The \(\:acc{u}_{y}\:\)analysis is computed across an interval of 0–25 epochs. The figure highlights that the TRA and VAL \(\:acc{u}_{y}\) analysis exhibitions exhibit a rising tendency, indicating the AODSA-MPAVCP approach’s capacity with maximal performance across multiple iterations. This is followed by the TRA and VAL \(\:acc{u}_{y}\) leftovers closer across the epochs, which identifies inferior overfitting and exhibitions maximum performance of the AODSA-MPAVCP technique, guaranteeing reliable prediction on unseen samples.

Figure 7 illustrates the TRA loss (TRALOS) and VAL loss (VALLOS) curves of the AODSA-MPAVCP technique. The loss values are calculated within the range of 0–25 epochs. The TRALOS and VALLOS analysis exemplifies a decreasing trend, informing the capacity of the AODSA-MPAVCP technique to balance a trade-off between generalization and data fitting. The continuous reduction in loss values promises higher outcomes of the AODSA-MPAVCP methodology and tunes the prediction results over time.

Table 3; Fig. 8 study the comparison outcomes of the AODSA-MPAVCP approach with existing approaches24,25,26. The results highlight that the Hough Line + SVM, DCN-VGG-19, NB (NB), YOLOv5n, and YOLOv51 methods have reported lower performance. Meanwhile, the GLCM + LVQ and YOLOv5x approaches have attained closer outcomes. Furthermore, the AODSA-MPAVCP technique attained maximal performance with higher \(\:pre{c}_{n}\), \(\:rec{a}_{l},\) \(\:acc{u}_{y},\:\)and \(\:{F}_{measure}\) of 95.31%, 89.96%, 99.63%, and 92.15%, correspondingly.

Table 4; Fig. 9 demonstrate the mAP@0.5 outcome of the AODSA-MPAVCP model with existing models. The results imply that the AODSA-MPAVCP model gets better performance. Depend on mAP@0.5, the BEADL-EDCHD approach attains higher value of 78.83%, whereas the Hough Line + SVM, GLCM + LVQ, DCN-VGG-19, YOLOv5x, NB, YOLOv5n, and YOLOv51 have attained lesser values of 72.90%, 65.61%, 67.91%, 69.73%, 72.27%, 66.94%, and 70.80%, respectively.

Table 5; Fig. 10 illustrates the computational time (CT) analysis of the AODSA-MPAVCP approach with existing methods. The results show that the AODSA-MPAVCP approach attains a relatively fast CT of 4.38 s, outperforming the existing models. The Hough Line + SVM method exhibits a CT of 6.85 s, showing a moderate processing time. The GLCM + LVQ method depicts a higher CT of 10.86 s, while the DCN-VGG-19 model illustrates a CT of 7.34 s. YOLOv5x has a CT of 9.77 s, and the NB approach portrays a CT of 9.17 s. YOLOv5n and YOLOv51 attains higher CT values, at 14.58 s and 10.90 s, susequently, showing the more complex computations involved in their operations. Despite these variations, the AODSA-MPAVCP approach outperforms with the lowest CT, exhibiting improved efficiency.

Conclusion

In this study, a novel AODSA-MPAVCP model is proposed. The main intention of the proposed AODSA-MPAVCP model is to enhance object detection techniques using advanced models for disabled people. Initially, the image pre-processing stage applies ABF to eliminate the unwanted noise in input image data. Furthermore, the AODSA-MPAVCP model utilizes the YOLOv10 model for object detection. Moreover, the feature extraction process uses the VGG19 method to transform raw data into meaningful and informative features. The DBN technique is employed for the classification process. Finally, the MPA-based hyperparameter selection process is performed to optimize the classification results of the DBN technique. The experimental evaluation of the AODSA-MPAVCP approach is examined under the Indoor object detection dataset. The performance validation of the AODSA-MPAVCP approach portrayed a superior accuracy value of 99.63% over existing models.

Data availability

The data that support the findings of this study are openly available at https://www.kaggle.com/datasets/thepbordin/indoor-object-detection.

References

Prashar, D., Chakraborty, G. & Jha, S. Energy efficient laser based embedded system for blind turn traffic control. J. Cybersecur. Inform. Manage. 2 (2), 35–43 (2020).

Pydala, B., Kumar, T. P. & Baseer, K. K. Smart_Eye: a navigation and obstacle detection for visually impaired people through smart app. J. Appl. Eng. Technological Sci. (JAETS). 4 (2), 992–1011 (2023).

Rahman, M. M., Islam, M. M., Ahmmed, S. & Khan, S. A. Obstacle and fall detection to guide the visually impaired people with real time monitoring. SN Comput. Sci. 1(4), 219 (2020).

Farooq, M. S. et al. IoT enabled intelligent stick for visually impaired people for obstacle recognition. Sensors 22(22), 8914 (2022).

Rajwani, R., Purswani, D., Kalinani, P., Ramchandani, D. & Dokare, I. Proposed system on object detection for visually impaired people. Int. J. Inf. Technol. (IJIT) 4(1), 1–6 (2018).

Manjari, K., Verma, M. & Singal, G. A survey on assistive technology for the visually impaired. Internet Things 11, 100188 (2020).

Meshram, V. V., Patil, K., Meshram, V. A. & Shu, F. C. An astute assistive device for mobility and object recognition for visually impaired people. IEEE Trans. Human-Machine Syst. 49 (5), 449–460 (2019).

Islam, R. B., Akhter, S., Iqbal, F., Rahman, M. S. U. & Khan, R. Deep learning based object detection and surrounding environment description for visually impaired people. Heliyon, 9(6). (2023).

Islam, M. M., Sadi, M. S., Zamli, K. Z. & Ahmed, M. M. Developing walking assistants for visually impaired people: A review. IEEE Sens. J. 19 (8), 2814–2828 (2019).

El-Taie, M. & Kraidi, A. Y. Enhancing information fusion from UAV-Captured High-Altitude infrared imagery through machine learning. Full Length Article 4(2), 33–3 (2024).

Kadam, U. et al. Hazardous object detection for visually impaired people using edge device. SN Comput. Sci. 6 (1), 1–13 (2025).

Arifando, R., Eto, S. & Wada, C. Improved YOLOv5-based lightweight object detection algorithm for people with visual impairment to detect buses. Appl. Sci. 13(9), 5802 (2023).

Gupta, P., Shukla, M., Arya, N., Singh, U. & Mishra, K. Let the blind see: an AIIoT-based device for real-time object recognition with the voice conversion. In Machine Learning for Critical Internet of Medical Things: Applications and Use Cases 177–198 (Springer International Publishing, 2022).

Alahmadi, T. J., Rahman, A. U., Alkahtani, H. K. & Kholidy, H. Enhancing object detection for vips using yolov4_resnet101 and text-to-speech conversion model. Multimodal Technol. Interact. 7(8), 77 (2023).

Bhalekar, M. & Bedekar, M. D-CNN: a new model for generating image captions with text extraction using deep learning for visually challenged individuals. Eng. Technol. Appl. Sci. Res. 12 (2), 8366–8373 (2022).

Ni, J., Shen, K., Chen, Y. & Yang, S. X. An improved ssd-like deep network-based object detection method for indoor scenes. IEEE Trans. Instrum. Meas. 72, 1–15 (2023).

Wang, K., Zhou, T., Li, X. & Ren, F. Performance and challenges of 3D object detection methods in complex scenes for autonomous driving. IEEE Trans. Intell. Veh. 8 (2), 1699–1716 (2022).

Gollamandala, U. B., Midasala, V. & Ratna, V. R. FPGA implementation of hybrid recursive reversable box filter-based fast adaptive bilateral filter for image denoising. Microprocess. Microsyst. 90, 104520 (2022).

Arifando, R., Eto, S., Tibyani, T. & Wada, C. January. Improved YOLOv10 for visually impaired: balancing model accuracy and efficiency in the case of public transportation. In Informatics (Vol. 12, No. 1, 7). (MDPI, 2025).

Ponraj, A. et al. A multi-patch-based deep learning model with VGG19 for breast cancer classifications in the pathology images. Digit Health 11, 20552076241313160 (2025).

Duan, X. et al. Simulation Study of Deep Belief Network-Based Rice Transplanter Navigation Deviation Pattern Identification and Adaptive Control. Appl. Sci. 15(2), 790 (2025).

Hassan, A. et al. Optimal cascade 2DOF fractional order master-slave controller design for LFC of hybrid microgrid systems with EV charging technology. Results Eng. 25, 103647 (2025).

https://www.kaggle.com/datasets/thepbordin/indoor-object-detection

Utaminingrum, F., Johan, A. W. S. B., Somawirata, I. K., Shih, T. K. & Lin, C. Y. Indoor staircase detection for supporting security systems in autonomous smart wheelchairs based on deep analysis of the Co-occurrence matrix and binary classification. Intell. Syst. Appl. 200405. (2024).

Lee, K. & Jeon, C. Small tool image dataset and object detection approach for indoor construction site safety. KSCE J. Civ. Eng. 27 (3), 930–939 (2023).

Pokuciński, S. & Mrozek, D. Object detection with YOLOv5 in indoor equirectangular panoramas. Procedia Comput. Sci. 225, 2420–2428 (2023).

Acknowledgements

The authors extend their appreciation to the King Salman center For Disability Research for funding this work through Research Group no KSRG-2024- 441.

Author information

Authors and Affiliations

Contributions

Mahir Mohammed Sharif Adam: Conceptualization, methodology, validation, investigation, writing—original draft preparation, fundingHussah Nasser AlEisa: Conceptualization, methodology, writing—original draft preparation, writing—review and editingSamah Al Zanin: methodology, validation, writing—original draft preparationRadwa Marzouk: software, validation, data curation, writing—review and editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Adam, M.M.S., AlEisa, H.N., Zanin, S.A. et al. Advanced object detection for smart accessibility: a Yolov10 with marine predator algorithm to aid visually challenged people. Sci Rep 15, 20759 (2025). https://doi.org/10.1038/s41598-025-04959-5

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-04959-5