Abstract

Cervical cancer is a major cause of mortality among women, particularly in low-income countries with insufficient screening programs. Manual cytological examination is time-consuming, error-prone and subject to inter-observer variability. Automated and robust classification of the whole slide cytology images for cervical cancer is essential for detecting precancerous and malignant lesions. We propose a novel deep learning framework, the Compound Scaling Hypergraph Neural Network model (CSHG-CervixNet), for robust classification of cervical cancer subtypes. The model integrates a Compound Scaling Convolutional Neural Network (CSCNN) with a k-dimensional Hypergraph Neural Network (kd-HGNN) architecture. CSCNN balances the network’s depth, width, and resolution, supporting effective feature representation with minimal computational overhead. kd-HGNN captures higher-order relationships between the features, and its propagation mechanism ensures better feature diffusion across distant nodes. The model is evaluated on the benchmark Sipakmed dataset and achieves an accuracy of 99.31%, with a macro-averaged precision of 98.97%, recall of 99.38%, and F1-score of 99.34%, demonstrating its robustness in cervical cancer subtype classification. Pathologists and other medical experts will find this study helpful in distinguishing cervical cancer subtypes so that targeted treatment may be provided and effective disease management is made possible.

Introduction

Cervical Cancer (CC) ranks as the fourth most prevalent cancer affecting women globally and accounts for a significant portion of cancer-related deaths1,2,3,4. According to 2020 statistics, cervical cancer accounted for an estimated 600,000 or more new cases and about 340,000 deaths5,6,7. Projections suggest that by 2030, cervical cancer-related deaths could rise to 400,000 annually. In CC cytology, cells are commonly categorised into five subtypes: Metaplastic, Dyskeratotic, Koilocytotic, Superficial-Intermediate and Parabasal8,9,10. Accurate recognition and classification of these subtypes are crucial for cancer diagnosis and personalised treatments11.

Traditionally, cervical cancer subtyping relies on manual analysis of Whole Slide Images (WSIs) by expert pathologists, which is error-prone, subjective and labour-intensive9,12,13. Subtypes tend to have visual variations, which makes them challenging to classify even for skilled pathologists. Furthermore, staining artifacts, overlapping cell features, and intra-class variations complicate the classification task and can cause diagnostic inaccuracies14. Therefore, an automated Computer-Aided Diagnostic (CAD) tool to classify cervical cancer subtypes from WSIs is essential for improving accuracy, reducing workload, and facilitating early and precise treatment planning15,16.

Many deep-learning techniques have shown considerable promise in detecting various cancers, such as lung, breast, cervical, and colorectal17,18,19. In recent studies, Convolutional Neural Networks (CNNs) form the basis of several CAD systems for evaluating cervical cytology images, significantly increasing diagnostic efficiency and precision20,21,22,23,24. However, although CNNs effectively extract discriminative features, they often struggle to capture complex, multi-relational interactions among features25,26,27,28. Transformer-based architectures have recently gained attention for their powerful self-attention mechanisms in modelling global contexts, especially for medical image applications29,30. They are, however, associated with drawbacks such as model complexity, large data requirements, and lack of interpretability, which matter in resource-poor clinical environments. Even though they have demonstrated promising results, they likely lack the fine-grained, higher-order feature modelling capabilities that are crucial for tasks such as cytological subtype classification.

To address the limitations of existing approaches, recent work has explored Graph Convolutional Networks (GCNs), which seek to reduce the inherent shortfalls of traditional approaches by modelling pairwise interactions31,32,33. However, standard GCNs are restricted to pairwise (2-node) connections, whereas Hypergraph Neural Networks (HGNNs) have gained increased attention by enabling multi-way relational modelling through hyperedges. Even so, many conventional HGNNs still use static structures and cannot handle higher-order correlations in large, heterogeneous datasets25,31,32,33,34. To overcome these issues, we propose a hybrid subtype classification framework, the Compound Scaling Hypergraph Neural Network (CSHG-CervixNet). The model combines efficient deep feature extraction by a Compound Scaling Convolutional Neural Network (CSCNN) with a modified k-dimensional Hypergraph Neural Network (kd-HGNN) for robust classification. We adopt CSCNN as the backbone for feature extraction; it uniformly scales depth, width and resolution, enabling the model to extract deep, multi-scale features from whole slide cytology images. The kd-HGNN models higher-order relationships among the extracted features through hypergraph construction based on neighbourhood similarity, ensuring comprehensive feature aggregation and precise classification. By embedding a feature propagation mechanism in the hypergraph architecture, we model the diffusion of feature information between local and global relational contexts and capture the inter-cellular dependencies inherent in cytological images more accurately. The model is trained and evaluated on the publicly available SipakMed dataset and is designed to address the nuanced morphological variances across subtypes, supporting accuracy and scalability.

The major contributions of this study are threefold:

- Compound Scaling Convolutional Neural Network for deep feature extraction: CSCNN is utilised to extract the deep features from cervical whole slide cytology images. The uniform scaling of depth, width and resolution ensures an efficient feature extraction process, capturing intricate morphological variations crucial for subtype classification.

- Development of K-dimensional Hypergraph Neural Network for classification: A robust kd-HGNN architecture that effectively models higher-order relationships among the extracted feature vectors. The hyperedges are based on neighbourhood similarity. The kd-HGNN facilitates comprehensive feature aggregation, thereby enhancing classification accuracy.

- A comprehensive evaluation of the SipakMed dataset: Extensive experiments are conducted on the benchmark SipakMed cervical cytology dataset. The results demonstrate that the proposed hybrid framework outperforms the baseline methods in cervical cancer subtype classification tasks.

The article is structured as follows: “Related works” explains the detailed literature review based on Machine Learning, Deep Learning, and hybrid techniques. “Proposed CSHG-CervixNet architecture” introduces the proposed methodology. “Experimental analysis and discussion” presents the experimental results and discussion. “Conclusion” summarises the conclusion and future work.

Related works

Machine Learning (ML) and Deep Learning (DL) techniques have significantly advanced cervical cancer diagnosis. These techniques have been used to improve automated diagnostic models’ accuracy, dependability, and interpretability while addressing issues including cytological image variability, the requirement for strong feature extraction and robust classification. This section examines the existing studies in three main areas: (1) ML approaches, which prioritise feature engineering and conventional classification algorithms; (2) DL methods, which employ CNNs and complex architectures to achieve increased accuracy and generalizability; and (3) ensemble and hybrid models, which integrate various approaches to enhance performance and robustness. This review contextualises and illustrates the development of techniques by evaluating various approaches.

Deep learning-based frameworks

A ResNet-based autoencoder35 with an attention mechanism was proposed for cervical cell classification, achieving an accuracy of 99.26%. CervixFormer36, a cervical pap smear image classification model based on the Swin Transformer, achieved an accuracy of 98.29%. Muksimova et al.37 proposed a Reinforcement Learning (RL) based ResNet-50 that utilises supporter blocks to highlight essential feature information and a meta-learning ensemble to improve segmentation accuracy. Another model, CerviLearnNet38, automates cervical cancer diagnosis by combining RL with a modified EfficientNetV2 model. A CNN with four convolutional layers39 was used to categorise cervical cells into five groups using the SipakMed dataset, with an accuracy of 91.13%. Bhatt et al.40 proposed a convolutional cervical pap smear image classification model utilising a progressive resizing technique that demonstrated an accuracy of 99.70%. Lin et al.41 employed a pre-trained CNN architecture to extract essential features of CC pap smear images; these features were classified using an SVM classifier, achieving an accuracy of 94.5%. Rehman et al.42 argue that transfer learning is a useful technique for resolving the issues of overfitting and excessive parameter correlation. Chen et al.43 used DenseNet121 to improve the classification rate of lightweight CNNs for cervical cell categorisation and achieved a classification accuracy of 96.79%. Mohammed et al.44 utilised a pre-trained DenseNet169 and attained a classification accuracy of 99% for five-class cervical pap smear images. Using ViT-CNN and CNN-LSTM, Maurya et al.45 introduced a computer-aided diagnostic system for classifying cervical cell subtypes, which attained an accuracy of 97.65%. Hemalatha et al.46 combined features from a vision transformer and a pre-trained DenseNet201 to extract both local and global features of cervical cell images; fuzzy feature selection was then applied to the combined features, and the model attained an accuracy of 98.13%. Attallah47 proposed the CerCanNet model, which integrates ResNet18 and a Quadratic Support Vector Machine (QSVM) for pap smear cervical image classification and attains an accuracy of 96.3% on the SipakMed dataset.

Machine learning-based models

Several ML-based approaches have been explored for cervical cancer diagnosis. Nour et al.48 proposed a recent approach for CC diagnosis using the Gazelle Optimisation Algorithm (GOA); it uses an improved MobileNetV3 architecture for feature extraction and a Stacked Extreme Learning Machine (SELM) for classification. A two-phase classification model49 based on the HErlev dataset achieved 98.80% accuracy. The approach extracted texture features from the nucleolus and cytoplasm, followed by classification through an optimised multilayer feed-forward neural network. Cao et al.50 employed DenseNet169 in a technique that combines an RCNN architecture with an attention pyramid network, and the model attained an accuracy of 95.08%. However, this procedure required medical experts to manually annotate labels and bounding boxes, which was time-consuming. CerviXpert, proposed by Akash et al.51, utilises a deep CNN for cervical pap smear image subtype classification and achieved accuracies of 98.04% and 98.60% for three-class and five-class classifications, respectively. Liu et al.20 proposed the CVM-Cervix framework for cervical pap smear image classification, which combines Xception and a Multilayer Perceptron (MLP) and attained an accuracy of 92.87%. Integrating a Stacked Autoencoder with Generative Adversarial Networks (SOD-GAN) has been explored to facilitate lesion detection and classify cervical cell images into premalignant and malignant categories. Another study52 addressed segmentation and feature extraction issues with an accuracy of 97.08%. To enhance the accuracy of the cervical cancer prediction model, Ijaz et al.53 used outlier removal techniques such as DBSCAN and iForest. Shi et al.54 utilised a Graph Convolution Network (GCN) for cervical pap smear classification, which attained an accuracy of 98.37%.

Hybrid models

Hybrid and ensemble models are becoming more popular for cervical cancer diagnosis, grading and subtype classification. The CompactVGG model in55 demonstrated classification accuracies of 97.80% and 94.81% on the HErlev and SipakMed datasets, respectively. Huang et al.56 utilised DenseNet-121, VGG-16, ResNet-50, and Inception v3 on various datasets, including HErlev and SipakMed; DenseNet-121 achieved the highest accuracy, 95.33%. Dong et al.5 proposed a hybrid model called BiNext-Cervix for cervical cancer subtyping, which combines ConvNext and BiFormer and achieves an accuracy of 83.51%. Chauhan et al.57 utilised the progressive resizing technique with Principal Component Analysis (PCA) to classify cervical pap smear images and attained an accuracy of 98.97% for five-class classification and 99.29% for two-class classification. Wu et al.58 combined a CNN and a transformer in a model named CTCNet, which attained an accuracy of 97.74%. Xu et al.59 explored object detection algorithms, including CenterNet, Faster R-CNN, and YOLOv5, for cervical cancer detection. However, the tolerance of these models to high variability and their scalability to larger datasets remain challenging. Chauhan et al.60 utilised a hybrid network for cervical whole slide image classification and attained accuracies of 97.45% and 99.49% on the SipakMed and LBP datasets, respectively. Table 1 summarises various state-of-the-art cervical cancer subtype classification techniques using pap smear images.

Earlier works have explored graph-based models in medical image analysis, but these models rely mostly on simple graph constructions and traditional CNNs. In contrast, our proposed architecture introduces a CSCNN to perform effective deep feature extraction combined with a k-dimensional hypergraph model to capture higher-order, non-pairwise relationships of features. With this, more complicated and semantic interactions can be simulated.

Proposed CSHG-CervixNet architecture

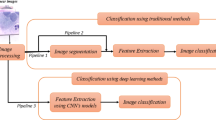

Figure 1 illustrates the overall working methodology diagram. The proposed hybrid model CSHG-CervixNet is trained and validated using the SipakMed cervical cancer cytology images. The model integrates a Compound Scaling Convolutional Neural Network (CSCNN) for feature extraction and a k-dimensional-based Hypergraph Neural Network (kd-HGNN) for robust classification.

Data set description

The SipakMed database contains 4049 images of individual cells extracted from 966 group cell images obtained from whole slide images. These images were captured using a CCD camera mounted on an optical microscope. The cell images are sorted into five classes spanning three categories: normal, abnormal, and benign. Normal cells are classified into the “Superficial-Intermediate” and “Parabasal” classes, whereas abnormal cells, which are not malignant, are sorted into the “Koilocytotic” and “Dyskeratotic” classes. Benign cells form the “Metaplastic” class. The dataset distribution is represented in Fig. 2. Figure 3 shows the subtypes of cervical cancer.

Feature extraction using compound scaling convolutional neural network (CSCNN)

The histopathological images from the SipakMed dataset serve as input, from which deep features are extracted using a Compound Scaling Convolutional Neural Network. Unlike existing deeper CNN architectures such as DenseNet and ResNet, our feature extraction model utilises a compound scaling method. The compound scaling method scales the network’s depth \(\hat{d}\), width \(\hat{w}\), and resolution \(\hat{r}\) uniformly using a compound coefficient \(\phi\). This ensures effective scalability and efficiency, calculated using the following Eqs. (1–3).
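As a sketch of Eqs. (1–3), assuming the standard compound-scaling form in which each dimension is scaled by a constant raised to the compound coefficient (written \(\phi\) here), the relations would read:

```latex
\begin{align}
\hat{d} &= \alpha^{\phi} \tag{1} \\
\hat{w} &= \beta^{\phi} \tag{2} \\
\hat{r} &= \gamma^{\phi} \tag{3}
\end{align}
```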

where \(\alpha\) scales the depth (number of layers), \(\beta\) scales the width (number of channels per layer) and \(\gamma\) scales the input resolution (height and width of input images). \(\phi\) is the user-defined coefficient. In our model, the base network is scaled with \(\phi = 1\), yielding a 1.2× deeper network, 1.1× wider channels, and 1.15× larger input resolution compared to the baseline CNNs.
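The scaling factors quoted above can be checked with a few lines of Python. This is a minimal sketch, assuming the constants 1.2, 1.1 and 1.15 for depth, width and resolution that the stated multipliers imply when the coefficient equals 1. The function names `compound_scale` and `scale_network` and the baseline dimensions (16 layers, 32 channels, 224-pixel input) are hypothetical and only illustrate the arithmetic.

```python
import math

def compound_scale(phi, alpha=1.2, beta=1.1, gamma=1.15):
    """Return (depth, width, resolution) multipliers for compound coefficient phi."""
    return alpha ** phi, beta ** phi, gamma ** phi

def scale_network(base_layers, base_channels, base_resolution, phi):
    """Apply the multipliers to hypothetical baseline dimensions."""
    d, w, r = compound_scale(phi)
    return (math.ceil(base_layers * d),    # round depth up to whole layers
            round(base_channels * w),      # nearest whole channel count
            round(base_resolution * r))    # nearest whole pixel

depth_mult, width_mult, res_mult = compound_scale(phi=1)
# Hypothetical baseline, not the authors' actual configuration.
scaled = scale_network(base_layers=16, base_channels=32, base_resolution=224, phi=1)
```

Larger coefficients grow all three dimensions together, which is the point of compound scaling: no single dimension is scaled in isolation.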

The overall architecture of the CSCNN model is illustrated in Fig. 4. The architectural illustration shows a complete overview of the model, including the configuration of each layer, image size, stride and the most important functional components. CSCNN comprises 17 layers, with feature extraction eventually leading to the Global Average Pooling (GAP) layer. The GAP layer pools spatial information from the final feature maps into a low-dimensional feature vector well suited for the subsequent classification tasks. The model architecture initiates with a Conv2d layer, which preserves the spatial dimensions of the input feature maps by dynamically adjusting padding when stride \(s = 1\). The size of the output of the convolutional layer is defined by
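A sketch of Eq. (4), assuming the standard convolution output-size relation; the symbols \(n_{in}\), \(n_{out}\) and \(p\) (input size, output size and padding) are introduced here for illustration and are not taken from the original:

```latex
n_{out} = \left\lfloor \frac{n_{in} + 2p - f}{s} \right\rfloor + 1 \tag{4}
```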

where the padding size is calculated as \((f-1)/2\) based on the kernel size \(f\). After convolution, the feature maps are normalised by Batch Normalisation (BN). BN normalises the activations for every mini-batch, making the training process stable and faster. The normalised output has a mean of 0 and a variance of 1 and is followed by learnable scaling and shifting operations. Equation (5) computes the batch normalisation output \(\mathbf{Y}\).
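A sketch of Eq. (5), assuming the standard batch-normalisation form and treating \(\sigma\) as the mini-batch variance, as the definitions that follow state:

```latex
\mathbf{Y} = \gamma \, \frac{\mathbf{X} - \mu}{\sqrt{\sigma + \delta}} + \beta \tag{5}
```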

In this case, \(\mathbf{X}\) is the input, while \(\mu\) and \(\sigma\) are the input’s mean and variance, respectively. \(\gamma\) and \(\beta\) are the learnable parameters, and \(\delta\) is a small constant that prevents division by zero. The core building block of the CSCNN is the Mobile Inverted Bottleneck Convolution block (MBConv block), which integrates five essential operations: depth-wise convolution, projection, a Squeeze and Excitation (SE) module, expansion, and Swish activation. The expansion step checks the expansion factor \(E\): if \(E\) is greater than 1, the input is expanded using a \(1\times 1\) convolution. A Depth-wise Separable Convolution (DSC) is then applied, using one convolutional filter per input channel with stride \(s\) and kernel size \(f\), so that convolution is performed independently over each input channel. The output feature map for depthwise convolution is mathematically represented as:

where \(\mathbf{f}_{dw}\) is the depthwise kernel applied per channel. Equation (7) is utilised to determine the output size of this layer.

Two fully connected layers (expansion and reduction) and a squeeze operation (global average pooling) are applied in the squeeze and excitation (SE) module to recalibrate channel-wise feature responses. The number of output channels after excitation is reduced by using a ratio factor \(\omega\), given by:

Equation (8) defines the output channels \(C_{out}\) of the SE module, where \(c_{in}\) denotes the number of input channels. The SE module recalibrates features using the sigmoid activation function. The output is reduced to the required number of channels by a final \(1\times 1\) pointwise convolution. The swish activation function used in the block is defined in Eq. (9).
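For reference, the swish activation is commonly written as the input multiplied by its sigmoid. Assuming that standard form, Eq. (9) would read:

```latex
\text{Swish}(x) = x \cdot \text{sigmoid}(x) = \frac{x}{1 + e^{-x}} \tag{9}
```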

The model analyses the input image using MBConv blocks and convolutional layers, gradually extracting higher-level features at each step. MBConv blocks collect more complex patterns and correlations in the data, while the first convolution layers capture low-level features. It efficiently extracts essential features using SE blocks, expands and projects convolutions, and depthwise separable convolutions.

The CSCNN model systematically improves feature representation in successive layers. Early convolutional layers handle low-level features (e.g., edges, textures), and high-level semantic patterns (e.g., cellular structures) are formed in deeper MBConv blocks. Combining depthwise separable convolutions, expansion-projection mechanisms, and SE blocks enables cost-effective feature extraction while ensuring parameter efficiency. Using compound scaling, CSCNN attains a favourable trade-off between performance and model complexity and is thus suitable for histopathological image analysis.

K-dimensional hypergraph neural network (kd-HGNN) with propagation for classification

The features extracted by CSCNN are subsequently processed by kd-HGNN. Relationships between sets of objects are represented by hypergraphs, which makes it possible to simulate complex interactions in a variety of fields, including computer science, data mining, social network analysis, and combinatorial optimisation. Unlike conventional graph-based models that rely on pairwise relationships, kd-HGNNs represent complex multi-node relationships, making them suitable for histopathological image classification. However, standard HGNN has limitations such as losing higher-order feature interactions and suboptimal graph construction techniques. To address these issues, we employ kd-HGNN, enhancing feature representation and classification accuracy.

A hypergraph is defined as \(\mathbf{G}=(\mathcal{V},\epsilon,\mathbf{W})\), which consists of a vertex set \(\mathcal{V}\), a hyperedge set \(\epsilon\), and a hyperedge weight matrix \(\mathbf{W}\). A hypergraph \(\mathbf{G}\) can be represented as a \(|\mathcal{V}|\times|\epsilon|\) incidence matrix \(\mathbf{H}\) whose entries are specified as
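A sketch of Eq. (10), assuming the usual definition of a hypergraph incidence matrix, in which an entry is 1 when vertex \(v\) belongs to hyperedge \(e\):

```latex
h(v, e) =
\begin{cases}
1, & \text{if } v \in e \\
0, & \text{if } v \notin e
\end{cases} \tag{10}
```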

Equation (10) specifies the incidence matrix \(\mathbf{H}\). The features of \(N\) images in our classification task can be expressed as \(\mathbf{X}=[\mathbf{x}_{1},\mathbf{x}_{2},\ldots,\mathbf{x}_{N}]\), where \(\mathbf{x}_{i}\) is the feature vector of the \(i\)-th image. Each image is treated as a vertex in the hypergraph, and hyperedges are created between the vertices using the extracted feature vectors. The k-dimensional hypergraph is constructed based on the distance between feature vectors: the k-nearest neighbours of each feature vector are determined using the Euclidean distance to generate the hyperedges. The Euclidean distance \(d\) between two feature vectors \(\mathbf{p}\) and \(\mathbf{q}\), represented as points in \(n\)-space, can be computed by Eq. (11)
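A sketch of Eq. (11), assuming the standard Euclidean distance between two \(n\)-dimensional vectors:

```latex
d(\mathbf{p}, \mathbf{q}) = \sqrt{\sum_{i=1}^{n} (q_i - p_i)^2} \tag{11}
```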

where \(q_{i}\) and \(p_{i}\) are the \(i\)-th components of the Euclidean vectors \(\mathbf{q}\) and \(\mathbf{p}\), which start from the origin of the space (initial point). Each hyperedge weight is initialised to 1, so the weights are represented by a diagonal matrix. Equation (12) specifies the diagonal matrix representation of the weights.
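The construction described above can be sketched in plain Python. This is a minimal illustration, assuming that each vertex and its \(k\) nearest neighbours form one hyperedge (a common convention for kNN hypergraphs that the text does not confirm) and that all hyperedge weights equal 1. The function names `euclidean` and `knn_incidence` and the toy feature vectors are hypothetical.

```python
import math

def euclidean(p, q):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((qi - pi) ** 2 for pi, qi in zip(p, q)))

def knn_incidence(features, k):
    """Build a |V| x |E| incidence matrix with one hyperedge per vertex.

    Hyperedge j contains vertex j and its k nearest neighbours (assumption).
    """
    n = len(features)
    H = [[0] * n for _ in range(n)]
    for j, centre in enumerate(features):
        # Sort all other vertices by distance to the centre vertex.
        others = sorted((i for i in range(n) if i != j),
                        key=lambda i: euclidean(features[i], centre))
        for i in [j] + others[:k]:
            H[i][j] = 1          # vertex i belongs to hyperedge j
    return H

# Toy example: four 2-D "feature vectors" forming two tight pairs.
X = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
H = knn_incidence(X, k=1)
W = [1.0] * len(H[0])            # every hyperedge weight initialised to 1
```

With real 2304-dimensional features a vectorised distance computation would be preferable; the nested loops are kept here only to mirror the description step by step.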

The hypergraph convolution can be formulated as,
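A sketch of Eq. (13), assuming the widely used spectral hypergraph convolution, which is consistent with the degree matrices and parameters defined in the text that follows; the layer superscript \((l)\) is added for illustration:

```latex
\mathbf{X}^{(l+1)} = \sigma\!\left( \mathbf{D}_v^{-1/2} \, \mathbf{H} \, \mathbf{W} \, \mathbf{D}_e^{-1} \, \mathbf{H}^{\top} \, \mathbf{D}_v^{-1/2} \, \mathbf{X}^{(l)} \, \boldsymbol{\Theta}^{(l)} \right) \tag{13}
```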

Equation (13) is the mathematical representation of the hypergraph convolution operation, which propagates the features across the hypergraph structure. The node features at each layer are updated based on neighbouring node information through hyperedges. \(\mathbf{D}_{v}\) and \(\mathbf{D}_{e}\) are the diagonal matrices of vertex degrees and edge degrees, respectively, where \(\mathbf{D}_{v}=\sum_{e\in\epsilon}w(e)\mathbf{H}(v,e)\) and \(\mathbf{D}_{e}=\sum_{v\in\mathcal{V}}\mathbf{H}(v,e)\). \(\boldsymbol{\Theta}\) is the hypergraph propagation matrix and \(\sigma\) is a non-linear activation function, ReLU. The parameter learned during training is \(\boldsymbol{\Theta}\in\mathbb{R}^{C_{1}\times C_{2}}\), where \(C_{1}\) and \(C_{2}\) are the feature dimensions. A three-layer hypergraph neural network has been employed. Batch normalisation is used, and the hidden layer has 32 channels. The cross-entropy loss function is minimised during training using the Adam optimiser with a learning rate of 0.01.
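To make the propagation step concrete, the following plain-Python sketch applies one hypergraph convolution layer to a toy hypergraph. It assumes the standard HGNN propagation rule with symmetric vertex-degree normalisation and is not the authors' implementation. The helper names `matmul` and `hypergraph_conv` and all numeric values are hypothetical, and the code expects every vertex to lie in at least one hyperedge.

```python
def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def hypergraph_conv(X, H, w, Theta):
    """One layer: ReLU(Dv^-1/2 H W De^-1 H^T Dv^-1/2 X Theta)."""
    n_v, n_e = len(H), len(H[0])
    # Vertex degree: weighted count of hyperedges containing each vertex.
    dv = [sum(w[e] * H[v][e] for e in range(n_e)) for v in range(n_v)]
    # Edge degree: number of vertices in each hyperedge.
    de = [sum(H[v][e] for v in range(n_v)) for e in range(n_e)]
    # Left factor Dv^-1/2 H W De^-1, shape |V| x |E|.
    left = [[H[v][e] * w[e] / (de[e] * dv[v] ** 0.5) for e in range(n_e)]
            for v in range(n_v)]
    # Right factor H^T Dv^-1/2, shape |E| x |V|.
    right = [[H[v][e] / dv[v] ** 0.5 for v in range(n_v)] for e in range(n_e)]
    out = matmul(matmul(matmul(left, right), X), Theta)
    return [[max(0.0, value) for value in row] for row in out]   # ReLU

# Toy hypergraph: 3 vertices, 2 hyperedges, unit weights.
H = [[1, 0],
     [1, 1],
     [0, 1]]
w = [1.0, 1.0]
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]     # 2-D input features
Theta = [[1.0], [-1.0]]                      # maps 2 features to 1
Y = hypergraph_conv(X, H, w, Theta)
```

In training, `Theta` would be learned by minimising the cross-entropy loss; here it is fixed so that the propagation arithmetic can be followed by hand.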

Figure 5 illustrates the overall workflow of the kd-HGNN classification model, which consists of two hypergraph convolutional (HGConv) layers. The input to the first HGConv layer is the 2304-dimensional feature vector obtained using the CSCNN. Each HGConv layer is followed by the ReLU activation function and dropout with a rate of 0.5 to prevent overfitting. The output of the final HGConv layer is then passed through the fully connected layers, producing the final class probabilities via a SoftMax activation function. The model is trained using the Adam optimiser with a learning rate of 0.01 and weight decay of \(5\times 10^{-4}\). Training runs for 200 epochs, and performance is measured on the validation set using typical classification metrics such as accuracy and F1-score. The model uses fixed hyperparameters such as learning rate, dropout rate, and number of neighbours \(k\) in the hypergraph construction. However, it may exhibit sensitivity to hyperparameter tuning, and the performance could vary under different configurations.

Experimental analysis and discussion

The proposed CSHG-CervixNet was implemented on a workstation with a 12th Generation Intel i9 processor and 64 GB RAM. The model was evaluated comprehensively using both five-fold cross-validation and hold-out validation. A comparative analysis between the conventional Graph Convolutional Network (GCN) and the proposed CSHG-CervixNet was conducted under both validation settings. Further, an ablation study was performed to analyse the effect of the hypergraph propagation mechanism by comparing the performance of the model with and without the feature propagation module.

The essential features are extracted using the Compound Scaling Convolutional Neural Network, and t-SNE is employed to visualise the relationships between them. The visualisation explores the complex relationships and similarities among the various cervical cancer subtypes captured by the CSCNN features. As can be seen from Fig. 6, the features of the different classes form well-separated clusters. A hypergraph is then constructed from the features extracted by the CSCNN.

Figure 7 presents a sample hypergraph construction, where (a) represents \(k=6\) with 25 images, and (b) represents \(k=8\) with 25 images, both derived from the extracted image dataset.

Figure 8 shows the progressive construction of a k-Nearest Neighbours (KNN) graph with k = 10, showing increasing connectivity from 25% (2 neighbours per node) to 100% (10 neighbours per node).

At 25% connectivity, where each node has only two neighbours, the network is sparse and limits node interactions. As connectivity increases to 50% with five neighbours per node, previously isolated clusters merge, forming a more structured and cohesive network. When the connectivity reaches 75% with seven neighbours per node, the network becomes significantly denser, improving inter-node relationships and strengthening overall cohesion. Ultimately, the graph creates a completely connected structure with 100% connectivity, where every node has ten neighbours, guaranteeing optimal interconnectivity. This makes it possible for information to spread throughout the network effectively. The colour bar in the visualisation represents different node classes or other pertinent metrics, which offer information about the graph’s categorisation or clustering patterns. Figure 9a shows the complete kNN graph with t-SNE visualisation. The KNN distance distribution for k = 10 shows most Euclidean distances clustering around 0.045 (Fig. 9b).

Hold out validation results

For hold-out validation, a fixed partitioning approach was used to test the generalisation performance of the models. The dataset was split up into 70% training, 15% validation, and 15% test in a stratified manner to maintain class distribution and avoid sample overlap between subsets, thus preventing possible data leakage.
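The stratified 70/15/15 partition can be sketched as follows. This is a minimal illustration using only the standard library; the function name `stratified_split`, the random seed and the toy labels are assumptions, and the authors' actual splitting code is not given in the text.

```python
import random
from collections import defaultdict

def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=0):
    """Return disjoint (train, val, test) index lists with per-class proportions kept."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]     # remainder goes to the test set
    return train, val, test

# Toy labels: 5 classes with 100 samples each (not the real class counts).
labels = [c for c in range(5) for _ in range(100)]
train, val, test = stratified_split(labels)
```

Because each index is assigned to exactly one subset, no sample can appear in both the training and test sets, which is the leakage safeguard the paragraph describes.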

Using hold-out validation, a comparative analysis has been conducted between conventional GCN and the proposed CSHG-CervixNet model. Figure 10a illustrates the confusion matrix of the GCN. Figure 10b depicts the ROC curve for the GCN.

Figure 11a shows the proposed model’s confusion matrix, clearly showing each class’s true positive, false positive, true negative, and false negative values. Compared to GCN, the confusion matrix reveals few misclassifications, highlighting the proposed model’s robustness in classifying cervical whole slide images. Table 2 shows the performance metrics of the proposed model evaluated by hold-out validation. The five classes’ accuracy, F1-score, recall, precision, and specificity are calculated separately. Figure 11b shows the ROC Curve for the proposed CSHG-CervixNet.
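The class-wise metrics mentioned above follow directly from a confusion matrix. The sketch below assumes a one-vs-rest reading of each class and unweighted (macro) averaging; the function names `per_class_metrics` and `macro_average` are hypothetical, and the 3-class matrix is made up for illustration and is not taken from the reported results.

```python
def per_class_metrics(cm):
    """Compute one-vs-rest metrics per class from a square confusion matrix.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(n)) - tp
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        specificity = tn / (tn + fp) if tn + fp else 0.0
        results.append({"precision": precision, "recall": recall,
                        "f1": f1, "specificity": specificity})
    return results

def macro_average(results, key):
    """Unweighted mean of one metric over all classes."""
    return sum(r[key] for r in results) / len(results)

# Made-up 3-class confusion matrix, for illustration only.
cm = [[9, 1, 0],
      [0, 10, 0],
      [1, 0, 9]]
metrics = per_class_metrics(cm)
accuracy = sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))
macro_recall = macro_average(metrics, "recall")
```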

The L2 regularisation technique was used to limit the model’s complexity and promote generalisation to avoid overfitting during training. This involves penalising high weight magnitudes, providing smoother optimisation and ensuring balanced feature propagation. Normalisation techniques were also incorporated to stabilise learning dynamics and reduce sensitivity to internal covariate shifts, mitigating the risk of overfitting.

K-fold cross-validation results

To ensure the model’s robustness and generalizability, a fivefold cross-validation approach was utilised. First, the dataset was divided into five stratified folds to maintain the class distribution in each. One fold was kept aside as the test set in each iteration. The remaining four were divided into training and validation subsets, and 20% of the training fold was reserved for validation. The training set was used to train the model for 200 epochs in every fold, and the performance was tracked on the validation set. The parameters of the model that obtained the best validation score were saved and then tested on the respective test fold. Accuracy and F1-score performance metrics were calculated using a shared evaluator for every fold. This thorough cross-validation process guarantees an equitable evaluation of the model’s classifying ability for multiple data splits and reduces the overfitting potential inherent to hold-out validation. Figure 12a shows the confusion matrix of the conventional GCN, which demonstrates a moderate number of true positive detections, along with a significant number of false positives and negatives, especially in classes like Koilocytotic and Superficial_intermediate. Figure 12b shows the ROC curve of the GCN model. The overall accuracy attained by the GCN model is 98.96%.
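The fold structure described above can be sketched with the standard library alone. This is an illustration under stated assumptions: the function names `stratified_folds` and `cross_validation_splits` are hypothetical, the toy labels are not the real class counts, and the 20% validation subset is drawn at random from the four remaining folds rather than stratified, since the text does not specify how it was chosen.

```python
import random
from collections import defaultdict

def stratified_folds(labels, n_folds=5, seed=0):
    """Assign sample indices to n_folds folds, class by class, round-robin."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)
    folds = [[] for _ in range(n_folds)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for position, index in enumerate(indices):
            folds[position % n_folds].append(index)
    return folds

def cross_validation_splits(labels, n_folds=5, val_fraction=0.20, seed=0):
    """Yield (train, val, test) index lists, one triple per test fold."""
    folds = stratified_folds(labels, n_folds, seed)
    rng = random.Random(seed)
    for k in range(n_folds):
        test = folds[k]
        rest = [i for j, fold in enumerate(folds) if j != k for i in fold]
        rng.shuffle(rest)                      # random, non-stratified validation draw
        n_val = round(val_fraction * len(rest))
        yield rest[n_val:], rest[:n_val], test

labels = [c for c in range(5) for _ in range(40)]   # toy labels, 200 samples
splits = list(cross_validation_splits(labels))
```

In each iteration the model would be trained on `train`, the checkpoint with the best score on `val` would be kept, and that checkpoint would be scored once on `test`.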

Figure 13a shows the confusion matrix of the proposed CSHG-CervixNet, which yields considerably better true positive rates for all five classes. The reduced number of false positives and false negatives indicates a lower misclassification rate, highlighting the model’s improved accuracy and class discrimination capability. Figure 13b illustrates the ROC curve of the proposed model, highlighting its strong class-wise separability. The overall accuracy of CSHG-CervixNet is 99.31%, which outperforms the conventional GCN model.

The comparative analysis between the traditional GCN and the proposed CSHG-CervixNet model, as shown in Figs. 12 and 13, provides strong evidence of the superior classification performance achieved by the proposed CSHG-CervixNet. The class-wise performance metrics, such as Specificity, Precision, Recall, and F1-score for the proposed CSHG-CervixNet model, are tabulated in Table 3.

The comparative analysis of the performance metrics GCN vs. CSHG-CervixNet with hold-out and k-fold cross-validation is tabulated in Table 4.

The results show that CSHG-CervixNet outperforms the GCN model consistently in performance metrics such as accuracy, precision, recall, F1-score, and specificity. Interestingly, fivefold cross-validation produces better performance scores than hold-out validation for both models, suggesting better generalizability and robustness. More specifically, CSHG-CervixNet yields an accuracy of 99.31% and an F1-score of 99.34% through fivefold cross-validation, outperforming the baseline GCN by about 0.35% in accuracy and 0.72% in F1-score. In addition, the specificity levels above 99% in all experiments validate the model’s strong ability to identify negative samples correctly to avoid false positives. These results confirm the effectiveness of the CSHG-CervixNet model and highlight the advantage of using cross-validation in model testing.

Ablation study

An ablation study was conducted with and without feature propagation to evaluate the effect of the hypergraph propagation mechanism. Without propagation, the CSHG-CervixNet model recorded a precision of 98.72%, recall of 99.14%, and F1-score of 99.04%, with an overall accuracy of 98.97%. With propagation, the model recorded better measures: precision of 98.97%, recall of 99.38%, F1-score of 99.34%, specificity of 99.77%, and accuracy of 99.31%. The propagation mechanism resulted in a significant decrease in misclassification and enhanced the consistency of class predictions. These results demonstrate the efficacy of integrating feature propagation into the hypergraph structure, augmenting the model’s ability to learn inter-class relationships and fine-grained feature variations in cervical WSIs.
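To make the role of feature propagation concrete, the following sketch builds hyperedges from nearest neighbours and applies one propagation step. It is a simplified illustration under stated assumptions, not the kd-HGNN layer used in this work: hyperedges are formed by brute-force Euclidean k-nearest-neighbour search, all hyperedges have unit weight, there are no learnable parameters, and propagation is a plain two-stage mean (node to hyperedge, then hyperedge to node). The function names and the toy feature vectors are hypothetical.

```python
import math


def knn_hyperedges(features, k):
    """One hyperedge per node: the node itself plus its k nearest neighbours."""
    edges = []
    for i, fi in enumerate(features):
        others = [j for j in range(len(features)) if j != i]
        others.sort(key=lambda j: math.dist(fi, features[j]))
        edges.append([i] + others[:k])
    return edges


def propagate(features, edges):
    """One propagation step: average nodes into hyperedges, then hyperedges into nodes."""
    dim = len(features[0])
    # Stage 1: each hyperedge takes the mean feature of its member nodes.
    edge_feats = [[sum(features[v][d] for v in e) / len(e) for d in range(dim)]
                  for e in edges]
    # Stage 2: each node takes the mean over the hyperedges that contain it.
    incident = [[] for _ in features]
    for e_idx, e in enumerate(edges):
        for v in e:
            incident[v].append(e_idx)
    return [[sum(edge_feats[e][d] for e in inc) / len(inc) for d in range(dim)]
            for inc in incident]


# Hypothetical 2-D feature vectors forming two well-separated groups.
features = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
            [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]]
edges = knn_hyperedges(features, k=2)
smoothed = propagate(features, edges)
```

In this toy case every hyperedge stays inside one group, so propagation pulls the features of each group towards a common value while keeping the two groups apart. Skipping the `propagate` call corresponds, loosely, to the "without propagation" setting of the ablation.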

Table 5 shows that the CSHG-CervixNet model with the feature propagation mechanism outperformed the model without propagation across all key metrics. Table 6 compares the accuracy, F1-score, recall, and precision of the proposed model with those of state-of-the-art methods and shows that CSHG-CervixNet performs better than the other techniques.

The use of a hypergraph-based structure in CSHG-CervixNet facilitates the modelling of complex interdependencies between the deep image features. This approach enables robust performance across several evaluation metrics: accuracy (99.31%), precision (98.97%), recall (99.38%), and F1-score (99.34%). While models such as BiNext-Cervix, CervixFormer, and VisionCervix yield competitive results, they tend to exhibit certain limitations. Transformer-based models usually demand large-scale data to generalise well and are computationally expensive. Although hybrid models attempt to combine the strengths of both approaches, they can become architecturally complex and costly, affecting scalability and interpretability. Our proposed hypergraph approach, by contrast, offers a distinct advantage in dealing with complex feature interactions, an aspect not directly handled by most of the traditional or hybrid models discussed. This makes the proposed approach a structurally unique alternative in the context of cervical cancer subtype classification. Although the model attains better accuracy, its performance is sensitive to hyperparameter settings, which require careful tuning for optimum results.

Conclusion

A hybrid model for precisely classifying CC whole slide images is proposed. The model leverages a Compound Scaling Convolutional Neural Network to capture the images’ intricate morphological features. To further enhance the classification performance, a robust k-dimensional Hypergraph Neural Network is employed, which models the higher-order relationships between the feature vectors using hyperedge construction based on neighbourhood similarity. The experimental analysis was conducted on the benchmark SipakMed dataset. The model achieved an overall classification accuracy of 99.31% and precision, recall, and F1-score values of 98.97%, 99.38%, and 99.34%, respectively, outperforming the existing baseline techniques. The proposed CSHG-CervixNet model provides an efficient and accurate solution to automated cervical cancer subtype classification by addressing both the feature representation and the relational dependencies inherent in cytological data.

Although the model achieves high classification performance, applying the framework to multi-centre clinical databases is challenging, as it involves variability in slide quality and patient demographics, as well as integration with existing diagnostic workflows. Future studies should address these limitations by improving model efficiency, automating the hyperparameter search, and validating the methodology on diverse real-world clinical datasets. Model interpretability is also a major consideration for clinical use. In future work, we aim to integrate Explainable AI (XAI) methods to provide further insight into the model’s predictions.

Data availability

This work is based on cervical cancer Pap smear cytology images from the SipakMed dataset, which is publicly available on Kaggle at https://www.kaggle.com/datasets/prahladmehandiratta/cervical-cancer-largest-dataset-SipakMed.

References

Vu, M., Yu, J., Awolude, O. A. & Chuang, L. Cervical cancer worldwide. Curr. Probl. Cancer. 42, 457–465 (2018).

Wu, J. et al. Global burden of cervical cancer: current estimates, temporal trend and future projections based on the GLOBOCAN 2022. J. Natl. Cancer Cent. https://doi.org/10.1016/j.jncc.2024.11.006 (2025).

Arbyn, M. et al. Estimates of incidence and mortality of cervical cancer in 2018: a worldwide analysis. Lancet Glob. Health. 8, e191–e203 (2020).

Chauhan, N. K. & Singh, K. Diagnosis of cervical cancer with oversampled unscaled and scaled data using machine learning classifiers. In 2022 IEEE Delhi Section Conference (DELCON) 1–6. https://doi.org/10.1109/DELCON54057.2022.9753298 (IEEE, 2022).

Dong, M., Wang, Y., Zang, Z. & Todo, Y. BiNext-Cervix: A novel hybrid model combining biformer and ConvNext for pap smear classification. Appl. Intell. 55 (2025).

Zhang, C. et al. Assessment of the relationships between invasive endocervical adenocarcinoma and human papillomavirus infection and distribution characteristics in china: according to the new WHO classification criteria in 2020. Cancer Epidemiol. 86, 102442 (2023).

Yu, W. et al. A 5-year survival status prognosis of nonmetastatic cervical cancer patients through machine learning algorithms. Cancer Med. 12, 6867–6876 (2023).

Alquran, H. et al. Cervical net: A novel cervical cancer classification using feature fusion. Bioengineering. 9, 578 (2022).

Goswami, A., Goswami, N. G. & Sampathila, N. Deep learning-based classification of cervical cancer using pap smear images. In 2023 4th International Conference on Intelligent Technologies (CONIT), 1–6. https://doi.org/10.1109/CONIT61985.2024.10627588 (IEEE, 2024).

Das, S., Sethy, M., Giri, P., Nanda, A. K. & Panda, S. K. Comparative analysis of machine learning and deep learning models for classifying squamous epithelial cells of the cervix.

Huang, P. et al. Classification of cervical biopsy images based on LASSO and EL-SVM. IEEE Access. 8, 24219–24228 (2020).

Boafo, Y. G. An overview of computer-aided medical image classification. Multimed. Tools Appl. https://doi.org/10.1007/s11042-024-19558-1 (2024).

Pang, L. et al. Semantic consistency network with edge learner and connectivity enhancer for cervical tumor segmentation from histopathology images. Interdiscip. Sci. https://doi.org/10.1007/s12539-025-00691-w (2025).

Tran, M. et al. Navigating through whole slide images with hierarchy, Multi-Object, and Multi-Scale data. IEEE Trans. Med. Imaging. https://doi.org/10.1109/TMI.2025.3532728 (2025).

Bakkouri, I. & Afdel, K. Computer-aided diagnosis (CAD) system based on multilayer feature fusion network for skin lesion recognition in dermoscopy images. Multimed. Tools Appl. 79, 20483–20518 (2020).

Mahmood, T., Saba, T., Rehman, A. & Alamri, F. S. Harnessing the power of radiomics and deep learning for improved breast cancer diagnosis with multiparametric breast mammography. Expert Syst. Appl. 249, 123747 (2024).

Hussain, D. et al. Revolutionising tumor detection and classification in multimodality imaging based on deep learning approaches: methods, applications and limitations. J. X-Ray Sci. Technol. Clin. Appl. Diagn. Ther. 32, 857–911 (2024).

Dixit, S., Kumar, A. & Srinivasan, K. A current review of machine learning and deep learning models in oral Cancer diagnosis: recent technologies, open challenges, and future research directions. Diagnostics. 13, 1353 (2023).

Nazir, A., Hussain, A., Singh, M. & Assad, A. A novel approach in cancer diagnosis: integrating holography microscopic medical imaging and deep learning techniques—challenges and future trends. Biomed. Phys. Eng. Express. 11, 022002 (2025).

Liu, W. et al. CVM-Cervix: A hybrid cervical Pap-smear image classification framework using CNN, visual transformer and multilayer perceptron. Pattern Recognit. 130, 108829 (2022).

Liu, L. et al. Computer-aided diagnostic system based on deep learning for classifying colposcopy images. Ann. Transl. Med. 9, 1045–1045 (2021).

Attallah, O. Cervical cancer diagnosis based on multi-domain features using deep learning enhanced by handcrafted descriptors. Appl. Sci. 13, 1916 (2023).

Bakkouri, I. & Afdel, K. Multi-scale CNN based on region proposals for efficient breast abnormality recognition. Multimed. Tools Appl. 78, 12939–12960 (2019).

Mahmood, T., Rehman, A., Saba, T., Wang, Y. & Alamri, F. S. Alzheimer’s disease unveiled: Cutting-edge multi-modal neuroimaging and computational methods for enhanced diagnosis. Biomed. Signal. Process. Control. 97, 106721 (2024).

Han, Y., Tuo, S., Li, Y. & Zhao, Q. Multirelational fusion graph convolution network with multiscale residual network for fault diagnosis of complex industrial processes. IEEE Trans. Instrum. Meas. 73, 1–15 (2024).

Bhatti, U. A., Tang, H., Wu, G., Marjan, S. & Hussain, A. Deep learning with graph convolutional networks: An overview and latest applications in computational intelligence. Int. J. Intell. Syst. (2023).

Song, W. & Yang, Z. Improving distantly supervised relation extraction with multi-level noise reduction. AI 5, 1709–1730 (2024).

Bakkouri, I. & Afdel, K. MLCA2F: Multi-level context attentional feature fusion for COVID-19 lesion segmentation from CT scans. Signal. Image Video Process. 17, 1181–1188 (2023).

Huang, P. & Luo, X. FDTs: A feature disentangled transformer for interpretable squamous cell carcinoma grading. IEEE/CAA J. Autom. Sinica. https://doi.org/10.1109/JAS.2024.125082 (2025).

Rehman, A., Mahmood, T. & Saba, T. Robust kidney carcinoma prognosis and characterisation using Swin-ViT and DeepLabV3+ with multi-model transfer learning. Appl. Soft Comput. 170, 112518 (2025).

Wang, T., Wang, Z., Li, H., Xia, C. & Zhao, C. HHG-Bot: A hyperheterogeneous graph-based twitter bot detection model. IEEE Trans. Comput. Soc. Syst. https://doi.org/10.1109/TCSS.2025.3543419 (2025).

Waikhom, L. & Patgiri, R. A survey of graph neural networks in various learning paradigms: methods, applications, and challenges. Artif. Intell. Rev. 56, 6295–6364 (2023).

Song, Z., Yang, X., Xu, Z. & King, I. Graph-based semi-supervised learning: A comprehensive review. IEEE Trans. Neural Netw. Learn. Syst. 34, 8174–8194 (2023).

Gao, Y., Feng, Y., Ji, S. & Ji, R. HGNN+: general hypergraph neural networks. IEEE Trans. Pattern Anal. Mach. Intell. 45, 3181–3199 (2023).

Dogan, Y. AutoEffFusionNet: A new approach for cervical cancer diagnosis using ResNet-based autoencoder with attention mechanism and genetic feature selection. IEEE Access. 13, 44107–44122 (2025).

Khan, A. et al. A multi-scale swin transformer-based cervical pap-Smear WSI classification framework. Comput. Methods Progr. Biomed. 240, 107718 (2023).

Muksimova, S., Umirzakova, S., Baltayev, J. & Cho, Y. I. RL-Cervix.Net: A hybrid lightweight model integrating reinforcement learning for cervical cell classification. Diagnostics. 15, 364 (2025).

Muksimova, S., Umirzakova, S., Kang, S. & Cho, Y. I. CerviLearnNet: Advancing cervical cancer diagnosis with reinforcement learning-enhanced convolutional networks. Heliyon. 10, e29913 (2024).

Alsubai, S. et al. Privacy preserved cervical cancer detection using convolutional neural networks applied to pap smear images. Comput. Math. Methods Med. 2023 (2023).

Bhatt, A. R., Ganatra, A. & Kotecha, K. Cervical cancer detection in pap smear whole slide images using ConvNet with transfer learning and progressive resizing. PeerJ Comput. Sci. 7, e348 (2021).

Lin, H., Hu, Y., Chen, S., Yao, J. & Zhang, L. Fine-Grained classification of cervical cells using morphological and appearance based convolutional neural networks. IEEE Access. 7, 71541–71549 (2019).

Rehman, A., Ali, N., Taj, I. A., Sajid, M. & Karimov, K. S. An automatic mass screening system for cervical cancer detection based on convolutional neural network. Math. Probl. Eng. 2020, 1–14 (2020).

Chen, W., Shen, W., Gao, L. & Li, X. Hybrid loss-constrained lightweight convolutional neural networks for cervical cell classification. Sensors. 22, 3272 (2022).

Mohammed, M. A., Abdurahman, F. & Ayalew, Y. A. Single-cell conventional pap smear image classification using pre-trained deep neural network architectures. BMC Biomed. Eng. 3, 11 (2021).

Maurya, R., Nath Pandey, N. & Kishore Dutta, M. VisionCervix: Papanicolaou cervical smears classification using novel CNN-Vision ensemble approach. Biomed. Signal. Process. Control. 79, 104156 (2023).

K, H. & Dhandapani, V. V. A. G. CervixFuzzyFusion for cervical cancer cell image classification. Biomed. Signal. Process. Control. 85, 104920 (2023).

Attallah, O. CerCan·Net: Cervical cancer classification model via multilayer feature ensembles of lightweight CNNs and transfer learning. Expert Syst. Appl. 229, 120624 (2023).

Nour, M. K. et al. Computer aided cervical cancer diagnosis using gazelle optimization algorithm with deep learning model. IEEE Access. 12, 13046–13054 (2024).

Fekri-Ershad, S. & Ramakrishnan, S. Cervical cancer diagnosis based on modified uniform local ternary patterns and feed forward multilayer network optimised by genetic algorithm. Comput. Biol. Med. 144, 105392 (2022).

Cao, L. et al. A novel attention-guided convolutional network for the detection of abnormal cervical cells in cervical cancer screening. Med. Image Anal. 73, 102197 (2021).

Akash, R. S., Islam, R., Badhon, S. S. I. & Hossain, K. T. CerviXpert: A multi-structural convolutional neural network for predicting cervix type and cervical cell abnormalities. Digit. Health 10 (2024).

Elakkiya, R., Teja, K. S. S., Jegatha Deborah, L., Bisogni, C. & Medaglia, C. Imaging based cervical cancer diagnostics using small object detection - generative adversarial networks. Multimed. Tools Appl. 81, 191–207 (2022).

Ijaz, M. F., Attique, M. & Son, Y. Data-driven cervical cancer prediction model with outlier detection and over-sampling methods. Sensors. 20, 2809 (2020).

Shi, J. et al. Cervical cell classification with graph convolutional network. Comput. Methods Progr. Biomed. 198, 105807 (2021).

Chen, H. et al. CytoBrain: cervical cancer screening system based on deep learning technology. J. Comput. Sci. Technol. 36, 347–360 (2021).

Huang, P., Tan, X., Chen, C., Lv, X. & Li, Y. AF-SENet: classification of cancer in cervical tissue pathological images based on fusing deep Convolution features. Sensors. 21, 122 (2020).

Chauhan, N. K. et al. A hybrid learning network with progressive resizing and PCA for diagnosis of cervical cancer on WSI slides. Sci. Rep. 15, 12801 (2025).

Wu, J. et al. CTCNet: a fine-grained classification network for fluorescence images of circulating tumor cells. Med. Biol. Eng. Comput. https://doi.org/10.1007/s11517-025-03297-y (2025).

Xu, L., Cai, F., Fu, Y. & Liu, Q. Cervical cell classification with deep-learning algorithms. Med. Biol. Eng. Comput. 61, 821–833 (2023).

Chauhan, N. K., Singh, K., Kumar, A. & Kolambakar, S. B. HDFCN: A robust hybrid deep network based on feature concatenation for cervical cancer diagnosis on WSI Pap smear slides. Biomed. Res. Int. 2023 (2023).

Kaushik, B., Mahajan, A., Chadha, A., Khan, Y. F. & Sharma, S. A fine-tuned adaptive weight deep dense meta stacked transfer learning model for effective cervical cancer prediction. Phys. Scr. 100, 036002 (2025).

Abinaya, K. & Sivakumar, B. Improved cervicalnet: integrating attention mechanisms and graph convolutions for cervical cancer segmentation. Int. J. Mach. Learn. Cybern. https://doi.org/10.1007/s13042-025-02576-2 (2025).

Pacal, I. MaxCerVixT: A novel lightweight vision transformer-based approach for precise cervical cancer detection. Knowl. Based Syst. 289, 111482 (2024).

Mehedi, M. H. K. et al. A lightweight deep learning method to identify different types of cervical cancer. Sci. Rep. 14, 29446 (2024).

Liu, Y., Wang, Z., Dai, A. & Gu, W. A novel ensemble framework based on CNN models and Swin transformer for cervical cytology image classification. In Seventh International Conference on Computer Graphics and Virtuality (ICCGV 2024), vol. 5 (ed. Li, J.). https://doi.org/10.1117/12.3029445 (SPIE, 2024).

AlMohimeed, A. et al. ViT-PSO-SVM: cervical cancer predication based on integrating vision transformer with particle swarm optimization and support vector machine. Bioengineering. 11, 729 (2024).

Tan, S. L., Selvachandran, G., Ding, W., Paramesran, R. & Kotecha, K. Cervical Cancer classification from pap smear images using deep convolutional neural network models. Interdiscip. Sci. 16, 16–38 (2024).

Gonzalez-Ortiz, O., Muñoz Ubando, L. A., Andrés Soto Fuenzalida, G. & Magallanes Garza, G. I. Evaluating DenseNet121 neural network performance for cervical pathology classification. In 2024 IEEE 37th International Symposium on Computer-Based Medical Systems (CBMS), 297–302. https://doi.org/10.1109/CBMS61543.2024.00056 (IEEE, 2024).

Zhu, L., Wang, X., Ke, Z., Zhang, W. & Lau, R. BiFormer: Vision transformer with bi-level routing attention. https://epfml.github.io/attention-cnn/ (2023).

Yaman, O. & Tuncer, T. Exemplar pyramid deep feature extraction based cervical cancer image classification model using pap-smear images. Biomed. Signal. Process. Control. 73, 103428 (2022).

Dosovitskiy, A. et al. An image is worth 16×16 words: Transformers for image recognition at scale. (2020).

Funding

We have not received any funding for this study.

Author information

Contributions

P.G., P.S., and A.T.S. designed the study, conducted the experiments, and analysed the data. S.N. and R.A. provided critical feedback, helped write and edit the manuscript, and guided the project. All authors reviewed and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

The above work is not based on animal or real-time human data. It is based on data from the public repository.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Govindaraj, P., Natarajan, S., Sampath, P. et al. A hybrid compound scaling hypergraph neural network for robust cervical cancer subtype classification using whole slide cytology images. Sci Rep 15, 22201 (2025). https://doi.org/10.1038/s41598-025-05891-4
