Abstract

This paper introduces a novel approach to enhancing spectrum sensing accuracy for 5G and LTE signals using advanced deep learning models, with a particular focus on the impact of systematic hyperparameter tuning. By leveraging two state-of-the-art neural network architectures, DenseNet121 and InceptionV3, the study aims to overcome the limitations of traditional spectrum sensing methods in highly dynamic and noisy wireless environments. Through rigorous hyperparameter optimization, these models reached detection accuracies of 97.3% and 98.2%, respectively, compared to initial performance levels of 93.0% and 95.0%. These improvements were particularly notable in controlled scenarios where low signal-to-noise ratio frames were excluded (60% of frames contained little or no information), which highlights the critical role of signal quality in both training and evaluation. The models were trained and tested on a large and diverse dataset that includes synthetic signals and real-world data, simulating a wide range of practical deployment conditions. This dataset supports the generalizability of the proposed approach and its real-world applicability. The models also demonstrated competitive performance in various test scenarios, and their integration into future wireless systems could significantly enhance smart spectrum management and real-time communication reliability in modern networks.

Introduction

In the rapidly evolving landscape of wireless communication systems, the emergence of 5G technology alongside the widespread deployment of LTE networks has ushered in a new era of connectivity characterized by unprecedented speeds, low latency, and enhanced reliability1,2. With the proliferation of diverse wireless devices and the increasing demand for spectrum efficiency, the efficient utilization of available radio frequencies has become paramount. Spectrum sensing, a crucial aspect of cognitive radio networks, plays a pivotal role in enabling dynamic spectrum access by detecting and analyzing unused or underutilized frequency bands3,4. Traditional methods often rely on manual analysis, which can be time-consuming and prone to error5. Traditional spectrum sensing techniques often face challenges in accurately detecting signals in dynamic and noisy environments, particularly in the context of 5G and LTE systems with varying signal characteristics and deployment scenarios. To address these challenges and enhance spectrum sensing capabilities for 5G and LTE signals, this paper proposes the utilization of advanced deep learning models in conjunction with rigorous hyperparameter tuning. Deep learning algorithms, known for their ability to automatically learn intricate patterns from vast amounts of data, offer a promising approach to improving the accuracy and robustness of spectrum sensing mechanisms6,7.

By leveraging the deep learning models applied in this study, such as DenseNet121, InceptionV3, and ResNet50, or hybrid architectures, this research aims to develop a framework that can effectively identify and classify different types of signals in complex wireless environments. Furthermore, through systematic hyperparameter tuning, the proposed framework seeks to optimize the performance of deep learning models by fine-tuning critical parameters to achieve superior detection accuracy and efficiency8,9. The integration of advanced deep learning techniques with hyperparameter optimization has the potential to revolutionize spectrum sensing in 5G and LTE networks, enabling more intelligent and adaptive spectrum management strategies. Through empirical evaluations and comparative analyses, this study aims to demonstrate the efficacy of the proposed approach in enhancing spectrum sensing capabilities and facilitating the seamless coexistence of diverse wireless technologies in next-generation communication systems10. Specifically, this study explores the application of deep learning, in particular semantic segmentation networks, to automate the identification of 5G NR and LTE signals in wideband spectrograms11.

The paper’s main contribution is enhancing spectrum sensing for 5G and LTE signals through advanced deep-learning models and systematic hyperparameter tuning. It focuses on:

1. Enhanced Detection Accuracy: The paper introduces advanced deep learning models specifically designed to improve the accuracy of spectrum sensing for 5G and LTE signals, addressing the limitations of traditional methods.
2. Comparative Analysis: The study analyzes different deep learning architectures, offering insights into which models perform best under various scenarios.
3. Real-time Implementation: The research showcases the feasibility of deploying these deep learning models in real-time environments, emphasizing their potential for practical applications in dynamic wireless networks.
4. Hyperparameter Tuning: The paper emphasizes the importance of systematic hyperparameter tuning and demonstrates its impact on optimizing model performance and ensuring reliable spectrum sensing in variable conditions.
5. Contribution to Spectrum Management: By enhancing spectrum sensing capabilities, the paper contributes to more efficient spectrum management strategies, which are vital for accommodating the growing demand for bandwidth in modern communication systems.

Overall, the paper advances the field of intelligent spectrum management for modern communication systems.

The rest of this paper is structured as follows: “Related work” provides an overview of previous research on spectrum sensing for 5G and LTE signals. “Methodology” presents the proposed framework. Subsequently, “The experimental and result” presents the results of the experimental evaluation. Finally, “Advanced model of DenseNet 121 and inception v3” summarizes key findings and contributions.

Related work

Recent advancements in spectrum sensing have increasingly focused on leveraging deep learning techniques to address the challenges posed by low signal-to-noise ratio (SNR) conditions.

For instance, the authors of12 introduced a deep-learning-based method that utilizes the ‘SenseNet’ network to enhance spectrum-sensing accuracy specifically in low-SNR environments. This approach combines multiple signal features (energy statistics, power spectrum, cyclostationarity, and I/Q components) into a comprehensive matrix for detection. The authors identified that existing methodologies often struggle under complex conditions at low SNR, which was a significant limitation. The results demonstrated that the SenseNet model achieved a spectrum-sensing accuracy of 58.8% at −20 dB SNR, marking a 3.3% improvement over conventional convolutional neural network models. This research underscores the potential of integrating deep learning into spectrum management, particularly in scenarios where traditional methods fall short.

In13, another notable contribution in spectrum sensing focused on modulation classification of Orthogonal Frequency Division Multiplexing (OFDM) signals pertinent to emerging technologies like 5G. This study employed a convolutional neural network (CNN)-based classifier, achieving an accuracy of 97% using over-the-air (OTA) datasets. The methodology included the estimation of symbol duration and cyclic prefix length, which are critical for accurate classification, complemented by a robust feature extraction algorithm. The research assessed both synthetic and real-world OTA datasets, addressing challenges such as synchronization errors that can affect feature extraction and classification accuracy. By successfully filtering these effects, the CNN demonstrated consistent performance, achieving at least 97% accuracy with OTA data above the required SNR. This work highlights the efficacy of deep learning approaches in not only enhancing spectrum sensing but also facilitating modulation classification in complex wireless environments.

The study in14 further advanced the field of spectrum sensing by employing a convolutional network (ConvNet) specifically designed for intelligent signal identification in 5G and LTE environments. Utilizing a combination of semantic segmentation techniques, the short-time Fourier transform, and wideband spectrogram images processed by DeepLabv3+ and ResNet18, this research aimed to address complex signal detection challenges. The methodology involved synthetic signals characterized by spectrum occupancy and standards-specified channel models incorporating diverse radio frequency (RF) impairments. The results indicated the model’s robustness, achieving approximately 95% mean accuracy in signal identification, even under various practical channel conditions. This work exemplifies the efficacy of deep learning in enhancing spectrum sensing capabilities, particularly in environments with challenging RF characteristics.

Additionally, in15, a significant advancement in wideband spectrum sensing was achieved through the development of a parallel convolutional neural network (ParallelCNN) specifically designed for the detection of tiny spectrum holes. This approach utilizes two parallel CNNs that process in-phase and quadrature (I/Q) samples concurrently, enhancing both accuracy and efficiency. The model was evaluated on various transmissions, including LTE-M, 5G NR, and Wi-Fi, and demonstrated markedly improved performance compared to previous methods such as DeepSense and Temporal Convolutional Networks (TCN). Notably, the ParallelCNN achieved a latency reduction of up to 94% while surpassing its predecessors in precision and recall metrics. This research highlights the potential of parallel processing in deep learning to significantly enhance the responsiveness and accuracy of spectrum sensing in complex wireless environments.

The study in16 introduced a spectrum sensing algorithm utilizing a deep neural network tailored for cognitive radio applications. This research employed a Noisy Activation CNN-GRU-Network (NA-CGNet) to enhance anti-noise performance, particularly in low signal-to-noise ratio (SNR) environments. The methodology included preprocessing the received primary user (PU) signal through energy normalization, addressing common challenges such as poor sensing performance due to noise interference. The NA-CGNet was evaluated at an SNR of −13 dB, achieving an accuracy of 0.7557 with a false alarm probability of 7.06%. This work underscores the model’s capability to perform effectively in challenging conditions, representing a significant step forward in improving spectrum sensing reliability within cognitive radio frameworks.

Finally, the study in17 focused on cooperative spectrum sensing by employing a Deep Residual Network (ResNet) to enhance detection capabilities. This research integrated a data cleansing algorithm alongside crowd-sourced sensors to improve spectrum availability sensing. The methodology involved a test statistic refining scheme utilizing the Deep Residual Network, paired with data fusion techniques to enhance the accuracy of detection. Operating within a Rician channel environment, the implementation of the data cleansing algorithm significantly improved detection probabilities. The results indicated a detection probability of 0.9578, achieved using a matrix size of 10 × 5. This work demonstrates the effectiveness of advanced deep learning architectures in elevating spectrum sensing performance through cooperative approaches.

Table 1 summarizes the previous work on spectrum sensing, highlighting the methodologies, datasets, results, and challenges addressed by the different studies.

Methodology

The methodology of this study is structured into several key phases, ensuring a comprehensive approach to enhancing spectrum sensing for 5G and LTE signals using advanced deep learning models. The models were trained and validated on a large and diverse dataset that includes both synthetic and real captured signals, which improves their ability to generalize and positions them for practical deployment in real-world 5G and LTE networks. One key challenge is sensitivity to noise, especially in low-SNR environments, which may affect performance under fluctuating signal conditions. Additionally, the computational cost of training and deploying deep learning models such as DenseNet121 and InceptionV3 remains a consideration: these models require substantial resources, which may limit their direct use in resource-constrained systems or where real-time operation is required.

Data generation

In the deep learning domain, one significant advantage of using wireless signals is the ability to synthesize high-quality training data. For this study, 5G New Radio (NR) signals were generated using the 5G Toolbox™, while LTE signals were created using LTE Toolbox™ functions. These signals were passed through standard-compliant channel models to simulate realistic propagation effects. Further dataset description and analysis are discussed in “Dataset descriptions”.

In this study, the resulting raw wireless signals were converted into spectrogram images using the Short-Time Fourier Transform (STFT)18, providing a time-frequency representation of the signals with dimensions of 128 × 128 (for custom models) or 256 × 256 (for transfer learning models). Each spectrogram visually encodes signal intensity across time and frequency, serving as input to the semantic segmentation network.
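The conversion from raw samples to a spectrogram can be illustrated with a minimal NumPy sketch. It is not the toolbox pipeline used in this study: the function name `stft_spectrogram_db`, the Hann window, the FFT size, the hop length, and the single-tone test signal are all assumptions chosen for illustration, and the resizing to 128 × 128 or 256 × 256 RGB images is omitted.

```python
import numpy as np

def stft_spectrogram_db(x, fs, n_fft=256, hop=128):
    """Return (freqs_hz, times_s, power_db) for a complex baseband signal.

    Illustrative helper; window, n_fft and hop are assumed values.
    """
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    # Slice the signal into overlapping, windowed frames.
    frames = np.stack(
        [x[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    # One FFT per frame; fftshift centres 0 Hz for a two-sided spectrum.
    spec = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    power_db = 10.0 * np.log10(np.abs(spec) ** 2 + 1e-12)
    freqs = np.fft.fftshift(np.fft.fftfreq(n_fft, d=1.0 / fs))
    times = (np.arange(n_frames) * hop + n_fft / 2) / fs
    return freqs, times, power_db.T  # rows: frequency, columns: time

# Toy input: a 100 kHz complex tone in weak noise, sampled at 1 MHz.
fs = 1_000_000.0
t = np.arange(20_000) / fs
rng = np.random.default_rng(0)
noise = 0.05 * (rng.standard_normal(t.size) + 1j * rng.standard_normal(t.size))
freqs, times, S = stft_spectrogram_db(np.exp(2j * np.pi * 100_000.0 * t) + noise, fs)

# The strongest frequency row should sit next to the tone frequency.
peak_freq = freqs[np.argmax(S.mean(axis=1))]
```

Each column of `S` is the windowed spectrum of one short time segment, so stacking the columns gives the time-frequency image that a segmentation network would consume.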

Each training frame, with a duration of 40 milliseconds, was designed to contain either 5G New Radio (NR) signals, LTE signals, or a combination of both, with the signals being randomly shifted in frequency within the specified band of interest. This random frequency shifting simulates real-world scenarios and enhances the network’s ability to generalize.
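The random frequency shift can be sketched as multiplication by a complex exponential. This is a minimal illustration under stated assumptions, not the generation code used in the study: the helper names, the band and bandwidth values, and the uniform distribution of the offset are hypothetical.

```python
import numpy as np

def frequency_shift(x, shift_hz, fs):
    """Shift a complex baseband signal by shift_hz using a complex exponential."""
    n = np.arange(len(x))
    return x * np.exp(2j * np.pi * shift_hz * n / fs)

def random_shift(x, fs, band_hz, signal_bw_hz, rng):
    """Draw a uniform offset that keeps the signal inside the band of interest."""
    max_offset = (band_hz - signal_bw_hz) / 2.0
    shift_hz = rng.uniform(-max_offset, max_offset)
    return frequency_shift(x, shift_hz, fs), shift_hz

# Deterministic check: a 50 kHz tone shifted by 125 kHz should land near 175 kHz.
fs = 1_000_000.0
n = np.arange(4096)
x = np.exp(2j * np.pi * 50_000.0 * n / fs)
y = frequency_shift(x, 125_000.0, fs)
freqs = np.fft.fftfreq(n.size, d=1.0 / fs)
peak_hz = freqs[np.argmax(np.abs(np.fft.fft(y)))]

# Random placement of an assumed 100 kHz-wide signal inside an assumed 600 kHz band.
rng = np.random.default_rng(1)
_, drawn = random_shift(x, fs, band_hz=600_000.0, signal_bw_hz=100_000.0, rng=rng)
```

Limiting the offset to half the spare bandwidth keeps the shifted signal fully inside the observed band, so the label for the frame remains valid.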

For labeling, a pixel-wise semantic segmentation approach was adopted, where each pixel in the spectrogram image was annotated as belonging to one of three classes: LTE signal, 5G NR signal, or Noise. These labels were generated using a combination of synthetic data annotations and manual verification on real-world captured data to ensure accuracy.
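For synthetic frames, where the time-frequency occupancy of each generated signal is known, a pixel-wise label mask can be built directly from that occupancy. The sketch below assumes hypothetical class IDs (0 for Noise, 1 for LTE, 2 for NR), a hypothetical `make_label_mask` helper, and made-up occupancy rectangles; it does not reproduce the annotation tooling used for the datasets.

```python
import numpy as np

NOISE, LTE, NR = 0, 1, 2  # hypothetical class IDs, chosen for illustration

def make_label_mask(freqs_hz, times_s, occupancies):
    """Build a (frequency x time) mask from known signal occupancy.

    occupancies: iterable of (class_id, f_lo, f_hi, t_lo, t_hi) tuples.
    Pixels not covered by any signal keep the NOISE label.
    """
    mask = np.full((freqs_hz.size, times_s.size), NOISE, dtype=np.uint8)
    for cls, f_lo, f_hi, t_lo, t_hi in occupancies:
        f_sel = (freqs_hz >= f_lo) & (freqs_hz <= f_hi)
        t_sel = (times_s >= t_lo) & (times_s <= t_hi)
        mask[np.ix_(f_sel, t_sel)] = cls
    return mask

# A 128 x 128 grid over an assumed 60 MHz band and a 40 ms frame.
freqs = np.linspace(-30e6, 30e6, 128)
times = np.linspace(0.0, 0.040, 128)
mask = make_label_mask(
    freqs,
    times,
    [
        (LTE, -20e6, -10e6, 0.0, 0.040),  # LTE active for the whole frame
        (NR, 0.0, 20e6, 0.010, 0.030),    # NR burst in the middle of the frame
    ],
)
counts = np.bincount(mask.ravel(), minlength=3)  # pixels per class
```

The per-class pixel counts in `counts` also give a quick view of class imbalance, since Noise typically covers most of the frame.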

Two different modeling approaches were explored: the first involved training a custom semantic segmentation network from scratch using 128 × 128 RGB spectrogram images as input. The second approach utilized transfer learning with the DeepLabv3+ architecture, employing a ResNet-50 backbone and trained on 256 × 256 RGB images. These models were trained to learn spatial and spectral patterns from the labeled spectrograms, enabling precise identification and classification of signal components within the wireless spectrum.

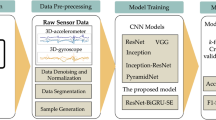

In this section, we explore various network architectures and models employed in our study to address the challenges posed by the dataset.

Transfer learning with DeepLabv3+

The second approach utilized transfer learning with the DeepLabv3+ architecture, which is well established for semantic segmentation tasks. This model was built upon a ResNet-50 backbone and trained on higher-resolution 256 × 256 RGB images. By leveraging pre-trained weights from a model previously trained on a large and diverse dataset, we significantly reduced the overall training time and computational resources required. Transfer learning proved particularly advantageous in scenarios where labeled data was limited, as it allowed the model to reuse features learned by the base network, enhancing performance and generalization capabilities. This strategy not only improved accuracy but also facilitated quicker convergence during the training process.

Comparison between training models

Building on the data generation and model design approaches discussed above, this section compares the performance of several deep learning architectures under consistent evaluation conditions. The following architectures were tested:

- ResNet-18: Known for its residual learning framework, ResNet-18 helps to mitigate the vanishing gradient problem, allowing for deeper networks without sacrificing performance.
- MobileNetV2: This architecture is designed for mobile and edge devices, prioritizing lightweight models while maintaining robust performance. Its use of depthwise separable convolutions makes it highly efficient.
- ResNet-50: A deeper version of ResNet-18, ResNet-50 incorporates more layers, enabling it to learn more complex features and representations.
- EfficientNet: This family of models scales up the network dimensions systematically using a compound scaling method, achieving state-of-the-art accuracy while being computationally efficient.

Advanced model of DenseNet 121 and inception v3

Each of the architectures was rigorously evaluated to facilitate a comparative analysis of performance across different model families. Metrics such as accuracy, F1 score, and computational efficiency were considered, providing valuable insights into the strengths and weaknesses of each approach in relation to the dataset. Figure 1 illustrates the framework of the proposed methodology.

This section presents the results of developing and evaluating the deep neural network models used for spectrum detection of 5G and LTE signals, focusing on incorporating a balanced proportion of real and synthetic data to enhance the models’ generalization ability. The models were evaluated using a set of standard performance metrics that reflect different aspects of prediction quality. The global accuracy was 91.8%, the mean IoU was 63.6%, the weighted IoU was 84.8%, and the mean BF score was 73.3%, the last of which reflects how closely the detected region boundaries match the ground truth. The study included a comparison of several deep architectures, leveraging the key features of the ResNet-18, MobileNetV2, ResNet-50, and EfficientNet models to support and improve the performance of DenseNet121 and InceptionV3. For example, DenseNet121 leveraged the residual blocks of ResNet-18 to enhance feature transfer, while InceptionV3 used the multi-level features of ResNet-50 to enhance feature extraction at different scales. These models were applied to spectrogram images produced by the short-time Fourier transform (STFT) and trained on balanced data from real and synthetic sources, achieving robust and balanced performance in spectral detection. These results reflect the efficiency of the selected models and their suitability for use in real wireless network environments, while taking into account the balance between accuracy and computational performance.

Hyperparameter tuning

Hyperparameter tuning is a critical step in the development of our models, as it directly influences their performance and ability to generalize to new data. For each network architecture, we applied Bayesian optimization to systematically explore and optimize key hyperparameters, including the learning rate, batch size, number of epochs, and choice of optimizer. Bayesian optimization allowed us to efficiently navigate the hyperparameter space by modeling the performance of the model as a probabilistic function, enabling us to identify optimal parameter configurations with fewer evaluations. The learning rate, which controls the magnitude of weight updates during training, was finely tuned to achieve a balance between convergence speed and stability. We selected batch sizes that ensured efficient use of computational resources while maintaining a representative sample of the dataset in each iteration. Additionally, we explored various epoch counts to determine the optimal training duration, preventing overfitting while ensuring adequate learning. The Adam optimizer was chosen for its effectiveness in adapting the learning rate during training, which contributed to faster convergence and improved performance in our spectrum sensing task. This comprehensive optimization process aimed to enhance the models’ efficacy in accurately detecting and classifying signals in real-world scenarios.
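The idea of modeling validation performance as a probabilistic function can be illustrated with a small, self-contained sketch of Bayesian optimization over a single hyperparameter (the base-10 logarithm of the learning rate). Everything below is an assumption made for illustration: the Gaussian-process surrogate with an RBF kernel, the expected-improvement acquisition, the search range, and in particular `validation_loss`, which is a hypothetical stand-in for training a network and returning its validation loss. The tuning tool and search space actually used in this study are not reproduced here.

```python
import math
import numpy as np

def rbf_kernel(a, b, length_scale=0.5):
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_query, jitter=1e-6):
    """Posterior mean and std of a zero-mean, unit-variance GP surrogate."""
    K = rbf_kernel(x_obs, x_obs) + jitter * np.eye(x_obs.size)
    K_s = rbf_kernel(x_obs, x_query)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_obs))
    v = np.linalg.solve(L, K_s)
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return K_s.T @ alpha, np.sqrt(var)

def expected_improvement(mean, std, best):
    """Expected improvement for a minimisation problem."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf

def validation_loss(log10_lr):
    # Hypothetical stand-in for "train the model, return validation loss".
    return (log10_lr + 3.0) ** 2 + 0.1

def bayes_opt(objective, low, high, n_init=3, n_iter=12, seed=0):
    rng = np.random.default_rng(seed)
    grid = np.linspace(low, high, 401)  # candidate hyperparameter values
    x_obs = rng.uniform(low, high, n_init)
    y_obs = np.array([objective(x) for x in x_obs])
    for _ in range(n_iter):
        # Standardise losses so the unit-variance GP prior is reasonable.
        y_std = (y_obs - y_obs.mean()) / (y_obs.std() + 1e-9)
        mean, std = gp_posterior(x_obs, y_std, grid)
        x_next = grid[np.argmax(expected_improvement(mean, std, y_std.min()))]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))
    best = int(np.argmin(y_obs))
    return x_obs[best], y_obs[best]

best_log_lr, best_loss = bayes_opt(validation_loss, low=-5.0, high=-1.0)
```

Each iteration evaluates the objective only once, at the point where the surrogate predicts the largest expected improvement, which is why this family of methods needs fewer training runs than an exhaustive grid search. Extending the sketch to batch size, epoch count, and optimizer choice would require a multi-dimensional or mixed search space.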

The experimental and result

Dataset descriptions

The datasets utilized in this study19,20,21 are structured to support the development and evaluation of deep learning models for radio spectrum sensing. They comprise three primary components: synthetic training data, real-world captured data22,23, and a pre-trained model. The captured data were collected from actual 5G/LTE networks or environments and represent real signal conditions, noise, and interference; such data are typically gathered from sensors, devices, or real-time communication systems. The synthetic dataset23 is artificially generated through simulation tools or models; it is used to simulate various scenarios and network conditions in a controlled environment, but may not capture the full complexity or variability of real-world data. It consists of 128 × 128 spectrogram images generated using MATLAB’s 5G Toolbox™ and LTE Toolbox™, which were used to simulate New Radio (NR) and LTE signals, respectively. These signals were passed through standardized ITU and 3GPP channel models to reflect realistic propagation effects. Furthermore, random shifts were applied in both the time and frequency domains to emulate practical deployment conditions, such as user mobility, frequency offsets, and timing variations. This variability enhances the model’s exposure to diverse signal patterns and improves its ability to generalize beyond controlled environments.

Each training frame represents a 40-millisecond segment containing either NR, LTE, or a combination of both, categorized into three semantic classes: LTE, NR, and Noise. Pixel-wise semantic segmentation was employed for annotation, whereby each pixel in the spectrogram is labeled based on the presence and location of signal energy in the time-frequency domain. Active signal regions were labeled according to their corresponding protocol type (LTE or NR), while inactive or non-signal areas were marked as Noise. In addition to synthetic data, a set of captured spectrograms—acquired using RF receivers—was included to evaluate model robustness in real-world conditions and mitigate potential domain shifts between simulated and practical environments.

Deep learning approaches were investigated to learn the spatial and spectral structure of the signals. In particular, transfer learning was applied with the DeepLabv3+ semantic segmentation architecture, employing a ResNet-50 backbone pretrained on large-scale image datasets and fine-tuned using 256 × 256 RGB inputs. This approach leverages the advantages of pre-learned features to accelerate training and enhance performance, particularly when labeled data is limited.

Given the computational demands associated with training deep models on large and complex datasets, cloud computing infrastructure was employed to enable efficient training workflows and systematic hyperparameter tuning. This hybrid framework—integrating synthetic and captured data, advanced segmentation techniques, and scalable computation—provides a robust foundation for deploying real-time, intelligent spectrum sensing models in dynamic 5G and LTE wireless communication environments.

These datasets19,20,21 relate to spectrum sensing and contain training data (128 × 128), prediction data produced using custom models, and captured data for analysis. They can be used to train machine learning models for radio spectrum detection and to analyze their performance.

In the deep learning domain, one significant advantage of using wireless signals is the ability to synthesize high-quality training data, which is crucial for effective model training. In this specific application, 5G New Radio (NR) signals were generated using the 5G Toolbox™, while LTE signals were produced using LTE Toolbox™ functions. As described in Sect. 3.1, the dataset includes both synthetic signals generated via MATLAB toolboxes and real-world captured spectrograms.

These signals were then passed through channel models specified by the relevant standards to create a robust and realistic training dataset. Each training frame, with a duration of 40 milliseconds, was designed to contain either 5G NR signals, LTE signals, or a combination of both, with the signals being randomly shifted in frequency within the specified band of interest. This random shifting helps in simulating real-world scenarios and enhances the network’s ability to generalize. Regarding the network architecture, a semantic segmentation network was employed to identify spectral content within the spectrograms generated from the signals. Two different approaches were explored in this context. The first approach involved training a custom network from scratch using 128 × 128 RGB images as input. This method allows for the design of a network tailored specifically to the characteristics of the dataset. The second approach utilized transfer learning, leveraging the DeepLabv3+ architecture with a ResNet-50 base network. This network was trained on 256 × 256 RGB images. Transfer learning is particularly beneficial as it allows the model to take advantage of pre-trained weights, typically learned from large datasets, thereby speeding up the training process and potentially improving performance, especially when labeled data is limited.

Fig. 2 shows a sample frame of the data, and Fig. 3 presents a detailed analysis of a received wireless signal, represented through two subplots. The top subplot, labeled “Received Spectrogram,” illustrates the signal’s intensity over time and across a frequency range from 2320 MHz to 2380 MHz. The color scale varies from blue to yellow, where yellow indicates regions of higher signal intensity, particularly around 2350 MHz. The bottom subplot, titled “Estimated Signal Labels,” categorizes the signal into three distinct types: noise, LTE (Long-Term Evolution), and NR (New Radio, i.e., 5G). Most of the frequency spectrum is identified as noise (in pink), while a specific segment around 2350 MHz is classified as LTE (blue) and NR (cyan), suggesting active communication signals in that frequency range. Together, these plots provide a comprehensive view of the signal’s behavior and its classification within the observed frequency band.

For the training dataset, both synthetic 5G NR signals and LTE signals were generated using the 5G Toolbox™ and LTE Toolbox™, respectively. These signals were passed through channel models specified by relevant standards, simulating real-world conditions. Each frame in Fig. 4 had a duration of 40 milliseconds and contained either 5G NR, LTE, or a combination of both signals, randomly shifted in frequency within the specified band of interest.

The training process was conducted using the stochastic gradient descent with momentum (SGDM) optimization algorithm. This algorithm is known for its efficiency in converging to an optimal solution by accelerating the training process using momentum.
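The momentum update can be written in a few lines. The sketch below shows one common formulation of SGDM on a toy quadratic objective; the learning rate, the momentum coefficient, and the objective are assumed values for illustration, and a full gradient is used in place of the mini-batch gradients that would be computed during network training.

```python
import numpy as np

def sgdm_step(params, grads, velocity, lr=0.1, momentum=0.9):
    """One SGD-with-momentum update.

    The velocity accumulates an exponentially decaying sum of past
    gradients, which smooths and accelerates the descent direction.
    """
    velocity = momentum * velocity - lr * grads
    return params + velocity, velocity

# Toy objective: f(w) = 0.5 * ||w - target||^2, whose gradient is (w - target).
target = np.array([1.0, -2.0])
w = np.zeros(2)
v = np.zeros(2)
for _ in range(300):
    grad = w - target
    w, v = sgdm_step(w, grad, v)
```

After the loop, `w` lies close to `target`; with the momentum term set to zero, the same update reduces to plain gradient descent.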

As shown in Table 2 and Fig. 5, the dataset was divided with 80% allocated for training, 10% for validation, and the remaining 10% reserved for testing. This split ensures that the model is properly evaluated and fine-tuned throughout the training process. Additionally, class weighting was implemented to address imbalances in the dataset, ensuring that the model does not become biased towards any class. By applying these techniques, the network was trained to effectively identify and segment different types of spectral content, improving its ability to operate in complex wireless environments.
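An 80/10/10 random split and a simple class-weighting scheme can be sketched as follows. The text does not state which weighting formula was used, so the inverse-frequency weights normalized by the median class frequency shown here are an assumption; the helper names and the randomly generated label masks are likewise illustrative.

```python
import numpy as np

def split_indices(n_samples, fractions=(0.8, 0.1, 0.1), seed=0):
    """Randomly partition sample indices into train/validation/test sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(fractions[0] * n_samples))
    n_val = int(round(fractions[1] * n_samples))
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]

def inverse_frequency_weights(label_masks, n_classes):
    """Class weights = median class frequency / class frequency (assumed scheme)."""
    pixels = np.concatenate([m.ravel() for m in label_masks])
    counts = np.maximum(np.bincount(pixels, minlength=n_classes), 1)
    freq = counts / counts.sum()
    return np.median(freq) / freq

train_idx, val_idx, test_idx = split_indices(1000)

# Toy label masks in which class 0 (e.g. Noise) dominates.
rng = np.random.default_rng(0)
masks = rng.choice(3, size=(4, 64, 64), p=[0.7, 0.2, 0.1])
weights = inverse_frequency_weights(masks, n_classes=3)
```

Rare classes receive weights above one and dominant classes receive weights below one, so errors on sparse signal pixels contribute more to the loss than errors on the abundant noise pixels.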

The dataset in19 was used to train the model, with 80% of the data allocated to this set. The dataset in21 was used to validate the model’s performance during training, and the test set20 was used to test the model after the training process was complete. The data were split using random partitioning to ensure balanced representation of all patterns across the different sets. This splitting is vital for obtaining accurate and systematic results when evaluating model performance.

Performance metrics of the data

Given the difficulty of obtaining sufficient and diverse real data due to data collection constraints and information privacy, we generated synthetic data designed to mimic the characteristics of real data. Specialized tools and simulators, namely the 5G Toolbox and LTE Toolbox from the MATLAB environment, were used to generate digital signals that adhere to the official standards and protocols for telecommunications networks. After generation, these signals were passed through simulated wireless channel models to reflect the effects of the real environment. This approach is intended to give the synthetic data the same intrinsic characteristics as real data, enabling efficient model training and enhancing the models’ generalizability and practicality.

Table 3 reports the performance of the trained network on synthetic data, assessed using several key metrics. Global accuracy, which reflects the overall proportion of correctly classified pixels across all classes, achieved a value of 91.802%, indicating that the network performed well on the synthetic dataset. Mean IoU (Intersection over Union), a crucial metric for semantic segmentation tasks, was 63.619%. This value suggests that while the network could correctly classify most of the pixels, there were still areas for improvement, particularly in distinguishing between different classes. The Weighted IoU, which weights each class by its number of pixels and therefore gives more weight to frequently occurring classes, was considerably higher at 84.845%. Lastly, the Mean BF (Boundary F1) Score was 73.257%, indicating that the network performed moderately well in accurately segmenting the boundaries between different spectral content within the synthetic data.
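The pixel-level metrics above can all be derived from a confusion matrix. The following sketch uses the standard definitions of global accuracy, mean accuracy, mean IoU, and pixel-weighted IoU on a tiny hand-made example; the function names and the example masks are illustrative, and the boundary F1 score is omitted because it requires contour matching rather than a confusion matrix.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows index the true class, columns the predicted class."""
    idx = y_true.ravel().astype(np.int64) * n_classes + y_pred.ravel().astype(np.int64)
    return np.bincount(idx, minlength=n_classes ** 2).reshape(n_classes, n_classes)

def segmentation_metrics(cm):
    """Assumes every class appears at least once in the ground truth."""
    cm = cm.astype(float)
    tp = np.diag(cm)            # correctly classified pixels per class
    true_px = cm.sum(axis=1)    # ground-truth pixels per class
    pred_px = cm.sum(axis=0)    # predicted pixels per class
    iou = tp / (true_px + pred_px - tp)
    return {
        "global_accuracy": tp.sum() / cm.sum(),
        "mean_accuracy": np.mean(tp / true_px),
        "mean_iou": np.mean(iou),
        "weighted_iou": np.sum(iou * true_px) / cm.sum(),
    }

# Tiny 2 x 4 example with three classes (0, 1, 2).
y_true = np.array([[0, 0, 1, 1], [0, 0, 2, 2]])
y_pred = np.array([[0, 0, 1, 2], [0, 1, 2, 2]])
m = segmentation_metrics(confusion_matrix(y_true, y_pred, 3))
```

In this example the global accuracy is 0.75 while the mean IoU is lower (about 0.583), because the small class 1 is segmented poorly; the weighted IoU (0.625) sits in between since it is dominated by the larger class 0. The same mechanism explains the gap between mean and weighted IoU reported for the synthetic data.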

When evaluating the network on captured data in Table 4, the performance metrics showed significant improvements. Global Accuracy reached 97.702%, demonstrating that the network was highly effective in correctly classifying the spectral content in real-world conditions. The Mean Accuracy, which provides an average of the accuracies for each class, was 96.717%, indicating consistent performance across different classes. The Mean IoU also improved substantially to 90.162%, showing that the network was more adept at correctly identifying and segmenting the various classes within the captured data. The Weighted IoU increased to 95.796%, reflecting the network’s enhanced ability to manage class imbalance. The Mean BF Score rose to 88.535%, suggesting that the network was better at precisely delineating the boundaries between different classes in real-world data compared to synthetic data.
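The pixel-level metrics discussed above can all be derived from a confusion matrix. The sketch below follows the usual semantic segmentation definitions and assumes that rows are true classes, columns are predicted classes, and every class occurs at least once. The three-class matrix is invented purely for illustration, and the Boundary F1 score is omitted because it requires the label images themselves.

```python
def segmentation_metrics(cm):
    """Compute pixel-level metrics from a square confusion matrix.

    cm[i][j] is the number of pixels of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    true_counts = [sum(cm[i]) for i in range(n)]                       # pixels per true class
    pred_counts = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # pixels per predicted class
    correct = [cm[i][i] for i in range(n)]

    class_acc = [correct[i] / true_counts[i] for i in range(n)]
    # IoU = intersection / union of the true and predicted regions of a class
    iou = [correct[i] / (true_counts[i] + pred_counts[i] - correct[i]) for i in range(n)]

    return {
        "global_accuracy": sum(correct) / total,
        "mean_accuracy": sum(class_acc) / n,
        "mean_iou": sum(iou) / n,
        # each class IoU weighted by that class's share of all pixels
        "weighted_iou": sum(true_counts[i] / total * iou[i] for i in range(n)),
    }

# Illustrative (made-up) confusion matrix for three classes.
cm = [
    [50, 0, 0],
    [0, 30, 10],
    [0, 5, 5],
]
metrics = segmentation_metrics(cm)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

In this toy example the rarest class has the lowest IoU, so the mean IoU falls well below the weighted IoU. The same pattern appears in the reported results, where weighted IoU is consistently higher than mean IoU.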

For the captured dataset, approximately 60% of frames, primarily those characterized by low SNR and high temporal redundancy, were excluded, as they did not contribute meaningful diversity to the training data. Following this filtering, the network’s performance reached near-perfect levels. As shown in Table 5, the Global Accuracy increased to 99.924%, indicating highly accurate spectral classification. The Mean Accuracy reached 99.83%, reflecting consistent performance across all classes in the absence of excessive noise. The Mean IoU climbed to 99.56%, demonstrating excellent segmentation capability, while the Weighted IoU reached 99.849%, indicating strong handling of class balance. Finally, the Mean BF Score of 99.792% confirmed the model’s precision in delineating class boundaries under cleaner conditions. These results indicate that the network can perform robustly when signal conditions are clean.

Network architectures and models

This comprehensive evaluation allowed us to identify the strengths and weaknesses of each architecture, guiding the selection of the most effective model for practical deployment. Algorithm 2 represents all the network model development and evaluation steps during model training and testing. A 3-fold cross-validation approach was used: the dataset was divided into three subsets, and each subset was used once as a validation set while the remaining two subsets were used for training. The model training was implemented using the Keras library, while data splitting and cross-validation were managed using scikit-learn. The choice of 3 folds was motivated by the large size of the dataset and the considerable computational resources required for training deep learning models. This method helps mitigate the risk of overfitting and reduces the impact of randomness by providing a more comprehensive assessment of the model’s performance across different data splits.
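The three-way rotation described above can be sketched in plain Python as follows. This is an illustration of the fold logic only, not the authors’ pipeline: the function name `k_fold_indices` and the seed are assumptions, and scikit-learn’s `KFold` class provides equivalent index splits.

```python
import random

def k_fold_indices(n_samples, k=3, seed=0):
    """Yield (train_indices, val_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]   # k roughly equal, disjoint folds
    for i in range(k):
        val_idx = folds[i]                      # fold i validates once
        train_idx = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train_idx, val_idx

for fold, (train_idx, val_idx) in enumerate(k_fold_indices(9), start=1):
    print(f"fold {fold}: train={sorted(train_idx)} val={sorted(val_idx)}")
```

Each sample lands in a validation fold exactly once, so averaging the three validation scores uses all of the data for evaluation while never validating on a sample the model was trained on in that fold.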

ResNet-18

Among the various architectures evaluated, ResNet-18 serves as a foundational baseline model due to its simplicity and proven effectiveness in image classification tasks. It is a relatively shallow but effective convolutional neural network architecture that belongs to the ResNet (Residual Network) family24. ResNet-18 utilizes residual connections to address the vanishing/exploding gradient problem common in deep neural networks, enabling high performance on various computer vision tasks despite having only 18 weighted layers.

With approximately 11.7 million parameters, ResNet-18 is notably lightweight compared to deeper networks, making it a popular choice as a baseline or feature extractor, especially in applications requiring efficient inference or transfer learning. The performance of the trained network was evaluated using several metrics: global accuracy, mean accuracy, mean Intersection over Union (IoU), weighted IoU, and mean Boundary F1 (BF) score25.

The ResNet-18 model is a deep convolutional neural network designed for image classification, as illustrated in Fig. 6. It begins by accepting an input image, which is passed through a 7 × 7 convolutional layer with 64 filters and a stride of 2. This reduces the spatial dimensions of the image. Following this, a 3 × 3 max pooling layer with a stride of 2 further decreases the image size while retaining important features. The network continues with several blocks of 3 × 3 convolutional layers, each progressively increasing the number of filters from 64 to 512. These convolutional layers work to extract increasingly abstract and complex features from the input image. After these convolutional layers, average pooling is applied to summarize the learned features and reduce the spatial dimensions further26. The output of this pooling layer is then fed into a fully connected layer, which helps in forming the final prediction. Finally, a softmax layer is used to classify the input image into one of two possible categories, resulting in binary classification outputs27.
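The effect of the first two layers on the spatial size can be checked with the standard output-size formula, floor((n + 2p − k) / s) + 1. The sketch below assumes the padding values of the standard ResNet stem (3 for the 7 × 7 convolution, 1 for the pooling layer) and a 128 × 128 input; neither is stated in the text for this model.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

size = 128  # assumed input side length
after_conv = conv_output_size(size, kernel=7, stride=2, padding=3)        # 7x7 conv, stride 2
after_pool = conv_output_size(after_conv, kernel=3, stride=2, padding=1)  # 3x3 max pool, stride 2
print(size, after_conv, after_pool)
```

Under these assumptions, each of the two layers halves the side length, so the residual blocks operate on feature maps one quarter the width and height of the input.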

ResNet-50

ResNet-50 is a 50-layer variant of the ResNet deep learning architecture, introduced in 2015 by researchers at Microsoft, that uses residual connections to enable the training of very deep neural networks. It takes 224 × 224 pixel RGB images as input, has around 25 million trainable parameters, and offers strong performance on computer vision tasks such as image classification (around 76% top-1 accuracy on ImageNet). It is widely used as a backbone or feature extraction network in state-of-the-art deep learning models due to its balance of complexity, efficiency, and performance.

Fig. 7 shows the architecture of a deep convolutional neural network resembling a ResNet-like model. The process begins with an input image that passes through a zero-padding (ZERO PAD) layer to ensure consistent dimensions for convolution. In Stage 1, the image goes through a convolutional layer (Conv) to extract low-level features, followed by batch normalization (Batch Norm) to stabilize and speed up training28. A ReLU activation function introduces non-linearity, and max pooling (Max Pool) reduces the spatial dimensions while preserving key features. In Stage 2, two convolutional blocks (Conv Block ID x2) are applied for deeper feature extraction. Stage 3 increases the complexity of features with three convolutional blocks (Conv Block ID x3), while Stage 4 further extracts high-level abstractions using five convolutional blocks (Conv Block ID x5). Stage 5 utilizes two more convolutional blocks (Conv Block ID x2) to refine the extracted features. The image is then passed through an average pooling (AVG Pool) layer, which reduces the spatial dimensions by averaging pixel values in each region, summarizing the feature maps. The output is flattened into a one-dimensional vector and passed through a fully connected layer (FC) for classification. Finally, the output layer produces the network’s prediction29.

MobileNetv2

The following diagram represents the architecture of a deep convolutional neural network, likely based on the MobileNetV2 framework. The model begins by taking an input image with dimensions 128 × 128 × 3, where the image has a resolution of 128 × 128 pixels and three color channels (RGB). The image first undergoes preprocessing, which may include operations like resizing, normalization, or augmentation to prepare the data for further processing.

After preprocessing, the image is passed through a 3 × 3 convolutional layer that uses the ReLU activation function. This layer extracts basic features from the image, such as edges and textures. Following this, a 2 × 2 max-pooling layer is applied, which reduces the spatial dimensions of the feature map from 128 × 128 to 64 × 64, all while retaining the most significant information from the image30.

The core of the model is built on MobileNetV2 blocks, as illustrated in Fig. 8, starting with a block that uses 32 filters to process the feature maps. This first block produces a feature map of size 64 × 64. The network continues to process these features in a second block, which applies 96 filters and further reduces the spatial size of the feature map to 32 × 32. Finally, the third block in the network consists of 1280 filters, which process the data and output a smaller 4 × 4 feature map31.

Once these layers have processed the image, the output is flattened into a one-dimensional vector containing 1280 elements. This vector is passed into a fully connected layer, where the features are mapped to output classes. The final step involves a softmax classifier, which converts the fully connected layer’s output into probabilities that correspond to the different possible classes (C1, C2, … Cn). This probability distribution helps classify the input image into one of the predefined categories. This entire structure allows the network to efficiently process and classify images, balancing performance and computational efficiency32.
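The softmax step can be illustrated with a short, numerically stable implementation. The logits below are arbitrary example values, not outputs of the trained network.

```python
import math

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)                            # subtract the max to avoid overflow in exp
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]                       # example scores for classes C1, C2, C3
probs = softmax(logits)
predicted = probs.index(max(probs))            # index of the most probable class
print([round(p, 3) for p in probs], "-> class", predicted + 1)
```

Subtracting the maximum logit does not change the result, because softmax is invariant to adding a constant to every score, but it prevents overflow for large values.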

EfficientNet

EfficientNet is a family of highly efficient convolutional neural network (CNN) architectures developed by researchers at Google Brain. It uses a compound scaling method to uniformly scale the network’s depth, width, and resolution according to computational constraints, together with building blocks such as mobile inverted bottleneck convolutions, squeeze-and-excitation optimization, and swish activation functions. The resulting variants, EfficientNet-B0 to EfficientNet-B7, offer state-of-the-art performance on image classification tasks with strong accuracy-efficiency tradeoffs. This makes them well suited for deployment on resource-constrained devices and as feature extractors for transfer learning in a wide range of computer vision applications33.
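Compound scaling can be made concrete with a small calculation. The sketch below uses the base coefficients reported in the original EfficientNet paper (α = 1.2 for depth, β = 1.1 for width, γ = 1.15 for resolution), chosen so that α·β²·γ² is close to 2. It shows the idealized rule only; the released B1 to B7 variants use rounded values, and the function names are illustrative.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15   # depth, width, resolution base coefficients

def compound_scale(phi):
    """Return (depth, width, resolution) multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi

def flops_multiplier(phi):
    """Approximate growth in FLOPs: linear in depth, quadratic in width and resolution."""
    depth, width, resolution = compound_scale(phi)
    return depth * width ** 2 * resolution ** 2

for phi in range(4):
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPs x{flops_multiplier(phi):.2f}")
```

Because α·β²·γ² ≈ 2, each unit increase of the compound coefficient roughly doubles the computational cost, which is what lets a single number trade accuracy against a compute budget.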

Fig. 9 illustrates the architecture of a deep convolutional neural network optimized for image processing, utilizing EfficientNet blocks for efficient feature extraction. The network begins by accepting an input image with dimensions 224 × 224 × 3, representing an RGB image. The first layer is a 3 × 3 convolutional layer, which extracts low-level features like edges and textures. Following this, an MBConv1 block (a mobile inverted bottleneck convolution with a 1× expansion factor and a 3 × 3 filter) processes the features at low computational cost. The network then deepens with a series of MBConv6 layers, which apply a 6× expansion factor and 3 × 3 filters to extract more complex features while maintaining efficiency. This configuration is repeated multiple times, progressively capturing more detailed and abstract information. Next, the architecture shifts to MBConv6 layers with 5 × 5 filters, which, with their larger receptive field, are adept at detecting larger spatial features and refining the understanding of complex patterns. Several of these 5 × 5 MBConv6 layers are applied to further enhance feature extraction. Finally, the network produces a condensed feature map (7 × 7 × 320), which encapsulates essential image information, ready for classification or further processing tasks.

Table 6 summarizes the main features of the ResNet-18, MobileNetV2, ResNet-50, and EfficientNet models. It also explains how their features can be used in DenseNet121 and InceptionV3 for feature extraction.

Advanced models: DenseNet121 and InceptionV3

DenseNet121

DenseNet121, illustrated in Fig. 10, is a convolutional neural network architecture introduced in 2017 by researchers from Cornell University and Facebook AI Research, and it is particularly well suited for image classification tasks. Its key features include dense connectivity, where each layer takes input from all preceding layers; 121 total layers, allowing it to learn complex representations; transition layers that control model complexity; and efficient parameter usage, enabling state-of-the-art performance with a relatively small number of parameters33,34.

DenseNet121 achieves high accuracy on various benchmark datasets, such as ImageNet, and is particularly effective for tasks requiring detailed feature extraction35.
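Dense connectivity and the role of the transition layers can be followed by tracking the number of feature channels through the network. The sketch below uses the standard DenseNet121 configuration from the original DenseNet paper (dense blocks of 6, 12, 24, and 16 layers, growth rate 32, 64 initial channels, compression 0.5). The function names are illustrative.

```python
def densenet_channels(block_config=(6, 12, 24, 16), growth_rate=32,
                      init_features=64, compression=0.5):
    """Trace the channel count after each dense block and transition layer."""
    channels = init_features
    trace = []
    for i, num_layers in enumerate(block_config, start=1):
        # every layer in a dense block appends growth_rate new feature maps
        channels += num_layers * growth_rate
        trace.append((f"dense_block_{i}", channels))
        if i != len(block_config):
            # transition layers compress the accumulated channels
            channels = int(channels * compression)
            trace.append((f"transition_{i}", channels))
    return trace

def densenet_depth(block_config=(6, 12, 24, 16)):
    """Count weighted layers: stem conv + two convs per dense layer
    + one conv per transition + final classifier."""
    return 1 + 2 * sum(block_config) + (len(block_config) - 1) + 1

for name, channels in densenet_channels():
    print(f"{name}: {channels} channels")
print("depth:", densenet_depth())
```

The trace shows why transitions matter: without the halving after each block, concatenation alone would more than double the channel count carried into the final block.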

Table 7 highlights the performance of the DenseNet121 model in image classification tasks, providing insights into its overall accuracy and segmentation quality. The model achieves a global accuracy of 93.802%, indicating that it can correctly classify the majority of images across various categories with a high degree of precision. In terms of segmentation performance, the mean Intersection over Union (IoU) stands at 68.619%, which suggests that the model is fairly effective in predicting object boundaries, though there is some room for improvement in capturing fine details. The weighted IoU, which accounts for the importance of different categories, is higher at 87.845%, reflecting the model’s strong performance on key categories with more significant representation in the dataset. Additionally, the model demonstrates good capability in detecting object edges, as shown by the mean Boundary F1 Score of 78.257%, which indicates reliable performance in recognizing fine details around object boundaries. Overall, DenseNet121 delivers robust results, particularly in global accuracy and weighted IoU, making it a reliable choice for image classification and segmentation tasks.

InceptionV3

The InceptionV3 model, shown in Fig. 11, is a convolutional neural network architecture introduced in 2015 by researchers at Google as an improved version of the original Inception model within the broader Inception family. Key aspects of InceptionV3 include its use of “Inception modules” to extract features at multiple scales simultaneously, factorized convolutions to reduce parameters and computations, auxiliary classifiers for additional supervision during training, extensive batch normalization for stabilizing the training process and improving generalization, and asymmetric (1 × n and n × 1) convolutions to further reduce the model’s complexity36.

The overall network structure consists of stacked Inception modules interspersed with pooling layers, resulting in a model with around 23.8 million parameters, making it more complex than simpler CNN architectures like VGG-16 or ResNet-18, but less complex than deeper models from the ResNet family (ResNet-50 or ResNet-101)37,38.

As shown in Table 8, the model achieved a global accuracy of 95.802%, indicating its high capability in correctly classifying images. The mean Intersection over Union (IoU) of 72.619% demonstrates its precision in identifying and segmenting regions of interest within images. Furthermore, the weighted IoU of 89.845% reflects robust performance in prioritizing significant regions of the image. The mean Boundary F1 (BF) score of 82.257% underscores the model’s accuracy in delineating object boundaries. These results collectively illustrate that InceptionV3 delivers strong performance, despite being less complex than deeper architectures such as ResNet-50 or ResNet-101.

Result and discussion

Table 9 compares several neural network models based on four key metrics: the number of learnable parameters (in millions), overall detection accuracy, detection accuracy for captured data, and detection accuracy for synthetic data.

- ResNet-18: With 20.6 million learnable parameters, it achieves an overall detection accuracy of 88%, 85% accuracy on captured data, and 92% accuracy on synthetic data.
- MobileNetv2: This model, with 43.9 million parameters, reaches 90% overall accuracy, 87% on captured data, and 93% on synthetic data.
- ResNet-50: Featuring 46.9 million parameters, it attains 92% overall accuracy, 90% for captured data, and 95% for synthetic data.
- EfficientNet: With the largest number of parameters at 50.3 million, it shows strong performance with 94% overall accuracy, 91% on captured data, and 96% on synthetic data.
- DenseNet121: Despite having fewer parameters (14.3 million), it achieves 93% overall accuracy, 89% for captured data, and 94% for synthetic data.
- InceptionV3: With 23.9 million parameters, it records the highest overall accuracy at 95%, along with 92% for captured data and 97% for synthetic data.

Table 9 provides a simplified analysis of the different model types and highlights cases where models perform poorly or inconsistently, which is crucial for understanding their limitations. For example, while models like ResNet-18 and MobileNetv2 exhibit lower detection accuracy (88% and 90%, respectively), they are computationally more efficient, with fewer learnable parameters than more complex models like ResNet-50 and EfficientNet.

On the other hand, models like InceptionV3 and DenseNet121 offer higher detection accuracy (95% and 93%, respectively), but come at a higher computational cost. Thus, there is a trade-off between accuracy and computational efficiency, with simpler models offering faster processing times at the expense of slightly lower detection performance.

This comparison highlights how different architectures balance complexity (number of parameters) with performance across both captured and synthetic data.
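One way to read such a comparison is to ask which models are not outperformed on both axes at once. The sketch below computes this Pareto front from the parameter counts and overall accuracies listed for Table 9, taking parameter count as a rough proxy for cost. That proxy is an assumption, since it ignores depth, memory traffic, and inference latency.

```python
# (learnable parameters in millions, overall detection accuracy in %), from Table 9
MODELS = {
    "ResNet-18": (20.6, 88),
    "MobileNetv2": (43.9, 90),
    "ResNet-50": (46.9, 92),
    "EfficientNet": (50.3, 94),
    "DenseNet121": (14.3, 93),
    "InceptionV3": (23.9, 95),
}

def pareto_front(models):
    """Return models for which no other model is at least as small and as accurate,
    and strictly better in one of the two."""
    front = []
    for name, (params, acc) in models.items():
        dominated = any(
            p <= params and a >= acc and (p < params or a > acc)
            for other, (p, a) in models.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front

print(pareto_front(MODELS))
```

On these two axes alone, only DenseNet121 and InceptionV3 remain undominated, which is consistent with their selection for hyperparameter tuning.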

This section assesses each model based on its training and validation loss over 20 epochs and identifies the best-performing model. As shown in Fig. 12, ResNet-18 starts with high loss, which steadily decreases over the epochs, demonstrating effective learning. However, the validation loss shows some fluctuations, likely due to variability in the validation data. While ResNet-18 is not the top performer, it is a solid choice for general tasks, especially when model size or training speed is a priority39.

MobileNetv2 shows moderate performance in Fig. 13, with both training and validation loss improving over time. However, the model experiences some instability, particularly in the middle of the training process, and the validation loss exhibits more pronounced fluctuations. While MobileNetv2 is suitable for tasks requiring efficiency and smaller model size, it may not be the best option when the highest accuracy and stability are required.

In Fig. 14 the ResNet-50 model performs strongly, with a sharp decline in training loss, indicating faster learning compared to ResNet-18. The validation loss also decreases efficiently with greater stability, suggesting good generalization. These improvements make ResNet-50 a preferred choice over ResNet-18, especially for applications that demand higher accuracy.

In Fig. 15, the EfficientNet model excels with a steady decline in training loss and minimal fluctuations, reflecting strong learning capability. The validation loss similarly shows a consistent decrease and impressive stability, making EfficientNet one of the top performers. It is ideal for applications requiring a balance between performance and model size40.

Figure 16 shows that the DenseNet121 model exhibits a consistent decline in training loss with minor fluctuations, indicating effective learning. The validation loss follows a similar pattern, with slight fluctuations that indicate good generalization. DenseNet121 is a robust model with strong performance and relative stability, making it a solid choice for applications needing a moderately simple yet effective model.

Finally, InceptionV3 stands out with the strongest performance, as shown in Fig. 17. The training loss decreases rapidly and consistently, showing highly effective learning, while the validation loss remains stable with minimal fluctuations, indicating excellent generalization capability. Among all models, InceptionV3 is the optimal choice for applications requiring the highest accuracy and performance41.

In conclusion, InceptionV3 emerges as the best-performing model, closely followed by EfficientNet, based on their excellent stability in validation loss and high learning efficiency. For applications where the highest accuracy and performance stability are paramount, InceptionV3 is ideal, while EfficientNet provides a more balanced option if efficiency and model size are also important considerations.

Hyperparameter tuning

Hyperparameter tuning involves optimizing key parameters of the InceptionV3 model, such as learning rate, batch size, and dropout rates, to enhance its performance. This process ensures the model achieves better accuracy, generalization, and efficiency during training and inference. Proper tuning is critical for balancing complexity and computational cost, especially given InceptionV3’s 23.8 million parameters and its Inception module-based architecture.

Bayesian optimization search space

Table 10 outlines the hyperparameter search space for two neural network architectures, DenseNet121 and InceptionV3, used in improving the detection of 5G and 4G networks. It lists the hyperparameters and their respective ranges or values for each model.

- Learning Rate: Both DenseNet121 and InceptionV3 have a learning rate range from 1e-5 to 1e-2, following a log-uniform distribution.
- Batch Size: The batch sizes for both models are set to 16, 32, or 64.
- Dropout Rate: Both architectures have a dropout rate between 0.2 and 0.5 to prevent overfitting.
- Optimizer: The optimizers used for both models are Adam and SGD (Stochastic Gradient Descent).
- Epochs: Training epochs vary between 20, 40, and 60 for both DenseNet121 and InceptionV3.

This configuration allows for an extensive search of optimal parameters to enhance network performance in the context of 5G and 4G detection.
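The search space above can be written down as a small data structure. The sketch below draws candidate configurations from it by plain random sampling, which is a simpler stand-in and not the Bayesian optimization used in the study; a Bayesian optimizer would propose points from the same space based on the results of earlier trials. The names `SEARCH_SPACE` and `sample_config` are illustrative.

```python
import math
import random

SEARCH_SPACE = {
    "learning_rate": (1e-5, 1e-2),    # continuous, sampled log-uniformly
    "batch_size": [16, 32, 64],
    "dropout_rate": (0.2, 0.5),       # continuous, sampled uniformly
    "optimizer": ["adam", "sgd"],
    "epochs": [20, 40, 60],
}

def sample_config(rng):
    """Draw one hyperparameter configuration from SEARCH_SPACE."""
    lr_low, lr_high = SEARCH_SPACE["learning_rate"]
    # log-uniform: sample the exponent uniformly, then exponentiate
    log_lr = rng.uniform(math.log10(lr_low), math.log10(lr_high))
    drop_low, drop_high = SEARCH_SPACE["dropout_rate"]
    return {
        "learning_rate": 10 ** log_lr,
        "batch_size": rng.choice(SEARCH_SPACE["batch_size"]),
        "dropout_rate": rng.uniform(drop_low, drop_high),
        "optimizer": rng.choice(SEARCH_SPACE["optimizer"]),
        "epochs": rng.choice(SEARCH_SPACE["epochs"]),
    }

rng = random.Random(0)
for trial in range(3):
    print(trial, sample_config(rng))
```

Sampling the learning rate on a logarithmic scale gives each decade between 1e-5 and 1e-2 equal weight, whereas uniform sampling would place almost all candidates above 1e-3.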

Hyperparameter tuning results

Hyperparameter tuning is a crucial step for improving the model’s performance. As shown in Fig. 18, the process starts with data collection, which provides the data used to train the model. Once the data is collected, an appropriate model is selected based on the available data and the desired goals. The selected model is then evaluated using specific metrics to ensure it meets the desired objectives. One of the main challenges in this process is hyperparameter tuning, which involves iteratively adjusting the hyperparameters that influence how the model learns. Hyperparameters, unlike the parameters that the model learns during training, are fixed values set before training begins. Examples of hyperparameters include learning rate, number of layers, number of neurons, optimization algorithm, and activation function. As shown in the figure, hyperparameter tuning has a clear impact on both model selection and performance evaluation, and iterating over these stages helps identify the configuration that gives the best results.

Table 11 presents the results of Bayesian optimization conducted over 20 iterations, which identified the best hyperparameters for the DenseNet121 and InceptionV3 models.

- Learning Rate: The optimal learning rate for DenseNet121 was found to be 0.00015, while for InceptionV3 it was 0.0004.
- Batch Size: The best batch size for DenseNet121 was 32, and for InceptionV3, it was 16.
- Dropout Rate: The optimal dropout rate was 0.3 for DenseNet121 and 0.4 for InceptionV3.
- Optimizer: Adam was found to be the best optimizer for both models.
- Epochs: DenseNet121 performed best with 40 epochs, while InceptionV3 required 60 epochs for optimal performance.

Table 12 provides a comparison of the final hyperparameters and performance for the InceptionV3 and DenseNet121 models before and after optimization.

Initial Accuracy: The initial accuracy for InceptionV3 was 95.0%, while DenseNet121 started at 93.0%.

Optimized Accuracy: After optimization, InceptionV3 achieved an accuracy of 98.2%, and DenseNet121 reached 97.3%.

Improvement: The accuracy improvement was +3.2 percentage points for InceptionV3 and +4.3 percentage points for DenseNet121.
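The gains can be checked with a short calculation. Alongside the absolute gain, the sketch below reports the relative reduction in error rate, a derived figure that is not stated in the source and is added here only to put the gains in context. The function name `improvement` is illustrative.

```python
# (initial accuracy %, optimized accuracy %), from Table 12
RESULTS = {
    "InceptionV3": (95.0, 98.2),
    "DenseNet121": (93.0, 97.3),
}

def improvement(initial, optimized):
    """Return (gain in percentage points, relative error reduction in %)."""
    gain_pp = optimized - initial
    error_reduction = gain_pp / (100.0 - initial) * 100.0
    return round(gain_pp, 1), round(error_reduction, 1)

for model, (initial, optimized) in RESULTS.items():
    gain_pp, error_reduction = improvement(initial, optimized)
    print(f"{model}: +{gain_pp} points, {error_reduction}% fewer errors")
```

Expressed this way, tuning removes roughly three fifths of the remaining classification errors for both models, even though the absolute gains are only a few points.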

Future improvements

In future work, there are several areas that can be explored to further enhance the performance and applicability of the proposed models. One potential direction is to investigate the robustness of the models in highly dynamic and noisy environments, particularly with low Signal-to-Noise Ratio (SNR) conditions, to better understand their limitations in real-world scenarios. Additionally, optimizing computational efficiency through model pruning or alternative architectures could facilitate the deployment of these models in resource-constrained environments. Further research should also explore the integration of multi-frequency band detection and cross-platform validation, ensuring that the models can be effectively applied across various communication systems. By addressing these areas, the proposed approach can be refined for broader and more reliable use in practical spectrum sensing applications.

Conclusion

The study presents a comprehensive and detailed approach to enhancing spectrum sensing capabilities for 5G and LTE signals by utilizing advanced deep learning models, paired with systematic hyperparameter tuning. The extensive experimentation and analysis demonstrate the transformative potential of these models in overcoming the limitations of traditional spectrum sensing techniques, particularly in environments characterized by dynamic signal conditions, high noise levels, and diverse signal characteristics. Through a rigorous comparative analysis of several deep learning architectures, InceptionV3 emerged as the top-performing model, achieving the highest detection accuracy of 98.2%. DenseNet121 closely followed with an accuracy of 97.3%, both models showing substantial improvements over their initial accuracies of 95.0% and 93.0%, respectively. These significant enhancements underscore the importance of careful hyperparameter tuning in achieving optimal performance. By fine-tuning parameters such as learning rate, batch size, dropout rates, and epochs, the models were able to better generalize to unseen data, making them more robust in real-world applications. The research highlights not only the improvement in detection accuracy but also the real-time feasibility of deploying these models within dynamic wireless networks. The ability of these deep learning models to process vast amounts of spectrum data in real time without compromising on accuracy or efficiency opens the door for practical implementations in cognitive radio and other wireless communication systems. Such advancements ensure a more intelligent, adaptive approach to spectrum management, making better use of available frequencies, reducing interference, and supporting seamless connectivity in next-generation networks.

Moreover, this work contributes significantly to the field of spectrum management by providing insights into how deep learning can be leveraged to improve the detection and classification of signals in challenging conditions, such as low signal-to-noise ratio (SNR) environments. The models’ adaptability to various signal conditions positions them as valuable tools for addressing the growing need for efficient bandwidth utilization in 5G, LTE, and future wireless technologies.

In conclusion, this research demonstrates that by integrating advanced deep learning techniques with systematic optimization, it is possible to significantly enhance the accuracy and efficiency of spectrum sensing systems. As the demand for bandwidth continues to grow with the expansion of wireless devices and services, the findings of this study offer a promising solution to the pressing need for intelligent and dynamic spectrum management strategies in modern communication systems.

Data availability

All datasets used and/or analyzed in this study have been appropriately referenced, and the relevant links to the datasets are included within the manuscript.

References

Nuriev, M. et al. The 5G revolution transforming connectivity and powering innovations. In E3S Web of Conferences. Vol. 515. (EDP Sciences, 2024).

Odida, M. O. The evolution of mobile communication: A comprehensive survey on 5G technology. J. Sen Net Data Comm. 4 (1), 01–11 (2024).

Murugan, S. & Sumithra, M. G. Big data-based spectrum sensing for cognitive radio networks using artificial intelligence. Big data analytics for sustainable computing. IGI Glob. 146–159 (2020).

Kassri, N. et al. A review on SDR, spectrum sensing, and CR-based IoT in cognitive radio networks. Int. J. Adv. Comput. Sci. Appl. 126 (2021).

Cheng, P. et al. Spectrum intelligent radio: Technology, development, and future trends. IEEE Commun. Mag. 58(1), 12–18 (2020).

Kulin, M. et al. End-to-end learning from spectrum data: A deep learning approach for wireless signal identification in spectrum monitoring applications. IEEE Access 6, 18484–18501 (2018).

Jdid, B. et al. Machine learning based automatic modulation recognition for wireless communications: A comprehensive survey. IEEE Access 9, 57851–57873 (2021).

Kumar, A. et al. Analysis of spectrum sensing using deep learning algorithms: CNNS and RNNS. Ain Shams Eng. J. 15(3), 102505 (2024).

Zhang, Y. & Luo, Z. A review of research on spectrum sensing based on deep learning. Electronics 12(21), 4514 (2023).

Qiu, Y., Ma, L. & Priyadarshi, R. Deep learning challenges and prospects in wireless sensor network deployment. Arch. Comput. Methods Eng. 1–24 (2024).

Baumgartner, M. Often trusted but never (properly) tested: Evaluating qualitative comparative analysis. Sociol. Methods Res. 49(2), 279–311 (2020).

Yixuan, Z. & Zhongqiang, L. A Deep-Learning-Based method for spectrum sensing with multiple feature combination. Electronics 13, 2705–2705 (2024). https://doi.org/10.3390/electronics13142705

Byungjun, K., Christoph, F. & Mecklenbräuker., Peter, G. Deep Learning-based modulation classification of practical OFDM signals for spectrum sensing. arXiv.org: abs/2403.19292 (2024). https://doi.org/10.48550/arxiv.2403.19292

Thien, H. T. et al. Intelligent Spectrum Sensing with ConvNet for 5G and LTE Signals Identification. 140–144 (2023). https://doi.org/10.1109/ssp53291.2023.10208054

Ruru, M. & Zhugang, W. Deep learning-based wideband spectrum sensing: A low computational complexity approach. IEEE Commun. Lett. 27, 2633–2637. https://doi.org/10.1109/lcomm.2023.3310715 (2023).

Zhu, X., Zhang, X. & Wang, S. Research on spectrum sensing algorithm based on deep neural network. In International Conference in Communications, Signal Processing, and Systems. (Springer, 2022).

Raghunatha Rao, D., Jayachandra Prasad, T. & Giri Prasad, M. N. Deep learning based cooperative spectrum sensing with crowd sensors using data cleansing algorithm. In 2022 International Conference on Edge Computing and Applications (ICECAA), Tamilnadu, India. 1276–1281 (2022). https://doi.org/10.1109/ICECAA55415.2022.9936242

Nair, S., Rajindran, B. S. & Ferrie, C. Short-time quantum Fourier transform processing. In ICASSP 2025–2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 1–5. (IEEE, 2025).

https://www.mathworks.com/supportfiles/spc/SpectrumSensing/SpectrumSensingTrainingData128x128.zip

https://www.mathworks.com/supportfiles/spc/SpectrumSensing/SpectrumSensingTrainedCustom_2024.zip

https://www.mathworks.com/supportfiles/spc/SpectrumSensing/SpectrumSensingCapturedData128x128.zip

Martinez-Esteso, J. P. et al. On the use of synthetic data for body detection in maritime search and rescue operations. Eng. Appl. Artif. Intell. https://doi.org/10.1016/j.engappai.2024.109586 (2025).

Shankar, A. et al. Transparency and privacy measures of biometric patterns for data processing with synthetic data using explainable artificial intelligence. Image Vis. Comput. https://doi.org/10.1016/j.imavis.2025.105429 (2025).

Ou, X. et al. Moving object detection method via ResNet-18 with encoder–decoder structure in complex scenes. IEEE Access 7, 108152–108160 (2019).

Wongso, H. Recent progress on the development of fluorescent probes targeting the translocator protein 18 kDa (TSPO). Anal. Biochem. 655, 114854 (2022).

Zhang, H. & Zheng, Y. Improved ResNet for image classification. In J. Comput. Vis. Image Underst. (2022).

Nguyen, H. Fast object detection framework based on mobilenetv2 architecture and enhanced feature pyramid. J. Theor. Appl. Inf. Technol. 98(05) (2020).

Kaur, P. & Singh, S. ResNet-50 based deep learning model for image classification. In Proceedings of the 2022 International Conference on Computer Vision and Image Processing (2022).

Patel, V. & Kumar, A. Enhancements to ResNet-50 for improved performance in real-time object detection. J. Real-Time Image Process. (2023).

Hastuti, E. et al. Performance of true transfer learning using CNN DenseNet121 for COVID-19 detection from chest X-ray images. In 2021 IEEE International Conference on Health, Instrumentation & Measurement, and Natural Sciences (InHeNce). (IEEE, 2021).

Chen, J., Zhang, Y. & Wang, M. Improving MobileNetV2 for Real-Time Object Detection. In 2021 International Conference on Artificial Intelligence and Computer Engineering (ICAICE) (2021).

Alzubaidi, L. et al. MobileNetV2 for medical image classification: A case study. In Biomedical Signal Processing and Control (2022).

Sam, S. et al. Offline signature verification using deep learning convolutional neural network (CNN) architectures GoogLeNet inception-v1 and inception-v3. Procedia Comput. Sci. 161, 475–483 (2019).

Sahu, A. K. & Verma, A. Improving Image Classification using DenseNet121 and Transfer Learning. In 2020 IEEE Calcutta Conference (CALCON) (2020).

Sadiq, A. et al. Application of DenseNet121 for Skin Lesion Classification: A Comparative Study. In Expert Systems with Applications (2022).

Rezende, E. et al. Malicious software classification using transfer learning of resnet-50 deep neural network. In 16th IEEE international conference on machine learning and applications (ICMLA). (IEEE, 2017).

Kaur, M. & Nand, K. Optimizing InceptionV3 for Real-Time Image Classification. In 2020 IEEE International Conference on Imaging Systems and Techniques (IST) (2020).

Sharma, P. & Gupta, S. A comparative study of InceptionV3 and ResNet for image classification in medical imaging. J. Biomed. Inform. (2021).

Marques, G., Agarwal, D. & de la Torre Díez, I. Automated medical diagnosis of COVID-19 through efficientnet convolutional neural network. Appl. Soft Comput. 96, 106691 (2020).

Bello, I. et al. Revisiting resnets: improved training and scaling strategies. Adv. Neural Inf. Process. Syst. 34, 22614–22627 (2021).

Zaidi, S. M. A., Manalastas, M., Abu-Dayya, A. & Imran, A. AI-assisted RLF avoidance for smart EN-DC activation. In GLOBECOM 2020–2020 IEEE Global Communications Conference. 1–6. (IEEE, 2020).

Author information

Contributions

Sally Mohamed Ali contributed by conceptualizing the research idea, designing the methodology, and conducting the practical implementation. Samah Mohamed Osman was responsible for developing the theoretical framework, creating the illustrations, and contributing to parts of the practical work. Samah Adel Gamel reviewed previous studies, identified research gaps and challenges, and provided the necessary contributions to enhance the research.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Elmorsy, S.M.A., Osman, S.M. & Gamel, S.A. Enhanced spectrum sensing for 5G and LTE signals using advanced deep learning models and hyperparameter tuning. Sci Rep 15, 24825 (2025). https://doi.org/10.1038/s41598-025-07837-2

DOI: https://doi.org/10.1038/s41598-025-07837-2
