Abstract

Medical imaging systems such as computed tomography (CT) and magnetic resonance imaging (MRI) are vital tools in clinical diagnosis and treatment planning. However, these modalities are inherently susceptible to Gaussian noise introduced during image acquisition, which degrades image quality and impairs visualization of critical anatomical structures. Effective denoising is therefore essential to improve diagnostic accuracy while preserving fine details such as tissue textures and structural boundaries. This study proposes a robust and efficient denoising framework designed specifically for CT and MRI images corrupted by Gaussian noise. The method combines cluster-wise principal component analysis (PCA) thresholding, guided by the Marchenko–Pastur (MP) law from random matrix theory, with a non-local means algorithm. The noise level is estimated globally by analysing the statistical distribution of the eigenvalues of the noisy image patch matrix and using the MP law to determine the Gaussian noise variance. An adaptive clustering technique groups similar patches according to underlying features such as textures and edges, enabling localized denoising tailored to heterogeneous image regions. Within each cluster, denoising is performed in two stages. First, hard thresholding based on the MP law is applied to the singular values in the SVD domain to obtain a low-rank approximation that preserves essential image content while removing noise-dominated components. Second, residual noise in the low-rank matrix is suppressed by a coefficient-wise linear minimum mean square error (LMMSE) estimator in the PCA transform domain. Finally, a non-local means algorithm refines the denoised image by computing weighted averages of pixel intensities that prioritize neighbourhood similarity over spatial proximity, reducing Gaussian noise while preserving edges and textures.
Experimental evaluations on CT and MRI datasets demonstrate that the proposed method achieves superior denoising performance, with high structural similarity and perceptual quality, compared with existing state-of-the-art approaches. Its adaptability, noise reduction capability, and preservation of anatomical detail make it well suited for precision-critical medical imaging applications.


Introduction

Medical imaging plays a critical role in modern healthcare, providing vital insights into the internal structures of the human body for the diagnosis and treatment of neurological, cardiovascular, and oncological disorders. Advances in digital imaging technologies, including magnetic resonance imaging (MRI), computed tomography (CT), and positron emission tomography (PET), have enabled high-resolution visualization of anatomical and physiological features1,2. However, a persistent challenge in medical imaging is noise, i.e., random variations in pixel intensity that degrade image quality, particularly under low contrast or insufficient radiation exposure3. Such noise not only obscures fine structural details but also hampers accurate diagnosis and treatment planning, making effective noise removal a critical preprocessing step. Traditional image denoising methods, including wavelet thresholding4, Wiener filtering, and median filtering, have been widely used to suppress noise in medical images5. While these approaches can reduce noise to some extent, they often struggle to balance noise removal against the preservation of important features such as edges and textures, which is an essential requirement for clinical interpretation. More recent developments in sparse representation and dictionary learning6, as well as deep learning-based models3, have demonstrated improved denoising performance. However, these methods often have high computational complexity, require extensive training data, or lack adaptability across imaging modalities and noise types7,8,9.

In addition to its application in medical imaging, the proposed denoising method offers promising potential for enhancing user experience in consumer-grade photography and videography, where image quality is often compromised by noise under low-light or high-ISO conditions. By leveraging adaptive clustering and non-local means, the method effectively reduces noise while preserving fine textures and edges, making it well-suited for scenarios such as night photography, smartphone imaging, or videography in challenging lighting environments. To contextualize this work, it is important to consider the chronological development of image denoising techniques. Early approaches included spatial domain filtering methods such as mean, median, and Gaussian filters1, followed by transform-domain techniques like wavelet thresholding2 and the original non-local means algorithm by Buades et al.3. Subsequent advances introduced sparse coding4, dictionary learning5, and low-rank matrix factorization6 as powerful denoising strategies. More recently, deep learning-based methods such as DnCNN7, U-Net architectures8, and generative adversarial networks (GANs) for denoising9 have achieved state-of-the-art results. The proposed method builds upon these foundations by integrating adaptive clustering with principal component analysis and non-local similarity exploitation, offering a hybrid approach that balances statistical robustness and structural preservation. This framework situates the method as a competitive solution not only for medical images but also for consumer applications where perceptual quality and detail preservation are critical.

In this study, we propose an efficient and robust image denoising framework that combines adaptive clustering, principal component analysis (PCA) thresholding guided by the Marchenko–Pastur (MP) law10 from random matrix theory (RMT)11,12, and a non-local means (NLM) algorithm to achieve superior noise suppression while preserving fine image details. The key innovation of the proposed approach lies in its integration of statistical properties of eigenvalues under the MP law to estimate global noise levels and guide hard and soft thresholding operations in the PCA transform domain. Furthermore, an adaptive clustering strategy automatically determines optimal clusters of similar image patches, enhancing local feature representation and enabling targeted noise reduction within each cluster. Finally, a non-local means algorithm refines the denoised image by leveraging similarity across non-adjacent pixel neighbourhoods, improving detail preservation without sacrificing denoising effectiveness.

The main contribution of the paper is the development of a robust and efficient image denoising method that integrates adaptive clustering, principal component analysis (PCA)-based thresholding using the Marchenko-Pastur (MP) law, and a non-local means (NLM) algorithm to effectively remove noise while preserving fine image details, particularly in medical images. This study introduces a novel adaptive clustering approach that automatically determines the optimal number of clusters by combining over-clustering and iterative merging strategies to group similar image patches based on texture and edge features. Within each cluster, the method applies a two-stage denoising process: hard thresholding in the singular value decomposition (SVD) domain guided by the MP law to achieve a low-rank approximation, followed by a locally parameterized linear minimum mean square error (LMMSE) soft thresholding to further suppress residual noise. The final integration of an NLM algorithm enhances noise reduction by leveraging non-local similarity across the image. Experimental results demonstrate that the proposed method achieves superior denoising performance and better preserves textures and edges compared to existing techniques, making it highly suitable for precision-critical applications in medical imaging.

The remainder of the paper is organized as follows. Section "Related work" analyses recent state-of-the-art image denoising techniques. Section "Proposed methodology" explains the proposed denoising method step by step. Section "Experimental setup and results" describes the experimental setup, discusses the effectiveness of the proposed approach, and compares it with other recent techniques. Section "Ablation study" presents ablation results showing the effect of outlier exclusion and soft thresholding on the denoising metrics. Finally, Section "Conclusion" summarizes the key findings and contributions of the proposed approach.

Related work

Image processing is a fundamental area in computer vision and medical imaging, encompassing techniques for enhancing, analysing, and interpreting visual information13,14,15,16,17,18. A critical task within image processing is image denoising, which aims to remove noise while preserving essential features such as edges and textures19,20,21. Over the years, a variety of denoising methods have been developed, ranging from classical spatial and transform-domain filters (e.g., Gaussian, median, and wavelet thresholding) to more sophisticated approaches such as sparse coding, dictionary learning, and deep learning models22,23,24. More recently, hybrid techniques combining statistical models, clustering, and non-local similarity measures have emerged to achieve a better balance between noise reduction and detail preservation. Jifara et al.3 proposed a deep feed-forward denoising approach based on a deep learning framework that incorporates batch normalisation and residual learning into the deep model. Residual learning was used to extract noise patterns from noisy images, while batch normalisation was integrated with residual learning to improve both training accuracy and efficiency. However, this technique did not assess the reduction of compression artifacts in images using a prominent CNN model. Ji et al.25 presented a geometric regularisation framework for image denoising that reconstructs surfaces by minimizing approximation and gradient errors to alleviate noise in medical images. The surface was constructed by dividing the coefficients into two groups: the first group was used to eliminate the surface gradient, while the second aimed to improve estimation accuracy. However, the approach did not improve real-time efficiency. Aravindan et al.26 developed a method for denoising images with high noise density at minimal computational expense, based on SSO and the DWT.
First, salt-and-pepper, Gaussian, and speckle noise are added to the images, after which the DWT is applied. The wavelet coefficients are then optimized using SSO, and the inverse discrete wavelet transform is applied to the optimized wavelet coefficients. The approach substantially reduced image noise; nevertheless, it was not suitable for other datasets. Rani et al.27 developed a steepest learning model for artificial neural networks (ANNs) to adaptively minimize image noise, and introduced a soft thresholding function as the ANN activation function. However, the strategy did not account for higher-order derivatives in its mathematical formulation. Laves et al.28 developed a randomly initialized convolutional network for image denoising. This methodology used a Bayesian scheme with Monte Carlo dropout to estimate both epistemic and aleatoric uncertainty, improving the predictive uncertainty, which correlates with the predictive error. However, the system did not use deformable registration to improve results. Kumar and Nagaraju29 presented a filter for the restoration of noisy images that integrates a type-II fuzzy system with the cuckoo search scheme. Noisy pixels are detected using a circular related search technique, and the detected corrupted pixels are removed. This approach is adaptable to numerous noise conditions but did not enable adequate exploration. Bai et al.6 presented a denoising technique that combines sparse dictionary learning with a cluster ensemble, exploiting both the inherent self-similarity and the disparity of image patches. The image feature set is first constructed via steering kernel regression, and the cluster ensemble is then employed to obtain class labels.
The trained dictionary exhibited greater adaptability and improved the quality of the recovered image; however, the method suffered from significant mathematical complexity. Miri et al.7 developed a method for removing Gaussian noise using an ant colony optimisation scheme and the two-dimensional discrete cosine transform (2D-DCT). This method identifies the essential frequency coefficients and alleviates noise by removing high-frequency components. However, it did not incorporate deep learning techniques to improve performance. Kollem et al.30 presented an image denoising method based on a partial differential equation derived from a diffusivity function, exploiting the statistical features of the observed noisy image. This method employs a QWT to generate the coefficients of the noisy image. Huang et al.31 presented a self-supervised sparse coding technique known as WISTA, which does not depend on ground-truth image pairs and learns solely from a single noisy image. To further improve denoising performance, they extended the WISTA framework into a DNN architecture referred to as WISTA-Net. Atal22 introduced an ODVV filter to denoise Lena and CT images. The input images are processed by a noisy-pixel-map recognition module in which a DCNN, trained with the Adam optimizer, identifies the noisy pixel maps. Lepcha et al.32 presented a low-dose computed tomography (LDCT) denoising technique using a sparse 3D transformation and PNLM. The hard thresholding module of the sparse 3D transformation alleviates noise, while PNLM addresses the shortcomings of conventional non-local means weighting by providing weights that more accurately represent patch similarities.

Jang et al.33 presented the Spach Transformer, an efficient encoder-decoder transformer that exploits spatial and channel information through global and local multi-head self-attention (MSA). Shen et al.34 presented a diffusion probabilistic model, chosen for its strong generative capabilities; during training, the model learns a score function network by optimizing the evidence lower bound (ELBO). Lepcha et al.35 devised an effective LDCT denoising scheme based on a constructive non-local means approach with morphological residual processing. Ma et al.36 designed a dedicated block as the foundation of an encoder-decoder model, combining Swin Transformer modules with residual blocks in a parallel configuration. The Swin Transformer modules learn hierarchical representations of noise artifacts using self-attention over non-overlapping shifted windows with cross-window connections, while the residual blocks mitigate the loss of precise information through shortcut connections. Annavarapu et al.37 proposed an adapted DCNN for image denoising, coupled with adaptive watershed segmentation to restore image information and a hybrid lifting method based on revised bi-histogram equalization; the method is further enhanced by incorporating marker-based watershed segmentation. Zeng et al.38 presented a TNCDN model in which information from two denoising networks with ongoing learning capabilities is transmitted to the primary denoising model. Sharif et al.39 addressed the limitations of existing denoising techniques with an AI-driven two-stage learning approach. Their method learns to estimate the residual noise in contaminated images and then uses a novel noise attention mechanism to associate the estimated residual noise with the noisy inputs, enabling coarse-to-fine denoising.
Lee et al.40 presented MSAN, a model designed for effective denoising of medical images. It consists of three primary modules: (a) a feature extraction layer capable of identifying low- and high-frequency characteristics, (b) a multiscale self-attention scheme that predicts the residual using both the original and down-sampled feature maps, and (c) a decoder that generates a residual image. Huang et al.41 introduced Be-FOI, a weakly supervised model that employs cine images. Be-FOI uses Markov chains for denoising, relies only on the fluoroscopic image as a reference, and is trained using accurate noise measurement and simulation. It outperforms other methods in eliminating noise and improving image clarity and diagnostic interpretation.

Proposed methodology

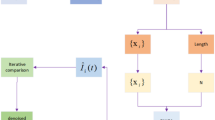

The proposed approach for medical image denoising begins by estimating the noise variance of the noisy input image using the Marchenko–Pastur (MP) law, which provides a robust statistical basis for noise level estimation. Once the noise level is determined, the image is divided into overlapping patches to capture localized structural information. These patches are then grouped into clusters of similar patches through an adaptive clustering process, which first over-clusters the patches using k-means and then merges similar clusters based on an inter-cluster distance threshold. For each resulting cluster, denoising is performed in two stages: first, hard thresholding is applied in the principal component analysis (PCA)/singular value decomposition (SVD) domain by discarding the noise-dominated singular values identified using the MP law; second, soft thresholding is performed using a local linear minimum mean square error (LMMSE) estimator to further suppress residual noise while preserving fine details. The denoised patches from all clusters are then aggregated to reconstruct an intermediate denoised image. Finally, a non-local means (NLM) refinement computes weighted averages based on pixel neighbourhood similarity, enhancing detail preservation and ensuring effective noise removal. This multi-stage process combines global statistical estimation, localized structural adaptation, and non-local similarity to achieve robust, detail-preserving denoising of medical images. The workflow of the proposed approach is shown in Fig. 1.

Noise level estimation

The noise level is an important factor in image denoising42. The effectiveness of PCA-based noise level estimation has been demonstrated in43,44,45. However, the low-rank patch selection used in43,44 incurs significant computational cost and is unstable at high noise levels. Reference45 presents a fast noise level estimation method based on the observation that patches extracted from a noise-free image typically lie in a low-dimensional subspace. This subspace can be obtained through a low-rank PCA approximation, and the noise level can then be derived from the eigenvalues of the covariance matrix of the noisy patches. Motivated by this approach, we present a robust and efficient noise level estimator that combines this scheme with the MP law. The relationship between the noise level \(\sigma\) and the PCA eigenvalues \(\lambda\) of the random noise matrix \(N\)42 allows the MP law to be used to estimate the Gaussian noise level of the image. The MP law has previously been used to compute the noise level locally within a compact 3D block11,12; here, we use it to estimate the noise level on a global scale. The global approach is justified because the MP law describes the spectra of large matrices more precisely than those of small matrices. We use the subscript \(\varnothing\) to denote quantities computed over the whole image. To obtain precise estimates, we partition the entire \(a \times b\) image into overlapping \(d_{\varnothing} \times d_{\varnothing}\) patches and stack all of them as the columns of a large matrix \(X_{\varnothing} \in \mathbb{R}^{M_{\varnothing} \times L_{\varnothing}}\), where \(M_{\varnothing} = d_{\varnothing}^{2}\) and \(L_{\varnothing} = (a - d_{\varnothing} + 1)(b - d_{\varnothing} + 1)\).
The large noisy matrix \(X_{\varnothing}\) is modelled as the sum of a low-rank clean matrix \(X_{0,\varnothing}\) and a noise matrix \(N_{\varnothing}\), i.e., \(X_{\varnothing} = X_{0,\varnothing} + N_{\varnothing}\), where each column of \(N_{\varnothing}\) follows a multivariate Gaussian distribution \(\mathcal{N}_{M_{\varnothing}}(0, \sigma^{2} I)\) with zero mean and covariance matrix \(\sigma^{2} I\). The noise level \(\sigma\) is directly related to the PCA eigenvalues of \(N_{\varnothing}\). However, \(N_{\varnothing}\) is unknown, whereas \(X_{\varnothing}\) is observed. The low rank of \(X_{0,\varnothing}\) makes it possible to relate the PCA eigenvalues of \(N_{\varnothing}\) to those of \(X_{\varnothing}\); some results on this relationship are discussed in42. We therefore compute \(\sigma\) from the PCA eigenvalues \(\lambda_{x_{\varnothing},i}\), \(1 \le i \le M_{\varnothing}\), of \(X_{\varnothing}\), sorted in descending order. Under the MP law, the noise eigenvalues are spread over the interval \([\lambda_{n_{\varnothing}-}, \lambda_{n_{\varnothing}+}]\) with expected value \(\sigma^{2}\), so the sample mean of the observed eigenvalues lying in this interval is approximately equal to \(\sigma^{2}\), while the width of the interval is proportional to \(\sigma^{2}\):

\[ \sigma^{2} \approx \frac{1}{\left|\mathcal{I}\right|} \sum_{i \in \mathcal{I}} \lambda_{x_{\varnothing},i}, \qquad \mathcal{I} = \left\{ i : \lambda_{n_{\varnothing}-} \le \lambda_{x_{\varnothing},i} \le \lambda_{n_{\varnothing}+} \right\} \tag{1} \]

\[ \lambda_{n_{\varnothing}+} - \lambda_{n_{\varnothing}-} = 4\sqrt{\gamma_{\varnothing}}\,\sigma^{2} \tag{2} \]

where \(\lambda_{n_{\varnothing}\pm} = \sigma^{2}\left(1 \pm \sqrt{\gamma_{\varnothing}}\right)^{2}\) with \(\gamma_{\varnothing} = \frac{M_{\varnothing}}{L_{\varnothing}}\).

Eqs. (1) and (2) show that estimating the noise level amounts to identifying the range \([\lambda_{n_{\varnothing}-}, \lambda_{n_{\varnothing}+}]\). We can directly set \(\lambda_{n_{\varnothing}-} = \lambda_{x_{\varnothing},M_{\varnothing}}\), since \(\lambda_{n_{\varnothing}-} \le \lambda_{x_{\varnothing},M_{\varnothing}} \le \lambda_{x_{\varnothing},i}\) for \(1 \le i \le M_{\varnothing}\). We then determine a suitable \(\lambda_{n_{\varnothing}+}\) by a heuristic greedy search based on Eqs. (1) and (2). Specifically, for each candidate \(\lambda_{x_{\varnothing},i}\), we set \(\lambda_{n_{\varnothing}+} = \lambda_{x_{\varnothing},i}\) and compute the variance estimates \(\sigma_{i,1}^{2}\) using Eq. (1) and \(\sigma_{i,2}^{2}\) using Eq. (2). We then compute the difference \(\Delta_{i} = (\sigma_{i,1}^{2} - \sigma_{i,2}^{2})^{2}\). The optimal \(\lambda_{n_{\varnothing}+}\) is the candidate \(\lambda_{x_{\varnothing},i}\) that minimizes \(\Delta_{i}\). Once \(\lambda_{n_{\varnothing}\pm}\) have been determined, the noise level \(\widehat{\sigma}\) is obtained from Eq. (1).
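The greedy search over candidate upper edges can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the patch size `d = 8`, the centring of the patch matrix before PCA, and the helper names `patch_matrix` and `estimate_noise_mp` are choices made here for illustration. Eq. (1) is taken to be "\(\sigma^2 \approx\) mean of the eigenvalues in \([\lambda_{n_\varnothing-}, \lambda_{n_\varnothing+}]\)" and Eq. (2) to be "\(\sigma^2 = (\lambda_{n_\varnothing+} - \lambda_{n_\varnothing-})/(4\sqrt{\gamma_\varnothing})\)", which follows from \(\lambda_{n_\varnothing\pm} = \sigma^2(1 \pm \sqrt{\gamma_\varnothing})^2\).

```python
import numpy as np

def patch_matrix(img, d):
    """Stack all overlapping d x d patches of a 2-D image as columns.

    Returns X with shape (M, L), where M = d*d and L = (a-d+1)*(b-d+1)."""
    win = np.lib.stride_tricks.sliding_window_view(img, (d, d))
    return win.reshape(-1, d * d).T

def estimate_noise_mp(img, d=8):
    """Global Gaussian noise level via an MP-law greedy search (illustrative sketch)."""
    X = patch_matrix(np.asarray(img, dtype=float), d)
    M, L = X.shape
    gamma = M / L
    Xc = X - X.mean(axis=1, keepdims=True)           # assumption: centre before PCA
    lam = np.linalg.eigvalsh(Xc @ Xc.T / L)[::-1]    # PCA eigenvalues, descending
    lam_minus = lam[-1]                              # lambda_{n-} := smallest eigenvalue
    best_delta, best_var = np.inf, lam.mean()
    for i in range(M - 1):                           # candidate lambda_{n+} = lam[i]
        var1 = lam[i:].mean()                                    # Eq. (1): mean inside the range
        var2 = (lam[i] - lam_minus) / (4.0 * np.sqrt(gamma))    # Eq. (2): width of the range
        delta = (var1 - var2) ** 2
        if delta < best_delta:
            best_delta, best_var = delta, var1
    return float(np.sqrt(best_var))                  # sigma_hat taken from Eq. (1)
```

For a 64 x 64 image of pure Gaussian noise with standard deviation 10, `estimate_noise_mp` should return a value close to 10; strongly structured images may need a different patch size.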

Adaptive clustering

After estimating the global noise level \(\widehat{\sigma }\), the proposed method performs an adaptive clustering of image patches to group similar features together for effective denoising42. The goal of clustering is to ensure that patches with similar local structures, such as edges or textures, are grouped into the same cluster, while patches representing different features are separated. Traditional clustering methods like K-means require specifying the number of clusters in advance, which is impractical because the number of distinct features in an image is generally unknown. Although various cluster validation indices have been proposed to determine the optimal cluster number, they tend to be computationally expensive and complex. To overcome this challenge, an adaptive clustering strategy based on an over-clustering-and-iterative-merging approach46 is developed. In the first stage, over-clustering is deliberately performed to produce a large number of clusters so that different features are initially separated. This is achieved using a K-means-based divide-and-conquer method47, where the initial number of clusters is set as \(\text{max}\left\{\frac{ab}{{256}^{2}}, 4\right\}\), with \(a\) and \(b\) being the image height and width. Each cluster obtained from this stage is further subdivided into \(\lfloor\frac{{L_{{j0}} }}{M} \rfloor\) clusters in a second stage, where \({L}_{j0}\) is the number of patches in cluster \({j}_{0}\), M is the patch dimensionality, and ⌊⋅⌋ denotes the floor function. After over-clustering, an iterative merging step is applied to combine similar clusters and prevent fragmentation. Two clusters are merged if the squared Euclidean distance between their centroids is below a threshold \(T\), which defines an acceptable similarity level between clusters. 
To derive this threshold, the distance between cluster centroids is modelled under the assumption that one cluster centre is noise-free and the other is corrupted by additive Gaussian noise. Denoting the two centroids by \(\mathbf{c}_{A}\) and \(\mathbf{c}_{B} = \mathbf{c}_{A} + \mathbf{n}\), the normalized squared distance follows a chi-squared distribution with \(M\) degrees of freedom:

\[ \frac{D_{B,A}^{2}}{\sigma^{2}} = \frac{\left\Vert \mathbf{c}_{B} - \mathbf{c}_{A} \right\Vert_{2}^{2}}{\sigma^{2}} = \frac{\left\Vert \mathbf{n} \right\Vert_{2}^{2}}{\sigma^{2}} \sim \chi_{M}^{2} \tag{3} \]

where \(\mathbf{n} \sim \mathcal{N}(0, \sigma^{2} I_{M})\) represents Gaussian noise. The threshold \(T\) is chosen to satisfy \(P(D_{B,A} < \sqrt{T}) = \epsilon\), where \(\epsilon\) is a small probability (e.g., \(1.3 \times 10^{-10}\)), so that \(T = \chi_{1-\epsilon, M}^{2}\,\sigma^{2}\), where \(\chi_{1-\epsilon, M}^{2}\) is the value exceeded with probability \(1-\epsilon\) (i.e., the \(\epsilon\)-quantile) of the chi-squared distribution with \(M\) degrees of freedom. For example, with \(M = 64\) and \(\epsilon = 1.3 \times 10^{-10}\), this yields \(T \approx 16.0\,\sigma^{2}\). This adaptive clustering method does not require the number of clusters to be specified explicitly; instead, it adapts automatically to the noise level and the statistical properties of the image. It groups similar image features effectively while keeping distinct features separate, providing a robust foundation for the subsequent low-rank denoising of each cluster.
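The merging threshold and the iterative merging step can be sketched with the Python standard library and NumPy. This is an illustrative sketch under assumptions, not the authors' code. The \(\epsilon\)-quantile of \(\chi^2_M\) is found by bisection on the exact Poisson-sum form of the chi-squared CDF, which is valid only for even \(M\) (this covers \(M = 64\)). The merge rule, which repeatedly merges the closest pair of centroids and replaces them with their size-weighted mean, is one plausible reading of "iterative merging". The names `chi2_cdf_even`, `merge_threshold`, and `merge_clusters` are hypothetical.

```python
import math
import numpy as np

def chi2_cdf_even(x, k):
    """P(chi2_k < x) for even k, using P(chi2_{2m} < x) = sum_{j>=m} Poisson(j; x/2)."""
    m, lam = k // 2, x / 2.0
    if lam <= 0.0:
        return 0.0
    total, j = 0.0, m
    while True:
        term = math.exp(-lam + j * math.log(lam) - math.lgamma(j + 1))
        total += term
        if j > lam and term <= 1e-16 * total:   # remaining tail is negligible
            break
        j += 1
    return min(total, 1.0)

def merge_threshold(sigma, M=64, eps=1.3e-10):
    """T such that P(D^2 < T) = eps when D^2 / sigma^2 ~ chi2_M (M even)."""
    assert M % 2 == 0 and 0.0 < eps < 0.5
    lo, hi = 0.0, float(M)        # for eps < 0.5 the quantile lies below the mean M
    for _ in range(200):          # bisection on the monotone CDF
        mid = 0.5 * (lo + hi)
        if chi2_cdf_even(mid, M) < eps:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi) * sigma ** 2

def merge_clusters(centroids, sizes, T):
    """Greedily merge the closest pair of centroids while their squared
    Euclidean distance is below T; a merged centroid is the size-weighted mean."""
    cents = [np.asarray(c, dtype=float) for c in centroids]
    sizes = list(sizes)
    while len(cents) > 1:
        C = np.stack(cents)
        d2 = ((C[:, None, :] - C[None, :, :]) ** 2).sum(axis=-1)
        np.fill_diagonal(d2, np.inf)               # ignore self-distances
        i, j = np.unravel_index(np.argmin(d2), d2.shape)
        if d2[i, j] >= T:
            break                                  # no pair is similar enough
        n = sizes[i] + sizes[j]
        cents[i] = (sizes[i] * cents[i] + sizes[j] * cents[j]) / n
        sizes[i] = n
        del cents[j], sizes[j]                     # j > i, so index i is unaffected
    return np.stack(cents), sizes
```

With `sigma=1.0` and the defaults, `merge_threshold` returns roughly 15.99, matching the \(T \approx 16.0\,\sigma^{2}\) quoted above.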

Hard thresholding via MP-SVD with rank estimation

In this step, we denoise each cluster matrix by performing hard thresholding of the singular values based on the Marchenko–Pastur (MP) law, which provides a principled way to separate signal from noise in the singular value spectrum42.

Let \({X}_{j} \in {\mathbb{R}}^{M\times {L}_{j}}\) denote the noisy data matrix for the \(j\)-th cluster, composed of similar patches grouped together. The noisy matrix can be expressed as:

\[ X_{j} = X_{0,j} + N_{j} \tag{4} \]

where \(X_{0,j}\) is the underlying low-rank clean matrix and \(N_{j}\) represents additive Gaussian noise.

We perform singular value decomposition (SVD) on \({X}_{j}\):

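\[ X_{j} = \sqrt{L_{j}}\, U_{j} \Sigma_{j} V_{j}^{T} \tag{5} \]

where \(U_{j}\) and \(V_{j}\) are orthonormal matrices and \(\Sigma_{j} = \mathrm{diag}(\sigma_{j,1}, \sigma_{j,2}, \dots, \sigma_{j,M})\) is a diagonal matrix containing the singular values in descending order. The singular values are normalized by \(\sqrt{L_{j}}\), so that \(\sigma_{j,i}^{2}\) is the \(i\)-th PCA eigenvalue of \(X_{j}\), i.e., the \(i\)-th eigenvalue of \(X_{j}X_{j}^{T}/L_{j}\).

According to the MP law, the squared normalized singular values of the noise are asymptotically confined below an upper bound \(\lambda_{n}^{+}\), given by:

\[ \lambda_{n}^{+} = \sigma^{2}\left(1 + \sqrt{\gamma}\right)^{2} \tag{6} \]

with \(\gamma = M/L_{j}\) and \(\sigma^{2}\) the noise variance.

To remove the noise-dominated components, we define a hard threshold:

\[ \xi = \mu\sqrt{\lambda_{n}^{+}} = \mu\,\sigma\left(1 + \sqrt{\gamma}\right) \tag{7} \]

where \(\mu > 1\) is an adjustment parameter that places the threshold slightly above the MP noise bound for better separation.

We retain only the singular values exceeding \(\xi\), yielding the low-rank approximation \(\tilde{X}_{j}\):

\[ \tilde{X}_{j} = \sqrt{L_{j}} \sum_{i=1}^{M} \mathbf{1}\left(\sigma_{j,i} > \xi\right) \sigma_{j,i}\, u_{j,i} v_{j,i}^{T} \tag{8} \]

where \(u_{j,i}\) and \(v_{j,i}\) are the \(i\)-th columns of \(U_{j}\) and \(V_{j}\), or equivalently in matrix form:

\[ \tilde{X}_{j} = \sqrt{L_{j}}\, U_{j,r} \Sigma_{j,r} V_{j,r}^{T} \tag{9} \]

where \(U_{j,r}\), \(\Sigma_{j,r}\), and \(V_{j,r}\) contain the singular vectors and singular values associated with the \(r\) retained components (i.e., those with \(\sigma_{j,i} > \xi\)).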

This hard thresholding effectively nullifies the singular values below ξ, removing the noisiest subspace while preserving the dominant signal structure. The matrix \({\widetilde{X}}_{j}\) serves as the initial denoised output for cluster \(j\), to be further refined (if necessary) in subsequent processing. Hard thresholding is applied during the initial phase of denoising within each adaptively formed cluster. Given a noisy cluster matrix X, we perform singular value decomposition (SVD) and discard singular values below a threshold determined using the Marchenko–Pastur (MP) law from random matrix theory. The threshold is defined as:

\[ \xi = \mu\,\sigma\left(1 + \sqrt{\gamma}\right) \tag{10} \]

where \(\mu > 1\) is an empirical constant (we use \(\mu = 1.1\)), \(\sigma^{2}\) is the estimated noise variance, and \(\gamma = M/L\) is the aspect ratio of the patch matrix \(X \in \mathbb{R}^{M \times L}\). Only components with \(\sqrt{\lambda_{x,i}} > \xi\) are retained in the reconstructed low-rank matrix:

\[ \tilde{X} = \sqrt{L} \sum_{i=1}^{M} \mathbf{1}\left(\sqrt{\lambda_{x,i}} > \xi\right) \sqrt{\lambda_{x,i}}\, u_{x,i} v_{x,i}^{T} \tag{11} \]

Eq. (11) reconstructs a denoised low-rank matrix \(\tilde{X}\) by hard thresholding the singular values obtained from the singular value decomposition (SVD) of the noisy matrix \(X\). Here, \(L\) is the number of columns (i.e., the number of patch vectors in the cluster) and \(M\) is the number of rows (i.e., the dimensionality of each patch vector). The term \(\lambda_{x,i}\) denotes the \(i\)-th eigenvalue of the covariance matrix \(XX^{T}/L\), reflecting the energy captured by the corresponding principal component, so that \(\sqrt{\lambda_{x,i}}\) is the \(i\)-th singular value of \(X\) divided by \(\sqrt{L}\). The indicator function \(\mathbf{1}(\sqrt{\lambda_{x,i}} > \xi)\) equals 1 if this normalized singular value exceeds the threshold \(\xi\) and 0 otherwise; this ensures that only significant (signal-dominated) components are retained, while noise-dominated components are discarded. The threshold \(\xi\), calculated using the Marchenko–Pastur law, adapts to the noise level in the data. The vectors \(u_{x,i}\) and \(v_{x,i}\) are the \(i\)-th left and right singular vectors of \(X\), which describe the principal component directions in the column and row spaces, respectively. The summation runs over all \(M\) components, and each retained component is weighted by its normalized singular value. The scaling factor \(\sqrt{L}\) undoes the normalization by the number of columns. Together, these terms reconstruct the data from only those components that exceed the noise threshold, suppressing noise while preserving meaningful signal structures.
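A minimal NumPy sketch of the hard-thresholding step follows. It assumes that the threshold is \(\xi = \mu\sigma(1+\sqrt{\gamma})\), i.e., \(\mu\) times the square root of the MP upper edge, that it is compared against the singular values of \(X\) divided by \(\sqrt{L}\), and that the cluster matrix is not centred. The function name `mp_hard_threshold` and the synthetic test data are illustrative only, not taken from the authors' code.

```python
import numpy as np

def mp_hard_threshold(X, sigma, mu=1.1):
    """MP-law hard thresholding of an M x L cluster matrix (illustrative sketch).

    Returns the low-rank estimate and the number r of retained components."""
    X = np.asarray(X, dtype=float)
    M, L = X.shape
    gamma = M / L
    xi = mu * sigma * (1.0 + np.sqrt(gamma))        # assumed threshold: mu * sqrt(lambda_n^+)
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    sqrt_lam = s / np.sqrt(L)                        # sqrt of eigenvalues of X X^T / L
    keep = sqrt_lam > xi                             # indicator 1(sqrt(lambda) > xi)
    # sqrt(L) * sum over retained i of sqrt(lambda_i) * u_i v_i^T
    X_tilde = np.sqrt(L) * (U[:, keep] * sqrt_lam[keep]) @ Vt[keep, :]
    return X_tilde, int(keep.sum())
```

For a rank-3 signal plus unit-variance noise in a 64 x 400 matrix, the sketch keeps exactly the three signal components. In a full pipeline, `sigma` would come from the global MP estimate, and `mu=1.1` matches the value quoted in the text.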

Soft thresholding via local LMMSE estimation in PCA domain

After the removal of the dominant noise components through hard thresholding in the SVD domain, we obtain the signal-dominated low-rank matrix \({\widetilde{X}}_{j}\) for each cluster \(j\). While the hard thresholding discards the noisiest singular values, residual noise remains in the retained components. To further suppress this noise while preserving fine details, we apply a soft-thresholding process in the PCA transform domain, leveraging the linear minimum mean-square error (LMMSE) estimator with locally estimated parameters42.

Let \({\widetilde{X}}_{j}\) be decomposed as:

\[ \tilde{X}_{j} = U_{\tilde{X}_{j}} \Sigma_{\tilde{X}_{j}} V_{\tilde{X}_{j}}^{T} \tag{12} \]

where \(U_{\tilde{X}_{j}}\) and \(V_{\tilde{X}_{j}}\) are the left and right singular vectors, and \(\Sigma_{\tilde{X}_{j}}\) is a diagonal matrix containing the retained singular values. Projecting \(\tilde{X}_{j}\) onto the PCA basis yields the transform coefficient matrix:

\[ S_{j} = U_{\tilde{X}_{j}}^{T} \tilde{X}_{j} \tag{13} \]

Each element \(s_{{j,\left( {i,k} \right)}}\) in \(S_{j}\) represents the \(k\)-th coefficient of the \(i\)-th principal component in cluster \(j\). We perform soft-thresholding on each coefficient \(s_{{j,\left( {i,k} \right)}}\) using an adaptive weight \(w_{{j,\left( {i,k} \right)}}\), computed from the local variance estimate \(\tilde{S}_{{j,\left( {i,k} \right)}}\) as:

With

where \({\sigma }^{2}\) is the estimated noise variance, and \({\widetilde{S}}_{j,\left(i,k\right)}\) is the local variance estimated around position \(k\) in the \(i\)-th band:

where ζ controls the size of the local window and 1(⋅) is the indicator function.
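For reference, one standard way to write a coefficient-wise LMMSE shrinkage with these symbols is shown below. It assumes that \(\widetilde{S}_{j,(i,k)}\) is the mean squared coefficient over the window of positions \(t\) with \(\lvert t-k\rvert\le\zeta\) in band \(i\), and that the signal variance is estimated by subtracting \(\sigma^{2}\) and clipping at zero; the authors' exact expressions may differ.

```latex
\hat{s}_{j,(i,k)} = w_{j,(i,k)}\, s_{j,(i,k)}, \qquad
w_{j,(i,k)} = \frac{\max\left(\widetilde{S}_{j,(i,k)} - \sigma^{2},\, 0\right)}{\widetilde{S}_{j,(i,k)}}, \qquad
\widetilde{S}_{j,(i,k)} = \frac{\sum_{t} s_{j,(i,t)}^{2}\,\mathbf{1}\left(\lvert t-k\rvert \le \zeta\right)}{\sum_{t} \mathbf{1}\left(\lvert t-k\rvert \le \zeta\right)}
```

Under this form, \(w_{j,(i,k)}\) lies in \([0,1)\) for \(\sigma>0\): coefficients whose local energy barely exceeds the noise floor are strongly attenuated, while high-energy coefficients pass almost unchanged.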

Finally, the denoised patch matrix \(\widehat{X}_{j}\) for cluster \(j\) is reconstructed by the inverse PCA transformation as

\[\widehat{X}_{j} = U_{\widetilde{X}_{j}}\,\widehat{S}_{j},\]

where \(\widehat{S}_{j}\) is the matrix of soft-thresholded coefficients \(\widehat{s}_{j,(i,k)}\) (written with a hat to distinguish it from the local variance \(\widetilde{S}_{j,(i,k)}\)). This locally adaptive soft thresholding suppresses residual noise while preserving the signal variations intrinsic to the principal components, which leads to better detail preservation than global thresholding strategies.
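The stage described in this subsection (SVD of the low-rank matrix, projection onto its left singular vectors, coefficient-wise shrinkage, inverse transform) can be sketched in NumPy as follows. The shrinkage weight \(w=\max(\widetilde{S}-\sigma^{2},0)/\widetilde{S}\) and the window of up to \(2\zeta+1\) neighbouring coefficients in each band are assumptions (a standard LMMSE form), not necessarily the authors' exact expressions, and `lmmse_pca_shrink` is a hypothetical name.

```python
import numpy as np


def lmmse_pca_shrink(X_low: np.ndarray, sigma: float, zeta: int = 2) -> np.ndarray:
    """Coefficient-wise LMMSE shrinkage of a low-rank patch matrix in its PCA domain.

    Assumed (illustrative) formulas, not necessarily the authors' exact ones:
      S_loc = mean of s^2 over positions t with |t - k| <= zeta in the same band,
      w     = max(S_loc - sigma^2, 0) / S_loc,   s_hat = w * s.
    """
    U, _, _ = np.linalg.svd(X_low, full_matrices=False)  # principal directions
    S = U.T @ X_low                                       # PCA coefficients; row i = band i
    n_bands, n_coef = S.shape
    S_hat = np.zeros_like(S)
    for i in range(n_bands):
        sq = S[i] ** 2
        for k in range(n_coef):
            lo, hi = max(0, k - zeta), min(n_coef, k + zeta + 1)
            s_loc = sq[lo:hi].mean()                      # local variance estimate
            if s_loc > 0:
                w = max(s_loc - sigma ** 2, 0.0) / s_loc  # LMMSE weight in [0, 1]
                S_hat[i, k] = w * S[i, k]
    return U @ S_hat                                      # inverse PCA transform
```

In the pipeline described here, `X_low` would be the low-rank matrix \(\widetilde{X}_{j}\) of one cluster and `sigma` the globally estimated noise level. With `sigma=0` every weight equals 1 and the input is returned unchanged, which is a convenient sanity check.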

Non-local means algorithm

The non-local means (NL-means)48 algorithm is an image denoising method that restores each pixel by computing a weighted average of all pixels in the image, rather than limiting the averaging to a local neighbourhood. Let \(v\) denote the intermediate image obtained from the denoised patch matrices \(\widehat{X}_{j}\) (Eq. 17). The denoised value at a pixel \(i\), denoted \(NL[v](i)\), is calculated by summing the intensity values of all pixels \(j\) in the image (here \(j\) indexes pixels rather than clusters), each multiplied by a weight \(w(i,j)\) that reflects the similarity between the neighbourhoods around pixels \(i\) and \(j\):

\[NL[v](i) = \sum_{j \in I} w(i,j)\, v(j),\]

where \(I\) is the set of all pixels in the image. The weight \(w(i,j)\) is defined as

\[w(i,j) = \frac{1}{Z(i)} \exp\left(-\frac{\left\Vert v(N_{i}) - v(N_{j})\right\Vert_{2,a}^{2}}{h^{2}}\right),\]

where \(Z(i)\) is a normalization factor ensuring that the weights sum to one, and \(\Vert v(N_{i})-v(N_{j})\Vert_{2,a}^{2}\) is the squared Euclidean distance between the neighbourhoods \(N_{i}\) and \(N_{j}\) of pixels \(i\) and \(j\), weighted by a Gaussian kernel with standard deviation \(a\). The parameter \(h\) controls the decay of the exponential function, that is, how quickly the weight decreases as neighbourhood similarity diminishes. Pixels with similar surrounding patterns therefore contribute more to the denoised value, which allows the algorithm to preserve textures and fine details by exploiting repetitive structures throughout the image. Unlike traditional local filters, NL-means uses information from the entire image, which makes it particularly effective at maintaining important features while reducing noise.
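A direct (and deliberately slow) NumPy sketch of the weighted average and the Gaussian-weighted patch distance follows. It restricts the search to a square window around each pixel, a common practical approximation of averaging over the whole image; the patch and search radii, the reflective border padding, and the name `nl_means` are illustrative assumptions rather than the authors' settings.

```python
import numpy as np


def nl_means(v: np.ndarray, h: float, patch_radius: int = 1,
             search_radius: int = 5, a: float = 1.0) -> np.ndarray:
    """Pixel-wise NL-means with a Gaussian-weighted patch distance.

    The sum over all pixels j is approximated by a (2*search_radius+1)^2 window.
    """
    H, W = v.shape
    r, R = patch_radius, search_radius
    # Normalized Gaussian kernel (std a) over patch offsets: the "2,a" weighting.
    ax = np.arange(-r, r + 1)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * a * a))
    g /= g.sum()
    pad = np.pad(v.astype(float), r + R, mode="reflect")
    out = np.empty((H, W), dtype=float)
    for y in range(H):
        for x in range(W):
            cy, cx = y + r + R, x + r + R                 # pixel i in padded coordinates
            Pi = pad[cy - r:cy + r + 1, cx - r:cx + r + 1]
            weights, values = [], []
            for dy in range(-R, R + 1):
                for dx in range(-R, R + 1):
                    qy, qx = cy + dy, cx + dx             # candidate pixel j
                    Pj = pad[qy - r:qy + r + 1, qx - r:qx + r + 1]
                    d2 = np.sum(g * (Pi - Pj) ** 2)       # ||v(N_i) - v(N_j)||^2_{2,a}
                    weights.append(np.exp(-d2 / (h * h)))
                    values.append(pad[qy, qx])
            weights = np.asarray(weights)
            out[y, x] = np.dot(weights, values) / weights.sum()  # divide by Z(i)
    return out
```

The filtering strength is governed by `h`: values on the order of the noise standard deviation are a common starting point, while much larger values make all weights similar and the filter degenerates towards a plain local mean.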

Experimental setup and results

In the experiments, the MRI and CT image datasets were obtained from https://www.med.harvard.edu/aanlib/home.html49, a publicly available repository of medical images for research purposes. The datasets were pre-processed by dividing them into training and testing sets to ensure a robust evaluation of denoising performance. Specifically, 70% of the images were randomly selected for training, allowing the model to learn from a diverse set of anatomical structures and noise patterns, while the remaining 30% were reserved for testing to evaluate the generalization capability of the proposed method. Both sets included images corrupted with Gaussian noise at σ = 10, 20, 30, and 40 to simulate realistic noise conditions. Each image was divided into overlapping patches of size 8 × 8 for noise reduction and 10 × 10 for noise level estimation, as described in the methodology. The split ensured that no image instance appeared in both the training and testing sets, to avoid data leakage. Denoising quality on both sets was quantified using standard metrics (PSNR, SSIM, FSIM, Entropy, BRISQUE, PIQE, and NIQE). Image quality is better when PSNR50,51, SSIM, FSIM50,51, and Entropy are higher, and when BRISQUE52, PIQE, and NIQE53 are lower. The quantitative study confirms that the proposed method, including its noise level estimation, achieves superior denoising performance on average compared with other approaches. To evaluate the effectiveness of the proposed denoising method, we compare it with several popular algorithms, namely OD-CNN54, NDiff30, SDPM34, UDLF55, NGN56, CNCL57, BP-MEC25, FDCT58, NSCT-BT59, IDM-PM60, ONLM61, DCT-ACO7, ANLM62, and Z-NLM63. Three test images, each corrupted at σ = 10, 20, 30, and 40, are used for illustration: two MRI images (MRI1 and MRI2) and one CT image from https://www.med.harvard.edu/aanlib/home.html49. All comparison methods were run with their default parameter settings.
All experiments were conducted on a desktop computer with an Intel Core i5-4460 Quad-Core CPU running at 3.2 GHz, 16 GB of RAM, and using MATLAB R2020b as the software platform. The comparative methods were evaluated using publicly available implementations where accessible, or re-implemented according to the algorithms described in the respective publications when source code was not provided.
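To make the evaluation protocol concrete, the sketch below adds zero-mean Gaussian noise of a given σ on the 0–255 intensity scale and computes two of the metrics named above: PSNR, defined as \(10\log_{10}(255^{2}/\mathrm{MSE})\), and the Shannon entropy of the 8-bit intensity histogram. Whether the noisy images were clipped to [0, 255], and the exact entropy definition used in the experiments, are not stated, so both choices below are assumptions; the function names are hypothetical, and SSIM, FSIM, BRISQUE, PIQE, and NIQE are omitted.

```python
import numpy as np


def add_gaussian_noise(img: np.ndarray, sigma: float, seed: int = 0) -> np.ndarray:
    """Add zero-mean Gaussian noise with standard deviation sigma (0-255 scale).

    No clipping is applied here; clipping in the original experiments is an open assumption.
    """
    rng = np.random.default_rng(seed)
    return img.astype(float) + rng.normal(0.0, sigma, size=img.shape)


def psnr(ref: np.ndarray, test: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    mse = np.mean((ref.astype(float) - test.astype(float)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))


def entropy(img: np.ndarray) -> float:
    """Shannon entropy (bits) of the 256-bin histogram of an 8-bit image."""
    q = np.clip(np.round(img), 0, 255).astype(np.uint8)
    p = np.bincount(q.ravel(), minlength=256) / q.size
    p = p[p > 0]                                   # skip empty bins (0 * log 0 := 0)
    return float(-np.sum(p * np.log2(p)))
```

For a reasonably large image, `psnr(clean, add_gaussian_noise(clean, 20.0))` should be close to \(20\log_{10}(255/20)\approx 22.1\) dB, since the MSE of unclipped noise approaches \(\sigma^{2}\).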

The ability to preserve image details is demonstrated through visual analysis in Figs. 2–13 for three images at different noise levels. We first present the visual results and then the quantitative analysis using several performance metrics. Figures 2, 3, 4 illustrate the denoising results. We add Gaussian noise of several levels (\(\sigma =\) 10, 20, 30, 40) to the ground truth image and apply the different denoising techniques. Figures 2, 3, 4b–c show that OD-CNN and NDiff preserve the outer edges, although the inner details appear pixelated and blurred. In Figs. 2, 3, 4d–e, the SDPM and NGN approaches preserve the outer margins, while the inner details remain pixelated. Figures 2, 3, 4f, g, h show that UDLF, CNCL, and BP-MEC maintain the edges to some degree; nevertheless, the inner details remain indistinct. Figures 2, 3, 4i, j show that FDCT and NSCT-BT fail to preserve edge sharpness, which results in distortion. Figures 2, 3, 4k, l, m show that the IDM-PM, DCT-ACO, and ANLM approaches are ineffective in preserving the edges of the images. Figures 2, 3, 4n, o show that Z-NLM and ONLM distort the image, which increases the edge width. Figure 2, 3, 4p show that the image generated by the proposed method closely resembles the ground truth image while maintaining both internal and external details. Table 1 reports the PSNR, SSIM, FSIM, Entropy, BRISQUE, PIQE, and NIQE values of the images denoised by each technique at each noise level.
The Entropy, PSNR, FSIM, and SSIM of the image denoised by the proposed method are superior to those of existing techniques, and its PIQE, NIQE, and BRISQUE values are comparatively lower, which is desirable. Figures 5, 6, 7 present the qualitative results for the MRI2 image. Figures 5, 6, 7b, c show that the outputs of OD-CNN and NDiff have clear outer edges, but their inner details are pixelated and blurred. Figures 5, 6, 7d, e illustrate the results of the SDPM and NGN methods, in which the edges remain unclear and pixelated. Figures 5, 6, 7f, g, h show that the outputs of UDLF, CNCL, and BP-MEC lack complex details. Figures 5, 6, 7i, j show that the results of FDCT and NSCT-BT have edges that lack clarity and retain noise, which reduces their visual quality. In Figs. 5, 6, 7k, l, m, IDM-PM, DCT-ACO, and ANLM produce less clear edges, which reduces the visual quality of the image. Figures 5, 6, 7n, o show that the images produced by the Z-NLM and ONLM methods are blurry and of average visual quality. Figures 5, 6, 7p show that the proposed method suppresses noise to a greater degree than the comparative methods. Table 2 lists the average metric scores of the methods on the MRI2 image: the proposed method achieves higher Entropy, PSNR, FSIM, and SSIM values and lower PIQE, NIQE, and BRISQUE values than the other methods, which is desirable.

Figures 8, 9, 10 illustrate the qualitative results of the various denoising techniques on the CT image corrupted by Gaussian noise. In Figs. 8, 9, 10b, c, OD-CNN and NDiff reduce noise overall and preserve the image structure; however, grainy formations emerge and make the edges unclear, and finer details could be better recovered. Figures 8, 9, 10d, e show that SDPM and NGN alleviate noise to a certain extent, but the structure is slightly shifted from its original position. Figures 8, 9, 10f, g, h show that UDLF, CNCL, and BP-MEC fail to smooth the homogeneous regions, which results in a fragmented appearance. Figures 8, 9, 10i, j show that noise remains visible in the outputs of FDCT and NSCT-BT. In Figs. 8, 9, 10k, l, m, the outputs of IDM-PM, DCT-ACO, and ANLM are unclear, which makes the borders difficult to distinguish. Figures 8, 9, 10n, o show that Z-NLM and ONLM blur the image, which reduces its visual quality. Figures 8, 9, 10p show that the image reconstructed by the proposed method closely resembles the ground truth image, and the proposed approach reduces the noise level to some degree.

Table 3 presents the quantitative results of all methods across the noise levels: the Entropy, PSNR, FSIM, and SSIM scores of the proposed approach surpass those of the other methods, while its PIQE, NIQE, and BRISQUE values are comparatively low. IDM-PM, DCT-ACO, and ANLM fail to preserve the edges and the structural integrity of the image, which makes the boundaries impossible to distinguish. SDPM and NGN retain edges, but noise persists in the homogeneous areas of the image. UDLF, CNCL, and BP-MEC preserve the structure, but the image details are compromised. The images produced by FDCT and NSCT-BT remain significantly noisy, and Z-NLM and ONLM blur the image so that no region can be interpreted clearly. Our method outperforms the other methods while preserving edges and maintaining visual quality. The data presented in Figs. 11, 12, 13 and Tables 1, 2, 3 indicate that the proposed approach outperforms the other methods. Our noise level estimation approach offers a more precise and consistent assessment of noise levels in medical images than current leading algorithms, and the proposed denoising method restores essential image information better than state-of-the-art denoising techniques. Hard thresholding of singular values for non-local groups has also been used in64. Our method differs from64 by adaptively clustering the non-local patches and by applying a more refined PCA thresholding based on the MP law.
In addition to the PCA thresholding in the initial phase, the subsequent coefficient-wise LMMSE filtering uses locally estimated parameters, which further helps to maintain image detail compared with global LMMSE filtering. The incorporation of the non-local means (NLM) algorithm provides substantial advantages by maintaining complex details and textures while efficiently reducing noise. By leveraging the redundancy of similar pixels across the image, it makes denoising adaptive and data-driven, and therefore particularly effective in complex and textured regions. This leads to significantly improved visual quality compared with state-of-the-art methods, particularly for images with complex details or challenging low signal-to-noise ratios.

Ablation study

To validate the effectiveness of outlier exclusion and smoothing within the adaptive clustering and denoising process, we performed an ablation study to quantify their individual contributions to overall denoising performance. Specifically, we compared the full proposed method against two reduced variants: (1) a version without the iterative merging phase of adaptive clustering (thereby reducing outlier exclusion), and (2) a version omitting the soft thresholding (smoothing) step based on LMMSE. The evaluation was performed using MRI and CT datasets from the Cancer Imaging Archive under Gaussian noise levels σ = 10, 20, 30, and 40.

We used PSNR, SSIM, FSIM, Entropy, BRISQUE, NIQE, and PIQE as performance metrics. The quantitative results are presented in Table 4. The results show that excluding the merging phase reduced PSNR by an average of 1.2 dB and SSIM by 0.03 across noise levels, indicating the importance of outlier exclusion in preserving structural similarity. Similarly, omitting the LMMSE-based soft thresholding reduced PSNR by 0.8 dB and SSIM by 0.02, while slightly increasing BRISQUE, NIQE, and PIQE scores. These findings demonstrate that both strategies contribute to the method's denoising effectiveness by balancing noise suppression and detail preservation. Figure 14 presents a comprehensive comparison of quantitative evaluation metrics such as PSNR, SSIM, FSIM, Entropy, BRISQUE, and PIQE across different noise levels (\(\sigma\) = 10, 20, 30, 40) for the proposed method, a variant without the merging phase (no merging), and a variant without the soft thresholding step (no soft thresholding). The results show that the proposed method consistently outperforms both ablated variants across all metrics and noise levels. Specifically, the proposed method achieves the highest PSNR, SSIM, and FSIM values, indicating superior noise suppression, structural similarity preservation, and feature similarity retention, respectively. In contrast, the no-merging and no-soft-thresholding variants exhibit a noticeable degradation in performance, particularly at higher noise levels. Similarly, the proposed method yields lower BRISQUE, PIQE, and NIQE scores across noise levels, signifying improved perceptual quality compared to the ablated variants. The entropy values also suggest that the proposed method better maintains image detail while suppressing noise.
These findings collectively validate the individual and combined contributions of the merging phase (outlier exclusion) and the soft thresholding step in enhancing the overall denoising effectiveness of the proposed approach.

To evaluate the computational efficiency of the proposed method under varying noise conditions, we measured the average running time at different noise standard deviations (\(\sigma\) = 10, 20, 30, 40) on the MRI1, MRI2, and CT images. The results, previously presented in Table 3, are further visualized as a running time versus noise level curve (see Fig. 15) to facilitate comparison. The figure shows that the running time increases only slightly with the noise level and remains computationally feasible in clinical and research settings. In addition, the proposed method maintains a favourable balance between running time and denoising performance, especially at higher noise levels, where it is more robust than several benchmark methods despite a moderate increase in execution time. This confirms that the scaling of the method with respect to noise level is acceptable in practice.

Conclusion

This study proposes a robust noise level estimation method together with a cluster-wise denoising approach. Our noise level estimation approach is more precise and reliable for medical images than other methods. The proposed denoising method uses adaptive clustering to group non-local patches effectively according to their features, such as textures and edges. Each cluster is then denoised using a PCA thresholding strategy. The denoising process begins by removing most of the noise through an enhanced singular value hard thresholding scheme based on the MP law. This is followed by further noise reduction using coefficient-wise LMMSE filtering in the PCA domain, a form of soft thresholding with locally computed parameters. By combining these denoising stages with adaptive clustering, our method delivers superior detail preservation and outperforms the comparative methods. In addition, the NL-means algorithm reduces noise by computing weighted averages of pixels according to patch similarity, which it measures with a Gaussian-weighted Euclidean distance; this provides further noise suppression while preserving textures and structures. Our future work will focus on enhancing the proposed approach and exploring its applications to other medical imaging modalities and computer vision tasks. In particular, we aim to improve image restoration techniques that address noise and other degradations in medical images, to achieve higher accuracy and reliability in clinical applications.

Data availability

The datasets used in this study are openly available from (1) The Whole Brain Atlas, https://www.med.harvard.edu/aanlib/home.html, and (2) Image-Fusion-Image-Denoising-Image-Enhancement, https://github.com/Imagingscience/Image-Fusion-Image-Denoising-Image-Enhancement-

References

Nazir, N., Sarwar, A. & Saini, B. S. Recent developments in denoising medical images using deep learning: An overview of models, techniques, and challenges. Micron 180, 103615. https://doi.org/10.1016/J.MICRON.2024.103615 (2024).

El-Shafai, W., El-Nabi, S. A., Ali, A. M., El-Rabaie, E. S. M. & Abd El-Samie, F. E. Traditional and deep-learning-based denoising methods for medical images. Multimed. Tools Appl. 83(17), 52061–52088. https://doi.org/10.1007/S11042-023-14328-X (2024).

Jifara, W., Jiang, F., Rho, S., Cheng, M. & Liu, S. Medical image denoising using convolutional neural network: A residual learning approach. J. Supercomput. 75(2), 704–718. https://doi.org/10.1007/S11227-017-2080-0 (2019).

Binh, N. T. & Khare, A. Adaptive complex wavelet technique for medical image denoising. IFMBE Proc. 27, 196–199. https://doi.org/10.1007/978-3-642-12020-6_49 (2010).

Satapathy, L. M., Das, P., Shatapathy, A. & Patel, A. Bio-medical image denoising using wavelet transform. Int. J. Recent Technol. Eng. 8(1), 2874–2879 (2019).

Bai, J., Song, S., Fan, T. & Jiao, L. Medical image denoising based on sparse dictionary learning and cluster ensemble. Soft. Comput. 22(5), 1467–1473. https://doi.org/10.1007/S00500-017-2853-7 (2018).

Miri, A., Sharifian, S., Rashidi, S. & Ghods, M. Medical image denoising based on 2D discrete cosine transform via ant colony optimization. Optik (Stuttg) 156, 938–948. https://doi.org/10.1016/J.IJLEO.2017.12.074 (2018).

Chithra, R. S. & Jagatheeswari, P. Enhanced WOA and modular neural network for severity analysis of tuberculosis. Multimed. Res. https://doi.org/10.46253/J.MR.V2I3.A5 (2019).

Do, M. N. & Vetterli, M. The contourlet transform: An efficient directional multiresolution image representation. IEEE Trans. Image Process. 14(12), 2091–2106. https://doi.org/10.1109/TIP.2005.859376 (2005).

Marčenko, V. A. & Pastur, L. A. Distribution of eigenvalues for some sets of random matrices. Math. USSR-Sbornik 1(4), 457–483. https://doi.org/10.1070/SM1967V001N04ABEH001994 (1967).

Veraart, J., Fieremans, E. & Novikov, D. S. Diffusion MRI noise mapping using random matrix theory. Magn. Reson. Med. 76(5), 1582–1593. https://doi.org/10.1002/MRM.26059 (2016).

Veraart, J. et al. Denoising of diffusion MRI using random matrix theory. Neuroimage 142, 394–406. https://doi.org/10.1016/J.NEUROIMAGE.2016.08.016 (2016).

Ike, C. S., Muhammad, N., Bibi, N., Alhazmi, S. & Eoghan, F. Discriminative context-aware network for camouflaged object detection. Front. Artif. Intell. https://doi.org/10.3389/frai.2024.1347898 (2024).

Khan, H., Xiao, B., Li, W. & Muhammad, N. Recent advancement in haze removal approaches. Multimed. Syst. 28, 687–710. https://doi.org/10.1007/s00530-021-00865-8 (2022).

Muhammad, N. et al. Frequency component vectorisation for image dehazing. J. Exper. Theor. Artif. Intell. https://doi.org/10.1080/0952813X.2020.1794232 (2020).

Ike, C. S. & Muhammad, N. Separable property-based super-resolution of lousy image data. Pattern Anal. Appl. 23, 1407–1420. https://doi.org/10.1007/s10044-019-00854-8 (2020).

Khan, H. et al. Localization of radiance transformation for image dehazing in wavelet domain. Neurocomputing 381, 141–151. https://doi.org/10.1016/j.neucom.2019.10.005 (2020).

Khan, S. N. et al. Early CU depth decision and reference picture selection for low complexity MV-HEVC. Symmetry (Basel) https://doi.org/10.3390/sym11040454 (2019).

Muhammad, N. et al. Image noise reduction based on block matching in wavelet frame domain. Multimed. Tools Appl. 79, 26327–26344. https://doi.org/10.1007/s11042-020-09158-0 (2020).

Muhammad, N., Bibi, N., Jahangir, A. & Mahmood, Z. Image denoising with norm weighted fusion estimators. Pattern Anal. Appl. 21, 1013–1022. https://doi.org/10.1007/s10044-017-0617-8 (2018).

Muhammad, N. et al. Image de-noising with subband replacement and fusion process using bayes estimators. Comput. Electr. Eng. 70, 413–427. https://doi.org/10.1016/j.compeleceng.2017.05.023 (2018).

Khmag, A. Natural digital image mixed noise removal using regularization Perona-Malik model and pulse coupled neural networks. Soft. Comput. 27, 15523–15532. https://doi.org/10.1007/s00500-023-09148-y (2023).

Khmag, A. Additive Gaussian noise removal based on generative adversarial network model and semi-soft thresholding approach. Multimed. Tools Appl. 82, 7757–7777. https://doi.org/10.1007/s11042-022-13569-6 (2023).

Khmag, A., Al Haddad, S. A. R., Ramlee, R. A., Kamarudin, N. & Malallah, F. L. Natural image noise removal using nonlocal means and hidden Markov models in transform domain. Vis. Comput. 34, 1661–1675. https://doi.org/10.1007/s00371-017-1439-9 (2018).

Ji, L., Guo, Q. & Zhang, M. Medical image denoising based on biquadratic polynomial with minimum error constraints and low-rank approximation. IEEE Access 8, 84950–84960. https://doi.org/10.1109/ACCESS.2020.2990463 (2020).

Aravindan, T. E., Seshasayanan, R. & Vishvaksenan, K. S. Medical image denoising by using discrete wavelet transform: Neutrosophic theory new direction. Cogn. Syst. Res. https://doi.org/10.1016/j.cogsys.2018.10.027 (2018).

Rani, M. L. P., Sasibhushana Rao, G. & Prabhakara Rao, B. ANN application for medical image denoising. In Advances in Intelligent Systems and Computing Vol. 816, 675–684. https://doi.org/10.1007/978-981-13-1592-3_53 (2019).

Laves, M. H., Tölle, M. & Ortmaier, T. Uncertainty estimation in medical image denoising with Bayesian deep image prior. In Lecture Notes in Computer Science Vol. 12443, 81–96. https://doi.org/10.1007/978-3-030-60365-6_9 (2020).

Vijaya, K. S. & Nagaraju, C. T2FCS filter: Type 2 fuzzy and cuckoo search-based filter design for image restoration. J. Vis. Commun. Image Represent. 58, 619–641. https://doi.org/10.1016/J.JVCIR.2018.12.020 (2019).

Kollem, S., Reddy, K. R. & Rao, D. S. A novel diffusivity function-based image denoising for MRI medical images. Multimed. Tools Appl. 82(21), 32057–32089. https://doi.org/10.1007/S11042-023-14457-3 (2023).

Huang, H. et al. Self-supervised medical image denoising based on WISTA-Net for human healthcare in metaverse. IEEE J. Biomed. Health Inform. https://doi.org/10.1109/JBHI.2023.3278538 (2023).

Chyophel Lepcha, D., Goyal, B. & Dogra, A. Low-dose CT image denoising using sparse 3d transformation with probabilistic non-local means for clinical applications. Imag. Sci. J. 71(2), 97–109. https://doi.org/10.1080/13682199.2023.2176809 (2023).

Jang, S. I. et al. Spach transformer: spatial and channel-wise transformer based on local and global self-attentions for PET image denoising. IEEE Trans. Med. Imag. 43(6), 2036–2049. https://doi.org/10.1109/TMI.2023.3336237 (2024).

Shen, C., Yang, Z. & Zhang, Y. PET image denoising with score-based diffusion probabilistic models. In Lecture Notes in Computer Science Vol. 14220, 270–278. https://doi.org/10.1007/978-3-031-43907-0_26 (2023).

Lepcha, D. C. et al. A constructive non-local means algorithm for low-dose computed tomography denoising with morphological residual processing. PLoS ONE 18(9), e0291911. https://doi.org/10.1371/JOURNAL.PONE.0291911 (2023).

Ma, Y. et al. StruNet: Perceptual and low-rank regularized transformer for medical image denoising. Med. Phys. 50(12), 7654–7669. https://doi.org/10.1002/MP.16550 (2023).

Annavarapu, A. & Borra, S. An adaptive watershed segmentation based medical image denoising using deep convolutional neural networks. Biomed. Signal Process. Control 93, 106119. https://doi.org/10.1016/J.BSPC.2024.106119 (2024).

Zeng, X., Guo, Y., Li, L. & Liu, Y. Continual medical image denoising based on triplet neural networks collaboration. Comput. Biol. Med. 179, 108914. https://doi.org/10.1016/J.COMPBIOMED.2024.108914 (2024).

Sharif, S. M. A., Naqvi, R. A. & Loh, W. K. Two-stage deep denoising with self-guided noise attention for multimodal medical images. IEEE Trans. Radiat. Plasma Med. Sci. 8(5), 521–531. https://doi.org/10.1109/TRPMS.2024.3380090 (2024).

Lee, K. et al. Multi-scale self-attention network for denoising medical images. APSIPA Trans. Signal Inf. Process. 12, 204. https://doi.org/10.1561/116.00000169 (2024).

Hu, H. & Huang, Z. BeFOI: A novel method based on conditional diffusion model for medical image denoising. J. Electron. Res. Appl. 8(2), 158–165. https://doi.org/10.26689/JERA.V8I2.6394 (2024).

Zhao, W., Lv, Y., Liu, Q. & Qin, B. Detail-preserving image denoising via adaptive clustering and progressive PCA thresholding. IEEE Access 6, 6303–6315. https://doi.org/10.1109/ACCESS.2017.2780985 (2018).

Pyatykh, S., Hesser, J. & Zheng, L. Image noise level estimation by principal component analysis. IEEE Trans. Image Process. 22(2), 687–699. https://doi.org/10.1109/TIP.2012.2221728 (2013).

Liu, X., Tanaka, M. & Okutomi, M. Single-image noise level estimation for blind denoising. IEEE Trans. Image Process. 22(12), 5226–5237. https://doi.org/10.1109/TIP.2013.2283400 (2013).

Chen, G., Zhu, F. & Heng, P. A. An efficient statistical method for image noise level estimation (2015).

Ahirwar, R. A novel K-means clustering algorithm for large datasets based on divide and conquer technique. Int. J. Comput. Sci. Inf. Technol. 5(1), 301–305 (2014).

Khalilian, M., Boroujeni, F. Z., Mustapha, N. & Sulaiman, M. N. K-means divide and conquer clustering. In Proc. 2009 International Conference on Computer and Automation Engineering (ICCAE 2009) 306–309. https://doi.org/10.1109/ICCAE.2009.59 (2009).

Buades, A., Coll, B. & Morel, J. M. A non-local algorithm for image denoising. In Proc. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005) 60–65. https://doi.org/10.1109/CVPR.2005.38 (2005).

Lepcha, D. C., Goyal, B., Dogra, A., Sharma, K. P. & Gupta, D. N. A deep journey into image enhancement: A survey of current and emerging trends. Inf. Fusion 93, 36–76. https://doi.org/10.1016/j.inffus.2022.12.012 (2023).

Lepcha, D. C., Goyal, B., Dogra, A. & Goyal, V. Image super-resolution: A comprehensive review, recent trends, challenges and applications. Inf. Fusion https://doi.org/10.1016/j.inffus.2022.10.007 (2023).

Goyal, B. et al. Detailed-based dictionary learning for low-light image enhancement using camera response model for industrial applications. Sci. Rep. https://doi.org/10.1038/s41598-024-64421-w (2024).

Lepcha, D. C., Goyal, B., Dogra, A., Wang, S. H. & Chohan, J. S. Medical image enhancement strategy based on morphologically processing of residuals using a special kernel. Expert. Syst. https://doi.org/10.1111/exsy.13207 (2022).

Atal, D. K. Optimal deep CNN–based vectorial variation filter for medical image denoising. J. Digit. Imag. 36(3), 1216–1236. https://doi.org/10.1007/S10278-022-00768-8 (2023).

Rai, S., Bhatt, J. S. & Patra, S. K. An unsupervised deep learning framework for medical image denoising. Preprint at https://arxiv.org/abs/2103.06575v1 (2021).

Fu, B., Zhang, X., Wang, L., Ren, Y. & Thanh, D. N. H. A blind medical image denoising method with noise generation network. J. Xray Sci. Technol. 30(3), 531–547. https://doi.org/10.3233/XST-211098 (2022).

Geng, M. et al. Content-noise complementary learning for medical image denoising. IEEE Trans. Med. Imag. 41(2), 407–419. https://doi.org/10.1109/TMI.2021.3113365 (2022).

Anandan, P., Giridhar, A., Lakshmi, E. I. & Nishitha, P. Medical image denoising using fast discrete curvelet transform. Int. J. Emerg. Trends Eng. Res. 8(7), 3760–3765. https://doi.org/10.30534/IJETER/2020/139872020 (2020).

Wang, X., Chen, W., Gao, J. & Wang, C. Hybrid image denoising method based on non-subsampled contourlet transform and bandelet transform. IET Image Process 12, 778–784. https://doi.org/10.1049/iet-ipr.2017.0647 (2018).

Wang, N. et al. A hybrid model for image denoising combining modified isotropic diffusion model and modified perona-malik model. IEEE Access 6, 33568–33582. https://doi.org/10.1109/ACCESS.2018.2844163 (2018).

Kelm, Z. S., Blezek, D., Bartholmai, B. & Erickson, B. J. Optimizing non-local means for denoising low dose CT. In Proc. 2009 IEEE International Symposium on Biomedical Imaging: From Nano to Macro (ISBI 2009) 662–665. https://doi.org/10.1109/ISBI.2009.5193134 (2009).

Li, Z. et al. Adaptive nonlocal means filtering based on local noise level for CT denoising. Med. Phys. https://doi.org/10.1118/1.4851635 (2014).

Wang, J. & Yin, C. C. A Zernike-moment-based non-local denoising filter for cryo-EM images. Sci. China Life Sci. 56, 384–390. https://doi.org/10.1007/s11427-013-4467-3 (2013).

Deledalle, C.-A., Salmon, J. & Dalalyan, A. Image denoising with patch based PCA: local versus global. In Proc. British Machine Vision Conference (BMVC) (2011).

Acknowledgements

The authors thank the Biomedical Sensors & Systems Lab for the research support and the article processing charges.

Author information

Contributions

M.S.: conceptualization, data analysis, experimental design, execution, and original draft preparation. A.D.: methodology, experimental design, project administration, validation, and original draft preparation. B.G.: conceptualization, experimental design, execution, and original draft preparation. A.G.: methodology, experimental design, and original draft preparation. M.J.S.: investigation, validation, supervision, funding acquisition, review and final manuscript preparation. All authors have read and approved the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sharma, M., Dogra, A., Goyal, B. et al. Detail-preserving denoising of CT and MRI images via adaptive clustering and non-local means algorithm. Sci Rep 15, 23859 (2025). https://doi.org/10.1038/s41598-025-08034-x

DOI: https://doi.org/10.1038/s41598-025-08034-x