Abstract

This study presents an ensemble-based approach for detecting and classifying sesame diseases using deep convolutional neural networks (CNNs). Sesame is an important oilseed crop that faces significant challenges from diseases such as phyllody and bacterial blight, which reduce crop yield and quality. The objective of this research is to develop a robust and accurate model for identifying these diseases by leveraging three state-of-the-art CNN architectures: ResNet-50, DenseNet-121, and Xception. The proposed ensemble model combines these networks to improve classification accuracy and generalization. A dataset of sesame leaf images representing healthy, phyllody, and bacterial blight conditions was used to train and evaluate the models. The ensemble achieved an overall accuracy of 96.83%, outperforming each individual model in classifying the different leaf conditions. The results highlight the effectiveness of combining multiple deep learning models, which allows diverse feature representations and decision strategies to be exploited. This study also discusses the advantages of the ensemble methodology, including improved robustness to variations in disease symptoms and adaptability to changing agricultural practices. The findings have significant implications for precision agriculture, offering a reliable tool for the early detection and classification of sesame diseases. By enabling timely interventions, this ensemble-based framework can contribute to the sustainability and productivity of sesame cultivation, ultimately supporting food security and agricultural resilience.


Introduction

Sesame (Sesamum indicum L., Pedaliaceae), one of the oldest cultivated oilseed crops, is thought to have originated in Africa. Large populations of its wild relatives occur in both Africa and India. The plant has naturalized widely in tropical and subtropical climates worldwide1.

According to global sesame production data, Sudan was Africa’s top producer of sesame seeds in 2020, with more than 1.5 million metric tons2. Tanzania and Nigeria ranked second and third, with outputs of 710,000 and 490,000 tons, respectively. In that year, Africa produced more than 4.2 million metric tons of sesame seeds1.

Sesame seeds are rich in protein, vitamins, minerals, and antioxidants, and are frequently added to recipes to give them a nutty flavor and crunchy texture3,4. According to the Ethiopian pulses, oilseeds, and spices processors-exporters organization, Ethiopia is one of the top six countries in the world for sesame seed and linseed production5,6.

Both the market and the consumption of sesame are growing annually worldwide. Increased production and higher quality of oilseeds can significantly support economic growth at the national, regional, and household levels7. Given Ethiopia’s potential for sesame exports, the government needs to promote the cultivation of safe, high-quality sesame and encourage farmers to invest in crop-production technologies. This would increase the output of premium sesame, which commands higher prices, and boost farmers’ revenue8,9.

Diseases of sesame cause significant financial losses in the global agricultural sector. Monitoring sesame plant health and detecting disease early are crucial for stopping the spread of infection and enabling efficient management10,11. Owing to variation in pathogen species, changes in production practices, and inadequate plant protection systems, both the number of diseases affecting sesame and the severity of the damage they cause have grown in recent years. Diseases that harm the sesame crop include phyllody (caused by a vector-transmitted phytoplasma), which deforms leaves and flowers; bacterial blight (Xanthomonas sesami); leaf spot (Pseudomonas sesami); and wilt (Fusarium oxysporum f. sp. sesami)12.

Determining the type and severity of a disease can be challenging, although small farms may be able to diagnose plant diseases to some extent with the help of professionals. There remains, however, a need for specialized techniques that make quick and easy disease detection available to everyone. Several researchers13,14,15,16 are working on detecting diseases in various crops using different methodologies, including deep learning and machine learning. Although many studies have examined plant disease detection techniques, including for sesame, the specific context of Ethiopian sesame cultivation needs more research. Existing efforts, while valuable, have not fully achieved the desired accuracy and performance in detecting sesame diseases17. The potential advantages of ensemble-based approaches, which combine multiple models, have not been thoroughly explored in Ethiopia. This gap highlights the need for further research and development to address the unique challenges and requirements of sesame disease detection in Ethiopia.

This study introduces a deep learning-based convolutional neural network (CNN) model as a tool for agricultural experts and farmers to accurately diagnose sesame diseases, including phyllody and bacterial blight. Given the proven effectiveness of ensemble CNNs in various image-based prediction tasks, this approach is well suited to overcoming the limitations of expert-based disease identification. By harnessing deep learning, the model aims to improve diagnostic accuracy, enable early detection, and ultimately support better disease management in sesame cultivation.

This study tackles the major challenge of plant diseases in sesame farming, which threaten crop yields and farmers’ incomes. As global demand increases, quick and precise disease detection becomes crucial for sustaining productivity. Traditional methods are slow and rely heavily on expertise, reducing their usefulness in large-scale farming. Using ensemble learning and deep convolutional neural networks, this research aims to create a real-time, highly accurate disease detection system. By giving farmers actionable insights, the study improves diagnostic accuracy, promotes sustainable farming, and supports food security in sesame-growing areas.

Statement of the problem

Sesame, a cornerstone of Ethiopia’s agricultural economy, faces significant challenges that hinder its productivity and sustainability. Despite being a major foreign exchange earner, sesame production is constrained by factors such as limited supply, increased demand, and the prevalence of various diseases18.

Traditional disease identification methods, which rely on human expertise, are often time-consuming, subjective, and prone to errors. This can lead to misdiagnosis, delayed intervention, and further yield losses. To address these limitations, several researchers have explored various techniques for plant disease detection. For example, Abeje et al.13 proposed a stepwise deep learning approach for sesame disease detection, Jasrotia et al.14 suggested a disease identification model using convolutional neural networks for maize plants, and Alexandre et al.19 introduced a coffee leaf rust detection method using convolutional neural networks. However, existing research has not yet fully achieved the desired level of accuracy and performance in detecting sesame diseases. Additionally, only a few studies have been conducted specifically within Ethiopia.

In recent years, deep convolutional neural networks (CNNs) have shown remarkable capabilities in image analysis and classification. Building on these capabilities, this study aims to create a robust and accurate model for detecting and classifying sesame diseases. An ensemble of CNNs, which combines the strengths of multiple models, is expected to improve the model’s performance and ability to generalize.

Several potential limitations and challenges remain, both in the techniques used to improve the model’s effectiveness and in areas that require further investigation before real-world deployment.

Need for dataset expansion and diversity

Although data augmentation was used to increase the dataset size, the “Future research” section suggests expanding the training dataset and incorporating additional data sources to improve performance and adaptability across diverse conditions. The original dataset was relatively small (640 images in total); while augmentation helps, a larger and more diverse dataset collected over multiple seasons and locations may be necessary for the model to generalize robustly to real-world variations in disease appearance. Other studies covered in the literature review also note limitations due to small dataset sizes.

Reliance solely on image data

The current model relies exclusively on image-based analysis of sesame leaves. The “Future research” section suggests incorporating additional data sources such as climate, soil health, and agronomic practices. This implies that environmental and agricultural factors can influence disease manifestation, and a model based only on images may not capture the full complexity of the problem or provide insight into its underlying causes, potentially limiting its diagnostic accuracy or applicability in certain situations.

Potential for further model optimization and tuning

The study suggests that future research should focus on optimizing and tuning the individual models (ResNet-50, DenseNet-121, and Xception). While the current ensemble shows superior performance, this suggestion implies that the individual models, and thus possibly their combination within the ensemble, might not be fully optimized for all scenarios, leaving room for potential performance improvements.

Computational resources and real-time deployment challenges

Training was performed on Google Colaboratory with a GPU, required significant computational power, and took 12 h. The “Future research” section, however, proposes developing real-time mobile or web applications for disease detection. Deploying such a complex ensemble model on devices with limited computational resources (such as smartphones) for real-time analysis presents significant technical challenges in speed, processing power, and power consumption that are not addressed in the current work.

Need for longitudinal studies

The study evaluated the model on the collected dataset. However, the “Future research” section suggests conducting longitudinal studies that assess the model’s performance over multiple growing seasons. This highlights the challenge that disease appearance and prevalence can vary significantly from year to year due to climate change, pathogen evolution, or shifts in agricultural practices. The model’s performance and reliability therefore need to be validated over time and under dynamic, real-world seasonal variation.

Exploration of other ensemble techniques

The study used a soft-voting ensemble method. The “Future research” section suggests exploring other ensemble techniques, such as boosting and bagging. This indicates that, while the current ensemble is effective, it may not be the optimal combination strategy, and other methods could yield further performance improvements or offer different trade-offs in computational cost or robustness.

Contribution of the study

This study’s contributions aim to improve agricultural practices and the broader economic outcomes of sesame cultivation. Specifically, the study helps reduce and control the impact of sesame diseases by enabling early and accurate detection, which in turn improves crop yield and the livelihoods of farming communities. It provides a fast and efficient image-based method for classifying sesame diseases, allowing producers to detect and treat infections before they spread. It empowers producers to monitor crop health effectively and take timely action, minimizing losses and ensuring healthier sesame cultivation. It increases farmers’ productivity and profitability as a direct result of timely detection and treatment. Finally, it supports the production of high-quality sesame seeds, which enhances export potential and national revenue. By improving agricultural productivity and strengthening global market relations, the study contributes to national economic growth, food security, and overall societal well-being, ultimately fostering sustainable agricultural practices and technological advances in disease management.

The research also opens new opportunities for further studies, addressing existing limitations and refining disease detection models. The research develops a robust and accurate model for identifying sesame diseases like phyllody and bacterial blight. This is achieved by leveraging an ensemble of state-of-the-art CNN architectures (ResNet-50, DenseNet-121, and Xception). The proposed ensemble model integrates these networks to enhance classification accuracy and improve generalization. This ensemble-based framework offers a reliable tool for early detection and classification, which has significant implications for precision agriculture.

The novelty of the proposed work lies primarily in its ensemble-based deep convolutional neural network (CNN) framework for sesame disease detection and classification, applied specifically within the Ethiopian agricultural context. The key aspects contributing to this novelty are outlined below.

Leveraging an ensemble of deep CNNs

The study’s core contribution is the integration and application of an ensemble method combining multiple state-of-the-art CNN architectures (ResNet-50, DenseNet-121, and Xception) for sesame disease detection. Although some research has explored CNNs for plant disease detection, including for sesame, such work rarely leverages ensemble techniques that could enhance performance. The potential benefits of ensemble-based approaches have not been comprehensively explored in the Ethiopian context, a research gap that this study addresses.

Addressing limitations of previous methods

The proposed ensemble framework is presented as a way to overcome the limitations of traditional methods (which are slow, subjective, expertise-dependent, and prone to errors) and of existing research (which has not fully achieved the desired level of accuracy and performance). The ensemble approach aims to enhance classification accuracy and improve generalization across diverse data, thereby increasing robustness to variations in disease symptoms and improving adaptability.

Focus on the Ethiopian context

The research is specifically grounded in the context of Ethiopian sesame cultivation. Previous disease detection efforts, though valuable, have not fully addressed the specific challenges and requirements in Ethiopia.

Demonstrated superior performance

The ensemble model achieved a higher overall accuracy (96.83%) than each of the individual models (ResNet-50: 91.89%, DenseNet-121: 94.5%, Xception: 92.18%). Together with high recall and a strong F1-score, this performance makes the ensemble a robust and reliable disease classification framework and a potentially more dependable tool for practical application.

Providing a fast and efficient method

By using image-based analysis and deep learning, the study offers a fast and efficient method for classifying different sesame diseases, in contrast to slower traditional methods.

In summary, the novelty lies in pioneering the use of a combined ensemble of multiple deep CNN architectures specifically for sesame disease detection, demonstrating its effectiveness in achieving higher accuracy and robustness compared to single models, and applying this framework to address the specific needs and challenges within the Ethiopian agricultural landscape.

Literature review and related works

Abeje et al.13 proposed a comprehensive image processing pipeline for sesame disease detection comprising image acquisition, preprocessing, segmentation, data augmentation, feature extraction, and classification. Images were collected from Ethiopia’s Amhara region using Samsung A32 and iPhone 6s smartphones, yielding 540 images at 450 × 680 resolution. These images, representing bacterial blight, phyllody, and healthy leaves, were used to train a convolutional neural network (CNN) whose fully connected layers with softmax activation assigned each image to its disease category.

To address rice disease detection, Lu et al.20 developed a hybrid CNN-SVM model using a dataset of 619 rice leaf images across four disease classes. The model achieved a classification accuracy of 91.37% using an 80–20 training–testing split. Despite this success, the imbalanced dataset and limited data volume posed concerns about overfitting and model generalizability.

Haimanot21 focused on identifying bacterial blight (Xanthomonas campestris pv. sesami), Cercospora leaf spot (Cercospora sesami), and sesame gall midge (Asphondylia sesami) using three deep learning models (a CNN, AlexNet, and GoogLeNet) trained on a dataset collected from EIAR stations in Humera, Metema, and Assossa. GoogLeNet achieved the highest accuracy (95.5%), outperforming the CNN (89.4%) and AlexNet (81.6%).

Eyerusalem22 developed a deep learning-based system for classifying sesame leaves as bacterial blight, phyllody, or healthy, incorporating image augmentation and preprocessing techniques such as median and average filtering, histogram equalization, and brightness preservation. A SegNet architecture was used for semantic segmentation to isolate leaf areas, followed by feature extraction and classification using a deep CNN. The system achieved 96.67% testing accuracy.

Bashier IH et al.23 conducted a comparative analysis between a custom CNN model and five established architectures (VGG16, VGG19, ResNet50, ResNet101, and ResNet152) using a dataset of 1,695 sesame leaf images from Sudan, classified into two disease types and healthy leaves. Their study highlighted the relative performance differences among models.

Tadele AB et al.24 proposed a systematic deep learning pipeline consisting of six stages: acquisition, preprocessing, segmentation, augmentation, feature extraction, and classification. A dataset of 540 sesame leaf images (Bacterial Blight, Phyllody, and healthy) was collected from Dejen, Northern Ethiopia. The model achieved 99% training and 98% testing accuracy, though limited sampling may affect generalizability.

Jasrotia et al.14 introduced an optimized CNN model for maize leaf disease detection, improving upon GoogLeNet and Cifar10 architectures. Using 500 maize leaf images, the enhanced models achieved accuracies of 98.9% and 98.8%, respectively. However, the controlled dataset environment may limit performance in diverse conditions.

Marcos et al.15 employed a simplified CNN to detect coffee leaf rust, using a small dataset of 159 images. The model, composed of convolutional, ReLU, and max-pooling layers, achieved 95% accuracy. While promising, the limited dataset and scope of disease detection constrain broader applicability.

El-Mashharawi et al.16 presented a rule-based expert system using the CLIPS shell to diagnose four tomato diseases. Users input symptom data, and the system returned diagnostic results. Although the system performed well during field testing, its rule-based structure limited flexibility in complex or unseen scenarios.

Arivazhagan et al.25 tackled mango disease detection using ResNet50 on a dataset of 8,853 leaf images with four disease types. Despite achieving a 91.5% accuracy, the study lacked in-depth parameter tuning or performance optimization.

Ashqar et al.26 developed a deep learning model to identify six tomato leaf conditions, including early blight, septoria leaf spot, leaf mold, bacterial spot, yellow leaf curl virus, and healthy conditions. Their model demonstrated a remarkable accuracy of 99.84%.

Gurusamy et al.27 applied ResNet18 and ResNet50 to detect blackleg disease in potatoes, using 532 images. Although the model yielded good results, issues such as image isolation, long training times, and lack of validation affected reliability.

Another study28 explored deep learning for apple leaf disease detection, covering black rot, scab, cedar, and rust. Using data augmentation and a CNN model, the researchers achieved 98.54% overall accuracy.

Jadhav et al.29 used AlexNet and GoogLeNet to classify soybean leaf diseases (brown spot, bacterial blight, Frogeye leaf spot) with 649 diseased and 550 healthy images. AlexNet achieved 98.75% accuracy, outperforming GoogLeNet (96.25%).

As discussed in Table 1 above, previous studies on sesame disease detection have predominantly relied on traditional image processing and individual convolutional neural network (CNN) models, which often face limitations such as sensitivity to environmental variability, plant growth stage differences, and small or imbalanced datasets. While CNNs have shown promise in disease classification, most approaches have not fully explored ensemble learning techniques, which have the potential to improve robustness and generalization by combining the strengths of multiple models. Furthermore, limited labeled data from the Ethiopian agricultural context and the diversity of disease manifestations across different regions pose additional challenges to model accuracy and reliability.

This study addresses these gaps by proposing an ensemble-based deep CNN framework specifically tailored for sesame disease classification in Ethiopia. By integrating multiple CNN architectures, the proposed method enhances the model’s ability to capture diverse disease features and variations caused by differing environmental conditions and regional disease patterns. This approach aims to improve classification accuracy and robustness, reduce overfitting risks associated with limited data, and ultimately provide a more effective tool for disease management in Ethiopian sesame crops. The ensemble framework thus offers a significant advancement over existing single-model methods, contributing to sustainable agricultural practices and improved crop health monitoring in the region.

Methodology

Traditional methods for disease detection, relying on human expertise, are often time-consuming, subjective, and prone to errors30,31. To address these limitations, this study proposes a deep learning-based approach, leveraging the power of CNNs to automatically extract relevant features from sesame leaf images. By combining multiple CNN architectures within an ensemble framework, the model seeks to enhance classification accuracy and robustness.

System architecture

As shown in Fig. 1 below, the system architecture comprised six main components: Sesame image data collection, preprocessing, feature extraction, data splitting, training, and classification. These components worked together to create an effective and accurate classification system.

Experimentation

This study followed a structured experimental process to develop and evaluate an ensemble-based deep convolutional neural network (CNN) model for sesame disease detection and classification. The key phases included dataset collection and preparation, model selection and implementation, and rigorous evaluation.

Dataset collection and preparation

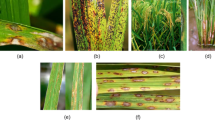

A dataset of sesame leaf images was collected to train and evaluate the proposed ensemble deep learning model. The images in the dataset are labeled by experts in the field, ensuring that the classifications are accurate and reliable. Their specialized knowledge and experience in the domain play a crucial role in the labeling process, enhancing the dataset’s quality and validity for subsequent analysis. A total of 640 sesame leaf images were captured using smartphone cameras with a resolution of 96 dpi × 96 dpi. The dataset comprised three distinct classes as shown in Table 2.

Images were collected from diverse sesame fields in Metema and Humera, Amhara region, Ethiopia to ensure data variability and representativeness. To capture the full spectrum of disease symptoms, images were acquired at different growth stages and under varying environmental conditions. Efforts were made to maintain consistent image quality throughout the collection process. Images were captured under adequate lighting conditions to avoid shadows and overexposure. The camera’s focus was adjusted to ensure clear and sharp image details.

To increase the size of the dataset and improve model generalization, image augmentation techniques were applied. These techniques included random rotation, flipping, shearing, and scaling of the original images. This process artificially expanded the dataset, enhancing the model’s ability to recognize diverse image variations. Table 3 shows the values we have used for each parameter of augmentation.
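The augmentation step can be illustrated with a small, dependency-free sketch. It is not the authors’ pipeline: images are assumed to be single-channel grids stored as nested lists, the helper names (`hflip`, `vflip`, `rot90`, `augment`) are hypothetical, and the random rotation, shearing, and scaling described above are replaced by lossless flips and 90-degree rotations, because arbitrary angles and shears require pixel interpolation. The actual parameter values are those listed in Table 3.

```python
import random
from typing import Callable, List

# Hypothetical representation: a single-channel image as a list of pixel rows.
Image = List[List[int]]


def hflip(img: Image) -> Image:
    """Mirror the image left-to-right."""
    return [row[::-1] for row in img]


def vflip(img: Image) -> Image:
    """Mirror the image top-to-bottom (rows are copied, not shared)."""
    return [list(row) for row in img[::-1]]


def rot90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def augment(img: Image, n_variants: int, rng: random.Random) -> List[Image]:
    """Return n_variants randomly transformed copies; the input is never modified."""
    ops: List[Callable[[Image], Image]] = [hflip, vflip, rot90]
    variants = []
    for _ in range(n_variants):
        out = img
        # Apply a random, non-empty subset of the transformations in random order.
        for op in rng.sample(ops, k=rng.randint(1, len(ops))):
            out = op(out)
        variants.append(out)
    return variants


# Usage: one original image plus two augmented variants (a threefold expansion).
rng = random.Random(0)
leaf = [[1, 2], [3, 4]]
dataset = [leaf] + augment(leaf, n_variants=2, rng=rng)
print(len(dataset))  # 3
```

In a Keras pipeline the same idea is usually expressed with `tf.keras.preprocessing.image.ImageDataGenerator`, whose `rotation_range`, `shear_range`, `zoom_range`, and `horizontal_flip` arguments correspond to the transformations listed above; the text does not state which API was used.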

To enhance the quality of the collected sesame leaf images, various preprocessing techniques were applied, including image resizing, normalization, noise removal, histogram equalization, filtering, and enhancement. The images were standardized to 224 × 224 pixels using bicubic interpolation, which reduces computational cost and ensures compatibility with the pre-trained CNN models. Noise removal and histogram equalization were employed to improve image clarity, addressing speckle, Gaussian, and salt-and-pepper noise introduced during acquisition. Median filtering was chosen for its effectiveness in preserving edge details, which are essential for accurate classification. Additionally, Brightness Preserving Dynamic Histogram Equalization (BPDHE) was applied to enhance image contrast while maintaining natural brightness levels, further improving the dataset’s quality for deep learning-based classification.
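Median filtering replaces each pixel with the median of its neighborhood, so an isolated salt-and-pepper outlier is discarded while a step edge keeps its position and sharpness. The sketch below assumes a single-channel image stored as nested lists, a 3 × 3 window, and edge replication at the borders; the window size and border handling are assumptions, since the text does not specify them, and BPDHE is not shown.

```python
from typing import List

Image = List[List[int]]  # single-channel image as pixel rows (assumed layout)


def median_filter3x3(img: Image) -> Image:
    """3 x 3 median filter with edge replication at the image border."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = []
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    # Clamp coordinates so border pixels reuse their nearest neighbors.
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    window.append(img[yy][xx])
            window.sort()
            out[y][x] = window[4]  # middle of the 9 sorted values
    return out


# Usage: a single salt-noise pixel is removed...
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 255
print(median_filter3x3(noisy)[2][2])  # 10
# ...while a sharp vertical edge survives unchanged.
edge = [[0, 0, 100, 100, 100] for _ in range(3)]
print(median_filter3x3(edge) == edge)  # True
```

In practice a library routine such as OpenCV’s `cv2.medianBlur(img, 3)` would normally be used; the text does not say which library performed the filtering.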

To ensure an effective and unbiased model development process, the dataset was divided into three subsets: training (80%), validation (10%), and test (10%). The training set is used to learn patterns, the validation set to tune hyperparameters and monitor generalization, and the test set to provide an independent evaluation of the model’s performance on unseen data. This division enables rigorous training and unbiased assessment, supporting the development of robust sesame disease classification models.

We adopted a deep learning approach because of its superior performance in image classification and detection. Specifically, we employed three pre-trained CNN models, ResNet-50, DenseNet-121, and Xception, which can process raw images without extensive segmentation. CNNs integrate feature extraction and classification within a unified framework, making them well suited to recognizing sesame plant diseases. Unlike traditional machine learning, which relies on handcrafted features and problem-specific algorithms, deep learning automates feature learning and offers robustness, improving the accuracy and efficiency of disease classification.
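The 80/10/10 split described above can be illustrated with a seeded shuffle-and-slice function. This is a hypothetical sketch (`split_80_10_10`, the seed value, and the file names are assumptions); the text does not say whether the split was stratified by class, which is a common refinement for small datasets.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_80_10_10(items: Sequence[T], seed: int = 42) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle a copy of items and slice it into train/validation/test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n = len(shuffled)
    n_train = n * 8 // 10  # integer arithmetic avoids floating-point rounding surprises
    n_val = n // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Usage with 640 hypothetical image identifiers.
train, val, test = split_80_10_10([f"img_{i:03d}.jpg" for i in range(640)])
print(len(train), len(val), len(test))  # 512 64 64
```

With 640 images this yields exactly the 512/64/64 subsets reported in the results.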

Feature extraction and classification

The pre-trained CNN models ResNet-50, DenseNet-121, and Xception were used for feature extraction from sesame leaf images. CNNs, designed for image processing tasks, consist of multiple convolutional layers that extract hierarchical features from input images. By automatically identifying relevant patterns, these models improve classification accuracy, making them effective for sesame disease detection.

The same three pre-trained models are also used to classify sesame plant diseases: after extracting relevant features, each model classifies them using its convolutional architecture. Figure 2 illustrates the feature extraction and classification process within the convolutional neural network, highlighting how these models analyze and classify disease patterns in sesame plants.

Fig. 2 Sesame image feature extraction and classification process28.

To enhance accuracy, we combined the predictions of ResNet-50, DenseNet-121, and Xception using soft voting. This technique aggregates the models’ class-probability outputs, so that each model’s confidence contributes to the final classification. By leveraging the strengths of all three models, the system captures diverse features and patterns in sesame leaf images, resulting in a more robust and reliable disease classification framework.

Model selection and implementation

For the implementation of our deep learning model, we used Keras, a high-level API built on TensorFlow, chosen for its ease of use and flexibility. TensorFlow provided the backend, enabling efficient computation and optimization. Google Colaboratory, a cloud-based platform with a free GPU environment, was utilized for training, offering a 12 GB NVIDIA Tesla K80 GPU and 12 GB of RAM. The Colab environment was selected for its flexibility, scalability, and ease of use, allowing rapid prototyping and testing of the proposed system without requiring extensive hardware or software infrastructure. The Colab environment was set up with an NVIDIA Tesla T4 GPU, Ubuntu 18.04.4 LTS as the operating system, Python 3.7.10, and deep learning frameworks including TensorFlow 2.4.0, Keras 2.4.3, and PyTorch 1.9.0. These specifications enabled efficient training, evaluation of deep learning models, and the implementation of the ensemble approach using the built-in libraries and tools provided by Google Colab. The model training was carried out continuously for 12 h on this setup, with the GPU significantly accelerating the process and the RAM handling the dataset and model complexity.

To visualize the performance of our proposed model, we use a confusion matrix, which clearly presents the classification results for each class. It compares true class labels with predicted labels, showing correctly classified (True Positive and True Negative) and misclassified instances (False Positive and False Negative).
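The confusion matrix and the per-class metrics reported in the results (precision, recall, F1-score) can be computed with a few lines of standard-library Python. In the multi-class case, TP, FP, FN, and TN are defined one-vs-rest for each class. The function names and the integer label encoding (0 = healthy, 1 = phyllody, 2 = bacterial blight) are assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def one_vs_rest_counts(cm: List[List[int]], c: int) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for class c, treating all other classes as negative."""
    total = sum(sum(row) for row in cm)
    tp = cm[c][c]
    fp = sum(row[c] for row in cm) - tp  # predicted c, but truly another class
    fn = sum(cm[c]) - tp                 # truly c, but predicted another class
    tn = total - tp - fp - fn
    return tp, fp, fn, tn


def precision_recall_f1(cm: List[List[int]], c: int) -> Tuple[float, float, float]:
    tp, fp, fn, _ = one_vs_rest_counts(cm, c)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Usage on six hypothetical test predictions.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 2, 1, 0, 2, 2], n_classes=3)
print(cm)                          # [[1, 0, 1], [1, 1, 0], [0, 0, 2]]
print(one_vs_rest_counts(cm, 2))   # (2, 1, 0, 3)
```

scikit-learn’s `sklearn.metrics.confusion_matrix` and `classification_report` provide the same quantities; the text does not say which tool produced the reported figures.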

Results and discussion

Dataset preparation

The dataset for this study was collected from sesame fields in Metema and Humera, Amhara region, Ethiopia, and comprises 640 images of sesame leaves in three classes: healthy, phyllody, and bacterial blight. The images were captured with a high-resolution camera and preprocessed to improve quality and reduce noise. Based on a preliminary experiment to determine the optimal splitting ratio, the dataset was split into 512 images for training (80%), 64 for validation (10%), and 64 for testing (10%). The annotations were performed by expert agronomists and validated for accuracy. The images were then resized to 224 × 224 pixels and normalized to zero mean and unit standard deviation, putting the dataset in a suitable format for deep learning model training.
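Normalizing to zero mean and unit standard deviation is a z-score transform, x' = (x − μ) / σ. The sketch below assumes the statistics are computed per image over all pixel values, using the population standard deviation; the text does not say whether per-image, per-channel, or dataset-wide statistics were used, and the function name `standardize` is hypothetical.

```python
import math
from typing import List, Sequence


def standardize(pixels: Sequence[float]) -> List[float]:
    """Z-score a flattened image: subtract the mean, divide by the population std."""
    n = len(pixels)
    mean = sum(pixels) / n
    std = math.sqrt(sum((p - mean) ** 2 for p in pixels) / n)
    if std == 0:
        return [0.0] * n  # constant image: avoid division by zero
    return [(p - mean) / std for p in pixels]


# Usage on a tiny hypothetical image flattened to four pixel values.
z = standardize([0, 2, 4, 6])
print([round(v, 3) for v in z])
```

Keras also ships architecture-specific `preprocess_input` functions (for example `tf.keras.applications.resnet50.preprocess_input`) that apply each pre-trained network’s own input convention; the text does not say whether these were used instead of, or in addition to, z-score normalization.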

Training and evaluation

The dataset for this research, consisting of 640 images from sesame fields, was stored on Google Cloud Storage and accessed through Google Colab. To enhance the dataset, especially given its limited size, data augmentation techniques were applied, including random rotation, flipping, shearing, and scaling. These techniques increased the dataset size from 576 images to 1,728, ensuring balanced representation across the three classes: Healthy, Phyllody-affected, and Bacterial blight-affected. As shown in Table 4 below, the augmented dataset was crucial for training robust deep learning models, addressing class imbalance, and improving model accuracy.
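The augmentation figures can be reconciled with the split reported above under one assumption that the text does not state explicitly: that the 576 pre-augmentation images are the training and validation subsets combined, with the 64 test images held out.

```latex
\begin{aligned}
512 + 64 &= 576 \quad \text{(training + validation images)} \\
\frac{1728}{576} &= 3
\end{aligned}
```

A factor of 3 is consistent either with each image contributing itself plus two augmented variants, or with 1,728 / 3 = 576 images per class after balancing across the three classes; Table 4 gives the actual per-class counts.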

Result of ResNet-50

As shown in Table 5 below, the ResNet-50 model achieved an overall accuracy of 91.89% in classifying sesame leaf images into healthy, Phyllody-affected, and Bacterial blight-affected categories. It demonstrated high precision for healthy leaves (93.00%) and Bacterial blight-affected leaves (94.00%), but slightly lower precision for Phyllody-affected leaves (88.00%). The recall rates were strong across all classes: 91.00% for healthy, 92.00% for Phyllody-affected, and 90.00% for Bacterial blight-affected, with some missed detections, especially in the Bacterial blight category. The ResNet-50 model achieved F1-scores of 92.00% for healthy leaves, 90.00% for Phyllody-affected, and 92.00% for Bacterial blight-affected leaves, indicating balanced performance across the classes. While it excelled in classifying healthy and bacterial blight-affected leaves, there is room for improvement in accurately identifying Phyllody-affected samples. Enhancing the dataset or using advanced techniques like ensemble methods could address this. Overall, the results highlight the potential of ResNet-50 for early disease detection and management in sesame cultivation.
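The F1-score is the harmonic mean of precision and recall, F1 = 2PR/(P + R), so the per-class F1 values above can be checked directly against the reported precision and recall. The short Python check below does this for ResNet-50. The dictionary only restates the numbers given in the text.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) for ResNet-50, as reported in the text.
resnet50 = {
    "Healthy": (93.0, 91.0),
    "Phyllody": (88.0, 92.0),
    "Bacterial blight": (94.0, 90.0),
}
for cls, (p, r) in resnet50.items():
    print(f"{cls}: F1 = {f1_score(p, r):.2f}%")
```

The computed values (91.99%, 89.96%, and 91.96%) round to the reported 92%, 90%, and 92%.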

The confusion matrix in Figure 3 below shows that the model correctly classified 19 of 20 healthy leaves, with one misclassified as Bacterial blight. For Phyllody-affected leaves, 18 of 20 were correctly identified, and 2 were misclassified (as Healthy and as Bacterial blight). The Bacterial blight class had the largest number of correct classifications, with 22 of 24 correctly classified. Despite the high overall accuracy, these misclassifications indicate room for further improvement.

The accuracy and loss curves for the ResNet-50 model, shown in Figure 4 (a) and (b), demonstrate a successful training process for sesame leaf image classification. The training accuracy steadily increased, reaching around 95% by the 23rd epoch, with validation accuracy stabilizing at 93%, indicating effective learning and minimal overfitting. The loss curves show a downward trend, with training loss reducing to 0.12 and validation loss stabilizing at 0.24, reflecting the model’s ability to minimize error and generalize well to unseen data.

Result of DenseNet-121

The DenseNet-121 model achieved an overall accuracy of 94.5% in classifying sesame leaf images into three classes: Healthy, Phyllody, and Bacterial Blight. The detailed classification metrics for this model are shown in Table 6.

The model performed strongly in identifying healthy leaves (96.0% recall) and showed balanced performance for Phyllody (96.0% precision, 92.0% recall). The Bacterial Blight class had lower precision (92.0%) than Phyllody and lower recall (93.0%) than the Healthy class. This suggests some misclassification due to overlapping symptoms and points to the need for further refinement and a more diverse training dataset.

The confusion matrix for the DenseNet-121 model, shown in Fig. 5, illustrates the model's performance on a testing dataset of 64 sesame leaf images (20 Healthy, 20 Phyllody, and 24 Bacterial Blight). While the model correctly identified most of the cases, some misclassifications occurred, indicating areas for improvement. Enhancing the training data or refining feature extraction techniques could help improve the model's ability to differentiate accurately between the classes.

The accuracy and loss curves shown in Fig. 6 reveal a clear training progression for the DenseNet-121 model. Both training and validation accuracy stabilized at approximately 97.5% and 95.5%, respectively, after the 25th epoch, demonstrating effective learning. The training loss steadily decreased to around 0.2, while the validation loss followed a similar path, indicating the model’s ability to generalize well. Overall, the curves suggest a well-tuned model with balanced accuracy and loss, showcasing reliable performance.

Result of Xception

As described in Table 7 below, the Xception model achieved an overall accuracy of 92.18% in classifying sesame leaf images, with strong performance across all classes. The Healthy class had a precision of 93.50% and a recall of 90.00%, indicating effective identification of healthy leaves. The Phyllody class had balanced precision and recall of 90.00%. The Bacterial Blight class achieved the highest F1-score (about 92.5%), with a precision of 92.00% and a recall of 93.00%. These results show that the model distinguishes reliably between the classes, which makes it suitable for agricultural applications.

Xception, with its depth-wise separable convolutions, reduces the number of parameters while preserving high accuracy. This architecture efficiently captures intricate features, making it ideal for image classification tasks. The model’s strong generalization, shown by the alignment of precision and recall, highlights its ability to manage variations in leaf appearance caused by environmental factors or disease symptoms.
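A small worked calculation makes the parameter saving concrete. A standard k × k convolution from C_in to C_out channels has k × k × C_in × C_out weights. A depthwise separable convolution, as in Keras's `SeparableConv2D` with a depth multiplier of 1, uses k × k × C_in depthwise weights plus C_in × C_out pointwise weights. The layer sizes in the example (3 × 3 kernels, 128 to 256 channels) are illustrative and are not taken from the text.

```python
def standard_conv_params(k, c_in, c_out, bias=False):
    """Weights of a standard k x k convolution (plus optional bias)."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def separable_conv_params(k, c_in, c_out, bias=False):
    """Depthwise (one k x k filter per input channel) + pointwise 1 x 1 mixing."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise + (c_out if bias else 0)


std = standard_conv_params(3, 128, 256)
sep = separable_conv_params(3, 128, 256)
print(std, sep, round(std / sep, 1))  # 294912 33920 8.7
```

For this layer size, the separable form needs roughly 8.7 times fewer weights. Because spatial filtering and channel mixing are handled by separate steps, Xception can be deep without a proportional growth in parameters.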

Figure 7 shows the training and validation accuracy and loss curves for the Xception model over 50 epochs. The accuracy curves rise steadily until around the 40th epoch, indicating effective learning and good generalization. After this point, both training and validation accuracy plateau, suggesting that the model has reached near-optimal performance. The loss curves likewise decrease and then stabilize, consistent with a well-trained neural network that generalizes well.

Result of the ensemble model

As indicated in Table 8 below, the ensemble model, combining ResNet-50, DenseNet-121, and Xception, leverages the strengths of each architecture: ResNet’s residual connections, DenseNet’s feature reuse, and Xception’s computational efficiency to enhance accuracy and robustness. This integrated approach improves prediction performance and generalization across diverse datasets, outperforming individual models in complex classification tasks.
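This section does not specify how the three networks' outputs are combined. One common rule, assumed here purely for illustration, is soft voting. The softmax probability vectors of the member models are averaged (optionally with weights), and the class with the highest mean probability is predicted. The NumPy sketch below implements that rule with made-up probability values.

```python
import numpy as np


def soft_vote(prob_list, weights=None):
    """Weighted average of per-model class probabilities, then argmax.

    prob_list: list of (n_samples, n_classes) arrays of softmax outputs.
    """
    probs = np.stack(prob_list)                 # (n_models, n_samples, n_classes)
    if weights is None:
        weights = np.ones(len(prob_list))
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()           # normalise so rows still sum to 1
    avg = np.tensordot(weights, probs, axes=1)  # (n_samples, n_classes)
    return avg, avg.argmax(axis=1)


# Made-up softmax outputs for two leaves (columns: Healthy, Phyllody, Bacterial blight).
resnet = np.array([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]])
densenet = np.array([[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]])
xception = np.array([[0.2, 0.7, 0.1], [0.2, 0.4, 0.4]])
avg, pred = soft_vote([resnet, densenet, xception])
print(pred)  # [1 2]
```

Soft voting can disagree with a majority (hard) vote. On the first leaf, two of the three models favour Healthy, but the averaged probabilities favour Phyllody because Xception is very confident. In Keras, the same averaging can be expressed inside a single model by applying `tf.keras.layers.Average` to the three models' outputs.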

The ensemble model achieved an overall accuracy of 96.83% in classifying sesame leaf images, outperforming the individual models (ResNet-50: 91.89%, DenseNet-121: 94.5%, Xception: 92.18%). It performed best on the Healthy class, with 97.5% accuracy, showing that integrating the strengths of all three models lets it identify healthy leaves effectively. The Phyllody class showed slightly lower performance, with an accuracy of 95.8% and an F1-score of 95.3%. This indicates some difficulty in distinguishing it from the other classes because of similar leaf characteristics. The Bacterial Blight class also performed well, with an accuracy of 96.0%. Overall, the ensemble model demonstrates high and balanced performance across all classes.

As shown in Fig. 8 above, the ensemble model performed very well in classifying sesame leaf images, correctly identifying 20 healthy leaves, 19 Phyllody leaves, and 23 Bacterial Blight leaves. The small number of misclassifications (1 healthy leaf misidentified as Phyllody and 1 Bacterial Blight leaf misidentified as healthy) reflects its robustness. The model's ability to leverage the strengths of multiple architectures underscores its potential for practical sesame leaf disease detection and management.

Comparison and discussion

Table 9 below compares the performance of the developed models for sesame plant disease detection. The ResNet-50 model, with an accuracy of 91.89%, performs well but has limitations in recall, suggesting that it may miss some diseased samples. The DenseNet-121 model outperforms the other individual models with an accuracy of 94.5%, along with high precision and recall, making it more reliable for identifying both healthy and diseased plants. The Xception model performs well but does not exceed the performance of DenseNet-121.

The standout performer is the ensemble model, which combines the predictions of ResNet-50, DenseNet-121, and Xception and achieves an accuracy of 96.83%. It also has the highest recall, 97.15%, which minimizes false negatives. With an F1-score of 96.39%, it shows a strong balance between precision and recall, making it well suited to real-world applications where timely disease detection is crucial.

In conclusion, although each model has its strengths, the ensemble model is the most robust choice for sesame plant disease detection, and its superior performance metrics suggest that it can contribute to better agricultural outcomes. Future efforts should focus on continuous evaluation and optimization so that these models adapt well to real-world conditions and strengthen disease management strategies.

Conclusion and future work

Sesame, a valuable oilseed crop, faces significant production challenges from diseases such as phyllody and bacterial blight, which reduce crop yield and quality. This study proposes an ensemble-based deep learning framework for sesame disease detection and classification that addresses the variability and generalization limitations of previous models. By combining three pre-trained models (ResNet-50, DenseNet-121, and Xception), the system achieves 96.83% accuracy and classifies healthy, phyllody-affected, and bacterial blight-affected leaves with few misclassifications. This approach improves both the accuracy and the robustness of disease detection. It offers significant potential for precision agriculture by helping farmers make informed decisions and supporting sustainable sesame production, and it advances the adoption of AI-driven solutions in agriculture.

Future research on ensemble-based sesame disease detection and classification should focus on optimizing and tuning the individual models (ResNet-50, DenseNet-121, and Xception) to improve performance and adaptability across diverse datasets and conditions. Expanding the training dataset and incorporating additional data sources, such as climate, soil health, and agronomic practices, would enhance the model's robustness and predictive capabilities. Developing real-time mobile or web applications for disease detection and exploring other ensemble techniques such as boosting and bagging could further refine the model. Longitudinal studies assessing the model's performance over multiple growing seasons would also provide insight into its long-term effectiveness, contributing to more practical solutions for sesame disease detection and promoting sustainable sesame cultivation.

Finally, future directions include evaluating feature diversity using Q-statistics and correlation metrics, integrating transformer-based architectures to enhance heterogeneity, and optimizing the ensemble for real-time deployment.
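A common definition of the pairwise Q-statistic is Yule's Q. It is computed from whether two classifiers are correct on the same test samples: Q = (N11 N00 - N01 N10) / (N11 N00 + N01 N10). Here N11 counts samples that both models classify correctly, N00 counts samples both get wrong, and N10 and N01 count samples that only one of them gets right. Q is near 0 when the models' errors are independent and approaches 1 when they tend to succeed and fail together, so lower values indicate a more diverse ensemble. The sketch below assumes this definition, since the text does not state which variant is intended. The correctness vectors in the example are hypothetical.

```python
import numpy as np
from itertools import combinations


def q_statistic(correct_a, correct_b):
    """Yule's Q for two classifiers, from boolean per-sample correctness vectors."""
    a = np.asarray(correct_a, dtype=bool)
    b = np.asarray(correct_b, dtype=bool)
    n11 = int(np.sum(a & b))    # both correct
    n00 = int(np.sum(~a & ~b))  # both wrong
    n10 = int(np.sum(a & ~b))   # only the first model correct
    n01 = int(np.sum(~a & b))   # only the second model correct
    denom = n11 * n00 + n01 * n10
    if denom == 0:
        return float("nan")     # undefined, e.g. when neither model ever errs
    return (n11 * n00 - n01 * n10) / denom


def mean_pairwise_q(correct_by_model):
    """Average Q over all model pairs; lower means a more diverse ensemble."""
    qs = [q_statistic(correct_by_model[i], correct_by_model[j])
          for i, j in combinations(range(len(correct_by_model)), 2)]
    return float(np.nanmean(qs))


# Hypothetical correctness of two models on six test images (1 = correct).
resnet = [1, 1, 0, 0, 1, 0]
densenet = [1, 0, 1, 0, 1, 0]
print(q_statistic(resnet, densenet))  # 0.6
```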

Data availability

The datasets generated and/or analyzed during the current study are available at https://drive.google.com/file/d/1zJl4qUQAqGA8FKT0uDjQ-EBJeJBoZiWR/view?usp=sharing. Researchers interested in using this data may download it directly from this link. Further inquiries regarding the dataset can be directed to the corresponding authors, who will provide the collected datasets upon reasonable request.

Abbreviations

- ANN: Artificial neural network
- CNN: Convolutional neural network
- CPU: Central processing unit
- DCNN: Deep convolutional neural network
- FN: False negative
- FP: False positive
- GB: Gigabyte
- GHz: Gigahertz
- HE: Histogram equalization
- KNN: K-nearest neighbor
- LR: Logistic regression
- ML: Machine learning
- RAM: Random access memory
- ReLU: Rectified linear unit
- ResNet: Residual network
- RGB: Red-green-blue
- ROC: Receiver operating characteristic
- SegNet: Segmentation network
- SGD: Stochastic gradient descent
- SMOTE: Synthetic minority over-sampling technique
- SVM: Support vector machine
- TN: True negative
- TP: True positive
- VGG: Visual geometry group
- VGG6: Visual geometry group

References

Ogasawara, T., Chiba, K. & Tada, M. In Medicinal and Aromatic Plants (ed. Bajaj, Y. P. S.) (1988). https://en.wikipedia.org/wiki/Sesame (accessed 23 January 2020).

Girmay, A. B. Sesame production, challenges and opportunities in Ethiopia. Vegetos-An Int. J. Plant Res. 31(1), 51 (2018).

Sasu, D. D. Production quantity of sesame seeds in Africa. Paper (2022).

Ram, R., Catlin, D., Romero, J. & Cowley, C. Sesame: New Approaches for Crop (1990). https://hort.purdue.edu (accessed 19 December 2020).

Ethiopian Pulses, Oil Seeds and Spices Processors-Exporters Association (2017). http://www.epospeaeth.org (accessed 23 December 2020).

Dennis, W. Ethiopian Pulses, Oilseeds and Spices Processors-Exporters Association. In Fourth International Conference on Pulses, Oilseeds, and Spices (2014).

Wijnands, J. H. M., Van Loo, E. N. & Biersteker, J. Oilseeds business opportunities in Ethiopia 2009. 4, 19–23 (2009).

Zerihun, J. Sesame (Sesame indicum L.) crop production in Ethiopia: Trends, challenges and future. Sci. Technol. Arts Res. J. 1–7 (2012).

Zerihun, J. Sesame (Sesame indicum L.) Crop production in Ethiopia: Trends, challenges and future prospects. Sci. Technol. Arts Res. J. 1(3), 1–7 (2012).

Oplinger, E. S., Putnam, D. H., Kaminski, A. R., Hanson, C. V., Oelke, E. A., Schulte, E. E. & J. D. Alternative Field Crops Manual (1990). https://hort.purdue.edu (accessed 18 January 2020).

El-Banna, O. H., Kheder, A. A. & Ali, M. A. Detection and molecular characterization of phytoplasma associated with phyllody disease on dimorphotheca pluvialis in Egypt. Int. J. Phytopathol. 13(1), 85–89 (2024).

Meena, B., Indiragandhi, P. & Ushakumari, R. Screening of sesame (Sesamum indicum L.) Germplasm against major diseases. J. Pharmacognosy Phytochem. 4, 1466–1468 (2018).

Abeje, B. T. et al. Detection of sesame disease using a stepwise deep learning approach. In 2022 International Conference on Innovation and Intelligence for Informatics, Computing, and Technologies (3ICT) (IEEE, 2022).

Jasrotia, S., Yadav, J., Rajpal, N., Arora, M. & Chaudhary, J. Convolutional neural network based maize plant disease identification. Procedia Comput. Sci. 218, 1712–1721 (2023).

Detection of sesame disease using a stepwise deep learning approach

El-Mashharawi, H. Q. & Abu-Naser, S. S. An Expert system for sesame diseases diagnosis using CLIPS. Int. J. Acad. Eng. Res. (IJAER) 3(4), 22–29 (2019).

Vamshi, J., Uma Devi, G., Chander Rao, S. & Sridevi, G. Sesame Phyllody Disease: Symptomatology and Disease Incidence. Int. J. Curr. Microbiol. Appl. Sci. 7(10), 2422–2437 (2018).

Esubalw, T. A. Economic of sesame and its use dynamics in Ethiopia. The Sci. World J. 2022, 1 (2022).

Marcos, A. P. et al. Coffee leaf rust detection using convolution neural network. 9, 1–5 (2019).

Lu, Y. et al. Image recognition of rice leaf diseases using atrous convolutional neural network and improved transfer learning algorithm. Multimedia Tools and Applications 83(5), 12799–12817 (2024).

Tesfaye, H. Sesame disease identification using image processing and deep learning approaches (Bahirdar University, 2021).

Alebachew, E. Sesame disease classification using deep convolution neural network (University of Gondar, 2021).

Bashier, I. H., Mosa, M. & Babikir, S. F. Sesame seed disease detection using image classification. In 2020 International Conference on Computer, Control, Electrical, and Electronics Engineering (ICCCEEE) 1–5 (IEEE, 2021).

Tadele, A. B., Olalekan, S. A., Melese, A. A. & Gebremariam, T. E. Sesame disease detection using a deep convolutional neural network. Journal of Electrical and Electronics Engineering 15(2), 5–10 (2022).

Arivazhagan, S. & Ligi, S. V. Mango leaf diseases identification using convolutional neural network. International Journal of Pure and Applied Mathematics 120(6), 11067–11079 (2018).

Ashqar, B. A. & Abu-Naser, S. S. Image-Based Tomato Leaves Diseases Detection Using Deep Learning. Image 2(12), 10–16 (2018).

Gurusamy, S., Natarajan, B., Bhuvaneswari, R. & Arvindhan, M. Potato plant leaf diseases detection and identification using convolutional neural networks. In Artificial Intelligence, Blockchain, Computing and Security 1, 160–165 (2024).

Baranwal, S., Khandelwal, S. & Arora, A. Deep learning convolutional neural network for apple leaves disease detection. In Proceedings of International Conference on Sustainable Computing in Science, Technology and Management (SUSCOM), Amity University Rajasthan, Jaipur, India (2019).

Jadhav, S. B., Udupi, V. R. & Patil, S. B. Identification of plant diseases using convolutional neural networks. Int. J. Inf. Technol. 13, 2461–2470 (2021).

Belay, A. H., Ghrmay, T. M., Hailemariam, M. H. & Hailemariam, A. K. Challenges and opportunities in the oilseeds and grains productions in Humera, Tigray Region, Ethiopia: a multiple case study. EuroMed Journal of Management 5(3–4), 260–300 (2023).

Gonzales, R. C. & Wintz, P. Digital image processing (Addison-Wesley Longman Publishing Co., 1987).

Funding

The authors declare that no funding was provided for this research.

Author information


Contributions

Abenet Alazar Hailu and Banchalem Chebudie Kassa conceived the study, designed the methodology, collected and processed the dataset, implemented the deep convolutional neural network models and ensemble framework, performed the experiments and analysis, prepared all figures, and wrote the manuscript. The authors reviewed and approved the final version of the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Hailu, A.A., Kassa, B.C., Desta, E.A. et al. Ensemble-based sesame disease detection and classification using deep convolutional neural networks (CNN). Sci Rep 15, 28757 (2025). https://doi.org/10.1038/s41598-025-08076-1


DOI: https://doi.org/10.1038/s41598-025-08076-1
