Abstract

Crop diseases and insect pests pose significant challenges to agricultural productivity, often resulting in considerable yield losses. Traditional pest recognition methods, which rely heavily on manual feature extraction, are not only time-consuming and labor-intensive but also lack robustness under diverse conditions. While deep learning (DL) models have improved performance over conventional approaches, they typically suffer from high computational demands and large model sizes, limiting their real-world applicability. This study proposes a novel and efficient DL-based framework for the accurate identification and classification of crop pests and diseases. The approach uses InceptionV3 as a backbone feature extractor to capture rich and discriminative features, which are further refined by a channel attention (CA) mechanism. To reduce model complexity and improve deployment feasibility, a metaheuristic optimization algorithm is incorporated that reduces computational overhead without compromising performance. The proposed model was evaluated on the CropDP-181 dataset, where it outperformed several state-of-the-art methods in both classification accuracy and computational efficiency. Notably, the proposed method achieved a precision of 0.932, recall of 0.891, F1-score of 0.911, an overall accuracy of 88.50%, and a Matthews correlation coefficient (MCC) of 0.816, demonstrating its effectiveness and practical potential in real-time agricultural monitoring systems.

Introduction

Globally, the agriculture industry is crucial for maintaining social cohesion, promoting population health, and even preserving national security. Therefore, to boost food yield and feed the expanding population, continuing improvements in agricultural technology and industrial efficiency are needed1. Nonetheless, many invasive species and microbes exist in the planting environment, increasing the susceptibility of crops to diseases and insect pests. Pest and disease attacks are a serious threat to agricultural productivity and sustainability. Therefore, an efficient approach is needed to identify crop pests and diseases and to issue early warnings that prevent agricultural disasters and improve farm quality and production2,3.

To manage agronomy and food production, agronomists and businesses have been focusing on innovative data-driven and intelligent technologies to address crop pests and diseases and guarantee a “bumper” harvest. These technologies, which offer an effective way to identify dangerous pests, are founded on DL, machine learning (ML), hybrid models, and different forms of image processing. Collectively, these principles are referred to as precision agriculture (PA). PA is a common term covering different production tasks, such as precise individualized management and intelligent process control, which are mostly used in food supply and intelligent farming4. In PA, efficient and effective pest and disease recognition is considered one of the most useful applications. Currently, computer vision (CV)-based methods have achieved promising results in the detection and identification of pests and diseases in challenging agricultural environments5,6. To capture images of different pests and diseases, PA systems often rely on a large variety of vision sensors, including cellphones, robot visual perception units, security cameras, and more. Indeed, several studies have employed CV-based techniques to efficiently and effectively monitor the condition of pests and diseases.

CV-based methods are broadly divided into two groups: (1) traditional ML and (2) DL. Traditional ML methods, including fuzzy sets7, support vector machines8, K-nearest neighbors9, and local binary patterns10, have been employed to recognize different species of pests and diseases. However, to achieve even modest performance, these classical approaches rely on complex statistical analysis and feature engineering. The traditional ML process requires manual feature extraction and the tuning of several parameters to obtain optimal results. Furthermore, traditional ML approaches are typically trained on datasets that contain a small number of training samples collected in a laboratory environment, which makes them unsuitable for pest recognition in natural environments. Recently, DL-based models have replaced traditional ML models by achieving promising results in several fields, such as image recognition/classification11,12,13, medical image analysis14,15, and object recognition16, and they have also shown promise in a range of agricultural tasks17,18. DL models form a computational model consisting of an input layer, multiple hidden layers, and activation function modules. These models learn multi-level representations and extract deeper features in an end-to-end manner, yielding promising results when compared to traditional ML19.

Currently, various DL models have been utilized for pest species recognition and have achieved promising results in different agricultural settings. For instance, DL models based on convolutional neural networks (CNNs) were employed to recognize pest invasion on isolated plant leaves20,21. Other DL models such as Inception22, ResNet23, and VGG24 have been used for pest species recognition and have obtained considerable results. The authors in25 employed a DL model for pest and disease recognition in tomato leaves and achieved 89% accuracy. Nonetheless, their approach works well only for recognizing pests against a plain background, which raises doubts about its practical use. In another work, a DL model was trained for the identification of plant diseases after a generative adversarial network (GAN) was introduced for dataset augmentation25. Next, a DL-based fruit fly recognition model achieved 95.68% accuracy26. Dawei et al.12 proposed a DL-based model that recognized ten pest species and obtained an accuracy of 93.84%. As this review of previous work shows, DL models significantly increase the performance of pest recognition. However, several factors restrict existing DL-based models from real-world deployment: they are expensive in terms of computation, inference speed, and model size. Moreover, most DL models are unsuitable for devices with limited resources, which hinders real-time deployment.

To cope with this, the study proposes an efficient DL model with a metaheuristic model compression approach for efficient and effective pest recognition. The proposed work employs the InceptionV3 model as a backbone feature extractor, uses CA to refine the extracted features channel-wise, and finally applies a metaheuristic approach to reduce the model’s computation and size and to speed up inference without compromising performance. Based on the current studies, the research gap is outlined as follows:

- Heavy DL models: DL models often require high computational resources, making them unsuitable for real-time deployment on low-power or edge devices.

- Lack of efficient models: Most DL-based solutions are not optimized for speed or size, lacking model compression techniques needed for fast and practical pest recognition in PA.

- Low accuracy: A key limitation of current lightweight models is their insufficient accuracy when tackling complex datasets.

The following are the main contributions of this study:

- The study achieves state-of-the-art results for pest and disease recognition by proposing an efficient and effective approach based on InceptionV3 combined with a CA mechanism.

- The study performs extensive experiments and ablation studies on a pest and disease recognition dataset. The proposed method is compared with recent models both qualitatively and quantitatively, which shows its efficiency and effectiveness over existing models.

- Finally, the proposed model is compressed using a metaheuristic approach, which allows it to operate effectively even in resource-limited settings. The experimental results, obtained using various tools and analyses, confirm the efficiency of these contributions.

This paper is organized into several parts. Section 2 reviews related work that sets the foundation for this study while Sect. 3 describes the methods utilized for pest and disease classification and Sect. 4 presents the main results from the experiments carried out using the selected datasets. Finally, Sect. 5 wraps up the study by highlighting the main findings, contributions, and possible directions for future work.

Related work

Given the importance of agriculture to a country’s economy, it is critical to identify dangerous pests and diseases in natural settings. Consequently, a number of studies have proposed CV-based systems for automated pest and disease identification in the agriculture sector. For instance, a CNN- and YOLO-based method for pest recognition and detection was presented by Cheeti et al.19. They built their own dataset from Internet sources and achieved promising performance. Mique et al.20 suggested a CNN for rice pest and disease identification. They achieved 90.9% training accuracy by using photographs from the Internet to train their model. Furthermore, they deployed their model on mobile devices for public use. However, their model is expensive in terms of computation and model size, and its precision needs further improvement. Nam et al.21 used a pre-trained Single Shot MultiBox Detector model with slight modifications for accurate trap insect recognition. Their approach is based on a deep CNN and obtained accuracies of 84.0% and 86.0% on a self-created dataset. Li et al.22 utilized deep CNNs including YOLOv5, Mask R-CNN, and Faster R-CNN for efficient pest recognition using the IP102 dataset. Among these, Faster R-CNN achieved the best result of 99% accuracy, but its detection speed is comparatively low. Using the red palm weevil dataset, another study10 demonstrated an efficient region-based CNN model for red palm weevil recognition that produced good results. Nevertheless, the complexity of the model hindered the system’s practical use.

Additionally, Hu et al.23 used their own dataset to construct a near-infrared imaging approach with YOLOv5 for pest detection, with a promising result of 99.7% mAP. Afterwards, Burhan and colleagues24 employed four pre-trained CNN models, namely ResNet50V2, ResNet50, VGG19, and VGG16, with some modifications for pest and rice field disease detection. Their methods achieved an accuracy of 86.799%; however, this accuracy requires further improvement for reliable pest detection. Another follow-up study27 employed a pre-trained CNN model with several modifications for carabid beetle species recognition. Chen et al.26 presented an AI-assisted mobile-based model to detect harmful pests in agricultural fields using a self-created dataset. In this study, they used several pre-trained DL models such as YOLOv4, single-shot detectors (SSDs), and faster region-based CNNs (Faster R-CNNs) for recognition. YOLOv4 consistently produced higher F1-score values. Liu et al.28 used the MPD2018 database to present an end-to-end region-based DL model called PestNet for multi-class pest recognition.

Their approach is based on three major steps. In the first step, channel-spatial attention is used to increase performance. In the second step, a region proposal network is employed, while in the third step, the fully connected layers are replaced. Using this strategy, PestNet obtained 75.46% mAP; however, the mAP needs further improvement. Liu et al.29 developed a DL model based on YOLOv3 for tomato pest and disease detection using a self-created dataset. Furthermore, a YOLOv3-based model was utilized by Legaspi et al.30 for fruit fly and whitefly recognition and achieved 83.07% accuracy. Their approach focused on deployability across web applications, desktops, and Raspberry Pi for public use. However, its recognition performance still has to be improved. Lim et al.31 presented an AlexNet model with some modifications for accurate pest recognition in real environments. The authors in32 developed a DL model for mobile applications to recognize various pests. Their model achieved 99.0% accuracy on the test set, and their comparison indicated state-of-the-art performance. In another follow-up study33, the authors utilized a Swin transformer with a dual-attention multi-scale attention network for crop disease and pest classification and achieved an accuracy of 95.68%, while the authors in34 achieved an mAP90 of 34.9 on the IP102 dataset.

Based on the literature review, this study proposes a DL model consisting of InceptionV3 for backbone feature extraction, a CA mechanism for refining the extracted features channel-wise, and a metaheuristic compression method to reduce the complexity of the proposed model in terms of computation and size. The following section describes the proposed model in detail.

The proposed methodology

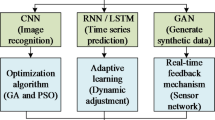

As explained in Sect. 2, DL models obtain higher performance than traditional ML models. However, a DL model has a fixed receptive field in the shallow layers used to extract features, which results in insufficient spatial information and makes it difficult for the model to differentiate between similar objects. To address this concern, the proposed technique uses InceptionV3 coupled with a CA mechanism to extract more refined details for pest and disease recognition. The overall framework of the model is shown in Fig. 1 and is described in the following subsections.

Backbone features extraction

In 2014, Google’s research team proposed a novel CNN architecture called GoogLeNet35, which adopts the Inception module to reduce training parameters and increase network depth. There are several versions of GoogLeNet: InceptionV1 was developed in 2014, InceptionV2 and InceptionV3 in 2015, and InceptionV4 in 2016. This paper employs the third version of the GoogLeNet architecture, InceptionV3, which is a robust and powerful CNN architecture with a built-in capability to process images at multiple scales and is also suitable for RCD. It was developed by Szegedy et al. in 2015 for the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2015, where it reduced the error rate to 3.46% and achieved promising results compared with AlexNet, VGG16, MobileNetV1, and MobileNetV2. This architecture increases the number of Inception modules and adds further refinements, resulting in superior performance compared to older versions. One notable feature of Inception modules is their multi-scale processing capability, which has delivered strong results across a range of tasks. The InceptionV3 model contains three basic Inception modules: Inception (A), Inception (B), and Inception (C). Within each Inception module, pooling and convolution layers operate in parallel. These modules reduce the number of learnable parameters, and thereby improve model efficiency, by using factorized convolution layers with filter sizes of 1 × 1, 1 × 3, and 3 × 1. By default, InceptionV3 operates on input images with dimensions of 299 × 299 × 3. The input images are first passed through five convolution layers, where each layer uses multiple 3 × 3 kernels for feature extraction. Then, a series of Inception modules processes the features, which are passed through the fully connected layer for final classification.
The output of each Inception module serves as the input of the next module, where the channels are concatenated after the convolution process and a nonlinear fusion is then performed. In this way, the network’s expressiveness and adaptation to different scales are improved, and overfitting is reduced. Unlike InceptionV1 and InceptionV2, InceptionV3 has only one auxiliary classifier, which acts as a regularizer. The Inception model includes an efficient grid size reduction block that concatenates the feature maps of a convolution layer and a max-pooling layer in parallel for dimensionality reduction, which shows a significant improvement over using a pooling strategy alone. Moreover, in this architecture, the activation dimension is expanded using 1 × 1 convolutions, and then a pooling layer is employed to reduce the spatial dimensions of the input block. This strategy helps to avoid representational bottlenecks and maintain the effectiveness of the model.
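The parameter saving obtained from factorized convolutions can be illustrated with a short calculation. The sketch below is illustrative only: it assumes a hypothetical layer with 64 input and 64 output channels, ignores bias terms, and compares a full 3 × 3 convolution with a 1 × 3 convolution followed by a 3 × 1 convolution. The helper name `conv_weights` is introduced here for illustration and is not part of the study.

```python
def conv_weights(kernel_h, kernel_w, c_in, c_out):
    """Number of weights in a kernel_h x kernel_w convolution (bias ignored)."""
    return kernel_h * kernel_w * c_in * c_out


channels = 64  # hypothetical channel width, chosen only for illustration

# One full 3 x 3 convolution.
full = conv_weights(3, 3, channels, channels)

# The same receptive field built from a 1 x 3 followed by a 3 x 1 convolution.
factorized = (conv_weights(1, 3, channels, channels)
              + conv_weights(3, 1, channels, channels))

saving = 1 - factorized / full
print(full, factorized, round(saving, 3))  # 36864 24576 0.333
```

Under these assumptions the factorized pair needs one third fewer weights than the full kernel, independent of the channel width.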

Channel attention mechanism

Attention mechanisms currently attract considerable research interest and remain a ubiquitous module in state-of-the-art models. In pest and disease recognition, an input image that has passed through several convolutional layers yields a multichannel feature map denoted as \(F \in \mathbb{R}^{C \times H \times W}\), where C is the number of channels, H the height, and W the width. Each channel’s feature map expresses different information. In CA, the focus is on learning a 1-D weight vector based on the relationships among the channels of the feature map, after which each channel is multiplied by its corresponding weight. Using this strategy, the DL model can pay more attention to the significant semantic details for the pest and disease recognition task. First, to achieve effective weight learning, the spatial information of each channel is aggregated into two descriptors using global max pooling and global average pooling. Then, to produce more informative feature vectors, these descriptors are fed into a shared multilayer perceptron (MLP) with one hidden layer of C/8 units. The two outputs are merged by element-wise addition. Finally, the CA map is produced using a sigmoid function. The mathematical formulation is given in Eq. 1.

\[ M_{c}(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big) \quad (1) \]

where \(\sigma\) denotes the sigmoid function and \(M_{c}(F)\) is the resulting CA map with one weight per channel.
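As a minimal sketch of the CA computation described above, the following NumPy example pools a feature map into average and max descriptors, passes both through a shared two-layer MLP with C/8 hidden units, adds the outputs, and applies a sigmoid. The random weights, the ReLU hidden activation, the omission of bias terms, and the function names are assumptions made for illustration; they are not taken from the study’s implementation.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feature_map, w1, w2):
    """Apply channel attention to a (C, H, W) feature map.

    w1 has shape (C // 8, C) and w2 has shape (C, C // 8); together they
    form the shared MLP (biases omitted, ReLU hidden layer assumed).
    """
    avg_desc = feature_map.mean(axis=(1, 2))  # global average pooling -> (C,)
    max_desc = feature_map.max(axis=(1, 2))   # global max pooling -> (C,)

    def shared_mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)

    # Merge both branches by addition, then squash to (0, 1).
    weights = sigmoid(shared_mlp(avg_desc) + shared_mlp(max_desc))
    # Rescale every channel by its learned weight.
    return feature_map * weights[:, None, None], weights


rng = np.random.default_rng(0)
C, H, W = 16, 8, 8                          # hypothetical feature-map size
F = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // 8, C)) * 0.1  # random stand-ins for learned weights
w2 = rng.standard_normal((C, C // 8)) * 0.1
refined, weights = channel_attention(F, w1, w2)
print(refined.shape, weights.shape)
```

In a trained network the two weight matrices would be learned jointly with the backbone; here they only demonstrate the data flow and tensor shapes.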

Metaheuristic approach for model compression

The rapid spread of plant diseases and the devastating nature of pests necessitate early pest and disease recognition, which in turn requires low latency, high inference speed, and timely response. This is achievable with edge devices; however, their limited processing and storage capacity necessitates an efficient DL model. Consequently, to eliminate redundant neurons and reduce the model parameters without sacrificing performance, the study employs a model compression approach. To lower the number of learnable parameters of the proposed model and increase efficiency, a differential evolution (DE) metaheuristic36,37 is used in this study. Recent studies have demonstrated how metaheuristic optimization enhances ML methods across diverse domains, including agriculture and cybersecurity. They tackle key challenges such as crop yield prediction, food quality assessment, insider threat detection, and plant disease recognition using advanced methods like weight-agnostic neural networks, graph convolutional networks, and echo state networks38,39,40,41,42.

The DE metaheuristic is inspired by biological evolution. It starts with a population pool of vectors, each with the same length as the number of hidden-layer neurons, whose elements take the values 1 and 0. A value of 1 means that the corresponding neuron is retained, whereas a value of 0 means that it is discarded. Over several iterations, the mutation, recombination, and selection stages help identify the best possible set of redundant neurons to remove. In the mutation stage, Eq. 2 is used to calculate the donor vector, which uses three vectors randomly drawn from the population pool in place of the target vector. The mutation factor, denoted F, is set to 0.5. Because the resulting values do not necessarily fall in the set {0, 1}, they are rescaled to lie between 0 and 1, after which values less than 0.5 are set to 0 and values greater than or equal to 0.5 are set to 1.
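The mutation step can be sketched as follows. This is a hedged illustration rather than the study’s implementation: it assumes the common DE/rand/1 form, donor = x_r1 + F · (x_r2 − x_r3), assumes min-max scaling for the rescaling step, and excludes the target vector when sampling. The function name `mutate` and the population size are hypothetical.

```python
import numpy as np


def mutate(population, target_idx, F=0.5, rng=None):
    """Build a binary donor mask for the vector at target_idx.

    population: (P, N) array of 0/1 neuron masks with P >= 4.
    Assumes DE/rand/1 mutation and min-max rescaling (both assumptions).
    """
    if rng is None:
        rng = np.random.default_rng()
    # Pick three distinct vectors, none of which is the target.
    candidates = [i for i in range(len(population)) if i != target_idx]
    r1, r2, r3 = rng.choice(candidates, size=3, replace=False)
    donor = population[r1] + F * (population[r2] - population[r3])

    # Rescale to [0, 1]; fall back to clipping if all entries are equal.
    span = donor.max() - donor.min()
    if span > 0:
        donor = (donor - donor.min()) / span
    else:
        donor = np.clip(donor, 0.0, 1.0)

    # Threshold at 0.5: >= 0.5 keeps the neuron (1), otherwise drops it (0).
    return (donor >= 0.5).astype(int)


rng = np.random.default_rng(0)
population = rng.integers(0, 2, size=(6, 10))  # 6 masks over 10 hypothetical neurons
donor_mask = mutate(population, target_idx=0, rng=rng)
print(donor_mask)
```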

The flowchart of the DE metaheuristic approach is presented in Fig. 2. During the recombination phase, a random value is generated for each element of the vector; if the value is less than the designated recombination factor, which is 0.7 in the current setup, the element at that position is taken from the donor vector; otherwise, it is taken from the target vector. In each iteration, the procedure selects the better vector based on a fitness criterion that takes both the compression ratio and the F1-score into account. Equations 3 and 4 provide a mathematical formulation of the fitness function of the proposed model.

Subject to

Where \(\omega_{i}\) indicates the reduced number of neurons at the ith hidden layer, \(\alpha_{i}\) is the original number of neurons at the ith hidden layer, g indicates the weight assigned to the first objective, and 1-g is the weight assigned to the second objective. Moreover, the presented metaheuristic approach significantly decreases the model size and increases the inference speed without affecting the model performance.
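The recombination and selection steps, together with one plausible reading of a weighted two-objective fitness, can be sketched as below. The specific fitness form (g times the F1-score plus 1-g times the fraction of neurons removed), the default value of g, the interpretation of the reduced neuron count as the number of neurons kept, and all function names are assumptions made for illustration. They should not be read as the exact formulation used in this study.

```python
import numpy as np


def recombine(target, donor, cr=0.7, rng=None):
    """Binomial crossover: take the donor element where a random draw < cr."""
    if rng is None:
        rng = np.random.default_rng()
    take_donor = rng.random(target.shape) < cr
    return np.where(take_donor, donor, target)


def fitness(f1_score, kept_per_layer, original_per_layer, g=0.5):
    """Weighted sum of F1-score and compression (assumed form).

    kept_per_layer: neurons remaining in each hidden layer after pruning.
    original_per_layer: neurons in each hidden layer before pruning.
    """
    compression = 1.0 - sum(kept_per_layer) / sum(original_per_layer)
    return g * f1_score + (1.0 - g) * compression


def select(target, trial, fit_target, fit_trial):
    """Keep whichever vector has the higher fitness."""
    if fit_trial >= fit_target:
        return trial, fit_trial
    return target, fit_target


rng = np.random.default_rng(0)
target = np.array([1, 1, 1, 1, 0, 0, 1, 1])
donor = np.array([1, 0, 1, 0, 0, 1, 0, 1])
trial = recombine(target, donor, cr=0.7, rng=rng)

# Hypothetical F1-scores that would come from evaluating each pruned model.
fit_target = fitness(0.90, [int(target.sum())], [target.size])
fit_trial = fitness(0.89, [int(trial.sum())], [trial.size])
best, best_fit = select(target, trial, fit_target, fit_trial)
print(best, round(best_fit, 3))
```

In practice the F1-score passed to `fitness` would be measured by evaluating the pruned network on validation data, which is the expensive part of each DE iteration.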

Experiments and results

This section describes the information on the experimental setup (the hardware and software), the evaluation criteria, the dataset, and the comparison with state-of-the-art and ablation studies.

Experimental setup

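In this study, experiments were performed with the TensorFlow 2.6 DL framework and the Keras front-end library, using CUDA version 11.2. The CPU is a Core i7 14700KF, the GPU is an NVIDIA GeForce RTX 3070Ti with 8 GB of VRAM, and the operating system is Windows 10. To assess the model, accuracy, F1-score, precision, and recall are used, along with the Matthews correlation coefficient (MCC) for class imbalance scenarios. These are considered standard evaluation parameters for any kind of DL model, and detailed information on them is provided in43,44,45,46,47,48,49. The mathematical equations of the evaluation metrics are given below:

\[ \mathrm{Precision} = \frac{TP}{TP + FP} \]

\[ \mathrm{Recall} = \frac{TP}{TP + FN} \]

\[ \mathrm{F1\text{-}score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \]

\[ \mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \]

\[ \mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \]

where \(TP\) denotes positive samples that are correctly recognized, \(FP\) denotes negative samples that are incorrectly recognized as positive, \(FN\) denotes positive samples that are not correctly recognized, and \(TN\) denotes negative samples that are correctly recognized.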
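As a worked illustration of these metrics, the following sketch computes them from confusion counts using their standard definitions. The counts are hypothetical and describe a single binary (one-vs-rest) case; how per-class values are averaged over the 181 classes is not specified here and is left out. The last lines check that the precision and recall reported for the proposed model are consistent with the reported F1-score.

```python
import math


def classification_metrics(tp, fp, fn, tn):
    """Standard metrics from binary confusion counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1,
            "accuracy": accuracy, "mcc": mcc}


# Hypothetical counts, chosen only to exercise the formulas.
metrics = classification_metrics(tp=80, fp=10, fn=20, tn=90)
print({name: round(value, 3) for name, value in metrics.items()})

# Consistency check on the reported precision (0.932) and recall (0.891).
reported_f1 = 2 * 0.932 * 0.891 / (0.932 + 0.891)
print(round(reported_f1, 3))  # 0.911
```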

Dataset collection

The dataset is a major part of training any DL or ML model. This work utilized the CropDP-181 dataset, consisting of 124,437 images of crop pests and diseases in 88 upper-level classes and 181 sub-classes, which makes it one of the most suitable datasets for PA purposes. In this dataset, the disease images were gathered from 11 crops, including tomato, corn, and alfalfa. The pests are drawn from 77 species, e.g., bees and butterflies. According to the dataset statistics, the smallest category contains 100 images, while the largest contains 5109. The dataset size is adequate to fulfill the training requirements. Moreover, the dataset’s 181 classes offer a strong basis for fine-grained recognition. Figure 3 displays a selection of sample images from the CropDP-181 dataset. Because of the class imbalance and diversity of this dataset, overfitting can occur during model training. To address this issue, several data augmentation techniques are used to enlarge and balance the dataset.

This study used the following augmentation strategies. (1) A rectangular region is cropped using a random cropping mechanism, where the aspect ratio is randomly selected between 3:4 and 4:3 and the area is randomly sampled from [8%, 100%] of the image; the cropped area is then resized to 448 × 448 pixels. (2) To increase image diversity, each image is flipped vertically and horizontally with a probability of 0.5. (3) To improve robustness to distortion, every image is rotated clockwise by 90°, 180°, and 270°. (4) To improve lighting variety, each pixel’s S and V components are changed in the hue, saturation, and value (HSV) color space, while the H component remains constant. Scaling coefficients for the S and V channels are uniformly sampled from [0.25, 4]. (5) Lastly, to regularize the model and favor simple linear behavior between training examples, the mixup augmentation strategy of Zhang et al. is applied. More information is given in34; during mixup, two examples are randomly chosen from the training set each time to create a new virtual training example using weighted linear interpolation.
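Two of these steps, the flip and rotation step and the mixup step, can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the study’s pipeline: the Beta(0.2, 0.2) distribution for the mixing weight, the random choice of a single rotation per call, the one-hot label encoding, and the function names are all assumptions introduced here.

```python
import numpy as np


def random_flip_rotate(image, rng):
    """Flip an (H, W, C) image with probability 0.5 per axis, then rotate clockwise."""
    if rng.random() < 0.5:
        image = image[:, ::-1]          # horizontal flip
    if rng.random() < 0.5:
        image = image[::-1]             # vertical flip
    quarter_turns = rng.integers(1, 4)  # 1, 2, or 3 -> 90, 180, or 270 degrees
    # np.rot90 rotates counterclockwise for positive k, so negate for clockwise.
    return np.rot90(image, k=-quarter_turns)


def mixup(x1, y1, x2, y2, alpha=0.2, rng=None):
    """Blend two examples and their one-hot labels with a Beta-sampled weight."""
    if rng is None:
        rng = np.random.default_rng()
    lam = rng.beta(alpha, alpha)        # alpha = 0.2 is an assumed setting
    return lam * x1 + (1 - lam) * x2, lam * y1 + (1 - lam) * y2, lam


rng = np.random.default_rng(0)
img_a = rng.random((448, 448, 3))       # random stand-ins for two training images
img_b = rng.random((448, 448, 3))
label_a = np.eye(181)[3]                # one-hot labels over 181 classes
label_b = np.eye(181)[42]

aug_a = random_flip_rotate(img_a, rng)
mixed_x, mixed_y, lam = mixup(aug_a, label_a, img_b, label_b, rng=rng)
print(mixed_x.shape, round(float(mixed_y.sum()), 6))
```

Because the crops are square, the rotation preserves the 448 × 448 shape, and the mixed label remains a valid distribution that sums to one.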

Convergence performance of the proposed model

To assess the model performance, each method, including the proposed model, was trained for 30 epochs with a batch size of 32 using the CropDP-181 dataset, achieving promising results as shown in Fig. 6 and Table 1. As observed in Fig. 4, the training accuracy and loss are indicated by the blue line and the validation accuracy and loss by the orange line. The training accuracy begins at 0.48 and gradually increases after each epoch. For the validation curves, the validation loss starts from 0.7 and a gradual increase is observed. Both accuracy curves increase over the epochs; however, at the 13th epoch the training accuracy intersects the validation accuracy. Furthermore, the proposed model converged at the 26th epoch and obtained a training accuracy of 0.92 and a validation accuracy of 0.885.

Evaluation against state-of-the-art techniques

The suggested model produced state-of-the-art accuracy and F1-score results, at 88.50% and 0.911, respectively. The study compared the suggested method with eighteen state-of-the-art techniques. In this comparison, the lowest F1-score and accuracy were obtained by VGG1650, which the proposed technique surpassed by 0.117 in F1-score and 13.88% in accuracy. In Table 1, it can be seen that iSQRT-COV (32k)51 + ResNet-10152 (iSQRT-COV), Fe-Net3 + CSPNet-v2-5053 (Fe-Net-1), and Fe-Net54 + CSPNet-v2-101 (Fe-Net-2) are the strongest of the compared methods. iSQRT-COV achieved an F1-score of 0.871 and an accuracy of 83.11%; the proposed model surpassed it by 0.04 in F1-score and 5.39% in accuracy. Furthermore, the proposed model outperformed Fe-Net-1 and Fe-Net-2 by 0.034 and 0.024 in F1-score and by 3.91% and 3.21% in accuracy, respectively. Thus, Table 1 indicates that the proposed model outperformed the other state-of-the-art methods in terms of F1-score and accuracy, which makes it a suitable choice for accurate pest and disease recognition.

Analysis of the proposed model architecture via ablation experiments

To design an effective and lightweight DL model for pest and disease recognition, this study conducted a series of ablation experiments focused on evaluating different backbone architectures and the impact of integrating a CA mechanism. Specifically, five widely used CNN models (VGG16, ResNet-50, DenseNet-121, Xception, and InceptionV3) were tested both as standalone feature extractors and in combination with the CA mechanism. As shown in Table 2, the baseline models achieved varying degrees of performance, with InceptionV3 yielding the best results among them: a precision of 0.860, an accuracy of 81.20%, and an MCC of 0.683. This confirms the strong feature extraction capability of InceptionV3 in capturing fine-grained details relevant to pest and disease patterns. Introducing the CA mechanism consistently improved the performance of all baseline models. For example, the addition of CA to DenseNet-121 increased its F1-score from 0.808 to 0.821, its accuracy from 76.84% to 80.55%, and its MCC from 0.622 to 0.644. Similarly, ResNet-50 improved from 76.91% accuracy and 0.617 MCC to 80.90% accuracy and 0.651 MCC with the inclusion of CA. This highlights the effectiveness of attention in enhancing the discriminative quality of features by focusing on the most relevant channels.

The radar charts in Fig. 5 provide a visual summary of these ablation results, illustrating the comparative performance and complexity of each model configuration. Each axis represents a different evaluation metric, and the model size has been inverted to maintain consistency in interpretation, where a larger radial span indicates better overall performance and efficiency. This visualization clearly demonstrates the superiority of the proposed model, which covers the largest area across all metrics.

To further boost performance while maintaining compact architecture, metaheuristic optimization techniques were explored as listed in Table 3. When applying the firefly algorithm (FA) and particle swarm optimization (PSO) to the evaluated architectures, additional gains in accuracy were observed. For instance, DenseNet-121 with CA improved from 80.55 to 82.11% with FA and reached 82.83% with PSO. Likewise, ResNet-50 + CA progressed to 82.49% with PSO. InceptionV3, which originally demonstrated the strongest baseline performance at 81.20%, further improved to 82.31% with FA and 83.19% with PSO, reinforcing its robustness in feature extraction and its suitability as the backbone for the proposed model. These results underscore the complementary role of metaheuristic-based tuning in refining model weights and feature selection.

The newly proposed model, which integrates InceptionV3 with a CA mechanism and further applies a DE metaheuristic-based compression technique, outperformed all other variants. It achieved the highest scores across all evaluation metrics: a precision of 0.932, recall of 0.891, F1-score of 0.911, accuracy of 88.50%, and an MCC of 0.816. Additionally, it has the smallest footprint, at 32 megabytes (MB), and the lowest parameter count, at 7.9 million, demonstrating its efficiency and suitability for real-time applications. In contrast, Xception performed the worst among the tested architectures, both as a standalone model and when combined with attention, with the lowest accuracy of 73.50% and an MCC of 0.502, which can be attributed to its limited generalization to the pest and disease recognition domain and suboptimal feature representation for this task. Overall, these ablation experiments validate each component of the proposed approach and illustrate the importance of careful architectural choices and optimization techniques in building a high-performing and lightweight model.
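The reported footprint and parameter count can be related with a back-of-the-envelope calculation. The sketch below assumes that each parameter is stored as a 32-bit float and that 1 MB equals 10^6 bytes; both are assumptions, since the storage format is not stated. The half-precision line is a hypothetical comparison only.

```python
def model_size_mb(num_params, bytes_per_param=4):
    """Approximate on-disk size in MB, counting weights only."""
    return num_params * bytes_per_param / 1e6


num_params = 7.9e6                            # parameter count reported above
size_fp32 = model_size_mb(num_params)         # assumed 32-bit float storage
size_fp16 = model_size_mb(num_params, 2)      # hypothetical half-precision storage
print(round(size_fp32, 1), round(size_fp16, 1))  # 31.6 15.8
```

Under the 32-bit assumption the weights alone account for about 31.6 MB, which is in line with the reported footprint of roughly 32 MB.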

Detailed visual analysis and interpretation of the proposed model

To further demonstrate the interpretability and reliability of the proposed approach, this study visualized the class activation maps (CAMs) for various pest and disease samples under diverse conditions. Figure 6 presents the heatmap visualizations produced by the model across multiple pest species. Each row displays the original images and their corresponding activation maps. The heatmaps clearly show that the newly proposed model is not only able to detect the presence of pests but also localizes them accurately, even in cluttered backgrounds or when the pest appears in non-standard poses. For example, in cases where insects are partially occluded or blend into the leaf texture, the model consistently highlights the correct regions. Notably, even fine-grained features such as the contours of wings or the body structure of pests like beetles and grasshoppers are well attended. The strong and concentrated heat responses in these areas suggest that the model has learned robust and discriminative representations, aided by the integrated attention mechanism.

Figure 7 provides a qualitative comparison between the proposed method and other state-of-the-art models: CSPResNeXt-101, CSPNet-v2-101, iSQRT-Cov(32k), and Fe-Net101. Each row corresponds to an example input image followed by heatmaps from each model. The results reveal that competing methods often produce scattered or incomplete focus areas, either highlighting fragmented regions or attending to irrelevant parts of the image. In contrast, the proposed method consistently localizes the infected or pest-affected regions with greater precision and continuity. For instance, in images of diseased fruits or insect-damaged leaves, the proposed method outlines the affected spots more completely, while the others either miss these regions or falsely highlight background areas. These visual comparisons support the superior spatial awareness and reliability of the proposed model. The attention-enhanced feature extraction, coupled with the metaheuristic optimization, contributes to its strong localization ability. This not only supports the model’s quantitative performance but also enhances its trustworthiness and usability in real-world agricultural settings, where accurate visual evidence is critical for actionable decisions.

Conclusion

In CV, the effective application of DL has the potential to improve the performance of pest and disease recognition approaches. However, current DL-based pest and disease recognition approaches have several issues: they misclassify pests and diseases in some challenging environments, and most DL models are not feasible to deploy on edge devices because of their high computational cost and model size. Therefore, this work employed an efficient and effective method based on InceptionV3 as a backbone, coupled with CA and compressed with a DE metaheuristic approach, to improve model efficiency and decrease the size on disk. The proposed model was evaluated on the benchmark CropDP-181 dataset and compared with the most recent state-of-the-art models. The proposed approach effectively balances accuracy, MCC, size, and speed. Furthermore, this study includes a detailed comparison with widely recognized metaheuristic algorithms such as FA and PSO. The proposed methodology supports PA by enabling early identification of pests and diseases, thereby contributing to increased crop yield. A limitation of this study is the lack of detailed analysis of disease characteristics and their progression over time. In addition, future work should focus on accurately localizing pest-affected or disease-affected regions within images using semantic segmentation or object detection techniques.
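For readers unfamiliar with DE, the core search loop can be sketched in a few lines. The example below is a generic DE/rand/1/bin implementation in the style of Storn and Price, applied to a toy quadratic objective. It is not the compression objective or configuration used in this work: the function names, the hyperparameter values, the bound clipping, and the toy objective are all hypothetical and chosen only to illustrate the mutation, crossover, and selection steps.

```python
import random


def differential_evolution(objective, bounds, pop_size=20, f=0.5, cr=0.9,
                           generations=100, seed=0):
    """Minimize `objective` over box `bounds` with DE/rand/1/bin."""
    rng = random.Random(seed)
    dim = len(bounds)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scores = [objective(x) for x in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct individuals, all different from the target i.
            a, b, c = rng.sample([k for k in range(pop_size) if k != i], 3)
            forced = rng.randrange(dim)  # at least one gene comes from the mutant
            trial = []
            for d in range(dim):
                if rng.random() < cr or d == forced:
                    value = pop[a][d] + f * (pop[b][d] - pop[c][d])  # mutation
                    lo, hi = bounds[d]
                    value = min(max(value, lo), hi)  # clip to bounds (assumed)
                else:
                    value = pop[i][d]  # inherit from the target vector
                trial.append(value)
            trial_score = objective(trial)
            if trial_score <= scores[i]:  # greedy one-to-one selection
                pop[i], scores[i] = trial, trial_score
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best], scores[best]


def toy_objective(x):
    """Hypothetical stand-in objective with its minimum at 0.3 in every dimension."""
    return sum((v - 0.3) ** 2 for v in x)


best_x, best_score = differential_evolution(toy_objective, [(0.0, 1.0)] * 3)
print(best_x, best_score)
```

In a compression setting, the candidate vector would encode compression decisions and the objective would trade off accuracy against model size; those details are specific to the method and are not reproduced in this sketch.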

Data availability

The datasets generated and/or analyzed during the current study are available from the following sources: iNaturalist, https://github.com/visipedia/inat_comp/tree/master/2017; AIChallenger, https://aistudio.baidu.com/aistudio/datasetdetail/76075; and IP102, https://github.com/xpwu95/IP102.

References

Manavalan, R. Automatic identification of diseases in grains crops through computational approaches: A review. Comput. Electron. Agric. 178, 105802 (2020).

Kong, J. et al. Multi-stream hybrid architecture based on cross-level fusion strategy for fine-grained crop species recognition in precision agriculture. Comput. Electron. Agric. 185, 106134 (2021).

Kong, J. et al. A spatial feature-enhanced attention neural network with high-order pooling representation for application in pest and disease recognition. Agriculture 12 (4), 500 (2022).

Karunathilake, E., Le, A. T., Heo, S., Chung, Y. S. & Mansoor, S. The path to smart farming: innovations and opportunities in precision agriculture. Agriculture 13 (8), 1593 (2023).

Akintuyi, O. B. Adaptive AI in precision agriculture: a review: investigating the use of self-learning algorithms in optimizing farm operations based on real-time data. Res. J. Multidisciplinary Stud. 7 (02), 016–030 (2024).

Kumar, S. A. & Ilango, P. The impact of wireless sensor network in the field of precision agriculture: A review. Wireless Pers. Commun. 98, 685–698 (2018).

Khan, H. et al. Automated wheat diseases classification framework using advanced machine learning technique. Agriculture 12 (8), 1226 (2022).

Aladhadh, S. et al. An efficient pest detection framework with a medium-scale benchmark to increase the agricultural productivity. Sensors 22 (24), 9749 (2022).

Pattnaik, G. & Parvathy, K. Machine learning-based approaches for tomato pest classification. TELKOMNIKA (Telecommunication Comput. Electron. Control). 20 (2), 321–328 (2022).

Alsanea, M. et al. A deep-learning model for real-time red palm weevil detection and localization. J. Imaging. 8 (6), 170 (2022).

Coulibaly, S., Kamsu-Foguem, B., Kamissoko, D. & Traore, D. Deep learning for precision agriculture: A bibliometric analysis. Intell. Syst. Appl. 16, 200102 (2022).

Dawei, W. et al. Recognition pest by image-based transfer learning. J. Sci. Food. Agric. 99 (10), 4524–4531 (2019).

Anagnostis, A., Asiminari, G., Papageorgiou, E. & Bochtis, D. A convolutional neural networks based method for anthracnose infected walnut tree leaves identification. Appl. Sci. 10 (2), 469 (2020).

Anagnostis, A. A deep learning approach for anthracnose infected trees classification in walnut orchards. Comput. Electron. Agric. 182, 105998 (2021).

Thenmozhi, K. & Reddy, U. S. Crop pest classification based on deep convolutional neural network and transfer learning. Comput. Electron. Agric. 164, 104906 (2019).

Shijie, J., Peiyi, J. & Siping, H. Automatic detection of tomato diseases and pests based on leaf images, in 2017 Chinese automation congress (CAC), pp. 2537 – 2510. (2017).

Gandhi, R., Nimbalkar, S., Yelamanchili, N. & Ponkshe, S. Plant disease detection using CNNs and GANs as an augmentative approach, in IEEE International Conference on Innovative Research and Development (ICIRD), 2018, pp. 1–5. (2018).

Leonardo, M. M., Carvalho, T. J., Rezende, E., Zucchi, R. & Faria, F. A. Deep feature-based classifiers for fruit fly identification (Diptera: Tephritidae), in 2018 31st SIBGRAPI conference on graphics, patterns and images (SIBGRAPI), pp. 41–47. (2018).

Cheeti, S., Kumar, G. S., Priyanka, J. S., Firdous, G. & Ranjeeva, P. R. Pest detection and classification using YOLO and CNN. Annals Romanian Soc. Cell. Biology, pp. 15295–15300. (2021).

Mique, E. L. Jr & Palaoag, T. D. Rice pest and disease detection using convolutional neural network, in Proceedings of the 1st international conference on information science and systems, pp. 147–151. (2018).

Nam, N. T. & Hung, P. D. Pest detection on traps using deep convolutional neural networks, in Proceedings of the 1st International Conference on Control and Computer Vision, pp. 33–38. (2018).

Li, W., Zhu, T., Li, X., Dong, J. & Liu, J. Recommending advanced deep learning models for efficient insect pest detection. Agriculture 12 (7), 1065 (2022).

Hu, Z. Research on identification technology of field pests with protective color characteristics. Appl. Sci. 12 (8), 3810 (2022).

Burhan, S. A., Minhas, S., Tariq, A. & Hassan, M. N. Comparative study of deep learning algorithms for disease and pest detection in rice crops, in 2020 12th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), pp. 1–5. (2020).

Hansen, O. L. Species-level image classification with convolutional neural network enables insect identification from habitus images. Ecol. Evol. 10 (2), 737–747 (2020).

Chen, J. W. et al. A smartphone-based application for scale pest detection using multiple-object detection methods. Electronics 10 (4), 372 (2021).

Schweiger, A. H. & Svenning, J. C. Down-sizing of dung beetle assemblages over the last 53 000 years is consistent with a dominant effect of megafauna losses. Oikos 127 (9), 1243–1250 (2018).

Liu, L. PestNet: an end-to-end deep learning approach for large-scale multi-class pest detection and classification. IEEE Access. 7, 45301–45312 (2019).

Liu, J. & Wang, X. Tomato diseases and pests detection based on improved Yolo V3 convolutional neural network. Front. Plant Sci. 11, 898 (2020).

Legaspi, K. R. B., Sison, N. W. S. & Villaverde, J. F. Detection and classification of whiteflies and fruit flies using YOLO, in 2021 13th International Conference on Computer and Automation Engineering (ICCAE), pp. 1–4. (2021).

Lim, S., Kim, S. & Kim, D. Performance effect analysis for insect classification using convolutional neural network, in 7th IEEE International Conference on Control System, Computing and Engineering (ICCSCE), 2017, pp. 210–215. (2017).

Karar, M. E., Alsunaydi, F., Albusaymi, S. & Alotaibi, S. A new mobile application of agricultural pests recognition using deep learning in cloud computing system. Alexandria Eng. J. 60 (5), 4423–4432 (2021).

Karthik, R., Ajay, A., Bisht, A. S., Illakiya, T. & Suganthi, K. A deep learning approach for crop disease and pest classification using Swin transformer and dual-attention multi-scale fusion network. IEEE Access (2024).

Zhang, L., Zhao, C., Feng, Y. & Li, D. Pests identification of ip102 by yolov5 embedded with the novel lightweight module. Agronomy 13 (6), 1583 (2023).

Szegedy, C. et al. Going deeper with convolutions, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1–9. (2015).

Storn, R. & Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Global Optim. 11, 341–359 (1997).

Dehghani, M., Montazeri, Z., Trojovská, E. & Trojovský, P. Coati optimization algorithm: A new bio-inspired metaheuristic algorithm for solving optimization problems. Knowl. Based Syst. 259, 110011 (2023).

Jovanovic, L. et al. Evaluating the performance of metaheuristic-tuned weight agnostic neural networks for crop yield prediction. Neural Comput. Appl. 36 (24), 14727–14756 (2024).

Bukumira, M. et al. Carrot grading system using computer vision feature parameters and a cascaded graph convolutional neural network. J. Electron. Imaging. 31 (6), 061815–061815 (2022).

Mladenovic, D. et al. Sentiment classification for insider threat identification using metaheuristic optimized machine learning classifiers. Sci. Rep. 14 (1), 25731 (2024).

Bacanin, N. et al. Crop yield forecasting based on echo state network tuned by crayfish optimization algorithm. pp. 1–6.

Protic, M. et al. Utilizing Modified Metaheuristic Optimizers for Computer Vision Optimization in Agriculture. pp. 1370–1375.

Yar, H. et al. Optimized dual fire attention network and medium-scale fire classification benchmark. IEEE Trans. Image Process. 31, 6331–6343 (2022).

Yar, H. et al. An efficient deep learning architecture for effective fire detection in smart surveillance. Image Vis. Comput. 145, 104989 (2024).

Parez, S., Dilshad, N., Alghamdi, N. S., Alanazi, T. M. & Lee, J. W. Visual intelligence in precision agriculture: exploring plant disease detection via efficient vision Transformers. Sensors 23 (15), 6949 (2023).

Parez, S., Dilshad, N., Alanazi, T. M. & Lee, J. W. Towards sustainable agricultural systems: A lightweight deep learning model for plant disease detection. Comput. Syst. Sci. Eng. 47 (1), 515–536 (2023).

Parez, S., Dilshad, N. & Lee, J. W. Attention-Driven optimized CNN for efficient early detection of plant diseases in resource constrained environment. Agriculture 15 (2), 127 (2025).

Nadeem, M. et al. Visual intelligence in smart cities: A lightweight deep learning model for fire detection in an IoT environment. Smart Cities. 6 (5), 2245–2259 (2023).

Chicco, D. & Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 21, 1–13 (2020).

Russakovsky, O. et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vision. 115 (3), 211–252 (2015).

Ding, Y. et al. AP-CNN: weakly supervised attention pyramid convolutional neural network for fine-grained visual classification. IEEE Trans. Image Process. 30, 2826–2836 (2021).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778. (2016).

Wang, C. Y. et al. CSPNet: A new backbone that can enhance learning capability of CNN, in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops, pp. 390–391. (2020).

Xie, S., Girshick, R., Dollár, P., Tu, Z. & He, K. Aggregated residual transformations for deep neural networks, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1492–1500. (2017).

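He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778. (2016).

Xie, S., Girshick, R., Dollár, P., Tu, Z. & He, K. Aggregated residual transformations for deep neural networks, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1492–1500. (2017).

Wang, C. Y. et al. CSPNet: A new backbone that can enhance learning capability of CNN, in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops, pp. 390–391. (2020).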

Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks, in Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 4700–4708. (2017).

Russakovsky, O. et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vision. 115 (3), 211–252 (2015).

Ding, F. Two-stage least squares based iterative estimation algorithm for CARARMA system modeling. Appl. Math. Model. 37 (7), 4798–4808 (2013).

Du, R. et al. Fine-grained visual classification via progressive multi-granularity training of Jigsaw patches. pp. 153–168.

Zhuang, P., Wang, Y. & Qiao, Y. Learning attentive pairwise interaction for fine-grained classification. pp. 13130–13137.

Acknowledgements

This work was supported by the faculty research fund of Sejong University in 2024 and was also supported by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R40), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Funding

This work was supported by the faculty research fund of Sejong University in 2024 and was also supported by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R40), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Author information

Contributions

S.P.: writing (original draft preparation), conceptualization, methodology, software, validation. N.S.A. and T.H.: formal analysis, investigation. T.M. and W.U.: resources, data curation, visualization. M.A.K. and N.D.: writing (review and editing), visualization, supervision, project administration, funding acquisition, formal analysis, investigation. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Parez, S., Alghamdi, N.S., Mahmood, T. et al. Towards precision agriculture: metaheuristic model compression for enhanced pest recognition. Sci Rep 15, 20805 (2025). https://doi.org/10.1038/s41598-025-08307-5

DOI: https://doi.org/10.1038/s41598-025-08307-5