Abstract

This paper presents a layer-wise training procedure for neural networks that minimizes a Variance-Invariance-Covariance Regularization (VICReg) loss at each layer. The procedure is beneficial when annotated data are scarce but unlabeled data are plentiful. Because the parameters are updated locally at each layer, the procedure also mitigates problems of backpropagation such as vanishing gradients and sensitivity to initialization. Instead of one forward and one backward pass, as in backpropagation, the procedure uses two forward passes: one on the original data and the other on an augmented version of the data. We show that this procedure progressively constructs more compact yet informative representation spaces at each layer. The model architecture is pyramidal, enabling effective feature extraction. In addition, we optimize the weights of the variance, invariance, and covariance terms of the loss function so that the model captures higher-level semantic information. After training, we assess the learned representations using clustering quality metrics and classification performance with a few labeled samples. We evaluate the proposed approach on four datasets: MNIST, EMNIST, Fashion MNIST, and CIFAR-100. The experimental results show that the training procedure improves the classification accuracy of Deep Neural Networks (DNNs) on these datasets by approximately 7%, 16%, 1%, and 7%, respectively, compared with baseline models of similar architecture.


Introduction

In deep learning, having enough representative data is essential for a model to generalize well. However, in many cases, obtaining large amounts of labeled data and ensuring annotation quality is challenging. Given the increased availability of information on the Internet and of large unlabeled datasets, techniques such as self-supervised learning are used to exploit this information without relying on labels1. Self-supervised methods for learning image representations typically maximize the agreement between embeddings of different views or augmented versions of the same image. Variance-Invariance-Covariance Regularization (VICReg) is a self-supervised method that uses variance, invariance, and covariance loss terms to produce augmentation-invariant and non-redundant representations2. Training a Deep Neural Network (DNN) with the VICReg loss to construct an abstract and informative representation space has been shown to be useful, yielding strong performance on various downstream tasks.

Although large datasets are important for DNNs to generalize well, other factors such as the choice of model architecture, hyperparameters, and learning procedure also play a significant role in achieving good performance3. DNNs are generally trained on large labeled datasets using backpropagation4. Although backpropagation, which optimizes all parameters of a deep model with respect to a single loss, has enabled the recent success of deep learning, it has several limitations. First, backpropagation is biologically implausible: there is no convincing evidence that the cortex stores neural activities or explicitly propagates error derivatives in a backward pass5,6. Second, backpropagation requires perfect knowledge of the computation performed in the forward pass, so it cannot be used when black boxes or non-differentiable components are inserted into the forward pass. Furthermore, backpropagation suffers from vanishing gradients, which must be addressed with batch normalization or carefully chosen weight initialization and activation functions7,8. It also needs to store forward-pass computations and error derivatives, which makes it memory inefficient, and computing and propagating derivatives backward is time-consuming. The Forward-Forward algorithm can alleviate these problems by enabling deep models to learn layer by layer with a simple objective, without backpropagating error derivatives5. Its advantages over backpropagation include the ability to run on low-power analog hardware without resorting to reinforcement learning and its plausibility as a model of learning in the cortex.

Although large unlabeled datasets have become increasingly available in recent years, ensuring annotation quality and precision remains challenging because annotation is time-consuming and labor-intensive. Self-supervised learning can exploit these extensive unlabeled datasets, but it typically requires training large-scale DNNs with numerous parameters using backpropagation. This study aims to integrate self-supervised learning with a layer-wise training strategy to combine the advantages of both approaches: the combined procedure lets the model learn features layer by layer, addressing the drawbacks of backpropagation while effectively leveraging large unlabeled datasets. Specifically, this work investigates the use of the VICReg loss as the local objective function for DNNs trained layer by layer, combined with simple data augmentation techniques. The contributions of this study are as follows:

1. The proposed learning procedure uses two forward passes, similar to the Forward-Forward algorithm, but requires neither label information nor data corruption. Two batches are fed to the model: one with the original data and the other with an augmented version of the same data.

2. The procedure incorporates a VICReg loss at each layer and minimizes it to construct useful abstract representation spaces. The experiments demonstrate that minimizing the VICReg loss at each layer of a deep model constructs more informative representation spaces, which can later be used for tasks such as classification by fine-tuning with very limited labeled data.

Related works

The success of DNNs greatly depends on the availability of large datasets, since a deep model must learn representations that are good enough to generalize. Researchers have found that the performance of DNNs on computer vision tasks increases logarithmically with the size of the training dataset9. One approach to using massive unlabeled datasets without relying on semantic annotations is self-supervised learning (SSL). SSL methods exploit much larger datasets through pseudo-labels, which enable models to recognize uncommon and more subtle representations10. DNNs trained with SSL techniques learn to detect patterns by solving pretext tasks, such as predicting missing parts of an image, maximizing the agreement between augmented views of an image, or predicting the order of a sequence. SSL also improves uncertainty estimation and copes well with adversarial examples and label corruption, making models more robust in practical real-world scenarios11.

SSL methods are widely used in the natural language processing domain; for example, Bidirectional Encoder Representations from Transformers (BERT) and Generative Pre-trained Transformer 3 (GPT-3) are trained in a self-supervised fashion12,13. In the computer vision domain, Barlow Twins, Bootstrap Your Own Latent (BYOL), Momentum Contrast (MoCo), Simple Framework for Contrastive Learning of Visual Representations (SimCLR), Swapping Assignments between Views (SwAV), and VICReg are examples of self-supervised learning methods14,15,16,17,18. Because joint embedding methods learn by minimizing the distance between embeddings of different views, the encoders may produce identical, non-informative representation vectors. This phenomenon is called collapse: the encoders of a joint embedding model ignore their inputs and produce identical output vectors that capture no useful information19.
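To make collapse concrete, the following minimal sketch (standard library only; the encoder and the toy data are hypothetical) shows that an encoder which ignores its input perfectly satisfies an agreement objective between two views, while the spread of every embedding dimension across the batch drops to zero.

```python
import math

def mean_squared_distance(za, zb):
    """Average squared Euclidean distance between paired embeddings."""
    return sum(sum((a - b) ** 2 for a, b in zip(u, v)) for u, v in zip(za, zb)) / len(za)

def per_feature_std(z):
    """Population standard deviation of each embedding dimension across the batch."""
    n, d = len(z), len(z[0])
    means = [sum(row[j] for row in z) / n for j in range(d)]
    return [math.sqrt(sum((row[j] - means[j]) ** 2 for row in z) / n) for j in range(d)]

def collapsed_encoder(x):
    """Hypothetical encoder that ignores its input and always emits the same vector."""
    return [0.5, -0.25, 0.125]

views = [[1.0, 2.0], [3.0, 1.0], [0.0, 4.0]]      # toy "original" inputs
augmented = [[1.1, 1.9], [2.8, 1.2], [0.3, 3.7]]  # toy "augmented" inputs

za = [collapsed_encoder(x) for x in views]
zb = [collapsed_encoder(x) for x in augmented]

print(mean_squared_distance(za, zb))  # 0.0: perfect agreement between the views
print(per_feature_std(za))            # [0.0, 0.0, 0.0]: nothing about the input survives
```

This is why agreement alone is not a sufficient objective: some additional pressure (negative samples, or an explicit variance or decorrelation term) is needed to keep the embeddings informative.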

To prevent collapse, self-supervised representation learning with a joint embedding architecture follows one of two approaches: contrastive or non-contrastive learning. Contrastive methods explicitly push dissimilar images apart and pull semantically similar images closer together in the representation space. This often requires mining dissimilar (negative) images from a memory bank or from the current batch, which is costly and memory intensive2. SimCLR and MoCo are examples of contrastive self-supervised methods. SimCLR maximizes the agreement between augmented views of the same image and minimizes the agreement between different images, whereas MoCo maintains a dynamic dictionary of negative samples for better contrasting, yielding better representations14,15.
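The contrastive objective described above can be illustrated with the NT-Xent (normalized temperature-scaled cross-entropy) loss commonly associated with SimCLR. This is a minimal, dependency-free sketch rather than the SimCLR reference implementation; the temperature of 0.5 and the function names are assumptions.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def nt_xent(za, zb, temperature=0.5):
    """NT-Xent loss; za[i] and zb[i] are two augmented views of the same sample i.

    Each of the 2N embeddings acts as an anchor: its positive is the other view of
    the same sample, and the remaining 2N - 2 embeddings in the batch are negatives.
    """
    z = za + zb
    n = len(za)
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)  # index of the other view of sample i
        sims = [cosine(z[i], z[k]) / temperature for k in range(2 * n) if k != i]
        log_denominator = math.log(sum(math.exp(s) for s in sims))
        total += log_denominator - cosine(z[i], z[pos]) / temperature
    return total / (2 * n)

views_a = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]  # second views agree with their own samples
swapped = [[0.1, 0.9], [0.9, 0.1]]  # second views are paired with the wrong samples
print(nt_xent(views_a, aligned) < nt_xent(views_a, swapped))  # True
```

The negatives appear only in the denominator, which is why contrastive methods benefit from large batches or memory banks, the cost mentioned above.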

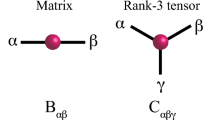

Non-contrastive methods, in contrast, focus on maximizing the information content of the output embeddings. VICReg is one example: it works well without weight sharing, batch-wise or feature-wise normalization, stop-gradient operations, memory banks, or contrastive samples. One advantage of VICReg is that it does not require the two encoders to share the same architecture or input modality, so it can be applied to multimodal data such as text and audio. VICReg uses three loss terms, namely variance, invariance, and covariance, to construct a useful representation space. The variance term keeps the standard deviation of each variable of the embedding vectors above a threshold, preventing the model from producing identical embeddings and thus preventing collapse. The invariance term minimizes the mean squared distance between the embedding vectors from the two encoders, pulling similar data points closer together in the space. To avoid informational collapse, the covariance term drives the covariances between every pair of embedding variables toward zero. This keeps the embedding variables decorrelated, allowing them to span the entire space without losing useful information.

Training a joint embedding architecture with the VICReg loss consists of four steps. First, encoder networks take different views of an image as input and output representations. Second, the representation vectors are fed into expander networks that map them into an embedding space. Third, the VICReg loss is computed from the embeddings produced by both expander networks. Finally, the parameters of the encoder and expander networks are updated. The expander networks decorrelate the embedding variables: by expanding the dimensions in a non-linear fashion, they reduce the dependencies between the variables of the representation vector and eliminate the information by which the two representations differ. Another method that maximizes the information content of the embeddings also requires no negative samples: it trains the normalized cross-correlation matrix between the embeddings from the two encoders to be as close to the identity matrix as possible18.
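The cross-correlation objective in the last sentence matches the Barlow Twins formulation and can be sketched as follows. The off-diagonal weight of 5e-3, the small epsilon, and the helper names are assumptions for illustration; each feature is standardized along the batch before the two views are correlated.

```python
import math

def standardize(z, eps=1e-5):
    """Zero-mean, unit-variance normalisation of each feature along the batch."""
    n, d = len(z), len(z[0])
    cols = []
    for j in range(d):
        col = [row[j] for row in z]
        mu = sum(col) / n
        sd = math.sqrt(sum((x - mu) ** 2 for x in col) / n + eps)
        cols.append([(x - mu) / sd for x in col])
    return [[cols[j][i] for j in range(d)] for i in range(n)]

def cross_correlation(za, zb):
    """d x d matrix C[i][j]: correlation of feature i of view A with feature j of view B."""
    a, b = standardize(za), standardize(zb)
    n, d = len(a), len(a[0])
    return [[sum(a[k][i] * b[k][j] for k in range(n)) / n for j in range(d)] for i in range(d)]

def barlow_twins_loss(za, zb, off_diag_weight=5e-3):
    """Push the diagonal of C towards 1 (invariance) and the off-diagonal towards 0."""
    c = cross_correlation(za, zb)
    d = len(c)
    on_diag = sum((1.0 - c[i][i]) ** 2 for i in range(d))
    off_diag = sum(c[i][j] ** 2 for i in range(d) for j in range(d) if i != j)
    return on_diag + off_diag_weight * off_diag
```

For two identical batches whose features are already uncorrelated, C is (almost exactly) the identity and the loss is near zero; if one feature duplicates another, the off-diagonal entries approach 1 and are penalized.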

Recent works have adopted VICReg across diverse domains. Used as a pre-training loss, VICReg improves Brugada-syndrome classification from ECGs with a standard convolutional neural network (CNN)20. DA-VICReg avoids augmentations by treating time-synchronous engine-vibration signals as positive pairs21, and uses attention to extract fault features that are invariant across operating conditions. VIbCReg (Variance-Invariance-better-Covariance) refines the covariance term of the VICReg loss and adds IterNorm to the projector, resulting in faster convergence and higher accuracy on time series data, including ECG data22. VICRegL is a variant of VICReg that focuses on local features by applying its loss to both local and global features23. A recent study demonstrated that ConvNeXt pretrained with the VICRegL loss learns better wound structures, outperforming ResNet benchmarks24. JOSENet, a video violence detection system, also uses a custom VICReg loss25 and achieves higher accuracy with reduced memory and computation requirements. The potential of VICReg and other SSL methods in reinforcement learning (RL) has also been studied; recent work suggests that incorporating RL-specific augmentations, such as replay weighting or state masking, into the VICReg approach significantly enhances data efficiency26. In MXene property prediction, graph contrastive learning with a VICReg-inspired objective is used to embed material structures for property regression27. More recently, IMSVD was introduced, which discretizes latent variables for mutual information maximization28 and explicitly enforces invariance with minimal redundancy, as in VICReg. These contributions adapt the technique to new domains and data types, such as time series signals, satellite imagery, reinforcement learning, and graphs29.

Learning with backpropagation consists of two passes through the neural network. The forward pass outputs predictions based on the combination of the features learned so far, and a loss is computed from the predicted and target outputs. The error derivatives then flow backward through the layers, and the parameters are updated accordingly4. There have been several attempts to mitigate the drawbacks of backpropagation using layer-wise training. A greedy layer-wise learning approach that scales to large datasets such as ImageNet constructs a deep convolutional neural network by sequentially solving 1-hidden-layer auxiliary problems, inheriting both the advantages of shallow networks and the representational power of deep networks30. Another layer-wise CNN trained with local losses approaches state-of-the-art performance on several human action recognition (HAR) benchmarks31. Research has also explored the complex interactions between the layers of deep neural networks. Shallower layers of a DNN tend to converge faster than deeper layers because they detect evenly distributed low-level features, whereas deeper layers combine these features to perform specific tasks. This results in a comparatively flatter loss landscape in shallower layers, which favors faster convergence32.

The Forward-Forward algorithm is a greedy multi-layer learning procedure that addresses two limitations of backpropagation: its implausibility as a model of learning in the cortex and its computational inefficiency. The procedure is inspired by Boltzmann machines and Noise Contrastive Estimation33,34. It uses two forward passes to update the parameters. The first forward pass works on real (positive) data and adjusts the weights of each hidden layer to increase goodness, whereas the second forward pass uses negative data and adjusts the weights to decrease goodness at every hidden layer. In the supervised setting, samples paired with correct labels are referred to as positive data, and samples paired with incorrect labels are called negative data. Goodness can be measured as the sum of squared neural activities in a layer (or, alternatively, its negative). With the sum of squares as goodness, the goal of learning is to keep the goodness above a threshold for real data and below that threshold for negative data. The model can therefore classify an input as positive or negative by applying the logistic function to the goodness minus the threshold. A goodness value above the threshold implies that the input is paired with the correct label; in this way, the model learns to associate images with their true labels5.
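The goodness computation and the logistic decision described above can be sketched as below. Writing the per-layer objective as a binary cross-entropy is one common formulation and is an assumption here, as are the function names and the threshold used in the example.

```python
import math

def goodness(activities):
    """Sum of squared activities of one hidden layer for one input."""
    return sum(a * a for a in activities)

def prob_positive(activities, threshold):
    """Logistic probability that the input is positive data: sigmoid(goodness - threshold)."""
    return 1.0 / (1.0 + math.exp(-(goodness(activities) - threshold)))

def local_ff_loss(pos_batch, neg_batch, threshold):
    """Per-layer binary cross-entropy: high goodness for positive data, low for negative data."""
    loss = -sum(math.log(prob_positive(a, threshold)) for a in pos_batch)
    loss -= sum(math.log(1.0 - prob_positive(a, threshold)) for a in neg_batch)
    return loss / (len(pos_batch) + len(neg_batch))

print(goodness([1.0, 2.0]))            # 5.0
print(prob_positive([1.0, 2.0], 5.0))  # 0.5: exactly at the threshold
```

Because this loss depends only on one layer's activities, each layer can be optimized from its own forward passes, with no error derivatives sent to earlier layers.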

A recent study adapts the Forward-Forward algorithm to spiking neural networks (SNNs), showing that SNNs trained with the algorithm achieve accuracy comparable to SNNs trained with backpropagation35. In another study, the Forward-Forward algorithm is combined with a layer-wise supervised contrastive objective36; the approach gradually tightens the representations of samples from the same class, resulting in substantial accuracy gains and much faster convergence. Overall, these extensions suggest that coupling the Forward-Forward algorithm with biologically inspired and contrastive learning approaches narrows the performance gap between backpropagation and local-update or biologically plausible learning paradigms. Table 1 summarizes the related works.

Methodology

This research investigates the feasibility of using local VICReg losses for layer-wise training of DNNs. At each layer, a local VICReg loss is calculated from the output representations of the original and augmented versions of the data, and the parameters of that layer are updated based on the local gradient. Figure 1 illustrates the proposed training process. The model receives a batch of images denoted as (I) and processes two batches of images in a joint embedding architecture whose two branches share their weights. The first input, denoted as (X), is (I) itself. The second input (\(X^{\prime }\)) is produced by applying to each image of (I) one augmentation (t) drawn uniformly at random from a set of augmentation techniques (T). The inputs (X) and (\(X^{\prime }\)) pass through the layers of the model (f1, f2, f3, f4), producing the embeddings (Z1, Z2, Z3, Z4) and (\(Z1^{\prime }\), \(Z2^{\prime }\), \(Z3^{\prime }\), \(Z4^{\prime }\)), respectively. A VICReg loss is calculated from the output embeddings of each layer independently of the previous and subsequent layers, and this local loss is used to compute gradients and update the parameters of that layer only. This approach encourages the formation of increasingly abstract and refined representation spaces in deeper layers. Algorithm 1 summarizes the proposed layer-wise training procedure for DNNs.
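The procedure in Algorithm 1 can be illustrated with the following dependency-free sketch. It is not the authors' implementation: the toy dense + SELU layers, the Glorot initialization, the per-layer learning rates, and especially the central finite-difference gradient (used in place of automatic differentiation only to keep the example self-contained) are assumptions. What it shows is structural: each layer's update depends only on its own VICReg loss, and its outputs are passed on as plain values, so no gradient reaches earlier layers.

```python
import math
import random

ALPHA, LAMBDA = 1.67326324, 1.05070098  # SELU constants quoted in the text

def selu(x):
    return LAMBDA * x if x > 0 else LAMBDA * ALPHA * (math.exp(x) - 1.0)

def dense(params, batch):
    """One toy layer: SELU(x W + b) applied to every row of the batch."""
    w, b = params
    return [[selu(sum(x[i] * w[i][j] for i in range(len(x))) + b[j])
             for j in range(len(b))] for x in batch]

def vicreg(za, zb, w_v=25.0, w_s=25.0, w_c=1.0, gamma=1.0, eps=1e-4):
    """VICReg loss on two batches of embeddings (lists of rows)."""
    n, d = len(za), len(za[0])
    def var_cov_terms(z):
        mu = [sum(r[j] for r in z) / n for j in range(d)]
        cov = [[sum((r[j] - mu[j]) * (r[k] - mu[k]) for r in z) / (n - 1)
                for k in range(d)] for j in range(d)]
        v = sum(max(0.0, gamma - math.sqrt(cov[j][j] + eps)) for j in range(d)) / d
        c = sum(cov[j][k] ** 2 for j in range(d) for k in range(d) if j != k) / d
        return v, c
    (va, ca), (vb, cb) = var_cov_terms(za), var_cov_terms(zb)
    s = sum(sum((p - q) ** 2 for p, q in zip(u, t)) for u, t in zip(za, zb)) / n
    return w_s * s + w_v * (va + vb) + w_c * (ca + cb)

def local_loss(params, h, h_aug):
    return vicreg(dense(params, h), dense(params, h_aug))

def local_gradient(params, h, h_aug, step=1e-4):
    """Central finite differences of this layer's own loss (a stand-in for autodiff)."""
    w, b = params
    grad_w = [[0.0] * len(b) for _ in w]
    grad_b = [0.0] * len(b)
    for values, grads in list(zip(w, grad_w)) + [(b, grad_b)]:
        for j in range(len(values)):
            original = values[j]
            values[j] = original + step
            up = local_loss(params, h, h_aug)
            values[j] = original - step
            down = local_loss(params, h, h_aug)
            values[j] = original
            grads[j] = (up - down) / (2 * step)
    return grad_w, grad_b

def glorot_layer(n_in, n_out, rng):
    limit = math.sqrt(6.0 / (n_in + n_out))
    w = [[rng.uniform(-limit, limit) for _ in range(n_out)] for _ in range(n_in)]
    return w, [0.0] * n_out

def train_layerwise(x, x_aug, widths, steps=5, base_lr=0.01, seed=42):
    """Update every layer at every step, each from its own local VICReg loss."""
    rng = random.Random(seed)
    dims = [len(x[0])] + list(widths)
    layers = [glorot_layer(dims[k], dims[k + 1], rng) for k in range(len(widths))]
    history = [[] for _ in layers]
    for _ in range(steps):
        h, h_aug = x, x_aug
        for k, (w, b) in enumerate(layers):
            lr = base_lr * 0.1 ** k  # each deeper layer uses a 10x smaller learning rate
            history[k].append(local_loss((w, b), h, h_aug))
            grad_w, grad_b = local_gradient((w, b), h, h_aug)
            for row, grow in zip(w, grad_w):
                for j in range(len(row)):
                    row[j] -= lr * grow[j]
            for j in range(len(b)):
                b[j] -= lr * grad_b[j]
            # Outputs move on as plain values: nothing flows back into layer k.
            h, h_aug = dense((w, b), h), dense((w, b), h_aug)
    return layers, history
```

In a framework with automatic differentiation, the same locality is usually obtained by stopping gradients between layers and giving each layer its own optimizer or parameter group.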

After pre-training the DNN with the proposed layer-wise procedure, we measure the informativeness of the learned feature space using clustering quality metrics, on the intuition that informative features should form meaningful and distinct clusters in the representation space. We also assess the effectiveness of the features by fine-tuning the DNN on a small labeled subset of the data and comparing it with a linear classifier trained on the frozen pre-trained layers and with a classifier of the same architecture trained from scratch on the same small subsets.

Dataset description

To evaluate the proposed approach, we use the MNIST37 dataset (10 classes), the EMNIST38 Balanced dataset (47 classes), the Fashion MNIST39 dataset (10 classes), and the CIFAR-100 dataset40 (100 classes). For training, each dataset is processed into pairs, each consisting of an original image and an augmented version of it. We use four data augmentation techniques: random shifting, random rotation, random zooming, and blurring.

To augment an image, one of the four augmentation techniques is chosen uniformly at random and applied. Pixel values are normalized to the range [0, 1]. Random augmentation is reapplied at each epoch to expose the model to diverse augmented versions of the data and improve its robustness. A fixed random seed (42) is used to ensure reproducibility.
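The augmentation step can be sketched in pure Python as follows. The parameter ranges (shifts of up to 2 pixels, rotations of up to 15 degrees, zoom factors between 0.9 and 1.1, a 3x3 box blur), the nearest-neighbour resampling, and the per-epoch seeding scheme (seed + epoch) are hypothetical choices, since the text does not specify them.

```python
import math
import random

def shift(img, rng, max_shift=2):
    """Translate by up to max_shift pixels in each direction, filling with zeros."""
    h, w = len(img), len(img[0])
    dy, dx = rng.randint(-max_shift, max_shift), rng.randint(-max_shift, max_shift)
    return [[img[y - dy][x - dx] if 0 <= y - dy < h and 0 <= x - dx < w else 0.0
             for x in range(w)] for y in range(h)]

def _resample(img, source_of):
    """Nearest-neighbour resampling: source_of(y, x) gives the source coordinates."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            sy, sx = source_of(y, x)
            iy, ix = round(sy), round(sx)
            row.append(img[iy][ix] if 0 <= iy < h and 0 <= ix < w else 0.0)
        out.append(row)
    return out

def rotate(img, rng, max_degrees=15.0):
    """Rotate about the image centre by a random angle in [-max_degrees, max_degrees]."""
    cy, cx = (len(img) - 1) / 2, (len(img[0]) - 1) / 2
    t = math.radians(rng.uniform(-max_degrees, max_degrees))
    c, s = math.cos(t), math.sin(t)
    return _resample(img, lambda y, x: (cy + (y - cy) * c - (x - cx) * s,
                                        cx + (y - cy) * s + (x - cx) * c))

def zoom(img, rng, low=0.9, high=1.1):
    """Scale about the centre by a random factor (> 1 zooms in)."""
    cy, cx = (len(img) - 1) / 2, (len(img[0]) - 1) / 2
    f = rng.uniform(low, high)
    return _resample(img, lambda y, x: (cy + (y - cy) / f, cx + (x - cx) / f))

def blur(img, rng):
    """3x3 box blur (rng unused; kept so all augmentations share one signature)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[j][i] for j in range(y - 1, y + 2) for i in range(x - 1, x + 2)
                    if 0 <= j < h and 0 <= i < w]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out

AUGMENTATIONS = [shift, rotate, zoom, blur]

def make_pairs(images, seed=42, epoch=0):
    """Normalise 0-255 images to [0, 1] and pair each with one random augmentation."""
    rng = random.Random(seed + epoch)  # new augmentations each epoch, yet reproducible
    pairs = []
    for img in images:
        x = [[p / 255.0 for p in row] for row in img]
        t = rng.choice(AUGMENTATIONS)  # uniform choice among the four techniques
        pairs.append((x, t(x, rng)))
    return pairs
```

Every transform either copies pixels, inserts zeros, or averages neighbours, so normalized values stay within [0, 1] after augmentation.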

We evaluate our approach on multiple datasets using consistent split ratios. MNIST and Fashion MNIST each contain 70,000 images, of which we allocate 50,000 for training (71.43%), 5,000 for validation (7.14%), 5,000 for fine-tuning (7.14%), and 10,000 for testing (14.29%). The EMNIST Balanced dataset, comprising 131,600 images, is split in the same proportions: 94,000 for training (71.43%), 9,400 for validation (7.14%), 9,400 for fine-tuning (7.14%), and 18,800 for testing (14.29%). For the more complex CIFAR-100 dataset, which contains 60,000 images, we use 40,000 for training (66.67%), 5,000 for validation (8.33%), 5,000 for fine-tuning (8.33%), and 10,000 for testing (16.67%). These splits ensure consistency while enabling robust evaluation across datasets of varying complexity. Figure 2 presents examples of original and augmented MNIST images.
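The reported percentages can be checked with a few lines of arithmetic; the dictionary below simply restates the counts from the text.

```python
SPLITS = {  # (train, validation, fine-tuning, test) image counts from the text
    "MNIST": (50_000, 5_000, 5_000, 10_000),
    "Fashion MNIST": (50_000, 5_000, 5_000, 10_000),
    "EMNIST Balanced": (94_000, 9_400, 9_400, 18_800),
    "CIFAR-100": (40_000, 5_000, 5_000, 10_000),
}

def split_percentages(counts):
    """Total size and each split's share in percent, rounded to two decimals."""
    total = sum(counts)
    return total, [round(100 * c / total, 2) for c in counts]

for name, counts in SPLITS.items():
    print(name, *split_percentages(counts))
# MNIST 70000 [71.43, 7.14, 7.14, 14.29]
# Fashion MNIST 70000 [71.43, 7.14, 7.14, 14.29]
# EMNIST Balanced 131600 [71.43, 7.14, 7.14, 14.29]
# CIFAR-100 60000 [66.67, 8.33, 8.33, 16.67]
```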

Model description

The research uses four DNN models, one each for the MNIST, EMNIST, Fashion MNIST, and CIFAR-100 datasets. For MNIST, a model with four dense layers of 500, 400, 300, and 200 neurons, respectively, is used. A larger architecture with four layers of 1000, 800, 600, and 400 neurons is used for the more complex EMNIST, Fashion MNIST, and CIFAR-100 datasets, which require models capable of capturing intricate patterns and handling a larger number of classes. Each layer uses an L2 kernel regularizer with a regularization factor of 0.1. Although VICReg can handle heterogeneous encoders and multimodal inputs, here both branches share the same set of parameters and receive the same type of input. The activation function and kernel initializer are selected through empirical analysis. As expected, the effect of different activation functions and kernel initializers is negligible; however, the Scaled Exponential Linear Unit (SELU) activation function with Glorot uniform initialization performs slightly better than the other combinations, so this combination is used in the DNNs for the experimental analysis.

SELU activation function: SELU is an activation function that enables self-normalizing neural networks41. Its main objective is to keep the mean and variance of activations stable when training deep neural networks, which helps ensure a smooth and effective learning process. Equation (1) defines SELU, where x is the input, and \(\lambda\) and \(\alpha\) are predefined constants (\(\alpha = 1.67326324\) and \(\lambda = 1.05070098\)).

\[ \mathrm{selu}(x) = \lambda \begin{cases} x, & x > 0 \\ \alpha \left(e^{x} - 1\right), & x \le 0 \end{cases} \tag{1} \]
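A minimal sketch of SELU as a scalar Python function, using the constants quoted above:

```python
import math

ALPHA = 1.67326324   # alpha, as quoted in the text
LAMBDA = 1.05070098  # lambda, as quoted in the text

def selu(x):
    """SELU: lambda * x for x > 0, lambda * alpha * (exp(x) - 1) otherwise."""
    if x > 0:
        return LAMBDA * x
    return LAMBDA * ALPHA * (math.exp(x) - 1.0)
```

For large negative inputs the output saturates at \(-\lambda\alpha \approx -1.758\), while positive inputs are scaled by \(\lambda\) slightly above 1; this combination is what lets activations drift toward zero mean and unit variance.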

Glorot uniform: Glorot uniform initialization sets the initial values of the weights in a neural network. Its goal is to prevent vanishing or exploding gradients during training by keeping the variance of activations roughly the same across layers8. The weights W are drawn as in Equation (2), where \(n_{\text {in}}\) is the number of input units of the layer, \(n_{\text {out}}\) is the number of output units of the layer, and U(a, b) denotes a uniform distribution on the interval [a, b].

\[ W \sim U\left(-\sqrt{\frac{6}{n_{\text{in}} + n_{\text{out}}}},\ \sqrt{\frac{6}{n_{\text{in}} + n_{\text{out}}}}\right) \tag{2} \]
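A minimal standard-library sketch of Glorot uniform sampling follows; the function name and the seed are illustrative. Since the variance of U(-a, a) is a²/3, the limit above gives each weight a variance of 2/(n_in + n_out).

```python
import math
import random

def glorot_uniform(n_in, n_out, seed=42):
    """Return an n_in x n_out weight matrix drawn from U(-limit, limit), and the limit."""
    rng = random.Random(seed)
    limit = math.sqrt(6.0 / (n_in + n_out))
    weights = [[rng.uniform(-limit, limit) for _ in range(n_out)] for _ in range(n_in)]
    return weights, limit

w, limit = glorot_uniform(784, 500)  # first layer of the MNIST model
```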

Table 2 describes the model architecture for MNIST, including layer types, output shapes, and parameter counts. All models consist of an input layer followed by four dense layers with progressively lower output dimensions. The pyramidal structure is chosen because the upper layers retain fewer but more abstract components, preserving the same level of information by learning patterns. The MNIST model, with 773,400 parameters in total, is relatively small owing to the simplicity of the dataset. The EMNIST model is larger, with 2,306,800 parameters, to accommodate the larger number of classes and more intricate patterns in the data. The Fashion MNIST model mirrors the EMNIST architecture with the same 2,306,800 parameters, as it also needs greater capacity to handle the visual complexity of fashion images. The CIFAR-100 model is the largest, with 4,594,800 parameters; its first layer accommodates the higher-dimensional color inputs, capturing the rich diversity of the CIFAR-100 dataset, which includes 100 classes and more intricate image structures.
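The parameter counts can be reproduced by summing the weights and biases of each dense stack, assuming flattened 28×28 grayscale inputs for MNIST, EMNIST, and Fashion MNIST and flattened 32×32×3 color inputs for CIFAR-100:

```python
INPUT_DIMS = {"MNIST": 28 * 28, "EMNIST": 28 * 28,
              "Fashion MNIST": 28 * 28, "CIFAR-100": 32 * 32 * 3}
WIDTHS = {"MNIST": [500, 400, 300, 200],
          "EMNIST": [1000, 800, 600, 400],
          "Fashion MNIST": [1000, 800, 600, 400],
          "CIFAR-100": [1000, 800, 600, 400]}

def dense_param_count(input_dim, widths):
    """Weights plus biases of a stack of fully connected layers."""
    total, fan_in = 0, input_dim
    for width in widths:
        total += fan_in * width + width
        fan_in = width
    return total

for name in WIDTHS:
    print(name, dense_param_count(INPUT_DIMS[name], WIDTHS[name]))
# MNIST 773400
# EMNIST 2306800
# Fashion MNIST 2306800
# CIFAR-100 4594800
```

The CIFAR-100 model differs from the EMNIST one only in its first layer (3072 × 1000 instead of 784 × 1000 weights), which accounts for the extra 2,288,000 parameters.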

Hyperparameter optimization

We determine suitable weights for the variance, invariance, and covariance terms, which play a significant role in training. To find the best weights, we evaluate the five weight combinations shown in Table 3. To identify the optimal configuration, we first replicate all weight combinations reported in the VICReg study2, which allows us to assess the impact of each configuration on clustering quality and representation performance in our model. For each combination, the model is trained layer-wise on MNIST three times for 50 epochs to ensure that the choice is robust. The learning rate of the first layer is 0.01 and is decreased by a factor of 10 in each subsequent layer; the batch size is 2048, and the Adam optimizer is used with an exponential learning rate decay. Each combination is evaluated on MNIST using the Davies-Bouldin (DB) index and the Calinski-Harabasz (CH) score. After comprehensive testing, we find that the (25, 25, 1) combination yields the best clustering performance and representation quality. We also report the standard deviation (SD) of the DB values across trials to check whether performance is consistent; the values remain consistent across trials, showing that the approach achieves low DB values (good cluster separability) with little variability between random trials.

These metrics evaluate cluster separability and compactness. The DB index measures cluster quality based on how small the variation within each cluster is and how well the clusters are separated from one another42; lower DB values indicate better clustering. The CH score measures the ratio of between-cluster dispersion to within-cluster dispersion, with higher values indicating better clusters43. The scores show that, despite having fewer neurons, the deeper layers of the final model capture the same amount of information: the scores of the layers converge, meaning that the lower-dimensional spaces still capture the information effectively. The DB index is averaged over three runs for each configuration, and we choose the combination with the best average score, namely 25 for the variance term, 25 for the invariance term, and 1 for the covariance term of the VICReg loss. Table 3 summarizes the weight combinations for the loss terms, along with their best and average DB indices over the three runs.
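For reference, both metrics can be computed as in the sketch below, which follows the standard definitions (the same ones behind `davies_bouldin_score` and `calinski_harabasz_score` in scikit-learn's `sklearn.metrics`). The pure-Python helpers are illustrative and assume at least two clusters with distinct centroids.

```python
import math

def _centroid(points):
    return [sum(p[j] for p in points) / len(points) for j in range(len(points[0]))]

def _dist(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def _group(x, labels):
    clusters = {}
    for point, label in zip(x, labels):
        clusters.setdefault(label, []).append(point)
    return list(clusters.values())

def davies_bouldin(x, labels):
    """Mean over clusters of the worst (s_i + s_j) / d(c_i, c_j) ratio; lower is better."""
    groups = _group(x, labels)
    cents = [_centroid(g) for g in groups]
    # s_i: average distance of a cluster's points to its centroid
    scatter = [sum(_dist(p, c) for p in g) / len(g) for g, c in zip(groups, cents)]
    k = len(groups)
    worst = [max((scatter[i] + scatter[j]) / _dist(cents[i], cents[j])
                 for j in range(k) if j != i) for i in range(k)]
    return sum(worst) / k

def calinski_harabasz(x, labels):
    """[between dispersion / (k - 1)] / [within dispersion / (n - k)]; higher is better."""
    groups = _group(x, labels)
    n, k = len(x), len(groups)
    overall = _centroid(x)
    between, within = 0.0, 0.0
    for g in groups:
        c = _centroid(g)
        between += len(g) * _dist(c, overall) ** 2
        within += sum(_dist(p, c) ** 2 for p in g)
    return (between / (k - 1)) / (within / (n - k))
```

For two tight unit squares of points centred at (0.5, 0.5) and (10.5, 10.5), the DB index is 0.1 and the CH score is 600.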

After finalizing the loss weights, learning rate, activation function, and kernel initializer, the model is trained again to evaluate the effectiveness of the proposed method. The learning rate is initially set to 0.1 for the first layer and is reduced by a factor of 10 in each successive deeper layer. This choice keeps the deeper layers stable: deeper layers extract abstract features and are more sensitive to large, abrupt changes, whereas shallower layers benefit from faster learning of low-level features, so a higher learning rate helps there. In addition, an exponential learning rate schedule decreases each layer's learning rate gradually at every epoch, starting from the initial rates above and decaying by a factor of 0.1 every 50 epochs. The parameters of all layers are updated at every iteration, rather than training one layer fully before moving on to the next.
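The per-layer, per-epoch learning rate described above can be written as a small function. Treating the decay as continuous in the epoch mirrors the formula of the Keras `ExponentialDecay` schedule (`initial_learning_rate * decay_rate ** (step / decay_steps)`), but whether the authors used that class is an assumption, as are the parameter names.

```python
def layer_learning_rate(layer, epoch, base_lr=0.1, layer_factor=0.1,
                        decay_rate=0.1, decay_epochs=50):
    """Learning rate of a 1-indexed layer at a (possibly fractional) epoch."""
    initial = base_lr * layer_factor ** (layer - 1)        # 0.1, 0.01, 0.001, ...
    return initial * decay_rate ** (epoch / decay_epochs)  # x0.1 every 50 epochs

for layer in range(1, 5):
    print(layer, [f"{layer_learning_rate(layer, e):.1e}" for e in (0, 25, 50)])
```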

Mathematical formulation of VICReg-based layer-wise representation learning

During training, shuffling and data augmentation are applied at each epoch so that the model encounters diverse sample pairs, which enhances robustness. In the proposed method, each layer receives two inputs, one derived from the original data and one from its augmented version, and transforms them into two batches of embeddings. The embeddings are then used to compute the VICReg loss, as shown in Fig. 3.

Each layer \(l\) processes the embedding outputs of the previous layer \(l-1\): the outputs \({\text{Z}}_{l-1}\) and \({\text{Z}}'_{l-1}\) are fed into layer \(l\) to compute its embedding outputs \({\text{Z}}_l\) and \({\text{Z}}'_l\). The three loss terms are then calculated separately. The local update in each layer minimizes the VICReg loss, which includes the variance \(v({\text{Z}}_l)\), invariance \(s({\text{Z}}_l, {\text{Z}}'_l)\), and covariance \(c({\text{Z}}_l)\) terms. First, the variance term keeps the embeddings of the samples in a batch different from one another, minimizing the risk of collapse, by keeping the standard deviation of each embedding variable above a defined threshold. Here, \({z}_{ij}\) denotes the j-th feature of the i-th sample in the batch, \({\bar{z}}_{j}\) is the mean of the j-th feature over all n samples, and \(\mathrm{Var}(z_{j})\) is the variance of the j-th feature in the current batch. Second, the invariance term minimizes the mean squared distance between the representation of each original sample and that of its augmented version, pulling the representations of similar data closer together. Here, \({z}_{i}\) is the representation of the i-th original sample, and \({z}'_{i}\) is the representation of its augmented version; minimizing their distance encourages the model to learn useful augmentation-invariant patterns. Third, the covariance term drives the covariance between every pair of embedding variables toward zero so that the embeddings spread over the whole representation space, preventing the informational collapse commonly seen in self-supervised learning. Throughout, the samples in a mini-batch are indexed by \(i = 1, 2, \dots , n\), and the embedding dimensions by \(j = 1, 2, \dots , d\).
This strategy creates compact and informative representation spaces, improving the model's performance, particularly when annotated data are scarce. VICReg is a self-supervised learning method for image representation that avoids collapse without requiring negative examples or momentum encoders, using a loss function composed of three terms, shown in Equations (3)–(8). Here, \({\text{Z}}\) and \({\text{Z}}'\) are the embeddings of the original and augmented inputs, \(d\) is the dimensionality of the embeddings, \(\gamma\) is the target value (threshold) for the standard deviation, and \(\epsilon\) is a small positive constant that ensures numerical stability.

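Variance loss term:

\[ \mathrm{Var}(z_{j}) = \frac{1}{n-1}\sum_{i=1}^{n}\left(z_{ij} - \bar{z}_{j}\right)^{2}, \qquad \bar{z}_{j} = \frac{1}{n}\sum_{i=1}^{n} z_{ij} \tag{3} \]

\[ v({\text{Z}}) = \frac{1}{d}\sum_{j=1}^{d} \max\left(0,\ \gamma - \sqrt{\mathrm{Var}(z_{j}) + \epsilon}\right) \tag{4} \]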

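Invariance loss term:

\[ s({\text{Z}}, {\text{Z}}') = \frac{1}{n}\sum_{i=1}^{n} \left\lVert z_{i} - z'_{i} \right\rVert_{2}^{2} \tag{5} \]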

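Covariance loss term:

\[ C({\text{Z}}) = \frac{1}{n-1}\sum_{i=1}^{n}\left(z_{i} - \bar{z}\right)\left(z_{i} - \bar{z}\right)^{\top}, \qquad \bar{z} = \frac{1}{n}\sum_{i=1}^{n} z_{i} \tag{6} \]

\[ c({\text{Z}}) = \frac{1}{d}\sum_{j \neq k} \left[C({\text{Z}})\right]_{j,k}^{2} \tag{7} \]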

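The combined loss function, with weights \(w_{v}\), \(w_{s}\), and \(w_{c}\) for the variance, invariance, and covariance terms, is:

\[ \ell({\text{Z}}, {\text{Z}}') = w_{s}\, s({\text{Z}}, {\text{Z}}') + w_{v}\left[v({\text{Z}}) + v({\text{Z}}')\right] + w_{c}\left[c({\text{Z}}) + c({\text{Z}}')\right] \tag{8} \]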
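The combined VICReg loss can be transcribed into Python as a minimal sketch. It assumes the VICReg defaults \(\gamma = 1\) and \(\epsilon = 10^{-4}\) and the (variance, invariance, covariance) weights (25, 25, 1) selected in the hyperparameter search; embeddings are plain lists of rows, one row per sample, and the function names are illustrative.

```python
import math

def covariance_matrix(z):
    """Unbiased (1 / (n - 1)) covariance of the embedding dimensions over the batch."""
    n, d = len(z), len(z[0])
    mu = [sum(row[j] for row in z) / n for j in range(d)]
    return [[sum((row[j] - mu[j]) * (row[k] - mu[k]) for row in z) / (n - 1)
             for k in range(d)] for j in range(d)]

def variance_term(z, gamma=1.0, eps=1e-4):
    """Hinge on each dimension's standard deviation, averaged over dimensions."""
    cov = covariance_matrix(z)
    return sum(max(0.0, gamma - math.sqrt(cov[j][j] + eps)) for j in range(len(cov))) / len(cov)

def invariance_term(za, zb):
    """Mean squared Euclidean distance between each embedding and its augmented pair."""
    return sum(sum((a - b) ** 2 for a, b in zip(u, v)) for u, v in zip(za, zb)) / len(za)

def covariance_term(z):
    """Sum of squared off-diagonal covariances, divided by the dimensionality d."""
    cov = covariance_matrix(z)
    d = len(cov)
    return sum(cov[j][k] ** 2 for j in range(d) for k in range(d) if j != k) / d

def vicreg_loss(za, zb, w_v=25.0, w_s=25.0, w_c=1.0):
    """Weighted sum of the three terms; both branches get variance and covariance terms."""
    return (w_s * invariance_term(za, zb)
            + w_v * (variance_term(za) + variance_term(zb))
            + w_c * (covariance_term(za) + covariance_term(zb)))

collapsed = [[0.5, 0.5]] * 4                                 # every sample maps to one point
spread = [[2.0, 0.0], [-2.0, 0.0], [0.0, 2.0], [0.0, -2.0]]  # varied and decorrelated
print(vicreg_loss(collapsed, collapsed))  # about 49.5: both variance hinges almost fully active
print(vicreg_loss(spread, spread))        # 0.0
```

The collapsed batch has zero invariance and covariance penalties, so only the variance hinge detects the collapse; the spread batch has per-dimension standard deviations above \(\gamma\) and uncorrelated dimensions, so every term vanishes.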

The VICReg loss is used to update the parameters of each layer locally, and each local loss is independent of the preceding and subsequent layers. Through these local updates and the pyramidal structure of the architecture, the model captures useful information in increasingly compact representation spaces.

Experimental results

The model trained layer-wise with the proposed approach is evaluated in two ways. First, a linear classifier is trained on the representations of the pre-trained model, with all pre-trained layers frozen; several subsets of the processed datasets are used to evaluate the efficiency of the trained model. Second, the classification accuracy of a fine-tuned model (all layers of the pre-trained model are fine-tuned) is compared with that of a baseline of the same architecture trained from scratch on the same subsets. The linear classifier performs well, suggesting that the pre-trained model has learned important features. Furthermore, the training procedure yields accuracy gains of approximately 7%, 16%, 1%, and 7% over the baselines when the models are fine-tuned on small subsets of MNIST, EMNIST, Fashion MNIST, and CIFAR-100, respectively. Although the benefit of the proposed procedure diminishes as more labeled samples become available, it remains useful when the labeled dataset is small but many unlabeled samples exist, since it still improves performance with limited labels.
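A linear probe on frozen features can be sketched as a multinomial logistic regression trained by full-batch gradient descent. Here `features` would be the frozen outputs of the pre-trained model; the learning rate, number of epochs, and zero initialization are assumptions, and the classifier used in the experiments may differ.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_linear_probe(features, labels, num_classes, epochs=100, lr=0.1):
    """Softmax regression on frozen features, trained by full-batch gradient descent."""
    n, d = len(features), len(features[0])
    w = [[0.0] * num_classes for _ in range(d)]
    b = [0.0] * num_classes
    for _ in range(epochs):
        grad_w = [[0.0] * num_classes for _ in range(d)]
        grad_b = [0.0] * num_classes
        for x, y in zip(features, labels):
            p = softmax([sum(x[i] * w[i][c] for i in range(d)) + b[c] for c in range(num_classes)])
            for c in range(num_classes):
                err = p[c] - (1.0 if c == y else 0.0)  # d(cross-entropy)/d(logit c)
                grad_b[c] += err / n
                for i in range(d):
                    grad_w[i][c] += err * x[i] / n
        for c in range(num_classes):
            b[c] -= lr * grad_b[c]
            for i in range(d):
                w[i][c] -= lr * grad_w[i][c]
    return w, b

def predict(w, b, x):
    scores = [sum(x[i] * w[i][c] for i in range(len(x))) + b[c] for c in range(len(b))]
    return max(range(len(b)), key=lambda c: scores[c])
```

Only the probe's weights change; the pre-trained layers that produce `features` stay frozen, so probe accuracy directly reflects how linearly separable the learned representation is.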

Experiment on MNIST

We evaluate how informative the pre-trained model's features are in two ways: clustering quality metrics computed on the representations of each layer, and classification performance on labeled subsets of different sizes.

Learned representation quality assessment with cluster quality metrics: After training the model layer-wise on MNIST for 50 epochs with weights of 25, 25, and 1 for the variance, invariance, and covariance terms, respectively, we obtain the cluster quality metrics shown in Table 4. The DB and CH indices assess clustering quality across layers, each from a different perspective. The DB index compares, for every cluster, the intra-cluster dispersion with the separation from its most similar cluster, and averages this ratio over all clusters; lower DB values indicate more compact and better-separated clusters. The gradual reduction in the DB index across layers suggests that the deeper layers form more compact clusters with lower intra-cluster variance, resulting in refined and informative representations.

The CH index is the ratio of between-cluster dispersion to within-cluster dispersion, each normalized by its degrees of freedom. Higher CH values indicate better clustering, with well-separated and compact clusters. As shown in Table 4, the CH score peaks at the third layer, indicating improved representation learning in the intermediate layers. The slight dip at the last layer suggests that the deepest layer may sacrifice some separation for more abstract representations, as expected in hierarchical models.
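Both indices can be computed directly from a layer's embeddings and a cluster assignment. The sketch below is pure Python and follows the standard Euclidean definitions (the same ones used by scikit-learn's `davies_bouldin_score` and `calinski_harabasz_score`); using ground-truth class labels as the clusters is an assumption about how the per-layer tables were produced, and the helper names are illustrative.

```python
import math


def _centroid(points):
    return [sum(col) / len(points) for col in zip(*points)]


def _group(X, labels):
    clusters = {}
    for x, y in zip(X, labels):
        clusters.setdefault(y, []).append(x)
    return list(clusters.values())


def davies_bouldin(X, labels):
    # Lower is better: mean over clusters of the worst (s_i + s_j) / dist(c_i, c_j),
    # where s_i is the average distance of cluster i's points to its centroid c_i.
    groups = _group(X, labels)
    cents = [_centroid(g) for g in groups]
    scatter = [sum(math.dist(x, c) for x in g) / len(g) for g, c in zip(groups, cents)]
    k = len(groups)
    return sum(max((scatter[i] + scatter[j]) / math.dist(cents[i], cents[j])
                   for j in range(k) if j != i) for i in range(k)) / k


def calinski_harabasz(X, labels):
    # Higher is better: between-cluster dispersion / within-cluster dispersion,
    # each divided by its degrees of freedom (k - 1 and n - k).
    groups = _group(X, labels)
    n, k = len(X), len(groups)
    overall = _centroid(X)
    between = within = 0.0
    for g in groups:
        c = _centroid(g)
        between += len(g) * math.dist(c, overall) ** 2
        within += sum(math.dist(x, c) ** 2 for x in g)
    return (between / (k - 1)) / (within / (n - k))


X = [[0.0, 0.0], [0.0, 2.0], [10.0, 0.0], [10.0, 2.0]]
labels = [0, 0, 1, 1]
print(davies_bouldin(X, labels))     # 0.2
print(calinski_harabasz(X, labels))  # 50.0
```

Shrinking the spread inside each cluster while keeping the centroids fixed lowers the DB index and raises the CH index, which is the pattern the layer-wise tables are read for.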

Figure 4a shows the DB index of each layer over the 50 training epochs. The DB index of every layer converges near epoch 50, indicating that the deeper layers are able to retain information similar to that of the lower layers despite having fewer neurons. Figure 4b shows the per-layer loss during training on MNIST with the proposed approach. Note that although the lower layers have lower DB index values, they exhibit a slightly higher loss because of their higher-dimensional output embeddings.

Testing the influence of learned representations on a downstream task: The pre-trained model is then fine-tuned on labeled subsets of 500, 1000, 2500, and 5000 samples, and a baseline model with the same architecture is trained from scratch on subsets of the same sizes. Training uses an initial learning rate of 0.0005 and a batch size of 128.

An exponential-decay learning rate scheduler with a decay step of 50 and a decay rate of 0.95 is used. Instead of training for a fixed number of epochs, early stopping with a patience of five is applied to both the fine-tuned and baseline models so that training stops near the optimal point. For the linear evaluation, we set the initial learning rate to 0.005 and the patience to 10, since only the softmax layer is trained and the model may underfit if too few epochs are used. The training curves in Figures 5, 6, and 7 suggest that none of the models overfits the dataset. The gap between the validation and training curves is large because only a small amount of labeled data is available for the model to learn from.
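The schedule and stopping rule described above can be sketched as follows. The decay formula mirrors the convention of the Keras `ExponentialDecay` schedule (continuous by default, stepwise with `staircase=True`); whether the authors count steps per batch or per epoch, and whether they restore the best weights after stopping, is not stated, so both functions are illustrative assumptions.

```python
import math


def exponential_decay(step, initial_lr=5e-4, decay_steps=50, decay_rate=0.95, staircase=False):
    # lr = initial_lr * decay_rate ** (step / decay_steps), optionally in discrete jumps.
    exponent = step // decay_steps if staircase else step / decay_steps
    return initial_lr * decay_rate ** exponent


def early_stopping_epoch(val_losses, patience=5):
    # Return the epoch at which training stops: the first time the validation loss
    # has failed to improve on its best value for `patience` consecutive epochs.
    best, wait = math.inf, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return len(val_losses) - 1


print(exponential_decay(100))                  # 5e-4 * 0.95 ** 2
print(exponential_decay(49, staircase=True))   # still the initial rate
val = [1.0, 0.8, 0.7, 0.72, 0.71, 0.75, 0.74, 0.73, 0.9]
print(early_stopping_epoch(val))               # 7
```

For the linear evaluation described above, the same helpers would be called with `initial_lr=5e-3` and `patience=10`.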

Table 5 shows the macro-averaged precision, recall, and F1 score of MNIST classification on each subset, rounded to the nearest whole percent. Because the dataset is well balanced, macro averaging, which weights every class equally, gives a faithful summary. The three metrics are nearly identical within each subset, indicating that the trained models predict instances from every class consistently and are therefore balanced and reliable.
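Macro averaging computes precision, recall, and F1 separately for each class and then takes their unweighted mean. A pure-Python sketch follows; the per-class F1 values are averaged (as in scikit-learn's `average='macro'`), and scoring a class with an empty denominator as 0 is an assumption. The function name is illustrative.

```python
def macro_prf(y_true, y_pred):
    # Per-class precision, recall, and F1, followed by an unweighted mean over classes.
    classes = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    k = len(classes)
    return sum(precisions) / k, sum(recalls) / k, sum(f1s) / k


p, r, f = macro_prf([0, 0, 1, 1], [0, 1, 1, 1])
print(round(p, 3), round(r, 3), round(f, 3))  # 0.833 0.75 0.733
```

When precision, recall, and F1 come out close to each other, as in the tables, no single class is being systematically over- or under-predicted.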

Table 6 compares the performance of the model variants trained on subsets of different sizes from the MNIST dataset. With just 500 samples, fine-tuning achieves an accuracy of 85.13%, while the baseline reaches only 77.78%. Similarly, with 1000 samples, fine-tuning reaches 88.66% accuracy, whereas the baseline lags at 81.33%. When data are limited (500 or 1000 samples), fine-tuning thus delivers roughly 7 percentage points higher accuracy than the baseline. As the sample size increases to 2500 and 5000, the advantage of fine-tuning becomes less pronounced, but it still maintains a slight edge in accuracy and efficiency: with 2500 samples, fine-tuning achieves 91.69% accuracy versus 88.52% for the baseline, and with 5000 samples it reaches 94.23% versus 93.68%. These results indicate that the proposed approach excels in the low-data regime, offering better generalization and efficiency, while conventional training becomes more competitive as more labeled data become available. Figure 8 shows the confusion matrices of the baseline and fine-tuned models on the MNIST subset of 500 samples.
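As a quick arithmetic check of the gains discussed above, the snippet below recomputes the absolute differences (in percentage points) from the accuracies quoted for Table 6; the dictionary name `table6` is only illustrative.

```python
# Accuracy (%) quoted above for the MNIST subsets: subset size -> (fine-tuned, baseline).
table6 = {500: (85.13, 77.78), 1000: (88.66, 81.33), 2500: (91.69, 88.52), 5000: (94.23, 93.68)}

gains = {n: round(ft - base, 2) for n, (ft, base) in table6.items()}
for n, gain in gains.items():
    print(f"{n:>5} samples: +{gain:.2f} percentage points")
```

The gain shrinks from about 7.3 points at 500 and 1000 samples to about 3.2 points at 2500 and about 0.6 points at 5000, which is the trend described in the text.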

Figure 9 compares the baseline and fine-tuned models trained on 500 samples for 50 epochs without early stopping or any other automatic convergence criterion. The baseline's learning plateaus at an early epoch because of insufficient data, halting its ability to improve. In contrast, the model pre-trained with the proposed approach and then fine-tuned on the same small labeled set outperforms the baseline by a significant margin of around 7%. As the plot shows, the baseline's training accuracy plateaus around 75%, whereas the fine-tuned model continues to improve and ultimately exceeds 85%, indicating that the representations learned by the proposed approach are useful.

Experiment on EMNIST

The pre-trained EMNIST model is evaluated with the same approach used in the MNIST experiment.

Learned representation quality assessment with cluster quality metrics: The same loss term weights (25 for variance, 25 for invariance, and 1 for covariance) are used when training the model on EMNIST. Table 7 presents the cluster quality metrics for each layer, assessed with the DB index and CH score. The decreasing DB index across layers indicates that the deeper layers capture meaningful features, yielding more compact and better-separated clusters. Similarly, the CH score stabilizes in the deeper layers, reflecting the model's capability to handle the higher complexity of EMNIST. These observations suggest that our layer-wise VICReg training remains effective on larger, multi-class datasets such as EMNIST. Figure 10a shows the DB index of each layer over the training epochs, and Figure 10b shows the corresponding per-layer loss while training on EMNIST.

Testing the influence of learned representations on a classification task: To assess how well the proposed approach works, a pre-trained model (trained on unlabeled EMNIST samples) is fine-tuned, and a baseline model with the same architecture is trained, on five subsets containing 1000, 1500, 2000, 5000, and 9400 samples. The initial learning rate is set to 0.0005 with a batch size of 256. A larger batch size is used because EMNIST contains many classes, and each batch should ideally include enough samples from each class. As in the MNIST experiment, exponential learning rate decay and early stopping with the same configuration are used. Figures 11, 12, and 13 show the training accuracy curves of the baseline, the linear classifier, and the fine-tuned model. Table 8 presents the rounded macro-averaged precision, recall, and F1 score of EMNIST classification on each subset. The closeness of the three metrics indicates consistent performance across all classes.

Table 9 presents the accuracy and number of epochs for the models trained on the EMNIST subsets. When labeled data are very limited (e.g., 1000 or 1500 samples), fine-tuning the pre-trained model yields an accuracy gain of around 16% over baseline models trained on the same subsets. Specifically, with only 1000 labeled samples, the baseline model reaches 40.52% accuracy, whereas our fine-tuned model reaches 56.71%.

For 1500 samples, the baseline model achieves 42.26% accuracy, while the fine-tuned model reaches 59.03%. As discussed earlier, with sufficient labeled data the baseline reaches performance comparable to that of the proposed method; even so, the pre-trained model reaches high accuracy within just a few epochs. Figure 14 shows the confusion matrices of the baseline and fine-tuned models. The baseline's predictions are scattered across many off-diagonal cells, whereas the fine-tuned model's predictions are concentrated on the diagonal, indicating more accurate classification.

For a further comparison, a baseline model is trained on 1000 samples for 50 epochs without early stopping or any other automatic convergence criterion, and the pre-trained EMNIST model is fine-tuned on the same number of samples for 50 epochs. Figure 15 compares the two. The baseline's learning plateaus early because of insufficient data, halting its ability to improve, whereas the fine-tuned model outperforms it by a significant margin: the baseline's accuracy plateaus around 40%, while the fine-tuned model continues to improve, ultimately exceeding 50% training accuracy.

Experiment on more complex datasets

The model is subsequently evaluated on more complex datasets, namely Fashion MNIST and CIFAR-100. The same weights for the variance, invariance, and covariance loss terms are used to train the models on both datasets. Tables 10 and 11 show the cluster quality metrics after training the model for 50 epochs on Fashion MNIST and CIFAR-100, respectively. Despite the increased complexity of these datasets, the cluster quality metrics indicate that the proposed approach still captures useful features.

The pre-trained models are then fine-tuned on the respective datasets (Fashion MNIST and CIFAR-100) and compared with baselines that have identical architectures and are trained on the same subsets. For Fashion MNIST, four subsets of 500, 1000, 2500, and 5000 samples are used. For CIFAR-100, three larger subsets (2500, 3500, and 5000 samples) are used because the dataset has 100 classes and each subset must contain enough samples from every class. A dropout layer with a rate of 50% is added immediately before the softmax layer to reduce the risk of overfitting, given the small subset sizes and the complexity of the datasets. A learning rate of 0.0001 is used for both models during fine-tuning, with an exponential-decay learning rate scheduler (decay step: 50; decay rate: 0.95). The model is fine-tuned for 200 epochs with a batch size of 128, and the baseline is trained with the same configuration and data subsets to ensure a valid comparison.
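The text does not specify how the labeled subsets were drawn. One way to meet the requirement that every class be represented is class-balanced (stratified) sampling, sketched below in pure Python as an assumption rather than the authors' procedure; `stratified_subset` is a hypothetical helper, and the stand-in labels only mimic the CIFAR-100 training layout of 100 classes with 500 images each.

```python
import random
from collections import Counter, defaultdict


def stratified_subset(labels, size, seed=0):
    """Return `size` indices drawn as evenly as possible from every class."""
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    classes = sorted(by_class)
    per_class, extra = divmod(size, len(classes))
    rng = random.Random(seed)
    chosen = []
    for rank, c in enumerate(classes):
        # The first `extra` classes get one additional sample when size is not divisible.
        take = per_class + (1 if rank < extra else 0)
        chosen.extend(rng.sample(by_class[c], take))
    rng.shuffle(chosen)
    return chosen


# Stand-in labels with the CIFAR-100 training layout: 100 classes x 500 images.
labels = [i % 100 for i in range(50000)]
subset = stratified_subset(labels, 2500)
counts = Counter(labels[i] for i in subset)
print(len(subset), min(counts.values()), max(counts.values()))  # 2500 25 25
```

With this scheme, the 2500-sample CIFAR-100 subset contains 25 images per class, whereas uniform random sampling of the same size would leave some classes with noticeably fewer examples.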

Table 12 compares the performance of the pre-trained model on Fashion MNIST with limited labeled data. Fine-tuning the pre-trained model with very few samples yields an accuracy gain of around 1%. Moreover, adding a softmax layer to the pre-trained model and training only that layer achieves 55% to 66% accuracy on the same small subsets, indicating that the representations learned during pre-training are effective.

In the CIFAR-100 experiment, accuracies are low because of the complexity of the dataset and the use of a fully connected network composed only of dense layers, which cannot fully capture the complex patterns in this dataset. Nevertheless, the approach still improves accuracy. For example, the model fine-tuned with just 500 samples (an average of five samples per class) reaches 12.03% accuracy, whereas the baseline trained on the same subset reaches only 7.05%. With a simple architecture and a very small labeled dataset, the approach improves accuracy by approximately 5% to 8% in the CIFAR-100 experiment, as shown in Table 13.

Figures 16 and 17 compare the training accuracy of the baseline model and the pre-trained model on the Fashion MNIST and CIFAR-100 datasets. While the baseline model's accuracy lags, the pre-trained model shows a steady increase in accuracy throughout training.

Comparison with self-supervised learning and layer-wise training approaches

The proposed layer-wise VICReg method focuses on enhancing cluster and feature representation quality through a layer-wise optimization strategy. This approach keeps features compact and well separated at each layer, as validated by the DB and CH metrics, which makes it effective for multi-class tasks such as MNIST and EMNIST. In comparison, the Forward-Forward (FF) layer-wise algorithm aims to eliminate backpropagation by using local optimization at each layer, making it suitable for resource-constrained hardware and neuromorphic computing. As shown in Table 14, FF's scalability and transfer performance are limited compared with the progressive representation refinement of our VICReg-based method. Similarly, the Forward-Forward algorithm in a self-supervised learning (SSL) setting learns representations without labels through a goodness-based loss at each layer. Although it shares the layer-wise training concept, it underperforms in transfer learning tasks, especially on more complex datasets such as CIFAR-10 and SVHN. The probabilistic SSL approach SimVAE prioritizes style-content retention in its generative framework, making it better suited for fine-grained tasks that require nuanced features, whereas the structured clustering optimization of our method provides a practical advantage for tasks that need clear feature separation. Finally, SimCLR and MoCo, with their contrastive learning frameworks, excel in transfer learning but require large datasets and complex augmentations, which can be resource-intensive. In contrast, our method achieves robust performance through structured layer-wise optimization, offering a scalable and efficient solution for multi-class classification. This comparison, summarized in Table 14, highlights how layer-wise VICReg training bridges the gap between layer-wise optimization and effective representation learning, making it a strong competitor across various applications.

The study by Bizeul et al.44 addresses MNIST and Fashion MNIST classification with various SSL methods and SimVAE. Whereas these methods involve more advanced architectures, our method outperforms them or achieves comparable performance with a simple architecture and very few labeled data. Table 15 compares these SSL methods with our proposed method.

Discussion on computational efficiency and scalability

Pre-training the model takes approximately three to six seconds per epoch, depending on the complexity and size of the datasets. It takes longer than fine-tuning because pre-training involves computing a VICReg loss and performing local updates at each layer. However, this approach eliminates the need for large labeled datasets and for end-to-end backpropagation during pre-training. With very little labeled data, the pre-trained model achieves considerably higher accuracy than a baseline trained on the same small dataset. Table 16 shows the time taken (in seconds) for each scenario: linear, baseline, and fine-tune.

Although our current experiments use MLPs as a proof of concept for layer-wise training with local VICReg losses, the approach can potentially scale to larger architectures and datasets. VICReg is architecture-agnostic and has been successfully integrated with deeper architectures, including ResNets and Transformers2,23. A recent study shows that large convolutional networks can be trained well with local losses such as VICReg47: deep networks like ResNet-50 achieve performance close to that of end-to-end training with local objectives, which suggests that our conceptually similar approach may also scale effectively. In this work, we focus on MLPs to establish the concept and leave studies of generalizability to future work.

Representation space evolution during training

To visualize how the representation spaces evolve during training, we use t-distributed Stochastic Neighbor Embedding (t-SNE), a technique for projecting high-dimensional data into a low-dimensional space48. t-SNE converts pairwise similarities between data points into probabilities and then minimizes the Kullback-Leibler divergence between the probability distribution defined in the high-dimensional space and the corresponding distribution in the low-dimensional embedding. As a result, similar objects are represented by nearby points and dissimilar objects by distant points with high probability. Using this technique, the high-dimensional output of the model is projected onto a two-dimensional plane. Figure 18 illustrates how the representation space evolves throughout training, showing the t-SNE projections at epochs 0, 10, 20, 30, 40, and 50. The observations at each of these epochs are as follows.

- Epoch 0: At the start, the clusters overlap, which means the model has not yet learned to separate the different classes.
- Epoch 10: As training progresses, the clusters start to become more distinct, but some overlap remains.
- Epoch 20: The separation between clusters keeps improving, showing that the model is starting to learn meaningful representations.
- Epoch 30: The clusters become even more defined, with less overlap between different classes.
- Epoch 40: The representation space shows well-separated clusters, indicating that the model is learning effectively and separating the classes better.
- Epoch 50: At the last epoch, each cluster is clearly defined with minimal overlap, showing that the model has learned to represent the data. By this point, the VICReg loss and DB index of each layer have converged at similar points.
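For reference, the objective that t-SNE optimizes when producing projections such as those in Figure 18 is the standard formulation of van der Maaten and Hinton48:

\[ p_{j\mid i} = \frac{\exp\left(-\lVert x_i - x_j \rVert^2 / 2\sigma_i^2\right)}{\sum_{k \neq i} \exp\left(-\lVert x_i - x_k \rVert^2 / 2\sigma_i^2\right)}, \qquad p_{ij} = \frac{p_{j\mid i} + p_{i\mid j}}{2N} \]

\[ q_{ij} = \frac{\left(1 + \lVert y_i - y_j \rVert^2\right)^{-1}}{\sum_{k \neq l} \left(1 + \lVert y_k - y_l \rVert^2\right)^{-1}}, \qquad C = \mathrm{KL}(P \,\Vert\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}} \]

Here \(x_i\) are the high-dimensional representations, \(y_i\) their two-dimensional map points, \(N\) the number of points, and each bandwidth \(\sigma_i\) is chosen so that the conditional distribution around \(x_i\) matches a user-specified perplexity. The heavy-tailed Student-t kernel in \(q_{ij}\) lets moderately dissimilar points lie far apart in the map, which is why well-separated clusters appear as distinct groups.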

Conclusion

This study develops a procedure for layer-wise training of a deep neural network using VICReg as local losses. As a layer-wise training procedure, it avoids drawbacks of backpropagation, such as vanishing gradients, by performing two forward passes, one on the original data and another on an augmented version. The procedure constructs compact and informative representation spaces, as evidenced by the improved classification accuracy in linear evaluation. It also improves accuracy by approximately 7%, 16%, 1%, and 7% on the MNIST, EMNIST, Fashion MNIST, and CIFAR-100 classification tasks, respectively, when the model is trained on unlabeled data and fine-tuned on very limited labeled data. These findings highlight the effectiveness of VICReg-based layer-wise training in leveraging large unlabeled datasets, paving the way for more efficient and robust neural network models with potential applications across many domains. Future research will focus on adapting this approach to more diverse datasets and advanced model architectures, further extending its applicability and effectiveness.

Data availability

All data generated or analyzed during this study are included in these published articles: Gradient-based learning applied to document recognition (http://yann.lecun.com/exdb/mnist/), EMNIST: Extending MNIST to handwritten letters (https://www.nist.gov/itl/products-and-services/emnist-dataset), Fashion-MNIST: A novel image dataset for benchmarking machine learning algorithms (https://github.com/zalandoresearch/fashion-mnist), CIFAR-10 and CIFAR-100 datasets (https://www.cs.toronto.edu/~kriz/cifar.html).

References

Rani, V., Nabi, S. T., Kumar, M., Mittal, A. & Kumar, K. Self-supervised learning: A succinct review. Arch. Comput. Methods Eng. 30, 2761–2775 (2023).

Bardes, A., Ponce, J. & LeCun, Y. Vicreg: Variance-invariance-covariance regularization for self-supervised learning. arXiv preprint arXiv:2105.04906 (2021).

Sarker, I. H. Machine learning: Algorithms, real-world applications and research directions. SN Computer Sci. 2, 160 (2021).

Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by back-propagating errors. Nature 323, 533–536 (1986).

Hinton, G. The forward-forward algorithm: Some preliminary investigations. arXiv preprint arXiv:2212.13345 (2022).

Gandhi, S., Gala, R., Kornberg, J. & Sridhar, A. Extending the forward forward algorithm. arXiv preprint arXiv:2307.04205 (2023).

Ioffe, S. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167 (2015).

Glorot, X. & Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the thirteenth international conference on artificial intelligence and statistics, 249–256 (JMLR Workshop and Conference Proceedings, 2010).

Sun, C., Shrivastava, A., Singh, S. & Gupta, A. Revisiting unreasonable effectiveness of data in deep learning era. In Proceedings of the IEEE international conference on computer vision, 843–852 (2017).

Gui, J. et al. A survey on self-supervised learning: Algorithms, applications, and future trends. IEEE Transactions on Pattern Analysis and Machine Intelligence (2024).

Hendrycks, D., Mazeika, M., Kadavath, S. & Song, D. Using self-supervised learning can improve model robustness and uncertainty. Adv. Neural Inf. Process. Syst. 32 (2019).

Devlin, J. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018).

Brown, T. et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 33, 1877–1901 (2020).

Chen, T., Kornblith, S., Norouzi, M. & Hinton, G. A simple framework for contrastive learning of visual representations. In International conference on machine learning, 1597–1607 (PMLR, 2020).

He, K., Fan, H., Wu, Y., Xie, S. & Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 9729–9738 (2020).

Grill, J.-B. et al. Bootstrap your own latent-a new approach to self-supervised learning. Adv. Neural Inf. Process. Syst. 33, 21271–21284 (2020).

Caron, M. et al. Unsupervised learning of visual features by contrasting cluster assignments. Adv. Neural Inf. Process. Syst. 33, 9912–9924 (2020).

Zbontar, J., Jing, L., Misra, I., LeCun, Y. & Deny, S. Barlow twins: Self-supervised learning via redundancy reduction. In International conference on machine learning, 12310–12320 (PMLR, 2021).

LeCun, Y. Open Rev. 62, 1–62 (2022).

Ronan, R., Tarabanis, C., Chinitz, L. & Jankelson, L. Self-supervised vicreg pre-training for brugada ecg detection. Sci. Rep. 15, 9396 (2025).

Chen, T., Xiang, Y. & Wang, J. Da-vicreg: A data augmentation-free self-supervised learning approach for diesel engine fault diagnosis. Measur. Sci. Technol. 35, 086109 (2024).

Lee, D. & Aune, E. Vibcreg: Variance-invariance-better-covariance regularization for self-supervised learning on time series. arXiv preprint arXiv:2109.00783 (2021).

Bardes, A., Ponce, J. & LeCun, Y. Vicregl: Self-supervised learning of local visual features. Adv. Neural Inf. Process. Syst. 35, 8799–8810 (2022).

Akay, J. M. & Schenck, W. Transferability of non-contrastive self-supervised learning to chronic wound image recognition. In International Conference on Artificial Neural Networks, 427–444 (Springer, 2024).

Nardelli, P. & Comminiello, D. Josenet: A joint stream embedding network for violence detection in surveillance videos. arXiv preprint arXiv:2405.02961 (2024).

Çağatan, Ö. V. & Akgün, B. Uncovering rl integration in ssl loss: Objective-specific implications for data-efficient rl. arXiv preprint arXiv:2410.17428 (2024).

Vertina, E. W., Sutherland, E., Deskins, N. A. & Mangoubi, O. Mxene property prediction via graph contrastive learning. In 2024 IEEE 14th International Conference Nanomaterials: Applications & Properties (NAP), 1–5 (IEEE, 2024).

Niu, C., Xia, W., Shan, H. & Wang, G. Information-maximized soft variable discretization for self-supervised image representation learning. arXiv preprint arXiv:2501.03469 (2025).

Brack, V. & Koßmann, D. Local representation learning using visual priors for remote sensing. In IGARSS 2024-2024 IEEE International Geoscience and Remote Sensing Symposium, 8263–8267 (IEEE, 2024).

Belilovsky, E., Eickenberg, M. & Oyallon, E. Greedy layerwise learning can scale to imagenet. In International conference on machine learning, 583–593 (PMLR, 2019).

Teng, Q., Wang, K., Zhang, L. & He, J. The layer-wise training convolutional neural networks using local loss for sensor-based human activity recognition. IEEE Sensors Journal 20, 7265–7274 (2020).

Chen, Y., Yuille, A. & Zhou, Z. Which layer is learning faster? a systematic exploration of layer-wise convergence rate for deep neural networks. In The Eleventh International Conference on Learning Representations (2023).

Hinton, G. E. & Sejnowski, T. J. Optimal perceptual inference. Proc. IEEE Conf. Computer Vision Pattern Recog. 448, 448–453 (1983).

Gutmann, M. & Hyvärinen, A. Noise-contrastive estimation: A new estimation principle for unnormalized statistical models. In Proceedings of the thirteenth international conference on artificial intelligence and statistics, 297–304 (JMLR Workshop and Conference Proceedings, 2010).

Ghader, M., Kheradpisheh, S. R., Farahani, B. & Fazlali, M. Backpropagation-free spiking neural networks with the forward-forward algorithm. arXiv preprint arXiv:2502.20411 (2025).

Aghagolzadeh, H. & Ezoji, M. Marginal contrastive loss: A step forward for forward-forward. In 2024 13th Iranian/3rd International Machine Vision and Image Processing Conference (MVIP), 1–6 (IEEE, 2024).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proceedings of the IEEE 86, 2278–2324 (1998).

Cohen, G., Afshar, S., Tapson, J. & Van Schaik, A. Emnist: Extending mnist to handwritten letters. In 2017 international joint conference on neural networks (IJCNN), 2921–2926 (IEEE, 2017).

Xiao, H., Rasul, K. & Vollgraf, R. Fashion-mnist: a novel image dataset for benchmarking machine learning algorithms. arXiv preprint arXiv:1708.07747 (2017).

Krizhevsky, A., Nair, V. & Hinton, G. CIFAR-10 and CIFAR-100 datasets. URL: https://www.cs.toronto.edu/~kriz/cifar.html 6, 1 (2009).

Klambauer, G., Unterthiner, T., Mayr, A. & Hochreiter, S. Self-normalizing neural networks. Adv. Neural Inf. Process. Syst. 30 (2017).

Davies, D. L. & Bouldin, D. W. A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 2, 224–227 (1979).

Caliński, T. & Harabasz, J. A dendrite method for cluster analysis. Commun. Stat. -Theory Methods 3, 1–27 (1974).

Bizeul, A., Schölkopf, B. & Allen, C. A probabilistic model behind self-supervised learning. Transactions on Machine Learning Research.

Scodellaro, R., Kulkarni, A., Alves, F. & Schröter, M. Training convolutional neural networks with the forward-forward algorithm. arXiv preprint arXiv:2312.14924 (2023).

Brenig, J. & Timofte, R. A study of forward-forward algorithm for self-supervised learning. arXiv preprint arXiv:2309.11955 (2023).

Siddiqui, S. A., Krueger, D., LeCun, Y. & Deny, S. Blockwise self-supervised learning at scale. arXiv preprint arXiv:2302.01647 (2023).

Van der Maaten, L. & Hinton, G. Visualizing data using t-sne. J. Mach. Learn. Res. 9, 11 (2008).

Funding

This work was supported by King Saud University, Riyadh, Saudi Arabia, under ongoing Research Funding program (ORF-2025-951).

Author information

Contributions

J.D. conceptualized the idea, J.D. conducted the experiment(s), R.R. prepared the visuals, P.S. found the optimal hyperparameter settings, J.D., P.S., and R.R. wrote the manuscript, J.U. and A.Z. supervised the research, M.W. acquired funding. All authors reviewed the manuscript.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Datta, J., Rabbi, R., Saha, P. et al. Deep representation learning using layer-wise VICReg losses. Sci Rep 15, 27049 (2025). https://doi.org/10.1038/s41598-025-08504-2

DOI: https://doi.org/10.1038/s41598-025-08504-2