Abstract

Training AI models on imbalanced datasets with skewed class distributions poses a significant challenge, because it biases models towards the majority class while neglecting the minority class. Various methods, such as Synthetic Minority Over-sampling Technique (SMOTE), Adaptive Synthetic Sampling (ADASYN), Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), have been employed to generate synthetic data to address this issue. However, these methods often fail to improve model performance, especially under extreme class imbalance. To overcome this challenge, a novel synthetic data generation approach based on Genetic Algorithms (GAs) is proposed that does not require a large sample size. It aims to outperform state-of-the-art methods such as SMOTE, ADASYN, GAN and VAE in terms of model performance. Although GAs are traditionally used for optimization tasks, they can also produce synthetic datasets optimized through the fitness function and population initialization. Our approach uses Logistic Regression and Support Vector Machines to drive population initialization and fitness evaluation, and analyzes both the Simple and the Elitist Genetic Algorithm. Experimental results across three datasets (Credit Card Fraud Detection, PIMA Indian Diabetes, and PHONEME) demonstrate that the proposed method significantly outperforms previous techniques on commonly used performance metrics, including accuracy, precision, recall, F1-score, ROC-AUC, and AP (average precision). This highlights the potential of GAs for developing accurate and reliable AI models on imbalanced datasets.


Introduction

In recent years, neural networks have come to be regarded as one of the most significant breakthroughs in machine learning. Despite their widespread use, neural networks rely heavily on the quality and distribution of their training data1. A significant challenge they face is the imbalanced nature of many datasets, in which instances are unevenly distributed across classes. This imbalance biases model predictions towards the majority class at the expense of the minority class. Such bias can severely degrade a model’s overall performance and accuracy, especially in critical areas such as medical diagnosis or anomaly detection, where minority instances are vital. Recent healthcare applications show the critical importance of addressing class imbalance, for example in predicting mechanical ventilation outcomes and mortality rates2, orthopedic disease classification3, cardiovascular disease detection4, and lung cancer classification5. Various methods have been developed to address this problem, and they are broadly classified into three categories: data-level methods that modify data samples, algorithm-level methods that adjust the learning algorithms, and hybrid methods that combine both approaches6.

Data-level methods allow standard machine learning architectures and pipelines to be used unchanged, which has made them widely popular. Standard data-level methods include random over-sampling, which increases the number of minority class instances through random duplication, and random under-sampling, which decreases the number of majority class instances by randomly discarding samples of that class. The relative effectiveness of over-sampling and under-sampling has been widely researched. Some studies have found under-sampling to be more advantageous in certain situations, while others suggest that combining both techniques addresses imbalanced datasets effectively. For example, a boosting-based approach that incorporates both over-sampling and under-sampling to handle imbalanced data has been proposed in a study7.

A comparative study8 of several over-sampling and under-sampling methods shows that performance varies with the dataset and the classifier used. The Synthetic Minority Over-sampling Technique (SMOTE)9 generates synthetic samples for the minority class by interpolating between existing minority class instances. Borderline-SMOTE10, a variant of SMOTE, generates synthetic instances near the decision boundary. Other data-level methods, such as Adaptive Synthetic Sampling (ADASYN)11, Edited Nearest Neighbor (ENN)12, and Cluster-Based Over-Sampling (CBO)13, have also been proposed.
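The interpolation rule behind SMOTE can be made concrete with a short sketch. The following is a minimal pure-Python illustration under simplifying assumptions (Euclidean distance, neighbours searched only within the minority class), not a reference implementation such as the one in imbalanced-learn; the helper names `k_nearest` and `smote` and the toy data are hypothetical.

```python
import math
import random


def k_nearest(points, idx, k):
    """Indices of the k nearest neighbours of points[idx] (Euclidean), excluding idx itself."""
    ranked = sorted(
        (j for j in range(len(points)) if j != idx),
        key=lambda j: math.dist(points[idx], points[j]),
    )
    return ranked[:k]


def smote(minority, n_new, k=5, rng=None):
    """Create n_new synthetic minority samples by SMOTE-style interpolation.

    Each new point is x + lam * (x_nn - x), where x is a random minority sample,
    x_nn is one of its k nearest minority neighbours and lam ~ U[0, 1).
    """
    rng = rng or random.Random(0)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        x_nn = minority[rng.choice(k_nearest(minority, i, k))]
        lam = rng.random()
        synthetic.append([a + lam * (b - a) for a, b in zip(x, x_nn)])
    return synthetic


# Toy two-feature minority class (hypothetical values)
minority = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3]]
new_points = smote(minority, n_new=6, k=2)
```

Because every synthetic point lies on a segment between two existing minority samples, this kind of interpolation never leaves the convex hull of the observed minority class.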

Algorithm-level methods modify the learning process to handle imbalanced data, which allows more customized, problem-specific solutions. Several methods fall into this category, such as Cost-Sensitive Learning14, which modifies the learning algorithm to assign higher costs to misclassifications of the minority class. Class weighting, ensemble methods15, boosting16, and kernel-based methods17 have also been applied. Active Learning18 has been employed as well; here the learning algorithm actively selects the most informative samples to label, focusing on minority class instances.

Some hybrid methods that combine data-level and algorithm-level approaches have also been used. Another approach to data generation is Variational Autoencoders (VAEs)19, a type of generative model that combines the principles of deep learning and Bayesian inference to generate new data samples resembling the training data. Although VAEs differ from the traditional data-level and algorithm-level methods mentioned above, their behavior and a comparative analysis with our approach are also presented.

While all the above-mentioned data generation methods find applications in a wide range of tasks, they still have limitations that prevent optimal model performance. One major issue with methods like SMOTE is a higher risk of overfitting, as synthetic instances are created by interpolating between existing minority instances. This produces models that fit the training data well but perform poorly on unseen test data20. A by-product of overfitting is noise amplification, especially if noise is present within the minority class, which further reduces the accuracy of the trained model. While some methods such as CBO aim to reduce overfitting by confining synthetic data generation to clusters, the risk of overfitting remains high for high-dimensional data. Moreover, the effectiveness of CBO depends heavily on the clustering step: an inappropriate choice of clustering algorithm or parameters can yield clusters that do not represent the structure of the underlying data21. Algorithm-level methods, in turn, often add complexity to the training process. For example, Cost-Sensitive Learning requires a cost matrix to be incorporated into the learning algorithm, which makes training comparatively more complex22.

While hybrid methods such as Tomek Links combined with SMOTE23 address some of these drawbacks, they are often computationally expensive during training, especially for large datasets or real-time applications. In this research, a novel approach is proposed that uses Genetic Algorithms (GAs)24 to produce synthetic data for ANNs in data-constrained environments. GAs, modeled on natural selection, are widely used in various domains, most commonly in optimization and search problems. Their ability to explore large search spaces and evolve solutions makes them candidates for many real-world problems, including noise removal25, transportation and logistics26, image segmentation27, routing problems28, and manufacturing services29. GAs have also shown promise in biomedical signal processing, particularly in EEG-based classification tasks, where optimizing feature selection and classification accuracy is crucial30.

Over the years, GAs have also been used for synthetic data generation by other researchers. For instance, synthetic datasets have been generated using GAs for testing and validating software systems, ensuring diverse and comprehensive test cases31,32. Additionally, realistic synthetic data has been applied to privacy-preserving data publishing, balancing the trade-off between data utility and privacy33. In the field of intrusion detection, synthetic attack data has been utilized for the development and testing of robust detection systems34.

Another study combines WCGAN-GP for synthetic attack data generation with Genetic Algorithms (GA) for feature selection to enhance Intrusion Detection Systems (IDS)35. While these applications are useful, GAs have not been specifically used to generate synthetic training data for Artificial Neural Networks (ANNs) in order to improve their performance and mitigate the effect of imbalanced training data36. In this study, GAs are used to produce synthetic data while addressing the limitations of existing data generation methods and improving ANN performance. First, a fitness function that captures the underlying characteristics of the data is developed. Since a precise analytical description of the data is difficult to obtain, the construction of the fitness function is automated: Support Vector Machines (SVM)37 and logistic regression38 are fitted to the data, yielding equations that approximate the underlying data distribution, and these equations are used to create fitness functions that maximize minority class representation. The synthetic data is then used to train the neural network on three benchmark datasets, all of which contain binary imbalanced classes.

To validate the proposed method, a comprehensive comparative analysis of various techniques is conducted, including different variants of Genetic Algorithms, such as Simple GA, Elitist GA, and SVM-based GA, alongside previously established methods, such as SMOTE and ADASYN. To measure the effectiveness of these techniques, several evaluation metrics, including accuracy, precision, recall, F1-score, ROC-AUC, and average precision (AP) are used. These metrics provide a comprehensive view of the model’s performance, particularly in terms of its ability to correctly classify minority class instances without compromising the overall accuracy.
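As a hedged illustration of how the minority-class metrics are defined (not the evaluation code used in this study), precision, recall, F1-score and average precision can be computed from labels and scores as follows. The AP function follows the step-wise definition \(AP = \sum_n (R_n - R_{n-1}) P_n\) documented for scikit-learn's `average_precision_score`; the function names and toy values are hypothetical.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall and F1 for the positive (minority) class labelled 1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(y_true, scores):
    """AP = sum_n (R_n - R_{n-1}) * P_n over score thresholds in descending order."""
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    n_pos = sum(y_true)
    tp = fp = 0
    prev_recall = ap = 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Samples with tied scores enter the positive prediction set together
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / n_pos
        ap += (recall - prev_recall) * (tp / (tp + fp))
        prev_recall = recall
    return ap


y_true = [1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.1]
y_pred = [int(s >= 0.5) for s in scores]
```

For these toy scores, AP evaluates to 5/6 (about 0.833) and the F1-score at a 0.5 threshold to 0.8. Unlike accuracy, neither value is inflated by the many correctly rejected majority samples.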

Related work

Handling imbalanced data

Imbalanced data pose a significant challenge in machine learning, impacting the performance and accuracy of predictive models39. Researchers have explored various strategies to mitigate this challenge due to its significant implications6.

A detailed comparison of techniques for managing imbalanced data in machine learning, particularly in the context of electricity theft detection, is provided in40. Another study evaluates several machine learning methods for overcoming the obstacles posed by extremely imbalanced datasets in industrial quality control41. Random Forest algorithms have also been applied to imbalanced datasets, improving detection accuracy and reliability, for example in network monitoring analysis42,43. The Synthetic Minority Over-sampling Technique (SMOTE)9 is a widely used method that generates synthetic samples for the minority class, thus balancing the dataset and improving model learning effectiveness across both classes.

Synthetic data generation

Synthetic data generation techniques serve as viable solutions for addressing class imbalance in datasets. Methods such as Adaptive Synthetic (ADASYN)11 and Borderline-SMOTE10 have emerged as effective oversampling techniques.

ADASYN In11, ADASYN is employed to tackle imbalanced datasets by generating synthetic samples for minority class instances. The core idea of ADASYN is to use a weighted distribution over minority class samples so that more synthetic data is generated for instances that are harder to learn. This reduces the bias introduced by class imbalance and adaptively shifts the classification decision boundary toward difficult examples, ultimately improving classifier performance. ADASYN has also been combined with Random Forest to identify fraud in telecommunications, showing the technique’s versatility on imbalanced datasets44.
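The weighting step that distinguishes ADASYN from SMOTE can be sketched as follows. This is a minimal illustration assuming the allocation rule of the original ADASYN formulation: \(r_i = \Delta_i / K\), normalized over the minority class and scaled by \(G = (m_l - m_s)\beta\). The interpolation step, which is the same as in SMOTE, is omitted. `adasyn_allocation` and the toy data are hypothetical.

```python
import math


def adasyn_allocation(X, y, k=5, beta=1.0):
    """Number of synthetic samples ADASYN assigns to each minority instance (label 1).

    r_i = (majority points among the k nearest neighbours of x_i) / k,
    normalised over the minority class and scaled by G = (m_l - m_s) * beta.
    """
    minority = [i for i, label in enumerate(y) if label == 1]
    n_majority = len(y) - len(minority)
    G = (n_majority - len(minority)) * beta
    ratios = []
    for i in minority:
        neighbours = sorted(
            (j for j in range(len(X)) if j != i),
            key=lambda j: math.dist(X[i], X[j]),
        )[:k]
        ratios.append(sum(1 for j in neighbours if y[j] == 0) / k)
    total = sum(ratios)
    if total == 0:  # every minority point is "easy": nothing to emphasise
        return {i: 0 for i in minority}
    return {i: round(r / total * G) for i, r in zip(minority, ratios)}


# 1-D toy data: the minority point at 3.0 sits next to the majority cluster
X = [[0.0], [1.0], [2.0], [3.0], [10.0], [11.0], [20.0], [21.0], [22.0]]
y = [0, 0, 0, 1, 1, 1, 0, 0, 0]
allocation = adasyn_allocation(X, y, k=2)
```

Here all three synthetic samples go to the instance at 3.0, whose two nearest neighbours are both majority points. The minority points at 10.0 and 11.0, which are surrounded by their own class, receive none. Because of rounding, the allocated total can differ slightly from \(G\).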

Borderline-SMOTE In10, Borderline-SMOTE addresses imbalanced datasets by generating synthetic samples along the decision boundary between classes. This extension of SMOTE oversamples only the minority class instances near the borderline, where misclassification is most likely. By targeting these critical instances, Borderline-SMOTE improves the classifier’s ability to separate the classes, yielding better true positive rates and F-values than traditional SMOTE and random oversampling.
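How Borderline-SMOTE decides which minority instances lie "near the borderline" can be sketched as below. This assumes the categorization of the original Borderline-SMOTE formulation, where \(m'\) is the number of majority points among the \(m\) nearest neighbours. The function name and the toy data are hypothetical.

```python
import math


def borderline_categories(X, y, m=5):
    """Label each minority instance (y == 1) as 'safe', 'danger' or 'noise'.

    m_prime is the number of majority points among its m nearest neighbours:
    m_prime == m -> noise, m / 2 <= m_prime < m -> danger, otherwise safe.
    """
    categories = {}
    for i, label in enumerate(y):
        if label != 1:
            continue
        neighbours = sorted(
            (j for j in range(len(X)) if j != i),
            key=lambda j: math.dist(X[i], X[j]),
        )[:m]
        m_prime = sum(1 for j in neighbours if y[j] == 0)
        if m_prime == m:
            categories[i] = "noise"
        elif m_prime >= m / 2:
            categories[i] = "danger"
        else:
            categories[i] = "safe"
    return categories


X = [[0.0], [1.0], [2.0], [20.0], [2.5], [3.2], [10.0], [11.0], [12.0]]
y = [0, 0, 0, 0, 1, 1, 1, 1, 1]
categories = borderline_categories(X, y, m=3)
```

Only the "danger" instances (here the minority points at 2.5 and 3.2, next to the majority cluster) would then be passed to the SMOTE interpolation step. Points labelled "noise" are not oversampled.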

Cost-Sensitive Learning The foundations of cost-sensitive learning are explored in14, with emphasis on the need to incorporate misclassification costs into the learning process. It is illustrated how traditional classification algorithms can be adapted to minimize total costs rather than merely focusing on error rates, providing a more practical approach for scenarios with varying misclassification costs.
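One practical consequence of minimizing total cost rather than error rate is a shifted decision threshold. A minimal sketch, assuming correct predictions cost nothing, follows. Under that assumption, predicting the positive class is cheaper in expectation whenever \(p \cdot C_{FN} > (1 - p) \cdot C_{FP}\), that is, when \(p > C_{FP} / (C_{FP} + C_{FN})\). The function names, costs and probabilities are hypothetical.

```python
def cost_sensitive_threshold(cost_fp, cost_fn):
    """Probability threshold minimising expected cost (zero cost for correct predictions)."""
    # p * cost_fn > (1 - p) * cost_fp  <=>  p > cost_fp / (cost_fp + cost_fn)
    return cost_fp / (cost_fp + cost_fn)


def total_cost(y_true, y_pred, cost_fp, cost_fn):
    """Total misclassification cost of a set of hard predictions."""
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return fp * cost_fp + fn * cost_fn


y_true = [0, 1, 1, 1]
probs = [0.15, 0.2, 0.6, 0.05]            # hypothetical predicted P(y = 1)
default_pred = [int(p > 0.5) for p in probs]
threshold = cost_sensitive_threshold(1.0, 9.0)
cs_pred = [int(p > threshold) for p in probs]
```

With a false negative nine times as costly as a false positive, the threshold drops from 0.5 to 0.1. The total cost on this toy example falls from 18 to 10, at the price of one extra false positive.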

Genetic Algorithms for Data Generation The use of Genetic Algorithms (GAs) for data generation is investigated in31. Related recent work applies Greylag goose optimization with a multilayer perceptron to lung cancer classification5, and Game Shapley local search embedded binary social ski-driver optimization to cancer classification from RNA sequencing data45. A hybrid approach that combines GAs with reinforcement learning to automate software test data generation is described in46. Since software testing carries significant development costs, automation can yield substantial savings, especially in complex domains. Despite the success of GAs in generating simple test data, their application to more complex data types such as images, videos, sounds, and 3D models remains largely unexplored.

Machine Learning and Data Augmentation In47, a Generative Adversarial Network (GAN) is used for data augmentation, highlighting its ability to generate synthetic data without predefined classes. The method proved effective for infrared small target detection, where it is reported to outperform training on real data. However, GANs present challenges, including high training complexity, which requires substantial computational power and time to converge. Additionally, a three-phase hybrid soft computing approach for cancer classification of gene expression microarray data is proposed in48, and a systematic review of machine learning techniques for cancer classification on imbalanced medical datasets is provided in49.

In another study50, GAN-based data augmentation is applied to preserve image objects and maintain translation consistency. Although effective, GAN models can be complex to implement and interpret, and evaluating generated data quality poses ongoing challenges. Furthermore, GANs are susceptible to overfitting, particularly with limited augmentation scope, and their success largely depends on the quality and diversity of the original training data.

Active Learning in Imbalanced Data Classification In18, Active Learning is introduced as a novel approach to address imbalanced data. It is demonstrated that active learning can effectively resolve class imbalance by providing the learner with more balanced classes. This method selects informative instances from a smaller sample pool, eliminating the need for exhaustive searches through the entire dataset, resulting in an efficient querying system applicable to large datasets.

Oversampling for Imbalanced Data Classification In51, an oversampling method is implemented using an Adversarial Network to tackle class imbalance. A synthetic minority dataset is generated via a black-box oversampler, followed by refinement with a network trained using adversarial loss. Striking a balance between generating realistic synthetic data and maintaining data quality is crucial, as is improving classification performance by ensuring synthetic data closely resembles minority class instances.

SMOTE-Boost-based Sparse Bayesian Model In52, the SMOTE algorithm is employed in combination with a boosting procedure to address imbalanced datasets. The proposed model comprises three modules: SMOTE-based data enhancement, an AdaBoost training strategy53, and sparse Bayesian model construction54. The SMOTE algorithm is utilized for data enhancement, generating additional minority samples to reduce imbalance, while a specific AdaBoost strategy is applied to adaptively enhance predictive ability and mitigate overfitting, ultimately improving learning from minority class instances.

Tomek Link and SMOTE Approaches In23, SMOTE and Tomek Link techniques are combined to address challenges in imbalanced dataset classification. These methods are applied alongside various classifiers, including Naïve Bayes, support vector machines, and k-nearest neighbors, to enhance classification performance. The study investigates their application in condition monitoring systems for electrical machines, revealing the practical challenges posed by imbalanced data. Results indicate that combining SMOTE with Tomek Link improves performance across all classifiers, particularly k-nearest neighbors, thereby enhancing classification accuracy in scenarios with limited fault data.
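A Tomek link is a pair of points from opposite classes that are each other's nearest neighbour, so such pairs mark class overlap. A minimal sketch of link detection follows, assuming Euclidean distance and a brute-force neighbour search. `tomek_links` and the toy data are hypothetical, and this is not the implementation used in23.

```python
import math


def nearest_neighbour(X, i):
    """Index of the point closest to X[i] (Euclidean), excluding i itself."""
    return min((j for j in range(len(X)) if j != i), key=lambda j: math.dist(X[i], X[j]))


def tomek_links(X, y):
    """Pairs (i, j) of opposite-class points that are each other's nearest neighbour."""
    nn = [nearest_neighbour(X, i) for i in range(len(X))]
    return [(i, j) for i, j in enumerate(nn) if i < j and nn[j] == i and y[i] != y[j]]


X = [[0.0], [0.5], [3.0], [3.2], [6.0]]
y = [0, 0, 0, 1, 1]
links = tomek_links(X, y)
```

The majority point at 3.0 and the minority point at 3.2 form the only link. In the common SMOTE + Tomek combination, the minority class is first oversampled with SMOTE, and the detected links are then removed to clean the class boundary.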

Diffusion-based Synthetic Data Generation In55, the labor-intensive task of preparing training data for deep vision models is tackled by leveraging generative models to produce synthetic data. Unlike traditional models that generate image-level category labels, a novel approach is employed using the text-to-image generative model, Stable Diffusion (SD), to create pixel-level semantic segmentation labels. By utilizing text prompts, cross-attention, and self-attention mechanisms, three innovative techniques are introduced: class-prompt appending, class-prompt cross-attention, and self-attention exponentiation. These techniques generate segmentation maps corresponding to synthetic images, serving as pseudo-labels for training semantic segmenters and minimizing the need for labor-intensive pixel-wise annotation.

Privacy-Preserving Data Publishing In56, an information-driven distributed genetic algorithm (ID-DGA) is presented for optimal anonymization through attribute generalization and record suppression. The study addresses the privacy-preserving data publishing (PPDP) problem by incorporating several components, including an information-driven crossover operator, a mutation operator, an improvement operator, and a two-dimensional selection operator. Additionally, a technique for image captioning using hierarchical clustering and deep learning is introduced in57, and an improved GA-based clustering method with new selection methods for categorical dental data is presented in58.

Our contribution

Our work contributes significantly to synthetic data generation and class imbalance handling in machine learning:

- We introduce the first GA-based synthetic data generation approach that systematically integrates SVMs and logistic regression within both the initialization and fitness evaluation phases. Unlike existing GA applications in data generation31,36,47 that rely on random initialization and simplistic fitness functions, our method uses SVM decision boundaries to intelligently initialize populations near classification boundaries and employs logistic regression-based fitness evaluation to ensure synthetic samples contribute meaningfully to model performance. This dual-model integration represents a significant departure from traditional GA approaches and addresses the critical limitation of generating synthetic samples that may not enhance classification performance.

- A critical limitation of existing oversampling techniques is addressed by developing a distribution-aware synthetic data generation method. Unlike SMOTE and ADASYN, which rely on simple interpolation between neighboring points, the GA-based approach introduces controlled variations in the feature space. This allows for better exploration of potential minority class instances while maintaining the core characteristics of the original data distribution, as shown in the KDE plot analyses.

- A comprehensive comparison is presented between the Simple Genetic Algorithm (SGA) and SVM-guided GA approaches for synthetic data generation. The experiments reveal that incorporating SVM decision boundaries enhances the effectiveness of synthetic samples, particularly near classification boundaries where discrimination is crucial. The SVM-guided approach consistently outperforms both traditional oversampling methods and basic GA implementations across the evaluation metrics.

- It is demonstrated that the GA-based framework enhances model generalization through strategic synthetic data generation. By incorporating machine learning models in the fitness evaluation process, it is ensured that synthetic samples meaningfully contribute to the learning process rather than merely balancing the dataset numerically. This strategy addresses common challenges in synthetic data generation, such as amplifying noise or producing unrealistic samples that do not aid in classification.

- The experimental results across multiple datasets highlight the value of this approach, particularly in severe class imbalance scenarios where traditional methods often struggle.

Dataset

The proposed algorithm is evaluated on three benchmark datasets, with their class distributions summarized in Table 1.

Credit card fraud detection

The Credit Card Fraud Detection dataset59 comprises credit card transactions made by European cardholders over two days in September 2013. With a total of 284,807 samples, only 492 transactions (0.172%) are classified as fraudulent, resulting in a highly imbalanced class distribution. This dataset contains 30 features.

Pima Indian diabetes

The Pima Indian Diabetes dataset60 provides information about diabetes cases within a population near Phoenix, Arizona. It consists of 768 samples divided into two classes: positive and negative cases. The minority class contains 268 samples (34.9% of the total), while the majority class comprises 500 samples. This dataset includes a total of 8 features.

Phoneme

This dataset61 contains 5404 samples and 5 features, with two classes distinguishing between nasal (class 0) and oral (class 1) sounds. The minority class has 1586 samples (29.35% of all samples), while the majority class has 3818 samples.

Proposed algorithm

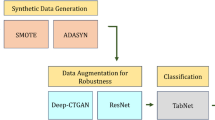

In the proposed methodology, machine learning (ML) algorithms, including logistic regression and support vector machines (SVM), are used to derive equations that describe the dataset. These equations are then integrated into the Genetic Algorithm (GA) for population initialization and fitness evaluation, as shown in Fig. 1. The process begins with the original data, i.e. the raw dataset to be processed, which is passed to the ML models for feature extraction and predictive modeling.

Theoretical Foundations of GAs for Synthetic Data Generation Genetic Algorithms (GAs) are particularly effective for synthetic data generation in imbalanced datasets because their evolutionary optimization addresses the challenges of sparse minority class instances and complex distributions. The schema theorem24 formalizes the ability of GAs to explore high-dimensional feature spaces:

\[
E[\xi(S, t+1)] \ge \xi(S, t)\,\frac{f(S)}{\bar{f}}\left[1 - p_c\,\frac{\delta(S)}{l - 1} - o(S)\,p_m\right] \qquad (1)
\]

where \(\xi (S, t)\) is the number of instances of schema \(S\) at generation \(t\), \(f(S)\) is the schema fitness, \(\bar{f}\) is the average population fitness, \(p_c\) and \(p_m\) are the crossover and mutation probabilities, \(\delta (S)\) is the defining length, \(l\) is the chromosome length, and \(o(S)\) is the schema order. This property supports efficient navigation of non-linear minority class distributions, unlike the local interpolation of SMOTE and ADASYN, which may generate noisy samples52,62. Our fitness function (Eqs. 4, 9) prioritizes misclassified minority instances and is guided by fitness proportionate selection, under which individual \(i\) is selected with probability

\[
\pi_i = \frac{f(\textbf{x}_i)}{\sum_{j=1}^{N} f(\textbf{x}_j)} \qquad (2)
\]

where \(N\) is the population size.

This focuses synthetic data on challenging classification regions, enhancing model performance. Single-point crossover (Eq. 10) and mutation (Eq. 11) introduce controlled diversity, ensuring synthetic samples explore new feature space regions while remaining representative, unlike GANs and VAEs, which struggle with sparse data due to training instability and latent space assumptions63. GAs’ robustness to noise, modeled as:

mitigates outlier effects, outperforming deep generative models in data-constrained settings. Together, these mathematical foundations provide theoretical support for the effectiveness of GAs under class imbalance, which is corroborated by the superior F1-scores reported in Section 7.

The output of the ML algorithms serves as the starting point for the GA population. In the next phase, Population Initialization, the initial solutions derived from the ML models are introduced as candidates in the GA population. The GA then proceeds iteratively, beginning with Selection, in which candidate solutions are evaluated with a fitness function. This fitness function is derived from the ML models’ predictions, so that the fittest solutions are retained. To introduce diversity and explore the solution space more thoroughly, Mutation applies random alterations to some solutions. Following mutation, Crossover combines features of selected solutions, producing offspring that inherit traits from multiple parents. After the GA process, the generated synthetic data is used to train a neural network model, improving its performance by providing a more diverse training set.

ML algorithms

Logistic Regression Logistic regression is a widely used classification method that models the probability of a binary outcome as a function of one or more variables. It is particularly suitable when the dependent variable has two possible outcomes. The logistic regression model is given in Eq. (4).

\[
P(y) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_p x_p)}} \qquad (4)
\]

where \(\text {P(y)}\) is the probability of the class for the dependent variable \(y\) being 1, \(\beta _0\) is the intercept, and \(\beta _1, \beta _2, \ldots , \beta _p\) are the coefficients of the independent variables \(x_1, x_2, \ldots , x_p\).

Logistic regression is selected for its effectiveness on binary classification problems64 and for its straightforward implementation and interpretability, which make it a suitable choice for initial population generation and fitness evaluation in GAs. The logistic regression model is trained on the dataset to produce the coefficients \(\beta\) and the intercept \(\beta _0\), which are then used to initialize the GA population and evaluate the fitness of individuals.
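To make concrete how fitted coefficients turn a candidate sample into a probability via Eq. (4), here is a minimal pure-Python sketch. It assumes plain batch gradient descent on the mean log-loss; the study does not state its solver. `fit_logistic`, `predict_proba` and the toy data are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(beta0, beta, x):
    """P(y = 1 | x) as in Eq. (4)."""
    return sigmoid(beta0 + sum(b * v for b, v in zip(beta, x)))


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Fit beta0 and beta by batch gradient descent on the mean log-loss."""
    beta0, beta = 0.0, [0.0] * len(X[0])
    n = len(X)
    for _ in range(epochs):
        grad0, grad = 0.0, [0.0] * len(beta)
        for xi, yi in zip(X, y):
            err = predict_proba(beta0, beta, xi) - yi  # d(log-loss)/d(logit)
            grad0 += err
            for k, v in enumerate(xi):
                grad[k] += err * v
        beta0 -= lr * grad0 / n
        beta = [b - lr * g / n for b, g in zip(beta, grad)]
    return beta0, beta


X = [[0.0], [1.0], [2.0], [3.0]]
y = [0, 0, 1, 1]
beta0, beta = fit_logistic(X, y)
```

In practice, a library solver such as scikit-learn's `LogisticRegression`, whose `predict_proba` returns the same quantity, would replace the hand-written loop. The sketch only shows which numbers the GA consumes.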

Support Vector Machines (SVM) Support Vector Machines (SVM) are powerful supervised learning models used for classification65 and regression tasks. SVMs find the optimal hyperplane that best separates data points of different classes in a high-dimensional space; this hyperplane maximizes the margin between the closest data points of the two classes, known as support vectors. SVMs have also been applied to imbalanced learning problems. For example, an SVM ensemble is used in66 to classify imbalanced data effectively. The decision function for a linear SVM is given in Eq. (5):

\[
f(\textbf{x}) = \operatorname{sign}(\textbf{w}^\top \textbf{x} + b) \qquad (5)
\]

where \(\textbf{w}\) is the weight vector, \(\textbf{x}\) is the input feature vector, and \(b\) is the bias term. Training solves the optimization problem given in Eq. (6):

\[
\min_{\textbf{w}, b, \xi} \; \frac{1}{2}\lVert \textbf{w} \rVert^2 + C \sum_{i=1}^{n} \xi_i \qquad (6)
\]

subject to the constraints given in Eq. (7):

\[
y_i(\textbf{w}^\top \textbf{x}_i + b) \ge 1 - \xi_i, \quad \xi_i \ge 0, \quad i = 1, \ldots, n \qquad (7)
\]

Here, \(y_i\) represents the class labels, \(\xi _i\) are the slack variables that allow for misclassifications, \(C\) is the regularization parameter that controls the trade-off between maximizing the margin and minimizing the classification error, and \(n\) is the number of training samples.

Although nonlinear kernels such as RBF could potentially model more complex minority class distributions, we use linear SVMs to maintain computational efficiency during population initialization. This choice ensures scalability across large datasets without incurring the heavy computational cost associated with kernel-based methods.
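A linear SVM of the kind described above can be trained cheaply in its equivalent hinge-loss form, \(\tfrac{1}{2}\lVert \textbf{w} \rVert^2 + C \sum_i \max(0, 1 - y_i(\textbf{w}^\top \textbf{x}_i + b))\). A minimal sketch follows, assuming full-batch subgradient descent with a fixed step size; this is not necessarily the solver used in the study. `train_linear_svm`, `decision` and the toy data are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=1000):
    """Minimise 0.5*||w||^2 + C * sum(max(0, 1 - y_i (w.x_i + b))) by subgradient descent.

    The hinge form is equivalent to Eqs. (6)-(7) with xi_i = max(0, 1 - y_i (w.x_i + b)).
    Labels must be in {-1, +1}.
    """
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = list(w), 0.0  # gradient of the 0.5*||w||^2 term
        for xi, yi in zip(X, y):
            if yi * (dot(w, xi) + b) < 1:  # margin violated: hinge term is active
                grad_w = [g - C * yi * v for g, v in zip(grad_w, xi)]
                grad_b -= C * yi
        w = [wk - lr * g for wk, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def decision(w, b, x):
    """Signed score w.x + b; its sign gives the predicted class as in Eq. (5)."""
    return dot(w, x) + b


X = [[-2.0, -2.0], [-2.0, -1.0], [2.0, 2.0], [2.0, 1.0]]
y = [-1, -1, 1, 1]
w, b = train_linear_svm(X, y)
```

The fitness function works with probabilities rather than signed scores. A calibration step such as Platt scaling (which scikit-learn's `SVC(probability=True)` applies) would map `decision` values to probabilities; that step is omitted here.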

GA components

While GAs are traditionally used for optimization problems, in this study they are used to generate an optimized population for the minority class. Two types of GAs are used, the Simple Genetic Algorithm (SGA) and the Elitist Genetic Algorithm (EGA)67, and their performance is compared using a range of evaluation metrics, providing a comprehensive view of their effectiveness. The key components of GAs that affect their performance are Population Initialization, Fitness Function, Parent Selection, Crossover, and Mutation, each of which is designed to enhance the effectiveness of the proposed approach.

Population Initialization Population Initialization is the first and one of the most crucial steps in GAs, because the quality of the initial population strongly influences the performance of the algorithm. To improve initialization, machine learning algorithms such as Logistic Regression and Support Vector Machines (SVM) are used to derive equations that predict the output class on the training data. These algorithms are chosen because they provide a mathematical representation of the data, which supports a more informed and diverse initial population. The initial population is created from these equations with slight random variations to ensure diversity, as given in Eq. (8).

\[
\textbf{P}_0 = \textbf{S} + \mathcal{N}(0, 0.05) \qquad (8)
\]

where \(\textbf{P}_0\) represents the initial population, \(\textbf{S}\) is the sample of misclassified minority class instances with central probability, and \(\mathcal{N}(0, 0.05)\) is a normal distribution with mean 0 and standard deviation 0.05. The standard deviation can be adjusted to the range of the feature values in the dataset to control the variation introduced.
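A minimal sketch of Eq. (8) follows, assuming that rows of \(\textbf{S}\) are drawn with replacement until the population is full. The text does not say how \(\textbf{S}\) is expanded to the population size, so this is an assumption. `init_population` and the seed samples are hypothetical.

```python
import random


def init_population(S, pop_size, sigma=0.05, rng=None):
    """P0 = S + N(0, sigma): perturb rows of S with zero-mean Gaussian noise.

    Rows of S are drawn with replacement until pop_size individuals exist
    (an assumption; the text does not say how S is expanded to the population size).
    """
    rng = rng or random.Random(42)
    return [[v + rng.gauss(0.0, sigma) for v in rng.choice(S)] for _ in range(pop_size)]


# Hypothetical misclassified minority samples with two features
S = [[0.9, 0.8], [1.0, 0.7], [0.95, 0.75]]
population = init_population(S, pop_size=10)
```

Because the noise is small relative to the feature values, every individual starts close to a hard-to-classify minority sample. This is the intended bias of the initialization.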

Fitness Function The Fitness Function is a key component of GAs as it determines which samples are identified as suitable to proceed to the next generation. In our dataset, the output class format is binary (0 for the majority class and 1 for the minority class). The aim is to generate a dataset such that the model trained on it can effectively perform classification on the original test data. For this purpose, emphasis is given to the subset of data that is misclassified by logistic regression. The minimum and maximum probabilities of misclassified samples of class 1, as well as of the minimum and maximum probabilities of the entire class (from only the training dataset), are identified and used in the fitness function. The goal is to generate a synthetic dataset for class 1 with probabilities falling mostly between these minimum and maximum probabilities of the misclassified class (extracted from logistic regression and support vector machine). By doing so, it is ensured that the synthetic samples are representative of the challenging cases, thereby enhancing the model’s ability to correctly classify the minority class in the original dataset. The coefficients from the ML algorithms are used to predict the output class for each sample in the initial population. Samples with probabilities that lie within the probability range of a minority class are considered fit samples and are assigned a fitness score of 1. Among these, samples with probabilities within the range of misclassified minority class samples are considered the fittest samples and are given a fitness score of 2. Samples whose probabilities do not fall within the minority class probability range are assigned a fitness score of 0, as given in Eq. (9). By doing this, samples for the minority class are generated, with more emphasis on the misclassified samples.

where \(f(\textbf{x})\) is the fitness function, \(P_{\min }\) and \(P_{\max }\) are the minimum and maximum predicted probabilities of the minority class, respectively, and \(MP_{\min }\) and \(MP_{\max }\) are the minimum and maximum probabilities of the misclassified minority class samples, respectively. These values are extracted from the probabilities output by the machine learning model (Logistic Regression or SVM).
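The range extraction and scoring can be sketched with NumPy as follows. Assumptions: `proba` holds minority-class probabilities from an already fitted model (in scikit-learn, for example, `LogisticRegression().fit(X, y).predict_proba(X)[:, 1]`, or an `SVC` created with `probability=True`), a minority sample counts as misclassified when its probability is below a 0.5 threshold, and the helper names are hypothetical.

```python
import numpy as np

def probability_ranges(y_true, proba, threshold=0.5):
    """Return (P_min, P_max, MP_min, MP_max) for the minority class (label 1)."""
    y_true = np.asarray(y_true)
    proba = np.asarray(proba, dtype=float)
    minority = proba[y_true == 1]
    # Minority samples predicted as class 0 at the threshold are misclassified.
    missed = minority[minority < threshold]
    if missed.size == 0:
        raise ValueError("no misclassified minority samples at this threshold")
    return minority.min(), minority.max(), missed.min(), missed.max()

def fitness(proba, p_min, p_max, mp_min, mp_max):
    """Score 1 inside the minority range, 2 if also inside the misclassified range, else 0."""
    proba = np.asarray(proba, dtype=float)
    in_range = (proba >= p_min) & (proba <= p_max)
    in_missed = in_range & (proba >= mp_min) & (proba <= mp_max)
    scores = np.zeros(proba.shape, dtype=int)
    scores[in_range] = 1
    scores[in_missed] = 2
    return scores
```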

Parent Selection Parent Selection is another critical aspect of GAs, where the fitness scores are used to select parents for the next generation. The proposed approach employs tournament selection with a tournament size of 5, which yielded the best results after experimenting with different sizes. Five individuals are drawn at random from the population, and the one with the highest fitness score is selected as a parent, as described in Algorithm 1.
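A minimal sketch of tournament selection with size 5 follows. It assumes contenders are drawn without replacement and that ties go to the first contender drawn; `tournament_select` is a hypothetical name, and the population must contain at least `k` individuals.

```python
import numpy as np

def tournament_select(population, scores, k=5, rng=None):
    """Hypothetical helper: draw k distinct individuals and return the fittest."""
    rng = np.random.default_rng(rng)
    contenders = rng.choice(len(population), size=k, replace=False)
    # np.argmax returns the first maximum, so ties favour the earliest contender.
    winner = contenders[np.argmax(np.asarray(scores)[contenders])]
    return population[winner]
```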

Crossover and Mutation Crossover and Mutation are genetic operators used to maintain genetic diversity from one generation of a population to the next.

Crossover: Single-point crossover is utilized, where a crossover point is randomly selected, and the genetic material is exchanged between two parents to produce offspring, as given in Eq. (10).
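Single-point crossover on real-valued feature vectors can be sketched as follows. The helper is hypothetical and assumes each individual is a 1-D array with at least two features; the cut point is drawn from 1 to d-1 so that each child receives genes from both parents.

```python
import numpy as np

def single_point_crossover(parent_a, parent_b, rng=None):
    """Hypothetical helper: swap the tails of two parents after a random cut point."""
    rng = np.random.default_rng(rng)
    parent_a, parent_b = np.asarray(parent_a), np.asarray(parent_b)
    # integers(1, d) draws from {1, ..., d-1}, so the cut is strictly inside.
    point = rng.integers(1, len(parent_a))
    child_a = np.concatenate([parent_a[:point], parent_b[point:]])
    child_b = np.concatenate([parent_b[:point], parent_a[point:]])
    return child_a, child_b
```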

Mutation: Mutation introduces random variations in the offspring. Each gene in the offspring is altered with a small probability, as given in Eq. (11).

where \(\sigma\) is the mutation rate, set to 0.01 in our experiments. Following the approach discussed above, the resulting Simple Genetic Algorithm is given in Algorithm 2.
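The mutation step can be sketched as below. The text fixes only the per-gene mutation rate (0.01); how a selected gene is altered is not stated, so this sketch assumes a small zero-mean Gaussian perturbation with a hypothetical `scale` of 0.05.

```python
import numpy as np

def mutate(child, rate=0.01, scale=0.05, rng=None):
    """Hypothetical helper: perturb each gene independently with probability `rate`.

    The Gaussian perturbation is an assumption; only the rate is given in the text.
    """
    rng = np.random.default_rng(rng)
    child = np.array(child, dtype=float)  # copy so the caller's array is untouched
    mask = rng.random(child.shape) < rate
    child[mask] += rng.normal(0.0, scale, size=int(mask.sum()))
    return child
```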

Stopping Criteria for GA Iterations The Genetic Algorithm (GA) iterations were stopped after a fixed number of generations (50) across all datasets. This criterion was chosen based on preliminary experiments, which showed that fitness values and synthetic data diversity stabilized before reaching 50 generations in most cases. Fixing the number of generations ensures computational efficiency while maintaining high-quality synthetic data generation.

GA Components and Hyperparameter Specification The hyperparameters for Simple Genetic Algorithm (SGA), Elitist Genetic Algorithm (EGA), and Support Vector Machine-based Genetic Algorithm (SVMGA) were configured to balance computational efficiency and solution quality. The key settings were:

- Population Size: Varied according to the target synthetic data percentage (20% to 100% of the majority class size).
- Number of Generations: 50.
- Crossover Probability: 0.5.
- Mutation Probability: 0.01.
- Selection Method: Tournament selection (size: 5).
- Elitism (EGA only): Top 2 individuals retained.
- Initialization: Population initialized near the ML models’ decision boundaries.
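For reproducibility, the settings listed above can be collected in a single configuration object. The sketch below is illustrative: field names are hypothetical, and deriving the population size from the majority-class size is an assumption based on the first setting.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GAConfig:
    """Hypothetical container for the GA settings listed above."""
    synthetic_fraction: float = 1.0  # 0.2 to 1.0 of the majority-class size
    n_generations: int = 50
    crossover_prob: float = 0.5
    mutation_prob: float = 0.01
    tournament_size: int = 5
    n_elites: int = 0                # 2 for EGA, 0 otherwise

    def population_size(self, n_majority: int) -> int:
        # Assumption: one individual per requested synthetic sample.
        return int(round(self.synthetic_fraction * n_majority))
```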

These settings enabled better exploration and convergence, with crossover ensuring diversity and mutation preventing stagnation. EGA’s elitism preserved high-quality solutions, while SVMGA’s initialization focused the search near critical decision boundaries, enhancing performance across datasets.

Elitist genetic algorithm

Elitism is a strategy that ensures the best individuals (elite) are retained across generations. In the Elitist GA, a specified number of elite individuals (2 in our case) with the highest fitness scores are carried over to the next generation without alteration, as given in Algorithm 3. This mechanism ensures that the best solutions are not lost due to crossover or mutation, contributing to a steady improvement in the overall fitness of the population.
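Putting the operators together, a generation loop covering both variants can be sketched as below: `n_elites=0` gives a simple GA and `n_elites=2` the elitist variant. This is a hypothetical reconstruction for illustration, not Algorithms 2 and 3 themselves; it assumes a fixed budget of 50 generations, tournament size 5, crossover probability 0.5, and Gaussian per-gene mutation, and accepts any `fitness_fn` that scores the rows of an array.

```python
import numpy as np

def run_ga(population, fitness_fn, n_generations=50, tournament_size=5,
           crossover_prob=0.5, mutation_rate=0.01, mutation_scale=0.05,
           n_elites=0, rng=None):
    """Fixed-budget GA loop: n_elites=0 behaves like SGA, n_elites=2 like EGA.

    population: (n, d) float array; fitness_fn maps an (n, d) array to n scores.
    """
    rng = np.random.default_rng(rng)
    pop = np.array(population, dtype=float)
    n, d = pop.shape
    for _ in range(n_generations):  # stopping criterion: fixed number of generations
        scores = np.asarray(fitness_fn(pop), dtype=float)
        # Elitism: copy the top-scoring individuals into the next generation unchanged.
        elite_idx = np.argsort(scores)[::-1][:n_elites]
        next_pop = [pop[i].copy() for i in elite_idx]
        while len(next_pop) < n:
            # Tournament selection: the fittest of `tournament_size` random individuals.
            parents = []
            for _ in range(2):
                contenders = rng.choice(n, size=tournament_size, replace=False)
                parents.append(pop[contenders[np.argmax(scores[contenders])]])
            child_a, child_b = parents[0].copy(), parents[1].copy()
            # Single-point crossover, applied with probability crossover_prob.
            if d > 1 and rng.random() < crossover_prob:
                point = rng.integers(1, d)
                child_a[point:] = parents[1][point:]
                child_b[point:] = parents[0][point:]
            for child in (child_a, child_b):
                # Per-gene mutation; the Gaussian perturbation is an assumption.
                mask = rng.random(d) < mutation_rate
                child[mask] += rng.normal(0.0, mutation_scale, size=int(mask.sum()))
                if len(next_pop) < n:
                    next_pop.append(child)
        pop = np.vstack(next_pop)
    return pop
```

With `n_elites > 0`, the best fitness in the population never decreases from one generation to the next, since the elites are copied unchanged.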

Neural network model

After the synthetic dataset is generated using the Genetic Algorithm and merged with the original dataset, a Neural Network is trained to predict the minority class. Initially, a simple ANN model with only two hidden layers is tested, followed by a more complex model with batch normalization and dropout to stabilize and speed up the learning process and prevent overfitting. This refined model facilitates the comparative analysis of the effectiveness of the synthetic dataset.

Time complexity analysis

In terms of computational complexity, SMOTE and ADASYN are relatively lightweight, with time complexities of approximately \(O(n \cdot k \cdot d)\), where n is the number of samples, k is the number of neighbors, and d is the dimensionality of the feature space. These methods rely on nearest-neighbor computations and linear interpolation, making them suitable for quick augmentation but limited in generating diverse data. Deep learning approaches like Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) offer more sophisticated data generation capabilities but at significantly higher computational costs, typically \(O(E \cdot B \cdot M)\), where E is the number of training epochs, B is the batch size, and M is the model complexity, which can be orders of magnitude larger than traditional methods due to the neural network architectures involved. Additionally, these approaches require substantial training data to achieve stable performance, potentially limiting their application in extreme imbalance scenarios where minority class samples are scarce.

In contrast, the GA-based methods introduce additional overhead due to their iterative nature. For a population size P, number of generations G, and fitness evaluation cost F, the overall complexity is \(O(G \cdot P \cdot F)\). In our implementation, F primarily depends on model-based probability predictions using logistic regression or SVM, which are efficient for low-to-moderate dimensional data. While the GA-based methods are computationally more intensive, they operate on relatively small synthetic batches and converge within a limited number of generations (typically \(G \le 50\)), making the runtime practical for offline data preparation. Furthermore, the improved performance and generalization offered by the GA-generated data justify this modest computational cost, especially in critical tasks where minority class detection is vital. These theoretical complexity analyses are further validated by the empirical runtime and memory usage measurements presented in Subsection 7.6, which illustrate the practical trade-offs in computational efficiency across all methods.
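The fitness cost \(F\) can be made more concrete for the two guiding models. Assuming \(d\) features, a logistic regression probability is one dot product followed by a sigmoid, whereas a kernel SVM sums kernel evaluations over its \(n_{sv}\) support vectors (a symbol introduced here). The genetic operators themselves add only \(O(P \cdot d)\) work per generation, which the fitness term dominates:

\[
O\big(G \cdot P \cdot (F + d)\big) = O(G \cdot P \cdot F), \qquad
F_{\text{LR}} = O(d), \qquad
F_{\text{SVM}} = O(n_{sv} \cdot d).
\]

For example, with \(G = 50\) and a hypothetical population of \(P = 1000\), a run requires \(5 \times 10^{4}\) probability evaluations, each costing \(O(d)\) for logistic regression.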

Distribution of synthetic data vs. original data

The comparative analysis of synthetic data distributions across methods reveals distinct patterns that highlight fundamental differences between traditional oversampling techniques and the proposed genetic algorithm-based approaches. To understand how the GA methods (SGA and SVM-based GA) differ from SMOTE, ADASYN, GAN, and VAE in replicating the underlying data distribution, a detailed analysis is performed using the PIMA Indian Diabetes dataset. The distributions are illustrated using KDE plots, where the y-axis represents the Probability Density Estimate (PDE) and the x-axis represents the feature values.

The analysis shows that traditional oversampling methods demonstrate a notably conservative approach to synthetic data generation. As shown in Fig. 2, the synthetic data generated by SMOTE is constrained to lie within the convex hull formed by the existing minority class instances and therefore closely resembles the distribution of the original dataset, with only slight variations in some features. This high correlation between the original and synthetic data points limits the exploration of new, potentially beneficial regions in the feature space.
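Why SMOTE stays inside this hull follows from its interpolation rule: each new point is \(\mathbf{x}_i + \lambda(\mathbf{x}_{nn} - \mathbf{x}_i)\) with \(\lambda \in [0, 1)\), a point on the segment between a minority sample and one of its \(k\) nearest minority neighbours. The simplified NumPy sketch below illustrates this; it is not the `imbalanced-learn` `SMOTE` implementation, it uses brute-force distances, and `smote_like_sample` is a hypothetical name.

```python
import numpy as np

def smote_like_sample(X_min, k=5, n_new=10, rng=None):
    """Hypothetical helper: SMOTE-style interpolation toward a random k-nearest neighbour."""
    rng = np.random.default_rng(rng)
    X_min = np.asarray(X_min, dtype=float)
    # Pairwise Euclidean distances among minority samples (brute force, O(n^2 d)).
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]
    base = rng.integers(0, len(X_min), size=n_new)
    nbr = neighbours[base, rng.integers(0, k, size=n_new)]
    lam = rng.random((n_new, 1))  # interpolation weight in [0, 1)
    return X_min[base] + lam * (X_min[nbr] - X_min[base])
```

Every generated point is a convex combination of two existing minority samples, so no sample can leave the region they span.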

The performance of the ADASYN (Adaptive Synthetic Sampling) method is also evaluated on the same dataset. Like SMOTE, ADASYN aims to balance the class distribution by creating synthetic samples. It adapts to the local data distribution, generating more synthetic samples around minority instances whose neighborhoods are dominated by the majority class, effectively focusing on harder-to-learn examples. However, as shown in Fig. 3, despite these adaptations, the ADASYN-generated data still largely overlaps the original data distribution and does not explore beyond the constrained space defined by the existing minority class instances.
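The adaptive weighting can be illustrated by ADASYN's allocation step, following its standard formulation: for each minority sample, \(r_i\) is the fraction of majority-class points among its \(k\) nearest neighbours, and the total number of synthetic samples, \(N_{syn} = (m_{maj} - m_{min})\,\beta\), is split in proportion to the normalized \(r_i\). The sketch below computes only these per-sample counts (rounded, so they may not sum exactly to \(N_{syn}\)); the subsequent interpolation works as in SMOTE. The helper name is hypothetical.

```python
import numpy as np

def adasyn_allocation(X, y, k=5, beta=1.0):
    """Hypothetical helper: synthetic-sample count per minority instance (label 1)."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    minority = np.flatnonzero(y == 1)
    n_to_generate = (np.sum(y == 0) - minority.size) * beta
    # Distances from each minority sample to every sample in the dataset.
    dists = np.linalg.norm(X[minority, None, :] - X[None, :, :], axis=-1)
    dists[np.arange(minority.size), minority] = np.inf  # exclude the point itself
    neighbours = np.argsort(dists, axis=1)[:, :k]
    # r_i: share of majority-class points among the k nearest neighbours.
    ratio = (y[neighbours] == 0).mean(axis=1)
    if ratio.sum() == 0:
        return np.zeros(minority.size, dtype=int)
    return np.rint(ratio / ratio.sum() * n_to_generate).astype(int)
```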

On the other hand, the SGA-based method introduces a degree of variability into the synthetic data while still capturing the broader features of the original data, as observed in the differences between the distributions of the original and synthetic datasets in Fig. 4. This method produces a noticeably different distribution pattern, characterized by broader feature-space exploration and evolutionary diversity.

In contrast to these traditional methods, Variational Autoencoders (VAEs) represent a deep learning approach to synthetic data generation. As illustrated in Fig. 5, VAEs encode the original data into a latent space and then decode it to generate new samples. While VAEs can theoretically create diverse synthetic instances by sampling from the learned latent distribution, the analysis reveals that VAE-generated data exhibits distinct characteristics. The distribution shows that VAEs tend to capture the central tendencies of the original data but may smooth out some of the finer details and extremes of the distribution. Similarly, the GAN-based approach introduces a higher degree of variability while retaining essential distribution characteristics, as shown in Fig. 6. By learning the underlying data distribution, GANs generate synthetic samples that approximate the original dataset, albeit with occasional deviations in features such as Skin Thickness and Insulin. This variability reflects GAN’s capacity to capture complex data patterns while enabling exploration of diverse feature spaces.

The variability of the GA-generated data stems from the genetic diversity introduced and propagated during the data generation process. The population is randomly initialized around a starting point, crossover merges features from different individual samples, and controlled mutation introduces strategic variations in the feature space. Moreover, the fitness function accepts a range of solutions that approximately fulfill the target criteria rather than imposing strict uniformity. Across successive generations, these mechanisms yield a diverse set of data points that differ considerably from the original data points but still retain their most significant attributes. This expanded exploration is particularly significant for discovering new, yet valid, instances of the minority class.

The SVM-based GA method exhibits a more nuanced distribution pattern, as shown in Fig. 7. Notable variations in the synthetic dataset are produced; however, the distribution aligns more closely with the original dataset compared to the SGA method. This balanced behavior can be attributed to three key factors:

- The SVM decision boundaries guide synthetic data generation, creating meaningful clusters near critical regions.
- The dual-layered fitness function ensures that synthetic samples maintain proximity to the original distribution while introducing meaningful variations.
- The synthetic data points concentrate in clusters at the decision boundary, where class separation is most crucial.

The theoretical basis of these distribution patterns lies in the fundamental principles of genetic algorithms. The combination of initialization, crossover, and mutation operators leads to synthetic samples that are both diverse and valid within the problem domain. This has significant implications for downstream machine learning tasks, particularly in enhancing model generalization through exposure to a more diverse set of training examples while reducing the overfitting that might occur with more tightly clustered synthetic data. The proposed genetic algorithm-based method thus balances preserving the essential characteristics of the original data with introducing meaningful variations that contribute to improved model performance, producing a comprehensive training set that helps the model generalize better.

To further examine the empirical differences in data distributions, a t-SNE projection was applied to the original training set of Phoneme together with all seven synthetic datasets, as shown in Fig. 8. In this two-dimensional embedding, each point corresponds to a single sample, colored by its source: the original dataset, GA-generated data (both basic and enhanced implementations), SMOTE and its variant, and samples produced by ADASYN, GAN, and VAE. The original data forms a compact, well-defined cluster, reflecting the intrinsic feature correlations present in the raw observations. The GA-based synthetic samples closely follow its overall topology but introduce additional dispersion along key feature axes. In contrast, SMOTE and ADASYN yield denser, more uniform clusters around the original distribution, while the GAN- and VAE-generated points display broader exploration of the feature space. These patterns confirm that each generation method imparts a unique distributional signature, with the GA variants striking an effective balance between fidelity and diversity.

t-SNE projection of the original Phoneme training set and seven synthetic datasets. GA-based samples maintain the core structure of the original cluster while extending its boundaries, SMOTE/ADASYN densely fill the minority-class envelope, and generative models (GAN, VAE) explore broader feature-space regions. This comparative embedding highlights the balance between data fidelity and diversity achieved by each synthetic data generation method.

To further support explainability and practical model interpretability, we analyzed how GA-generated synthetic samples influence the decision boundaries of classifiers. Our observations indicate that these samples frequently populate regions close to class boundaries or areas with high misclassification rates. As a result, they encourage more refined and adaptive decision surfaces compared to interpolation-based methods like SMOTE and ADASYN, which often reinforce already well-represented regions. This effect is especially noticeable in SVM-guided GA, where the fitness function inherently targets the most informative zones near decision margins. By placing synthetic instances in these sensitive regions, GA-based approaches improve the classifier’s capacity to learn complex separations, thus producing more interpretable and trustworthy models. Consequently, beyond performance gains, the proposed method also enhances the explainability of learned models by influencing decision boundaries in a meaningful and controlled manner.

Quantitative noise analysis

To empirically assess the claim that GA-based methods introduce less harmful noise, we computed the average Signal-to-Noise Ratio (SNR) for synthetic data generated by different methods across all datasets. SNR is a widely used metric to quantify the amount of noise relative to the signal, where higher values indicate cleaner and more consistent data generation.
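The text does not spell out the SNR formula. One common definition for tabular features is the ratio of the mean magnitude to the standard deviation, expressed in decibels and averaged over features; the sketch below uses that definition purely as an assumption, so it may differ from the computation behind Table 2. The function name is hypothetical.

```python
import numpy as np

def average_snr_db(X):
    """Hypothetical helper: mean per-feature SNR in dB, SNR = 20 * log10(|mean| / std).

    The definition itself is an assumption, not taken from the text.
    """
    X = np.asarray(X, dtype=float)
    mu, sigma = np.abs(X.mean(axis=0)), X.std(axis=0)
    valid = (sigma > 0) & (mu > 0)  # skip constant or zero-mean features
    return float(np.mean(20 * np.log10(mu[valid] / sigma[valid])))
```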

Table 2 summarizes the average SNR values for each synthetic data generation method.

While SNR values are comparable across most methods, especially between SMOTE, ADASYN, and the GA variants, the crucial distinction lies in the nature of the variability introduced. Traditional oversampling methods like SMOTE and ADASYN often produce synthetic samples that closely mimic existing data points, limiting the exploration of new patterns. In contrast, our GA-based methods introduce purposeful, fitness-guided variability, especially near class boundaries or in underrepresented regions of the feature space. This controlled variability does not equate to harmful noise. Instead, it enhances the diversity of the training set in a structured manner, allowing models to generalize better and learn complex decision boundaries. The slightly lower SNR of EGA, for instance, reflects this diversity rather than random noise, as evidenced by its strong classification performance across multiple datasets.

Results

In this study, the dataset is first split randomly into training and test sets. Synthetic data is generated from the training data only, and the model is trained on the combined dataset (original training data plus synthetic data). The original test data, kept separate from the start, is used for evaluation. This approach evaluates whether synthetic data improves classification performance on real-world data. Results obtained with the original (non-augmented) training data serve as a reference for gauging the improvements brought about by synthetic data augmentation.
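The protocol can be sketched as follows to make the ordering explicit: the test rows are set aside before any generator runs. This is an illustrative sketch; `split_then_augment`, the `generate` callback, and the 20% test fraction are assumptions, not details from the text.

```python
import numpy as np

def split_then_augment(X, y, generate, test_size=0.2, rng=None):
    """Hypothetical helper: hold out a test set first, then augment only the training split.

    generate(X_train, y_train) -> (X_syn, y_syn) stands in for any generator
    (GA, SMOTE, ADASYN, GAN, VAE); it never sees the test rows.
    """
    rng = np.random.default_rng(rng)
    X, y = np.asarray(X), np.asarray(y)
    idx = rng.permutation(len(y))  # random (non-stratified) split
    n_test = int(round(test_size * len(y)))
    test, train = idx[:n_test], idx[n_test:]
    X_syn, y_syn = generate(X[train], y[train])
    X_aug = np.concatenate([X[train], X_syn])
    y_aug = np.concatenate([y[train], y_syn])
    return X_aug, y_aug, X[test], y[test]
```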

In evaluating the performance of our data generation models, a range of performance metrics is used, including accuracy, precision, recall, F1 score, and ROC AUC score. While accuracy is a common measure, it alone can be misleading, especially in the presence of class imbalance. As noted by69, accuracy might mask poor performance on minority classes, leading to an overestimation of a model’s effectiveness. Additionally, as demonstrated in a previous study68, the Area Under the ROC Curve (AUC) is a valuable metric for assessing the overall discriminative ability of a model across all classification thresholds, offering a more comprehensive evaluation than accuracy alone. Therefore, other metrics are also considered to obtain a comprehensive overview of the model’s performance.
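The threshold-based metrics can be computed directly from confusion counts, which also shows how accuracy can hide a useless minority-class classifier. This plain-Python sketch uses a hypothetical function name; `sklearn.metrics` provides equivalent functions such as `precision_score` and `f1_score`. ROC AUC is omitted because it requires scores rather than labels.

```python
def binary_metrics(y_true, y_pred):
    """Hypothetical helper: accuracy, precision, recall, F1 for the positive class 1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}
```

For instance, a model that predicts class 0 for 98 majority and 2 minority samples scores 0.98 accuracy but 0 recall and 0 F1.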

The noise introduced by synthetic data generation methods such as SMOTE and ADASYN is also analyzed, as it can compromise the quality of the generated data and, consequently, the performance of machine learning models. As discussed by70, the generation of synthetic training data often produces unwanted noise that can adversely affect the domain adaptation of deep learning models. This noise is compared with that of our proposed GA-based methods, which focus on producing noise-free synthetic data.

Credit card fraud detection

Initially, logistic regression is employed to obtain a decision function used for both population initialization and the fitness function. Subsequently, a support vector machine (SVM) is applied for the same two purposes. These models provide a feasible starting point for the genetic algorithms. Models are then trained on both synthetic and original datasets, and all are tested on the same held-out subset of the original data. For comparison, synthetic data is also generated using the state-of-the-art methods SMOTE, ADASYN, GAN, and VAE. Our experiments involve the implementation of Logistic Regression-based Genetic Algorithms (SGA and EGA) and an SVM-based Genetic Algorithm. The performance metrics of models trained on synthetic data from our proposed methods are compared with those of models trained on the baseline synthetic datasets, and the proposed methods show notable improvements, as given in Table 3.

SMOTE Results SMOTE shows moderate initial performance, with low precision and F1 scores at low sampling percentages, and both drop further as more data is sampled, with precision falling to 57.1% and the F1 score to 68.3% when 80% of the data is sampled. This indicates that as synthetic data increases, the model learns patterns too specific to the data (overfitting), reducing its generalizability. Despite this, the ROC AUC remains stable at around 91.6% to 92.3%, and the recall for the minority class increases steadily with the amount of sampled data. SMOTE achieves the highest recall (85.1), with a ROC AUC of 91.6, but this comes with the lowest precision (57.1) and F1 score (68.3) after 80% sampling. This shows that the model trained on SMOTE-generated synthetic data becomes biased towards class 1, which increases the number of false positives.

ADASYN Results In Table 3, the ADASYN results show a decline in precision and F1 scores as the percentage of synthetic data increases, particularly after 60% or more of the data is sampled. Initially, the method achieves high precision (80.1%) and a balanced F1 score (79.5%) at 20% sampling. However, at 80% data usage, precision drops to 57.2%, and the F1 score decreases to 68.2%, despite a stable high ROC AUC of 92.3%. Similar to SMOTE, this decline suggests that while the ADASYN method is effective in balancing class distribution, noise is introduced as the amount of synthetic data samples increases. This leads to a reduction in precision as the model starts to overfit. This trend is not observed in our proposed GA-based methods.

GAN Results The results of the GAN-based approach, as detailed in Table 3, highlight its potential in generating synthetic data for fraud detection. At lower data usage levels (20%), GAN achieves relatively high precision (83.0%) and balanced F1 scores (82.4%), indicating a good initial performance. However, as the percentage of synthetic data increases, the results suggest diminishing returns. For example, at 80% data usage, precision drops to 61.6%, and the F1 score falls to 74.2%. This decline may indicate that the GAN-generated data introduces noise or fails to generalize effectively as the volume of synthetic data increases. Despite this, GAN maintains a competitive ROC AUC (80.8%-90.1%) across all data splits, showcasing its capability to distinguish between classes. Overall, while GAN demonstrates promise, it faces challenges in maintaining precision and F1 scores as the data volume scales up, suggesting room for improvement in its generative capacity.

VAE Results The results of the VAE-based approach, as presented in Table 3, demonstrate its effectiveness in generating synthetic data for fraud detection. At 20% data usage, VAE achieves respectable precision (82.0%) and recall (80.2%), resulting in a competitive F1 score (81.1%) and a strong ROC AUC (90.1%). As the percentage of synthetic data increases, VAE shows better stability than traditional methods like SMOTE and ADASYN. For instance, at 80% data usage, VAE maintains a precision of 84.2% and an F1 score of 82.6%, notably higher than SMOTE’s 57.1% precision and 68.3% F1 score at the same sampling level. However, VAE experiences a slight performance decline at 100% data usage, with recall dropping to 73.9% and the F1 score decreasing to 79.6%. This suggests that while VAE effectively captures the underlying data distribution at moderate synthetic data volumes, it may face generalization challenges at the largest synthetic data volume. Nevertheless, VAE performs more consistently across sampling percentages than SMOTE and ADASYN, indicating its reliability in generating quality synthetic data for imbalanced credit card fraud detection.

Logistic Regression-Based GA Results The results of the data generated by the Logistic Regression-based GA for the Credit Card Fraud Detection dataset, as demonstrated in Table 3, show a consistent improvement in performance metrics as the percentage of data usage increases. The data generated by SGA follows the trends of improved performance with increasing data. Overall, it achieves greater accuracy in minority class predictions, i.e., 83.3%. Interestingly, the highest precision of 90.5% is observed at 20% data sampling, and the lowest precision of 78.8% is recorded at 100% data sampling. The F1 score remains relatively stable throughout. The ROC AUC increases steadily.

The experimental results obtained from the data generated by EGA also demonstrate a consistent improvement in performance metrics with the increase in available data. EGA starts with better precision and F1 scores than SGA at 20% data usage, and its advantage becomes more pronounced as data usage increases. For example, at 20% data usage, EGA achieves an F1 score of 84.1% and a ROC AUC of 89.4%, compared to SGA’s 82.7% F1 score and 88.0% ROC AUC. This trend continues at higher data levels, with EGA reaching a final F1 score of 81.1% and a ROC AUC of 92.0% at 100% data usage, outperforming SGA on both metrics.

SVM-Based GA Results The SVM-based GA results demonstrate high accuracy and precision across all data splits, with ROC AUC consistently above 90%, indicating the strong discriminative power of models trained on its data. Accuracy and precision remain high as the data percentage increases, highlighting the method’s effectiveness in correctly identifying minority class samples. The Support Vector Machine Genetic Algorithm (SVMGA) method achieves the highest Recall (83.3) and highest ROC AUC (92.0), indicating its superior ability to capture patterns of class 1 and to differentiate between classes more effectively than other methods.

Models comparison

In this section, the performances of models are compared by taking average values of all the metrics including accuracy, precision, recall, F1 score, and ROC AUC. The results show the dominance of the models using our proposed GA-based synthetic data, as given in Table 4.

From Table 4, it is observed that accuracy remains consistent across all models. The highest precision is achieved by EGA (87.3), followed by SGA (85.12), GAN (84.9), VAE (84.1), and SVMGA (83.92). A notable gap separates the precision of the proposed GA-based models from that of SMOTE (70.88) and ADASYN (71.98). All three proposed methods, as well as GAN and VAE, show higher precision than SMOTE and ADASYN, indicating that these models produce a considerably smaller proportion of false positives among their positive predictions. The highest recall (82.9) is achieved by SMOTE, followed closely by SVMGA (82.40) and by SGA and EGA (81.32 each), while GAN (74.7) and VAE (80.1) lag behind. This suggests that although SMOTE is slightly better at identifying positive instances, the GA-based models remain competitive and offer a more balanced precision-recall trade-off than GAN and VAE. Similarly, the proposed EGA-based model exhibits the highest F1 score (84.04), followed by SGA (83.38), SVMGA (83.12), VAE (82.0), and GAN (80.5), while SMOTE (75.88) and ADASYN (76.34) perform poorly in terms of F1 score. Regarding ROC AUC, SVMGA achieves the highest value (91.48), followed by SMOTE (91.30) and ADASYN (91.24), while SGA and EGA both score 90.7, VAE achieves 90.0, and GAN shows the lowest value (87.2), indicating a more limited ability to distinguish between classes.

The Precision-Recall curve after 100% data sampling, illustrated in Fig. 9, shows similar trends with slightly different values. The Average Precision (AP) of SGA improves to 0.85, while SVMGA and ADASYN show slight decreases, to 0.85 and 0.79, respectively. Despite this decline, SVMGA still achieves the highest AP score of 0.85, matching SGA and EGA. SMOTE matches the AP score of the original data (0.83).
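Average Precision summarizes the Precision-Recall curve as \(\mathrm{AP} = \sum_n (R_n - R_{n-1}) P_n\), where \(P_n\) and \(R_n\) are the precision and recall at the \(n\)-th threshold; this is the definition documented for scikit-learn's `average_precision_score`. The NumPy sketch below reimplements it for illustration only, with a hypothetical function name and one threshold per distinct score.

```python
import numpy as np

def average_precision(y_true, scores):
    """Hypothetical helper: AP = sum_n (R_n - R_{n-1}) * P_n over distinct-score thresholds."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores, kind="mergesort")
    y_sorted, s_sorted = y_true[order], scores[order]
    # Keep only the last index of each run of tied scores (one threshold per score).
    last = np.r_[np.flatnonzero(np.diff(s_sorted)), len(s_sorted) - 1]
    tp = np.cumsum(y_sorted == 1)[last]
    precision = tp / (last + 1)
    recall = tp / max(int(np.sum(y_true == 1)), 1)
    prev_recall = np.r_[0.0, recall[:-1]]
    return float(np.sum((recall - prev_recall) * precision))
```

A perfect ranking of the positives gives an AP of 1.0; interleaving a negative ahead of a positive lowers the precision at which the remaining recall is gained.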

The ROC curve is plotted after 100% data sampling and, by considering all possible thresholds, provides a complete picture of how well the model separates the two classes, as shown in Fig. 9. The highest ROC AUC score of 0.97 is achieved by the SVMGA and EGA methods, indicating that models trained on data generated by these methods can effectively distinguish between the classes. The SGA method attains a slightly lower ROC AUC score of 0.96, while both SMOTE and ADASYN match the original data’s ROC AUC score of 0.95. The proposed GA-based methods thus outperform SMOTE and ADASYN by 0.01 to 0.02 in ROC AUC.

Statistical Significance Testing of F1 Scores To determine whether the observed differences in model performance are statistically significant, pairwise t-tests were conducted on the F1 scores of all evaluated models. Table 5 presents the corresponding p-values and mean differences for each comparison. The results indicate that the proposed GA-based methods, particularly EGA and SGA, frequently achieve p-values below the conventional threshold of 0.05 when compared to traditional oversampling techniques such as SMOTE and ADASYN. For example, EGA demonstrates statistically significant improvements over SMOTE (p = 0.0307) and ADASYN (p = 0.0298). Similarly, SGA exhibits statistically significant differences when compared to SMOTE (p = 0.0475) and ADASYN (p = 0.0470).

In contrast, comparisons between GA-based approaches and generative models such as VAE and GAN reveal fewer statistically significant differences. Although VAE achieves competitive F1 scores, the difference between EGA and VAE is statistically significant (p = 0.0030), as is the comparison between SGA and VAE (p = 0.0149). However, GAN does not yield statistically significant differences in most pairwise comparisons, suggesting its performance is not consistently distinguishable from the other methods.

PIMA Indian diabetes

The results with the PIMA Indian Diabetes dataset show notable differences in the performance of the EGA, SGA, and SVMGA methods. Overall, it is observed that SVMGA outperforms all other methods, indicating that more reliable and discriminative synthetic data is generated by SVMGA, as given in Table 6.

SMOTE Results The model trained on the synthetic data generated by the SMOTE method shows a considerable improvement in recall and F1 Score as the percentage of sampled synthetic data increases up to 40%, with the highest value of precision being observed at this point compared to earlier stages, as given in Table 6. However, at higher percentages (80% and 100%), precision and F1 Score begin to decline, particularly at 100%, where both metrics drop significantly. This demonstrates that the ability of the model to accurately predict class labels decreases with the increase in generated synthetic data samples.

ADASYN Results The results of the ADASYN method on the PIMA dataset, as given in Table 6, show that while balanced performance across different metrics is maintained, overall effectiveness decreases slightly at 100% sampling, with lower precision (55.2%) and ROC AUC (71.4%) than at earlier stages. This suggests that ADASYN may introduce noise when generating synthetic data, which can affect the model’s precision and generalization as more data is used. The method performs best after 40% sampling, where it reaches its highest ROC AUC (73.4%).

GAN Results The GAN-based approach on the PIMA Indian Diabetes dataset, as shown in Table 6, demonstrates inconsistent performance across sampling percentages. At 20%, it achieves moderate precision (74.6%) but low recall (59.5%), missing many positive cases. As sampling increases to 40%, performance deteriorates significantly, with precision dropping to 54.9% and accuracy decreasing to 67.0%, the lowest among all methods at this level. Although recall improves at 40% and 60% sampling (89.9% and 82.0%), precision suffers, indicating issues with generating balanced synthetic data. This instability suggests that GAN fails to accurately capture the underlying data distribution of the PIMA dataset. At 100% data sampling, GAN’s F1 Score (63.9%) is among the lowest of all methods, demonstrating its limitations in generating quality synthetic data for this classification task.

VAE Results The VAE method on the PIMA Indian Diabetes dataset, as presented in Table 6, shows several shortcomings across different sampling percentages. Starting with a suboptimal 58.8% precision at 20% sampling, among the lowest of all methods, VAE struggles to generate discriminative synthetic samples. While precision improves to 68.9% at 40% sampling, recall remains stagnant at 67.4%, indicating a persistent problem with identifying positive cases. As sampling increases to 60%, VAE regresses, with recall dropping to 62.9%, demonstrating an inability to maintain consistent improvement as more synthetic data is incorporated. Although VAE reaches 76.2% accuracy at 100% sampling, its overall metrics still fall short of the GA-based methods, particularly in recall and F1 Score when compared to SVMGA. These limitations highlight VAE’s deficiency in generating optimal synthetic data for imbalanced classification problems like the PIMA dataset.

Logistic Regression-based GA Results The experiments on the PIMA Indian Diabetes dataset show notable differences between EGA and the baseline SGA across metrics (Table 6). Overall, SGA outperforms EGA, particularly in precision and F1 Score, indicating that SGA generates more reliable and discriminative synthetic data. The advantage of SGA is most apparent at 100% data sampling, where it achieves higher precision (70.8) and F1 Score (73.3) than EGA (60.6 and 66.6, respectively). EGA, however, achieves a higher ROC AUC at 100% sampling (74.0 vs. 72.0 for SGA), suggesting stronger overall separation between the classes.
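In the usual formulation, the difference between a simple and an elitist genetic algorithm is elitism: the elitist variant copies its best individuals unchanged into the next generation, so the best fitness can never decrease. The following minimal sketch illustrates that distinction under stated assumptions. Each individual is taken to be a real-valued vector, such as one candidate synthetic sample, and the algorithm uses tournament selection, arithmetic crossover, and Gaussian mutation. A toy distance-based fitness stands in for the logistic-regression-based fitness used in the paper. `evolve`, `toy_fitness`, and all parameters are hypothetical.

```python
import random


def evolve(fitness, dim, pop_size=20, generations=30, n_elite=0,
           mutation_sd=0.1, seed=0):
    """Real-valued GA. n_elite=0 behaves like a simple GA; n_elite>0 is elitist.

    Returns the best fitness observed in each generation.
    """
    rng = random.Random(seed)
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        ranked = sorted(pop, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))
        # Elitism: the n_elite best individuals survive unchanged.
        next_pop = [list(ind) for ind in ranked[:n_elite]]
        while len(next_pop) < pop_size:
            # Binary tournament selection for each parent.
            p1 = max(rng.sample(pop, 2), key=fitness)
            p2 = max(rng.sample(pop, 2), key=fitness)
            # Arithmetic crossover followed by Gaussian mutation.
            alpha = rng.random()
            child = [alpha * a + (1 - alpha) * b + rng.gauss(0.0, mutation_sd)
                     for a, b in zip(p1, p2)]
            next_pop.append(child)
        pop = next_pop
    return history


def toy_fitness(x):
    # Hypothetical stand-in: closeness to the point (0.5, ..., 0.5).
    return -sum((v - 0.5) ** 2 for v in x)
```

With `n_elite >= 1`, the per-generation best fitness is non-decreasing by construction. The simple GA can lose its best individual to crossover and mutation, so its best fitness can fall from one generation to the next.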

SVM-based GA Results The SVM-based GA (Table 6) improves consistently as the percentage of sampled data increases. Its best performance is reached at 100% sampling, with strong recall (91.4%), F1 Score (71.5%), and ROC AUC (76.3%). This indicates that it helps the model identify true positives while keeping performance balanced across metrics as more data is used. Even at 20% sampling, SVMGA achieves the best accuracy (78.2), precision (73.6), F1 Score (67.8), and ROC AUC (74.4), indicating the effectiveness of the data it generates. The other methods perform similarly to one another and remain below SVMGA on these metrics, although SGA achieves the highest recall at this level (69.6).

Models comparison

As shown in Table 7, the SVM-based method achieves the best accuracy (77.0), precision (66.82), F1 Score (69.78), and ROC AUC (76.16). GAN shows the highest recall (77.26), followed by the SVM-based method (75.48). This suggests that the SVM-based GA generates data with considerable variability while capturing the critical decision boundaries of the original data.

The Precision-Recall curve after 100% data sampling (Fig. 9) shows a considerable improvement for SGA (0.75) and EGA (0.74), while the SVM-based GA (0.75) remains at the top, tied with SGA. This improvement is attributed to the use of the entire dataset, which provides more diversity for the GA-based methods to exploit. The performance of ADASYN worsens slightly, while the other methods show no pronounced change.
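The single values quoted for each Precision-Recall curve are presumably average precision (AP). The section does not define AP, so this is an assumption. AP summarises the curve as a precision-weighted sum of recall increments, AP = Σ_n (R_n − R_{n−1}) P_n, evaluated at each distinct score threshold. The following minimal sketch uses the same step-wise definition that scikit-learn documents for `average_precision_score`. The function name is hypothetical.

```python
def average_precision(y_true, scores):
    """Step-wise AP: sum over distinct thresholds of (R_n - R_{n-1}) * P_n."""
    n_pos = sum(y_true)
    if n_pos == 0:
        raise ValueError("average precision is undefined without positive samples")
    # Rank samples from most to least confident.
    pairs = sorted(zip(scores, y_true), key=lambda p: p[0], reverse=True)
    tp = fp = 0
    prev_recall = 0.0
    ap = 0.0
    for i, (score, label) in enumerate(pairs):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Evaluate only at the end of a run of tied scores (one threshold).
        if i + 1 < len(pairs) and pairs[i + 1][0] == score:
            continue
        precision = tp / (tp + fp)
        recall = tp / n_pos
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap
```

For labels `[0, 0, 1, 1]` with scores `[0.1, 0.4, 0.35, 0.8]`, this gives 5/6 ≈ 0.833. A perfect ranking gives 1.0.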

Figure 9 also shows the ROC (Receiver Operating Characteristic) curves for the original and synthetic data. A ROC curve plots the true positive rate (sensitivity) against the false positive rate (1 − specificity) across classification thresholds. The Area Under the Curve (AUC) condenses the curve into one number that reflects performance over all thresholds. The SVM-based Genetic Algorithm (AUC = 0.84) outperforms all other methods, indicating that it ranks positive cases above negative ones most reliably across thresholds. SMOTE-based methods (AUC = 0.80) perform comparably to the original data (AUC = 0.80). EGA (AUC = 0.81) and ADASYN (AUC = 0.81) slightly outperform the model trained on the original data but do not match the SVM-based GA. SGA (AUC = 0.83) performs better than EGA and ADASYN but still lags behind the SVM-based GA. Overall, the higher AUC scores of the SVM-based GA and SGA indicate that these approaches handle class imbalance most effectively.
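The AUC values above have a direct probabilistic reading. The area under the ROC curve equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counting one half (the Mann-Whitney formulation). The following minimal sketch computes it that way. It runs in quadratic time and is meant for illustration, not for large datasets. The function name is hypothetical.

```python
def roc_auc(y_true, scores):
    """AUC as P(score of random positive > score of random negative), ties = 1/2."""
    pos = [s for s, label in zip(scores, y_true) if label == 1]
    neg = [s for s, label in zip(scores, y_true) if label == 0]
    if not pos or not neg:
        raise ValueError("ROC AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

For labels `[0, 0, 1, 1]` with scores `[0.1, 0.4, 0.35, 0.8]`, three of the four positive-negative pairs are ordered correctly, so the AUC is 0.75. An AUC of 0.5 corresponds to random ranking.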

Statistical Comparison of F1 Scores on the PIMA Dataset Table 8 presents pairwise comparisons of mean F1-scores for the models evaluated on the PIMA dataset. Diagonal entries give each model's average F1-score, while off-diagonal entries give the mean difference and the corresponding p-value from a paired t-test. SVMGA achieved the highest mean F1-score (69.78) and showed statistically significant improvements over SMOTE (p = 0.0451) and ADASYN (p = 0.0146). Comparisons with GAN and VAE also favored SVMGA, but the p-values indicate only marginal significance. The other GA-based methods (SGA and EGA) were competitive, though their advantages were not statistically significant. These results indicate that GA-based approaches, particularly SVMGA, offer better classification performance on the PIMA dataset than conventional resampling and generative methods. The clearest statistical evidence is for SVMGA against SMOTE and ADASYN.
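A paired t-test compares two models on matched measurements. It takes the per-pair differences d_i, computes t = mean(d) / (sd(d) / √n), and refers t to a Student t distribution with n − 1 degrees of freedom. The section does not state what the pairs are. Treating the F1 scores at each sampling percentage as pairs is our assumption. The following minimal sketch is self-contained, so it integrates the t density with Simpson's rule instead of calling SciPy. In practice, `scipy.stats.ttest_rel(a, b)` is the usual route. All function names are hypothetical.

```python
import math
import statistics


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value; Simpson's rule (even steps) for 2 * integral_0^|t| pdf."""
    h = abs(t) / steps
    area = t_pdf(0.0, df) + t_pdf(abs(t), df)
    for k in range(1, steps):
        area += (4 if k % 2 else 2) * t_pdf(k * h, df)
    central = 2 * area * h / 3  # P(|T| <= |t|)
    return min(1.0, max(0.0, 1.0 - central))


def paired_t_test(a, b):
    """Return (mean difference, t statistic, two-sided p-value) for paired samples."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean_d / (sd / math.sqrt(n))
    return mean_d, t, two_sided_p(t, n - 1)
```

As a sanity check with toy values (not results from Table 8), differences of 1, 2, 3, 4, and 5 give t = 3√2 ≈ 4.24 with 4 degrees of freedom and a p-value between 0.01 and 0.02.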

Phoneme

The experiments on the Phoneme dataset show notable improvements across various metrics for several methods, particularly SVMGA. Overall, SVMGA outperforms the other methods in most scenarios (Table 9), indicating that it generates more reliable and discriminative synthetic data.

SMOTE Results As shown in Table 9, the model trained on SMOTE-generated synthetic data shows a considerable improvement in recall and F1 Score as the sampling percentage increases up to 60%, where precision also reaches its highest value. At higher percentages (80% and 100%), however, precision and F1 Score decline, dropping sharply at 100%. This indicates that the model's ability to predict class labels accurately decreases as the volume of generated synthetic data grows. Compared with SGA and EGA, SMOTE provides larger initial improvements in recall and F1 Score. However, its decline at higher synthetic data percentages suggests sensitivity to the noise introduced by larger volumes of synthetic data.
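The source of that noise is visible in how SMOTE builds samples. Each synthetic point is a linear interpolation between a minority sample and one of its nearest minority neighbours. When two minority neighbours straddle a majority region, the interpolated point can land inside it, and generating more samples makes such points more frequent. The following minimal sketch shows the interpolation step only. It is not the implementation used in the experiments, and `smote` and its parameters are hypothetical.

```python
import math
import random


def smote(minority, n_new, k=3, seed=0):
    """Generate n_new points by SMOTE-style interpolation between minority neighbours."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest minority neighbours of the chosen base sample.
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(b + gap * (q - b) for b, q in zip(base, nb)))
    return synthetic
```

Every generated point lies on a segment between two existing minority samples, so the method cannot create minority structure that is not already spanned by the data. It can, however, fill in regions between minority points that belong to the majority class.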

ADASYN Results As with SMOTE, ADASYN gradually improves the accuracy of minority class predictions, but the model's performance peaks at 40% data sampling. Beyond 40%, precision and F1 Score decline notably, while ROC AUC remains relatively stable, with a slight improvement at 40%.

GAN Results The GAN-based approach on the PHONEME dataset shows mixed results (Table 9). GAN achieves strong recall (86.9% to 89.9%) at all sampling levels and ties with EGA for the highest F1 Score (72.3%) at 80% sampling. It also achieves a competitive ROC AUC (82.8%) at 20% sampling. However, GAN consistently underperforms in precision, recording the lowest values at several sampling levels. Its accuracy also remains below that of most other methods, particularly at 40% sampling (79.9%). These limitations suggest that GAN generates synthetic samples that introduce classification errors. This prevents it from matching the balanced performance of the GA-based methods, especially SVMGA, which achieves better precision-recall trade-offs across sampling percentages.

VAE Results The VAE method on the PHONEME dataset shows moderate strengths (Table 9). At 40% sampling, VAE achieves the highest recall (86.7%, tied with SGA). At 60% sampling, it shows good accuracy (82.6%) and the second-highest precision (66.2%). Its performance remains relatively stable across metrics as sampling increases. However, VAE struggles to balance precision and recall: when recall improves, precision often suffers. At 100% sampling, VAE's precision drops to 58.5%, below SVMGA's 60.8%. Although VAE achieves reasonable ROC AUC values throughout, it consistently falls short of the more balanced performance of the GA-based methods, particularly SVMGA, which handles the precision-recall trade-off better across sampling levels.

Logistic Regression-based GAs Results With SGA, accuracy remains relatively consistent across sampling percentages. Precision and recall decrease slightly as the percentage increases, leading to a slight drop in F1 Score and ROC AUC. This suggests that SGA maintains overall accuracy but loses some precision and recall as more synthetic data is introduced. Interestingly, the highest accuracy for minority class predictions is observed at 80% data sampling, where the model appears to strike a balance between meaningful diversity and minimal noise.

The EGA results on the Phoneme dataset show relatively stable accuracy, with modest variability in precision and recall as the percentage of synthetic data increases. The F1 Score declines slightly at higher synthetic data percentages, reflecting a minor degradation in how the model balances precision and recall. Compared with SGA, EGA achieves similar precision and recall and slightly higher accuracy.

SVM-based GA Results The model trained on synthetic data from the SVM-based GA shows a consistent improvement in the accuracy of minority class predictions, except for a slight dip at 100% data sampling. Precision and F1 Score drop slightly at higher synthetic data percentages, reaching their lowest values at 80%. ROC AUC remains relatively stable across data percentages. The best overall performance, with high accuracy, recall, and F1 Score, is observed at 20% to 60% data sampling.

Models comparison

Results for all three datasets at 100% data sampling: (a) PR curve for the Credit Card Fraud Detection dataset, (b) ROC curve for the Credit Card Fraud Detection dataset, (c) PR curve for the PIMA dataset, (d) ROC curve for the PIMA dataset, (e) PR curve for the Phoneme dataset, and (f) ROC curve for the Phoneme dataset.

As shown in Table 10, the models perform comparably across all metrics, including accuracy, precision, recall, F1 Score, and ROC AUC. Precision ranges from 59% to 63% across methods, and the proposed SVMGA-based method outperforms GAN, VAE, SMOTE, and ADASYN. The SVM-based GA achieves the best overall performance, with relatively high and stable values across all metrics. These include the highest F1 Score (73.18) and ROC AUC (83.34), indicating a good balance between precision and recall. GAN shows the highest recall (87.9), meaning it correctly classifies the largest share of class 1 samples. ADASYN has the lowest precision (59.18), accuracy (79.7), F1 Score (70.0), and ROC AUC (80.0), making it the weakest method across all metrics.
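The recall-versus-precision pattern seen for GAN is easiest to read from the confusion-matrix definitions. Recall is the share of class 1 samples that are predicted as class 1. Precision is the share of class 1 predictions that are correct. F1 is their harmonic mean, so a model that predicts the positive class liberally can reach high recall while its F1 is held back by low precision. The following minimal sketch computes these metrics for a binary task. The function name is hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # share of class-1 samples found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}
```

For example, a classifier that finds both positives in `[1, 1, 0, 0, 0, 0]` but also flags two negatives has recall 1.0, precision 0.5, and F1 ≈ 0.667.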

The Precision-Recall curves after 100% data sampling (Fig. 9) show little variation in outcome. The models are ranked in the same order, with the SVM-based GA leading the others.

The ROC curves in Fig. 9 show that the SVM-based GA performs best, with an AUC of 0.93, followed by SGA (0.92) and EGA (0.91). The remaining models (ADASYN, SMOTE-based, and Original) have lower AUC values of 0.89 to 0.90. This indicates slightly less effective classification than the SVM-based GA, EGA, and SGA.

Statistical Comparison of F1 Scores on the PHONEME Dataset Table 11 presents pairwise comparisons of mean F1-scores across models on the PHONEME dataset. Diagonal entries give each model's average F1-score, and off-diagonal entries give the mean difference and the associated p-value from a paired t-test. SVMGA attained the highest mean F1-score (73.18), with statistically significant improvements over ADASYN (p = 0.0355), VAE (p = 0.0146), and SGA (p = 0.0399). SVMGA also showed positive differences against other models such as GAN and EGA, but the corresponding p-values indicate marginal or non-significant differences. The other GA-based models (SGA and EGA) remained competitive, though their relative advantages were not statistically significant. These findings further show the effectiveness of GA-based methods, with SVMGA performing statistically better than ADASYN, VAE, and SGA on the PHONEME dataset.

Comparative performance analysis

A comparative analysis of F1 scores across all synthetic data generation techniques and benchmark datasets is presented in Fig. 10. The analysis shows that the proposed genetic algorithm variants (SGA, EGA, and SVMGA) consistently outperform conventional oversampling methods and deep learning-based approaches.

F1 Score comparison across synthetic data generation methods on three benchmark datasets. The proposed genetic algorithm variants (SGA, EGA, SVMGA) outperform traditional approaches. SVMGA achieves the highest F1 scores on the Phoneme (73.18%) and PIMA (69.78%) datasets, while EGA performs best on the Credit Card Fraud dataset (84.04%).

The experimental results indicate that the proposed GA-based methods outperform the baseline approaches on all evaluated datasets, with a GA-based variant achieving the best F1 score on each dataset. SVMGA is the most consistent, achieving the highest F1 score on two of the three benchmark datasets. The comparative analysis supports the effectiveness of integrating machine learning guidance into genetic algorithm frameworks for synthetic data generation, and thereby supports the main contributions of this research.

Feature importance analysis

A feature importance analysis was conducted using permutation importance across the three datasets: PIMA, Credit Card Fraud Detection, and Phoneme. This analysis identifies the most influential features contributing to synthetic data generation and overall model performance.
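Permutation importance measures how much a fitted model's score drops when a single feature column is randomly shuffled. Shuffling breaks the link between that feature and the target while leaving its marginal distribution intact. The section does not state which model, metric, or number of repeats was used. The following minimal sketch therefore assumes a generic `predict` callable and a `metric(y_true, y_pred)` function, both hypothetical. For fitted estimators, scikit-learn provides the same procedure as `sklearn.inspection.permutation_importance`.

```python
import random


def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Mean drop in `metric` when each feature column of X is shuffled."""
    rng = random.Random(seed)
    baseline = metric(y, [predict(row) for row in X])
    importances = []
    for col in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[col] for row in X]
            rng.shuffle(column)
            # Rebuild the rows with only this column permuted.
            X_perm = [list(row[:col]) + [v] + list(row[col + 1:])
                      for row, v in zip(X, column)]
            drops.append(baseline - metric(y, [predict(row) for row in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

A feature that the model never uses always receives an importance of exactly zero, because shuffling it cannot change any prediction. Features that the model relies on show a positive average drop.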

Key insights include:

-