Abstract

This study investigates the critical control factors that differentiate human-driven vehicles from IoT edge-enabled smart driving systems, with emphasis on real-time steering, throttle, and brake control. By combining multiple high-precision sensors with edge computing for real-time processing, the research seeks to improve autonomous vehicle decision-making. The proposed system gathers real-time time-series data using LiDAR, radar, GPS, IMU, and ultrasonic sensors. Edge nodes preprocess this data before sending it to a cloud server, where a Convolutional Neural Network (CNN) generates predicted control vectors for vehicle navigation. The study uses a MATLAB 2023 simulation framework that includes 100 autonomous cars, five edge nodes, and a centralized cloud server. The CNN architecture consists of multiple convolutional and pooling layers followed by fully connected layers. Grayscale and optical flow images are used to enhance trajectory estimation. Performance is evaluated using trajectory smoothness measures, loss function trends, and Root Mean Square Error (RMSE). According to the experimental data, the proposed CNN-based edge-enabled driving system outperforms conventional autonomous driving techniques in navigation accuracy, achieving an RMSE of 15.123 and a loss value of 2.114. The results show how edge computing can improve vehicle autonomy and reduce computational delay, opening the door to more effective smart driving systems. To better evaluate the system’s suitability for dynamic situations, future work will incorporate real-world validation.

Introduction

Smart car revolution

Smart cars are evolving rapidly and are changing the future of travel. IoT (Internet of Things) edge computing embedded in smart vehicles is driving the advent of highly automated vehicles, which are poised to transform travel worldwide. Autonomous, intelligently driven cars promise to make travel safer, more efficient, and more environmentally friendly. According to the International Data Corporation (IDC), the global connected vehicle market will likely grow at a CAGR of 9.2 per cent between 2018 and 2025, reaching a market size of $225 billion by 2025 [1], with autonomous vehicles as the primary growth driver. According to KfW, the German government’s development bank, cheap sensors make autonomous driving easier. In addition, McKinsey & Company estimates that advanced driver-assistance systems (ADAS) and autonomous driving technologies can reduce traffic accidents by up to 90 per cent and save more than 1.25 million lives across the globe [2].

Smart car systems

A smart car system incorporates several advanced technologies to enable autonomous driving and enhanced driver assistance. Such systems contain a network of sensors and cameras that collect driving-related data in real time, together with actuators that control the vehicle. On-board computers process safety-critical data in real time and maintain a moment-by-moment awareness of the vehicle’s environment. According to a report by Allied Market Research, the global automotive LiDAR market is projected to reach $2.9 billion by 2026, driven by the increasing adoption of autonomous vehicles. Components such as LiDAR, radar, ultrasonic sensors, GPS, and Inertial Measurement Units (IMUs) jointly generate a large volume of information about the driving situation, enabling fine-grained control and optimal performance [3,4,5].

IoT-enabled smart cars

The IoT also enables smart cars to connect to nearby devices, infrastructure, and vehicles and to share information in real time, for example through vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communications. Gartner predicts that by 2025 more than 75 per cent of all vehicles built will incorporate some form of V2V or V2I communication. IoT-enabled smart cars can also draw on cloud services for navigation, diagnostics, and updates, helping them make better use of the time spent driving and enhancing the user experience [6,7,8,9].

Edge computing in smart cars

With edge computing, data processing is handled closer to the source, which reduces latency and supports real-time decision-making. In smart cars, edge computing means that sensor data can be rapidly analysed and filtered and then pushed to the cloud for storage and further processing [10]. Consequently, the vehicle can respond in real time to dynamic driving conditions, improving collision avoidance, adaptive cruise control, and emergency braking, all of which depend on millisecond-level response. According to the analytics company Frost & Sullivan, the edge computing market in the automotive sector is estimated to grow at a compound annual growth rate (CAGR) of 35.4 per cent from 2020 to 2027 [11].

Machine learning and CNN in smart car systems

Machine learning (ML) passes data through multiple levels of analysis and can improve a car’s decisions by finding patterns in very large numbers of data entries. Over time, ML systems become better at this pattern recognition. ML can therefore make smart car systems more effective, for example by processing multiple data streams. Convolutional Neural Networks (CNNs), a sub-class of ML models, can be trained to analyse images and video. They are especially useful in smart car applications such as object detection, lane keeping, and traffic sign recognition [12,13]. CNNs can process and analyse images and video with very high accuracy, helping to make autonomous driving safer. One report in the MIT Technology Review states that ‘over the past five years, we have seen the accuracy of convolutional neural networks charged with object detection in autonomous vehicles increase by 40%’ [14,15,16].

Research focus

Though autonomous car technology has advanced considerably, current navigation systems have serious constraints in real-time decision-making, trajectory prediction, and processing efficiency. Traditional autonomous driving systems mostly depend on centralized cloud computing, which delays processing sensor data and restricts the vehicle’s capacity to make split-second judgments. Furthermore, traditional models have problems with sensor fusion, which complicates the integration of data from many sources like LiDAR, radar, IMU, GPS, and ultrasonic sensors. These constraints affect general navigation performance, control smoothness, and trajectory planning accuracy.

This paper combines IoT edge computing with deep learning-based navigation techniques to address these gaps, moving computation closer to the source to improve real-time processing. Unlike previous studies that emphasize cloud-driven decision-making, our method uses edge nodes for quick data processing, which lowers latency and improves responsiveness. A Convolutional Neural Network (CNN) is also used to process optical flow and grayscale images, enhancing trajectory prediction and adaptive control. By learning from substantial time-series data, the CNN-based model refines steering, throttle, and brake decisions, outperforming classic rule-based or heuristic models. The proposed system is assessed using performance criteria such as Root Mean Square Error (RMSE), loss function trends, and trajectory smoothness, revealing considerable improvements over current techniques. Through high-precision, edge-driven decision-making, our work advances smart driving and opens the path to more dependable and efficient autonomous navigation. Future work will concentrate on real-world validation to further evaluate the system’s flexibility in dynamic driving conditions.

The key contributions of this paper are summarized as follows:

- Novel multi-sensor integration framework: We present a unique approach to integrating GPS, camera, radar, LiDAR, and ultrasonic data through a hybrid CNN-based edge computing architecture, enabling more efficient real-time decision-making compared to traditional methods.

- Edge-cloud hybrid system design: We propose and simulate an IoT-based smart vehicle system that optimally balances local edge inference with cloud-based processing, ensuring reliable performance even under limited edge node coverage.

- Performance benchmarking: We provide detailed comparisons between CNN and LSTM models on key performance metrics such as accuracy, latency, RMSE trend, and loss trend, demonstrating the superior suitability of CNN for the targeted real-time tasks.

- Robustness and cost analysis: We assess the system’s operational limits, including communication ranges, bandwidth thresholds, and fallback mechanisms, and offer a comprehensive cost evaluation to assess large-scale deployment feasibility.

Driving forces

The pursuit of safe, efficient, and green vehicles remains at the core of developing these technologies. Combining IoT edge computing with the potential of smart cars may help to solve a major future challenge. The European Commission estimates that connected and autonomous vehicles could reduce traffic by as much as 30 per cent and drastically cut CO2 emissions. We aim to push the development of smart cars toward the ultimate vision of autonomous driving by combining IoT edge computing and machine learning with advanced control strategies. Through systematic analysis and on-road testing, we expect that autonomous driving will lead to safer and more efficient driving operations than traditional manual driving.

Literature survey

A literature survey gives researchers a broad overview of what has already been done in a research area and helps them identify gaps, trends, and future research directions. By studying previous work, researchers can improve on what currently exists, avoid duplicating effort, and ensure that their study contributes to the existing academic discourse. A review of the literature also helps in conceptualising the study, since it provides the background and helps to frame the research questions or hypotheses. Finally, it keeps the researcher informed of the most recent developments and approaches in the area, which helps to ensure that the research project is relevant and realistic.

Lakhan et al. [17], in “IoT workload offloading efficient, intelligent transport system in federated ACNN integrated cooperated edge-cloud networks” (2024), introduced a concept that uses artificial intelligence techniques to address the problems of smart transport systems, in particular the offloading problems of connected devices. The concept includes an augmented convolutional neural network (ACNN) that enables users to make collaborative decisions, together with machine learning methods for workload offloading and scheduling. The simulation results reported for the augmented federated learning scheduling scheme (AFLSS) show superior performance, with a higher average estimation accuracy and less total time than existing approaches for management, scheduling, and workload offloading. Hazarika et al. (2024) [18] developed a vision-based DTLS in which objects are detected using the YOLO (You Only Look Once) object detection algorithm, to overcome traffic management issues in urban areas.

Traditional static traffic lights operate in fixed time intervals based on the sensor’s brightness, which leads to excessive waiting times and congested traffic flow. The smart traffic light system, on the other hand, dynamically adjusts the signal interval based on the real-time traffic density, keeping delays in the traffic flow to a minimum. The traffic density is estimated using self-learning algorithms, which allow signal adjustments with minimal delay. Critical junctions can communicate with each other, allowing prioritised traffic flow at junctions near schools, offices, and hospitals. Traffic computations can be performed using approximate computing techniques to minimise overhead, along with state-of-the-art low-power communication technologies such as the IEEE 802.15.4 standard DSME MAC and/or LoRaWAN. Smart cities can integrate these systems to improve traffic handling while also providing green traffic corridors for emergency vehicles.

For edge offloading in IoT environments, Jin and Zhang (2024) [10] propose a novel vehicle-splittable task offloading algorithm called VPEO, which achieves satisfactory numerical results. The performance indicators mentioned in the table result from simulation experiments; the table lists the changes in the number of vehicles and the number of tasks, together with the experimental results.

The table shows that the VPEO algorithm achieves 94% accuracy in task calculation after 750 iterations within an acceptable time interval. In the intelligent warning experiments, the average accuracy of lane offset distance measurement is 84.66%, the average correctness of vehicle class identification is 92.26%, and the accuracy of identifying vulnerable traffic participants and their relationship to the lane line is 94.69%. These results show that the VPEO algorithm can provide real-time calculations of intelligent driving information and issue timely warnings for driving safety.

Jacob et al. [19], in “IoT-based Smart Monitoring System for Vehicles”, describe a system that enforces strict penalties on people who violate traffic laws by providing 100% monitoring, leaving no possibility of evading a penalty. From the moment the car is started, the system continuously monitors for violations such as speeding, rash driving, drunken driving, and failure to wear a seat belt, using sensors on a smart device installed in the vehicle, and sends the relevant data to the cloud. Police can also check vehicle details from their mobile phones. Breath-alcohol sensors and speed sensors continuously monitor the driver’s behaviour. Details such as vehicle licence, pollution, and insurance information are also stored in the cloud for future reference. Violations are handled automatically: the penalty for the driver’s negligence of road safety is deducted and forwarded by an automatic system to the Motor Vehicle Department, which strengthens law and order on the roads.

Anto Praveena et al. (2021) [20] designed a cloud-based car system with speech recognition using the IoT. The above diagram shows a smart car using IoT and speech recognition. The design is meant to make the car easy to operate and track. The system uses a Bluetooth module with an APC recognition engine to detect speech and recognise it as commands, and an ultrasonic sensor to detect obstacles in front of the car and measure the distance to them. Four types of motion commands can be recognised. The system has two modes: a manual mode, in which the user controls the car by voice commands, and an automatic mode, in which the car moves autonomously. An Arduino Uno board controls all operations by receiving digital output from the sensors, while voice commands are interpreted by the APC recognition engine. The system provides a user-friendly way to operate vehicle mechanisms through speech-based motion commands (Table 1).

A comprehensive review of bio-inspired meta-heuristic algorithms emphasizes their rapid convergence and superior fitness scores in solving intricate problems [21]. In underwater systems, a probabilistic framework leveraging solar-powered hydrophone sensor networks achieves high reliability and energy efficiency [22]. For unmanned aerial vehicles (UAVs), an obstacle detection system using Advanced Image Mapping Localization (AIML) was proposed, though the article was later retracted [23]. Further advancements in UAV communication and scene classification integrate the White Shark Optimizer (WSO) with deep learning techniques, achieving remarkable accuracy and performance metrics [24]. Additionally, the War Strategy Optimization (WSO) algorithm optimizes electric vehicle (EV) charge scheduling, significantly reducing waiting times and charging costs. Building on these innovations, recent research explores IoT-edge-enabled smart driving systems, integrating high-precision sensors like LiDAR, radar, GPS, IMU, and ultrasonic sensors to collect real-time data. These systems leverage Convolutional Neural Networks (CNNs) at edge nodes and cloud servers to generate precise control vectors for autonomous navigation, demonstrating improved control precision and trajectory smoothness compared to conventional methods [25]. Collectively, these studies underscore the transformative potential of bio-inspired optimization, IoT-edge integration, and deep learning in enhancing efficiency, reliability, and real-time decision-making across diverse engineering applications.

Methodology: IoT edge–enabled smart car driving system

The smart car driving system, which aims to minimize energy consumption and improve control accuracy, enables autonomous driving through the integration of cutting-edge technologies. As shown in Fig. 1, the architecture consists of cars outfitted with several sensors, edge nodes, and a cloud server, which form an interconnected framework for real-time data analysis and decision-making. Together, these elements provide robust and secure vehicle control. In contrast to traditional systems that handle sensor streams separately, our method presents a unique multi-modal sensor fusion strategy that uses an improved pre-processing pipeline to integrate LiDAR, radar, GPS, IMU, and ultrasonic data at the edge node level. This pipeline improves the efficiency of downstream CNN analysis by reducing duplicate data, filtering sensor noise, and creating context-enriched feature maps prior to transmission to the cloud. Real-time data from the various sensors is collected from moving vehicles and transmitted to nearby edge nodes, where this novel fusion approach is applied. At the cloud server, the system employs custom-designed CNN architectures that have been specifically adapted to the multi-sensor fusion output, capturing both spatial and temporal dependencies in ways that outperform generic CNN or LSTM models applied to raw sensor streams. The computed control vectors are then transmitted back to the edge nodes and relayed to the respective vehicles, enabling real-time autonomous control of steering, throttle, braking, and other driving functions.

A synthetic dataset that included different ambient elements, sensor noise, and dynamic vehicle behaviors was created to replicate real-world driving situations and support reliable model training. Using this dataset, the proposed CNN-based model was trained to be flexible across a variety of driving situations. CNNs were chosen over other deep learning models such as Transformers and LSTMs because of their greater feature extraction capabilities and their customized integration with the proposed multi-modal fusion architecture. Furthermore, a comparative analysis of CNN-based architectures, such as MobileNet and ResNet, has been carried out in order to maximize performance. Although the present approach has been verified using simulation and synthetic data, real-world testing and implementation are still necessary to confirm its effectiveness in dynamic and uncertain driving situations. This future evaluation will involve benchmarking against real-world datasets and on-road testing to assess model performance under practical conditions.

The suggested smart vehicle driving system, as seen in Fig. 2, combines several parts, including the cloud server, the car control unit, the edge sensor node, and the sensor unit of the automobile. Together, these parts guarantee the vehicle’s safe and highly efficient autonomous operation.

Car control unit

Each sensor detects and measures relevant aspects of the environment in real time, and then a controller relays this information to the car’s central computer. The car computer processes the data and decides on an appropriate course of action. Based on the computer’s command, the actuators regulate a specific car function, like throttle, brakes, and steering.

Figure 1: Smart car driving system.

In modern cars, there can be up to 70 sensors installed, compared to only 10 to 20 five years ago, to collect data from inside and outside the car in real-time. Figure 2 shows that the main sensors include:

GPS sensor

Precise geolocation data is available through the Global Positioning System (GPS) sensor. The information provided by the GPS sensor is vital to plan the optimal route from one location to another and in navigation. The GPS sensor helps to keep the car on track to its destination by tracking its position throughout the journey.

Camera sensor

The camera sensor records high-resolution visual data. This data is used to detect visible obstacles, traffic lights, lane boundaries, road markings, and road signals and signs. By collecting visual data from the car’s surroundings, the camera sensor allows the system to identify objects in the environment and make appropriate driving decisions.

Radar sensor

The radar sensor detects the range and motion of objects around the automobile so that the car’s speed can be adjusted accordingly. It emits radio waves toward objects that are approaching or moving alongside the car; the waves bounce off those objects and return to the radar. From these reflections, the radar sensor measures both the distance and the speed of the objects. Because it can detect cars, pedestrians, and other obstructions even in poor weather conditions, radar is crucial for avoiding collisions.

LiDAR sensor

LiDAR sensors build high-precision three-dimensional maps of the car’s surroundings. They create a precise point cloud representing the objects and terrain around the car by measuring the time taken for emitted laser pulses to be reflected back to the sensor. This improves the car’s high-precision obstacle detection and driving abilities.

Ultrasonic sensor

The ultrasonic sensor uses sound waves to measure how far objects are from the car, providing accurate distance measurements at low speeds, for example during parking. It helps to prevent collisions when other sensors fail to detect objects at close range.

Each sensor in the car sensor unit adds value to the overall picture of the driving environment that the unit provides. Coordination among the sensors ensures that the vehicle functions as a unified system, even in adverse circumstances, and reaches its destination in a timely and safe manner. The car sensor unit is a key pillar of the autonomous driving system: it continuously gathers and transmits a steady stream of sensor data, which the relevant edge node converts into mathematical form to control the automobile in real time. The following equation represents this:

Let \(\mathbf{S}_{\text{car}}(t)\) represent the sensor data from a single car at time t. This sensor data may consist of multiple sensor readings, which we can denote as \(s_{i}(t)\), where i ranges from 1 to N, representing the number of sensor channels.

The sensor data vector of the car at time t is given by:

\[\mathbf{S}_{\text{car}}(t) = \left[ s_{1}(t),\; s_{2}(t),\; \ldots,\; s_{N}(t) \right]\]

where \(s_{i}(t)\) represents the value of the ith sensor channel at time t, and N is the total number of sensor channels. This equation represents the sensor data transmitted from one car to the edge node at a specific time instant t.

Edge sensor node

The edge sensor node plays a crucial role in the smart car driving system by acting as a bridge between the sensor unit of the smart car and the cloud server. Its main task is to collect, pre-process, and forward sensor data, which keeps the system fast and efficient.

Data aggregation

The edge sensor node continually combines information from the many sensors on the vehicle, such as the GPS sensor’s geolocation, the camera sensor’s visual data, the radar’s distance and speed data, the LiDAR’s 3D environment map, and the ultrasonic sensor’s proximity data. This data is then contextualized to create a gateway dataset that depicts the state of the vehicle. As soon as the data is collected, and before sending it to the cloud server, the edge sensor node completes a number of crucial pre-processing operations. The computational cost and complexity introduced by integrating numerous components, including the CNN, GPS, LiDAR, and other sensors, must be taken into account. Efficient data fusion strategies and optimization techniques must be employed to minimize latency and ensure real-time performance in dynamic environments. Additionally, understanding the data sources and sensing methods aids in effective pre-processing, ultimately improving the efficiency of subsequent data analytics.

Noise filtering

Noise and irrelevant features are filtered out so that only useful information is retained, improving data quality and validity.
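The source does not say which filter the edge node applies. As one illustrative possibility, the sketch below uses a sliding-window median filter, a common way to suppress isolated spikes in a single sensor channel. The function name `median_filter`, the window size, and the sample values are assumptions made for this example.

```python
from statistics import median
from typing import List


def median_filter(samples: List[float], window: int = 3) -> List[float]:
    """Replace each sample by the median of its neighbourhood.

    The window is centred on the sample and shrinks at the two ends
    of the series. This is an illustrative choice, not the paper's filter.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    filtered = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        filtered.append(median(samples[lo:hi]))
    return filtered


# Hypothetical ultrasonic distances (metres) with one spurious spike.
raw = [1.0, 1.1, 9.0, 1.2, 1.0]
print(median_filter(raw))
```

A median is used here rather than a mean because a single outlier cannot drag the median far, whereas it would visibly distort a moving average.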

Sensor-data synchronisation

Sensor data from different sources is brought onto a common timeline so that it can be handled collectively in further processing.
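One simple way to place streams on a common timeline is to resample each of them at shared timestamps by linear interpolation. The sketch below assumes each stream is a list of (timestamp, value) pairs with strictly increasing timestamps. The names `interpolate_at` and `synchronise` and the sample data are hypothetical, and the source does not state which alignment method is actually used.

```python
from bisect import bisect_left
from typing import Dict, List, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, value)


def interpolate_at(stream: List[Sample], t: float) -> float:
    """Linearly interpolate a time-sorted stream at time t.

    Values are held constant before the first and after the last sample.
    """
    times = [ts for ts, _ in stream]
    i = bisect_left(times, t)
    if i == 0:
        return stream[0][1]
    if i == len(stream):
        return stream[-1][1]
    (t0, v0), (t1, v1) = stream[i - 1], stream[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def synchronise(streams: Dict[str, List[Sample]],
                timeline: List[float]) -> List[Dict[str, float]]:
    """Resample every stream at each timestamp of the common timeline."""
    rows = []
    for t in timeline:
        row = {"t": t}
        for name, stream in streams.items():
            row[name] = interpolate_at(stream, t)
        rows.append(row)
    return rows


# Hypothetical streams sampled at different rates.
streams = {
    "radar_range_m": [(0.0, 10.0), (0.1, 12.0)],
    "gps_x_m": [(0.0, 100.0), (0.2, 104.0)],
}
for row in synchronise(streams, [0.0, 0.05, 0.1]):
    print(row)
```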

Basic analytics

Simple analysis of the data, such as obstacle detection and calculation of vehicle speed, direction, and bearing, supports follow-on analytics, prioritises critical data, and enables urgent local decision-making.
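As an illustration of this kind of lightweight analytics, the sketch below derives speed and bearing from two consecutive GPS fixes using the standard haversine and initial-bearing formulas on a spherical Earth. The function names, the fix format (latitude, longitude, timestamp), and the sample coordinates are assumptions for this example rather than details from the source.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)

Fix = Tuple[float, float, float]  # (latitude deg, longitude deg, time s)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial bearing from point 1 to point 2, in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    x = math.sin(dlmb) * math.cos(phi2)
    y = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb))
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def speed_mps(a: Fix, b: Fix) -> float:
    """Average speed in metres per second between two timestamped fixes."""
    dt = b[2] - a[2]
    if dt <= 0:
        raise ValueError("fixes must be in increasing time order")
    return haversine_m(a[0], a[1], b[0], b[1]) / dt


# Hypothetical fixes taken ten seconds apart.
prev_fix, curr_fix = (0.0, 0.0, 0.0), (0.0, 0.001, 10.0)
print(speed_mps(prev_fix, curr_fix), bearing_deg(0.0, 0.0, 0.0, 0.001))
```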

Decrease latency

A layered approach to data aggregation and pre-processing at the edge sensor node significantly cuts latency. Taking decisions at the edge of the network means that the information needed about network topology and peer status can remain relevant over longer timescales. There is no need for continuous monitoring between nodes, and the bandwidth used on the network is significantly reduced. Much of the processing not only remains local but can also be preconfigured and partial, so that an alert sent to the central node is well defined and includes both the data it refers to and the details of the action it requests.

After pre-processing, the edge sensor node sends the refined data to the cloud server. The cloud server can work more efficiently and accurately because the edge sensor node reduces the volume of data and improves its quality. The edge sensor node packages the data in a compact form that the cloud server’s CNN module can readily interpret.

Control vector relay

The edge sensor node not only provides data to the cloud but also relays control vectors from the cloud server to the vehicle. Once the control vectors have been derived from the ingested data by the cloud server, these vectors are relayed to the edge sensor node and thence to the car’s control unit. The control vectors dictate the real-time action of the car controller, such as steering, throttle, braking, collision avoidance, and adaptive cruise control.
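The relay step can be pictured with a small data structure. The sketch below is hypothetical: the source does not give the fields, units, or ranges of the control vector, so the three fields, the normalised ranges, and the names `ControlVector`, `sanitise`, and `relay` are all assumptions. It shows an edge node clamping a received vector to actuator limits before handing it to the car’s control unit.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ControlVector:
    steering: float  # assumed range: -1.0 (full left) to +1.0 (full right)
    throttle: float  # assumed range: 0.0 to 1.0
    brake: float     # assumed range: 0.0 to 1.0


def clamp(value: float, lo: float, hi: float) -> float:
    """Limit value to the closed interval [lo, hi]."""
    return max(lo, min(hi, value))


def sanitise(cv: ControlVector) -> ControlVector:
    """Clamp every component to its assumed actuator range."""
    return ControlVector(
        steering=clamp(cv.steering, -1.0, 1.0),
        throttle=clamp(cv.throttle, 0.0, 1.0),
        brake=clamp(cv.brake, 0.0, 1.0),
    )


def relay(cv: ControlVector,
          apply_to_vehicle: Callable[[ControlVector], None]) -> ControlVector:
    """Forward a sanitised control vector to the car's control unit."""
    safe = sanitise(cv)
    apply_to_vehicle(safe)
    return safe


# Hypothetical out-of-range vector arriving from the cloud server.
relay(ControlVector(steering=1.5, throttle=-0.2, brake=0.3), print)
```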

Mathematically, as mentioned earlier, \(\mathbf{S}_{\text{car}}(t)\) represents the sensor data from a single car at time t. This sensor data may consist of multiple channels capturing various aspects of the car’s environment, such as GPS coordinates, camera images, radar readings, LiDAR point clouds, and ultrasonic sensor measurements. We can represent this sensor data as a vector:

\[\mathbf{S}_{\text{car}}(t) = \left[ s_{1}(t),\; s_{2}(t),\; \ldots,\; s_{N}(t) \right]\]

where \(s_{i}(t)\) represents the value of the ith sensor channel at time t, and N is the total number of sensor channels.

If there are M cars transmitting sensor data to the edge node, we can represent the sensor data from all cars as a matrix:

\[\mathbf{S}(t) = \begin{bmatrix} s_{1,1}(t) & s_{1,2}(t) & \cdots & s_{1,N}(t) \\ s_{2,1}(t) & s_{2,2}(t) & \cdots & s_{2,N}(t) \\ \vdots & \vdots & \ddots & \vdots \\ s_{M,1}(t) & s_{M,2}(t) & \cdots & s_{M,N}(t) \end{bmatrix}\]

This matrix represents the sensor data from all cars at time t, where each row corresponds to the sensor data from a single car; here \(s_{j,i}(t)\) denotes the ith sensor channel of the jth car.
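The vector and matrix described above map directly onto nested lists. The following minimal sketch assumes every channel has already been reduced to a single scalar per time step, which is a simplification since camera and LiDAR channels are not scalars in practice. The function names and the numbers are illustrative only.

```python
from typing import List


def sensor_vector(readings: List[float]) -> List[float]:
    """Build the per-car vector [s_1(t), ..., s_N(t)] as a flat list."""
    return [float(r) for r in readings]


def stack_cars(vectors: List[List[float]]) -> List[List[float]]:
    """Stack M per-car vectors into an M x N matrix, one row per car."""
    if not vectors:
        return []
    n = len(vectors[0])
    for v in vectors:
        if len(v) != n:
            raise ValueError("every car must report the same N channels")
    return [list(v) for v in vectors]


# Hypothetical example: M = 3 cars, N = 4 scalar channels each.
cars = [
    sensor_vector([12.5, 0.8, 3.1, 47.0]),
    sensor_vector([10.2, 0.7, 2.9, 46.5]),
    sensor_vector([15.0, 0.9, 3.4, 48.2]),
]
S = stack_cars(cars)
print(len(S), len(S[0]))  # number of rows (M) and columns (N)
```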

Cloud server

The cloud server is a key component of the smart car driving system and performs the higher-level data processing and decision-making. It has three subcomponents: the cloud receiver, the CNN module, and the cloud transmitter. These modules jointly process the sensor data transferred from the edge sensor node and produce the control vectors that drive the car’s autonomous behaviour.

Cloud receiver

The cloud receiver receives the information delivered by the edge sensor node. It is designed to handle the large volumes of data received from the edge sensor node and to store all the information so that no data is lost. The cloud receiver must also manage the prompt and ordered delivery of information. Its functions include:

a. Raw data uptake: It receives the pre-processed sensor data pushed by the edge sensor nodes, that is, data aggregated and filtered from the vehicle’s GPS, camera, radar, LiDAR, and ultrasonic sensors.

b. Cloud storage: The incoming data is stored in the cloud for a brief period of time in a format that is easy for the CNN module to process.

c. Data integrity: It checks that the data is preserved in its original state, without any errors or changes introduced in transmission.
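The source does not describe how integrity is verified. One common approach, shown here purely as an assumption, is for the edge node to attach a SHA-256 digest to each message and for the cloud receiver to recompute it on arrival. The message layout and the function names `package` and `receive` are hypothetical.

```python
import hashlib
import json


def package(payload: dict) -> dict:
    """Edge side: serialise the payload and attach its SHA-256 digest."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return {"body": body, "sha256": hashlib.sha256(body).hexdigest()}


def receive(message: dict) -> dict:
    """Cloud side: recompute the digest and reject altered messages."""
    digest = hashlib.sha256(message["body"]).hexdigest()
    if digest != message["sha256"]:
        raise ValueError("integrity check failed: payload was altered")
    return json.loads(message["body"])


# Hypothetical pre-processed frame sent by an edge node.
msg = package({"car_id": 7, "t": 12.5, "channels": [12.5, 0.8, 3.1]})
print(receive(msg))
```

A plain digest only detects accidental corruption. Protecting against deliberate tampering would need a keyed scheme such as an HMAC or a signed transport, which is outside the scope of this sketch.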

The proposed CNN architecture module

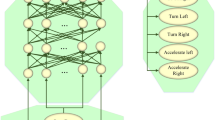

The Convolutional Neural Network (CNN) module plays a central role in the cloud server, serving as the core machine learning model for processing large-scale sensor data and generating control commands for the vehicle. Unlike traditional models such as Long Short-Term Memory (LSTM) networks, which excel in sequential time-series prediction, or Transformer-based architectures, which are computationally intensive for real-time edge processing, CNNs are particularly well-suited for sensor fusion tasks involving spatially correlated features, such as LiDAR and camera-based perception. The justification for selecting CNN over other deep learning models is based on its superior ability to capture spatial hierarchies in sensor data, making it effective for multi-modal data fusion. Moreover, CNNs offer computational efficiency on edge devices compared to recurrent architectures like LSTMs, which require sequential dependencies, and Transformers, which demand high memory and processing power. Figure 3 illustrates the proposed CNN architecture, which is trained on data from multiple car sensors (LiDAR, Radar, GPS, IMU, and Ultrasonic) to generate control output vectors for vehicle navigation. The CNN model processes multi-channel sensor inputs through optimized convolutional layers, extracting spatial and temporal patterns to enhance trajectory prediction. The selection of CNN parameters, including filter sizes, depth, activation functions, and pooling strategies, has been carefully designed based on empirical analysis. Additionally, a comparative study with other architectures such as ResNet, MobileNet, and LSTMs will be incorporated to further validate the robustness of the proposed model. By leveraging CNNs for feature extraction and decision-making in real-time driving scenarios, the proposed system ensures efficient navigation while maintaining computational feasibility for edge-based deployment in smart vehicles.

The CNN is composed of several units that together provide input encoding, feature extraction, hierarchical representation learning, and decision-making:

Input Layer: The input layer accepts the data produced by the car sensor unit. Data from laser, radar, sonar, GPS, video cameras, Bluetooth, Wi-Fi, etc., contribute to this multidimensional input, which the input layer encodes into a format the network can process. Feature Layer: The encoded data are then passed to the feature layer of the CNN in the form of pixels, which the subsequent layers operate on.

Convolutional layers (Conv1 and Conv2)

Following the input layer, two convolutional layers, Conv1 and Conv2, operate in parallel. Each receives the output of the input layer and applies a set of learnable filters (kernels) to extract hierarchical representations via convolution. This produces two feature maps that capture the learned patterns and correlations in the input data. The outputs are then passed to the next layers.

Pooling layer 1

In Pooling Layer 1, the outputs of Conv1 and Conv2 are combined. Pooling operations (often max pooling) are then performed to halve the spatial dimensions of the feature maps while retaining the most salient feature activations. This serves the dual purpose of reducing computational complexity and controlling overfitting.
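The parallel convolution and pooling steps described above can be sketched on a one-dimensional signal. This is a minimal illustration, not the authors' implementation: the kernel values, the use of ReLU after each convolution, the "valid" (no padding) convolution, and the treatment of "combining" as stacking the two branches as channels are all assumptions, since the text does not specify them.

```python
from typing import List

Signal = List[float]


def conv1d(signal: Signal, kernel: Signal) -> Signal:
    """Valid (no padding) 1-D cross-correlation followed by ReLU."""
    k = len(kernel)
    out = []
    for start in range(len(signal) - k + 1):
        window = signal[start:start + k]
        activation = sum(w * x for w, x in zip(kernel, window))
        out.append(max(0.0, activation))
    return out


def max_pool1d(signal: Signal, size: int = 2) -> Signal:
    """Non-overlapping max pooling; a window of 2 halves the length."""
    return [max(signal[i:i + size])
            for i in range(0, len(signal) - size + 1, size)]


def parallel_block(signal: Signal, kernel_a: Signal, kernel_b: Signal) -> List[Signal]:
    """Two parallel conv branches, stacked as channels, each then pooled."""
    branches = [conv1d(signal, kernel_a), conv1d(signal, kernel_b)]
    return [max_pool1d(branch) for branch in branches]


# Hypothetical sensor trace and two hand-picked kernels (difference, average).
trace = [0.0, 1.0, 3.0, 2.0, 5.0, 4.0, 1.0, 0.0, 2.0]
pooled = parallel_block(trace, [1.0, -1.0], [0.5, 0.5])
```

Each branch produces 8 activations from the 9-sample trace, and pooling with a window of 2 reduces each to 4 values, which is the halving the text refers to.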

Convolutional layers (Conv3 and Conv4)

The feature maps from Pooling Layer 1 are fed in parallel into two further convolutional layers, Conv3 and Conv4. As with Conv1 and Conv2, these layers generate feature maps by convolving the pooled representations. The resulting features are kept at a comparable scale but represent a higher level of abstraction: the features produced by Conv3 and Conv4 are more abstract and complex than those of the earlier layers.

Concatenation of outputs in pooling layer 2

Outputs of Conv3 and Conv4 are concatenated in Pooling Layer 2, and the process of pooling is performed to reduce the spatial dimensions significantly.

Fully connected layers (FC1 and FC2)

The feature vector from Pooling Layer 2 is flattened and passed to two fully connected layers, FC1 and FC2. These layers apply nonlinear transformations to the flattened feature vector \(L_0\):

\(L_1 = \text{ReLU}(\text{FC1}(L_0) + b_{\text{FC1}})\)

\(L_2 = \text{ReLU}(\text{FC2}(L_1) + b_{\text{FC2}})\)

Here FC1 and FC2 are the two fully connected layers, and \(b_{\text{FC1}}\) and \(b_{\text{FC2}}\) are their bias terms. Through the convolutional and fully connected layers, the network learns the feature vectors \(L_0\), \(L_1\) and \(L_2\), which enable it to map complex relationships in the data to the network output. FC1 and FC2 act as the decision-making layers: they integrate the learnt features and produce control vectors for autonomous vehicle control.
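The two fully connected transformations can be written out directly. The sketch below assumes each FC layer is a plain matrix multiplication followed by a bias and ReLU; the weight and bias values are hypothetical and chosen only so that the arithmetic is easy to follow.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    """Element-wise max(0, x)."""
    return [max(0.0, x) for x in v]


def dense(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Affine map W x + b, with one row of `weights` per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def fc_head(l0: Vector, w1: Matrix, b1: Vector,
            w2: Matrix, b2: Vector) -> Tuple[Vector, Vector]:
    """L1 = ReLU(FC1(L0) + b_FC1), L2 = ReLU(FC2(L1) + b_FC2)."""
    l1 = relu(dense(w1, b1, l0))
    l2 = relu(dense(w2, b2, l1))
    return l1, l2


# Hypothetical flattened feature vector and layer parameters.
l0 = [1.0, -2.0, 0.5]
w1 = [[1.0, 0.0, 2.0], [-1.0, 1.0, 0.0]]
b1 = [0.0, 1.0]
w2 = [[0.5, 1.0], [1.0, 0.0], [-1.0, 0.0]]
b2 = [0.0, -1.0, 0.5]
l1, l2 = fc_head(l0, w1, b1, w2, b2)
```

With these values the second unit of FC1 and the third unit of FC2 have negative pre-activations, so ReLU sets them to zero.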

Output layer

The output of FC2, which builds on the representations learned by the two preceding hidden layers, is the vector of control commands at the system's output (the 'decoder'). The output layer uses this vector to navigate the vehicle via steering, throttle control, braking, collision avoidance and adaptive cruise control.

Table 2 lists the input as sensor data with dimensions N × T × C, where N is the batch size, T is the number of time steps or readings, and C is the number of channels or features in the sensor data; F1, F2, F3 and F4 are the numbers of filters in Conv1, Conv2, Conv3 and Conv4, respectively.
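To make the dimensions concrete, the following sketch propagates an N × T × C input through the layer sequence described above. It assumes that the convolutions preserve the time dimension ("same" padding), that parallel branches are concatenated along the channel axis, and that each pooling layer uses a window of 2. The filter counts and FC sizes in the example call are hypothetical, not values taken from Table 2.

```python
from typing import Dict, Tuple


def cnn_output_shapes(n: int, t: int, c: int,
                      f1: int, f2: int, f3: int, f4: int,
                      fc1_units: int, fc2_units: int) -> Dict[str, Tuple[int, ...]]:
    """Return the tensor shape after each stage of the described architecture."""
    shapes: Dict[str, Tuple[int, ...]] = {"input": (n, t, c)}
    shapes["conv1+conv2"] = (n, t, f1 + f2)   # parallel branches concatenated
    t = t // 2                                # Pooling Layer 1 halves T
    shapes["pool1"] = (n, t, f1 + f2)
    shapes["conv3+conv4"] = (n, t, f3 + f4)
    t = t // 2                                # Pooling Layer 2 halves T again
    shapes["pool2"] = (n, t, f3 + f4)
    shapes["flatten"] = (n, t * (f3 + f4))
    shapes["fc1"] = (n, fc1_units)
    shapes["fc2"] = (n, fc2_units)
    return shapes


# Hypothetical sizes: batch of 32, 100 time steps, 5 sensor channels.
example = cnn_output_shapes(32, 100, 5, 16, 16, 32, 32, 64, 5)
```

Under these assumptions the flattened vector fed to FC1 has T/4 × (F3 + F4) entries per sample.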

a. Input Data: The incoming sensor data is processed by the CNN, which identifies patterns and objects and learns about the vehicle's environment. A typical CNN has a large number of layers, each applying convolutional filters to visual and spatial (i.e., multidimensional) data in order to make sense of noisy inputs.

b. Control Vector Generation: Based on its assessment, the CNN generates control vectors that direct the vehicle's actions. These control vectors consist of commands for steering, throttle, braking, obstacle avoidance and adaptive cruise control. Mathematically, the CNN transforms the input data matrix \(\mathbf{D}_{\text{edge}}(t)\) into the control vector \(V(t)\):

\(V(t) = f_{\text{CNN}}(\mathbf{D}_{\text{edge}}(t); W, b)\)

where \(f_{\text{CNN}}\) is the function computed by the CNN, W represents the network weights, and b denotes the biases.
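One way to picture the control vector is as a small record of bounded commands derived from the raw network outputs. The sketch below is purely illustrative: the field order, the value ranges (steering in [-1, 1], all other commands in [0, 1]) and the use of tanh and sigmoid squashing are assumptions introduced here, not details given in the paper.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ControlVector:
    steering: float            # assumed range [-1, 1]
    throttle: float            # assumed range [0, 1]
    brake: float               # assumed range [0, 1]
    obstacle_avoidance: float  # assumed range [0, 1]
    cruise_control: float      # assumed range [0, 1]


def _sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def to_control_vector(raw: Sequence[float]) -> ControlVector:
    """Map five raw network outputs to bounded, named commands."""
    if len(raw) != 5:
        raise ValueError("expected five raw outputs")
    steering = math.tanh(raw[0])
    throttle, brake, avoid, cruise = (_sigmoid(x) for x in raw[1:])
    return ControlVector(steering, throttle, brake, avoid, cruise)


neutral = to_control_vector([0.0, 0.0, 0.0, 0.0, 0.0])
```

Bounding the outputs in this way keeps every command inside a range that an actuator interface could accept, whatever the raw activations are.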

c. Lifelong Learning: The CNN module adapts incrementally as it learns from new data in an iterative, lifelong process. This continuous learning process enables the system to adapt to new conditions and to improve its decision-making accuracy.

Cloud transmitter

The cloud transmitter takes the learned control vectors and sends them back to the edge sensor nodes. It provides a communication channel that can guarantee the delivery of control instructions from the cloud to the vehicles. The main tasks of the cloud transmitter are:

a. Control Vector Transmission: Sends the control vectors V(t) generated by the CNN module to the corresponding edge sensor nodes so that each vehicle receives the command it needs to carry out autonomous operation.

b. Communication Management: Manages the communication protocols to maintain a stable and secure connection with the edge sensor nodes, to keep latency low, and, crucially, to make sure that control instructions are never lost in transit.

c. Feedback Loop: Supports a feedback loop in which acknowledgement signals returned from the edge nodes confirm that the control vectors have been both delivered and implemented. Let \(\mathbf{V}_{\text{cloud}}(t)\) denote the control vector relayed from the cloud server to the edge node at time t, and \(\mathbf{V}_{\text{edge}}(t)\) the control vector relayed from the edge node to the individual car at time t.

The mathematical equations for these control vectors can be represented as:

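a. Control vector relayed from the cloud server to the edge node: \(\mathbf{V}_{\text{cloud}}(t) = [v_1(t), v_2(t), \ldots, v_M(t)]\)

b. Control vector relayed from the edge node to the individual car: \(\mathbf{V}_{\text{edge}}(t) = [v_1(t), v_2(t), \ldots, v_N(t)]\)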

Here \(v_i(t)\) represents the ith control command at time t, M is the number of control command signals relayed from the cloud server to the edge node, and N is the number of control command signals relayed from the edge node to the individual car. These equations describe how the autonomous vehicles are remotely controlled and coordinated, first between the cloud server and the edge node and then between the edge node and the individual car. By relying on the computing power and machine learning capabilities of the cloud server, the smart car driving system is able to make sophisticated and accurate driving decisions. Because the models of the driving decision process and the real-time driving status are all deployed in the cloud server, this centralised processing approach is intended to support driving with high levels of safety, efficiency, and adaptation to changing, complex driving environments.
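The cloud-to-edge-to-car relay with acknowledgements can be sketched as a small in-memory simulation. The class and function names below (`Car`, `EdgeNode`, `cloud_transmit`) are hypothetical, and the acknowledgement is modelled as a simple boolean; the paper does not describe the actual protocol.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Car:
    car_id: str
    applied: List[float] = field(default_factory=list)

    def receive(self, v_edge: List[float]) -> bool:
        """Apply the relayed commands and return an acknowledgement."""
        self.applied = list(v_edge)
        return True


@dataclass
class EdgeNode:
    cars: Dict[str, Car]

    def relay(self, v_cloud: Dict[str, List[float]]) -> Dict[str, bool]:
        """Forward each car's commands and collect per-car acknowledgements."""
        acks: Dict[str, bool] = {}
        for car_id, commands in v_cloud.items():
            car = self.cars.get(car_id)
            acks[car_id] = car.receive(commands) if car is not None else False
        return acks


def cloud_transmit(edge: EdgeNode, v_cloud: Dict[str, List[float]]) -> List[str]:
    """Send control vectors via the edge node; return cars that did not acknowledge."""
    acks = edge.relay(v_cloud)
    return [car_id for car_id, ok in acks.items() if not ok]


edge = EdgeNode(cars={"car-1": Car("car-1"), "car-2": Car("car-2")})
unacknowledged = cloud_transmit(edge, {
    "car-1": [0.1, 0.6, 0.0],
    "car-2": [-0.2, 0.3, 0.1],
    "car-3": [0.0, 0.0, 1.0],   # not attached to this edge node
})
```

In this toy run the cloud learns that `car-3` never acknowledged, which is the information a retransmission or rerouting policy would act on.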

Sensor cost evaluation and financial viability

To assess the practical feasibility of deploying the proposed IoT-based Smart System at scale, an approximate cost breakdown of the required sensors has been compiled. Table 3 presents the estimated costs of the standard models used in the system as well as identified low-cost alternatives that can help reduce overall expenses. These cost estimates are based on current commercial prices and reflect typical market ranges as of 2024.

The analysis indicates that while premium-grade sensors increase the initial deployment cost, the system’s financial viability can be significantly enhanced by integrating low-cost alternatives, particularly in LiDAR and radar components, without markedly sacrificing system performance. For fleet-level or large-scale deployments, the use of solid-state LiDAR and compact short-range radar modules can substantially reduce costs. Additionally, the modularity of the proposed system enables flexibility in sensor selection based on specific use cases and budget constraints, further supporting scalable and cost-effective implementation.

Compatibility with existing and new vehicles

Modular hardware kits with suitable sensor packages and external edge computing units make it possible to upgrade current cars. Standard car communication interfaces such as OBD-II ports or CAN buses can be used to install these kits with little intervention. Retrofitting does pose some challenges, such as space limitations, wiring complexity, and calibration of the sensors to the vehicle's control systems, but the modular design reduces integration complexity and permits progressive adoption. Sensor compatibility and edge node processing power are the main hardware prerequisites. The system supports widely used sensor types, including GPS, LiDAR, radar, and ultrasonic sensors, which enables integration with several current sensor platforms. Nonetheless, the system performs best with sensors that satisfy the minimum accuracy and update-rate criteria of the system specification.

Experimental setup: IoT-based smart car driving system

To assess the performance of the intended architecture and validate the potential efficiency of the Smart System, the suggested IoT-based Smart System model was simulated in the MATLAB 2023 environment. MATLAB 2023 was chosen because of its strong computational capabilities and extensive toolbox offerings for modeling and simulating complex systems. The model was simulated using toolboxes including Simulink, the Image Processing Toolbox, and the Deep Learning Toolbox, which covered sensor data processing, control algorithms, and machine learning components.

The simulation also included settings for communication coverage range and edge node accessibility to address deployment issues. Based on the tested communication protocols (Wi-Fi 6 and 5G), the maximum dependable operational distance between the vehicle and the edge node under ideal conditions in urban and suburban settings was found to be around 200–300 m. Beyond this range, the system's fallback mechanisms take over, relying on onboard inference until a connection is re-established. This coverage information was incorporated into the simulation to evaluate the system's behavior at different proximity levels and to ensure that realistic deployment constraints are understood. Details of the simulation system are shown in Table 4.
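The range-based fallback can be expressed as a simple decision rule. The sketch below assumes planar coordinates in metres, takes 300 m (the upper end of the reported 200–300 m range) as the cut-off, and uses hypothetical names (`select_inference_path`, `link_up`); the actual fallback logic is not detailed in the text.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

# Assumed cut-off: upper end of the 200-300 m dependable range reported above.
MAX_RELIABLE_RANGE_M = 300.0


def distance_m(a: Point, b: Point) -> float:
    """Euclidean distance between two planar positions in metres."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def select_inference_path(vehicle_xy: Point, edge_xy: Point, link_up: bool,
                          max_range_m: float = MAX_RELIABLE_RANGE_M) -> str:
    """Return "edge" when the link is usable, otherwise fall back to "onboard"."""
    if link_up and distance_m(vehicle_xy, edge_xy) <= max_range_m:
        return "edge"
    return "onboard"
```

For example, a vehicle 150 m from an edge node with a live link would use the edge path, while the same vehicle at 500 m, or with the link down, would rely on onboard inference.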

Table 5 outlines the parameters and specifications used for training the Convolutional Neural Network (CNN) within the smart car system simulation. To ensure good model performance, substantial preprocessing was applied. Data deduplication was achieved by identifying and deleting near-identical sensor readings and picture frames captured within short time periods (e.g., consecutive frames with minor changes in pixel intensity and sensor values). A time-based thresholding strategy was adopted, in which data acquired within a preset time window (e.g., 100 ms) were evaluated together. If several readings displayed identical or near-identical values inside this window, only the first occurrence was preserved to avoid redundant learning and bias. Missing sensor readings were imputed using linear interpolation, ensuring temporal consistency and eliminating false discontinuities. Outliers were identified using a mix of Z-score analysis and box plot-based filtering, so that extreme values did not disturb model learning. Min-Max scaling was used to normalize sensor readings and pixel intensities for consistent data representation. Feature engineering played a critical role in boosting predictive power: key characteristics such as velocity, acceleration, and steering angle derivatives were calculated to enhance real-time trajectory prediction. The CNN was trained using the Adam optimizer with an adjustable learning rate of 0.0001 to support steady convergence. Early stopping was introduced with a patience of five epochs to limit overfitting and support generalization to unseen driving conditions. These preprocessing methods jointly increased model resilience, reducing training bias and improving real-time decision-making accuracy.
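The preprocessing steps above can be sketched for a single scalar sensor channel. This is a minimal illustration under stated assumptions: the tolerance for "near-identical" values, the Z-score limit of 3, the 1.5 × IQR box-plot fences, the rule that a value is dropped if either outlier test flags it, and the nearest-value fill for gaps at the ends of a series are all choices made here, not parameters reported in the paper.

```python
import statistics
from typing import List, Optional, Tuple

Reading = Tuple[float, float]  # (timestamp in seconds, value)


def deduplicate(readings: List[Reading], window_s: float = 0.1,
                tol: float = 1e-3) -> List[Reading]:
    """Keep the first of any near-identical readings within `window_s` seconds."""
    kept: List[Reading] = []
    for t, value in readings:
        if kept:
            last_t, last_value = kept[-1]
            if t - last_t <= window_s and abs(value - last_value) <= tol:
                continue  # redundant reading inside the time window
        kept.append((t, value))
    return kept


def interpolate_missing(values: List[Optional[float]]) -> List[float]:
    """Fill None entries by linear interpolation between known neighbours."""
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("need at least one known reading")
    filled: List[float] = []
    for i, v in enumerate(values):
        if v is not None:
            filled.append(v)
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled.append(values[right])       # leading gap: nearest known value
        elif right is None:
            filled.append(values[left])        # trailing gap: nearest known value
        else:
            frac = (i - left) / (right - left)
            filled.append(values[left] + frac * (values[right] - values[left]))
    return filled


def remove_outliers(values: List[float], z_max: float = 3.0,
                    iqr_factor: float = 1.5) -> List[float]:
    """Drop values flagged by either the Z-score test or the box-plot fences."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    q1, _, q3 = statistics.quantiles(values, n=4)
    low, high = q1 - iqr_factor * (q3 - q1), q3 + iqr_factor * (q3 - q1)

    def keep(v: float) -> bool:
        z_ok = stdev == 0 or abs(v - mean) / stdev <= z_max
        return z_ok and low <= v <= high

    return [v for v in values if keep(v)]


def min_max_scale(values: List[float]) -> List[float]:
    """Rescale values linearly to [0, 1]; a constant series maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]
```

The functions are meant to be applied in the order the text describes: deduplication, imputation, outlier filtering, then scaling.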

Fig. 4 shows the hierarchical network architecture for edge IoT-based smart car driving control. The simulated area is a rectangle of 100 × 100 units, and the network consists of 100 cars with IoT nodes, 5 edge nodes, and 1 central cloud. Each car's IoT node communicates with the closest edge node, and the edge node then transmits the collected information to the central cloud node for processing and decision-making. This hierarchical topology can improve data processing efficiency and helps to distribute control over all the smart cars in real time during driving, with low latency and communication overhead.
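The topology described above (100 cars, 5 edge nodes, a 100 × 100 area, each car attached to its closest edge node) can be reproduced in a few lines. The uniform random placement of cars and edge nodes and the fixed seed are assumptions made for this sketch; the paper's simulation was built in MATLAB and its placement scheme is not stated.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def build_topology(n_cars: int = 100, n_edges: int = 5, area: float = 100.0,
                   seed: int = 0) -> Tuple[List[Point], List[Point]]:
    """Place cars and edge nodes uniformly at random in an area x area square."""
    rng = random.Random(seed)
    cars = [(rng.uniform(0, area), rng.uniform(0, area)) for _ in range(n_cars)]
    edges = [(rng.uniform(0, area), rng.uniform(0, area)) for _ in range(n_edges)]
    return cars, edges


def assign_to_nearest_edge(cars: List[Point], edges: List[Point]) -> List[int]:
    """For each car, return the index of the closest edge node."""
    return [min(range(len(edges)), key=lambda j: math.dist(car, edges[j]))
            for car in cars]


cars, edges = build_topology()
assignment = assign_to_nearest_edge(cars, edges)
```

Counting how many cars map to each edge index gives a quick view of how evenly the load is spread across the five edge nodes.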

At this stage, data are transported and processed starting from the vehicle's sensor unit, which comprises multiple sensors. Data received from the sensors trigger the smart-car system, and the data can be processed at various nodes to reach a decision. Digital data could also be recorded through a blockchain ledger system and forwarded to the respective edge nodes. Figure 5 shows a simplified illustration of the structure of a smart car system that integrates smart sensors and artificial intelligence to enhance the car's navigational capabilities and efficiency and to mitigate risks (e.g., collision with other vehicles on the road). LiDAR data is received from the sensor on the front bumper. The LiDAR sensor constructs a spatial reflective map of the geometry of the surroundings, e.g., detecting vehicles, pedestrians, or other objects to the left, right, front left, and front right of the vehicle. Radar data, on the other hand, are derived from directional transmitters in the car's front bumper, whose returned signals carry the relevant speed and velocity readings (in centimetre-based units), e.g., for objects toward the front of the vehicle. At the edge node, both datasets are combined, making this the point at which raw sensor data become usable behavioural information. As data accumulate at the edge, they are stacked and transmitted for processing.

In Fig. 6, the edge node marks the entry point of GPS and IMU data and is pivotal to the operation of the smart car system. GPS data is the primary source of geographical position information, providing the latitude and longitude of the vehicle over time; these are the key inputs for navigation algorithms and the basis for localization and path tracking, as shown in Fig. 2. IMU data, on the other hand, provides dynamic information about the vehicle's movement, namely the accelerations along the X and Y axes, as shown in Fig. 6. With this motion information, the smart car can detect the vehicle's dynamic movement and generate appropriate control signals to improve motion control. By combining the two kinds of data (spatial and motion), the edge node acts as the point at which the data streams are fused. It is therefore where the streams work together to support accurate trajectory tracking, motion control, obstacle avoidance and safety.

Fig. 7 shows the dynamic behaviour of the smart car. The graph describes how the vehicle moved, how fast it travelled, and in which direction it steered automatically, with coloured lines tracing the car's self-control over successive time intervals. The two plotted parameters, velocity (the speed of the car in m/s) and steering angle (corresponding to the driver's hand movement on the wheel), are the main parameters for smart car driving. In manual driving, both depend on the driver's judgement of the conditions needed to reach the destination, whether these relate to speed, the road itself, or the time of travel. These points are discussed with reference to the chart in the context of smart car control under smart transportation. The figure indicates that the interaction between vehicle speed and steering angle is very important: the smart car must not only reproduce human steering behaviour but also keep the vehicle stable. The plotted data also suggest that a similar smart car will capture images from every side with high colour and light levels.

Figure 8 illustrates major smart car performance and operational environment factors in detail. Subplot 1 shows the status of the brake and accelerator pedals over time, demonstrating the subtle and flexible control input (human-driven and autonomously controlled) that keeps the vehicle at the speed the driver desires while fine-tuning vehicle responsiveness and system output. Subplot 2 shows temperature and humidity over time; these are essential environmental conditions with a significant impact on road grip, vehicle power output and sensor reliability. These factors are monitored and analysed so that the smart car system can adapt to the changing environment and adopt the right driving strategy to maximise safety and reliability under dynamic system control.

Fig. 9 (a) CNN training iteration vs. loss. (b) CNN training iteration vs. RMSE.

Figure 9 illustrates the performance of the CNN during training for the IoT edge-enabled smart car driving system. It shows the trends of the loss function and the Root Mean Square Error (RMSE) over training iterations. Figure 9(a) shows how the performance of the CNN model improves as training progresses: the loss function decreases over training iterations, which reflects the model's capacity to learn and optimise its parameters, since the loss measures the error between the predicted and actual control parameters.
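The training setup described earlier uses early stopping with a patience of five epochs, and a loss curve like the one in Fig. 9(a) is what such a rule monitors. The sketch below shows the rule in isolation; the loss values are invented for illustration, and the `min_delta` parameter is an optional extra that the paper does not mention.

```python
from typing import List


def early_stopping_epoch(val_losses: List[float], patience: int = 5,
                         min_delta: float = 0.0) -> int:
    """Return the index of the epoch at which training would stop.

    Training stops once the loss has failed to improve on its best value
    for `patience` consecutive epochs; otherwise it runs to the last epoch.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1


# Hypothetical loss curve: best value at epoch 3, then five epochs without improvement.
losses = [3.0, 2.5, 2.2, 2.11, 2.2, 2.3, 2.15, 2.12, 2.2, 2.0]
stop_at = early_stopping_epoch(losses)
```

Here training halts at epoch index 8, so the later value of 2.0 is never reached; this is the usual trade-off between saving computation and possibly missing a late improvement.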

Figure 9(b) plots RMSE against training iterations. It characterises the performance of the CNN model in predicting the control vectors used in the smart car driving system: the RMSE tends to decrease over the iterations. This trend indicates that the CNN model handles real-time data from multiple sensors, including LiDAR, radar, GPS and IMU, with increasing accuracy. Taken together, the subfigures show that the CNN model trains effectively, since both its loss and its RMSE tend to decrease over iterations. This decrease demonstrates the learning and convergence capabilities of the CNN model, which supports accurate, safe and efficient smart car driving.
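For reference, RMSE is the square root of the mean squared difference between predicted and actual values. A minimal sketch, using made-up numbers rather than the paper's data:

```python
import math
from typing import Sequence


def rmse(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Root Mean Square Error between two equal-length sequences."""
    if len(predicted) != len(actual) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Every prediction is off by exactly 3 units, so the RMSE is 3.
example_rmse = rmse([3.0, 3.0, 3.0, 3.0], [0.0, 0.0, 0.0, 0.0])
```

Because the errors are squared before averaging, a few large deviations raise the RMSE more than many small ones, which is why it is a common choice for trajectory and control prediction.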

Figure 10 presents a direct comparison of steering control parameters under various driving conditions, further illustrating the effectiveness of the smart driving algorithms. The line graph distinguishes three cases: manual driving, smart driving with variation capability, and a reference condition without steering changes. It indicates that the smart driving system tends to track natural driving behaviour more precisely and more responsively than manual driving.

Figure 11 explores throttle control parameters under different driving scenarios, with the data showing the subtle distinctions between scenarios that are crucial for evaluating smart driving planning. The figure shows throttle control for three driving scenarios: manual driving, smart driving with noise, and a stable reference with steady throttle control.

Moreover, the plotted line indicates that throttle control under smart driving is more stable than in the other two cases, which use simple throttle control. This suggests that smart driving can adapt the throttle to respond to the vehicle's situation, thus obtaining more efficient results in a dynamic driving environment. It can therefore be concluded from the plot that smart driving technology offers an advantage in saving time during driving.

Figure 12 provides comprehensive information on the distinguishing characteristics of brake control in smart driving and manual driving systems. The bar graph and the line graph present the differences between manual driving systems, which brake steadily, and the smart driving system, which brakes where and whenever necessary.

The line graph shows that a smart driving system brakes where and whenever necessary, whereas a manual driving system employs a consistent braking approach. In particular, a smart driving system is more adaptive: it optimises braking manoeuvres when necessary by adjusting the braking applied to the dynamic road conditions. In essence, a smart driving system not only applies the brakes appropriately when needed but also enhances safety in the event of a potential collision. The bar graph, meanwhile, contrasts the distinct features of smart driving and manual driving.

In conclusion, the chart compares the driving characteristics of smart driving and manual driving and explains their functions and differences. As depicted in Fig. 12, by applying the brakes whenever necessary, a smart driving system demonstrates its benefits in terms of collision avoidance and driving safety compared with typical manual driving.

The proposed CNN-based Edge Computing System was evaluated through five independent experiments, focusing on three critical performance metrics: loss, RMSE (Root Mean Square Error), and CNN accuracy, as shown in Fig. 13. These experiments aimed to assess the system's ability to process real-time sensor data, generate precise control commands, and enhance autonomous driving efficiency. The loss function, which indicates model optimization, showed a steady decline from 2.98 in Experiment 1 to 2.11 in Experiment 5, confirming improved learning and reduced errors. Similarly, RMSE, representing trajectory prediction accuracy, decreased from 22.45 to 15.12, highlighting enhanced vehicle control and stability. The CNN model accuracy, crucial for sensor data classification, exhibited a positive trend, increasing from 85.23% to 92.34%, demonstrating the system's ability to adapt and improve decision-making over successive trials. These results support the efficacy of the proposed system in real-time vehicle navigation, aiming at accurate, safe, and energy-efficient autonomous driving. The dataset used was synthetically generated to reflect real-world driving conditions, and future work will focus on real-world implementation and benchmarking against traditional models such as Kalman filters and reinforcement learning-based control systems to further validate its feasibility in dynamic environments.
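The endpoint figures quoted above can be turned into relative changes with a short calculation. The percentages below are derived here from the reported Experiment 1 and Experiment 5 values; they are not figures stated by the authors.

```python
def percent_reduction(first: float, last: float) -> float:
    """Relative reduction from `first` to `last`, in percent of `first`."""
    return (first - last) / first * 100.0


loss_reduction = percent_reduction(2.98, 2.11)    # roughly 29 %
rmse_reduction = percent_reduction(22.45, 15.12)  # roughly 33 %
accuracy_gain_points = 92.34 - 85.23              # about 7.1 percentage points
```

Expressed this way, loss and RMSE fall by similar proportions across the five experiments, while accuracy rises by about seven percentage points.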

Conclusion

This study presents an IoT edge-enabled SCADA architecture for smart car driving management, integrating advanced sensor networks and deep learning-based control mechanisms. By leveraging edge computing, machine learning (ML), and convolutional neural networks (CNNs), the proposed system demonstrates significant advancements in real-time vehicle control, trajectory accuracy, and collision avoidance. Theoretically, this research contributes to intelligent transportation systems by refining predictive control strategies based on multi-sensor data fusion. It highlights how CNN-driven control vector generation improves actuation precision across varying driving conditions, providing an optimized framework for smart vehicle navigation. From a practical standpoint, the proposed architecture offers several advantages over traditional vehicle control systems. The fusion of LiDAR, radar, GPS, and IMU data enables more accurate and responsive decision-making, resulting in improved throttle, brake, and steering control. The system achieved a Root Mean Square Error (RMSE) of 15.123, a loss value of 2.114, and enhanced trajectory smoothness, demonstrating superior control precision compared to traditional autonomous driving models. The ability to dynamically adjust to real-time environmental changes enhances safety, efficiency, and driving adaptability. Additionally, the use of edge computing for low-latency processing ensures rapid response times, making the approach viable for real-world autonomous and semi-autonomous vehicle applications. Future research should explore several key directions. First, implementing the proposed system in a hardware-in-the-loop (HIL) or real-world testbed would help validate its effectiveness in practical driving scenarios. Second, integrating reinforcement learning with CNN-based control could further enhance adaptive decision-making by allowing the system to learn from dynamic driving environments. 
Lastly, extending the system to incorporate V2X communication and swarm intelligence could enable cooperative vehicle behavior, improving safety and efficiency in connected autonomous driving networks. By addressing these areas, future work can build upon the foundation established in this research, advancing the capabilities of IoT-enabled smart driving systems toward full-scale real-world deployment.

Data availability

Data available on request from the Corresponding author (Majjari Sudhakar).

References

BOUREKKADI, S. & INTERNET OF THINGS (IOT) APPLICATIONS IN THE AUTOMOTIVE SECTOR. J. Theoretical Appl. Inform. Technol. 102, 8 (2024).

Rajasekaran, Umamaheswari, A., Malini & Murugan, M. Artificial intelligence in autonomous Vehicles—A survey of trends and challenges. Artificial Intell. Auton. Vehicles 1, 1–24 (2024).

Yi, X., Ghazzai, H. & Massoud, Y. A LiDAR-assisted smart car-following framework for autonomous vehicles. In 2023 IEEE International Symposium on Circuits and Systems (ISCAS), pp. 1–5. IEEE, (2023).

Tippannavar, S. S. & Yashwanth, S. D. Self-driving Car for a smart and safer Environment–A review. J. Electron. 5 (3), 290–306 (2023).

Stähler, J., Markgraf, C., Pechinger, M. & David, W. G. High-Performance perception: A camera-based approach for smart autonomous electric vehicles in smart cities. IEEE Electrification Magazine. 11 (2), 44–51 (2023).

Das, S. & Kayalvihzi, R. Advanced multi location IoT enabled smart Car parking system with intelligent face recognition. Int. J. Res. Eng. Sci. Manage. 7 (1), 5–10 (2024).

Prathyusha, M. R. and Biswajit Bhowmik. Iot-enabled smart applications and challenges. In 2023 8th International Conference on Communication and Electronics Systems (ICCES), pp. 354–360. IEEE, (2023).

Songhorabadi, M. & Rahimi, M. AmirMehdiMoghadamFarid, and Mostafa Haghi kashani. Fog computing approaches in IoT-enabled smart cities. J. Netw. Comput. Appl. 211, 103557 (2023).

Rammohan, A. Revolutionizing intelligent transportation systems with cellular Vehicle-to-Everything (C-V2X) technology: current trends, use cases, emerging technologies, standardization bodies, industry analytics and future directions. Vehicular Communications, 43, 100638 (2023).

Jin, H. & Zhang, H. Smart vehicle driving behavior analysis based on 5G, IoT and edge computing technologies. Applied Math. Nonlinear Sciences 9 (1) (2024).

Patil, V. and Apurv Kulkarni. Edge Computing-Adaptation and Research. Vidhyayana-An International Multidisciplinary Peer-Reviewed E-Journal-ISSN 2454–8596 8, no. si7 : 736–746. (2023).

Chaudhuri, A. Smart traffic management of vehicles using faster R-CNN based deep learning method. Sci. Rep. 14 (1), 10357 (2024).

Singh, P., Sharma, R., Tomar, Y., Kumar, V. & Singh, N. Exploring CNN for driver drowsiness detection towards smart vehicle development. In Revolutionizing Industrial Automation Through the Convergence of Artificial Intelligence and the Internet of Things, (eds. Mishara D. U., & Sharma, S)213–228. IGI Global, (2023).

Triki, N., Karray, M. & Ksantini, M. A real-time traffic sign recognition method using a new attention-based deep convolutional neural network for smart vehicles. Appl. Sci. 13 (8), 4793 (2023).

Nair, S., BEYOND THE CLOUD-UNRAVELING THE BENEFITS OF, EDGE COMPUTING IN IOT & Int. J. Comput. Eng. Technol. 14 : 91–97. (2023).

Biswas, A. & Hwang-Cheng, W. Autonomous vehicles enabled by the integration of IoT, edge intelligence, 5G, and blockchain. Sensors 23, no. 4 : 1963. (2023).

Lakhan, A., Grønli, T. M., Bellavista, P. & Memon, S. Maher alharby, and orawitthinnukool. IoT workload offloading efficient intelligent transport system in federated ACNN integrated cooperated edge-cloud networks. J. Cloud Comput. 13 (1), 79 (2024).

Hazarika, A., Choudhury, N. & Nasralla, M. M. Sohaib Bin Altaf khattak, and Ikram Ur rehman. Edge ML technique for smart traffic management in intelligent transportation systems. IEEE Access, 12, 25443–25458 (2024).

Jacob, C. M. et al. An IoT based Smart Monitoring System for Vehicles, 2020 4th International Conference on Trends in Electronics and Informatics (ICOEI)(48184), Tirunelveli, India, pp. 396–401 (2020).

Praveena, M. D. et al. Nandini. Smart Car based on IOT. In Journal of Physics: Conference Series, vol. 1770, no. 1, p. 012013. IOP Publishing, (2021).

Selvarajan, S. A comprehensive study on modern optimization techniques for engineering applications. Artif. Intell. Rev. 57, 194. https://doi.org/10.1007/s10462-024-10829-9 (2024).

Kshirsagar, P. R. et al. Probabilistic framework allocation on underwater vehicular systems using hydrophone sensor networks water 14, no. 8: 1292. (2022). https://doi.org/10.3390/w14081292

Selvarajan, S. et al. RETRACTED ARTICLE: Obstacles Uncovering system for slender pathways using unmanned aerial vehicles with automatic image localization technique. Int. J. Comput. Intell. Syst. 16, 164. https://doi.org/10.1007/s44196-023-00344-0 (2023).

Nadana Ravishankar, T. et al. White shark optimizer with optimal deep learning based effective unmanned aerial vehicles communication and scene classification. Sci. Rep. 13, 23041. https://doi.org/10.1038/s41598-023-50064-w (2023).

Madaram, V. et al. Optimal electric vehicle charge scheduling algorithm using war strategy optimization approach. Sci. Rep. 14, 21795. https://doi.org/10.1038/s41598-024-72428-6 (2024).

Author information

Contributions

Data curation, M.S and K.V; Formal analysis, M.S and K.V; Funding acquisition,-Not Applicable-; Methodology, M.S and K.V; Project administration, M.S and K.V; Resources, M.S and K.V; Supervision, M.S and K.V; Writing–original draft, M.S and K.V.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sudhakar, M., Vivekrabinson, K. Enhanced CNN based approach for IoT edge enabled smart car driving system for improving real time control and navigation. Sci Rep 15, 33932 (2025). https://doi.org/10.1038/s41598-025-09805-2

DOI: https://doi.org/10.1038/s41598-025-09805-2