Abstract

Detecting melanoma at an early stage from dermoscopic images is challenging because of the inherent variability of these images. Using dermatology datasets, this study aimed to develop an automated diagnostic system for early skin cancer detection. Existing methods often struggle with diverse skin types, cancer stages, and imaging conditions, which reveals a critical gap in reliability and explainability. The proposed approach addresses this gap with a model that adds Global Average Pooling, Batch Normalization, Dropout, and dense layers with ReLU and Swish activations to improve performance. The proposed model achieved accuracies of 95.23% and 96.48% on two different datasets and performed strongly on the other evaluation metrics, demonstrating its robustness and reliability. Explainable AI techniques, namely Gradient-weighted Class Activation Mapping (Grad-CAM) and Saliency Maps, offered insights into the model’s decision-making process. These advancements can enhance skin cancer diagnostics, provide medical experts with resources for early detection, improve clinical outcomes, and increase acceptance of Deep Learning-based diagnostics in healthcare.


Introduction

Cancer is responsible for a significant number of deaths worldwide1. Skin cancer is a particularly perilous form of cancer because the skin is the largest organ in the body2. The skin protects the body against infections, heat, and UV light3, so cancer that affects it poses a serious threat4. The World Health Organization and the Skin Cancer Foundation report that skin cancer accounts for one-third of all cancer cases and that its incidence continues to rise5,6. Skin cancer occurs when cells divide and grow without control7. New skin cells normally form only when older cells are damaged or die; in cancer, however, cells grow rapidly and in a disorderly way, which is why the condition is recognized as cancer. Melanocytes produce the dark pigments of the skin, which appear primarily black or brown but can also be red, pink, or purple8,9.

Some skin cancers develop in the upper layer of the skin. The skin is composed of three distinct tissues: the epidermis, the dermis, and the hypodermis. The epidermis contains melanocytes, which in these lesions produce melanin at a higher rate than usual10. Four lesion types are commonly distinguished: melanoma (MEL), melanocytic nevi (MN), basal cell carcinoma (BCC), and squamous cell carcinoma (SCC). Among these, melanoma is the most dangerous because of its impact on the body11. Melanocytic lesions are categorized as either benign or malignant12. Common nevi and other benign lesions of the epidermal surface contain melanin, but melanin production is considerably higher in malignant lesions. The most dangerous aspect of melanoma is its tendency to spread to other organs, especially the brain and liver.

According to the American Cancer Society’s estimates for 2024, approximately 100,640 new cases of melanoma are expected to be diagnosed in the United States, with about 8,290 deaths resulting from the disease13. Over the past 15 years, the annual incidence of newly diagnosed invasive melanomas has risen by 46%14. Projections indicate that by 2040, the number of new melanoma cases could increase by more than 50% to approximately 510,000, with deaths rising by nearly 70% to around 96,000 annually15. Early identification of melanoma is essential to reduce its high mortality and to begin treatment as soon as possible. Melanoma differs from many other cancers in that it develops in visible pigmented skin lesions, which must be distinguished from benign moles. Melanocytic nevi appear as pigmented moles whose color varies with skin tone. Moles appear mostly during childhood and early adulthood, and their number continues to increase until the age of 30-40 years. Early identification is critical because it raises the five-year survival rate to 98%16,17.

Clinicians detect melanoma using several techniques, ranging from naked-eye inspection to dermoscopy18. Diagnostic accuracy varies and depends largely on the clinician’s expertise. In addition, manually identifying skin cancer is difficult and burdensome for patients, requiring significant time and financial resources. To address these problems, automated detection using deep learning, particularly Convolutional Neural Networks (CNNs), has become increasingly popular in recent years, owing to its detection performance, speed, and wide use in research: CNN models can detect melanoma with improved efficiency and speed19.

Deep learning can detect complex patterns and can yield better diagnoses than earlier diagnostic techniques. Survival rates exceed 96% when melanoma is detected at an initial stage but fall substantially at late stages. Because early detection is so important, CNN models can greatly improve outcomes by supporting earlier detection20. Transfer learning applies the knowledge that pretrained CNN models acquired while solving one problem to a related problem. In skin disease analysis, this means using neural network models initially trained on extensive datasets and then fine-tuning them for specific tasks such as melanoma identification. Widely used CNN architectures include DenseNet, Inception, ResNet, Xception, and VGG. In this study, we built the proposed model on Xception to detect melanoma at an early stage. The Xception architecture is widely recognized for its computational efficiency: it uses computing resources effectively and handles increased computational load while delivering high performance21. By customizing the Xception model with techniques such as Swish activation, Batch Normalization, and Dropout, we improved its detection ability, resulting in more accurate and reliable detection of early-stage melanoma.

Despite progress in this field, gaps remain in the early detection of melanoma. A major issue is the lack of diversity in existing datasets, which do not accurately represent the range of skin lesions found across diverse populations and settings. This limitation reduces the ability of models to perform well in real-world scenarios. Dermoscopic images also often contain artifacts such as hair, bubbles, and uneven lighting, which existing models do not fully handle22 and which can lead to misdiagnosis. In addition, models that can handle multi-class classification are needed to provide improved diagnosis and more comprehensive diagnostic tools.

To address these gaps, our study focuses on the early detection of melanoma by developing a deep learning model with improved prediction and detection capabilities. We built this model on a robust pretrained base and used two distinct datasets that together include diverse skin tones, lesion types, and imaging conditions. We applied preprocessing techniques, namely artifact removal, contrast enhancement, hair removal, median filtering, and resizing and scaling, to improve image quality. Additionally, the model incorporates Swish activation, Batch Normalization, and Dropout to stabilize training and reduce overfitting. These enhancements lead to higher accuracy and reliability in the detection of early-stage melanoma. Our approach is designed to handle a wide range of image variations, making it a powerful tool for the early and accurate detection of melanoma.

Ultimately, the complexity of assessing melanoma underscores the need for substantial improvements in diagnostic accuracy, and the limitations of existing methods call for novel approaches. Deep learning can standardize and improve the precision of melanoma diagnosis, leading to better patient outcomes. Our study leverages deep learning, two complementary datasets, and a dedicated preprocessing pipeline to tackle these difficulties. The main contributions of this study are as follows:

1. Development of a Proposed Model: We introduce an enhanced version of the Xception model, adapted to identify early-stage melanoma from dermoscopic images. The model integrates Swish activation, Batch Normalization, Dropout layers, and dense layers with L2 regularization to improve performance and reliability.

2. High Diagnostic Accuracy and Precision: The improved model achieves accuracies of 95.23% on Dataset 1 and 96.48% on Dataset 2. These accuracies greatly exceed those of current models, representing a major improvement in automated melanoma diagnosis.

3. Preprocessing Techniques for Better Image Quality: Our approach incorporates extensive preprocessing, including artifact removal, contrast enhancement, hair removal, median filtering, and resizing and scaling. These steps improve the quality of dermoscopic images, ensuring that the model receives high-quality inputs for accurate melanoma identification.

4. Incorporation of Explainable AI Techniques: We integrated Explainable AI (XAI) methods, namely Gradient-weighted Class Activation Mapping (Grad-CAM) and Saliency Maps, into our diagnostic framework. This integration improves the clarity and interpretability of the model’s decisions and the transparency of our findings in medical diagnostics, with the aim of building greater trust and acceptance among clinicians and patients.

5. Robust Evaluation with Diverse Datasets: We used two separate, extensive datasets to train and assess our model, covering a broad range of complexions, lesion types, and imaging settings. This assessment demonstrates the model’s ability to generalize and perform consistently in various real-life situations.

6. Potential for Early Detection and Improved Clinical Outcomes: By enabling precise early diagnosis of melanoma, our methodology can improve treatment effectiveness and patient outcomes. Timely detection enables prompt therapy, minimizing physical and mental strain on patients and potentially decreasing healthcare expenses.

This paper is organized into six sections. The first section introduces the topic, and the second reviews related literature. Section Materials and methods details the methodology used in this study. Section Result Analysis presents and compares the results obtained. Section Discussion discusses the study’s findings, and Section Conclusion provides the conclusions and directions for future research.

Literature review

Recent advancements in automated skin cancer detection have benefited significantly from deep learning and computer vision. A notable contribution is an FCN-based DenseNet framework designed for detecting and classifying skin lesions in dermoscopic images, which demonstrates high accuracy and efficiency. It is complemented by ensemble lightweight deep learning networks that address complex detection backgrounds and lesion features, significantly improving lesion recognition accuracy and segmentation precision23. Intelligent Multilevel Thresholding with Deep Learning (IMLT-DL) methods, which combine pre-processing, segmentation, and classification stages tailored to skin lesion analysis, exemplify other innovative approaches. Skin cancer detection systems that use dermoscopy images to differentiate between normal and cancerous skin areas underline the potential of image processing techniques to enhance early detection24,25. Another significant contribution is non-invasive diagnostic systems capable of distinguishing malignant melanoma from other skin conditions, underscoring the importance of precise diagnostic frameworks26.

The development of DermICNet, an efficient dermoscopic image classification network, emphasizes how neural networks and clinical practice can work together to achieve accurate diagnoses27. Research into the automated identification of skin cancers using dermoscopy has expanded the application of AI in dermatology, with studies demonstrating neural networks for non-contact automatic diagnosis that improve early detection and treatment outcomes28. The successful detection of acral melanoma using CNNs demonstrates the effectiveness of AI in identifying skin cancer subtypes, which is crucial for personalized treatment plans. Decision support systems that help clinicians diagnose melanoma from dermoscopic images illustrate the potential of AI to complement and enhance dermatological expertise. Recent studies of deep learning models for multi-class applications and of CNNs for detecting skin tumors reinforce the argument for adopting these technologies in clinical settings29,30.

The introduction of CNN-based systems for skin cancer cell detection highlights the continuous push towards enhancing diagnostic capabilities by leveraging deep convolutional features and advanced machine learning algorithms. Similarly, the focus on non-invasive diagnostic systems emphasizes the importance of early and accurate detection mechanisms, which can significantly impact treatment outcomes31,32.

Recent studies have expanded the role of deep learning in medical image analysis through diverse architectural designs. One study introduced a MetaFormer-based model that combines convolutional and transformer structures to identify skin cancer from dermoscopic images33. The same authors further proposed a hybrid design using ConvNeXtV2 and focal self-attention, improving the model’s ability to focus on lesion-specific regions under challenging class distributions34. In a related approach, they integrated separable self-attention into a compact ConvNeXtV2 framework to enhance representational efficiency while reducing model complexity35. Attention-based U-Net variants have been applied to segment ischemic stroke lesions, where the attention mechanism contributed to more precise boundary detection in diffusion-weighted imaging36. Transformer-enhanced YOLO models have been investigated for mammographic mass detection, showing how backbone modifications can strengthen object localization even with limited data37. Together, these efforts highlight the adaptability of deep learning architectures across clinical imaging contexts, offering insights applicable to skin lesion analysis38.

A comprehensive review of recent advancements in skin cancer diagnostics demonstrates the transformative capacity of deep learning methods, specifically convolutional neural network (CNN) architectures and machine learning frameworks, to improve diagnostic accuracy and efficiency39. Concurrently, the use of multiple imaging systems for sequential examinations of skin lesions presents a novel approach to melanoma detection, leveraging morphometric and metrological features to enhance early diagnosis40. Image analysis methods for recognizing skin cancer types such as melanoma, using advanced algorithms for feature extraction and classification, further expand the toolkit available for combating skin cancer. They are complemented by neural network-based architectures for the multi-class classification of skin cancer, which show that AI can identify distinct types of skin cancer with high efficiency41,42. The application of neural networks to dermatoscopic images for melanoma identification is another significant step toward harnessing AI in medical imaging, providing a robust platform for the automated inspection and categorization of skin abnormalities. Efforts to segment skin lesions from dermoscopic images with convolutional neural networks emphasize the critical role of precise lesion boundary identification in diagnosis43,44.

A framework that combines multiple features to diagnose skin cancer automatically illustrates the potential of integrating several data processing techniques to achieve a more comprehensive and accurate diagnosis. Additionally, an automated system for detecting skin melanoma that incorporates a melanoma measure derived from entropy features offers a novel diagnostic metric that enhances the interpretability and reliability of AI-driven diagnostics45,46. Work on retrieval-based diagnostic aids that use discriminative features to classify skin lesions highlights continuing innovation in the field, aimed at refining diagnostic aids for better clinical utility. Moreover, an automated multi-label classification model for skin cancers based on deep learning marks a significant advance in accurately categorizing skin cancer types and facilitating targeted treatment strategies47,48. Finally, a comparative analysis of traditional visual analysis and neural network models on a skin cancer dataset sheds light on the evolving landscape of dermatological diagnostics. This comparison underscores the superior diagnostic performance of CNNs, indicating a shift toward more AI-integrated approaches in clinical settings49.

Deep learning has brought significant progress in early-stage melanoma detection, leading to more accurate diagnoses. However, several challenges must still be addressed to achieve more robust performance. Data limitations call for larger and more varied datasets, and images require extensive preprocessing so that models can learn complex patterns and provide more precise diagnoses and better clinical outcomes. Additionally, accurate detection of each lesion class, specifically distinguishing benign from malignant cases, remains critical.

To address these problems and improve early-stage melanoma detection, we used two datasets containing different types of images and lesions. We also implemented several preprocessing steps, including artifact removal, contrast enhancement, hair removal, median filtering, and resizing and scaling, to prepare the images for training. Furthermore, we developed a model with numerous modifications, including GlobalAveragePooling2D, Batch Normalization, multiple Dropout layers, and dense layers with Swish and Rectified Linear Unit (ReLU) activations. The proposed model classifies each class more accurately than existing models, providing better performance and more reliable diagnostic results.

Materials and methods

Dataset overview

For this research, we used two distinct dermatological datasets to train and evaluate our model. By keeping these datasets separate, we aimed to provide a comprehensive analysis that accurately reflects the performance of our model across different data sources.

The Melanoma Cancer Image Dataset50, referred to as Dataset 1, contributed 2597 images to our experiments. This dataset was curated to support dermatological research and computer-aided diagnosis. Each image is 224 \(\times\) 224 pixels, providing consistent input for our deep learning model. The dataset contains a mix of malignant and benign lesions, making it a valuable resource for developing deep learning models for early melanoma detection and improved patient outcomes. The CNN for Melanoma Detection Data51, referred to as Dataset 2, contributed 2081 images. Like Dataset 1, this collection focuses on the early detection and classification of melanoma.

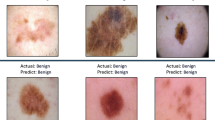

The inclusion of diverse skin lesion images makes this dataset crucial for advancing deep-learning models that aim to enhance diagnostic precision and reliability. Figure 1 shows examples from the benign class, and Fig. 2 illustrates samples from the malignant class, highlighting the diversity of lesions in our datasets. These figures are essential for understanding the dataset’s composition and the model’s training process.

Table 1 lists the datasets used in this study. Both datasets contain benign and malignant classes. The full Melanoma Cancer Image Dataset50 contains 13900 images, and the full CNN for Melanoma Detection Data51 contains 10000 images.

Each dataset was partitioned into training, validation, and test sets using consistent proportions to allow a proper assessment of our model. Specifically, 70% of the images from each class were allocated to training, and the remaining 30% were divided equally between the validation and test sets, each comprising 15% of the total. We used a subset of each full dataset for our experiments because of the limited computational resources available on the Google Colab platform, specifically the T4 GPU. As shown in Table 2, the total number of images used was significantly smaller than the full dataset sizes listed in Table 1. This sampling allowed the model to be trained and tested efficiently within these constraints while still providing reliable and meaningful results.
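The per-class 70/15/15 split described above can be sketched in plain Python. This is a minimal illustration, not the authors’ code: the function name `stratified_split`, the fixed seed, and rounding each class’s 70% and 15% shares (with the remainder going to the test set) are assumptions, and the toy labels stand in for the real image lists.

```python
import random
from collections import defaultdict


def stratified_split(labels, train=0.70, val=0.15, seed=42):
    """Split sample indices per class into train/val/test; the remainder goes to test."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    splits = {"train": [], "val": [], "test": []}
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        n = len(idxs)
        n_train = round(n * train)
        n_val = round(n * val)
        splits["train"] += idxs[:n_train]
        splits["val"] += idxs[n_train:n_train + n_val]
        splits["test"] += idxs[n_train + n_val:]
    return splits


# Toy example: 100 benign and 100 malignant image indices.
labels = ["benign"] * 100 + ["malignant"] * 100
parts = stratified_split(labels)
print({k: len(v) for k, v in parts.items()})
```

Splitting each class separately keeps the benign/malignant ratio roughly equal across the three subsets; in practice, scikit-learn’s `train_test_split` with its `stratify` argument, applied twice, achieves the same effect.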

By carefully dividing these datasets, we ensured that our model was trained, validated, and tested on a diverse and representative sample of images, enhancing its generalizability and robustness in real-world applications. The datasets and their respective splits are detailed in Fig. 3, which provides a clear overview of the data distribution used in this study.

Data preprocessing techniques

Data preprocessing is a critical initial step in our workflow, ensuring that raw dermoscopic images are standardized and optimized for input into the neural network. The key stages of the preprocessing pipeline are summarized in Table 3.
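Table 3 is not reproduced here, but one of the stages named elsewhere in this paper, median filtering, can be illustrated with a short pure-Python sketch. The 3 × 3 kernel, the edge-replication border handling, and the function name `median_filter` are assumptions; a production pipeline would more likely call an optimized routine such as OpenCV’s `cv2.medianBlur`.

```python
from statistics import median


def median_filter(img, k=3):
    """Apply a k x k median filter to a 2-D grayscale image (list of lists).

    Borders are handled by clamping coordinates to the nearest valid pixel
    (edge replication). k is assumed to be odd.
    """
    h, w = len(img), len(img[0])
    r = k // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-r, r + 1)
                for dx in range(-r, r + 1)
            ]
            out[y][x] = median(window)
    return out


# A flat patch with one bright speck (255) and one dark corner pixel (0).
noisy = [
    [10, 10, 10, 10],
    [10, 255, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 10, 0],
]
clean = median_filter(noisy)
print(clean)
```

Both the isolated bright speck and the dark corner pixel are replaced by the neighborhood median, which is why median filtering suppresses impulse noise such as small specks while preserving edges better than a mean filter.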

Data augmentation approaches

To increase the variability of the training data and improve the model’s generalization ability, we applied several data augmentation techniques during the training phase. These augmentations were implemented using the Keras ImageDataGenerator and were applied only to the training set, while the validation and test sets remained unchanged. The key stages of the augmentation are summarized in Table 4.

Proposed model

The Xception architecture was selected based on its efficient design and consistent performance during preliminary evaluation. Its depthwise separable convolutions support effective feature extraction while reducing computational complexity, making it well-suited for dermoscopic image analysis. Comparative models showed less stable results or required extensive tuning to mitigate overfitting. This section describes the proposed model in detail, including its architecture and the preprocessing techniques used to enhance its performance.

Structure of the proposed model

This study presents a robust neural network model that accurately classifies malignant skin lesions. The model uses a full preprocessing and augmentation pipeline. Key preprocessing steps include artifact removal, contrast enhancement, hair removal, median filtering, and resizing images to a uniform 224 \(\times\) 224 pixels. The architecture is built on a pretrained Xception network, followed by custom layers consisting of global average pooling, batch normalization, dropout, and dense layers with ReLU and Swish activation functions and L2 regularization. This configuration was designed to enhance feature extraction and improve classification performance, as illustrated in Fig. 4.

Global average pooling

Global Average Pooling (GAP) was used to compress the spatial information of each feature map in the convolutional neural network (CNN) into a single value52. GAP computes the mean of all elements in each feature map, yielding a more compact representation.

Mathematically, GAP is defined as:

$$\begin{aligned} \text {GAP} = \frac{1}{H \times W} \sum _{i=1}^{H} \sum _{j=1}^{W} x_{ij} \end{aligned}$$(1)

where \(x_{ij}\) is the element in the \(i\)-th row and \(j\)-th column of the feature map, and \(H\) and \(W\) are the height and width of the feature map, respectively.
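The following sketch shows global average pooling on plain Python lists, reducing each feature map to the mean over its H × W positions. The channel-first list layout and the function name are illustrative assumptions; Keras’s `GlobalAveragePooling2D` performs the same reduction on channels-last tensors.

```python
def global_average_pool(feature_maps):
    """Reduce each H x W feature map to the mean of its elements.

    feature_maps: list of C maps, each a list of H rows of W floats.
    Returns a list of C floats, one per channel.
    """
    pooled = []
    for fmap in feature_maps:
        h, w = len(fmap), len(fmap[0])
        total = sum(sum(row) for row in fmap)
        pooled.append(total / (h * w))
    return pooled


maps = [
    [[1.0, 2.0], [3.0, 4.0]],  # channel 0: mean 2.5
    [[0.0, 0.0], [0.0, 8.0]],  # channel 1: mean 2.0
]
pooled = global_average_pool(maps)
print(pooled)
```

Because GAP has no trainable weights, it replaces a large flattened vector with one number per channel, which reduces the parameter count of the dense layers that follow.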

Batch normalization

Batch normalization layers were used to stabilize and accelerate training53. The method normalizes activations within each mini-batch, reducing internal covariate shift.

The batch normalization transform is defined as:

$$\begin{aligned} y = \gamma \frac{x - \mu }{\sqrt{\sigma ^2 + \varepsilon }} + \beta \end{aligned}$$(2)

where \(x\) is the input, \(\mu\) and \(\sigma ^2\) are the mean and variance of the input over the mini-batch, \(\gamma\) and \(\beta\) are learnable scale and shift parameters, and \(\varepsilon\) is a small constant that prevents division by zero.
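A minimal sketch of the batch normalization transform for a single feature over one mini-batch follows. It uses the batch mean and (biased) variance, as during training; at inference, Keras instead uses moving averages of these statistics. The default \(\varepsilon = 10^{-3}\) mirrors the Keras `BatchNormalization` default, and the function name is hypothetical.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-3):
    """Normalize a mini-batch of scalar activations (one feature), then scale and shift.

    Uses the biased (population) variance over the batch, as in training-mode BN.
    """
    n = len(batch)
    mu = sum(batch) / n
    var = sum((x - mu) ** 2 for x in batch) / n
    return [gamma * (x - mu) / math.sqrt(var + eps) + beta for x in batch]


# With eps=0 and default gamma/beta, the output has zero mean and unit variance.
out = batch_norm([2.0, 4.0, 6.0, 8.0], eps=0.0)
print(out)
```

The learnable \(\gamma\) and \(\beta\) let the network undo the normalization where that helps, so batch normalization does not restrict what the layer can represent.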

Dense layers with ReLU activation and L2 regularization

Each dense layer uses ReLU activation together with L2 regularization. L2 regularization, also known as weight decay, penalizes large weights throughout the network. By discouraging overly complex models and favoring simpler ones, it helps mitigate overfitting54. Because the L2 term is added to the network’s loss function, penalizing large weights encourages models that generalize better to unseen inputs.

1. Dense Layer: A fully connected layer is a neural network layer in which each neuron is connected to every neuron in the preceding layer, allowing comprehensive information exchange.

2. ReLU Activation: The activation function is the Rectified Linear Unit (ReLU), defined as:

$$\begin{aligned} \text {ReLU}(x) = \max (0, x) \end{aligned}$$(3)

ReLU introduces nonlinearity by passing the input through unchanged if it is positive and outputting zero otherwise.

3. L2 Regularization: To mitigate overfitting, a regularization term proportional to the sum of the squared weights is added to the loss. The L2 regularization term is defined as:

$$\begin{aligned} \text {L2}\_\text {Regularization} = \lambda \sum _i W_i^2 \end{aligned}$$(4)

where \(\lambda\) is the regularization parameter and \(W_i\) are the weights of the dense layer.
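To make the three components above concrete, the sketch below computes a small dense layer with ReLU and the corresponding L2 penalty. The weights, biases, and \(\lambda = 0.01\) are illustrative values, not the study’s hyperparameters. For reference, Keras’s `regularizers.L2(l2)` adds `l2 * sum(square(w))` to the loss, which has the same form as the L2 term defined above.

```python
def relu(x):
    """ReLU(x) = max(0, x)."""
    return max(0.0, x)


def dense_relu(inputs, weights, biases):
    """Fully connected layer: out_j = ReLU(sum_i inputs[i] * weights[i][j] + biases[j])."""
    n_out = len(biases)
    return [
        relu(sum(inputs[i] * weights[i][j] for i in range(len(inputs))) + biases[j])
        for j in range(n_out)
    ]


def l2_penalty(weights, lam):
    """L2 term added to the loss: lam * (sum of squared weights)."""
    return lam * sum(w * w for row in weights for w in row)


# Illustrative 2-input, 3-output layer (values are hypothetical).
x = [1.0, 2.0]
W = [[0.5, -2.0, 0.0],
     [0.25, 0.5, 1.0]]
b = [0.0, 0.0, 0.5]
print(dense_relu(x, W, b))
print(l2_penalty(W, lam=0.01))
```

The second output unit has a negative pre-activation (-1.0), so ReLU sets it to zero; the penalty grows with the squared weight magnitudes, pushing training toward smaller weights.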

Dense layers with Swish activation

The Swish activation function is smooth and non-monotonic, which helps dense layers model complex patterns55. A dense layer with Swish activation is defined as follows:

1. Swish Activation: The Swish activation function is applied to the output of the dense layer and is defined as:

$$\begin{aligned} \text {Swish}(x) = x \cdot \sigma (x) \end{aligned}$$(5)

where \(\sigma (x) = 1/(1 + e^{-x})\) is the sigmoid function.
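The Swish function can be evaluated directly; the sketch below compares it with ReLU at a few points. The numerically stable sigmoid and the sample points are implementation choices for illustration only.

```python
import math


def sigmoid(x):
    """Numerically stable logistic sigmoid 1 / (1 + exp(-x))."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # avoids overflow of exp(-x) for very negative x
    return z / (1.0 + z)


def swish(x):
    """Swish(x) = x * sigmoid(x)."""
    return x * sigmoid(x)


for v in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"swish({v:+.1f}) = {swish(v):+.4f}   relu = {max(0.0, v):.1f}")
```

Unlike ReLU, Swish is smooth and lets small negative values through (its minimum is about -0.28, near x = -1.28), while approaching the identity for large positive inputs.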

Dropout regularization

Overfitting is a common issue in sophisticated neural network architectures, particularly when appropriate training data are scarce56. To address this problem, our model integrates dropout regularization, a method designed to improve generalization by preventing excessive reliance on any single neuron. During training, dropout randomly deactivates a fraction of the neurons, which can be expressed as:

$$\begin{aligned} \tilde{h} = d \odot h, \quad d_i \sim \text {Bernoulli}(p) \end{aligned}$$(6)

where \(h\) is the activation vector from the previous layer, \(d\) is a binary mask whose entries are sampled from a Bernoulli distribution with retention probability \(p\), and \(\odot\) denotes the element-wise product. By randomly discarding a fraction of the activations, dropout prevents excessive reliance on particular units, making the network more resilient and effective on unseen data. The retention probability \(p\) is a hyperparameter commonly chosen by cross-validation. Dropout rates, \(1 - p\), are often set between 0.2 and 0.5. Excessively high rates can cause underfitting, whereas low rates might not prevent overfitting. During inference, dropout is disabled and the activations are multiplied by the retention probability \(p\) to remain consistent with training; equivalently, in the inverted-dropout formulation used by Keras, the retained activations are scaled by \(1/p\) during training and no scaling is applied at inference.
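The inverted-dropout variant described above can be sketched as follows. The function signature and the explicit `training` flag are assumptions for illustration; in Keras, the `Dropout` layer applies this behaviour automatically when it is called in training mode.

```python
import random


def inverted_dropout(h, rate, training, rng):
    """Inverted dropout on a list of activations.

    During training, each activation is kept with probability p = 1 - rate and
    survivors are scaled by 1/p, so the expected activation is unchanged.
    At inference, the input passes through untouched.
    """
    if not training or rate == 0.0:
        return list(h)
    p = 1.0 - rate
    mask = [1 if rng.random() < p else 0 for _ in h]  # d_i ~ Bernoulli(p)
    return [m * x / p for m, x in zip(mask, h)]


rng = random.Random(0)
h = [1.0] * 10_000
out = inverted_dropout(h, rate=0.5, training=True, rng=rng)
print(sum(v == 0.0 for v in out) / len(out))  # fraction dropped, close to 0.5
print(sum(out) / len(out))                     # mean activation, close to 1.0
print(inverted_dropout([0.3, 0.7], rate=0.5, training=False, rng=rng))
```

Scaling during training rather than at inference means the deployed model needs no special handling: the same weights are used and the layer simply becomes the identity.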

Dropout layers are placed after every dense layer except the final prediction layer, which generates the class probability distribution. This regularization is particularly advantageous for small training datasets. It encourages feature representations that are more evenly distributed and robust to variations, as demonstrated in several studies on dropout variants such as DropBlock, AutoDrop, and Cutout.

Final prediction layer

The final component of the architecture is a fully connected layer with a softmax activation, which converts the network’s outputs into class probabilities for multi-class classification. The softmax function is defined as:

$$\begin{aligned} \sigma (z)_j = \frac{e^{z_j}}{\sum _{k=1}^{K} e^{z_k}} \end{aligned}$$(7)

where \(z_j\) is the \(j\)-th component of the output (logit) vector \(z\), \(K\) is the total number of classes, and \(\sigma (z)_j\) is the probability that the input belongs to class \(j\). In essence, the softmax function exponentiates each logit \(z_j\) and normalizes these values across all classes, producing a probability distribution whose entries sum to one.
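A numerically stable softmax is straightforward to write: subtracting the largest logit before exponentiating leaves the result unchanged (the common factor \(e^{-\max z}\) cancels) but avoids overflow. The logits and the benign/malignant labelling below are purely illustrative.

```python
import math


def softmax(z):
    """sigma(z)_j = exp(z_j) / sum_k exp(z_k), computed stably by subtracting max(z)."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 0.5]  # hypothetical [benign, malignant] scores
probs = softmax(logits)
print(probs, sum(probs))
```

Without the max-subtraction, a logit of 1000 would overflow `math.exp`; with it, `softmax([1000.0, 1000.0])` returns equal probabilities as expected.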

Model evaluation metrics

The performance of the model is evaluated using a confusion matrix. Before training, the dataset was split into training, validation, and test sets. We used a wide range of criteria to evaluate the model’s effectiveness. The metrics used to assess the proposed approach for skin cancer identification57,58,59 are well established and are defined by the following equations:
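Because the metric equations are not reproduced in this section, the sketch below assumes the standard confusion-matrix definitions of accuracy, precision, recall (sensitivity), specificity, and F1-score, treating malignant as the positive class. The counts are hypothetical and are not results from this study.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard confusion-matrix metrics, treating 'malignant' as the positive class."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1}


# Hypothetical counts for illustration only; not results from this study.
m = binary_metrics(tp=90, fp=10, tn=80, fn=20)
for name, value in m.items():
    print(f"{name:>11}: {value:.4f}")
```

Reporting recall and specificity alongside accuracy matters here because a missed melanoma (false negative) is clinically far more costly than a false alarm, and accuracy alone can hide that imbalance.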

Explainable AI: integration framework

In this section, we present the integration of Explainable AI (XAI) techniques within our deep learning framework for dermatological image analysis, focusing on how these methods enhance model transparency and interpretability. By embedding XAI into our workflow, we aim to bridge the gap between model predictions and clinical understanding, ensuring that the decision-making process is accessible and justifiable to medical professionals. This integration helps validate the model’s predictions and fosters greater trust in its applications by providing clear visual explanations for each prediction. Explainability is important in medical AI, especially for tasks such as melanoma detection: visual tools such as saliency maps help verify model decisions and can increase clinical confidence60. Prior work has also noted that explainability methods in dermatology often lack proper evaluation and has emphasized the need for clear validation and consistent practices when using XAI in skin cancer diagnosis61. The key components of our XAI approach are:

1. Neural Network Frameworks: Utilizing TensorFlow and Keras, we developed a flexible neural network architecture capable of handling complex image analysis tasks. These frameworks provided the essential tools for building and customizing layers tailored to medical image interpretation.

2. Image Enhancement Techniques: Images were preprocessed to ensure consistency, including resizing and normalization. We also introduced subtle variations to simulate real-world conditions, improving the model’s resilience and adaptability to diverse data inputs.

3. Refinement of Pre-trained Models: We adapted existing pre-trained models, incorporating additional layers and fine-tuning parameters specifically for the task of medical image analysis. This process allowed us to leverage the strength of established models while optimizing them for our specific needs.

4. Interactive Visualization Methods: We implemented Grad-CAM and Saliency Maps as interactive tools to visually examine the regions of interest identified by the model. These tools provide clinicians with an intuitive understanding of how the model arrives at its decisions.

5. Heatmap Generation for Clinical Insights: By generating heatmaps through Grad-CAM, we created detailed visual guides that highlight the areas within images that the model considers most significant, thereby aiding in the interpretability of the model’s predictions.

6. Model Validation and Assessment: The model’s performance was rigorously evaluated using validation data, focusing on accuracy, sensitivity, and specificity. We also performed error analysis to understand and improve model predictions.

7. In-depth Result Visualization: The results were comprehensively visualized, including original images, associated saliency maps, and generated heatmaps, providing a clear and holistic view of the model’s analytical process, essential for clinical decision-making.
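The heatmap steps in items 4, 5 and 7 can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the exact pipeline used here. The text does not say how Grad-CAM maps were resized or blended with the image, so the following choices are all hypothetical: min-max normalization, nearest-neighbour upsampling (image libraries more often use bilinear interpolation), a grayscale image, and the blending weight `alpha = 0.4`.

```python
def normalize(heatmap):
    """Min-max rescale a 2-D heatmap (list of rows) to the range [0, 1]."""
    flat = [v for row in heatmap for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # a flat map has nothing to highlight
        return [[0.0 for _ in row] for row in heatmap]
    return [[(v - lo) / (hi - lo) for v in row] for row in heatmap]


def upsample_nearest(heatmap, out_h, out_w):
    """Nearest-neighbour resize of a coarse map to the input image size."""
    in_h, in_w = len(heatmap), len(heatmap[0])
    return [[heatmap[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def overlay(image, heatmap, alpha=0.4):
    """Blend a grayscale image with a heatmap (both in [0, 1], same size).

    alpha (hypothetical) controls how strongly the heatmap shows through.
    """
    return [[(1 - alpha) * p + alpha * h for p, h in zip(img_row, hm_row)]
            for img_row, hm_row in zip(image, heatmap)]


# Usage with a hypothetical 2x2 Grad-CAM map and a uniform 4x4 image.
coarse = [[0.0, 2.0], [1.0, 4.0]]
heat = upsample_nearest(normalize(coarse), 4, 4)
blended = overlay([[0.5] * 4 for _ in range(4)], heat)
```

In a full pipeline a color map would usually be applied to the heatmap before blending it with the RGB dermoscopic image.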

Explainable AI: Fundamental concepts and key equations

Our methodology builds on established deep learning principles with a focus on interpretability. The fundamental concepts and equations that drive our XAI implementation are as follows:

1. Gradient-weighted Class Activation Mapping (Grad-CAM): Grad-CAM62 is employed to bring transparency to our CNN-based models by highlighting the areas of an image that are most influential in the prediction of a specific class.

The importance weight of each feature map in the final convolutional layer is computed as

\[\alpha _k^c = \frac{1}{Z}\sum _{i}\sum _{j} \frac{\partial y^c}{\partial A_{ij}^k}\]

Here, \(\alpha _k^c\) represents the weight of the feature map \(A^k\) for class c. The gradient \(\frac{\partial y^c}{\partial A_{ij}^k}\) indicates how much a small change in the feature map \(A^k\) at location (i, j) affects the score for class c. Summing these gradients over all spatial locations (i, j) and normalizing by the total number of locations Z in the feature map gives \(\alpha _k^c\). This weight expresses the importance of \(A^k\) for the prediction of class c. It is used to generate a heatmap that highlights the regions of the input image most influential for the model’s decision.
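With this notation, Grad-CAM reduces to averaging gradients and forming a weighted sum of feature maps. The sketch below assumes that the last-layer activations and their gradients are already available as nested Python lists. In a TensorFlow/Keras model they would typically be obtained with `tf.GradientTape`. The sketch also includes the combination step \(L^c = \mathrm{ReLU}(\sum_k \alpha_k^c A^k)\) from the original Grad-CAM formulation, which the text summarizes as generating the heatmap. All numbers are hypothetical.

```python
def grad_cam(activations, gradients):
    """Grad-CAM weights and map for one class c.

    activations: K feature maps A^k from the last conv layer (each H rows of W).
    gradients:   matching maps of dy^c / dA^k_ij (same shapes).
    Returns (alphas, cam) where alphas[k] = alpha_k^c and cam is H x W.
    """
    h, w = len(activations[0]), len(activations[0][0])
    z = h * w  # Z: number of spatial locations in each feature map
    # alpha_k^c = (1/Z) * sum over i, j of dy^c/dA^k_ij (global-average-pooled gradients)
    alphas = [sum(sum(row) for row in grad_map) / z for grad_map in gradients]
    # Weighted combination of the feature maps; ReLU keeps only positive evidence.
    cam = [[max(0.0, sum(a * fmap[i][j] for a, fmap in zip(alphas, activations)))
            for j in range(w)]
           for i in range(h)]
    return alphas, cam


# Hypothetical 2x2 activations and gradients for K = 2 feature maps.
A = [[[1.0, 0.0], [0.0, 1.0]],
     [[0.0, 2.0], [2.0, 0.0]]]
G = [[[0.5, 0.5], [0.5, 0.5]],
     [[-1.0, -1.0], [-1.0, -1.0]]]
alphas, cam = grad_cam(A, G)
print(alphas)  # [0.5, -1.0]
print(cam)     # [[0.5, 0.0], [0.0, 0.5]]
```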

2. Saliency Maps: Saliency Maps63 identify the regions of an image that most strongly influence the model’s output. By computing the gradient of the output class score with respect to the input image, we generate a map that shows the areas of the image that are crucial for the model’s decision.

The saliency map is defined as

\[S_c(I) = \frac{\partial y^c}{\partial I}\]

This equation computes the gradient of the class score \(y^c\) with respect to the input image I. The saliency map \(S_c(I)\) captures how sensitive the model’s prediction of class c is to changes in each pixel of the input image. In essence, \(S_c(I)\) highlights the pixels that have the greatest impact on the class score \(y^c\). This lets us visualize which parts of the image the model focuses on when making its decision, providing insight into its reasoning process.
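In the model itself, this gradient would be computed by automatic differentiation. The sketch below instead uses central finite differences on a hypothetical linear score function, so that it runs with the standard library alone. The usual way to visualize such maps is to take the absolute value of the gradient (and, for RGB inputs, the maximum over color channels). The text does not state whether the authors did the same.

```python
def saliency_map(score_fn, image, eps=1e-4):
    """Approximate S_c(I) = dy^c/dI pixel by pixel with central differences.

    score_fn maps an image (list of rows) to the scalar class score y^c.
    Returns the absolute gradient per pixel, the quantity usually visualized.
    """
    sal = []
    for i, row in enumerate(image):
        sal_row = []
        for j in range(len(row)):
            plus = [r[:] for r in image]   # copy, then nudge one pixel up
            minus = [r[:] for r in image]  # copy, then nudge the same pixel down
            plus[i][j] += eps
            minus[i][j] -= eps
            grad = (score_fn(plus) - score_fn(minus)) / (2 * eps)
            sal_row.append(abs(grad))
        sal.append(sal_row)
    return sal


# Hypothetical linear "model": the class score is a weighted sum of pixels,
# so the exact gradient equals the weights.
WEIGHTS = [[0.0, 2.0], [-3.0, 0.5]]


def toy_score(img):
    return sum(w * p for w_row, p_row in zip(WEIGHTS, img)
               for w, p in zip(w_row, p_row))


sal = saliency_map(toy_score, [[0.2, 0.4], [0.6, 0.8]])
```

For this linear score the map recovers the magnitudes of the weights, so the pixel with weight -3.0 is marked as the most influential.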

Through the integration of these XAI techniques, our model combines high accuracy with meaningful insights into its decision-making process, which enhances its reliability in clinical applications.

Training specifications

To ensure effective convergence, we used the Adam optimizer with a fixed learning rate of \(1 \times 10^{-4}\) and categorical cross-entropy as the loss function. A batch size of 16 balanced computational demands and training stability for both the original and augmented datasets. To mitigate overfitting, we applied early stopping with a patience of 3 epochs and used model checkpoints to preserve the model with the best validation performance. The same training setup was used for the various state-of-the-art models compared in this study, all run on an NVIDIA Tesla T4 GPU.
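For reference, categorical cross-entropy averages \(-\sum_j t_j \log p_j\) over a batch, where \(t\) is the one-hot label and \(p\) the predicted probability vector. The sketch below is a plain-Python illustration. The clipping constant `eps` is an assumption added to avoid \(\log 0\), and the two samples are hypothetical.

```python
import math


def categorical_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean categorical cross-entropy over a batch.

    y_true: one-hot label vectors, y_pred: predicted probability vectors
    (e.g. softmax outputs). Probabilities are clipped to [eps, 1 - eps]
    so that log(0) never occurs.
    """
    total = 0.0
    for t, p in zip(y_true, y_pred):
        total -= sum(ti * math.log(min(max(pi, eps), 1.0 - eps))
                     for ti, pi in zip(t, p))
    return total / len(y_true)


# Two hypothetical samples: one benign [1, 0] and one malignant [0, 1].
loss = categorical_cross_entropy([[1, 0], [0, 1]], [[0.9, 0.1], [0.2, 0.8]])
print(round(loss, 4))  # 0.1643
```

Only the probability assigned to the true class contributes, so confident correct predictions drive the loss toward zero. Confident wrong predictions are penalized heavily.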

Training was scheduled for up to 100 epochs, with early termination if validation performance plateaued. Multiple processing workers and the NVIDIA Tesla T4 GPU, accessed via Google Colab, accelerated the training process and kept resource use efficient.
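The early-stopping and checkpoint logic can be simulated without TensorFlow. This sketch assumes that the monitored quantity is validation loss; the text says only "best validation performance". It uses the same patience idea as Keras’s `EarlyStopping` callback, with a best-only checkpoint. The loss sequence is made up.

```python
def early_stopping(val_losses, patience=3, max_epochs=100):
    """Replay early stopping over per-epoch validation losses.

    Returns (best_epoch, stop_epoch), both 1-indexed. best_epoch is the epoch
    a "keep the best model" checkpoint would have saved.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0  # checkpoint saved
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch  # training halts here
    return best_epoch, min(len(val_losses), max_epochs)


# Hypothetical validation losses: improvement stalls after epoch 4.
print(early_stopping([0.60, 0.45, 0.38, 0.36, 0.37, 0.39, 0.36, 0.35]))  # (4, 7)
```

In this example the later improvement at epoch 8 is never reached, which shows the trade-off that a small patience value makes between training time and thoroughness.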

To tune the model’s performance, we manually experimented with learning rates in the range \([1 \times 10^{-5}, 1 \times 10^{-3}]\) and found that \(1 \times 10^{-4}\) gave the most stable and accurate convergence. The batch size of 16 was selected after evaluating sizes of 8, 16, 32, and 64, with 16 giving the best balance between convergence behavior and memory use. Dropout rates between 0.1 and 0.3 were assigned across layers to prevent overfitting, guided by incremental experiments and validation trends. These hyperparameter values were finalized based on consistent improvements in validation accuracy, loss stability, and generalization across both datasets.
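The dropout rates above act as follows during training. This is a minimal plain-Python sketch of inverted dropout, the variant Keras’s `Dropout` layer implements. Surviving activations are rescaled by \(1/(1-\text{rate})\), so the layer is a no-op at inference. The text does not say which layer received which rate, so the loop simply tries each value in the stated range.

```python
import random


def dropout(values, rate, training=True, rng=random):
    """Inverted dropout on a list of activations.

    In training, each unit is zeroed with probability `rate` and survivors are
    scaled by 1 / (1 - rate), so the expected activation is unchanged.
    At inference the input passes through untouched.
    """
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


# Rates from the 0.1-0.3 range used in the text, applied to constant inputs.
rng = random.Random(0)
for rate in (0.1, 0.2, 0.3):
    out = dropout([1.0] * 10_000, rate, rng=rng)
    print(rate, round(sum(out) / len(out), 3))  # means stay close to 1.0
```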

Result analysis

This section presents the results of a detailed examination of skin cancer images and of the classification system developed from the proposed model, which is based on the Xception architecture. The aim is to demonstrate the value of our framework through a thorough evaluation against current benchmark models.

The performance of the trained models was assessed using a set of evaluation metrics: overall accuracy, precision, recall, F1 score, AUC, and loss. Tables 5, 6 and 7 present detailed results for Dataset 1. Table 5 shows the results for the training set, Table 6 provides the results for the validation set, and Table 7 displays the results for the test set. Tables 8, 9 and 10 present the detailed results for the Dataset 2 training, validation, and test sets, respectively.

The results from both datasets indicate that the Xception model consistently outperformed other architectures across the training, validation, and testing phases. Models such as ResNet and DenseNet achieved competitive performance in certain cases, but their results were less reliable during validation. In contrast, Xception demonstrated higher accuracy, faster convergence, and better generalization, supporting its selection as the base architecture. To improve detection performance further, we customized the Xception model to form the proposed model. Because early detection of melanoma is so important, models must be both highly accurate and reliable. After customization, our proposed model achieved the highest performance among all the models evaluated, demonstrating its effectiveness in detecting melanoma.

On the Dataset 1 training set, the proposed model achieved an accuracy of 95.23%, an AUC of 98.25%, a recall of 95.18%, an F1 score of 95.26%, and a precision of 95.34%. The training loss was notably low at 0.2693, indicating strong performance. On the validation set, the proposed model achieved an accuracy of 91.23%, an AUC of 95.26%, a recall of 91.85%, an F1 score of 91.81%, and a precision of 91.78%. The validation loss was 0.2731, reinforcing the model’s strong performance. On the test set, the proposed model achieved an accuracy of 91.09%, an AUC of 92.11%, a recall of 91.79%, an F1 score of 91.95%, and a precision of 94.72%. The test loss was 0.2812, further demonstrating the model’s generalization and robustness.

On the Dataset 2 training set, the proposed model achieved an accuracy of 96.48%, an AUC of 98.25%, a recall of 96.37%, an F1 score of 96.60%, and a precision of 96.83%. The training loss was also low, at 0.1981. On the validation set, the proposed model achieved an accuracy of 88.76%, an AUC of 92.56%, a recall of 88.67%, an F1 score of 88.27%, and a precision of 87.88%. The validation loss was 0.1627, which indicates the model’s reliability and robustness. On the test set, the proposed model achieved an accuracy of 89.29%, an AUC of 93.71%, a recall of 89.17%, an F1 score of 89.39%, and a precision of 89.61%. The test loss was 0.1711, reflecting the model’s ability to generalize to unseen data. These results underscore the robustness and reliability of our model and its suitability for early-stage melanoma detection, contributing to diagnostic accuracy in medical imaging.

To evaluate the performance of the proposed model on Dataset 1, we examined the confusion matrix given in Fig. 5, which compares the model’s predictions with the true labels. The large number of correctly classified instances along the diagonal shows that the model performed well. It correctly classified 186 of 202 instances as class 0 (benign) and 174 of 187 as class 1 (malignant). Misclassifications were few, with 16 for class 0 (benign) and 13 for class 1 (malignant), showing that the model differentiates reliably between the two classes. The color depth in the matrix supports the numerical data, with darker cells along the diagonal corresponding to larger numbers of correct predictions. Overall, the confusion matrix confirms a high rate of correct classification with few errors on this dataset.
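As a worked check on these counts, treating malignant as the positive class, the per-class recall and precision and the overall accuracy implied by Fig. 5 are given below. The text does not state which data split Fig. 5 was computed on, so these values need not match a particular row of Tables 5, 6 or 7.

\[
\begin{aligned}
\text{Recall}_{\text{benign}} &= \tfrac{186}{202} \approx 0.921, &
\text{Recall}_{\text{malignant}} &= \tfrac{174}{187} \approx 0.930,\\
\text{Precision}_{\text{benign}} &= \tfrac{186}{186 + 13} \approx 0.935, &
\text{Precision}_{\text{malignant}} &= \tfrac{174}{174 + 16} \approx 0.916,\\
\text{Accuracy} &= \tfrac{186 + 174}{202 + 187} = \tfrac{360}{389} \approx 0.925. & &
\end{aligned}
\]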

The proposed model shows strong precision and recall. To support a deeper examination of its outcome measures, Figs. 6, 7 and 8 provide visual representations for Dataset 1.

These graphs summarize the model’s performance across several metrics during training and validation.

The graphs track the model’s progress: training and validation accuracy both rise steadily over the epochs, indicating progressive learning. The close agreement between the training and validation loss curves demonstrates the ability of the architecture to generalize. Although the recall and precision curves show discrepancies in early epochs, the model eventually converges, showing its robustness and flexibility. Fig. 8 shows the AUC evaluation, with values approaching one, which indicates strong discriminative performance.

To assess the performance of our model on Dataset 2, we analyzed the confusion matrix shown in Fig. 9, which shows how accurately the model categorizes the data. The model correctly identified 129 of 164 instances as class 0 (benign) and 136 of 149 instances as class 1 (malignant). There were 35 misclassifications for class 0 (benign) and 13 for class 1 (malignant). The confusion matrix indicates that the proposed model also performs well on Dataset 2, although benign lesions are misclassified more often than on Dataset 1.

Similarly, Figs. 10, 11 and 12 illustrate the performance of the proposed model on Dataset 2, highlighting its ability to maintain high precision and recall. These graphs show the model’s performance metrics in detail throughout training and validation, adding to the evidence of its effectiveness. Training and validation accuracy both show a consistent upward trend over time, indicating the model’s increasing capacity for learning.

The close agreement between training and validation loss values highlights the model’s ability to generalize. Despite initial signs of overfitting in accuracy and recall, the model’s eventual convergence demonstrates its flexibility and stability. The AUC for Dataset 2 is high, approaching one, which confirms the architecture’s effectiveness on a second, independent dataset. The consistent performance across both datasets highlights the dependability and efficiency of our proposed methodology.

To validate the interpretability and transparency of our model, we employed Explainable AI (XAI) techniques such as Grad-CAM and saliency maps. These methods allowed us to visually highlight the critical regions of the dermoscopic images that significantly influenced the model’s predictions. Figure 13 presents the results for Dataset 1, while Fig. 14 illustrates the outcomes for Dataset 2. In both figures, we provided the original images alongside the corresponding Grad-CAM and Saliency Map outputs for different classes. Each image is annotated with both the true label and the model’s predicted label, clearly demonstrating the model’s ability to distinguish between benign and malignant lesions across different datasets. The visualizations reveal the specific features within the images that most influenced the model’s decisions, thereby enhancing the transparency of the decision-making process. These results underscore the robustness of our approach, offering clear insights into the model’s diagnostic capabilities and fostering greater trust in its application to melanoma detection.

These results demonstrate the stability and reliability of the proposed framework across several metrics. The proposed model is specifically intended for detecting and classifying melanoma in its early stages and shows potential for integration into real-time systems.

Discussion

This study introduces a deep neural network approach for identifying melanoma that outperforms existing models such as ResNet50, EfficientNetV2, and MobileNetV2. The accuracy rates of 95.23% for Dataset 1 and 96.48% for Dataset 2 are the main indicators of our model’s performance. These accuracies surpass the results of standard deep learning models, highlighting the stability and effectiveness of our proposed Xception-based framework. Models such as ResNet50 and MobileNetV2 have shown good performance in previous studies, but they do not match the accuracy achieved by our proposed model. ReLU and Swish activations, together with additional dense layers and Global Average Pooling (GAP), contributed to these gains in accuracy. In addition, our customization process, which incorporated multiple dense layers, batch normalization, and dropout, further improved the model’s performance.
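The head operations named above (GAP, batch normalization, and dense layers with ReLU and Swish) can be illustrated on tiny hand-made inputs. This is a sketch only. The Xception backbone is omitted, and the layer sizes, ordering, weights, and batch-normalization statistics below are hypothetical, not those of the trained model. Dropout is left out because it acts as the identity at inference time.

```python
import math


def global_average_pool(feature_maps):
    """GAP: reduce each H x W feature map to its mean, giving a length-C vector."""
    return [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
            for fmap in feature_maps]


def batch_norm(x, mean, var, gamma, beta, eps=1e-3):
    """Inference-time batch normalization with stored statistics, per feature."""
    return [g * (xi - m) / math.sqrt(v + eps) + b
            for xi, m, v, g, b in zip(x, mean, var, gamma, beta)]


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    # Split on the sign of x so math.exp never overflows.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def swish(x):
    """Swish (also called SiLU): x * sigmoid(x)."""
    return x * sigmoid(x)


def dense(inputs, weights, bias, activation):
    """Fully connected layer: activation(W x + b), W given as one row per unit."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, bias)]


# Hypothetical forward pass: 2 feature maps -> GAP -> BN -> Dense(ReLU) -> Dense(Swish).
pooled = global_average_pool([[[1.0, 2.0], [3.0, 4.0]],
                              [[0.0, 0.0], [0.0, 8.0]]])         # [2.5, 2.0]
normed = batch_norm(pooled, mean=[0.0, 0.0], var=[1.0, 1.0],
                    gamma=[1.0, 1.0], beta=[0.0, 0.0], eps=0.0)  # unchanged here
hidden = dense(normed, [[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu)  # [0.5, 2.25]
out = dense(hidden, [[1.0, 1.0]], [0.0], swish)
```

GAP collapses each feature map to one number regardless of spatial size, which keeps the dense head small compared with flattening the full feature maps.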

Both datasets used in this study were selected to capture variability in lesion types, image quality, and acquisition settings. To address bias, we ensured equal distribution of benign and malignant samples during dataset splitting. Augmentation techniques such as horizontal and vertical flipping, rotation, zoom, and brightness adjustment were applied to increase visual diversity and simulate variations that may occur across different skin types and imaging conditions. Although the datasets did not include explicit demographic labels like skin tone, combining samples from two independent sources helped introduce broader variation. These steps were taken to reduce the risk of model bias during training. Additional datasets with labeled demographic attributes will be considered in future work to further strengthen generalizability.
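The augmentations listed above can be sketched on a small image stored as a list of rows. The authors’ exact tooling and parameters are not stated; in Keras, layers such as `RandomFlip`, `RandomRotation` and `RandomZoom` are a typical choice. This sketch makes several simplifying assumptions. It covers flips, rotations restricted to multiples of 90 degrees (a simplification of arbitrary-angle rotation), and brightness scaling, and it omits zoom. The flip probability of 0.5 and the brightness range 0.8 to 1.2 are hypothetical.

```python
import random


def hflip(img):
    """Mirror left-right; img is a list of rows of intensities in [0, 1]."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror top-bottom."""
    return [row[:] for row in img[::-1]]


def rotate90(img):
    """Rotate 90 degrees clockwise (a special case of arbitrary-angle rotation)."""
    return [list(col) for col in zip(*img[::-1])]


def adjust_brightness(img, factor):
    """Scale intensities by `factor`, clipping the result to [0, 1]."""
    return [[min(1.0, max(0.0, p * factor)) for p in row] for row in img]


def augment(img, rng):
    """Randomly flip, rotate, and re-light one image (hypothetical settings)."""
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    for _ in range(rng.randrange(4)):  # 0, 90, 180 or 270 degrees
        img = rotate90(img)
    return adjust_brightness(img, rng.uniform(0.8, 1.2))


sample = [[0.1, 0.2], [0.3, 0.9]]
augmented = augment(sample, random.Random(42))
```

Flips and 90-degree rotations are label-preserving for dermoscopic lesions, which have no canonical orientation. Brightness scaling loosely mimics differences in illumination between acquisition settings.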

The proposed model classified instances accurately, with precision and recall values exceeding 90% for both datasets. Specifically, for Dataset 1, the model achieved an accuracy of 95.23%, a precision of 95.34%, a recall of 95.18%, an F1 score of 95.26%, and an AUC of 98.25%. For Dataset 2, the model achieved an accuracy of 96.48%, a precision of 96.83%, a recall of 96.37%, an F1 score of 96.60%, and an AUC of 98.68%, underscoring its high precision and consistency in prediction. In comparison, existing models often struggle to maintain high precision and recall. For example, models such as EfficientNetV2 and ResNet50 typically do not reach the 90-95% precision that our model achieves. This highlights the model’s ability to identify true positive instances with few false positives and false negatives. The model also performs well on other measures such as AUC. Values of 98.25% and 98.68% for Datasets 1 and 2, respectively, indicate a strong ability to distinguish between benign and malignant lesions, an improvement over many existing models. The loss values of 0.2693 for Dataset 1 and 0.3181 for Dataset 2 indicate that the optimization process was effective. These values are lower than the loss values typically observed in models like DenseNet and MobileNetV2, indicating more efficient training. These metrics come from a systematic evaluation on carefully chosen datasets covering a variety of lesion appearances, designed to give a thorough understanding of the model’s behavior. This comprehensive evaluation further supports its potential use in clinical applications.

A notable feature of our model is the incorporation of Explainable AI (XAI) methods, including Grad-CAM and Saliency Maps, which greatly improve the clarity of the model’s decision-making process. These techniques visualize the key areas within the images that most influenced the model’s predictions. By revealing the factors the model considers, these methods enhance the model’s transparency and make it easier for clinicians to understand and trust the diagnostic outcomes. There is growing evidence that explainable AI can be useful in clinical applications. A study with 76 dermatologists found that heatmap-based explanations improved diagnostic accuracy and helped guide attention to the relevant lesion areas64.

These results suggest that visual explanations can support clinical decision-making in dermatology. Such interpretability is essential in clinical practice. Being able to trace and understand the reasoning behind a model’s decisions can build confidence among healthcare providers and patients, making the model a more trustworthy and practical diagnostic tool. Early detection of melanoma is important for improving survival rates and reducing complications during treatment. The high recall, precision, and AUC values achieved by the proposed model suggest that it can identify melanoma cases accurately with few missed cases. This helps prevent diagnostic delays and allows a timely medical response. The model performed well on two separate datasets, which is encouraging for its use in real diagnostic environments. Its consistent performance suggests that it could be integrated into existing clinical workflows to support dermatologists during routine screening. Identifying melanoma in its early stages reduces the need for more complex procedures and contributes to better patient outcomes.

Regarding system resources, we used a shared T4 GPU provided by Google Colab, which led to longer runtimes for each code execution. A more powerful GPU would shorten training time and allow a more extensive search over hyperparameters and architectures, which could further improve the model’s performance. The model’s accuracy and other performance metrics represent a clear improvement in melanoma detection and classification. Existing models often face challenges in early detection and precise classification. Our model provides a more dependable and faster screening tool, which is critical for conditions in which quick diagnosis strongly influences treatment outcomes. Reducing misdiagnoses can improve patient care and potentially lower healthcare costs. Our model represents notable progress in the use of deep learning for early-stage melanoma detection, surpassing previous models in accuracy, interpretability, and clinical applicability.

Conclusion

Developing an Automated Diagnostic System for early melanoma detection using the proposed Xception model demonstrated promising results, achieving accuracies of 95.23% and 96.48% for Dataset 1 & Dataset 2, respectively. However, practical applications revealed several limitations that impacted the overall effectiveness and reliability of the system in clinical settings.

One significant limitation was the variability in dermoscopic images. Despite several preprocessing techniques, the model struggled to generalize across diverse skin types, cancer stages, and imaging conditions. This indicates the need for a more diverse and extensive dataset that better captures the range of skin lesions encountered in real-world scenarios. Improving the quality and diversity of the dataset could therefore enhance the model’s performance and reliability. By integrating techniques like Grad-CAM and Saliency Maps, our approach complements model performance with greater transparency in decision-making, which is vital for clinical acceptance. Advanced modifications such as Swish activation, additional dense layers, dropout regularization, and the AdamW optimizer improved the model’s performance but also increased its computational requirements. We used a shared T4 GPU from Google Colab, which limited the available computational resources. Dedicated high-performance GPUs would allow more extensive training and tuning, which could further improve performance. The model’s computational requirements could also limit its practical deployment in resource-constrained environments such as smaller clinics or rural healthcare settings.

Future work will focus on increasing the diversity of the dataset by incorporating images from various sources and demographic groups. External validation on independent, clinically obtained datasets will also be considered to evaluate the model’s performance in real-world diagnostic environments. Future work will also include statistical significance analysis over repeated experimental runs to validate the consistency and reliability of the reported performance improvements.

Further refinement of preprocessing techniques and exploration of alternative deep-learning architectures could yield better performance. In addition, efforts to reduce the computational load of the model without sacrificing accuracy would make the system more accessible and practical for widespread use. Addressing these limitations will be crucial to improve the robustness and reliability of the model, making it a more effective tool for early detection of melanoma and improving clinical outcomes through timely interventions. Comparative evaluation with transformer-based models such as ViT, Swin, and DeiT will also be included in future experiments to explore their potential advantages in dermoscopic image analysis.

Data availability

The datasets generated and/or analyzed during this study are publicly accessible at the following links: Dataset 1 (Melanoma Cancer Image Dataset): https://www.kaggle.com/datasets/bhaveshmittal/melanoma-cancer-dataset. Dataset 2 (CNN for Melanoma Detection Data): https://data.mendeley.com/datasets/ggh6g39ps2/2

References

Inagaki, J., Rodriguez, V. & Bodey, G. P. Causes of death in cancer patients. Cancer 33, 568–573 (1974).

World Health Organization. Radiation: Ultraviolet (UV) radiation and skin cancer | how common is skin cancer (2023). Accessed: 2023-03-02.

Subramanian, R. R. et al. Skin cancer classification using convolutional neural networks. In 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), 13–19 (IEEE, 2021).

Piccialli, F., Di Somma, V., Giampaolo, F., Cuomo, S. & Fortino, G. A survey on deep learning in medicine: Why, how and when? Information Fusion 66, 111–137 (2021).

Gandhi, S. A. & Kampp, J. Skin cancer epidemiology, detection, and management. Medical Clinics 99, 1323–1335 (2015).

Damsky, W. & Bosenberg, M. Melanocytic nevi and melanoma: unraveling a complex relationship. Oncogene 36, 5771–5792 (2017).

American Cancer Society. Basal and squamous cell skin cancer causes, risk factors, and prevention (2022). Accessed: 2022-06-19.

Walters-Davies, R. Skin cancer: Types, diagnosis and prevention. Evaluation 14, 34 (2020).

Hodis, E. The somatic genetics of human melanoma. Ph.D. thesis, Harvard University (2018).

Feng, J., Isern, N. G., Burton, S. D. & Hu, J. Z. Studies of secondary melanoma on C57BL/6J mouse liver using 1H NMR metabolomics. Metabolites 3, 1011–1035 (2013).

Tahir, M. et al. DSCC_Net: multi-classification deep learning models for diagnosing of skin cancer using dermoscopic images. Cancers 15, 2179 (2023).

Hasan, M. R., Fatemi, M. I., Khan, M. M., Kaur, M. & Zaguia, A. Comparative analysis of skin cancer (benign vs. malignant) detection using convolutional neural networks. Journal of Healthcare Engineering 2021 (2021).

American Cancer Society. Key statistics for melanoma skin cancer (2024). Accessed: 2025-01-16.

AIM at Melanoma Foundation. Facts and statistics (2023). Retrieved from AIM at Melanoma Foundation.

Dermatology Times. Global deaths from melanoma predicted to increase 50% by 2040 (2024). Accessed: 2025-01-16.

Mohan, S. V. & Chang, A. L. S. Advanced basal cell carcinoma: epidemiology and therapeutic innovations. Current Dermatology Reports 3, 40–45 (2014).

Guy, G. P. Jr., Machlin, S. R., Ekwueme, D. U. & Yabroff, K. R. Prevalence and costs of skin cancer treatment in the US, 2002–2006 and 2007–2011. American Journal of Preventive Medicine 48, 183–187 (2015).

Bomm, L., Benez, M. D. V., Maceira, J. M. P., Succi, I. C. B. & Scotelaro, Md. F. G. Biopsy guided by dermoscopy in cutaneous pigmented lesion-case report. Anais Brasileiros de Dermatologia 88, 125–127 (2013).

Malik, H., Anees, T., Din, M. & Naeem, A. CDC_Net: Multi-classification convolutional neural network model for detection of COVID-19, pneumothorax, pneumonia, lung cancer, and tuberculosis using chest X-rays. Multimedia Tools and Applications 82, 13855–13880 (2023).

Gaur, L., Bhatia, U. & Bakshi, S. Cloud driven framework for skin cancer detection using deep CNN. In 2022 2nd International Conference on Innovative Practices in Technology and Management (ICIPTM), vol. 2, 460–464 (IEEE, 2022).

Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition, 1251–1258 (2017).

Abdollahi, B., Tomita, N. & Hassanpour, S. Data augmentation in training deep learning models for medical image analysis. Deep learners and deep learner descriptors for medical applications 167–180 (2020).

Adegun, A. A. & Viriri, S. FCN-based DenseNet framework for automated detection and classification of skin lesions in dermoscopy images. IEEE Access 8, 150377–150396 (2020).

Reshma, G. et al. Deep learning-based skin lesion diagnosis model using dermoscopic images. Intelligent Automation & Soft Computing 31 (2022).

Saravanan, S., Heshma, B., Shanofer, A. A. & Vanithamani, R. Skin cancer detection using dermoscope images. Materials Today: Proceedings 33, 4823–4827 (2020).

Ibraheem, M. R. & Elmogy, M. A non-invasive automatic skin cancer detection system for characterizing malignant melanoma from seborrheic keratosis. In 2020 2nd International Conference on Computer and Information Sciences (ICCIS), 1–5 (IEEE, 2020).

Manikandan, S. P., Karthikeyan, V. & Nalinashini, G. DermICNet: Efficient dermoscopic image classification network for automated skin cancer diagnosis. Revue d’Intelligence Artificielle 36, 801 (2022).

Anand, V. et al. Deep learning based automated diagnosis of skin diseases using dermoscopy. Computers, Materials & Continua 71, 3145–3160 (2022).

Abbas, Q., Ramzan, F. & Ghani, M. U. Acral melanoma detection using dermoscopic images and convolutional neural networks. Visual Computing for Industry, Biomedicine, and Art 4, 1–12 (2021).

Rizzi, M. & Guaragnella, C. A decision support system for melanoma diagnosis from dermoscopic images. Applied Sciences 12, 7007 (2022).

Sivasankari, M. K., Akash, C., Ganesh, M. D. & Sijin, R. A. CNN based skin cancer cell detection system from dermoscopic images. European Journal of Molecular & Clinical Medicine 8, 2021 (2021).

Khan, H., Yadav, A., Santiago, R. & Chaudhari, S. Automated non-invasive diagnosis of melanoma skin cancer using dermoscopic images. In ITM Web of Conferences, vol. 32, 03029 (EDP Sciences, 2020).

Pacal, I., Ozdemir, B., Zeynalov, J., Gasimov, H. & Pacal, N. A novel CNN-ViT-based deep learning model for early skin cancer diagnosis. Biomedical Signal Processing and Control 104, 107627 (2025).

Ozdemir, B. & Pacal, I. An innovative deep learning framework for skin cancer detection employing ConvNeXtV2 and focal self-attention mechanisms. Results in Engineering 25, 103692 (2025).

Ozdemir, B. & Pacal, I. A robust deep learning framework for multiclass skin cancer classification. Scientific Reports 15, 4938 (2025).

Ozdemir, B., Aslan, E. & Pacal, I. Attention enhanced InceptionNeXt based hybrid deep learning model for lung cancer detection. IEEE Access (2025).

İnce, S., Kunduracioglu, I., Bayram, B. & Pacal, I. U-Net-based models for precise brain stroke segmentation. Chaos Theory and Applications 7, 50–60. https://doi.org/10.51537/chaos.1605529 (2025).

Coşkun, D. et al. A comparative study of YOLO models and a transformer-based YOLOv5 model for mass detection in mammograms. Turkish Journal of Electrical Engineering and Computer Sciences 31, 1294–1313 (2023).

Nie, Y. et al. Recent advances in diagnosis of skin lesions using dermoscopic images based on deep learning. IEEE Access 10, 95716–95747 (2022).

Kudrin, K. G. et al. Early diagnosis of skin melanoma using several imaging systems. Optics and Spectroscopy 128, 824–834 (2020).

Viknesh, C. K., Kumar, P. N., Seetharaman, R. & Anitha, D. Detection and classification of melanoma skin cancer using image processing technique. Diagnostics 13, 3313 (2023).

Naeem, A., Anees, T., Fiza, M., Naqvi, R. A. & Lee, S.-W. SCDNet: a deep learning-based framework for the multiclassification of skin cancer using dermoscopy images. Sensors 22, 5652 (2022).

Jojoa Acosta, M. F., Caballero Tovar, L. Y., Garcia-Zapirain, M. B. & Percybrooks, W. S. Melanoma diagnosis using deep learning techniques on dermatoscopic images. BMC Medical Imaging 21, 1–11 (2021).

Zafar, K. et al. Skin lesion segmentation from dermoscopic images using convolutional neural network. Sensors 20, 1601 (2020).

Bakheet, S., Alsubai, S., El-Nagar, A. & Alqahtani, A. A multi-feature fusion framework for automatic skin cancer diagnostics. Diagnostics 13, 1474 (2023).

Cheong, K. H. et al. An automated skin melanoma detection system with melanoma-index based on entropy features. Biocybernetics and Biomedical Engineering 41, 997–1012 (2021).

Singh, L., Janghel, R. R. & Sahu, S. P. Designing a retrieval-based diagnostic aid using effective features to classify skin lesion in dermoscopic images. Procedia Computer Science 167, 2172–2180 (2020).

Iqbal, I., Younus, M., Walayat, K., Kakar, M. U. & Ma, J. Automated multi-class classification of skin lesions through deep convolutional neural network with dermoscopic images. Computerized Medical Imaging and Graphics 88, 101843 (2021).

Sies, K. et al. Past and present of computer-assisted dermoscopic diagnosis: performance of a conventional image analyser versus a convolutional neural network in a prospective data set of 1,981 skin lesions. European Journal of Cancer 135, 39–46 (2020).

Mittal, B. Melanoma cancer dataset (2020). Accessed: 2024-05-18.

Quishpe-Usca, A. et al. CNN for melanoma detection data. https://doi.org/10.17632/ggh6g39ps2.3 (2024). Version V3, Mendeley Data.

Hsiao, T.-Y., Chang, Y.-C., Chou, H.-H. & Chiu, C.-T. Filter-based deep-compression with global average pooling for convolutional networks. Journal of Systems Architecture 95, 9–18 (2019).

Bjorck, N., Gomes, C. P., Selman, B. & Weinberger, K. Q. Understanding batch normalization. Advances in Neural Information Processing Systems 31 (2018).

Murugan, P. & Durairaj, S. Regularization and optimization strategies in deep convolutional neural network. arXiv preprint arXiv:1712.04711 (2017).

Ramachandran, P., Zoph, B. & Le, Q. V. Searching for activation functions. arXiv preprint arXiv:1710.05941 (2017).

Wu, L. et al. R-Drop: Regularized dropout for neural networks. Advances in Neural Information Processing Systems 34, 10890–10905 (2021).

Sajjadi, M. S., Bachem, O., Lucic, M., Bousquet, O. & Gelly, S. Assessing generative models via precision and recall. Advances in Neural Information Processing Systems 31 (2018).

Kynkäänniemi, T., Karras, T., Laine, S., Lehtinen, J. & Aila, T. Improved precision and recall metric for assessing generative models. Advances in Neural Information Processing Systems 32 (2019).

Yacouby, R. & Axman, D. Probabilistic extension of precision, recall, and F1 score for more thorough evaluation of classification models. In Proceedings of the First Workshop on Evaluation and Comparison of NLP Systems, 79–91 (2020).

Ardila, D. et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nature Medicine 25, 954–961 (2019).

Hauser, K. et al. Explainable artificial intelligence in skin cancer recognition: A systematic review. European Journal of Cancer 167, 54–69 (2022).

Selvaraju, R. R. et al. Grad-CAM: visual explanations from deep networks via gradient-based localization. International Journal of Computer Vision 128, 336–359 (2020).

Gomez, T., Fréour, T. & Mouchère, H. Metrics for saliency map evaluation of deep learning explanation methods. In International Conference on Pattern Recognition and Artificial Intelligence, 84–95 (Springer, 2022).

Chanda, T. et al. Dermatologist-like explainable AI enhances melanoma diagnosis accuracy: eye-tracking study. Nature Communications 16, 1–10 (2025).

Acknowledgements

The authors extend their appreciation to King Saud University for funding this research through the Ongoing Research Funding Program (ORF-2025-1027), King Saud University, Riyadh, Saudi Arabia.

Funding

This research is funded by the Ongoing Research Funding Program (ORF-2025-1027), King Saud University, Riyadh, Saudi Arabia.

Author information

Contributions

M.A.A. Mahmud conceptualized and investigated this study, designed and implemented the methodology, collected and curated the data, and prepared the manuscript. S. Afrin analyzed, collected, and curated the data, validated the findings, provided resources, and reviewed the manuscript. M.F. Mridha contributed to the development of the ideas and supervised and administered the research. M. Safran and S. Alfarhood validated the findings, reviewed and edited the manuscript, and acquired funding. D. Che reviewed the manuscript and validated the findings.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical Statement

This study was conducted in full compliance with all applicable ethical standards. Since the research did not involve any direct human or animal subjects, ethical approval was not required. The dermoscopic image datasets used in this study were obtained from publicly available sources.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Mahmud, M.A.A., Afrin, S., Mridha, M.F. et al. Explainable deep learning approaches for high precision early melanoma detection using dermoscopic images. Sci Rep 15, 24533 (2025). https://doi.org/10.1038/s41598-025-09938-4

DOI: https://doi.org/10.1038/s41598-025-09938-4
