Abstract

Background

Cerebral-cardiac syndrome (CCS) is a severe cardiac complication following acute ischemic stroke, often associated with adverse outcomes. This study developed and validated a machine learning (ML) model to predict CCS using clinical, laboratory, and pre-extracted imaging features. A retrospective cohort of 511 post-stroke patients was analyzed. Data on demographics, laboratory results, imaging findings, and medications were collected. CCS diagnosis was based on cardiac dysfunction occurring after stroke, excluding pre-existing cardiac diseases. Five machine learning models, including Logistic Regression, Random Forest, Support Vector Machine (SVM), XGBoost, and Deep Neural Network, were trained on 80% of the data and tested on the remaining 20%. Discrimination was assessed by AUC (95% CI), calibration by Hosmer–Lemeshow test and Brier score, and thresholds by Youden’s index. Model interpretability was evaluated using SHAP. On the test set, XGBoost achieved the highest discrimination (AUC 0.879; 95% CI 0.807–0.942), accuracy 0.825, precision 0.844, recall 0.675, and F1 score 0.750. Random forest followed closely (AUC 0.866; accuracy 0.845; precision 0.962; recall 0.625; F1 0.758). SVM and logistic regression yielded AUCs of 0.853 and 0.818, respectively. Calibration was optimal for SVM (HL p > 0.05; Brier 0.126) and random forest (HL p > 0.05; Brier 0.131). SHAP analysis identified D-dimer, ACEI/ARB use, HbA1c, C-reactive protein, and prothrombin time as top predictors. ML-based models accurately predict early CCS in ischemic stroke patients. XGBoost offers superior discrimination, while SVM and random forest demonstrate better calibration. Incorporation of these models into clinical workflows may enhance risk stratification and guide targeted preventive strategies.

Similar content being viewed by others

Introduction

Acute ischemic stroke (AIS) is a major global health concern, ranking among the leading causes of death and disability worldwide1. While the primary focus of stroke management has traditionally been on restoring cerebral perfusion and preventing neurological damage, the systemic complications of AIS, particularly those affecting the cardiovascular system, have garnered increasing attention in recent years2. Cerebral-cardiac syndrome (CCS), a severe and multifaceted complication of AIS, exemplifies the intricate interplay between the brain and the heart3,4. CCS encompasses a spectrum of cardiac dysfunctions, including arrhythmias, myocardial injury, and structural abnormalities, which collectively contribute to poor cardiovascular outcomes and increased mortality3,4. The pathophysiology of CCS is complex, involving excessive activation of the sympathetic nervous system, systemic inflammation, catecholamine surges, and endothelial damage, all of which exacerbate myocardial toxicity and impair cardiac function5.

Despite advances in understanding the mechanisms underlying CCS, its early detection and prediction remain significant clinical challenges6. The multifactorial nature of CCS, coupled with the variability in its clinical presentation, complicates risk stratification and timely intervention. Current diagnostic approaches rely heavily on clinical observation and basic biomarkers, which often fail to capture the dynamic and interconnected processes driving CCS3,7. This diagnostic gap underscores the urgent need for innovative tools that can integrate diverse data sources and provide accurate, real-time predictions of CCS risk.

In recent years, machine learning (ML) has emerged as a transformative technology in healthcare, offering powerful tools for analyzing complex datasets and uncovering patterns that elude traditional statistical methods8. ML algorithms have demonstrated remarkable success in predictive analytics, particularly in fields such as cardiology and neurology9,10. By integrating multidimensional data, including clinical, imaging, and biomarker information, ML models can identify subtle correlations and interactions, enabling the development of personalized risk prediction tools9,10,11,12,13. In the context of CCS, ML holds the potential to revolutionize risk assessment by integrating diverse data sources to predict the likelihood of cardiac complications post-stroke with high accuracy and reliability. However, the application of ML in CCS prediction is still in its infancy, with limited studies exploring its feasibility and effectiveness.

In this study, we aimed to develop and validate an ML-based predictive model for CCS using a comprehensive dataset, integrating SHAP analysis to elucidate the most significant predictors of CCS and ensure model transparency. The findings of this study provide a clinically applicable framework for early risk stratification, which could guide timely interventions and optimize the management of post-stroke patients at risk for CCS.

Methods

Study design and data sources

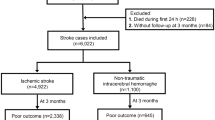

This retrospective cohort study analyzed data from 511 patients admitted to The Affiliated Hospital of Hangzhou Normal University between March 1, 2020 and March 15, 2023. Patient records were retrieved from the hospital’s electronic health system. Ethical approval was obtained from the institutional review board of The Affiliated Hospital of Hangzhou Normal University, and all procedures were conducted in compliance with the Declaration of Helsinki. Given the retrospective nature of the study, the requirement for informed consent was waived by the institutional review board. Patient data were anonymized to ensure confidentiality and used solely for research purposes.

Participants and setting

Inclusion criteria

Adults (age > 18 years) with confirmed AIS as diagnosed by CT or MRI.

Exclusion criteria

Pre-existing cardiac diseases (e.g., prior myocardial infarction, symptomatic heart failure [LVEF < 50%], known arrhythmias, moderate-to-severe valvular disease, previous coronary revascularization, cardiomyopathies, congenital heart defects), thrombolysis or thrombectomy recipients, malignancy, severe hepatic, renal, or pulmonary disease, and cases with > 30% missing data.

Patients were categorized into CCS and non-CCS groups based on new-onset cardiac dysfunction within 72 h post-stroke, excluding those with prior cardiac conditions.

Outcome definition

The primary outcome was occurrence of cerebral-cardiac syndrome (CCS), defined as development of cardiac dysfunction within 72 h of stroke onset, identified by one or more of: elevated troponin levels, ECG or Holter-detected arrhythmias, LVEF < 50% on echocardiography, ST-segment changes, QT prolongation, or T-wave inversion. Outcome assessment was blinded to predictor values. Outcome assessments were performed by two board-certified cardiologists blinded to predictor data; discrepancies were resolved by consensus.

Predictors

Candidate predictors included demographic variables (age, sex), stroke severity (NIHSS), vascular imaging (carotid stenosis grade), laboratory biomarkers (e.g., D-dimer, CRP, HbA1c, prothrombin time), medication use (e.g., ACEI/ARB, statins), vital signs, and pre-extracted imaging features. All predictors were defined and measured at hospital admission. Nominal variables were one-hot encoded; ordinal predictors were mapped to integer codes.

Sample size and missing data

The full cohort comprised 511 patients with 178 CCS events (34.8%). Cases with > 30% missing entries were excluded. Continuous missing values were imputed by median and categorical by mode; remaining data completeness permitted robust model training without additional omission.

Data preprocessing and partitioning

Continuous features were standardized to zero mean and unit variance using scikit-learn’s StandardScaler. The dataset was split via stratified sampling into training (80%, n = 408) and testing (20%, n = 103) sets, preserving CCS prevalence.

Model development and internal validation

Five classification algorithms (Logistic Regression, Random Forest, Support Vector Machine, XGBoost, Deep Neural Network) were implemented using default hyperparameters. Hyperparameter tuning employed stratified 5-fold GridSearchCV optimizing ROCAUC on the training set; no performance gain justified retaining defaults. Internal validation comprised 5-fold cross-validation, reporting mean AUC and accuracy across folds.

Performance evaluation

Discrimination was assessed by ROCAUC with 95% CIs estimated via bootstrapping (n = 1000). Classification thresholds were chosen by maximizing Youden’s index to derive accuracy, precision, recall, and F1-score on the held-out test set. Calibration was evaluated using calibration plots, Hosmer-Lemeshow test, Brier score, and Expected Calibration Error (ECE). Clinical utility was examined through decision curve analysis comparing net benefit against “treat all” and “treat none” strategies. Model explainability was quantified using SHAP to rank feature importance and visualize effect directionality.

Statistical analyses were conducted using Python (version 3.9). Continuous variables were compared using Student’s t-test or the Mann-Whitney U test, while categorical variables were analyzed using the chi-square or Fisher’s exact test. A p-value of < 0.05 was considered statistically significant.

Results

Cohort characteristics

Out of 571 screened records, 511 met eligibility criteria; 178 (34.8%) developed CCS. Baseline comparisons between CCS and non-CCS groups showed significant differences in age (68.8 ± 7.9 vs. 66.8 ± 8.2 years, p = 0.011), NIHSS (median 11 vs. 9, p < 0.001), D-dimer (median 1.17 vs. 0.79, p < 0.001), CRP, HbA1c, and severe carotid stenosis (9.0% vs. 2.4%, p = 0.006) (Table 1).

Model development and cross-validation

During 5-fold cross-validation on the training set, SVM achieved the highest mean ROCAUC (0.799 ± 0.056) but lower accuracy (0.662 ± 0.004). Random Forest maximized accuracy (0.819 ± 0.018, AUC 0.794 ± 0.042). XGBoost and Logistic Regression balanced discrimination and accuracy (XGBoost: AUC 0.779 ± 0.042, accuracy 0.811 ± 0.018; Logistic Regression: AUC 0.785 ± 0.052, accuracy 0.792 ± 0.033). Deep Neural Network performance was moderate (AUC 0.759 ± 0.041, accuracy 0.755 ± 0.023). All AUC standard deviations were below 0.06, indicating stable discrimination performance across folds (Supplementary Table S1). Hyperparameter tuning did not enhance test-set AUC; default models were retained. Supplementary Table S2 details each model’s parameter settings and performance before and after tuning.

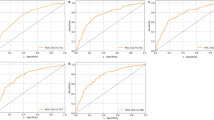

Test set performance

On the test cohort (n = 103), XGBoost achieved the highest discrimination (AUC 0.879; 95% CI 0.807–0.942), with accuracy 0.825, precision 0.844, recall 0.675, and F1score 0.750. Random Forest followed (AUC 0.866, accuracy 0.845, precision 0.962, recall 0.625, F1score 0.758). SVM and Logistic Regression yielded AUCs of 0.853 and 0.818, respectively; Deep Neural Network trailed (AUC 0.817). Detailed metrics are summarized in Table 2 and ROC curves shown in Fig. 1.

Confusion matrices for each classifier further illustrate that XGBoost predicted 27 true positives, 58 true negatives, 5 false positives and 13 false negatives, with analogous breakdowns for Random Forest (25 TP, 62 TN, 1 FP, 15 FN), SVM (26 TP, 62 TN, 1 FP, 14 FN), Logistic Regression (27 TP, 55 TN, 8 FP, 13 FN) and Deep Neural Network (29 TP, 52 TN, 11 FP, 11 FN) (Fig. 2).

Calibration performance

Model calibration was assessed using the Hosmer-Lemeshow goodness-of-fit test and Brier scores across all five machine learning algorithms. The analysis revealed significant differences in calibration quality among models (Table 3). SVM demonstrated the best calibration performance with a Hosmer-Lemeshow p-value of 0.246 (p > 0.05, indicating good calibration) and the lowest Brier score of 0.126. Random Forest also showed acceptable calibration (HL p-value = 0.153, Brier score = 0.131).

In contrast, XGBoost exhibited poor calibration despite achieving the highest discrimination performance, with a Hosmer-Lemeshow p-value < 0.001 and Brier score of 0.141. Similarly, the Deep Neural Network showed severe miscalibration (HL p-value < 0.001, Brier score = 0.178), while Logistic Regression demonstrated moderate calibration issues (HL p-value = 0.001, Brier score = 0.148).

Calibration plots (Fig. 3) visually confirmed these findings, with SVM and Random Forest showing predicted probabilities closely aligned with observed frequencies across deciles, while XGBoost and Deep Neural Network showed systematic deviations from the diagonal line of perfect calibration.

Decision-curve analysis

In decision curve analysis (Fig. 4), all five models confer greater net benefit than either default strategy (“treat all” or “treat none”) across a wide range of clinically relevant threshold probabilities (0.1–0.8). Across the clinically relevant threshold range of 0.1–0.6, the SVM and RF models yielded the highest net benefit, consistently exceeding both “treat-all” and “treat-none” strategies. XGBoost provided marginally higher net benefit only at very low thresholds (< 0.15), reflecting its aggressive detection of true positives. Beyond 0.6, none of the models offered advantage over default strategies, indicating diminishing clinical utility at high decision thresholds.

Threshold optimization analysis

Because the dataset exhibited a moderate class imbalance (Non-CCS: CCS = 1.96 : 1; CCS prevalence = 33.8% in training and 38.8% in test data), six threshold-selection strategies were compared: the default cut-off of 0.50, a prevalence-based cut-off (0.338), Youden Index optimisation, F1-score maximisation, and cost-sensitive optimisation with false-negative penalties of 2 : 1 and 3 : 1.

The SVM model with a Youden-optimised threshold (0.576) delivered the most balanced overall performance (accuracy = 87.4%, sensitivity = 70.0%, specificity = 98.4%, F1-score = 0.812), outperforming all other model–strategy combinations. For high-sensitivity applications, XGBoost tuned with a 3 : 1 false-negative penalty reached 95.0% sensitivity at a threshold of 0.019, albeit with reduced specificity (52.4%) and accuracy (68.9%). Conversely, Random Forest retained the highest specificity (98.4%) at the default 0.50 threshold, making it preferable for confirmatory testing despite lower sensitivity (62.5%).

Across models, prevalence-based thresholds consistently increased sensitivity (≈ 5–10% points) relative to the default but did not maximise any single metric. Notably, the Youden and F1-maximising thresholds converged for SVM, Random Forest, and XGBoost, indicating stable optimisation despite the modest imbalance. (Supplementary Table S3)

Feature importance

Feature importance analysis using SHAP identified D-dimer as the most influential predictor, followed closely by ACEI/ARB usage, HbA1c, CRP, and prothrombin time. Additional significant contributors included age, NIHSS scores, and severe carotid artery stenosis. Figure 5A presents the SHAP feature importance plot, which ranks predictors based on their average impact on model output. The SHAP summary plot (Fig. 5B) further illustrates the distribution and directionality of these features, demonstrating how higher levels of D-dimer, CRP, and HbA1c, among others, are associated with increased CCS risk.

Discussion

Our study demonstrates that ML algorithms can effectively predict CCS in AIS patients, offering a novel approach to early risk stratification. Among the five ML models tested, XGBoost achieved the highest discrimination (AUC 0.879), while SVM (AUC 0.853) and random forest (AUC 0.866) exhibited superior calibration. This performance divergence highlights a common modeling challenge: balancing discrimination with calibration. In clinical practice, an optimal algorithm must align with the intended application—XGBoost may excel at identifying high-risk individuals, whereas SVM or random forest may provide more reliable absolute risk estimates for individualized decision-making.

Key predictors identified by SHAP analysis, including D-dimer, prothrombin time, ACEI/ARB usage, HbA1c, and CRP, collectively highlight the critical roles of coagulation, inflammation, glycemic control, and blood pressure regulation in the pathophysiology of cerebral-cardiac syndrome (CCS). Among these, Elevated D-dimer and prolonged prothrombin time, both key markers of coagulation dysfunction, reflect the hyperactivation of the coagulation and fibrinolytic systems frequently observed following acute ischemic events14,15. This state is often accompanied by systemic inflammation, endothelial dysfunction, and microvascular thrombosis, all of which are central to CCS development16,17. The inclusion of these coagulation markers as key predictors underscores their utility in capturing the complex interplay between thrombosis and cardiovascular dysfunction. Clinically, monitoring these markers in ischemic stroke patients could facilitate early identification of those at risk for CCS, enabling timely interventions such as anticoagulation therapy or enhanced cardiac monitoring18.

Interestingly, ACEI/ARB usage emerged as a significant predictor of CCS, which may appear counterintuitive given their well-established protective effects in cardiovascular diseases. However, this association likely reflects the presence of poorly controlled hypertension in CCS patients, as suggested by the higher baseline systolic and diastolic blood pressures observed in this group. Poor blood pressure control, despite ACEI/ARB therapy, may indicate treatment resistance or suboptimal adherence, would increase the risk of cardiovascular complications following AIS18,19. This finding highlights the critical importance of achieving optimal blood pressure management to mitigate the risk of cardiac complications in stroke patients.

Elevated HbA1c levels highlight the impact of poor glycemic control, which exacerbates oxidative stress, endothelial dysfunction, and systemic inflammation—key mechanisms contributing to CCS development16,17. Similarly, elevated CRP levels emphasize the role of systemic inflammation, which is heightened after AIS and is known to promote cardiac dysfunction16,17. These findings collectively illustrate how vascular risk factors, combined with the inflammatory and thrombotic responses to stroke, drive the development and progression of CCS. Carotid artery stenosis, particularly severe stenosis (70–99%), was also more prevalent in CCS patients, highlighting the role of systemic atherosclerosis and cerebrovascular pathology in CCS development20.

Our work builds on earlier ML research in stroke by shifting the focus from neurological to cardiac outcomes. Whereas past studies predominantly addressed complications such as hemorrhagic transformation or functional decline21,22 our models emphasize transparent, interpretable risk insights via SHAP, facilitating clinician adoption and seamless integration into electronic health record systems.

From a translational standpoint, ML-driven CCS risk scores could inform targeted surveillance and preventive strategies. For example, patients identified as high-risk by XGBoost could undergo early echocardiography, continuous electrocardiographic monitoring, or preemptive cardioprotective interventions (e.g., beta-blockers, tailored anticoagulation). Those deemed low-risk might avoid unnecessary resource utilization and potential treatment-related complications. Decision curve analysis confirmed that all models provided net benefit over treat-all or treat-none approaches across clinically relevant threshold probabilities (10–60%), with random forest and SVM offering particularly robust benefit in intermediate-risk ranges.

The findings of this study have important clinical implications. Early identification of CCS risk through machine learning can improve clinical decision-making by enabling proactive measures to prevent cardiac complications, reduce morbidity, and improve outcomes. Future research should focus on integrating machine learning models into clinical workflows and evaluating their impact on patient care in real-world settings. Additionally, incorporating longitudinal data and external validation cohorts would further strengthen the robustness and applicability of the predictive model.

Limitation

This study has several limitations. Its retrospective design and single-center data may limit generalizability, requiring prospective, multicenter validation. The exclusion of thrombolysis and thrombectomy patients restricts applicability to this subgroup. Potential biases in data collection and the moderate sample size raise concerns about overfitting, despite cross-validation efforts. The use of static variables overlooks dynamic changes that might influence CCS risk, and while SHAP analysis highlights feature importance, causal relationships remain unconfirmed. Individual diabetes medications were not modeled; instead, we used diabetes status and HbA₁c as proxies to preserve model simplicity given inconsistent prescription coding. Finally, challenges in integrating machine learning models into clinical workflows, including data standardization and clinician acceptance, warrant further investigation.

Conclusion

ML-based models accurately predict early CCS in ischemic stroke patients. XGBoost offers superior discrimination, while SVM and random forest demonstrate better calibration. Important predictors highlight the roles of coagulation, inflammation, and metabolic control. This study underscores the potential of ML models in enhancing clinical decision-making and risk stratification, ultimately improving patient outcomes.

Data availability

The study protocol, de-identified data and analysis code are available from the corresponding author on reasonable request.

Abbreviations

- ACEI/ARB:

-

Angiotensin-converting enzyme inhibitors/angiotensin receptor blockers

- AUC:

-

Area under the curve

- APTT:

-

Activated partial thromboplastin time

- BNP:

-

Brain natriuretic peptide

- BMI:

-

Body mass index

- BUN:

-

Blood urea nitrogen

- CCS:

-

Cerebral-cardiac syndrome

- CRP:

-

C-reactive protein

- CT:

-

Computed tomography

- DBP:

-

Diastolic blood pressure

- FIB:

-

Fibrinogen

- HbA1c:

-

Glycated hemoglobin

- HDL-C:

-

High-density lipoprotein cholesterol

- HL:

-

Hosmer-lemeshow

- LDL-C:

-

Low-density lipoprotein cholesterol

- LVEF:

-

Left ventricular ejection fraction

- ML:

-

Machine learning

- MLP:

-

Multi-layer perceptron

- MRI:

-

Magnetic resonance imaging

- NIHSS:

-

National Institutes of Health Stroke Scale

- NT-proBNP:

-

N-terminal pro-brain natriuretic peptide

- PT:

-

Prothrombin time

- ROC:

-

Receiver operating characteristic

- SBP:

-

Systolic blood pressure

- SHAP:

-

SHapley additive exPlanations

- SVM:

-

Support vector machine

- TC:

-

Total cholesterol

- TG:

-

Triglycerides

- TT:

-

Thrombin time

- WBC:

-

White blood cell count

- XGBoost:

-

Extreme gradient boosting

References

Robba, C., van Dijk, E. J. & van der Jagt, M. Acute ischaemic stroke and its challenges for the intensivist. Eur. Heart J. Acute Cardiovasc. Care. 11, 258–268 (2022).

Battaglini, D. et al. Brain–heart interaction after acute ischemic stroke. Crit. Care. 24, 163 (2020).

Wang, L. et al. Stroke–heart syndrome: Current progress and future outlook. J. Neurol. 271, 4813–4825 (2024).

Mitrică, M. et al. The hidden heart: Exploring cardiac damage post-stroke: A narrative review. Medicina (Kaunas) 60, 1699 (2024).

Wang, M. & Peng, Y. Advances in brain-heart syndrome: Attention to cardiac complications after ischemic stroke. Front Mol. Neurosci 15 (2022).

Wang, X. C. et al. Predictive factors for cerebrocardiac syndrome in patients with severe traumatic brain injury: A retrospective cohort study. Front Neurol 14 (2023).

Lian, H. et al. Early prediction of cerebral-cardiac syndrome after ischemic stroke: The PANSCAN scale. BMC Neurol. 20, 272 (2020).

Sidey-Gibbons, J. A. M. & Sidey-Gibbons, C. J. Machine learning in medicine: A practical introduction. BMC Med. Res. Methodol. 19, 64 (2019).

Bonkhoff, A. K. & Grefkes, C. Precision medicine in stroke: Towards personalized outcome predictions using artificial intelligence. Brain 145, 457–475 (2022).

Mathur, P., Srivastava, S., Xu, X. & Mehta, J. L. Artificial intelligence, machine learning, and cardiovascular disease. Clin. Med. Insights Cardiol. 14, 1179546820927404 (2020).

Vu, T. et al. Machine learning model for predicting coronary heart disease risk: Development and validation using insights from a Japanese population–based study. JMIR Cardio. 9, e68066 (2025).

Vu, T. et al. Prediction of depressive disorder using machine learning approaches: Findings from the NHANES. BMC Med. Inf. Decis. Mak. 25, 83 (2025).

Thanh, N. T., Luan, V. T., Viet, D. C., Tung, T. H. & Thien, V. A machine learning-based risk score for prediction of mechanical ventilation in children with dengue shock syndrome: A retrospective cohort study. PLoS One. 19, e0315281 (2024).

Barber, M., Langhorne, P., Rumley, A., Lowe, G. D. O. & Stott, D. J. Hemostatic function and progressing ischemic stroke. Stroke 35, 1421–1425 (2004).

Luan, X. et al. High levels of plasma fibrinogen and prothrombin time are related to post-stroke emotional impairment. Brain Res. 1748, 147017 (2020).

Dziedzic, T. Systemic inflammation as a therapeutic target in acute ischemic stroke. Expert Rev. Neurother. 15, 523–531 (2015).

Chen, Z. et al. Brain-heart interaction: Cardiac complications after stroke. Circ. Res. 121, 451–468 (2017).

Guidelines for the Early Management of Patients With Acute Ischemic Stroke. 2019 update to the 2018 guidelines for the early management of acute ischemic stroke: A guideline for healthcare professionals from the American heart association/American stroke association|stroke. https://www.ahajournals.org/doi/https://doi.org/10.1161/STR.0000000000000211

Bath, P. M. et al. Blood pressure management for ischemic stroke in the first 24 hours. Stroke 53, 1074–1084 (2022).

van Velzen, T. J., Kuhrij, L. S., Westendorp, W. F., van de Beek, D. & Nederkoorn, P. J. Prevalence, predictors and outcome of carotid stenosis: A sub study in the preventive antibiotics in stroke study (PASS). BMC Neurol. 21, 20 (2021).

Issaiy, M., Zarei, D., Kolahi, S. & Liebeskind, D. S. Machine learning and deep learning algorithms in stroke medicine: A systematic review of hemorrhagic transformation prediction models. J. Neurol. 272, 37 (2024).

Lee, R., Choi, H., Park, K. Y., Kim, J. M. & Seok, J. W. Prediction of post-stroke cognitive impairment using brain FDG PET: deep learning-based approach. Eur. J. Nucl. Med. Mol. Imaging. 49, 1254–1262 (2022).

Acknowledgements

None.

Funding

None.

Author information

Authors and Affiliations

Contributions

T.Z.: Conceptualization, Formal Analysis, Writing—Original Draft, Investigation; Z.H.: Methodology, Software, Formal Analysis, Visualization, Data Curation, Writing—Original Draft; L.Z.: Validation, Data Curation, Visualization, Investigation; Q.J.: Validation, Investigation, Software, Writing—Review and Editing; L.Y.: Conceptualization, Supervision, Writing—Review and Editing, Project Administration.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, T., Hao, Z., Jiang, Q. et al. Machine learning-based prediction model for post-stroke cerebral-cardiac syndrome: a risk stratification study. Sci Rep 15, 30657 (2025). https://doi.org/10.1038/s41598-025-10104-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-10104-z