Abstract

With the growth of social media, people are sharing more content than ever, including X posts that reflect a variety of emotions and opinions. AI-generated synthetic text, known as deepfake text, is used to imitate human writing to disseminate misleading information and fake news. However, as deepfake technology continues to grow, it becomes harder to accurately understand people’s opinions on deepfake posts. Existing sentiment analysis algorithms frequently fail to capture the domain-specific, misleading, and context-sensitive characteristics of deepfake-related content. This study proposes a hybrid deep learning (DL) approach and novel transfer learning (TL)-based feature extraction approach for deepfake posts’ sentiment analysis. The transfer learning-based approach combines the strengths of the hybrid DL technique to capture global and local contextual information. In this study, we compare the proposed approach with a range of machine learning algorithms, as well as, DL techniques for validation. Different feature extraction techniques, such as a bag of words (BOW), term frequency-inverse document frequency (TF-IDF), word embedding features, and novel TL features that combine the LSTM and DT, are used to build the models. The ML models are fine-tuned with extensive hyperparameter tuning to enhance performance and efficiency. The sentiment analysis performance of each applied method is validated using the k-fold cross-validation. The experimental results indicate that the proposed LGR (LSTM+GRU+RNN) approach with novel TL features performs well with a 99% accuracy. The proposed approach helps detect and prevent the spread of deepfake content, keeping people and organizations safe from its negative effects. This study covers a crucial gap in evaluating deepfake-specific social media sentiment by providing a comprehensive, scalable mechanism for monitoring and reducing the effect of fake content online.

Similar content being viewed by others

Introduction

The digital era has introduced novel innovations that have changed how information is generated, distributed, and understood. This technology can realistically change or synthesize audiovisual content, which has raised concerns about spreading misinformation, manipulating public opinion, and damaging digital media trust. Social media platforms bring people together, making it easy to share thoughts and opinions through photos, videos, audio, and text1. With the rise of deepfake content on X (Twitter), it is significant to grasp the public’s feelings towards these manipulations. A social media bot manages accounts and interacts with content, including sharing and reacting to likely real or fake posts2. Various software can customize data to user requirements, such as editing videos with selected faces, swapping voices, and generating deepfake text. Such manipulations can create more significant problems on social media, such as financial losses and stress3.

Sentiment analysis is a technique that looks at how people feel about things on X (Twitter) by analyzing their posts for emotions. It focuses on figuring out the opinions, evaluations, and attitudes people express through their posts4. This opinion significantly influenced people’s perceptions of specific things, products, ideas, and personalities5. As social media continues to grow, people can freely share their thoughts and opinions on topics like deepfake technology. Deepfake text is used with evil intent to spread fake information, making it difficult to verify its accuracy before spreading. Sentiment has a valuable effect on perceiving people’s opinions on X (Twitter), whether positive or negative6. The positive sentiment in the deepfake text represents the positive ways that increase the probability of the text being shared and believed. In contrast, the negative sentiment may spread the text with doubts. Furthermore, if we consider the perception of deepfake used to manipulate people’s sentiments to spread misinformation, it may have a strong negative impact.

Recent studies have focused on integrating deepfake technology, sentiment analysis, and the effects of social media. Bhukya et al.7 propose a deep learning (DL) model for detecting sarcasm, which faces limitations related to data diversity, model generalizability, and the inherent complexity of accurately identifying sarcasm across varied contexts. The sentiment analysis of ChatGPT tweets using Wolfram Mathematica is performed in8. It is limited by dataset bias, the challenge of effectively reading sentiments in social media content, and the ability to generalize transfer learning (TL) approaches. The study9 presents a TL fusion approach to improve sentiment analysis, especially when there is limited labeled data available. The authors highlighted issues such as computational complexity, overfitting, and knowledge transfer across various domains. The issues of detecting hate speech in languages with limited resources by employing DL are addressed in10.

The authors encountered challenges such as a lack of data, biases in annotations, and the ability of models to work well across different languages and cultures. Despite major advances by existing studies in sentiment analysis and deepfake detection, they are facing major challenges11. Many existing studies rely on domain-specific datasets and classical models that do not accurately capture the rapidly changing nature of deepfake content. Many researchers work on transformer-based analysis using bidirectional encoder representations from transformers (BERT) and robustly optimized BERT approach (RoBERTa), which can effectively capture the sentiments present in the text. The study12 introduced MisROBÆRTa transformer-based model for detecting misinformation. This suggests the hybrid models based on transformers can handle complexity in fake content, but increases computational cost. The study13 performs a comparative analysis of different machine learning and transformer-based related content for text classification.

The audiovisual fake content is detected by developing a Swiss transformer-based network14. The results are tested on five different datasets, demonstrating better performance. While these investigations signify substantial advancement, they also expose crucial constraints in the existing research, like static datasets that fail to extract public sentiments. Current models frequently encounter challenges in handling the contextual and semantic complexities of social media language. These limitations highlight the need for a unique, effective, and flexible sentiment analysis framework that is customized to distinct language and semantic patterns related to deepfake social media postings.

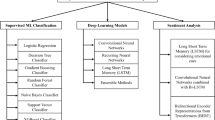

This study aims to create a new sentiment analysis framework that overcomes these limitations by utilizing advanced TL-based word embedding and a hybrid model that integrates gated recurrent unit (GRU) with long short-term memory (LSTM) and recurrent neural network (RNN) architectures. Several machine learning (ML) and deep learning (DL) algorithms, including decision trees (DT), support vector machines (SVM), K nearest neighbor classifiers (KNC), logistic regression (LR), LSTM, GRU, RNN, and TL, are applied to perform sentiment analysis on deepfake posts. The proposed hybrid DL model, combined with a unique word embedding-based TL technique, aims to enhance the model’s accuracy and performance in accurately classifying opinions expressed in tweets about deepfake technology into positive, negative, and neutral classes. This research aims to improve the accuracy and depth of our understanding of public opinion towards deepfakes on social media by using dynamic data-gathering methods and continuously adapting the model to incorporate new information. This research offers the following key contributions:

-

This research presents a novel X (Twitter) dataset scraped using different keywords over the last six years via the Python library SNScrape. The dataset is preprocessed to perform experiments, while exploratory data analysis is conducted to gain deeper insights into deepfakes. The dataset is labeled using the TextBlob lexicon-based sentiment analysis technique, which assigns multiclass labels.

-

A novel word embedding-based TL technique is presented that integrates LSTM with DT, which effectively identifies people’s opinions on deepfake technology.

-

This research introduces a novel LGR technique to analyze people’s sentiments toward deepfake technology. The approach combines DL models like LSTM, GRU, and RNN. Additionally, various ML algorithms, including DT, SVM, KNC, and LR, along with DL models such as LSTM, GRU, and RNN, are used for evaluation.

-

The evaluation parameters accuracy, precision, recall, F1-score, geometric mean, Cohen Kappa score, receive operating curve (ROC) accuracy score, and Brier score are used for evaluation. The comparison is performed with many other state-of-the-art techniques. All models used in the study are fine-tuned for hyperparameter tuning to enhance their performance and effectiveness precisely. The k-fold cross-validation is used to verify how well the models perform.

The novelty of the proposed approach lies in the structured integration of three models in a specific sequence that enhances temporal learning and improves accuracy. In the proposed architecture, the sequence first passes through an LSTM layer that handles long-term dependencies. The output is then processed by a GRU layer which improves efficiency, and finally, the RNN layer captures short-term dependencies and local sequential patterns. This layering mechanism is designed to maximize performance on complex, real-world text data and is not commonly employed in existing hybrid models. Additionally, the proposed architecture is applied to the specific domain of deepfake-related tweet sentiment analysis, which remains underexplored.

Section “Literature review” discusses the literature review on deepfake tweet sentiment analysis. Section “Methodology” discusses the methodology, including the dataset, data noise removal methods, ML, DL, and embedding-based transfer learning methods. Section “Results” represents the results and discussion. Section “Conclusion and future work” includes the conclusion and future research directions.

Literature review

In recent years, the advancement of deepfake technology has presented many modifications and challenges in the realm of sentiment analysis15. The comparative analysis challenges and constraints encountered in this domain are demonstrated in Table 1. The public opinions on deepfake tweets using a dataset from X (Twitter) analyzed by16. The authors employed BOW and TF-IDF for getting features and ML and DL models, including extra tree classifier (ETC), gradient boosting machine (GBM), SVM, Gaussian Naive Bayes (GNB), adaptive boosting algorithm (ADA), LSTM, GRU, bidirectional LSTM (BiLSTM), and convolutional neural network (CNN) + LSTM. The BiLSTM model outperformed others with an accuracy of 92%, highlighting the growing use of these techniques in sentiment analysis. This research used the TweepFake dataset, which includes posts from both bots and humans. Features were extracted using BoW and TF-IDF techniques. It utilized various deep learning techniques, including LSTM, RNN, and GPT-2, to analyze results, with the RoBERTa-based technique outperforming others by achieving a 90% accuracy score6.

The Fake-NewsNet dataset detects fake news in which SVM achieves the highest performance with 93% accuracy, but the Naive Bayes (NB) and LSTM did not perform well17. Thuseethan et al.18 utilized DL methods to evaluate attitudes from text and image data collected from the web, adopting a multimodal strategy to improve analysis precision and comprehensiveness. Deepfake posts analysis is performed on Russia and Ukraine war posts on X (Twitter)19. The study assesses various ML methods for classifying text created by humans and text provided by ChatGPT, achieving 79% accuracy with a transformer-based model on datasets generated by humans and ChatGPT queries. The efficacy of the model is limited in identifying the emotions present in the text20.

DL approaches are crucial for detecting deepfake content21. The publicly available image dataset from Kaggle is used to perform classification21. The proposed hybrid technique VGG16 CNN performs well by achieving a 94% accuracy score. Sentiment analysis on COVID-19 Arabian tweets by utilizing a dataset from the cities of Riyadh, Jeddah, and Dammam in Saudi Arabia, is performed in22. Many DL techniques, BiLSTM and CNN, are used, but the comparison results present that CNN outperforms with 93% accuracy for sentiment analysis of Arabic tweets. The generative adversarial networks GANBOT framework for detecting social bots is proposed in23. The proposed GANBOT-based technique is considered the best, with 95% accuracy compared to the previous contextual LSTM technique.

Understanding the differences between DL and ML techniques is key to accurately identifying fake and real content. The comparison of the closeness of ChatGPT to human experts is performed in24. This proposes the Human ChatGPT Comparison Corpus (HC3) dataset, which is based on ChatGPT response gap analysis by human experts and future directions by large language models (LLMs). The results demonstrate that RoBERTa with the LR model shows the most promising results with a 94% accuracy score. The English posts from the pan-competition base dataset are used to perform detection between humans and bots25. The BERT model shows an 83% accuracy score compared to other applied techniques.

Fake news is spread by social bots on social media platforms. The 30,000 posts from the PAN-20 dataset are analyzed in26, and the BiLSTM technique outperforms all other applied techniques. The DL and lexicon-based methods to analyze sentiments in COVID-19 tweets are employed in27. This covers data collection and model validation and addresses accuracy problems. The study points out challenges in deciphering confusing information and potential biases in the models caused by the dataset’s peculiarities. The GRU technique achieves a 93% accuracy on the COVID-19 dataset. The six publicly available datasets are used for sentiment analysis of tweets28. The BERT model is employed with a combination of deep learning techniques like LSTM, RNN, and CNN. This combination reveals 93% accuracy scores and other metrics also get achievable scores.

The lexicon-based analysis for web spam detection uses two datasets: one from news articles obtained using a web scrapper and the other from Kaggle29. Different ML techniques, like NB and RF, are used. The hybrid RCNN performs well by achieving 96% and 86% with web scrapped and Kaggle datasets, respectively. Sentiment analysis on various product reviews is performed, where the technique, LeBERT, gets an 88% score30. Detection of deepfake content on social media using GPT-2 and Amazon reviews is also carried out. The generative model GPT-2 creates lengthy fake stories, which creates uncertainty in real-world scenarios involving multiple generative architectures31.

A 3D convolutional LSTM model is proposed by32 for the detection of anomalies for surveillance purposes. Coarse-level feature fusion techniques are used to get better features to increase generalization and avoid vanishing gradients. Uses depth-wise feature stacking to reduce computational cost as compared to typical CNN designs. It includes micro autoencoder blocks for downsampling and feature concatenation blocks for temporal consistency during upsampling.33 proposed a light attention layered sequence model for detecting abnormalities in surveillance video. The other research34 uses a 2D convolutional layer on each video frame and passes this to an LSTM sequence model. The authors proposed an active learning approach in35 to improve data annotation. The approach is based on deep learning and can learn better representations from smaller datasets. An improved sample selection approach helps the model train better using only a smaller number of samples. Experiments on various datasets show improved classification results.

The study36 integrates emotion-cognitive reasoning with BERT for analyzing sentiments related to online opinions on emergencies. The model aims to provide auxiliary knowledge to enhance the performance of the BERT model by combining the emotion model with deep learning. In this regard, Ortony, Clore, and Collins model is used to build rules governing emotion-cognition. Experimental results indicate the best 1.74% improvement in the BERT model. Danyal et al. opted for a hybrid model for sentiment analysis in37. The model comprises BERT and XLNet models used to perform experiments on the IMDB dataset. Results show improved accuracy over traditional models.

Existing research in deepfake posts sentiment analysis includes different methods in which the feature-based method is employed38 and the graph-based method is used39. The survey conducted by40 delves into the construction and detection of deepfakes, additionally pointing out the current limits in this field. However, these studies concentrated on new long stories, raising concerns about their relevance to short social media communications. To address challenges in detecting people’s opinions about deepfake on social media, this study on deepfake tweets dataset aids research in identifying sentiments related to diverse deepfake posts text instances.

Methodology

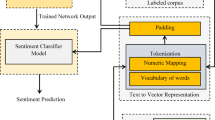

This section presents the dataset, methodology, and techniques applied for sentiment analysis of deepfake tweets. The workflow of the methodology is shown in Fig. 1. The designed methodology extracts a new dataset from X (Twitter) using the SNScrape Library. The dataset is cleaned and formatted using different text-processing techniques. The exploratory data analysis based on various graphs and charts is applied to determine deepfake-related insights. Textblob is used for sentiment labeling. Advanced text representation techniques, such as transfer features, are employed to translate tweet textual data into machine vectors. A range of ML and hybrid DL models are utilized for experiments. To evaluate performance, the 80% data is left for training while the 20% is used for testing, utilizing metrics such as accuracy, precision, recall, F1 score, geometric mean, Cohen’s kappa, ROC AUC, and Brier score.

Scrapped tweets dataset

A new dataset related to deepfake tweets is scrapped using the Python programming-based X (Twitter) API Snscrape44 to conduct experiments. The dataset comprises posts spread over the last nine years (2015 to 2023), capturing individuals’ thoughts, opinions, and sentiments regarding deepfake technology. Different keywords such as #deepfakes, #faceswape, #deepfake videos, and #deepfake news are used to scrape posts. These posts reflect user experiences as potential victims of deepfakes and express views on this technology’s positive and negative applications. The large dataset contains 105,375 rows and seven columns. The following information is presented in the dataset: ‘Date created’, ‘Tweet ID’, ‘Tweet Text’, ‘Number of Likes’, ‘Source of Tweets’, ‘Username’, and ‘Keywords’. The growing spread of deepfake content and its impact on everyday people have led to a significant increase in tweets about deepfake technology.

The class distribution of the labeled deepfake tweets sentiment dataset is shown in Fig. 2. The dataset is divided into three sentiment classes such as negative, positive, and neutral. This graphical analysis represents a significant imbalance between the classes, with the Neutral class having the largest percentage of samples. The model’s prediction may be affected by this imbalance, especially the fewer samples of the negative class. To tackle this, we incorporated a transfer features approach, a hybrid LGR approach, and hyperparameter tuning, and k-fold cross-validation is performed to validate results. This workflow guarantees resilience against skewed distributions.

Data noise removal

The dataset consists of a lot of unstructured and redundant data. The unnecessary data plays no role in training the models45. This type of data increases the training time and may impact the performance of models. The noise removal technique is essential to reduce the time required to train models and improve the prediction accuracy. Data preprocessing involves several steps including case conversion, removing usernames, hashtags, and punctuation, eliminating stop words, numbers, and null values, as well as tokenization and lemmatization.

ML models are case-sensitive, meaning they see words like “LEARNING” and “learning” as completely different, which can impact their performance. The first step is to convert the dataset into lowercase. In the next step of preprocessing, hashtags, and usernames are removed from the tweets. Additionally, punctuation symbols such as &, %, +, ##, and $ are also eliminated because they affect the model’s performance by creating confusion between actual text words and symbols. Null and numeric values are removed since they do not contribute to model predictions and only add unnecessary complexity to the feature vector. Stop words are removed because they are meaningless for model prediction; they only increase human readability. The final step is lemmatization, which simplifies words by converting them to their base form. For example, “better” becomes “good,” and “running” changes to “run.” The data is preprocessed and ready to use in the next step.

Sentiment labeling

The sentiment labeling techniques assign labels based on the emotions expressed in the text. These techniques used artificial intelligence models to classify the text as positive, negative, or neutral, providing a deep understanding of the written text. These methods use AI to analyze text and identify whether the sentiment is positive, negative, or neutral, providing deeper insight into its meaning. Lexicon-based techniques assign scores to the words based on predefined sentiment dictionaries.

TextBlob is a popular lexicon-based sentiment labeling library in Python used to process textual data46. TextBlob is reported to show better results in existing literature when used for labeling47. Although, it is not superior to human-based labeling, it can be used as an efficient tool where large datasets need to be labeled. TextBlob application programming interface (API) is used for performing Natural language processing (NLP) tasks48. This library is developed for text analysis, text mining, understanding the emotional tone of the text relying on pre-built sentiment dictionaries, and then assigning scores to the words. TextBlob has features like tokenization, POS tagging, n-gram, language detection and translation, WORDNET integration, and correction of spellings. In addition, TextBlob is used for the analysis of each sentence. At first, it inputs all the deepfake tweets and then categorizes these reviews into sentences. It calculates the polarity of the whole data by calculating how many times the positive and negative sentences come and comparing it with the total positive and negative reviews in this data. TextBlob offers a simple and convenient way to label sentiment in text using natural language processing.

Feature engineering techniques

Feature engineering is a critical process in NLP identifying and extracting the most meaningful information from raw text data. This process identifies related features, aiming to create a new one that finds meaningful patterns relevant to the dataset. ML models make predictions based on the features they are given. The more relevant the features, the better the model performs. Feature engineering is crucial in uncovering important patterns within the data, improving the model’s accuracy. In this study, we apply various feature engineering techniques to enhance our analysis.

-

BoW is a powerful technique that extracts the features of a text document in vector form, ignoring the structure of the words in the document4950. The BoW feature generates a set of unique words from the dataset and represents each text as a numerical vector by counting the frequency of each word. This technique simplifies the text data and converts it into numeric form so that machine learning models can understand it. Each word is given a value that shows how important it is in making accurate predictions. The BoW is a popular technique for sentiment analysis because it understands the presence of essential words in the document.

-

TF-IDF is used to determine the importance of a word within a document by analyzing its frequency across a large collection of texts. It assigns weights to words, giving more importance to those that appear frequently in a specific document but less often in others51. The weights indicate how relevant a term is to a document, the higher the weight, the more important the term, and vice versa. TF-IDF combines two key concepts: TF and IDF. The TF represents how to understand semantic relationships within the text better; this term is across the entire document set. TF-IDF represents meaningful information by highlighting the term with more specific and unique information concerning the whole corpus. This technique extracts the most valuable information used for text mining, sentiment analysis, and classification by understanding and ranking the significance of words in the entire corpus.

-

Word embedding is a technique that considers the surroundings of the words and their contextual relationships, allowing a deep understanding of each word in a word corpus. Word embedding grasps the word’s semantic relationship and contextual information by assigning each word a set of numeric values52. These words are transformed into a multi-dimensional space, where those with similar meanings are placed closer together, making it easier to identify relationships between them. The dimensions represent the features of the word. Machine learning models use these features to better understand semantic relationships within the text and improve performance.

Novel transfer features

A novel TL feature consisting of LSTM and DT (LSTM+DT) is proposed. The TL features used the pre-trained models to learn features from the large dataset. This technique reuses known features produced by the pre-trained models instead of training a model from scratch for each specific task53. This novel TL feature uses the learning of both models, LSTM and DT. The LSTM is the DL model used to get the features from the dataset.

Conversely, DT extracts the features at the end and combines the features produced by both models in a single feature space. The novel transfer feature technique extracts the most accurate features based on the pre-trained models. Transfer feature engineering techniques play an important role in NLP. They extract efficient features that are more effective for the training of models. This novel transfer feature technique improves the performance of various models. The visualization of novel transfer features related to deepfake tweets is presented in Fig. 3.

Artificial intelligence techniques

AI techniques are statistical techniques of diverse approaches and methodologies that enable machines to mimic human intelligence and perform cognitive tasks. AI techniques learn from data to form better decisions and keep improving over time. These techniques are applied to domains like NLP, robotics, computer vision, healthcare, finance, and autonomous systems. This study analyzes sentiment using different models54.

Support vector machines

SVM is an efficient model mainly used to solve classification and regression problems55. SVM is used for analyzing sentiments from textual data and classifying them based on their features. SVM works by mapping features into a high-dimensional space, making it easier to handle complex patterns and detect deepfake tweets effectively. This can draw a hyperplane called a decision plane, which helps classify the data based on their similarities. SVM is great at handling complex, high-dimensional data, this makes it a preferred option for text classification and sentiment analysis.

Decision tree

A DT is an ML model used to solve classification and regression problems56. DT can make decisions based on the input features. This model creates a tree-like structure by dividing the data recursively by making decisions at each node. The DT at each level represents an outcome, and picking the root note at each level is called attribute selection. The model makes decisions based on this selection. DTs rely on two popular methods for choosing the best attributes: the Gini index and information gain. The Gini index measures the impurities in the dataset while information gain qualifies the effectiveness of a feature, reducing uncertainty about class labels. DT is often used to classify textual data and make predictions.

K nearest neighbors classifier

KNC is another commonly used ML model for classification57. This non-parametric classifier works on the principles of proximity to allocate class labels to data on the majority class of K-neighbors in feature space. The K value represents the number of neighbors, a crucial parameter affecting model performance. KNC makes predictions based on the grouping of individual groups. KNC has been used in various domains such as text classification and image recognition.

Logistic regression

LR is a statistical ML model commonly used for classification tasks58. Instead of simply labeling data points, it calculates the probability of each one belonging to a particular class, making predictions based on likelihood rather than direct assignment. LR connects independent and dependent variables, using the sigmoid function to estimate probabilities. This model is trained by minimizing the logistic loss and improving classification efficiency. The model suits binary classification and multi-classification, such as spam detection and sentiment analysis.

Long short-term memory

LSTM is a well-known DL model widely used for NLP and text classification59. LSTM has a smart way of handling information by deciding what to keep and what to forget. It uses gates to manage the information flow, making it more effective at understanding patterns over time. The input gate determines what information to keep, the forget gate filters out what’s no longer needed, and the output gate determines the final result. LSTM selectively captures the most relevant information and makes it more effective by understanding the valuable patterns. The LSTM multi-layer architecture further enhances the capacity to model and learn historical features, significantly contributing to the success of different applications like sentiment analysis.

Gated recurrent unit

GRU is an advanced RNN technique that controls the flow of information in the network60. GRU consists of three gates: the current memory gate, the reset gate, and the update gate. These gates are used to capture and maintain information over sequence data effectively. The current memory gate helps the model store important information and update it as needed while working with sequential data. The reset gate maintains the information and checks what is deleted from the prior state. The update gate regulates the new information added to the current state. It updates and keeps the information in memory based on the current state. This can maintain and recall the most relevant information that depends on deepfake sentiment analysis. On the other hand, it vanishes all gradient problems. This makes GRU an efficient and well-suited model for deepfake tweet sentiment predictions.

Recurrent neural network

RNN is a model that deals with sequential data by using a feedback loop21. RNN operates sequentially and starts processing from the input state. It maintains the hidden state by capturing the information from the previous states, considering the entire sequence and its dependencies over time. This process updates the hidden state by combining new input with past information, allowing the model to retain context over time. RNNs are especially useful for understanding language, predicting trends, recognizing speech, and analyzing sentiments.

Proposed LGR approach

The novel LGR approach combines the strengths of DL models such as LSTM, GRU, and RNN presented in Fig. 4. This hybrid technique for sentiment analysis has many advantages by combining the long-term dependency-capturing capability of LSTM, the computational efficiency of GRU, and the sequential modeling of RNN. LGR technique enhances memory capacity, improves understanding of sentiment patterns and adaptive control over information, improves training efficiency, handles temporal dependencies, vanishes gradient problems, and improves accuracy. The novel LGR technique improves accuracy with the large dataset and addresses the complex temporal dependencies in the sentiment data. The flexibility of this novel LGR approach allows customization based on specific sentiment analysis tasks. At the same time, integrating different architectures helps reduce challenges like vanishing gradient problems and contributes to more effective training. The hybrid LGR technique is a powerful and versatile tool that combines the power of most vital algorithms and enhances the performance of deepfake tweet sentiment analysis.

Hyperparameter tuning

Hyperparameter tuning is critical in optimizing the parameters to improve the performance of ML models. This method does not learn from data but is set before training. The hyperparameter tuning process fine-tunes the model’s settings to boost performance and increase accuracy. Efficient hyperparameter tuning ensures that an ML model is fine-tuned to achieve its highest accuracy. An efficient hyperparameter improves the effectiveness of making predictions on unseen data, improving the accuracy of the applied techniques. This study implemented hyperparameter tuning to improve models’ performance, as shown in Table 2.

Evaluation metrics

Evaluation metrics are used to measure the performance of different ML and DL models. This process helps quantify how effectively a model performs concerning a specific task. The most commonly used evaluation metrics like accuracy, precision, recall, and F1 score are utilized. This study also utilizes other evaluation metrics like geometric mean, Cohen Kappa score, ROC AUC score, and Brier score to evaluate performance. In the classification context, four possible outcomes are:

-

True Positive (TP): This indicates instances where the model correctly identified the positive class.

-

True Negative (TN): indicates instances where the model correctly identified the negative class.

-

False Positive (FP): This refers to instances where the model mistakenly classifies a negative case as positive.

-

False Negative (FN): This occurs when the model wrongly classifies a positive case as negative.

The geometric mean (GM) measures the central tendency and calculates the root using the product of all the values. It evaluates the balance between correctly identified positive cases (sensitivity) and mistakenly identified negative cases (specificity). In classification, it is used to balance the assessment of imbalanced data.

Cohen Kappa score is a statistical measure that supports the agreement between the actual and predicted classifications while also analyzing the agreement expected by chance. This can inform us about the observed agreement (where the prediction and actual values match) and the expected agreement. By keeping this information, the Cohen kappa score compares the values and evaluates the reliability of the agreement in classification.

The ROC AUC score is a valuable performance evaluation metric for binary, multi-class, and multilabel classification. The ROC AUC curve determines the model’s effectiveness and how well it classifies different classes. A higher score means the model is predicting well, approaching a score of 1; conversely, a 0.5 score indicates the performance is not better than random chance, which shows poor performance.

Brier score is an evaluation measure mainly used to solve binary and multi-classification problems where the data is imbalanced. This can calculate the value between 0 and 1. The main difference between predicted and actual probability is that predicted values range between 0 and 1, while actual values can only be 0 or 1. The total refinement loss evaluates the accuracy of the prediction improvement, whereas the calibration loss evaluates the alignment between expected probability and actual results. The Brier score is an understanding of the model’s prediction and represents performance in a better way.

Results

This section describes the outcomes of several ML and DL models for deepfake posts’ sentiment analysis. All these models are tested on a large dataset that is scrapped from X (Twitter). The dataset labeling is performed using TextBlob into positive, negative, and neutral classes. Feature extracting techniques are employed, such as BoW, TF-IDF, word embedding, and a novel TL feature extraction. The performance of diverse ML and DL models is evaluated using a range of metrics. The k-fold cross-validation is also performed to verify the results of different models. All the models are fine-tuned using hyperparameter tuning.

Experimental design

The experimental design includes the implementation of models using Python programming language with libraries including NLTK, TextBlob, Sklearn, Keras, Pandas, Numpy, TensorFlow, Matplotlib, and Seaborn, among others. Experiments are conducted using the Google Colab platform. Table 3 describes the environment used to conduct the experiments.

Outcomes using BOW features

The performance comparison of different ML models with BoW features is represented in Table 4. The results represent that LR performs remarkably better than other models with an 87% accuracy. This model also scores well for other parameters like precision, recall, F1 score, geometric mean, Cohen Kappa score, ROC AUC score, and Brier score because LR can quickly deal with high dimensional data and prevent overfitting. The DT and KNC models’ performance is poorer than the LR model with a 78% and 64% accuracy, respectively. The SVM performs poorly with a 43% accuracy due to its computational complexity and data sensitivity issues.

Figure 5 shows the confusion matrix of different models like SVM, DT, KNC, and LR. The visual representation compares the performance of all these models using BoW features. The confusion matrix presents the correct and wrong predictions for these models. It shows that the LR gets the highest number of correct predictions, with 18,401 correct predictions and 2670 wrong predictions from a total of 21,071 predictions. The performance of DT is second to the LR model, with 16,457 correct predictions. On the other hand, the performance of SVM is the worst, with 11,947 wrong predictions and only 9124 predictions are correct. This comparison emphasizes the variations in the predictive accuracy of different models.

The chart given in Fig. 6 illustrates the comparison of the performance of the ML model applied using the BoW feature. The graphical analysis demonstrates that LR and DT perform exceptionally well when used with the BoW features. Meanwhile, the KNC and SVM did not perform well and showed poor performance.

Outcomes with TF-IDF features

Table 5 presents the performance comparison of different ML models using the TF-IDF feature. Outcomes represent that the LR model performs better with an 81% accuracy score, while the DT performs marginally lower with an 80% accuracy. The KNC model shows poor results with 65% accuracy. On the other hand, the SVM model achieves a phenomenal 48% accuracy, showing minimal improvement compared to its performance with the BoW features.

The visual comparison in terms of the confusion matrix for different ML models using the TF-IDF feature is presented in Fig. 7. The confusion matrix demonstrates the correct and wrong predictions for each model. The results show that LR shows the highest number of correct predictions with 17,220 correct predictions and 3851 predictions are wrong. On the other hand, the performance of SVM is not very good, with the highest wrong predictions of 10,749, and the lowest correct predictions of 10,322 only. The confusion matrix shows that the LR is the most effective model for predicting correct sentiments.

Figure 8 represents the graphical comparisons of applied ML models with TF-IDF features. The visual comparisons demonstrate that LR and DT achieve the best performance, but the KNC and SVM did not perform well for sentiment analysis from X (Twitter) posts. The performance is evaluated using accuracy, geometric means, Cohen Kappa score, ROC AUC score, brier score, precision, recall, and F1 score.

The bar chart in Fig. 9 represents the visual analysis of ML models’ performance using BoW and TF-IDF features. It can be observed that DT and KNC perform marginally well with TF-IDF features while SVM shows good improvement when used with TF-IDF features. On the other hand, LR models significantly well when used with BoW features.

Outcomes with word embedding features

DL models’ performance comparison using the word embedding feature is represented in Table 6. The comparison illustrates that the LSTM and GRU show the most promising results, with a 94% accuracy. These models effectively capture the long-term dependencies in textual sequences and resolve gradient problems. The results are also calculated for three individual classes: class 1 denotes positive, class 2 denotes negative, and class 3 denotes neutral, and the average score of these classes is calculated. The other performance evaluation metrics, such as precision, recall, F1 score, geometric mean, Cohen Kappa score, ROC AUC score, and Brier score, show better results for LSTM and GRU. The RNN works equally well with a 93% accuracy. The RNN model showed poor results due to its large dependencies and vanishing gradient problem.

The graphical analysis of the applied DL models with the word embedding feature is presented by a bar chart given in Fig. 10. The models worked well with word embedding features and achieved high scores. The performance of LSTM and GRU is the same, achieving a good performance in terms of accuracy, F1 score, etc. The performance of RNN is marginally lower than these models. The word embedding feature worked well with the DL model and showed promising results.

Results with novel transfer features

Table 7 uses the novel transfer feature engineering technique for the performance comparison of different ML models. This novel technique gets the best and combined features extracted by LSTM and DT models. The results show that LR shows outstanding performance by obtaining a 97% accuracy. These models also show the most promising performance against all three classes, as well as, their combined performance. The models also perform well concerning precision, recall, F1-score, geometric mean, Cohen-kappa-score, etc. The performance of DT, SVM, and KNC models is marginally lower than the LR model, with a 96% accuracy. These results show that the proposed novel transfer feature engineering techniques perform better with ML models compared to BoW and TF-IDF feature extraction techniques.

Figure 11 shows the performance of the applied ML model using novel transfer features. This suggests that the novel transfer features technique works well with applied ML techniques compared to other feature extraction techniques used in this study. This demonstrates that the LR model achieves the highest performance.

Achieving improved accuracy on slightly imbalanced datasets is possible using transfer features. Good results using the proposed approach can be attributed to several reasons. First, pre-trained models learn from large and diverse datasets and their representations generalize well, even in the case of minority classes61. Secondly, contrary to traditional models that overfit to the small samples, transfer features provide generalizable features that reduce the overfitting62. Thirdly, freezing layers and extracting features from a frozen backbone helps maintain feature quality. Further fine-tuning of these models on the local datasets helps maintain general feature quality even in the case of under presentation of a class. These aspects of transfer features help models get better performance which is the case in this study.

Results using proposed LGR model

The performance of the proposed hybrid LGR model (LSTM, GRU, RNN) is shown in Table 8. The results are obtained using the proposed LGR model combined with the transfer feature engineering technique. The results show that the proposed model performs better than the previous techniques discussed earlier by achieving a 99% accuracy. The other parameters like precision, recall, F1 score, Geometric mean, Cohen kappa score, ROC AUC score, and Brier scores are also better for the proposed model. The results illustrate that the proposed hybrid LGR model performs well, showing outstanding performance compared to other ML and DL models.

Cross-validation results

The comparative evaluation of the proposed approach is done using K-fold cross-validation in comparison to other ML models with BOW, TF-IDF, and novel transfer features. Table 9 shows the cross-validation results. The results indicate that the LR model consistently achieves better performance with all the applied features. On the other hand, the KNC and DT models demonstrate satisfactory performance and the SVM achieved poor performance when applied with the BOW feature. The comprehensive analysis generalized the performance of models with different features. The results demonstrated that the performance of the proposed novel transfer features is better compared to other features.

Computational cost analysis

The computational cost analysis of ML models provides insights into training time and is presented in Table 10. The computation cost analysis results reveal that the performance of the KNC model stands out for its efficiency with less training time using the TF-IDF feature. In contrast, the computational cost of the KNC model using the BoW feature is comparatively higher, suggesting a feature-dependent computational load. A cross-model comparison across different features finds the dominance of the proposed novel transfer feature engineering technique, demonstrating excellent computational efficiency compared to other methods. The results prove the potential of novel transfer feature engineering techniques to boost ML models’ performance by getting higher accuracy scores and less training time.

Figure 12 bar chart visualizes the computational cost analysis of ML techniques compared with BOW, TF-IDF, and novel transfer features. The graph demonstrates that the novel transfer feature works effectively well with less computational cost than other features. The performance with the BoW feature is very poor, requiring higher computational time. The results conclude that the proposed novel transfer feature technique performs well with higher performance scores and less computational cost.

Statistical significance analysis

Table 11 represents a statistical significance analysis conducted to validate the performance of the proposed LGR approach compared to the applied classical approaches like SVM, DT, KNC, LR, LSTM, GRU, and RNN. The analysis is conducted using a paired t-test, p-value, and the results regarding the null hypothesis. The null hypothesis assumes that there is no significant difference between the models being compared. The paired t-test examines two models’ outcomes over numerous runs, such as accuracy, precision, recall, and F1-score, to determine if the observed difference is due to chance or indicates a genuine performance gain. The outcomes show that all comparisons had very low p-values, which resulted in the rejection of the null hypothesis in each case. This demonstrates that the proposed model’s gains are statistically significant and not the result of random chance. Notably, comparisons with LSTM, GRU, and RNN yielded infinite negative t-statistics with p-values of 0.00, indicating that the hybrid LGR model is consistently and significantly superior. This investigation strengthens our belief in the reliability and robustness of our proposed architecture.

Error rate analysis

Table 12 represents the error rate analysis of machine learning models with TF-IDF, BOW, and proposed transfer feature extraction techniques. Models such as SVM, DT, KNC, and LR are used in this analysis. The comparisons show that the proposed transfer feature performs significantly well by reducing the error rate of applied models. The LR classifier reduced the error rate to 0.031 with the proposed transfer feature compared to TF-IDF (0.510) and BOW (0.566) error rates. Similarly, the SVM reduced the error to 0.032, which is much less as compared to classical feature extraction methods. These improvements were observed with KNC and DT. These results validate the high performance observed by our proposed transfer feature on different models with high accuracy and a lower error rate.

Ablation study analysis

The ablation study analysis63 is performed to evaluate the contribution of the proposed approach. For the ablation study, 20% data is used for experiments. The detailed analysis includes both ML and DL models evaluated with BOW, TF-IDF, and transfer features. Table 13 shows the comparisons of ML models like SVM, DT, KNC, and LR in terms of accuracy using all three features. Outcomes represent that the models using BOW and TF-IDF do not perform well, getting an accuracy of 43% to 87%.

Conversely, all models performed significantly better when we used transfer features; the accuracy ranged from 96% to 97%. The results of all the models with the transfer feature demonstrate the efficacy of the approach by extracting rich features that help in improving model performance. The outcomes of LSTM, GRU, and RNN with pre-trained word embedding and transfer features are shown in Table 14. Results represent the RNN performance improvement with transfer features from 93% to 95%, while the LSTM and GRU models increase from 94% to 96%.

These outcomes validate the design decision to employ transfer features, which improve generalization and semantic comprehension by combining contextual LST with DT. The ablation results show that the transfer feature technique greatly improves the performance of both deep learning and machine learning models. These results further demonstrate the supremacy of our integrated LGR architecture, which capitalizes on the combined strengths of LSTM, GRU, and RNN, and validates the contribution of each deep learning component.

State-of-the-art comparisons

Table 15 shows the comprehensive evaluation of the proposed approach with other state-of-the-art studies. We implemented and tested the proposed dataset with previous studies to ensure fair evaluation. The analysis is done with the previously published studies on the text classification domain on X (Twitter) from 2021 to 2024. The analysis demonstrates that the proposed approach outperforms by achieving a 99% accuracy score for deepfake posts sentiment analysis. This shows that the proposed approach is significantly better to achieve higher accuracy in determining the sentiments of posts on X (Twitter).

Practical deployment for deepfake content detection

Practical deployment of the proposed approach can help obtain the following objectives.

-

It can be used to track the emotional reactions of people toward fabricated speech on social media and other media platforms.

-

Escalation of harmful content can be carried out. For this purpose, harmful content can be detected and prioritized based on its degree of negativity.

-

The emotional response of people towards fake content can be monitored to understand the long-term psychological impact.

-

Warning systems can be defined to detect early signs of viral spread of deepfake content videos containing emotionally charged content.

Ethical considerations

This study understands ethical research practices, especially in collecting data from social media apps like Twitter67. The collected dataset preserves individual privacy and comprises tweets scraped from public sources and authenticated to protect individual privacy. The dataset does not target any personal information or any specific user at any stage of collection. The dataset is scraped against different keywords from publicly available tweets. This does not target any specific users, accounts, or other identifiers.

Furthermore, the goal of this study is to examine broad sentiment patterns related to deepfake-related content. The study complies with ethical standards for the use of digital data because all the data came from publicly available sources without any user interaction or private access. To further protect user privacy, all tweets were authenticated during preprocessing.

The collected data is anonymized and no disclosure of user identification is given in the manuscript. In addition, since the user can be identified by their geolocation, network data, etc., metadata such as geotags, user handles, etc. are not given in the manuscript.

In addition, data bias is also handled. Twitter users do not represent the global population and drawing general conclusions can be misleading. So, to mitigate data bias and representation, dataset limitations are pointed out here.

Limitations

Despite this study’s better performance and contributions, it is important to recognize the limitations to direct future developments:

-

The dataset used in this research is imbalanced, which can lead to biased learning that affects the model’s ability to perform sentiment classification.

-

The proposed hybrid LGR architecture performs well, but its high computational cost makes the model less effective for real-time applications.

-

The model has not been tested on multilingual datasets or cross-platform data, which limits the assessment of its generalizability to broader contexts.

-

Twitter data is not a representation of the global population and drawing general conclusions from the data can be misleading.

Future work

The following areas can be explored to further improve the accuracy of sentiment analysis concerning deepfake content.

-

Current approaches predominantly rely on textual data alone ignoring important information presented as emotional cures, audio, and other visuals in deepfakes. Such content can be combined with textual data to improve the detection of nuanced responses.

-

Content on social media is often accompanied by sarcasm, memes, etc. to represent sentiments. Integrating sarcasm detection with context-aware transformers can help improve the detection of deepfakes.

-

Time-series-based sentiment analysis is not investigated well, particularly concerning deepfakes. Modeling user sentiments as the user becomes aware that the content is deepfake is an important area of research.

-

Often, sentiment analysis approaches target English language and cross-lingual models are rather rare. Building cross-lingual approaches is necessary to enable global monitoring of deepfakes.

-

Improving sentiment labeling using transformer-based models and enhancing understandability in deepfake tweets sentiment analysis by incorporating explainable AI techniques is another important research domain

-

Ethics-aware sentiment analysis is very important because analyzing public comments on deepfakes related to sensitive issues like violence, sexual content may lead to unintentional amplification of harm. Human-centered AI with risk-aware interpretations can be adopted.

Conclusion and future work

Deepfake tweets are artificially generated texts intended to spread misinformation and misguide people. The sentiment detection of deepfake tweets is a challenging task. This study aims to address this problem and develop a system that effectively analyzes and detects sentiments related to deepfake tweets. A new large deepfake tweets-related dataset is scrapped using the Python SNScrape library. The TextBlob Python library is used for labeling the dataset into three classes. Machine learning (ML) and deep learning (DL) techniques are trained using BOW, TF-IDF, and word embedding features. Feature extraction is essential for the appropriate training of models and obtaining better results. This study contributes a transfer learning-based technique for feature extraction combining LSTM and DT models. In addition, a hybrid LGR model is proposed using LSTM, GRU, and RNN deep learning models. The results of ML, DL, and the proposed hybrid LGR models using accuracy, precision, recall, and F1 score, along with other metrics like geometric mean, Cohen’s kappa score, ROC AUC score, and Brier score. The results show that the machine learning model works best with the BOW feature, where LR achieves 87% accuracy, but its performance drops with the TF-IDF feature. The performance of LSTM and GRU with word embedding features is better, gaining 94% accuracy. The transfer learning technique performs exceptionally well, and SVM achieves a 96% accuracy score. In contrast, the proposed hybrid LGR model outperforms all previously used techniques by achieving the highest accuracy of 99%. All models are tested and verified using the k-fold cross-validation method. This highlights the primary strength of the study, the significant performance improvements of the proposed model across different experiments. Despite this, the limitation includes the imbalanced dataset, lexicon-based labeling, and the high computational cost of a hybrid model may affect real-time applications. Nonetheless, the findings show that integrating transfer learning with deep sequential models might help construct scalable, effective sentiment analysis systems for countering disinformation in sensitive fields.

Data availability

The data can be requested from the corresponding authors.

References

Weng, Z. & Lin, A. Public opinion manipulation on social media: Social network analysis of twitter bots during the covid-19 pandemic. Int. J. Environ. Res. Public Health 19(24), 16376 (2022).

Masood, M. et al. Deepfakes generation and detection: State-of-the-art, open challenges, countermeasures, and way forward. Appl. Intell. 53(4), 3974–4026 (2023).

Ternovski, J., Kalla, J., & Aronow, P.M. Deepfake warnings for political videos increase disbelief but do not improve discernment: Evidence from two experiments (2021).

Westerlund, M. The emergence of deepfake technology: A review. Technol. Innov. Manag. Rev. 9(11) (2019).

Qian, C. et al. Understanding public opinions on social media for financial sentiment analysis using ai-based techniques. Inf. Process. Manag. 59(6), 103098 (2022).

Fagni, T., Falchi, F., Gambini, M., Martella, A. & Tesconi, M. Tweepfake: About detecting deepfake tweets. PLoS ONE 16(5), 0251415 (2021).

Bhukya, R., & Vodithala, S. Deep learning based sarcasm detection and classification model. J. Intell. Fuzzy Syst. 1–14

Su, Y. & Kabala, Z. J. Public perception of chatgpt and transfer learning for tweets sentiment analysis using wolfram mathematica. Data 8(12), 180 (2023).

Zhao, Z., Liu, W. & Wang, K. Research on sentiment analysis method of opinion mining based on multi-model fusion transfer learning. J. Big Data 10(1), 155 (2023).

Safdar, K., Nisar, S., Iqbal, W., Ahmad, A., & Bangash, Y.A. Demographical based sentiment analysis for detection of hate speech tweets for low resource language. ACM Trans. Asian Low-Resour. Lang. Inf. Process. (2023).

Alslaity, A. & Orji, R. Machine learning techniques for emotion detection and sentiment analysis: current state, challenges, and future directions. Behav. Inf. Technol. 43(1), 139–164 (2024).

Truică, C.-O. & Apostol, E.-S. Misrobærta: transformers versus misinformation. Mathematics 10(4), 569 (2022).

Krishnan, A. Exploring machine learning and transformer-based approaches for deceptive text classification: A comparative analysis. arXiv preprint arXiv:2308.05476 (2023).

Ilyas, H., Javed, A. & Malik, K. M. Avfakenet: A unified end-to-end dense swin transformer deep learning model for audio-visual deepfakes detection. Appl. Soft Comput. 136, 110124 (2023).

Tesfagergish, S.G., Damaševičius, R., & Kapočiūtė-Dzikienė, J. Deep fake recognition in tweets using text augmentation, word embeddings and deep learning. In Computational Science and Its Applications–ICCSA 2021: 21st International Conference, Cagliari, Italy, September 13–16, 2021, Proceedings, Part VI 21, pp. 523–538 (2021). Springer

Rupapara, V. et al. Deepfake tweets classification using stacked bi-lstm and words embedding. PeerJ Comput. Sci. 7, 745 (2021).

Nadikattu, A. K. R. Machine learning vs deep learning models for detecting fake news: A comparative analysis on fake-newsnet dataset. Available at SSRN 4382241 (2023)

Thuseethan, S., Janarthan, S., Rajasegarar, S., Kumari, P., & Yearwood, J. Multimodal deep learning framework for sentiment analysis from text-image web data. In 2020 IEEE/WIC/ACM International Joint Conference on Web Intelligence and Intelligent Agent Technology (WI-IAT), pp. 267–274 (2020). IEEE

Twomey, J. et al. Do deepfake videos undermine our epistemic trust? a thematic analysis of tweets that discuss deepfakes in the russian invasion of ukraine. PLoS ONE 18(10), 0291668 (2023).

Mitrović, S., Andreoletti, D., & Ayoub, O. Chatgpt or human? detect and explain. explaining decisions of machine learning model for detecting short chatgpt-generated text. arXiv preprint arXiv:2301.13852 (2023).

Raza, A., Munir, K. & Almutairi, M. A novel deep learning approach for deepfake image detection. Appl. Sci. 12(19), 9820 (2022).

Alqarni, A. & Rahman, A. Arabic tweets-based sentiment analysis to investigate the impact of covid-19 in ksa: A deep learning approach. Big Data Cogn. Comput. 7(1), 16 (2023).

Najari, S., Salehi, M. & Farahbakhsh, R. Ganbot: A gan-based framework for social bot detection. Soc. Netw. Anal. Min. 12, 1–11 (2022).

Guo, B., Zhang, X., Wang, Z., Jiang, M., Nie, J., Ding, Y., Yue, J., & Wu, Y. How close is chatgpt to human experts? comparison corpus, evaluation, and detection. arXiv preprint arXiv:2301.07597 (2023).

Dukić, D., Keča, D., & Stipić, D. Are you human? detecting bots on twitter using bert. In 2020 IEEE 7th International Conference on Data Science and Advanced Analytics (DSAA), pp. 631–636. IEEE (2020).

Hajli, N., Saeed, U., Tajvidi, M. & Shirazi, F. Social bots and the spread of disinformation in social media: the challenges of artificial intelligence. Br. J. Manag. 33(3), 1238–1253 (2022).

Ainapure, B. S. et al. Sentiment analysis of covid-19 tweets using deep learning and lexicon-based approaches. Sustainability 15(3), 2573 (2023).

Bello, A., Ng, S.-C. & Leung, M.-F. A bert framework to sentiment analysis of tweets. Sensors 23(1), 506 (2023).

Waheed, A., Salam, A., Bangash, J.-I., & Bangash, M. Lexicon and learn-based sentiment analysis for web spam detection. IEEE-SEM (2021).

Mutinda, J., Mwangi, W. & Okeyo, G. Sentiment analysis of text reviews using lexicon-enhanced bert embedding (lebert) model with convolutional neural network. Appl. Sci. 13(3), 1445 (2023).

Adelani, D.I., Mai, H., Fang, F., Nguyen, H.H., Yamagishi, J., & Echizen, I. Generating sentiment-preserving fake online reviews using neural language models and their human-and machine-based detection. In Advanced Information Networking and Applications: Proceedings of the 34th International Conference on Advanced Information Networking and Applications (AINA-2020), pp. 1341–1354 (2020). Springer.

Ul Amin, S., Kim, B., Jung, Y., Seo, S. & Park, S. Video anomaly detection utilizing efficient spatiotemporal feature fusion with 3d convolutions and long short-term memory modules. Adv. Intell. Syst. 6(7), 2300706 (2024).

Ul Amin, S., Kim, Y., Sami, I., Park, S., & Seo, S. An efficient attention-based strategy for anomaly detection in surveillance video. Comput. Syst. Sci. Eng. 46(3) (2023).

Amin, S.U., Ullah, M., Sajjad, M., Cheikh, F.A., Hijji, M., Hijji, A., et al. Eadn: An efficient deep learning model for anomaly detection in videos. Mathematics (2022).

Amin, S. U., Hussain, A., Kim, B. & Seo, S. Deep learning based active learning technique for data annotation and improve the overall performance of classification models. Expert Syst. Appl. 228, 120391 (2023).

Wan, B., Wu, P., Yeo, C. K. & Li, G. Emotion-cognitive reasoning integrated bert for sentiment analysis of online public opinions on emergencies. Inf. Process. Manag. 61(2), 103609 (2024).

Danyal, M. M. et al. Proposing sentiment analysis model based on bert and xlnet for movie reviews. Multimedia Tools Appl. 83(24), 64315–64339 (2024).

Moghaddam, S. H. & Abbaspour, M. Friendship preference: Scalable and robust category of features for social bot detection. IEEE Trans. Depend. Secure Comput. 20(2), 1516–1528 (2022).

Feng, S. et al. Twibot-22: Towards graph-based twitter bot detection. Adv. Neural. Inf. Process. Syst. 35, 35254–35269 (2022).

Mirsky, Y. & Lee, W. The creation and detection of deepfakes: A survey. ACM Comput. Surveys (CSUR) 54(1), 1–41 (2021).

Usmani, S., Kumar, S. & Sadhya, D. Efficient deepfake detection using shallow vision transformer. Multimedia Tools Appl. 83(4), 12339–12362 (2024).

Rahman, M. M., Shiplu, A. I., Watanobe, Y., & Alam, M. A. Roberta-bilstm: A context-aware hybrid model for sentiment analysis. arXiv preprint arXiv:2406.00367 (2024).

Albladi, A., Uddin, M.K., Islam, M., & Seals, C. Twssenti: A novel hybrid framework for topic-wise sentiment analysis on social media using transformer models. arXiv preprint arXiv:2504.09896 (2025).

Omar, H. & Lasrado, L. A. Uncover social media interactions on cryptocurrencies using social set analysis (ssa). Proc. Comput. Sci. 219, 161–169 (2023).

Zhang, M., Li, X., Yue, S. & Yang, L. An empirical study of textrank for keyword extraction. IEEE Access 8, 178849–178858 (2020).

Rachman, F. H., Rintyarna, B. S., et al. Sentiment analysis of madura tourism in new normal era using text blob and knn with hyperparameter tuning. In 2021 International Seminar on Machine Learning, Optimization, and Data Science (ISMODE), pp. 23–27 (2022). IEEE

Aljedaani, W. et al. Sentiment analysis on twitter data integrating textblob and deep learning models: The case of us airline industry. Knowl.-Based Syst. 255, 109780 (2022).

Saad, E. et al. Determining the efficiency of drugs under special conditions from users’ reviews on healthcare web forums. IEEE Access 9, 85721–85737 (2021).

Khalid, M. et al. Gbsvm: sentiment classification from unstructured reviews using ensemble classifier. Appl. Sci. 10(8), 2788 (2020).

Yan, D., Li, K., Gu, S. & Yang, L. Network-based bag-of-words model for text classification. IEEE Access 8, 82641–82652 (2020).

Sjarif, N. et al. Sms spam message detection using term frequency-inverse document frequency and random forest algorithm. Proc. Comput. Sci. 161, 509–515 (2019).

Jing, S. et al. Correlation analysis and text classification of chemical accident cases based on word embedding. Process Saf. Environ. Prot. 158, 698–710 (2022).

Raza, A., Rustam, F., Siddiqui, H.U.R., Diez, I.d.l.T., Garcia-Zapirain, B., Lee, E., & Ashraf, I. Predicting genetic disorder and types of disorder using chain classifier approach. Genes 14(1), 71 (2022).

Monteith, S., Glenn, T., Geddes, J., Whybrow, P. C. & Bauer, M. Commercial use of emotion artificial intelligence (ai): implications for psychiatry. Curr. Psychiatry Rep. 24(3), 203–211 (2022).

Styawati, S., Nurkholis, A., Aldino, A.A., Samsugi, S., Suryati, E., & Cahyono, R.P. Sentiment analysis on online transportation reviews using word2vec text embedding model feature extraction and support vector machine (svm) algorithm. In 2021 International Seminar on Machine Learning, Optimization, and Data Science (ISMODE), pp. 163–167 (2022). IEEE.

Sarailidis, G., Wagener, T. & Pianosi, F. Integrating scientific knowledge into machine learning using interactive decision trees. Comput. Geosci. 170, 105248 (2023).

Putra, S.J., Gunawan, M.N., & Hidayat, A.A. Feature engineering with word2vec on text classification using the k-nearest neighbor algorithm. In 2022 10th International Conference on Cyber and IT Service Management (CITSM), pp. 1–6 (2022). IEEE.

Yuan, M. & Xu, Y. Feature screening strategy for non-convex sparse logistic regression with log sum penalty. Inf. Sci. 624, 732–747 (2023).

Singh, A., Dargar, S.K., Gupta, A., Kumar, A., Srivastava, A.K., Srivastava, M., Kumar Tiwari, P., Ullah, M.A., et al. Evolving long short-term memory network-based text classification. Computat. Intell. Neurosci. 2022 (2022).

Alvi, N., Talukder, K.H., & Uddin, A.H. Sentiment analysis of bangla text using gated recurrent neural network. In International Conference on Innovative Computing and Communications: Proceedings of ICICC 2021, Volume 2, pp. 77–86 (Springer, 2022).

Khan, A., Sohail, A., Zahoora, U. & Qureshi, A. S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 53, 5455–5516 (2020).

Buda, M., Maki, A. & Mazurowski, M. A. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 106, 249–259 (2018).

Zufry, H. & Hariyanto, T. I. Comparative efficacy and safety of radiofrequency ablation and microwave ablation in the treatment of benign thyroid nodules: a systematic review and meta-analysis. Korean J. Radiol. 25(3), 301 (2024).

Rustam, F. et al. A performance comparison of supervised machine learning models for covid-19 tweets sentiment analysis. PLoS ONE 16(2), 0245909 (2021).

Murshed, B., Abawajy, J., Mallappa, S., Saif, M. & Al-Ariki, H. Dea-rnn: A hybrid deep learning approach for cyberbullying detection in twitter social media platform. IEEE Access 10, 25857–25871 (2022).

Sadiq, S., Aljrees, T., & Ullah, S. Deepfake detection on social media: Leveraging deep learning and fasttext embeddings for identifying machine-generated tweets. IEEE Access (2023).

Islam, M. M., & Shuford, J. A survey of ethical considerations in ai: navigating the landscape of bias and fairness. J. Artif. Intell. Gen. Sci. 1(1), 3006–4023 (2024).

Acknowledgements

The authors extend their appreciation to the Research Chair of Online Dialogue and Cultural Communication, King Saud University, Riyadh, Saudi Arabia for funding this research.

Funding

This research is funded by the Research Chair of Online Dialogue and Cultural Communication, King Saud University, Riyadh, Saudi Arabia.

Author information

Authors and Affiliations

Contributions

MK formulated the idea, performed data analysis, and wrote the original draft. MFM also contributed to the idea, curated the data, and helped with drafting. UA worked on data curation, conducted formal analysis, and designed the methodology. MS managed the project, handled the software, and created visualizations. SA secured funding for the research, contributed to visualizations, and conducted the initial investigation. IA supervised the study, validated the findings, and reviewed and edited the manuscript. All authors reviewed and approved the final version of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Khalid, M., Mushtaq, M.F., Akram, U. et al. Sentiment analysis for deepfake X posts using novel transfer learning based word embedding and hybrid LGR approach. Sci Rep 15, 28305 (2025). https://doi.org/10.1038/s41598-025-10661-3

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-10661-3