Abstract

With the rapid growth of multimodal data on the web, cross-modal retrieval has become increasingly important for applications such as multimedia search and content recommendation. It aims to align visual and textual features to retrieve semantically relevant content across modalities. However, this remains a challenging task due to the inherent heterogeneity and semantic gap between image and text representations. This paper proposes mutual contextual relation-guided dynamic graph network that integrates ViT, BERT and GCNN to construct a unified and interpretable multimodal representation space for image-text matching. The ViT and BERT features are structured into a dynamic cross-modal feature graph (DCMFG), where nodes represent image features and text features, and edges are dynamically updated based on mutual contextual relations that is neighboring relations extracted using KNN. The attention-guided mechanism refines graph connections, ensuring adaptive and context-aware alignment between modalities. The mutual contextual relation helps in identifying relevant neighborhood structures among image and text nodes, enabling the graph to capture both local and global associations. Meanwhile, the attention mechanism dynamically weights edges, enhancing the propagation of important cross-modal interactions. This emphasizes meaningful connections or edges among different modality nodes, improving the interpretability by revealing how image regions and text features interact. This approach overcome the limitations of existing models that rely on static feature alignment and insufficient modeling of contextual relationships. Experimental results on benchmark datasets MirFlickr-25K and NUS-WIDE are used to demonstrate significant performance improvements over state-of-the-art methods in precision and recall, validating its effectiveness for cross-modal retrieval.

Similar content being viewed by others

Introduction

With the rapid expansion of the internet, the volume of multimodal data has grown substantially in recent years. This surge has led to the development of various applications, including intelligent search engines and multimedia data management systems, designed to process and analyze vast amounts of multimodal information1,2. Among these advancements, cross-modal retrieval enabling searches across different data types such as images, text, videos, and audio—has emerged as a key area of research and innovation 3,4,5,6. Traditional content-based image retrieval (CBIR) methods establish semantic similarity connections but are restricted to single-modality scenarios. In contrast, cross-modal retrieval involves retrieving semantically related items in one modality (e.g., text) using a query from a different modality (e.g., image). This paper focuses on multi-labeled cross-modal retrieval, which plays a crucial role in various applications such as multimedia retrieval and e-commerce 7,8,9.

Bai et al. 10, used graph neural networks for cross-modal retrieval by directly optimizing the binary codes to minimize the quantization loss and retrieval error. Cross-modal retrieval, the task of aligning and retrieving semantically similar data from different modalities, such as images and text, has garnered increasing attention in the era of multimodal data. Cross-modal attention and generating a shared space mechanism are the common solutions for cross-modal retrieval 11,12,13. These methods transform the low dimensional feature vectors in to high dimensional feature vectors, so that semantically related data points have similar feature code bits. Majority of the research work is going on reducing the sematic gap between diverse modalities with distinct data distributions 14,15. Many cross-modal attention mechanisms have been proposed earlier. Lin et al. 16, proposed a probability-based semantics-preserving hashing (SePH), which generated one single unique hash code, considering the sematic consistency between different modality views. In Ref.17, authors used label consistent matrix factorization hashing (LCMFH). In Ref.18 Modal-Adversarial Hybrid Transfer Network (MHTN) was introduced, and in Ref.19 Semantic correlation maximization was employed for cross-modal retrieval. All these methods extract the features independent of training process, hence these methods may not have the satisfactory performance in many practical applications. In recent times, deep convolution neural networks (DCNNs) are used to extract fine features from data, which significantly improved the retrieval performance capability of the various frameworks 20,21,22,23,24. While these advancements have predominantly focused on single-modality retrieval, modern applications demand systems capable of bridging the semantic gap between different modalities, such as text and images, for effective cross-modal retrieval. In order to address this issue, researchers integrate the text and visual features into a shared embedding space, laid the foundation for cross-modal retrieval systems. Guo et al. 25, proposed Prompts-in-The-Loop (PiTL), a weakly supervised method to pre-train VL-models for cross-modal retrieval tasks. Chen et al. 26, UNITER, a Universal Image-Text Representation, learned through large-scale pre-training over four different image-text datasets for efficient cross-model retrieval. In Ref.27, the researchers proposed a method (BeamCLIP) that can effectively transfer the representations of a large pre-trained multimodal model (CLIP-ViT) into a small target model. Previous cross-modal retrieval methods faced limitations in feature extraction and alignment. CNN-based models lacked global context, while LSTM and Word2Vec struggled with contextual understanding. Traditional fusion techniques, like concatenation and MLPs, failed to model inter-modal relationships effectively. These approaches lacked attention mechanisms, leading to poor retrieval accuracy. While earlier approaches like the Graph Attention Network (GAT) 28 have been employed for feature fusion in cross-modal retrieval, they primarily operate on static and homogeneous graphs. GAT utilizes self-attention to assign weights to neighboring nodes, enabling localized feature aggregation. Jin et al. 29 proposes an end-to-end Graph Attention Network Hashing (GAT-H) framework for cross-modal retrieval. It integrates modality-specific encoders and a graph attention mechanism to learn compact hash codes across image and text modalities. However, such models fall short in capturing evolving cross-modal relationships.

To address the above mentioned problems, inspired by vision language model (VLM)30, we propose a novel approach using GCNN in the attention mechanism for the features extracted using ViT 31 and BERT32 for vision and language respectively to enhance the cross-modal feature alignment. A shared space mechanism is designed with these fine-grained features, to address the lack of fine-grained semantic alignment between local image regions and text tokens. The shared-space model called dynamic Cross-Modal Feature Graph (CMFG), which models mutual contextual relationships by constructing a heterogeneous graph where nodes represent image and text features. Unlike static approaches, our modal dynamically updates cross-modal relationships using K-nearest neighbor (KNN) based neighborhood selection across two modalities during training. This allows the graph structure to adapt based on growing feature representations, enabling context-aware alignment and reducing the semantic gap. This methodology may overcome the limits of conventional fusion methods by allowing the network to reason about the semantic relationships between visual and textual elements explicitly.

The model specific feature extractors used are:

-

ViT: Vision Transformer used for image feature extraction. These transformers are succeeded in capturing the effective feature from images through self-attention mechanism.

-

BERT: Bidirectional Encoder Representations from Transformers is used for text feature extraction. It provides the rich representations of semantic information from textual descriptions.

Joining these feature extraction mechanisms, we purpose a shared embedding space where features from these two different modalities features are mapped into an amalgamated representation.

-

GCNNs with attention mechanisms model the relationships between visual and textual features, emphasizing semantically relevant regions and words.

-

The attention mechanism prioritizes the most important cross-modal interactions, allowing the model to focus on meaningful correspondences while ignoring irrelevant noise.

-

This shared embedding space ensures that the model can effectively bridge the semantic gap between text and images, making it possible to retrieve semantically aligned images based on textual queries with high precision.

Our proposed framework offers the following contributions:

-

Using ViT and BERT for robust and scalable feature extraction, to ensure high-quality visual and textual embeddings.

-

A new mechanism called dynamic Cross-Modal Feature Graph (Dynamic CMFG) is introduced, in which the graph structure is adaptively built during training using K-nearest neighbor (KNN) search over the shared latent feature space. This enables the model to dynamically capture mutual contextual relationships across modalities rather than relying on fixed or manually defined relations.

-

The dynamic CMFG combines attention-based edge weighting that enhances the semantic alignment between image and text features, to allow the context-aware dissemination across two modalities.

In the subsequent sections, related works with detailed literature of ViT and BERT in "Related works", a detailed overview of the proposed framework in "Framework overview" including its architecture, training process, and evaluation methodology. Additionally, comparison of the performance of proposed method against state-of-the-art approaches to demonstrate its effectiveness in cross-modal retrieval tasks in “Experiments” and conclusion of the manuscript in "Conclusion".

Related works

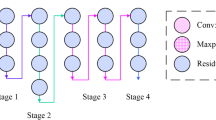

Vision transformer (ViT)

The foremost building blocks of transformer architectures, and more specific recent architecture is ViT introduced by Dosovitskiy et al. 33. Unlike CNN, which depends on convolutional operations to extract local features, ViT operates on sequences of image patches, treating an image as a series of non-overlapping patches and processing them as tokens. At first ViT, divides each image into M-fixed non-overlapping patches, flattens each patch, and project into higher dimensional space using a learnable linear projection. The process involved in ViT is explained clearly with the following equations:

Patch embedding

The given image \(X\epsilon {\mathbb{R}}^{WXHXC}\) (H-height, W-width and C-no.of channels)

No. of patches \(N=\frac{WXH}{{P}^{2}}\) (P-patch size)

\({x}_{i}={\mathbb{R}}^{{P}^{2}.C}\) (\({x}_{i}-\) Vector of Patches)

Project the patches into D-dimensional embedding space using a learnable matrix \(E\epsilon {\mathbb{R}}^{{(P}^{2}.C).D}\)

\({z}_{i}=E.{x}_{i}\) (\({z}_{i}\epsilon {\mathbb{R}}^{D}\) embedding of ith patch vector)

Adding positional embeddings

Positional embeddings are used to maintain the positional relationship among the patches. These embeddings restore the spatial context by encoding the location of each patch within the image.

where, \({Z}_{E}={\mathbb{R}}^{NXD}\) is the input to the transformer.

Multi-headed self attention mechanism(MSA)

This is a core part of the transformer architecture. It enables the model to focus on the most relevant parts of input sequence when making predictions. In ViT, MSA applies a self-attention mechanism to capture the relationships among different regions of an image. For a given image patches \(X\epsilon {\mathbb{R}}^{NXD}\) where, N is the number of patches, D is the embedding dimension, the self-attention mechanism works as follows:

Each token embedding is linearly projected into 3different vectors as Query(Q), Key(K), and Value(V).

where, \({W}_{Q},{W}_{K},{W}_{V}\epsilon {\mathbb{R}}^{DX{d}_{k}}\) are learnable weight matrices, \({d}_{k}\) is the dimension of query/key space.

Attention scores

It is a value between all pairs of tokens are computed as the dot product of the query and key vectors, scaled by \(\sqrt{{d}_{k}}\), to prevent from large values which can lead to gradient uncertainty.

\(A\in {\mathbb{R}}^{NXN}\) is the attention matrix, representing the attention weights between each pair of tokens.

The attention weights are used to compute a weighted sum of value vector, as

where,

-

\({head}_{i}=Self-Attention({Q}_{i},{K}_{i},{V}_{i})\).

-

\({W}_{o}\in {\mathbb{R}}^{(h.{d}_{k})XD}\) is a learnable weight matrix.

BERT

It is a transformer-based model architecture for NLP tasks by extracting bidirectional context 34. For each text input T, the BERT tokenizer converts the text into tokens and applies WordPiece tokenization to further break down into sub-words. Each sub-word is then mapped to its corresponding ID in BERT’s vocabulary to produce raw word features. These tokens are represented as embeddings that combine token embeddings \(\left({E}_{Token}\right)\), segment embeddings \(\left({E}_{Segment}\right)\), and positional embeddings \(\left({E}_{Position}\right)\).

The embedded input is processed through multi transformer encoder layers, here each layer uses multi-head self-attention to compute relationships between tokens. The attention scores are calculated as:

The final output from BERT provides contextualized representations for each token, with the [CLS] token representing the overall input sequence:

These representations are highly effective for tasks like text feature extraction in cross-modal retrieval, where textual embeddings from BERT are aligned with visual embeddings from models like ViT.

Graph analysis

GCNNs are effective to represent the data through graph, it has a wide range of applications 35,36,37. Xi et al. 38, worked on semi supervised model for hyperspectral image classification using cross-scale graph prototyping network. The authors designed a new self-branch attention mechanism to put more focus on critical features produced by multiple branches. Research on GCNNs has fascinated by many researchers due to their ability to effectively model non-Euclidean data structures, such as social networks, molecular graphs, and multimodal relationships, by capturing intricate dependencies and relational patterns among nodes through graph-based learning. Many recent works like, a low back-alignment spatial GCN for image classification 39, spatial–temporal GCN for emotion detection and classification 40, and quantum-based subgraph CNN to catch the global topological structure and local connectivity structure within a graph 41. Saho et al. 44 used canonical correlation analysis (CCA) to design the latent space. CCA pursues linear projections of two modalities, such that the correlation between their projected components is maximized. It learns transformation matrices for each modality to map their features into a common latent space where semantically aligned pairs are close in terms of correlation. But it is limited by its linearity, hence it is unable to capture complex non-linear relation between image and text features. In 45, the authors used VSE++ which tries improve the earlier visual-semantic embedding models by incorporating hard negative mining during training. It uses a triplet ranking loss that enforces matched image-text pairs to be closer in embedding space than mismatched ones. Hard negatives those that are closest to the query but incorrect are prioritized to improve discriminative power. Although VSE++ improves retrieval via hard negative mining, it relies on static embedding spaces and lacks the capacity to model deeper cross-modal contextual interactions dynamically.

Recent advancements have leveraged GCNNs for cross-modal retrieval by modeling interactions between modalities in graph structures. In such frameworks, nodes represent features extracted from images and text, while edges capture semantic relationships. GCNNs propagate information across these nodes, enabling better alignment and representation in a shared embedding space. For example, approaches integrating GCNNs with attention mechanisms improve the ability to capture fine-grained interdependencies, which is critical for aligning textual and visual modalities.

The ability of GCNNs to capture structured relationships between features makes them an ideal choice for tasks requiring fine-grained alignment between text and visual data. By integrating GCNNs into the attention mechanism, our framework leverages their strengths to improve semantic alignment and retrieval performance in cross-modal tasks.

Framework overview

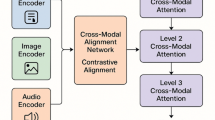

The shared embedded space plays a vital role in cross-model image retrieval by supporting an integrated representation of features from different modalities. In this space, both image and text features are mapped into a common vector space where their semantic relationships can be directly compared. This allows an image to be retrieved using a textual query, image query or both, providing whole interaction through modalities. The overall framework is shown in Fig. 1.

To achieve this, we introduce a new approach for attention mechanism called cross-model feature graph (CMFG) as shown in Fig. 2 in two ways, one is in static CMFG (SCMFG) and dynamic CMFG (DCMFG). The implementation of CMFGs as follows:

Static CMFG

Generally, a graph is represented as a set of nodes and edges, \(G=\{N,E\}\). The edge set E is defined as \(E=\{({n}_{i},{n}_{j})|{n}_{i},{n}_{j}\in N\}\), connected a pair of nodes in the node set N. An adjacency matrix \(A\in \{{\text{0,1}\}}^{NXN}\) can be used to define the connections in the graph, where \(A\left({n}_{i},{n}_{j}\right)=1\) indicates the presence of an edge between the nodes \({n}_{i},{n}_{j}.\)

CMFG: Let \(G=\{I,T,E\}\) is a two-sided graph, where I and T are image and text features as nodes and E is the set of edges that represent relationships between nodes of I and T. The node \(I=\{{i}_{1},{i}_{2},.\dots .{i}_{{K}_{I}}\}\) be the set of \({K}_{I}\) image feature vectors extracted using a Vision Transformer (ViT), where each \({i}_{j}\in {\mathbb{R}}^{d}\) and node \(T=\{{t}_{1},{t}_{2}\dots .{t}_{{K}_{T}}\}\) be the set of \({n}_{T}\) text feature vectors extracted using BERT, where each \({t}_{j}{\in {\mathbb{R}}}^{d}\).

Edge weights E represent the relationship between nodes, \(E=\{{e}_{it}|i,t\in G, i\ne t\}\) where \(i and t\) are the two different nodes, and an edge \({e}_{it}\) is computed using an attention mechanism. The attention score is given by

where, \({Q}_{i}={W}_{Q}{h}_{i}\) is the query vector for node i, \({K}_{t}={W}_{K}{h}_{t}\) is the key vector for node t, \({W}_{Q},{W}_{K} \in {\mathbb{R}}^{dX{d}_{k}}\) are learnable weight matrices, \({h}_{i} and {h}_{t}\) are the feature vectors for nodes i and t, respectively, and \({d}_{k}\) is the dimension of the query and key vectors.

After constructing the CMFG, as shown in Fig. 2, the features of each node are updated by aggregating information from its neighbors. Using Graph Convolutional Networks (GCNNs), the feature update for node i is:

Here, \(\mathcal{M}(i)\) is set of neighbors of node i, \({W}^{k}\) is the learnable weight matrix at layer k, and \(\rho\) is a non-linear activation function and it is ReLU here.

The final stage of representing the image and text features or nodes after K GCNN layers in a common shared embedding space is designed as,

The CMFG approach dynamically propagate information between nodes through weighted edges. By treating similarity scores as edge weights, the CMFG captures nuanced interactions between image and text features, enabling the model to align them effectively in the shared embedding space. The CMFG’s ability to model the cross-modal relationships in a structured and interpretable way establishes it as a powerful tool for efficient and accurate cross-modal retrieval.

Dynamic CMFG

From the above set of equations under the CMFG able to establish relationships between text and image features through a predefined adjacency structure. However, this kind of fixed structures fail to capture evolving semantic relationships during the training process. To overcome this limitation, we introduce a Dynamic Cross-Modal Feature Graph (CMFG), which updates node connections and edge weights by dynamically refining neighboring relations. The dynamic CMFG refers the ability of the graph structure to adaptively capture and update relationships between image and text features during the learning process. The difference between the proposed dynamic CMFG and static models is illustrated in Fig. 3. It depicts six feature nodes from image and text modalities, highlighting their alignment through attention weights in Fig. 3a, and showcasing dynamic connectivity based on KNN in Fig. 3b,c.

Comparison between standard Graph Attention Network (GAT) and the proposed Cross-Modal Feature Graph (CMFG). (a) In GAT, all nodes are of the same modality (image) with fixed local edges. (b,c) In CMFG, image and text nodes are dynamically connected based on semantic similarity using KNN and attention mechanism from iteration to iteration.

In this framework, each node in image modality \(i\) and text node \(t\) does not rely solely on fixed edges but instead its connectivity based on the neighboring context. The neighboring context is defined using KNN (K-Nearest Neighbor) to determine their top \(b\) closest neighbors with in the same modality:

For the databases used, the top b = 15 nearest neighbors were found to be optimal, with the value selected based on empirical observations and the dimensionality of the feature vectors. Using these two equations, the edges will define dynamically to ensure the semantically related nodes with in the same modality influence each other.

Given an initial adjacency matrix \({G}_{s}\), the dynamic CMFG defines the updated connectivity as follows:

where \(N(I,i,b)\) is a neighbor selection function that identifies the top b closest nodes in the same modality for a given node \(i\). Similarly, the text-to-image connectivity is defined as

This confirms that a node from one modality is connected to a node from the other modality only when they share mutual contextual relationships through their nearest neighbors within the same modality. Once the mutual relations are identified, we further refined the graph connections using an attention mechanism, which assigns different refined scores to edges.

For each edge weight between an image node i, and a text node t is dynamically adjusted as follows:

Here, \({Q}_{i}={W}_{q}{h}_{i}\)(Query) and \({K}_{t}={W}_{k}{h}_{t}\)(key) are feature transformations of the image and text nodes. The most essential connections receive higher attention scores according to the softmax mechanism and \({d}_{k}\) is a scaling factor that stabilizes the learning process. The term \(\alpha\) is a momentum term controlling how much past information is retained (similar to Exponential Moving Average).

With this step it ensures that, if two nodes are connected based on mutual contextual relations (Neighboring relations among nodes), the model highlights stronger connections through learning to identify which relations are meaningful.

At last, the dynamically weighted edges influence the message-passing process in the graph, where node embeddings are updated based on their most relevant neighbors. The updated node representation aggregates information from its connected nodes, weighted by the learned attention scores. This approach allows the CMFG to continuously adapt, ensuring that retrieval performance improves as the graph refines its structure throughout training. By integrating mutual contextual relations for dynamic edge formation and attention-based weighting for refined importance scoring, the proposed model effectively captures cross-modal relationships, making the retrieval process more robust and accurate.

The final embedding update is performed using the dynamically adjusted edge weights:

This equation ensures the following issues:

-

Nodes aggregate information from their most relevant neighbors.

-

Edge weights dynamically adjust based on learned importance scores.

-

The graph structure continuously evolves, ensuring more accurate cross-modal retrieval.

Experiments

In this section, the experimental setup, datasets description, evaluation metrics, and implementation details are discussed. Complete results and analyses of the experiments are also explained.

Datasets

Two popular cross-model (image-text) databases, NUS-WIDE 42, MIRFLICKR-25K 43 are often used in this proposed method experiment. NUS-WIDE contains 269,647 images and text description and MIRFlickr-25K contains 25,015 samples of image–text pair. These datasets are widely used to test the cross-modal systems that come with preprocessed image and text data. The images are already appropriately sized, and the associated text tags are cleaned and filtered.

NUS-WIDE: This dataset is designed for cross-modal retrieval and multi-label classification and the data is large-scale web images. The images were taken from Flickr and each image accompanied by textual tags with 81 semantic categories. The images in the database has multi-label annotation for each image, where each image can belong to multiple concepts such as “beach”, “sunset”, “sports” or “animal”. The dataset is widely used for image-to-text and text-to-image retrieval, making it valuable for deep learning, multimodal learning, and semantic understanding. Due to its real-world noisy text annotations, preprocessing is often required for optimal performance.

MIRFLICKR-25K: This dataset is good for evaluating cross-modal retrieval models, offering a real-world and diverse collection of images and text tags. It serves as a strong benchmark for testing multi-modal deep learning architectures in image-text retrieval tasks. It consists of 25,000 images collected from Flickr, each accompanied by metadata, including textual tags and annotations. The dataset contains real-world images sourced from Flickr, covering various categories like landscapes, objects, and people and each image is labeled with user-generated tags from Flickr. These tags describe image content and serve as textual representations for cross-modal learning. Some images have additional manually curated annotations for better semantic understanding. The dataset includes 15,000 training images and 10,000 test images.

The evaluation process is conducted using Google Colab Pro, which provides access to high-performance GPUs. The experiments are run on a Colab Pro instance equipped with an NVIDIA Tesla T4 or A100 GPU, Ubuntu-based environment, Intel Xeon processor, high-speed cloud storage, and 100 GB of RAM.

Performance analysis

A comparative analysis of numerous breakthrough methods was conducted to validate the dominance of Attention guided multimodal graph network (PM) over existing methods. These approaches include CCA 44, VSE++45, PiTL 25, UNITER 26, ALBEF 46 GAT-H29, and SimCLR 27. Out of these, CCA used handcrafted features, VSE++ and PiTL used CNN and RNN models for cross model features extraction, UNITER is a transformer-based model, ALBEF is a fusion based model, and SimCLR is a self-supervised method for cross-model retrieval. Retrieval performance metrics, Cross-model alignment metrics and Graph-based attention performance are 3 fundamental retrieval protocols used to evaluate the performance of the PM. For an image and text query and the respective top 5 retrieved results for static and dynamic CMFG are shown in Fig. 4a,b respectively.

Retrieval performance metrics

The mean average precision (mAP) is a typical performance indicator, which measures retrieval accuracy across queries. For example, for a query set Q, the mAP can be calculated as follows:

where N is the number of samples in the query set Q and P(j) is the average precision, which defined for a query is as follows:

\({P}_{q}\left(k\right)\) measures the precision of the top-k samples. Here, \({\alpha }_{q}(i)\)=1 represents the true neighbor of \(q(i)\), and \({\alpha }_{q}\left(i\right)=0\) represents it is not. The retrieval set consists of K instances. The value of |NPos| indicates the number of true neighbors in the retrieval set.

The experimental results of 2 retrieval tasks, Image \(\to\) Text and Text \(\to\) Image includes retrieving text from images and images from text has been presented in Table 1 for two benchmark datasets. The top-5 and top-10 retrieval results for both image and text queries from the NUS-WIDE and MIRFLICKR-25K datasets are shown in Figs. 5 and 6. The corresponding precision and recall curves for R@5 and R@10 are also clearly illustrated in these figures.

Cumulative matching characteristic (CMC) curve

The CMC curve measures the probability that the correct match appears within the top-K retrieved results. It is commonly used in cross-modal retrieval (e.g., image-to-text) and re-identification tasks as shown in Fig. 7.

For a given q and a set of retrieved outcomes R, the CMC value at rank k is defined as:

where, N is the total number of queries, \(1({rank}_{i}\le k)\) is an indicator function that returns 1 if the correct match is found within the top-k retrieved results, otherwise returns 0.

The future work may extend the proposed model by integrating advanced graph-based methods such as graph clustering 47 or fuzzy-entropy-based community detection 48, which may offer improved neighbor selection and better capture global graph structures compared to traditional KNN approaches.

Conclusion

In this work, a dynamic graph model DCMFG, an adaptive model for cross-modal image-text retrieval is introduced. This model ensures the dynamic edge weight updates in the graph structure based on mutual contextual relations for intra-modal and cross-modal dependencies. The proposed framework leverages ViT and BERT for image and text features and GCNN for structure feature extending. Additionally, an attention-based weighting mechanism refines feature interactions, improving retrieval accuracy. To enhance the robustness of the dynamic CMFG, we integrate neighborhood-based adaptive edge construction, where KNN is used to identify mutual contextual relationships between image and text features. The graph structure gets updated during training, and allowing the model to capture evolving feature dependencies. This leads to a more discriminative and semantically improved shared embedding space, ensuring effective retrieval performance. Experimental results on benchmark datasets showed a significant performance over state-of-the-art methods in terms of precision and recall. In end, the proposed Dynamic CMFG establishes a novel hypothesis for graph-based cross-modal retrieval, offering a powerful and interpretable solution that bridges the semantic gap between vision and language through structured, adaptive learning.

Data availability

This article used publicly available datasets, no private is used. The web links for the datasets used are given below. NUS-WIDE: https://www.kaggle.com/datasets/xinleili/nuswide?resource=download. MIRFLICKR-25K: https://press.liacs.nl/mirflickr/mirdownload.html.

References

Sucharitha, G., Nitin, A. & Sharma, S. C. Medical image retrieval using a novel local relative directional edge pattern and Zernike moments. Multimed. Tools Appl. 82(20), 31737–31757 (2023).

Qian, S., Zhang, T., Xu, C. Multi-modal multi-view topic-opinion mining for social event analysis. In Proceedings of the 24th ACM International Conference on Multimedia (2016).

Peng, Y., Huang, X. & Zhao, Y. An overview of cross-media retrieval: Concepts, methodologies, benchmarks, and challenges. IEEE Trans. Circuits Syst. Video Technol. 28(9), 2372–2385 (2017).

Qian, S., et al. Dual adversarial graph neural networks for multi-label cross-modal retrieval. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 3 (2021).

Zhan, Y.-W. et al. Supervised hierarchical deep hashing for cross-modal retrieval. In Proceedings of the 28th ACM International Conference on Multimedia (2020).

Liao, L., Yang, M. & Zhang, B. Deep supervised dual cycle adversarial network for cross-modal retrieval. IEEE Trans. Circuits Syst. Video Technol. 33(2), 920–934 (2022).

Chen, Z.-M. et al. Disentangling, embedding and ranking label cues for multi-label image recognition. IEEE Trans. Multimed. 23, 1827–1840 (2020).

Qian, S. et al. Integrating multi-label contrastive learning with dual adversarial graph neural networks for cross-modal retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 45(4), 4794–4811 (2022).

Zhu, J. et al. Adaptive multi-label structure preserving network for cross-modal retrieval. Inf. Sci. 682, 121279 (2024).

Bai, C. et al. Graph convolutional network discrete hashing for cross-modal retrieval. IEEE Trans. Neural Netw. Learn. Syst. 35(4), 4756–4767 (2022).

Wang, T., et al. Cross-modal retrieval: a systematic review of methods and future directions. arXiv preprint arXiv:2308.14263 (2023).

Zhou, K., Fadratul, H. H., & Hoon, G. K. The state of the art for cross-modal retrieval: a survey. IEEE Access. (2023).

Zhou, K., Hassan, F. H. & Gan, K. H. Pretrained models for cross-modal retrieval: experiments and improvements. Signal Image Video Process. 18(5), 4915–4923 (2024).

Zhang, J., et al. DSMCA: Deep supervised model with the channel attention module for cross-modal retrieval. In Proceedings of the 2022 5th International Conference on Data Storage and Data Engineering (2022).

Wang, L. et al. Joint feature selection and graph regularization for modality-dependent cross-modal retrieval. J. Visual Commun. Image Represent. 54, 213–222 (2018).

Lin, Z. et al. Cross-view retrieval via probability-based semantics-preserving hashing. IEEE Trans. Cybern. 47(12), 4342–4355 (2016).

Wang, D. et al. Label consistent matrix factorization hashing for large-scale cross-modal similarity search. IEEE Trans. Pattern Anal. Mach. Intell. 41(10), 2466–2479 (2018).

Huang, X., Peng, Y. & Yuan, M. MHTN: Modal-adversarial hybrid transfer network for cross-modal retrieval. IEEE Trans. Cybern. 50(3), 1047–1059 (2018).

Zhang, D., Li, W.-J. Large-scale supervised multimodal hashing with semantic correlation maximization. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 28, No. 1 (2014).

Guan, Z., et al. Cross-modal guided visual representation learning for social image retrieval. IEEE Trans. Pattern Anal. Mach. Intell. (2024).

Zheng, C. et al. Adaptive partial multi-view hashing for efficient social image retrieval. IEEE Trans. Multimed. 23, 4079–4092 (2020).

Zeng, Z., & Wenji, M. A comprehensive empirical study of vision-language pre-trained model for supervised cross-modal retrieval. arXiv preprint arXiv:2201.02772 (2022).

Ji, Z., Kexin, C., & Wang, H. Step-wise hierarchical alignment network for image-text matching. arXiv preprint arXiv:2106.06509 (2021).

Mei, T. et al. Multimedia search reranking: A literature survey. ACM Comput. Surv. (CSUR) 46(3), 1–38 (2014).

Guo, Z., et al. PiTL: Cross-modal retrieval with weakly-supervised vision-language pre-training via prompting. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval (2023).

Chen, Y.-C., et al. Uniter: Universal image-text representation learning. In European Conference on Computer Vision (Springer International Publishing, 2020).

Kim, B. et al. Transferring pre-trained multimodal representations with cross-modal similarity matching. Adv. Neural Inf. Process. Syst. 35, 30826–30839 (2022).

Hao, X., et al. Multi-feature graph attention network for cross-modal video-text retrieval. In Proceedings of the 2021 International Conference on Multimedia Retrieval (2021).

Jin, H. et al. An end-to-end graph attention network hashing for cross-modal retrieval. Adv. Neural Inf. Process. Syst. 37, 2106–2126 (2024).

Zhang, J., et al. Vision-language models for vision tasks: A survey. In IEEE Transactions on Pattern Analysis and Machine Intelligence (2024).

Gkelios, S., Boutalis, Y., & Chatzichristofis, S. A. Investigating the vision transformer model for image retrieval tasks. In 2021 17th International Conference on Distributed Computing in Sensor Systems (DCOSS) (IEEE, 2021).

Zheng, S., & Yang, M. A new method of improving bert for text classification. In Intelligence Science and Big Data Engineering. Big Data and Machine Learning: 9th International Conference, IScIDE 2019, Nanjing, China, October 17–20, 2019, Proceedings, Part II 9 (Springer International Publishing, 2019).

Dosovitskiy, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

Xu, Z., Yu, T., & Li, P. Texture BERT for cross-modal texture image retrieval. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management. (2022).

Elshamli, A., Taylor, G. W. & Areibi, S. Multisource domain adaptation for remote sensing using deep neural networks. IEEE Trans. Geosci. Remote Sens. 58(5), 3328–3340 (2019).

Xie, G.-S., et al. Scale-aware graph neural network for few-shot semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (2021).

Cai, D., & Wai, L. Graph transformer for graph-to-sequence learning. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, No. 05 (2020).

Xi, B. et al. Semisupervised cross-scale graph prototypical network for hyperspectral image classification. IEEE Trans. Neural Netw. Learn. Syst. 34(11), 9337–9351 (2022).

Bai, L. et al. Learning backtrackless aligned-spatial graph convolutional networks for graph classification. IEEE Trans. Pattern Anal. Mach. Intell. 44(2), 783–798 (2020).

Bhattacharya, U., et al. Step: Spatial temporal graph convolutional networks for emotion perception from gaits. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 02 (2020).

Zhang, Z. et al. Quantum-based subgraph convolutional neural networks. Pattern Recogn. 88, 38–49 (2019).

Chua, T.-S., et al. Nus-wide: a real-world web image database from national university of Singapore. In Proceedings of the ACM International Conference on Image and Video Retrieval (2009).

Huiskes, M. J., & Lew, M. S. The mir flickr retrieval evaluation. In Proceedings of the 1st ACM International Conference on Multimedia Information Retrieval (2008).

Shao, J., et al. Towards improving canonical correlation analysis for cross-modal retrieval. In Proceedings of the on Thematic Workshops of ACM Multimedia 2017. (2017).

Faghri, F., et al. Vse++: Improving visual-semantic embeddings with hard negatives. arXiv preprint arXiv:1707.05612 (2017).

Xie, C.-W., et al. Token embeddings alignment for cross-modal retrieval. In Proceedings of the 30th ACM International Conference on Multimedia (2022).

Yang, Y., et al. Integrating fuzzy clustering and graph convolution network to accurately identify clusters from attributed graph. IEEE Trans. Netw. Sci. Eng. (2024).

Yang, Y., et al. Link-based attributed graph clustering via approximate generative Bayesian learning. IEEE Trans. Syst. Man Cybern. Syst. (2025).

Author information

Authors and Affiliations

Contributions

G.S.: Cenceptualization, methodology and investigation, original draft. V.G.: Methodology validation, review and editing. A.S.N.: Software development. B.J.D.K.: Resource and project administration. V.S.: Data visualization. S.P.: Result analysis.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sucharitha, G., Kalyani, B.J.D., Raju, A.S.N. et al. Mutual contextual relation-guided dynamic graph networks for cross-modal image-text retrieval. Sci Rep 15, 34002 (2025). https://doi.org/10.1038/s41598-025-11045-3

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-11045-3