Abstract

This study explores the application of deep learning to fungal disease diagnosis, focusing on an automated detection system for hyphae and spores in clinical samples. A combination of the YOLOX and MobileNet V2 models is used to analyze fungal fluorescence images: the YOLOX model identifies individual fungal spores and hyphae, and the MobileNet V2 model identifies fungal mycelium. The outputs of the two models are then combined to give a positive or negative result for the entire sample image. The proposed dual-model framework is evaluated in terms of the precision, recall, F1-score, and Kappa metrics. For the YOLOX model, the precision is 85% for hyphae and 77% for spores, and for the MobileNet V2 model, the precision is 83%. The recall of the YOLOX model is 90% for hyphae and 85% for spores, and that of the MobileNet V2 model is 100%. Measured against the doctors’ evaluations, the proposed dual-model framework achieves precision, recall, and Kappa values of 92.5%, 99.3%, and 0.857, respectively. This high agreement suggests that the framework can identify fungal hyphae and spores in fluorescence images at a level comparable to that of clinicians. The proposed framework can therefore substantially reduce the time and labor required for fungal diagnosis.

Introduction

Fungi are common in nature, but a weakened immune system increases vulnerability to fungal infections1. Superficial fungal infections can affect the skin, hair, and nails of humans, whereas invasive fungal diseases (IFDs) involve systemic infections impacting various organs2. Over the past 20 years, the incidence and mortality rates of IFDs have consistently increased worldwide3,4. IFDs have an insidious onset and non-specific clinical manifestations, and they are often masked by symptoms of underlying diseases5,6. The most severe problem clinicians currently face is the lack of rapid and efficient diagnostic techniques for IFDs, which hinders timely treatment7.

The IFD diagnostic methods are mainly based on microscopy, culture, and histopathology. However, there are not enough skilled clinicians in the field of fungal microscopy, causing high workloads for clinicians and potential misinterpretation of diagnostic results. This problem is even more pronounced in rural hospitals, where inexperienced technicians might miss infections or produce false positives, which could further affect the selection of subsequent treatment plans.

Over the past decade, deep learning (DL) has demonstrated remarkable progress across multiple disciplines, leading to its widespread application in both research and clinical practice. The medical imaging field has particularly benefited from recent DL advancements, yielding significant improvements in image segmentation, object detection, and classification tasks8,9. Although substantial research has focused on pathological image analysis, fungal image analysis has remained an understudied area despite its clinical relevance. Many researchers, including Zieliński et al.10, have used deep learning in fungal identification, classifying bright-field microscopic images of fungi. This approach minimizes the need for biochemical tests, speeds up identification, and reduces diagnostic costs. Koo et al.11 conducted automated detection of superficial fungal infections from bright-field microscopic images with 40 × and 100 × magnifications using the YOLO v4 object detection model, demonstrating that this model can accurately detect hyphae in microscopic images. Rahman et al.12 presented a pioneering study that employed deep convolutional neural networks (CNNs) for classifying 89 pathogenic fungal genera from bright-field microscopic images. The research team trained and compared the performance of different CNN architectures, enhancing the potential for rapid and precise identification of fungal species. Rappoport et al.13 developed a decision support system for pathologists diagnosing cutaneous fungal infections using PAS and GMS stains, which improved both accuracy and diagnosis speed while reducing the pathologists’ workload. Rawat et al.14,15 introduced an innovative meta-learning-based deep learning architecture named MeFunX, which combined the CNN and XGBoost models. The MeFunX model achieved a 92.49% accuracy in the early detection of fungal infections from microscopic images, outperforming state-of-the-art models, including the VGG16, ResNet, and EfficientNet models. Yilmaz et al.16 demonstrated that the VGG16/InceptionV3 models, with approximately 96% accuracy, could outperform clinicians in automated fungal detection from KOH microscopy, providing faster and more accurate diagnoses.

However, most of the aforementioned studies have focused on non-superficial fungi and used bright-field images rather than fluorescence images for fungal detection. In recent years, a few studies have addressed AI-based identification of superficial fungi from bright-field images, but they have not comprehensively analyzed the integrated recognition of fungal spores, hyphae, and mycelium in superficial fungi, which is crucial for reflecting real-world clinical application scenarios. In addition, these studies have not investigated the consistency of interpretation among clinicians.

This study introduces an advanced deep-learning framework that uses fluorescence fungal images and integrates the YOLOX and MobileNet V2 models to identify fungal spores and hyphae from clinical samples. Based on the results, the proposed dual-model framework has significant application value in clinical practice in terms of improving the accuracy and alleviating clinicians’ workload.

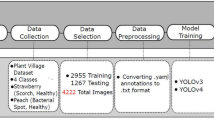

Materials and method

Materials

This study was conducted under the supervision of the Ethics Committee (KY2023-057) of Huashan Hospital, affiliated with Fudan University. The study adhered to the guidelines and principles stated in the Declaration of Helsinki. Written informed consent was obtained from the patients for the examination of their samples and the use of their clinical data. The clinical samples of superficial fungi prepared for fluorescence imaging were mainly collected from February 2023 to February 2024. Personal information was not required in this study. The fluorescence staining solution for fungal samples was provided by Jiangsu Life Time Biological Co., Ltd., and image scanning was performed at 10 × magnification using the intelligent fluorescence microscope from Shanghai TuLi Technology Co., Ltd.

Annotation and dataset

All the images had a resolution of 1,920 × 1,080 pixels and were annotated by two experienced clinicians. The annotation was performed using APTime, an annotation tool developed in-house by SODA Data Technology Co., Ltd. A total of 942 images were labeled for constructing the object detection model, among which 813 images were divided into a training set and a validation set, and 129 images were used to construct an independent test set. For constructing the mycelium detection model, 689 images containing mycelium were collected and cropped into 600 × 600-pixel tiles with a 160-pixel overlap, resulting in a total of 1,351 mycelium-positive patches and 3,072 mycelium-negative patches. These patches were divided into training, validation, and testing sets, as shown in Table 1. Finally, 70 negative samples were collected to evaluate the performance of the proposed framework.
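The tiling described above can be sketched as follows. This is a minimal Python illustration rather than the authors' implementation: the function names `tile_origins` and `tile_boxes` are hypothetical, and the border handling (adding one border-aligned tile where the regular 440-pixel step leaves a strip uncovered) is an assumption, since the text does not state how image edges were treated.

```python
from typing import List, Tuple


def tile_origins(length: int, tile: int = 600, overlap: int = 160) -> List[int]:
    """Start offsets along one axis for overlapping tiles."""
    stride = tile - overlap  # 440-pixel step for 600-pixel tiles with 160-pixel overlap
    if length <= tile:
        return [0]
    origins = list(range(0, length - tile + 1, stride))
    # Assumption: if the regular grid leaves an uncovered strip at the border,
    # add one extra tile aligned with the image edge.
    if origins[-1] + tile < length:
        origins.append(length - tile)
    return origins


def tile_boxes(width: int = 1920, height: int = 1080,
               tile: int = 600, overlap: int = 160) -> List[Tuple[int, int, int, int]]:
    """(left, top, right, bottom) boxes that together cover the whole image."""
    return [(x, y, x + tile, y + tile)
            for y in tile_origins(height, tile, overlap)
            for x in tile_origins(width, tile, overlap)]
```

Under these assumptions, a 1,920 × 1,080 image gives four column offsets (0, 440, 880, 1,320) and three row offsets (0, 440, 480), that is, 12 candidate tiles. Which of these tiles were retained as patches in the dataset is not specified in the text.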

Dual-model framework

The obtained fluorescence fungal images are analyzed by the two deep learning models, and the final result for a whole image is obtained by integrating the output results of the two models. First, the YOLOX model is used to identify scattered hyphae and spores17. The YOLOX-L is selected in this study as an object detection model to classify, locate, and count the spores and hyphae. The CSPNet model is used as a backbone.

For mycelium detection, the MobileNet V2 architecture is used18. The images, with an initial size of 1,920 × 1,080 pixels, are divided into 600 × 600-pixel tiles with a 160-pixel overlap, which are then resized to 512 × 512 pixels for processing by the MobileNet V2 model. To analyze an entire image, a classification result is first obtained for each tile, and the results of all the tiles are then aggregated. If any tile indicates a positive result, the entire image is considered mycelium-positive, as illustrated in Fig. 1.
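The tile-to-image aggregation rule can be expressed in a few lines. The sketch below assumes that the classifier returns one mycelium probability per tile and that a tile counts as positive at a threshold of 0.5; both the function name and the threshold are illustrative assumptions, not values given in the text.

```python
from typing import Iterable


def image_is_mycelium_positive(tile_probabilities: Iterable[float],
                               threshold: float = 0.5) -> bool:
    """Return True if at least one tile is classified as mycelium-positive.

    `threshold` is a hypothetical decision threshold on the per-tile probability.
    """
    return any(p >= threshold for p in tile_probabilities)
```

With this rule a single positive tile is enough to flag the image, so the image-level decision favors sensitivity: one false-positive tile makes the whole image positive, whereas a true mycelium region is missed only if every tile covering it is classified as negative.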

Model training

Image preprocessing included three key steps: (1) normalization through pixel value division by 255; (2) preservation of standard RGB channel ordering; and (3) random brightness adjustments with ± 20% of the original intensity to ensure robustness across varying illumination conditions.
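The three preprocessing steps can be illustrated with a short, dependency-free Python sketch. It is not the authors' code: it assumes that the brightness adjustment is a single multiplicative factor per image drawn uniformly from [0.8, 1.2] and that values are clipped to 1.0 afterwards, and the function name `preprocess` is hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def preprocess(pixels: Sequence[Tuple[int, int, int]],
               rng: random.Random,
               max_delta: float = 0.2) -> List[Tuple[float, ...]]:
    """Normalize 8-bit RGB pixels to [0, 1] and apply random brightness jitter."""
    # Assumption: one brightness factor per image, uniform in [1 - 0.2, 1 + 0.2].
    factor = 1.0 + rng.uniform(-max_delta, max_delta)
    out = []
    for r, g, b in pixels:  # channel order is kept as R, G, B
        # Divide by 255, scale the brightness, and clip to the valid range.
        out.append(tuple(min(1.0, (c / 255.0) * factor) for c in (r, g, b)))
    return out
```

In practice the same operations would be applied to whole image arrays by the training framework; the per-pixel loop here only makes the arithmetic explicit.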

In the YOLOX-L model’s training process, MixUp and Mosaic were used for data augmentation. Data augmentation for MobileNet training was performed by random flipping, rotation, and blurring. In addition, the hue, saturation, and value (brightness) of the input images were randomly altered. During MobileNet training, batch-level balancing was used to ensure that each training batch contained an equal number of positive and negative samples, which mitigated the impact of class imbalance during optimization. To mitigate overfitting in both the YOLOX and MobileNet V2 models, this study applied dropout (rate = 0.5), label smoothing (ε = 0.001), and L2 weight regularization (λ = 0.001). These measures collectively improved the models’ generalization ability on unseen data while maintaining diagnostic accuracy.
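Batch-level balancing can be sketched as follows. The sampling scheme shown here (drawing half of each batch from the positive pool and half from the negative pool, without replacement within a batch) is one plausible reading of the description and is an assumption; the name `balanced_batches` is hypothetical.

```python
import random
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def balanced_batches(positives: Sequence[T], negatives: Sequence[T],
                     batch_size: int, num_batches: int,
                     rng: random.Random) -> Iterator[List[T]]:
    """Yield batches with the same number of positive and negative samples."""
    if batch_size % 2 != 0:
        raise ValueError("batch_size must be even for a 1:1 class ratio")
    half = batch_size // 2
    for _ in range(num_batches):
        # Sample each class separately so the ratio is exact in every batch.
        batch = rng.sample(list(positives), half) + rng.sample(list(negatives), half)
        rng.shuffle(batch)  # avoid a fixed positive-then-negative ordering
        yield batch
```

Because the minority class is drawn as often as the majority class, positive patches are revisited more frequently over the course of training than negative ones, which is the intended effect when the dataset contains more negative than positive patches.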

Evaluation

This study conducted a comprehensive evaluation of the proposed framework, covering four aspects: (1) the YOLOX model’s accuracy in detecting spores and hyphae; (2) the consistency of interpreting spores and hyphae among different clinicians; (3) the MobileNet V2 model’s accuracy in classifying mycelium; (4) the proposed dual-model framework’s accuracy in determining whether a sample image is positive or negative for any fungal form. For aspect (1), the Intersection over Union (IoU) was used to quantify the degree of overlap between the predicted and ground-truth bounding boxes, and detection performance was evaluated using the precision, recall, PR-curve, AP, mAP, and F1-score metrics. The F1-score was also used to evaluate consistency among clinicians (aspect (2)). For aspects (3) and (4), the precision, recall, Kappa, and F1-score were used as quantitative metrics.
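For reference, the IoU and the precision, recall, and F1-score follow their standard definitions, which the sketch below makes explicit. Boxes are assumed to be given as (x1, y1, x2, y2) corner coordinates; this box format and the function names are illustrative assumptions.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1-score from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, two 10 × 10 boxes offset horizontally by 5 pixels share an area of 50 out of a union of 150, giving an IoU of 1/3.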

Results

Evaluation of scattered spore and hypha detection ability of YOLOX model

This evaluation process involved 129 images containing scattered spores or hyphae, which were labeled and double-checked by two doctors to ensure data quality. The specific dataset contained various morphologies of spores and hyphae commonly seen in clinical practice. Among them, the spores included round, oval, and bowling ball-shaped spores in budding, and the hyphae included septate, non-septate hyphae, and branched hyphae, as shown in Fig. 2(a). Different shapes of objects used in the evaluation could all be accurately identified by the trained YOLOX model. The PR-curves and AP performances were separately evaluated for hyphae and spores at different IoU thresholds of 0.1, 0.3, 0.5, and 0.75, as presented in Fig. 2(b).

Fig. 2. (a) Different forms of spores and hyphae; (b) the PR curves of the YOLOX model in identifying spores and hyphae for different IoU values, where the left diagram shows the PR curves for spores, and the right diagram depicts the PR curves for hyphae; in both diagrams, the horizontal coordinate indicates the recall rate, and the vertical coordinate shows the precision rate; (c) precision, recall, and F1-score results of the YOLOX model.

The YOLOX model localizes each hypha with a rectangular bounding box, but a hypha is a curved, linear structure whose shape a box cannot follow closely. Therefore, to reduce the false-negative rate, the model should detect as many suspected hyphal regions as possible. Accordingly, the lowest of the evaluated IoU thresholds was adopted in this study. When the IoU threshold was set to 0.1, the recall of spore and hypha detection was the highest, as displayed in Fig. 2(c); the F1-score and AP values were 0.81 and 0.89 for spores and 0.88 and 0.92 for hyphae, respectively, and the mAP was 0.9. The results indicated that the proposed model could accurately identify different morphologies of hyphae and spores. However, as spores were more susceptible to background noise, the model performed better in hypha detection than in spore detection.
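The effect of the IoU threshold on the counted detections can be illustrated with a simple matching routine. The greedy one-to-one matching below is a common evaluation convention and is assumed here; the text does not describe the exact matching protocol, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(predictions: Sequence[Box], ground_truths: Sequence[Box],
                     iou_threshold: float) -> Tuple[int, int, int]:
    """Greedy one-to-one matching; returns (tp, fp, fn).

    `predictions` are assumed to be sorted by descending confidence.
    """
    unmatched: List[Box] = list(ground_truths)
    tp = 0
    for pred in predictions:
        # Pick the still-unmatched ground-truth box with the largest overlap.
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= iou_threshold:
            unmatched.remove(best)
            tp += 1
    return tp, len(predictions) - tp, len(unmatched)


# A hypha annotated with a 100 x 100 box and predicted with an 80 x 80 box
# inside it overlaps with IoU = 6400 / 10000 = 0.64.
gt = [(0.0, 0.0, 100.0, 100.0)]
pred = [(20.0, 20.0, 100.0, 100.0)]
print(match_detections(pred, gt, 0.1))   # (1, 0, 0): counted as a true positive
print(match_detections(pred, gt, 0.75))  # (0, 1, 1): counted as one FP and one FN
```

The same prediction is therefore scored as a hit at a threshold of 0.1 and as both a false positive and a false negative at 0.75, which is why a stricter threshold lowers the measured precision and recall even when the hypha has in fact been found.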

Evaluation of consistency among clinicians

Different clinicians might come to different conclusions when reading images under a microscope. To assess the consistency among them, three experienced clinicians annotated the same dataset, and their labeled results were compared using the F1-score as an evaluation indicator, as shown in Table 2. The comparison showed that the F1-score agreement between any two of the three clinicians was 80%–90%, and the agreement between the proposed AI model and any one clinician was also 80%–90%. Several factors contributed to the inconsistency between clinicians, as well as between clinicians and the proposed AI model, as illustrated in Fig. 3. First, the bounding boxes drawn by different clinicians varied in size and did not strictly follow the target object’s edges. In addition, where multiple spores or hyphae were intertwined, different clinicians drew different numbers of label boxes and classified them differently. For instance, one clinician might label all the targets as a whole, while others might label each target separately. Further, subjectivity was introduced in images that included targets with very low fluorescence intensity or strong background noise.

Evaluation of fungal mycelium detecting ability of MobileNet V2 model

In a preliminary analysis, this study attempted to use the YOLOX model to detect all fungal forms, but the results indicated that the YOLOX model did not perform well on large targets with complex internal structures, such as fungal mycelium. Therefore, this study introduced the MobileNet V2 model for this task. Because mycelium comprises multiple entangled hyphae, as depicted in Fig. 4(a), it was difficult to identify and count single hyphae. However, a qualitative result of mycelium-negative or mycelium-positive was easier to obtain using a classification model. The precision, recall, and F1-score values of the classification model were 83%, 100%, and 93%, respectively, as presented in Figs. 4(b) and (c). The high recall indicated that the MobileNet V2 model missed almost no mycelium in the test set, at the cost of some false positives.

Evaluation of fungal image positivity detection effect of proposed dual-model framework

For the evaluation of all the fungal forms used in this study, an ensemble workflow integrated the results of the spore and hypha detection model and the fungal mycelium classification model to obtain a final result of fungal negative or positive for the entire image. Fungal negative meant no hyphae or spores were identified. A total of 219 images were used in this evaluation. The precision, recall, F1-score, and Kappa values of the proposed model were 92.5%, 99.3%, 95.7%, and 0.857, respectively, as shown in Figs. 5(a) and (b). The results demonstrated that the proposed fluorescence fungal image analysis framework’s results were highly consistent with the evaluation results of clinicians, having a significant clinical reference value.
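The ensemble decision and the Kappa statistic can be sketched as follows. The decision rule assumes that an image is reported as fungal-positive when the detection model returns at least one spore or hypha or when the classification model flags mycelium, which follows from the definition of a fungal-negative image above; the function names are hypothetical, and the counts in the example are toy values, not data from this study.

```python
def sample_is_fungal_positive(num_detections: int, mycelium_positive: bool) -> bool:
    """Combine the two model outputs into one image-level result (assumed OR rule)."""
    return num_detections > 0 or mycelium_positive


def cohen_kappa(tp: int, fp: int, fn: int, tn: int) -> float:
    """Cohen's kappa for a 2 x 2 agreement table (model vs. clinician)."""
    n = tp + fp + fn + tn
    if n == 0:
        raise ValueError("empty confusion matrix")
    observed = (tp + tn) / n
    # Chance agreement: product of the marginal totals for each class.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    if expected == 1.0:
        return float("nan")  # kappa is undefined when only one class occurs
    return (observed - expected) / (1.0 - expected)


# Toy counts for illustration only: observed agreement 0.85, chance agreement 0.50.
print(round(cohen_kappa(tp=40, fp=10, fn=5, tn=45), 3))  # 0.7
```

Unlike precision and recall, Kappa corrects the raw agreement for the agreement expected by chance, so it is less affected by the ratio of positive to negative images in the evaluation set.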

Discussion

In clinical translational research, immunofluorescence images have been widely applied to pathological tissue analysis to study tumor microenvironments19, owing to their ability to detect multiple biomarkers simultaneously on a single tissue section. This has led to the development of numerous AI-based fluorescence image analysis methods20. Fluorescence imaging is also increasingly used in the clinical diagnosis of fungal infections because of its sensitivity and specificity. Notably, fluorescence images obtained with a dye that specifically stains a component of the fungal cell wall distinguish fungi from the background better than bright-field images, allowing clearer observation of fungal morphology and improving a doctor’s efficiency. In this study, two deep learning models, YOLOX and MobileNet V2, were combined to analyze fungal fluorescence images. In model performance validation, both the individual models and the ensemble demonstrated high efficacy in identifying fungal spores, hyphae, and mycelium, achieving results comparable to those of clinicians. To the best of our knowledge, this is the first study in which fungal mycelium is detected by a dedicated model, separately from spores and single hyphae. Fungal mycelium is a common fungal form in clinical samples, and its accurate detection is important for clinical diagnosis. With the widespread use of digital scanners in hospitals, a fully automated analysis workflow powered by deep learning would be a promising approach to alleviating the burden on hospitals.

Previous studies have primarily focused on non-superficial fungi, including non-cutaneous fungal species, which exhibit significant morphological differences compared to superficial fungi. Consequently, the AI algorithms required for their detection might also differ15,16. In addition, in certain clinical fungal diagnostic scenarios, the challenge lies in fungal classification, a task addressed through image classification algorithms12. Although some studies have conducted the AI-based identification of superficial fungi from bright-field images in recent years, they have not comprehensively analyzed the integrated recognition of fungal spores, hyphae, and mycelium in superficial fungi, which is crucial to reflect real-world clinical application scenarios. In contrast, this study employs a combination of different AI algorithms to identify diverse morphological variations of superficial fungi, aiming to determine the presence of fungal infection in clinical samples.

Although this study has achieved promising results, it has some limitations. First, the annotation process of fungus images is time-consuming and complex. Second, the generalization ability of the proposed hybrid model is limited by the training data, and fungus species are diverse and are known for their seasonal and regional distribution characteristics. Therefore, future research could explore the following aspects. First, the proposed model could be further optimized to improve its classification ability for small targets and complex morphology. For instance, different AI-based models, such as the YOLOv5, Faster R-CNN, and EfficientNet models, could be employed to improve detection performance. Second, the training dataset could be expanded and diversified to enhance the proposed model’s generalization ability. Third, more advanced augmentation methods, such as domain-specific augmentations (e.g., synthetic lesion generation via StyleGAN for medical images), could be used to improve detection performance to address the problems of class imbalances and rare cases.

In summary, the combination of the YOLOX and the MobileNet V2 models provides a more comprehensive and effective method for analyzing fluorescent fungal images. Future work could further explore the optimization and fusion of the two algorithms to expand the recognition range of fungal species, aiming to further improve the accuracy of these models. At the same time, the proposed AI-driven framework could be combined with fluorescence scanning technology to provide a comprehensive clinical solution for automated skin fungal identification to support diagnostic decision-making.

Data availability

The datasets generated and/or analyzed in this study are available from the corresponding authors upon reasonable request and with the necessary approval from the Ethics Committee.

References

Tao, C. et al. Advances in diagnostic techniques for clinical pathogenic fungi. Int. J. Lab. Med. 42, 2146–2150 (2021).

Yuan, F. et al. Analysis of superficial mycoses and their causative fungal species. J. Qingdao Uni. (Med. Sci.) 56, 477–480 (2020).

Denning, D. W., Kibbler, C. C. & Barnes, R. A. British society for medical mycology proposed standards of care for patients with invasive fungal infections. Lancet Infect. Dis. https://doi.org/10.1016/s1473-3099(03)00580-2 (2003).

Antinori, S. et al. Trends in the postmortem diagnosis of opportunistic invasive fungal infections in patients with AIDS. Am. J. Clin. Pathol. 132, 221–227 (2009).

Baddley, J. W., Stroud, T. P., Salzman, D. & Pappas, P. G. Invasive mold infections in allogeneic bone marrow transplant recipients. Clin. Infect. Dis. https://doi.org/10.1086/319985 (2001).

Bartlett, J. A. Nosocomial bloodstream infections in US hospitals: Analysis of 24,179 cases from a prospective nationwide surveillance study. Infect. Dis. Clin. Pract. 12(6), 376 (2004).

Morrell, M., Fraser, V. J. & Kollef, M. H. Delaying the empiric treatment of candida bloodstream infection until positive blood culture results are obtained: A potential risk factor for hospital mortality. Antimicrob. Agents Chemother. 49, 3640–3645. https://doi.org/10.1128/aac.49.9.3640-3645.2005 (2005).

Dang, C. et al. Deep learning-powered whole slide image analysis in cancer pathology. Lab Invest. 105(7), 104186. https://doi.org/10.1016/j.labinv.2025.104186 (2025) (Epub ahead of print. PMID: 40306572).

Albuquerque, C., Henriques, R. & Castelli, M. Deep learning-based object detection algorithms in medical imaging: Systematic review. Heliyon. 11(1), e41137. https://doi.org/10.1016/j.heliyon.2024.e41137 (2024) (PMID: 39758372; PMCID: PMC11699422).

Zieliński, B., Sroka-Oleksiak, A., Rymarczyk, D., Piekarczyk, A. & Brzychczy-Włoch, M. Deep learning approach to describe and classify fungi microscopic images. Medical Imaging with Deep Learning (2020).

Koo, T., Kim, M. H. & Jue, M.-S. Automated detection of superficial fungal infections from microscopic images through a regional convolutional neural network. PLoS ONE https://doi.org/10.1371/journal.pone.0256290 (2021).

Rahman, M. A. et al. Classification of fungal genera from microscopic images using artificial intelligence. J. Pathol. Inf. https://doi.org/10.1016/j.jpi.2023.100314 (2023).

Rappoport, N. et al. A decision support system for the detection of cutaneous fungal infections using artificial intelligence. Pathol. – Res. & Pract. 162, 155480 (2024).

Rawat, S., Bisht, B., Bisht, V., Rawat, N. & Rawat, A. MeFunX: A novel meta-learning-based deep learning architecture to detect fungal infection directly from microscopic images. https://doi.org/10.1016/j.fraope.2023.100069.

Pineda Sopo, C. J., Hajati, F. & Gheisari, S. DeFungi: Direct mycological examination of microscopic fungi images. https://doi.org/10.48550/arXiv.2109.07322

Yilmaz, A., Goktay, F., Varol, R., Gencoglan, G. & Uvet, H. Deep convolutional neural networks for onychomycosis detection. http://arxiv.org/abs/2106.16139 (2021).

Ge, Z., Liu, S., Wang, F., Li, Z. & Sun, J. YOLOX: Exceeding YOLO Series in 2021. (2021).

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. https://doi.org/10.1109/cvpr.2018.00474 (2018)

de Souza, N., Zhao, S. & Bodenmiller, B. Multiplex protein imaging in tumour biology. Nat. Rev. Cancer. 24(3), 171–191. https://doi.org/10.1038/s41568-023-00657-4 (2024) (Epub 2024 Feb 5 PMID: 38316945).

Stringer, C. & Pachitariu, M. Cellpose3: One-click image restoration for improved cellular segmentation. Nat. Methods. 22(3), 592–599 (2025).

Acknowledgements

We appreciate the support and participation of the physicians and patients in this study.

Funding

This work was supported by the Science and Technology Commission of Shanghai Municipality (22Y11905600).

Author information

Contributions

Conception and design: ZM. Experiment methodology and validation: CPL and CST. Data collection and annotation: RRS, ZM, and TWY. Data curation, writing original draft: RRS, TWY, XXY, and ZDD. Review, and/or revision of the manuscript: XXY, ZDD, CPL, and ZM. Final approval of manuscript: all authors.

Ethics declarations

Competing interests

Wenyu Tan, Peilin Chen, and Shiting Chen are employees of SODA Data Technology, Inc. Xiaoya Xu and Dadong Zhang are employees of 3D Medicines Inc. The remaining authors declare no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ren, R., Tan, W., Chen, S. et al. Deep learning application to hyphae and spores identification in fungal fluorescence images. Sci Rep 15, 27222 (2025). https://doi.org/10.1038/s41598-025-11228-y
