Abstract

Timely detection and regular maintenance of road cracks are critical for road and traffic safety. However, existing detection methods face challenges such as varying target scales, large model parameters, and poor adaptability to complex backgrounds. To address these issues, this study proposes an enhanced GSB-YOLO model. Inspired by the concepts of linear transformation and long-range attention mechanisms, a lightweight network structure was designed to reduce model parameters in the backbone network, thereby improving detection efficiency. Additionally, a novel SMC2f module was introduced in the neck structure, which calculates the “energy” of each neuron in the feature map, evaluates its contribution to the detection task, and dynamically assigns weighted coefficients. This method enhances the model’s detection robustness in complex backgrounds and effectively addresses the issue of insufficient emphasis on positive samples. Furthermore, through the optimization of the Path Aggregation Network (PAN) and the Bidirectional Feature Pyramid Network (BiFPN), efficient multi-scale feature fusion is achieved, further strengthening the model’s capacity to represent crack features at various scales. Experimental results indicate that the proposed GSB-YOLO model improves the mean average precision (mAP) in road crack detection tasks by 3.2%, demonstrating its significant application value in road crack detection and traffic safety assurance.

Similar content being viewed by others

Introduction

Timely detection of road cracks is essential for ensuring road safety and minimizing economic losses1. Cracks, a common pavement defect, pose a significant threat to highway safety2. Statistics show that China’s total highway mileage has reached 6.28 million kilometers, ranking first in the world3. In recent years, China has made significant progress in highway construction, with the total length of national highways expected to reach 5.8 million kilometers by 20304. The rapid development of highway infrastructure has led to an increase in road cracks and maintenance costs. According to reports, the Chinese transportation sector invested 1.29 trillion RMB in road maintenance in 2022 alone5. Additionally, the frequent passage of large vehicles and the effects of freeze-thaw cycles further exacerbate pavement cracks, leading to structural damage and impacting road safety6. Therefore, there is an urgent need for a timely and efficient method to detect road cracks to ensure smooth traffic flow and safe driving conditions7.

Early road crack detection methods primarily relied on traditional manual inspection and machine learning techniques. While manual inspection8 is intuitive and easy to operate, it is time-consuming, labor-intensive, and highly subjective, with accuracy largely dependent on the inspector’s expertise9. To reduce the workload and improve detection accuracy, machine learning methods for automated crack detection have been widely adopted in the field of traffic safety10.

Early machine learning methods mainly relied on manually extracted features to detect and locate cracks in images. Kaseko and Ritchie11 used threshold-based methods to identify crack areas, while Zhou et al.12 and Jiang et al.13 applied wavelet transformation for crack detection. Shi et al.14 and Yu et al.15 introduced random structured forests to enhance detection performance, and Alam et al.16 used a K-means-like approach for crack detection through classification at three different wavelengths. Although these early machine learning methods could detect road cracks, they had low efficiency and limited visual intuitiveness, increasing detection and maintenance costs and failing to meet current demands.

Recently, deep learning17 has gained widespread application in computer vision due to its strong representational capacity. Deep learning models, trained end-to-end, provide greater robustness and more accurate crack detection. These methods are mainly divided into two-stage18 and one-stage19 approaches. Two-stage methods use candidate region proposals to detect targets, leveraging the strengths of various model types. Li et al.20 and Zhou et al.21 proposed crack detection methods combining drones and the Faster R-CNN model22. Feng et al.23introduced a fully automated road crack detection method based on the Mask R-CNN model, improving detection accuracy by optimizing the feature pyramid network structure. While two-stage models achieve real-time crack detection to some extent, they require extensive region-based computations, making them less suitable for real-time application. In contrast, one-stage models eliminate the need for candidate region generation24allowing them to predict target classes and bounding boxes directly from the original image. This simplification of design and training is better suited for road crack detection25.

One-stage models26 have gained wide application in road crack detection due to their high detection speed and ease of deployment27. Deng et al.28 used the YOLOv2 model for automatic detection of concrete cracks, though the model structure was large. Liu et al.29 proposed an innovative YOLOv3 model incorporating a ResNet50vd deformable convolution (DCN) backbone and Bayesian hyperparameter optimization (HPO), reducing loss during training, but did not account for complex background conditions. Yu et al.30 proposed the YOLOv4-FPM model, which used focal loss to optimize the loss function, improving detection accuracy while addressing challenges posed by complex backgrounds, though detection speed still required improvement. Hu et al.31developed a new crack detection method using YOLOv5 and in-vehicle images, leveraging attention mechanisms and abundant gradient information to address issues with small crack blurriness and incomplete feature extraction, but the model’s lightweighting was not considered. Ye et al.32 presented an improved YOLOv7 model with three custom modules to address feature loss, small bounding boxes, and gradient issues, thereby increasing accuracy, though it did not account for varying crack sizes. Dang and Wang33 proposed a road crack detection method based on YOLOv8 combined with drones using the DyHead (Dynamic Head) strategy and integrating three types of attention mechanisms to effectively detect cracks of varying sizes, though this approach increased computational load.

To address these challenges, this study proposes the GSB-YOLO model, which incorporates several innovative improvements to enhance detection accuracy, reduce model complexity, and improve robustness. The key contributions of the proposed model are as follows:

-

1)

First, a lightweight feature extraction module is introduced to generate redundant “Ghost” features through simple linear operations. This approach significantly reduces the model’s scale and parameter count while retaining critical features generated by the primary convolution. By minimizing the number of feature maps under hardware constraints, the module improves computational efficiency without compromising detection performance.

-

2)

Second, a novel SMC2f module is integrated into the neck structure. This module evaluates the contribution of each neuron in the feature map by calculating its “energy” and dynamically assigns weighting coefficients based on this evaluation. This mechanism enhances the model’s robustness in complex backgrounds and effectively addresses the issue of inadequate representation of positive samples, thereby improving detection accuracy.

-

3)

Finally, the path aggregation network (PAN) is optimized to facilitate efficient multi-scale feature fusion through cross-scale connections. Additionally, weighted feature fusion is employed to further enhance feature representation. These optimizations enable the model to effectively detect various types of road cracks, ensuring improved performance in diverse detection scenarios.

Methods

Structure of YOLOv8n

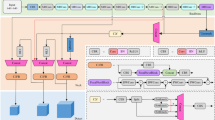

YOLOv8n, a state-of-the-art model in the YOLO series34, is widely recognized for its versatility in tasks such as target detection, instance segmentation, and image classification. The architecture of YOLOv8n is divided into four core components: Input, Backbone, Neck, and Detection Head, as illustrated in Fig. 1.

Input

The Input module employs the Mosaic data augmentation technique to enhance the robustness of the training dataset. By adaptively scaling and combining multiple images into a single training sample, this technique improves the model’s generalization ability while reducing computational complexity and boosting detection accuracy.

Backbone

This section performs image feature extraction using modules like CBS, C2f, and SPPF. CBS handles convolution with Conv, BN, and SiLU functions. YOLOv8 introduces the C2f module, replacing YOLOv5’s C3 to enhance gradient flow capture. SPPF rapidly pools spatial pyramid features to integrate data across varying scales.

Neck

This layer uses the FPN-PAN structure for feature fusion, combining high and low-level details. FPN uses a top-down method for up-sampling, while PAN connects features laterally to enrich semantic content.

Head

Anchored in an anchor-free matching mechanism, this component speeds up processing by directly regressing target centroids and dimensions at various scales and utilizes multi-scale feature maps for precise target classification and localization.

Enhancements in YOLOv8n model

Lightweighting mechanism

YOLOv8 uses traditional convolutional modules and C2f modules for high-quality feature extraction and down sampling. The main function of C2f modules is to facilitate feature fusion and cross-layer information transfer. However, traditional convolutional operations require many computations, especially when processing high-resolution images, which can slow down the inference speed and affect the real-time detection capability.

To address this challenge, this paper presents an improved GV2C2f module. The core concept of this module is to reduce redundant convolutional computation through a low-cost feature generation mechanism while enhancing feature transfer through efficient cross-stage information fusion. This design reduces computational complexity and model parameters while improving the diversity and effectiveness of feature characterization.

The GV2C2f module combines the Decoupled Fully Connected (DFC)35 attention mechanism with a linear ghost module designed to balance performance and lightweight architecture. The DFC attention mechanism uses a dynamic filter capsule to weigh the features, emphasizing important features while suppressing irrelevant information. This mechanism dynamically adjusts the model’s focus on different features to improve overall accuracy.

From a lightweight design perspective, the DFC attention mechanism decomposes the attention map into two fully connected layers that collect features along the horizontal and vertical directions, respectively. By decoupling the horizontal and vertical transformations, the computational complexity of the attention module is reduced to O(H2W + HW2), where W represents the width and H represents the height. This greatly improves the computational efficiency of the model. The specific implementation is shown in the following equation:

Where \(\:{F}^{H}\) and \(\:{F}^{W}\:\)represent weights, and the input features correspond to the original Z.

The GV2C2f module primarily configures structures with strides of 1 and 2. As shown in Fig. 2, the stride-1 configuration adopts an inverted bottleneck structure based on the GhostC2f, where the DFC attention mechanism operates in parallel with the first Ghost module. Subsequently, as illustrated in Fig. 3, these components are multiplied in an element-wise manner to enhance and expand the features. The enhanced features are then passed to the second Ghost module to generate output features. This process maximizes the capture of long-range dependencies between pixels in various spatial locations, thereby improving the module’s performance.

In the stride-2 configuration, depth wise separable convolution is introduced. After the parallel Ghost and DFC attention modules, the features are down sampled immediately to reduce the spatial size of the feature map. This design minimizes computational load and memory usage while expanding the receptive field, facilitating the capture of broader contextual information and reducing gradient loss. The “Ghost” module then restores the feature dimensions to ensure consistency with the input.

SMC2f structure

In road crack detection tasks, the complexity of background environments significantly increases detection difficulty. Attention modules help address this by focusing on key areas through the assignment of different weights to feature maps. Traditional attention mechanisms, such as CBAM36, typically generate 1-D or 2-D weights along either the channel or spatial dimensions. However, they treat each neuron uniformly, limiting the ability to capture discriminative features. The C2f module in the Neck layer reduces computational complexity by bypassing convolution operations for selected features while maintaining feature representation. Despite this, C2f has difficulty effectively selecting key features, making it challenging to differentiate objects from the background in complex environments, which ultimately impacts detection accuracy.

To address these limitations, this paper proposes a new SMC2f module, which is designed to suppress noisy background interference by calculating the energy of each neuron in the feature map. The core concept of the SMC2f module is to enable the adaptive adjustment of local features through an adaptive attention mechanism. This approach allows the model to focus on critical features within the target region while suppressing background interference, all while maintaining the feature fusion capabilities and computational efficiency of the original C2f module.

As shown in Fig. 4, this design operates as an integrated weight attention mechanism, generating three-dimensional attention values for feature maps without requiring additional parameters. In visual neuroscience, neurons with the highest information content often exhibit distinctive firing patterns compared to neighboring neurons, while active neurons frequently inhibit the function of adjacent neurons.

Drawing inspiration from this, the SMC2f module incorporates an energy function to evaluate the linear separability of neurons, aiding in the identification of key neurons.

This energy function is defined as follows:

Where \(\:t\) represents the target neuron, and \(\:{x}_{i}\) represents the other neurons in the same input feature channel \(\:\varvec{}\varvec{x}\in\:{\varvec{R}}^{HW\times\:C}\varvec{}\varvec{}\). Here, \(\:i\) indexes the spatial dimension, and \(\:M\) denotes the overall neuron count. \(\:{w}_{t}\) and \(\:{b}_{t}\) correspond to the transformation weights and biases, respectively. By calculating the exact solutions for the parameters \(\:{w}_{t}\) and \(\:{b}_{t}\), and incorporating them into Eq. (4), the minimum energy is derived as follows:

The expression in Eq. (5) suggests that a lower energy value reflects stronger linear distinction between neuron t and the surrounding neurons, indicating greater significance. Building on this attention mechanism, the SMC2f module can be defined as follows:

Where \(\:E\) represents the categorization function for every energy function \(\:e\in\:t\:\)across both the channel and spatial axes, with the sigmoid function limiting higher E values, ensuring that they do not undermine the comparative significance of each neuron. The integration of the SMC2f module into the Neck portion of the YOLOv8 model significantly enhances the model’s target detection capabilities without adding extra computational parameters.

Bidirectional Featur(1.6)e pyramid network

In the field of road crack detection, effectively extracting features across different scales is crucial, particularly in the presence of noise introduced by uneven lighting, surface textures, and sensor limitations. Such noise can obscure fine-grained crack features and reduce the reliability of feature representations, making robust multi-scale feature extraction essential for ensuring accurate and stable detection performance in real-world environments. The YOLOv8 model addresses scale discrepancies using the PAN-FPN structure, which enhances the integration of features from both high- and low-resolution layers. This structure is designed to preserve intricate details and increase the expressiveness of features, improving its effectiveness in detecting privacy-sensitive information. However, while PAN-FPN improves overall information fusion, it struggles to capture the finer details necessary for accurate road crack detection.

To overcome this limitation, this study introduces the Bidirectional Feature Pyramid Network (BiFPN)37, an advanced bidirectional feature fusion method. BiFPN effectively merges top-down and bottom-up feature flows, incorporating weighted contextual information. This approach not only ensures comprehensive detail capture and fusion but also enhances the semantic richness of the features.

The BiFPN approach improves the system’s ability to detect subtle variations in data, thereby enhancing the semantic interpretation of features. Additionally, it optimizes the contribution of each feature through an intelligent weighting mechanism, boosting overall model performance without significantly increasing the number of parameters. This strategic optimization enables a more robust and efficient detection of road crack. Figures 5 and 6 illustrate these enhancements and their impact.

BiFPN improves upon the original PANet by refining its structure to enhance feature extraction. It increases input nodes and adds additional connections when input and output nodes align at the same level within the network. This architecture uses adaptive weighting to learn the importance of each input feature, significantly boosting detection accuracy. BiFPN introduces bidirectional pathways—top-down and bottom-up—across the feature network layers, enabling efficient cross-scale feature fusion through an iterative process.

This approach addresses the challenge of resolution discrepancies, often overlooked in conventional fusion methods, by dynamically assigning learnable weights to each feature. These weights adjust based on each feature’s resolution and relevance, optimizing the fusion process. The weighting mechanism used in BiFPN is described in Eq. (6), which mathematically illustrates how weights are calculated and applied to enhance feature integration and overall network performance.

where \(\:O\:\)represents the output features, \({w_i}\) denotes node weights, and\({I_i}\) signifies input features. The learning rate \(\upvarepsilon =0.0001\) is set to ensure the stability of the values.

Experimental procedures

Dataset related settings

To better detect road cracks, our team created a dataset of 3000 images that were carefully collected using the Baidu map service and sophisticated web crawlers designed to scan and retrieve relevant data. In addition, we also further enriched the dataset with images taken from actual sites by drones. We utilized Labeling, a powerful annotation software, to meticulously classify and label the four different target categories in these images. Each annotation was carefully saved in txt file format to ensure consistency and ease of use, as shown in Fig. 7 of our documentation.

To rigorously validate our model, we strategically divide the dataset into three subsets according to the ratio of 8:1:1 training set, validation set, and testing set. This division ensures a comprehensive training process while providing sufficient data for validation and unbiased testing. This details the specific format of the training set labels, which includes basic information such as label categories and bounding box dimensions. These dimensions are precisely defined by the centroid coordinates, width and height, ensuring accurate positioning and size estimation of the target object in the image. This structured approach to dataset preparation and labeling is essential for effective training and subsequent performance evaluation of our model focusing on road cracks.

Evaluation settings and metrics

The experimental setup involved a high-performance NVIDIA GeForce RTX 4090 graphics card with 24 GB of VRAM, operating on a Linux system. The computational environment was powered by PyTorch version 1.7.0 and Python 3.8. Crucial configuration parameters for the experiments included setting the learning rate at 0.01, running a total of 300 training cycles, and implementing a momentum of 0.937. Additionally, the weight decay was set at 0.0005, and the experiments were conducted using a batch size of 32.

The effectiveness of the model in detecting privacy-related information was evaluated through several statistical metrics: precision, recall, mean average precision (mAP), and the F1 score. These metrics are essential for understanding both the accuracy and the reliability of the model in practical scenarios. As defined in Eq. (7) to (10):

Precision is defined as the proportion of correct positive predictions among all positive predictions made, serving as an indicator of the quality of positive identifications. Recall measures the percentage of actual positives that were correctly identified, reflecting the model’s ability to capture relevant data. The mAP metric provides an overall measure of the model’s precision across various levels of recall, offering insights into its performance under different conditions. Lastly, the F1 score, which is the harmonic mean of precision and recall, offers a single metric that balances both the precision and recall, providing a comprehensive view of the model’s overall effectiveness.

Furthermore, this study evaluates the model’s complexity by measuring 377 its parameter count, including both weights and biases. It also assesses the computational 378 load using FLOPs (Floating Point Operations per Second)38. These metrics help gauge the model’s efficiency during training and inference in deep learning tasks. The FLOPs count 380 greatly affects the processing speed in both training and inference stages of deep learning 381 models. A higher number of FLOPs suggests greater computational demands and more 382 stringent hardware requirements.

Pre- and Post-Training evaluations

We conducted comparative experiments, as shown in Table 1, to demonstrate the superior road crack detection capabilities of the GSB-YOLO model over the YOLOv8n sub-model.

Table 1 highlights the significant improvements achieved by the GSB-YOLO model compared to the YOLOv8n model, with increases in precision, recall, F1, and mAP by 2.4%, 4.4%, 3.5%, and 3.2%, respectively. These enhancements are attributed to three key innovations in GSB-YOLO architecture. First, the SMC2f module optimizes information extraction and weighting, enabling the model to more effectively focus on critical features, thereby enhancing its ability to distinguish road crack regions. Second, the BiFPN module introduces efficient multi-scale feature integration, combining information from various scales, ensuring the model maintains accuracy when detecting targets of different sizes. Lastly, the reduction in parameter count is primarily due to the integration of the GV2C2f module, which generates “Ghost features” to reduce redundant calculations in convolution operations. Compared to the YOLOv8n sub-model, these innovations significantly enhance the overall performance of the GSB-YOLO model, ensuring exceptional accuracy and robustness in road crack detection.

Furthermore, Figs. 8 and 9 demonstrate GSB-YOLO’s superior performance across different precision and recall settings, as evidenced by a larger area under the curve (AUC), solidifying its advantage over the YOLOv8n model in detection tasks.

Investigative ablation study

To visualize the impact of each module, we conducted ablation experiments, as shown in Table 2. These experiments systematically removed each component of the GSB-YOLO model to evaluate its individual contribution to overall performance. The findings highlight the significance of each module in enhancing detection accuracy and efficiency. Method (1): YOLOv8n + GV2C2f (lightweight backbone structure only). Method (2): YOLOv8n + GV2C2f + SMC2f (backbone + attention-enhanced neck structure). Method (3): Full GSB-YOLO model (Method 2 + BiFPN).

As shown in Table 2, compared to the baseline model, Method (1) reduces computational cost by 14.8%. This improvement is primarily attributed to the newly designed GV2C2f structure, which generates redundant Ghost features through linear transformation. While this optimization of the backbone network significantly reduces model size, it also results in a slight decrease in detection accuracy.

Moreover, as shown in Fig. 10, the addition of the SMC2f module further enhances the model’s detection capabilities, increasing the mAP by an additional 1.3%. The SMC2f module, integrated into the Neck layer of the architecture, plays a critical role in assessing the relevance of each neuron in the feature map. It calculates the “energy” or significance of each neuron to determine its contribution to the overall detection task. Based on this assessment, weighted coefficients are assigned, allowing the model to focus on the most influential neurons. This targeted approach optimizes extraction and processing, thereby improving the accuracy and efficiency of the model, particularly when dealing with complex or cluttered backgrounds.

In addition, the incorporation of the Bidirectional Feature Pyramid Network (BiFPN) module contributed a further 0.8% improvement in mAP. The BiFPN module is crucial for enhancing the flow of information between features at various scales within the network. By utilizing a bidirectional feature fusion strategy, the BiFPN module effectively strengthens the connections between low-level (fine details) and high-level (semantic information) features. This comprehensive integration enables the model to process multi-scale information more effectively, enhancing its ability to detect targets of different sizes and scales. As a result, the model demonstrates increased robustness and effectiveness, ensuring reliable performance across a broad spectrum of detection tasks and diverse image conditions.

Comparative performance of different architectures

To evaluate the GSB-YOLO model’s effectiveness in road crack detection, comparative experiments with other established models were conducted. Table 3 presents the detailed results.

Table 3; Fig. 11 illustrate the varying detection accuracy and mean Average Precision (mAP) levels of different models in road crack detection. The Faster-RCNN model achieves a detection accuracy of 78.4% with a mAP of 81.4%; however, due to its complex two-stage detection process, it faces challenges in recognizing fine features in complex backgrounds. In contrast, the SSD model shows a 2.4% improvement in mAP, attributed to its simplified network structure based on VGGNet, enhancing overall detection accuracy. YOLOv3, as a single-stage model, simplifies the detection process by directly predicting bounding boxes and class probabilities within a single convolutional network, thus reducing detection time. Building on this, YOLOv5 incorporates CSPNet as the backbone network, making the model more compact and efficient. YOLOv7 further improves mAP by 1.2% over YOLOv5, benefiting from faster convolution operations and enhanced fine-grained object detection capability. Among all the models compared, the GSB-YOLO model achieves the highest detection accuracy, proving to be the most effective model for road crack detection. Through a detailed analysis on a specific dataset, GSB-YOLO’s exceptional performance in this critical field is clearly demonstrated.

To objectively demonstrate the model’s improvement, we analyzed specific datasets. Figure 12 shows that the GSB-YOLO model is both more reliable and more effective at detecting road cracks, crucial for traffic safety.

To further validate the model’s generalization capability, we selected a portion of the RDD2022 dataset for verification. The RDD202239 dataset is a large-scale road damage detection source that includes images collected from diverse perspectives, such as vehicle-mounted devices, drones, and handheld devices. This dataset comprises 5,000 images, each annotated with specific types of road damage, including D00 (longitudinal cracks), D10 (transverse cracks), D20 (alligator cracks), and D40 (potholes). These images represent a wide range of road types, environmental conditions, and lighting variations. The experimental results in Table 4.

Figure 13 presents the experimental results of the RDD2022 dataset, comparing the performance metrics of the YOLOv8n and GSB-YOLO models. The chart employs four distinct colored bar graphs to represent different performance metrics: yellow for Precision (%), orange for Recall (%), red for F1 Score (%), and pink for mean Average Precision (mAP %). From the figure, it can be observed that the performance of the GSB-YOLO model is comparable to that of the YOLOv8n model across these four metrics, with GSB-YOLO slightly outperforming YOLOv8n in certain indicators. Both models achieve scores predominantly within the 80 − 100% range, demonstrating robust performance.

Discussion

To achieve more efficient and precise detection of road cracks, this study proposes an enhanced model based on GSB-YOLO. Early detection of road cracks is crucial for road safety and maintenance, as it helps prevent accidents, extends road service life, and significantly reduces maintenance costs. However, current detection methods exhibit poor adaptability in complex backgrounds and struggle with the multi-scale nature of cracks. Traditional deep learning detection models often involve large parameters and high computational demands, making them less suitable for real-time detection needs. Therefore, this study aims to develop a model architecture that balances efficiency and robustness to meet the practical requirements of road crack detection.

To this end, the GSB-YOLO model is designed with multiple innovative techniques to enhance detection performance. Firstly, inspired by linear transformation and long-range attention mechanisms, the model incorporates a lightweight network structure, reducing the parameter load in the backbone network and improving overall processing speed. Secondly, the SMC2f structure is introduced in the neck layer, calculating the “energy” of each neuron in the feature map and dynamically allocating weights, thereby enhancing adaptability and robustness in complex backgrounds. Additionally, through optimization of the Path Aggregation Network (PAN) and Bidirectional Feature Pyramid Network (BiFPN), GSB-YOLO achieves cross-scale feature fusion, strengthening the model’s ability to capture crack features of various scales.

Compared with single-stage and two-stage detection algorithms, the GSB-YOLO model demonstrates significant advantages. Single-stage detection algorithms (e.g., YOLO) typically excel in speed but often lack accuracy when dealing with complex backgrounds and fine cracks. Two-stage detection algorithms (e.g., Faster R-CNN) offer a certain precision advantage, especially in target localization, but their high computational complexity hinders real-time application. By improving the backbone structure and integrating multi-scale feature fusion mechanisms, GSB-YOLO combines the efficiency of single-stage algorithms with the high precision of two-stage algorithms. Experimental results show that GSB-YOLO not only achieves high mean average precision (mAP) in road crack detection tasks but also performs consistently across different environments, indicating strong application potential.

Despite the performance improvements in the GSB-YOLO model, it still has certain limitations. Firstly, the model’s adaptability under extreme lighting conditions (such as nighttime or highly reflective areas) is limited, with detection accuracy easily affected by light variations. Secondly, although the parameter load has been reduced through lightweight processing, GSB-YOLO still requires a certain number of computational resources, limiting its applicability on mobile and low-power devices. Additionally, when dealing with complex shapes or severely damaged cracks, the model’s recognition performance is less than ideal. This may stem from the model’s limitations in handling irregular shapes and highly similar backgrounds, highlighting the need for further enhancement in adapting to complex scenes.

Future research can further optimize the GSB-YOLO model in several ways. On one hand, the introduction of multimodal data (such as laser scanning or thermal imaging) could enhance detection performance in extreme environments, improving the model’s environmental adaptability. On the other hand, further advancements in lightweight design can be explored, using more sophisticated model compression and acceleration techniques to make GSB-YOLO more suitable for resource-constrained devices. Additionally, for handling irregular features, algorithms based on shape-adaptive attention or dynamic feature selection can be explored to strengthen the model’s detection capability for complex crack characteristics. Through these optimizations, the GSB-YOLO model is expected to further improve its robustness and applicability in real-world road crack detection, providing more reliable technical support for road maintenance and traffic safety40,41,42,43,44.

Conclusions

In this study, a new convolutional pattern was designed in the backbone layer, significantly reducing model parameters and achieving a lightweight model structure. Secondly, the SMC2f structure was developed to enhance feature extraction and detection performance, even in complex scenarios. The optimized Path Aggregation Network (PAN) enables efficient multi-scale feature fusion, enhancing the detection capability for various types of fractures. Experimental results indicate a significant improvement in mAP compared to traditional methods, demonstrating the effectiveness of this model. Despite its strong performance, the GBS-YOLO model still requires further refinement, especially in detecting very small or morphologically complex diseases. Future work will focus on further optimizing the model structure to improve detection capabilities for small-scale and complex disease fractures. Additionally, model compression techniques will be applied to reduce computational complexity, facilitating deployment on mobile devices and in resource-constrained environments.

Data availability

Data Availability StatementThis study utilized two datasets: one was collected and created by ourselves, and the other is the open-source RDD2022 dataset. Firstly, for the RDD2022 dataset, the access link is: https://crddc2022.sekilab.global/. This dataset contains the relevant data used in this study and is publicly accessible via the provided link. If you wish to obtain the dataset created by ourselves, please follow the principle: “The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.” You may contact the corresponding author via email at: yuhao.wang5@student.uq.edu.au to request access.

References

Gao, Y., Cao, H., Cai, W. & Zhou, G. Pixel-level road crack detection in UAV remote sensing images based on ARD-Unet. Measurement. 219, 113252 (2023).

Sekar, A. & Perumal, V. SS-GAN based road surface crack region segmentation and forecasting. Eng. Appl. Artif. Intell. 133, 108300 (2024).

He, X. et al. UAV-based road crack object-detection algorithm. Automation Construction. 154, 105014 (2023).

Ma, D. et al. Automatic detection and counting system for pavement cracks based on PCGAN and YOLO-MF. IEEE Trans. Intell. Transp. Syst. 23, 22166–22178 (2022).

Deng, L., Zhang, Qianni, Guo, J. & Liu, Y. An integrated method for road crack segmentation and surface feature quantification under complex backgrounds. Remote Sens. 15, 1530 (2023).

Cebon, D., VEHICLE-GENERATED ROAD & DAMAGE. A REVIEW. Veh. Syst. Dyn. 18, 107–150 (1989).

Mohan, A. P. & Poobal, S. Crack detection using image processing: A critical review and analysis. Alexandria Eng. J. 57, 787–798 (2018)

Cheng, H. D. & Miyojim, M. Automatic pavement distress setection system. Inf. Sci. 108, 219–240 (1998).

Bhardwaj, M., Khan, N. & Baghel, V. Fuzzy C-Means clustering based selective edge enhancement scheme for improved road crack detection. Eng. Appl. Artif. Intell. 136, 108955 (2024).

Yang, F. et al. Feature pyramid and hierarchical boosting network for pavement crack detection. IEEE Trans. Intell. Transp. Syst. 21, 1525–1535 (2019).

Kaseko, M. S. & Ritchie, S. G. A neural network-based methodology for pavement crack detection and classification. Transp. Res. Part. C-emerging Technol. 1, 275–291 (1993).

Zhou, J., Huang, P. S. & Chiang, F. Wavelet-based pavement distress detection and evaluation. Opt. Eng. 45, 027007 (2006).

Jiang, X., Ma, Z. J. & Ren, W. Crack detection from the slope of the mode shape using complex continuous wavelet transform. Computer-Aided Civil Infrastructure Engineering. 27, 187–201 (2012).

Shi, Y., Cui, L., Qi, Z., Meng, F. & Chen, Z. Automatic road crack detection using random structured forests. IEEE Trans. Intell. Transp. Syst. 17, 3434–3445 (2016).

Yu, Y., Rashidi, M., Samali, B., Yousefi, A. M. & Wang, W. Multi-Image-Feature-Based hierarchical concrete crack identification framework using optimized SVM Multi-Classifiers and D-S fusion algorithm for Bridge structures. Remote Sens. 13, 240 (2021).

Alam, S. Y., Loukili, A., Grondin, F. & Rozière, E. Use of the digital image correlation and acoustic emission technique to study the effect of structural size on cracking of reinforced concrete. Eng. Fract. Mech. 143, 17–31 (2015).

Ye, X., Wang, L., Huang, C. & Luo, X. Wind turbine blade defect detection with a semi-supervised deep learning framework. Eng. Appl. Artif. Intell. 136, 108908 (2024).

Wu, W., Yin, Y., Wang, X. & Xu, D. Face detection with different scales based on faster R-CNN. IEEE Trans. Cybernetics. 49, 4017–4028 (2019).

Zhou, K. et al. Evaluation of BFRP strengthening and repairing effects on concrete beams using DIC and YOLO-v5 object detection algorithm. Construction Building Materials. 411, 134594 (2024).

Li, R. et al. Automatic Bridge crack detection using unmanned aerial vehicle and faster R-CNN. Construction Building Materials. 362, 129659 (2023).

Zhou, Q., Ding, S., Qing, G. W. & Hu, J. B. UAV vision detection method for crane surface cracks based on faster R-CNN and image segmentation. J. Civil Struct. Health Monit. 12, 845–855 (2022).

Ren, S., He, K., Girshick, R. B., Sun, J. & Faster, R-C-N-N. Towards Real-Time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39, 1137–1149 (2015).

Feng, C., Zhang, H., Wang, H., Wang, S. & Li, Y. Automatic Pixel-Level crack detection on dam surface using deep convolutional network. Sensors 20 https://doi.org/10.3390/s20072069 (2020).

Xiang, X., Wang, Z. & Qiao, Y. An improved YOLOv5 crack detection method combined with transformer. IEEE Sens. J. 22, 14328–14335 (2022).

Su, P., Han, H., Liu, M., Yang, T. & Liu, S. MOD-YOLO: rethinking the YOLO architecture at the level of feature information and applying it to crack detection. Expert Syst. Appl. 237, 121346 (2023).

Xiaoxun, Z. et al. Research on crack detection method of wind turbine blade based on a deep learning method. Applied Energy. 328, 120241 (2022).

Jing, Y., Ren, Y., Liu, Y., Wang, D. & Yu, L. Automatic extraction of damaged houses by earthquake based on improved YOLOv5: A case study in Yangbi. Remote Sens. 14, 382 (2022).

Deng, J., Lu, Y. & Lee, V. C. S. Imaging-based crack detection on concrete surfaces using you only look once network. Struct. Health Monit. 20, 484–499 (2020).

Liu, Z. et al. Novel YOLOv3 model with structure and hyperparameter optimization for detection of pavement concealed cracks in GPR images. IEEE Trans. Intell. Transp. Syst. 23, 22258–22268 (2022).

Yu, Z., Shen, Y. & Shen, C. A real-time detection approach for Bridge cracks based on YOLOv4-FPM. Autom. Constr. 122, 103514 (2021).

Hu, H. et al. Road surface crack detection method based on improved YOLOv5 and vehicle-mounted images. Measurement. 229, 114443 (2024).

Ye, G. et al. Pavement crack instance segmentation using YOLOv7-WMF with connected feature fusion. Automation Construction. 160, 105331 (2024).

Dang, C. & Wang, Z. X. RCYOLO: an efficient small target detector for crack detection in tubular topological road structures based on unmanned aerial vehicles. IEEE J. Sel. Top. Appl. Earth Observations Remote Sens. 17, 12731–12744 (2024).

Yaseen, M. What is YOLOv8: An In-Depth Exploration of the Internal Features of the Next-Generation Object Detector. ArXiv abs/2408.15857 (2024).

Tang, Y. et al. GhostNetV2: Enhance Cheap Operation with Long-Range Attention. ArXiv abs/2211.12905 (2022).

Woo, S., Park, J., Lee, J. Y. & Kweon, I. S. CBAM: Convolutional Block Attention Module. ArXiv abs/1807.06521 (2018).

Doherty, J., Gardiner, B., Kerr, E. & Siddique, N. H. BiFPN-YOLO: One-stage object detection integrating Bi-Directional feature pyramid networks. Pattern Recognit. 160, 111209 (2025).

Li, Y. et al. MicroNet: Improving Image Recognition with Extremely Low FLOPs. IEEE/CVF International Conference on Computer Vision (ICCV), 458–467 (2021)., 458–467 (2021). (2021).

Arya, D. M. et al. RDD: A multi-national image dataset for automatic Road Damage Detection. ArXiv abs/2209.08538 (2022). (2022).

Mercorelli, P. A. Fault detection and data reconciliation algorithm in technical processes with the help of Haar wavelets packets. Algorithms 10, 13 (2017).

Gao, X. Z., Ovaska, S., Wang, X. & Chow, M. Y. Multi-Level optimization of negative selection algorithm detectors with application in motor fault detection. Intell. Autom. Soft Comput. 16, 353–375 (2010).

Mercorelli, P. Recent advances in intelligent algorithms for fault detection and diagnosis. Sensors 24, 2656 (2024).

Feng, Z. & Zuo, M. J. Vibration signal models for fault diagnosis of planetary gearboxes. J. Sound Vib. 331, 4919–4939 (2012).

Mercorelli, P. Denoising and harmonic detection using nonorthogonal wavelet packets in industrial applications. J. Syst. Sci. Complex. 20, 325–343 (2007).

Funding

This research was funded by Project of Xizang Changdu Science and Technology (KLSFGAAW2020.003), Yunnan Fundamental Research Projects (202301AU070129), Yunnan Fundamental Research Projects (202301BD070001-212) and The Open Fund of Yunnan Key Laboratory of Tea Science (2022YNCX004).

Author information

Authors and Affiliations

Contributions

All authors have made substantial intellectual contributions to this work: Yuhao Wang conceived the research topic, developed the code, and drafted the manuscript; Heran Zhu provided major revisions to the manuscript and improved the conceptual design of the figures; Yirong Wang contributed to manuscript editing and data refinement; Jianping Liu was primarily responsible for data collection; Jun Xie performed data cleaning and analysis; Bi Zhao contributed to the conceptual framework and provided funding support; and Siyue Zhao finalized the manuscript and supervised revisions. All authors reviewed and approved the final version of the paper.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, Y., Zhu, H., Wang, Y. et al. GSBYOLO: A lightweight Multi-Scale fusion network for road crack detection in complex environments. Sci Rep 15, 26615 (2025). https://doi.org/10.1038/s41598-025-11717-0

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-11717-0