Abstract

Deep learning models have shown remarkable success in disease detection and classification tasks, but lack transparency in their decision-making process, creating reliability and trust issues. Although traditional evaluation methods focus entirely on performance metrics such as classification accuracy, precision and recall, they fail to assess whether the models are considering relevant features for decision-making. The main objective of this work is to develop and validate a comprehensive three-stage methodology that combines conventional performance evaluation with qualitative and quantitative evaluation of explainable artificial intelligence (XAI) visualizations to assess both the accuracy and reliability of deep learning models. Eight pre-trained deep learning models - ResNet50, InceptionResNetV2, DenseNet 201, InceptionV3, EfficientNetB0, Xception, VGG16 and AlexNet,were evaluated using a three-stage methodology. First, the models are assessed using traditional classification metrics. Second, Local Interpretable Model-agnostic Explanations (LIME) is employed to visualize and quantitatively evaluate feature selection using metrics such as Intersection over Union (IoU) and the Dice Similarity Coefficient (DSC). Third, a novel overfitting ratio metric is introduced to quantify the reliance of the models on insignificant features. In the experimental analysis, ResNet50 emerged as the most accurate model, achieving 99.13% classification accuracy as well as the most reliable model demonstrating superior feature selection capabilities (IoU: 0.432, overfitting ratio: 0.284). Despite the high classification accuracies, models such as InceptionV3 and EfficientNetB0 showed poor feature selection capabilities with low IoU scores (0.295 and 0.326) and high overfitting ratios (0.544 and 0.458), indicating potential reliability issues in real-world applications. This study introduces a novel quantitative methodology for evaluating deep learning models that goes beyond traditional accuracy metrics, enabling more reliable and trustworthy AI systems for agricultural applications. This methodology is generic and researchers can explore the possibilities of extending it to other domains that require transparent and interpretable AI systems.

Similar content being viewed by others

Introduction

Human survival is heavily dependent on agriculture, as it provides essential resources such as food and fiber for sustainability and development1. Rice is the predominant food crop in numerous regions around the world. Its yield is heavily influenced by the soil type, weather conditions, biological elements, and diseases caused by bacteria, fungi, and viruses, leading to substantial economic losses2. Proper management of these diseases is crucial to ensure food security.

Initially, traditional machine learning algorithms, such as support vector machines (SVM), k-nearest neighbors (kNN), random forests, and Nave Bayes, were used to detect and classify rice leaf diseases3,4,5,6. In recent years, deep learning has become the preferred method. Deep learning algorithms bypass the need for feature engineering by learning directly from raw data. Existing studies on rice leaf disease detection have used various methods such as custom convolutional neural networks (CNNs)7,8,9,10,11,12, transfer learning13,14,15,16,17,18,19,20,21,22,23,24, ensemble methods25,26,27, and hybrid methods28,29,30. The effectiveness of these models was evaluated using standard metrics, such as accuracy, precision, recall, and F1 score. A model is considered the best-performing model if it excels in these metrics compared to the other selected models. However, deep learning models only provide the final decision without any explanation of how the decision is made. Existing studies using deep learning methods frequently encounter challenges in interpreting the decision-making process, including identifying the features considered by the models and understanding the reasons for incorrect predictions and overfitting31. This lack of transparency raises concerns about the reliability among users who struggle to grasp the basis for model decisions32. A model is reliable when it focuses on the key features that have a significant and substantial influence on the final results while carefully avoiding and excluding less important features that provide little predictive value33. A reliable model fosters accountability, confidence, and trust, ensuring that model decisions are informed and justifiable in practical applications34,35,36,37.

To build trust and reliability, it is essential to assess the performance of models based on both decision-making capabilities and explanations behind making such decisions38. Explainable Artificial Intelligence (XAI) has become increasingly important as deep learning models have become more complex, highlighting the need for transparency and interpretability in decision-making processes32,33. XAI techniques help clarify model decisions by offering visual explanations of key features that influence predictions, allowing humans to better understand and trust these models. Explainable AI techniques such as Local Explanation Method using Nonlinear Approximation (LEMNA)39, Layerwise Relevance Propagation (LRP)40, SHapley Additive exPlanations (SHAP)41, Gradient-weighted Class Activation Mapping (GradCAM)42, Gradient-weighted Class Activation Mapping++ (GradCAM++), Backpropagation (BP)43, and Local Interpretable Model-agnostic Explanations (LIME)44 are being used to offer visual explanations to assess the list of features considered by a model and their importance45,46,47,48. XAI techniques generate feature heatmap images to visualize the list of features or regions considered by a model; within the test image, they are highlighted with different colors based on their importance in the final decision-making process49,50.

Qualitative analysis of XAI visualizations involves visually inspecting heatmaps or saliency maps to understand how models focus on significant features or regions within an image during decision making. This approach reveals insights into the model reasoning process by highlighting important areas for predictions and evaluating clarity, consistency, and alignment with domain knowledge51,52,53,54. However, qualitative analysis is limited by its subjective nature, as interpretations can vary between individuals, leading to inconsistent conclusions. It is also time-consuming and challenging to manually analyze a large number of visualizations, making scalability and reproducibility difficult55. Moreover, ranking models based on their focused features is problematic due to the lack of a standardized methodology for comparison. These limitations emphasize the importance of quantitative analysis, which objectively evaluates visualizations using metrics such as the match ratio56, IoU, the accuracy score57,58, and the Jaccard index59. Quantitative evaluation ensures reproducibility, scalability, and systematic assessment, offering more reliable and comprehensive insights into how well visualizations align with the decision making process of the model.

Table 1 presents a comparative overview of various deep learning approaches used for the detection of rice leaf diseases. This table lists the details of the deep learning approaches, relevant studies, and performance metrics used by researchers to evaluate classification performance. In addition, the table specifies whether XAI techniques were used to assess the reliability of the models. Although existing studies have applied XAI techniques such as Grad-CAM, Grad-CAM++, and LIME for the detection of rice leaf diseases, their analysis has been largely limited to qualitative visual interpretations. These studies rely primarily on subjective visual inspection of saliency or heatmaps, lacking standardized metrics to objectively assess whether the model cares about agronomically significant features. As a result, conclusions drawn from such visualizations are often inconsistent, non-reproducible, and difficult to scale. Furthermore, current research does not offer quantitative benchmarking of interpretability, which is critical for problems such as plant disease management. In contrast, our study addresses this gap by focusing on quantitative analysis of XAI visualizations, employing objective metrics to evaluate the alignment between model attention and domain-relevant features. This approach ensures a more rigorous, scalable, and interpretable deployment of AI models in agricultural decision-making.

To overcome these issues, our work introduces a novel three-stage methodology that integrates qualitative and quantitative techniques for a comprehensive evaluation of deep learning models. Although most of the previous studies have relied on qualitative methods for visual explanations, these techniques face limitations in terms of scalability, reproducibility, and trustworthiness. We address these limitations by introducing a generalizable quantitative evaluation methodology, utilizing similarity metrics: Intersection over Union (IoU), Dice Similarity Coefficients (DSC), Specificity, Matthews Correlation Coefficient (MCC), Pixel-wise Accuracy (PWA), and Mean Absolute Error (MAE). These metrics are applied to evaluate the reliability and interpretability of the feature selection process along with traditional classification performance. In addition, our methodology distinguishes itself by going beyond traditional qualitative assessments and incorporating quantitative measures to objectively validate the explanations provided by XAI techniques. This combination of classification and interpretability evaluation ensures that our methodology is robust and comprehensive. By providing a detailed comparison of visual explanations and their alignment with ground-truth images, we offer a more reliable and scalable solution. This novel integration of quantitative metrics and the focus on both classification and feature selection make our contributions unique and advance the field of XAI.

The primary objectives of this work are to:

-

Highlight the limitations of using conventional classification performance metrics alone for evaluating the performance of deep learning models.

-

Develop a new methodology for comprehensive evaluation of the performance of deep learning models in the context of rice leaf disease detection.

-

Using XAI methods to visualize the features (along with their significance) considered by the selected deep learning models.

-

Identify the best performing and reliable model by evaluating both classification accuracy and its ability to consider the most significant features for decision making.

In our work, we used the terms “interpretability” and “explainability” interchangeably, acknowledging that while they can denote distinct concepts, the nuances often overlap in the context of XAI70,71. Many researchers and practitioners also use these terms interchangeably, reflecting a common understanding that both aim to enhance understanding of model behavior and decision-making processes72.

The content of this paper is structured as follows. Section Introduction introduces the automatic detection of rice leaf diseases and associated discussions. Section Related Works provides related works related to this study. The methodology adopted in this study is described in Section Proposed methodology. Section Experimental analysis describes the experimental setup, results, and analysis of results. The related discussions and limitations are presented in Section Discussions. Finally, Section Conclusion and future directions presents the conclusive remarks and future directions.

Related works

In recent years, researchers have developed innovative methods for the automatic detection and classification of leaf diseases of plants. This section reviews and analyzes some of these approaches, focusing on how XAI techniques have been integrated to enhance the interpretability and usability of the model. Deng et al.54 proposed an ensemble method for the detection of rice leaf diseases and achieved an accuracy of 91%. To provide visual explanations, the authors used GradCAM, GradCAM++, and Guided Backpropagation techniques. Shovon et al.52 proposed a deep ensemble model for rice leaf disease detection, GradCAM and Score-CAM were used to provide visual explanations of the features considered by the model. Bijoy et al.51 proposed a lightweight deep convolutional neural network method for the detection of rice leaf diseases and achieved an accuracy of 99.81%. They used the GradCAM technique to visualize the regions in the images used for disease detection. Altabaji et al.53 developed a modified LeafNet for rice leaf disease detection, and an intermediate class activation map (ICAM) is used for visual explanations.

Wei et al.47 used SmoothGrad, LIME, and GradCAM and performed experiments on a fruit leaf disease dataset48 to interpret VGG, GoogleNet and ResNet models. Nahiduzzaman et al.46 proposed a lightweight parallel depth-wise separable CNN model for the detection of Mulberry leaf disease, and the SHAP technique was used to visualize the model feature explanations. The proposed model achieved an accuracy of 95.05%. Bhandari et al.45 used EfficientNetB5 to detect diseases of tomato leaves. This model achieved an accuracy of 99.07%. In this study, the authors used GradCAM and LIME techniques to visualize and interpret the predictions of the model. Bilal et al.81 developed a crop monitoring system that combines data-driven crop forecasting with a transfer learning model to detect pests and diseases, achieving strong results in multiple datasets. In82, the authors proposed a deep CNN architecture that incorporates fuzzy layers and optimization of chaotic particle swarms for the detection of cucumber disease. The proposed model achieved an accuracy of 98.00%.

Tariq et al.83 proposed a VGG16 deep learning model for classifying corn leaves. Layer-wise Relevance Propagation (LRP) was used to enhance the model’s transparency by generating intuitive and human-readable heat maps of input images. Natarajan et al.84 proposed a HerbNet model to classify leaves of plant diseases. The authors used occlusion sensitivity and GradCAM techniques to visualize and interpret the predictions of the model. Prashanthi et al.85 proposed a LEViT model to classify various leaf diseases of plants. To provide visual explanations of the model, the authors used the GradCAM technique. Al-Gaashani et al.86 proposed the EAMultiRes-DSPP model to improve the classification performance of plant diseases. CAM was used to visualize the discriminatory regions considered by the proposed model. In87, the authors proposed an MSCPNet to classify maize leaf diseases and used GradCAM for the model analysis.

Table 2 represents recent studies that have used XAI methods to compare the performance of classification models and interpret their results. Although these studies primarily focused on visualizing the features utilized by the models, they did not extensively assess and compare the ability of these methods to extract relevant features from the models. Only a few studies focused on quantitative analyzes and in their studies used the Jaccard index, accuracy score, and match ratio. In our proposed study, we incorporate several quantitative measures for a comprehensive evaluation.

Proposed methodology

The proposed methodology consists of three stages. Initially, eight pre-trained deep learning models were selected, and a rice leaf disease image data set was considered and pre-processed. These models were then trained using a transfer learning approach. Their classification performance was evaluated using conventional metrics such as accuracy, precision, recall, F1 score, and specificity to identify the best performing model.

In the second stage, the Local Interpretable Model-agnostic Explanations (LIME) method was used to visualize the features extracted by the models trained in the first stage. The effectiveness of feature selection for each model was then assessed using various similarity matching methods, including Intersection over Union (IoU), Dice Similarity Coefficients (DSC), Sensitivity, Specificity, Matthews Correlation Coefficient (MCC), Pixel-wise Accuracy (PWA) and Mean Absolute Error (MAE). This assessment aimed to determine the reliable model based on its feature selection capabilities.

In the third stage, a comparative analysis of the results of the first two stages was conducted to identify the models that consider the most significant features and exhibit high classification performance. To quantify the degree to which models rely on insignificant features, the overfitting ratio is used. By examining the overfitting ratio, we determined which models maintain high classification performance without overfitting, thereby ensuring better generalizability to real-world scenarios. The methodology used in this study is illustrated in Fig. 1.

First stage

Model selection

In this study, eight pre-trained models, ResNet5088, InceptionResNetV289, DenseNet 20190, InceptionV391, EfficientNetB092, Xception93, VGG1694 and AlexNet95, are used. These models are initialized with pre-trained weights from ImageNet96 before performing transfer learning. The selection of these specific models is motivated by the need to represent a wide range of complexities in deep learning architectures. By including models with varying numbers of layers and feature extraction capabilities, from simple models like AlexNet to more complex ones like InceptionResNetV2 and DenseNet 201, we ensured that the study covered various levels of model complexity.

The selected models were trained under a consistent experimental configuration to ensure fairness in comparison. The dataset, augmentation strategies, and hyperparameters were kept identical across models. The output layer of each model was modified to include four neurons, corresponding to the four classes of rice leaf diseases. This uniform training setup ensures that observed differences in performance or explainability are attributable to architectural differences, not experimental inconsistencies. The pre-trained models and their geometric features are tabulated in Table 3.

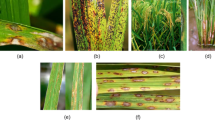

Data collection

Rice leaf disease data is collected from Kaggle97 repository, which is publicly available. This data set covers four main rice leaf diseases: Brown Spot (BS), Leaf Blast (LB), Bacterial Leaf Blight (BLB), and Leaf Scald (LSD). The external anatomy of rice plants and images depicting four types of rice leaf disease along with their symptoms are presented in Fig. 2.

Data augmentation & resizing

The collected rice leaf disease dataset contained 438 images in each class. We used data augmentation techniques to address limitations such as a smaller dataset size and class imbalance, which can lead to overfitting. These techniques, such as scaling, random flipping, cropping, rotating, and zooming, are applied to expand the size and diversity of the training dataset, resulting in a total of 4,016 images. After data augmentation, the number of images per class increased to 1,004 images. In data preprocessing, the images are resized to match the standard input size requirement of the respective deep learning models, i.e., 224 \(\times\) 224, 227 \(\times\) 227, or 299 \(\times\) 299 pixels. In our study, we split the dataset into a 60:40 ratio for training and testing purposes. This means 60% of the data was used to train the model and the remaining 40% was used to test. After splitting the data, we evaluated the performance of the model using several metrics, including classification accuracy, precision, recall, and the F1 score. This process was repeated 10 times and the average values of these performance metrics were calculated.

Transfer learning

We set the learning rate to 0.0001, the batch size to 32, and the number of epochs to 30. Categorical cross-entropy served as the loss function, while the softmax activation function was employed in the output layer. The output layer was replaced with four neurons representing four classes of rice leaf disease. The dropout function is used to prevent overfitting. We have selected these hyperparameter values based on our previous experience and domain knowledge14. Further experimentation is required to identify the optimal parameters to fine-tune the performance of the selected deep learning models. Advanced hyperparameter selection algorithms98,99 can be considered with increased computational overheads.

Performance evaluation using conventional metrics

In the first stage, conventional evaluation metrics named classification accuracy, precision, recall, and F1-score are used to measure the performance of the selected pre-trained models. The classification accuracy is used to quantify the effectiveness of the image classification model, calculated as the ratio of correctly classified images to the total number of classified images, as expressed in equation (1). Precision and recall are used to measure the accurate predictions of the models based on positive prediction rates, as defined in equations (2) and (3). The F1-score metric is used to evaluate the comprehensiveness and reliability of the model (4).

Here, True Positive (TP) is an image correctly classified as having a specific disease when it actually has that disease, while True Negative (TN) is an image correctly classified as not having a specific disease when it actually does not have that disease. False Positive (FP) is an image incorrectly classified as having a specific disease when it actually does not have that disease, and False Negative (FN) is an image incorrectly classified as not having a specific disease when it actually does have that disease.

Second stage

Generation of visual explanations by LIME

In this study, we used the LIME method to visualize the features considered by a deep learning model for decision-making44. We refer to these visual explanations produced by LIME as feature heatmap images. Feature heatmap images visualize influential features or regions and highlight their level of significance in decision making100,101. The importance of features is highlighted in the feature heatmap images by superimposing color-coded gradients. Each feature or region is assigned a color according to its significance, with warmer colors such as red denoting higher importance, while cooler colors such as blue indicate lower importance102.

LIME was selected for this study because of its unique ability to provide both visual explanations and rank feature importance indices, which are essential for masking and extracting the most influential regions of the input image. While other XAI techniques such as ICAM, Grad-CAM, and Grad-CAM++, generate heatmaps and saliency maps that help visualize model focus, they do not offer feature significance scores or explicit indices. This limits their utility for pixel-wise quantitative evaluation, which is critical for our framework. However, we acknowledge that LIME has known limitations, including instability across runs due to its reliance on random perturbations, and locality issues arising from its use of linear surrogate models to approximate complex decision boundaries. To mitigate these issues, we maintained consistent perturbation settings across all evaluations and averaged results across multiple samples.

LIME enhances feature visualization by providing detailed feature significance indices and values, essential to understand the contribution of each feature to model predictions. It generates perturbed instances to analyze how feature variations impact outputs, allowing for the quantification of feature importance. This capability enables the identification and masking of the required number of significant features, leading to deeper insights into the behavior of the model than other XAI methods GradCAM, and GradCAM++. Figures 3 and 4 compare three XAI techniques - GradCAM, GradCAM++, and LIME applied to selected deep learning models for leaf blast and leaf scald diseases, respectively. Each technique highlights the regions of infected rice leaves, showing how models focus on disease spots to make their predictions. Qualitative analysis of these XAI visualizations provides insights into model reasoning by highlighting key features but is subjective, time consuming, and lacks scalability. Its limitations emphasize the need for quantitative analysis, which offers objective, reproducible metrics to evaluate visualization accuracy, reliability, and alignment with model decision making.

Table 4 provides a detailed comparison of various XAI techniques used for visual and quantitative analysis of model decisions. This table presents key aspects such as visualization methods, their feature importance quantification capabilities, and extraction difficulty, while highlighting their suitability for visual and quantitative analysis. Based on the comparative analysis shown in Table 4, LIME is selected as the primary technique for our study due to its unique combination of feature importance quantification and feature extraction capabilities. As shown in the table, GradCAM and GradCAM++ excel in generating visual representations through heat maps and activation maps that show which image regions the model focuses on. In contrast, LIME provides a distinct advantage by quantitatively measuring the contribution of each feature to the final prediction through feature significance indices. This capability of LIME is particularly valuable for our research, as it enables precise measurement and interpretation of the decision making process of the model.

The visulization of the basic working mechanism and outcomes of LIME method is shown in Fig. 5. The images in Fig. 5 (a) displays the basic working mechanism of LIME, while Fig. 5(b) displays the masked image with the most significant 8 and 12 features generated from the feature heatmap images. Masked images are generated by masking out the most significant n features using their indices in feature heatmap images. These masked images are then converted into binary images by considering all background pixels as ’0’ and the remaining pixels as ’1’.

Performance evaluation using quantitative metrics

After generating masked binary images, the efficiency of the features considered by each model is measured using quantitative metrics to assess the similarity between the features selected by the models and the significant features marked in the ground-truth images. Quantitative metrics are used to compare two binary images to quantify the degree of similarity or overlap between the features represented by the two images. We used quantitative metrics such as IoU103, DSC104, Sensitivity, Specificity, MCC, PWA, and MAE defined in equations (5) - (11).

The intersection over union (IoU) is the area of intersection between the two regions over the area of their union. The IoU ranges from 0 to 1, where 0 indicates no similarity and 1 indicates perfect similarity between the two given binary images.

The Dice Similarity Coefficient (DSC) considers both the overlap and the individual sizes of the two images. The DSC ranges from 0 to 1, where 0 indicates no similarity and 1 indicates perfect similarity. It is calculated as follows:

Sensitivity or Recall is the ratio of the number of correctly predicted features (intersection of A and B) to the total number of pixels in the ground truth image (A). Sensitivity ranges from 0 to 1.

Specificity measures the ability of the model to correctly identify non-lesion pixels. It is the ratio of correctly classified negative pixels to the total number of actual negative pixels in the ground truth image. The specificity ranges from 0 to 1, where 0 indicates no similarity and 1 indicates perfect similarity.

The Matthews correlation coefficient (MCC) considers the ability of the model to correctly predict both the lesions and non-lesions. MCC ranges from -1 to 1, where 1 indicates perfect similarity, 0 indicates no correlation, and -1 indicates no similarity.

Pixel-wise accuracy (PWA) is the ratio of correctly classified pixels to the total number of pixels in the ground truth image.

The mean absolute error (MAE) quantifies the average magnitude of errors between the predicted and ground truth pixel values. It is calculated as the mean of the absolute differences between the corresponding pixel values in the two images.

where:

-

A is the pixel set corresponding to the lesion region in the ground truth binary image

-

B is the pixel set corresponding to the feature regions considered by a model

-

\(\lnot A\) is the pixels that do not belong to the lesion region in the ground truth binary image

-

\(\lnot B\) is all the pixels that are not considered as features by the model

-

n represents the total number of pixels in the images.

The intersection \((A \cap B)\) is the pixels present in both sets A and B, while the union \((A \cup B)\) is all the pixels present in either A or B. Here, |A|, |B| represent the total number of pixels in sets A, B, and \(|A \cap B|\), and \(|A \cup B|\) represents the total number of pixels in the intersection \(A \cap B\), and their union \(A \cup B\), respectively. \(|A \cap (\lnot B)|\) represents pixels that are part of the lesion region according to the ground truth but are not identified as the most significant features by the model, \(|(\lnot A) \cap B|\) represents pixels identified as the most significant features by the model but do not belong to the lesion region according to the ground truth. \(|(\lnot A) \cap (\lnot B)|\) pixels correctly identified as not belonging to the lesion region by both the model and the ground truth.

IoU and DSC measure the overlap between the two images, the specificity metric focuses on non-lesion pixels, and quantifies the ability of the model to accurately identify pixels that do not belong to the lesion area. Sensitivity measures the proportion of actual lesion pixels correctly identified by the model, indicating how well the model detects the lesion areas. MCC is a balanced metric that considers both lesion and non-lesion pixels. PWA measures the proportion of correctly classified pixels in the entire image, over the total number of pixels in ground truth. MAE measures the mean absolute difference between the pixel values of the ground truth and masked images, indicating the average magnitude of errors.

Third stage

In the third stage, we performed a comparative analysis between the results of the first and second stages. This analysis identified the best performing and reliable model that achieves the best classification performance while considering the most significant features. However, some models such as EfficientB0 achieved a high classification performance but failed to capture significant features. In contrast, models such as ResNet50 achieved good performance even when considering the least significant features. This indicates that they might be overfitting the training data.

To quantify the degree of overfitting, we introduced a new quantitative metric called the overfitting ratio defined in equation (12). The overfitting ratio ranges from 0 to 1, where 0 indicates that the predictions of the model perfectly align with the binarized ground truth, focusing only on the significant features, and 1 indicates that none of the predicted features overlap with the binarized ground truth, implying the model is focusing entirely on irrelevant features.

A higher overfitting ratio indicates that the model is focusing on the least significant feature details in the image and may not generalize well to unseen data. The models with the highest overfitting ratio are the most unreliable, while those with the lowest overfitting ratio are the most reliable.

where:

-

\(GT_B\) is the binarized ground truth image

-

\(MI_B\) is the binarized masked image

Example of Interpretation: Consider a rice leaf image with a bacterial leaf blight lesion, where the binarized ground truth image (\(GT_B\)) contains 1000 pixels marked as the diseased region. Suppose a model, using LIME, identifies 1200 pixels as significant features in its binarized masked image (\(MI_B\)), but only 600 of these pixels overlap with the ground truth.

The number of non-overlapping pixels is \(|MI_B - GT_B| = 1200 - 600 = 600\).

This result indicates that 50% of the features selected by the model are irrelevant to the actual diseased region, suggesting potential overfitting to non-disease-related patterns, such as background noise or healthy leaf areas. For comparison, ResNet50, as shown in Table 7, achieves a lower overfitting ratio of 0.284 for BLB with 10 features, indicating a stronger focus on relevant disease features and better reliability. In contrast, InceptionV3, with an overfitting ratio of 0.544, suggests greater reliance on insignificant features, reducing its generalizability.

The overfitting ratio quantifies how much the identified significant features of the model overlap with the actual ground truth significant features. High overfitting occurs when many features identified by the model do not correspond to the ground truth. A lower overfitting ratio indicates better model performance, with a higher correspondence between identified and ground truth features. The graphical representation of measuring overfitting is depicted in Fig. 6.

Quantitative metrics such as IoU and DSC measure feature overlap but do not specifically quantify the extent to which models focus on irrelevant features, a critical factor in evaluating overfitting. The overfitting ratio addresses this gap by providing a standardized quantitative measure of feature irrelevance, calculated as the proportion of non-overlapping pixels in the feature selection of the model relative to the ground truth. This is particularly valuable in agricultural applications like rice leaf disease detection, where subtle visual differences require precise feature identification, and overfitting to irrelevant features can lead to unreliable predictions in real-world scenarios with environmental variability.

Experimental analysis

This section presents the experimental analysis performed to evaluate both the classification performance and the interpretability of the selected models using a structured, quantitative approach. The evaluation is organized into three stages, with the corresponding metrics and their descriptions summarized in Table 5.

In the first stage, eight pre-trained deep learning models were fine-tuned on a pre-processed rice leaf disease dataset using transfer learning. Their classification performance was evaluated using conventional metrics such as accuracy, precision, recall, F1-score, and specificity, derived from the confusion matrix. These metrics assess the ability of the model to correctly classify healthy and diseased samples.

In the second stage, the interpretability of each model was examined using the Local Interpretable Model-agnostic Explanations (LIME) technique. The saliency maps generated by LIME were compared with expert-annotated ground truth masks to assess how accurately the models focused on disease-relevant regions. This pixel-level comparison was quantitatively measured using metrics including Intersection over Union (IoU), Dice Similarity Coefficient (DSC), Sensitivity, Specificity, Matthews Correlation Coefficient (MCC), Pixel-Wise Accuracy (PWA), and Mean Absolute Error (MAE).

In the third stage, the results from the previous stages were jointly analyzed to identify models that not only perform well but also rely on meaningful features. To support this analysis, a novel metric called the Overfitting Ratio was introduced. This metric quantifies the reliance on irrelevant regions by comparing the predicted saliency map with the ground truth, enabling the detection of overfitting in visual explanations. Together, these stages ensure the selection of models that are both accurate and interpretable for reliable use in real-world agricultural scenarios.

The experiments are carried out using the MATLAB R2023a platform on a workstation with an NVIDIA GeForce GTX 1080 Ti graphics card, 64 GB of RAM, and an Intel(R) Xeon(R) W-2125 processor with a clock speed of 4.00 GHz.

Performance comparison of transfer learning models with conventional metrics

In our study, we split the data set into a 60:40 ratio for training and testing purposes. This means 60% of the data was used to train the model and the remaining 40% was used to test. After splitting the data, we evaluated the performance of the model using several metrics, including classification accuracy, precision, recall, and the F1 score. We then repeated this process 10 times and subsequently computed the mean values of these performance metrics. A comparative analysis of the performance of selected pre-trained CNN models is presented in Table 6. The data in the table show that ResNet50 outperformed the other models in classification accuracy, achieving 99.13%, followed by InceptionResNetV2 and DenseNet201 with an accuracy of 98. 91% and 98. 75%, respectively. EfficientNetB0 and InceptionV3 achieved an accuracy greater than 95%, but VGG16 and AlexNet exhibited a lower classification accuracy compared to other pre-trained models.

In terms of precision, ResNet50 outperformed other models with the highest score of 98.12%, exceeding InceptionResNetV2 by 0.3%, EfficientNetB0 by 1.05%, VGG16 by 9.89%, and AlexNet by 12.54%. ResNet50 achieved the highest recall of 98.07%, which is higher than the other models. ResNet50 also scored the highest F1 score, which was 0.25% higher than InceptionResNetV2, 10.1% higher than VGG16, and 12.6% higher than the AlexNet. ResNet50 achieved the highest precision, recall, and F1 score among all models, demonstrating its superior classification performance on the selected dataset.

Fig. 7 illustrates the performance of the ResNet50 model on the rice leaf disease dataset through a confusion matrix and ROC curves. The confusion matrix exhibits high classification accuracy across the four disease classes with an overall accuracy of 99.13%. Most classes are predicted with near-perfect accuracy, with only minor misclassifications observed, particularly between Leaf Blast and Brown Spot. The ROC curves, generated using a one-vs-rest approach, further support the reliability of the model, showing AUC values above 0.95 for all classes. These results collectively confirm the strong discriminative ability and robustness of the ResNet50 model in multiclass leaf disease classification tasks.

Visualization of feature heatmap images and their masked images with most significant n features using various pre-trained CNN models using LIME: (a) Original leaf disease image; (b) Feature heatmap images generated by LIME method; (c) Masked images with most significant 10 features; (d) Masked images with most significant 12 features; (e) Masked images with most significant 14 features; (f) Masked images with most significant 16 features.

Performance comparison of feature selection capabilities with quantitative metrics

LIME is used to generate feature heatmap images that highlight feature regions in the images considered by a model for decision-making. We considered four classes of rice leaf disease, Brown Spot, Leaf Blast, Bacterial Leaf Blight, and Leaf Scald, for the XAI experiment. LIME is applied to visualize the features considered by the model for each class. However, in the experimental analysis, we present the complete results for the Brown Spot class as a representative example to maintain clarity and focus.

Models that exhibit high classification performance while effectively identifying the most significant features are likely to perform well in real-world scenarios. However, some models, despite achieving high accuracy, fail to capture these critical features, causing overfitting. Although these models may excel in training data, they are prone to underperform in real-world applications. This suggests that high classification accuracy alone is insufficient; models must also focus on the most relevant features to ensure reliability and robustness in real-world conditions.

To examine the decision-making process, we visualized and highlighted the most significant n features from the feature heatmap images. We have generated masked images with the most significant n features varying n from 8 to 18 in steps of 2 to analyze the impact of considering different numbers of features in the order of their significance. We chose moderate n values (e.g. 8, 10, 12, 14, 16, 18) to achieve a balance between capturing the most significant features and avoiding the inclusion of less significant ones. Selecting too few features risked missing the most significant feature regions, while selecting too many features diluted the explanation by including regions that were less significant. Further experimentation is required to identify the optimal number of features. The feature heatmap images and the masked images with the most significant n features of LIME for the image of the diseased leaf are shown in Fig. 8. The images in Fig. 8(a) is the original leaf disease image, Fig. 8(b) is the feature heatmap image generated by the LIME method, Fig. 8(c), Fig. 8(d), Fig. 8(e) and Fig. 8(f) are the masked images with most significant 10, 12, 14, and 16 features, respectively.

To calculate the similarity between two binary images, we experimented with the masked binary images of the most significant 10 features generated by LIME and the ground truth images. Initially, we have selected the pre-trained CNN model and diseased leaf image and used the LIME method to generate feature heatmap images. We have generated masked images with the most significant 10 features from these images and converted them into binary images by considering all background pixels as ’0’ and foreground pixels as ’1’. Next, quantitative metrics are applied between masked binary images and ground truth images to find agreement. The ground truth image is a reference image with manually segmented regions of interest using the graph cut algorithm in MATLAB segmentation toolbox105. Automatic image segmentation techniques can generate ground truth images of the diseased leaves. Subsequently, the similarity matching between the masked binary image of the most significant 10 features generated by LIME and the ground truth image is measured.

Intersection over Union of feature heatmap images with the most significant 10 features generated by LIME and ground truth: (a) Original BLB disease image; (b) Masked images with most significant 10 features; (c) Binary image of (b); (d) Ground truth image of (a); (e) Binary image of (d); (f) Intersection over Union of (c) and (e). Ground truth segmentation and masked binary image with the most significant 10 features from InceptionV3, EfficientNet B0, AlexNet, DenseNet201, Xception, VGG16, InceptionResNetV2, and ResNet50; Color coding: White: The overlapping area between the ground truth and the masked binary image. Pink: Pixels present in the masked binary image of the most significant 10 features. Green: Ground truth region.

Various quantitative metrics, such as IoU, DSC, sensitivity, specificity, MCC, PWA, and MAE, are used to measure agreement. Each metric provides a unique perspective on the degree of overlap and agreement between the features selected by the models and the ground truth. To further explore feature selection, similar experiments were conducted with masked binary images containing the most significant 12 and 14 features. The corresponding results are tabulated in Table 7.

Intersection over Union of feature heatmap images with the most significant 14 features generated by LIME and ground truth: (a) Original BLB disease image; (b) Masked images with most significant 14 features; (c) Binary image of (b); (d) Ground truth image of (a); (e) Binary image of (d); (f) Intersection over Union of (c) and (e). Ground truth segmentation and masked binary image with the most significant 14 features of InceptionV3, EfficientNet B0, AlexNet, DenseNet201, Xception, VGG16, InceptionResNetV2, and ResNet50; Color coding: White: The overlapping area between the ground truth and the masked binary image. Pink: Pixels present in the masked binary image of the most significant 14 features. Green: Ground truth region.

Taking into account the most significant 10 features, ResNet50 exhibited results with a high IoU of 0.432 and a DSC of 0.594. These values indicate a substantial match between the features considered by the model and the ground truth image. The high IoU and DSC values indicate more overlap between features considered by the model and ground truth regions.

Moreover, the ResNet50 model demonstrates balanced performance in terms of sensitivity, specificity, and MCC, indicating its ability to accurately identify both lesion and nonlesion pixels. Furthermore, the model achieves a PWA of 0.800, indicating that 80% of the pixels representing the actual features are correctly identified in the image. Furthermore, MAE is measured at 0.200, indicating a relatively low mean absolute difference between the pixel values of the ground truth and masked images.

In contrast, InceptionV3 exhibited a lower IoU of 0.295 and a DSC of 0.441, which indicates that the model considered less significant features, resulting in a poor match between the features considered by the model and the ground truth images. The low IoU and DSC values indicate a limited overlap between the features selected by the model and ground truth images. Furthermore, the model exhibits a pixel-wise accuracy of 0.712, indicating that approximately 71.2% of the pixels that represent the actual features are correctly identified across the entire image. Additionally, the mean absolute error is measured at 0.287, suggesting a higher average absolute difference between the pixel values of the ground truth and the masked images compared to other models.

The IoU value achieved in our experiments is 0.5. Several factors may contribute to this outcome, including imperfect binarization, spatial misalignments, improper feature extraction, and inconsistencies in ground truth labeling. Furthermore, limitations in XAI visualization techniques such as challenges in interpretability, lack of robustness, oversimplification of model behavior, and sensitivity to changes in input can also affect this result. These limitations may lead to misleading conclusions about the ability of the model to extract significant features. Further experimentation is essential to pinpoint the causes of these misalignments and to enhance model interpretation.

As the number of features increased to 12 and 14, ResNet50 maintained consistent performance, indicating its ability to select the most significant features effectively. However, EfficientNetB0 and InceptionV3 exhibited a high classification performance, but selected some less significant features. The performance comparison of the selected models on various quantitative metrics is shown in Fig. 9.

Existing XAI methods such as GradCAM, and GradCAM++ effectively visualize model-considered features and their significance using saliency maps and heatmaps. However, they do not provide feature significance values or indices, which complicates the identification of the most important features. In contrast, LIME not only visualizes these features but also offers feature significance indices and values, allowing us to accurately mask the most significant features identified by the model. Due to the inability to mask significant features using the other methods, we were unable to perform a comparative analysis.

Comparative visualization of feature heatmaps for masked images containing the most significant 10 and 14 features, identified by various pre-trained models for detecting rice leaf disease, is shown in Fig. 10 and Fig. 11. Each row corresponds to a model and includes six subimages: the original diseased leaf image, the most significant 10 and 14 masked images, a binary version of these masked images, the ground truth segmentation, a binary ground truth image, and an IoU overlay. The IoU overlay highlights the overlap between the predicted features and the ground truth, with white indicating an overlap area, pink indicating features identified by the model but not present in the ground truth, and green showing the actual diseased regions. ResNet50 provides the highest overlap, indicating strong agreement with the ground truth, while EfficientNetB0 and InceptionV3 show less overlap, indicating issues with accurately identifying diseased regions.

We incrementally selected the most significant n features (e.g., 8, 10, 12, 14, 16, 18) and observed that the IoU value improved initially with more features, indicating better alignment between the predictions of the model and the ground truth. However, beyond a certain point, adding more features introduced irrelevant ones, causing the IoU to decrease. This highlights the need for careful feature selection, as including too many features can reduce precision. Fig. 12 shows a graph representing the relationship between the number of selected features and the mean IoU values. The x-axis denotes the number of features, with increments of 8, 10, 12, 14, 16 and 18. The y-axis represents the mean IoU values, which reflect how well the predictions of the model align with the ground truth images. The graph shows that IoU values increase to 14 features, indicating that these are considered the most significant features. However, after 14 features, the IoU values started to decrease. This decrease indicates that the inclusion of additional features introduces less significant features, which negatively impacts IoU scores. Further experimentation is required to identify the optimal number of features to maintain a balance between relevance and accuracy.

Comparative analysis of model performance

We compared the results obtained in the first and second stages to identify the best performing and reliable models that effectively considered the most significant features and exhibited good classification performance. ResNet50 emerged with higher classification performance and effectively considered to have the most significant features compared to other pre-trained CNN models. However, while some models achieved high classification performance, they did not capture these significant features. In contrast, other models performed well even when considering the least significant features. InceptionV3 and EfficientNetB0 achieved a higher classification accuracy but considered less significant features compared to ResNet50. In contrast, VGG16 showed lower classification accuracy, but is considered to have more significant features compared to InceptionV3. This indicates that the models might be overfitting the training data.

Although InceptionV3 and EfficientNetB0 achieved good classification accuracy, more than 95%, they exhibited the lowest IoU, DSC, and PWA values. This indicates that these models might be considering less significant features, potentially due to overfitting, where the models considered the training data too well and were difficult to adapt to new unseen data. In this case, overfitting could have led the models to prioritize features of less significance to disease identification, resulting in a significantly lower overlap with the actual ground truth compared to the other models. Despite their high accuracy, the low similarity metrics of InceptionV3 and EfficientNetB0 raise concerns about reliabilty.

In existing studies, overfitting is often acknowledged but not quantified. We introduced a new metric called the Overfitting Ratio to quantitatively evaluate whether models prioritize significant features or concentrate on less relevant ones. The Overfitting Ratio ranges from 0 to 1, where 0 indicates that the predictions of the model perfectly align with the ground truth, demonstrating a focus on significant features. In contrast, a score of 1 means that there is no overlap between the predicted features and the ground truth, indicating that the model is entirely focused on irrelevant features. This quantitative approach provides a clearer understanding of how well models capture important information in their predictions.

We calculated the overfitting ratio for various pre-trained CNN models, considering different numbers of features (10, 12, and 14) identified as most significant by the LIME method. The mean overfitting ratio for all features of each model was then calculated and presented in Table 8.

From the results of the overfitting ratio, ResNet50 consistently achieved the lowest overfitting ratio in all disease classes, with mean values of 0.284, 0.325, and 0.385 for various numbers of features. This indicates that ResNet50 focuses on the most significant features within the diseased regions of the images. Since the model avoids overfitting to the least significant details, it is likely to perform well on unseen data, making it a more generalizable and reliable choice for real-world applications.

In contrast, InceptionV3 exhibited the highest overfitting ratio in all classes with mean values of 0.544, 0.552, and 0.569, respectively. A higher overfitting ratio indicates that the model selected features outside the diseased area. This dependence on less significant details could lead to performance degradation when introducing new data that are not present in the training set. Therefore, while InceptionV3 might achieve high classification accuracy in the training data, there is a risk of overfitting, making it less generalizable and potentially less reliable for real-world scenarios.

Finally, considering both classification performance and overfitting ratio, ResNet50 emerged as the optimal model for the detection of rice leaf diseases. It achieved the highest classification accuracy while exhibiting the lowest overfitting ratio. This indicates that ResNet50 effectively captures the most significant features within diseased regions, making it more likely to generalize well on unseen data.

Discussions

The objective of this study is not to create more efficient detection methods or to enhance the performance of existing ones. Instead, our focus is on developing a methodology for the quantitative assessment of XAI visualizations in image classification models. This addresses a gap in the literature, where most studies have relied solely on qualitative analysis. We introduced a methodology that evaluates the performance of deep learning models in terms of both classification accuracy and feature extraction capabilities. Future researchers can employ this evaluation procedure to assess their models not only on the basis of classification accuracy, but also to quantitatively validate XAI visualizations. This methodology is designed to be generic and applicable to image classification or recognition tasks and various domains such as healthcare, agriculture, ecology, environmental science, and more.

In our study, we proposed a three-stage methodology to verify the reliability of deep learning models. In the first stage, the transfer learning technique is used to train the eight selected pre-trained CNN models named ResNet50, InceptionResNetV2, DenseNet201, InceptionV3, EfficientNetB0, Xception, VGG16, and AlexNet on a rice leaf disease dataset. Conventional performance evaluation metrics such as classification accuracy, precision, recall, and F1 score were used to assess the efficiency of the models. Among these metrics, ResNet50 consistently outperformed the other models, achieving a high classification accuracy of 99.13%. It is observed from the first stage results that ResNet50 exhibits the highest classification performance.

In the second stage, we used an LIME technique to provide visual explanations, assessing the ability of the model to consider the most significant features. With the LIME method, we visualized the features considered by the models using feature heatmaps. Additionally, we generated masked images highlighting the ’n’ most significant features identified from the feature heatmap images.

To measure the similarity between the most significant features considered by the models and the actual features in the ground truth, we used several quantitative metrics such as IoU, DSC, sensitivity, specificity, MCC, PWA and MAE. From the results, it is observed that ResNet50 has a higher IoU, DSC, and PWA than all the other models. This indicates that the features considered by the ResNet50 model overlap well with the features of the ground truth image. However, InceptionResNetV2, VGG16, DenseNet201 and AlexNet also performed well. Although InceptionV3 and EfficientNetB0 models provide high classification performance, they had the lowest IoU, DSC, and PWA values. This indicates that the features considered by these models do not overlap well with the ground truth image features, which leads to overfitting.

In the third stage, we performed a comparative analysis (qualitative and quantitative analysis) between the results of the first and second stages. This analysis identified the best-performing and most reliable model that achieves the best classification performance while considering the most significant features. However, some models achieved high classification performance, but did not capture these significant features. In contrast, other models achieved good performance even when considering the least significant features, indicating that they might be overfitting the training data.

We identified the best-performing and reliable model that provides the best classification performance and considers the most significant features using the overfitting ratio as a metric. From the analysis of overfitting ratios, it is observed that the InceptionV3 and EfficientNetB0 models had the highest overfitting ratios. Despite their good classification performance, these models select the least significant features, making them the most unreliable models. ResNet50 has the lowest overfitting ratio and maintains good classification performance, which means that it selects the most significant features of the image. Such models are the most reliable and work well in real-time scenarios.

However, there are several limitations in this study that need to be addressed in future work. We used a publicly available dataset with four classes of rice diseases. Although this dataset is beneficial for initial explorations, it lacks the diversity found in real-world scenarios. Real images often include various environmental factors, such as noise and blur, which can affect the performance of the model106. Future work may consider using larger and more diverse datasets that include a wider range of disease classes and real-world conditions to improve the robustness and generalizability of the model. In addition, some rice leaf diseases and nutrient deficiencies exhibit visual similarities. For example, bacterial leaf streaks are similar to nitrogen deficiency, and brown spot lesions can be mistaken for potassium deficiency symptoms, which require careful identification to avoid misclassification.

We used only a few data augmentation techniques, while more advanced techniques could also be used to improve accuracy107. Similarly, the selection of pre-trained models in our study was done randomly and not based on specific performance metrics. We aimed to include models with varying depths and feature extraction capabilities to represent both simpler and more complex architectures. Future research may adopt a more systematic selection process, additional models, and more advanced architectures, and explore advanced versions of the selected models to improve the accuracy of classification. We set the hyperparameter values on the basis of our previous experience, but did not focus on the hyperparameter tuning process. There is no proper procedure for determining the number of features the model considers, which could potentially limit the ability of the model to capture the most significant features for accurate classification.

For visual explanations, our study used the LIME method to visualize the most significant features considered by the models. Although LIME provided valuable information, it has limitations, such as its dependence on local fidelity and potential instability in explanations108. These limitations would have been taken into account in our study, possibly affecting the reliability of the feature importance visualizations. Other XAI methods, such as GradCAM, GradCAM++ also provide a more complete understanding of the significant features that influence model decisions4. Furthermore, only a few quantitative metrics were used to assess the similarity between the ground truth images and the most significant feature images identified by the models, suggesting that additional metrics could enhance the robustness of the evaluation109. The study only experimented with a limited set of features (10, 12 and 14), and exploring a broader feature space could potentially improve model performance.

Another limitation of this study is the dependence on the LIME technique to generate feature visualizations. Although LIME is widely adopted for its model-agnostic and interpretable nature, it is inherently sensitive to input perturbations and random sampling. This can lead to inconsistent explanations, particularly for repeated runs or for similar inputs. Therefore, results based on LIME visualizations should be interpreted with caution and ideally validated with complementary methods.

Furthermore, although the proposed three-stage methodology is general in design and could theoretically be applied to other domains such as healthcare, remote sensing, ecology, and industrial quality inspection, its transferability may be constrained by the availability of reliable ground truth annotations for interpretability comparison. The performance of the proposed methodology may also vary depending on the visual characteristics and complexity of the target dataset. Customization of XAI methods and quantitative metrics may be required in such cases.

In fact, it would be beneficial to conduct preliminary experiments that analyze model behavior without relying on segmentation, as this could provide deeper insights into the model decision-making process. However, it is important to note that such qualitative analyses can be subjective and may lead to further debate regarding the results. We also acknowledge that reliance on segmentation can introduce transparency issues. Further experimental analysis is needed to study this behavior, which is beyond the scope of our current work.

Additionally, XAI methods should ideally indicate the exact zones of explanation, enabling users to observe which features or regions of the input image influenced the output. However, this depends on the capability of the existing XAI methods currently available. A study of the capabilities of these XAI visualizations is also beyond the scope of this work.

Conclusion and future directions

It is evident that although certain models showed strong classification performance, they frequently underperformed in practical applications due to overfitting to the training dataset. It clearly shows the drawbacks of relying solely on traditional classification performance metrics to assess deep learning models. To address this issue, we introduced a three-phase method that assesses not only decision making capabilities but also the decision making process.

In the first stage, we applied transfer learning techniques and identified the best performing model using conventional classification performance evaluation metrics. ResNet50 stood out as the superior model in terms of classification performance. In the second stage, we used the LIME method, an XAI, to visualize and assess the significance of the features considered by the models. We used quantitative metrics such as IoU, DSC, sensitivity, specificity, MCC, PWA, and MAE to measure the similarity between the features selected by the model and the ground truth. ResNet50 achieved high scores in IoU, DSC, and PWA, indicating its ability to select the most significant features. In contrast, InceptionV3, despite its high classification performance, showed the lowest scores on these metrics, indicating its selection of less significant features.

In the third stage, we used the overfitting ratio to quantify the degree to which models relied on insignificant features. This stage identifies the best performing and reliable model using quantitative and qualitative analysis of the results obtained from the first two stages. The findings of this study highlight the critical role of XAI in the examination and development of models that are not only accurate but also robust and generalizable, especially for real-time decision-making system applications such as disease classification in the medical and agricultural sectors.

Future studies may focus on several key approaches to address the limitations identified in current research. Future studies may consider datasets with a larger number of images and more classes to enhance the robustness and generalizability of our models. Additionally, while we employed basic data augmentation techniques, incorporating advanced techniques such as Generative Adversarial Networks (GAN) and Conditional Generative Adversarial Networks (CGAN) may improve the performance of the model.

Our study utilized eight pre-trained models, but future work may consider other state-of-the-art models for the same task based on availability of computational resources. We used the graph-cut segmentation algorithm to generate ground truth images. However, exploring automatic segmentation techniques and methodologies may reduce the burden of ground truth image generation. Furthermore, in our experiments, we have considered a limited set of feature sizes (8, 10, 12, 14, 16 and 18), but finding the optimal number of features that must be considered by the model remains a challenge.

In terms of model interpretability, while we used the LIME method to visualize the most significant features, future studies may explore other visualization techniques to provide a deeper and comprehensive understanding of the features influencing model decisions. In addition, researchers must focus on developing methods for refining the models to reduce overfitting and improve their generalization capabilities.

Future studies may address the limitations of current XAI models by conducting experiments that evaluate model behavior without relying on the extraction of the most significant features. Researchers are encouraged to investigate transparency issues related to segmentation techniques to improve the interpretability and performance of the model. This involves comparative studies to identify best practices and develop transparent methodologies. Furthermore, enhancing existing XAI methods to accurately indicate zones of explanation is crucial, potentially through innovative visualization techniques and algorithm development. To extend this study, future works may incorporate statistical significance testing, evaluate computational efficiency using hardware-level metrics such as FLOPs, and assess deployment feasibility in resource-constrained environments.

Data availability

In this work, the publicly available dataset was used. The data set is available through the link to Kaggle https://www.kaggle.com/datasets/dedeikhsandwisaputra/rice-leafs-disease-dataset.

Code availability

The code developed and used in this study has been uploaded and is available on GitHub and MATLAB File Exchange. These repositories include all the necessary scripts, tools, and instructions required to replicate the results presented in this work. The repository can be accessed via the following links: Github: https://shorturl.at/Github_Quant_XAI MATLAB File Exchange link: https://shorturl.at/MATLAB_Quant_XAI

References

Dethier, J.-J. & Effenberger, A. Agriculture and development: A brief review of the literature. Economic systems 36, 175–205 (2012).

Seck, P. A., Diagne, A., Mohanty, S. & Wopereis, M. C. Crops that feed the world 7: Rice. Food security 4, 7–24 (2012).

Arnal Barbedo, J. G. Digital image processing techniques for detecting, quantifying and classifying plant diseases. SpringerPlus 2, 1–12 (2013).

Simhadri, C. G., Kondaveeti, H. K., Vatsavayi, V. K., Mitra, A. & Ananthachari, P. Deep learning for rice leaf disease detection: A systematic literature review on emerging trends, methodologies and techniques. Information Processing in Agriculture (2024).

Khairnar, K. & Dagade, R. Disease detection and diagnosis on plant using image processing-a review. International Journal of Computer Applications 108, 36–38 (2014).

Ramesh, S. et al. Plant disease detection using machine learning. In 2018 International conference on design innovations for 3Cs compute communicate control (ICDI3C), 41–45 (IEEE, 2018).

Singh, S. P., Pritamdas, K., Devi, K. J. & Devi, S. D. Custom convolutional neural network for detection and classification of rice plant diseases. Procedia Computer Science 218, 2026–2040 (2023).

Bhimavarapu, U. Prediction and classification of rice leaves using the improved pso clustering and improved cnn. Multimedia Tools and Applications 1–14 (2023).

Chen, J., Chen, W., Zeb, A., Yang, S. & Zhang, D. Lightweight inception networks for the recognition and detection of rice plant diseases. IEEE Sensors Journal 22, 14628–14638 (2022).

Chen, L., Zou, J., Yuan, Y. & He, H. Improved domain adaptive rice disease image recognition based on a novel attention mechanism. Computers and Electronics in Agriculture 208, 107806 (2023).

Haridasan, A., Thomas, J. & Raj, E. D. Deep learning system for paddy plant disease detection and classification. Environmental Monitoring and Assessment 195, 120 (2023).

Upadhyay, S. K. & Kumar, A. A novel approach for rice plant diseases classification with deep convolutional neural network. International Journal of Information Technology 1–15 (2022).

Patel, B. & Sharaff, A. Automatic rice plant’s disease diagnosis using gated recurrent network. Multimedia Tools and Applications 1–20 (2023).

Simhadri, C. G. & Kondaveeti, H. K. Automatic recognition of rice leaf diseases using transfer learning. Agronomy 13, 961 (2023).

Yang, L. et al. Googlenet based on residual network and attention mechanism identification of rice leaf diseases. Computers and Electronics in Agriculture 204, 107543 (2023).

Al-Gaashani, M. S., Samee, N. A., Alnashwan, R., Khayyat, M. & Muthanna, M. S. A. Using a resnet50 with a kernel attention mechanism for rice disease diagnosis. Life 13, 1277 (2023).

Stephen, A., Punitha, A. & Chandrasekar, A. Designing self attention-based resnet architecture for rice leaf disease classification. Neural Computing and Applications 35, 6737–6751 (2023).

Zhang, C., Ni, R., Mu, Y., Sun, Y. & Tyasi, T. L. Lightweight multi-scale convolutional neural network for rice leaf disease recognition. Computers, Materials & Continua 74 (2023).

Narmadha, R., Sengottaiyan, N. & Kavitha, R. Deep transfer learning based rice plant disease detection model. Intelligent Automation & Soft Computing 31 (2022).

Sudhesh, K., Sowmya, V., Kurian, S. & Sikha, O. Ai based rice leaf disease identification enhanced by dynamic mode decomposition. Engineering Applications of Artificial Intelligence 120, 105836 (2023).

Aggarwal, M. et al. Pre-trained deep neural network-based features selection supported machine learning for rice leaf disease classification. Agriculture 13, 936 (2023).

Haruna, Y., Qin, S. & Mbyamm Kiki, M. J. An improved approach to detection of rice leaf disease with gan-based data augmentation pipeline. Applied Sciences 13, 1346 (2023).

Aggarwal, M. et al. Lightweight federated learning for rice leaf disease classification using non independent and identically distributed images. Sustainability 15, 12149 (2023).

Jain, S. et al. Automatic rice disease detection and assistance framework using deep learning and a chatbot. Electronics 11, 2110 (2022).

Kondaveeti, H. K., Ujini, K. G., Pavankumar, B. V. V., Tarun, B. S. & Gopi, S. C. Plant disease detection using ensemble learning. In 2023 2nd International Conference on Computational Systems and Communication (ICCSC), 1–6 (IEEE, 2023).

Yang, L. et al. Stacking-based and improved convolutional neural network: a new approach in rice leaf disease identification. Frontiers in Plant Science 14, 1165940 (2023).

Ahad, M. T., Li, Y., Song, B. & Bhuiyan, T. Comparison of cnn-based deep learning architectures for rice diseases classification. Artificial Intelligence in Agriculture 9, 22–35 (2023).

Zhang, Y., Zhong, L., Ding, Y., Yu, H. & Zhai, Z. Resvit-rice: A deep learning model combining residual module and transformer encoder for accurate detection of rice diseases. Agriculture 13, 1264 (2023).

Rajpoot, V., Tiwari, A. & Jalal, A. S. Automatic early detection of rice leaf diseases using hybrid deep learning and machine learning methods. Multimedia Tools and Applications 1–27 (2023).

Jiang, F., Lu, Y., Chen, Y., Cai, D. & Li, G. Image recognition of four rice leaf diseases based on deep learning and support vector machine. Computers and Electronics in Agriculture 179, 105824 (2020).

Islam, S. R., Eberle, W., Ghafoor, S. K. & Ahmed, M. Explainable artificial intelligence approaches: A survey. arXiv preprint arXiv:2101.09429 (2021).

Minh, D., Wang, H. X., Li, Y. F. & Nguyen, T. N. Explainable artificial intelligence: a comprehensive review. Artificial Intelligence Review 1–66 (2022).

Gerlings, J., Shollo, A. & Constantiou, I. Reviewing the need for explainable artificial intelligence (xai). arXiv preprint arXiv:2012.01007 (2020).

Razak, S. F. A., Yogarayan, S., Sayeed, M. S. & Derafi, M. Agriculture 5.0 and explainable ai for smart agriculture: A scoping review. Emerging Science Journal 8, 744–760 (2024).

Naga Srinivasu, P., Ijaz, M. F. & Woźniak, M. Xai-driven model for crop recommender system for use in precision agriculture. Computational Intelligence 40, e12629 (2024).

Mohan, R. J., Rayanoothala, P. S. & Sree, R. P. Next-gen agriculture: integrating ai and xai for precision crop yield predictions. Frontiers in Plant Science 15, 1451607 (2025).

Shams, M. Y., Gamel, S. A. & Talaat, F. M. Enhancing crop recommendation systems with explainable artificial intelligence: a study on agricultural decision-making. Neural Computing and Applications 36, 5695–5714 (2024).

Zhang, Y., Weng, Y. & Lund, J. Applications of explainable artificial intelligence in diagnosis and surgery. Diagnostics 12 (2022).

Guo, W. et al. Lemna: Explaining deep learning based security applications. In proceedings of the 2018 ACM SIGSAC conference on computer and communications security, 364–379 (2018).

Bach, S. et al. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PloS one 10, e0130140 (2015).

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. Advances in neural information processing systems 30 (2017).

Selvaraju, R. R. et al. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, 618–626 (2017).

Simonyan, K., Vedaldi, A. & Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034 (2013).

Ribeiro, M. T., Singh, S. & Guestrin, C.“why should i trust you?” explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, 1135–1144 (2016).

Bhandari, M., Shahi, T. B., Neupane, A. & Walsh, K. B. Botanicx-ai: Identification of tomato leaf diseases using an explanation-driven deep-learning model. Journal of Imaging 9, 53 (2023).

Nahiduzzaman, M. et al. Explainable deep learning model for automatic mulberry leaf disease classification. Frontiers in Plant Science 14 (2023).

Wei, K. et al. Explainable deep learning study for leaf disease classification. Agronomy 12, 1035 (2022).

Hughes, D., Salathé, M. et al. An open access repository of images on plant health to enable the development of mobile disease diagnostics. arXiv preprint arXiv:1511.08060 (2015).

Zhou, C., Zhong, Y., Zhou, S., Song, J. & Xiang, W. Rice leaf disease identification by residual-distilled transformer. Engineering Applications of Artificial Intelligence 121, 106020 (2023).

Kisten, M., Ezugwu, A.E.-S. & Olusanya, M. O. Explainable artificial intelligence model for predictive maintenance in smart agricultural facilities. IEEE Access 12, 24348–24367. https://doi.org/10.1109/ACCESS.2024.3365586 (2024).

Bijoy, M. H. et al. Towards sustainable agriculture: A novel approach for rice leaf disease detection using dcnn and enhanced dataset. IEEE Access (2024).

Shovon, M. S. H. et al. Plantdet: A robust multi-model ensemble method based on deep learning for plant disease detection. IEEE Access (2023).

Altabaji, W. I., Umair, M., Tan, W.-H., Foo, Y.-L. & Ooi, C.-P. Comparative analysis of transfer learning, leafnet, and modified leafnet models for accurate rice leaf diseases classification. IEEE Access (2024).

Deng, R. et al. Automatic diagnosis of rice diseases using deep learning. Frontiers in Plant Science 12, 701038 (2021).

Rahimiaghdam, S. & Alemdar, H. Evaluating the quality of visual explanations on chest x-ray images for thorax diseases classification. Neural Computing and Applications 1–17 (2024).

Quach, L.-D., Quoc, K. N., Quynh, A. N., Thai-Nghe, N. & Nguyen, T. G. Explainable deep learning models with gradient-weighted class activation mapping for smart agriculture. IEEE Access 11, 83752–83762 (2023).

Alqahtani, Y. et al. An improved deep learning approach for localization and recognition of plant leaf diseases. Expert Systems with Applications 230, 120717 (2023).

Mankodiya, H. et al. Od-xai: Explainable ai-based semantic object detection for autonomous vehicles. Applied Sciences 12, 5310 (2022).

Kimori, Y. A morphological image preprocessing method based on the geometrical shape of lesions to improve the lesion recognition performance of convolutional neural networks. IEEE Access 10, 70919–70936 (2022).

Lu, Y., Zhang, X., Zeng, N., Liu, W. & Shang, R. Image classification and identification for rice leaf diseases based on improved woacw_simplenet. Frontiers in Plant Science 13, 1008819 (2022).