Abstract

Constrained by the closed structure, it is difficult to visually detect the insulation defects inside large oil-immersed transformers. For this issue, this paper uses a self-developed micro-robot for visual inspection. Since the defects to be detected have different shapes, large differences in size, and complex background, accurately and quickly detecting the defect target is the key for the micro-robot to complete the internal inspection. To this end, a novel network consisting of C2f-DySnake module and Efficient Pyramid module for Carbon Trace Segmentation (CDEP-CTSeg) is proposed in this paper. To address the overexposure and color distortion of carbon traces resulting from variable imaging distances and fluctuating lighting conditions, an improved Retinex image enhancement algorithm is proposed for image preprocessing. It can enhance local contrast and detail at multiple scales while improving overall contrast and brightness. Furthermore, to tackle the inaccurate boundary segmentation of complex carbon traces, such as branch-like forms or other elongated continuous forms, the C2f-DySnake module is integrated into the backbone of the YOLOv8 framework, which is capable of capturing features of branched structures by adaptively focusing on elongated and curved local details. Additionally, to address the missed detection of small carbon traces resulting from significant size variations, an EHSPAN feature pyramid network is designed and integrated into the Neck network. It improves the model’s ability to capture relevant characteristics and reduces missed detections for smaller targets by merging selected information with high-level features. Experimental results showed that compared with the traditional YOLOv8-seg model, the recall rate, precision, and mAP50 of the proposed CDEP-CTSeg network were improved by 2.3%, 2.8%, and 2.8% respectively. Furthermore, the proposed network improved the Average Precision of clustered and dendritic carbon traces by 1.9% and 3.7% respectively. It indicates that the proposed CDEP-CTSeg network achieves fast and accurate segmentation of insulation defects inside the transformer, which contributes to the stable operation of large oil-immersed transformers.

Similar content being viewed by others

Introduction

Large oil-immersed transformers are one of the critical hub devices in the power system. Operational status assessment of the transformers can enable preventive measures to avoid major electricity accidents. This is of great significance for enhancing the safety and stability of the power system. However, due to the metal-closed shell of large power transformers, internal inspection without disassembling the transformer has become extremely challenging. The traditional analysis of dissolved gases in oil (e.g., the three-ratio method, the modified three-ratio method1,2 etc.) makes it difficult to accurately determine the location, type, and severity of internal defects inside the transformers3,4,5,6. With advancement in robotics and artificial intelligence, micro-robots can enter the interior of large oil-immersed transformers for visual inspection without damaging the internal structure, thus more intuitively identifying the location of insulation defects. However, due to the complex internal structure of transformers, the extensive range of inspection, and the varying shapes and sizes of detected defects (e.g., transformer surface discharge carbon traces), intelligent and quick detection of insulation defects under the complex background has become the key for the micro-robot to efficiently complete transformer internal inspection tasks.

In recent years, the gradual maturity of image segmentation algorithms based on deep learning has provided effective new ideas for the detection of transformer internal insulation status. Instance segmentation algorithms can not only recognize target categories and distinguish different targets of the same category but also segment targets of different categories, presenting good performance in defect detection7,8,9. Existing instance segmentation methods are mainly divided into two-stage and single-stage instance segmentation10,11,12,13. Two-stage instance segmentation methods are mainly divided into detection-based and segmentation-based categories. For Mask R-CNN, a representative detection-based method, image targets are first framed by a detector, then pixel-level masks are generated for each instance within the candidate region. Although having significant advantages in accuracy, but this method is difficult to be trained and deployed due to the slow inference speed, complex model, and high computational requirements, limiting its application on low-performance engineering equipment. In contrast, the segmentation-based two-stage method skips the detection step, directly performs pixel-level semantic segmentation on the image, and clusters pixels into instances to obtain masks. However, this method heavily relies on mask annotations during training, resulting in lower segmentation accuracy and poor generalization ability. Single-stage instance segmentation can simultaneously achieve target classification, detection, and mask generation, with higher frame rates and smaller model sizes, making it easier to train, adjust, and deploy in engineering applications. YOLACT is the first successful single-stage model for instance segmentation, dividing the instance segmentation task into predicting prototype masks and mask coefficients as two parallel sub-tasks, thereby improving the model’s inference efficiency14. Subsequently, WANG et al. proposed SOLO, which distinguished and segmented instances through central position and object size15. Additionally, SOLO used a Feature Pyramid Network to assign target instances of different scales to different FPN levels for prediction, thereby alleviating the problem of target overlap to some extent16. Xie et al. proposed the PolarMask model, which transforms distance and angle using polar coordinates, where the origin of polar coordinates represents the instance center17. It obtained exact point positions on the contour and represented dense distance regression with the distance from the origin to the contour points, which could achieve separate processing of center samples and dense distance regression. Kirillov et al. proposed the PointRend model to obtain high-quality instance masks, whose lightweight prediction branch could initially obtain a rough mask, then select boundary points, and enrich the details of the boundaries through repeated layer-by-layer iterations18.

The YOLO series algorithms were initially proposed by Redmon et al. in 2016, primarily targeting real-time object detection tasks19. The development of its instance segmentation capability began in 2020, with YOLOv5-seg proposed by the Ultralytics team based on the YOLACT framework20. YOLOv5-seg achieved a balance between inference speed and segmentation accuracy with lightweight structures and optimized training strategies. Subsequently, YOLOv8-seg further improved both segmentation precision and efficiency. Currently, YOLO series segmentation models exhibit outstanding performance in cross-domain instance segmentation tasks21,22,23: for automatic construction monitoring, they enable real-time status monitoring of construction sites24; in agriculture domain, they accurately identify crop diseases and contribute to intelligent agricultural optimization25; and for infrastructure inspection, they efficiently complete defect identification and evaluation of various infrastructures26. In power industry applications, this technology is mainly used for transmission line condition monitoring27 and insulator defect detection28. However, with respect to transformer insulation condition monitoring, the segmentation of carbon traces generated by oil-paper insulation surface discharge still faces severe technical challenges. Due to the randomness of spatial location and discharge intensity, the morphology of carbon traces is highly complex with significant size variations. It poses much stricter requirements for the model’s global perception and local feature extraction capabilities.

Given the limitations of current methods in effectively detecting the internal insulation state of the transformers, this paper proposes the CDEP-CTSeg network for carbon trace segmentation. The main contributions of this paper are as follows:

-

1.

To address the blurriness and indistinct carbon trace features that occur in the dark environments inside the transformers, this paper proposes an enhanced Retinex algorithm for preprocessing images. It evaluates local contrast at multiple scales to enhance detail while improving both contrast and brightness, effectively alleviating overexposure and color distortion.

-

2.

To address the inaccurate boundary segmentation in complex carbon trace defects, the C2f-DySnake module is integrated into the backbone network. This modification enables the model to accurately capture features of tree-like structures by adaptively focusing on thin and curved local geometries, thereby enhancing its capability to segment details in images characterized by elongated continuous forms like tree-like carbon traces.

-

3.

To address the missed detections of small carbon traces resulting from considerable size variability, an EHSPAN feature pyramid network is designed and integrated into the Neck network. It assigns greater weights to higher-level features and filters out lower-level information with a channel attention mechanism, which improves the model’s ability to capture relevant characteristics and reduces missed segmentations for smaller targets by merging selected information with high-level features.

Design of segmentation network for detecting carbon traces

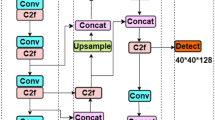

Baseline framework

In this paper, YOLOv8-seg is chosen as the baseline framework for carbon trace segmentation. The YOLOv8 network consists of a Backbone layer as the main body, a Neck layer for feature enhancement, and a Head layer at the output end. The network structure is shown in Fig. 1. Here is a brief explanation of each part of YOLOv8-seg.

The Backbone is made up of CBS modules, C2f modules, and SPPF modules, adopting the CSP concept from YOLOv5. The main modification is the replacement of the C3 module with the C2f module. The C2f structure enhances the feature fusion capability of the convolutional neural network, increases inference speed, and achieves further lightweight design.

The Neck part refers to the ELAN structure originating from the YOLOv729, replacing the C3 module from YOLOv5 with the more gradient-rich C2f structure. This allows for richer gradient information while maintaining a lightweight design and adjusts the number of channels according to the model scale, significantly improving model performance. SPPF fuses features at different scales. The Neck primarily serves for feature fusion, making full use of features extracted by the backbone network with an FPN + PAN structure, enhancing semantic expression and localization capabilities across multiple scales.

The instance segmentation head of YOLOv8-seg adopts a decoupled multi-branch architecture. This module receives multi-scale feature inputs from the feature pyramid network and employs processing heads with identical structures but independent parameters for feature maps of different resolutions, which realizes adaptive segmentation of multi-scale targets. Each segmentation head contains three parallel subnetworks, each of which consists of two convolutional modules and one 2D convolutional layer. These subnetworks are responsible for predicting bounding boxes, target categories, and target masks respectively. During training process, three subnetworks are used to calculate bounding box loss, classification loss, and mask loss respectively. This decoupled design allows each branch to focus on feature learning for specific tasks, avoiding interference between different tasks. Additionally, the YOLOv8-seg segmentation head generates a Prototype Mask based on the feature map closest to the original image size. This feature map serves as the foundation for primitive segmentation features and supports subsequent mask prediction. This structure design enables the network to effectively perform pixel-level segmentation while maintaining detection capabilities.

Currently, YOLOv8 faces issues of missed detection and false detection when dealing with the complex, diverse, and irregular features of discharge carbon trace defects under the complex internal background of the transformer. To address this issue and improve the model’s segmentation efficiency for defects, this paper designs a novel segmentation network CDEP-CTSeg:

-

1.

To tackle the problem of inaccurate segmentation of complex continuous carbon trace boundaries such as dendritic carbon traces, this paper introduces the C2f-DySnake module into the backbone network. This module can accurately capture the characteristics of dendritic structures by adaptively focusing on slender and curved local structures, thereby significantly enhancing the model’s detail segmentation capability when processing elongated continuous images like dendritic carbon traces.

-

2.

To address the issue of smaller carbon traces being easily missed resulting from large differences in carbon trace sizes, this paper designs the EHSPAN feature pyramid. Based on the HS-FPN model and combined with PANet features, EHSPAN adds a bottom-up fusion path to the original top-down fusion path. This pyramid enhances the model’s feature extraction capability by assigning weights to high-level features, using the ELA attention mechanism for channel attention module filtering to remove low-level feature information, and then fusing the processed information with high-level features. Additionally, a P2 detection layer is added on this basis to further enhance the network model’s ability to capture small targets, effectively improving the issue of small target missed detection.

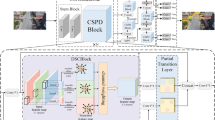

The improved network model structure is shown in Fig. 2.

Improvement of the backbone network based on the DySnakeConv module

Based on the characteristics of elongated and continuous dendritic carbon traces in the dataset used in this paper, this study proposes a novel CDEP-CTSeg network by incorporating a dynamic serpentine convolution module, DySnakeConv.

The DySnakeConv is primarily designed for the segmentation of tubular structures, such as blood vessels, roads, and branches. The features of the dendritic carbon traces in our dataset are similar to these structures, making them well-suited for the advantages of dynamic serpentine convolution. The main challenge in the segmentation process stems from the delicate local structural features and complex global morphological variations. By adaptively focusing on elongated and curved local structures, dynamic serpentine convolution accurately captures the characteristics of dendritic structures, enabling precise segmentation.

Next, we will introduce how Dynamic Snake Convolution extracts local features of dendritic structures: Given a standard 2D convolution coordinate as K, with the center coordinate being: Ki = (xi, yi) A 3 × 3 convolution kernel K with dilation 1 is represented as:

To enable the convolutional kernel to more flexibly focus on the elongated and continuous features of dendritic carbon traces, a deformation offset Δ is introduced. However, if the model is allowed to freely learn the deformation offset, the receptive field often deviates from the target, especially under dendritic structures. Therefore, we use an iterative strategy to sequentially select the next observation position for each target to be processed, thereby ensuring the continuity of attention and preventing the receptive field from extending too far due to large deformation offsets.

In DySnakeConv, we straighten the standard convolution kernel in the x - and Y-axis directions. Considering each convolution kernel of size 9, and taking the X-axis direction as an example, the specific position of each grid in K is expressed as: \({K_{i \pm c}}=\left( {{x_{i \pm c}},{y_{i \pm c}}} \right)\), where\(c \in \left\{ {0,1,2,3,\left. 4 \right\}} \right.\) representing the horizontal distance to the central grid. The selection of each grid position in the convolution kernel K is an additive process. Starting from the center position, the position away from the center grid depends on the position of the previous grid: \({K_{i+1}}\)compared with\({K_i}\), the offset \(\Delta =\left\{ {\delta \left| {\delta \in [ - 1,1]} \right.} \right\}\) is increased. Therefore, the offsets need to be summed to ensure that the convolution kernel conforms to the linear morphology. Figure 3 changes in the X-axis direction:

The formula in the y-axis direction becomes:

Since the offset Δ is usually a fraction, bilinear interpolation is implemented as follows:

K represents the fractional position in Formula 2 and Formula 3, K’ iterates over all integer spatial positions, B is the bilinear interpolation kernel, which is divided into two one-dimensional kernels, as shown below:

As shown in Fig. 3, due to the changes in two dimensions (x-axis and y-axis), DySnakeConv covers a range of 9 × 9 during the deformation process. The design of DySnakeConv aims to better adapt to elongated continuous structures, thereby enhancing the perception of key features.

The C2f-DySnake module is obtained by replacing the convolution in the C2f module, and its structural diagram is shown in Fig. 4.

Improvement of neck network based on HS-FPN pyramid

Considering the unique characteristics of dimensional differences among the targets to be detected, an advanced feature selection fusion pyramid HS-FPN is designed to facilitate multi-level integration. In HS-FPN, high-level features act as weights to filter low-level feature information through a channel attention module. The filtered information is merged with high-level features, thereby enhancing the model’s feature expression capability. This section will first describe the working principle of the HS-FPN pyramid. Based on this, the article subsequently improves the HS-FPN to make it more effective for the characteristics of internal discharge carbon trace in transformers30.

The structure of HS-FPN is shown in Fig. 5. Consisting of the feature selection module and the feature fusion module. Firstly, feature maps of different scales undergo a screening process in the feature selection module. Then, through the SFF(selective feature fusion) mechanism, the high-level and low-level information in these feature maps are collaboratively integrated. This fusion produces features with rich semantic content, which is very useful for detecting subtle features in carbon trace images, thereby enhancing the model’s detection capability.

Attention Mechanism

Attention mechanisms in computer vision refer to the process of weighting important regions in the input data during image recognition, object detection, semantic segmentation, and so on, which aims to improve the accuracy and performance of the model. The principle of attention mechanisms can be understood as the model focusing on important information from the input data while ignoring irrelevant information to achieve specific tasks. Therefore, attention mechanisms can adjust the degree of focus on each input data by weighting different inputs, thereby enhancing model performance.

The ELA (Efficient Local Attention) module accurately captures the location of regions of interest, maintains the dimensionality of the input feature map channels, and retains its lightweight characteristics, as shown in Fig. 6. Similar to Channel Attention CA, ELA uses strip pooling in the spatial dimension to obtain horizontal and vertical feature vectors, maintaining a narrow kernel shape to capture long-range dependencies and prevent unrelated areas from affecting label predictions, thus generating rich target position features in each direction31,32. ELA independently processes the aforementioned feature vectors in each direction to obtain attention predictions, which are then combined using multiplication operations to ensure accurate location information of the region of interest. Specifically, in the second step, one-dimensional convolutions are applied separately to interact locally with the two feature vectors, allowing adjustment of the kernel size to indicate the range of local interactions. The resulting feature vectors undergo group normalization GN and non-linear activation functions to produce positional attention predictions in both directions33. The final positional attention is obtained by multiplying the positional attention from both directions. Compared to two-dimensional convolutions, one-dimensional convolutions are more suitable for processing sequential signals and are lighter and faster. GN exhibits comparable performance and stronger generalization ability compared to Batch Normalization BN.

The purpose of the Efficient Local Attention (ELA) module is to enhance the accurate recognition of regions of interest or important object locations in deep CNN. To explain its working principle clearly, this paper will restate the two steps involved in CA: embedding coordinate information and generating coordinate attention.

In the first step, strip pooling is used instead of spatial global pooling to capture long-range spatial dependencies: considering the output of a convolutional block denoted as \({R^{H \times W \times C}}\), where H, W, and C represent the height, width, and channel dimensions (i.e. the number of convolutional kernels). To apply strip pooling, average pooling is performed on each channel within two spatial ranges: horizontally (H,1) and vertically (1, W). This results in the outputs of the \(Cth\) channel at height H and the \(Wth\) channel at width being represented by Formulas 6 and 7 respectively.

In the second step, the feature maps generated by Formulas 6 and 7 are aggregated into a new feature map. This new feature map is then fed into the shared transformation function and batch normalization (BN). This process can be expressed as follows:

In the above description, cascading operations [-;-] along spatial dimensions are represented. \(\delta\) stands for nonlinear activation function. After encoding the horizontal and vertical directions, the intermediate feature map is obtained, which is expressed as \({R^{C/{\text{r}} \times (H+W)}}\). Then, \({f^h} \in {R^{C/r \times H}}\) and \({f^w} \in {R^{C/r \times W}}\) are obtained along the spatial dimension. In addition, two additional 1 × 1 convolution transformations, denoted \({F_h}\),\({F_w}\).They are applied to generate a tensor with the same number of channels as the input X.

To reduce the complexity of additional modules, the number of channels in f is usually reduced by an appropriate reduction rate. The resulting output of \(g_{c}^{h}\)and \(g_{c}^{w}\)serves as the attention weights corresponding to the horizontal and vertical directions, respectively. Finally, the output of the CA module can be expressed as Y.

Through Formulas 8, 9, and 10, we can note that the reduction in channel dimensions is intended to reduce the complexity of the model, but this leads to an indirect relationship between channels and their corresponding weights, which may adversely affect the overall attention prediction. In addition, batch normalization (BN) is not conducive to the effective generalization of coordinate attention (CA), while GN can solve these shortcomings. The positioning information embedded in Formulas 6 and 7 is a sequence signal in the channel, and 1D convolution is usually more suitable than 2D convolution for processing these sequence signals. 1D convolution is not only good at processing sequential signals but also more lightweight than 2D convolution. In the case of CA, although 2D convolution is used twice, it uses a 1 × 1 convolution kernel, which limits feature extraction capabilities. Therefore, ELA uses 1D convolution with a kernel size of 5 or 7, which effectively enhances the interactive capability of positioning information embedding. This improvement enables the entire ELA to accurately target areas of interest.

Based on the embedding of positioning information obtained from Formulas 6 and 7, ELA uses a novel coding method to generate accurate location attention maps. The process is described in detail as follows:

The \({z_h}\) and \({z_w}\) obtained by Formulas 6 and 7 not only capture the global sensory fields but also contain precise location information. To make use of these features effectively, we have designed a simple processing method. We apply one-dimensional convolution to enhance position information in both horizontal and vertical directions. We then use g to process the enhanced position information, resulting in a representation of position attention in both horizontal and vertical directions, as described in Formulas 12 and 13.

In the above description, we use \(\sigma\) to represent the nonlinear activation function, \({F_h}\)and \({F_w}\)to represent the one-dimensional convolution. We choose to set the convolution kernel size of \({F_h}\) and \({F_w}\)to either 5 or 7. In order to strike a balance between performance and the number of parameters, the number of groups for a one-dimensional convolution is usually chosen as \(i{n_p} lanes\) or \(i{n_p} lanes/8\). The position attention representation in the horizontal and vertical directions is denoted by\({y^h}\)and\({y^w}\)respectively. Finally, we can obtain the output of the ELA module by applying Formula 14, expressed as Y.

Feature selection module

In this process, the attention module and the Dimension Matching (DM) module play a key role. The attention module first processes the input feature map. After this feature map is processed by two pooling layers -global average pooling and global Max pooling, the resulting features are combined together. Then, the Sigmoid activation function is used to determine the weight value of each channel, which results in the weight of each channel.

Pooling can reduce the dimension of the feature map and reduce the dimension of the feature map. It eliminates redundant data, compresses features, and reduces the number of parameters. And achieve translation, rotation, and scale invariance. In the attention module, global average pooling and global Max pooling are used to calculate the average and maximum value of each channel. The main purpose of Max pooling is to extract the most relevant data from each channel, while average pooling is to uniformly obtain all data from the feature maps to minimize excessive loss. Therefore, the combination of these two pooling methods makes it convenient to extract the most representative information from each channel while minimizing the information loss. The filtered feature maps are subsequently generated by multiplying the weight information with the corresponding scale feature maps for dimension matching. Dimensional matching of feature maps across various scales is crucial before feature fusion, as they have different numbers of channels. To achieve this, the DM module applies a 1*1 convolution to reduce the number of channels per scale feature map to 256.

Feature Fusion Module

The multi-scale feature maps generated by the Backbone network contain rich semantic information, but the object localization is relatively coarse. In contrast, low-scale features provide precise object locations but contain limited semantic information. To solve this problem, we can directly sum the pixel values of the upsampled high-level features and low-scale features to add semantic information to each layer. However, this technique does not perform feature selection and simply adds the pixel values of multiple feature layers. To solve this problem, the authors proposed the SFF module. This module filters the necessary semantic information contained in the low-scale features by using high-level features as weights. A schematic diagram of the SFF module structure is shown in Fig. 7.

Given an input high-level feature \({f_{high}} \in {R^{C \times H \times W}}\)and an input low-level feature\({f_{low}} \in {R^{C \times {H_1} \times {W_1}}}\), the high-level feature is expanded using a transposed convolution (T-Conv) with a step size of 2 and a kernel size of 3 × 3, resulting in a feature size of \({f_{\mathop {high}\limits^{ \wedge } }} \in {R^{C \times 2H \times 2W}}\). Then, in order to unify the dimensions of high-level features and low-level features, the bilinear interpolation method is used to upsample or downsample high-level features to obtain \({f_{att}} \in {R^{C \times {H_1} \times {W_1}}}\). Then, the CA module is used to convert the high-level features into the corresponding attention weights, to filter the low-level features when obtaining features with consistent dimensions. Finally, the filtered low-level features were fused with the high-level features to enhance the feature representation ability of the model and obtain the. \({f_{out}} \in {R^{C \times {H_1} \times {W_1}}}\)

Formulas 15 and 16 illustrate the fusion

Based on the HS-FPN feature fusion pyramid network, the EHSPAN feature pyramid designed in this paper makes the following improvements:

-

1.

In the task of instance segmentation, it is necessary to accurately identify the edge of the object, and the shallow network contains a large number of detail features such as edges. In order to give full play to the feature fusion ability of the pyramid, this paper combines the HS-FPN pyramid with PANet to obtain HS-PAN. On the basis of the original top-down fusion path, a bottom-up fusion path was added, and the edge detail features in the bottom layer were used to enrich the feature information and reduce the loss of details.

-

2.

Based on the introduction of ELA’s efficient local attention mechanism, it can be seen that it is an improved attention module proposed to solve the defects of the CA attention mechanism. Therefore, this paper will use the ELA module to replace the CA module in the original model network to obtain better performance.

-

3.

Given the small target discharge traces existing in the discharge carbon traces inside the transformer in this paper, the P2 segmentation head is added to the P3, P4, and P5 scale segmentation heads in the original structure to improve the segmentation ability of small targets.

The structural diagram of the EHAPAN feature pyramid is shown in Fig. 8.

Image preprocessing of transformer carbon trace defect

The interior of the transformer constitutes a closed space filled with transformer oil, where the environment is dark. Pure transformer oil presents a slightly transparent pale-yellow hue. However, with the extension of its usage time, the oil color gradually transforms into light brown. These circumstances lead to issues such as low contrast, color distortion, poor clarity, and uneven illumination in the carbon trace images collected by the internal inspection robot under the oil. To address these problems, this paper proposes the application of the Retinex image enhancement technology for the optimization of the collected images34.

Retinex is an algorithm frequently utilized in image enhancement, with its core concept being the adjustment of the contrast and brightness of the image while preserving detailed information. Retinex is a combination of two words: retina and cortex. Hence, the Retinex theory is often referred to as the retina-cortex theory. The initial model based on the Retinex theory put forward a human visual system (i.e.HVS), explaining why the human eye does not correspond to the wavelength and brightness of light. In this theory, the color information observable of an object is determined by two factors: the object’s own reflective property and the intensity of the light surrounding the object. However, in accordance with the theory of color constancy, an object has its inherent attribute that is not influenced by light. The color of an object is determined by its ability to reflect light of different wavelengths. The fundamental idea of the Retinex theory is that the intensity of the light determines the dynamic range of all the pixel points in the original image, while the inherent property of the original image is determined by the object’s own reflection coefficient. That is to assume that the product of the reflected image and the illuminated image results in the original image, as shown in Fig. 9. Therefore, the approach of Retinex is to eliminate the influence of light and retain the inherent attributes of the object35.

According to Retinex theory, an image is composed of an illumination image and a reflection image. The illuminance image refers to the information of the incident component of the object, denoted by L (x, y). The reflected image refers to the reflected part of the object, denoted by R(x, y). When an original image data l(x, y) is obtained, the calculated R(x, y) is the enhanced image. It is expressed as:

At present, the main implementations of the Retinex algorithm are single-scale Retinex (SSR), multi-scale Retinex (MSR), multi-scale adaptive gain Retinex (MSRCR), multi-scale Retinex algorithm with color protection (MSRCP).

Single scale Retinex (SSR) algorithm assumes that the illumination changes in the image mainly focus on the low-frequency components, so the image only needs to be filtered once to remove the low-frequency components, and then the obtained high and medium-frequency components are enhanced36.

Multi-scale Retinex algorithm (MSR) decomposes the image into images of different scales, and the image of each scale is enhanced to retain the detailed information of different scales37.

Multi-scale adaptive gain Retinex algorithm (MSRCR) combines the previous two algorithms. Firstly, it decomposes the image into different scales, then uses an adaptive gain function to enhance the image of each scale, and finally reconstructs the image to achieve the enhancement effect38.

In order to solve this problem, this paper chooses a Multi-scale Retinex algorithm with color protection (MSRCP). MSRCP algorithm not only enhances the local contrast of the enhanced image but also uses the color restoration function to adjust the color information of the enhanced image. It overcomes the shortcomings of SSR and MSR in noisy images, such as local detail color distortion and poor overall visual effect39.

The MSRCP algorithm takes the intensity channel image as the original image, and maps it to each channel by the ratio of the intensity image and the original RGB, to preserve the original image color distribution while enhancing the image. The intensity image is obtained as shown in the following formula:

where\({I_R}\), \({I_G}\), \({I_B}\) are the RGB channel images of the original image respectively.

MSRCP is based on the improvement of the MSR algorithm and quantifies the reflection component obtained by MSR. The quantization range is 0 ~ 255, and the quantization expression is as follows:

whereis the maximum value of \({R_{MSR}}(x,y)\), \(min\) is the minimum value of \({R_{MSR}}(x,y)\), \({R_{MSR}}(x,y)\) is the expression of MSR, and the expression is:

where\({I_i}(x,y)\) is the input image and \({\omega _n}\) is the weighting coefficient of the nth scale. \({F_n}(x,y)\) is the Gaussian filter function at the nth scale.

Apply the Gamma brightness adjustment to the reflection component:

where γ is the correction parameter.

In the above equation, \({I_{n{t_1}}}\) is the single-channel image after Retinex processing, and then the magnification factor A is introduced to restore the color, so that the image is similar to the color of the original image.

where \({I_R}\), \({I_G}\), \({I_B}\) are the RGB channel images of the original image, and i is the pixel index.

Each channel of MSRCP can be obtained by the amplification factor:

where\({I_{R,G,B}}(x,y)\)represents the RGB space image of the original image, and \({R_{MSCRP}}(x,y)\) represents the MSRCP enhanced image obtained at last.

The original image of the discharge carbon trace of the insulating cardboard collected in the experiment and the image of the discharge carbon trace of the insulating cardboard processed by MSR and MSRCP algorithms are shown in Fig. 10. It can be seen that compared with the original images, the image enhanced by MSRCP algorithm is enhanced in terms of clarity, brightness, contrast and so on, and the color retention effect is better. It can be seen that the MSRCP image enhancement algorithm can effectively enhance the effect of the carbon trace image collected in the experiment.

Experiment

Construction of transformer carbon trace defect sample library

Given the scarcity of obtainable carbon traces from internal transformer defects in practical scenarios—which hinders the construction of large-scale datasets—this study systematically acquired carbon trace images through a self-built transformer partial discharge simulation platform. It significantly expands the insulation defect dataset. This approach provides sufficient data support for subsequent deep-learning-based instance segmentation models of carbon traces.

As depicted in Fig. 11, the experimental circuit consists of three primary components: test model, voltage boosting platform, and high-speed camera. The needle-plate electrode model was used as the test model, which comprised nylon screws, acrylic panels, nylon brackets, front electrodes, grading rings, and connecting rods, as shown in Fig. 12. Standard transformer pressboards (25 cm × 15 cm) were secured onto acrylic sheets via adjustable-angle nylon brackets to flexibly control the tilt angle. The experimental system employs an SB-10KVA/100KV test transformer with a 10 kΩ protective resistor, and are placed in transparent acrylic containers to ensure observable test procedures. Karamay Type 25 transformer oil was used as the dielectric medium. Image acquisition was conducted by using an HTSUA134GC/M high-speed camera, with its 1.3-megapixel resolution at 211 fps to ensure clear visualization of discharge dynamics.

Following platform setup, the experiment personals applied voltage in stepwise increments at a rate of 2 kV/min, maintaining each voltage level statically for 10 min until surface discharge occurred on the insulating pressboard and generated carbon traces, as shown in Fig. 13. Through iterative experimentations, carbon traces with diverse morphologies were successfully prepared, with their formation processes fully documented by the high-speed camera. Finally, two typical discharge carbon traces were obtained: dendritic and clustered discharge carbon traces. Accordingly, a comprehensive image repository of 3,658 samples was established—2,329 dendritic carbon traces and 1,329 clustered ones.

To ensure model training quality, all carbon trace samples were carefully annotated using the LabelImg tool. Instance segmentation required multi-point annotation for each carbon trace’s detailed features, which will significantly help the segmentation model to learn intricate characteristics. Thereby it could achieve more precise segmentation of carbon trace. After sample annotation and data augmentation, the final instance segmentation dataset comprised 3,658 images: 2329 dendritic and 1329 clustered carbon traces. Then the datasets were partitioned into training, validation, and test sets at a ratio of 8:1:1, providing the data foundation for subsequent model training.

Dataset preparation

In this paper, the carbon trace images were collected through the transformer partial discharge simulation experiment, and a total of 3658 images were screened to prepare the transformer internal discharge carbon trace data set. After MSCRP preprocessing, the images are allocated according to the ratio of training set: validation set: test set = 7:2:1. Then LabelImg is used to label the data set to obtain the data set of typical defects discharge carbon traces in transformers.

Experiment environment and configuration

The operating system used in this experiment is Windows 11, the CPU is corei5-13400f, the GPU is NVIDIA GeForce 4060ti, the video memory is 16GB, the deep learning framework is Pytorch 2.1.0, and the programming language is Python 3.8. The training parameters are set as follows: batch_size is set to 2, the initial learning rate is set to 0.01, the input image size is set to 1280 × 1280, and the number of training rounds is 200 epochs.

Performance parameter index

To effectively and intuitively show the effectiveness of the proposed CDEP-CTSeg network, mIoU(Mean Intersection over Union), Precision (P), Recall (R), mean average precision, mAP), number of model parameters (Params), total floating-point operations (FLOPs) and frames Per Second (FPS) were used as the evaluation indicators of model performance.

The mIoU is one of the most commonly used evaluation metrics in instance segmentation. This metric calculates the average IoU of all categories, where IoU (Intersection over Union) represents the ratio of intersection and union between the predicted result and the true label, and mIoU is a value between 0 and 1, with closer to 1 indicating better algorithm performance. The formula is as follows:

where M is the segmentation region of the instance predicted by the model, G is the segmentation region of the true instance, N is the total number of instances, and \(Io{U_i}\) is the \(IoU\) of the\(ith\)instance.

P, R can be calculated by comparing the segmentation results with the true labels. P denotes the proportion of the number of true examples in all predicted positive examples, and R denotes the proportion of the number of true examples in all actual positive examples. Both are calculated as follows:

where TP stands for True Positive, FP stands for False Positive and FN stands for False Negative.

The calculation results of P and R form the P-R curve. It shows the relationship between the Precision and recall of the model under different thresholds. The closer the P-R curve is to the upper right corner of the axis, the better the performance of the model is, the better it can correctly identify the positive samples, and the higher the precision value of correctly classifying the positive samples, while the closer to the right means that the model has poor recognition ability for the positive samples, that is, poor recall ability. The P-R curve can help us evaluate the performance of the model at different thresholds and choose the appropriate threshold to balance precision and recall. For model comparison or selection, we can perform a quantitative assessment by comparing the area under the P-R curve (called Average Precision, AP), where higher AP values indicate better model performance. Secondly, for each class, the Average Precision (AP) is calculated, and the maximum AP value is taken as the AP value of the class. Finally, the AP values of all classes are averaged to obtain the final mAP (mean Average Precision), which represents the average precision over multiple classes. mAP values also include mAP@0.5 and mAP@0.5:0.95, in image segmentation mAP@0.5 refers to the average accuracy at an IoU threshold of 0.5, and mAP@0.5 measures the average accuracy of the model in pixel-level segmentation. mAP@0.5:0.95 in segmentation refers to the average accuracy as the IoU threshold varies from 0.5 to 0.95. Compared to mAP@0.5, mAP@0.5:95 provides a more comprehensive assessment of the model’s performance at different IoU thresholds, providing an evaluation for more stringent segmentation requirements.

FPS (i.e. Frames Per Second), is used to evaluate the processing speed of the model on a given hardware, that is, the number of images per second that can be processed. This metric is very important for fast segmentation, which can only be satisfied if the processing speed is fast.

In addition, to evaluate a neural network model built with a deep learning framework, in addition to the above metrics, the complexity of the model must also be considered. The total number of floating-point operations (i.e. FLOPs) and the number of model parameters (Params) are usually used to describe the complexity of the model.

Results and analysis

Ablation experiment

To further verify the effectiveness of the improved scheme in this paper, an ablation experiment is designed to evaluate the impact of each module on the overall performance of the algorithm by gradually replacing the improved modules. The experimental design allows us to quantify the specific contribution of each improvement, which clearly demonstrates the importance of the improvement to the actual performance improvement.

In this paper, the YOLOv8n-seg is used as the baseline model. The results of some typical defect discharge carbon trace data sets are shown in Table 1.

It can be seen from Table 1 that after gradually introducing various improvement measures into the proposed CDEP-CTSeg model, the segmentation performance on the carbon trace data set has been significantly improved. Firstly, after replacing the C2f-DySnake module on the baseline model, the precision rate is improved by 1.4%, the recall rate is improved by 1.1%, and the mAP50 rate is improved by 1.5%. It can be seen that the Average Precision of clustered itself has reached a high level, increasing by 1.3%. The Average Precision of dendritic is improved by 1.7%, which achieves a significant improvement. After introducing the EHSPAN feature pyramid, the precision rate is increased by 2.1%, the recall rate is increased by 0.9%, and the mAP50 rate is increased by 2.5%. Finally, mAP50 is increased by 2.8% under the combined effect of C2f-DySnake and EHSPAN, the Average Precision of clustered is increased by 1.9%, the Average Precision of dendritic is increased by 3.7%, the recall rate is increased by 2.3%, and the precision is increased by 2.8%.

Figure 14 provides a comparative analysis of segmentation results, intuitively demonstrating substantial improvements in carbon trace segmentation. There is a clear segmentation contrast between the YOLOv8-Seg model in Fig. 14(a) and the CDEP-CTSeg model in Fig.14(b). Segmentation results of dendritic carbon trace segmentation reveals that while YOLOv8-Seg successfully captures primary carbon structures, CDEP-CTSeg achieves superior boundary definition while maintaining feature integrity, as shown in the region marked by Arrow ①. Its segmentation contours adhere more faithfully to natural dendritic morphologies, particularly exhibiting enhanced capability in detecting delicate branch connections. Conversely, in the region marked by Arrow ②, the YOLOv8-Seg model produces coarse segmentation edges and omits multiple slender branches. For clustered carbon trace, the CDEP-CTSeg network also demonstrates significant advantages, obtaining more precise segmentation within the highlighted white box. These comparative results conclusively validate that through innovative architecture design, the CDEP-CTSeg effectively improves segmentation performance for typical transformer carbon traces. Particularly, it demonstrated powerful representation capability for carbon traces with complex morphological features.

In this study, typical partial discharge carbon traces inside large oil-immersed transformers are systematically verified based on actual industrial scenarios. For the carbon trace detection problem under complex working conditions inside the transformer, this paper collected authentic carbon trace images generated by partial discharge inside large transformers for identification and segmentation. As shown in Fig. 15, the proposed algorithm showed excellent feature extraction capability under the complex background interferences such as complex structure of the transformer interior, oil deposition, and insulation material texture. The method is not only able to accurately identify the complete contours of various types of carbon traces, but also can effectively distinguish carbon traces from the background, which realizes the accurate segmentation and localization of discharge defects inside the transformer. In particular, the algorithm showed stable detection performance when dealing with tiny discharge traces and carbon trace samples with fuzzy edges. This validation process fully proves the reliability and applicability of the proposed segmentation model in the actual engineering environment, and provides an effective technical solution for the intelligent detection of power equipment.

Comparison of improved performance of EHSPAN

Next, the performance of the efficient feature fusion pyramid EHSPAN proposed in this paper is tested, and the structure of the feature pyramid is modified step by step to prove the superiority of EHSPAN. The YOLOv8n-seg is still selected as the benchmark model, and the results on the data set of typical defects discharge carbon traces inside the transformer are shown in Table 2.

It can be seen from Table 2 that with the step-by-step improvement of the feature pyramid, the indicators of the model are steadily improving. Compared with the baseline model of YOLOv8n-seg, after the improvement of HSFPN, the mAP50 is increased by 0.4%, and the Average Precision of clustered carbon traces is increased by 1.9%, but the Average Precision of dendritic carbon traces is decreased by 1.2%, and the precision is decreased by 6.4%, and the difference is large. It can be seen that the pyramid has a good effect on the features of clustered carbon traces but is poor in the targeting of dendritic carbon traces. Based on this, it is improved. With the introduction of the ELA attention mechanism, PANet fusion, and the addition of the P2 detection head, the mAP can be seen to rise steadily, and finally, it is increased by 2.5% compared with the baseline model. In addition, the segmentation Average Precision of dendritic carbon traces and clustered carbon traces is improved, the segmentation Average Precision of dendritic carbon traces is increased by 3.4%, and that of clustered carbon traces is increased by 1.7%.

Comparison test of different algorithms

To further verify the performance of the improved method in this paper, the improved instance segmentation model proposed in this paper is compared with other models under the same hardware and environment configuration, including: YOLOv3tiny-seg40, YOLO5s-seg, YOLOv6s-seg, YOLOv7tiny-seg41 YOLOv9s-seg, YOLOv11s-seg, YOLOv12s-seg. The segmentation results of different algorithms are shown in Table 3. It can be seen from the table that compared with other models of similar size, the CDEP-CTSeg has obvious advantages in mAP. Its mAP is 18.1%, 9.2%, 10.6%, 13.2%, 5% higher than YOLOv3tiny-seg, YOLO5s-seg, YOLOv6s-seg, YOLOv7tiny-seg, YOLOv9s-seg, YOLOv11s-seg, YOLOv12s-seg respectively. The proposed model demonstrates significant advantages with a 4.7% point gain in Precision compared to the second-best performing YOLOv6s-seg variant.

CDEP-CTSeg exhibited superior performance over the YOLO series models for instance segmentation. To further verify these advancements, we conducted cross-architecture comparative experiments with three state-of-the-art segmentation models (UNet3+42, SwinUNet43, DeepLabV3+44), selected based on their enhanced compatibility with YOLO-series data protocols to ensure the comparability of experiment results. As evidenced in Table 3, CDEP-CTSeg achieved dominant efficiency-accuracy trade-offs. It attained the highest segmentation accuracy with minimal computational load (35.4 GFLOPs), outperforming the second-best model UNet3 + by 8.9% points in mAP while surpassing DeepLabV3 + by 6.7% points in Precision.

Through multi-model comparative experiments, Fig. 16 systematically demonstrates the performance variations of different YOLO-series models in carbon trace segmentation. For sample #014, while achieving complete carbon trace detection, model (d) exhibits partial segmentation defects in the region marked by arrow ①, and model (e) displays significant detection omissions in the area indicated by arrow ②. For sample #173, only the proposed CDEP-CTSeg model attains complete detection, with models (b)-(e) showing severe detection omissions, and models (f)-(h) demonstrating minor detection omissions in the white-boxed region marked by arrow ③. For sample #794, models (b)-(f) all exhibit notably incomplete segmentation within the white-boxed area indicated by arrow ④. Finally, for sample #015, models (b)(c)(e)(g)(h) present extensive detection omissions, model (d) achieves relatively complete segmentation but contains localized detection omissions in the white-boxed region noted by arrow ⑥, while only models (a) and (f) fully segment the carbon trace features. Crucially, the CDEP-CTSeg in Fig. 16(a) not only accurately segments complex topological structures but also attains boundary refinement in the region adjacent to the white box noted by arrow ⑤. These results comprehensively validate the dual advantages of the proposed method in structural integrity preservation and boundary segmentation accuracy.

Figure 17 compares the segmentation capabilities of YOLO-series models with dendritic carbon trace samples. Segmentation results demonstrate that CDEP-CTSeg, YOLOv9s-seg, and YOLOv11s-seg all successfully detect primary carbon trace structures. However, there are detailed differences between these models. Notebly, the CDEP-CTSeg model identifies significantly more fine dendritic structures, capturing a broader spectrum of carbon trace features, as shown in Fig. 17(a). Consequently, when processing carbon traces with intricate characteristic structures, CDEP-CTSeg achieves superior segmentation precision compared to other YOLO models, demonstrating enhanced preservation of complex topological features in carbon traces. These comparative results comprehensively validate the technological superiority of the proposed model in segmenting complex carbon trace images.

To comprehensively evaluate the performance optimization of the proposed model, this study visualized the variation trend of mean Average Precision (mAP) during the training process of each model, as illustrated in Fig. 17. The experimental results demonstrate that the proposed CDEP-CTSeg model exhibits significant performance improvement compared to the baseline YOLOv8n-seg model, with its mAP curve consistently maintaining a higher level. Furthermore, when compared to traditional detection models, our model continuously achieves superior mAP values throughout the training process, which sufficiently proves its excellence in the task of discharge carbon traces segmentation. These experimental results validate the effectiveness of the proposed improvement strategies in enhancing the segmentation accuracy of the model.

Subsequently, to avoid performance variations caused by dataset format discrepancies, Fig.19exclusively presents carbon trace segmentation results of three mainstream models: DeepLabV3+, UNet3+, and SwinUNet. For clear visualization, dendritic carbon traces are denoted in red while clustered carbon traces in green. Comparative analysis reveals suboptimal performance across all three models, exhibiting pronounced segmentation omissions and false-positive detections. Experimental results demonstrate that the CDEP-CTSeg exhibit significant advantages in segmentation accuracy and robustness over these mainstream algorithms. The proposed model not only effectively suppresses segmentation omission and false-detection rates, but also delivers exceptional capability in processing complex-shaped carbon traces. These quantitative and qualitative results jointly validate the superior segmentation capability of the CDEP-CTSeg model for discharge carbon traces, providing a practical solution for intelligent diagnostics of oil-immersed transformer.

Discussion

This paper presents an advanced instance segmentation network, CDEP-CTSeg, for the visual detection of internal insulation defects in large transformers. This approach is complemented by a self-developed micro-robot designed for internal inspection. The complex internal environment of transformers, characterized by insufficient lighting, varying imaging distances, and fluctuating lighting conditions, often leads to blurred images and indistinct carbon trace features. To address these challenges, we propose an improved Retinex image enhancement algorithm that effectively enhances local contrast and detail representation while improving overall brightness and contrast. The efficacy of this algorithm is demonstrated in Fig. 1. Additionally, to improve the accuracy of boundary segmentation for complex carbon traces, the C2f-DySnake module is integrated into the backbone network. This module adaptively focuses on elongated and curved local details, thereby enhancing the model’s ability to segment branch-like or continuous elongated structures. Furthermore, to reduce the missed detection of small carbon traces caused by significant size variations, the EHSPAN feature pyramid network is incorporated into the Neck network. By assigning greater weights to higher-level features and filtering out lower-level information through a channel attention mechanism, the model’s capability to detect smaller targets is significantly improved. Experimental results show that compared to the traditional YOLOv8-seg model, the proposed CDEP-CTSeg model achieves improvements of 2.3%, 2.8%, and 2.8% in recall rate, precision, and mAP50, respectively. Moreover, the segmentation accuracy for clustered and dendritic carbon traces is increased by 1.9% and 3.7%, respectively. These improvements are validated in Tables 1 and 2, and Fig.1. Comparative analysis with several existing instance segmentation models further demonstrates the superior performance of the proposed model in terms of segmentation accuracy and defect detection, as shown in Table 3; Figs. 16, 17 and 17. These findings indicate that the CDEP-CTSeg instance segmentation network excels in rapidly and accurately detecting transformer internal insulation states, which is crucial for ensuring the stable operation of large power transformers.

Conclusions

-

1.

In order to solve the low brightness and poor definition of carbon trace images caused by the dark internal environment of the transformer and insufficient supplementary light, the MSRCP algorithm was designed to preprocess the collected image. After preprocessing, the image has been significantly improved in terms of clarity, brightness, and contrast, which makes the characteristic information of carbon traces more obvious. Experimental results showed that the MSRCP image enhancement algorithm could effectively improve the quality of carbon trace images.

-

2.

Aiming at the problem of inaccurate boundary segmentation when dealing with complex carbon traces with the traditional segmentation networks, this paper introduced the C2f-DySnake module into the backbone network to enhance the detail segmentation ability when dealing with elongated continuous images (such as dendritic carbon traces). Compared with the original network, the precision, recall, and mAP50 were improved by 1.4%, 1.1%, and 1.5% respectively. The comparative results of the segmentation experiments showed that the C2f-DySnake module significantly improved the segmentation accuracy and detail performance when dealing with complex and elongated images, achieving more accurate and fine segmentation results.

-

3.

To address the missed segmentation of small targets resulting from the considerable size variety of carbon traces, the EHSPAN feature pyramid was designed in this paper. The experimental results showed that compared with the baseline model, the mAP50 of dendritic and clustered carbon traces were increased by 3.4% and 1.7% respectively. The ablation experiment indicated that the feature pyramid significantly improved the segmentation ability of small objects.

Data availability

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy.

References

Wajid, A. et al. Comparative performance study of dissolved gas analysis (DGA) methods for identification of faults in power transformer. Int. J. Energy Res. 2023 (9960743). https://doi.org/10.1155/2023/9960743 (2023).

Zhang, W., Zeng, Y., Li, Y. & Zhang, Z. Prediction of dissolved gas concentration in transformer oil considering data loss scenarios in power system. Energy Rep. 9, 186–193. https://doi.org/10.1016/j.egyr.2022.10.389 (2023).

Tang, Z. & Jian, X. Thermal fault diagnosis of complex electrical equipment based on infrared image recognition. Sci. Rep. 14, 5547. https://doi.org/10.1038/s41598-024-56142-x (2024).

Guerbas, F. et al. Neural networks and particle swarm for transformer oil diagnosis by dissolved gas analysis. Sci. Rep. 14, 9271. https://doi.org/10.1038/s41598-024-60071-0 (2024).

Nanfak, A. et al. Hybrid DGA method for power transformer faults diagnosis based on evolutionary k-Means clustering and dissolved gas subsets analysis. IEEE Trns Dielectr. Electr. Insul. 30, 2421–2428. https://doi.org/10.1109/TDEI.2023.3275119 (2023).

Jia, S. et al. Detection technology of partial discharge in transformer based on optical signal. Energy Rep. 9, 98–106. https://doi.org/10.1016/j.egyr.2022.10.369 (2023).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444. https://doi.org/10.1038/nature14539 (2015).

Schmidhuber, J. Deep learning in neural networks: an overview. Neural Netw. 61, 85–117. https://doi.org/10.1016/j.neunet.2014.09.003 (2015).

Liu, W. et al. A survey of deep neural network architectures and their applications. Neurocomputing 234, 11–26. https://doi.org/10.1016/j.neucom.2016.12.038 (2017).

He, K., Gkioxari, G., Dollar, P., Girshick, R. & Mask, R-C-N-N. IEEE Trans. Pattern Anal. Mach. Intell. 42, 386–397. https://doi.org/10.1109/TPAMI.2018.2844175 (2020).

Hafiz, A. M. & Bhat, G. M. A survey on instance segmentation: state of the Art. Int. J. Multimed Inf. Retr. 9, 171–189. https://doi.org/10.1007/s13735-020-00195-x (2020).

Gu, W., Bai, S. & Kong, L. A review on 2D instance segmentation based on deep neural networks. Image Vis. Comput. 120, 104401. https://doi.org/10.1016/j.imavis.2022.104401 (2022).

Dai, J., He, K., Li, Y., Ren, S. & Sun, J. Instance-Sensitive Fully Convolutional Networks. in Computer Vision – ECCV 534–549 (Springer International Publishing, Cham, 2016). (2016). (eds. Leibe, B., Matas, J., Sebe, N. & Welling, M.) https://doi.org/10.1007/978-3-319-46466-4_32

Bolya, D., Zhou, C., Xiao, F. & Lee, Y. J. YOLACT Real-time Instance Segmentation. in 2019 IEEE/CVF International Conference on COMPUTER VISION (ICCV) 9156–9165 (IEEE, New York, 2019). ) 9156–9165 (IEEE, New York, 2019). (2019). https://doi.org/10.1109/ICCV.2019.00925

Wang, X., Zhang, R., Shen, C., Kong, T. & Li, L. S. O. L. O. A simple framework for instance segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 44, 8587–8601. https://doi.org/10.1109/TPAMI.2021.3111116 (2022).

Lin, T. Y. et al. Feature Pyramid Networks for Object Detection. in 30TH IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 936–944 (IEEE, New York, 2017). ) 936–944 (IEEE, New York, 2017). (2017). https://doi.org/10.1109/CVPR.2017.106

Xie, E. et al. PolarMask: Single Shot Instance Segmentation with Polar Representation. in. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020) 12190–12199 (IEEE Computer Soc, Los Alamitos, 2020). (2020). https://doi.org/10.1109/CVPR42600.2020.01221

Kirillov, A., Wu, Y., He, K., Girshick, R. & PointRend Image Segmentation as Rendering. in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020) 9796–9805 (IEEE Computer Soc, Los Alamitos, 2020). https://doi.org/10.1109/CVPR42600.2020.00982

Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 779–788 (IEEE, New York, 2016). (2016). https://doi.org/10.1109/CVPR.2016.91

Huang, H., Zhou, X., Cao, J., He, R. & Tan, T. Vision Transformer with Super Token Sampling. Preprint at (2024). https://doi.org/10.48550/arXiv.2211.11167

Wang, C. Y., Yeh, J. & Liao, H. Y. M. YOLOv9: learning what you want to learn using programmable gradient information. In COMPUTER VISION - ECCV 2024, PT XXXI Vol. 15089 (eds Leonardis, A. et al.) 1–21 (Springer International Publishing Ag, 2025). https://doi.org/10.1007/978-3-031-72751-1_1.

Khanam, R. & Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. Preprint at (2024). https://doi.org/10.48550/arXiv.2410.17725

Tian, Y., Ye, Q. & Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. Preprint at (2025). https://doi.org/10.48550/arXiv.2502.12524

Bai, R., Wang, M., Zhang, Z., Lu, J. & Shen, F. Automated construction site monitoring based on improved YOLOv8-seg instance segmentation algorithm. IEEE Access. 11, 139082–139096. https://doi.org/10.1109/ACCESS.2023.3340895 (2023).

Yue, X. et al. Improved YOLOv8-Seg network for instance segmentation of healthy and diseased tomato plants in the growth stage. Agriculture-Basel 13, 1643. https://doi.org/10.3390/agriculture13081643 (2023).

Zhang, Z., Zou, Y., Tan, Y. & Zhou, C. YOLOv8-seg-CP: a lightweight instance segmentation algorithm for chip pad based on improved YOLOv8-seg model. Sci. Rep. 14, 27716. https://doi.org/10.1038/s41598-024-78578-x (2024).

Peng, L., Wang, K., Zhou, H., Ma, Y. & Yu, P. YOLOv7-CWFD for real time detection of bolt defects on transmission lines. Sci. Rep. 15, 1635. https://doi.org/10.1038/s41598-024-81386-y (2025).

Xu, Z. & Tang, X. Transmission line insulator defect detection algorithm based on MAP-YOLOv8. Sci. Rep. 15, 10288. https://doi.org/10.1038/s41598-025-92445-3 (2025).

Wang, C. Y., Bochkovskiy, A. & Liao, H. Y. M. YOLOv7: trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. Preprint At. https://doi.org/10.48550/arXiv.2207.02696 (2022).

Chen, Y. et al. Accurate leukocyte detection based on deformable-DETR and multi-level feature fusion for aiding diagnosis of blood diseases. Comput. Biol. Med. 170, 107917. https://doi.org/10.1016/j.compbiomed.2024.107917 (2024).

Hou, Q., Zhou, D. & Feng, J. Coordinate Attention for Efficient Mobile Network Design. in 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2021 13708–13717 (IEEE Computer Soc, Los Alamitos, 2021). https://doi.org/10.1109/CVPR46437.2021.01350

Hou, Q., Zhang, L., Cheng, M. M., Feng, J. S. & Pooling Rethinking Spatial Pooling for Scene Parsing. in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 4002–4011 (IEEE Computer Soc, Los Alamitos, 2020). https://doi.org/10.1109/CVPR42600.2020.00406

Wu, Y., He, K. & Group Normalization Int. J. Comput. Vis. 128, 742–755. https://doi.org/10.1007/s11263-019-01198-w (2020).

Li, M., Liu, J., Yang, W., Sun, X. & Guo, Z. Structure-Revealing Low-Light image enhancement via robust retinex model. IEEE Trans. Image Process. 27, 2828–2841. https://doi.org/10.1109/TIP.2018.2810539 (2018).

Sun, Y. et al. Low-Illumination image enhancement algorithm based on improved Multi-Scale retinex and ABC algorithm optimization. Front. Bioeng. Biotechnol. 10, 865820. https://doi.org/10.3389/fbioe.2022.865820 (2022).

un, S. et al. A study of pathological image detail enhancement method based on improved single scale retinex. J. Med. Imaging Health Inf. 8 (6), 1051–1056. https://doi.org/10.1166/jmihi.2018.2396 (2018).

Serp, J. et al. The molten salt reactor (MSR) in generation IV: overview and perspectives. Prog Nucl. Energy. 77, 308–319. https://doi.org/10.1016/j.pnucene.2014.02.014 (2014).

Rahman, Z. U. & Jobson, D. J. Retinex processing for automatic image enhancement. J. Electron. Imaging. 13, 100–110. https://doi.org/10.1117/1.1636183 (2004).

Tang, C. et al. Efficient underwater image and video enhancement based on retinex. Signal. Image Video Process. 13, 1011–1018. https://doi.org/10.1007/s11760-019-01439-y (2019).

Choi, J., Chun, D., Kim, H. & Lee, H. J. Gaussian YOLOv3: An Accurate and Fast Object Detector Using Localization Uncertainty for Autonomous Driving. in 2019 IEEE/CVF International Conference on Computer Vision (ICCV 2019) 502–511IEEE Computer Soc, Los Alamitos, (2019). https://doi.org/10.1109/ICCV.2019.00059

Wang, C. Y., Bochkovskiy, A. & Liao, H. Y. M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. in 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 7464–7475IEEE Computer Soc, Los Alamitos, (2023). https://doi.org/10.1109/CVPR52729.2023.00721

Huang, H. et al. Unet 3+: A Full-Scale Connected Unet for Medical Image Segmentation. in. IEEE International Conference on Acoustics, Speech, and Signal Processing 1055–1059 (IEEE, New York, 2020). (2020). https://doi.org/10.1109/icassp40776.2020.9053405

Cao, H. et al. Swin-Unet: Unet-like Pure Transformer for Medical Image Segmentation. Preprint at (2021). https://doi.org/10.48550/arXiv.2105.05537

Chen, L. C., Zhu, Y., Papandreou, G., Schroff, F. & Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation, in Computer Vision - ECCV, PT VII (eds. Ferrari, V., Hebert, M., Sminchisescu, C. & Weiss, Y.) vol. 11211 833–851 (Springer International Publishing Ag, Cham, 2018). https://doi.org/10.1007/978-3-030-01234-2_49

Acknowledgements

This research was funded by the National Natural Science Foundation of China (No. 51907102).

Author information

Authors and Affiliations

Contributions

Conceptualization, H.J. and J.L.; methodology, H.J. and J.L.; software, P.H. and J.L.; validation, P.H. and J.L.; formal analysis, X.L.; investigation, H.J.; resources, H.J.; data curation, P.H. and J.L.; writing—original draft preparation, J.L. and Z. S.; writing—review and editing, X.L. and L.L.; visualization, X.L.; supervision, L.L; project administration, H.J.; funding acquisition, H.J. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Consent for publication

All authors agreed to publish this manuscript.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ji, H., Li, J., Han, P. et al. An instance segmentation network for discharging carbon traces inside oil-immersed transformers with boundary and detail features enhancement. Sci Rep 15, 32196 (2025). https://doi.org/10.1038/s41598-025-15894-w

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-15894-w