Abstract

Accurate segmentation of malocclusion is crucial in orthodontic diagnosis and treatment planning, but existing deep learning methods suffer from poor robustness and from feature confusion between neighboring tooth classes when dealing with malocclusion, which undermines their reliability in clinical applications. To address this problem, a U-shaped 3D dental model segmentation method based on hierarchical feature guidance is proposed. First, a feature-guided deep encoder architecture is constructed, which introduces a normalization method that combines the local mean with the global standard deviation. A push–pull strategy is also employed to optimize point cloud density, adjusting the standard deviation variation to meet the point cloud density requirements of different regions. Second, an inverted bottleneck global feature extraction flow is designed to guide the encoder in learning the overall features of the dentition and jaw through dynamic scaling of deep-level features, thereby enhancing the semantic recognition of malformations. Finally, an interpolation method is used to decode the high-level dental semantic information layer by layer to reconstruct the spatial structural features of the high-resolution dental mesh. Experimental results on a self-constructed malformed dental dataset show that the proposed method achieves an overall accuracy (OA) of 96.6% and a mean intersection over union (mIoU) of 90.8%. These values are 3.4 and 8.2 percentage points higher than those of PointNet, 11.3 and 26 percentage points higher than those of MeshSegNet, and 2.2 and 5.6 percentage points higher than those of PointE-Net, respectively, while the model has only 1.54 M parameters. On the public datasets Teeth3DS and 3D-IOSSeg, the OA of the proposed method reaches 96.4% and 90.1%, and the mIoU reaches 94.5% and 86.8%, respectively. These results indicate that the proposed method is better suited to the needs of intelligent virtual orthodontics.

Introduction

In virtual orthodontic systems, the accurate segmentation of dental models is fundamental to achieving efficient treatment planning1. With the advancement of orthodontics, the role of automation technology in the treatment process has become increasingly prominent. The segmentation of dental models is a critical step in tooth path planning and is essential for predicting soft tissue deformation and formulating personalized treatment plans. Therefore, there is a need for an automatic, accurate and efficient segmentation method to enhance the precision of orthodontic treatment and provide strong support for clinical practice.

In recent years, point cloud deep learning2 has gradually emerged as a research hotspot in the field of 3D dental model segmentation. Compared to traditional methods, point cloud deep learning approaches can directly process raw 3D mesh dental data, eliminating the need for complex data format conversions between point clouds and mesh surfaces and thereby simplifying data preprocessing. However, dental models are usually characterized by complex appearance, morphological similarity between teeth, and unique shapes. Malformed teeth are often irregular in form, abnormally positioned, and asymmetrically structured. Existing deep learning algorithms also tend to use complex feature aggregation modules, rely too heavily on complex local and global feature extractors, and do not fully account for the irregularity of the point cloud. As a result, existing methods have the following problems when processing malocclusion data: it is difficult to effectively extract complex geometric features, which in turn affects the accuracy of orthodontic plan formulation; key details are easily lost in sparse regions, which can compromise the assessment of gingival recession risk; and feature confusion between neighboring tooth categories can lead to fragmented, misclassified faces in the segmentation results, which in turn constrains the efficiency of the digital diagnosis and treatment process.

To address the aforementioned issues, this paper proposes a hierarchical feature-guided U-shaped 3D dental model segmentation network, which enhances the fusion and representation of global and local features while reducing the interference of irregular data on model performance. The model architecture is also simplified to reduce the reliance on complex local feature extractors and to improve adaptability to complex tooth morphology. In addition, a malocclusion model dataset is constructed, covering nine major categories: wisdom teeth, high and low drop teeth, missing teeth, erupting small teeth, diastema, dental crowding, implant screw residual roots, Da Vinci-like black triangles, and crown deformities.

The main contributions of this paper are as follows:

- A feature-guided deep encoder architecture is constructed. After the sampling and grouping operations, a novel normalization method is introduced to address data irregularity and improve sampling and grouping efficiency. A push–pull strategy is used to optimize the point cloud density by adjusting the standard deviation variation to adapt to the point cloud density requirements of different regions.

- Drawing on the inverted bottleneck idea, a global feature extraction block is designed through deep-level feature dynamic scaling, combined with residual connections and max-pooling, to guide the encoder in learning the overall characteristics of the dental model and enhance global feature representation.

- The results of several classical segmentation methods are presented on the self-constructed deformity dataset and the public datasets Teeth3DS and 3D-IOSSeg, and are thoroughly compared and analyzed.

The rest of the paper is structured as follows: the “Related work” section provides an overview of related research. The “Methods” section then elaborates on the components of the proposed method. The “Experiments results and analyses” section presents the details of the experiments. Finally, the “Discussion” section summarizes the paper.

Related work

Due to the exceptional surface modeling capabilities of intraoral scanners (IOS), 3D intraoral scanning technology has been widely applied in prosthodontics and orthodontics. To assist clinicians in achieving precise diagnosis and treatment, researchers in recent years have worked on intelligent algorithms for the automatic segmentation of different tooth types in 3D intraoral scan data. Existing tooth model segmentation methods can be broadly categorized into two classes: traditional methods and deep learning-based methods. Traditional methods are mainly based on hand-crafted geometric features, including curvature-based3, contour-based4, geodesic-based5, and harmonic field-based6 approaches, as well as segmentation by 3D-2D projection transformation7. Although these methods can capture certain geometric information of teeth, their performance is limited when dealing with complex tooth morphologies, and they are susceptible to noise, malformations, or defects. With the rapid advancement of deep learning techniques, neural network-based segmentation methods have achieved significant progress in automation and accuracy. According to their core network characteristics and processing approaches, they can be classified into methods based on convolutional neural networks (CNNs) or graph convolutional networks (GCNs), methods based on Transformers, and methods based on dual-stream networks.

CNN or GCN methods

Initially, some approaches, inspired by voxelization, converted 3D intraoral scan data into regular structures, which were then directly processed by CNNs. However, this indirect feature transformation is a compromise that loses original geometric information and degrades the quality of tooth segmentation. Tooth segmentation methods that combine geometric information with CNNs have also emerged. For example, Xu et al.8 used a two-stage hierarchical approach to extract features from intraoral scan data and relied on CNNs to extract different geometric features, which ultimately yielded fine tooth segmentation results. Zanjani et al.9 borrowed the idea of PointCNN10 and proposed a neural network that directly utilizes the original tooth and jaw model without downsampling and achieves accurate tooth segmentation. However, this network depends heavily on large-scale dental datasets and has high memory occupancy during operation, which limits its practical application scenarios to some extent.

Sun et al.11 proposed an automatic segmentation and dense correspondence network, which can be stacked on an existing GCN. They introduced an instance-aware geodesic mapping to encode spatial relationships with the remaining dental models, thereby enhancing GCN-based tooth segmentation and dense correspondence features. Lian et al.12 proposed MeshSegNet, an extension of PointNet13. Unlike PointNet, this method directly takes mesh cells as input, explicitly exposing local structures. However, MeshSegNet consumes significant computational resources due to the multiplication of large adjacency matrices, resulting in low training and inference efficiency. Xu et al.14 designed a simple point cloud residual multi-layer perceptron network, PointMLP. Without integrating complex local geometry extractors, it achieves highly competitive performance solely through the proposed lightweight geometric affine module. Li et al.15 proposed an end-to-end deep neural network called MBESegNet. To reduce the ambiguity of mesh feature representation near tooth boundaries, the network’s multi-scale bidirectional enhancement module learns multi-scale feature representations by integrating local contextual information of different sizes from various neighborhoods. Gu et al.16 proposed PointE-Net, a lightweight framework with low training cost that improves upon PointMLP. Zhao et al.17 proposed a graph attention convolutional network for 3D dental model segmentation, which consists of two parallel branches. By further fusing the features of the two branches, complementary features are learned to predict the final segmentation results. However, among malformed-tooth cases this method only addresses tooth misalignment. Zhang et al.18 proposed a method called TSGCN, which utilizes a dual-stream architecture based on GCN to learn coordinate features and normal features, further improving performance compared to other methods. Nevertheless, there is still room for improvement in its ability to extract and process global features. Zhao et al.19 proposed a region-aware graph convolutional network (RAGCNet) for 3D tooth segmentation. The network designs a region-aware module (RAM) using an attention mechanism for feature extraction and fusion. However, it still exhibits certain limitations when handling special cases such as tooth loss and malformations.

Transformer-based methods

In the task of tooth segmentation, traditional CNNs and GCNs have made some progress, but they still face several key challenges, such as handling the unordered nature of point cloud data, capturing long-range dependencies, and distinguishing similar features in complex geometric structures. To address these issues, the Transformer architecture has gained attention due to its ability to capture detailed features in complex geometric structures. Guo et al.20 applied the Transformer to 3D point cloud deep learning and proposed the Point Cloud Transformer (PCT) algorithm. This method generates distinguishable features through a coordinate embedding module and designs an offset attention module that combines self-attention with offset values, demonstrating good performance in general tooth segmentation tasks. Xiong et al.21 proposed TFormer, a method based on a 3D Transformer architecture that extracts local and global contextual information for distinguishing tooth and gingival boundaries. They also designed a novel point curvature geometry-guided loss to refine boundary details and improve segmentation accuracy. However, this method exhibits limitations when applied to models with missing teeth or wisdom teeth. Xiong et al.22 proposed a novel method called TSegFormer, which employs a multi-task 3D Transformer architecture to capture local and global dependencies in tooth and gingival point clouds. Additionally, they introduced a point curvature-based geometry-guided loss for end-to-end boundary optimization, avoiding traditional two-stage post-processing steps.

Dual-stream network-based methods

To improve the accuracy and robustness of segmentation, dual-stream networks have garnered attention due to their ability to integrate different types of features. Zhang et al.23 proposed TSGCNet, which accomplishes dental model segmentation through a dual-stream graph convolutional network. This method integrates complementary information from coordinates and normal vectors to learn discriminative geometric representations for mesh face classification. However, the algorithm exhibits missegmentation in models with 12 teeth. Li et al.15 proposed an end-to-end deep neural network called MBESegNet. To reduce the ambiguity of mesh feature representation near tooth boundaries, the network introduces a multi-scale bidirectional enhancement module, which learns multi-scale feature representations by combining local contextual information of different sizes from various neighborhoods. However, the study only utilized a dataset of 160 dental models and demonstrated effectiveness primarily on normal teeth. Ma et al.24 proposed a tooth segmentation method based on multiple geometric feature learning, enhancing the ability to distinguish different mesh geometric features by designing multiple geometric feature learning modules. Nevertheless, the limited number of samples used in the experiments restricts its generalization ability and robustness. Lin et al.25 proposed a dual-branch geometric attention network (DBGANet). This network captures tooth geometric structures and boundary information through 3D coordinates and normal vectors, while modeling global context in the C branch to distinguish adjacent teeth. The method performs well on the Teeth3DS dataset and a self-constructed dataset of 40 dental models, but it can fail in complex and atypical cases. Jin et al.26 proposed a novel learnable method to optimize coarse results obtained from existing 3D tooth segmentation algorithms. This optimization framework introduces a dual-stream network called TSRNet (Tooth Segmentation Refinement Network) to correct defective boundaries and distance maps extracted from coarse segmentation. The method significantly improves the coarse results of baseline approaches, achieving state-of-the-art performance.

A comparison of related methods is shown in Table 1. In summary, in the task of tooth segmentation, CNN or graph convolution-based methods can integrate geometric information for local and global feature extraction, making them suitable for regular data. Dual-stream network methods improve segmentation accuracy by fusing different types of geometric features and demonstrate strong performance in boundary refinement and multi-scale feature learning. Transformer-based methods capture long-range dependencies through self-attention mechanisms and achieve boundary refinement via geometry-guided losses, excelling in general segmentation tasks. However, these methods still exhibit the following shortcomings: (1) The use of a single max-pooling strategy in global feature extraction often leads to feature confusion between adjacent tooth categories, limiting feature discriminability. (2) Due to the limited scale of datasets, the performance of these models on malformed tooth data is constrained, making it difficult to meet the demands of diverse clinical scenarios. (3) Although the segmentation accuracy is improved by introducing a fine local feature extraction mechanism, the training and inference efficiency decreases significantly along with the increase of network complexity, and at the same time, it fails to adequately address the key issues of disorder and irregularity of point cloud data. In contrast to the aforementioned methods, this paper constructs a self-built dataset of malformed dental models and designs a novel network architecture capable of effectively identifying semantic features of various malformed teeth. The proposed method demonstrates the ability to perform tooth segmentation tasks rapidly, efficiently, automatically, and with high precision, significantly enhancing the model’s adaptability and robustness in complex dental structures.

Methods

Overall network structure design

The overall network architecture of the proposed method is illustrated in Fig. 1. It adopts an encoder-decoder structure and efficiently extracts dental geometric features with a minimal number of learnable parameters. The input point cloud data for this network is an \(N \times 15\) feature matrix, where the 15-dimensional features correspond to the centroid coordinates, normal vectors, and the coordinates of the three vertices of each triangular mesh face. The output is an \(N \times 17\) matrix, where 17 denotes the number of semantic categories of dentition. The specific processing flow is as follows:

(1) The 15-dimensional input features are mapped to a higher-dimensional space (66 dimensions) through an embedding layer, thereby providing richer feature representations for subsequent network processing. Subsequently, the network progressively extracts both global features and local details of the point cloud through four downsampling and four upsampling operations.

(2) In the feature-guided deep encoder, the farthest point sampling (FPS) strategy ensures that the sampled points are spatially representative by iteratively calculating the minimum Euclidean distance from each point in the point cloud to the currently selected set of points and selecting the point with the largest distance as the new key point. Then, the K-nearest neighbor (KNN) search is used to determine the neighborhood of each key point: the squared distance between pairs of points is computed by the square-distance function, and the \(k=32\) nearest neighbors are selected within a radius of 2.5 times the average point distance to effectively extract local geometric features. A normalization method is then applied, combining the local mean with the global standard deviation to eliminate scale variations and offsets in local features, ensuring spatial consistency and uniformity of the features. Simultaneously, a push–pull strategy is used to optimize the point cloud density by adaptively adjusting the values of \(\alpha_1\) and \(\beta_1\). Additionally, a residual structure is introduced in the feature extraction block, preserving more high-dimensional features through skip connections, mitigating the vanishing gradient problem, and enhancing the transmission and fusion of features.

(3) During the downsampling process at each stage, an inverted bottleneck global feature extraction stream is designed to guide the encoder in learning the overall features of the dental model through deep-level feature dynamic scaling. The generated fused features serve as the input for the next stage of downsampling, promoting global consistency of features and effective transmission of contextual information.

(4) In the decoder, an interpolation method is employed to progressively decode high-level dental semantic information, reconstructing the spatial structural features of high-resolution dental meshes. Through a multi-scale feature fusion mechanism, low-resolution features and high-resolution features are integrated, generating richer multi-scale contextual information and thereby providing comprehensive feature representation for subsequent tasks.

Finally, the network maps the features of each point to the corresponding category labels through a classification head, achieving precise semantic segmentation of the tooth regions in the point cloud.
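As an illustration of the \(N \times 15\) input described above, the following minimal sketch builds the 15-dimensional feature of a single triangular face. The ordering of the components (centroid, unit normal, then the three vertices) and the function name `face_features` are assumptions made for this example; the text only states which quantities are included.

```python
import math

def face_features(v0, v1, v2):
    """Return an assumed 15-D feature for one triangle:
    centroid (3) + unit normal (3) + three vertices (9)."""
    centroid = [(a + b + c) / 3.0 for a, b, c in zip(v0, v1, v2)]
    # Edge vectors spanning the triangle.
    e1 = [b - a for a, b in zip(v0, v1)]
    e2 = [c - a for a, c in zip(v0, v2)]
    # Cross product e1 x e2 gives the (unnormalized) face normal.
    n = [
        e1[1] * e2[2] - e1[2] * e2[1],
        e1[2] * e2[0] - e1[0] * e2[2],
        e1[0] * e2[1] - e1[1] * e2[0],
    ]
    length = math.sqrt(sum(x * x for x in n)) or 1.0  # guard degenerate faces
    normal = [x / length for x in n]
    return centroid + normal + list(v0) + list(v1) + list(v2)

# One face in the xy-plane; a mesh with N faces yields an N x 15 matrix.
row = face_features((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

Stacking one such row per face gives the matrix that the embedding layer in step (1) receives.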

Feature-guided deep encoder

The “irregularity” of point cloud data is primarily manifested as the non-uniform distribution of points in space, often constrained by the precision of scanning devices and environmental factors. The design of the normalization method draws inspiration from point cloud normalization27 and batch normalization techniques, combining their advantages in feature standardization and distribution adjustment. By dynamically adjusting the mean and standard deviation, it facilitates rapid network convergence and enhances training stability. In the context of point cloud processing, this normalization strategy helps to uniformly distribute features in local regions, improving the model’s generalization capability and performance.

The architecture of the downsampling network in the deep encoder is illustrated in Fig. 2. During downsampling, non-learnable components such as Farthest Point Sampling (FPS) and K-Nearest Neighbors (KNN) are initially employed. While FPS and KNN-based neighborhood aggregation can extract local features, they struggle to address the issue of feature inconsistency caused by uneven point cloud density, which negatively impacts feature learning and model training. Following FPS and KNN, a clustering operation is further introduced to enhance the capture of local point cloud structures. To resolve the issue of feature inconsistency, a normalization operation is proposed for the grouped points. Finally, deep-level features are effectively extracted through residual blocks, mitigating the vanishing gradient problem in deep networks. Detailed steps of the normalization process will be elaborated in the next subsection.
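The two non-learnable components mentioned above can be sketched as follows. This is a plain-Python illustration, not the authors' implementation: the function names are hypothetical, the radius constraint on the neighborhood is omitted for brevity, and a practical version would use batched tensor operations.

```python
def sq_dist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def farthest_point_sampling(points, m, start=0):
    """Pick m indices so that each new point is farthest from those already chosen."""
    selected = [start]
    # min_d[i] is the squared distance from point i to its nearest selected point.
    min_d = [sq_dist(p, points[start]) for p in points]
    while len(selected) < m:
        nxt = max(range(len(points)), key=lambda i: min_d[i])
        selected.append(nxt)
        for i, p in enumerate(points):
            d = sq_dist(p, points[nxt])
            if d < min_d[i]:
                min_d[i] = d
    return selected

def knn_group(points, centers, k):
    """For each center index, return the indices of its k nearest points."""
    groups = []
    for c in centers:
        order = sorted(range(len(points)), key=lambda i: sq_dist(points[i], points[c]))
        groups.append(order[:k])
    return groups

pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
keys = farthest_point_sampling(pts, 3)   # key-point indices
groups = knn_group(pts, keys, 2)         # 2 nearest neighbors per key point
```

Because FPS only looks at distances to already selected points, an isolated point such as the one at x = 10 is picked early, which is how the sampling stays spatially representative even when the density is uneven.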

Normalization methods

The workflow of the normalization method is illustrated in Fig. 3, with the specific procedural steps outlined as follows:

- Step 1: Mean calculation. First, compute the mean \(\mu_1\) of the input point set \(\text{new\_points}\), which is used to normalize the clustered point set. The mean can be calculated in two ways: as a local mean or as a global mean. Local mean: the local mean is computed from the features of the sampled points.

$$\begin{aligned} \mu_1 = \frac{1}{S} \sum_{s=1}^S \text{new\_points}[s] \end{aligned} \tag{1}$$

where \(S\) is the number of sampled points and \(\text{new\_points}[s]\) denotes the features of the \(s\)-th sampled point; the local mean preserves the spatial information of each sampling point. Global mean: the global mean is computed over the entire batch of points, aiming to eliminate inter-batch variations.

$$\begin{aligned} \mu_1 = \frac{1}{B \times S} \sum_{b=1}^B \sum_{s=1}^S \text{new\_points}[b,s] \end{aligned} \tag{2}$$

where \(B\) is the batch size and \(S\) is the number of sampled points. The global mean facilitates the standardization of the entire dataset, ensuring that the feature scales of all point clouds are unified.

- Step 2: Standard deviation calculation. Compute the standard deviation of the point set \(\text{grouped\_points}\) to measure the dispersion of each point relative to the mean. The standard deviation can likewise be computed locally or globally. Local std: for each sampled point, the local standard deviation is calculated within its neighborhood.

$$\begin{aligned} \sigma_1 = \sqrt{\frac{1}{K} \sum_{k=1}^K \left( \text{grouped\_points}[k] - \mu_1 \right)^2} \end{aligned} \tag{3}$$

where \(K\) is the number of neighboring points for each sampling point and \(\text{grouped\_points}[k]\) denotes the features of the \(k\)-th neighboring point. The local standard deviation reflects the distribution characteristics of the neighborhood around each point. Global std: the global standard deviation is computed across the entire batch to avoid the influence of local variations.

$$\begin{aligned} \sigma_1 = \sqrt{\frac{1}{B \cdot S \cdot K} \sum_{b=1}^B \sum_{s=1}^S \sum_{k=1}^K \left( \text{grouped\_points}[b,s,k] - \mu_1 \right)^2} \end{aligned} \tag{4}$$

where \(B\) is the batch size, \(S\) is the number of sampling points, and \(K\) is the number of neighboring points for each sampling point. The global standard deviation helps to unify the features of individual point clouds and eliminate bias due to local variations.

- Step 3: Normalization operation. The point set \(\text{grouped\_points}\) is normalized by the local mean and the global standard deviation. The normalization formula is:

$$\begin{aligned} \text{grouped\_points}' = \frac{\text{grouped\_points} - \mu_1}{\sigma_1 + \epsilon} \end{aligned} \tag{5}$$

where \(\epsilon\) is a small constant (usually \(10^{-5}\)) used to avoid division by zero. The core of the normalization operation is to give each feature of the point cloud zero mean and unit variance by subtracting the mean and dividing by the standard deviation.

- Step 4: Affine transformation. To recover the scale and offset of the normalized point cloud, adjustments are made using an affine transformation:

$$\begin{aligned} \text{grouped\_points}'' = \alpha_1 \cdot \text{grouped\_points}' + \beta_1 \end{aligned} \tag{6}$$

where \(\alpha_1\) and \(\beta_1\) are learnable parameters that control the scale and offset of the normalized point cloud. By learning these parameters, the network can adaptively adjust the features of the point cloud to better suit the data distribution of the target task. The initial value of \(\alpha_1\) is usually set to 1 and that of \(\beta_1\) to 0, indicating that no scale adjustment or bias is applied in the initial state. During training, the network computes the loss function based on the segmentation task and calculates the gradient for each parameter by backpropagation. The gradients are passed to the Adam optimizer, which uses them to update the values of \(\alpha_1\) and \(\beta_1\):

$$\begin{aligned} \alpha_1 \leftarrow \alpha_1 - \eta \frac{\partial L}{\partial \alpha_1} \end{aligned} \tag{7}$$

$$\begin{aligned} \beta_1 \leftarrow \beta_1 - \eta \frac{\partial L}{\partial \beta_1} \end{aligned} \tag{8}$$

where \(\eta\) is the learning rate, and \(\frac{\partial L}{\partial \alpha_1}\) and \(\frac{\partial L}{\partial \beta_1}\) are the gradients computed by backpropagation.
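Steps 1 to 4 can be put together in a small plain-Python sketch. It rests on several assumptions that the text leaves open: the local mean of Eq. (1) is taken per point cloud over its \(S\) sampled points, the global standard deviation of Eq. (4) is a single scalar pooled over batch, groups, neighbors, and channels (with a square root so that it is a standard deviation), and `alpha` and `beta` are plain scalars rather than learned per-channel parameters. The function name `normalize_groups` is hypothetical.

```python
import math

def normalize_groups(new_points, grouped_points, alpha=1.0, beta=0.0, eps=1e-5):
    """new_points: [B][S][D] sampled-point features.
    grouped_points: [B][S][K][D] neighbor features.
    Returns (normalized features with the same nesting, global std)."""
    B = len(grouped_points)
    D = len(new_points[0][0])
    # Step 1, Eq. (1): per-cloud mean over the S sampled points (assumed reading).
    mu = []
    for b in range(B):
        S = len(new_points[b])
        mu.append([sum(new_points[b][s][d] for s in range(S)) / S for d in range(D)])
    # Step 2, Eq. (4): one global standard deviation over all grouped values.
    sq_sum, count = 0.0, 0
    for b in range(B):
        for group in grouped_points[b]:
            for feat in group:
                for d in range(D):
                    sq_sum += (feat[d] - mu[b][d]) ** 2
                    count += 1
    sigma = math.sqrt(sq_sum / count)
    # Steps 3 and 4, Eqs. (5) and (6): normalize, then apply the affine transform.
    out = [
        [
            [[alpha * (feat[d] - mu[b][d]) / (sigma + eps) + beta for d in range(D)]
             for feat in group]
            for group in grouped_points[b]
        ]
        for b in range(B)
    ]
    return out, sigma

# Tiny example: one cloud, two sampled points, two neighbors each, one channel.
new_pts = [[[0.0], [2.0]]]
grouped = [[[[0.0], [1.0]], [[2.0], [3.0]]]]
out, sigma = normalize_groups(new_pts, grouped)
```

With the initial values `alpha=1` and `beta=0`, the output has a root-mean-square deviation of approximately 1 around the local mean, which is the "unit variance" property described in Step 3.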

Optimized density for push–pull strategy

To adaptively adjust the values of \(\alpha_1\) and \(\beta_1\) in the affine transformation of Step 4 of the previous subsection, the point cloud density is optimized using a push–pull strategy. Let the original point cloud dataset be \(x\) with standard deviation \(\sigma_x\). After normalization, the point cloud dataset becomes \(\hat{x}\) with standard deviation \(\sigma_{\hat{x}}\). To measure the change in the density of the point cloud, the standard deviation change ratio \(\Delta\) is defined as:

$$\begin{aligned} \Delta = \frac{\sigma_{\hat{x}}}{\sigma_x} \end{aligned} \tag{9}$$

This ratio reflects the variation of the standard deviation of the point cloud data and determines the variation of the density of the point cloud.

According to the value of the standard deviation change ratio \(\Delta\), the density of the point cloud data is adjusted using the push–pull strategy shown in Fig. 4. When \(\Delta > 1\), the standard deviation increases and the density of the point cloud decreases: the normalization increases the distance between the points by pushing them apart, i.e., Eq:

where \(\alpha\) is a learnable parameter to control the increase of distance between points, \(\mu\) and \(\sigma _x\) are the mean and standard deviation of the point cloud, respectively, and \(\beta\) is an offset parameter.

In this way, the distance between the points is increased and the density is decreased, which is suitable for high-density point cloud areas. When \(\Delta < 1\), the standard deviation decreases and the density of the point cloud increases: the normalization pulls the points closer together and reduces the distance between them. When \(\Delta = 1\), the standard deviation does not change, so the normalization performs no adjustment and the density of the point cloud stays the same.
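The three cases can be illustrated with a short sketch. It assumes that \(\Delta\) is the ratio of the standard deviation after the transformation to the one before it, and that the transformation has the normalize-then-affine form \(\hat{x} = \alpha (x - \mu)/(\sigma_x + \epsilon) + \beta\) suggested by the symbols listed above; both are assumptions, as are the function names and the one-dimensional coordinates used for brevity.

```python
import math

def std(values):
    """Population standard deviation of a list of numbers."""
    m = sum(values) / len(values)
    return math.sqrt(sum((v - m) ** 2 for v in values) / len(values))

def push_pull(x, alpha, beta, eps=1e-5):
    """Apply the assumed normalize-then-affine transform and classify the result.

    Returns (delta, action), where action is 'push', 'pull', or 'none'."""
    mu = sum(x) / len(x)
    sigma_x = std(x)
    x_hat = [alpha * (v - mu) / (sigma_x + eps) + beta for v in x]
    delta = std(x_hat) / sigma_x  # assumed definition of the change ratio
    if math.isclose(delta, 1.0, rel_tol=1e-3):
        action = "none"   # spread unchanged, density unchanged
    elif delta > 1.0:
        action = "push"   # spread grows, points move apart, density drops
    else:
        action = "pull"   # spread shrinks, points move together, density rises
    return delta, action

coords = [0.0, 2.0, 4.0, 6.0]          # standard deviation is sqrt(5)
pushed = push_pull(coords, alpha=4.0, beta=0.0)
pulled = push_pull(coords, alpha=1.0, beta=0.0)
same = push_pull(coords, alpha=math.sqrt(5.0), beta=0.0)
```

Under these assumptions \(\Delta \approx \alpha / \sigma_x\), so the learnable scale directly decides whether a region is pushed apart or pulled together, while the offset only shifts the points and leaves the density unchanged.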

Inverted bottleneck global feature extraction

Global features play a crucial role in tooth segmentation tasks, significantly reducing missegmentation regions between adjacent teeth and enhancing overall segmentation accuracy and robustness. However, efficiently capturing global features while preserving key semantic information remains a major challenge in this field. Although PointNet13 does not fully consider local region features and contextual correlations, its efficiency and simplicity in global feature extraction provide important insights for our work.

Based on this, an inverted bottleneck global feature extraction structure, as illustrated in Fig. 5, is designed to guide the encoder in learning the overall features of the dental model. In this global stream, drawing inspiration from the inverted bottleneck concept, the representation capability of global features is effectively enhanced through multi-stage feature expansion and compression, combined with residual connections and max-pooling operations.

Specifically, for the input point cloud feature \(F_p \in \mathbb {R}^{N \times d_{im}}\), a preliminary dimensionality mapping is first achieved through a Multilayer Perceptron (MLP), resulting in the feature representation \(F_g \in \mathbb {R}^{N \times 64}\).

Subsequently, the feature is fed into the core module, the MLP Block, where global features are efficiently extracted via the inverted bottleneck mechanism. In the MLP Block, starting from an initial dimension of 64, the feature undergoes two dimensionality expansions and one dimensionality compression: the feature is first expanded into high-dimensional spaces of 128 and 512:

Here, \(W_1 \in \mathbb {R}^{64 \times 128}\) and \(W_2 \in \mathbb {R}^{128 \times 512}\) represent weight matrices, and \(\sigma (\cdot )\) denotes the activation function (ReLU). This process corresponds to the expansion phase of the inverted bottleneck structure.

Next, the inverted bottleneck process is completed by compressing the feature back to its original dimension of 64:

where \(W_3 \in \mathbb {R}^{512 \times 64}\) is the weight matrix for the compression stage. Finally, the global features are integrated into \(W_g^{global} \in \mathbb {R}^{N \times d_{out}}\) by the maximum pooling operation, i.e.

This inverted bottleneck structure retains the key information in the original features while capturing high-dimensional global feature relationships. It improves the efficiency and relevance of global feature extraction and reduces mis-segmented faces between adjacent teeth.
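The expansion, compression, and pooling stages described above can be sketched in plain Python as follows. Only the layer widths (64, 128, 512, 64), the ReLU activation, the residual connection, and the max-pooling come from the text. The placement of the residual connection, the absence of bias terms and normalization layers, the random weight initialization, and all function names are assumptions for illustration.

```python
import random

def linear(x, w):
    """Multiply features x ([N][d_in]) by a weight matrix w ([d_in][d_out])."""
    cols = list(zip(*w))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in x]

def relu(x):
    return [[max(0.0, v) for v in row] for row in x]

def init_weight(d_in, d_out, rng):
    """Small random weights; a stand-in for learned parameters."""
    s = 1.0 / d_in ** 0.5
    return [[rng.uniform(-s, s) for _ in range(d_out)] for _ in range(d_in)]

def inverted_bottleneck_global(f_g, w1, w2, w3):
    """f_g: [N][64] point features. Returns one 64-D global feature vector."""
    h = relu(linear(f_g, w1))    # expansion 64 -> 128
    h = relu(linear(h, w2))      # expansion 128 -> 512
    h = linear(h, w3)            # compression 512 -> 64
    # Residual connection back to the 64-D input (assumed placement).
    h = [[a + b for a, b in zip(r_in, r_out)] for r_in, r_out in zip(f_g, h)]
    # Max-pooling over the N points gives an order-independent global feature.
    return [max(col) for col in zip(*h)]

rng = random.Random(0)
w1, w2, w3 = init_weight(64, 128, rng), init_weight(128, 512, rng), init_weight(512, 64, rng)
points = [[rng.uniform(-1.0, 1.0) for _ in range(64)] for _ in range(4)]
global_feat = inverted_bottleneck_global(points, w1, w2, w3)
```

Because the pooling takes a per-channel maximum over points, the result does not depend on the order of the input points, which suits unordered point cloud data. To obtain an \(N \times d_{out}\) tensor, this vector would typically be repeated for every point before fusion with the local features; that step is not shown here.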

Experiments results and analyses

Dataset construction

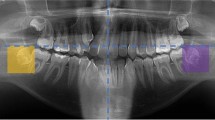

A malocclusion dataset was constructed by collecting and organizing intraoral scan data from real cases. Table 2 presents the distribution of the categories within the dataset. Because little data is available on implant screw residual roots, this category was augmented by twice the factor used for the other deformities during training. Figure 6 visualizes the malformed teeth in the dataset, showing the specific morphological characteristics of the dental anomalies. The dataset comprises 1103 half-jaw malformed dental models, covering nine major categories of anomalies: wisdom teeth, high and low drop teeth, missing teeth, erupting small teeth, diastema, dental crowding, implant screw residual roots, crown deformities, and Da Vinci-like black triangles. Among these, the wisdom teeth data are divided into four scenarios: full scans of two wisdom teeth, partial scans of two wisdom teeth, full scans of one wisdom tooth, and partial scans of one wisdom tooth. The missing teeth data include missing wisdom teeth as well as single and multiple missing teeth, with the most severe cases involving only eight remaining teeth. The erupting small teeth data primarily originate from scans collected during the tooth replacement period in children. The dental crowding data encompass various conditions such as narrow dental arches, excessive interdental spacing, and malpositioned teeth. The Da Vinci-like black triangle data reflect triangular gaps caused by gingival recession or loss of periodontal tissue.

Compared to 3DTeethSeg22 [28], the proposed dataset contains a significant number of malformed teeth. Additionally, 3DTeethSeg22 is obtained through indirect scanning and includes plaster bases. In contrast, the proposed dataset uses intraoral scanning technology, which reduces the errors introduced during the mold-making process. This technology also enables the acquisition of more precise oral structure data that are closer to real-world oral environments. Furthermore, segmenting these malformed dental models is considerably more challenging because of common manifestations of dental anomalies such as tooth crowding, misalignment, and large-scale displacement.

The original dental models contain approximately 300,000 triangular faces each and are stored in STL format. Because training resources are limited, the models are downsampled to reduce their size while preserving the main morphological features. To balance computational efficiency against geometric detail, and following the pre-processing conventions of mainstream datasets, each mesh is uniformly reduced to around 10,000 faces. Mesh Labeler (4.3.1) is used as the annotation tool, which allows target regions to be colored directly on the 3D data. To further enrich the training data, traditional augmentation methods are applied, including random rotation, translation, and scaling. The original dataset was expanded to five times its original size, with the implant screw residual roots data expanded to ten times their original size, resulting in a dataset of 5540 samples. After augmentation, the dataset is divided into training, validation, and test sets in a 6:2:2 ratio.
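The augmentation and splitting steps can be sketched as follows. This is a minimal illustration under stated assumptions: the rotation axis, the angle, shift, and scale ranges, and the function names `random_rigid_scale` and `split_622` are hypothetical, since the text names only the augmentation types and the 6:2:2 ratio.

```python
import numpy as np

def random_rigid_scale(vertices, rng, max_angle_deg=30.0, max_shift=5.0,
                       scale_range=(0.9, 1.1)):
    """Rotate about the z-axis, scale uniformly, and translate an (N, 3) vertex array.

    All ranges are illustrative defaults, not values reported in the paper.
    """
    theta = np.deg2rad(rng.uniform(-max_angle_deg, max_angle_deg))
    c, s = np.cos(theta), np.sin(theta)
    rot_z = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    scale = rng.uniform(*scale_range)
    shift = rng.uniform(-max_shift, max_shift, size=3)
    return (vertices @ rot_z.T) * scale + shift

def split_622(n_samples, rng):
    """Shuffle sample indices and split them 6:2:2 using integer arithmetic."""
    idx = rng.permutation(n_samples)
    n_train = n_samples * 6 // 10
    n_val = n_samples * 2 // 10
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

rng = np.random.default_rng(42)
mesh_vertices = rng.normal(size=(10000, 3))     # stand-in for one downsampled mesh
augmented = random_rigid_scale(mesh_vertices, rng)
train_idx, val_idx, test_idx = split_622(5540, rng)
```

For the 5540 augmented samples, this split yields 3324 training, 1108 validation, and 1108 test indices.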

Implementation details and evaluation metrics

The experiments were run on an NVIDIA A10 GPU under Linux 10 (64-bit). The software stack consists of Python 3.10.14 with PyTorch 2.3.1 and CUDA 12.1. The loss function is the Generalized Dice Loss (GDL), which is widely adopted for its robustness in multi-class segmentation tasks and its effectiveness in handling class imbalance. The optimizer is Adam, and the model is trained for 200 epochs with a batch size of 12 and an initial learning rate of 0.001. To improve training stability and efficiency, the learning rate is scheduled with the ReduceLROnPlateau strategy provided by PyTorch: the learning rate is multiplied by 0.1 if the validation metric shows no significant improvement over 7 consecutive epochs.
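To make the loss concrete, the sketch below implements the commonly used formulation of the Generalized Dice Loss, in which each class is weighted by the inverse square of its label volume. It is written in NumPy for readability and is not the authors' PyTorch code. The smoothing constant `eps` and the function name are assumptions.

```python
import numpy as np

def generalized_dice_loss(probs, onehot, eps=1e-6):
    """Generalized Dice Loss for per-cell class probabilities.

    probs:  (N, C) predicted class probabilities
    onehot: (N, C) one-hot ground-truth labels
    """
    class_volume = onehot.sum(axis=0)                 # number of cells per class
    weights = 1.0 / (class_volume ** 2 + eps)         # rare classes get larger weights
    intersect = (probs * onehot).sum(axis=0)
    union = (probs + onehot).sum(axis=0)
    dice = 2.0 * (weights * intersect).sum() / ((weights * union).sum() + eps)
    return 1.0 - dice

labels = np.array([0, 0, 1, 2])
target = np.eye(3)[labels]                            # one-hot encoding
loss_perfect = generalized_dice_loss(target, target)
loss_wrong = generalized_dice_loss(np.roll(target, 1, axis=1), target)
```

A perfect prediction gives a loss close to 0, and a prediction with no overlap gives a loss of 1. The inverse-square weighting is what lets small classes, such as rare malformation types, contribute as much to the loss as large ones.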

To validate the performance of the proposed method, the primary evaluation metrics are Overall Accuracy (OA) and mean Intersection over Union (mIoU), defined as follows:

\[ \mathrm{OA} = \frac{TP + TN}{TP + TN + FP + FN} \]

\[ \mathrm{mIoU} = \frac{1}{C} \sum_{i=1}^{C} \frac{TP_i}{TP_i + FP_i + FN_i} \]

where TP, TN, FP, and FN represent true positives, true negatives, false positives, and false negatives, respectively, the subscript \(i\) indexes the semantic category, and C denotes the total number of semantic categories.
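Both metrics can be computed from a confusion matrix, as in the following sketch. It assumes the usual multi-class convention in which OA is the fraction of correctly labeled cells and classes absent from both prediction and ground truth are skipped when averaging IoU. The function names and the toy labels are illustrative.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the true class, columns index the predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

def overall_accuracy(cm):
    """Fraction of correctly labeled cells."""
    return np.trace(cm) / cm.sum()

def mean_iou(cm):
    """Average of TP / (TP + FP + FN) over classes that occur at least once."""
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    denom = tp + fp + fn
    present = denom > 0
    return (tp[present] / denom[present]).mean()

y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0])
cm = confusion_matrix(y_true, y_pred, 3)
oa = overall_accuracy(cm)      # 4 of 6 cells are correct
miou = mean_iou(cm)            # per-class IoU: 1/3, 2/3, 1/2
```

In this toy case OA is 2/3 while mIoU is 0.5, which shows why mIoU is the stricter metric when errors concentrate in a few classes.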

Comparative experimental results

Classical point cloud segmentation methods (e.g., PointNet [13]), state-of-the-art point cloud segmentation methods (e.g., PointMLP [14] and PointeNet [16]), and algorithms specifically designed for 3D tooth segmentation (e.g., MeshSegNet [12] and MBeshegNet [15]) were systematically compared on three datasets. To ensure the fairness and reliability of the evaluation, all baseline methods were trained under identical experimental conditions, including consistent data pre-processing, hyperparameter settings, and training strategies.

Self-constructed malformed dataset

On the self-constructed dataset containing nine major malformation categories, the overall accuracy (OA) and mean intersection over union (mIoU) of the various methods are presented in Table 3, which quantitatively validates the superiority of the proposed method. From Table 3, the following observations can be made:

(1) Significant performance advantage: The proposed method significantly outperforms the other models in both overall accuracy (OA) and mean intersection over union (mIoU), demonstrating its effectiveness.

(2) Compared to the foundational method in 3D point cloud segmentation, PointNet [13], and the classical method in 3D tooth segmentation, MeshSegNet [12], the proposed method shows substantial improvements, with increases of 3.4 and 11.3 percentage points in OA, and 8.2 and 26 percentage points in mIoU, respectively.

(3) Compared to recent point cloud segmentation methods such as PointMLP [14] and PointeNet [16], and the tooth segmentation method MBeshegNet [15], the proposed method demonstrates significant advantages in both OA and mIoU. Specifically, compared to PointMLP [14] and PointeNet [16], the proposed method improves OA by 2.3 and 2.2 percentage points, and mIoU by 6.3 and 5.6 percentage points, respectively. Compared to MBeshegNet [15], the proposed method achieves improvements of 20.5 percentage points in OA and 41.2 percentage points in mIoU, demonstrating its superiority in 3D tooth segmentation tasks.

(4) To assess computational efficiency, the parameter count, inference time, and floating-point operations (FLOPs) of the proposed method are compared with those of the other methods. The proposed method achieves high segmentation performance on the self-constructed malformed dental dataset while significantly reducing computational cost. Compared to MBeshegNet [15], the computational cost is reduced by 78%. In terms of parameter count, the proposed method uses 56%, 14%, 91%, and 89% fewer parameters than PointNet [13], MeshSegNet [12], PointMLP [14], and PointeNet [16], respectively. In terms of computational cost, the corresponding reductions in FLOPs are 44%, 94%, 79%, and 78%, which improves inference efficiency and the utilization of computational resources. Segmentation performance remains high despite the reductions in parameters, computation, and inference time, indicating a favorable balance between efficiency and accuracy that suits practical 3D tooth segmentation scenarios.

Figure 7 presents the visualization of segmentation results achieved by the proposed method on different malformed tooth categories, including seven typical types of malformed teeth. Each case demonstrates its unique characteristics and the challenges encountered in the segmentation task.

The first case involves an atypical tooth type, namely the “Da Vinci black triangle” data, where gingival recession or loss of periodontal tissue leads to triangular gaps between teeth. This type of dataset significantly increases the difficulty of tooth boundary identification. The proposed method accurately captures the tooth boundaries near the gap regions, whereas other methods tend to produce erroneous segmentation patches near the gaps.

The second case represents a common distribution of 14 normal teeth. In this case, all methods except MeshSegNet [12] and MBeshegNet [15] produce satisfactory segmentation results, indicating that such tooth structures are less difficult for segmentation algorithms.

The third case involves a dentition with missing teeth, specifically the absence of the first molar and first premolar (left and right). Such dentitions are prone to incorrect semantic segmentation near the missing tooth regions. The proposed method correctly identifies the semantics of the teeth around the missing regions and avoids large-scale segmentation errors, while the other methods exhibit varying degrees of missegmentation in these areas.

The fourth case features teeth with diastema. For this type of malformed tooth, both MeshSegNet [12] and the proposed method perform well, although MeshSegNet [12] still shows some incompleteness in boundary region identification.

The fifth case involves redundant patches, which may arise from noise interference or occlusion during the scanning process. The geometric features of these redundant regions resemble those of tooth surfaces, making them highly likely to interfere with segmentation results. In this case, all methods except MeshSegNet [12] and the proposed method incorrectly identify redundant patches as tooth features. However, it should be noted that MeshSegNet [12], MBeshegNet [15], and the proposed method all exhibit incomplete identification in the crown regions of the teeth.

The sixth case pertains to abnormal dental arches and severe tooth loss. In this scenario, PointNet [13], PointMLP [14], and PointeNet [16] demonstrate relatively high segmentation performance, but still produce minor semantic errors in some adjacent tooth regions.

The seventh case involves malformed teeth with dental crowding. Due to their tight arrangement and overlapping positions, such teeth impose higher demands on the segmentation model’s ability to distinguish between them. The results show that all methods except PointeNet [16] and the proposed method exhibit large-scale semantic errors in the overlapping regions of crowded teeth. In contrast, the proposed method demonstrates stronger robustness and precision in this scenario.

Teeth3DS dataset

The Teeth3DS dataset is the first publicly available dataset focused on 3D tooth segmentation and annotation, created during the 3DTeethSeg challenge at the 2022 International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). Teeth3DS [28] consists of 1800 intraoral scans. In the dataset, normal dental occlusion accounts for 51%, mild to moderate malocclusion constitutes 33%, and severe malocclusion represents only 1%. The types of malocclusion include wisdom teeth, uneven tooth levels, high and low drop teeth, missing teeth, erupting small teeth, diastema, and mild crowding. Among the cases of missing teeth, mild cases account for 95%. In this study, the 1800 samples are divided into training, validation, and test sets in a 6:2:2 ratio. The original format of the dataset is OBJ, accompanied by corresponding label files in JSON format. Since the self-constructed dataset uses the VTP format, the OBJ files are converted to VTP format for unified processing. The OA and mIoU of the various methods are presented in Table 4.

As shown in Table 4, since the dataset primarily consists of normal tooth data, all methods except MeshSegNet [12] and MBeshegNet [15] demonstrate satisfactory segmentation performance. However, the proposed method achieves the best OA and mIoU.

The visualization of segmentation results for the various methods on this dataset is presented in Fig. 8. Because the dataset mainly contains normal tooth samples, four typical cases were selected from the severe malformation cases for comparative analysis. The results indicate the following. In Case 1 (erupting small teeth), all methods except MeshSegNet [12] and MBeshegNet [15] show good segmentation performance, producing only a small number of redundant patches. In Case 2 (missing second molar and first premolar), the proposed method accurately identifies the semantic information of the missing regions, while the other methods generally exhibit erroneous patches in adjacent teeth. In Case 3 (misaligned left dentition with a depressed second molar), despite the increased segmentation difficulty due to the abnormal tooth curvature, the proposed method still demonstrates superior performance, with only minor incomplete identification in the crown region of the second molar. In Case 4 (diastema with missing second molar), MBeshegNet [15] shows large-scale recognition errors and MeshSegNet [12] exhibits incomplete identification of incisors, while the proposed method maintains stable segmentation performance.

The Teeth3DS dataset generally includes samples with plaster base structures, which significantly increases the complexity of the segmentation task, particularly in the precise identification of tooth boundary regions. Nevertheless, the experimental results demonstrate that the proposed method exhibits superior segmentation performance across various malformed tooth cases, fully validating its adaptability and robustness in complex scenarios.

3D-IOSSeg dataset

The 3D-IOSSeg dataset [29] is a fine-grained tooth segmentation dataset containing 180 intraoral scan samples, with 120 samples in the training set and 60 samples in the test set. Each sample is annotated at the level of individual mesh units and covers various dental anomalies such as missing teeth, overlapping, misalignment, and malocclusion. The dataset also covers a wide age range of the population (0–50 years). Due to the small size of the dataset, the training set was augmented to 3600 samples, while the test set remained the original 60 samples. The original data format is PLY, which was converted to VTP format to maintain consistency with the self-constructed dataset. The OA and mIoU of the various methods are presented in Table 5.

As shown in Table 5, the proposed method achieves the best performance in both OA and mIoU, with values of 90.1% and 86.8%, respectively. The proposed method outperforms the second-best PointMLP [14] by 1.3 percentage points in OA and PointeNet [16] by 2.5 percentage points in mIoU.

The visualization of segmentation results for various methods on this dataset is presented in Fig. 9. It can be observed that: In Case 1 (missing first premolar), other methods produce erroneous patches of the first premolar in the second premolar region, while only the proposed method achieves accurate segmentation. In Case 2 (dental crowding with wisdom teeth), although all methods fail to identify the left wisdom tooth due to the scarcity of wisdom tooth samples, the proposed method demonstrates significantly higher segmentation accuracy in the crowded tooth region compared to other methods. In Case 3 (diastema with missing teeth), the proposed method effectively avoids the erroneous patches commonly seen in other methods, exhibiting stronger robustness.

Ablation experimental results

To evaluate the effectiveness of the normalization method and the push–pull strategy, we designed a series of ablation experiments. By comparing model performance under different configurations, we conducted an in-depth analysis of the impact of each component on the overall performance of the model.

Effectiveness of normalization

First, ablation experiments were conducted on the normalization method to assess the impact of local mean, global mean, local standard deviation, and global standard deviation on model performance. The experimental results are shown in Table 6. When feature extraction is performed directly after FPS and k-NN without any normalization, the OA and mIoU are 5 and 7.5 percentage points lower, respectively, than those of the proposed method. When global mean and local standard deviation are used for normalization, the OA and mIoU are 2.2 and 4.1 percentage points lower, respectively. When local mean and global standard deviation are used, the OA and mIoU reach 96.6% and 90.8%, respectively. This indicates that the local mean better captures the detailed features of local regions and adapts to the non-uniform distribution of point cloud data, while the global standard deviation provides a stable normalization baseline. The combination preserves local details while improving global consistency, thereby enhancing the overall performance of the model.

To validate the effectiveness of the push–pull strategy, we compared the model performance with and without it. The experimental results are shown in Table 7. Without the push–pull strategy, \(\alpha _1\) and \(\beta _1\) were fixed at 1 and 0, respectively. With the push–pull strategy, \(\alpha _1\) and \(\beta _1\) were adjusted dynamically according to the rate of change of the standard deviation. The model’s OA and mIoU improved from 89.4% and 81.1% to 96.6% and 90.8%, respectively. This significant improvement demonstrates the effectiveness of the push–pull strategy in adjusting point cloud density and optimizing feature distribution.
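The normalization ablated above can be sketched in NumPy as follows. This is an illustrative reading, not the authors' implementation: it assumes that the local mean is taken per k-NN neighborhood, that the global standard deviation is a single scalar computed over all centered neighborhoods, and that \(\alpha _1\) and \(\beta _1\) act as a scale and shift. The defaults `alpha=1.0` and `beta=0.0` correspond to the fixed setting used without the push–pull strategy. The dynamic update rule is not reproduced here.

```python
import numpy as np

def local_mean_global_std_norm(grouped, alpha=1.0, beta=0.0, eps=1e-5):
    """Normalize grouped point features with a local mean and a global std.

    grouped: (G, k, d) array of G neighborhoods (e.g. from FPS + k-NN),
             each holding k neighbors with d features.
    alpha, beta: scale and shift; fixed here, dynamic in the push-pull strategy.
    """
    local_mean = grouped.mean(axis=1, keepdims=True)   # (G, 1, d), one mean per neighborhood
    centered = grouped - local_mean                    # removes each neighborhood's offset
    global_std = centered.std()                        # one scalar shared by all neighborhoods
    return alpha * centered / (global_std + eps) + beta

rng = np.random.default_rng(0)
# Hypothetical input: 256 neighborhoods, 24 neighbors each, xyz coordinates
groups = rng.normal(loc=3.0, scale=2.0, size=(256, 24, 3))
normalized = local_mean_global_std_norm(groups)
```

Sharing one standard deviation across neighborhoods keeps their relative spread comparable, so dense and sparse regions remain distinguishable after normalization, whereas a per-neighborhood standard deviation would rescale every neighborhood to the same spread.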

Effectiveness of global inverted bottleneck

To evaluate the impact of the inverted bottleneck global feature extraction stream on network performance, network structures with and without this stream were compared. The experimental results are shown in Table 8. After incorporating the stream, the OA increased from 92.6% to 96.6%, an improvement of 4 percentage points, and the mIoU improved from 85.1% to 90.8%, an increase of 5.7 percentage points. This improvement demonstrates that the inverted bottleneck global feature extraction stream can effectively capture global contextual information in point cloud data, reduce redundant features, and aggregate multi-scale geometric features. Additionally, this design enables the extraction of global semantic feature distributions in dental models and the mining of long-range dependencies, thereby yielding higher performance and robustness in segmentation tasks.

Discussion

This paper proposes a hierarchical feature-guided U-shaped 3D dental model segmentation method. In the feature-guided deep encoder, the normalization process combining local mean and global standard deviation, along with the push–pull strategy for optimizing point cloud density, effectively enhances the discriminative ability of malformed tooth features. Additionally, the designed inverted bottleneck global feature extraction stream further guides the encoder to learn the overall features of the dental model, improving the model’s recognition of global features in malformed teeth. Experimental results demonstrate that the proposed method excels in segmentation accuracy, speed, and stability, meeting the practical needs of the orthodontic field for efficient and precise segmentation technology.

Although the proposed method outperforms the compared methods, it still has limitations. The long-tailed distribution of the dataset leads to inadequate identification of wisdom teeth, misidentification of implant screw residual roots as teeth, and incomplete identification of terminal crowns in some of the data. To address these limitations, future research will focus on two aspects: enhancing the model’s recognition of low-frequency categories by introducing a class-weighted loss function, and adopting generative adversarial networks (GANs) to strengthen the model’s robustness against differences in data distribution. Meanwhile, the proposed method will be integrated into the orthodontic workflow through algorithmic interfaces, and scanner variability will be mitigated via data standardization in preprocessing. Deep integration with extended reality technology [30] should also be explored to develop a more anatomically specific dental training system. These improvements are expected to enhance the accuracy and stability of the model across tooth types, while expanding its clinical value and providing more reliable technical support for digital orthodontic treatment.

Data availability

The datasets analyzed in the paper are sourced from the Teeth3DS dataset: https://github.com/abenhamadou/3DTeethSeg22_challenge and the 3D-IOSSeg dataset: https://github.com/MIVRC/Fast-TGCN. The acquisition address of the Mesh Labeler is: https://github.com/Tai-Hsien/Mesh_Labeler/blob/main/README.md.

References

Ma, T., Li, J., Dang, Z., Li, Y. & Li, Y. A dual-stream dental panoramic X-ray image segmentation method based on transformer heterogeneous feature complementation. Technologies 13, 293. https://doi.org/10.3390/technologies13070293 (2025).

Ma, T., Zeng, Y., Pei, W., Li, C. & Li, Y. Tooth position prediction method based on adaptive geometry optimization. PLoS One 20(7), e0327498. https://doi.org/10.1371/journal.pone.0327498 (2025).

Wu, K., Chen, L., Li, J. & Zhou, Y. Tooth segmentation on dental meshes using morphologic skeleton. Comput. Graph. 38, 199–211 (2014).

Yaqi, M. & Zhongke, L. Computer aided orthodontics treatment by virtual segmentation and adjustment. In 2010 International Conference on Image Analysis and Signal Processing, 336–339 (IEEE, 2010).

He, T. et al. Geonet: Deep geodesic networks for point cloud analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6888–6897 (2019).

Hwang, J., Park, S., Lee, S. & Shin, Y.-G. Robust harmonic field based tooth segmentation in real-life noisy scanned mesh. In Medical Imaging 2019: Image Processing, vol. 10949, 599–605 (SPIE, 2019).

Wongwaen, N. & Sinthanayothin, C. Computerized algorithm for 3D teeth segmentation. In 2010 International Conference on Electronics and Information Engineering, vol. 1, V1–277 (IEEE, 2010).

Xu, X., Liu, C. & Zheng, Y. 3D tooth segmentation and labeling using deep convolutional neural networks. IEEE Trans. Vis. Comput. Graph. 25, 2336–2348 (2018).

Zanjani, F. G. et al. Mask-MCNet: Tooth instance segmentation in 3D point clouds of intra-oral scans. Neurocomputing 453, 286–298 (2021).

Li, Y. et al. PointCNN: Convolution on x-transformed points. Adv. Neural Inf. Process. Syst. 31 (2018).

Sun, D. et al. Automatic tooth segmentation and dense correspondence of 3D dental model. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, October 4–8, 2020, Proceedings, Part IV 23, 703–712 (Springer, 2020).

Lian, C. et al. Deep multi-scale mesh feature learning for automated labeling of raw dental surfaces from 3D intraoral scanners. IEEE Trans. Med. Imaging 39, 2440–2450 (2020).

Qi, C., Su, H., Mo, K. & Guibas, L. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2017).

Ma, X., Qin, C., You, H., Ran, H. & Fu, Y. Rethinking network design and local geometry in point cloud: A simple residual MLP framework. arXiv preprint arXiv:2202.07123 (2022).

Li, Z., Liu, T., Wang, J., Zhang, C. & Jia, X. Multi-scale bidirectional enhancement network for 3D dental model segmentation. In 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), 1–5 (IEEE, 2022).

Gu, L. et al. Pointenet: A lightweight framework for effective and efficient point cloud analysis. Comput. Aid. Geom. Des. 110, 102311 (2024).

Zhao, Y. et al. 3D dental model segmentation with graph attentional convolution network. Pattern Recogn. Lett. 152, 79–85 (2021).

Zhang, L. et al. TSGCNet: Discriminative geometric feature learning with two-stream graph convolutional network for 3D dental model segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6699–6708 (2021).

Zhao, Y. et al. Few sampling meshes-based 3D tooth segmentation via region-aware graph convolutional network. Expert Syst. Appl. 252, 124255 (2024).

Guo, M.-H. et al. PCT: Point cloud transformer. Comput. Vis. Med. 7, 187–199 (2021).

Xiong, H. et al. Tformer: 3D tooth segmentation in mesh scans with geometry guided transformer. arXiv preprint arXiv:2210.16627 (2022).

Xiong, H. et al. Tsegformer: 3D tooth segmentation in intraoral scans with geometry guided transformer. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 421–432 (Springer, 2023).

Zhang, L. et al. Tsgcnet: Discriminative geometric feature learning with two-stream graph convolutional network for 3D dental model segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6699–6708 (2021).

Ma, T., Yang, Y., Zhai, J., Yang, J. & Zhang, J. A tooth segmentation method based on multiple geometric feature learning. In Healthcare, vol. 10, 2089 (MDPI, 2022).

Lin, Z. et al. DBGANet: Dual-branch geometric attention network for accurate 3D tooth segmentation. IEEE Trans. Circuits Syst. Video Technol. 34, 4285–4298 (2023).

Jin, H., Shen, Y., Lou, J., Zhou, K. & Zheng, Y. TSRNet: A dual-stream network for refining 3D tooth segmentation. IEEE Transactions on Visualization and Computer Graphics (2024).

Zheng, S., Pan, J., Lu, C. & Gupta, G. Pointnorm: Dual normalization is all you need for point cloud analysis. In 2023 International Joint Conference on Neural Networks (IJCNN), 1–8 (IEEE, 2023).

Ben-Hamadou, A. et al. 3DTeethSeg’22: 3D teeth scan segmentation and labeling challenge. arXiv preprint arXiv:2305.18277 (2023).

Li, J. et al. A fine-grained orthodontics segmentation model for 3D intraoral scan data. Comput. Biol. Med. 168, 107821 (2024).

Toni, E., Toni, E., Fereidooni, M. & Ayatollahi, H. Acceptance and use of extended reality in surgical training: An umbrella review. Syst. Rev. 13, 1–28 (2024).

Acknowledgements

This work was supported by the National Key R&D Program of China (No. 2022ZD0119005), and in part by the Shaanxi Natural Science Fundamental Research Program Project (No. 2022JM-508).

Author information

Contributions

Tian Ma and Xiaoyuan Wei wrote the main content, Jiechen Zhai provided criticism and editing, and the remaining members reviewed and edited the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ma, T., Wei, X., Zhai, J. et al. Feature-guided multilayer encoding–decoding network for segmentation for 3D intraoral scan data. Sci Rep 15, 32129 (2025). https://doi.org/10.1038/s41598-025-16360-3
