Abstract

Over the past decades, the use of fiber-reinforced polymers (FRPs) for retrofitting existing structures has become a widespread practice. More recently, however, the use of FRP bars as the primary reinforcement in new structures—especially concrete columns—has attracted global attention. Various equations have been proposed to predict the compressive strength of columns reinforced with FRP bars. However, these equations typically estimate axial strength without directly accounting for eccentricity. In this study, additional important parameters are considered: eccentricity of axial load, types of longitudinal and transverse reinforcement, and column height. To this end, a dataset of 525 samples is compiled, and machine learning methods—including artificial neural networks (ANN), gene expression programming (GEP), the group method of data handling (GMDH), and multiple linear regression (MLR)—are employed. Among these, ANN yielded the best predictive performance with an R2 value of 0.974. Using the GEP, GMDH, and MLR methods, three predictive equations are proposed. Of these, the GMDH and GEP approaches demonstrate relatively high accuracy, with R2 values of 0.966 and 0.942, respectively. The proposed equations can be used to predict the strength of reinforced concrete (RC) columns under axial loading with or without eccentricity, for various cross-sectional shapes and different types of longitudinal and transverse reinforcement (steel and FRP).

Similar content being viewed by others

Introduction

With the emergence of FRP bars and their adoption in the construction industry, numerous studies have investigated their performance in various structural members. Among these, columns have received particular attention as one of the most critical structural elements.

The use of longitudinal and transverse reinforcement made of GFRP in columns, subjected to concentric and eccentric loading, has been investigated by numerous researchers 1,2,3,4. Hosseini and Sadeghian 5 proposed a deformability index by studying different configurations of transverse reinforcement, such as bar diameter and overlap length. Hadi et al. 6 examined various loading conditions in these columns and reported that neglecting the contribution of FRP bars to compressive strength leads to a significant discrepancy between analytical and experimental results. Due to the limited number of studies on the behavior of columns reinforced with BFRP bars, various parameters—including the percentage of longitudinal reinforcement, eccentricity, stirrup spacing, and stirrup diameter—have been investigated. These were compared with the corresponding parameters in similar columns reinforced with GFRP and steel bars 7,8,9,10. Researchers have also evaluated the incorporation of fibers in concrete for columns reinforced with GFRP bars 11,12. Alanazi et al. 13 further studied the effect of using steel tubes in GFRP-reinforced columns.

With numerous experimental studies conducted on columns reinforced with FRP, some researchers have proposed design equations to reduce the need for costly and repetitive experimental work 4,14,15. With the rise of neural networks and the advancement of machine learning, various models and equations have since been developed to predict the behavior of structural elements. Machine learning employs different techniques for data classification and prediction and is capable of learning relatively complex relationships from large volumes of information and data 16,17. To date, machine learning methods have been used to predict various characteristics of columns, including the compressive strength of concrete-filled steel tube (CFST) columns 18,19,20, columns confined with FRP wraps 21,22,23,24,25,26,27,28,29,30, the bond strength between FRP bars and concrete 31,32,33, and the strength of columns reinforced with FRP bars 34,35,36,37,38,39.

In the context of strength prediction for FRP-reinforced columns using machine learning, Arora et al. 40 applied an artificial neural network (ANN) to estimate the axial capacity of FRP-reinforced concrete columns and compared the results with 14 existing analytical models. All 242 data points used in their study involved columns under concentric loading; eccentric loading was not considered. Performance indices indicated that the proposed ANN model achieved the highest accuracy among the models, with a correlation coefficient (R) of 0.9758. Zhang et al. 41 investigated the behavior of hollow concrete columns (HCCs) using machine learning techniques such as light gradient boosting (LGB), extreme gradient boosting (XGB), and categorical gradient boosting (CGB). Among these, the CGB model demonstrated the highest accuracy, with all models showing less than 10% error compared to experimental results. Cakiroglu et al. 37 compiled 117 experimental data points to develop machine learning models including Random Forest, Kernel Ridge Regression, Categorical Gradient Boosting, Support Vector Machine, Gradient Boosting Machine, Lasso Regression, Adaptive Boosting, and Extreme Gradient Boosting. All columns in their dataset were subjected to concentric loading. Among the models tested, Gradient Boosting Machine, Random Forest, and XGBoost delivered the highest predictive accuracy. Tarawneh et al. 42 used an artificial neural network to design slender FRP-reinforced columns. Their model incorporated a factor to account for slenderness and introduced slenderness curves to support the design process. They also proposed a genetic expression programming (GEP)-based equation to predict the slenderness ratio, concluding that incorporating this ratio reduced the correlation between axial strength and slenderness. Balili et al. 36 proposed neural network and theoretical models to estimate the axial capacity of GFRP-reinforced columns incorporating glass fibers, using a dataset of 275 samples. The maximum errors reported for the neural network and theoretical models were 1.9% and 3.2%, respectively. Huang et al. 38 used 151 experimental data points on FRP-reinforced concrete columns and concluded that the backpropagation artificial neural network (BP-ANN) outperformed other mathematical models in terms of accuracy and minimized computational error.

Several equations have been proposed to predict the axial strength of FRP-reinforced concrete columns. Some of these are recommended by established design guidelines, while others have been developed by individual researchers. Certain equations account for the contribution of FRP bars to the axial strength of columns; however, several codes conservatively neglect this contribution. This conservative approach is based on experimental findings showing that FRP bars typically sustain only about 5% to 10% of the column’s maximum load 3. Table 1 presents a selection of well-known equations reported in the literature.

As can be seen, CSA-S806-12 does not account for the contribution of FRP bars to the axial strength of columns, whereas other equations do consider this component. In these equations, \(P_{n}\) is the nominal axial strength of column, \(A_{g}\) is the gross sectional area of columns, \(A_{FRP}\) is sectional area of FRP bars, \(E_{FRP}\) is the modulus of elasticity of FRP bars, \(\varepsilon_{fg}\) and \(\varepsilon_{p}\) are both the ultimate strain of FRP bars and \(f^{\prime}_{c}\) is the compressive strength of concrete. The structure of these equations is generally similar; the main differences lie in the values of the coefficients used.

A review of existing models and equations for estimating the compressive strength of FRP-reinforced concrete columns reveals that none directly account for the effects of bending (i.e., load eccentricity), column height, or the type and characteristics of the transverse reinforcement. Therefore, this study aims to develop more comprehensive equations that incorporate all of these parameters.

Objectives and outline of the research

An evaluation of the existing studies on FRP-reinforced columns reveals certain ambiguities and important limitations. One key limitation is the lack of direct consideration of the effect of eccentricity on the compressive strength of FRP-reinforced columns. Furthermore, in the reported studies, FRP bars have typically been used simultaneously for both longitudinal and transverse reinforcement. As a result, combinations involving FRP bars as transverse reinforcement and steel bars as longitudinal reinforcement—and vice versa—have not been thoroughly examined.

This study aims to address these gaps by proposing comprehensive formulas to predict the axial capacity and flexural strength of columns reinforced with FRP bars. The proposed formulas account for eccentricity, the shape of the column cross-section, and the use of FRP bars in either longitudinal and/or transverse reinforcement.

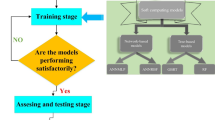

Figure 1 illustrates the fundamental steps undertaken in this study. First, a dataset of 525 samples was compiled, consisting of 12 input parameters and one output. In the next step, Pearson correlation coefficients were used to evaluate the relationships between input variables and their correlation with the output parameter. Additionally, cumulative frequency plots were generated.

Four modeling approaches were employed: artificial neural networks (ANN), gene expression programming (GEP), the group method of data handling (GMDH), and multiple linear regression (MLR). Among these, three methods yielded predictive equations. The performance of the proposed models was then evaluated and compared with existing equations using standard efficiency metrics to identify the most accurate approach for predicting the axial capacity of FRP-reinforced columns.

Datasets of the study

Collection of experimental data

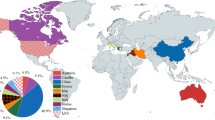

A total of 525 datasets were collected from 66 reported experimental studies 1,2,4,6,14,15,36,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103. The data include columns with various cross-sectional shapes, such as circular, square, and rectangular sections. These columns are reinforced with longitudinal FRP bars, transverse FRP bars, or both. Both concentric and eccentric loading conditions are considered, and the transverse reinforcement consists of either ties or spirals. The experimental data cover a wide range of parameters, with the maximum axial load (Pmax) serving as the output variable. Table 11 summarizes the test references, number of specimens, column shapes, materials of longitudinal and transverse reinforcements, and load eccentricity.

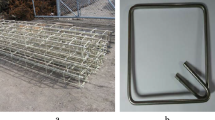

A schematic view of the experiment and the parameters considered as inputs are shown in Fig. 2. Twelve input parameters are taken into account, which are listed alongside the output variable in Table 2. The predictive models in this study were validated using a conventional train/test split approach: 70% of the dataset (367 data points) was used for training, while the remaining 30% (158 data points) was reserved for testing.

Table 3 presents the statistical characteristics of the dataset, including the maximum, minimum, mean, standard deviation, and coefficient of variation for each parameter, based on 525 selected data points.

Figure 3 presents the correlation matrix of the collected datasets, based on the Pearson correlation coefficient 104. This matrix illustrates the relationships between the various parameters. For the selected dataset, the correlation between the input variables and Pmax ranges from –0.48 to 0.65.

The highest correlation is observed between \((A_{g} ,P_{max} )\), \((A_{g} ,A_{t} )\) , and \((A_{g} ,A_{f} )\) , with a coefficient of 0.65. This indicates that an increase in will have the most significant positive impact on Pmax compared to the other parameters. The second most influential parameter is (the cross-sectional area of the stirrup), with a correlation coefficient of 0.59. According to the figure, the e/D ratio exhibits the strongest negative correlation with Pmax, at –0.48. This means that as the e/D ratio increases, Pmax decreases, and among all parameters, this ratio has the greatest negative influence on the compressive strength of the column.

Figure 4 presents the distribution of data points across various ranges for each input parameter, along with their corresponding cumulative percentages. As shown in Fig. 4a, the majority of columns in the dataset have cross-sectional areas smaller than 5000 mm2, and approximately 90% of the columns have areas less than 10,000 mm2. Beyond this threshold, the number of data points declines sharply.

Figure 4b illustrates that most of the columns have heights ranging between 1000 and 2000 mm, followed by a smaller but notable portion with heights under 1000 mm. Columns exceeding 2000 mm in height comprise only about 5% of the total dataset. Regarding concrete compressive strength, no column was constructed with strength below 20 MPa. Approximately 60% of the columns fall within the 20–40 MPa range. A total of 131 columns have compressive strengths between 40 and 80 MPa, and 62 of these fall within the 60–80 MPa subset. Columns with compressive strengths above 80 MPa account for only about 3% of the dataset. In terms of longitudinal reinforcement, nearly 90% of the columns have reinforcement areas less than 2000 mm2, with only 10% exceeding this value. The yield strength of both longitudinal and transverse reinforcement bars shows a similar distribution. The majority of samples have reinforcement yield strengths between 500 and 1500 MPa, followed by a significant portion in the 1500–2000 MPa range. The elastic modulus of the longitudinal reinforcement is less than 50 GPa in approximately 36% of the dataset, whereas most samples fall within the 50–100 GPa range. A similar trend is observed for the transverse reinforcement bars. The distribution of load eccentricity, shown in Fig. 4i, indicates that about 70% of the samples either have no eccentricity or exhibit eccentricity less than 0.2 times the section height (a total of 369 samples). The next most frequent group includes samples with an e/D ratio between 0.2 and 0.4. Overall, 54% of the samples are subjected to concentric loading, while 46% are eccentrically loaded. As shown in Fig. 4j, approximately 70% of the columns have stirrup spacing less than 100 mm. For 25% of the samples, spacing falls between 100 and 200 mm, while only about 5% have stirrup spacing greater than 200 mm. The cross-sectional area of transverse reinforcement bars is less than or equal to 200 mm2 in 84% of the dataset. Finally, regarding stirrup type (Fig. 4l), only four columns were constructed without stirrups. Conventional stirrups were used in 278 samples, while spiral stirrups were used in 243 samples.

Methodology

Artificial neural network

Neural networks are composed of simple operational elements that work together in parallel. These elements are inspired by biological nervous systems. In nature, the functionality of neural networks is determined by the way components are connected.

After configuring or training the neural network, applying a specific input to it results in a specific output. As shown in the Fig. 5, the network adjusts based on the correspondence and alignment between the input and the target until the network’s output matches our desired output (target). Generally, a large number of these input–output pairs are used in this process, referred to as supervised learning, to train the network.

How neural network works redrawn from 105.

In the Fig. 6, a model of neuron with a single input is shown. The input p is applied to the neuron and is weighted by multiplying it by the weight “w”, resulting in a weighted input that is then fed into the transfer function f to produce the final output. By adding a bias to the neuron structure, a biased neuron is created. The bias input is a constant value (l), and the bias value is added to the product “w.p”, effectively shifting the function to the left.

The model of a neuron with one input redrawn from 105.

At least one neuron and sometimes more, make up a network layer. A neural network has at least the input layer, the hidden layer and the output layer. All of the layers in the network consist of nodes or neurons that work like the ones in the brain. The number of nodes in each layer varies with both how many features there are in the input and what the model is expected to do. The input layer takes external data, then sends it to the following layer so that various computations can be performed. This layer mainly receives data, moves it forward and processes nothing itself. Such a layer is able to receive text, sound and visual data. The hidden layer which lies between the input and output layers, is vital for the model. A neural network is built with at least one hidden layer and extra ones are included if the problem is more difficult. Putting in extra layers makes the network bigger and more complex to calculate. They take care of processing the data that was inputted from the layer before. The last layer, known as the output layer, computes the result that the network will give. Basically, it gets input from the hidden layers, works on it and produces the output that will be used 106.

In this study, two layers were considered for the network, including the hidden layer and the output layer. In order to optimize and select the best network, different neurons from 1 to 20 were selected and trained for the hidden layer. The results of mean square error and values of R for networks with various neurons are depicted in Figs. 7 and 8. The network type was selected as feed-forward backprop. Training and adaption learning functions were selected as TRAINLM and LEARNGDM, respectively. The performance function is also considered MSE. Transfer functions for hidden and output layers were considered tan-sigmoid and pure linear, respectively. For the selection of the best network, the amounts of MSE and R were considered (the lowest amount of MSE and the closest amounts of R to one). As can be seen in Figs. 7 and 8, the network with the number of 12 neurons in the hidden layer was considered the optimum network. The schematic view of the best network is shown in Fig. 9.

The training process stops if the validation set error increases for six consecutive iterations. In Fig. 10, related to the optimized network, it is shown that this stopping occurred at iteration 22. Also, no overfitting occurred until iteration 16, where the best performance on the validation set was achieved.

Multiple linear regression

A statistical model known as “linear regression” calculates the linear relationship between a scalar response (dependent variable), and one or more explanatory variables (regressors or independent variables). Multiple linear regression refers to the procedure where there are more than one explanatory variable while simple linear regression deals with just one 107. In multiple linear regression, the parameters of a linear model are estimated with the help of an objective function and variable values. In linear regression, the considered model is a linear relationship in terms of model parameters. For the calculation of multiple linear regression, the following formula is used:

For every observation \(i = 1,2,.....,n\)

The number of observations of dependent and independent variables are considered n and p, respectively.

Consequently, \(y_{i}\) is the ith observation of dependent variable. The jth independent variable’s ith observation is denoted as \(x_{ij}\) (\(j = 1,2,.....,p\)). \(\beta_{0}\) is the constant term (y-intercept), \(\beta_{p}\) is the slope coefficients for each explanatory variable, whereas the ith independent identically distributed normal error is denoted by \(\varepsilon_{i}\). As is evident, this is more than just a straightforward line equation. In actuality, it’s a hyperplane Eq. 108.

In this study, the training and testing data consist of 70 and 30 percent of the whole data, respectively. After training the model, the coefficient of each input parameter and value of intercept were determined and the final equation for estimation of columns strength based on the MLR method is as follow:

Evaluation metrics for MLR for training and testing data are computed and presented in Table 4.

Group method of data handling

Group method of data handling (GMDH) was invented by Professor Alexey G. Ivakhnenko in 1968 109. An external test criterion is used by these GMDH algorithms to search for and choose the fittest polynomial equation. With a second-order polynomial, the ANN-GMDH method builds a group of neurons. The approximate function \(\mathop f\limits^{ \wedge }\) with the output \(\mathop y\limits^{ \wedge }\) produced by combining the second-order polynominals of all neurons, is proposed for a set of inputs \(x = \left\{ {x_{1} ,x_{2} ,x_{3} ,....} \right\}\) in order to make the least error with the real output (y). So y can be described as follows for M experimental data with n output and one target:

The output variables which are predicted by GMDH can be described for any input vector of \(X = (x_{i1} ,x_{i2} ,x_{i3} ,....,x_{in} )\), as follows:

Minimizing the square of error between y and \(\mathop y\limits^{ \wedge }\) should be done by the ANN-GMDH method, Put differently:

The connection between the variables that are input and output which is known as the polynomial Ivakhnenko, can be represented in the following way using the polynomial function:

This polynomial with two variables can be presented as follows:

Regression techniques are used to identify the unknown coefficients \(a_{i}\) in order to minimize the discrepancy between the computed values and the actual output for each input variable \(x_{i}\) and \(x_{j}\). It is possible to adapt the inputs to all pairings of input–output sets optimally by obtaining coefficients for each function G (i.e., each created neuron) that minimize the total neuron error.

In this study, with the aim of using the GMDH method to predict data, GMDH Shell software (GMDH Shell DS 3.8.9) 110 was used. Furthermore, this software drastically cuts down on computation time by not requiring any prior data standardization. Using neural networks, it can create a candidate model with high prediction strength by identifying and considering non-linear interactions and connections between data. Additionally, it can provide a useful equation to predict desirable outputs. After running the software with the input and output data, the computed parameters of the software are presented in Table 5. In Fig. 11, the predicted values by the GMDH shell and their distance from the actual values are shown. The proposed formula by GMDH Shell is presented in Eq. 8.

The parameters defined as model inputs, which are listed in Table 3, are also considered as inputs for the formula. To use Eq. 8, the input parameters are substituted into the formula, and the mathematical operations are performed in the following order: exponentiation, square root, multiplication, division, addition, and subtraction. To simplify the formula, Eq. 8 is divided into four parts, which are then summed together. Accordingly, the parameters \(f_{1} ,f_{2} ,f_{3} \,\,\,and\,\,f_{4}\) are first calculated in sequence and finally added together to obtain the desired compressive strength. \(f_{1} ,f_{2} ,f_{3} \,\,\,and\,\,f_{4}\) are defined in the appendix.

In Fig. 12, the effect of each input parameter on output can be seen. Gross area of cross section, area of transverse reinforcement, and area of longitudinal bars are the most effective parameters with direct effect, so increasing these parameters will increase the compressive strength of the column, while the “e/D” parameter has the most negative effect on the output, so by increasing this parameter, the compressive strength of the column will decrease. This figure also shows that fyl, Et, and S are other parameters with adverse impacts on the axial capacity of the column.

Gene expression programming

Gene Expression Programming is an algorithm for creating mathematical models and computer programs that are based on evolutionary computations and drawn from the principles of natural evolution. Ferreira created this technique in 1999, and it was formally unveiled in 2001 111. To address the shortcomings of the first two genetic algorithms, the GEP algorithm really combines the dominant viewpoints of the two. In this method, the chromosomes’ phenotype—a tree structure with varying length and size—is similar to the genetic programming algorithm, while their genotype has a linear structure similar to the genetic algorithm. Therefore, the GEP algorithm, by overcoming the limitation of the dual role of chromosomes in the previous algorithms, provides the possibility of applying multiple genetic operators with the guarantee of the permanent health of the child chromosomes, and with a speed faster than genetic programming (GP) due to the higher structural diversity than genetic algorithm (GA), it expands the space of possible answers to search more thoroughly. From this perspective, GEP has really been successful in surpassing the first and second thresholds—the replicator threshold and the phenotype threshold—assumed in natural evolution processes.

With this approach, a collection of functions and terminals are used to model different phenomena. The primary arithmetic functions (division, multiplication, addition, and subtraction), trigonometric functions, and other mathematical functions (sin, cos, x2, exp, etc.) or functions defined by user that may be appropriate for the model’s elucidation typically make up a set of functions. Constant values and the independent variables in the problem make up the set of terminals. This approach typically relies on a genetic algorithm to select data points based on the merit function, using the population of data as a source. Certain operators (genes) are also used to perform genetic variations. Unlike GA and GP, GEP uses multiple genetic operators to replicate data simultaneously. The renowned Roulette wheel is used to choose the data. The purpose of duplication is to store multiple relevant pieces of data from one generation to the next. The mutation operator aims to optimize the targeted chromosomes internally through random optimization.

A chromosome, which may include one or more genes, represents an individual in GEP. A single-gene chromosome is referred to as a monogenic chromosome, while a chromosome made up of several genes is called a multigenic chromosome. A multigenic chromosome is particularly helpful for breaking down difficult problems into smaller, more manageable components, each of which is encoded by a different gene. This allows for the modular creation of intricate, hierarchical structures. Every gene in a multigenic chromosome is translated into a sub-ET (expressed tree), and the sub-ETs are usually combined into one by a linking function, also known as a linker 112,113. For modeling, some parameters such as the number of chromosomes, head size, number of genes, and linking function that are considered in this study are presented in Table 6. In this table, all the parameters are considered default except head size, chromosomes, and genes. Head size and genes should be determined before modeling, but the number of chromosomes can be changed during training to improve the accuracy of the model. After modeling with different head sizes and chromosomes, these parameters are selected as 14 and 4, respectively. The number of chromosomes is also changed between 50 and 500 to improve the accuracy of the model. Root mean square error (RMSE) is also considered for the type of fitness function of error.

After modeling, the values predicted by GEP along with their experimental values are presented in Figs. 13a and b for training and testing data, respectively. In these figures, the difference between the actual and estimated values of each data is relatively small. Figures 13a and b confirm the accuracy and capability of this model in predicting the results. So based on this conclusion, the equation based on GEP, which is presented in Eq. (9), can be used reliably to predict the axial capacity of FRP-reinforced RC columns in the coming applications.

A tree diagram of the model proposed by GEP is illustrated in Fig. 14. As can be seen, this model consists of four sub-ETs, which represent the number of chromosomes. For the equation, these sub-ETs should be appended together by a linking function, which is considered multiplication in this study.

By multiplying sub-ETs, the final equation for predicting the axial capacity of FRP-reinforced columns is presented in Eq. (9). Regarding Eq. 9, first, the input parameters are inserted, and the values of \(g_{1} ,g_{2} ,g_{3} \,\,\,and\,\,g_{4}\) are calculated. Finally, the compressive strength is obtained by multiplying these four parameters. \(g_{1} ,g_{2} ,g_{3} \,\,\,and\,\,g_{4}\) are defined in the appendix.

The sensitivity analysis done by the software is presented in Fig. 15. As the figure shows, the most important parameter that can affect the compressive strength of the column is e/D, with a percentage effect of 34.54. The second and third positions of effect are for Ag and fc, with a percentage of 26.54 and 17.37, respectively. The figure also shows that fyl and El had no effect on the axial capacity of the column.

Comparison of the proposed formulas

Following the modeling process, the performance of each algorithm was evaluated using standard error metrics and efficiency criteria, including mean absolute percentage error (MAPE), coefficient of determination (R2), root mean square error (RMSE), and mean absolute error (MAE), as recommended in 23 reference. These metrics are defined by the following expressions:

In these equations, n denotes the number of data points—in this study, 525—and Pmodel and Pactual represent the axial capacity predicted by the machine learning model and the experimentally measured capacity, respectively. Lower values of MAE, MAPE, and RMSE, along with R2 values approaching 1, indicate higher predictive accuracy. The performance metrics for the different models are summarized in Table 7. Among the four evaluated machine learning algorithms—artificial neural networks (ANN), multiple linear regression (MLR), the group method of data handling (GMDH), and gene expression programming (GEP)—the ANN model demonstrated the highest predictive performance. On the test dataset, it achieved the lowest MAE (130.13), RMSE (211.45), and MAPE (11.05%), along with a high R2 value of 0.96.

In contrast, the MLR model exhibited the weakest performance, suggesting a highly nonlinear relationship between the input parameters and the target variable. Both the GMDH and GEP models also showed strong predictive capabilities, as reflected in their error metrics.

Furthermore, the mean and standard deviation of prediction errors, also presented in Table 7, were lowest for the ANN, GMDH, and GEP models. This further confirms their robustness and reliability in predicting the axial capacity of FRP-reinforced columns. In comparison, the average and standard deviation of errors for the MLR model were approximately six and sixteen times higher than those of the ANN model, respectively, reinforcing its relative inadequacy for modeling complex nonlinear behavior in this context.

In Figs. 16 and 17, the performance of the models in predicting different data points is shown for the training and testing datasets, respectively. Each point represents one data sample, and based on its location, the accuracy of the models can be assessed. In general, the closer the points are to the 45-degree line (green line), the more accurate the model’s predictions. If all points lie exactly on this line, it indicates that the predicted values are identical to the experimental values.

Figures 16 and 17 demonstrate that the ANN model has the best performance, as most of its points are clustered near the 45-degree line. Overall, the ANN, GMDH, and GEP algorithms show the best predictive performance for both the training and testing datasets. In contrast, MLR delivers the weakest results among the evaluated models, with many of its outputs falling beyond the 40% error line.

As shown in Table 8, only four data points have errors greater than 60% for the ANN model, and the majority of its predictions (89.7% of the data) have errors below 20%. For GMDH and GEP, 76% and 73% of the data, respectively, fall within the same error range. The number of data points across different error intervals is nearly identical for GMDH and GEP, but GMDH performs slightly better, as it has more data points with errors under 40% (481 compared to 479).

MLR, on the other hand, has the highest number of predictions with errors greater than 60% among all models, confirming its comparatively lower accuracy.

In order to better understand the formulas presented in this study, a column with various eccentricities from the study by Hadhood et al. 2 is selected in this section, and the compressive strengths and moments are estimated using the proposed formulas. The results obtained from each equation, alongside their corresponding experimental values, are presented in Table 9. The axial load–moment diagram from the experiment, as well as the results of each method, are illustrated in Fig. 18. As shown, the results from the GMDH and GEP formulas are very close to the experimental data, while the MLR results show a relatively significant discrepancy. This example demonstrates the acceptable accuracy of the formulas proposed in this study.

Comparison of different formulas

As mentioned earlier, all existing equations (some of which are presented in Table 1) predict only the sectional capacity of columns under axial load without directly considering eccentricity. The performance of these equations is compared using various metrics, including MAE, MAPE, RMSE, and R2, with the results shown in Table 10. It can be seen that the equations developed by Mohammad et al. (B), Tobbi et al., and AS-3600 are more accurate than the others, with R2 values of 0.9, 0.883, and 0.883, respectively. Among these, the equation by Mohammad et al. (B) performs best due to its lower MAE, RMSE, and MAPE values. Table 10 also shows that the equations proposed in this study not only account for eccentricity but also exhibit higher accuracy compared to the existing equations in predicting the axial capacity of columns.

The performance of these equations is also compared to a machine learning model developed by Arora et al. 40 using ANN. As can be seen, the ANN model done by Arora et al. 40 demonstrates the best performance among the existing equations, owing to its higher R2 value. When compared with the models proposed in this study 40 , the ANN and GMDH models show greater accuracy in prediction, while the GEP model performs comparably. Overall, given that all models have R2 values above 0.9, it can be concluded that they all demonstrate strong performance in predicting the compressive strength of columns reinforced with FRP bars under concentric loading.

In Fig. 19, the dispersion of predicted data from both the existing equations and the proposed equations (GMDH and GEP) is compared. In this figure, each point represents the prediction error for a given data sample. As mentioned earlier, the error range for the proposed equations is within ± 20%, indicating relatively high accuracy in estimating the axial capacity of the columns. In contrast, for the existing equations, most points lie far from the 45-degree line. Even for the most accurate among them—Mohammad et al. (B)—the error range is generally between ± 40% and ± 60%.

The Taylor diagram for the models employed in this study is presented in Fig. 20. This diagram provides a comprehensive visual summary of model performance by simultaneously illustrating the correlation coefficient, standard deviation, and root-mean-square error (RMSE). The horizontal and vertical axes represent the standard deviation of the predicted values, while the black dashed lines denote the Pearson correlation coefficient. Additionally, the blue contours correspond to RMSE values, as outlined by references 114,115.

The accuracy of each model is indicated by its proximity to the reference point on the diagram. Models that lie closer to the origin and along higher correlation coefficient lines (i.e., smaller angular deviation from the x-axis) demonstrate superior predictive performance.

Among the models analyzed, the artificial neural network (ANN) exhibited the highest overall accuracy, with a correlation coefficient exceeding 0.97, a well-aligned standard deviation, and minimal RMSE—indicating its strong reliability in predicting the axial load capacity of FRP-reinforced columns.

The GMDH and GEP models also showed commendable performance, with correlation coefficients of approximately 0.96 and 0.94, respectively. These models strike a favorable balance between predictive accuracy and model interpretability, making them viable for practical engineering applications.

In contrast, the multiple linear regression (MLR) model showed the weakest performance, with a correlation coefficient of roughly 0.6 and a comparatively larger standard deviation. This suggests a limited ability to capture the complex behavior of FRP-reinforced columns under axial loading.

Overall, the Taylor diagram corroborates the results obtained from other statistical metrics and visually reinforces the superior performance of the ANN model, along with the strong predictive capability of the GMDH and GEP models.

Summary and conclusions

This study employs machine learning techniques to develop predictive models for estimating the compressive strength of concrete columns reinforced with fiber-reinforced polymer (FRP) bars. To achieve this, experimental data from multiple sources were compiled, resulting in a curated dataset of 525 data points. Twelve input parameters were selected for model development. Notably, several critical variables—such as the ratio of eccentricity to the diameter or height of the cross-section (e/D), the column height (H), and the mechanical properties, type, and spacing of transverse reinforcement—were incorporated. These variables have not been explicitly addressed in previously published empirical equations.

The relationships between the input variables and their influence on the output were examined using a correlation matrix. The analysis revealed that column cross-sectional area had the strongest positive correlation with compressive strength, while eccentricity exhibited the most significant negative effect. The distribution of input variables across various intervals was also analyzed, both numerically and as percentages.

Four machine learning algorithms were applied: Artificial Neural Network (ANN), Multiple Linear Regression (MLR), Group Method of Data Handling (GMDH), and Gene Expression Programming (GEP). The models were trained and tested using standard performance metrics. Among them, ANN and GMDH yielded the most accurate predictions, with R2 values of 0.981 and 0.973 for the training set and 0.960 and 0.949 for the test set, respectively. The GEP model also demonstrated satisfactory performance, achieving R2 values above 0.93 for both datasets. In contrast, the MLR model exhibited the weakest predictive capability. Further analysis of error distribution and standard deviation showed that the ANN model achieved the highest overall accuracy, with an R2 of 0.974 and a standard deviation of 10.22. Notably, approximately 90% of the predictions generated by the ANN model fell within an error range of 0–20%.

In addition, several existing empirical formulas for estimating the compressive strength of FRP-reinforced columns were reviewed. Since most of these equations do not directly consider the effect of eccentricity, only the subset of data involving concentric loading was used for comparison. The compressive strength values obtained from both the existing formulas and the proposed models were compared for this subset.

To develop generalized prediction equations, the GMDH, GEP, and MLR methods were employed. Among these, the equations derived from the GMDH and GEP methods demonstrated relatively high accuracy, with R2 values of 0.966 and 0.942, respectively. These proposed equations were benchmarked against those available in existing design codes and published studies. The comparison confirmed that the GMDH- and GEP-based equations outperformed all other existing formulas in terms of predictive accuracy. Importantly, these models are capable of estimating both axial load capacity and moment strength for a wide range of input parameters, offering a substantial improvement over current empirical equations. The proposed equations are also straightforward to implement in engineering practice.

Given that the models were trained on 525 data points within defined parameter ranges, future work can improve their generalizability and precision by expanding the dataset and broadening the scope of the input variables. As one of the first studies to directly incorporate eccentricity effects in modeling the compressive strength of FRP-reinforced columns, this work provides a valuable reference for researchers aiming to develop more advanced models—not only for FRP-reinforced members but also for those reinforced with conventional steel.

Data availability

The datasets used and/or analyzed during the current study are available from Mohammad Haji (haji.mohammad@ut.ac.ir), upon reasonable request.

References

Elchalakani, M. & Ma, G. Tests of glass fibre reinforced polymer rectangular concrete columns subjected to concentric and eccentric axial loading. Eng. Struct. 151, 93–104 (2017).

Hadhood, A., Mohamed, H. M. & Benmokrane, B. Experimental study of circular high-strength concrete columns reinforced with GFRP bars and spirals under concentric and eccentric loading. J. Compos. Constr. 21, 04016078 (2017).

Afifi, M. Z., Mohamed, H. M. & Benmokrane, B. Axial capacity of circular concrete columns reinforced with GFRP bars and spirals. J. Compos. Constr. 18, 04013017 (2014).

Tobbi, H., Farghaly, A. S. & Benmokrane, B. Concrete columns reinforced longitudinally and transversally with glass fiber-reinforced polymer bars. ACI Struct. J. 109, 551 (2012).

Sadat Hosseini, A. & Sadeghian, P. Transverse reinforcement configurations in GFRP-reinforced concrete columns: Experiments and damage mechanics-based modeling. J. Build. Eng. 104, 112368 (2025).

Hadi, M. N. S., Karim, H. & Sheikh, M. N. Experimental investigations on circular concrete columns reinforced with GFRP bars and helices under different loading conditions. J. Compos. Constr. 20, 04016009 (2016).

Elmesalami, N., Abed, F. & Refai, A. E. Concrete columns reinforced with GFRP and BFRP bars under concentric and eccentric loads: experimental testing and analytical investigation. J. Compos. Constr. 25, 04021003 (2021).

Abed, F., Ghazal Aswad, N. & Obeidat, K. Effect of BFRP ties on the axial performance of RC columns reinforced with BFRP and GFRP rebars. Compos. Struct. 340, 118184 (2024).

Obeidat, K., Ghazal Aswad, N. & Abed, F. Confinement efficiency of BFRP versus steel ties in RC columns: experimental study. Eur. J. Environ. Civ. Eng. https://doi.org/10.1080/19648189.2025.2501059 (2025).

Liu, S. et al. Experimental and numerical study of concrete columns reinforced with BFRP and steel bars under eccentric loading. J. Compos. Constr. 29, 04025010 (2025).

Balla, T. M. R., Saharkar, S. & Suriya Prakash, S. Role of fiber addition in GFRP-reinforced slender RC columns under eccentric compression: An experimental and analytical study. J. Compos. Constr. 28, 04024010 (2024).

Sajedi, S. F., Saffarian, I., Pourbaba, M. & Yeon, J. H. Structural behavior of circular concrete columns reinforced with longitudinal GFRP rebars under axial load. Buildings 14, 988 (2024).

Alanazi, M. A., Abbas, H., Elsanadedy, H., Almusallam, T. & Al-Salloum, Y. Effect of inner steel tube on compression performance of GFRP-reinforced concrete columns. Adv. Struct. Eng. https://doi.org/10.1177/13694332251327838 (2025).

Mohamed, H. M., Afifi, M. Z. & Benmokrane, B. Performance evaluation of concrete columns reinforced longitudinally with FRP bars and confined with FRP hoops and spirals under axial load. J. Bridg. Eng. 19, 04014020 (2014).

Hadhood, A., Mohamed, H. M. & Benmokrane, B. Strength of circular HSC columns reinforced internally with carbon-fiber-reinforced polymer bars under axial and eccentric loads. Constr. Build. Mater. 141, 366–378 (2017).

Kioumarsi, M., Azarhomayun, F., Haji, M. & Shekarchi, M. Effect of shrinkage reducing admixture on drying shrinkage of concrete with different w/c ratios. Materials Basel. 13, 5721 (2020).

Chen, L. et al. Axial compressive strength predictive models for recycled aggregate concrete filled circular steel tube columns using ANN, GEP, and MLR. J. Build. Eng. 77, 107439 (2023).

Yu, F. et al. Predicting axial load capacity in elliptical fiber reinforced polymer concrete steel double skin columns using machine learning. Sci. Rep. 15, 12899 (2025).

Bardhan, A. et al. A novel integrated approach of augmented grey wolf optimizer and ANN for estimating axial load carrying-capacity of concrete-filled steel tube columns. Constr. Build. Mater. 337, 127454 (2022).

Xu, C. et al. Numerical and machine learning models for concentrically and eccentrically loaded CFST columns confined with FRP wraps. Struct. Concr. https://doi.org/10.1002/suco.202400541 (2024).

Doran, B., Yetilmezsoy, K. & Murtazaoglu, S. Application of fuzzy logic approach in predicting the lateral confinement coefficient for RC columns wrapped with CFRP. Eng. Struct. 88, 74–91 (2015).

Cascardi, A., Micelli, F. & Aiello, M. A. An Artificial Neural Networks model for the prediction of the compressive strength of FRP-confined concrete circular columns. Eng. Struct. 140, 199–208 (2017).

Naderpour, H., Nagai, K., Fakharian, P. & Haji, M. Innovative models for prediction of compressive strength of FRP-confined circular reinforced concrete columns using soft computing methods. Compos. Struct. 215, 69–84 (2019).

Sangeetha, P. & Shanmugapriya, M. GFRP wrapped concrete column compressive strength prediction through neural network. SN Appl. Sci. 2, 2036 (2020).

Shang, L., Isleem, H. F., Almoghayer, W. J. K. & Khishe, M. Prediction of ultimate strength and strain in FRP wrapped oval shaped concrete columns using machine learning. Sci. Rep. 15, 10724 (2025).

Arabshahi, A., Gharaei-Moghaddam, N. & Tavakkolizadeh, M. Development of applicable design models for concrete columns confined with aramid fiber reinforced polymer using Multi-Expression Programming. Structures 23, 225–244 (2020).

Arabshahi, A., Gharaei-Moghaddam, N. & Tavakkolizadeh, M. Proposition of new applicable strength models for concrete columns confined with fiber reinforced polymers. SN Appl. Sci. 1, 1677 (2019).

Prakash, I. & Nguyen, T. A. Predicting the maximum load capacity of circular RC columns confined with fibre-reinforced polymer (FRP) using machine learning model. J. Sci. Transp. Technol. https://doi.org/10.58845/jstt.utt.2023.en.3.4.25-42 (2023).

Moodi, Y. Providing models of the compressive strength of square and rectangular (S/R) concrete confined using genetic programming. Numer. Methods Civ. Eng. 9, 16–27 (2025).

Ghasri, M., Ghasemi, M. & Salarnia, A. Leveraging the power of hybrid and standalone machine learning for enhanced FRP-confined concrete columns strength prediction. J. Soft Comput. Civ. Eng. 9, 60–96 (2025).

Fakharian, P. et al. Bond strength prediction of externally bonded reinforcement on groove method (EBROG) using MARS-POA. Compos. Struct. 349–350, 118532 (2024).

Su, M., Zhong, Q., Peng, H. & Li, S. Selected machine learning approaches for predicting the interfacial bond strength between FRPs and concrete. Constr. Build. Mater. 270, 121456 (2021).

Zaermiri, N., Sohrabi, M. R. & Moodi, Y. Modeling the ultimate strength of the bond between concrete and FRP using K-means clustering method and kriging method. J. Struct. Constr. Eng. 11, e197499 (2025).

Hamid, F. L. & Yousif, A. R. Structural behavior of concrete columns reinforced with BFRP bars: Experimental study and predictive models. Iran. J. Sci. Technol. Trans. Civ. Eng. https://doi.org/10.1007/s40996-024-01522-6 (2024).

Hamid, F. L., Yousif, A. R. & Hassan, B. R. Innovative approaches to predicting maximum load-carrying capacity of fiber-reinforced polymer reinforced concrete columns using machine learning techniques. Iran. J. Sci. Technol. Trans. Civ. Eng. https://doi.org/10.1007/s40996-025-01726-4 (2025).

Baili, J. et al. Experiments and predictive modeling of optimized fiber-reinforced concrete columns having FRP rebars and hoops. Mech. Adv. Mater. Struct. 30, 4913–4932 (2023).

Cakiroglu, C., Islam, K., Bekdaş, G., Kim, S. & Geem, Z. W. Interpretable machine learning algorithms to predict the axial capacity of FRP-reinforced concrete columns. Materials Basel. 15, 2742 (2022).

Huang, L., Chen, J. & Tan, X. BP-ANN based bond strength prediction for FRP reinforced concrete at high temperature. Eng. Struct. 257, 114026 (2022).

Nouri, Y., Ghanizadeh, A. R., Safi Jahanshahi, F. & Fakharian, P. Data-driven prediction of axial compression capacity of GFRP-reinforced concrete column using soft computing methods. J. Build. Eng. 101, 111831 (2025).

Arora, H. C. et al. Axial capacity of FRP-reinforced concrete columns: Computational intelligence-based prognosis for sustainable structures. Buildings 12, 2137 (2022).

Zhang, J. et al. Machine learning for the prediction of the axial load-carrying capacity of <scp>FRP</scp> reinforced hollow concrete column. Struct. Concr. https://doi.org/10.1002/suco.202400886 (2025).

Tarawneh, A., Almasabha, G., Saleh, E., Alghossoon, A. & Alajarmeh, O. Data-driven machine learning methodology for designing slender FRP-RC columns. Structures 57, 105207 (2023).

Canadian Standards Association (CSA). Design and Construction of Building Structures with Fibre Reinforced Polymers (S806–12 (R2017)); (2012).

AS 3600; Concrete Structures. Standards Australia: Sydney, Australia. (2018).

Raza, A., Khan, Q. & Uz, Z. Structural behavior of GFRP-reinforced circular HFRC columns under concentric and eccentric loading. Arab. J. Sci. Eng. 46, 4239–4252 (2021).

Salah-Eldin, A., Mohamed, H. M. & Benmokrane, B. Structural performance of high-strength-concrete columns reinforced with GFRP bars and ties subjected to eccentric loads. Eng. Struct. 185, 286–300 (2019).

Salah-Eldin, A., Mohamed, H. M. & Benmokrane, B. Axial-flexural performance of high-strength-concrete bridge compression members reinforced with basalt-FRP bars and ties: Experimental and theoretical investigation. J. Bridg. Eng. 24, 04019069 (2019).

Rafique, U., Ali, A. & Raza, A. Structural behavior of GFRP reinforced recycled aggregate concrete columns with polyvinyl alcohol and polypropylene fibers. Adv. Struct. Eng. 24, 3043–3056 (2021).

Hadhood, A., Mohamed, H. M. & Benmokrane, B. Flexural stiffness of GFRP-and CFRP-RC circular members under eccentric loads based on experimental and curvature analysis. ACI Struct. J 115, 1185–1198 (2018).

Hadi, M. N. S., Ahmad, H. & Sheikh, M. N. Effect of Using GFRP Reinforcement on the Behavior of Hollow-Core Circular Concrete Columns. J. Compos. Constr. 25, (2021).

AlAjarmeh, O. S. et al. Compressive behavior of axially loaded circular hollow concrete columns reinforced with GFRP bars and spirals. Constr. Build. Mater. 194, 12–23 (2019).

Elchalakani, M., Dong, M., Karrech, A., Mohamed Ali, M. S. & Huo, J.-S. Circular Concrete Columns and Beams Reinforced with GFRP Bars and Spirals under Axial, Eccentric, and Flexural Loading. J. Compos. Constr. 24, (2020).

Hadhood, A., Mohamed, H. M. & Benmokrane, B. Failure envelope of circular concrete columns reinforced with glass fiber-reinforced polymer bars and spirals. ACI Struct. J. 114, 1417–1428 (2017).

Elchalakani, M. et al. Experimental Investigation of Rectangular Air-Cured Geopolymer Concrete Columns Reinforced with GFRP Bars and Stirrups. J. Compos. Constr. 23, 04019011 (2019).

Othman, Z. S. & Mohammad, A. H. Behaviour of Eccentric Concrete Columns Reinforced with Carbon Fibre-Reinforced Polymer Bars. Adv. Civ. Eng. 2019, 1–13 (2019).

Ahmad, H., Sheikh, M. N. & Hadi, M. N. S. Behavior of GFRP bar-reinforced hollow-core polypropylene fiber and glass fiber concrete columns under axial compression. J. Build. Eng. 44, 103245 (2021).

Tobbi, H., Farghaly, A. S. & Benmokrane, B. Behavior of concentrically loaded fiber-reinforced polymer reinforced concrete columns with varying reinforcement types and ratios. ACI Struct. J. 111, 375–386 (2014).

Tobbi, H., Farghaly, A. S. & Benmokrane, B. Strength model for concrete columns reinforced with fiber-reinforced polymer bars and ties. ACI Struct. J. 111, 789–798 (2014).

Afifi, M. Z., Mohamed, H. M. & Benmokrane, B. Strength and Axial Behavior of Circular Concrete Columns Reinforced with CFRP Bars and Spirals. J. Compos. Constr. 18, 04013035 (2014).

Afifi, M. Z., Mohamed, H. M. & Benmokrane, B. Theoretical stress–strain model for circular concrete columns confined by GFRP spirals and hoops. Eng. Struct. 102, 202–213 (2015).

Afifi, M. Z., Mohamed, H. M., Chaallal, O. & Benmokrane, B. Confinement model for concrete columns internally confined with carbon FRP spirals and hoops. J. Struct. Eng. 141, 04014219 (2015).

AlAjarmeh, O. S., Manalo, A. C., Benmokrane, B., Karunasena, W. & Mendis, P. Axial performance of hollow concrete columns reinforced with GFRP composite bars with different reinforcement ratios. Compos. Struct. 213, 153–164 (2019).

Tu, J., Gao, K., He, L. & Li, X. Experimental study on the axial compression performance of GFRP-reinforced concrete square columns. Adv. Struct. Eng. 22, 1554–1565 (2019).

Duy, N. P., Anh, V. N., Anh, N. M. T. & Eduardovich, P. A. Load-Carrying Capacity of Short Concrete Columns Reinforced with Glass Fiber Reinforced Polymer Bars Under Concentric Axial Load. Int. J. Eng. Adv. Technol. 9, 1712–1719 (2019).

Alwash, N. A. & Jasim, A. H. Behavior of short concrete columns reinforced by CFRP bars and subjected to eccentric load. Int. J. Civ. Eng. Technol. 6, 15–24 (2015).

Ahmad, A., Bahrami, A., Alajarmeh, O., Chairman, N. & Yaqub, M. Investigation of Circular Hollow Concrete Columns Reinforced with GFRP Bars and Spirals. Buildings 13, 1056 (2023).

AlAjarmeh, O. S., Manalo, A. C., Benmokrane, B., Karunasena, W. & Mendis, P. Effect of spiral spacing and concrete strength on behavior of GFRP-reinforced hollow concrete columns. J. Compos. Constr. 24, 04019054 (2020).

Elchalakani, M., Karrech, A., Dong, M., Mohamed Ali, M. S. & Yang, B. Experiments and finite element analysis of GFRP reinforced geopolymer concrete rectangular columns subjected to concentric and eccentric axial loading. Structures 14, 273–289 (2018).

Hamid, F. L. & Yousif, A. R. Behavior of Short and Slender RC Columns with BFRP Bars under Axial and Flexural Loads: Experimental and Analytical Investigation. J. Compos. Constr. 28, (2024).

Hadhood, A., Mohamed, H. M. & Benmokrane, B. Axial load-moment interaction diagram of circular concrete columns reinforced with CFRP bars and spirals: Experimental and theoretical investigations. J. Compos. Constr. 21, 0402372 (2017).

Hadhood, A., Mohamed, H. M., Ghrib, F. & Benmokrane, B. Efficiency of glass-fiber reinforced-polymer (GFRP) discrete hoops and bars in concrete columns under combined axial and flexural loads. Compos. Part B Eng. 114, 223–236 (2017).

Hadhood, A., Mohamed, H. M. & Benmokrane, B. Assessing stress-block parameters in designing circular high-strength concrete members reinforced with FRP bars. J. Struct. Eng. 144, 04018182 (2018).

Hales, T. A., Pantelides, C. P. & Reaveley, L. D. Experimental evaluation of slender high-strength concrete columns with GFRP and hybrid reinforcement. J. Compos. Constr. 20, 04016050 (2016).

Hasan, H. A., Sheikh, M. N. & Hadi, M. N. S. Performance evaluation of high strength concrete and steel fibre high strength concrete columns reinforced with GFRP bars and helices. Constr. Build. Mater. 134, 297–310 (2017).

Hasan, H. A. et al. Performance evaluation of normal- and high-strength concrete column specimens reinforced longitudinally with different ratios of GFRP bars. Structures 47, 1428–1440 (2023).

Ahmed, A. & Masmoudi, R. Axial Response of Concrete-Filled FRP Tube (CFFT) Columns with Internal Bars. J. Compos. Sci. 2, 57 (2018).

Zhou, J.-K., Lin, W.-K., Guo, S.-X., Zeng, J.-J. & Bai, Y.-L. Behavior of FRP-confined FRP spiral reinforced concrete square columns (FCFRCs) under axial compression. J. Build. Eng. 45, 103452 (2022).

Xu, J., Wu, Z., Jia, H., Yu, R. C. & Cao, Q. Axial compression of seawater sea sand concrete columns reinforced with hybrid FRP–stainless steel bars. Mag. Concr. Res. 75, 685–702 (2023).

Zeng, J.-J. et al. Behaviour of FRP spiral-confined concrete and contribution of FRP longitudinal bars in FRP-RC columns under axial compression. Eng. Struct. 281, 115747 (2023).

Khorramian, K. & Sadeghian, P. Experimental and analytical behavior of short concrete columns reinforced with GFRP bars under eccentric loading. Eng. Struct. 151, 761–773 (2017).

Maranan, G. B., Manalo, A. C., Benmokrane, B., Karunasena, W. & Mendis, P. Behavior of concentrically loaded geopolymer-concrete circular columns reinforced longitudinally and transversely with GFRP bars. Eng. Struct. 117, 422–436 (2016).

Gouda, M. G., Mohamed, H. M., Manalo, A. C. & Benmokrane, B. Experimental investigation of concentrically and eccentrically loaded circular hollow concrete columns reinforced with GFRP bars and spirals. Eng. Struct. 277, 115442 (2023).

Pantelides, C. P., Gibbons, M. E. & Reaveley, L. D. Axial Load Behavior of Concrete Columns Confined with GFRP Spirals. J. Compos. Constr. 17, 305–313 (2013).

Erfan, A. M., Algashb, Y. A. & El-Sayed, T. A. Experimental and analytical behavior of HSC columns reinforced with basalt FRP bars. Int. J. Sci. Eng. Res. 10, 240–260 (2019).

Li, P., Jin, L., Zhang, J. & Du, X. Size effect tests on axial compressive behavior of BFRP-reinforced concrete columns. Eng. Struct. 281, 115785 (2023).

Raza, A. et al. On the structural performance of recycled aggregate concrete columns with glass fiber-reinforced composite bars and hoops. Polymers Basel. 13, 1508 (2021).

Rashedi, A. et al. Glass FRP-reinforced geopolymer based columns comprising hybrid fibres: Testing and FEA modelling. Polymers Basel. 14, 324 (2022).

El-Sayed, T. A., Abdallah, K. S., Ahmed, H. E. & El-Afandy, T. H. Structural behavior of ultra-high strength concrete columns reinforced with basalt bars under axial loading. Int. J. Concr. Struct. Mater. 17, 43 (2023).

Karim, H., Noel-Gough, B., Sheikh, M. N. & Hadi, M. N. S. Strength and ductility behavior of circular concrete columns reinforced with GFRP bars and helices. (2015).

Tabatabaei, A., Eslami, A., Mohamed, H. M. & Benmokrane, B. Strength of compression lap-spliced GFRP bars in concrete columns with different splice lengths. Constr. Build. Mater. 182, 657–669 (2018).

Zhang, X. & Deng, Z. Experimental study and theoretical analysis on axial compressive behavior of concrete columns reinforced with GFRP bars and PVA fibers. Constr. Build. Mater. 172, 519–532 (2018).

Xiong, Z. et al. Axial performance of seawater sea-sand concrete columns reinforced with basalt fibre-reinforced polymer bars under concentric compressive load. J. Build. Eng. 47, 103828 (2022).

Brown, J. Glass fibre reinforced polymer bars in concrete compression members. (2015).

Elmesalami, N. Z. A. Experimental Investigation of Frp-Reinforced Concrete Columns under Concentric and Eccentric Loading. (2019).

El-Gamal, S. & AlShareedah, O. Behavior of axially loaded low strength concrete columns reinforced with GFRP bars and spirals. Eng. Struct. 216, 110732 (2020).

Xue, W., Peng, F. & Fang, Z. Behavior and design of slender rectangular concrete columns longitudinally reinforced with fiber-reinforced polymer bars. ACI Struct. J. 115, 311–322 (2018).

Abdelazim, W., Mohamed, H. M. & Benmokrane, B. Inelastic second-order analysis for slender GFRP-reinforced concrete columns: Experimental investigations and theoretical study. J. Compos. Constr. 24, 04020016 (2020).

Khorramian, K. & Sadeghian, P. Experimental investigation of short and slender rectangular concrete columns reinforced with GFRP bars under eccentric axial loads. J. Compos. Constr. 24, 04020072 (2020).

ElMessalami, N., Abed, F. & El Refai, A. Response of concrete columns reinforced with longitudinal and transverse BFRP bars under concentric and eccentric loading. Compos. Struct. 255, 113057 (2021).

Fan, X. & Zhang, M. Behaviour of inorganic polymer concrete columns reinforced with basalt FRP bars under eccentric compression: An experimental study. Compos. Part B Eng. 104, 44–56 (2016).

Salah-Eldin, A., Mohamed, H. M. & Benmokrane, B. Effect of GFRP reinforcement ratio on the strength and effective stiffness of high-strength concrete columns: experimental and analytical study. J. Compos. Constr. 24, 04020055 (2020).

Karim, H., Sheikh, M. N. & Hadi, M. N. S. Axial load-axial deformation behaviour of circular concrete columns reinforced with GFRP bars and helices. Constr. Build. Mater. 112, 1147–1157 (2016).

Bakouregui, A. S., Mohamed, H. M., Yahia, A. & Benmokrane, B. Explainable extreme gradient boosting tree-based prediction of load-carrying capacity of FRP-RC columns. Eng. Struct. 245, 112836 (2021).

Cohen, I. et al. Pearson correlation coefficient. Noise Reduct. speech Process. 1–4 (2009).

Gurney, K. An Introduction to Neural Networks (CRC Press, 2018). https://doi.org/10.1201/9781315273570.

Walczak, S. & Cerpa, N. Artificial Neural Networks. in Encyclopedia of Physical Science and Technology 631–645 (Elsevier, 2003). https://doi.org/10.1016/B0-12-227410-5/00837-1

Freedman, D. A. A simple regression equation has on the right-hand side an intercept and an explanatory variable with a slope coefficient. A multiple regression e right hand side, each with its own slope coefficient. Stat. Model. Theory Pract. 26 (2009).

Eberly, L. E. Multiple Linear Regression. in 165–187 (2007). https://doi.org/10.1007/978-1-59745-530-5_9

Ivakhnenko, A. G. Polynomial Theory of Complex Systems. IEEE Trans. Syst. Man. Cybern. SMC-1, 364–378 (1971).

GMDH Shell 3: Platform for inductive modeling (2020). http://www.gmdhshell.com/.

Ferreira, C. Gene Expression Programming in Problem Solving. in Soft Computing and Industry 635–653 (Springer London, 2002). https://doi.org/10.1007/978-1-4471-0123-9_54

Ferreira, C. Gene expression programming: a new adaptive algorithm for solving problems. arXiv Prepr. cs/0102027 (2001).

Zhong, J., Feng, L. & Ong, Y.-S. Gene Expression Programming: A Survey [Review Article]. IEEE Comput. Intell. Mag. 12, 54–72 (2017).

Moodi, Y., Hamzehkolaei, N. S. & Afshoon, I. Machine learning models for predicting compressive strength of eco-friendly concrete with copper slag aggregates. Mater. Today Commun. 46, 112572 (2025).

Moodi, Y., Hamzehkolaei, N. S. & Afshoon, I. Intelligent Models for Predicting the Compressive Strength of Green Concrete Made with Fine and Coarse Grains of Waste Copper Slag. (2024).

Funding

The authors did not receive funding for conducting this study.

Author information

Authors and Affiliations

Contributions

Mohammad Haji: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Resources, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. Mohammad Sadegh Marefat: Conceptualization, Formal analysis, Investigation, Methodology, Project administration, Supervision, Validation, Writing – review & editing. Ali Kheyroddin: Conceptualization, Investigation, Supervision, Validation, Writing – review & editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Parameters \(f_{1} ,f_{2} ,f_{3} \,\,\,and\,\,f_{4}\) in the equation proposed by the GMDH method (Eq. 8) for calculating the compressive strength of FRP-reinforced columns are presented below.

Parameters \(g_{1} ,g_{2} ,g_{3} \,\,\,and\,\,g_{4}\) in the equation proposed by the GEP method (Eq. 9) for calculating the compressive strength of FRP-reinforced columns are presented below.

In the following, references used for data collection, the number of samples from each reference, and the general characteristics of the columns, including cross-sectional shape, materials of longitudinal and transverse reinforcements, type of stirrups, and loading eccentricity are provided (Table 11).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Haji, M., Marefat, M.S. & Kheyroddin, A. Axial strength prediction of FRP reinforced concrete columns under concentric and eccentric loading using machine learning models. Sci Rep 15, 33299 (2025). https://doi.org/10.1038/s41598-025-17150-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-17150-7