Abstract

To address the issues of low detection accuracy, false detection, and missing detection, as well as the challenge of modeling lightweight scenes caused by the overlapping occlusion of roadside targets and distant targets in autonomous driving scenarios, an improved small target detection algorithm for autonomous driving based on YOLO11 is proposed. Firstly, it embedded the Channel Transposed Attention in the C3k2 module, proposed the C3CTA module, and replaced the C3k2 module in the Backbone network to improve the feature extraction ability and strengthen the detection ability in the case of target occlusion. Secondly, the Diffusion Focusing Pyramid Network is introduced to improve the Neck part, enhance the understanding ability of small targets in complex scenes, and effectively solve the problem that it is difficult to extract vehicle target features. Finally, a Lightweight Shared Convolutional Detection Head is introduced to reduce the number of model parameters and achieve lightweight requirements. The experimental results show that the Precision, Recall, mAP@0.5, and mAP@0.5–95 of the improved algorithm on the world’s first DAIR-V2X-I dataset reach 85.7%, 79.4%, 85.3%, and 61.3%, which is 4.0% higher than the baseline model. 3.1%, 2.4%, 2.8%. Higher detection accuracy is achieved, which proves the effectiveness of the improvement. Proposed a new solution approach for target detection in complex autonomous driving scenarios.

Similar content being viewed by others

Introduction

Object detection is one of the core algorithms in an automatic driving system. Especially in complex scenes, object occlusion and recognition of distant small targets have always been an important challenge in research and application. The autonomous driving environment is usually full of various dynamic factors, including occlusion, light changes, different weather conditions, and diverse traffic participants, which makes object detection very difficult in practical applications1. Therefore, designing an efficient algorithm that can effectively detect objects in these complex situations is not only the key to the development of the technology, but also the basis for the safe and reliable operation of autonomous driving technology. At present, object detection algorithms can be mainly divided into two categories: traditional object detection methods and object detection methods based on deep learning2. Traditional object detection methods rely on manually extracted features and classifiers, and these methods recognize and classify objects through manually designed features. Although these methods can achieve certain results in some simple scenes, they perform poorly in complex and dynamic environments3. In contrast, object detection methods based on deep learning have been widely used in recent years and have gradually become the mainstream technology of autonomous driving systems4. Deep learning algorithms can automatically learn complex features through large-scale data training, so as to achieve more efficient and accurate target recognition. Convolutional Neural Network (CNN) performs well in feature extraction and image classification. Deep learning methods not only improve the accuracy of detection, but also better deal with diversity and dynamics in complex environments5. For example, object detection algorithms based on deep learning, such as Faster R-CNN, YOLO, SSD, etc., can process a large amount of data in a short time, and have strong robustness and adaptability, especially in the face of occlusion, illumination change, and long-distance targets6. And in this kind of deep learning algorithm, the YOLO series algorithm has gradually become the mainstream algorithm for automatic driving target detection with a high degree of real-time performance, a small amount of calculation, and high detection accuracy7.

Avşar et al.8 used deep learning technology to detect and track moving vehicles in roundabouts, trying to improve the performance of traffic monitoring systems in complex traffic scenarios. However, although this method improves the detection accuracy to a certain extent, it still faces the contradiction between algorithm accuracy and computational complexity in practical applications. XU et al.9 introduced a standardized attention module to improve the object detection ability of YOLOv5 in complex traffic environments, especially for the detection of smaller objects. Although this method has made significant progress in improving the accuracy, it still has certain limitations when dealing with difficult small samples and overlapping samples. Ocher et al.10 proposed the YOLOv8 model. This new version significantly improves the effect of object detection by optimizing the backbone network. Although YOLOv8 performs well in multiple tasks, further optimizing its performance in dynamic and severely occluded traffic environments, and balancing accuracy and efficiency are still the research directions for further development of the algorithm.

Compared with other models, the YOLO model shows better recognition performance and identifies the state of the vehicle target. However, the latest YOLO model and the above studies have encountered some technical challenges and difficulties in the detection task of autonomous driving11, which can be briefly summarized as follows:

1) In the traffic scene of vehicle-road cooperative perception, there are long-distance targets and small targets in the side view of the road, and the recognition accuracy is low, and the features is few. The scene information obtained by the sensors in the side view of the road is limited, which also limits their ability to perceive the targets comprehensively and accurately in the complex traffic environment12.

2) In the process of vehicle driving, vehicles on both sides of the road surface often overlap and interleave, or are blocked by some large obstacles, which greatly affects the detection accuracy of the algorithm13.

3) In autonomous driving scenarios, the computing performance of edge devices is often poor, and the processing speed of some models with large amounts of calculation is slow, which makes it difficult to meet the real-time requirements of traffic scenes14.

Although YOLOv5 and YOLOv8 performs well in multiple tasks, further optimizing its performance in dynamic and severely occluded traffic environments, and balancing accuracy and efficiency are still the research directions for further development of the algorithm. Specifically, the challenges of accurately detecting small and occluded targets (addressed by our DFPN and C3CTA modules) and the computational burden on edge devices (addressed by our LSCD head) remain largely unsolved by these existing approaches.

Based on the above analysis, this paper uses YOLO11 to improve and propose a new object detection algorithm, YOLO-FLC. The main contributions include three aspects:

1) A Diffusion Focusing Pyramid Network is proposed, which significantly improves the expression ability of multi-scale features and retains feature details at different scales by introducing a specially designed feature focusing module and feature diffusion mechanism. The FoFe module (Focus Features Module) is used to explicitly enhance the context information transmission of small targets, thereby avoiding the loss of details during the multi-layer transmission process. Using parallel depth separable convolution to capture the context information of different receptive fields, and by integrating through an adaptive gating mechanism, it outperforms the traditional upsampling/downsampling + concatenation approach. At the same time, frequency domain processing is introduced in feature extraction to enhance the ability to retain high-frequency details (such as edges and textures).The ability to understand small targets in complex scenes was enhanced, and the problem that it was difficult to extract vehicle target features was effectively solved.

2) In order to effectively learn the details in the image, the Channel Transposed Attention is added to the Backbone network, and a new C3CTA module is constructed. The C3CTA module, based on the original C3k2 structure of YOLO11, incorporates a dual-branch attention mechanism: spatial-frequency attention (SFA) and channel transposed attention (CTA). Traditional attention mechanisms (such as SE and CBAM) usually perform channel or spatial modeling through global average pooling or max pooling. However, CTA establishes richer cross-channel dependencies in the channel dimension through transposition operations, avoiding information loss. The SFA branch was the first to introduce frequency-domain projection in object detection. By enhancing the high-frequency components and applying spatial window attention, it significantly improved the model’s ability to perceive details and occluded objects. C3CTA achieves more comprehensive feature enhancement through parallel processing of spatial-frequency and channel attention, while existing methods mostly adopt serial or single-branch structures.It effectively solves the problem of low detection accuracy and missed detection in the case of overlapping and interleaving targets.

3) In order to solve the problem of slow Detection caused by a large amount of model calculation, we propose a new Lightweight Shared Convolutional Detection (LSCD) Head, which can effectively solve the problem of a large amount of model calculation. The model is lightweight.The LSCD detection head achieves a balance between accuracy and efficiency through weight sharing and scale adaptive layers. Multiple detection heads share the same set of convolutional weights, significantly reducing the number of parameters and computational load. In contrast, traditional detection heads (such as the YOLO series) usually design separate heads for each scale. Explicitly adjusting the scale of the regression branch to improve the positioning accuracy of multi-scale targets, and avoiding the scale inconsistency problem caused by simple fusion in structures such as FPN.

Related work

Deep learning object detection models are mainly divided into two categories: one-stage and two-stage. The one-stage detection model uses the regression method to directly input the input image into the convolutional neural network, and then outputs the classification and localization results of the target at the same time. The detection accuracy of this type of model is relatively slightly lower, but the advantage is that the detection speed is faster15. With the continuous iteration and optimization of the single-stage detection algorithm, its detection accuracy has been significantly improved, which is enough to cope with the target detection tasks with high detection accuracy requirements. Typical representatives of single-stage detection models include YOLO (You Only Look Once) and Single Shot MultiBox Detector (SSD)16.

The operation process of the two-stage detection model is different. It first uses a convolutional neural network to obtain candidate regions, and then completes the detection and classification work based on these regions. Compared with the one-stage detection model, the two-stage detection model has higher detection accuracy, but the detection speed is relatively slow. Common two-stage detection models are Regions with CNN features (RCNN), region-based Fast convolutional neural network (Fast RCNN), region-based Faster convolutional neural network (Faster RCNN), Spatial Pyramid Pooling Networks (SPPNet), etc17.

Given the advantages of fast detection speed and stronger real-time performance of single-stage detection algorithms, YOLO series models have been widely used in video or real-time image object detection tasks. Therefore, the YOLO11 model is selected in the study carried out for the traffic vehicle detection task.

Although the current object detection algorithms have high accuracy, they still have shortcomings in practical applications. For dense targets, occlusion, complex backgrounds (such as different brightness and angle), and low-resolution images, the stability of model detection is not good. In addition, when the detection program is applied under different camera angles, the image information of distant small targets in the top view is less, and the network model has difficulty extracting features, resulting in low accuracy or missed detection18.

In view of the above problems, many scholars have improved the algorithm. For example, Lu et al.19 proposed an aerial image vehicle detection method based on YOLO deep learning algorithm, processed three public data sets, and constructed an aerial image data set suitable for YOLO training, so that the trained model had a good test effect on small objects, rotating objects and compact objects, but did not improve the algorithm itself. Limited application scope; Zhao et al.20 proposed the YOLO-Lite algorithm to reduce the computing power overhead of the YOLO network model by changing the input image size, reducing the number of convolution layers, and removing the batch normalization layer, so as to adapt to the detection application without GPU devices and improve the detection speed, but the algorithm effect is not ideal. Fang et al.21 proposed the Tinier-YOLO algorithm based on Tiny-YOLO-v3, introduced the Fire module and SqueezeNet model to reduce the amount of parameters, added the straight-through layer, and merged the feature map of the previous layer to obtain fine-grained features, so as to improve the detection speed and maintain the accuracy. However, due to the lightweight of the model, the performance is not up to standard. The existing feature pyramid structure effectively integrates multi-scale features, but it still falls short in enhancing and diffusing the features of tiny targets in complex occlusion scenarios. Our designed DFPN employs the feature focusing and feature diffusion mechanisms, aiming to more explicitly strengthen the propagation of context information for small targets.Pan et al.22 adopted the YOLOv5 + CA attention model to add the CA attention mechanism to the last layer of the backbone network to reduce the interference of irrelevant information and let the algorithm focus on relevant feature information. However, from the results, the detection accuracy of the network model for small targets still needs to be improved.Although attention mechanisms are widely used to enhance feature extraction, most of these mechanisms have trade-offs in terms of computational complexity and the sufficiency of cross-channel interaction of features. Our proposed C3CTA module, through the channel shunting mechanism, aims to achieve more effective channel information integration with lower computational costs.

The proposed method

YOLO11

YOLO11 inherits and extends the advantages of the YOLO family of models, especially making significant improvements in the architecture design of backbone and neck networks. These improvements greatly improve the feature extraction ability of the model, which improves the accuracy of object detection and performs better in handling complex tasks. Compared to previous versions, YOLO11 implements a more refined and efficient architecture by design, which allows models to not only be processed faster but also maintain the best balance between accuracy and performance. This architectural optimization, especially the tradeoff between computation and performance, makes YOLO11 an extremely useful model in a variety of application scenarios. The structure diagram of the YOLO11 model is shown in Fig. 1.

Specifically, YOLO11 achieves higher Mean Average Precision (mAP) on the COCO dataset, which means that it is able to identify more types of objects and make more accurate localization in object detection tasks. Moreover, YOLO11 significantly optimizes the number of parameters. Compared with YOLOv8m, YOLO11 reduces the number of parameters by 22%. This reduction in the number of parameters not only improves computational efficiency but also makes YOLO11 more efficient to run on a variety of devices, especially in resource-constrained environments, without sacrificing model accuracy. YOLO11 is innovative in a number of ways, especially in the core modules of the architecture. Compared with YOLOv8, YOLO11 makes several important optimizations in the network structure. Firstly, YOLO11 replaces the original C2f module with the C3K2 module, which significantly enhances the model’s ability to extract feature information and improves the accuracy of object detection. Secondly, after the SPPF module (spatial pyramid pooling), YOLO11 adds the C2PSA module to further optimize the effect of multi-scale feature fusion and help the model better deal with objects of different sizes and scales. In addition, YOLO11 introduces the head design concept of YOLOv10 into the detection head of YOLO11, which greatly reduces redundant calculations and improves computational efficiency through the depthwise separable convolution method. Deptwise separable convolution reduces the computational complexity of the model by decomposing the standard convolution operation, allowing YOLO11 to achieve a significant improvement in execution speed without sacrificing detection accuracy. Although YOLO11 can improve the detection accuracy and reduce the number of parameters compared with the previous YOLO model, it also has great challenges in the vehicle detection scene in the complex environment of unmanned driving.

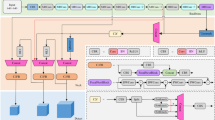

YOLO-FLC

In autonomous driving scenarios, object occlusion and small object detection have always been significant challenges in the field of computer vision. In order to solve these problems, this paper makes innovative improvements on the basis of the YOLO11 model. This paper proposes a new network model, YOLO-FLC (YOLO with Feature Diffusion and Lightweight Shared Channel Attention). The core innovations of this model include the following aspects: First, an advanced Channel Transfer Attention mechanism23 is introduced into the backbone network, and a new C3CTA (Channel Transposed Attention) network module is constructed. This module can adaptively select and transfer important channel information in multi-level feature maps, so as to improve the recognition ability of the model for different targets. Especially in the case of occlusion or overlap between targets, the C3CTA module can help the network to distinguish the target better and significantly improve the detection performance. Secondly, in the Neck part of the Network, the Diffusion Focusing Pyramid Network24 is proposed. The network enhances the context information of features at different scales by introducing a feature focusing module and a feature diffusion mechanism. The feature focusing module can highlight important local information, while the feature diffusion mechanism enhances the propagation of global information by diffusion. In this way, the feature map of each scale can contain more detailed context information, especially when dealing with small targets, which can effectively improve its detection accuracy25. Finally, we use a Lightweight Shared Convolutional Detection Head (LSCD) to replace the original detection head in the model, which can improve the recognition ability and accuracy of the model for different targets while reducing the amount of calculation. To optimize the performance of the detection head, we add a Scale layer to the network. By scaling the feature map, the objects of different scales can be more accurately captured by the detection head, so as to further improve the multi-scale detection ability of the model. With these improvements, the YOLO-FLC model can better cope with complex scenes and environments in autonomous driving tasks, especially when dealing with object occlusion or detecting small objects, and achieves significant performance improvement. The structure of the improved YOLO11 model is shown in Fig. 2, which shows how the new network modules are integrated into the overall architecture of the model.

C3CTA module design.

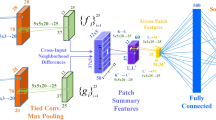

The traditional C3k2 module combines the variable convolution kernel and the channel separation strategy, which makes the model have a strong ability in feature extraction and can adapt to targets of different scales and shapes. However, in the face of scenes with complex background information, especially in the case of overlapping and occlusion between vehicle targets, traditional feature extraction methods still have certain limitations, resulting in insufficient detection performance. In order to further improve the feature extraction ability of the Backbone network, the Channel Diverting Attention (CTA) mechanism was introduced into the Backbone network, and a new C3CTA module was constructed. The core idea of the channel shifting attention mechanism is to automatically assign a weight to each channel by modeling the relationship between channels inside the feature map. Specifically, the network learns which feature channels are more important for the current task and enhances the influence of these channels, while suppressing those channels that are irrelevant or less important for the current task. This mechanism can help the network to focus on the key information in the image when dealing with scenes with complex backgrounds and overlapping targets, especially when the vehicle targets are occluded or overlapping each other, and improve the detection performance.By introducing the CTA mechanism, the C3CTA module not only enhances the attention of the network to the useful feature channels, but also effectively suppresses the interference of irrelevant background information, so as to improve the detection ability of the model in complex environments, especially when the background is cluttered and there is overlap between the targets, showing stronger robustness. This improvement greatly enhances the network’s ability to identify and detect vehicle targets in complex scenes, especially in practical application scenarios such as automatic driving, which can significantly improve the accuracy and stability of target detection. The C3CTA module consists of two main computational branches operating in parallel: first, the SFA branch, which processes features through the space-frequency attention mechanism, and second, the CTA branch, which applies channel transpose attention for cross-channel feature refinement.

Figure 3 shows the structure diagram of C3CTA as well as the CTA module. The detailed process of the Spatial Frequency Attention (SFA) mechanism is as follows. The SFA mechanism first converts the input features into a frequency domain representation. Given the input feature map \(\:\text{X}\in\:{\text{R}}^{\text{B}\times\:\text{C}\times\:\text{H}\times\:\text{W}}\), the frequency projection is computed as follows:

Where \(\:{\text{X}}_{1},{\text{X}}_{2}=\text{S}\text{p}\text{l}\text{i}\text{t}\left({\text{Conv}}_{1}\left(\text{X}\right)\right)\) and residual blocks enhance the high-frequency components. The spatial attention mechanism uses a window-based approach to capture local and global dependencies:

Where RPE represents the relative position encoding calculated by dynamic position deviation:

SFA integrates spatial attention, convolutional features, and frequency information through adaptive gating:

The CTA mechanism operates on the channel dimension to capture the relationship between channels:

Where \(\:\text{T}\) is a learnable temperature parameter that controls attention sharpness. The next step is dual-path feature enhancement. CTA uses dual-path feature processing:

Next is the Dual-frequency Aggregated feedforward Network (DFFN), which enhances the feature representation with frequency-aware processing:

The full C3CTA forward pass integrates two branches:

Feature Diffusion Focused Pyramid Network.

In the backbone network, the input image is processed by multiple downsampling and convolution modules, and a multi-scale feature map is generated using a bottom-up approach. In the scene of roadside target detection, due to the variable size and uneven distribution of targets, most of the common targets in the image are small targets. In order to improve the detection accuracy, this study innovated the Neck part of the backbone network, proposed a new feature diffusion-focused pyramid network (DFPN), and added the FoFe (Focus Features) Module to the Neck part. Through the customized feature focusing module and feature diffusion mechanism, our method can introduce richer context information into the feature maps at each scale. This approach ensures that features at different scales not only preserve local details but also effectively capture global information, thus improving the accuracy and robustness of subsequent object detection and classification tasks. In this way, the model can better understand complex scenes and improve the recognition ability of objects at different scales.

The custom feature focusing module is designed to support processing three different scales of input, and an internally integrated inception-style module is used to further optimize feature extraction. The module uses a set of parallel deep convolution operations, which can effectively capture rich information across multiple scales, and then enhance the adaptability of the network to multi-scale objects. Through this structure, the network can not only extract useful information in feature maps with different resolutions, but also combine multi-level context to improve the overall performance of the model.

Under the action of the feature diffusion mechanism, the features with rich context information are further diffused into different detection scales. Through this mechanism, the network is able to transfer important context information across multiple scales, thus ensuring that useful information at other scales can be utilized at each scale. This process of information diffusion can significantly improve the performance of the model in complex scenes, especially in the face of occlusion, overlap, and other challenges, which can effectively improve the accuracy and reliability of object detection.

The FoFe module consists of a small kernel convolution to capture local information, followed by a series of parallel depthwise separable convolutions to capture contextual information across multiple scales. Formally, FoFe modules can be mathematically represented as follows.

Here, \(\:{\text{L}}_{\text{l}-1,\text{n}}\in\:{\text{R}}^{\frac{1}{2}{\text{C}}_{\text{l}}\times\:{\text{H}}_{\text{l}}\times\:{\text{W}}_{\text{l}}}\) is the local feature extracted by ks×ks convolution, while \(\:{\text{Z}}_{\text{l}-1,\text{n}}^{\left(\text{m}\right)}\in\:{\text{R}}^{\frac{1}{2}{\text{C}}_{\text{l}}\times\:{\text{H}}_{\text{l}}\times\:{\text{W}}_{\text{l}}}\) is the contextual feature extracted by the mth k(m)×k(m) depthwise separable convolution (DWConv).

We set ks = 3 and k(m) = (m + 1)×2 + 1. For n = 0, we have \(\:{\text{X}}_{\text{l}-1,\text{n}}^{\left(2\right)}\)=\(\:{\text{X}}_{\text{l}-1}^{\left(2\right)}\). Then, local features and context features are fused by 1 × 1 convolution to characterize the relationship between different channels:

Where \(\:{\text{P}}_{\text{l}-1,\text{n}}\in\:{\text{R}}^{\frac{1}{2}{\text{C}}_{\text{l}}\times\:{\text{H}}_{\text{l}}\times\:{\text{W}}_{\text{l}}}\)denotes the output feature. The 1 × 1 convolution is used as a channel fusion mechanism that aims to integrate features with different receptive field sizes. In this way, our FoFe module is able to effectively capture a wide range of contextual information while keeping the details of local texture features unaffected. The specific structure of FoFe (Focus Features Module) module is shown in Fig. 4.

Lightweight shared convolutional Detection head.

The Lightweight Shared Convolution Detection Head (LSCD) effectively improves the detection ability and computational efficiency of the model by using the hierarchical shared convolution structure and multi-scale feature fusion method. Specifically, the LSCD module receives feature maps from different scales (e.g., P3, P4, P5), and makes full use of different granularity information by fusing these multi-scale features, so as to obtain richer context information. The advantage of multi-scale feature fusion is that the network can process and synthesize feature maps from different resolutions at the same time, which represent the information of objects at different scales, helping the model to better capture the details and global information of the target, thereby improving the detection accuracy, especially when dealing with targets with multiple scales. In addition, the LSCD module adopts the design of shared convolutional layers, and this strategy not only reduces the number of parameters but also significantly improves the computational efficiency. In traditional detection models, each detection head usually requires an independent convolutional layer, which leads to a significant increase in the number of parameters and the calculation of the model. However, LSCD reduces redundant calculations by sharing the convolution operation, where multiple detection heads share the same convolution weights. This design effectively reduces the computational burden of the model, so that it can maintain low latency and real-time response ability while ensuring efficient calculation, and is suitable for application scenarios that require high-speed reasoning. The structural diagram of the LSCD is shown in Fig. 5. In the LSCD module, in order to solve the problem faced by different detection heads when dealing with inconsistent target scales, we introduce a Scale Layer. The function of this scale layer is to perform feature scaling on the output of the regression branch, which in turn adjusts the scale of the object in order to localize objects of different sizes more accurately. Due to the scale difference of objects, traditional detection methods may have differences in dealing with small and large objects, while the scale layer ensures that the model can better deal with multi-scale objects by adaptively adjusting the feature map. A similar mechanism can also be found in the FPN (Feature Pyramid Networks) architecture, where multi-scale feature fusion is used to improve the detection performance of the model for objects of different sizes. FPN combines feature maps of different levels to adapt to objects of different sizes. However, the scale layer in LSCD is not only a simple feature fusion, but also provides more flexibility and controllability on this basis, which can more accurately adjust the relationship between each scale and improve the adaptability to different target sizes. The introduction of a scale layer optimizes the existing multi-scale fusion method. Compared with traditional structures such as FPN, the scale layer provides more fine-grained scale adjustment capabilities for LSCD, which enhances the applicability of shared convolution in multi-scale object detection. More importantly, this design reduces the negative impact on the quality of the feature map, because it makes the objects of different sizes be more accurately expressed and located in a unified feature space by adjusting and enhancing the features of different scales.

In the classification branch, the LSCD model predicts the probability of each target class by using 1 × 1 convolutional layers. This design allows the network to make independent predictions about the class of each pixel location while working in concert with other branches, such as the localization branch. To ensure that the model can effectively handle the task of object localization and classification separately, the weights of the convolutional layers of the classification branch and the regression branch are independent. This independence allows the network to focus on different tasks in the learning process and optimize the localization accuracy and classification accuracy, respectively, thus avoiding the mutual interference between the two.

In the output stage, the model combines the results of the classification branch and the regression branch to obtain the actual keypoint coordinates by decoding the predicted keypoint features. This process ensures the improvement of positioning accuracy and makes the final detection results more accurate, especially when dealing with fine-grained targets. It can ensure the accurate positioning of key points. This method effectively improves the overall accuracy of object detection, especially in complex scenes. In addition, the LSCD model adopts the weight design of shared convolutional layers, which significantly reduces the number of parameters and computational complexity of the model. By sharing weights, the model avoids redundant convolution calculations, thereby improving computational efficiency and reducing memory usage.

Experiment and analysis

Datasets and Data processing.

The dataset used in this study is the world’s first large-scale time-series vehicle-road collaborative autonomous driving dataset, DAIR-V2X-I, released by the Institute of Artificial Intelligence (AIR) of Tsinghua University in February 2022. The dataset covers a variety of scenes in Beijing’s advanced autonomous driving demonstration zone, including urban roads, highways, and 28 intersections, involving different weather and lighting conditions, such as sunny, rainy, foggy, day, and night scenes. The DAIR-V2X-I dataset consists of image data with a total of ten categories: cars, trucks, vans, buses, pedestrians, cyclists, tricycles, motorcycles, carts, and traffic barrel cones. See Fig. 6 for an example dataset. The final dataset consists of 7,058 images, which are randomly divided into a training set (5,081 images, approximately 70%), a validation set (565 images, approximately 10%), and a test set (1,412 images, approximately 20%) with a ratio of 7:1:2.

Experimental environment and evaluation metrics.

The experimental environment is the operating system is Windows11, the processor is Intel i5-12600KF, the memory size is 32GB, and the graphics card is NVIDIA GeForce RTX 3080Ti 12GB graphics card for training. The development tools are VsCode, Python version 3.10.14, Pytorch version 2.2.2, and CUDA version 12.1. The image size during training is 640*640, the initial learning rate is 0.01, the batch is 16, and the epochs are 150.The experimental environment is shown in Table 1, and the training parameters are presented in Table 2.

Since in the scenario of road target detection, it is often necessary to have a more lightweight model and a faster detection speed, we used the lightweight version of YOLO11n for all the experimental parts in this paper.

The experiment will evaluate the performance of different models from the following aspects: Precision, Recall, mean Average Precision (mAP), detection speed (FPS), Params, GFLOPs. It is calculated as follows:

Where TP (True Positives) represents the number of instances that were correctly predicted as the target, FP (False Positives) represents the number of instances that were incorrectly predicted as the target, and FN (False Negatives) represents the number of target instances that were not correctly predicted. mAP is the most commonly used evaluation metric in object detection tasks. It is the Average AP (Average Precision) over all classes. AP itself is to calculate the average of the precision of a certain category under different recall rates, which is usually calculated under different IoU (Intersection over Union) thresholds, and the IoU thresholds of 0.5 and 0.95 are used for calculation in this experiment.

Experiment and result analysis

Comparison of ablation experiments

In order to verify the effectiveness of the C3CTA module, DFPN network, and LSCD detection head, it is added one by one to the benchmark model YOLO11, and the parameters, mAP@0.5 and FPS, are considered. The results of the comparative experiment are shown in Table 3. The ablation experiments clearly demonstrated the positive impact of adding modules on the model’s performance. Compared to the baseline (Group 1, 83.3% mAP@0.5), adding any single module from DFPN, C3CTA, or LSCD (Groups 2–4) could improve the accuracy (reaching 84.2%, 83.9%, and 84.1% respectively). The combination of adding modules led to a more significant performance leap: any two-module combination (Groups 5–7) increased mAP@0.5 to above 84.8%, with the highest reaching 85.2% (DFPN + C3CTA). Most importantly, integrating all three modules simultaneously (Group 8) achieved the best performance (85.3% mAP@0.5), with an improvement of 2.0% points compared to the baseline. Notably, this highest performance was achieved with a parameter size (2.51 M) that was lower than the baseline (2.61 M) and most other combinations (such as DFPN + C3CTA at 2.77 M), highlighting the synergistic optimization of model efficiency while significantly improving detection accuracy through module combinations.

Comparison of attention experiments

In order to verify the effect of adding the attention mechanism to the C3k2 module, we introduce a variety of attention mechanisms into the YOLO11 basic model, and conduct performance comparison experiments through the mAP@0.5 index. We also conducted a comprehensive comparison using both CTA attention and our improved C3CTA.The results show that the overall performance of the model using the CTA attention mechanism is significantly improved in the detection task, and its mAP@0.5 value is significantly higher than that of other attention mechanisms. Therefore, YOLO11-C3CTA performs the best in all experiments. The detailed experimental results can be seen in Table 4.

Lightweight detection head comparative experiment

To further illustrate the advantages of our LSCD in the road detection scenario, we conducted a comparative experiment with other outstanding detection heads.The specific numerical values of the experiment are shown in Table 5.The experimental results show that among all the comparison models, YOLO11-LSCD achieved the best performance in all the performance indicators. Its mAP@0.5 reached 84.1%, higher than all the other variants. At the same time, it also had the highest precision rate (P: 83.2%) and recall rate (R: 77.8%), demonstrating stronger detection accuracy and robustness. Moreover, in the stricter mAP@0.5–0.95 indicators, YOLO11-LSCD also led with a score of 60.4%, further verifying its excellent comprehensive detection ability in multi-scale and complex scenarios.

Comparison of excellent model experiments

In order to prove the advantages of the proposed algorithm, the proposed algorithm is trained and tested with other excellent object detection algorithms. The specific experimental comparison values are shown in Table 6.Based on the provided experimental data, our model demonstrates significant advantages in multiple core performance indicators, and its overall performance surpasses that of the existing mainstream models. Precision (85.7%) and Recall (79.4%) both ranked first, outperforming Mamba-YOLO-B (85.6%/79.1%) and RT-DETR-r18 (85.5%/78.9%). Both mAP@0.5 (85.3%) and mAP@0.5–95 (61.3%) reached the highest values, verifying the model’s superiority in terms of localization accuracy and multi-scale generalization ability. FPS (167.5) far exceeds the computationally-intensive models Mamba-YOLO-B (118) and RT-DETR-r18 (101), approaching the real-time performance level of lightweight YOLOv5n/v8n (173–177 FPS). The parameter count (2.51 M) is significantly lower than Mamba-YOLO-B (21.79 M) and RT-DETR + 18 (19.88 M), even lower than YOLOv8n (2.71 M), indicating that the model structure is highly streamlined. The computational power (7.6 GFLOPs) is less than that of Mamba-YOLO-B (49.6) and RT-DETR-r18 (57.0), being only 1/6 of them, which meets the low computational power requirements of vehicle-mounted devices. Compared with lightweight models (such as YOLO12n/YOLO13n), our model achieves an improvement of approximately 3% in accuracy metrics (such as mAP@0.5 increasing by 2.1–2.3%), while maintaining a similar inference speed. Compared with models of the same precision level (such as Mamba-YOLO-B), the inference speed of Ours has increased by 42%, and the parameter quantity has been reduced by 11.5%, achieving a breakthrough of “high precision + low complexity”. The Ours model has achieved triple breakthroughs in terms of accuracy, speed, and lightweighting in the autonomous driving scenario. Its efficient feature extraction and optimized architecture design provide a better solution for real-time road target detection, and are particularly suitable for deployment on vehicle platforms with limited computing power.

Visual comparison of detection effect

In order to more intuitively verify the performance of the proposed algorithm in the actual scene, the representative scenes in the DAIR-V2X-I validation set are selected, including the presence of occlusion, dense stacking, low brightness, and complex scenes. For visual comparison with the YOLO11 baseline model, the results are shown in Fig. 7. The picture on the left is the YOLO11 model detection, and the picture on the right is the YOLO-FLC model detection, in which the missed detection is circled for easy viewing. From the perspective of the number of detected targets and the accuracy of detection boxes, the proposed algorithm significantly reduces the false detection rate and missed detection rate, especially in the recognition of small targets. This shows that the proposed algorithm has stronger robustness and practical application value when dealing with different scenes, target occlusion and other problems.

Conclusion

Aiming at the problems of low detection accuracy, missed detection, and false detection caused by overlapping targets on the roadside in autonomous driving scenes, this paper proposes an object detection model based on improved YOLO-FLC. Based on the benchmark model YOLO11, the C3CTA module is embedded in the C3k2 module, and the C3CTA module is constructed and added to the backbone network, which can make more effective use of channel information, strengthen the detection ability of target occlusion, and improve the detection accuracy. The feature diffusion focused Pyramid Network (DFPN) was introduced into the Neck network to strengthen the feature extraction ability for targets and improve the detection level for small targets. The original detection head was replaced by LSCD to reduce the number of parameters. According to the experimental results, the detection accuracy of the YOLO-FLC model proposed in this paper is improved to 85.3%, which is 2.4% higher than that of YOLO11. The model achieves unity in detection accuracy, model size, and detection speed, which can meet the needs of autonomous driving scenarios. In the future, we will further improve the effectiveness of the algorithm in special weather conditions to meet more situations in vehicle driving, so that the model can better adapt to the scene detection task of autonomous driving in complex situations.

Data availability

The data sets analyzed in the study are openly available in[DAIR-V2X-I] at https://air.tsinghua.edu.cn/DAIR-V2X/index.html.

References

Sundaresan Geetha, A. et al. Comparative analysis of YOLOv8 and YOLOv10 in vehicle detection: performance metrics and model Efficacy[J]. Vehicles 6 (3), 1364–1382 (2024).

Singh, K., Parihar, A. S. & Bff Bi-stream feature fusion for object detection in hazy environment. SIViP 18, 3097–3107. https://doi.org/10.1007/s11760-023-02973-6 (2024).

Mahaur, B.Mishra K K. Small-Object detection based on Yolov5 in autonomous driving Systems[J]. Pattern Recognit. Lett. 168, 115–122 (2023).

Bakirci, M. Enhancing vehicle detection in intelligent transportation systems via autonomous UAV platform and YOLOv8 integration[J]. Appl. Soft Comput. 164, 112015 (2024).

WANG C, Y. & BOCHKOVSKIY A, LIAO H Y M. Yolov7: trainable bag-of-freebies sets new state-of-the-art for real-time object detectors[C]. Vancouver: IEEE/CVF Conference on Computer Vision and Pattern Recognition, (2023).

Nie, H. et al. A lightweight remote sensing small target image detection algorithm based on improved yolov8[J]. Sensors 24 (9), 2952 (2024).

Bao D C, Gao R J. & Signal Yed-yolo: An Object Detection Algorithm for Automatic Driving[J]. Image Video Process., 18(10): 7211–7219. (2024).

Ma, P. et al. ISOD: improved small object detection based on extended scale feature pyramid network. Vis. Comput. 41, 465–479. https://doi.org/10.1007/s00371-024-03341-2 (2025).

CHEN J R, KAO S H, HE, H. et al. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks[C]// Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Vancouver, BC, Canada: IEEE, (2023).

HAMZENEJADI, M. H. MOHSENI H. Fine-Tuned YOLOv5 for Real-Time Vehicle Detection in UAV Imagery: Architectural Improvements and Performance Boost[J]231 (Expert Systems with Applications, 2023).

Singh, K. & Anil Singh Parihar MRN-LOD: multi-exposure refinement network for low-light object detection. J. Vis. Commun. Image Represent. 99, 104079 (2024).

Alif, M. A. R. Yolov11 for vehicle detection: Advancements, performance, and applications in intelligent transportation systems[J]. arxiv preprint arxiv:2410.22898, (2024).

Bakirci, M. & Bayraktar, I. YOLOv9-enabled vehicle detection for urban security and forensics applications[C]//2024 12th International Symposium on Digital Forensics and Security (ISDFS). IEEE, : 1–6. (2024).

Zhou, S. et al. Deep learning-based vehicle detection and tracking from roadside lidar data through robust affinity fusion[J]. Expert Syst. Appl. 279, 127338 (2025).

Kang, L. et al. YOLO-FA: Type-1 fuzzy attention based YOLO detector for vehicle detection[J]. Expert Syst. Appl. 237, 121209 (2024).

Dai, T. et al. FreqFormer: Frequency-aware Transformer for Lightweight Image Super-resolution. IJCAI. ijcai. org (2024).

Han, K. et al. Ghostnet: More features from cheap operations. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. (2020).

Chen, J. et al. Run, don’t walk: chasing higher FLOPS for faster neural networks. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. (2023).

Singh, K. & Anil Singh, P. Image Decomposition for Object Detection in Low-light Environment. 2023 International Conference on Advances in Computation, Communication and Information Technology (ICAICCIT). IEEE, (2023).

Hanzla, M., Yusuf, M. O. & Jalal, A. Vehicle surveillance using U-NET segmentation and DeepSORT over aerial images[C]//2024 International Conference on Engineering & Computing Technologies (ICECT). IEEE, : 1–6. (2024).

H. Sun, G. Yao, S. Zhu, L. Zhang, H. Xu and J. Kong, "SOD-YOLOv10: Small Object Detection in Remote Sensing Images Based on YOLOv10," in IEEE Geoscience and Remote Sensing Letters, vol. 22, pp. 1-5, 2025.

Zhao, X. et al. ITD-YOLOv8: an infrared target detection model based on YOLOv8 for unmanned aerial vehicles[J]. Drones 8 (4), 161 (2024).

Yang, M. & Fan, X. YOLOv8-lite: A lightweight object detection model for real-time autonomous driving systems[J]. IECE Trans. Emerg. Top. Artif. Intell. 1 (1), 1–16 (2024).

Liu, Q. et al. YOLOv8-CB: dense pedestrian detection algorithm based on in-vehicle camera[J]. Electronics 13 (1), 236 (2024).

Sun, S. et al. Multi-YOLOv8: an infrared moving small object detection model based on YOLOv8 for air vehicle[J]. Neurocomputing 588, 127685 (2024).

Liu, Y., Shao, Z. & Hoffmann, N. Global attention mechanism: retain information to enhance channel-spatial interactions[J]. (2021). arxiv preprint arxiv:2112.05561.

Woo, S. et al. Cbam: Convolutional block attention module[C]//Proceedings of the European conference on computer vision (ECCV). : 3–19. (2018).

Ouyang, D. et al. Efficient multi-scale attention module with cross-spatial learning[C]//ICASSP 2023–2023 IEEE international conference on acoustics, speech and signal processing (ICASSP). IEEE, : 1–5. (2023).

Liu, X. et al. Efficientvit: Memory efficient vision transformer with cascaded group attention[C]//Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. : 14420–14430. (2023).

Tan, M., Pang, R., Le, Q. V. & Efficientdet Scalable and efficient object detection[C]//Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. : 10781–10790. (2020).

Liu, S. et al. Path aggregation network for instance segmentation[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. : 8759–8768. (2018).

Dai, X. et al. Dynamic head: Unifying object detection heads with attentions[C]//Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. : 7373–7382. (2021).

Yang, G. et al. AFPN: Asymptotic feature pyramid network for object detection[C]//2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC). IEEE, : 2184–2189. (2023).

Wang, Z. et al. Mamba YOLO: SSMs-based YOLO for Object detection[J]240605835 (arxiv, 2024). arxiv e-prints.

Ge, Z. et al. Yolox: Exceeding yolo series in 2021. arxiv preprint arxiv:2107.08430 (2021).

Zheng, Z., Zhao, J., Fan, J. et al. A complex roadside object detection model based on multi-scale feature pyramid network. Sci Rep 15, 15992 (2025). https://doi.org/10.1038/s41598-025-99544-1.

Huang, S. et al. Deim: Detr with improved matching for fast convergence. Proceedings of the Computer Vision and Pattern Recognition Conference. (2025).

Yao, X. et al. FBRT-YOLO: Faster and Better for Real-Time Aerial Image Detection. Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 39. No. 8. 2025.

Funding

This research was supported by Intelligent Manufacturing Engineering Laboratory - Shandong Province Higher Education Characteristic Laboratory(PT2025KJS002).

Author information

Authors and Affiliations

Contributions

Conceptualization, D.W. and F.L.; methodology, H.L.; validation, S.L. and H.L.; writing—original draft preparation, E.L.; writing—review and editing, E.L.and D.W.All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liang, E., Wei, D., Li, F. et al. Object detection model of vehicle-road cooperative autonomous driving based on improved YOLO11 algorithm. Sci Rep 15, 32348 (2025). https://doi.org/10.1038/s41598-025-18263-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-18263-9