Abstract

The efficient and cost-effective purification of natural gas, particularly through adsorption-based processes, is critical for energy and environmental applications. This study investigates the nitrogen (N2) adsorption capacity across various Metal-Organic Frameworks (MOFs) using a comprehensive dataset comprising 3246 experimental measurements. To model and predict N2 uptake behavior, four advanced machine learning algorithms—Categorical Boosting (CatBoost), Extreme Gradient Boosting (XGBoost), Deep Neural Network (DNN), and Gaussian Process Regression with Rational Quadratic Kernel (GPR-RQ)—were developed and evaluated. These models incorporate key physicochemical parameters, including temperature, pressure, pore volume, and surface area. Among the developed models, XGBoost demonstrated superior predictive accuracy, achieving the lowest root mean square error (RMSE = 0.6085), the highest coefficient of determination (R2 = 0.9984), and the smallest standard deviation (SD = 0.60). Model performance was rigorously validated using statistical metrics and graphical analysis. Trend consistency with experimental data confirmed that XGBoost accurately captures the effect of pressure on N₂ uptake. Additionally, SHAP (Shapley Additive Explanations) analysis identified temperature as the most influential factor in adsorption prediction. Finally, an outlier assessment using the Leverage method indicated that approximately 94% of the data points were statistically valid and within the model’s applicability domain.


Introduction

Rising concerns about global warming, driven by greenhouse gas emissions, have increased the focus on clean energy research. Natural gas is an affordable and relatively clean fossil fuel, and methane (CH4), its primary component, is viewed as a significant alternative to petroleum1. However, separating CH4 from nitrogen (N2) is the most challenging and crucial step in enriching low-concentration coal bed methane (CBM), because the two molecules have similar kinetic diameters (0.381 nm for CH4 and 0.364 nm for N2) and both have low critical temperatures. Moreover, many natural gas reserves contain high levels of N2 and must be upgraded to meet pipeline specifications, which require an N2 concentration below 4%2. Natural gas upgrading is closely related to olefin/paraffin separation, one of the most pressing industrial separation challenges, with significant economic implications3. Methane/nitrogen separation is also important in enhanced oil recovery, where N2 is injected into the reservoir and later produced together with other petroleum gases. In addition, it is essential for upgrading landfill gas (LFG), coal bed gas, and natural gas to an economically viable methane content.

There is therefore a need for improved gas separation and purification technologies. Nitrogen recovery is currently a crucial challenge in energy, environmental, and medical applications, including oil extraction, air separation, and the production of hydrogen from industrial gases generated in the steel manufacturing sector4.

Various technologies have been investigated for the separation and purification of coal bed methane. Nitrogen can be removed from methane by cryogenic distillation, but this process is expensive and energy intensive. Adsorption processes and membrane technologies offer a viable alternative for selectively capturing N2 in a cost-effective and energy-efficient manner2,5.

Adsorbents are crucial for the effective separation of CH4 and N2 by pressure swing adsorption (PSA)6. Separation arises either from differences in molecular weight, shape, polarity, and dipole and quadrupole moments, which cause some molecules to bind more strongly to the adsorbent surface, or from pores that are too small to admit the larger molecules. Porous materials that operate by physisorption may therefore be suitable for separating and purifying these gases. The adsorbents most commonly used for CH4/N2 separation are activated carbon, carbon molecular sieves, and zeolite molecular sieves. In addition, the titanium silicate ETS-4 (ref. 7) is one of the few porous materials that can efficiently separate N2 from CH4 through a kinetic mechanism, exploiting the slight size difference between the two molecules (3.8 Å for CH4 versus 3.64 Å for N2)2. Designing a porous material that combines high equilibrium selectivity for N2 over CH4, substantial N2 adsorption capacity, and easy regeneration remains a significant challenge for separation technologies. According to studies on the adsorption of CH4–N2 binary mixtures8,9, the ideal adsorbent must exhibit both high structural selectivity and excellent thermal stability.

Among porous materials, metal-organic frameworks (MOFs) have attracted substantial attention because of their design flexibility and high porosity9,10,11. MOFs are a relatively recent class of multifunctional crystalline materials formed by the self-assembly of metal ions or clusters with organic ligands. Designing porous MOFs with unsaturated transition metal sites that bind N2 strongly is a promising route to N2-selective capture at equilibrium, because both the reactivity and the concentration of these sites can be readily controlled. Owing to their modular design, diverse topologies, and tunable pore properties, MOFs have been studied extensively for gas storage12, separation13, catalysis14, photochemistry15, chemical sensing16, and other applications.

Furthermore, new MOFs with high methane capacity and high CH4/N2 adsorption selectivity have been developed in recent years. For instance, Zhou et al.17 developed MAMS-1, a molecular sieve with a flexible framework that exhibits a "molecular gate" effect: the gate opening adjusts in response to temperature. At 113 K, this sieve displayed a kinetic N2/CH4 selectivity greater than 3. In addition, Sumer et al.18 used molecular simulations to study the adsorption and diffusion of CH4/N2 mixtures in 102 MOFs, assessing their performance for both adsorption-based and membrane-based separations. Among these, three MOFs (BERGAI01, PEQHOK, and GUSLUC) showed the highest adsorption selectivity.

However, laboratory experiments tend to be costly, labor-intensive, and time-consuming.

It is therefore desirable to develop comprehensive and robust predictive models. Soft computing techniques can provide reliable solutions compared with traditional methods19. Machine learning (ML) methods are highly effective for modeling the mathematical relationships between input variables and targets in highly complex datasets20,21,22,23,24,25,26, and many advanced models can provide useful solutions to a wide range of problems without additional experimental investigations. Several studies have applied artificial intelligence (AI) techniques to model gas adsorption and uptake. For instance, Khosrowshahi examined the role of porous carbons derived from natural products in CO2 capture as a strategy for climate change mitigation27. Rahimi employed support vector machines and genetic algorithms to predict and optimize hydrogen and CO2 concentrations during biomass steam gasification with a calcium oxide adsorbent28. Similarly, Wang et al. used GRNN and XGBoost models to accurately predict hydrogen adsorption in coal, highlighting the potential of machine learning to improve hydrogen storage methods29. ML models, particularly XGBoost, have also been used to forecast hydrogen wettability in geological storage reservoirs with high accuracy (R2 = 0.941) and strong agreement with measured data30. Research on gas storage in MOFs has likewise surged recently, reflecting the growing interest in advanced materials for energy applications.

In the context of CO2 adsorption in MOFs, Dashti et al.31 introduced several ML models, identifying the radial basis function (RBF) as the most effective approach. Their study utilized a dataset comprising 506 data points collected from the literature, covering information on 13 different MOFs. Subsequently, Li et al.32 employed a dataset with 348 data points, achieving a correlation coefficient of 0.9 using their Random Forest (RF) model for CO₂ adsorption prediction. In addition, Larestani et al.33 applied white-box ML algorithms, namely group method of data handling (GMDH), gene expression programming (GEP), and genetic programming (GP), to develop accurate models for assessing the CO2 adsorption capacity of MOFs. Their models achieved a root mean square error (RMSE) of 2.77 and a coefficient of determination (R2) of 0.8496. Furthermore, Naghizadeh et al.34 employed various ML techniques, such as convolutional neural network (CNN), deep neural network (DNN), and Gaussian process regression with Rational Quadratic Kernel (GPR-RQ), to model the hydrogen storage capacity in MOFs. Notably, their results highlighted the outstanding performance of the GPR-RQ model, yielding an impressive R2 value of 0.99. Table 1 summarizes recent studies in gas adsorption modeling using numerous machine learning techniques. This table outlines the key contributions, input parameters, R2 values, applied machine learning models, targeted gases, and material types used in each study.

To the best of our knowledge, no previous study has applied advanced ML models to predict the N2 uptake of MOFs. The primary contribution and novelty of this study therefore lie in using such models to predict N2 uptake under varying operating conditions. Another notable aspect is the compilation of a comprehensive dataset of 3246 data points describing N2 adsorption in 65 MOFs under diverse conditions. This approach is a significant step toward integrating machine learning with MOF research, opening new avenues for optimizing MOF properties for N2 storage applications. The dataset includes pore volume, surface area, pressure, and temperature as input variables. Categorical Boosting (CatBoost), Extreme Gradient Boosting (XGBoost), DNN, and GPR-RQ models are then developed to predict N2 uptake by the MOFs, and their performance is assessed with a range of statistical and graphical evaluations. Additional trend analyses are carried out to validate the most accurate model. Because evaluating feature importance is essential for understanding how input variables affect the predicted target in ML applications, the SHAP (Shapley Additive Explanations) method is applied to investigate the relationships between the features and their importance. Finally, the Leverage method is used to assess the reliability and applicability of the most accurate model. An overview of the workflow is presented in Fig. 1.

Dataset collection

As mentioned previously, this study aims to predict N2 storage in MOFs using several ML algorithms. For this purpose, a comprehensive dataset of 3246 experimental data points, with pressures up to 1054.7 bar and temperatures up to 473 K, was compiled from previous studies46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68. Before the final dataset was produced, corrective pre-processing steps, including data cleaning and integration, were carried out to support the development of accurate predictive models. The dataset was then divided into a training set of 2596 data points and a testing set of 650 data points, used for all algorithms. All models were evaluated on the same independent test set, which was kept separate from the training and validation data. The reported performance metrics (R2, RMSE, MAE) are based exclusively on this test set to ensure an unbiased comparison.
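The 2596/650 partition is consistent with an 80/20 random split of the 3246 points. A minimal sketch of such a split follows; the shuffling, the seed, and the round-up of the test size are assumptions, since the text does not state how the split was drawn.

```python
import math
import random


def train_test_split_indices(n_samples, test_fraction=0.2, seed=42):
    """Return disjoint (train, test) index lists from one random shuffle.

    Assumption: the test size is rounded up and the seed is arbitrary;
    neither detail is reported for this study.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)            # reproducible shuffle
    n_test = math.ceil(test_fraction * n_samples)   # 0.2 * 3246 = 649.2 -> 650
    return indices[n_test:], indices[:n_test]


train_idx, test_idx = train_test_split_indices(3246)
print(len(train_idx), len(test_idx))  # 2596 650
```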

The models use the pore volume and surface area of the MOFs, together with temperature and pressure, as input parameters. Detailed descriptions of the dataset are provided in Tables 2 and 3, and Fig. 2 shows box plots of the input and output variables.

Modeling techniques

Categorical boosting (CatBoost)

The CatBoost algorithm, a recent development in gradient boosting decision trees (GBDT), places particular emphasis on handling categorical features. It is optimized for structured (tabular) data and performs especially well with categorical features69. In addition, it uses oblivious decision trees, which grow level-wise: every node at a given depth applies the same split condition. This allows the tree to be represented as a vector and evaluated quickly. Several processing techniques are commonly employed in CatBoost, two key ones being target-based statistics and one_hot_max_size (OHMS). As the tree grows, the candidate splits of each feature are searched at every level to identify the one that yields the largest improvement69. Understanding the CatBoost implementation also relies on distinguishing between the training and testing datasets70. An essential benefit of CatBoost is its use of random permutations of the training data when estimating leaf values for a given tree structure, which effectively mitigates overfitting. When processing categorical features, CatBoost uses the entire training dataset: for each sample, the categorical feature is converted to a numerical value computed from target values, and the sample's weight and priority are then taken into account69,71. The model's predicted output is derived using Eq. (1)69:

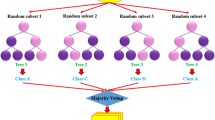

where Rn denotes the disjoint region associated with the tree leaves, xi is the explanatory variable, and H denotes the decision tree function. As noted above, CatBoost mitigates overfitting through ordered boosting, regularization, and early stopping, which ensures efficient handling of categorical features and improves model performance. A flowchart of the algorithm is shown in Fig. 3.
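Two of the ideas above can be sketched in plain Python: ordered target statistics (encoding a categorical value using only the targets of samples that precede it in a random permutation) and the evaluation of an oblivious tree, where one shared split per level makes the leaf index a binary number that selects the region Rn containing x. This is a simplified illustration, not CatBoost's implementation; the prior, smoothing weight, splits, and leaf values are hypothetical. Since the four inputs in this study are all numerical, the categorical encoding is shown only to illustrate the mechanism.

```python
import random


def ordered_target_statistics(categories, targets, prior, a=1.0, permutation=None, seed=0):
    """Encode one categorical feature with ordered target statistics.

    Each sample is encoded from the targets of samples that come *before* it
    in the permutation, so its own label never leaks into its encoding.
    """
    order = list(permutation) if permutation is not None else list(range(len(categories)))
    if permutation is None:
        random.Random(seed).shuffle(order)
    sums, counts = {}, {}
    encoded = [0.0] * len(categories)
    for i in order:
        c = categories[i]
        s, n = sums.get(c, 0.0), counts.get(c, 0)
        encoded[i] = (s + a * prior) / (n + a)  # smoothed mean of earlier targets
        sums[c] = s + targets[i]
        counts[c] = n + 1
    return encoded


def oblivious_tree_predict(x, splits, leaf_values):
    """Evaluate one oblivious (symmetric) tree.

    All nodes at depth d share one (feature, threshold) test, so the d test
    outcomes form a binary number that indexes the leaf region containing x.
    """
    leaf = 0
    for feature, threshold in splits:
        leaf = (leaf << 1) | int(x[feature] > threshold)
    return leaf_values[leaf]


# Hypothetical depth-2 tree splitting on temperature (K), then pressure (bar).
splits = [(0, 300.0), (1, 10.0)]
leaf_values = [1.2, 3.4, 0.5, 1.8]
print(oblivious_tree_predict([250.0, 50.0], splits, leaf_values))  # 3.4
```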

Extreme gradient boosting (XGBoost)

XGBoost is an open-source framework that provides an efficient, flexible, and portable implementation of gradient-boosted decision trees. It is widely used for its performance, scalability, and ability to handle diverse data types and tasks, including regression, classification, and ranking. Like other tree-based ensemble methods, XGBoost fits a group of classification and regression trees (CARTs) to the training data by minimizing a regularized objective function. A CART consists of (I) a root node, (II) internal nodes, and (III) leaf nodes, as shown in Fig. 4. Using binary splitting, the root node, which contains the entire dataset, is split into internal nodes, and the leaf nodes represent the final outcomes72.
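The regularized objective leads to closed-form leaf weights, w* = −G/(H + λ), and split gains, ½[G_L²/(H_L + λ) + G_R²/(H_R + λ) − (G_L + G_R)²/(H_L + H_R + λ)] − γ, where G and H are sums of first and second derivatives of the loss. The sketch below assumes a squared-error loss (gradient ŷ − y, Hessian 1) and performs an exact greedy threshold search on one feature; it illustrates the formula and is not the library's code. The data are hypothetical.

```python
def leaf_weight(G, H, lam):
    """Optimal leaf weight w* = -G / (H + lambda)."""
    return -G / (H + lam)


def split_gain(GL, HL, GR, HR, lam, gamma):
    """Reduction of the regularized objective obtained by splitting a node."""
    def score(G, H):
        return G * G / (H + lam)
    return 0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma


def best_split(x, y, y_pred, lam=1.0, gamma=0.0):
    """Exact greedy search for the best threshold on one feature (squared error)."""
    g = [p - t for p, t in zip(y_pred, y)]  # first derivatives of 0.5 * (y - y_hat)^2
    h = [1.0] * len(y)                      # second derivatives are constant
    order = sorted(range(len(x)), key=lambda i: x[i])
    G, H = sum(g), sum(h)
    GL = HL = 0.0
    best_gain, best_threshold = float("-inf"), None
    for k in range(len(order) - 1):
        i, j = order[k], order[k + 1]
        GL += g[i]
        HL += h[i]
        if x[i] == x[j]:
            continue  # no threshold separates equal feature values
        gain = split_gain(GL, HL, G - GL, H - HL, lam, gamma)
        if gain > best_gain:
            best_gain, best_threshold = gain, (x[i] + x[j]) / 2
    return best_gain, best_threshold


# Hypothetical example: every sample is currently predicted as 5.0.
gain, threshold = best_split([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 10.0, 10.0], [5.0] * 4)
print(threshold, round(gain, 3))  # 2.5 33.333
```

The left child of this split would receive the weight leaf_weight(10.0, 2.0, 1.0) ≈ −3.33, pulling its predictions from 5.0 toward 0 while λ shrinks the step.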

Deep neural network (DNN)

DNNs are a crucial subset of artificial intelligence techniques that use a multi-layer architecture to learn and represent complex features. Their interconnected neurons loosely mirror biological neurons in the brain73. Multiple hidden layers allow features from earlier layers to be combined, so that networks can handle complex data with fewer neurons74. Over the past decade, DNN frameworks have enabled significant achievements across many fields. Unlike traditional (shallow) neural networks, DNNs stack several non-linear hidden layers that can learn intricate non-linear relationships between input data and target variables. Figure 5 illustrates the structure of a DNN. The input is processed through successive layers to generate the output, with the output of each layer serving as the input to the next. In each neuron, the inputs are weighted, a bias is added, and an activation function is applied to compute the neuron's output for the current layer. These activation functions allow neurons to model non-linear relationships, enabling the network to capture intricate interactions between variables.
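The layer-by-layer computation described above can be sketched in plain Python. The architecture (4 inputs, one hidden layer of 3 ReLU neurons, 1 linear output), the weights, and the scaled input are hypothetical values chosen so the arithmetic is easy to follow; they are not the trained network from Table 4. Using no activation on the output layer is an assumption, though a common choice for regression.

```python
def relu(z):
    """Rectified linear activation."""
    return max(0.0, z)


def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: out_j = activation(sum_i W[j][i] * x_i + b_j)."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs


def forward(x, layers):
    """Feed x through (weights, biases, activation) layers; each output feeds the next."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


# Hypothetical network: 4 scaled inputs -> 3 ReLU neurons -> 1 linear output.
layers = [
    ([[1, 0, 0, 0], [0, 1, -1, 0], [-1, 0, 0, 0]], [0.0, 0.0, 0.0], relu),
    ([[2, -1, 3]], [0.5], None),
]
x = [0.5, 0.2, 0.1, 0.3]  # e.g. scaled temperature, pressure, pore volume, surface area
output = forward(x, layers)
print(round(output[0], 6))  # 1.4
```

The third hidden neuron receives a negative pre-activation (−0.5), which ReLU sets to zero; this is how the activation introduces non-linearity.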

Gaussian process regression (GPR)

GP models have become a popular choice for non-linear classification and regression problems75. A Gaussian process is a collection of random variables, any finite subset of which has a joint Gaussian distribution; it is the natural generalization of the multivariate Gaussian distribution76. In the last ten years, neural networks have advanced rapidly in addressing complex problems in petroleum geology and reservoir engineering. However, their flexibility makes them prone to overfitting, which can be mitigated by weight regularization, although tuning the regularization parameters remains challenging. The Bayesian network (BN), also known as a Bayes net, is an increasingly popular probabilistic framework that has attracted significant attention for addressing this complexity77. GPR is a powerful kernel-based framework that learns hidden relationships among many variables in the training dataset, making it particularly effective for complex, non-linear prediction problems78. In every GPR framework, the GP uses a multivariate Gaussian function to model the data, as shown in Eq. (2)79:

\[ p(x)=\frac{1}{(2\pi)^{k/2}\,\lvert\Sigma\rvert^{1/2}}\exp\!\left(-\frac{1}{2}(x-\mu)^{T}\Sigma^{-1}(x-\mu)\right) \]

The vector \(x=({x_1},{x_2},...,{x_k})\) represents the input variables, \(\Sigma\) is the covariance matrix, and \(\mu\) is the mean of the dataset. Given randomly selected training and testing data, the GPR process yields an output value as described below79:

\[ y=f(x)\sim\mathcal{GP}\left(m(x),\,k(x,x^{\prime})\right),\qquad m(x)=\mathbb{E}[f(x)],\qquad k(x,x^{\prime})=\mathbb{E}\big[(f(x)-m(x))(f(x^{\prime})-m(x^{\prime}))\big] \]

In these expressions, y is the desired output, \(k(x,x^{\prime})\) is the covariance (kernel) function, and m(x) is the mean function. GPR is easy to implement, adapts automatically to improve prediction, and is flexible because it permits non-parametric inference. An essential characteristic of GPR is its ability to mitigate overfitting80. In addition, GPR provides a full predictive distribution for the test data, not only point estimates81.
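To make the rational quadratic (RQ) kernel behind GPR-RQ concrete, the sketch below computes the GP posterior mean and variance with NumPy, assuming a zero mean function m(x) = 0, fixed kernel hyperparameters, and a small noise term for numerical stability. In practice the hyperparameters would be fitted by maximizing the marginal likelihood (for example with L-BFGS-B); scikit-learn's `GaussianProcessRegressor` with the `RationalQuadratic` kernel is one library implementation of this model. The toy data are hypothetical.

```python
import numpy as np


def rational_quadratic(XA, XB, length_scale=1.0, alpha=1.0, variance=1.0):
    """RQ kernel: variance * (1 + d^2 / (2 * alpha * length_scale^2)) ** (-alpha)."""
    sq_dist = np.sum((XA[:, None, :] - XB[None, :, :]) ** 2, axis=-1)
    return variance * (1.0 + sq_dist / (2.0 * alpha * length_scale**2)) ** (-alpha)


def gp_posterior(X_train, y_train, X_test, noise=1e-6, **kernel_params):
    """Posterior mean and variance of a zero-mean GP at the test inputs."""
    K = rational_quadratic(X_train, X_train, **kernel_params)
    K = K + noise * np.eye(len(X_train))                          # observation noise / jitter
    K_s = rational_quadratic(X_train, X_test, **kernel_params)
    K_ss = rational_quadratic(X_test, X_test, **kernel_params)
    L = np.linalg.cholesky(K)                                     # K = L L^T
    weights = np.linalg.solve(L.T, np.linalg.solve(L, y_train))   # K^{-1} y
    mean = K_s.T @ weights
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss) - np.sum(v**2, axis=0)
    return mean, var


# Hypothetical 1-D example: predict at a training point, between points, and far away.
X = np.array([[0.0], [1.0], [2.0]])
y = np.array([0.0, 1.0, 4.0])
mean, var = gp_posterior(X, y, np.array([[0.0], [1.5], [100.0]]), length_scale=1.0, alpha=1.0)
print(mean.round(3), var.round(3))
```

The variance is near zero at a training input and returns to the prior variance far from the data, which is the "predictive distribution" property mentioned in the text.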

Results and discussion

Intelligent schemes

As described above, the models were built with four input parameters to predict N2 uptake in MOFs as the target output. They were developed using a dataset of 3246 experimental observations: 2596 data points were used for training and the remaining 650 for testing. The errors of the developed models were then examined using statistical and graphical analyses. Determining suitable hyperparameter values is a key part of model development, so all models underwent systematic hyperparameter tuning to ensure strong performance and reduce the risk of overfitting. Each method used a grid search combined with five-fold cross-validation, minimizing the mean squared error (MSE) on the validation folds. Early stopping was incorporated into the DNN to halt training once performance on the validation set plateaued. The XGBoost and CatBoost models were regularized using early stopping rounds together with subsampling and depth constraints. For GPR-RQ, the kernel parameters were optimized with the fmin_l_bfgs_b algorithm. The optimal hyperparameters are listed in Table 4.
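Grid search with five-fold cross-validation can be sketched as follows. To keep the example self-contained, a simple k-nearest-neighbour regressor on one hypothetical input stands in for the models in this study, and its neighbour count plays the role of the tuned hyperparameter; the grid, data, and seed are illustrative assumptions. The same folds are reused for every grid value so that the MSE comparison is fair.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Shuffle the indices once and deal them into k disjoint validation folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]


def knn_predict(x_train, y_train, x_query, n_neighbors):
    """Stand-in model: average target of the n_neighbors closest training points."""
    nearest = sorted(range(len(x_train)), key=lambda i: abs(x_train[i] - x_query))
    return sum(y_train[i] for i in nearest[:n_neighbors]) / n_neighbors


def cv_mse(x, y, n_neighbors, k=5):
    """Mean squared error over all validation folds of k-fold cross-validation."""
    squared_errors = []
    for val_idx in k_fold_indices(len(x), k):
        held_out = set(val_idx)
        train = [i for i in range(len(x)) if i not in held_out]
        x_tr = [x[i] for i in train]
        y_tr = [y[i] for i in train]
        for i in val_idx:
            pred = knn_predict(x_tr, y_tr, x[i], n_neighbors)
            squared_errors.append((pred - y[i]) ** 2)
    return sum(squared_errors) / len(squared_errors)


def grid_search(x, y, grid, k=5):
    """Return the grid value with the lowest cross-validated MSE, plus all scores."""
    scores = {value: cv_mse(x, y, value, k) for value in grid}
    return min(scores, key=scores.get), scores


# Hypothetical noisy 1-D data
rng = random.Random(1)
x = [i / 10 for i in range(60)]
y = [xi**2 + rng.gauss(0, 0.3) for xi in x]
best, scores = grid_search(x, y, grid=[1, 3, 5, 9])
print(best, {key: round(val, 3) for key, val in scores.items()})
```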

Statistical model assessment

After the models were constructed, the effectiveness and accuracy of each algorithm were assessed using standard statistical measures. Root mean square error (RMSE), standard deviation (SD), mean absolute error (MAE), coefficient of determination (R2), and mean bias error (MBE) were used to measure each algorithm's performance in predicting N2 uptake and to benchmark it against the other models. These parameters are defined below22:

Here, \(Z_{i}^{{exp}}\) and \(Z_{i}^{{cal}}\) denote the ith experimental and predicted N2 storage values, respectively, \(\bar{Z}\) is the mean of the observed data, and N is the number of data points. The superscripts 'cal' and 'exp' indicate calculated and experimental values, respectively. Table 5 gives a detailed overview of the statistical error values of the developed models for the training, testing, and overall datasets. As the table shows, all models produced predictions that agree closely with the observed values. The XGBoost model exhibits the highest R2 and the lowest RMSE (R2 = 0.9984, RMSE = 0.6085) of all the models established in this study. To evaluate whether there was a statistically meaningful difference between the predicted and actual values, a paired t-test was performed for the XGBoost model. It revealed no significant difference (t(3245) = 0.146, p = 0.884), indicating strong agreement with the experimental observations. Moreover, the Pearson correlation coefficient between the predicted and actual values was 0.999, demonstrating a strong linear association.
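The sketch below implements common textbook definitions of these metrics in plain Python, together with the paired t statistic and the Pearson coefficient. The sign convention for MBE (calculated minus experimental) and the definition of SD as the sample standard deviation of the residuals are assumptions and may differ from the paper's exact formulas. As a consistency check, with 3245 degrees of freedom the t distribution is essentially normal, and the normal approximation reproduces p ≈ 0.884 for t = 0.146.

```python
import math


def regression_metrics(z_exp, z_cal):
    """RMSE, MAE, MBE, R2 and SD of the residuals (z_cal - z_exp).

    Assumptions: MBE = mean(z_cal - z_exp); SD = sample std of the residuals.
    """
    n = len(z_exp)
    res = [c - e for e, c in zip(z_exp, z_cal)]
    mean_exp = sum(z_exp) / n
    mean_res = sum(res) / n
    ss_res = sum(r * r for r in res)
    ss_tot = sum((e - mean_exp) ** 2 for e in z_exp)
    return {
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(r) for r in res) / n,
        "MBE": mean_res,
        "R2": 1.0 - ss_res / ss_tot,
        "SD": math.sqrt(sum((r - mean_res) ** 2 for r in res) / (n - 1)),
    }


def paired_t(z_exp, z_cal):
    """Paired t statistic on the differences and its n - 1 degrees of freedom."""
    d = [c - e for e, c in zip(z_exp, z_cal)]
    n = len(d)
    mean_d = sum(d) / n
    sd_d = math.sqrt(sum((v - mean_d) ** 2 for v in d) / (n - 1))
    return mean_d / (sd_d / math.sqrt(n)), n - 1


def two_sided_p_normal(t):
    """Normal approximation to the two-sided p-value (adequate for large df)."""
    return math.erfc(abs(t) / math.sqrt(2.0))


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


# With df = 3245 the t distribution is practically normal:
print(round(two_sided_p_normal(0.146), 3))  # 0.884
```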

The GPR-RQ and CatBoost models followed, with R2 = 0.9984 and RMSE = 0.6941, and R2 = 0.9968 and RMSE = 0.8607, respectively. The DNN model shows lower prediction accuracy than the other three models, although its precision is still satisfactory despite ranking fourth.

Graphical model assessment

In a cross plot, the predicted values are plotted against the experimental data together with a 45° (unit-slope) line through the origin. The more closely the data points cluster around this line, the more reliable and valid the model. Figure 6 displays the cross plots for the four models developed in this study. As Fig. 6a–d shows, all four models predict N2 uptake acceptably, with satisfactory agreement between predicted and observed values. The estimates of the XGBoost and GPR-RQ models, in particular, closely match the actual target values.

As an additional validation, Fig. 7 presents error distribution plots for the developed algorithms, showing the residual error between each predicted value and the corresponding actual value. The smaller the deviation of the points from the zero-error line, the more reliable the model. All models examined in this study appear reliable and credible, as the errors are predominantly clustered around the zero-error line. The XGBoost and GPR-RQ models show particularly promising results, with small errors and no discernible error trend. However, the XGBoost points are more tightly grouped near the zero-error line, indicating smaller residual errors and greater accuracy. For the XGBoost model (Fig. 7a), the errors fall within a narrow range (between −7.63 and 9.86).

Another graphical way to assess predictive performance is the cumulative frequency curve, which plots the cumulative frequency of data points against the absolute error of each model, as shown in Fig. 8. According to this diagram, the XGBoost and GPR-RQ models predict over 90% of the data points with an absolute error of about 0.30 or less, demonstrating that these models accurately predict N2 storage in MOFs.
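Reading a cumulative frequency curve amounts to asking what fraction of points have an absolute error at or below a given value, or conversely which error bound covers a given fraction (for example 90%) of the points. A minimal sketch with hypothetical error values:

```python
import bisect
import math


def cumulative_frequency(abs_errors, thresholds):
    """Fraction of points whose absolute error is <= each threshold."""
    ordered = sorted(abs_errors)
    n = len(ordered)
    return [bisect.bisect_right(ordered, t) / n for t in thresholds]


def error_at_fraction(abs_errors, fraction):
    """Smallest absolute error that covers at least `fraction` of the points."""
    ordered = sorted(abs_errors)
    return ordered[max(math.ceil(fraction * len(ordered)) - 1, 0)]


errors = [0.05, 0.12, 0.2, 0.28, 0.31, 0.9]  # hypothetical |Z_cal - Z_exp| values
print(cumulative_frequency(errors, [0.1, 0.3, 1.0]))
print(error_at_fraction(errors, 0.5))  # 0.2
```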

Figure 9 shows Taylor diagrams for the models analyzed in this research. A Taylor diagram summarizes the agreement between predicted and observed behavior using three statistical metrics: SD, RMSE, and Pearson's correlation coefficient (r). The SD corresponds to the radial distance from the origin, the (centered) RMSE to the distance from the observed reference point, and r to the azimuthal angle.

According to Fig. 9, the point for the XGBoost model lies closest to the observed point, indicating that it performs best among all the models analyzed, although the accuracy of the other models is comparable and satisfactory.
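The geometry of the Taylor diagram rests on a law-of-cosines identity: with standard deviations σo (observed) and σp (predicted) and correlation r, the centered RMS difference E′ satisfies E′² = σp² + σo² − 2σpσo·r. The sketch below computes these quantities, assuming population statistics (division by n), and checks the identity numerically. E′ excludes the mean bias, so it equals the ordinary RMSE only when the mean error is zero. The data are hypothetical.

```python
import math


def taylor_statistics(obs, pred):
    """Return (sigma_obs, sigma_pred, r, centered RMS difference), using 1/n."""
    n = len(obs)
    mo, mp = sum(obs) / n, sum(pred) / n
    so = math.sqrt(sum((o - mo) ** 2 for o in obs) / n)
    sp = math.sqrt(sum((p - mp) ** 2 for p in pred) / n)
    r = sum((o - mo) * (p - mp) for o, p in zip(obs, pred)) / (n * so * sp)
    crmsd = math.sqrt(sum(((p - mp) - (o - mo)) ** 2 for o, p in zip(obs, pred)) / n)
    return so, sp, r, crmsd


so, sp, r, e = taylor_statistics([1.0, 2.0, 3.0, 4.0], [1.5, 2.0, 2.5, 4.0])
# Polar position of the model point: radius sp, angle arccos(r) from the x-axis.
print(round(e**2, 10), round(sp**2 + so**2 - 2 * sp * so * r, 10))  # 0.125 0.125
```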

Group error analysis

This procedure starts by dividing each independent variable into ranges (groups) according to its values. The RMSE of the target variable is then calculated for each range and plotted. Figure 10 shows group-error plots for the N2 uptake predictions of the four proposed models, based on four independent variables: temperature (K), pressure (bar), pore volume (cm3/g), and surface area (m2/g).
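A minimal sketch of the group-error computation: bin one input variable, then compute the RMSE of the predictions within each bin. The bin edges below reuse the pressure ranges discussed for Fig. 10, but the data points are hypothetical, and treating the bins as half-open intervals (with the last bin closed) is an assumption.

```python
import math


def group_rmse(feature, z_exp, z_cal, edges):
    """RMSE of the predictions inside each bin [edges[i], edges[i + 1]).

    The last bin is closed on the right so the maximum value is included;
    points outside all bins are ignored.
    """
    squared = {i: [] for i in range(len(edges) - 1)}
    for f, e, c in zip(feature, z_exp, z_cal):
        for i in range(len(edges) - 1):
            is_last = i == len(edges) - 2
            if edges[i] <= f < edges[i + 1] or (is_last and f == edges[-1]):
                squared[i].append((c - e) ** 2)
                break
    return {
        (edges[i], edges[i + 1]): math.sqrt(sum(sq) / len(sq))
        for i, sq in squared.items()
        if sq  # skip empty bins
    }


pressure = [0.5, 50.0, 150.0, 1054.7]  # bar, hypothetical points
z_exp = [1.0, 2.0, 3.0, 4.0]
z_cal = [1.1, 2.1, 3.5, 3.5]
print(group_rmse(pressure, z_exp, z_cal, edges=[0.00084, 100.0, 1054.7]))
```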

As illustrated in Fig. 10a, all models exhibit their highest error in the surface area range of 4704–6240 m2/g, while XGBoost achieves a lower RMSE than the other models in the range of 3171–4704 m2/g. The group error diagram based on pressure (Fig. 10b) indicates that the models exhibit the least deviation within 0.00084–100 bar and the highest error within 100–1054.7 bar. As shown in Fig. 10c, the XGBoost model yields lower error values than the other models across all pore volume ranges. Finally, Fig. 10d shows that the XGBoost model is more accurate for temperatures between 209 and 473 K than for temperatures below 209 K.

Shapley additive explanations (SHAP)

SHAP analysis, developed by Lundberg and Lee82, is a comprehensive approach to interpreting ML models based on Shapley additive explanations. Rooted in cooperative game theory, SHAP provides detailed insight into model behavior by quantifying the contribution of each variable to the predictions. In simple terms, the Shapley value measures the relative influence of each input feature on the final output. Because the XGBoost model is the most accurate model in this study, it was used for the SHAP analysis. Figure 11 visualizes the SHAP results. Figure 11a shows the importance of the input variables, obtained by averaging the absolute Shapley values over the entire dataset; it identifies temperature as the most influential parameter in predicting N2 uptake in MOFs. Figure 11b shows a summary plot of the distribution of SHAP values for each feature. In this plot, the y-axis lists the input variables ordered by importance, with the most influential at the top and the least influential at the bottom, and the x-axis gives the corresponding SHAP values, that is, each feature's contribution to the model output. Each dot represents a sample from the database, and its color reflects the feature value, from low to high.

According to this figure, temperature has a considerable influence on the output, whereas pore volume has a minimal effect. Summary plots thus play a crucial role in SHAP analysis, as they show both the ranking of the input variables by importance and their relationship with the target variable. The color gradient runs from blue to red, with deeper red indicating larger feature values and deeper blue indicating smaller feature values. A wider horizontal spread of points indicates a more pronounced effect of the feature on the model's predictions.
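The Shapley values underlying these plots can be illustrated by brute force. The sketch below enumerates every coalition of the four inputs for a single prediction, replacing absent features with a single baseline point (one simple choice of value function). The model and all numbers are hypothetical. SHAP's `TreeExplainer` computes comparable attributions for tree ensembles such as XGBoost far more efficiently, but the weighting of coalitions and the efficiency property (attributions sum to f(x) − f(baseline)) are the same.

```python
from itertools import combinations
from math import factorial


def exact_shapley(model, x, baseline):
    """Exact Shapley values of model(x) relative to a single baseline point."""
    m = len(x)

    def value(coalition):
        # Features in the coalition take their value from x, the rest from baseline.
        z = [x[j] if j in coalition else baseline[j] for j in range(m)]
        return model(z)

    phi = []
    for i in range(m):
        others = [j for j in range(m) if j != i]
        total = 0.0
        for size in range(m):
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(z):
    """Hypothetical model of (temperature K, pressure bar, pore volume, scaled surface area)."""
    t, p, v, s = z
    return -0.02 * t + 0.5 * p + 0.1 * v * s


x = [77.0, 10.0, 1.0, 2.0]
baseline = [298.0, 1.0, 0.5, 1.0]
phi = exact_shapley(toy_model, x, baseline)
print([round(val, 4) for val in phi])  # [4.42, 4.5, 0.075, 0.075]
```

The interaction term 0.1·v·s is split equally between pore volume and surface area, while the additive temperature and pressure terms receive exactly their own contributions.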

Furthermore, to deepen the analysis of how each variable influences the target, Shapley values were evaluated. Figure 12 presents the Shapley values associated with the model’s input factors considered in this research, namely surface area, pressure, temperature, and pore volume. As depicted in Fig. 12, the Shapley values for the surface area and pore volume rise as the corresponding feature values increase, implying that higher feature values are associated with elevated N2 uptake in MOFs. However, for temperature, the impact is negative, and its trend declines as the feature value increases.

Model trend analysis

To verify that the developed model reproduces the expected physical trend of N2 uptake with pressure, the XGBoost predictions are plotted against pressure. Figure 13 shows how N2 adsorption varies with increasing pressure for three MOFs, Bio-MOF1 (surface area = 1680 m2/g, pore volume = 0.75 cm3/g), Bio-MOF1@TEA (surface area = 1220 m2/g, pore volume = 0.55 cm3/g), and Bio-MOF1@TMA (surface area = 1460 m2/g, pore volume = 0.65 cm3/g), both experimentally and as predicted by the model. As the figure shows, N2 uptake increases with pressure, since higher pressure raises the gas density and the frequency of molecular collisions with the MOF surfaces, which enhances adsorption. The XGBoost predictions closely follow this experimental behavior, demonstrating the model's ability to capture how pressure affects N2 uptake.

Outlier identification of the XGBoost model

Outlier detection with the leverage approach is an important method for identifying anomalous data points that differ notably from the rest of the dataset. It also serves to assess the validity and reliability of the modeled database83,84. The method uses the standardized residuals (R) and the hat matrix (H), both derived from the observed outputs and the outputs predicted by the XGBoost model. The hat indices are the diagonal elements of the hat matrix, defined as follows85:

\[ H = X\left(X^{T}X\right)^{-1}X^{T} \]

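Here, X denotes the (p × q) input matrix, where p is the number of data samples and q is the number of model input parameters, and the superscript T denotes the matrix transpose. The warning leverage value (H*) and the standardized residual of the i-th data point (SR_i) are computed as follows86:

$$H^{*} = \frac{3(q+1)}{p}$$

$$SR_i = \frac{e_i}{\sqrt{MSE\left(1 - H_{ii}\right)}}$$

where e_i is the difference between the predicted and experimental values of the i-th data point, MSE is the mean squared error of the model, and H_ii is the i-th diagonal element of H.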

Data points with hat values between 0 and H* and standardized residuals between −3 and 3 are considered valid. Conversely, data points with SR values above 3 or below −3 are regarded as suspected data. As displayed in Fig. 14, most data points lie within the region 0 ≤ H ≤ H* and −3 ≤ SR ≤ 3. Only 2.1% of the data points were detected outside the model's applicability domain, which is negligible given the large number of data points used to construct the model. These results indicate that the developed XGBoost model is reliable and accurate in predicting N2 uptake.
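The leverage procedure can be sketched in a few lines of NumPy. The function below computes the hat values, the warning leverage H*, and standardized residuals (in the commonly used form normalized by the model MSE and by 1 − H_ii), then flags valid and suspected points with the ±3 limits described above. Two assumptions should be noted: X is used as defined in the text (p samples × q inputs, with no intercept column), and the demonstration data are synthetic, with one deliberately injected outlier, not the paper's dataset. For reference, if all 3246 data points and the q = 4 inputs were used, H* would be 3 × 5/3246 ≈ 0.0046.

```python
import numpy as np


def leverage_analysis(X, y_obs, y_pred, sr_limit=3.0):
    """Hat values, warning leverage, standardized residuals, and Williams-plot flags.

    X is the (p x q) input matrix: p data samples, q input parameters (no intercept column).
    """
    X = np.asarray(X, dtype=float)
    p, q = X.shape
    # Hat values H_ii = x_i^T (X^T X)^-1 x_i, i.e. the diagonal of H = X (X^T X)^-1 X^T
    xtx_inv = np.linalg.pinv(X.T @ X)
    hat = np.einsum("ij,jk,ik->i", X, xtx_inv, X)
    # Warning leverage H* = 3 (q + 1) / p
    h_star = 3.0 * (q + 1) / p
    # Standardized residuals SR_i = e_i / sqrt(MSE * (1 - H_ii)), with e_i = predicted - observed
    residuals = np.asarray(y_pred, dtype=float) - np.asarray(y_obs, dtype=float)
    mse = np.mean(residuals ** 2)
    sr = residuals / np.sqrt(mse * (1.0 - hat))
    # Valid: 0 <= H <= H* and -3 <= SR <= 3; suspected: |SR| > 3
    valid = (hat <= h_star) & (np.abs(sr) <= sr_limit)
    suspected = np.abs(sr) > sr_limit
    return hat, h_star, sr, valid, suspected


# Synthetic demonstration data (NOT the paper's dataset)
rng = np.random.default_rng(0)
p = 500
X = rng.uniform(0.0, 1.0, size=(p, 4))         # four scaled inputs
y_obs = rng.uniform(0.0, 10.0, size=p)          # "experimental" uptakes
y_pred = y_obs + rng.normal(0.0, 0.1, size=p)   # "model" predictions with small errors
y_pred[42] += 5.0                               # one deliberately injected outlier

hat, h_star, sr, valid, suspected = leverage_analysis(X, y_obs, y_pred)
print(f"H* = {h_star:.4f}")
print(f"valid: {valid.mean():.1%}, suspected: {int(suspected.sum())} point(s)")
```

Computing only the diagonal with `np.einsum` avoids building the full p × p hat matrix, which for several thousand samples would be a large dense array; the pseudo-inverse is used for robustness in case X^T X is ill-conditioned.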

Conclusions

In this study, four advanced machine learning models, namely CatBoost, XGBoost, Deep Neural Network (DNN), and Gaussian Process Regression with a rational quadratic kernel (GPR-RQ), were developed to predict nitrogen (N2) uptake in a range of Metal-Organic Frameworks (MOFs). A curated dataset of 3246 experimental data points from 65 distinct MOFs was used, incorporating four key input features: pore volume, surface area, pressure, and temperature.

The findings indicate that XGBoost consistently surpasses the other models in predictive accuracy, achieving the highest coefficient of determination (R2 = 0.9984) and the lowest root mean square error (RMSE = 0.6085). The comparative analysis ranks model performance as follows: XGBoost > GPR-RQ > CatBoost > DNN. SHAP analysis identified temperature as the most influential factor in predicting N₂ adsorption, whereas pore volume had the least impact. Moreover, trend analysis confirmed that the XGBoost model accurately captures the physical relationship between pressure and nitrogen uptake, where increasing pressure leads to greater adsorption owing to higher gas density and enhanced intermolecular interactions. Finally, leverage analysis using the Williams plot indicated that approximately 94% of the dataset lies within the model's applicability domain, confirming the reliability and robustness of the developed XGBoost model.

These findings highlight the potential of machine learning approaches, particularly XGBoost, for accurately modeling gas adsorption behavior in porous materials and for supporting the design of next-generation MOFs for efficient gas purification processes.

Data availability

All data were collected from the literature, and all data sources are cited in the manuscript. The compiled data are also available from the corresponding author on reasonable request.

References

Simon, C. M. et al. The materials genome in action: identifying the performance limits for methane storage. Energy Environ. Sci. 8 (4), 1190–1199 (2015).

Cavenati, S., Grande, C. A. & Rodrigues, A. E. Separation of CH4/CO2/N2 mixtures by layered pressure swing adsorption for upgrade of natural gas. Chem. Eng. Sci. 61 (12), 3893–3906 (2006).

Ruthven, D. M. Molecular sieve separations. Chem. Ing. Tech. 83, 1–2 (2011).

Ghanbari, H., Pettersson, F. & Saxén, H. Optimal operation strategy and gas utilization in a future integrated steel plant. Chem. Eng. Res. Des. 102, 322–336 (2015).

Kuznicki, S. M. et al. A titanosilicate molecular sieve with adjustable pores for size-selective adsorption of molecules. Nature 412 (6848), 720–724 (2001).

Wales, J., Hughes, D., Marshall, E. & Chambers, P. A review on the application of metal–organic frameworks (MOFs) in pressure swing adsorption (PSA) nitrogen gas generation. Ind. Eng. Chem. Res. 61 (27), 9529–9543 (2022).

Majumdar, B., Bhadra, S., Marathe, R. & Farooq, S. Adsorption and diffusion of methane and nitrogen in barium exchanged ETS-4. Ind. Eng. Chem. Res. 50 (5), 3021–3034 (2011).

Liu, B. & Smit, B. Comparative molecular simulation study of CO2/N2 and CH4/N2 separation in zeolites and metal–organic frameworks. Langmuir. 25 (10), 5918–5926 (2009).

Mulgundmath, V. P., Tezel, F., Hou, F. & Golden, T. Binary adsorption behaviour of methane and nitrogen gases. J. Porous Mater. 19, 455–464 (2012).

Bai, Y. et al. Zr-based metal–organic frameworks: design, synthesis, structure, and applications. Chem. Soc. Rev. 45 (8), 2327–2367 (2016).

Furukawa, S., Reboul, J., Diring, S., Sumida, K. & Kitagawa, S. Structuring of metal–organic frameworks at the mesoscopic/macroscopic scale. Chem. Soc. Rev. 43 (16), 5700–5734 (2014).

Peng, Y. et al. Methane storage in metal–organic frameworks: current records, surprise findings, and challenges. J. Am. Chem. Soc. 135 (32), 11887–11894 (2013).

Holcroft, J. M. et al. Carbohydrate-mediated purification of petrochemicals. J. Am. Chem. Soc. 137 (17), 5706–5719 (2015).

Peng, L. et al. Highly mesoporous metal–organic framework assembled in a switchable solvent. Nat. Commun. 5 (1), 4465 (2014).

Mallick, A. et al. Solid state organic amine detection in a photochromic porous metal organic framework. Chem. Sci. 6 (2), 1420–1425 (2015).

Peng, J. et al. A supported Cu(I)@MIL-100(Fe) adsorbent with high CO adsorption capacity and CO/N2 selectivity. Chem. Eng. J. 270, 282–289 (2015).

Ma, S., Sun, D., Wang, X. S. & Zhou, H. C. A mesh-adjustable molecular sieve for general use in gas separation. Angew. Chem. Int. Ed. 46 (14), 2458–2462 (2007).

Sumer, Z. & Keskin, S. Adsorption- and membrane-based CH4/N2 separation performances of MOFs. Ind. Eng. Chem. Res. 56 (30), 8713–8722 (2017).

Naghizadeh, A. et al. Modeling thermal conductivity of hydrogen-based binary gaseous mixtures using generalized regression neural network. Int. J. Hydrog. Energy. 59, 242–250 (2024).

Ben Seghier, M. E. A., Ouaer, H., Ghriga, M. A., Menad, N. A. & Thai, D. K. Hybrid soft computational approaches for modeling the maximum ultimate bond strength between the corroded steel reinforcement and surrounding concrete. Neural Comput. Appl. 33 (12), 6905–6920 (2021).

Thrampoulidis, E., Mavromatidis, G., Lucchi, A. & Orehounig, K. A machine learning-based surrogate model to approximate optimal building retrofit solutions. Appl. Energy. 281, 116024 (2021).

Naghizadeh, A. et al. White-box methodologies for achieving robust correlations in hydrogen storage with metal-organic frameworks. Sci. Rep. 15 (1), 4894 (2025).

Hentabli, M., Amar, M. K. & Belhadj, A. E. Improved Cupressus sempervirens L. galls for methylene blue removal: adsorption kinetics optimisation using the DA-LS algorithm, characterisation, and machine learning modeling. Int. J. Environ. Anal. Chem. 1–26 (2024).

Lakhdari, C. et al. Meta-heuristic optimization for drying kinetics and quality assessment of Capparis spinosa buds. Chem. Eng. Commun. 211 (12), 1864–1883 (2024).

Omari, F. et al. Dragonfly algorithm–support vector machine approach for prediction the optical properties of blood. Comput. Methods Biomech. BioMed. Eng. 27 (9), 1119–1128 (2024).

Bouzidi, A. et al. Artificial neural network approach to predict the colour yield of wool fabric dyed with Limoniastrum monopetalum stems. Chem. Afr. 7 (1), 99–109 (2024).

Khosrowshahi, M. S. et al. Natural products derived porous carbons for CO2 capture. Adv. Sci. 10 (36), 2304289 (2023).

Rahimi, M. & Salaudeen, S. A. Optimizing hydrogen-rich gas production by steam gasification with integrated CaO-based adsorbent materials for CO2 capture: machine learning approach. Int. J. Hydrog. Energy. 95, 695–709 (2024).

Wang, Y. et al. Low-carbon advancement through cleaner production: A machine learning approach for enhanced hydrogen storage predictions in coal seams. Renew. Energy. 241, 122342 (2025).

Thanh, H. V., Rahimi, M., Dai, Z., Zhang, H. & Zhang, T. Predicting the wettability rocks/minerals-brine-hydrogen system for hydrogen storage: Re-evaluation approach by multi-machine learning scheme. Fuel 345, 128183 (2023).

Dashti, A., Bahrololoomi, A., Amirkhani, F. & Mohammadi, A. H. Estimation of CO2 adsorption in high capacity metal–organic frameworks: applications to greenhouse gas control. J. CO2 Util. 41, 101256 (2020).

Li, X. et al. Applied machine learning to analyze and predict CO2 adsorption behavior of metal-organic frameworks. Carbon Capture Sci. Technol. 9, 100146 (2023).

Larestani, A. et al. Toward reliable prediction of CO2 uptake capacity of metal–organic frameworks (MOFs): implementation of white-box machine learning. Adsorption. 30 (8), 1985–2003 (2024).

Naghizadeh, A. et al. Exploring advanced artificial intelligence techniques for efficient hydrogen storage in metal organic frameworks. Adsorption. 31 (2), 42 (2025).

Fathalian, F., Aarabi, S., Ghaemi, A. & Hemmati, A. Intelligent prediction models based on machine learning for CO2 capture performance by graphene oxide-based adsorbents. Sci. Rep. 12 (1), 21507 (2022).

Abdi, J., Hadavimoghaddam, F., Hadipoor, M. & Hemmati-Sarapardeh, A. Modeling of CO2 adsorption capacity by porous metal organic frameworks using advanced decision tree-based models. Sci. Rep. 11 (1), 24468 (2021).

Maleki, A. et al. Machine learning-driven design of high-performance activated carbons for enhanced CO2 capture. Available at SSRN 5237982.

Meng, M., Zhong, R. & Wei, Z. Prediction of methane adsorption in shale: Classical models and machine learning based models. Fuel. 278, 118358 (2020).

Pardakhti, M., Moharreri, E., Wanik, D., Suib, S. L. & Srivastava, R. Machine learning using combined structural and chemical descriptors for prediction of methane adsorption performance of metal organic frameworks (MOFs). ACS Combin. Sci. 19 (10), 640–645 (2017).

Borja, N. K., Fabros, C. J. E. & Doma, B. T. Jr. Prediction of hydrogen adsorption and moduli of metal–organic frameworks (MOFs) using machine learning strategies. Energies. 17 (4), 927 (2024).

Davoodi, S. et al. Machine-learning models to predict hydrogen uptake of porous carbon materials from influential variables. Sep. Purif. Technol. 316, 123807 (2023).

Ahmed, A. & Siegel, D. Predicting hydrogen storage in MOFs via machine learning. Patterns. 2 (7), 100291 (2021).

Wu, X., Xiang, S., Su, J. & Cai, W. Understanding quantitative relationship between methane storage capacities and characteristic properties of metal–organic frameworks based on machine learning. J. Phys. Chem. C. 123 (14), 8550–8559 (2019).

Salehi, K., Rahmani, M. & Atashrouz, S. Machine learning assisted predictions for hydrogen storage in metal-organic frameworks. Int. J. Hydrog. Energy. 48 (85), 33260–33275 (2023).

Longe, P. O., Davoodi, S., Mehrad, M. & Wood, D. A. Robust machine-learning model for prediction of carbon dioxide adsorption on metal-organic frameworks. J. Alloys Compd. 1010, 177890 (2025).

An, J. & Rosi, N. L. Tuning MOF CO2 adsorption properties via cation exchange. J. Am. Chem. Soc. 132 (16), 5578–5579 (2010).

Chen, Y. et al. A new MOF-505@GO composite with high selectivity for CO2/CH4 and CO2/N2 separation. Chem. Eng. J. 308, 1065–1072 (2017).

Cho, H. Y., Yang, D. A., Kim, J., Jeong, S. Y. & Ahn, W. S. CO2 adsorption and catalytic application of Co-MOF-74 synthesized by microwave heating. Catal. Today. 185 (1), 35–40 (2012).

Furukawa, H. et al. Ultrahigh porosity in metal-organic frameworks. Science. 329 (5990), 424–428 (2010).

He, D., Liu, J., Shi, H. T. & Xin, Z. Design and synthesis of different sized polyhedral channel MOF materials for the separation of CO2/N2. Mater. Lett. 366, 136512 (2024).

Li, B. et al. Enhanced binding affinity, remarkable selectivity, and high capacity of CO2 by dual functionalization of a rht-type metal–organic framework. Angew. Chem. Int. Ed. 51 (6), 1412–1415 (2012).

Li, C. N. et al. Green synthesis of MOF-801 (Zr/Ce/Hf) for CO2/N2 and CO2/CH4 separation. Inorg. Chem. 62 (20), 7853–7860 (2023).

Meng, W. et al. Tuning expanded pores in metal–organic frameworks for selective capture and catalytic conversion of carbon dioxide. ChemSusChem. 11 (21), 3751–3757 (2018).

Mishra, P., Edubilli, S., Mandal, B. & Gumma, S. Adsorption of CO2, CO, CH4 and N2 on DABCO based metal organic frameworks. Microporous Mesoporous Mater. 169, 75–80 (2013).

Mishra, P., Mekala, S., Dreisbach, F., Mandal, B. & Gumma, S. Adsorption of CO2, CO, CH4 and N2 on a zinc based metal organic framework. Sep. Purif. Technol. 94, 124–130 (2012).

Munusamy, K. et al. Sorption of carbon dioxide, methane, nitrogen and carbon monoxide on MIL-101 (Cr): volumetric measurements and dynamic adsorption studies. Chem. Eng. J. 195, 359–368 (2012).

Mutyala, S., Yakout, S. M., Ibrahim, S. S., Jonnalagadda, M. & Mitta, H. Enhancement of CO2 capture and separation of CO2/N2 using post-synthetic modified MIL-100 (Fe). New J. Chem. 43 (24), 9725–9731 (2019).

Noguera-Díaz, A. et al. Flexible ZIFs: probing guest-induced flexibility with CO2, N2 and Ar adsorption. J. Chem. Technol. Biotechnol. 94 (12), 3787–3792 (2019).

Samira, S. & Mansoor, A. High CO2 adsorption capacity and CO2/CH4 selectivity by nanocomposites of MOF-199 (2017).

Singh, N. et al. Shaping of MIL-53-Al and MIL-101 MOF for CO2/CH4, CO2/N2 and CH4/N2 separation. Sep. Purif. Technol. 341, 126820 (2024).

Sun, T., Hu, J., Ren, X. & Wang, S. Experimental evaluation of the adsorption, diffusion, and separation of CH4/N2 and CH4/CO2 mixtures on Al-BDC MOF. Sep. Sci. Technol. 50 (6), 874–885 (2015).

Wang, X., Wang, Y., Lu, K., Jiang, W. & Dai, F. A 3D Ba-MOF for selective adsorption of CO2/CH4 and CO2/N2. Chin. Chem. Lett. 32 (3), 1169–1172 (2021).

Wu, X., Yuan, B., Bao, Z. & Deng, S. Adsorption of carbon dioxide, methane and nitrogen on an ultramicroporous copper metal–organic framework. J. Colloid Interface Sci. 430, 78–84 (2014).

Xu, F. et al. Ultrafast room temperature synthesis of GrO@HKUST-1 composites with high CO2 adsorption capacity and CO2/N2 adsorption selectivity. Chem. Eng. J. 303, 231–237 (2016).

Yu, D., Yazaydin, A. O., Lane, J. R., Dietzel, P. D. & Snurr, R. Q. A combined experimental and quantum chemical study of CO2 adsorption in the metal–organic framework CPO-27 with different metals. Chem. Sci. 4 (9), 3544–3556 (2013).

Zhang, W., Huang, H., Zhong, C. & Liu, D. Cooperative effect of temperature and linker functionality on CO2 capture from industrial gas mixtures in metal–organic frameworks: a combined experimental and molecular simulation study. Phys. Chem. Chem. Phys. 14 (7), 2317–2325 (2012).

Zhang, X., Zheng, Q. & He, H. Multicomponent adsorptive separation of CO2, CH4, N2, and H2 over M-MOF-74 and AX-21@M-MOF-74 composite adsorbents. Microporous Mesoporous Mater. 336, 111899 (2022).

Zhang, Y., Hu, G., Gao, X., Zhang, Z. & Cui, P. Simulation study on functional group-modified Ni‐MOF‐74 for CH4/N2 adsorption separation. J. Comput. Chem. 45 (17), 1515–1524 (2024).

Prokhorenkova, L., Gusev, G., Vorobev, A., Dorogush, A. V. & Gulin, A. CatBoost: unbiased boosting with categorical features. Adv. Neural Inform. Process. Syst. 31 (2018).

Hancock, J. T. & Khoshgoftaar, T. M. CatBoost for big data: an interdisciplinary review. J. Big Data. 7 (1), 94 (2020).

Duplyakov, V. et al. Data-driven model for hydraulic fracturing design optimization. Part II: inverse problem. J. Petrol. Sci. Eng. 208, 109303 (2022).

Sagi, O. & Rokach, L. Approximating XGBoost with an interpretable decision tree. Inf. Sci. 572, 522–542 (2021).

Lawhern, V. J. et al. EEGNet: a compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 15 (5), 056013 (2018).

Bengio, Y. Learning Deep Architectures for AI (Now Publishers Inc, 2009).

Wilson, A. G., Knowles, D. A. & Ghahramani, Z. Gaussian process regression networks. arXiv preprint arXiv:1110.4411 (2011).

Boyle, P. Gaussian Processes for Regression and Optimisation. Doctoral dissertation, Victoria University of Wellington (2007).

Gibbs, M. N. Bayesian Gaussian Processes for Regression and Classification (Citeseer, 1998).

Alruqi, M., Sharma, P., Deepanraj, B. & Shaik, F. Renewable energy approach towards powering the CI engine with ternary blends of algal biodiesel-diesel-diethyl ether: Bayesian optimized Gaussian process regression for modeling-optimization. Fuel. 334, 126827 (2023).

Noori, M., Hassani, H., Javaherian, A. & Amindavar, H. 3D seismic fault detection using the Gaussian process regression, a study on synthetic and real 3D seismic data. J. Petrol. Sci. Eng. 195, 107746 (2020).

Mahdaviara, M., Rostami, A., Keivanimehr, F. & Shahbazi, K. Accurate determination of permeability in carbonate reservoirs using Gaussian process regression. J. Petrol. Sci. Eng. 196, 107807 (2021).

Jamei, M., Ahmadianfar, I., Olumegbon, I. A., Karbasi, M. & Asadi, A. On the assessment of specific heat capacity of nanofluids for solar energy applications: application of Gaussian process regression (GPR) approach. J. Energy Storage. 33, 102067 (2021).

Zhou, L. Progress and problems in hydrogen storage methods. Renew. Sustain. Energy Rev. 9 (4), 395–408 (2005).

Amiri-Ramsheh, B., Safaei-Farouji, M., Larestani, A., Zabihi, R. & Hemmati-Sarapardeh, A. Modeling of wax disappearance temperature (WDT) using soft computing approaches: Tree-based models and hybrid models. J. Petrol. Sci. Eng. 208, 109774 (2022).

Lv, Q. et al. Modeling thermo-physical properties of hydrogen utilizing machine learning schemes: viscosity, density, diffusivity, and thermal conductivity. Int. J. Hydrog. Energy. 72, 1127–1142 (2024).

Zheng, H., Mahmoudzadeh, A., Amiri-Ramsheh, B. & Hemmati-Sarapardeh, A. Modeling viscosity of CO2–N2 gaseous mixtures using robust tree-based techniques: extra tree, random forest, GBoost, and LightGBM. ACS Omega. 8 (15), 13863–13875 (2023).

Mohammadi, M. R. et al. Modeling the solubility of light hydrocarbon gases and their mixture in brine with machine learning and equations of state. Sci. Rep. 12 (1), 14943 (2022).

Author information

Contributions

A.N.: Writing – original draft, methodology, investigation, visualization, data; A.J.: Writing – original draft, methodology, investigation, visualization, data; F.H.: Writing – original draft, methodology, visualization, software; S.A.: Writing – review and editing, validation, conceptualization, methodology, supervision; A.A.: Writing – review and editing, validation, conceptualization, data; A.H.: Methodology, validation, supervision, writing – review and editing, software; A.M.: Writing – review and editing, validation, conceptualization, software.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Naghizadeh, A., Jafari-Sirizi, A., Hadavimoghaddam, F. et al. Toward accurate prediction of N2 uptake capacity in metal-organic frameworks. Sci Rep 15, 35073 (2025). https://doi.org/10.1038/s41598-025-18299-x

DOI: https://doi.org/10.1038/s41598-025-18299-x