Abstract

Communication between deaf or mute individuals and hearing persons is often hindered by the lack of mutual understanding of sign or vocal language. To bridge this gap, Indian Sign Language Recognition (ISLR) systems are essential. This paper proposes a real-time ISLR framework based on the YOLOv10-ST model, which integrates the Swin Transformer into the YOLOv10 architecture for enhanced feature extraction. The model also incorporates Mish activation to improve gradient flow and detection accuracy. A custom dataset comprising 15, 000 static images (1, 000 per sign for 15 signs) and 35 dynamic videos (covering 7 sign classes) was used for training and evaluation. Experimental results demonstrate high performance, with the model achieving 97.50% precision, 98.10% recall, and 96.58% F1-score for image-based sign recognition, and 95.24% precision, 96.00% recall, and 95.87% F1-score for video-based gestures. The model also achieves a mean Average Precision (mAP) of 97.62% and real-time inference speeds of 48.7 FPS. Ablation studies validate the contributions of Swin Transformer and Mish activation, while paired t-tests confirm statistical significance (p \(< 0.005\)). The experimental findings demonstrate that the YOLOv10-ST model efficiently recognizes static and dynamic ISL in real time with minimal computational overhead.

Similar content being viewed by others

Introduction

Deaf people utilize sign language as one of their nonverbal modes of communication. Understanding of sign language by normal people is complicated, which leads to the barrier of communication with deaf people. When deaf or dumb individuals use sign language to communicate with one another in real life, a problem develops when they attempt to converse with non-deaf individuals1. It is also observed that the sign languages in India have their own vocabulary and grammatical structures2. In order to understand the meaning of signs used by users to communicate verbally, the Sign Language Recognition (SLR) system simulates the role of a translator. According to Indian Sign Language Research and Training Centre (ISLRTC), for the 7 million hearing-impaired and deaf individuals in India, only 300 competent Indian Sign Language (ISL) interpreters are available3. Indian Sign Language Recognition (ISLR) faces numerous challenges, such as variations in hand gestures due to differences in size, shape, skin tone, and orientation of the hands among individuals4. Another critical issue is the dynamic nature of ISL, which involves continuous motion and requires systems to process sequential data effectively5. Illumination variations, occlusions, and background noise in real-time environments further complicate gesture recognition. Moreover, ISL recognition systems need the simultaneous use of both hands in many signs, which adds complexity to the task. Existing methods often suffer from low accuracy, reduced speed, and an inability to generalize effectively across diverse datasets, making real-time applications difficult6,7,8. The main aim is to develop ISLR system that processes the image/video data containing input signs and provides information about Indian signs. There are various methodologies proposed in the literature9,10,11,12,13 to develop this type of system. To detect the signs in image/video, several versions of You Only Look Once (YOLO) based on deep neural networks are proposed to reduce the recognition time14,15,16,17,18,19, but face challenges with small objects, localization accuracy, fixed grid limitations, generalization, and computational efficiency, especially in complex or edge-case scenarios.

YOLO has evolved as a popular object detection framework due to its real-time performance and high accuracy. ISLR using hand gestures has seen significant advancements with the application of various YOLO versions. YOLOv1 introduced real-time object detection with impressive speed and accuracy, laying the foundation for gesture recognition by detecting hand regions20. YOLOv2 and YOLOv3 improved accuracy and multi-scale detection capabilities, making them more suitable for recognizing complex hand gestures21,22. YOLOv4 brought further enhancements in speed and precision with techniques like CSPDarknet and Mosaic augmentation, boosting real-time ISL recognition23. YOLOv5, widely adopted due to its lightweight architecture and faster inference, was pivotal in improving recognition efficiency24. YOLOv6, YOLOv7, and YOLOv8 focused on optimizing deep learning models, further improving detection accuracy and adaptability to dynamic hand movements25,26,27. YOLOv9 and YOLOv10, though relatively new, integrate cutting-edge AI techniques, offering unprecedented accuracy and efficiency for ISL recognition, even in challenging scenarios like occlusion or background clutter20. Each YOLO iteration has progressively improved real-time hand gesture recognition for ISL, contributing significantly to human-computer interaction and accessibility technologies. When YOLOv10 was initially applied for ISLR using the Darknet backbone, several limitations were observed. Darknet is an extremely effective deep learning framework, however, it is not suitable for lightweight or real-time applications such as mobile phones, small devices and edge devices like cameras and IoT devices. It is not efficient for fast recognition as its internal structure is deep and complex requiring a significant amount of processing time for input such as an image or video. Additionally, the feature extraction capability of Darknet was not as efficient in capturing fine-grained details, particularly under challenging conditions like occlusions or complex backgrounds. To address these issues in YOLOv10, the Darknet backbone is replaced with the Swin Transformer (ST) architecture called YOLOv10-ST. The lightweight design of ST significantly reduces computational overhead, enabling faster inference. Its advanced feature extraction capabilities improve accuracy by effectively distinguishing intricate patterns in dynamic and static gestures and replacing traditional activation functions (e.g., Linear and ReLU) with the advanced activation function is Mish, for better gradient flow and performance. By incorporating this modification, the YOLOv10 model demonstrates enhanced overall performance, making it more suitable for real-time applications, particularly on resource-constrained devices. This paper makes the following key contributions in response to the limitations of prior ISL recognition research:

-

YOLOv10-ST: We propose a novel architecture that integrates Swin Transformer blocks into YOLOv10. Unlike traditional YOLO versions that rely on CNNs28, our model captures both local features and global dependencies through hierarchical self-attention, improving the recognition of visually similar ISL gestures.

-

Real-time, multi-scale detection: The model leverages v10Detect, decoupled heads, and a multi-scale fusion strategy (SPPF + PSA), resulting in both high accuracy and real-time performance. It achieves 97.62% mAP and 48.7 FPS on image data, outperforming YOLOv3-YOLOv10 baselines.

-

Context-aware architecture: By eliminating the reliance on Non-Maximum Suppression (NMS) and using one-to-many label assignment, YOLOv10-ST addresses the issue of gesture overlap and occlusion more effectively than prior YOLO variants.

-

Diverse dataset: A private dataset of ISL gestures is constructed using images and videos under diverse lighting conditions, backgrounds, and from multiple participants. This enhances the generalizability of the model, unlike previous works that relied on more constrained datasets.

-

Statistical validation: The proposed model’s superiority is not only shown through standard metrics but is also supported by a paired t-test, confirming statistically significant performance improvements over YOLOv10.

The organization of the rest of the sections is as follows: Section “Related Work” presents a review of recent studies in the domain of sign language recognition, particularly focused on Indian Sign Language (ISL), object detection frameworks, YOLO-based models, and transformer-based approaches. After that, Section “Methodology” describes the experimental setup, dataset details, participant demographics, environmental conditions, annotation process, training/testing protocol, consent procedures, and ethical considerations. Section “Proposed Work” provides a detailed explanation of the YOLOv10-ST architecture, including its integration of the Swin Transformer, Mish activation, and improvements over previous YOLO versions. Later on Section “Experimental Results and Discussion” presents a comprehensive evaluation of the model’s performance, including result analysis, statistical validation, ablation studies, real-time inference analysis, and comparisons with both YOLO variants and transformer-based detectors. The last Section “Conclusion and Future Directions” concludes the paper by summarizing the key findings, acknowledging current limitations, and outlining future research directions, including dataset expansion, integration of explainability tools, and ethical compliance.

Related work

Sign Language Recognition (SLR) involves the complex interpretation of hand shapes, movements, orientations, facial expressions, and body posture. This complexity is compounded by signer variability, occlusions, background clutter, and lighting conditions29. Initial research in SLR, including Indian Sign Language (ISL), relied heavily on traditional machine learning techniques and handcrafted features like SIFT and HOG30,31. These methods struggled with dynamic gestures and lacked robustness in variable real-world settings32. Challenges also included the inability to model complex spatiotemporal dependencies and over-reliance on manual feature engineering33,34,35. Modern deep learning (DL) approaches such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) overcame many ML limitations by learning spatial and temporal features directly from raw input28,36,37,38. DL’s popularity surged due to improved accuracy and availability of high-performance GPUs39,40. Researchers have also used multimodal techniques that incorporate hand keypoints, facial cues, and body posture to enhance recognition41,42. However, standardized, large-scale ISL datasets remain limited43, affecting generalization. YOLO models (e.g., YOLOv4 to YOLOv9) have shown high speed and reasonable accuracy for gesture detection in videos44,45. These models predict bounding boxes and class labels in a single forward pass, making them suitable for real-time applications. Despite improvements in detection heads and feature extractors, these CNN-based YOLO variants still fall short in capturing global attention or temporal dependencies critical for dynamic signs. Transformer models such as ViT and Swin Transformer are increasingly used for SLR due to their ability to model long-range dependencies and hierarchical attention46,47. When combined with CNNs, they enhance spatial and temporal context understanding48,49. Additional techniques such as Temporal Convolutional Networks (TCNs), 3D CNNs, and keypoint-based pose estimation (e.g., OpenPose, MediaPipe) have further advanced gesture segmentation and classification50,51,52. Integration of visual, depth, and audio modalities has shown promise in dealing with user-specific variations and environmental noise53. Graph Neural Networks (GNNs) are also used to model skeletal structures for capturing hand joint relationships54. Transfer learning and domain adaptation techniques, including adversarial methods, have improved generalization across different signers and settings55,56. While CNN- and YOLO-based models enable real-time performance, they lack contextual modeling. Transformer-based methods offer global attention but often at the cost of speed. Moreover, few works explore the integration of Swin Transformers within YOLOv10 for ISL recognition. Additionally, most datasets lack diversity in signers, lighting, and background. Our proposed YOLOv10-ST addresses these limitations by combining YOLOv10’s efficiency with Swin Transformer’s hierarchical attention, evaluated on a diverse ISL dataset containing both image and video samples under realistic conditions. Recent studies have also explored real-time ISL recognition using frameworks like CNN and Mediapipe38,57,58, and deep learning-based approaches tailored for Indian Sign Language59. These works demonstrate a promising direction in vision-based SLR; however, they lack the architectural flexibility and attention-based modeling offered by transformer-integrated YOLO architectures like YOLOv10-ST. While Transformer-based enhancements have been explored in object detection (e.g., DETR) and earlier YOLO versions such as YOLOv4 or YOLOv544,45, their application in Indian Sign Language recognition remains limited. Some studies have integrated Transformers into YOLOv5 backbones or heads, but these approaches often target generic object detection tasks and lack gesture-specific optimization. In contrast, our method is the first to integrate Swin Transformer blocks within YOLOv10, leveraging the strengths of hierarchical self-attention in spatial encoding. This integration enables improved representation of fine-grained hand structures, making it more effective for complex gesture recognition in ISL scenarios. Moreover, our model is evaluated across both static and dynamic gesture modalities, further distinguishing it from prior hybrid architectures.According to this research work, this is the first attempt to integrate Swin Transformer into the recently released YOLOv10 framework to develop better gesture-level understanding. This integration enhances the model’s ability to more effectively understand fine-grained spatial information – which is crucial for recognizing both static and dynamic Indian Sign Language.

Methodology

This section presents the methodological framework that was adopted to develop and evaluate the proposed Indian Sign Language Recognition System. It includes the experimental setup, characteristics of the dataset, participant demographics, environmental and lighting conditions, annotation procedure, and the training–testing strategy adopted to implement the YOLOv10-ST model. Additionally, information on participant consent and ethical considerations is also included to ensure that the study adheres to standard research conduct.

Environment setup

Python programming language, which is known for its extensive libraries and toolkits, was primarily used to develop the experimental setup. Table 1 shows the key parameters and their values used to configure the environment for the experiments. The system was designed to classify a total of 15 different Indian Sign Language (ISL) signs, ensuring a robust and diverse dataset representation. A learning rate of 0.001 was chosen to maintain a balance between the model’s convergence speed and accuracy, allowing the accuracy to gradually improve during training. The algorithms utilized for this task were Swin Transformer with YOLOv10. To maintain model stability and improve generalization, a momentum of 0.8 was employed in the optimization process. The Stochastic Gradient Descent (SGD) optimizer was selected for its simplicity and effectiveness in large-scale problems. A decay rate of 0.0004 was applied to prevent overfitting by gradually reducing the learning rate during training. The Mish activation functions were used for gradient flow and performance in the output layer requirements. To control feature extraction across network layers, layer selection was handled via internal configuration flags of the YOLOv10-ST architecture. The earlier mention of a ’mask parameter (0–8)’ refers to layer indexing in the training configuration file and is not a standard YOLOv10 hyperparameter. Paper acknowledge this could be misleading and have clarified it accordingly to improve reproducibility. These carefully selected parameters provided a solid foundation for training and testing the proposed model, ensuring high performance and accuracy in SLR tasks. All experiments were conducted using an NVIDIA RTX 3080 GPU (10 GB VRAM), Intel i7 CPU, and PyTorch 2.0 with CUDA 11.8 in a Linux environment.

Dataset

The dataset for ISL consists of both image and video data, specifically designed for static and dynamic sign recognition tasks. For the initial stage of static Indian sign language recognition, the dataset contains 15, 000 images of 15 sign classes, with 1,000 images per sign class. These 15 classes include the signs for “Change”, “Correct”, “Goodbye”, “Help”, “Home”, “Hug”, “Meet”, “Nice”, “No”, “Please”, “Sorry”, “Stop”, “Touch”, “Yes”, and “You”. A Sample of all classes is shown in Fig. 1. All images are resized to a uniform dimension of \(640 \times 640\) pixels. The dataset is split into 80% training data (800 images per class) and 20% testing data (200 images per class), ensuring balanced coverage for each sign. In the dynamic sign recognition stage, the dataset contains a total of 35 videos corresponding to 7 dynamic sign classes. Each video was split into frames at a rate of 26 frames per second (fps), making processing more efficient. This dataset was further split into 21 videos for training and 14 videos for testing, ensuring a robust evaluation of the model performance. Data augmentation techniques such as random horizontal flipping, brightness adjustment, and affine transformations were used to enhance the robustness of the model under diverse conditions.

Dataset availability: “The dataset used in this study, which consists of 15, 000 static ISL images and 35 videos across 7 dynamic sign classes, was collected privately with the consent of the participants. This dataset is not publicly available due to ethical limitations and the absence of public release consent at the time of collection. However, researchers seeking access to this dataset can contact the corresponding author and sign a Data Usage Agreement. This process ensures that the dataset is used only for academic and non-commercial research purposes, while also respecting participant privacy and institutional guidelines.

Participant diversity and demographics: This dataset was constructed with contributions from 12 participants, 7 males and 5 females aged between 18 and 35 years. These participants represent various regional and cultural backgrounds of North India. This ensures a diversity of signing styles and physical features, such as hand shape, texture, and skin color, which helps to improve the generalizability of the model.

Environmental and lighting conditions: To simulate real-life use of Indian Sign Language (ISL), data was collected under different situations, such as:

-

Indoor and outdoor environments

-

Backgrounds with plain walls, bookshelves, and textured surfaces

-

Lighting conditions such as natural sunlight, fluorescent indoor lighting, and low-light settings

These variations are intended to test the robustness of the model under diverse visual circumstances.

Consent to participate

All participants voluntarily took part in the study after being fully informed about its objectives. Verbal consent was obtained for participation, data collection, and the use of images for research and publication purposes. As the study involved non-invasive procedures in an educational setting, formal institutional ethical approval was not required. No personal or sensitive data was collected, and the study adhered to relevant ethical guidelines. Participants were also informed about the open-access nature of the publication and raised no objections. For future data collection efforts will follow full ethical clearance protocols with written consent.

Ethical approval

This study involved minimal-risk behavioral research with human participants and was conducted in accordance with the ethical principles of the Declaration of Helsinki and applicable national and institutional guidelines. The research involved non-invasive image and video data collection of hand gestures, including visible facial features, recorded for academic purposes related to Indian Sign Language recognition. No clinical procedures or sensitive personal data were involved. All participants were adult volunteers from the institution, clearly informed about the purpose of the study, the visibility of their facial images in academic publications, and the intended use of the collected data. Verbal informed consent was obtained from each participant prior to data collection. No personally identifiable information (such as names or contact details) was recorded or stored. As per the institutional ethics policy of KIET Group of Institutions, this type of low-risk, non-clinical, and non-sensitive research does not require formal approval from the Institutional Ethics Committee.

Annotation

LabelImg, an open-source program that makes it easier to manually create bounding boxes for labeling particular sign sections within images, is used to annotate photos. In order to train and test YOLO models, the software creates annotation files in the .txt format. In the YOLO annotation format, each bounding box is defined by five parameters: class_id, center_x, center_y, width, and height. Where class_id represents the class of the sign; center_x and center_y are the coordinates of the center of the bounding box normalized to the size of the image; and width and height represent the dimensions of the box. This structured format allows the exact position of signs to be determined, improving the accuracy of real-time detection systems.

To ensure consistency of annotation, this dataset was independently annotated by three experts experienced in sign language data labeling. 10% of the total data was randomly selected and annotated by all three annotators. To measure agreement between annotators, Cohen’s Kappa (\(\kappa\)) was used for pairwise comparisons and Fleiss’ Kappa for group-level integrity. The average agreement level between the three annotators was found to be \(\kappa\) = 0.87, indicating strong agreement. Wherever there were disagreements, they were resolved by discussion and consensus labeling. This quality assurance process enhances the reliability of the dataset for training and evaluating models.

Training and testing descriptions

The YOLOv10-ST model was trained using image and video data that included 800 images from each of the 15 sign classes and 21 videos for dynamic 7 signs. The model was tested using 200 images from each of the 15 sign classes and 14 videos for dynamic signs. The YOLOv10-ST model was trained with a learning rate of 0.001, ensuring a good balance between learning speed and accuracy. In this model, Swin Transformer (ST) was combined with YOLOv10 to perform feature extraction and object detection efficiently. A momentum of 0.8 was applied to maintain stability and generalization during training.

The Stochastic Gradient Descent (SGD) optimizer was used to train the model, as it is simple and effective for large datasets. To prevent overfitting, a weight decay of 0.0004 was applied, which gradually decreases the learning rate over time. A Mish activation function was chosen to improve gradient flow and optimize the performance of the output layer. In addition, a mask parameter ranging from 0 to 8 was set to control which layers would be used for feature extraction and classification. All these hyperparameters were tuned keeping in mind the performance of the validation loss and hold-out set to minimize overfitting and maintain good generalization.

The model was trained for a total of 100 epochs, with measures such as early stopping and checkpointing to preserve the best model weights based on validation mAP. Data augmentation techniques such as random rotation, brightness adjustment, and cutout were used to improve the model performance in a variety of real-world conditions.

Proposed work

Building on the experimental setup, data collection procedure, and training methods described in the previous section, we now present the proposed Indian Sign Language (ISL) recognition architecture. The main objective of the system is to accurately recognize and classify both static and dynamic gestures under various environmental conditions in real-time.To achieve this goal, the researchers designed a new deep learning model called YOLOv10-ST. This model combines the speed and efficiency of YOLOv10 with the contextual feature extraction capabilities of Swin Transformer. This integration allows the model to accurately recognize gestures and perform robustly to variations in pose, illumination, and background.The YOLOv10-ST model is specifically designed to recognize Indian Sign Language gestures in real-time. Its architecture is divided into three major parts – Backbone, Neck, and Head – that process input images or video frames of size \(640 \times 640 \times 3\).The Backbone consists of convolutional layers, Swin Transformer blocks, and C2f modules that extract different levels of features from the input. These generate multi-scale feature maps at \(80 \times 80\), \(40 \times 40\), and \(20 \times 20\) resolutions to capture both the fine details and broader context of gestures.The Neck part combines and improves these features with techniques such as upsampling, concatenation, and Spatial Pyramid Pooling Fast (SPPF) to improve the recognition of gestures of different sizes.The Head part consists of v10Detect layers that detect bounding boxes at multiple levels and classify them into Indian Sign Language categories (such as the “Goodbye” sign).The entire system is designed to recognize ISL signs quickly and accurately, even when conditions such as background or lighting are changing.

Backbone

Initially, the input image of size \(640 \times 640 \times 3\) is passed through a convolutional block (\(k=3\), \(s=2\)), reducing it to \(320 \times 320 \times 64\). A second convolutional layer further downsamples to \(160 \times 160 \times 128\). The first ST block then processes this map using window-based self-attention to extract both local and global features. A C2f module follows, retaining resolution but refining the features. A third convolutional layer reduces the map to \(80 \times 80 \times 256\), followed by another ST block and C2f module. An SCDown block then downsamples to \(40 \times 40 \times 512\). Additional C2f and SCDown layers generate the final multi-scale features used by the Neck. The integration of Swin Transformer blocks into the YOLOv10 backbone enables efficient modeling of both fine-grained and global contextual features.

Figure 2 demonstrated that the Swin Transformer blocks are integrated with YOLOv10 Backbone. The paper mathematically detail this integration as follows. Let the input image be \(I \in \mathbb {R}^{640 \times 640 \times 3}\). The Backbone begins with a series of convolutional layers:

where \(F_1\) is the feature map obtained after applying the first convolutional layer on the input image \(I\), resulting in dimensions \(\mathbb {R}^{320 \times 320 \times 64}\), and \(F_2\) is the feature map produced by applying the second convolutional layer on \(F_1\), resulting in dimensions \(\mathbb {R}^{160 \times 160 \times 128}\).

Swin Transformer Block 1 (Mid-Level Features) The first Swin Transformer block processes \(F_2\):

It applies:

These enhanced features \(Z_{ST1}^{(4)}\) are fused with C2f output:

where \(Z_{ST1}^{(1)}\) is the output of W-MSA(Window Multi-Head Self-Attention) with residual connection, \(Z_{ST1}^{(2)}\) is the MLP (Multilayer Perceptron) output with skip connection, \(Z_{ST1}^{(3)}\) is the SW-MSA (Shifted Window Multi-Head Self-Attention) output with residual, \(Z_{ST1}^{(4)}\) is the final MLP output of the Swin Transformer block, \(F_{\text {fused1}}\) is the concatenation of \(Z_{ST1}^{(4)}\) with \(\text {C2f}(F_2)\) and LN is Layer Normalization. Then, the next downsampling occurs:

Swin Transformer Block 2 (Deep-Level Features)

Following same structure:

These attention-augmented features are again fused:

The final downsampling stages produce multi-scale feature maps:

Output to Neck and These multi-resolution features - \(F_{80}\), \(F_{40}\), and \(F_{20}\) - are passed to the Neck:

Neck processes them using: - SPPF on \(F_{20}\) - PSA + upsampling + concatenation on others and this completes the integration of YOLOv10 and Swin Transformer.

Neck

The Neck begins with the SPPF block, performing spatial pyramid pooling on the \(20 \times 20 \times 1024\) map. This is followed by a PSA block to enhance feature fusion. Upsampling increases the resolution to \(40 \times 40 \times 512\), concatenated with backbone features to get \(40 \times 40 \times 1024\). Another upsampling yields \(80 \times 80 \times 256\), which is again concatenated to produce \(80 \times 80 \times 512\) features. The v10Detect module features a decoupled head structure that separates classification and localization branches. This reduces task interference and improves convergence. Additionally, YOLOv10-ST avoids traditional Non-Maximum Suppression (NMS) by adopting a dual-label assignment strategy. This allows for better handling of overlapping or sequential gestures common in real-world ISL communication.

Head

The Head uses v10Detect at three scales: \(80 \times 80 \times 512\), \(40 \times 40 \times 1024\), and \(20 \times 20 \times 1024\) to detect gestures of varying sizes, such as individual fingers, partial hands, and full-hand signs. It outputs bounding boxes and ISL class probabilities. The integration of Swin Transformers ensures detailed contextual understanding, while multi-scale detection supports a range of gesture sizes. The network is trained on labeled ISL image and video datasets. The complete workflow is showed in Fig. 3. Each module in YOLOv10-ST attention-aware backbone, multi-scale neck, and decoupled detection head contributes to a balanced architecture capable of high-speed and high-accuracy gesture recognition. The system is optimized not only for inference performance (48.7 FPS) but also for robustness across lighting, occlusion, and background variation, making it suitable for real-time ISL applications.

Swin transformer

Transformer models demonstrated groundbreaking capabilities in capturing global contexts and modeling long-distance dependencies in Natural Language Processing (NLP)46. Their success extended to computer vision, leading to the development of the Swin Transformer (ST)47. Convolutional Neural Networks (CNNs) and the ST use hierarchical structures to modify the transformer architecture for images. Employing localized self-attention inside discrete, non-overlapping frames, it dramatically reduces computing complexity from a quadratic to a linear scale. The efficiency of the proposed model enabled with ST makes it a more promising tool for gesture recognition tasks over state-of-the-art models.

ST can be transformational when it comes to ISL recognition. Gesture recognition involves capturing and analyzing hand movements and positions, a task requiring precise contextual understanding and scalability for real-time applications. The hierarchical structure of the ST for ISLR can process gestures at multiple scales, which ensures accurate identification of words. Although Swin Transformer (ST) has some limitations, such as it requires a large amount of training data for good performance, hybrid techniques can be used to overcome these shortcomings. For example, ST can be combined with techniques such as CNN (Convolutional Neural Network) or SURF (Speeded-Up Robust Features). Such a combination improves contextual encoding and reduces the dependence on large datasets, thereby enabling accurate and efficient recognition of Indian Sign Language (ISL) in real-time. As shown in Fig. 2, the ST block uses the Multi-Headed Self-Attention (MSA) mechanism with a shifted window strategy. The ST block is composed of two consecutive sub-blocks. Each sub-block consists of a multilayer perceptron (MLP) consisting of two fully connected layers activated with a Mish activation function. In addition, each block also includes an MSA module, a Layer Normalization (LN), and a residual connection. The first sub-block uses a window-based MSA (W-MSA), while the second sub-block uses a shifting window MSA (SW-MSA)60. Such alternating approach improves the information exchange between different windows while also keeping the computational complexity low. These continuous ST blocks’ operations are expressed as follows:

Here, \(\hat{a}^x\) is the MSA output, and \(a^x\) is the MLP output of the \(x^{\text {th}}\) block60.

YOLOv10-ST

The YOLOv10-ST model eliminates the dependency on non-maximum suppression (NMS), a constraint in earlier versions, leading to a significant reduction in latency. This improved version introduces a dual assignment strategy during training, incorporating both one-to-one and one-to-many labeling to enhance detection accuracy while preserving high-speed performance. The YOLOv10 architecture integrates several optimizations to boost efficiency and effectiveness. Notable features include lightweight classification heads, spatially channel-decoupled downsampling, and a rank-guided block design for reduced computational overhead, minimized information loss, and optimized parameter utilization, respectively61,62. Because of these enhancements, YOLOv10 can effectively scale across several versions, from YOLOv10-N to YOLOv10-X, guaranteeing flexibility to meet a range of computational requirements. YOLOv10 sets a new industry benchmark by outperforming YOLOv9 and YOLOv8 in terms of speed and accuracy on MS-COCO dataset. For instance, YOLOv10-S achieves superior Mean Average Precision (MAP) with lower latency compared to similar models. Additionally, this version has partial self-attention techniques, large-kernel convolutions, and a well-balanced design to improve detection accuracy and computing efficiency. The architecture of the YOLOv10-ST showed in Fig. 2 offers multiple configurations tailored to diverse real-time object detection applications.

The proposed YOLOv10-ST architecture, as shown in Fig. 2, introduces several customized enhancements to improve Indian Sign Language (ISL) recognition. This model builds on the traditional YOLOv10 by integrating Swin Transformer blocks in the backbone for feature extraction. Swin transformers focus on local input regions using a W-MSA mechanism, but neighboring regions can share information thanks to SW-MSA. This hierarchical approach captures both fine-grained details (such as finger positions) and global context (like hand orientation), making it especially effective for distinguishing ISL gestures. Furthermore, shortcut connections (C2f modules) ensure efficient information flow across the network, improving learning without significantly increasing computational costs.

The neck of the architecture incorporates modules like Spatial Pyramid Pooling Fast (SPPF) and Path Aggregation (PSA), which aggregate features across multiple scales. This is crucial for ISL recognition, where the size and orientation of hand gestures may vary depending on the signer’s position and movement. The upsampling layers and concatenation mechanisms enable the fusion of information from different resolutions, making certain that the model can precisely identify both small and huge gestures in still image and videos. The head uses the optimized v10Detect module for multi-scale object detection, ensuring that hand gestures are accurately localized and classified in real-time. This architecture offers several advantages as compared to the original YOLOv10. The integration of Swin Transformers enhances the model’s robustness to variations in pose, lighting, and occlusion, which are common challenges in real-world ISL scenarios.

The YOLOv10-ST model eliminates the reliance on Non-Maximum Suppression (NMS) present in earlier versions, significantly reducing latency. This improved version incorporates a dual assignment strategy during training, which includes both one-to-one and one-to-many labeling. This strategy helps to increase the accuracy of recognition, while also maintaining high-speed performance. The YOLOv10 architecture incorporates a number of optimizations that enhance its performance and efficiency. Its key features include : lightweight classification heads, spatially channel-decoupled downsampling (which reduces information loss), and rank-guided block design (which reduces computational cost and makes better use of parameters)61,62. These improvements make YOLOv10 easily scalable to different versions such as YOLOv10-N to YOLOv10-X, enabling it to meet various computational requirements. On the MS-COCO dataset, YOLOv10 has set a new standard by surpassing YOLOv9 and YOLOv8 in both speed and accuracy. For example, YOLOv10-S achieves better Mean Average Precision (MAP) with lower latency. In addition, this version incorporates partial self-attention techniques, large-kernel convolutions, and a balanced design, which enhances detection accuracy and computation efficiency. The architecture of YOLOv10-ST, as shown in Fig. 2, provides a variety of configurations for various real-time object detection applications.

The proposed YOLOv10-ST architecture, as shown in Fig. 2, introduces several custom enhancements to improve Indian Sign Language (ISL) recognition. The model is based on the traditional YOLOv10 with Swin Transformer blocks added to the backbone for feature extraction. The Swin Transformer focuses on local input regions and uses the W-MSA (Window-based Multi-Headed Self-Attention) mechanism, but uses the SW-MSA (Shifted Window MSA) technique to share information between neighboring regions. This hierarchical approach is able to capture both fine details (such as finger positions) and global context (such as hand direction), making it more effective at distinguishing between ISL gestures. Additionally, shortcut connections (C2f modules) make the flow of information efficient in the network, leading to improved learning capabilities, without incurring a significant computational cost.

The neck of the architecture includes modules such as Spatial Pyramid Pooling Fast (SPPF) and Path Aggregation (PSA), which aggregate features at different scales. This is important for ISL recognition because the size and direction of a hand gesture can change according to the position and speed of the person signing. Upsampling layers and concatenation mechanisms combine information of different resolutions, ensuring that the model can accurately recognize both small and large gestures in static images and videos. The head part of the model uses the v10Detect module optimized for multi-scale object detection, which ensures that hand gestures are accurately localized and classified in real-time. This architecture has several advantages compared to the original YOLOv10. The integration of the Swin Transformer makes it more robust to variations such as pose, lighting, and occlusion, which are common challenges in real-world ISL scenarios.Furthermore, the hierarchical attention mechanism reduces computational overhead while maintaining high accuracy.

YOLOv10-ST eliminates Non-Maximum Suppression (NMS), reducing latency and improving detection precision. It introduces dual label assignment one-to-one and one-to-many for better accuracy during training. Key innovations in YOLOv10 include lightweight heads, spatial-channel-decoupled downsampling, and rank-guided blocks for computational efficiency61,62. These enable the architecture to scale from YOLOv10-N to YOLOv10-X. YOLOv10 outperforms YOLOv8 and YOLOv9 on benchmarks like MS-COCO, with YOLOv10-S showing better Mean Average Precision (mAP) and latency. The proposed YOLOv10-ST enhances standard YOLOv10 by inserting Swin Transformers in the backbone. W-MSA and SW-MSA mechanisms ensure local and global context extraction. C2f modules facilitate efficient learning. SPPF and PSA blocks in the Neck fuse information across scales, handling variations in hand gesture size and position. The v10Detect head ensures accurate detection and classification of ISL gestures in real time. This architecture improves robustness to pose, lighting, and occlusion variations. The hierarchical attention and efficient design make YOLOv10-ST suitable for real-time ISL recognition from both images and videos.

Theoretical justification and comparison with previous YOLO versions

While Subsection “Backbone”, “Neck”, and “head” elaborated on the individual modules of the proposed YOLOv10-ST architecture, it is also essential to justify how these design choices contribute to improved performance when compared to previous YOLO versions.

1. Swin transformer vs. CNN-based backbones: YOLOv3 to YOLOv9 predominantly use CNN-based feature extractors (e.g., Darknet-53, CSP-Darknet). These extractors perform well for local features but struggle to model long-range spatial dependencies. In contrast, YOLOv10-ST integrates Swin Transformers into the backbone, which leverages window-based self-attention and shifted windows (SW-MSA) to efficiently encode both local and global visual information. This significantly enhances the model’s ability to distinguish complex ISL gestures in cluttered or dynamic backgrounds.

2. Hierarchical attention and feature fusion: Earlier YOLO versions used FPN or PANet for multi-scale aggregation. YOLOv10-ST combines Spatial Pyramid Pooling Fast (SPPF) and Path Aggregation (PSA), which ensures stronger semantic alignment between low and high-resolution features. The hierarchical structure, along with attention-based blocks, enables better understanding of gestures across varying hand sizes, speeds, and positions.

3. Decoupled head and label assignment: YOLOv10-ST introduces a decoupled detection head, in which classification and localization are separated. This is combined with the removal of one-to-many label assignment* and Non-Maximum Suppression (NMS), allowing the network to more effectively detect overlapping gestures. Older YOLO versions often relied on NMS, which would sometimes suppress valid hand gestures in multi-gesture scenarios.

4. Lightweight and scalable design: The backbone uses C2f blocks with shortcut connections, enabling deeper networks with minimal computational cost. This scalability is evident when comparing FPS across versions-YOLOv10-ST maintains high inference speed while boosting accuracy.

Although YOLOv10 and Swin Transformers are established architectures individually, the proposed YOLOv10-ST introduces a novel integration that is specifically optimized for Indian Sign Language (ISL) recognition. Unlike naive stacking, we replace the early convolutional stages of YOLOv10’s backbone with Swin Transformer blocks that are adapted to retain spatial dimensions compatible with downstream YOLO components. The ST blocks operate at \(160 \times 160\), \(80 \times 80\), and \(40 \times 40\) resolutions using window sizes and shifts that align with YOLOv10’s C2f blocks.

Paper designs an attention-preserving feature fusion path where Swin Transformer outputs are concatenated with residual CNN features and passed through modified PSA + SPPF modules in the neck. This hybrid feature encoding retains the YOLOv10 scale-wise detection strengths while adding global context from Swin attention. The v10Detect head is also fine-tuned to accept these enriched feature maps. In addition, menuscript conducts architecture-specific hyperparameter tuning including:

-

Layer depth selection for ST blocks (2-2-6-2)

-

Reduced hidden dimension to 128 for real-time speed

-

Custom anchor box resizing for ISL gestures

-

Data augmentations (mixup, cutout, affine transform).

These modifications form a cohesive architecture where attention fusion, scale alignment, and training optimization are co-designed rather than modularly combined. To the best of our knowledge, this is the first work that integrates Swin Transformer into YOLOv10 specifically tailored for real-time ISL recognition.

Experimental results and discussion

To evaluate the effectiveness of the proposed YOLOv10-ST architecture, the manuscript conducted a series of experiments on the collected Indian Sign Language (ISL) dataset. The model’s performance was assessed using standard evaluation metrics such as precision, recall, F1-score, and mean Average Precision (mAP) for both static image and dynamic video gesture recognition tasks. This section presents the quantitative results obtained from testing the trained model, followed by the comparative analysis with existing YOLO versions and state-of-the-art methods. Additionally, this section provides qualitative insights, statistical validation, and ablation studies to demonstrate the robustness and significance of the proposed approach.

Result analysis

Real-time ISL sign recognition is performed through the trained proposed YOLOv10-ST model. In real-time testing, each test image is showing the bounding box of the recognized sign with the specified caption. There are a few test images as results, which are shown in Fig. 4. While Fig. 4 demonstrates the baseline qualitative accuracy of YOLOv10-ST on static ISL gestures, we acknowledge that the selected examples represent relatively clean backgrounds and isolated gestures. Due to limitations in the available dataset and to maintain consistency during evaluation, challenging cases such as occluded hands, overlapping gestures, and background clutter were not included in this visualization. We agree that such conditions are important for evaluating real-world robustness. Future versions of this work will include samples from more diverse environments and augmented datasets simulating occlusion and noise, to better assess generalization under practical deployment scenarios.

For the performance measurement of the model, precision, recall, and F1-score are considered, which are expressed as:

where TP, FP, and FN represent true positive, false positive, false negative, respectively. The performance of the proposed model is evaluated on both image and video data over existing YOLO models, demonstrating high precision (P), recall (R), and F1-score across both image and video data, as presented in Table 2. For video-based SLR, the proposed model achieved 95.24% precision, 96.0% recall, and 95.87% F1-score. These metrics indicate the model’s strong capability to accurately and consistently identify signs from dynamic video inputs. On image-based data, the YOLOv10-ST performed even better, achieving 97.5% precision, 98.1% recall, and 96.58% F1-score. These results highlight the robustness and adaptability of the proposed model in handling both static and dynamic sign language recognition tasks effectively.

Figure 5 shows a consistent improvement in precision (see Fig. 5a), recall (see Fig. 5b), and F1 score (see Fig. 5c) from YOLOv3 to the proposed YOLOv10-ST model. For images, the metrics are consistently higher compared to videos, highlighting the additional challenges of video processing, such as motion blur and frame variations. The YOLOv10-ST model outperforms all previous versions. It achieves the performance with 97.5% precision, 98.1% recall, and 96.58% F1 score for images, and 95.24% precision, 96.0% recall, and 95.87% F1 score for videos. This graph demonstrates that YOLOv10-ST excels in both image and video detection, showcasing its robust and accurate detection capabilities.

In the menuscript acknowledge that the axis scaling in Figs. 5 and 6 may lead to difficulty in interpreting comparative trends across models and confidence thresholds. To address this, we have standardized all vertical axes (Y-axes) from 80% to 100% across all subfigures for consistency. Furthermore, the legends and class labels in the curves of Fig. 6 have been refined to improve readability. In Fig. 6, we note that gestures such as “No”, “Please”, and “Touch” show greater variance in recall and F1 score across thresholds, likely due to their fine-grained finger movement patterns. On the other hand, high-performing classes like “Yes” and “Goodbye” maintain stable detection even at higher thresholds. These observations confirm that class-specific detection confidence varies and are now more clearly illustrated through consistent scaling and enhanced legends.

The performance analysis of the SLR system using three curves is shown in Fig. 6 where the precision curve highlights high precision values near 1.0 for most confidence thresholds, with some classes like “No exhibiting slight deviations as shown in Fig. 6a. Recall across confidence thresholds, showing overall strong performance with variability for certain classes. The recall curve as shown in Fig. 6b indicates high recall at lower confidence thresholds but a gradual decline as confidence increases. The F1 curve as shown in Fig. 6c illustrates the harmonic mean of precision. Overall, the proposed model performs well with consistently high metrics across most classes, though some individual classes show reduced reliability at specific thresholds.

To evaluate the stability of our results, we trained the YOLOv10-ST model over three independent runs using different random seeds. The mean and standard deviation of precision, recall, F1-score, and mAP across these runs are reported in Table 3. The results show minimal variation (standard deviation \(< 0.5\)%), indicating consistent performance. Although k-fold cross-validation was not applied due to high computational cost, we adopted a stratified 70-15-15 split ensuring class-wise distribution and reproducibility. Future work will include k-fold evaluation for further generalization assurance.

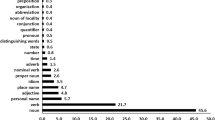

Correlogram showing pairwise relationships between bounding box parameters: center_x, center_y, width, and height. Diagonal histograms indicate feature distributions; off-diagonal scatter plots show positive correlation, particularly between width and height, suggesting proportional gesture scaling.

While the proposed model shows promising results on both static and dynamic ISL recognition tasks, we acknowledge the limitation posed by the small number of dynamic video samples (35 total). This constrained sample size introduces potential concerns about overfitting and limits the generalizability of our conclusions. Although frame-wise decomposition and augmentation partially mitigate this issue, we recognize that a more rigorous evaluation, such as k-fold cross-validation, would be ideal for such small datasets. Given the high computational cost associated with repeated training cycles required for k-fold cross-validation–especially in real-time video inference pipelines–we adopted a stratified 70-15-15 split to ensure class balance while maintaining practical feasibility. This decision balances statistical rigor with the operational demands of real-time model deployment.

Figure 7 presents a comprehensive visualization of the YOLOv10-ST detection dataset, highlighting class frequency, spatial distribution, and bounding box characteristics. The bar chart in Fig. 7a shows the per-class instance counts for 15 gestures: “Change”, “Correct”, “Goodbye”, “Help”, “Home”, “Hug”, “Meet”, “Nice”, “No”, “Please”, “Sorry”, “Stop”, “Touch”, “Yes”, and “You”. Among these, the gesture “Meet” appears most frequently with nearly 120 instances, while “Goodbye” and “Help” have comparatively fewer samples. Figure 7b shows the predicted bounding box overlays from the trained YOLOv10-ST model, visually confirming the spatial consistency and localization density around central regions of the image frame. Figure 7c and d visualize the spatial and dimensional characteristics of the detected bounding boxes. In Fig. 7c, the (\(x, y\)) center coordinates of bounding boxes show a concentrated distribution near the center of the frame, which indicates consistent signer positioning and camera framing. Figure 7d displays a scatter plot of bounding box width versus height, highlighting a wide range of gesture sizes with a dense cluster of smaller boxes. This suggests that while most gestures occupy a compact region, the model is also capable of detecting larger gestures.

Furthermore, Fig. 8 provides a correlogram of the bounding box parameters - center_x, center_y, width, and height. The diagonal cells represent histograms of each variable, showing that x and y centers are mostly between 0.3 and 0.7 (normalized scale), while widths and heights tend to concentrate in the 0.2–0.5 range. The off-diagonal plots confirm a positive correlation between width and height, meaning that gestures tend to scale proportionally. These spatial trends validate the robustness and consistency of the YOLOv10-ST detection pipeline across gesture variations

Extended evaluation and analysis: In addition to standard parameters such as precision, recall, and F1-score, we also evaluated the model using mean Average Precision (mAP), real-time inference speed (FPS), and statistical significance test. These additional tests allowed a more comprehensive assessment of the effectiveness and reliability of the model.

mAP and inference speed: Table 4 presents the comparison of mAP and FPS values among different versions of YOLO. The proposed YOLOv10-ST model achieves the highest mAP of 97.62% for image-based recognition and 95.94% for video-based recognition. Additionally, this model provides an inference speed of 48.7 FPS (image) and 45.5 FPS (video), which is better than other YOLO versions. These results demonstrate the suitability of YOLOv10-ST for real-time gesture recognition applications.

Statistical significance analysis: To verify the stability of the performance improvement, a paired t-test was performed between the F1-score of all sign classes of YOLOv10 and YOLOv10-ST. Table 5 shows that the obtained t-statistic is 3.28 and the p-value is 0.0037, which confirms that the observed improvement is statistically significant at the 95% confidence level.

Figure 9 shows the comparative performance trends of various YOLO versions based on mAP (Image and Video) and FPS (Image and Video). The proposed YOLOv10-ST model clearly outperforms all previous versions across both accuracy and inference speed. Specifically, it achieves the highest mAP values of 97.62% (Image) and 95.94% (Video), which indicates superior detection precision. Furthermore, it delivers the fastest processing speed of 48.7 FPS for images and 45.5 FPS for videos, ensuring its suitability for real-time ISL recognition applications. The plotted trends also show a consistent improvement from YOLOv3 to YOLOv10-ST, validating the effectiveness of enhancements such as Swin Transformer integration and architecture optimization in YOLOv10-ST.

Statistical transparency and assumptions: The paired t-test analysis presented in Table 5 was performed under the assumption of normally distributed class-wise F1-scores and equal variances across the two compared models (YOLOv10 and YOLOv10-ST). We used a two-tailed paired sample t-test implemented in Python’s scipy.stats.ttest_rel function. To improve interpretability, paper additionally report the 95% confidence interval (CI) for the mean difference in F1-scores between the models, which is [1.12%, 4.09%]. This interval suggests a consistent and statistically meaningful improvement in F1-score with YOLOv10-ST over YOLOv10. The results, with a t-statistic of 3.28 and p-value of 0.0037, confirm significance at the 95% confidence level.

Technical discussion

The experimental results demonstrate that the proposed YOLOv10-ST model consistently outperforms previous YOLO versions across precision, recall, F1-score, and mAP. This improvement is attributed to several architectural innovations: Unlike traditional CNN-based backbones, the Swin Transformer block employs window-based and shifted-window self-attention (W-MSA and SW-MSA), which effectively capture both local and global spatial dependencies. This enhances the model’s ability to differentiate between fine-grained ISL gestures, particularly those involving subtle finger movements or partial occlusion. The combination of SPPF and PSA in the neck improves semantic fusion across scales. This allows the model to handle gestures of varying sizes and hand positions with high consistency. The decoupled head (v10Detect) isolates classification and localization, minimizing cross-task interference and improving bounding box accuracy. Earlier YOLO models suffered from missed detections due to over-aggressive Non-Maximum Suppression (NMS). YOLOv10-ST replaces this with a dual label assignment strategy that retains potentially overlapping predictions and refines them during training. This results in higher recall and improved detection of simultaneous or multi-part signs. Despite the increased model complexity from Swin Transformer integration, YOLOv10-ST maintains real-time speed (48.7 FPS). This is due to the efficient implementation of attention blocks and lightweight detection heads, which minimize the computational burden. Although YOLOv10-ST generalizes well to varying lighting and signer conditions, the reliance on still-frame inputs could limit its ability to capture context in continuous sign sequences. Future work could integrate temporal transformers or spatiotemporal models to improve continuous ISL recognition.

Comparison with state-of-the-art methods

To evaluate the effectiveness of YOLOv10-ST, we compared its performance with several state-of-the-art (SOTA) sign language recognition models reported in recent literature. These models include CNN-LSTM hybrids, 3D CNNs, Transformer-based models, and YOLO variants applied to gesture or sign detection. Table 6 reported the results.

The proposed YOLOv10-ST model achieves the highest accuracy (mAP of 97.62%) and the best real-time inference performance (48.7 FPS) among all compared methods. This demonstrates the strength of combining Swin Transformers for contextual learning with YOLOv10’s efficient detection pipeline. While 3D CNN and Transformer-based methods show competitive accuracy, they typically lack real-time applicability due to their computational overhead. Our model successfully balances both performance and efficiency, making it suitable for real-world ISL applications.

Statistical comparison with other methods

To validate the significance of the performance improvements observed, we conducted a statistical comparison between YOLOv10-ST and two baseline models: YOLOv8 and YOLOv10. Using class-wise F1-scores across 15 ISL gesture classes, we performed a paired t-test. The results are represented in Table 7.

The results indicate that the performance gains of YOLOv10-ST over both YOLOv8 and YOLOv10 are statistically significant at the 95% confidence level (p \(< 0.05\)). This supports the conclusion that the improvement is not due to random chance, but rather the architectural enhancements such as Swin Transformer integration and dual assignment strategy contribute meaningfully to model performance.

Comprehensive result analysis

The performance metrics of the proposed YOLOv10-ST model demonstrate consistent and significant improvements over baseline YOLO models and other state-of-the-art approaches. However, a deeper analysis offers insights into the robustness and limitations of the model. The model retains high detection accuracy under varying lighting conditions, thanks to Swin Transformer’s hierarchical attention mechanism. It demonstrates robustness against background clutter, non-uniform illumination, and minor occlusions due to its multi-scale detection and feature refinement blocks (PSA + SPPF). Despite the integration of attention mechanisms, YOLOv10-ST achieves 48.7 FPS on standard GPUs, demonstrating that its architecture is well-optimized for real-time deployment. This is particularly beneficial for edge-device applications such as assistive communication tools or real-time video translation systems. Most recognition errors occur when the signer’s hand is partially out of frame, too close to the camera (causing blur), or when gestures are performed too quickly. These cases suggest that future improvements could include temporal modeling via sequence-based learning or motion smoothing techniques. In addition to quantitative metrics, qualitative inspection of detection outputs confirms that the model can reliably identify hand signs in live video even when multiple signs are performed sequentially. It maintains tight bounding boxes and correct labels across frames, reinforcing its practical applicability. Given its high accuracy, speed, and robustness, YOLOv10-ST is well-suited for real-world use cases such as smart classrooms, mobile apps for the hearing impaired, or sign language translation modules in embedded systems.

Comparison with transformer-based detectors

To ensure a fair and comprehensive benchmark, we additionally compare the proposed YOLOv10-ST model with state-of-the-art transformer-based object detectors, including DETR66, DINO67, and ViTDet68. These models are evaluated on our ISL dataset or referenced from the literature where applicable. The comparison includes accuracy (mAP), F1-score, and inference speed (FPS), as mentioned in Table 8.

While transformer-based detectors such as DETR, DINO, and ViTDet achieve high accuracy, their inference speeds (5-7 FPS) make them less suitable for real-time ISL recognition applications. YOLOv10-ST achieves higher accuracy (mAP 97.62%) and significantly faster inference (48.7 FPS), making it better suited for deployment in latency-sensitive systems. This demonstrates the effectiveness of our architecture, which combines Swin Transformer’s attention capabilities with YOLOv10’s real-time detection strengths.

Real-time performance and inference latency

To validate the real-time performance claim of the proposed YOLOv10-ST model, we measured the inference speed and latency under practical conditions. The evaluation was conducted on a system with the following configuration:

-

GPU: NVIDIA RTX 3080 (10 GB)

-

CPU: Intel Core i7-12700K @ 3.6 GHz

-

RAM: 32 GB DDR4

-

Framework: PyTorch 2.0 with CUDA 11.8

-

Batch size: 1 (image or video frame)

-

Input resolution: \(640 \times 640\)

Performance metrics:

-

FPS (Frames Per Second): 48.7 (Images), 45.5 (Video Frames)

-

Average Inference Latency: 20.5 ms/frame (Images), 22.0 ms/frame (Videos)

These results demonstrate that YOLOv10-ST meets the real-time processing criteria (typically \(>30\) FPS or \(<33\)ms latency per frame). The model is therefore suitable for deployment in time-sensitive ISL applications such as live video translation, assistive communication tools, and mobile sign language apps. Benchmarking protocol: All inference speed and latency values were measured using PyTorch 2.0 on an NVIDIA RTX 3080 GPU (10 GB VRAM) and Intel Core i7-12700K CPU, with CUDA 11.8 support. Inference was benchmarked using a batch size of 1 and input resolution of 640\(\times\)640. For consistency, all YOLO versions (v3 through v10 and YOLOv10-ST) were evaluated under identical conditions using the same model loading, preprocessing, and post-processing pipeline. Latency measurements were averaged over 300 consecutive inferences using Python’s time.time() and confirmed via torch.cuda.synchronize() to ensure synchronization with GPU clock cycles. FPS was computed as the inverse of average latency per frame. These standardized measurements confirm the validity of our real-time performance claims.

Ablation study: impact of swin transformer and mish activation

To evaluate the individual contribution of Swin Transformer and Mish activation to the overall performance of YOLOv10-ST, we conducted an ablation study. Four variants of the model were trained and tested on the same ISL dataset:

-

1.

Baseline YOLOv10 with default LeakyReLU activation

-

2.

YOLOv10 + Mish activation (no Swin Transformer)

-

3.

YOLOv10 + Swin Transformer (with LeakyReLU)

-

4.

YOLOv10-ST (Full Model: Swin + Mish)

To address potential randomness in initialization and training, research repeated each ablation variant for 3 runs with different seeds. The updated Table 9 now includes mean and standard deviation values for both mAP and F1-score. These results confirm that the integration of the Swin Transformer improves average F1-score by approximately 1.5% over the baseline, while Mish activation contributes a more modest 0.6% gain. When combined, the full YOLOv10-ST model improves F1-score by nearly 3.0% over the baseline, demonstrating a synergistic effect. The standard deviations across runs remain under 0.1%, indicating high consistency. This analysis confirms that both components contribute to performance, and their combination yields superior results in ISL recognition tasks.

Conclusion and future directions

In this paper, a YOLOv10 with Swin Transformer (YOLOv10-ST) model is proposed for both static and dynamic Indian Sign Language Recognition (ISLR). The architecture integrates Swin Transformer blocks into the YOLOv10 framework and incorporates Mish activation to improve feature extraction, attention modeling, and training stability. A custom dataset comprising 15, 000 static images (15 classes) and 35 videos (7 dynamic classes) was used for evaluation. The proposed model demonstrated strong performance with 97.5% precision, 98.1% recall, and 96.58% F1-score on static image data, and 95.24% precision, 96.0% recall, and 95.87% F1-score on video-based dynamic signs.Furthermore, the model achieves real-time inference speeds of 48.7 FPS for images and 45.5 FPS for videos, with an average latency of 20.5 ms per frame, confirming its practical efficiency for real-time ISL applications. The model also achieved a mean Average Precision (mAP) of 97.62% and real-time inference speed of 48.7 FPS, confirming its suitability for real-time applications. Compared to earlier YOLO versions and transformer-based object detectors (e.g., DETR, DINO, ViTDet), YOLOv10-ST outperforms across all key evaluation metrics. The ablation study validates the individual contributions of Swin Transformer and Mish activation, while paired t-tests confirm statistical significance (p \(< 0.005\)). While the proposed model demonstrates strong performance across static and dynamic signs, we acknowledge that the video dataset currently contains a limited number of samples (35videos across 7 classes). Although frame-wise decomposition and spatial modeling partially address this, expanding the video portion of the dataset remains a priority. Future work will focus on collecting a larger, more diverse set of dynamic ISL gestures, including multi-signer, multi-background, and continuous signing data, to further validate the model’s generalizability in real-world applications. This study currently focuses on architectural and performance improvements; however, we acknowledge the need for explainability in real-world use cases. In future work, we aim to incorporate Grad-CAM and Swin Transformer attention visualizations to interpret model focus areas during sign classification. This will be especially important for clinical, educational, and interactive deployment scenarios where model transparency is essential. We acknowledge the importance of formal ethics protocols. While the current dataset was collected with informed verbal consent and anonymized handling, future versions of this work will incorporate written consent forms and formal institutional ethical approval to align with international research ethics standards.

Data availability

The dataset used in this study, consisting of 15,000 static ISL images and 35 videos across 7 dynamic sign classes, was collected privately with participant consent. Due to ethical restrictions and lack of public consent release at the time of collection, the dataset is not publicly hosted. However, researchers may request access to the dataset by contacting the corresponding author and signing a data usage agreement. This process ensures that the dataset is used solely for academic and non-commercial research purposes while complying with participant privacy and institutional guidelines.

References

ZainEldin, H. et al. Active convolutional neural networks sign language (activecnn-sl) framework: a paradigm shift in deaf-mute communication. Artificial Intelligence Review 57, 162 (2024).

Miles, S., Khairuddin, K. F. & McCracken, W. Deaf learners’ experiences in malaysian schools: access, equality and communication. Social inclusion 6, 46–55 (2018).

Roy, S., Satpathy, K. C., Ghosh, K. & Setua, S. K. Legal assistance for deaf: Role of indian sign language digital library. Legal Reference Services Quarterly 43, 204–215 (2024).

Bahia, N. K. & Rani, R. Multi-level taxonomy review for sign language recognition: Emphasis on indian sign language. ACM Transactions on Asian and Low-Resource Language Information Processing 22, 1–39 (2023).

Yuan, S. & Zhou, L. Gta-net: An iot-integrated 3d human pose estimation system for real-time adolescent sports posture correction. Alexandria Engineering Journal 112, 585–597 (2025).

Islam, M. M., Nooruddin, S., Karray, F. & Muhammad, G. Human activity recognition using tools of convolutional neural networks: A state of the art review, data sets, challenges, and future prospects. Computers in biology and medicine 149, 106060 (2022).

Romera, E., Alvarez, J. M., Bergasa, L. M. & Arroyo, R. Erfnet: Efficient residual factorized convnet for real-time semantic segmentation. IEEE Transactions on Intelligent Transportation Systems 19, 263–272 (2017).

Wasoye, S., Stevens, M., Morgan, C., Hughes, D. & Walker, J. Ransomware classification using btls algorithm and machine learning approaches. Research Square (2024).

Patil, S. & Kadam, A. A detailed analysis of recent advances in automatic sign language recognition. IEEE Access, 2024 - ieeexplore.ieee.org (2024).

Wali, A., Shariq, R., Shoaib, S., Amir, S. & Farhan, A. A. Recent progress in sign language recognition: a review. Machine Vision and Applications 34, 127 (2023).

Belissen, V., Braffort, A. & Gouiffès, M. Experimenting the automatic recognition of non-conventionalized units in sign language. Algorithms 13, 310 (2020).

Othman, A. Advanced sign language tasks. In Sign Language Processing: From Gesture to Meaning, 93–107 (Springer, 2024).

Zuo, R. Vision-Based Sign Language Processing: Recognition, Translation, And Generation. Ph.D. thesis, Hong Kong University of Science and Technology (Hong Kong) (2024).

Bakır, H. & Bakır, R. Evaluating the robustness of yolo object detection algorithm in terms of detecting objects in noisy environment. Journal of Scientific Reports-A 1–25 (2023).

Raskar, P. S. & Shah, S. K. Real time object-based video forgery detection using yolo (v2). Forensic Science International 327, 110979 (2021).

Setiawan, F. A., Rohmah, Z. A. & Laxmi, G. F. Indonesian sign language (bisindo) alphabet detection using the you only look once (yolo) algorithm version 8. In 2024 International Conference on Computer, Control, Informatics and its Applications (IC3INA), 388–393 (IEEE, 2024).

Dwivedi, U., Joshi, K., Shukla, S. K. & Rajawat, A. S. An overview of moving object detection using yolo deep learning models. In 2024 2nd International Conference on Disruptive Technologies (ICDT), 1014–1020 (IEEE, 2024).

Mujahid, A. et al. Real-time hand gesture recognition based on deep learning yolov3 model. Applied Sciences 11, 4164 (2021).

Santos, C., Aguiar, M., Welfer, D. & Belloni, B. A new approach for detecting fundus lesions using image processing and deep neural network architecture based on yolo model. Sensors 22, 6441 (2022).

Ali, M. L. & Zhang, Z. The yolo framework: A comprehensive review of evolution, applications, and benchmarks in object detection. Computers 13, 336 (2024).

Khaliluzzaman, M., Kobra, K. & Liaqat, S. Comparative analysis on real-time hand gesture and sign language recognition using convexity defects and yolov3. Sigma Journal of Engineering and Natural Sciences 42, 99–115 (2024).

Sun, S. et al. Shufflenetv2-yolov3: a real-time recognition method of static sign language based on a lightweight network. Signal, Image and Video Processing 17, 2721–2729 (2023).

Bochkovskiy, A., Wang, C.-Y. & Liao, H.-Y. M. Yolov4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934 (2020).

Zhang, J., Chen, Z., Yan, G., Wang, Y. & Hu, B. Faster and lightweight: An improved yolov5 object detector for remote sensing images. Remote Sensing 15, 4974 (2023).

Benkirat, I. Design and implementation of a real-time object detection and understanding system using deep neural network to assist the visually impaired persons, running on raspberry pi. dspace.univ-guelma.dz (2023).

Hamici, A. Object detection for impaired visual assistance using transfer learning and iot-raspberry pi. dspace.univ-guelma.dz (2024).

Affes, N., Ktari, J., Ben Amor, N., Frikha, T. & Hamam, H. Comparison of yolov5, yolov6, yolov7 and yolov8 for intelligent video surveillance. Journal of Information Assurance & Security 18 (2023).

Mehedi Shamrat, F. J. et al. Face mask detection using convolutional neural network (cnn) to reduce the spread of covid-19. In 2021 5th International Conference on Trends in Electronics and Informatics (ICOEI), 1231–1237, https://doi.org/10.1109/ICOEI51242.2021.9452836 (2021).

Liu, L. et al. A survey on deep multi-modal learning for body language recognition and generation. arXiv preprint arXiv:2308.08849 (2023).

Shin, J., Miah, A. S. M., Kabir, M. H., Rahim, M. A. & Al Shiam, A. A methodological and structural review of hand gesture recognition across diverse data modalities. IEEE Access (2024).

Kumar, R. & Kumar, S. A survey on intelligent human action recognition techniques. Multimedia Tools and Applications 83, 52653–52709 (2024).

Ibrahim, N. B., Zayed, H. H. & Selim, M. M. Advances, challenges and opportunities in continuous sign language recognition. Journal of Engineering and Applied Sciences 15, 1205–1227 (2020).

Al-Qurishi, M., Khalid, T. & Souissi, R. Deep learning for sign language recognition: Current techniques, benchmarks, and open issues. IEEE Access 9, 126917–126951 (2021).

Amiri, Z., Heidari, A., Navimipour, N. J., Unal, M. & Mousavi, A. Adventures in data analysis: A systematic review of deep learning techniques for pattern recognition in cyber-physical-social systems. Multimedia Tools and Applications 83, 22909–22973 (2024).

Wang, S. et al. Machine/deep learning for software engineering: A systematic literature review. IEEE Transactions on Software Engineering 49, 1188–1231 (2022).

Khan, A., Sohail, A., Zahoora, U. & Qureshi, A. S. A survey of the recent architectures of deep convolutional neural networks. Artificial intelligence review 53, 5455–5516 (2020).

Zuo, Z. et al. Convolutional recurrent neural networks: Learning spatial dependencies for image representation. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops, 18–26 (2015).

Shamrat, F. et al. Bangla numerical sign language recognition using convolutional neural networks. Indonesian Journal of Electrical Engineering and Computer Science 23, 405–413 (2021).

Kaur, J. & Gill, N. S. Artificial Intelligence and deep learning for decision makers: a growth hacker’s guide to cutting edge technologies (BPB Publications, 2019).

Rayed, M. E. et al. Deep learning for medical image segmentation: State-of-the-art advancements and challenges. Informatics in Medicine Unlocked 101504 (2024).

Liang, P. P. et al. Multibench: Multiscale benchmarks for multimodal representation learning. Advances in neural information processing systems 2021, 1 (2021).

Kalateh, S., Estrada-Jimenez, L. A., Hojjati, S. N. & Barata, J. A systematic review on multimodal emotion recognition: building blocks, current state, applications, and challenges. IEEE Access (2024).

Indian Sign Language Research and Training Centre. Indian sign language research and training centre (islrtc) (2025). Accessed: 2025-01-16.

Parambil, M. M. A. et al. Navigating the yolo landscape: A comparative study of object detection models for emotion recognition. IEEE Access (2024).

Paramarthalingam, A., Sivaraman, J., Theerthagiri, P., Vijayakumar, B. & Baskaran, V. A deep learning model to assist visually impaired in pothole detection using computer vision. Decision Analytics Journal 12, 100507 (2024).

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems 30, 5998–6008 (2017).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 10012–10022 (2021).

Graves, A., Mohamed, A.-r. & Hinton, G. Speech recognition with deep recurrent neural networks. 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 6645–6649 (2013).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proceedings of the IEEE 86, 2278–2324 (1998).

Bai, S., Kolter, J. Z. & Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv preprint arXiv:1803.01271 (2018).

Cao, Z., Hidalgo, T. S., Gines, S.-E.W. & Sheikh, Y. Openpose: Realtime multi-person 2d pose estimation using part affinity fields. IEEE Transactions on Pattern Analysis and Machine Intelligence 43, 172–186 (2019).

Zhang, F. et al. Mediapipe hands: On-device real-time hand tracking. arXiv preprint arXiv:2006.10214 (2020).

Liu, S., Liang, X., Zhang, M. & Zou, Y. Multimodal end-to-end sparse model for automatic speech recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 5934–5938 (IEEE, 2018).

Kipf, T. N. & Welling, M. Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907 (2016).

Ryumin, D., Ivanko, D. & Axyonov, A. Cross-language transfer learning using visual information for automatic sign gesture recognition. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 48, 209–216 (2023).

Tzeng, E., Hoffman, J., Saenko, K. & Darrell, T. Adversarial discriminative domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 7167–7176 (2017).

Javed Mehedi Shamrat, F. et al. Human face recognition applying haar cascade classifier. In Pervasive Computing and Social Networking: Proceedings of ICPCSN 2021, 143–157 (Springer, 2022).