Abstract

Recently developed pathology foundation models, pretrained on large-scale pathology datasets, have demonstrated excellent performance in various downstream tasks. This study evaluated the utility of pathology foundation models combined with multiple instance learning (MIL) for kidney pathology analysis. We used 242 hematoxylin and eosin-stained whole slide images (WSIs) from the Kidney Precision Medicine Project (KPMP) and Japan-Pathology Artificial Intelligence Diagnostics Project databases as the development cohort, comprising 47 healthy controls, 35 acute interstitial nephritis, and 160 diabetic kidney disease (DKD) slides. External validation was performed using 83 WSIs from the University of Tokyo Hospital. Pretrained pathology foundation models were utilized as patch encoders and compared with ImageNet-pretrained ResNet50. Using the extracted patch features, we trained MIL models to classify diagnoses. In internal validation, all foundation models outperformed ResNet50, achieving area under the receiver operating characteristic curve (AUROC) over 0.980. In external validation, the performance of ResNet50 markedly dropped, which was in contrast to all foundation models. Visualization of attention heatmaps confirmed that foundation models accurately recognized diagnostically relevant structures. In overt proteinuria (albuminuria ≥ 300 mg/gCre or proteinuria ≥ 1000 mg/gCre) prediction task, foundation models also outperformed ResNet50. We successfully integrated pathology foundation models with MIL to achieve robust diagnostic performance.

Similar content being viewed by others

Introduction

The diagnosis of kidney diseases requires interpreting diverse pathological features, such as inflammation, atherosclerosis, and metabolic changes, which demands significant effort and expertise. The advent of whole-slide imaging (WSI) and advances in deep learning have spurred intensive research in digital pathology. In particular, image analysis with convolutional neural networks (CNNs) has been successfully applied to kidney pathology, enabling segmentation of functional structures such as glomeruli and tubules, as well as quantitative analysis of kidney pathology and its association with clinical outcomes1,2,3. These approaches are expected to facilitate efficient kidney histopathological analysis and reduce the time and effort required for evaluation. However, these segmentation-based approaches heavily depend on manually annotated datasets for supervised training, posing a significant limitation to scalability. Self-supervised learning (SSL)4 has been introduced as a powerful approach for extracting meaningful representations from unlabeled large datasets. In kidney pathology, the use of SSL for effective feature extraction from glomerular images with limited annotations has been reported5. Recently, pathology foundation models, trained using SSL with Vision Transformer (ViT)6 architectures, have demonstrated remarkable performance in various downstream tasks compared with ImageNet-trained CNN-based models7,8,9,10,11. A key difference between CNNs and ViTs is their inductive biases. CNNs extract spatial patterns using small convolutional kernels across multiple layers and have strong inductive biases, which leads to high performance even with small datasets. However, this inductive bias in turn prevents CNNs from fully leveraging large-scale datasets. In contrast, ViTs have minimal inductive biases, which enable them to outperform CNNs when trained on large-scale datasets12. Pathology foundation models can be used as patch-level encoders to extract meaningful features from image patches, and these extracted features can be applied to downstream tasks such as classification and segmentation.

One of the major computational challenges in WSI analysis is the massive data size, making direct slide-level analysis infeasible. The standard solution is dividing each slide into smaller image patches to facilitate computational processing. Multiple instance learning (MIL) is an effective approach for slide-level classification, as it aggregates information from individual patches without requiring patch-level annotations. In heterogeneous tissue slides, where diagnostic value varies widely among patches, MIL excels by learning to focus on important patches for classification.

In this study, we utilized pathology foundation models as encoders to extract features from image patches and employed MIL to aggregate patch-level features for classifying expert pathologist-derived disease categorizations and predicting a clinical outcome. Rather than conducting detailed assessments of glomerular lesions commonly performed in prior studies, we aimed to investigate whether slide-level supervision can identify diagnostically informative patches without patch-level labels. We therefore focused on acute interstitial nephritis (AIN) and diabetic kidney disease (DKD), in which discriminative pathological findings are distributed across the slide, and we evaluated overt proteinuria (albuminuria ≥ 300 mg/gCre or proteinuria ≥ 1000 mg/gCre) as the clinical outcome. We benchmarked the performance of pathology foundation models against ImageNet-pretrained ResNet5013, a widely used CNN-based model, and assessed robustness to inter-institutional variability by comparing performance with and without stain normalization.

Methods

Dataset

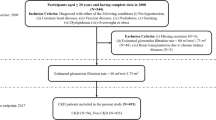

We conducted two analyses: a main disease-classification analysis with external validation and an exploratory clinical-outcome prediction analysis using a single dataset without external validation.

In the main analysis, we developed a diagnostic model for classifying healthy control (HC), AIN, and DKD using H&E-stained WSIs (Fig. 1a). Training data were obtained from two publicly available datasets: the Kidney Precision Medicine Project (KPMP, accessed on 20th January 2025)14 and the Japan-Pathology Artificial Intelligence Diagnostics Project (JP-AID, accessed on 20th January 2025)15. The KPMP dataset comprised 26 HC slides from 21 patients, 26 AIN slides from 12 patients, and 151 DKD slides from 70 patients. From the JP-AID database, we collected H&E-stained WSIs comprising 21 HC slides, nine DKD slides, and nine AIN slides. For external validation, an independent dataset of H&E-stained WSIs was obtained from biopsy slides collected between 2009 and 2025 at the University of Tokyo Hospital (the UT dataset). The UT dataset included 39 HC slides, 17 AIN slides, and 30 DKD slides. Patients with other coexisting kidney diseases were excluded. Diagnoses were based on adjudicated diagnoses (KPMP) and expert pathologist-derived diagnoses (JP-AID and UT). Most HC cases were derived from transplanted kidney biopsies. Detailed dataset description and selection flowcharts are presented in Supplementary Methods and Figures S1-S3. This study protocol adhered to the Declaration of Helsinki. Research Ethics Committee of the Faculty of Medicine of the University of Tokyo approved the study protocol (approval number 2024526NI) and waived the requirement for written informed consent. Instead, all participants were given the opportunity to opt out of the study.

Overview of study cohorts and workflow of slide-level classification using multiple instance learning. (a) The diagram illustrates the diagnostic model training and evaluation process. The KPMP dataset (total 203 HE WSIs from 103 patients) and the JP-AID dataset (total 39 HE WSIs from 39 patients) across three diagnostic categories were used for model training and internal validation via fivefold cross validation. The development cohort was randomly split into five subsets while ensuring a balanced distribution of diagnostic categories across the folds. Multiple slides from the same patient were kept in the same subset. In each fold, four subsets were used for training, and the remaining subset was used for validation. The 5 trained models were subsequently evaluated on the UT dataset, an external validation cohort comprising 86 H&E-stained WSIs from 86 patients. (b) DKD cases from the KPMP dataset were used for developing the classification model for overt proteinuria in the exploratory analysis. Overt proteinuria was defined as proteinuria ≥ 1000 mg/gCre or albuminuria ≥ 300 mg/gCre. For proteinuria classification, six slides from three patients were excluded due to missing data. (c) The workflow for WSI analysis involves four steps: (1) Patch extraction is conducted by dividing WSIs into patches (256 × 256 pixels) at 20 × magnification. Background patches are removed during preprocessing. (2) Feature extraction is performed using pretrained encoders (ResNet50, UNI, UNI2-h, Prov-Gigapath, Phikon, Virchow, and Virchow2). (3) The extracted patch features are aggregated using four MIL methods (max-pooling, ABMIL, TransMIL, and CLAM). (4) The slide-level classifier predicts the diagnostic category. KPMP kidney precision medicine project, H&E hematoxylin and eosin, WSIs whole slide images, JP-AID Japan-Pathology Artificial Intelligence Diagnostics, HC healthy control, AIN acute interstitial nephritis, DKD diabetic kidney disease, UT the University of Tokyo Hospital, MIL multiple instance learning, ABMIL attention-based MIL, TransMIL Transformer based MIL, CLAM clustering-constrained attention multiple instance learning.

In the exploratory analysis, we evaluated our pipeline’s ability to predict clinical outcome (overt proteinuria defined as albuminuria ≥ 300 mg/gCre or proteinuria ≥ 1000 mg/gCre) using DKD slides from the KPMP dataset (Fig. 1b). Six slides from three cases were excluded because of missing values. In this exploratory analysis, external validation using the UT dataset was not performed due to significant class imbalance. Most DKD cases in the UT dataset had overt proteinuria.

Image preprocessing

To prepare images for analysis, each WSI was divided into non-overlapping tiles using Slideflow version 3.0216 with the Libvips backend at 20x (128 μm, 256 pixels). Background was removed using Otsu’s thresholding17, Gaussian blur filtering (sigma = 2, threshold = 0.02), and whitespace filtering (< 45% tissue content). Slides containing fewer than 100 patches were excluded to ensure consistent image quality and diagnostic reliability. Analyses were primarily performed without stain normalization, and in the main analysis we compared performance with and without stain normalization. Thumbnails of representative slides from each dataset are shown in Supplementary Figure S4. Variations in staining protocols and slide scanning methods introduce color heterogeneity, posing challenges in digital pathology analysis18. However, recently developed pathology foundation models trained on pathology slides with diverse color variations have demonstrated robust performance even without stain normalization7,9,19,20. Given these findings, we opted to primarily analyze the images without stain normalization and assess the robustness of foundation models.

Multiple instance learning

For slide-level classification analysis, we adopted the MIL approach. MIL is a weakly supervised learning framework that leverages slide-level labels rather than instance-level labels for learning. In the MIL framework, each WSI is treated as a bag with a slide-level label, whereas the individual patches within the slide that serve as an instance are not labeled. The overall workflow is illustrated in Fig. 1c. First, feature vectors were extracted from the extracted patches using pretrained pathology foundation models, including UNI7, UNI2-h7, Prov-Gigapath10, Phikon11, Virchow8, and Virchow29, which were pretrained on large-scale pathology slides using SSL. As a baseline, we used ResNet5013 pretrained on the ImageNet. A summary of the encoders is presented in Supplementary Table S1. ResNet50 was built from the Torchvision library with ImageNet-pretrained weghts21. The pretrained weights of pathology foundation models were downloaded from Huggingface22. In this study, we employed four aggregation methods: max pooling, attention-based MIL (ABMIL)23, transformer-based MIL (TransMIL)24, clustering-constraint attention multiple instance learning (CLAM)25. Max pooling assumes that the single most indicative patch in a bag determines the bag label. ABMIL uses an attention mechanism to perform weighted aggregation of patch features, with weights trained by a neural network. TransMIL utilizes a transformer mechanism to learn spatial relationships between patches via self-attention. CLAM incorporates instance-level clustering into its attention mechanism to enhance feature representation learning. Among the two variants of CLAM, we employed the multi-branch variant of CLAM (CLAM-MB), which computes the class-specific attention weights for each class. In the exploratory analysis, aggregation of patch features was performed using CLAM.

Model development and validation

Each model was trained to predict the diagnostic category (the main analysis) or overt proteinuria (the exploratory analysis). The hyperparameters used for model development are listed in Supplementary Table S2. To develop the model, we performed five-fold cross-validation, in which the dataset was randomly split into five subsets while ensuring a balanced distribution of diagnostic categories across the folds. Multiple slides from the same patient were kept in the same subset. In each fold, four subsets were used for training, and the remaining subset was used for validation. This process was repeated five times. The model was trained for 50 epochs per-fold, and the model with the lowest validation loss was selected. In the main analysis, the five trained models were subsequently validated using the external UT dataset to assess their generalizability. All computations were performed using Python 3.9.12 and PyTorch 1.11.0. Details of the computational environment were provided in the Supplementary Methods.

Visualization

Among the four aggregation methods, CLAM-based models were used for visualization. CLAM-MB leverages an attention mechanism to assign a weight to each patch. Based on these attention scores, an attention heatmap is generated to visualize diagnostically relevant regions within the slide. CLAM-MB employs a multi-branch design to learn class-specific attention weights. For each class label, the model generates a distinct attention heatmap that highlights important regions for its classification. We used Slideflow-Studio16 to visualize attention heatmaps with the Turbo colormap, where warmer colors (yellow to red) represent higher attention scores while cooler colors (green to blue) indicate lower attention scores. Additionally, we identified the top nine important patches for classification according to their attention scores.

Statistical analysis

Quantitative or ordinal data are expressed using medians and interquartile ranges or means and 95% confidence intervals (95% CI). Qualitative data are expressed as numbers and percentages. Performance metrics, including accuracy, macro-averaged F1 score, and area under the receiver operating characteristic curve (AUROC) were calculated to assess the predictive performance of the trained models. Accuracy is the proportion of correct classifications out of total classifications. F1 score is the harmonic mean of precision (the proportion of true positive predictions out of all positive predictions) and recall (the proportion of true positive predictions out of all actual positive samples). Macro-averaged F1 score is the arithmetic mean of all per-class F1 scores. The results from the five cross-validation folds were aggregated and reported as the mean and 95%CI. All metric calculations and statistical analyses were conducted using the scikit-learn package.

Results

Main analysis

Clinical characteristics of the development cohort

The clinical characteristics of the KPMP and JP-AID cohorts used in the main analysis are presented in Table 1. In the KPMP cohort, 19 of 21 HC cases were zero-hour transplant kidney biopsies. In the JP-AID cohort, 19 of 21 HC cases were transplant kidney biopsies: 6 were zero-hour, 8 were one-hour post-transplant, and 5 were obtained beyond the perioperative period. The JP-AID cohort does not provide detailed clinical information other than age, sex, and diagnosis. For eGFR calculations in the KPMP dataset, where eGFR data are provided in ranges (e.g., 40–50 ml/min/1.73 m2), the midpoint eGFR was used (e.g., 45.5 ml/min/1.73 m2). For AIN cases in the KPMP cohort, baseline eGFR values and AKI severity were presented. Regarding AKI severity of AIN cases in the KPMP cohort, Kidney Disease: Improving Global Outcomes (KDIGO) AKI stages26 were provided, with four patients classified as Stage 2 and eight patients as Stage 3. In the KPMP cohort, DKD cases were characterized by older age and a higher prevalence of hypertension.

Model development and internal validation

Based on the development cohort (the KPMP and JP-AID datasets), we developed and evaluated models to predict slide-level diagnosis (HC, AIN, or DKD) using fivefold cross validation. We performed a comparative evaluation of seven encoders (ResNet50, UNI, UNI2-h, Phikon, Prov-Gigapath, Virchow, Virchow2) with four MIL methods (max pooling, ABMIL, TransMIL, and CLAM). The results are summarized in Fig. 2. Among the MIL methods tested, CLAM consistently showed high performance across all seven encoders. In contrast, max pooling underperformed relative to the other MIL methods, although the performance did not decline when Virchow and UNI2-h were used as encoders. Regarding encoders, all pathology foundation models outperformed ResNet50.

Model performance for disease classification in the development cohort. Performance metrics (Accuracy, F1 score, and AUROC) of models using seven encoders (ResNet50, UNI, UNI2-h, Prov-Gigapath, Phikon, Virchow, and Virchow2) and four aggregation methods (max pooling, ABMIL, TransMIL, and CLAM) in the internal validation cohort are presented. Data are reported as means and 95% confidence intervals. AUROC area under the receiver operating characteristic curve, ABMIL attention-based multiple instance learning, TransMIL transformer-based multiple instance learning, CLAM clustering-constraint attention multiple instance learning.

External validation

To investigate the robustness and generalizability of the developed models, we assessed their performance on the external validation cohort (the UT dataset). The characteristics of the UT dataset are described in Table 1. All HC cases in the UT dataset were one-hour transplant kidney biopsies. Compared with the development cohort, the AIN cases in the UT dataset consisted of older patients, with a greater proportion of females. Additionally, patients with DKD in the UT dataset had lower eGFR levels and a higher prevalence of overt proteinuria. The model performance is summarized in Fig. 3. The external validation results followed a similar trend to those observed in the development cohort: CLAM outperformed the other MIL methods, and all pathology foundation models surpassed ResNet50. For AUROC, max pooling-based models showed substantial decline on external validation, whereas ABMIL, TransMIL, and CLAM largely maintained comparable performance. An exception was observed with ResNet50 and Phikon. For ResNet50, the decline was attenuated only with CLAM: the remaining aggregation methods experienced comparable performance drops. For Phikon, relatively larger performance decreases with ABMIL, TransMIL, and CLAM were observed compared with other pathology foundation models. For accuracy and F1 scores, max pooling also exhibited a pronounced performance drop; ABMIL, TransMIL, and CLAM declined to a lesser extent. When using UNI2-h or Virchow as the encoder, the magnitude of performance decline with max pooling was similar to that of the other aggregation methods. With ResNet50, the decline was attenuated only with CLAM: the remaining aggregation methods experienced similar performance drops. We next assessed the impact of stain variability by applying Macenko stain normalization to all patches27. After stain normalization, each model was trained on the development cohort and validated on the external validation cohort using CLAM. As shown in Table 2, stain normalization had a negligible impact on the performance of models based on UNI, UNI2-h, Virchow, and Virchow2, whereas other encoder-based models demonstrated improved performance after stain normalization. These findings indicate the limited generalizability of ImageNet-pretrained ResNet50 and highlight the advantages of pathology foundation models, particularly UNI, UNI2-h, Virchow and Virchow2, for external applicability.

Model performance for disease classification in the external validation cohort. Performance metrics (Accuracy, F1 score, and AUROC) of models using seven encoders (ResNet50, UNI, UNI2-h, Prov-Gigapath, Phikon, Virchow, and Virchow2) and four aggregation methods (max pooling, ABMIL, TransMIL, and CLAM) in the external validation cohort are presented. Data are reported as means and 95% confidence intervals. AUROC area under the receiver operating characteristic curve, ABMIL attention-based multiple instance learning, TransMIL transformer-based multiple instance learning, CLAM clustering-constraint attention multiple instance learning.

Visualization of the developed models

To understand how the developed models identified diagnostically important pathological features, we sampled slides from patients diagnosed with HC, AIN, and DKD in the UT cohort. Using CLAM, which outperformed other MIL methods, we evaluated seven encoders. For each encoder, the best model with the highest AUROC out of five folds in the external validation was used. We created attention heatmaps and identified the top nine patches with the highest attention scores per slide (Fig. 4). Analysis of these high-attention patches revealed that classification of HC generally relied on recognizing healthy tubules (Fig. 4a). Notably, the Phikon-based model did not assign high attention scores to tubular structures, which may explain its inferior performance in the external validation. Interestingly, UNI2-, Prov-Gigapath-, Virchow-, and Virchow2-based models highlighted patches exhibiting coagulative tubular cell necrosis. This is consistent with the fact that HC samples obtained from zero-hour or one-hour kidney transplant biopsies frequently show ischemia and/or ischemia–reperfusion injury. Since normal tubules may also appear in varying degrees in AIN and DKD, ischemia–reperfusion injury is more specific to transplanted kidneys. Regarding AIN classification (Fig. 4b), all models focused on regions of inflammatory cell infiltration, except for the ResNet50-based model, which incorrectly emphasized largely normal tubules. For diagnosing DKD (Fig. 4c), Virchow- and Vichow2-based models specifically focused on glomerular alterations, whereas other encoders highlighted tubulointerstitial and vascular changes. More detailed observations of heatmaps from ResNet50- and Virchow2-based models are presented in Fig. 5. A sample slide was obtained from a patient diagnosed with AIN in the UT cohort, which is the same slide as shown in Fig. 4b. The Virchow2-based model correctly classified this slide as AIN, whereas the ResNet50-based model misclassified it as HC. Attention scores for each diagnostic label (HC, AIN, and DKD) were used to visualize the regions of focus for the models in the disease classification task. In the whole slide view, the Virchow2-based model appropriately captured AIN-related histological features with high attention scores shown in red, covering most of the tissue in the attention heatmap for AIN (Fig. 5a). In Region A, where the tissue structure remained relatively intact, the attention heatmap for HC assigned high scores, whereas the attention maps for AIN and DKD assigned low scores. Region B exhibited prominent inflammatory cell infiltration within the interstitium, with high attention scores for AIN and low scores for HC. The attention map for DKD showed elevated scores only in the glomerular regions. These findings indicate that the Virchow2-based model successfully identified key histological patterns associated with each diagnostic category. In contrast, the attention heatmaps generated by the ResNet50-based model failed to capture diagnostically important structures, leading to misclassification of this slide as HC (Fig. 5b). While this model correctly assigned high attention scores to Region A for HC classification, it also assigned high scores to Region B, indicating a failure to recognize inflammatory cell infiltration.

Attention heatmaps and top nine high-attention patches. HC (a), AIN (b), and DKD (c) slides in the UT dataset were used to visualize how each model built on seven encoders (ResNet50, UNI, UNI2-h, Prov-Gigapath, Phikon, Virchow, and Virchow2) extracted features from image patches. Attention scores for the true label (HC, AIN, or DKD) were used to visualize attention heatmaps with Turbo colormap, where warmer colors (yellow to red) represent higher attention scores, and cooler colors (green to blue) indicate lower attention scores. The top nine patches with the highest attention scores were identified. Scale bar = 500 μm. HC healthy control, AIN acute interstitial nephritis, DKD diabetic kidney disease.

Attention heatmaps of models using Virchow2 and ResNet50 in the disease classification analysis. A sample slide was obtained from a patient diagnosed with AIN in the UT cohort. Attention scores for each diagnostic label (HC, AIN, and DKD) were used to visualize the regions of focus for the models in the disease classification task. Heatmaps were generated using the Turbo colormap. Attention heatmaps of the Virchow2-based model (a) and the ResNet50-based model (b) for each label (HC, AIN, and DKD) are shown. The original H&E-stained images were used as references. H&E hematoxylin–eosin, HC healthy control, AIN acute interstitial nephritis, DKD diabetic kidney disease. Scale bar = 100 μm.

Exploratory analysis

In the exploratory analysis, we developed predictive models with DKD cases from the KPMP dataset using fivefold cross-validation, focusing on overt proteinuria (albuminuria ≥ 300 mg/gCre or proteinuria ≥ 1000 mg/gCre). In the exploratory experiment, we employed CLAM as the MIL method. The results of the exploratory analysis are summarized in Fig. 6. All foundation models showed higher performance than ResNet50, and in particular, UNI2-h demonstrated the highest performance with an AUROC of 0.872 (95%CI 0.794–0.949). We then visualized attention heatmaps to determine which histological structures were highlighted by each model. Attention heatmaps from the ResNet50-based model and the best-performing UNI2-h-based model are shown in Fig. 7. The slide was derived from a patient with overt proteinuria, and both models correctly classified this as “overt proteinuria”. Whereas the ResNet50-based model assigned high attention scores across most patches, UNI2-h focused on patches containing glomeruli with mesangial matrix expansion and nodular lesions, as well as arteriolar hyalinosis.

Model performance for classification of overt proteinuria. Performance metrics (Accuracy, F1 score, and AUROC) of models using seven encoders (ResNet50, UNI, UNI2-h, Prov-Gigapath, Phikon, Virchow, and Virchow2) with CLAM in the KPMP-DKD cohort for the overt proteinuria classification task are presented. Data are reported as means and 95% confidence intervals. AUROC area under the receiver operating characteristic curve. Data are shown in mean (95%CI).

Attention heatmaps of models using ResNet50 and UNI2-h for overt proteinuria classification. Attention scores for classification of overt proteinuria were used to visualize the regions of focus in the model. Heatmaps were generated using the Turbo colormap. The sample slide was obtained from a patient diagnosed with DKD who had overt proteinuria. The original H&E-stained images were used as references. Glomeruli with mesangial matrix expansion and nodular lesions (arrows were seen (arrows) and arteriolar hyalinosis (arrowhead) are noted in the enlarged view. H&E, hematoxylin–eosin. Scale bar = 100 μm.

Discussion

In this study, we demonstrated that pathology foundation models pretrained on large-scale pathological image datasets are highly effective feature extractors for kidney pathology analysis compared with ResNet50 pretrained on ImageNet. Furthermore, we successfully integrated foundation models with the MIL framework to achieve high diagnostic performance without requiring patch-level annotations. MIL aggregates patch-level features without relying on patch-level labels, making it a practical approach for large-scale histopathological analysis, where detailed manual annotation is infeasible. Among the MIL methods evaluated, CLAM showed the best performance. CLAM aggregates patch-level features through attention pooling and calculates both slide-level classification and instance-level losses to better separate positive and negative instances, enabling the model to efficiently learn discriminative features from important patches.

As for disease diagnosis prediction, foundation models’ superiority was consistent when applied to the external validation cohort from a different institution. A major challenge in computational pathology is inter-institutional staining variability, which has been shown to hinder robust feature extraction28. Remarkably, our findings indicate that foundation models effectively extracted meaningful features, even without stain normalization, demonstrating robustness against staining variations. This advantage was particularly evident when compared with ResNet50, which exhibited a marked performance drop in external validation. Among the pathology foundation models, UNI, UNI2-h, Virchow, and Virchow2 showed robust performance regardless of whether stain normalization was applied. This finding is consistent with a recent study showing greater robustness of UNI2-h and Virchow229. Attention heatmap analysis further revealed that ResNet50 was less effective in extracting pathological features compared with the pathology foundation models. Of note, Virchow and Virchow2 specifically focused on glomeruli in DKD classification, suggesting that these encoders successfully recognized glomerular structures. This finding may be attributed to their substantially larger training datasets compared with other pathology foundation models. Understanding the factors that influence the performance of foundation models in kidney pathology analysis is crucial for optimizing pretraining strategies and developing more effective models.

Diagnosis of kidney pathology typically relies on a combination of multiple stains. In this study, we focused on AIN and DKD, both of which exhibit distinct morphological features on H&E-stained slides. This explains why the model for the disease classification task demonstrated a good performance despite being trained solely on H&E-stained slides. For other kidney diseases such as lupus nephritis and membranous nephropathy, integrating multiple stains may be crucial for improving diagnostic accuracy. Indeed, a recent study showed that combining multiple stains enhanced the prediction of clinical remission in lupus nephritis30. The foundation models used in this study were trained primarily on H&E slides. Therefore, to improve the diagnostic performance in kidney pathology, developing domain-specific pathology foundation models trained on large-scale, multi-stained slides is an important next step.

In the exploratory analysis, we attempted to predict overt proteinuria in patients with DKD using the same pipeline as in the main analysis. Pathology foundation models also demonstrated higher performance than ResNet50. In the attention heatmap analysis, the ResNet50-based model assigned high attention scores across most patches, which may reflect overfitting to the training data. In contrast, the UNI2-h based model focused on glomeruli with mesangial matrix expansion and nodular lesions, as well as arteriolar hyalinosis. As nodular lesions and severe arteriolar hyalinosis have been reported to relate to higher proteinuria levels31, the UNI2-h-based model’s focus on these regions is pathologically plausible. Although the analysis was limited to proteinuria prediction in this study, the model’s ability to capture histologically meaningful features suggests that this approach could be extended to forecasting renal prognosis if sufficiently large WSI datasets linked to longitudinal clinical data were available.

This study has several limitations that warrant consideration. First, we used zero-hour and one-hour transplant kidney biopsies as healthy controls, which exhibited mild ischemic and/or ischemia–reperfusion injury, indicating that they may not fully represent normal kidney histology. Some models assigned high attention scores to coagulative tubular necrosis when classifying HC, likely reflecting the inclusion of transplant biopsies in the HC category. Note that zero-hour and one-hour transplant kidney biopsies are wedge biopsies; this sampling difference may have influenced model learning. Nevertheless, as shown in Fig. 5, the model correctly identified normal regions in the needle-biopsy AIN case. This may be attributed to the inclusion of needle-biopsy cases in the JP-AID HC cases. Second, while we excluded cases with coexisting kidney diseases based on pathology reports, some DKD and AIN slides contained atherosclerotic lesions, likely attributable to aging or hypertension, raising the possibility that the model learns hypertensive changes rather than diabetic changes. In fact, visualization analysis revealed that the models identified patches with interstitial fibrosis as important for DKD classification (Fig. 2c). Of note, the models based on Virchow and Virchow2 recognized glomerular alterations as important features for DKD diagnosis, indicating that the pathological features prioritized by the model may differ depending on the encoder. Third, our model was limited to classifying HC, AIN, and DKD. Since glomerular diseases such as IgA nephropathy and membranous nephropathy account for the majority of kidney biopsy cases, AIN and DKD cover only a minor subset. Therefore, the present models are not immediately applicable in clinical practice. Although our results suggest that pathology foundation models can recognize certain pathological findings (e.g., mesangial matrix expansion, interstitial inflammation), their ability to distinguish fine-grained lesions, such as discriminating mesangial hypercellularity with matrix expansion in IgA nephropathy from mesangial matrix expansion in DKD, remains unknown. Developing such fine-grained classifiers typically requires lesion-level annotations, which impose a substantial labeling burden and restrict recognition to predefined pathological patterns. In contrast, the weakly supervised framework used in this study could identify histologic signals including previously unrecognized features. Of note, such analyses require sufficiently large datasets, and any newly identified features would need validation with ancillary testing (e.g., immunohistochemistry) to establish their biological and clinical significance. Fourth, the relatively small number of slides used in this study may limit the generalizability of our findings. However, even with this limited training set, the pathology foundation models effectively extracted meaningful features and demonstrated good diagnostic performance in the external cohort.

In conclusion, we utilized pathology foundation models as feature extractors and demonstrated superior performance in the slide-level classification of kidney specimens compared with ImageNet-pretrained ResNet50. Their robustness against staining variability and inter-institutional differences highlights their potential for real-world clinical implementation. Since detailed annotations are not required, this approach has the potential to be extended to diagnosis of other diseases and prediction of clinical outcomes.

Data availability

The results here are in part based upon data generated by the Kidney Precision Medicine Project, accessed on January 20, 2025 (https://www.kpmp.org). KPMP is funded by the National Institute of Diabetes and Digestive and Kidney Diseases (Grant numbers: U01DK133081, U01DK133091, U01DK133092, U01DK133093, U01DK133095, U01DK133097, U01DK114866, U01DK114908, U01DK133090, U01DK133113, U01DK133766, U01DK133768, U01DK114907, U01DK114920, U01DK114923, U01DK114933, U24DK114886, UH3DK114926, UH3DK114861, UH3DK114915, UH3DK114937). The KPMP dataset used in this study is publicly available at https://atlas.kpmp.org/. The JP-AID dataset is available at https://jpaid.jp/ upon request, following the prescribed procedures. The UT dataset is not publicly available due to data protection regulations.

References

Jayapandian, C. P. et al. Development and evaluation of deep learning–based segmentation of histologic structures in the kidney cortex with multiple histologic stains. Kidney Int. 99, 86–101 (2021).

Holscher, D. L. et al. Next-Generation Morphometry for pathomics-data mining in histopathology. Nat. Commun. 14, 470 (2023).

Pilva, P., Bulow, R. & Boor, P. Deep learning applications for kidney histology analysis. Curr. Opin. Nephrol. Hypertens 33, 291–297 (2024).

Chen, T., Kornblith, S. & Norouzi, M. et al. A simple framework for contrastive learning of visual representations. arXiv:2002.05709 (2020).

Abe, M. et al. Self-supervised learning for feature extraction from glomerular images and disease classification with minimal annotations. J. Am. Soc. Nephrol. 36, 471–486 (2025).

Dosovitskiy, A. et al. An image is worth 16x16 words: transformers for image recognition at scale. arXiv:2010.11929 (2020).

Chen, R. J. et al. Towards a general-purpose foundation model for computational pathology. Nat. Med. 30, 850–862 (2024).

Vorontsov, E. et al. Virchow: A million-slide digital pathology foundation model. arXiv:2309.07778 (2023).

Zimmermann, E. et al. Virchow2: Scaling Self-Supervised Mixed Magnification Models in Pathology. arXiv:2408.00738 (2024).

Xu, H. et al. A whole-slide foundation model for digital pathology from real-world data. Nature 630, 181–188 (2024).

Filiot, A. et al. Scaling self-supervised learning for histopathology with masked image modeling. medRxiv 2023.07.21.23292757.

Li, J. et al. Transforming medical imaging with Transformers? A comparative review of key properties, current progresses, and future perspectives. Med. Image Anal. 85, 102762 (2023).

He, K. et al. Deep residual learning for image recognition. IEEE Conf. Comput. Vis. Pattern Recogn. (CVPR) 2015, 770–778 (2016).

de Boer, I. H. et al. Rationale and design of the kidney precision medicine project. Kidney Int. 99, 498–510 (2021).

Sakai Yasuhiro, K. M., Masashi, F., & Akihiko, Y. Development of artificial intelligence to help pathological diagnosis-Japan pathology AI diagnostics (JP-AID) project. Impact 6, 40–42 (2019).

Dolezal, J. M. et al. Slideflow: Deep learning for digital histopathology with real-time whole-slide visualization. BMC Bioinformatics 25, 134 (2024).

Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9, 62–66 (1979).

Schömig-Markiefka, B. et al. Quality control stress test for deep learning-based diagnostic model in digital pathology. Mod. Pathol. 34, 2098–2108 (2021).

Vorontsov, E. et al. A foundation model for clinical-grade computational pathology and rare cancers detection. Nat. Med. 30, 2924–2935 (2024).

Wolflein, G. et al. Benchmarking pathology feature extractors for whole slide image classification. arXiv:2311.11772v5 (2024).

TorchVision: PyTorch’s Computer Vision library. In, GitHub repository, GitHub (2016).

Wolf, T. et al. HuggingFace’s Transformers: State-of-the-art Natural Language Processing. arXiv:1910.03771 (2019).

Maximilian Ilse, J. M.T. & Welling, M. Attention-based Deep Multiple Instance Learning. arXiv:1802.04712v4 (2018).

Zhuchen Shao, H. B. et al. TransMIL: Transformer based correlated multiple instance learning for whole slide image classification. arXiv:2106.00908v2 (2021).

Lu, M. Y. et al. Data-efficient and weakly supervised computational pathology on whole-slide images. Nat. Biomed. Eng. 5, 555–570 (2021).

KDIGO Clinical Practice Guideline for Acute Kidney Injury. Kidney Int. Suppl. 2 (2012).

Macenko, M. et al. A method for normalizing histology slides for quantitative analysis. IEEE Int. Symp. Biomed. Imaging Nano Macro 2009, 1107–1110 (2009).

Boschman, J. et al. The utility of color normalization for AI-based diagnosis of hematoxylin and eosin-stained pathology images. J. Pathol. 256, 15–24 (2022).

Edwin D., de Jong, E. M. & Teuwen, J. Current Pathology Foundation Models are unrobust to Medical Center Differences. arXiv:2501.18055 (2025).

Cheng, C. et al. Multi-stain deep learning prediction model of treatment response in lupus nephritis based on renal histopathology. Kidney Int. 107, 714–727 (2025).

Rodriguez-Rodriguez, R. et al. The role of vascular lesions in diabetes across a spectrum of clinical kidney disease. Kidney Int. Rep. 6, 2392–2403 (2021).

Acknowledgements

We sincerely thank Prof. Matthias Kretzler from Division of Computational Medicine and Bioinformatics, University of Michigan, Ann Arbor, Michigan for his helpful advice. We also thank Dr. Isao Matsui from Osaka University, Dr. Ryo Inuzuka, Dr. Masaya Sato, Dr. Hiroaki Ikushima, and Dr. Syohei Hanaoka from the University of Tokyo Hospital for their guidance and insightful discussions that have contributed to the completion of this study. We appreciate Kenichi Hashimoto from Division of Urology of the University of Tokyo in collecting the materials. This research was conducted using the JP-AID database of the Japanese Society of Pathology.

Funding

This study was supported by Cross-ministerial Strategic Innovation Promotion Program (SIP) on “Integrated Health Care System” Grant Number JPJ012425.

Author information

Authors and Affiliations

Contributions

YK and IM conceived and designed the study. YK, IM, and DY collected clinical data and kidney biopsy specimens. YK, IM, and HA digitized kidney biopsy sections. YK performed data analysis, deep learning, and visualization. Y.K. wrote the manuscript. IM, SK, and TT edited the manuscript. SK, HK, TU, NT, TT, and MN supervised the study.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kurata, Y., Mimura, I., Kodera, S. et al. Multiple instance learning using pathology foundation models effectively predicts kidney disease diagnosis and clinical classification. Sci Rep 15, 35298 (2025). https://doi.org/10.1038/s41598-025-19297-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-19297-9