Abstract

The increasing frequency and sophistication of cyber-attacks have exposed significant shortcomings in conventional detection systems, emphasizing the urgent need for more advanced Cyber Threat Intelligence (CTI) capabilities. While Open-Source Intelligence (OSINT) has become a cornerstone for early threat identification, the manual analysis of such unstructured data remains labor-intensive and susceptible to error, limiting its effectiveness. To address these challenges, this study introduces an AI-driven system designed for real-time detection and analysis of cyber threat information on Twitter. The approach integrates a hybrid feature extraction technique that combines Bidirectional Encoder Representations from Transformers (BERT) with Iterated Dilated Convolutional Neural Networks (ID-CNNs). To enhance feature relevance and remove noise and redundant features, the Binary Tree Growth (BTG) algorithm is employed as feature selection. Classification is performed using a bi-directional temporal convolutional network (BiTCN), which is well-suited for modeling sequential data. Experimental evaluations show that the proposed model achieves strong performance, with accuracy rates of 99.01% on dataset 1 and 97.63% on dataset 2 using 10-fold cross-validation. The proposed ID-BERT-BiTCN outperforms other machine learning (ML) and existing models. These results highlight the model’s potential to enhance the effectiveness of CTI by enabling timely and accurate threat detection from social media sources.

Similar content being viewed by others

Introduction

Digital technologies have profoundly reshaped how people connect, communicate, and share information across the globe. In this landscape, social media platforms stand out as vital arenas for large-scale interaction, collaboration, and the dissemination of information1. However, this widespread adoption has simultaneously expanded the attack surface for cybercriminals, who exploit these platforms for a range of malicious activities2. Threat actors frequently create fraudulent accounts, distribute malware-laden links, and impersonate reputable entities to manipulate users and gain unauthorized access to sensitive information. These tactics often involve leveraging publicly available user data, including behavioral patterns, affiliations, and preferences, for social engineering and reconnaissance purposes. The increasing frequency and sophistication of such cyber threats have made cybersecurity a critical priority for both public and private sector organizations3. Recent estimates place the economic impact of cybercrime at approximately 0.8% of global GDP, underscoring the scale and urgency of the issue4. Within this context, Security Operations Centers (SOCs) must operate with enhanced situational awareness, relying on timely and actionable intelligence to effectively safeguard digital infrastructure5.

This has led to the growing importance of Cyber Threat Intelligence (CTI), a domain dedicated to the systematic collection, processing, and analysis of threat data to anticipate and counter cyber-attacks6,7. CTI transforms raw technical artifacts, such as intrusion detection logs, malware signatures, and phishing indicators, into structured intelligence through established analytical methodologies employed by cybersecurity professionals8. One essential branch of CTI is Open Source Intelligence (OSINT)9, which harnesses publicly accessible data from platforms such as social networks, blogs, forums, and online publications to detect and interpret threat signals. Twitter, in particular, stands out as a valuable source of Open-Source Intelligence (OSINT). It hosts a continuous stream of updates from cybersecurity experts, organizations, and everyday users who share information about new vulnerabilities, exploits, and ongoing attacks. This real-time data can be systematically collected and analyzed to identify and track cyber events. Such analysis also aids in understanding threat actors’ motivations, behaviors, and tactics, techniques, and procedures (TTPs)10. As a result, developing reliable and automated methods to extract relevant threat intelligence from these platforms has become a key area of research in cybersecurity11.

Recent research in cybersecurity increasingly focuses on analyzing Twitter data to uncover security vulnerabilities and exploits. Several key studies have highlighted the importance of this approach. For example, Ruohonen et al.12 underscore the need to identify the root causes of vulnerabilities, while Abdelhaq et al.13 emphasize the development of effective mitigation strategies. Sabottke et al.8 utilized Twitter’s Streaming API in combination with a Support Vector Machine (SVM) model to track CVE (Common Vulnerabilities and Exposures) mentions. Their system achieved early warnings for real-world exploits with a median lead time of two days. Similarly, Kergl et al.14 proposed a crowdsourced detection method based on security-specific keywords to identify newly emerging vulnerabilities and zero-day exploits. Several machine learning-based research15,16,17 have leveraged the use of Twitter for cyber-security threat detection by developing models that identify security-related content from social media streams. Notably, Sceller et al.18 introduced a centroid-based classifier capable of detecting security-relevant tweets even without explicit CVE mentions, achieving an F1 score of 64%. Queiroz et al.19 proposed an SVM classifier to distinguish between security alerts and general discussions, reporting an accuracy of 94%, although misclassification remains a concern. Moreover, deep learning models20,21,22,23 are applied to detect traffic incidents on Twitter, outperforming previous approaches. Real-time detection of IT security information is explored in4 using CNN and BiLSTM models, showing strong classification performance. A multi-task learning approach based on IDCNN and BiLSTM is proposed in24, while25 employs locality-sensitive hashing and incremental clustering for dynamic event detection.

Despite notable progress in leveraging social media data for cyber threat intelligence, several gaps remain in current research. First, many existing studies rely on conventional machine learning models such as SVMs or keyword-based classifiers9,10,16, which lack the ability to capture deep contextual semantics and often lead to high false positive rates. Second, while deep learning approaches such as CNNs and BiLSTMs4,17,18,19,20,21 have demonstrated improved performance, they often focus on single-model architectures that either capture linguistic context or sequential patterns, but rarely both in a unified manner. Third, most prior research overlooks the problem of feature redundancy and noise in large-scale Twitter streams, which reduces efficiency and weakens real-time applicability. Finally, there is limited evidence of frameworks that combine contextual understanding, hierarchical pattern extraction, and temporal dependency modeling within a single pipeline for real-time cyber threat detection.

To address these challenges, we propose a novel hybrid framework (ID-BERT-BiTCN) that integrates contextual representation learning (BERT), hierarchical feature extraction (ID-CNNs), optimized feature selection (BTG algorithm), and sequential modeling (BiTCN). This combination is distinct from prior work and is specifically designed to improve contextual understanding, reduce false positives, and enhance real-time processing capability. By filling this gap, our study advances the state of Cyber Threat Intelligence (CTI), providing a scalable and accurate solution for detecting emerging threats on Twitter. In this study, we first introduce a threat intelligence framework that leverages deep neural network architectures to process streaming data and identify security-relevant information from tweets. The system collects tweets from a curated set of accounts via Twitter’s streaming API and applies keyword-based filtering to eliminate unrelated content. To construct a robust feature representation, we integrate Bidirectional Encoder Representations from Transformers (BERT) with Iterated Dilated Convolutional Neural Networks (ID-CNNs). While BERT captures deep contextual language features, ID-CNNs efficiently extract hierarchical patterns using dilated convolutions, thereby avoiding significant computational overhead. To optimize the model and reduce processing complexity, we propose a novel Binary Tree Growth (BTG) feature selection algorithm based on the k-nearest neighbors (kNN) approach. The final classification leverages a Bidirectional Temporal Convolutional Network (BiTCN), which excels in modeling sequential dependencies. Experimental results demonstrate that our model achieves a high accuracy of 99.01% on dataset 1 and 97.63% on dataset 2, surpassing current benchmarks. These findings underscore the framework’s potential to significantly advance Cyber Threat Intelligence (CTI) by enabling rapid, accurate threat detection from real-time social media data. The significant contributions of this research can be summarized as follows:

-

1.

Novel hybrid feature extraction framework that integrates Bidirectional Encoder Representations from Transformers (BERT) with Iterated Dilated Convolutional Neural Networks (ID-CNNs) to capture both deep contextual semantics and hierarchical sequential patterns from unstructured Twitter data.

-

2.

Introduction of the Binary Tree Growth (BTG) feature selection algorithm, which effectively reduces noise and redundant features, thereby enhancing efficiency and robustness in cyber threat detection.

-

3.

Application of a Bi-directional Temporal Convolutional Network (BiTCN) for classification, a model well-suited to sequential dependencies in streaming data, improves detection accuracy and stability.

-

4.

The integrated ID-BERT-BiTCN framework achieves state-of-the-art performance (accuracy of 99.01% on dataset 1 and 97.63% on dataset 2), significantly outperforming existing machine learning and deep learning models.

Overall, in this study, we integrate a framework that combines contextual language understanding (BERT), hierarchical pattern extraction (ID-CNNs), optimized feature selection (BTG), and sequential modeling (BiTCN), which will significantly enhance the accuracy, robustness, and real-time capability of cyber threat detection from Twitter, outperforming existing machine learning and deep learning approaches.

Materials and methods

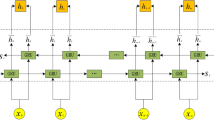

The suggested model’s design is presented in this part, as shown in Fig. 1. A detailed explanation of every element that makes up the model is given to provide a thorough comprehension.

Benchmark dataset

In statistical machine learning, selecting appropriate benchmark datasets is a fundamental step in the development and evaluation of deep learning-based predictive models. To ensure fair training and comparative assessment of our proposed model against existing state-of-the-art approaches, we utilized benchmark datasets derived from two primary sources: the publicly available dataset on Kaggle by Syed Abbas Raza (https://www.kaggle.com/datasets/syedabbasraza/suspicious-tweets/data) and the dataset introduced by Dionisio et al.4. Table 1 presents the total number of tweets using both datasets, including cyber threats and non-cyber threats.

The dataset 1 comprises 48,783 tweets, with 40,838 labeled as cyber threats and 7,945 labeled as non-cyber threats. In contrast, the dataset 2 comprises 37,538 tweets, consisting of 24,301 cyber threat tweets and 13,237 non-cyber threat tweets. While still imbalanced, the independent set is comparatively more balanced, offering a better reflection of real-world data for evaluating the model’s generalization capabilities.

Data preprocessing

To ensure consistency and improve the quality of text data sourced from Kaggle, we applied a series of fundamental preprocessing steps. These steps aimed to standardize the format and eliminate irrelevant or potentially misleading content. Emojis were removed using a custom regular expression to minimize ambiguity, while URLs and unnecessary punctuation were stripped to avoid noise in the analysis. All text was converted to lowercase to maintain uniformity across the dataset. Additionally, the NLTK library26 was employed for stop-word removal, and the Snowball stemmer was applied to reduce words to their root forms. These preprocessing procedures played a crucial role in refining the raw tweet data into a more structured and analyzable format. Following these steps, the cleaned dataset comprised 40,838 tweets labeled as cyber threats and 7,945 as non-cyber threats.

Similarly, the preprocessing dataset by Dionisio et al.4 was constructed through the manual curation of Twitter accounts and data collection via the Twitter API, spanning two distinct periods: November 21, 2016, to March 27, 2017, and June 1, 2018, to September 1, 2018. To ensure the relevance of the content, a set of predefined cybersecurity-related keywords was used to filter the streamed tweets. These relevant keywords associated with cybersecurity threats include Malware, Worms, Ransomware, Scammers, Viruses, and Hackers. Furthermore, the dataset was manually annotated to support two main tasks: Named Entity Recognition (NER) for identifying cybersecurity-specific entities and binary classification to determine whether a tweet conveys threat-related information. The final step in this stage involves a straightforward preprocessing routine designed to normalize tweet content. This includes converting all text to lowercase and eliminating hyperlinks and non-essential special characters. However, certain characters such as periods (.), hyphens (-), underscores (_), and colons (:) are retained, as they frequently appear in identifiers, version numbers, and technical component names. Representative examples of labeled tweets are presented in Table 2.

Feature encoding schemes

In the cyber threat intelligence (CTI) field, the increasing reliance on unstructured textual data ranging from threat reports to system logs demands sophisticated techniques for meaningful interpretation and analysis. Traditional machine learning approaches, primarily optimized for structured numerical inputs, face limitations when applied directly to text-based inputs encoded as images or raw strings, especially when spatial and temporal dependencies must be preserved. To address this challenge, feature extraction becomes a critical preprocessing step that translates raw textual representations into informative vectors suitable for model ingestion. Recent advancements highlight the effectiveness of hybrid architectures that blend deep learning with natural language processing (NLP) techniques. In particular, transformer-based models, such as BERT (Bidirectional Encoder Representations from Transformers), have demonstrated superior performance in extracting context-rich features from unstructured cybersecurity texts.

Bidirectional encoder representations from Transformers (BERT)

This work uses BERT to capture rich and discriminative features27. The core architecture of this framework is illustrated in Fig. 2, which outlines the sequence of operations from text encoding to final prediction. Several studies have demonstrated that fine-tuning BERT for specific cybersecurity tasks, such as threat classification and incident prediction, improves the accuracy and efficiency of detection systems. By leveraging BERT’s contextual embedding, CTI platforms can automatically parse and interpret threat data at scale, reducing the manual overhead traditionally required by analysts. Moreover, the automated extraction of actionable insights from diverse data sources, ranging from technical advisories to real-time social media feeds, enhances the proactive capabilities of modern security infrastructures. As the volume and complexity of textual threat data continue to grow, integrating NLP models like BERT offers a scalable solution for automating the interpretation and classification of cyber threats, reinforcing the analytical backbone of contemporary CTI frameworks. The ability of BERT to model contextual relationships through its self-attention mechanism makes it particularly well-suited for extracting semantic patterns from cyber threat narratives. This enables a detailed analysis of interactions among various threat components, including malware types, attack strategies, and vulnerable infrastructures. Such contextual comprehension is vital for developing accurate and responsive threat detection models.

The BERT28 framework was utilized to encode textual threat data, treating each sentence as a sequence of tokenized words. A special classification token (“[CLS]”) was appended to the beginning of each sequence to aggregate the semantic representation used for downstream predictions. To ensure uniformity across inputs, each tokenized sequence was padded to a fixed length of 200 using the “[PAD]” token and segmented using the “[SEP]” delimiter. The contextual embeddings generated by BERT were further processed using global average pooling to produce a fixed-size feature vector of 1024 dimensions. These dense representations capture the holistic meaning of the input text, enabling effective integration with deep learning models. The resulting vectors serve as input to the initial layer of the proposed classification architecture, where threat prediction tasks are subsequently carried out.

Iterated dilated convolutional neural networks (ID-CNNs)

In natural language processing (NLP), convolutional neural networks (CNNs) are adapted to operate over sequences of word vectors rather than on spatially structured pixel grids, as in computer vision. Each word in a sentence is typically represented by a high-dimensional embedding vector, often comprising hundreds of features, while the sentence itself forms a one-dimensional sequence. Consequently, CNNs applied in NLP are designed as one-dimensional filters that slide over this sequence to extract local contextual patterns. Formally, the output ct of the convolutional operator applied to each word Xt is defined as:

Where ⊕ is vector concatenation, 2r + 1 is the filter width w. Therefore, the effective input width of every token is limited by w. To enlarge the effective input width, dilated convolution20 transforms one token for every δ input, where δ is the dilation width. The dilated convolution operator is defined as:

Dilated convolutions with δ > 1 capture a broader context than standard convolutions without adding parameters. To extend this further, ID-CNN stacks multiple dilated layers, passing outputs sequentially to capture global context. While stacking more dilated convolution layers enhances global context capture, it also increases the risk of over-fitting, especially on smaller datasets due to added parameters. Strubell et al.7 proposed reusing the same filter across layers with varying dilation rates to address this. This recursive approach maintains a fixed parameter count while allowing the network to deepen, forming what is known as Iterated Dilated Convolutional Neural Networks (ID-CNNs). This makes them especially effective for detecting complex patterns such as multi-word threat indicators, named entities, and cyber-specific expressions in security-related textual data, thereby enhancing the performance of NLP-driven cybersecurity systems.

Our study employed an Iterated Dilated Convolutional Neural Network (ID-CNN) module comprising three dilated convolutional layers, each designed to extract hierarchical features from the input text. The dilation rates are set to 1, 2, and 3 across the first, second, and third layers. This progressive configuration enables each position in the sequence to aggregate rich contextual information from past and future tokens, exponentially expanding the receptive field without significantly increasing the number of parameters. Each layer employs a consistent number of 128 convolutional filters, yielding a 128-dimensional feature vector per token. The dilation block is iterated four times to balance model depth and prevent overfitting, which is particularly important for smaller cybersecurity datasets. This iterative approach enables deeper contextual encoding while maintaining parameter efficiency, thereby enhancing the model’s ability to extract meaningful features from cybersecurity-related text.

Binary tree growth (BTG) algorithm for feature selection

To address the complexity of cyber threat detection, this study employs a hybrid approach that combines the features of BERT and IDCNN, capturing both semantic and spatial-temporal characteristics of input data. These enriched features are then classified using Bi-Directional Temporal Convolution, which effectively models bidirectional dependencies for accurate cyber threat detection. However, these diverse features may also include irrelevant, noisy, or redundant information, which can degrade model performance29,30,31. To address this, an essential preprocessing step known as feature selection is applied to retain only the most informative features.

In this study, we employed the Binary Tree Growth (BTG) algorithm as a practical feature selection method to improve prediction accuracy and computational efficiency. The BTG algorithm is a discrete (binary) adaptation of the Tree Growth Algorithm introduced by Cheraghalipour et al.32. The algorithm begins by randomly initializing a population of candidate solutions, each represented as a binary vector (or tree). The quality of each tree is evaluated using a fitness function that balances two objectives: minimizing prediction error and reducing the number of selected features. The fitness function is defined as:

Where, \(\:{L}_{error}\) is the learning error rate, \(\:\left|{\updelta\:}\right|\) is the number of selected features, \(\:\left|{\uprho\:}\right|\) is the total number of features, and β is a weighting factor (i.e., 0 < β < 1) that controls the trade-off between accuracy and dimensionality reduction. Once the fitness (\(\:F\)) values are computed, the population is ranked in ascending order of fitness. The top-ranked trees are grouped into the first category (T1), and new trees are generated for this group using:

Here, Ni represents the tree (i.e., candidate solution) at the ith position in the population, \(\:\vartheta\:\) denotes the tree’s power decay rate, r is a random value in the range [0, 1], and t indicates the current iteration number. If the newly generated tree exhibits a better fitness score than the existing one, it replaces the current tree; otherwise, the original tree is retained for the next generation. The second group (T2) consists of moderately performing trees. For each tree in T2, the two closest trees (based on Euclidean distance) from the combined T1 and T2 groups are identified:

In this context, \(\:{N}_{{T}_{2}}\) denotes the current tree under consideration in the second group, while Ni represents the tree at the i-th position within the population. It is important to note that the Euclidean distance is considered infinite when \(\:{N}_{{T}_{2}}=i\), i.e., when the index T2 = i, to avoid self-selection. Subsequently, the two nearest trees, denoted as x1 and x2, corresponding to the minimum calculated distances di, are selected. A linear combination of these selected trees is then computed as:

Here, the parameter λ controls the relative influence of the closest trees in the linear combination. Based on this, the tree’s position within the second group is updated using the following Eq.

Where α represents the angle distribution parameter, constrained within the range [0, 1], the T3 least fit trees from the third group are removed and replaced with newly generated trees. The value of T3 is computed using the following Eq.

A masking operator enhances further exploration to produce new T4 candidates near the best solutions. These are merged with the existing population, and the top T individuals are retained for the next generation based on their fitness scores. This iterative process continues until a predefined stopping condition is met. For binary feature selection, a transfer function converts the real-valued positions of trees into probabilities, determining whether a feature is selected or not. In our implementation, a sigmoid function was adopted. In this study, we integrated the k-nearest neighbors (KNN) algorithm to evaluate the fitness of feature subsets due to its simplicity and computational efficiency. The value of k was empirically set to 3. As a result of the BTG-based selection, a refined feature set comprising 440 dimensions was obtained, significantly reducing input complexity while preserving essential predictive information.

SMOTE method

In this work, we address the class imbalance problem by employing the Synthetic Minority Over-sampling Technique (SMOTE ), initially introduced by Chawla et al.33. The core concept of SMOTE is to generate synthetic minority class samples by interpolating between existing ones, which are then incorporated into the dataset. To further refine the oversampled data, we utilize the Edited Nearest Neighbors (ENN) method34, which removes redundant or noisy instances. Specifically, ENN eliminates a majority-class sample if more than half of its K nearest neighbors belong to the minority class; similarly, if all K neighbors differ from the sample’s class, it is also discarded.

For feature representation, we use Bidirectional Encoder Representations from Transformers (BERT) with Iterated Dilated Convolutional Neural Networks (ID-CNNs) to comprehensively capture sequence characteristics. By applying SMOTE in combination with ENN to the extracted features, we obtain a balanced and cleaner dataset, leading to more reliable and effective classification performance.

Architecture of the bidirectional Temporal convolutional network

In recent years, Temporal Convolutional Networks (TCNs) have emerged as a powerful approach for modeling sequence data35. Traditional convolutional networks often fall short of capturing long-range dependencies within extended sequences due to constraints in kernel size36. TCNs overcome this limitation by incorporating advanced convolutional techniques, such as causal and dilated convolutions, which enable them to manage long-term dependencies more effectively while avoiding gradient vanishing problems during training37. Beyond their predictive strength, TCNs offer practical benefits like low memory usage, efficient parallel processing, and faster training times. However, standard TCNs are inherently unidirectional; they process information only from the past toward the future. This can lead to an incomplete understanding of context, especially for data where both past and future information are relevant for accurate interpretation38,39.

To address this limitation, this study introduces a Bidirectional Temporal Convolutional Network (BiTCN) designed to capture dependencies from both directions. BiTCN provides a richer contextual understanding by processing information in both forward and backward directions, enhancing the model’s ability to learn relationships between amino acid residues across varying lengths. Its adaptable receptive field allows it to model sequences efficiently without significantly increasing computational costs. BiTCN has also demonstrated strong generalization across sequences of different lengths40. The complete architecture of the BiTCN model is illustrated in Fig. 3.

Model training

This study trained the BiTCN model using benchmark datasets, with the corresponding hyperparameter configurations outlined in Table 3. The training begins by feeding an input feature matrix into the model, which then passes through three dilated causal convolutional layers with dilation rates of 1, 2, and 4. A normalization layer is applied to enhance convergence speed, and the ReLU activation function is used to mitigate the vanishing gradient issue41.

After evaluating several hyper-parameter combinations, we finalized the training setup using the Adam optimizer with a learning rate of 0.003, 40 epochs, and a batch size of 64. We incorporated a dropout rate of 0.3, L1 regularization, and LASSO techniques to enhance generalization and prevent over-fitting. The model is optimized using binary cross-entropy as the loss function, with uniform kernel initialization for the convolutional filters. The final output layer employs a sigmoid activation function30 to classify the input into the target binary class.

Performance evaluation

In deep learning, multiple performance metrics are employed to evaluate the effectiveness of computational models from various perspectives31. The evaluation typically begins with a confusion matrix, which provides insight into true positives, true negatives, false positives, and false negatives during training42. While accuracy (ACC) is a common and straightforward metric, it may not be sufficient for imbalanced datasets43,44,45. For this study, to ensure a comprehensive assessment of our proposed model, we employed commonly used performance measures, including Accuracy (ACC), Specificity (SP), Sensitivity (SN), and Matthews Correlation Coefficient (MCC). These metrics using the Chou symbol are defined as:

Where T+ symbolizes true positives, F+ symbolizes false positives, T− Symbolizes true negatives, and F− false negatives, respectively.

Results and discussion

Experimental setup

In this study, the experiments were conducted on a high-performance Dell workstation equipped with an Intel Core i7 processor running at 3.3 GHz and 16 GB of RAM. The experimental setup utilized the Python programming language, chosen for its extensive ecosystem of libraries for deep learning and data analysis. Model development and evaluation utilized Keras and TensorFlow to build convolutional neural network architectures efficiently. The Transformers library was employed to implement Bidirectional Encoder Representations from Transformers (BERT), which was combined with Iterated Dilated Convolutional Neural Networks (ID-CNNs) for hybrid feature extraction. Additionally, the Binary Tree Growth (BTG) algorithm was integrated for feature selection. Scikit-learn supported data preprocessing and performance evaluation, while Matplotlib facilitated visualization of training metrics and statistical analysis. This configuration provided a robust and flexible environment to validate the effectiveness and computational efficiency of the proposed model.

Performance analysis

In this section, we used to assess the suggested model’s efficacy concerning various feature extraction techniques, including Bidirectional Encoder Representations from Transformers (BERT) and Iterated Dilated Convolutional Neural Networks (ID-CNNs), as well as hybrid features (i.e., features obtained both before and after feature selection) using both datasets (i.e., datasets 1 and 2). Table 4 displays the outcomes of our suggested model’s evaluation across several sequence formation strategies. Using dataset 1, the proposed model outperformed its peers, especially when using hybrid traits instead of individual ones. The proposed model achieved a 98.76% success rate before feature selection, with an MCC of 0.955. We used a feature selection strategy to lower the dimensionality of the hybrid feature space, which further boosted performance. The result was an MCC of 0.964 and an accuracy of 99.01%.

Further, Table 4 highlights the performance gains in individual and hybrid feature sets using dataset 2. The proposed model consistently outperformed individual feature methods when leveraging hybrid features. Specifically, it attained an average accuracy of 97.55% before feature selection with an MCC of 0.946, which increased further to 99.63% and an MCC of 0.948 after feature selection. These experimental results confirm that the proposed model’s predictive effectiveness using both datasets with optimal hybrid features.

Utilizing LIME on samples selected at random after BTG feature selection (A) Dataset 1-Kaggle (B) Dataset 2-Kaggle Dionisio4.

To further investigate the role of various characteristics, we executed a LIME research46 on a randomly selected instance after the BTG analysis for group categorization; the results are shown in Fig. 4. Class 0 represents a negative class (orange: Non-Cyber Threats), while Class 1 represents a positive class (blue: Cyber Threats).

Moreover, we evaluate the derived features using t-distributed Stochastic Neighbor Embedding (t-SNE)40 on both datasets. Both global relationships and local structural features are captured successfully by this method. Figure 5 shows t-SNE visualization before BTG, showing that there is overlap between the positive and negative cyber threats in the hybrid features. But after BTG-selected optimal features, the t-SNE maps showed clear clusters for pos + and neg- threats, with very few false positives and negatives overlapping in the optimal hybrid features. Similarly, using dataset 2 as shown in Fig. 6, t-SNE visualization before BTG, the t-SNE maps showed apparent overlapping of the positive and negative cyber threats, but after BTG selected optimal features, the t-SNE maps showed clear clusters for pos+ and neg− threats.

The t-SNE visualization of the proposed model using Dataset 2-Dionisio4.

Additionally, Fig. 7 presents a confusion matrix that provides further insight into the performance of the proposed model, showcasing its effectiveness in the prediction with the selected feature vector on both datasets.

Confusion metrics of the proposed model using (A) Dataset 1-Kaggle, (B) Dataset 2-Kaggle Dionisio4.

Moreover, we have compared the proposed feature selection algorithm with metaheuristic algorithms, including Salp Swarm Algorithm (SSA), Grey Wolf Optimizer (GWO), Whale Optimization Algorithm (WOA), and Particle Swarm Optimization (PSO), as shown in Table 5. From Table 5, the Binary Tree Growth (BTG) algorithm shows superior performance compared to other algorithms. BTG consistently achieves higher accuracy, F1-score, precision, recall, and MCC across both datasets. For example, on Dataset 1, BTG attains an accuracy of 99.01% and an F1-score of 99.17%, outperforming PSO, which achieved 98.61% accuracy and 98.91% F1-score. Similarly, on Dataset 2, BTG maintains the highest F1-score (97.94%) among all compared algorithms. These results demonstrate that BTG not only improves classification performance but also effectively reduces noise and redundant features, making it a robust and efficient choice for real-time cyber threat detection in social media streams.

Other learning classifiers comparison

In this section, we compare the proposed model’s performance against other commonly used ML methods using hybrid features. These ML algorithms include Convolutional Neural Network (CNN)47, Deep Neural Network (DNN)43, Support Vector Machine (SVM)48, and Extreme Gradient Boosting (XGB)42. Table 6 shows the performance of these classifiers using both datasets.

From Table 6, the proposed ID-BERT-BiTCN model achieved the highest accuracy of 99.01% and 97.63% on both datasets, outperforming all other classifiers. CNN ranked second with an accuracy of 98.44% and 97.16% respectively. In terms of MCC, which indicates model stability, ID-BERT-BiTCN recorded the highest value of 0.964 and 0.948, followed by CNN at 0.944 and 0.938, respectively, on both datasets. Overall, ID-BERT-BiTCN surpasses other ML models, improving the average success rate by 0.78% and 0.84% on both datasets, respectively.

Performance evaluation and ablation study

To evaluate the contribution of each component and design choice in the proposed ID-BERT-BiTCN framework, we conducted a comprehensive ablation study. Table 7 summarizes the results across three perspectives: (i) component ablation, (ii) classifier comparison, and (iii) feature selection algorithm comparison.

In the component-level analysis, removing either BERT or ID-CNN features leads to a noticeable drop in performance, highlighting the importance of both contextual representation and hierarchical feature extraction. Feature selection using the proposed Binary Tree Growth (BTG) algorithm further improves accuracy and F1-score compared to hybrid features without selection, demonstrating its effectiveness in reducing noise and redundancy. Classifier comparison confirms that the integrated BiTCN module contributes significantly to modeling sequential dependencies, as the full ID-BERT-BiTCN consistently outperforms CNN, DNN, XGBoost, and SVM classifiers on both datasets. Finally, among the tested optimization algorithms for feature selection, BTG achieves the highest performance metrics, outperforming SSA, GWO, WOA, and PSO, which validates its superiority for this task.

Overall, this ablation study demonstrates that each module in the proposed pipeline, including contextual embedding, hierarchical extraction, optimized feature selection, and sequential classification, plays a critical role in achieving the high accuracy and robustness of the ID-BERT-BiTCN framework.

Comparison with existing state-of-the-art models

This section compares the proposed model with existing benchmark models. Table 8 presents a comparative analysis of our proposed ID-BERT-BiTCN model against several state-of-the-art methods on Dataset 1, focusing on key performance metrics such as F1-score and recall. Table 8 highlights the performance of models developed by Mahaini et al.11, Alves et al.16, Dionisio et al.23, and Alsodi et al.21. Mahaini et al. achieved an F1-score of 90%, while Alves et al. and Dionisio et al. reported recall scores of 90.00% and 94.00%, respectively. Notably, Alsodi et al. attained an F1-score of 99.00%, setting a high benchmark for performance. Our proposed model, ID-BERT-BiTCN, outperforms these methods with an F1-score of 99.17%, indicating superior ability to balance precision and recall. This result demonstrates the effectiveness of integrating BERT’s contextual language understanding with the temporal feature extraction capabilities of a bidirectional Temporal Convolutional Network (BiTCN).

Table 9 provides a performance comparison of F1 scores achieved by different models on Dataset 2. Dionisio et al.4 reported an F1 score of 92.20%, while Bekhouche et al.22 achieved a significantly higher score of 97.40%. Our proposed model, ID-BERT-BiTCN, outperforms these results with an F1 score of 97.94%. This improvement, although incremental, demonstrates the effectiveness and robustness of our model in accurately detecting relevant patterns, achieving better precision-recall balance than prior methods. The results affirm the potential of ID-BERT-BiTCN as a state-of-the-art approach for cybersecurity-related classification tasks.

Conclusion

Online social networks (OSNs) have become critical platforms for both legitimate communication and malicious cyber activities. As such, identifying cybersecurity-related accounts and tracking their activities can support cyber threat intelligence (CTI), enhance attack prevention and detection, and provide insights into the effectiveness of cybersecurity awareness efforts. In this study, we proposed the ID-BERT-BiTCN framework, which integrates Bidirectional Encoder Representations from Transformers (BERT) and Iterated Dilated Convolutional Neural Networks (ID-CNNs) for robust feature extraction, employs the Binary Tree Growth (BTG) algorithm for effective feature selection, and leverages a Bi-directional Temporal Convolutional Network (BiTCN) for sequential classification. Comparative evaluations on two benchmark datasets demonstrate that the proposed model consistently outperforms existing machine learning and deep learning methods, achieving superior accuracy and the highest F1-scores of 99.17% and 97.94%. These results validate our research hypothesis and highlight the originality of combining contextual representation, hierarchical pattern extraction, optimized feature selection, and temporal dependency modeling within a unified framework. By doing so, this study sets a new benchmark for real-time and accurate CTI from social media sources.

Despite these promising results, several limitations remain. First, the model relies on curated datasets that may not fully represent the evolving dynamics of real-world Twitter streams. Second, the training process, though optimized with BTG feature selection, is still computationally demanding, potentially limiting large-scale or resource-constrained applications. Third, this study focuses primarily on Twitter data, and broader applicability across multiple OSNs requires further investigation. As future directions, we aim to (i) refine hyperparameter configurations and explore distributed parallel training to reduce computational complexity, (ii) extend the framework to include multiple OSINT platforms such as Reddit, Telegram, and forums to strengthen coverage against cross-platform threats, and (iii) incorporate explainable AI (XAI) methods to enhance the interpretability of threat detection results for cybersecurity professionals. These steps will not only improve scalability and efficiency but also increase the practical value of the framework in real-world CTI operations49,50,51.

Data availability

The data used in this study is sourced from the publicly available link https://www.kaggle.com/datasets/syedabbasraza/suspicious-tweets/data and the dataset introduced by Dionisio et al.4.

References

Mavaluru, D. et al. Deep convolutional neural network based real-time abnormal behavior detection in social networks. Comput. Electr. Eng. https://doi.org/10.1016/j.compeleceng.2023.108987 (2023).

Michael Onyema, E. et al. Remote monitoring system using slow-fast deep Convolution neural network model for identifying anti-social activities in surveillance applications. Meas. Sens. https://doi.org/10.1016/j.measen.2023.100718 (2023).

Chen, H., Lee, S. & Jeong, D. Application of a FL time series Building model in mobile network interaction anomaly detection in the internet of things environment. Comput. Intell. Neurosci. https://doi.org/10.1155/2022/2760966 (2022).

DIonisio, N., Alves, F., Ferreira, P. M. & Bessani, A. Towards end-to-end cyberthreat detection from twitter using multi-task learning. In Proceedings of the International Joint Conference on Neural Networks. https://doi.org/10.1109/IJCNN48605.2020.9207159 (2020).

Saheed, Y. K., Abdulganiyu, O. H. & Tchakoucht, T. A. Modified genetic algorithm and fine-tuned long short-term memory network for intrusion detection in the internet of things networks with edge capabilities. Appl. Soft Comput. 155, 111434. https://doi.org/10.1016/j.asoc.2024.111434 (2024).

Kayode Saheed, Y. & Ebere Chukwuere, J. CPS-IIoT-P2Attention: explainable Privacy-Preserving with scaled Dot-Product attention in Cyber-Physical System-Industrial IoT network. IEEE Access. 13, 81118–81142. https://doi.org/10.1109/ACCESS.2025.3566980 (2025).

Saheed, Y. K., Misra, S. & CPS-IoT-PPDNN A new explainable privacy preserving DNN for resilient anomaly detection in Cyber-Physical Systems-enabled IoT networks. Chaos Solitons Fractals. 191, 115939. https://doi.org/10.1016/j.chaos.2024.115939 (2025).

Sabottke, C., Suciu, O. & Dumitras, T. Vulnerability disclosure in the age of social media: Exploiting twitter for predicting real-world exploits. In Proceedings of the 24th USENIX Security Symposium 1041–1056 (2015).

Evangelista, J. R. G., Sassi, R. J., Romero, M. & Napolitano, D. Systematic literature review to investigate the application of open source intelligence (OSINT) with artificial intelligence. J. Appl. Secur. Res. https://doi.org/10.1080/19361610.2020.1761737 (2021).

Sun, N. et al. Cyber threat intelligence mining for proactive cybersecurity defense: A survey and new perspectives. IEEE Commun. Surv. Tutorials. https://doi.org/10.1109/COMST.2023.3273282 (2023).

Mahaini, M. I. & Li, S. Detecting cyber security related Twitter accounts and different sub-groups. In Proceedings of the 2021 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining 599–606 (ACM, 2021). https://doi.org/10.1145/3487351.3492716

Ruohonen, J., Hyrynsalmi, S. & Leppänen, V. A mixed methods probe into the direct disclosure of software vulnerabilities. Comput. Hum. Behav. https://doi.org/10.1016/j.chb.2019.09.028 (2020).

Abdelhaq, H., Sengstock, C. & Gertz, M. EvenTweet: Online localized event detection from Twitter. Proc. VLDB Endow. https://doi.org/10.14778/2536274.2536307 (2013).

Kergl, D., Roedler, R. & Rodosek, G. D. Detection of zero day exploits using real-time social media streams. In Advances in Intelligent Systems and Computing. https://doi.org/10.1007/978-3-319-27400-3_36 (2016).

Rodriguez, A. & Okamura, K. Generating real time cyber situational awareness information through social media data mining. In 2019 IEEE 43rd Annual Computer Software and Applications Conference (COMPSAC) 502–507 (IEEE, 2019). https://doi.org/10.1109/COMPSAC.2019.10256

Alves, F., Bettini, A., Ferreira, P. M. & Bessani, A. Processing tweets for cybersecurity threat awareness. Inf. Syst. https://doi.org/10.1016/j.is.2020.101586 (2021).

Rodriguez, A. & Okamura, K. Enhancing data quality in real-time threat intelligence systems using machine learning. Soc. Netw. Anal. Min. https://doi.org/10.1007/s13278-020-00707-x (2020).

Sceller, Q., Le, Karbab, E. M. B., Debbabi, M. & Iqbal, F. SONAR: Automatic detection of cyber security events over the twitter stream. In ACM International Conference Proceeding Series. https://doi.org/10.1145/3098954.3098992 (2017).

Queiroz, A., Keegan, B. & Mtenzi, F. Predicting software vulnerability using security discussion in social media. In European Conference on Information Warfare and Security, ECCWS (2017).

Dabiri, S. & Heaslip, K. Developing a Twitter-based traffic event detection model using deep learning architectures. Expert Syst. Appl. https://doi.org/10.1016/j.eswa.2018.10.017 (2019).

Alsodi, O., Zhou, X., Gururajan, R., Shrestha, A. & Btoush, E. Cyber threat detection on twitter using deep learning techniques: IDCNN and BiLSTM Integration. In 2024 Twelfth International Conference on Advanced Cloud and Big Data (CBD) 375–379. (IEEE, 2024). https://doi.org/10.1109/CBD65573.2024.00073

Bekhouche, M. E. A. & Adi, K. Advanced Real-Time detection of cyber threat information from tweets. In: (eds Adi, K., Bourdeau, S., Durand, C., Viet Triem Tong, V., Dulipovici, A., Kermarrec, Y. et al.) Foundations and Practice of Security. Cham: Springer Nature Switzerland; 18–33. (2025).

Dionisio, N., Alves, F., Ferreira, P. M. & Bessani, A. Cyberthreat detection from twitter using deep neural networks. In 2019 International Joint Conference on Neural Networks (IJCNN) 1–8 (IEEE, 2019). https://doi.org/10.1109/IJCNN.2019.8852475

Fang, Y., Gao, J., Liu, Z. & Huang, C. Detecting cyber threat event from Twitter using IDCNN and BiLSTM. Appl. Sci. https://doi.org/10.3390/app10175922 (2020).

Sani, A. M. & Moeini, A. Real-time event detection in Twitter: A case study. In 2020 6th International Conference on Web Research, ICWR 2020. https://doi.org/10.1109/ICWR49608.2020.9122281 (2020).

Rifano, E. J. et al. Text summarization Menggunakan library natural Language toolkit (NLTK) berbasis Pemrograman python. Ilk J. Comput. Sci. Appl. Inf. https://doi.org/10.28926/ilkomnika.v2i1.32 (2020).

Wang, R., Jin, J., Zou, Q., Nakai, K. & Wei, L. Predicting protein–peptide binding residues via interpretable deep learning. Bioinformatics 38, 3351–3360. https://doi.org/10.1093/bioinformatics/btac352 (2022).

Lin, K., Quan, X., Jin, C., Shi, Z. & Yang, J. An interpretable Double-Scale attention model for enzyme protein class prediction based on transformer encoders and Multi-Scale convolutions. Front. Genet. 13. https://doi.org/10.3389/fgene.2022.885627 (2022).

Khan, S., Uddin, I., Noor, S., AlQahtani, S. A. & Ahmad, N. N6-methyladenine identification using deep learning and discriminative feature integration. BMC Med. Genomics. 18, 58. https://doi.org/10.1186/s12920-025-02131-6 (2025).

Noor, S., AlQahtani, S. A. & Khan, S. XGBoost-Liver: an intelligent integrated features approach for classifying liver diseases using ensemble XGBoost training model. Comput. Mater. Contin. 83, 1435–1450. https://doi.org/10.32604/cmc.2025.061700 (2025).

Khan, S. et al. Deep-ProBind: binding protein prediction with transformer-based deep learning model. BMC Bioinform. 26, 88. https://doi.org/10.1186/s12859-025-06101-8 (2025).

Cheraghalipour, A., Hajiaghaei-Keshteli, M. & Paydar, M. M. Tree growth algorithm (TGA): A novel approach for solving optimization problems. Eng. Appl. Artif. Intell. 72, 393–414. https://doi.org/10.1016/j.engappai.2018.04.021 (2018).

Chawla, N. V., Bowyer, K. W., Hall, L. O. & Kegelmeyer, W. P. SMOTE: synthetic minority Over-sampling technique. J. Artif. Intell. Res. 16, 321–357. https://doi.org/10.1613/jair.953 (2011).

Tang, B. & He, H. ENN: extended nearest neighbor method for pattern recognition [Research Frontier]. IEEE Comput. Intell. Mag. 10, 52–60. https://doi.org/10.1109/MCI.2015.2437512 (2015).

Shaikh, A. K., Nazir, A., Khalique, N., Shah, A. S. & Adhikari, N. A new approach to seasonal energy consumption forecasting using Temporal convolutional networks. Results Eng. 19, 101296. https://doi.org/10.1016/j.rineng.2023.101296 (2023).

Zhang, Y., Ma, Y. & Liu, Y. Convolution-Bidirectional Temporal convolutional network for protein secondary structure prediction. IEEE Access. 10, 117469–117476. https://doi.org/10.1109/ACCESS.2022.3219490 (2022).

Yuan, L., Ma, Y. & Liu, Y. Ensemble deep learning models for protein secondary structure prediction using bidirectional Temporal Convolution and bidirectional long short-term memory. Front. Bioeng. Biotechnol. https://doi.org/10.3389/fbioe.2023.1051268 (2023).

Saheed, Y. K., Misra, S. & Chockalingam, S. Autoencoder via DCNN and LSTM Models for Intrusion Detection in Industrial Control Systems of Critical Infrastructures. In 2023 IEEE/ACM 4th International Workshop on Engineering and Cybersecurity of Critical Systems (EnCyCriS) 9–16 (IEEE, 2023). https://doi.org/10.1109/EnCyCriS59249.2023.00006

Saheed, Y. K., Abdulganiyu, O. H., Majikumna, K. U., Mustapha, M. & Workneh, A. D. ResNet50-1D-CNN: A new lightweight resNet50-One-dimensional Convolution neural network transfer learning-based approach for improved intrusion detection in cyber-physical systems. Int. J. Crit. Infrastruct. Prot. 45, 100674. https://doi.org/10.1016/j.ijcip.2024.100674 (2024).

Akbar, S., Zou, Q., Raza, A. & Alarfaj, F. K. iAFPs-Mv-BiTCN: predicting antifungal peptides using self-attention transformer embedding and transform evolutionary based multi-view features with bidirectional Temporal convolutional networks. Artif. Intell. Med. 151, 102860. https://doi.org/10.1016/j.artmed.2024.102860 (2024).

Khan, S. et al. Sequence based model using deep neural network and hybrid features for identification of 5-hydroxymethylcytosine modification. Sci. Rep. 14, 9116. https://doi.org/10.1038/s41598-024-59777-y (2024).

Khan, S. et al. XGBoost-enhanced ensemble model using discriminative hybrid features for the prediction of sumoylation sites. BioData Min. 18, 12. https://doi.org/10.1186/s13040-024-00415-8 (2025).

Khan, F. et al. Prediction of recombination spots using novel hybrid feature extraction method via deep learning approach. Front. Genet. 11, 1052. https://doi.org/10.3389/fgene.2020.539227 (2020).

Inayat, N. et al. iEnhancer-DHF: identification of enhancers and their strengths using optimize deep neural network with multiple features extraction methods. IEEE Access. 9, 40783–40796. https://doi.org/10.1109/ACCESS.2021.3062291 (2021).

Ahmad, W. et al. Intelligent hepatitis diagnosis using adaptive neuro-fuzzy inference system and information gain method. Soft Comput. 23, 10931–10938. https://doi.org/10.1007/s00500-018-3643-6 (2019).

Qiu, Y. & Zhou, J. Short-term rockburst prediction in underground project: insights from an explainable and interpretable ensemble learning model. Acta Geotech. 18, 6655–6685. https://doi.org/10.1007/s11440-023-01988-0 (2023).

Tomala, M. & Staniec, K. Modelling of ML-Enablers in 5G radio access Network-Conceptual proposal of computational framework. Electronics 12, 481. https://doi.org/10.3390/electronics12030481 (2023).

Tran, T-X., Nguyen, V-N. & Le, N. Q. K. Incorporating natural language-based and sequence-based features to predict protein sumoylation sites. In Lecture Notes in Networks and Systems 74–88. https://doi.org/10.1007/978-3-031-36886-8_7 (2023).

Khan, S. et al. Optimized feature learning for Anti-Inflammatory peptide prediction using parallel distributed computing. Appl. Sci. 13, 7059. https://doi.org/10.3390/app13127059 (2023).

Noor, S. et al. Optimizing performance of parallel computing platforms for large-scale genome data analysis. Computing 107, 86. https://doi.org/10.1007/s00607-025-01441-y (2025).

Khan, S., Khan, M., Iqbal, N., Li, M. & Khan, D. M. Spark-Based parallel deep neural network model for classification of large scale RNAs into PiRNAs and Non-piRNAs. IEEE Access. 8, 136978–136991. https://doi.org/10.1109/ACCESS.2020.3011508 (2020).

Acknowledgements

Ongoing Research Funding program - Research Chairs (ORF-RC-2025-5300), King Saud University, Riyadh, Saudi Arabia.

Author information

Authors and Affiliations

Contributions

All authors contributed equally to this work. SK and SN wrote the main manuscript, and SAQ, NA and ND debugged the code and provided the datasets.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Khan, S., Dilshad, N., Ahmad, N. et al. Integrating AI in security information and event management for real time cyber defense. Sci Rep 15, 35872 (2025). https://doi.org/10.1038/s41598-025-19689-x

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-19689-x

This article is cited by

-

Application of generative adversarial networks (GAN) and reinforcement learning in drug classification

Discover Computing (2026)

-

LightDTA: lightweight drug-target affinity prediction via random-walk network embedding and knowledge distillation

Molecular Diversity (2026)

-

Privacy preserving epileptic seizure recognition using federated and explainable machine learning

Discover Computing (2026)