Abstract

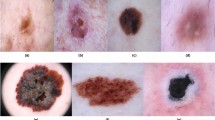

This study examines the classification of human skin disorders, particularly benign and malignant skin cancer, with a focus on protecting data privacy. Traditional visual diagnosis of skin disorders is often subjective and complicated by the varying colors, textures, and shapes of lesions. To address these challenges, we propose a privacy-preserving and explainable deep learning (DL) architecture that uses secure federated learning (FL) to train on distributed medical data sources without exposing private patient information, ensuring compliance with data protection regulations. Real-world decentralized scenarios are simulated by distributing a binary (benign/malignant) skin image dataset among three clients. The VGG19 model, a well-established convolutional neural network (CNN) pretrained on ImageNet and fine-tuned for binary classification, is trained with the Federated Averaging (FedAvg) method over 25 federated communication rounds. Publicly available dermatology datasets, such as those hosted on Kaggle, are commonly used in similar studies for performance evaluation, and this study likewise uses a Kaggle dataset. Additionally, explainable AI (XAI) techniques, such as Gradient-weighted Class Activation Mapping (Grad-CAM), are incorporated to improve transparency and help clinicians visualize and interpret the model's decision-making process. Experimental results demonstrate that the federated approach maintains data privacy while achieving high classification performance. This work highlights the potential of combining explainability and FL to develop reliable, privacy-conscious AI solutions for dermatological diagnosis.


Introduction

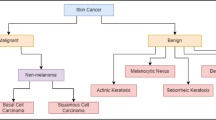

Millions of new skin cancer cases are diagnosed each year, making it one of the most prevalent types of cancer worldwide. Melanoma, a malignant form of skin cancer, can be particularly deadly if not detected early1. The skin acts as a protective barrier for internal organs and structures, so even minor disturbances can have a significant impact on the body's systems. The varying appearance and severity of skin lesions reflect the diverse etiology of skin disorders2. Although early detection is essential for improving patient survival, traditional diagnostic methods rely mostly on dermatologists' subjective and error-prone visual evaluation3. Skin lesions may be benign or malignant (e.g., melanoma), and skin cancer caught early is almost always curable. The first step in diagnosing melanoma is a physical examination of the affected skin area to rule out other possible causes. Global estimates indicate that about 10 million people died of cancer in 20203. Computational technologies have become increasingly popular in skin cancer diagnosis, with advances in Artificial Intelligence (AI) and image processing being essential for precise lesion assessment and early identification. When paired with Machine Learning (ML) algorithms, methods including digital mammography, histopathology, and skin tomosynthesis have significantly improved diagnostic accuracy.

Melanoma cases have risen steadily in recent years. If the cancer is discovered early, a minor surgical procedure can improve the chances of recovery. Dermatologists frequently use dermoscopy, a popular non-invasive imaging method, to assess pigmented skin lesions4. By magnifying the lesion surface, dermoscopy makes its structure easier for the dermatologist to examine5,6. Recent advances in AI, especially DL, have greatly aided the development of automated and precise diagnostic systems. CNNs have demonstrated remarkable performance in medical imaging tasks, including skin cancer classification7. Among these, VGG16, a deep CNN pretrained on ImageNet, has been widely used for Transfer Learning (TL) because of its robust feature extraction capabilities and straightforward architecture8. By fine-tuning VGG16 on dermoscopic image datasets, researchers have differentiated between benign and malignant lesions with high accuracy9. In the conventional healthcare system, a central agent is responsible for supplying raw data, which leaves the system exposed to serious risks, the most pressing of which concern security and privacy. In conjunction with AI, the system could instead involve multiple collaborating agents that communicate effectively with their target host. Biological data include patient-specific information such as blood activity, skin response, respiration, heart activity, brain activity, facial expressions, and other vital signals. Because such information may be gathered as part of a national research project, it poses a risk to both individual and national privacy and therefore raises serious privacy concerns10. Recent advances in ML and data-driven modeling have also significantly enhanced predictive capabilities in energy-related applications.
For instance, Ahsan Ali et al.11 demonstrated the potential of federated ML to predict hydrogen storage in Dibenzyltoluene (DBT), a liquid organic hydrogen carrier, offering a novel approach to decentralized energy system optimization. Similarly, Ahsan Ali et al.12 applied ML models to predict hydrogen storage in DBT, highlighting the potential of data-driven approaches for advancing hydrogen-based energy systems.

Yasir Ali et al.13 introduced a two-step defense against poisoning attacks in FL whose main goal is to reduce false positives when detecting poisoned models. First, it uses two thresholds instead of one to avoid wrongly rejecting borderline models. Second, it checks the past performance of these models to decide whether they are safe or poisoned. To remain accurate over many learning rounds, the thresholds are adjusted automatically based on changes in model behavior. Abdul Majeed et al.14 provided a thorough examination of FL, covering its fundamentals, paradigm shifts, real-world difficulties, and significant advances. The authors also discussed the trade-offs of integrating FL with other technologies, cutting-edge solutions, studies of its reliability, and possible future research directions. Hwang et al.15 reviewed the use of FL in healthcare, including for COVID-19, explaining the need for FL, its architecture, and its applications in the medical domain. They also discussed emerging technologies such as federated analytics and swarm learning, along with major challenges and vulnerabilities, and suggested future research to improve the reliability and security of FL in healthcare. Deebak et al.16 introduced a lightweight, FL-based two-factor authentication framework with strong privacy protection for mobile sinks in the social Internet of Medical Things (IoMT) and smart eHealth. Using FL Layered Authentication (FLLA) and FL, it defends against major cyber-attacks and learns data features securely. Tests on the Modified National Institute of Standards and Technology (MNIST) and Fashion-MNIST databases show accuracy ranging from 89.83% to 93.41%.

Our study presents a comprehensive, privacy-preserving framework for automated skin cancer identification that classifies skin lesions as benign or malignant. The framework is based on the well-known VGG19 CNN architecture and combines the advantages of secure Deep Transfer Learning (DTL) and FL. To enhance interpretability and transparency, the framework incorporates Grad-CAM, which lets researchers and physicians see which areas of the input skin images have the greatest impact on the model's predictions. Because it provides a visual explanation for every classification, this interpretability both increases confidence in AI-based diagnostic tools and aids clinical decision-making.

The remainder of this work is organized as follows. Section 2 reviews prior studies and identifies the literature gap that this research seeks to fill. Section 3 details the methodology. Section 4 presents the results and simulations. Finally, Section 5 concludes the paper and highlights future work.

Literature review

The authors17 reported that their Hybrid 6 network achieved the best accuracy (88.29%) and sensitivity (85.19%), and concluded that DL models can classify skin cancer accurately and perform on par with dermatologists. Nevertheless, they also identified several factors that reduce accuracy and leave room for improvement. Their objective was an AI-based approach that brings specialized diagnostic knowledge into primary care. CNNs are among the most suitable Artificial Neural Network (ANN) implementations, particularly for medical imaging data, and previous research suggests that ANNs can effectively support clinicians' treatment decisions in clinical settings.

The authors18 proposed a model based on MobileNetV2 and Long Short-Term Memory (LSTM) that classified and detected skin diseases effectively with little effort and processing power, achieving an encouraging accuracy of 85.34%. The MobileNetV2 architecture, with its stride-2 mechanism, was intended to run on portable devices. The LSTM module improves prediction accuracy by preserving information from prior timestamps while keeping the model computationally efficient, and the authors suggest that the model would become more resilient if weight optimization were used to incorporate information about the current state.

Another important advance in skin cancer diagnosis was a two-phase framework comprising segmentation and classification stages for detecting melanoma lesions19. The researchers used a hybrid DL architecture for the segmentation phase, which improved the accuracy of both the segmentation and the subsequent classification, as shown in Table 1. To learn more effectively from the segmented lesion regions, the classification model combined a Dilated Residual Network (DRN) with handcrafted features. Integrating three different DL models increased the system's robustness. Experiments on two benchmark datasets, International Skin Imaging Collaboration (ISIC) 2017 and ISBI 2016, showed encouraging results, with accuracies of 85.3% on ISIC 2017 and 88.9% on ISBI 2016.

A recent study investigated a multimodal method that combines clinical and dermoscopic images to improve the diagnostic accuracy of skin lesion classification. The authors'20 hybrid CNN architectures achieved a classification accuracy of 89.5%, suggesting that using multiple imaging modalities can substantially increase the reliability of skin cancer detection systems. However, the study also highlighted major drawbacks of multimodal learning, specifically the added difficulty of preprocessing different types of images and the greater challenge of building models that perform well across a range of inputs.

A study21 presented an innovative two-stage ensemble approach to improve the classification of skin melanoma from dermoscopic images. The framework combined five effective classification models through ensemble learning to improve overall decision accuracy. The researchers also created a tailored lesion segmentation method that produced targeted lesion masks, allowing the input images to be resized with more focus on the lesion region. To better fuse the outputs of the individual models, they designed a novel ensemble architecture with locally connected layers. Experimental evaluation on the ISIC 2017 challenge dataset showed strong classification performance, demonstrating the system's efficacy.

In a comparative study22, the efficacy of FL for medical image classification was assessed against conventional DL models trained on centralized datasets. Despite underlying data heterogeneity, the researchers showed that FL, using shared models and parameters across six institutions, achieved performance on par with centralized training. This demonstrates FL's versatility and generalizability across a range of clinical settings. FL also greatly reduces systemic privacy risks by transmitting only model weights and updates rather than raw data. When evaluated on an external test set using pooled data, conventional DL models showed differing accuracies: VGG19 (71.0%), ResNet50 (77.0%), ResNeXt50 (80.0%), SE-ResNet50 (66.0%), and SE-ResNeXt50 (76.0%).

Numerous DL-based systems have been developed to automate early melanoma detection, which is crucial for improving patient survival. The author23 investigated FL for melanoma classification, simulating the FL process with data from a single centralized source. This arrangement sidestepped the difficulties usually associated with non-IID data distributions, such as variations in image properties across clinical sites. The study found that FL can be a good privacy-preserving substitute with little performance loss: classification accuracy was 83.74% with traditional centralized learning (CL) and a similar 83.02% with FL. Methods that efficiently handle the non-IID data conditions frequently found in real federated deployments therefore remain necessary.

Building on recent advances in skin cancer diagnosis, Ozdemir and Pacal26 introduced a robust hybrid DL architecture that addresses the challenges of multiclass skin lesion classification. Their approach combines ConvNeXtV2 blocks with separable self-attention to improve feature extraction and computational efficiency. Specifically, the ConvNeXtV2 modules extract fine-grained local features in the early stages, while the separable self-attention components capture global context in later layers, focusing on diagnostically significant regions with minimal computational overhead. The study reflects the growing trend of combining convolutional and transformer-based architectures to reconcile local detail recognition with global feature understanding, and it highlights the effectiveness of hybrid models and TL techniques for automated skin cancer diagnosis.

Contribution

This research study primarily contributes in the following ways:

- Multiple decentralized clients, such as clinics or hospitals (Client 1, Client 2, Client 3), can collaboratively train a deep learning (DL) model using the proposed federated learning (FL) framework without sharing private skin image data.

- This approach protects patient privacy and complies with data protection regulations, such as the Health Insurance Portability and Accountability Act (HIPAA) and the General Data Protection Regulation (GDPR), while maintaining high diagnostic accuracy.

- The model leverages explainable AI (XAI) techniques, such as Grad-CAM, to visualize and interpret the CNN's decision-making process.

- By highlighting the regions of skin images that influenced the model's predictions, these techniques enhance clinical trust and transparency and aid dermatologists in patient diagnosis.

Methodology

This article presents a deep learning (DL) method for classifying skin tumors as benign or malignant. The proposed framework integrates federated learning (FL) with a pretrained CNN model, VGG19. Traditionally, centralized machine learning requires consolidating sensitive patient data in a single location; FL avoids this by keeping each client's data local. To enhance the interpretability of model predictions, explainable AI (XAI) techniques such as Grad-CAM are incorporated. This privacy-preserving and interpretable solution aims to improve skin cancer diagnosis. The workflow of the proposed methodology is summarized in Table 2.

We adopt asynchronous FL, in which many clients coordinate to train the global model but never exchange raw data. Each client trains the VGG19 on its local dataset and then periodically shares only the model parameters with a central server for aggregation through FedAvg, as shown in Fig. 1.

In the proposed methodology, each client holds a portion of the skin cancer image dataset27, which is never shared with outside parties in order to protect data privacy. Every client uses a VGG19 model pretrained on ImageNet and fine-tuned to classify skin cancers; its deep convolutional layers extract features such as edges, textures, and lesion shapes. In each round, each client trains its local model on its own dataset for 20 epochs, using mini-batches of 30 images. After local training, clients send model updates (weights or gradients), rather than raw data, to the global server.

The central server performs FedAvg: it aggregates the client updates and averages the local model parameters to produce a new global model, which concludes one FL round. The federated training loop runs for 25 rounds, allowing the global model to learn from all distributed datasets and improve its accuracy. Interpretability is provided by Grad-CAM, which identifies the image regions that influenced the model's decision.
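The server-side aggregation step can be sketched as follows. This is a minimal NumPy illustration, not the authors' code: the function name `fedavg`, the parameter names, and the toy values are hypothetical. FedAvg as originally formulated weights each client's parameters by its number of local training samples; with equal-sized client shards this reduces to the plain average described above. The same loop should also work on the floating-point tensors of a PyTorch `state_dict()`.

```python
import numpy as np

def fedavg(client_states, client_sizes):
    """Weighted FedAvg: average each parameter array, weighting client k by n_k / n."""
    total = float(sum(client_sizes))
    global_state = {}
    for name in client_states[0]:
        # Accumulate n_k * w_k for this parameter across all clients, then divide by n.
        weighted = sum(n * state[name] for state, n in zip(client_states, client_sizes))
        global_state[name] = weighted / total
    return global_state

# Hypothetical example: three clients, each with one weight matrix and one bias vector.
clients = [
    {"fc.weight": np.full((2, 2), 1.0), "fc.bias": np.array([0.0, 0.0])},
    {"fc.weight": np.full((2, 2), 2.0), "fc.bias": np.array([1.0, 1.0])},
    {"fc.weight": np.full((2, 2), 3.0), "fc.bias": np.array([2.0, 2.0])},
]
sizes = [100, 100, 100]  # equal-sized shards, so this is the plain arithmetic mean
new_global = fedavg(clients, sizes)
print(new_global["fc.weight"])
```

In a full round, each client would first run its local epochs starting from the current global weights, and the server would then load the returned average back into the global model before the next round.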

Dataset

The Kaggle dataset27 used in this study contains images of skin lesions from the ISIC dataset28, classified as either benign or malignant. The dataset is intended to identify potentially malignant tumors and represents a common binary classification problem in dermatology. As shown in Table 3, the dataset comprises a total of 3,297 images, of which 1,497 are malignant and 1,800 are benign.

Data preprocessing

DL models such as VGG19 and MobileNet require all images to be preprocessed into a standard input shape. All images are resized to 224 × 224 pixels to match the input dimensions of VGG19, as shown in Fig. 5. The images are then converted into PyTorch tensors and normalized with transforms, using ImageNet's mean and standard deviation to match the expectations of the pretrained model. This ensures that the input data are consistent, clean, and appropriate for TL models.
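The normalization step can be illustrated with NumPy. This sketch mimics what torchvision's `transforms.ToTensor()` followed by `transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])` does to an RGB image that has already been resized (for example with `transforms.Resize((224, 224))`). The helper name `to_normalized_chw` is hypothetical, and the exact transform pipeline used in this work is not specified beyond the description above.

```python
import numpy as np

# Standard ImageNet per-channel statistics expected by ImageNet-pretrained VGG models.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

def to_normalized_chw(image_hwc_uint8):
    """Mimic ToTensor() + Normalize(): scale to [0, 1], standardize per channel, HWC -> CHW.

    Assumes a 224 x 224 x 3 RGB uint8 image that has already been resized.
    """
    x = image_hwc_uint8.astype(np.float32) / 255.0   # ToTensor-style scaling to [0, 1]
    x = (x - IMAGENET_MEAN) / IMAGENET_STD           # broadcasts over the last (channel) axis
    return np.transpose(x, (2, 0, 1))                # channels-first layout, as PyTorch expects

white = np.full((224, 224, 3), 255, dtype=np.uint8)
out = to_normalized_chw(white)
print(out.shape)
```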

Data distribution

To simulate an FL setting, the dataset is distributed among three clients, each of which receives a balanced but distinct subset. This partition simulates a decentralized data environment with multiple clients, i.e., Client 1, Client 2, and Client 3 (Table 4). The distribution ensures that every client has samples from both classes, as shown in Figs. 2, 3, and 4; however, the actual partitioning may still yield non-IID client data.
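One way to give every client a balanced shard containing both classes is a stratified round-robin split, sketched below in plain Python. It is an assumption for illustration only (the exact partitioning logic behind Table 4 is not given), the function name `stratified_partition` is hypothetical, and the labels are synthetic. A split like this tends toward IID shards; a deliberately non-IID simulation would instead skew the class proportions per client.

```python
import random
from collections import Counter

def stratified_partition(labels, num_clients=3, seed=0):
    """Split sample indices into num_clients disjoint shards, dealing each class
    round-robin so that every client receives both classes in similar proportions."""
    rng = random.Random(seed)
    shards = [[] for _ in range(num_clients)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)                       # randomize which samples each client gets
        for j, i in enumerate(idx):
            shards[j % num_clients].append(i)  # deal indices out like cards
    return shards

# Hypothetical labels: 0 = benign, 1 = malignant (class sizes chosen for illustration).
labels = [0] * 90 + [1] * 75
shards = stratified_partition(labels)
for k, shard in enumerate(shards, start=1):
    print(f"client {k}:", Counter(labels[i] for i in shard))
```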

Data preparation

The dataset for each client is encapsulated in a DataLoader, with a batch size of 30 to enable effective mini-batch training. Each client in the federated learning loop performs local training using these DataLoaders. After each round, the global model is evaluated on a global test set, which is created from the remaining 20% of the original data.
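A PyTorch `DataLoader(dataset, batch_size=30, shuffle=True)` reshuffles a client's samples each epoch and yields mini-batches of 30, with a smaller final batch when the shard size is not a multiple of 30 (the default `drop_last=False`). The pure-Python generator below is a hypothetical stand-in that reproduces only this batching behaviour; it is not the PyTorch implementation, and the 100-sample shard is illustrative.

```python
import math
import random

def minibatches(indices, batch_size=30, shuffle=True, seed=None):
    """Yield lists of sample indices, mimicking a DataLoader with drop_last=False."""
    order = list(indices)
    if shuffle:
        random.Random(seed).shuffle(order)  # new sample order for this epoch
    for start in range(0, len(order), batch_size):
        yield order[start:start + batch_size]

shard = list(range(100))  # hypothetical client shard of 100 images
batches = list(minibatches(shard, batch_size=30, seed=1))
print(len(batches), [len(b) for b in batches])  # 4 [30, 30, 30, 10]
print(math.ceil(len(shard) / 30))               # 4 mini-batches per local epoch
```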

Data split

The dataset is divided into 80% training data and 20% testing data. For federated learning, the 80% training portion is distributed among the three clients, while the 20% test portion is reserved for evaluating the global model. Because the held-out test data are never used for training, this division provides a fair, centralized assessment of the global model's performance.
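The 80/20 split can be sketched as a stratified hold-out that keeps the benign/malignant ratio of Table 3 in both portions. Stratification is an assumption here (the text does not say whether the split was stratified), and the helper name `stratified_split` is hypothetical; under this sketch, the Table 3 class sizes would yield 360 benign and 299 malignant test images.

```python
import random

def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train_idx, test_idx), holding out test_fraction of each class."""
    rng = random.Random(seed)
    train, test = [], []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        n_test = round(len(idx) * test_fraction)  # per-class hold-out size
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return train, test

# Class sizes from Table 3: 1,800 benign (label 0) and 1,497 malignant (label 1).
labels = [0] * 1800 + [1] * 1497
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

The training indices would then be handed to the client partitioning step, and the test indices kept on the server for global evaluation.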

Model training

Model architectures

VGG19 is a CNN architecture consisting of sixteen convolutional layers and three fully connected (dense) layers. Originally developed for the ImageNet competition29, it employs small 3 × 3 convolutional filters. In this methodology, the Kaggle dataset27, derived from the ISIC dataset28, provides high-resolution dermoscopic images, all of which are resized to 224 × 224 pixels for compatibility with VGG19. Preprocessing includes normalization of pixel values and data augmentation techniques (e.g., flipping, rotation, and zooming) to improve model generalization. VGG19 applies Rectified Linear Unit (ReLU) activations after successive 3 × 3 convolutions, and max-pooling layers progressively reduce the spatial resolution. The earlier layers capture low-level features (such as edges and textures), while deeper layers extract more complex characteristics (such as lesion shape and irregular borders). The final convolutional output is flattened and passed through the fully connected layers, and the output layer is adapted for either binary classification (e.g., benign vs. malignant) or multi-class classification (e.g., melanoma and other lesion types). Because labeled dermoscopic data are limited, VGG19 is initialized with weights pretrained on ImageNet. For skin cancer classification, the final dense layer is replaced, while earlier layers are either frozen or fine-tuned to adapt the model to the task (Fig. 5).
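The layer counts and shapes above can be checked by tracing VGG19's standard convolutional configuration (configuration "E" in the original VGG paper). The plain-Python sketch below follows a 224 × 224 input through the 16 convolutions and 5 max-pooling layers; it computes shapes only and does not build a network. The names `VGG19_CFG` and `trace_vgg19` are illustrative.

```python
# VGG19 ("configuration E"): integers are 3x3 conv output channels, "M" is a 2x2 max-pool (stride 2).
VGG19_CFG = [64, 64, "M",
             128, 128, "M",
             256, 256, 256, 256, "M",
             512, 512, 512, 512, "M",
             512, 512, 512, 512, "M"]

def trace_vgg19(input_size=224, in_channels=3):
    """Follow spatial size and channel count through the conv stack (3x3 convs, padding 1)."""
    size, channels, n_conv = input_size, in_channels, 0
    for layer in VGG19_CFG:
        if layer == "M":
            size //= 2          # each max-pool halves height and width
        else:
            channels = layer    # a padded 3x3 conv keeps the spatial size, changes the channels
            n_conv += 1
    return n_conv, size, channels

n_conv, size, channels = trace_vgg19()
flat = size * size * channels
print(n_conv, size, channels, flat)  # 16 7 512 25088
```

The 512 × 7 × 7 = 25,088 flattened features feed the dense layers (4,096, 4,096, then the class outputs). In torchvision's VGG19 the last of these is `model.classifier[6]`, so a two-class head could be attached with `model.classifier[6] = torch.nn.Linear(4096, 2)`, and the convolutional layers could be frozen by setting `p.requires_grad = False` for each `p` in `model.features.parameters()`; which layers, if any, were frozen in this work is not specified.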

Federated learning setup

A federated learning (FL) architecture is used to classify skin cancer into benign and malignant categories while preserving data privacy across multiple client devices. The FL system simulates real-world clients by assigning each one a localized dataset.

Three clients, each hosting a portion of the skin cancer dataset, participate in the training process, as shown in Fig. 6. The VGG19 model, pretrained on ImageNet, serves as the basis for TL in this decentralized setting.

In the federated training loop, the optimizers are evaluated over 25 communication rounds using the FedAvg algorithm, as shown in Table 5. Model performance is assessed on each client's local test set.

Three popular optimizers are utilized and compared in our federated configuration to determine how various optimization strategies affect model performance.

Stochastic gradient descent (SGD)

SGD is known for its simplicity and stability and is frequently used as a baseline in FL implementations. Although it converges more slowly, it often works well with noisy or imbalanced data30.

RMSprop

RMSprop, an adaptive optimizer that modifies the learning rate for every parameter, works well with non-stationary objectives common in FL contexts and is especially well-suited for image data31.

AdamW

Particularly in vision applications, AdamW, a variation of the Adam optimizer with decoupled weight decay, is renowned for its enhanced generalization and quicker convergence. It has demonstrated impressive outcomes in medical images32.
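For intuition about how the three optimizers differ, their single-step update rules can be written out in NumPy. This is a simplified sketch (plain SGD and RMSprop without momentum); the RMSprop and AdamW hyperparameter defaults mirror those of PyTorch's `torch.optim.RMSprop` and `torch.optim.AdamW`, while the learning rates are placeholders rather than the values in Table 5.

```python
import numpy as np

def sgd_step(w, g, lr=0.01):
    """Plain SGD (no momentum): w <- w - lr * g."""
    return w - lr * g

def rmsprop_step(w, g, v, lr=0.01, alpha=0.99, eps=1e-8):
    """RMSprop: per-parameter step scaled by a running average of squared gradients."""
    v = alpha * v + (1 - alpha) * g**2
    return w - lr * g / (np.sqrt(v) + eps), v

def adamw_step(w, g, m, v, t, lr=1e-3, betas=(0.9, 0.999), eps=1e-8, weight_decay=1e-2):
    """AdamW: Adam moment estimates plus weight decay applied directly to w (decoupled from g)."""
    b1, b2 = betas
    w = w - lr * weight_decay * w       # decoupled weight decay
    m = b1 * m + (1 - b1) * g           # first-moment (mean) estimate
    v = b2 * v + (1 - b2) * g**2        # second-moment (uncentered variance) estimate
    m_hat = m / (1 - b1**t)             # bias corrections; t counts steps from 1
    v_hat = v / (1 - b2**t)
    return w - lr * m_hat / (np.sqrt(v_hat) + eps), m, v

w0 = np.array([1.0, -2.0])
g = np.array([0.5, -0.1])
zeros = np.zeros_like(w0)

w_sgd = sgd_step(w0, g)
w_rms, _ = rmsprop_step(w0, g, zeros)
w_adamw, _, _ = adamw_step(w0, g, zeros, zeros, t=1)
print(w_sgd, w_rms, w_adamw)
```

On the first step from zero state, SGD moves each weight in proportion to its gradient, RMSprop moves it by about 10 × lr against the gradient's sign regardless of its magnitude (because v = 0.01 g²), and AdamW moves it by about lr after first shrinking it by lr × weight_decay.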

Grad-CAM implementation

Grad-CAM creates heatmaps that highlight the image regions that most influence a model's decision33. In the proposed methodology, Grad-CAM improves the interpretability of the DL-based skin cancer classifier. By visualizing which regions drive each prediction, we aim to verify that the model's predictions rely on clinically significant features of the dermoscopic images, as shown in Fig. 7.

After training, Grad-CAM is used to produce visual explanations for the model's predictions. The trained VGG19 model was applied to every test image, and the gradients of the predicted class score with respect to the feature maps of the last convolutional layer were computed. Each feature map was weighted by the global average of its gradients. A ReLU was applied to the weighted sum of feature maps to retain only positive contributions. The resulting heatmap was overlaid on the original image to show which regions had the most influence on the prediction, as shown in Fig. 8.
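The procedure above corresponds to the standard Grad-CAM formulation. With \(A^k\) the k-th feature map of the last convolutional layer, \(y^c\) the score for class c, and Z the number of spatial positions in each map:

\[
\alpha_k^c = \frac{1}{Z}\sum_i\sum_j \frac{\partial y^c}{\partial A^k_{ij}}, \qquad
L^c_{\text{Grad-CAM}} = \operatorname{ReLU}\Big(\sum_k \alpha_k^c A^k\Big)
\]

The NumPy sketch below computes this map from activations and gradients that are assumed to have been captured already (in PyTorch, for example, with forward and backward hooks on the last convolutional layer). The function name `grad_cam` and the toy arrays are hypothetical, and the final division by the maximum is a common normalization for display rather than part of the definition.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap from last-conv activations A (K, H, W) and dy^c/dA (same shape)."""
    weights = gradients.mean(axis=(1, 2))             # alpha_k: global-average-pooled gradients
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A^k, shape (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps only positive evidence
    if cam.max() > 0:
        cam = cam / cam.max()                         # scale to [0, 1] for overlaying
    return cam

# Toy example with K = 2 feature maps of size 2 x 2 (values are illustrative).
A = np.array([[[1.0, 0.0], [0.0, 0.0]],
              [[0.0, 0.0], [0.0, 2.0]]])
dY_dA = np.array([[[1.0, 1.0], [1.0, 1.0]],        # map 0 supports the class (alpha = 1.0)
                  [[-0.5, -0.5], [-0.5, -0.5]]])   # map 1 counts against it (alpha = -0.5)
heatmap = grad_cam(A, dY_dA)
print(heatmap)
```

For VGG19 with a 224 × 224 input, the last convolutional feature maps are 14 × 14, so the heatmap would be upsampled to 224 × 224 before being overlaid on the image.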

Results & simulation

This section presents the results of the proposed FL system for skin cancer detection, which uses the VGG19 deep TL model. The approach is designed to be accurate across non-IID (non-independent and identically distributed) data sources, explainable, and privacy-preserving. The models were trained on Google Colab using an NVIDIA graphics processing unit (GPU) to accelerate computation and enable efficient DL model training.

Experimental setup

Three clients were used in the experiments, each with a different optimizer: Client-1 used AdamW, Client-2 used SGD, and Client-3 used RMSprop. Each client trained its local model on its dermatological data, and the optimizers' performance was evaluated over 25 communication rounds, as shown in Table 6. The local models were then combined within the FL framework, enabling collaboration without compromising data privacy.

Initial phase (rounds 1–3)

In the first rounds, client accuracy is low as the models begin to learn (testing: 47–54%; training: 46–49%). Owing to transfer learning from the ImageNet-pretrained VGG19, global accuracy is comparatively high from the start (85–90%). Client-3 (RMSprop) quickly reaches high training accuracy (95.19%), while Client-2 (SGD) shows early strength in testing accuracy (91.26% in Round 3).

Mid convergence phase (rounds 4–15)

All clients improve over time. From Round 6 onward, RMSprop consistently achieves 100% training accuracy, as shown in Table 6. SGD and AdamW fluctuate but remain robust, stabilizing at 88–91% testing accuracy. Global testing accuracy ranges from 85% to 87%, and global training accuracy rises from 90.7% to 94.6% by Round 15.

Last stabilization and peak performance phase (rounds 16–25)

In the final phase, client testing accuracy is approximately 87–90% for RMSprop, 88–91% for SGD, and 85–88% for AdamW. Client training accuracy remains at 100% for RMSprop, indicating that it continues to fit its local data completely, while SGD and AdamW maintain 94–97%. The peak global testing accuracy is 87.06% (Round 19), and the maximum global training accuracy is 96.45% (Round 22). By Round 25, the model has converged and generalizes well. The relatively narrow gap between training and testing performance suggests a good balance with little overfitting, as shown in Table 7, with global performance summarized in Table 8.

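The following metrics are used, where \(x_a\), \(x_b\), \(y_a\), and \(y_b\) denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.

Accuracy: The proportion of correct predictions among all predictions34,35,36.

\[\text{Accuracy} = \frac{x_a + x_b}{x_a + x_b + y_a + y_b}\]

Precision: The proportion of predicted positives that are actually positive34,35,36.

\[\text{Precision} = \frac{x_a}{x_a + y_a}\]

Recall: The proportion of actual positives that were correctly identified34,35,36.

\[\text{Recall} = \frac{x_a}{x_a + y_b}\]

Specificity: The proportion of actual negatives that were correctly identified as negative34.

\[\text{Specificity} = \frac{x_b}{x_b + y_a}\]

Misclassification rate: The proportion of all predictions that were wrong36.

\[\text{Misclassification rate} = \frac{y_a + y_b}{x_a + x_b + y_a + y_b}\]

F1 score: The harmonic mean of precision and recall34.

\[F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}\]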
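To show how these metrics follow from a confusion matrix, the plain-Python sketch below computes all six from the four counts (true positives, true negatives, false positives, and false negatives; xa, xb, ya, and yb in the notation above). Treating malignant as the positive class is an assumption, and the function name `binary_metrics` is hypothetical. As an illustration it is applied to the Client-1 training counts reported in the confusion-matrix discussion of this section.

```python
def binary_metrics(tp, tn, fp, fn):
    """Binary classification metrics, with malignant treated as the positive class."""
    total = tp + tn + fp + fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # also called sensitivity
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "misclassification_rate": (fp + fn) / total,
        "f1": 2 * precision * recall / (precision + recall),
    }

# Client-1 training counts: 344 malignant and 359 benign classified correctly,
# 16 benign predicted as malignant (FP), 9 malignant predicted as benign (FN).
m = binary_metrics(tp=344, tn=359, fp=16, fn=9)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```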

Client-wise training accuracy

Figure 9 shows the training accuracy across 25 federated learning (FL) rounds for three clients using different optimizers. Client 3 (RMSprop) achieved the highest accuracy of 100% by round 5. Clients 1 (AdamW) and 2 (SGD) stabilized at around 93–96% accuracy, demonstrating slower convergence.

Client-wise training loss

Figure 10 illustrates how the training loss for three clients, using different optimizers, decreased over 25 federated learning (FL) rounds. Client 3 (Green – RMSprop) exhibited faster convergence and more effective optimization, with a sharp initial drop in loss (from ~ 1.0 to < 0.1 by round 3) and consistently low loss throughout. Client 1 (Blue – AdamW) and Client 2 (Orange – SGD) showed higher initial loss and greater volatility, but their loss gradually decreased and stabilized around 0.1–0.2 over time.

Client-wise testing accuracy

Figure 11 shows the evolution of testing accuracy over 25 FL rounds for the three clients, each using a different optimizer. Client-2 (Orange – SGD) attained the best testing accuracy, consistently between 88% and 92%, and exhibited stable testing performance and strong generalization throughout training. Client-3 (Green – RMSprop) began with a sharp increase and stayed between 87% and 90%; despite some variation, its performance is steady and shows high test results and good generalization, although it lags behind Client-2 in later rounds. Client-1 (Blue – AdamW) started at a lower accuracy of about 55% and rapidly increased to 85–88%, showing greater variability and slower generalization than the other clients.

Client-wise testing loss

Figure 12 shows the testing loss for three clients across 25 federated learning (FL) rounds. Client 3 (Green – RMSprop) consistently maintains the lowest testing loss, typically between 0.2 and 0.4, indicating good generalization and model reliability. Client 1 (Blue – AdamW) exhibits moderate loss, generally between 0.4 and 0.7, with a slight upward trend, suggesting that its generalization error increases over rounds. By the final rounds, Client 2 (Orange – SGD) has the highest and most variable testing loss, exceeding 1.0, which suggests overfitting or unstable testing performance.

Client-wise accuracy across federated rounds

Figure 13 illustrates the accuracy progression of three clients over 25 federated learning (FL) rounds. Clients 1 and 2 improve steadily and stabilize at 90–96% accuracy, while Client 3 quickly reaches and maintains nearly 100% accuracy. These discrepancies suggest a non-IID data distribution across clients and may also reflect the different optimizers each client uses. Overall, all clients achieve high accuracy, demonstrating the effectiveness of FL with VGG19 for skin cancer detection while preserving data privacy.

Client-wise loss across federated rounds

Figure 14 shows the client-wise loss trends for skin cancer classification over 25 FL rounds. All three clients show a significant drop in loss during the initial rounds, indicating rapid model learning. Client 3 achieves the lowest and most consistent loss early on, approaching zero, which suggests better learning performance, possibly due to a more balanced or higher-quality local dataset. In contrast, clients 1 and 2 show more fluctuation and slightly higher loss values, which could result from local variance or non-IID data. Overall, the stable and decreasing loss for every client supports the effectiveness of the VGG19-based FL configuration.

Federated training accuracy over rounds

Figure 15 contrasts the global training and test accuracy over 25 FL rounds. The training accuracy (blue line) begins at about 87% and rises quickly to 94% by round 4, then varies slightly between 94% and 96%, indicating strong learning on the training data. The test accuracy (orange line) starts at about 85% and stays mostly constant across rounds, ranging from 84% to 87%. This gap between training and test performance indicates that, although the model learns the training data well, its generalization to unseen data is consistent but improves more slowly.

Confusion matrix

The Client-1 training confusion matrix in Fig. 16 shows how well Client-1's model performed on the training dataset for the binary classification task. The large number of correct predictions, with 359 benign and 344 malignant instances correctly classified, demonstrates strong learning. There were only a few misclassifications: sixteen benign samples were predicted as malignant, and nine malignant samples were predicted as benign. The low overall error rate indicates that the model has learned the training data well and distinguishes effectively between benign and malignant instances.

The Client-1 testing confusion matrix, as illustrated in Fig. 16, shows how well Client-1’s model performed on the testing dataset for a binary classification task. On unseen data, the model shows strong generalization. 8 malignant instances were mistakenly categorized as benign, and 17 benign cases were mistakenly labelled as malignant. Comparing it to the training set reveals, in Fig. 17, a slight generalization gap, even if the number of misclassifications is still rather low. The model appears to be dependable for accurately detecting both benign and malignant cases since it strikes a fair balance between sensitivity and specificity.

The confusion matrix in Fig. 18 shows how Client 2’s model performed on its training data for binary skin cancer classification. The model is highly accurate on the training data, with 11 false positives, in which benign samples were predicted as malignant.

There are also 15 false negatives, in which malignant cases were classified as benign. This gives 26 misclassifications in total. False negatives are particularly important to minimize in medical diagnosis, because a missed malignant case can have serious consequences.

Figure 19 shows the confusion matrix for Client 2’s model on the test dataset. The model generalizes well, with many correct predictions for both benign (83) and malignant (81) cases. It still makes some errors: 7 malignant samples were misclassified as benign (false negatives), and 12 benign samples were misclassified as malignant (false positives). These errors are relatively few, but they matter in a critical application such as medical diagnosis. Compared with the training phase, the test data show a somewhat higher tendency to label benign lesions as malignant.

Figure 20 shows the confusion matrix for Client 3’s model on its training dataset. The model correctly identified all 380 benign and all 348 malignant cases, making no classification errors on the training data. This perfect result shows that the model has fit its training data very closely. Taken together with the 24 errors on its test set (Fig. 21), it also suggests some degree of overfitting.

The confusion matrix in Fig. 21 shows how Client 3’s model performed on the test dataset. With 75 true positives and 84 true negatives, the model classifies well overall. However, it misclassified 8 malignant cases as benign (false negatives) and 16 benign cases as malignant (false positives). The model generally recognizes both benign and malignant lesions, but the false positive count in particular leaves room for improvement.

The confusion matrix in Fig. 22 shows the global model’s training performance in the FL configuration. The model correctly identified 1,368 benign and 1,246 malignant cases. Only a small fraction of samples were misclassified: 47 malignant lesions were predicted as benign, and 72 benign lesions were predicted as malignant. These error counts are of similar magnitude, so the model shows no strong bias towards either class. The low misclassification rate and high number of correct predictions indicate that the aggregated global model learns effectively from the combined distributed data during training.

The confusion matrix in Fig. 23 shows the global model’s performance on the testing dataset. The model classified most test samples correctly: 303 benign and 185 malignant cases were predicted accurately. It misclassified 19 malignant cases as benign and 57 benign cases as malignant. False positives (benign lesions predicted as malignant) therefore outnumber false negatives (malignant lesions predicted as benign), indicating a tendency to overpredict malignancy. In a diagnostic setting this is the less harmful error, because a false positive leads to further examination rather than a missed cancer. Overall, the global model generalizes well to unseen data and maintains a favorable balance between sensitivity and specificity, as reflected in its low overall error rate on the test set.
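The four counts in each confusion matrix are enough to recompute the headline metrics. Below is a minimal sketch that takes malignant as the positive class, so a false positive is a benign lesion predicted as malignant, matching the descriptions above. It is applied to the global test counts from Fig. 23. The class name `BinaryConfusion` is a hypothetical helper, not part of the study's code.

```python
from dataclasses import dataclass


@dataclass
class BinaryConfusion:
    """2x2 confusion matrix with malignant as the positive class."""
    tp: int  # malignant predicted as malignant
    tn: int  # benign predicted as benign
    fp: int  # benign predicted as malignant
    fn: int  # malignant predicted as benign

    @property
    def total(self) -> int:
        return self.tp + self.tn + self.fp + self.fn

    def metrics(self) -> dict:
        precision = self.tp / (self.tp + self.fp)
        recall = self.tp / (self.tp + self.fn)  # sensitivity
        specificity = self.tn / (self.tn + self.fp)
        accuracy = (self.tp + self.tn) / self.total
        f1 = 2 * precision * recall / (precision + recall)
        return {
            "accuracy": accuracy,
            "precision": precision,
            "recall": recall,
            "specificity": specificity,
            "misclassification": 1 - accuracy,
            "f1": f1,
        }


# Global model on the test set (counts from Fig. 23).
global_test = BinaryConfusion(tp=185, tn=303, fp=57, fn=19)
for name, value in global_test.metrics().items():
    print(f"{name:>17}: {value:.4f}")
```

With these counts, the sketch gives precision 185/242 ≈ 0.7645, recall 185/204 ≈ 0.9069, specificity 303/360 ≈ 0.8417, and misclassification 76/564 ≈ 0.1348. These match the global-model values quoted for Table 9. The accuracy is 488/564 ≈ 0.8652, and F1 simplifies to 2TP/(2TP + FP + FN) = 370/446 ≈ 0.8296.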

Table 9 presents a comprehensive overview of all evaluation metrics for each client (Client 1, Client 2, and Client 3) as well as for the globally aggregated federated learning (FL) model. Client 1 achieved training and testing accuracies of 96.57% and 86.34%, respectively, while Client 2 recorded 96.42% and 89.56%. Client 3 attained a training accuracy of 95.66% and a testing accuracy of 86.52%.

Clients 1, 2, and 3 reported training precision of 95.73%, 96.15%, and 100%, and test precision of 83.81%, 87.10%, and 82.42%, respectively. Precision therefore follows the same pattern as accuracy: very high on the training data and lower on the test data. The testing precision of the global FL model is 76.45%. Testing recall (sensitivity) ranges from 90.36% to 92.04% across clients, and the global model’s testing recall is 90.69%. In training, all clients have recall above 97%, with Client 3 reaching 100%.

During training, specificity exceeds 95% for all three clients and reaches 100% for Client 3, while testing specificity ranges from 80.46% to 87.37%. The global model achieves 95.00% specificity in training and 84.17% in testing. Misclassification rates remain low in training (0% to 3.57%). Testing misclassification ranges from 10.38% to 13.66%, with the global model at 13.48%.

F1 scores capture the balance between precision and recall. They exceed 0.96 for all clients in training, range from 0.8620 to 0.8950 on the test data, and reach 0.8293 for the global model. Table 9 thus offers a clear comparison between the clients and the federated model, showing consistently strong training performance and slightly lower but competitive testing results.
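For reference, the Table 9 metrics follow the standard confusion-matrix definitions. Malignant is taken as the positive class, which is the convention consistent with the false-positive and false-negative descriptions above:

```latex
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Precision} &= \frac{TP}{TP + FP}, \\
\text{Recall} &= \frac{TP}{TP + FN}, &
\text{Specificity} &= \frac{TN}{TN + FP}, \\
F_1 &= \frac{2\,\text{Precision}\cdot\text{Recall}}{\text{Precision} + \text{Recall}}
     = \frac{2\,TP}{2\,TP + FP + FN}.
\end{aligned}
```

As a worked check, Client 2's test counts from Fig. 19 are TP = 81, TN = 83, FP = 12, and FN = 7. They give precision 81/93 ≈ 0.8710, recall 81/88 ≈ 0.9205, specificity 83/95 ≈ 0.8737, and F1 = 162/181 ≈ 0.8950. These agree with Client 2's test precision of 87.10%, with the upper ends of the testing specificity (87.37%) and F1 (0.8950) ranges, and, to within rounding, with the upper end of the testing recall range.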

Table 10 compares various studies on federated learning (FL) and deep learning (DL) approaches for cancer detection. While most previous studies, including those using MobileNet, ResNet, and lesion segmentation techniques, achieved accuracies between 83% and 85%, they still exhibited relatively high misclassification rates. Moreover, interpretability was limited, as most approaches did not incorporate explainable AI (XAI).

In contrast, the proposed VGG19 with FL reaches an accuracy of 86.52% and also integrates XAI, which makes its predictions transparent and easier to interpret. By combining higher accuracy, fewer misclassifications, and explainability, the proposed approach compares favorably with the prior methods listed in Table 10, providing a more reliable and interpretable model for cancer detection.

Conclusion & future work

This study presents a comprehensive approach to skin cancer detection that integrates explainable AI (XAI), privacy-preserving federated learning (FL), and deep learning (DL). Using a transfer learning (TL) model based on VGG19, we achieved high accuracy in classifying skin lesions into the two target classes, malignant and benign. The FL system enabled decentralized training across three simulated clients without exchanging raw patient data, protecting privacy while maintaining model performance. Grad-CAM was used to visually explain the model’s predictions, providing transparency and interpretability for clinical decision-makers. Overall, the results demonstrate that combining XAI with federated DL offers an effective and ethically sound solution for dermatological diagnosis.
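Grad-CAM produces its heatmap in three steps:
1. It weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the class score.
2. It sums the weighted maps.
3. It keeps only the positive evidence.

Below is a minimal NumPy sketch of that final computation. It assumes the activations and gradients have already been extracted from the network. For VGG19 these typically come from the last convolutional layer, which the Keras implementation names `block5_conv4`; the layer used in this study is not stated here. The function name `grad_cam` and the toy arrays are illustrative.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Compute a Grad-CAM heatmap for a single image.

    activations: (H, W, K) feature maps A^k from one convolutional layer.
    gradients:   (H, W, K) gradients of the target class score y^c w.r.t. A^k.
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    # Channel weights alpha_k^c: global average pooling of the gradients.
    weights = gradients.mean(axis=(0, 1))         # shape (K,)
    # Weighted sum over channels, then ReLU keeps only positive evidence.
    cam = np.maximum(activations @ weights, 0.0)  # shape (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example: two 3x3 feature maps; channel 0 supports the class, channel 1 opposes it.
acts = np.zeros((3, 3, 2))
acts[0, 0, 0] = 1.0  # channel 0 fires in the top-left corner
acts[2, 2, 1] = 1.0  # channel 1 fires in the bottom-right corner
grads = np.stack([np.ones((3, 3)), -np.ones((3, 3))], axis=-1)
heatmap = grad_cam(acts, grads)
print(heatmap)  # only the top-left cell is highlighted
```

In a full pipeline, the resulting map is upsampled to the input resolution and overlaid on the lesion image. For a 224×224 input, VGG19's last convolutional layer produces a 14×14 map.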

In the future, generalizability could be validated by extending the current simulated federated setup to real-world federated environments that incorporate data from multiple dermatology clinics or hospitals. More advanced FL approaches, such as FedProx, personalized FL, or clustered FL, could be explored to handle non-IID data distributions and improve consistency across diverse datasets. Other architectures could be evaluated in terms of both accuracy and computational cost. These include efficient convolutional models such as EfficientNet and transformer-based models such as Vision Transformers (ViTs) and Swin Transformers. Combining FL with differential privacy or homomorphic encryption could further secure model updates and reduce the risk of information leakage. Additionally, other explainability techniques could be applied to assess and improve interpretability, such as Integrated Gradients, SHAP (Shapley Additive Explanations), or LIME (Local Interpretable Model-agnostic Explanations). To increase clinical relevance, the model could also be extended to classify multiple types of skin lesions (e.g., melanoma, basal cell carcinoma, seborrhoeic keratosis) rather than being limited to binary classification.
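For the FedProx direction mentioned above, the change relative to FedAvg is confined to the local objective. Each client k approximately minimizes its local loss F_k plus a proximal term that keeps its weights close to the current global model w^t, where μ ≥ 0 sets the strength; μ = 0 recovers the FedAvg local update. Aggregation is shown here as the same sample-weighted average FedAvg uses, with n_k local samples per client. This is one common choice, stated as an assumption rather than a prescription.

```latex
\begin{aligned}
w_k^{t+1} &\approx \arg\min_{w}\; F_k(w) + \frac{\mu}{2}\,\lVert w - w^{t} \rVert_2^2, \\
w^{t+1} &= \sum_{k=1}^{K} \frac{n_k}{n}\, w_k^{t+1}, \qquad n = \sum_{k=1}^{K} n_k .
\end{aligned}
```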

Data availability

The data used to support the findings of this study are available from the corresponding authors upon request.

References

Siegel, R. L., Giaquinto, A. N. & Jemal, A. Cancer statistics, 2024. CA Cancer J. Clin. 74 (1), 12–49. https://doi.org/10.3322/caac.21820 (2024).

Ain, Q. U. et al. Privacy-aware collaborative learning for skin cancer prediction. Diagnostics 13 (13), 2264. https://doi.org/10.3390/diagnostics13132264 (2023).

Esteva, A. et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 542 (7639), 115–118. https://doi.org/10.1038/nature21056 (2017).

Zafar, M. et al. Skin lesion analysis and cancer detection based on machine/deep learning techniques: A comprehensive survey. Life 13 (1), 146. https://doi.org/10.3390/life13010146 (2023).

Bindhu, A. & Thanammal, K. K. Segmentation of skin cancer using fuzzy U-network via deep learning. Sensors 26, 100677 (2023).

Prouteau, A. & André, C. Canine melanomas as models for human melanomas: clinical, histological, and genetic comparison. Genes 10 (7), 501 (2019).

Tajbakhsh, N. et al. Convolutional neural networks for medical image analysis: full training or fine tuning? IEEE Trans. Med. Imaging. 35 (5), 1299–1312. https://doi.org/10.1109/TMI.2016.2535302 (2016).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556. https://doi.org/10.48550/arXiv.1409.1556 (2015).

Codella, N. et al. Skin lesion analysis toward melanoma detection 2018: A challenge hosted by the international skin imaging collaboration (ISIC). arXiv preprint arXiv:1902.03368 (2019).

Yaqoob, M. M. et al. Symmetry in privacy-based healthcare: a review of skin cancer detection and classification using federated learning. Symmetry 15 (7), 1369 (2023).

Ali, A., Khan, M. A. & Choi, H. Hydrogen storage prediction in dibenzyltoluene as liquid organic hydrogen carrier empowered with weighted federated machine learning. Mathematics 10 (20), 3846 (2022).

Ali, A., Khan, M. A., Abbas, N. & Choi, H. Prediction of hydrogen storage in dibenzyltoluene empowered with machine learning. J. Energy Storage. 55, 105844 (2022).

Ali, Y., Han, K. H., Majeed, A., Lim, J. S. & Hwang, S. O. An optimal Two-Step approach for defense against poisoning attacks in federated learning. IEEE Access. https://doi.org/10.1109/ACCESS.2025.3556906 (2025).

Majeed, A. & Hwang, S. O. A multifaceted survey on federated learning: fundamentals, paradigm shifts, practical issues, recent developments, partnerships, trade-offs, trustworthiness, and ways forward. IEEE Access. 12, 84643–84679 (2024).

Hwang, S. O. & Majeed, A. Analysis of federated learning paradigm in medical domain: taking COVID-19 as an application use case. Appl. Sci. 14 (10), 4100 (2024).

Deebak, B. D. & Hwang, S. O. Federated learning-based lightweight two-factor authentication framework with privacy preservation for mobile sink in the social IoMT. Electronics 12 (5), 1250 (2023).

Rasheed, A. et al. Automatic eczema classification in clinical images based on a hybrid deep neural network. Comput. Biol. Med. 147, 105807 (2022).

Srinivasu, P. N. et al. Classification of skin disease using deep learning neural networks with MobileNet V2 and LSTM. Sensors 21 (8), 2852 (2021).

Jayapriya, K. & Jacob, I. J. Hybrid fully convolutional networks-based skin lesion segmentation and melanoma detection using deep features. Int. J. Imaging Syst. Technol. 30 (2), 348–357 (2020).

Dutta, A., Kamrul Hasan, M. & Ahmad, M. Skin lesion classification using a convolutional neural network for melanoma recognition. In Proceedings of International Joint Conference on Advances in Computational Intelligence: IJCACI 2020 55–66 (Springer Singapore, 2021).

Ding, J., Song, J., Li, J., Tang, J. & Guo, F. Two-stage deep neural network via ensemble learning for melanoma classification. Front. Bioeng. Biotechnol. 9, 758495 (2022).

Lee, H. et al. Federated learning for thyroid ultrasound image analysis to protect personal information: validation study in a real health care environment. JMIR Med. Inf. 9 (5), e25869 (2021).

Agbley, B. L. Y. et al. Multimodal melanoma detection with federated learning. In 2021 18th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP) 238–244 (IEEE, 2021).

Gouda, W., Sama, N. U., Al-Waakid, G., Humayun, M. & Jhanjhi, N. Z. Detection of skin cancer based on skin lesion images using deep learning. Healthcare 10 (7), 1183 (2022).

Sae-Lim, W., Wettayaprasit, W. & Aiyarak, P. Convolutional neural networks using MobileNet for skin lesion classification. In 2019 16th International Joint Conference on Computer Science and Software Engineering (JCSSE) 242–247 (IEEE, 2019). https://doi.org/10.1109/JCSSE.2019.8864155

Ozdemir, B. & Pacal, I. A robust deep learning framework for multiclass skin cancer classification. Sci. Rep. 15 (1), 4938 (2025).

https://www.kaggle.com/datasets/rm1000/skin-cancer-isic-images

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556. https://doi.org/10.48550/arXiv.1409.1556 (2014).

McMahan, B., Moore, E., Ramage, D., Hampson, S. & y Arcas, B. A. Communication-efficient learning of deep networks from decentralized data. In Artificial Intelligence and Statistics 1273–1282 (PMLR, 2017).

Tieleman, T. Lecture 6.5-rmsprop: divide the gradient by a running average of its recent magnitude. COURSERA: Neural Networks Mach. Learn. 4 (2), 26 (2012).

Loshchilov, I. & Hutter, F. Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101 (2017).

Selvaraju, R. R. et al. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision 618–626 (2017).

Powers, D. M. Evaluation: from precision, recall and F-measure to ROC, informedness, markedness, and correlation. arXiv preprint arXiv:2010.16061 (2020).

Nasir, M. U. et al. IoMT-based osteosarcoma cancer detection in histopathology images using transfer learning empowered with blockchain, fog computing, and edge computing. Sensors 22 (14), 5444 (2022).

Nasir, M. U. et al. Multiclass classification of thalassemia types using complete blood count and HPLC data with machine learning. Sci. Rep. 15 (1), 26379 (2025).

Funding

This study received no funding.

Author information

Contributions

Study conception and design: Naila Sammar Naz, Muhammad Hassaan Mehmood, Fahad Ahmed, and Munir Ahmad; data collection: Naila Sammar Naz and Muhammad Hassaan Mehmood; analysis and interpretation of results: Salman Muneer, Ateeq Ur Rehman, Waleed M. Ismael, and Khan Muhammad Adnan; draft manuscript preparation: Fahad Ahmed and Munir Ahmad; Supervision: Ateeq Ur Rehman, Waleed M. Ismael, and Khan Muhammad Adnan. All authors reviewed the results and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Naz, N.S., Mehmood, M.H., Ahmed, F. et al. Privacy preserving skin cancer diagnosis through federated deep learning and explainable AI. Sci Rep 15, 36094 (2025). https://doi.org/10.1038/s41598-025-19905-8

DOI: https://doi.org/10.1038/s41598-025-19905-8