Abstract

Lacosamide, a widely used antiepileptic drug, suffers from poor solubility in conventional solvents, which limits its bioavailability. Supercritical carbon dioxide (SC-CO₂) has emerged as an environmentally friendly alternative solvent for pharmaceutical processing. In this study, the solubility of Lacosamide in SC-CO₂ was modeled and predicted using several machine learning techniques: Gradient Boosting Decision Tree (GBDT), Multilayer Perceptron (MLP), Random Forest (RF), Gaussian Process Regression (GPR), Extreme Gradient Boosting (XG Boost), and Polynomial Regression (PR). These models can capture nonlinear relationships. Experimental solubility data spanning a wide range of pressures and temperatures were used for model training and validation. All applied models provided reliable predictions, with GBDT (R2 = 0.9989), XG Boost (R2 = 0.9986), and MLP (R2 = 0.9975) achieving the highest coefficients of determination (R2). Overall, combining experimental data with advanced machine learning algorithms offers a powerful approach for predicting and optimizing drug solubility in supercritical systems, thereby facilitating the design of scalable pharmaceutical processes.

Introduction

Lacosamide is a modern antiepileptic drug, primarily indicated for partial-onset seizures and diabetic neuropathy. It exerts its therapeutic effect by preferentially enhancing the slow inactivation of voltage-gated sodium channels, thereby stabilizing neuronal membranes and preventing repetitive abnormal discharges. Compared to other antiepileptic drugs, Lacosamide offers predictable pharmacokinetics, high oral bioavailability, and limited drug–drug interactions, making it an effective option in both monotherapy and adjunctive therapy1,2,3.

Micronization of poorly soluble pharmaceutical drugs is a well-established strategy to enhance their oral bioavailability. Smaller particles provide a larger specific surface area, which accelerates dissolution according to the Noyes–Whitney equation, thereby increasing the concentration gradient across the gastrointestinal membrane and facilitating faster absorption. Moreover, nanoparticles can improve wetting properties, enhance cohesion, and in some cases enter cells via endocytosis, further promoting systemic exposure. Several studies have confirmed that nanosizing approaches such as nanocrystals, nanosuspensions, and supercritical fluid methods significantly enhance the dissolution and bioavailability of drugs with low aqueous solubility4,5,6,7,8. One method of particle size reduction is the use of SC-CO₂. When a supercritical method is chosen, the solubility of the drug substance in SC-CO₂ must be determined either experimentally or by modeling. The solubility of many drugs in SC-CO₂ has been measured experimentally and reported9,10,11,12,13,14,15,16.

However, laboratory methods for measuring the solubility of pharmaceuticals in SC-CO₂ are costly and time-consuming. Empirical and semi-empirical density-based models and models based on equations of state can be suitable alternatives to laboratory methods if they have acceptable accuracy. The prediction of pharmaceutical solubility in SC-CO₂ using classical equations of state (EOS), such as Peng–Robinson or Soave–Redlich–Kwong, remains challenging due to several intrinsic limitations. EOS models primarily account for bulk phase interactions and van der Waals forces, yet they often fail to capture specific molecular interactions such as hydrogen bonding, polarity effects, or functional group contributions that are critical for complex drug molecules. Additionally, the accuracy of EOS decreases near the critical point of CO₂, where small variations in temperature or pressure lead to large, nonlinear changes in solvent density and solvation capacity. Classical EOS also do not incorporate the solid-state properties of the drug, such as particle size, crystallinity, or surface energy, which strongly influence solubility, especially for nanosized or poorly crystalline pharmaceuticals. Moreover, most EOS formulations require compound-specific interaction parameters, which are frequently unavailable for novel drugs, limiting their predictive capability. Consequently, while EOS provide a thermodynamically consistent framework, their application to SC-CO₂ solubility often necessitates empirical corrections or hybrid approaches17,18.

Machine learning (ML) offers significant advantages over traditional empirical, semi-empirical, and equation-of-state (EOS) models for predicting pharmaceutical solubility in SC-CO₂. Unlike conventional approaches, ML models can learn complex, nonlinear relationships between multiple variables such as temperature, pressure, solvent density, molecular descriptors, and solid-state properties without requiring explicit formulation of thermodynamic equations. This capability allows for highly accurate predictions even in regions where EOS fail, such as near the critical point of CO₂ or for drugs with polar or complex molecular structures. Furthermore, ML models can generalize across different compounds and experimental conditions once trained on sufficient datasets, reducing the need for extensive new experimental measurements.

The solubility of oxycodone hydrochloride19, busulfan20, anti-cancer drugs21, tacrolimus22, rifampin23, benzodiazepines24, fludrocortisone acetate25, lornoxicam26, and capecitabine27 in SC-CO2 has been modeled using various machine learning methods. In this study, the solubility of Lacosamide in SC-CO2 was modeled using the Gradient Boosting Decision Tree (GBDT), Multilayer Perceptron (MLP), Random Forest (RF), Gaussian Process Regression (GPR), Extreme Gradient Boosting (XG Boost), and Polynomial Regression (PR) machine learning methods. Of the laboratory data, 80% was used to train the models and 20% was used for validation. The mean squared error (MSE), mean absolute error (MAE), standard deviation (SD), and coefficient of determination (R2) were used to assess the accuracy of the models in estimating solubility.

Theory and methodology

Solubility data

The laboratory data required for training and testing the models were taken from previous work28 and are displayed in Table 1. In that work, the mole fraction of Lacosamide was measured experimentally at different temperatures, pressures, and corresponding carbon dioxide densities.

Machine learning models

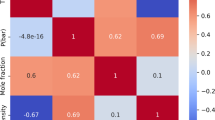

Three experimental parameters were used as input features for all machine learning models: temperature (T, K), pressure (P, MPa), and CO2 density (ρ, kg m–3). The dataset comprised 28 experimental samples obtained under strictly controlled thermodynamic conditions, covering four temperature levels (308, 318, 328, and 338 K) and seven pressure levels for each temperature (12–30 MPa), corresponding to a CO2 density range of 384.2–929.7 kg m–3. The prediction target was the solubility of Lacosamide in supercritical CO2, expressed as the dimensionless mole fraction (y2).

Each experimental point represented the mean of three replicate measurements to ensure repeatability and minimize random error. The dataset exhibited excellent internal consistency with no missing, duplicate, or anomalous entries; therefore, no additional preprocessing or outlier-removal procedures (e.g., IQR/Z-score filtering, normalization, or transformation) were required.

A simple train–test split (80% training, 20% testing) was employed for performance evaluation. To ensure representative thermodynamic coverage in both subsets, different data-splitting strategies (e.g., stratified or random sampling) were applied depending on the model type.

Gradient boosting decision tree (GBDT)

Gradient Boosting Decision Trees (GBDT) constitute an ensemble learning framework in which each successive decision tree is fitted, in a stage-wise fashion, to the residual errors of the current ensemble or, more generally, to the negative gradient of a chosen differentiable loss function. This iterative construction allows the ensemble to progressively refine its predictive power by addressing the shortcomings of preceding learners29. The approach has proved effective in financial applications such as credit scoring, where GBDT has been reported to deliver high accuracy and computational efficiency compared to conventional models such as logistic regression or random forests by focusing on bias reduction through sequential refinement. To enhance robustness in real-world scenarios with imbalanced classes, variants of GBDT have been proposed that incorporate data augmentation strategies for minority classes, such as SMOTE; in lithology identification tasks, for example, GBDT combined with SMOTE significantly outperforms baseline methods30.
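The stage-wise idea described above can be illustrated with a minimal sketch. It assumes squared-error loss, for which the negative gradient equals the residual, and it uses depth-1 trees (stumps) on a single input for brevity, whereas the model in this study used trees of depth 3 on three inputs. The toy data and all function names are hypothetical and are not taken from the study.

```python
# Toy gradient boosting with squared-error loss and depth-1 trees (stumps).
# For squared error, the negative gradient is simply the residual y - F(x).

def fit_stump(x, r):
    """Find the split threshold and two leaf values that best fit r."""
    best = None
    xs = sorted(set(x))
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2.0
        left = [ri for xi, ri in zip(x, r) if xi <= t]
        right = [ri for xi, ri in zip(x, r) if xi > t]
        lv = sum(left) / len(left)
        rv = sum(right) / len(right)
        sse = sum((ri - lv) ** 2 for ri in left) + sum((ri - rv) ** 2 for ri in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    return best[1:]


def predict_stump(stump, xi):
    t, lv, rv = stump
    return lv if xi <= t else rv


def fit_gbdt(x, y, n_trees=100, learning_rate=0.1):
    """Stage-wise fit: every new stump is trained on the current residuals."""
    f0 = sum(y) / len(y)  # initial constant prediction
    pred = [f0] * len(y)
    stumps = []
    for _ in range(n_trees):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        pred = [pi + learning_rate * predict_stump(stump, xi)
                for xi, pi in zip(x, pred)]
    return {"f0": f0, "lr": learning_rate, "stumps": stumps}


def predict_gbdt(model, xi):
    boost = sum(predict_stump(s, xi) for s in model["stumps"])
    return model["f0"] + model["lr"] * boost


def mean_squared_error(y_true, y_pred):
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


# Hypothetical toy data (NOT the Lacosamide measurements).
x = [12.0, 15.0, 18.0, 21.0, 24.0, 27.0, 30.0]
y = [(xi / 30.0) ** 3 for xi in x]

baseline_mse = mean_squared_error(y, [sum(y) / len(y)] * len(y))
model = fit_gbdt(x, y)  # 100 trees and learning rate 0.1, as in the text
boosted_mse = mean_squared_error(y, [predict_gbdt(model, xi) for xi in x])
print(baseline_mse, boosted_mse)
```

Each round shrinks the training residuals a little, which is why the learning rate and the number of trees are usually tuned together.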

A random train–test split with an 80%/20% ratio was applied using a fixed random seed (42) to ensure reproducibility. GBDT models were trained directly on physical feature values without explicit scaling, as tree-based algorithms are generally scale-invariant. Hyperparameter optimization was performed using RandomizedSearchCV (20 iterations, 3-fold cross-validation). The finalized model consisted of 100 trees, a maximum depth of 3, and a learning rate of 0.1.
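A seeded random split of this kind can be sketched with the standard library alone. The sketch assumes that the test-set size is rounded up, which gives 6 test and 22 training samples for 28 points; it does not reproduce the exact shuffling of any particular library, so the selected indices will differ from those used in the study.

```python
import math
import random


def random_split(n_samples, test_fraction=0.2, seed=42):
    """Shuffle sample indices with a fixed seed and cut off the test part."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # ASSUMPTION: the test size is rounded up (5.6 -> 6 for 28 samples).
    n_test = math.ceil(n_samples * test_fraction)
    return sorted(indices[n_test:]), sorted(indices[:n_test])


train_idx, test_idx = random_split(28)
print(len(train_idx), len(test_idx))
```

Fixing the seed makes the partition repeatable, so that differences between runs reflect the model settings and not the split.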

Multilayer perceptron (MLP)

A multilayer perceptron (MLP) is a traditional feedforward artificial neural network architecture that consists of an input layer, one or more hidden layers, and an output layer, in which each neuron is fully connected to the neurons of the following layer and employs a nonlinear activation function, such as the sigmoid or rectified linear unit. MLPs are trained using the backpropagation algorithm in conjunction with gradient descent to iteratively adjust weights and biases by propagating error signals backward through the network. A cornerstone in neural network theory, MLPs are universal function approximators: given at least one hidden layer and a non-polynomial activation function, they can approximate any continuous function on a compact domain to arbitrary precision. This theoretical foundation underscores the versatility of MLPs in modeling complex, nonlinear relationships across domains such as classification, regression, and function approximation31.

A stratified sampling approach based on temperature was employed to ensure proportional representation of all four temperature levels in both the training and test sets. Accordingly, 80% of the data (22 samples) were used for training and 20% (6 samples) for validation. This strategy maintained balanced physical coverage and prevented omission of any temperature condition during model training.
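A temperature-stratified split can be sketched as follows. The allocation rule (one test point per isotherm, with the remaining test points assigned to randomly chosen isotherms) is an assumption made for illustration, since the text states only the resulting 22/6 partition; the function name and seed are likewise hypothetical.

```python
import math
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=42):
    """Split indices so that every stratum appears in both subsets."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for idx, label in enumerate(labels):
        groups[label].append(idx)

    n_test_total = math.ceil(len(labels) * test_fraction)
    # Floor allocation per stratum (1 of 7 points per isotherm here).
    quota = {lab: int(len(members) * test_fraction)
             for lab, members in groups.items()}
    # ASSUMPTION: leftover test points go to randomly chosen strata.
    leftover = n_test_total - sum(quota.values())
    for lab in rng.sample(sorted(groups), leftover):
        quota[lab] += 1

    test = []
    for lab, members in groups.items():
        test.extend(rng.sample(members, quota[lab]))
    test_set = set(test)
    train = [i for i in range(len(labels)) if i not in test_set]
    return train, sorted(test)


# Four isotherms with seven pressure points each, as described in the text.
temperature_labels = [T for T in (308, 318, 328, 338) for _ in range(7)]
train_idx, test_idx = stratified_split(temperature_labels)
print(len(train_idx), len(test_idx))
```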

MLP models used MinMax scaling (0–1 range) for both input features and the target variable to improve convergence when handling small mole-fraction values. The network architecture consisted of two hidden layers with 10 and 6 neurons, respectively, and a single output neuron. The tanh activation function was applied in all hidden layers, and the model was trained using the LBFGS solver, a quasi-Newton method that determines its step size internally through a line search and therefore does not use a user-adjustable learning rate.
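MinMax scaling and its inverse can be written in a few lines. The sketch below uses hypothetical mole-fraction values, not the measured data, and fits the scaling limits on the values it is given; in practice the limits would typically be fitted on the training subset only and then reused for the test subset.

```python
def fit_minmax(values):
    """Return the (min, max) pair that defines the scaling."""
    return min(values), max(values)


def minmax_transform(values, lo, hi):
    """Map values linearly so that lo -> 0 and hi -> 1."""
    return [(v - lo) / (hi - lo) for v in values]


def minmax_inverse(scaled, lo, hi):
    """Map scaled values back to the original physical units."""
    return [s * (hi - lo) + lo for s in scaled]


# Hypothetical mole fractions (NOT the measured Lacosamide values).
y2 = [2.0e-5, 5.0e-5, 1.1e-4, 3.0e-4]

lo, hi = fit_minmax(y2)
y2_scaled = minmax_transform(y2, lo, hi)
y2_restored = minmax_inverse(y2_scaled, lo, hi)
print(y2_scaled)
```

Predictions made on the scaled target must be passed through the inverse mapping before error metrics are reported in mole-fraction units.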

Random forest (RF)

The Random Forest (RF) algorithm is an ensemble machine learning method based on decision trees, used for both classification and regression tasks. In this approach, multiple decision trees are constructed using random sampling of the data (bootstrap sampling) and a random subset of features at each split. The final prediction is determined by aggregating the outputs of all trees, using majority voting for classification and averaging for regression. This method reduces overfitting, improves robustness to data variability, and allows the assessment of feature importance32.
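The two ingredients named above, bootstrap sampling and averaging, can be sketched with simple base learners. The sketch assumes a single input and depth-1 trees, so the random feature subsetting at each split is omitted; the toy data and function names are hypothetical.

```python
import random


def fit_stump(x, y):
    """Least-squares depth-1 tree; falls back to a constant if no split exists."""
    mean_y = sum(y) / len(y)
    best = (float("inf"), None, mean_y, mean_y)
    xs = sorted(set(x))
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2.0
        left = [yi for xi, yi in zip(x, y) if xi <= t]
        right = [yi for xi, yi in zip(x, y) if xi > t]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((v - lv) ** 2 for v in left) + sum((v - rv) ** 2 for v in right)
        if sse < best[0]:
            best = (sse, t, lv, rv)
    return best[1:]


def predict_stump(stump, xi):
    t, lv, rv = stump
    if t is None:  # constant fallback
        return lv
    return lv if xi <= t else rv


def fit_forest(x, y, n_trees=50, seed=42):
    """Train each tree on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(x)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(fit_stump([x[i] for i in idx], [y[i] for i in idx]))
    return forest


def predict_forest(forest, xi):
    """Regression output is the average over all trees."""
    return sum(predict_stump(s, xi) for s in forest) / len(forest)


# Hypothetical toy data (NOT the Lacosamide measurements).
x = [12.0, 15.0, 18.0, 21.0, 24.0, 27.0, 30.0]
y = [(xi / 30.0) ** 3 for xi in x]

forest = fit_forest(x, y)
prediction = predict_forest(forest, 20.0)
print(prediction)
```

Because every tree output is an average of observed targets, the ensemble prediction cannot leave the range of the training targets, which is one reason tree ensembles do not extrapolate.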

Random Forest (RF) models employed StandardScaler to standardize input features, ensuring balanced numerical ranges for engineered polynomial and interaction terms. The RF ensemble consisted of 2000 trees with a maximum depth of 20, and max_features = “sqrt”.

Hyperparameter optimization was performed using GridSearchCV with 5-fold cross-validation, applying the R2 metric as the performance criterion.

Gaussian process regression (GPR)

Gaussian Process Regression (GPR) is a non-parametric, probabilistic machine learning method employed for regression analysis that provides not only predictions but also quantified uncertainty estimates. In GPR, a Gaussian process, defined as a collection of random variables any finite number of which are jointly Gaussian distributed, is used as a prior over functions. The mapping between inputs and outputs is represented by specifying a mean function (commonly set to zero) and a covariance function, or kernel, which encodes prior assumptions regarding properties such as smoothness, periodicity, and other structural characteristics of the function. Given training data, GPR computes the posterior distribution over functions, allowing predictions at new points to include both a mean estimate and a confidence interval. Its flexibility and ability to capture complex, nonlinear relationships make it valuable in fields such as engineering, geostatistics, and robotics, though its computational cost scales cubically with the number of training points, which can limit its application to very large datasets. Commonly employed kernels include the Radial Basis Function (RBF), Matérn, and periodic kernels, each controlling the function’s smoothness and correlation structure33.
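For reference, the posterior described above takes a closed form in the standard textbook setting of a zero mean function and independent Gaussian noise. The symbols are introduced here for illustration and do not appear elsewhere in the text: \(K\) is the kernel matrix of the training inputs, \(\mathbf{k}_*\) is the vector of kernel values between a new input \(\mathbf{x}_*\) and the training inputs, \(\mathbf{y}\) is the vector of training targets, and \(\sigma_n^2\) is the noise variance.

\[
\bar{f}_* = \mathbf{k}_*^{\top} \left( K + \sigma_n^2 I \right)^{-1} \mathbf{y},
\qquad
\mathrm{Var}[f_*] = k(\mathbf{x}_*, \mathbf{x}_*) - \mathbf{k}_*^{\top} \left( K + \sigma_n^2 I \right)^{-1} \mathbf{k}_*
\]

The matrix inverse in both expressions is the source of the cubic scaling with the number of training points.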

For the GPR model, an 80%/20% random train-test split was used with a fixed random seed (42) to ensure reproducibility. Input features were standardized using z-score scaling, and the target variable was log-transformed to enhance kernel stability. The model employed an anisotropic RBF kernel with length scales [1000, 1.54, 3.27] for temperature (T), pressure (P), and CO₂ density (ρ), combined with a WhiteKernel for noise correction. Kernel hyperparameters were optimized through 20 restarts of log-marginal likelihood maximization.
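The anisotropic RBF kernel can be sketched as below, using the common squared-exponential form with one length scale per input. The length scales are those quoted in the text; the assumption that they act on the standardized features, the example input vectors, and the function name are all illustrative.

```python
import math

# Length scales quoted in the text for (T, P, rho).
# ASSUMPTION: they apply to the standardized (z-scored) features.
LENGTH_SCALES = [1000.0, 1.54, 3.27]


def rbf_anisotropic(a, b, length_scales=LENGTH_SCALES):
    """Squared-exponential kernel with a separate length scale per input."""
    sq_dist = sum(((ai - bi) / ls) ** 2
                  for ai, bi, ls in zip(a, b, length_scales))
    return math.exp(-0.5 * sq_dist)


origin = [0.0, 0.0, 0.0]
shift_T = [1.0, 0.0, 0.0]    # one standard deviation in temperature
shift_P = [0.0, 1.0, 0.0]    # one standard deviation in pressure
shift_rho = [0.0, 0.0, 1.0]  # one standard deviation in density

k_T = rbf_anisotropic(origin, shift_T)
k_P = rbf_anisotropic(origin, shift_P)
k_rho = rbf_anisotropic(origin, shift_rho)
print(k_T, k_P, k_rho)
```

Under this reading, a shift in temperature alone leaves the kernel value almost at 1, which would suggest that the fitted model relies mainly on pressure and density, with the temperature effect entering largely through the density.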

Extreme gradient boosting (XG Boost)

Extreme Gradient Boosting (XG Boost) is a high-performance ensemble learning algorithm that extends the gradient boosting framework through advanced regularization (both L1 and L2), second-order Taylor approximation of the loss function, and system-level optimizations such as parallel processing and cache-efficient computation. These enhancements significantly boost both predictive accuracy and computational efficiency in a variety of domains34.
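The second-order approximation and the regularization mentioned above can be written compactly. In the standard formulation of the algorithm, with notation introduced here for illustration, the objective minimized when adding the \(t\)-th tree \(f_t\) is approximately

\[
\mathcal{L}^{(t)} \approx \sum_{i=1}^{n} \left[ g_i f_t(\mathbf{x}_i) + \tfrac{1}{2} h_i f_t(\mathbf{x}_i)^2 \right] + \Omega(f_t),
\qquad
\Omega(f_t) = \gamma J + \tfrac{1}{2} \lambda \sum_{j=1}^{J} w_j^2 + \alpha \sum_{j=1}^{J} |w_j|
\]

where \(g_i\) and \(h_i\) are the first and second derivatives of the loss with respect to the previous prediction for sample \(i\), \(J\) is the number of leaves, \(w_j\) is the weight of leaf \(j\), and \(\gamma\), \(\lambda\), and \(\alpha\) are the complexity, L2, and L1 penalties. With \(\alpha = 0\), the optimal weight of leaf \(j\) is

\[
w_j^{*} = - \frac{\sum_{i \in I_j} g_i}{\sum_{i \in I_j} h_i + \lambda}
\]

where \(I_j\) is the set of samples assigned to leaf \(j\). For squared-error loss, \(h_i\) is constant, so the leaf weight reduces to a shrunken mean of the residuals in that leaf.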

For the XG Boost model, an 80%/20% random train-test split was applied with a fixed random seed (42) to ensure reproducibility. Input features were standardized, and target values were log-transformed to improve gradient convergence. Hyperparameters were optimized using GridSearchCV with 5-fold cross-validation and RMSE as the scoring metric. The final model employed 200 estimators, a maximum depth of 7, a learning rate of 0.1, subsample = 1.0, colsample_bytree = 1.0, and objective = “reg:squarederror”.

Polynomial regression (PR)

Polynomial Regression (PR) is a flexible yet interpretable extension of linear regression that models relationships between variables using polynomial terms (e.g., \(x^2\), \(x^3\)) while remaining linear in its coefficients and estimable via ordinary least squares. This approach enables fitting curvilinear trends effectively, though higher-degree polynomials can increase the risks of overfitting and multicollinearity unless managed carefully (e.g., via lower-degree models or regularization)35.

A random train-test split with an 80%/20% ratio was applied, using a fixed random seed (42) for reproducibility. Several feature scaling methods (Standard, Robust, and MinMax) were tested, and the one yielding the best cross-validation performance was selected. Polynomial degrees from 1 to 4 were evaluated, and the optimal degree (1) was determined based on 5-fold cross-validation.
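The growth of the feature set with polynomial degree can be made concrete with a short sketch that enumerates all monomials of the three inputs. The feature names and the function are hypothetical, and the intercept is not counted.

```python
from itertools import combinations_with_replacement


def polynomial_terms(names, degree):
    """List every monomial of the inputs up to the given total degree."""
    terms = []
    for d in range(1, degree + 1):
        for combo in combinations_with_replacement(names, d):
            terms.append("*".join(combo))
    return terms


features = ["T", "P", "rho"]
term_counts = {d: len(polynomial_terms(features, d)) for d in range(1, 5)}
print(term_counts)  # {1: 3, 2: 9, 3: 19, 4: 34}
```

With roughly 22 training samples, degree 3 already requires almost as many coefficients as there are data points and degree 4 requires more, which may help explain why cross-validation favored a low degree.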

Statistical error analysis

To assess the predictive capability of the machine learning models, statistical error analysis was conducted by comparing the predicted solubility values (\(y_{model}\)) with the corresponding experimental data (\(y_{exp}\)). The Mean Squared Error (MSE) measures the average of the squared differences between predictions and experimental values, placing higher penalties on large deviations; a lower MSE indicates better model precision.

The Mean Absolute Error (MAE), in contrast, represents the average of the absolute deviations and thus provides a more interpretable measure of the typical prediction error, being less sensitive to outliers than MSE.

The Standard Deviation of errors (SD) quantifies the dispersion of the residuals, offering insight into the robustness and trustworthiness of the predictions; reduced SD values imply more stable predictive performance.

Finally, the coefficient of determination (R2) assesses the proportion of variance in the experimental data that is explained by the model, where values closer to 1 indicate stronger agreement between predicted and experimental solubility.

Together, these statistical indicators provide a comprehensive evaluation of model accuracy, robustness, and generalization capability in predicting Lacosamide solubility in SC-CO2.
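The four indicators can be computed as in the following sketch, which uses their common definitions. The exact form of SD used in the study is not stated here, so the sample standard deviation of the residuals (with an n − 1 denominator) is an assumption; the numerical values are hypothetical and serve only to exercise the functions.

```python
import math


def mse(y_exp, y_model):
    """Mean of the squared residuals."""
    return sum((e - m) ** 2 for e, m in zip(y_exp, y_model)) / len(y_exp)


def mae(y_exp, y_model):
    """Mean of the absolute residuals."""
    return sum(abs(e - m) for e, m in zip(y_exp, y_model)) / len(y_exp)


def sd_of_errors(y_exp, y_model):
    """ASSUMPTION: sample standard deviation of the residuals (n - 1)."""
    residuals = [e - m for e, m in zip(y_exp, y_model)]
    mean_res = sum(residuals) / len(residuals)
    return math.sqrt(sum((r - mean_res) ** 2 for r in residuals)
                     / (len(residuals) - 1))


def r2(y_exp, y_model):
    """One minus the ratio of residual to total sum of squares."""
    mean_exp = sum(y_exp) / len(y_exp)
    ss_res = sum((e - m) ** 2 for e, m in zip(y_exp, y_model))
    ss_tot = sum((e - mean_exp) ** 2 for e in y_exp)
    return 1.0 - ss_res / ss_tot


# Hypothetical values (NOT the Lacosamide data).
y_exp = [1.0, 2.0, 3.0, 4.0]
y_model = [1.1, 1.9, 3.2, 3.8]

print(mse(y_exp, y_model), mae(y_exp, y_model),
      sd_of_errors(y_exp, y_model), r2(y_exp, y_model))
```

Because mole fractions are very small, MSE values scale with the square of the target and are best compared between models on the same data, whereas R2 is dimensionless.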

Results and discussion

The statistical results for modeling the solubility of Lacosamide in SC-CO2 with the GBDT, MLP, RF, GPR, XG Boost, and PR models are given in Table 2. The results revealed that GBDT and XG Boost achieved superior accuracy, with the lowest MSE values and the highest R2 scores, indicating an excellent fit to the data. MLP also demonstrated strong generalization capabilities, particularly on the test dataset, as reflected by its low MAE. In contrast, RF and PR showed comparatively weaker performance, with higher errors and lower R2 values. These outcomes stress the importance of model selection in optimizing predictive accuracy and reliability in machine learning applications.

The superior performance of certain machine learning models in this study can be attributed to their structural advantages and learning mechanisms. GBDT and XG Boost use gradient boosting frameworks that iteratively correct prediction errors, enabling them to capture complex nonlinear relationships in the data. Their built-in regularization techniques also help mitigate overfitting, enhancing generalization. Similarly, the MLP, a feedforward neural network with two hidden layers and nonlinear activation functions, can learn intricate patterns and dependencies. These architectural strengths collectively contribute to lower error metrics and higher predictive accuracy, underscoring the importance of model design in achieving robust and reliable outcomes in data-driven applications.

The weaker performance of RF and PR in this study can be attributed to their structural limitations in handling complex data patterns. Although RF leverages an ensemble of decision trees, it lacks the iterative boosting mechanism of GBDT and XG Boost, which refines predictions and reduces errors. As a result, RF may struggle to capture intricate nonlinear relationships, especially in datasets with high variability36. PR, on the other hand, fits polynomial functions to the data, which can be overly simplistic or excessively sensitive depending on the chosen degree: the model is prone to underfitting when the polynomial degree is too low and to overfitting when it is too high, leading to reduced generalization and higher prediction errors. These constraints limit the adaptability and precision of RF and PR, resulting in comparatively poorer outcomes.

Fig. 1(a)–(f) compares the solubility values predicted by the different models with the corresponding experimental data. The scatter of data points around the parity line (y = x) reflects the accuracy of each model in reproducing the experimental results. Among the investigated methods, GBDT, XG Boost, and MLP showed closer agreement with the experimental values, with most of their predictions falling near the parity line, indicating their strong capability in capturing the nonlinear dependence of solubility on pressure, temperature, and CO2 density. In contrast, RF and PR exhibited larger deviations from the parity line, highlighting their limitations in accurately modeling such a complex system. These findings emphasize the importance of selecting appropriate model architectures and employing advanced algorithms for reliable solubility prediction under supercritical conditions.

The solubility of Lacosamide in SC-CO2 was investigated at four different temperatures, as illustrated in Fig. 2. Parts (a) to (f) of Fig. 2 correspond to the GBDT, MLP, RF, GPR, XG Boost, and PR models, respectively. The experimental mole fractions are represented by discrete points, while the solid lines correspond to the model predictions. Along each isotherm, drug solubility increases with rising pressure, consistent with the higher solvent density at higher pressures. Moreover, the comparison between experimental data and model results indicates satisfactory agreement, as the predicted curves closely follow the experimental points across the studied pressure range. Minor deviations at higher pressures can be attributed to limitations in capturing complex solute–solvent interactions, yet the overall consistency supports the reliability of the proposed models for describing the solubility behavior of the drug in SC-CO2.

Internal validation was performed on the Lacosamide dataset to assess the predictive performance and quantify uncertainty of the XG Boost model. The dataset consisted of 28 experimental samples, including temperature (T), pressure (P), CO2 density (ρ), and mole fraction (y2). Model evaluation employed 5-fold cross-validation and bootstrap analysis (100 iterations), yielding a cross-validated RMSE of 0.0664 ± 0.0255 and a mean R2 of 0.9336 ± 0.0667. The final model achieved excellent predictive accuracy (R2 ≈ 0.9999) and exhibited strong consistency with thermodynamic trends, with correlations of 0.9883 for pressure and 0.8918 for density. These results demonstrate that the model reliably captures the nonlinear relationships inherent in the system and provides physically meaningful predictions within the experimental range.
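The resampling schemes used for internal validation can be sketched with index generators. The sketch assumes contiguous, unshuffled folds and a plain bootstrap with replacement; whether the folds were shuffled in the study is not stated, and the function names and seed are hypothetical.

```python
import random


def kfold_indices(n_samples, n_splits=5):
    """Yield (train, test) index lists for contiguous, unshuffled folds."""
    base, extra = divmod(n_samples, n_splits)
    start = 0
    for fold in range(n_splits):
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = [i for i in range(n_samples)
                 if i < start or i >= start + size]
        yield train, test
        start += size


def bootstrap_indices(n_samples, n_resamples=100, seed=0):
    """Draw index sets with replacement, one per bootstrap iteration."""
    rng = random.Random(seed)
    return [[rng.randrange(n_samples) for _ in range(n_samples)]
            for _ in range(n_resamples)]


folds = list(kfold_indices(28, 5))
fold_sizes = [len(test) for _, test in folds]
resamples = bootstrap_indices(28, 100)
print(fold_sizes)  # [6, 6, 6, 5, 5]
```

With only five or six points in each held-out fold, per-fold R2 values are sensitive to individual points, which may contribute to the gap between the cross-validated R2 and the R2 of the final model.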

Conclusion

This study demonstrated that machine learning methods can serve as reliable tools for predicting the solubility of Lacosamide in SC-CO₂, thereby reducing the reliance on costly and time-consuming experimental measurements. The solubility of Lacosamide in SC-CO₂ was modeled using six machine learning models (GBDT, MLP, RF, GPR, XG Boost, and PR). The GBDT model demonstrated superior prediction accuracy compared to the other models. Its R2, MSE, and MAE values were 0.9999, \(1.90 \times 10^{-14}\), and \(1.17 \times 10^{-7}\) for the training data and 0.9935, \(1.73 \times 10^{-11}\), and \(2.81 \times 10^{-6}\) for the test data, respectively. These low MSE and MAE values show that the GBDT model estimates solubility with high accuracy. After GBDT, the XG Boost and MLP models, which had the next-highest R2 and next-lowest MSE and MAE values, were the best models for estimating the solubility of Lacosamide in SC-CO2. These findings highlight the potential of integrating experimental data with advanced learning techniques to support the rational design of nanoparticle production processes using supercritical fluids. Future work may extend this framework to other pharmaceutical compounds, enabling broader application in scalable and sustainable drug processing technologies.

Data availability

All data generated or analysed during this study are included in this published article.

References

Carona, A. et al. Pharmacology of lacosamide: from its molecular mechanisms and pharmacokinetics to future therapeutic applications. Life Sci. 275, 119342. https://doi.org/10.1016/j.lfs.2021.119342 (2021).

Curia, G., Biagini, G., Perucca, E. & Avoli, M. Lacosamide: a new approach to target voltage-gated sodium currents in epileptic disorders. CNS Drugs. 23, 555–568. https://doi.org/10.2165/00023210-200923070-00002 (2009).

Cawello, W. Clinical pharmacokinetic and pharmacodynamic profile of lacosamide. Clin. Pharmacokinet. 54, 901–914. https://doi.org/10.1007/s40262-015-0276-0 (2015).

Esfandiari, N. Production of micro and nano particles of pharmaceutical by supercritical carbon dioxide. J. Supercrit. Fluids. 100, 129–141. https://doi.org/10.1016/j.supflu.2014.12.028 (2015).

Askarizadeh, M., Esfandiari, N., Honarvar, B., Sajadian, S. A. & Azdarpour, A. Kinetic modeling to explain the release of medicine from drug delivery systems. ChemBioEng. Rev. 10, 1006–1049. https://doi.org/10.1002/cben.202300027 (2023).

Sajadian, S. A., Esfandiari, N. & Padrela, L. CO2 utilization as a gas antisolvent in the production of Glibenclamide nanoparticles, Glibenclamide-HPMC, and Glibenclamide-PVP composites. J. CO2 Utilization. 84, 102832. https://doi.org/10.1016/j.jcou.2024.102832 (2024).

Askarizadeh, M., Esfandiari, N., Honarvar, B., Sajadian, S. A. & Azdarpour, A. Thermodynamic assessment for Rivaroxaban nanoparticle production using gas Anti-solvent (GAS) process: synthesis and characterization. J. Mol. Liq. 424, 127125. https://doi.org/10.1016/j.molliq.2025.127125 (2025).

Saadati Ardestani, N. et al. Supercritical fluid extraction from Zataria multiflora Boiss and impregnation of bioactive compounds in PLA for the development of materials with antibacterial properties. Processes 10, 2563 (2022).

Askarizadeh, M., Esfandiari, N., Honarvar, B., Sajadian, S. A. & Azdarpour, A. Solubility of Teriflunomide in supercritical carbon dioxide and co-solvent investigation. Fluid. Phase. Equilibria. 590, 114284. https://doi.org/10.1016/j.fluid.2024.114284 (2025).

Askarizadeh, M., Esfandiari, N., Honarvar, B., Ali Sajadian, S. & Azdarpour, A. Binary and ternary approach of solubility of Rivaroxaban for preparation of developed nano drug using supercritical fluid. Arab. J. Chem. 17, 105707. https://doi.org/10.1016/j.arabjc.2024.105707 (2024).

Sajadian, S. A., Ardestani, N. S., Esfandiari, N., Askarizadeh, M. & Jouyban, A. Solubility of favipiravir (as an anti-COVID-19) in supercritical carbon dioxide: an experimental analysis and thermodynamic modeling. J. Supercrit. Fluids. 183, 105539. https://doi.org/10.1016/j.supflu.2022.105539 (2022).

Sajadian, S. A. et al. Mesalazine solubility in supercritical carbon dioxide with and without cosolvent and modeling. Sci. Rep. 15, 3870. https://doi.org/10.1038/s41598-025-86004-z (2025).

Sajadian, S. A., Esfandiari, N., Saadati Ardestani, N., Amani, M. & Estévez, L. A. Measurement and modeling of the solubility of Mebeverine hydrochloride in supercritical carbon dioxide. Chem. Eng. Technol. 47, 811–821. https://doi.org/10.1002/ceat.202300449 (2024).

Sajadian, S. A. et al. Solubility Measurement and Correlation of Alprazolam in Carbon Dioxide with/without Ethanol at Temperatures from 308 to 338 K and Pressures from 120 to 300 bar. J. Chem. Eng. Data. 69, 1718–1730. https://doi.org/10.1021/acs.jced.3c00587 (2024).

Rojas, A. et al. Solubility of Oxazepam in supercritical carbon dioxide: experimental and modeling. Fluid. Phase. Equilibria. 585, 114165. https://doi.org/10.1016/j.fluid.2024.114165 (2024).

Sodeifian, G., Bagheri, H., Aghaei, B. G., Golshani, H. & Rashidi-Nooshabadi, M. S. Solubility modeling of Levofloxactin hemihydrate in supercritical CO2 green solvent using Soave-Redlich-Kwong EoS and density-based models. J. Taiwan Inst. Chem. Eng. 173, 106156. https://doi.org/10.1016/j.jtice.2025.106156 (2025).

Setoodeh, N., Darvishi, P. & Ameri, A. A thermodynamic approach for correlating the solubility of drug compounds in supercritical CO2 based on Peng-Robinson and Soave-Redlich-Kwong equations of state coupled with Van der Waals mixing rules. J. Serbian Chem. Soc. (2019).

Najafi, M. et al. Thermodynamic modeling of the Gas-Antisolvent (GAS) process for precipitation of finasteride. J. Chem. Petroleum Eng. 54, 297–309. https://doi.org/10.22059/jchpe.2020.300747.1311 (2020).

Sodeifian, G. et al. Thermodynamic modeling and solubility assessment of oxycodone hydrochloride in supercritical CO2: semi-empirical, EoSs models and machine learning algorithms. Case Stud. Therm. Eng. 55, 104146. https://doi.org/10.1016/j.csite.2024.104146 (2024).

Sadeghi, A. et al. Machine learning simulation of pharmaceutical solubility in supercritical carbon dioxide: prediction and experimental validation for Busulfan drug. Arab. J. Chem. 15, 103502. https://doi.org/10.1016/j.arabjc.2021.103502 (2022).

Altalbawy, F. M. A. et al. Universal data-driven models to estimate the solubility of anti-cancer drugs in supercritical carbon dioxide: correlation development and machine learning modeling. J. CO2 Utilization. 92, 103021. https://doi.org/10.1016/j.jcou.2025.103021 (2025).

Razmimanesh, F. et al. Measuring the solubility of tacrolimus in supercritical carbon dioxide (binary and ternary systems), comparing the performance of machine learning models with conventional models. J. Mol. Liq. 415, 126295. https://doi.org/10.1016/j.molliq.2024.126295 (2024).

Peyrovedin, H., Sajadian, S. A., Bahmanzade, S., Zomorodian, K. & Khorram, M. Studying the Rifampin solubility in supercritical CO2 with/without co-solvent: experimental data, modeling and machine learning approach. J. Supercrit. Fluids. 218, 106510. https://doi.org/10.1016/j.supflu.2024.106510 (2025).

Oghenemaro, E. F. et al. Comprehensive theoretical analysis of the solubility of two benzodiazepine drugs in supercritical solvent; thermodynamic and advanced machine learning approaches. J. Mol. Liq. 434, 128000. https://doi.org/10.1016/j.molliq.2025.128000 (2025).

Hani, U. et al. Computational intelligence modeling of nanomedicine preparation using advanced processing: solubility of fludrocortisone acetate in supercritical carbon dioxide. Case Stud. Therm. Eng. 45, 102968. https://doi.org/10.1016/j.csite.2023.102968 (2023).

Zhang, M. & Mahdi, W. A. Development of SVM-based machine learning model for estimating lornoxicam solubility in supercritical solvent. Case Stud. Therm. Eng. 49, 103268. https://doi.org/10.1016/j.csite.2023.103268 (2023).

Obaidullah, A. J. & Almehizia, A. A. Machine learning-based prediction and mathematical optimization of capecitabine solubility through the supercritical CO2 system. J. Mol. Liq. 391, 123229. https://doi.org/10.1016/j.molliq.2023.123229 (2023).

Esfandiari, N. & Ali Sajadian, S. Solubility of lacosamide in supercritical carbon dioxide: an experimental analysis and thermodynamic modeling. J. Mol. Liq. 360, 119467. https://doi.org/10.1016/j.molliq.2022.119467 (2022).

Sanni, S. E., Okoro, E. E., Sadiku, E. R. & Oni, B. A. In Current Trends and Advances in Computer-Aided Intelligent Environmental Data Engineering (eds. Gonçalo, M. & Joshua, O. I.) 129–160 (Academic Press, 2022).

Liu, W., Fan, H. & Xia, M. Step-wise multi-grained augmented gradient boosting decision trees for credit scoring. Eng. Appl. Artif. Intell. 97, 104036. https://doi.org/10.1016/j.engappai.2020.104036 (2021).

Chan, K. Y. et al. Deep neural networks in the cloud: review, applications, challenges and research directions. Neurocomputing 545, 126327. https://doi.org/10.1016/j.neucom.2023.126327 (2023).

Breiman, L. Random forests. Mach. Learn. 45, 5–32. https://doi.org/10.1023/A:1010933404324 (2001).

Yang, A. et al. Insight to the prediction of CO2 solubility in ionic liquids based on the interpretable machine learning model. Chem. Eng. Sci. 297, 120266. https://doi.org/10.1016/j.ces.2024.120266 (2024).

Niazkar, M. et al. Applications of XGBoost in water resources engineering: a systematic literature review (Dec 2018–May 2023). Environ. Model. Softw. 174, 105971. https://doi.org/10.1016/j.envsoft.2024.105971 (2024).

Deprez, M. & Robinson, E. C. In Machine Learning for Biomedical Applications (eds. Deprez, M. et al.) 41–65 (Academic Press, 2024).

Strobl, C., Boulesteix, A. L., Zeileis, A. & Hothorn, T. Bias in random forest variable importance measures: illustrations, sources and a solution. BMC Bioinform. 8, 25. https://doi.org/10.1186/1471-2105-8-25 (2007).

Funding

The authors received no financial support for the research, authorship, and/or publication of this article.

Author information

Contributions

N.E.: Writing, Investigation, Methodology, Software, Review and Editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Esfandiari, N. Investigation of Lacosamide solubility in supercritical carbon dioxide with machine learning models. Sci Rep 15, 42392 (2025). https://doi.org/10.1038/s41598-025-26467-2

DOI: https://doi.org/10.1038/s41598-025-26467-2