Abstract

Falling is an emergency situation that can result in serious injury or even death, especially when immediate assistance is unavailable. Developing a model that detects falls accurately and promptly is therefore crucial for improving quality of life and safety. Although YOLOv8 has recently made notable strides in object detection accuracy and speed, it still struggles to detect falls under varying lighting, occlusion, and complex human postures. To address these issues, this study proposes SDES-YOLO, an improved model based on YOLOv8. By incorporating a multi-scale feature extraction pyramid (SDFP), an occlusion-aware attention mechanism (SEAM), an edge and spatial information fusion module (ES3), and a WIoU-Shape loss function, SDES-YOLO significantly enhances fall detection performance in complex scenarios. With only 2.9M parameters and 7.2 GFLOPs of computation, SDES-YOLO achieves an mAP@0.5 of 85.1%, a 3.41% improvement over YOLOv8n, while reducing the parameter count and computation by 1.33% and 11.11%, respectively. These results indicate that SDES-YOLO combines detection accuracy with computational efficiency, making it suitable for resource-constrained environments.


Introduction

Falls represent a significant health issue across all stages of life, particularly affecting children developing motor skills and older adults who face increased risks due to age-related factors. While often considered a natural part of life, falls carry substantial health and economic burdens globally. In the elderly, the economic impact of falls is especially pronounced, with related annual costs in the United States alone estimated to reach approximately $50 billion for fatal and non-fatal falls1,2. These economic pressures are exacerbated by the demands for hospitalization, rehabilitation, and long-term care, imposing severe strains on healthcare systems and societal resources3. Research indicates that falls are not only a physical health issue but also have psychological impacts, including fear of falling, which can lead to reduced activity levels and further health deterioration4. The documented relationship between previous falls and the likelihood of subsequent severe injuries underscores the increased risk for individuals with a fall history5,6. This cyclic nature of falls necessitates effective prevention strategies, especially for high-risk groups like older adults, who may experience increased morbidity and mortality due to fall-related injuries7. Hence, fall detection has emerged as a critical research area given its substantial global health and economic implications.

The development of wearable devices and sensor networks has significantly advanced the detection of human posture and fall events. Wearable technology integrates sensors and wireless communication to monitor human movement and posture, providing real-time feedback and high detection accuracy. These devices can use various interaction methods, such as gestures and biofeedback, to enhance users’ awareness of their posture, which is particularly beneficial for individuals with chronic pain or posture problems8. For instance, the G-STRIDE foot-mounted inertial sensor has been clinically validated for accurately measuring walking parameters and assessing fall risk, highlighting its potential for gait analysis in elderly populations9. However, user compliance remains a challenge: individuals may forget to wear these devices or be discouraged by the need for frequent recharging10.

Conversely, fixed sensor nodes deployed in specific environments can monitor changes in human posture by tracking the center of gravity and motion trajectories. Although they offer valuable data for overall posture assessment and fall detection, these systems face high deployment costs and environmental interference11,12. For example, inductive textile sensors have been explored for monitoring back movement, but difficulty in distinguishing similar postures limits their accuracy13. Integrating multiple sensor types, including pressure sensors and inertial measurement units, has also been proposed to enhance the robustness of posture detection systems14,15. Despite these advances, effective and cost-efficient solutions suitable for widespread adoption in everyday environments are still needed16.

Given the critical need for effective fall detection, advances in object detection technology play a pivotal role in improving detection precision and responsiveness. Object detection algorithms are generally categorized into two types: two-stage and single-stage algorithms. Two-stage algorithms first generate region proposals and then classify these regions. Among the most notable two-stage methods is R-CNN, which extracts region proposals via selective search and then applies a convolutional neural network to each proposal for classification17. Fast R-CNN improves on this by computing convolutional features once for the whole image, sharing them across all proposals, and using a softmax classifier18, while Faster R-CNN integrates a region proposal network (RPN) to further improve efficiency19. Single-stage algorithms, by contrast, such as YOLO (You Only Look Once), bypass the region proposal step entirely and directly predict bounding boxes and class probabilities from the entire image, significantly boosting speed20. YOLOv3 improves this approach by introducing multi-scale predictions to detect objects of various sizes21. Similarly, SSD (Single Shot MultiBox Detector) generates predictions from multiple feature maps at different resolutions, detecting objects in a single network pass22. RetinaNet introduces the Focal Loss to address the extreme foreground-background class imbalance of dense detection, substantially improving the accuracy of single-stage detectors23. More recently, EfficientDet has gained popularity for its scalable design, which balances accuracy and computational efficiency across different resource budgets24. Another innovative approach is the Transformer-based model DETR, which replaces hand-designed components such as anchor generation and non-maximum suppression with a transformer encoder-decoder that directly predicts a set of object locations and labels25. These diverse algorithms illustrate how object detection has evolved to balance speed, accuracy, and scalability.
Compared to two-stage algorithms, single-stage algorithms like YOLO offer faster detection and lower computational costs, making them advantageous for many real-time applications. This study selected YOLO as the baseline model because of its strong performance on benchmark tasks, its higher accuracy compared to SSD, and its simpler structure and faster inference relative to DETR. To address the challenges that occlusion and posture variation pose for fall detection, this study proposes a high-precision, lightweight fall detection algorithm, SDES-YOLO.

Our contributions are as follows:

1. This study proposes a new multi-scale feature extraction pyramid (SDFP) to replace the traditional SPPF module. The SDFP module utilizes convolution operations for feature extraction, capturing finer detail features. In contrast, the traditional SPPF module’s pooling operations may lose critical detail information.

2. This study introduces an occlusion-aware attention mechanism (SEAM) to improve the detection head. The SEAM mechanism enhances detection robustness in occluded scenes, thereby more effectively handling complex scenarios and boosting overall model detection performance.

3. This study proposes a novel ES3 module that combines edge and spatial information, replacing the first two convolutional blocks in the backbone structure. This improvement helps the model better understand image content, significantly enhancing detection accuracy.

4. This study combines the Wise-IoU and Shape-IoU loss functions to propose the WIoU-Shape loss function. This method particularly focuses on detecting complex postures, optimizing IoU computation, and improving the detector’s overall performance and accuracy.

The remainder of this paper is structured as follows. The related work section reviews computer vision-based fall detection methods. The methodology section introduces the proposed SDES-YOLO model. The experimental results and analysis section describes the experimental setup and evaluation metrics and analyzes the results. Finally, the conclusion section summarizes the research findings and discusses future research directions.

Related work

In fall detection, computer vision-based models have advanced significantly, leveraging various algorithms to improve real-time recognition, accuracy, and adaptability across different environments. These models offer robust, non-invasive ways to monitor elderly populations and reduce false alarms. Convolutional Neural Networks (CNNs) play a critical role because of their effectiveness in processing and classifying visual data. For example, Kandukuru et al. developed a system that uses optimized optical flow images for real-time posture analysis to detect falls without relying on object detection, while Yu et al. proposed a CNN-based system that achieved high fall detection accuracy by analyzing human postures captured by monocular cameras26,27. Almukadi et al. proposed a deep feature fusion with computer vision approach to fall detection (DFFCV-FDC), which combines deep learning models with Gaussian filtering to reduce false positives in assisted living environments28. Hasan et al. used Recurrent Neural Networks (RNNs) with Long Short-Term Memory (LSTM) units to capture the temporal dynamics of human movement, achieving highly accurate real-time fall detection29. Additionally, Tsai et al. introduced a rapid foreground segmentation method that analyzes human shape and center of mass under varying lighting conditions, achieving high accuracy without object detection techniques30.

Some models incorporate multiple visual sensors or multimodal data to enhance robustness. For instance, Espinosa-Loera et al. used multiple cameras and CNNs to improve detection accuracy through multi-angle analysis, while Sharma et al. combined machine vision, deep learning, and infrared sensors to detect falls in low-visibility environments, focusing on individuals with disabilities31,32. For indoor fall detection, Zhang et al.’s YOLACT-based dual-network method used human contour extraction and CNN classification and was particularly effective at reducing false positives, while Nguyen et al. integrated motion features such as shape and velocity to distinguish falls from daily activities, achieving high accuracy with a single camera33,34. Dual-stream frameworks have also advanced fall detection: Mobsite et al. combined human skeleton data with motion history to achieve high accuracy, and Bakalos et al. applied spatio-temporal convolutional autoencoders to maritime fall detection, focusing on scene motion rather than object detection35,36.

Recent developments in deep learning have extended to action recognition, for example the CG-Net framework, which combines pose estimation and action classification and achieves high accuracy and recall (Cheng et al.)37. Image processing and feature extraction techniques have also improved; for example, He Xu et al. used the DeeperCut pose estimation model for fall recognition38. Tracking and posture recognition algorithms have likewise enhanced fall detection, including Kokkinos et al.’s stable human tracker, which is based on geometry-rich hybrid models and adapts to dynamic visual conditions, and Zeng et al.’s combination of Random Forest models with MPU6050 sensor data, which achieved over 90% reliability39,40.

Overall, these models represent a broad spectrum of technologies applied to fall detection through computer vision, continuously improving the safety of vulnerable populations. Although current fall detection algorithms have made progress, challenges remain in detecting small targets and handling multiple occlusions. Additionally, in public settings, fall detection algorithms must not only maintain high accuracy but also provide real-time responsiveness.

Methods

To address the limitations of existing models in detecting human falls effectively, this study proposes an improved YOLO-based model (SDES-YOLO) built upon YOLOv8. The overall network architecture of the proposed model comprises three main components: the backbone, the neck, and the head, as illustrated in Fig. 1.

To enhance detection precision, the proposed approach introduces a multi-scale feature extraction module called SDFP, which replaces the SPPF module at the end of the backbone. SDFP extracts features with convolution operations, compensating for the detail loss that can occur during the pooling operations of the traditional SPPF and thereby extracting fine-grained multi-scale features. To tackle the challenge of detecting falls in occluded scenarios, the proposed approach integrates an occlusion-aware attention mechanism (SEAM) into the detection head. Because conventional convolutional blocks at the initial stages may not capture crucial early features sufficiently, which impairs subsequent detection accuracy, this study designs the ES3 module to replace the first two convolutional blocks in the backbone. The ES3 module combines edge and spatial information, helping the model parse image content accurately with only a minimal increase in computational load. Finally, the proposed approach combines the Wise-IoU and Shape-IoU loss functions to enhance detection performance, particularly for targets with complex postures. The SDFP, SEAM-Head, ES3, and WIoU-Shape subsections below explain each component in detail.

SDFP (multi-scale feature extraction pyramid)

Dilated convolution (also known as atrous convolution)41 inserts spaces (dilations) between the elements of the convolutional kernel, expanding the receptive field without increasing the number of kernel parameters or the computation per output position, as illustrated in Fig. 2. A \(k \times k\) kernel with dilation rate \(d\) covers a window of side \(k + (k-1)(d-1)\), so by adjusting the dilation rate, dilated convolutions can capture features from a broader range while maintaining spatial resolution. This is particularly important for feature extraction for multi-scale targets and in complex scenes. Shared convolution42 is a convolution scheme in which the same convolutional kernel is shared across multiple branches. Specifically, multiple feature maps are convolved with the same kernel at different dilation rates, thereby capturing features at various scales and extracting rich feature information with fewer parameters. In our SDFP module, shared convolution is implemented by applying a single set of kernel weights across all branches, each of which processes the feature map at a different dilation rate. Reusing the same kernel weights instead of learning separate weights for each dilation rate divides the parameter count of the multi-scale branches by the number of branches (each branch still performs its own convolution, so the per-branch computation is unchanged) and keeps the feature representation consistent across scales. This helps make the features captured at various scales complementary and improves efficiency in complex detection tasks.
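To make the receptive-field and weight-sharing arguments concrete, the following pure-Python sketch applies one 3×3 kernel at several dilation rates to a single-channel map. It is an illustration under stated assumptions, not the SDFP implementation: the function names, the single-channel setting, and the all-ones kernel are hypothetical choices made for readability.

```python
# Sketch: one shared 3x3 kernel applied at several dilation rates.
# Pure Python, single channel, cross-correlation (as in deep-learning frameworks).


def dilated_conv2d(x, kernel, dilation=1):
    """'Same'-size dilated convolution of 2D list `x` with an odd square kernel."""
    k = len(kernel)
    pad = dilation * (k - 1) // 2  # keeps the output size equal to the input size
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    r = i + u * dilation - pad
                    c = j + v * dilation - pad
                    if 0 <= r < h and 0 <= c < w:  # zero padding outside the map
                        acc += kernel[u][v] * x[r][c]
            out[i][j] = acc
    return out


def effective_kernel_size(k, dilation):
    """Side length of the window covered by a k x k kernel at this dilation."""
    return k + (k - 1) * (dilation - 1)


def shared_branches(x, kernel, dilations):
    """Apply the *same* kernel weights at every dilation rate."""
    return [dilated_conv2d(x, kernel, d) for d in dilations]


def branch_params(c_in, c_out, k, n_branches, shared):
    """Weight count (no bias) of the multi-dilation branches."""
    per_kernel = c_out * c_in * k * k
    return per_kernel if shared else per_kernel * n_branches


def footprint_extent(y):
    """Number of rows spanned by the non-zero responses in `y`."""
    rows = [i for i, row in enumerate(y) if any(v != 0.0 for v in row)]
    return rows[-1] - rows[0] + 1


if __name__ == "__main__":
    ones = [[1.0] * 3 for _ in range(3)]
    impulse = [[0.0] * 7 for _ in range(7)]
    impulse[3][3] = 1.0  # single bright pixel in the centre
    for d, y in zip((1, 2, 3), shared_branches(impulse, ones, (1, 2, 3))):
        print(d, effective_kernel_size(3, d), footprint_extent(y))
    print(branch_params(64, 64, 3, 3, shared=True), branch_params(64, 64, 3, 3, shared=False))
```

Running it prints footprint widths of 3, 5, and 7 for dilation rates 1, 2, and 3, matching \(k + (k-1)(d-1)\), and shows that sharing one 64-to-64 channel 3×3 kernel across three branches needs 36,864 weights instead of 110,592.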

To counteract the potential loss of detail information caused by the pooling operations in the SPPF module of the original YOLOv8, this study proposes a new SDFP module to replace it. The SDFP module implements a feature pyramid structure based on shared convolution and dilated convolution, as shown in Fig. 3, to improve feature extraction. The implementation process is as follows. First, the module processes the input features with a convolution using standard kernels and a stride of 1, halving the number of channels; this initial convolution reduces the computational burden while extracting key information. Next, the processed feature map is passed through a shared convolution layer that uses a 3×3 kernel with a stride of 1. Specifically, the output features from the initial convolution are stored in a list, and at each preset dilation rate the same shared convolutional kernel is applied to the feature map to perform a dilated convolution. Each dilated convolution is padded by \(d(k-1)/2\) pixels, where \(d\) is the dilation rate and \(k = 3\) is the kernel size, so that its output keeps the spatial dimensions of the original features while its receptive field grows with \(d\). Finally, all the feature maps generated by the dilated convolutions are concatenated along the channel dimension to form a new feature map containing multi-scale information, which is then integrated by an additional 1×1 convolutional layer that adjusts the channel count as required by subsequent network layers.
This process allows the SDFP module to capture multi-scale feature information without a significant increase in computational load, enhancing the model’s feature extraction capability and overall performance. This feature pyramid structure, based on shared and dilated convolution, improves the model’s handling of images with varied scales and complexities, especially for target detection in complex scenes.
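The data flow of SDFP can be checked with simple shape and weight bookkeeping. The paper does not list the dilation rates, the kernel size of the channel-halving convolution, or whether the reduced map is concatenated alongside the dilated outputs, so this sketch assumes rates (1, 3, 5), a 1×1 reduction convolution, and SPPF-style inclusion of the reduced map. `sdfp_plan` is a hypothetical helper that tracks only shapes and convolution weights (no bias or BatchNorm).

```python
# Bookkeeping sketch of the SDFP data flow (shapes and weight counts only).
# Hypothetical choices: 1x1 reduction conv, dilation rates (1, 3, 5), and the
# reduced feature map itself included in the concatenation (as in SPPF).


def same_padding(k, dilation):
    """Padding that keeps a stride-1 dilated convolution size-preserving."""
    return dilation * (k - 1) // 2


def conv_out_size(size, k, stride, dilation, padding):
    """Standard output-size formula for a (dilated) convolution."""
    return (size + 2 * padding - dilation * (k - 1) - 1) // stride + 1


def sdfp_plan(c_in, c_out, h, w, dilations=(1, 3, 5), include_input=True, k=3):
    c_hidden = c_in // 2                          # initial conv halves channels
    reduce_params = c_in * c_hidden               # 1x1 conv (assumed)
    shared_params = c_hidden * c_hidden * k * k   # ONE kernel for all dilations
    sizes = []
    for d in dilations:
        p = same_padding(k, d)
        sizes.append((conv_out_size(h, k, 1, d, p), conv_out_size(w, k, 1, d, p)))
    n_maps = len(dilations) + (1 if include_input else 0)
    concat_channels = c_hidden * n_maps
    fuse_params = concat_channels * c_out         # final 1x1 conv
    return {
        "hidden_channels": c_hidden,
        "branch_sizes": sizes,
        "concat_channels": concat_channels,
        "output_shape": (c_out, h, w),
        "params_shared": reduce_params + shared_params + fuse_params,
        "params_unshared": reduce_params + shared_params * len(dilations) + fuse_params,
    }


if __name__ == "__main__":
    # 256 channels at 20x20 matches YOLOv8n's last backbone stage for a 640x640 input.
    plan = sdfp_plan(256, 256, 20, 20)
    for key, value in plan.items():
        print(key, value)
```

For a 256-channel, 20×20 input, every dilated branch stays 20×20, and under these assumptions the shared variant needs 311,296 convolution weights versus 606,208 with a separate kernel per dilation rate.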

SEAM-Head

Attention mechanisms43 are particularly important in deep learning because they allow a model to focus selectively on the crucial parts of the input while suppressing irrelevant information. By computing attention weights, the model can highlight important features, producing richer feature representations that improve detection accuracy. Attention mechanisms can also aggregate features on a global scale rather than only locally, enhancing the model’s overall recognition capability. This helps the model perform better in complex scenes and with multi-scale targets.

The SEAM attention mechanism44 is designed to address inter-class occlusion, which can lead to misalignment, local confusion, and missing features. To tackle these challenges, this study integrates the SEAM module into the detection head of YOLOv8 to enhance the model’s fall detection capability. The module’s design has three main objectives: achieving multi-scale detection, emphasizing key areas in the image, and downplaying background regions. The main structure of the SEAM module, depicted in Fig. 4, comprises three components. First, it uses depthwise separable convolution with residual connections. The depthwise convolution processes each channel separately, which reduces the number of parameters and learns the importance of individual channels but ignores the relationships between channels; to address this, the outputs of the depthwise convolutions are combined through a pointwise (1×1) convolution that fuses information across channels. Next, two fully connected layers further integrate information from all channels. These layers model the dependencies between occluded and non-occluded targets by capturing long-range correlations in the spatial and channel dimensions. Specifically, the fully connected layers act as attention weight generators, learning to emphasize features from visible regions while reweighting and recovering information from occluded regions. This propagates contextual information and compensates for losses caused by occlusion, enhancing the network’s ability to reconstruct incomplete features. The outputs of the fully connected layers, which lie in [0, 1] (for example, after a sigmoid), then undergo an exponential transformation that maps them to [1, e]. This exponential normalization provides a monotonic mapping, making the results more tolerant to positional errors and enhancing the model’s robustness.
Finally, the output of the SEAM module is used as attention weights that are multiplied with the original features, enabling the model to handle occlusion more effectively. In the SEAM structure depicted in Fig. 4, the input feature map is first processed by three Channel and Spatial Mixing Modules (CSMM) with different patch sizes (6, 7, and 8), which extract multi-scale features through convolution operations. The extracted features are then combined, and the feature map size is reduced through average pooling. A multi-layer fully connected network then expands the channels, enhancing important features and suppressing less important ones. The right side of the figure illustrates the CSMM process in detail: the input first passes through an embedding layer, followed by a GELU activation function and a Batch Normalization layer, and then through depthwise and pointwise convolutions, which further enhance feature expressiveness. The resulting weights are finally applied to the original feature map, enabling the model to focus on target areas, particularly when handling occlusion and multi-scale targets. By integrating the SEAM module into the detection head of YOLOv8, the model achieves higher fall detection accuracy and better robustness without significantly increasing the computational burden.

SEAM Illustration. The left side shows the overall structure of SEAM, while the right side illustrates the design of CSMM (Channel and Spatial Mixing Module). CSMM captures multi-scale features using various patch sizes and employs depthwise separable convolution to capture spatial and channel relationships effectively.
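The final reweighting step of SEAM (global average pooling, two fully connected layers, a sigmoid, then an exponential) can be sketched in pure Python. This is a minimal illustration, not the module used in SDES-YOLO: the CSMM branches are omitted, the channel counts and weights are hypothetical, and the sigmoid-then-exponential ordering is assumed from the [0, 1] to [1, e] mapping described above.

```python
import math


def stable_sigmoid(z):
    """Logistic function without overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def linear(vec, weight):
    """Bias-free fully connected layer; `weight` is a list of output rows."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weight]


def exp_channel_attention(x, w1, w2):
    """Reweight channels of x (list of 2D lists) by exp(sigmoid(FC(ReLU(FC(pool)))))."""
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]  # global average pool
    hidden = [max(0.0, z) for z in linear(pooled, w1)]                  # FC + ReLU
    gates = [math.exp(stable_sigmoid(z)) for z in linear(hidden, w2)]   # each gate in (1, e)
    out = [[[v * g for v in row] for row in ch] for ch, g in zip(x, gates)]
    return out, gates


if __name__ == "__main__":
    feature = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 0.0], [0.0, 2.0]]]  # 2 channels, 2x2
    w1 = [[1.0, 0.0]]       # FC1: 2 -> 1 channels (hypothetical weights)
    w2 = [[1.0], [-1.0]]    # FC2: 1 -> 2 channels (hypothetical weights)
    _, gates = exp_channel_attention(feature, w1, w2)
    print(gates)  # each gate lies between 1 and e
```

Because every gate is at least 1, the exponential form never suppresses a channel below its original magnitude; it only amplifies channels by different amounts, which is one way to read the "monotonic mapping" described above.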

ES3 (edge and spatial information fusion module)

Traditional initial convolutional blocks rely solely on standard convolutional kernels with fixed receptive fields, which may fail to capture multi-scale and complex initial features adequately. Standard convolutions operate within fixed local areas, and their receptive fields are limited by the kernel size, so they are ineffective at capturing important information at different scales and across wide regions of the image. In addition, standard convolutional blocks are not explicitly sensitive to high-frequency changes and image gradients, so they may handle edge details and complex patterns poorly. This limitation can lead to insufficient feature representation in the initial stages of detection, which in turn reduces detection accuracy. These deficits are particularly evident in images with rapidly changing content and intricate details, constraining overall model performance.

To overcome these drawbacks, this study designed the ES3 (Edge and Spatial Information) module to replace the first two convolutional blocks in the backbone, as shown in Fig. 5. The ES3 module combines edge information with spatial information through a series of operations that progressively extract and fuse input features, enhancing feature representation. The implementation process is as follows. First, the module applies a 3×3 convolution with a stride of 2 to the input features; sliding over local areas of the input image, it captures details and local features while halving the spatial resolution. The resulting feature map contains basic information from the input image and provides a foundation for subsequent processing. This feature map is then split into two parallel branches: the Sobel branch and the pooling branch. In the Sobel branch, the module applies the classic Sobel operator, which identifies edges by computing pixel gradients. Two 3×3 Sobel kernels are defined to compute gradients in the x and y directions, respectively, as given in Eq. (1) and Eq. (2). These kernels are converted into 3D convolution kernels and applied to the feature maps to compute the x- and y-direction gradients, as shown in Eq. (3) and Eq. (4). The kernels remain fixed during training, so edge information is extracted without adding learnable parameters and at little computational cost. The output feature map of the Sobel branch contains rich edge details, helping the model capture important features of the input image.

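Sobel Operator in \(\text {X}\) Direction:

\[ S_{x} = \begin{bmatrix} -1 & 0 & +1 \\ -2 & 0 & +2 \\ -1 & 0 & +1 \end{bmatrix} \tag{1} \]

Sobel Operator in \(\text {Y}\) Direction:

\[ S_{y} = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ +1 & +2 & +1 \end{bmatrix} \tag{2} \]

Gradient Computation Methods:

\[ G_{x} = S_{x} * A \tag{3} \]

\[ G_{y} = S_{y} * A \tag{4} \]

\[ G = \sqrt{G_{x}^{2} + G_{y}^{2}} \]

Where \(\text {A}\) represents the original image (or input feature map), \(S_{x}\) and \(S_{y}\) are the Sobel kernels of Eqs. (1) and (2), \(*\) denotes two-dimensional convolution, \(G_{x}\) and \(G_{y}\) represent the image gradients after horizontal and vertical edge detection, and \(\text {G}\) represents the combined gradient magnitude at each pixel.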
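The Sobel branch can be illustrated on a single channel with the standard Sobel kernels and cross-correlation (the convention deep-learning frameworks use for "convolution"). A padding of 1 is assumed so that the output keeps the input size, since the branch output is later concatenated with the pooling branch; applying the fixed kernels independently to each channel is likewise an assumption of this sketch.

```python
import math

# Fixed (non-learnable) Sobel kernels for the x and y directions.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def correlate3x3(img, kernel, padding=1):
    """Cross-correlate a 2D list with a 3x3 kernel using zero padding."""
    h, w = len(img), len(img[0])
    out_h, out_w = h + 2 * padding - 2, w + 2 * padding - 2
    out = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            acc = 0
            for u in range(3):
                for v in range(3):
                    r, c = i + u - padding, j + v - padding
                    if 0 <= r < h and 0 <= c < w:  # outside pixels count as zero
                        acc += kernel[u][v] * img[r][c]
            out[i][j] = acc
    return out


def sobel(img, padding=1):
    """Return (G_x, G_y, G) where G is the per-pixel gradient magnitude."""
    gx = correlate3x3(img, SOBEL_X, padding)
    gy = correlate3x3(img, SOBEL_Y, padding)
    mag = [[math.sqrt(a * a + b * b) for a, b in zip(rx, ry)] for rx, ry in zip(gx, gy)]
    return gx, gy, mag


if __name__ == "__main__":
    img = [[0, 0, 1, 1] for _ in range(4)]  # dark left half, bright right half
    gx, gy, mag = sobel(img, padding=0)
    print(gx, gy, mag)
```

For this image, `sobel(img, padding=0)` returns a constant horizontal gradient of 4 and a zero vertical gradient, so the magnitude highlights the vertical edge; with `padding=1` the output stays 4×4, with border responses influenced by the zero padding.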

Apart from the Sobel branch, the ES3 module includes a pooling branch, which extracts salient information from the feature map using max pooling with a 2×2 kernel and a stride of 1. Before pooling, this branch applies asymmetric padding (0 pixels on the left and top, 1 pixel on the right and bottom), so each output value is the maximum of a 2×2 local area and the output keeps the spatial size of the input, allowing it to be concatenated with the Sobel branch. Max pooling retains key feature points while suppressing irrelevant information, making subsequent feature extraction more focused. The feature maps from the Sobel and pooling branches are then concatenated along the channel dimension, doubling the channel count. The concatenated feature map is fused by a 3×3 convolution with a stride of 2, which reduces the channel count, continues extracting key information, and halves the spatial dimensions, integrating local features into higher-level abstract features; together with the initial stride-2 convolution, ES3 therefore downsamples the input by a factor of 4, like the two blocks it replaces. Finally, a 1×1 convolution adjusts the channel count to meet the requirements of subsequent network layers. In this manner, the ES3 module extracts both edge and spatial information while preserving a rich representation of the input features. This modular design processes and integrates initial features to improve detection accuracy and robustness without significantly increasing computational load, and it is particularly intended to help with occlusion and multi-scale detection.
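The pure-Python sketch below shows why the asymmetric padding keeps the pooling branch the same size as its input, and traces tensor shapes through ES3. The padding value (negative infinity, which for non-negative post-activation features gives the same result as zero padding) and the channel widths `c_stem=16` and `c_out=32` are assumptions; the widths are chosen to match the first two convolution blocks of YOLOv8n that ES3 replaces, and the fusion convolution is assumed to reduce the channels back to `c_stem`.

```python
NEG_INF = float("-inf")


def pad_right_bottom(x, value=NEG_INF):
    """Asymmetric padding: 0 pixels left/top, 1 pixel right/bottom."""
    width = len(x[0])
    padded = [list(row) + [value] for row in x]
    padded.append([value] * (width + 1))
    return padded


def max_pool_2x2_stride1(x):
    """2x2 max pooling with stride 1 and no implicit padding."""
    h, w = len(x), len(x[0])
    return [[max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
             for j in range(w - 1)] for i in range(h - 1)]


def pooling_branch(x):
    """ES3-style pooling branch: pad right/bottom by one, then 2x2/stride-1 max pool."""
    return max_pool_2x2_stride1(pad_right_bottom(x))


def conv_out(size, k, stride, padding):
    """Output size of a (non-dilated) convolution."""
    return (size + 2 * padding - k) // stride + 1


def es3_shapes(h, w, c_stem=16, c_out=32):
    """Trace (channels, height, width) through the ES3 stages."""
    h1, w1 = conv_out(h, 3, 2, 1), conv_out(w, 3, 2, 1)    # 3x3 stride-2 stem conv
    h2, w2 = conv_out(h1, 3, 2, 1), conv_out(w1, 3, 2, 1)  # 3x3 stride-2 fusion conv
    return {
        "stem": (c_stem, h1, w1),
        "sobel": (c_stem, h1, w1),       # padding 1 keeps the size
        "pool": (c_stem, h1, w1),        # asymmetric padding keeps the size
        "concat": (2 * c_stem, h1, w1),  # channels doubled
        "fuse": (c_stem, h2, w2),        # channels reduced (assumed back to c_stem)
        "out": (c_out, h2, w2),          # final 1x1 conv
    }


if __name__ == "__main__":
    print(pooling_branch([[4, 1], [2, 3]]))
    for stage, shape in es3_shapes(640, 640).items():
        print(stage, shape)
```

With a 640×640 input, `es3_shapes` ends at (32, 160, 160), the same output shape as the two stride-2 blocks it replaces under these assumptions.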

WIoU-Shape loss function

CIoU, the bounding box regression loss of the original YOLOv8, compares the aspect ratios (relative proportions of width and height) of the predicted and ground-truth boxes45 rather than their absolute widths and heights. Because the aspect ratio is a relative quantity, boxes of different sizes but identical proportions receive the same aspect-ratio penalty, which introduces ambiguity. Furthermore, CIoU does not account for the balance between easy and hard samples. For this reason, the proposed approach replaces CIoU with the Wise-IoU loss function in the SDES-YOLO model. In CIoU, IoU represents the Intersection over Union, \(w\) and \(h\) denote the predicted box’s width and height, \(w^{gt}\) and \(h^{gt}\) denote the ground-truth box’s width and height, \(b\) and \(b^{gt}\) represent the center points of the predicted and ground-truth boxes, and \(\rho\) denotes the Euclidean distance between \(b\) and \(b^{gt}\). \(w^{c}\) and \(h^{c}\) represent the width and height of the smallest box enclosing the predicted and ground-truth boxes. The CIoU expression is as follows:

\[ \mathscr{L}_{CIoU} = 1 - IoU + \frac{\rho^{2}(b, b^{gt})}{(w^{c})^{2} + (h^{c})^{2}} + \alpha v \]

\[ v = \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2}, \qquad \alpha = \frac{v}{(1 - IoU) + v} \]

where \(v\) measures the consistency of the aspect ratios and \(\alpha\) is a positive trade-off weight.
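The CIoU loss can be written directly from its standard definition using the symbols introduced above. This pure-Python sketch takes boxes as (center x, center y, width, height) and adds a small `eps` to avoid division by zero when the boxes coincide; it is an illustration, not the Ultralytics implementation.

```python
import math


def to_corners(box):
    """(cx, cy, w, h) -> (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def box_iou(a, b):
    ax1, ay1, ax2, ay2 = to_corners(a)
    bx1, by1, bx2, by2 = to_corners(b)
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    return inter / (a[2] * a[3] + b[2] * b[3] - inter)


def enclosing_wh(a, b):
    """Width and height (w^c, h^c) of the smallest box enclosing both boxes."""
    ax1, ay1, ax2, ay2 = to_corners(a)
    bx1, by1, bx2, by2 = to_corners(b)
    return max(ax2, bx2) - min(ax1, bx1), max(ay2, by2) - min(ay1, by1)


def ciou_loss(pred, gt, eps=1e-9):
    iou = box_iou(pred, gt)
    wc, hc = enclosing_wh(pred, gt)
    rho2 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2  # squared center distance
    c2 = wc ** 2 + hc ** 2 + eps                             # squared enclosing diagonal
    v = (4 / math.pi ** 2) * (math.atan(gt[2] / gt[3]) - math.atan(pred[2] / pred[3])) ** 2
    alpha = v / ((1 - iou) + v + eps)                        # trade-off weight
    return 1 - iou + rho2 / c2 + alpha * v


if __name__ == "__main__":
    print(ciou_loss((1, 0, 2, 2), (0, 0, 2, 2)))  # shifted box, same shape
    print(ciou_loss((0, 0, 4, 4), (0, 0, 2, 2)))  # same center and aspect ratio, larger
```

In the second call the 4×4 and 2×2 boxes share a center and an aspect ratio, so \(v = 0\) and only the IoU term (loss 0.75) distinguishes them, which is the ambiguity noted above.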

Wise-IoU (WIoU)46 uses a dynamic non-monotonic focusing mechanism that evaluates anchor-box quality by an "outlier degree" rather than by IoU alone. Combined with a gradient gain allocation strategy, this mechanism reduces the competitiveness of high-quality anchor boxes while suppressing the harmful gradients produced by low-quality samples, so that WIoU concentrates on ordinary-quality anchor boxes and improves the overall performance of the detector. In WIoUv1, \(\mathscr{R}_{WIoU} \in [1, e)\) significantly amplifies \(\mathscr{L}_{IoU}\) for ordinary-quality anchor boxes, while \(\mathscr{L}_{IoU} \in [0, 1]\) significantly reduces \(\mathscr{R}_{WIoU}\) for high-quality anchor boxes, lowering the focus on the distance between center points when an anchor box overlaps well with the target box. Assuming the position in the target box corresponding to (\(x\), \(y\)) is (\(x_{gt}\), \(y_{gt}\)), WIoUv1 is expressed as:

\[ \mathscr{L}_{WIoUv1} = \mathscr{R}_{WIoU}\,\mathscr{L}_{IoU}, \qquad \mathscr{R}_{WIoU} = \exp\left(\frac{(x - x_{gt})^{2} + (y - y_{gt})^{2}}{\left((w^{c})^{2} + (h^{c})^{2}\right)^{*}}\right), \qquad \mathscr{L}_{IoU} = 1 - IoU \]

where \(w^{c}\) and \(h^{c}\) are the width and height of the smallest enclosing box, and the superscript \(*\) indicates that the term is detached from the computational graph so that it does not produce gradients that hinder convergence.

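Additionally, allocating smaller gradient gains to anchor boxes with a large outlier degree prevents low-quality samples from producing large harmful gradients. The outlier degree \(\beta\) compares the current \(\mathscr{L}_{IoU}\) with its running mean, and a non-monotonic focusing coefficient \(r\) constructed from \(\beta\) is applied to WIoUv1 to obtain WIoUv3:

\[ \beta = \frac{\mathscr{L}_{IoU}^{*}}{\overline{\mathscr{L}_{IoU}}} \in [0, +\infty), \qquad r = \frac{\beta}{\delta\,\alpha^{\beta - \delta}}, \qquad \mathscr{L}_{WIoUv3} = r\,\mathscr{L}_{WIoUv1} \]

where the superscript \(*\) denotes a term detached from the computational graph, \(\overline{\mathscr{L}_{IoU}}\) is the running mean of \(\mathscr{L}_{IoU}\) updated with momentum during training, and \(\alpha\) and \(\delta\) are focusing hyperparameters.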
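The following sketch evaluates WIoUv1 and the WIoUv3 focusing coefficient for single box pairs given as (center x, center y, width, height). The defaults `alpha=1.9` and `delta=3.0`, the momentum in `update_running_mean`, and all helper names are assumptions for illustration; the hyperparameter values used in SDES-YOLO are not reported here. In a training framework the enclosing-box term and \(\mathscr{L}_{IoU}^{*}\) would be detached from the gradient; plain Python has no autograd, so that detail appears only in comments.

```python
import math


def to_corners(box):
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def box_iou(a, b):
    ax1, ay1, ax2, ay2 = to_corners(a)
    bx1, by1, bx2, by2 = to_corners(b)
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    return inter / (a[2] * a[3] + b[2] * b[3] - inter)


def enclosing_wh(a, b):
    """Width and height of the smallest box enclosing both boxes."""
    ax1, ay1, ax2, ay2 = to_corners(a)
    bx1, by1, bx2, by2 = to_corners(b)
    return max(ax2, bx2) - min(ax1, bx1), max(ay2, by2) - min(ay1, by1)


def wiou_v1(pred, gt):
    """Return (L_WIoUv1, L_IoU, R_WIoU)."""
    l_iou = 1.0 - box_iou(pred, gt)
    wc, hc = enclosing_wh(pred, gt)
    # In training, wc**2 + hc**2 would be detached from the graph (no gradient).
    r_wiou = math.exp(((pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2) / (wc ** 2 + hc ** 2))
    return r_wiou * l_iou, l_iou, r_wiou


def focusing_coefficient(beta, alpha=1.9, delta=3.0):
    """Non-monotonic gain r = beta / (delta * alpha ** (beta - delta))."""
    return beta / (delta * alpha ** (beta - delta))


def wiou_v3(pred, gt, mean_l_iou, alpha=1.9, delta=3.0):
    l_v1, l_iou, _ = wiou_v1(pred, gt)
    beta = l_iou / mean_l_iou  # outlier degree; l_iou would be detached in training
    return focusing_coefficient(beta, alpha, delta) * l_v1


def update_running_mean(mean, value, momentum=0.01):
    """Exponential moving average of L_IoU (assumed update rule and momentum)."""
    return (1.0 - momentum) * mean + momentum * value


if __name__ == "__main__":
    for beta in (0.5, 1.5, 3.0, 10.0):
        print(beta, round(focusing_coefficient(beta), 4))
    print(wiou_v3((1, 0, 2, 2), (0, 0, 2, 2), mean_l_iou=0.5))
```

The coefficient equals 1 when \(\beta = \delta\), peaks at \(\beta = 1/\ln\alpha\) (about 1.56 for \(\alpha = 1.9\)), and decays toward zero for large \(\beta\); this non-monotonic shape is what down-weights outlier anchor boxes.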

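In human fall detection, accurately extracting human posture and motion features is crucial because falls are complex and variable and monitoring environments involve occlusion and perspective changes. Through more accurate bounding box regression, Shape-IoU improves the detection model’s ability to recognize fall behavior, especially in complex environments47. The SDES-YOLO model employs the Shape-IoU loss function and combines it with WIoUv3 to further enhance the model’s fall detection capability. The Shape-IoU formula can be derived from Fig. 6:

\[ ww = \frac{2\,(w^{gt})^{scale}}{(w^{gt})^{scale} + (h^{gt})^{scale}}, \qquad hh = \frac{2\,(h^{gt})^{scale}}{(w^{gt})^{scale} + (h^{gt})^{scale}} \]

\[ \mathrm{distance}^{shape} = hh \cdot \frac{(x_{c} - x_{c}^{gt})^{2}}{c^{2}} + ww \cdot \frac{(y_{c} - y_{c}^{gt})^{2}}{c^{2}} \]

\[ \Omega^{shape} = \sum_{t = w, h} \left(1 - e^{-\omega_{t}}\right)^{\theta}, \qquad \omega_{w} = hh \cdot \frac{|w - w^{gt}|}{\max(w, w^{gt})}, \qquad \omega_{h} = ww \cdot \frac{|h - h^{gt}|}{\max(h, h^{gt})} \]

Where scale is a scaling factor related to the scale of detected objects in the dataset, \(x_c^{gt}\) and \(y_{c}^{gt}\) are the coordinates of the ground truth center point, and \(x_{c}\) and \(y_{c}\) are the coordinates of the predicted box center point. \(ww\) and \(hh\) are horizontal and vertical weighting coefficients related to the shape of the ground truth box, \(c^{2} = (w^{c})^{2} + (h^{c})^{2}\) is the squared diagonal of the smallest enclosing box, \(w\), \(h\), \(w^{gt}\), and \(h^{gt}\) are the widths and heights of the predicted and ground-truth boxes, and \(\theta\) controls the sensitivity to shape differences (4 in the original Shape-IoU formulation47). The corresponding bounding box regression loss is as follows:

\[ \mathscr{L}_{Shape\text{-}IoU} = 1 - IoU + \mathrm{distance}^{shape} + 0.5\,\Omega^{shape} \]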
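A direct pure-Python transcription of the Shape-IoU terms, for single boxes given as (center x, center y, width, height), is sketched below. The defaults `scale=0.0` (which makes \(ww = hh = 1\)) and `theta=4.0` are assumptions; the scale used for this dataset is not stated, nor is the exact way the paper combines this loss with WIoUv3, so the sketch computes Shape-IoU alone.

```python
import math


def to_corners(box):
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def box_iou(a, b):
    ax1, ay1, ax2, ay2 = to_corners(a)
    bx1, by1, bx2, by2 = to_corners(b)
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    return inter / (a[2] * a[3] + b[2] * b[3] - inter)


def enclosing_wh(a, b):
    ax1, ay1, ax2, ay2 = to_corners(a)
    bx1, by1, bx2, by2 = to_corners(b)
    return max(ax2, bx2) - min(ax1, bx1), max(ay2, by2) - min(ay1, by1)


def shape_weights(w_gt, h_gt, scale):
    """Return (ww, hh), the shape weights of the ground-truth box."""
    ws, hs = w_gt ** scale, h_gt ** scale
    return 2 * ws / (ws + hs), 2 * hs / (ws + hs)


def shape_iou_loss(pred, gt, scale=0.0, theta=4.0):
    iou = box_iou(pred, gt)
    wc, hc = enclosing_wh(pred, gt)
    c2 = wc ** 2 + hc ** 2                       # squared enclosing diagonal
    ww, hh = shape_weights(gt[2], gt[3], scale)
    distance = hh * (pred[0] - gt[0]) ** 2 / c2 + ww * (pred[1] - gt[1]) ** 2 / c2
    omega_w = hh * abs(pred[2] - gt[2]) / max(pred[2], gt[2])
    omega_h = ww * abs(pred[3] - gt[3]) / max(pred[3], gt[3])
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta
    return 1 - iou + distance + 0.5 * shape_cost


if __name__ == "__main__":
    print(shape_weights(4, 2, 1.0))                          # wide box: ww > hh
    print(shape_iou_loss((1, 0, 2, 2), (0, 0, 2, 2)))        # pure center offset
    print(shape_iou_loss((0, 0, 3, 2), (0, 0, 4, 2), 1.0))   # width mismatch only
```

With `scale > 0`, a wide ground-truth box gets \(ww > hh\), so horizontal center offsets are weighted by the smaller \(hh\) and vertical offsets by the larger \(ww\); this is how Shape-IoU makes the penalty depend on the target's own shape.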

Experimental results and analyses

Experimental environments and dataset

To ensure consistency and fair comparison across all evaluated approaches, every network in the experiments was trained and evaluated in the same deep learning environment and framework (Table 1) and with the same network hyperparameters (Table 2).

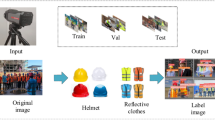

To ensure the robustness and generalization of SDES-YOLO across diverse scenarios, this study used a publicly available dataset that covers a wide range of real-world conditions48. The dataset comprises 4,497 images collected from various sources, featuring diverse lighting conditions, shooting angles, and environmental contexts, including both indoor and outdoor scenes. It covers five primary application scenarios: elderly care monitoring, workplace safety, public safety, assisted living facilities, and sports injury detection. The images span small, medium, large, and jumbo sizes and multiple aspect ratios (tall, square, wide, and very wide), which helps the model handle scale and perspective variations and adapt to real-world deployments. The images were labeled with high-quality annotations of fall-related activities, including challenging cases such as occlusions, variations in human posture, and small-scale targets. Examples of these scenarios are shown in Fig. 7, which illustrates the diversity of the dataset across multiple dimensions. For training and evaluation, the dataset was split into training, validation, and test sets in a 7:2:1 ratio, with a balanced distribution of scenarios in each subset: 70% of the data was used for training, to provide sufficient examples for learning diverse features and patterns; 20% was reserved for validation, to tune hyperparameters and detect overfitting during training; and the remaining 10% was held out for testing, to provide an unbiased assessment of generalization to unseen data. All input images were resized to 640×640 for consistency during training.
Because this dataset is openly accessible and diverse, it supports evaluating how well SDES-YOLO generalizes to complex, unseen environments and allows the experiments to be reproduced. Details on accessing the dataset are given in the Data availability section of this paper.
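The 7:2:1 split can be sketched as follows. This is a minimal illustration, not the authors' procedure: the function name `split_7_2_1`, the fixed seed, and the image file names are hypothetical. With 4,497 images and integer rounding, the split yields 3,147 training, 899 validation, and 451 test images; the counts actually used may differ slightly depending on how rounding was handled.

```python
import random


def split_7_2_1(items, seed=0):
    """Shuffle `items` and split them into train/val/test in a 7:2:1 ratio.

    Integer division sets the train and validation sizes; any rounding
    remainder goes to the test set.
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # reproducible shuffle
    n = len(items)
    n_train = n * 7 // 10
    n_val = n * 2 // 10
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


# Hypothetical image IDs standing in for the 4,497 dataset images.
image_ids = [f"img_{i:04d}.jpg" for i in range(4497)]
train, val, test = split_7_2_1(image_ids)
print(len(train), len(val), len(test))  # 3147 899 451
```

A plain random shuffle balances scenarios only in expectation; guaranteeing the same scenario mix in every subset would require stratifying on a per-image scenario label.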

Evaluation metrics

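This study evaluates object detection performance using Precision, Recall, mAP, GFLOPs, and the number of model parameters, which together assess both accuracy and efficiency. The accuracy metrics are computed from True Positives (TP), False Positives (FP), and False Negatives (FN): in object detection, a prediction is a TP when it matches a ground-truth box of the same class with an Intersection over Union (IoU) at or above the chosen threshold, unmatched predictions count as FP, and unmatched ground-truth boxes count as FN. True Negatives (TN) complete the usual classification terminology but do not enter Precision, Recall, or AP.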

Precision measures the proportion of predicted positives that are correct. High precision indicates few false positives, while low precision reflects a higher rate of incorrect positive predictions. It is expressed as follows:

\[ \text{Precision} = \frac{TP}{TP + FP} \]

Recall measures the proportion of actual positive samples that the detector correctly identifies. High recall means most positive instances are detected, while low recall indicates that many are missed. It is expressed as follows:

\[ \text{Recall} = \frac{TP}{TP + FN} \]

Average Precision (AP) summarizes detection performance for one class as the area under the Precision-Recall curve traced out as the confidence threshold varies. Higher AP values indicate better detection accuracy. It is calculated as:

\[ AP = \int_{0}^{1} P(R)\, dR \]

where \(P(R)\) denotes precision as a function of recall.

Mean Average Precision (mAP) averages AP over all classes in multi-class tasks. mAP@0.5, a common benchmark, is computed with detections matched to ground truth at an IoU threshold of 0.5. It is expressed as:

\[ \text{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_{i} \]

where \(N\) is the total number of classes and \(AP_{i}\) is the average precision for class \(i\).
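To make these definitions concrete, the following NumPy sketch matches detections to ground-truth boxes at an IoU threshold of 0.5, accumulates precision and recall over the ranked detections, and integrates the precision envelope to obtain AP (all-point interpolation); mAP is then the mean of the per-class APs. The function names and the greedy matching rule are illustrative assumptions, not the evaluation code behind the reported results, and toolkits differ in details such as 101-point interpolation, so values from this sketch need not match the paper's numbers exactly.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets, gts, iou_thr=0.5):
    """Label detections of one class in one image as TP (True) or FP (False).

    dets: list of (score, box); gts: list of ground-truth boxes.
    In descending score order, each detection takes the unmatched ground
    truth with the highest IoU >= iou_thr; otherwise it is an FP.
    """
    dets = sorted(dets, key=lambda d: d[0], reverse=True)
    used = [False] * len(gts)
    scores, flags = [], []
    for score, box in dets:
        best_iou, best_j = iou_thr, -1
        for j, gt in enumerate(gts):
            overlap = box_iou(box, gt)
            if not used[j] and overlap >= best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0:
            used[best_j] = True
        scores.append(score)
        flags.append(best_j >= 0)
    return np.array(scores, dtype=float), np.array(flags, dtype=bool)


def average_precision(scores, tp, n_gt):
    """All-point interpolated AP for one class."""
    order = np.argsort(-scores, kind="stable")
    tp = tp[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(~tp)
    recall = tp_cum / max(n_gt, 1)                       # TP / (TP + FN)
    precision = tp_cum / np.maximum(tp_cum + fp_cum, 1)  # TP / (TP + FP)
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([1.0], precision, [0.0]))
    # Precision envelope: make p non-increasing as recall grows.
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas wherever recall changes.
    steps = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


def mean_average_precision(ap_per_class):
    """mAP = (1/N) * sum of per-class AP values."""
    return float(np.mean(ap_per_class))


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (20, 20, 30, 30))]
scores, tp = match_detections(dets, gts, iou_thr=0.5)
ap = average_precision(scores, tp, n_gt=len(gts))
print(tp.tolist(), round(ap, 4))  # [True, False, True] 0.8333
```

In a full evaluation, matching is done per image and class, and the resulting scores and TP flags for each class are pooled across images before `average_precision` is called.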

GFLOPs measure a model's computational complexity as the number of floating-point operations, in billions, required for a single forward pass. Higher GFLOPs generally indicate greater model complexity and longer training and inference times, although measured speed also depends on hardware and implementation.

The number of parameters counts the learnable weights in a model, which determines its storage footprint and affects computation and training time. More parameters can increase capacity for feature learning but also increase resource demands.
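Both efficiency metrics can be illustrated for a single convolution. This is a minimal sketch, not the profiling tool used in the paper: the helper name `conv2d_cost` and the example layer shape are hypothetical, batch-normalization parameters are ignored, and it assumes the common convention of counting one multiply-accumulate (MAC) as two floating-point operations. Tools differ on that convention, so GFLOPs are comparable only when measured the same way.

```python
def conv2d_cost(c_in, c_out, k, h_out, w_out, groups=1, bias=False):
    """Parameter count and multiply-accumulate (MAC) count of one Conv2d layer.

    Batch-norm parameters and activation costs are ignored.
    """
    weights = c_out * (c_in // groups) * k * k
    params = weights + (c_out if bias else 0)
    macs = weights * h_out * w_out  # one MAC per weight per output position
    return params, macs


# Hypothetical 3x3, stride-2 layer mapping 3 -> 16 channels on a
# 640x640 input, so the output feature map is 320x320.
params, macs = conv2d_cost(c_in=3, c_out=16, k=3, h_out=320, w_out=320)
gflops = 2 * macs / 1e9  # counting each MAC as two floating-point operations
print(params, macs, round(gflops, 4))  # 432 44236800 0.0885
```

Summing these per-layer costs over a network gives its totals; grouped or depthwise convolutions (the `groups` argument) cut both counts by the group factor.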

Ablation experiments

To comprehensively evaluate the contribution of each module in SDES-YOLO, this study performed extensive ablation experiments. These experiments systematically analyze the performance impact of the proposed components: the multi-scale feature extraction pyramid (SDFP), the occlusion-aware attention mechanism (SEAM), the edge and spatial information fusion module (ES3), and the WIoU-Shape loss function. The results, summarized in Table 3, show the incremental benefit of each module. First, replacing the traditional SPPF module with the SDFP module improved mAP@0.5 by 1.8 percentage points, highlighting its effectiveness in capturing multi-scale features. Next, integrating SEAM into the detection head improved detection robustness under occlusion while slightly reducing the number of parameters and GFLOPs. Replacing the first two convolutional blocks in the backbone with the ES3 module then enhanced the model's ability to process spatial and edge information, yielding an additional accuracy gain of 1.3 percentage points. Finally, substituting the CIoU loss function with the WIoU-Shape loss function added 1.0 percentage point of accuracy, particularly benefiting the detection of complex human postures. Overall, compared to the baseline YOLOv8n model, the combination of these enhancements increased mAP@0.5 by 3.41% while reducing the parameter count by 1.33% and the computational load by 11.11%. This ablation study underscores the role each component plays in improving the precision, efficiency, and robustness of SDES-YOLO.
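The ablation reports per-module gains in percentage points but overall changes in percent. As a hedged consistency check, and assuming the overall figures are relative changes with respect to YOLOv8n, the reported SDES-YOLO values can be converted back into the baseline values they imply. The helper names are hypothetical, and Tables 3 and 5 remain the authoritative source for the actual baseline numbers.

```python
def relative_change(new, old):
    """Percent change of `new` relative to `old`."""
    return (new - old) / old * 100.0


def implied_baseline(new, pct_change):
    """Baseline value that, changed by `pct_change` percent, gives `new`."""
    return new / (1.0 + pct_change / 100.0)


# Reported SDES-YOLO figures: 7.2 GFLOPs (-11.11%) and mAP@0.5 = 85.1% (+3.41).
gflops_base = implied_baseline(7.2, -11.11)
map_base_if_relative = implied_baseline(85.1, 3.41)
map_base_if_points = 85.1 - 3.41
print(round(gflops_base, 2), round(map_base_if_relative, 2), round(map_base_if_points, 2))
# 8.1 82.29 81.69
```

Under these assumptions, the 11.11% reduction corresponds to a baseline of about 8.1 GFLOPs. For mAP@0.5, reading 3.41% as a relative change implies a baseline of about 82.29%, whereas reading it as percentage points implies 81.69%; which reading applies should be checked against Table 3.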

As shown in Fig. 8, the proposed WIoU-Shape loss function yields lower losses throughout training than the other mainstream loss functions compared, including the box loss, classification loss (CLS loss), and distribution focal loss (DFL loss). Unlike those loss functions, WIoU-Shape explicitly incorporates shape-specific factors, which helps it adapt to diverse object geometries and improves detection in complex scenarios. This is particularly evident for challenging human postures, where WIoU-Shape provides more accurate bounding box regression and more robust optimization. Faster convergence and lower overall training loss indicate improved optimization stability. Because box-loss values produced by different IoU formulations are not on an identical scale, the mAP@0.5 results in Table 4 offer the more direct comparison: with WIoU-Shape, SDES-YOLO achieves a higher mAP@0.5 than with the other loss functions, without changing the model's parameters or GFLOPs, since the loss is used only during training. These results highlight the role of WIoU-Shape in addressing limitations of existing loss functions and in improving the overall performance of SDES-YOLO, especially in challenging real-world applications.
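The exact WIoU-Shape formulation is defined in the method section and is not reproduced here. As a hedged illustration of its shape-aware ingredient, the following sketch implements the Shape-IoU loss as described by Zhang and Zhang47 for a single pair of boxes in (cx, cy, w, h) format. The function name, the default `scale=0.0`, and `theta=4.0` are assumptions for illustration, and the Wise-IoU46 focusing mechanism that SDES-YOLO combines with it is omitted, so this is not the authors' loss.

```python
import math


def shape_iou_loss(pred, gt, scale=0.0, theta=4.0):
    """Shape-IoU loss for one box pair; boxes are (cx, cy, w, h)."""
    px, py, pw, ph = pred
    gx, gy, gw, gh = gt
    # Corner coordinates of both boxes.
    p1x, p1y, p2x, p2y = px - pw / 2, py - ph / 2, px + pw / 2, py + ph / 2
    g1x, g1y, g2x, g2y = gx - gw / 2, gy - gh / 2, gx + gw / 2, gy + gh / 2
    # Plain IoU.
    iw = max(0.0, min(p2x, g2x) - max(p1x, g1x))
    ih = max(0.0, min(p2y, g2y) - max(p1y, g1y))
    inter = iw * ih
    iou = inter / (pw * ph + gw * gh - inter)
    # Shape weights derived from the ground-truth width and height.
    ws, hs = gw ** scale, gh ** scale
    ww = 2 * ws / (ws + hs)
    hh = 2 * hs / (ws + hs)
    # Squared diagonal of the smallest enclosing box.
    cw = max(p2x, g2x) - min(p1x, g1x)
    ch = max(p2y, g2y) - min(p1y, g1y)
    c2 = cw ** 2 + ch ** 2
    # Shape-weighted center-distance term.
    distance_shape = (hh * (px - gx) ** 2 + ww * (py - gy) ** 2) / c2
    # Shape cost penalizing width and height mismatch.
    omega_w = hh * abs(pw - gw) / max(pw, gw)
    omega_h = ww * abs(ph - gh) / max(ph, gh)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta
    return 1 - iou + distance_shape + 0.5 * shape_cost


gt = (4.0, 4.0, 2.0, 4.0)
print(shape_iou_loss(gt, gt))                    # 0.0 for a perfect match
print(shape_iou_loss((5.0, 4.0, 2.0, 4.0), gt))  # about 0.7067, i.e. 1 - 1/3 + 1/25
```

With `scale=0.0` both shape weights equal 1, so for boxes of identical size the loss reduces to the DIoU-style form 1 - IoU + d²/c²; a nonzero `scale` reweights the horizontal and vertical terms according to the ground-truth box shape.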

Comparative experiment

In this section, this study compares the proposed SDES-YOLO algorithm with several mainstream models, including YOLOv5s, YOLOv6n49, YOLOv7-tiny50, YOLOv8n, RT-DETR-R1851, YOLOv9s52, YOLOv10s53, and YOLOv11s, as shown in Table 5. Compared to YOLOv5s, SDES-YOLO improves Precision by 6.04% and mAP@0.5 by 4.93% while reducing parameters and GFLOPs by 57.70% and 54.43%, respectively, maintaining high accuracy at lower computational cost; we attribute this in part to the Spatial-Dense Feature Pyramid (SDFP) module for enhanced multi-scale feature extraction. Against YOLOv6n, SDES-YOLO achieves higher Precision, Recall, and mAP@0.5 by 4.72%, 3.68%, and 7.58%, respectively, while reducing parameters by 29.96% and GFLOPs by 38.98%, highlighting the role of the Edge-Spatial Enhancement (ES3) module in refining spatial and edge information. Compared to YOLOv7-tiny, SDES-YOLO improves Precision, Recall, and mAP@0.5 by 14.83%, 16.75%, and 21.88%, respectively, with reductions of 50.65% in parameters and 44.62% in GFLOPs, reflecting its lightweight design and the more robust box regression provided by the WIoU-Shape loss function for complex objects. Against YOLOv8n, SDES-YOLO improves Precision and mAP@0.5 by 4.72% and 3.41% while reducing parameters and GFLOPs by 1.33% and 11.11%, owing to its optimized computation and the improved occlusion robustness of the SEAM-Head. Compared to RT-DETR-R18, SDES-YOLO improves Precision by 9.48% and mAP@0.5 by 9.38% while reducing parameters and GFLOPs by 85.30% and 87.71%, achieving substantially higher accuracy at far lower computational complexity, which is crucial for real-time applications.
Against YOLOv9s, SDES-YOLO improves Precision only slightly (0.60%) but increases mAP@0.5 by 3.28% while reducing parameters and GFLOPs by 58.63% and 73.03%, highlighting its lightweight design; the WIoU-Shape loss function, which is used only during training, contributes to the accuracy gain rather than to the efficiency gain. Compared to YOLOv10s, SDES-YOLO has slightly lower Precision (by 1.06%) but improves Recall and mAP@0.5 by 0.53% and 2.78%, respectively, while reducing parameters and GFLOPs by 63.11% and 70.49%, a favorable trade-off between complexity and accuracy to which the ES3 module contributes. Finally, against YOLOv11s, SDES-YOLO achieves comparable Precision and a marginally higher mAP@0.5 (by 0.12%) while reducing parameters and GFLOPs by 68.49% and 66.20%, respectively, underscoring its ability to balance detection accuracy with lower computational demands through the integration of SDFP, SEAM-Head, and the WIoU-Shape loss function. Overall, these comparisons show that SDES-YOLO's modules and loss function deliver competitive or superior accuracy across the evaluation metrics while substantially reducing computational complexity.

Fig. 9 plots mAP@0.5 against training epochs on the human fall dataset for YOLOv5s, YOLOv6n, YOLOv7-tiny, YOLOv8n, RT-DETR-R18, YOLOv9s, YOLOv10s, YOLOv11s, and the proposed SDES-YOLO. For all models, mAP@0.5 increases with the number of training epochs and eventually stabilizes. SDES-YOLO (solid red line) converges faster and reaches a higher final mAP@0.5 than the other models, indicating superior performance in this detection task. Among the compared models, YOLOv7-tiny performs worst, with the slowest convergence and the lowest final mAP@0.5, suggesting limited feature extraction and learning capacity. RT-DETR-R18 ranks second to last, converging more slowly and reaching a lower final accuracy, which, together with its higher computational complexity, makes it less suited to real-time applications. YOLOv6n, despite being lightweight, ranks third lowest, with slower convergence and a lower final mAP@0.5. The remaining models, YOLOv5s, YOLOv8n, YOLOv9s, YOLOv10s, and YOLOv11s, perform better but still fall short of SDES-YOLO, which benefits from its SDFP, ES3, and SEAM-Head modules and the WIoU-Shape loss function. These enhancements enable SDES-YOLO to converge faster and reach higher accuracy at lower computational cost, making it an effective solution for real-time fall detection.

Detection results and analysis

Fig. 10 compares the detection results of the baseline and improved models in three distinct scenarios. The first column contains the original images, the second column presents the detection results of the baseline model YOLOv8n, and the third column shows the results of the improved SDES-YOLO model. The first row shows a conventional scene without significant occlusion or clutter, in which both YOLOv8n and SDES-YOLO accurately detect the targets. The second row shows an occluded scene in which some targets are partially obscured by other objects. YOLOv8n still detects the targets, but with relatively low accuracy, whereas SDES-YOLO identifies them more precisely. The third row shows a scene with small, partially occluded targets. YOLOv8n detects the targets in most cases but with lower accuracy, while SDES-YOLO detects and localizes these small, occluded targets more reliably, highlighting its advantage in complex scenes. Overall, these results indicate that SDES-YOLO offers higher detection accuracy and robustness across complex scenarios, particularly for small and occluded targets.

Eigen-CAM is a class activation mapping method based on Principal Component Analysis (PCA)54 that provides visual interpretability for the predictions of SDES-YOLO. It highlights the regions of the input image that contribute most to the model's output, making it possible to verify visually whether the model attends to relevant areas, such as human bodies or falling postures, during detection. Grad-CAM55 generates class activation maps from the gradients of a class score with respect to convolutional feature maps, effectively locating important image regions, and Grad-CAM++56 refines the gradient weighting to improve localization precision and fine-grained detail. Eigen-CAM instead projects the convolutional feature maps onto their first principal component; this requires no gradients and suppresses noise through dimensionality reduction, typically producing clearer and more interpretable activation maps. It is also computationally efficient, since obtaining the principal component is a single decomposition of the feature maps, so activation maps can be generated quickly. These properties make Eigen-CAM a practical tool for improving the transparency and explainability of SDES-YOLO and for providing qualitative evidence consistent with its robustness and generalization.
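The projection at the core of Eigen-CAM can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the visualization code behind Fig. 11: it takes one (C, H, W) activation tensor from a chosen layer (the layer used for SDES-YOLO is not specified here), centers each channel before the SVD as common open-source implementations do, and resolves the arbitrary sign of the principal component by aligning it with the mean activation, which is an assumption made for this sketch. Upsampling the H×W map to the image size and overlaying it are omitted.

```python
import numpy as np


def eigen_cam(activations: np.ndarray) -> np.ndarray:
    """Eigen-CAM-style heatmap from a (C, H, W) activation tensor.

    Projects each spatial position's channel vector onto the first
    principal component and returns an H x W map scaled to [0, 1].
    """
    c, h, w = activations.shape
    a = activations.reshape(c, h * w).T  # rows: positions, columns: channels
    a_centered = a - a.mean(axis=0, keepdims=True)
    _, _, vt = np.linalg.svd(a_centered, full_matrices=False)
    proj = a_centered @ vt[0]  # scores on the first principal component
    # The sign of an SVD component is arbitrary; flip it so the map
    # correlates positively with the mean activation at each position.
    ref = a.mean(axis=1)
    if np.dot(proj - proj.mean(), ref - ref.mean()) < 0:
        proj = -proj
    cam = np.maximum(proj, 0.0).reshape(h, w)  # keep positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Synthetic activations with a "hot" region shared by all channels.
rng = np.random.default_rng(0)
acts = rng.normal(0.0, 0.1, size=(8, 10, 10))
acts[:, 2:5, 3:6] += 5.0
heatmap = eigen_cam(acts)
row, col = np.unravel_index(heatmap.argmax(), heatmap.shape)
print(heatmap.shape, int(row), int(col))
```

Because no gradients are involved, the same function applies to any layer's feature maps, which is one reason gradient-free CAM variants are convenient for detectors whose outputs are not a single class score.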

Fig. 11 shows the heatmaps of the baseline model YOLOv8n and the improved SDES-YOLO model in different scenarios. Each row represents a scene type: the first row is a conventional scene, the second row an occluded scene, and the third row a scene with small, occluded targets. The left column displays the original images, the middle column the heatmaps generated for YOLOv8n, and the right column those for SDES-YOLO. In conventional scenes, both models identify the targets accurately, but the SDES-YOLO heatmaps are more concentrated on the targets. In occluded scenes, YOLOv8n's heatmaps are diffuse and partly confused with the background, failing to concentrate on the targets, whereas SDES-YOLO accurately pinpoints the target areas and maintains detection precision despite the complex background. Finally, in scenes with small, occluded targets, YOLOv8n misses the fallen target on the right, and its heatmap shows weak activation there. In contrast, SDES-YOLO maintains strong, focused activation on the target areas in these challenging scenarios. Overall, SDES-YOLO shows clear advantages in handling occlusion and detecting small targets, demonstrating its stability and effectiveness across scenes of varying complexity.

Conclusion

This study proposed SDES-YOLO, a high-precision and lightweight model for fall detection in complex environments. By integrating a multi-scale feature extraction pyramid (SDFP), an occlusion-aware attention mechanism (SEAM), an edge and spatial information fusion module (ES3), and the WIoU-Shape (Wise-ShapeIoU) loss function, SDES-YOLO achieves substantial performance improvements over existing models. The experimental results show that SDES-YOLO achieves an mAP@0.5 of 85.1% with 2.9M parameters and a computational load of 7.2 GFLOPs. Compared to the baseline model YOLOv8n, it improves mAP@0.5 by 3.41%, reduces parameters by 1.33%, and lowers computational complexity by 11.11%. Furthermore, it achieves a higher mAP@0.5 than recent models, including RT-DETR, YOLOv9, YOLOv10, and YOLOv11, with fewer parameters and GFLOPs, indicating its robustness, generalization capability, and adaptability in complex scenarios.

This research highlights SDES-YOLO’s applicability in various practical domains, such as elderly care, public safety, workplace monitoring, and sports injury detection, demonstrating its potential for deployment in real-world scenarios. Future work will focus on optimizing the model to further reduce computational demands and enhance processing speed. Efforts will also be directed towards incorporating video datasets to evaluate scalability and robustness, ultimately enabling deployment on mobile platforms such as drones and robots to support real-time applications.

Data availability

The dataset and code used in this study are publicly available at https://github.com/hxq211/SDES-YOLO/tree/main.

References

Florence, C. S. et al. Medical costs of fatal and nonfatal falls in older adults. Journal of the American Geriatrics Society 66, 693–698. https://doi.org/10.1111/jgs.15304 (2018).

Davis, J. C. et al. Comparing the cost-effectiveness of the otago exercise programme among older women and men: a secondary analysis of a randomized controlled trial. PLoS one 17, e0267247. https://doi.org/10.1371/journal.pone.0267247 (2022).

Hoffman, G. J., Hays, R. D., Shapiro, M. F., Wallace, S. P. & Ettner, S. L. The costs of fall-related injuries among older adults: Annual per-faller, service component, and patient out-of-pocket costs. Health services research 52, 1794–1816. https://doi.org/10.1111/1475-6773.12554 (2017).

McLean, K., Day, L. & Dalton, A. Economic evaluation of a group-based exercise program for falls prevention among the older community-dwelling population. BMC geriatrics 15, 1–11. https://doi.org/10.1186/s12877-015-0028-x (2015).

Kim, M.-S., Jung, H.-M., Lee, H.-Y. & Kim, J. Risk factors for fall-related serious injury among korean adults: A cross-sectional retrospective analysis. International journal of environmental research and public health 18, 1239. https://doi.org/10.3390/ijerph18031239 (2021).

Davis, J. C. et al. International comparison of cost of falls in older adults living in the community: a systematic review. Osteoporosis international 21, 1295–1306. https://doi.org/10.1007/s00198-009-1162-0 (2010).

Davis, J. C. et al. Comparing the cost-effectiveness of the otago exercise programme among older women and men: a secondary analysis of a randomized controlled trial. PLoS one 17, e0267247. https://doi.org/10.1371/journal.pone.0267247 (2022).

Kuo, Y.-L., Huang, K.-Y., Kao, C.-Y. & Tsai, Y.-J. Sitting posture during prolonged computer typing with and without a wearable biofeedback sensor. International journal of environmental research and public health 18, 5430. https://doi.org/10.3390/ijerph18105430 (2021).

Álvarez, M. N. et al. Assessing falls in the elderly population using g-stride foot-mounted inertial sensor. Scientific reports 13, 9208. https://doi.org/10.1038/s41598-023-36241-x (2023).

Kim, S. H. et al. Measurement and correction of stooped posture during gait using wearable sensors in patients with parkinsonism: a preliminary study. Sensors 21, 2379. https://doi.org/10.3390/s21072379 (2021).

Min, W., Cui, H., Han, Q. & Zou, F. A scene recognition and semantic analysis approach to unhealthy sitting posture detection during screen-reading. Sensors 18, 3119. https://doi.org/10.3390/s18093119 (2018).

Ranavolo, A. et al. The sensor-based biomechanical risk assessment at the base of the need for revising of standards for human ergonomics. Sensors 20, 5750. https://doi.org/10.3390/s20205750 (2020).

García Patiño, A., Khoshnam, M. & Menon, C. Wearable device to monitor back movements using an inductive textile sensor. Sensors 20, 905. https://doi.org/10.3390/s20030905 (2020).

Liu, G., Li, X., Xu, C., Ma, L. & Li, H. Fmcw radar-based human sitting posture detection. IEEE Access. https://doi.org/10.1109/ACCESS.2023.3312328 (2023).

Zhao, J., Obonyo, E. & Bilén, S. G. Wearable inertial measurement unit sensing system for musculoskeletal disorders prevention in construction. Sensors 21, 1324. https://doi.org/10.3390/s21041324 (2021).

Ranavolo, A. et al. The sensor-based biomechanical risk assessment at the base of the need for revising of standards for human ergonomics. Sensors 20, 5750. https://doi.org/10.3390/s20205750 (2020).

Girshick, R., Donahue, J., Darrell, T. & Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, 580–587, https://doi.org/10.48550/arXiv.1311.2524 (2014).

Girshick, R. Fast r-cnn. arXiv preprint arXiv:1504.08083. https://arxiv.org/pdf/1504.08083.pdf (2015).

Ren, S., He, K., Girshick, R. & Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE transactions on pattern analysis and machine intelligence 39, 1137–1149, https://doi.org/10.48550/arXiv.1506.01497 (2016).

Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, https://doi.org/10.48550/arXiv.1506.02640 (2016).

Redmon, J. Yolov3: An incremental improvement. arXiv preprint arXiv:1804.02767. https://doi.org/10.48550/arXiv.1804.02767 (2018).

Liu, W. et al. Ssd: Single shot multibox detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14, 21–37, https://doi.org/10.1007/978-3-319-46448-0_2 (Springer, 2016).

Lin, T. Focal loss for dense object detection. arXiv preprint arXiv:1708.02002. https://doi.org/10.48550/arXiv.1708.02002 (2017).

Tan, M., Pang, R. & Le, Q. V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 10781–10790, https://doi.org/10.48550/arXiv.1911.09070 (2020).

Carion, N. et al. End-to-end object detection with transformers. In European conference on computer vision, 213–229, https://doi.org/10.1007/978-3-030-58452-8_13 (Springer, 2020).

Kandukuru, T. K., Thangavel, S. K. & Jeyakumar, G. Computer vision based algorithms for detecting and classification of activities for fall recognition on real time video. In 2024 3rd International Conference on Artificial Intelligence For Internet of Things (AIIoT), 1–6, https://doi.org/10.1109/AIIoT58432.2024.10574542 (IEEE, 2024).

Yu, M., Gong, L. & Kollias, S. Computer vision based fall detection by a convolutional neural network. In Proceedings of the 19th ACM international conference on multimodal interaction, 416–420, https://doi.org/10.1145/3136755.3136802 (2017).

Almukadi, W. S. et al. Deep feature fusion with computer vision driven fall detection approach for enhanced assisted living safety. Scientific Reports 14, 21537. https://doi.org/10.1038/s41598-024-71545-6 (2024).

Hasan, M. M., Islam, M. S. & Abdullah, S. Robust pose-based human fall detection using recurrent neural network. In 2019 IEEE International Conference on Robotics, Automation, Artificial-intelligence and Internet-of-Things (RAAICON), 48–51, https://doi.org/10.1109/RAAICON48939.2019.23 (IEEE, 2019).

Tsai, T.-H., Wang, R.-Z. & Hsu, C.-W. Design of fall detection system using computer vision technique. In Proceedings of the 2019 4th international conference on robotics, control and automation, 33–37, https://doi.org/10.1145/3351180.3351191 (2019).

Espinosa, R. et al. A vision-based approach for fall detection using multiple cameras and convolutional neural networks: A case study using the up-fall detection dataset. Computers in biology and medicine 115, 103520. https://doi.org/10.1016/j.compbiomed.2019.103520 (2019).

Sharma, S., Singh, V. & Sarkar, D. Machine vision enabled fall detection system for specially abled people in limited visibility environment. In 2023 3rd Asian Conference on Innovation in Technology (ASIANCON), 1–6, https://doi.org/10.1109/ASIANCON58793.2023.10270769 (IEEE, 2023).

Zhang, L., Fang, C. & Zhu, M. A computer vision-based dual network approach for indoor fall detection. Int. J. Innov. Sci. Res. Technol 5, 939–943, https://doi.org/10.38124/IJISRT20AUG551 (2020).

Nguyen, V. A., Le, T. H. & Nguyen, T. T. Single camera based fall detection using motion and human shape features. In Proceedings of the 7th Symposium on Information and Communication Technology, 339–344, https://doi.org/10.1145/3011077.3011103 (2016).

Mobsite, S., Alaoui, N., Boulmalf, M. & Ghogho, M. A deep learning dual-stream framework for fall detection. In 2023 International Wireless Communications and Mobile Computing (IWCMC), 1226–1231, https://doi.org/10.1109/IWCMC58020.2023.10182736 (IEEE, 2023).

Bakalos, N., Katsamenis, I. & Voulodimos, A. Man overboard: Fall detection using spatiotemporal convolutional autoencoders in maritime environments. In Proceedings of the 14th PErvasive Technologies Related to Assistive Environments Conference, 420–425, https://doi.org/10.1145/3453892.3461326 (2021).

Cheng, Y., Chen, G., Zhou, Q., Liu, C. & Cai, C. Cg-net: A novel end-to-end framework for fall detection from videos. In 2023 8th International Conference on Computer and Communication Systems (ICCCS), 984–988, https://doi.org/10.1109/ICCCS57501.2023.10151063 (IEEE, 2023).

Xu, H., Shen, L., Zhang, Q. & Cao, G. Fall behavior recognition based on deep learning and image processing. International Journal of Mobile Computing and Multimedia Communications (IJMCMC) 9, 1–15. https://doi.org/10.4018/IJMCMC.2018100101 (2018).

Kokkinos, M., Doulamis, N. D. & Doulamis, A. D. Local geometrically enriched mixtures for stable and robust human tracking in detecting falls. International Journal of Advanced Robotic Systems 10, 72. https://doi.org/10.5772/54049 (2013).

Zeng, Z., Xu, K., He, G. & Feng, H. Human fall detection algorithm based on random forest and mpu6050. In Fourth International Conference on Computer Vision and Data Mining (ICCVDM 2023), vol. 13063, 529–534, https://doi.org/10.1117/12.3021463 (SPIE, 2024).

Yu, F. Multi-scale context aggregation by dilated convolutions. arXiv preprint arXiv:1511.07122. https://doi.org/10.48550/arXiv.1511.07122 (2015).

Wang, X. & Yu, S. X. Tied block convolution: Leaner and better cnns with shared thinner filters. In Proceedings of the AAAI Conference on Artificial Intelligence 35, 10227–10235. https://doi.org/10.1609/aaai.v35i11.17226 (2021).

Bahdanau, D. Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473. https://api.semanticscholar.org/CorpusID:11212020 (2014).

Yu, Z. et al. Yolo-facev2: A scale and occlusion aware face detector. Pattern Recognition 155, 110714. https://doi.org/10.1016/j.patcog.2024.110714 (2024).

Zheng, Z. et al. Distance-iou loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI conference on artificial intelligence 34, 12993–13000. https://doi.org/10.1609/aaai.v34i07.6999 (2020).

Tong, Z., Chen, Y., Xu, Z. & Yu, R. Wise-iou: bounding box regression loss with dynamic focusing mechanism. arXiv preprint arXiv:2301.10051. https://doi.org/10.48550/arXiv.2301.10051 (2023).

Zhang, H. & Zhang, S. Shape-iou: More accurate metric considering bounding box shape and scale. arXiv preprint arXiv:2312.17663. https://doi.org/10.48550/arXiv.2312.17663 (2023).

Roboflow Universe Projects. Fall detection dataset. https://universe.roboflow.com/roboflow-universe-projects/fall-detection-ca3o8 (2023). Visited on 2024-12-22.

Li, C. et al. Yolov6: A single-stage object detection framework for industrial applications. arXiv preprint arXiv:2209.02976. https://doi.org/10.48550/arXiv.2209.02976 (2022).

Wang, C.-Y., Bochkovskiy, A. & Liao, H.-Y. M. Yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 7464–7475, https://doi.org/10.48550/arXiv.2207.02696 (2023).

Zhao, Y. et al. Detrs beat yolos on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 16965–16974, https://doi.org/10.1109/CVPR52733.2024.01605 (2024).

Wang, C.-Y., Yeh, I.-H. & Liao, H.-Y. M. Yolov9: Learning what you want to learn using programmable gradient information. arXiv preprint arXiv:2402.13616. https://doi.org/10.48550/arXiv.2402.13616 (2024).

Wang, A. et al. Yolov10: Real-time end-to-end object detection. arXiv preprint arXiv:2405.14458. https://doi.org/10.48550/arXiv.2405.14458 (2024).

Muhammad, M. B. & Yeasin, M. Eigen-cam: Class activation map using principal components. In 2020 international joint conference on neural networks (IJCNN), 1–7, https://doi.org/10.1109/IJCNN48605.2020.9206626 (IEEE, 2020).

Selvaraju, R. R. et al. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, 618–626, https://doi.org/10.1007/s11263-019-01228-7 (2017).

Chattopadhay, A., Sarkar, A., Howlader, P. & Balasubramanian, V. N. Grad-cam++: Generalized gradient-based visual explanations for deep convolutional networks. In 2018 IEEE winter conference on applications of computer vision (WACV), 839–847, https://doi.org/10.1109/WACV.2018.00097 (IEEE, 2018).

Acknowledgements

This work was partly supported by the National Science Foundation of China (62272311,62102262).

Author information

Contributions

X.H. and X.L. conceptualized the study. X.H. developed the methodology. Z.J. wrote the software. X.H. drafted the original manuscript. X.L. and L.Y. reviewed and edited the manuscript. X.L. and L.Y. provided supervision. H.J. and W.W. curated the data. R.C. and H.B. conducted the investigation. M.Z. provided resources. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Huang, X., Li, X., Yuan, L. et al. SDES-YOLO: A high-precision and lightweight model for fall detection in complex environments. Sci Rep 15, 2026 (2025). https://doi.org/10.1038/s41598-025-86593-9

DOI: https://doi.org/10.1038/s41598-025-86593-9
