Abstract

Rock mass mechanical parameters are essential for the design and construction of underground engineering projects, but parameters obtained through traditional methods are often unsuitable for direct use in numerical simulations. The back analysis method, based on displacement monitoring, has emerged as a new approach for determining rock mass parameters. In this study, an experimental scheme was developed using orthogonal and uniform experimental designs to obtain training samples for the neural network. A GA-PSO-BP neural network model (GPSO-BP) was proposed, combining the fast convergence of the particle swarm optimization (PSO) algorithm and the global optimization capability of the genetic algorithm (GA). This model was applied to invert the rock mass parameters E, μ, φ, and c for deep-buried tunnels. The results indicate that the GPSO-BP neural network model outperforms the BP, GA-BP, and PSO-BP neural network models in terms of faster convergence and higher accuracy. It also shows superior performance in handling small datasets and complex problems, achieving better data fitting and the highest score in rank analysis. The DDR curve further confirms the GPSO-BP model’s computational efficiency. When the rock mass parameters derived from this model are applied to forward numerical simulations, the average error across four monitoring projects is only 4.34%, outperforming the other three models. Thus, this study provides an effective method for improving the accuracy of rock mass parameter inversion in underground engineering.

Similar content being viewed by others

Introduction

The mechanical parameters of rock masses are crucial for the design and construction of underground engineering, which directly impacts both the safety and cost-effectiveness of the project1,2,3. Typical methods for determining these parameters include in-situ testing, geophysical exploration, laboratory testing, and so on4,5. However, the inherent discontinuity, heterogeneity, and anisotropy of rock masses introduce a degree of subjectivity and conservatism in parameter estimation6,7,8,9. Furthermore, parameters obtained through traditional methods are frequently unsuitable for direct use in numerical simulations; instead, they must be converted using empirical formulas before being applied in numerical calculations10,11,12,13.

In response to these challenges, a growing number of scholars are turning to the extensive monitoring data collected on-site to conduct back analysis of rock mechanics parameters14,15,16. The three main aspects of this approach are displacement back analysis, stress back analysis, and load back analysis17,18. Among these, the displacement back analysis method is frequently used as the recommended technique for back analysis of rock parameters because it benefits from more intuitive, easily obtained, and large quantities of displacement data. This method can be traced back to the 1970s when Kavanagh et al.19 introduced the theory of displacement back analysis and used finite element analysis and measured displacement values to conduct back analysis of the elastic modulus of the rock mass. Following its creation, displacement back analysis incorporated the BP neural network, which soon gained popularity as a technique for this use case20.

The iterative process in displacement back analysis is mostly solved by an optimization algorithm21. With advancements in intelligent technologies, methods such as genetic algorithms (GA)22,23,24,25, particle swarm optimization (PSO)26,27,28,29, simulated annealing (SA)30, etc. have been integrated into back analysis, significantly enhancing the speed and accuracy of these processes. Feng et al. incorporated GA into artificial neural networks, establishing an evolutionary neural network method, which they successfully validated through a typical case31. Deng et al. replaced the time-consuming finite element method with a BP neural network and used GA as an optimization tool to improve global search capabilities, successfully applying this approach to the back analysis of the slope rock mass at the permanent ship lock of the Three Gorges Project32. Zhuang et al. improved GA by incorporating absolute percentage error as the target parameter while considering weights. They proposed an IAGA-BP inversion analysis method that effectively addressed the problem of displacement weight allocation between the dam foundation and the dam shoulder. This method was successfully applied to the inversion of the dam body and foundation parameters at the Yangfanggou Hydropower Station33. Hashash et al. compared the advantages of GA and SelfSim algorithm in inverting soil material properties, indicating that GA optimization is more constrained by the soil constitutive model, while SelfSim optimization is less constrained by the soil constitutive model. This leads to GA often overestimating surface settlement, while SelfSim calculates more accurately23. Moreira et al. evaluated the performance of evolutionary strategies in the inversion of mechanical parameters of underground structures, finding that GA demonstrates beneficial robustness and efficiency, thus alleviating some limitations of classical algorithms34. Rechea et al. compared the performance of two minimization algorithms, gradient descent, and GA, in the analysis of deep excavation displacement data. The results demonstrated that both methods are capable of producing smooth and unique solutions35. Ma et al. proposed a discrete element mechanics parameter back analysis method based on PSO, validated through typical landslide cases36. Li et al. optimized PSO and combined it with support vector machines (SVM) for rock mechanics parameter inversion, achieving an error margin of just 0.91% compared to the assumed actual parameters27. Miranda et al. compared traditional optimization algorithms with evolutionary optimization techniques in the back analysis of mechanical parameters for the hydroelectric powerhouse cavern of Venda Nova II built in Portugal, showing that both methods can effectively obtain optimal parameter sets and offer insights into the characteristics of the rock mass37. Wang et al. combined GA with SA to form a modified GSA algorithm, which improved the ability and solution quality of optimization problems. The model has been applied in the rock mechanics parameter back analysis of the underground powerhouse on the right bank of Wudongde Hydropower Station and has achieved good results30.

With the development of machine learning and algorithms, more and more novel methods are being proposed and applied in the inversion of surrounding rock parameters. Gao et al. introduced a novel neural network based on the black hole algorithm as an alternative to traditional neural networks. Their comparison revealed that the new network is an effective approach for determining the mechanical parameters of surrounding rock masses in underground tunnels17. Tang et al. developed a non-linear optimization technique (NOT) that demonstrated the capability to accurately and efficiently predict deformation caused by subsequent excavation in the provided cases38. He et al. enhanced the non-dominated sorting genetic algorithm II (NSGA-II) by integrating it with the GA for application in slope stability assessment. The improved model exhibited a significant Pareto front shift and yielded smaller errors compared to the original NSGA-II model39. Huang et al. proposed a deep learning method called Long short-term memory (LSTM) to invert slope stability with five control factors. The results showed that LSTM overcomes the problem of traditional machine learning being difficult to extract global features40.

Based on the existing literature, this study establishes a GA-PSO-BP neural network model (GPSO-BP) for mechanical parameters by integrating GA, PSO, and BP neural networks. First, the orthogonal and uniform experimental designs are employed to design experimental schemes that simulate excavation to construct learning samples. Thereafter, the GA and PSO are integrated into the BP neural network to optimize the weights and thresholds. The surrounding rock parameters are passed through the model inverted using displacement monitoring data collected on-site and then fed into numerical simulation software for forward analysis. Finally, a comparison between the simulation results and the data from the on-site monitoring shows that the suggested approach produces good results.

Construction of back-analysis method

BP neural network

The BP neural network, a type of feedforward neural network, operates through a process of information forward propagation and error backpropagation13,33. The basic principle involves calculating the deviation between the predicted output and the actual value during forward propagation, followed by backpropagating the error using gradient descent to adjust the network’s weights and thresholds. Through repeated training, the network’s error is minimized until it meets the desired criteria. Studies have shown that a three-layer BP neural network can approximate nonlinear functions with high accuracy, which is why the BP neural network in this study adopts a three-layer structure. The diagram of the three-layer BP neural network structure is shown in Fig. 1.

Where, \({x}_{i}\) is the input layer node, \({H}_{j}\) is the hidden layer node, \({O}_{k}\) is the output layer node, \({\omega }_{ij}\) is the weight from the input layer to the hidden layer, \({\omega }_{jk}\) is the weight from the hidden layer to the output layer, \({a}_{j}\) is the threshold of the hidden layer, \({b}_{k}\) is the threshold of the output layer, \({Y}_{k}\) is the expected output value, \({e}_{k}\) is the error between the expected output and the actual output of the neural network, \(m\) is the number of input layer nodes, \(l\) is the number of hidden layer nodes, \(n\) is the number of output layer nodes, \({f}_{1}\) is the transfer function from the input layer to the hidden layer, \({f}_{2}\) is the transfer function from the hidden layer to the output layer, where \(i=\text{1,2},\dots ,m;j=\text{1,2},\dots ,l;k=\text{1,2},\dots ,n\).

The evolution steps of a BP neural network are as follows: (1) Determine the structure of the BP neural network. (2) Input the learning samples, randomly generate the weights and thresholds, and calculate the output. (3) Calculate the prediction error. (4) Backpropagate the error to adjust the weights and thresholds of the neural network. (5) Repeat steps (2) to (4), continuously refining the mapping relationship until the error reaches the predetermined target or other termination conditions are met.

However, the BP neural network also has some limitations: (1) It takes a lot of sample data to achieve high training accuracy; (2) Under some initial conditions, it is prone to get trapped in local optima; (3) The rate of convergence is comparatively slow.

Improvement methods

To solve the problem of the large sample demand in BP neural networks, scholars frequently employ orthogonal experimental design to construct a sample database, from which a subset of data is randomly selected for training and the remaining part for testing13,41. However, this randomness leads to variability in the samples used by the BP neural network in each iteration, making it challenging to ensure reproducibility. To overcome this challenge, this study introduces uniform experimental design alongside orthogonal experimental design. In this approach, orthogonal experimental design is used to generate the learning samples for the BP neural network, while uniform experimental design provides the testing samples. This method significantly reduces the number of required experiments while maintaining accuracy and comparability by choosing representative test points instead of in-depth experiments.

PSO can reduce the learning time required for BP neural network training and enhance accuracy. However, standard PSO is prone to issues such as getting stuck in local optima and premature convergence. On the other hand, GA is a random search algorithm that explores multiple feasible solutions within the solution space of a problem through selection, crossover, and mutation operations, aiming to find the global optimal solution. By combining GA with PSO, the weights and thresholds of the BP neural network can be optimized, resulting in a prediction model that benefits from low computational complexity, fast convergence speed, and robust global convergence performance.

Orthogonal uniform design GA-PSO-BP neural network model

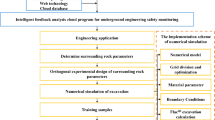

The orthogonal uniform design GA-PSO-BP neural network model (GPSO-BP) mainly includes orthogonal experimental design, uniform experimental design, GA, PSO, and BP neural network. The flowchart is shown in Fig. 2, and the specific implementation steps are as follows:

-

(1)

Construct learning samples. The range of parameter values needs to be determined first. Orthogonal experimental design and uniform experimental design are used to construct simulation schemes. These schemes are calculated using numerical software to generate displacement values, which serve as learning samples for the neural networks.

-

(2)

Determine the structure of the BP neural network. The weights and thresholds of the neural network are the parameters to be optimized, and they are used to generate the initial population \(U\).

-

(3)

Introduce PSO to optimize the population \(U\). The mean square error (MES) between the neural network’s output and the on-site measured value is used as the fitness evaluation index. A smaller fitness value indicates a smaller error and better adaptability. After each update of the population, the fitness values are sorted in ascending order and divided into three groups: \({U}_{1}\), \({U}_{2}\), \({U}_{3}\).

$$Fitness=\frac{1}{n}\sum_{i=1}^{n}{({t}_{i}-{a}_{i})}^{2}$$(1)where, \(Fitness\) is the fitness function, \({t}_{i}\) is the actual output value of the i-th training sample, \({a}_{i}\) is the expected value of the i-th training sample, \(n\) is the number of training sets.

-

(1)(1)(1)(4)

Introduce GA to enhance the global search capability. The standard GA uses the roulette wheel method, which has a high degree of randomness and may eliminate better individuals. Therefore, for \({U}_{1}\) with the best adaptability, the replication is directly performed. For \({U}_{2}\) with moderate fitness, crossover operation is performed with crossover probability \(pc\), which includes velocity crossover and position crossover, as shown in the following equation. For \({U}_{3}\) with the worst adaptability, mutation operation is performed with mutation probability \(pm\). The fitness values of the offspring particles after crossover and mutation operations will be compared with those of parent particles, retaining the particles with smaller fitness values for the next iteration50.

$$\left\{\begin{array}{c}{v}_{i}\left(t+1\right)={\uptheta }_{1}{v}_{i}\left(t\right)+(1-{\uptheta }_{1}){v}_{j}(t)\\ {v}_{j}\left(t+1\right)=(1-{\uptheta }_{1}){v}_{i}\left(t\right)+{\uptheta }_{1}{v}_{j}(t)\end{array}\right.$$(2)$$\left\{\begin{array}{c}{x}_{i}\left(t+1\right)={\uptheta }_{2}{x}_{i}\left(t\right)+(1-{\uptheta }_{2}){x}_{j}(t)\\ {x}_{j}\left(t+1\right)=(1-{\uptheta }_{2}){x}_{i}\left(t\right)+{\uptheta }_{2}{x}_{j}(t)\end{array}\right.$$(3)where, \({\theta }_{1}\) and \({\theta }_{2}\) are random values between [0, 1].

-

(5)

Update individual extremum and global extremum. The fitness of the new particle will be compared with those of individual and global extremum, which will preserve better fitness.

-

(6)

Repeat steps (3) to (5) until the accuracy requirements are met or other termination conditions are satisfied.

-

(7)

Train and test the GPSO-BP neural network. The optimal weights and thresholds are fed into the BP neural network for training and testing. After repeated iterations, the desired neural network is achieved.

-

(8)

Evaluate the inversion effect. The displacement measurement data are input into the model to obtain the optimal inversion parameters. Forward calculations are then performed using these parameters to obtain displacement values, which are compared with the measured data.

Numerical example

To verify the superiority and effectiveness of the GPSO-BP neural network, a widely used tunnel excavation model was used for simulation. The circular tunnel has a radius of 3 m, and the analysis area extends to 15 m, which is five times the tunnel radius. Due to symmetry, only 1/4 of the cross-section was analyzed. The model grid and measure points are shown in Fig. 3. The initial ground stress was set as \({\sigma }_{x}={\sigma }_{y}=10.0 \text{MPa}\), and the mechanical parameters of the surrounding rock were \(E=1.9 \text{GPa}\), \(\mu =0.32\), \(\varphi =27^\circ\), and \(c=1.1 \text{MPa}\). The forward analysis was calculated in Flac3D software, and the displacement at the measure points is shown in Table 1.

The range of surrounding rock parameters in the back analysis is as follows: \(1.5 \text{GPa}<E<2.5 \text{GPa}\), \(0.2<\mu <0.4\), \(27^\circ <\varphi <40^\circ\), and \(0.7 \text{MPa}<c<1.7 \text{MPa}\). These parameters are divided into five equal parts, and experimental schemes are constructed through orthogonal and uniform experimental design. The displacement values are then calculated using Flac3D to generate training and testing samples for the neural network. The displacement monitoring values at the measure points are used as input data, while the surrounding rock parameters as output data for back analysis. According to Formula (4), the number of hidden layer nodes is determined to be 12 through the trial-and-error method. The BP neural network structure is defined as 9-12-4. The learning factors of PSO are set to \(c1=c2=1.49\), the population size is 60, and the number of iterations is 400. The crossover probability for GA is set to 0.4, and the mutation probability is set to 0.1. All other parameters are kept at their default values.

where, \(l\) is the number of hidden layer nodes, \(m\) is the number of input layer nodes, \(n\) is the number of output layer nodes, \(a\) is a constant between 1 and 10.

A comparison was made between BP, GA-BP, PSO-BP, and GPSO-BP neural networks. The relative error calculation results for the rock mechanics parameters are displayed in Table 2. According to the table, the GPSO-BP back analysis produces smaller errors compared to BP, GA-BP and PSO-BP neural networks, with an average relative error of only 3.32% across the four parameters, significantly lower than 11.14% for BP, 5.17% for GA-BP and 6.03% for PSO-BP neural networks. The fitness curves for the GA-BP, PSO-BP and GPSO-BP neural networks are illustrated in Fig. 4. It can be seen that as the number of iterations increases, the fitness values of all GA-BP, PSO-BP and GPSO-BP decrease. Moreover, the fitness of the GPSO-BP converges faster, with a smaller final fitness value, indicating that the GPSO-BP neural network proposed in this study offers faster convergence and higher accuracy in inverse analysis.

Application in Zhaotong tunnel project

The GPSO-BP neural network is applied to the back analysis of surrounding rock parameters in the Zhaotong Tunnel, offering predictions and guidance for subsequent construction.

Engineering background

The Zhaotong Tunnel, a key project connecting the Chongqing and Kunming high-speed railways, has a total length of 16.26 km. The DK377 + 300 ~ 350 section of the main tunnel belongs to the gas outburst zone, where conventional geophysical detection equipment cannot be used. And the surrounding rock mainly composed of shale with well-developed joints and fissures, presents challenges for sampling. Consequently, the mechanical parameters of this section’s surrounding rock must be determined using a displacement back analysis method. The regional location of the Zhaotong Tunnel and the palm surface are shown in Fig. 5.

The regional location of the Zhaotong Tunnel and the palm surface51.

On-site monitoring plan and data

The main tunnel of this section is constructed using the three-step method. After completing the excavation and initial support of the tunnel face, reflective monitoring points are installed at the arch crown and 80 cm above each of the three steps. A total station is then used to measure the settlement of the surrounding rock at the arch crown and the convergence at both sides after the initial support. The layout of the tunnel construction sections and monitoring points is shown in Fig. 6. The on-site monitoring of section DK377 + 330 is shown in Fig. 7.

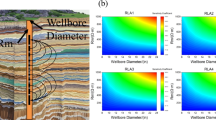

Tunnel excavation alters the initial stress field of the surrounding rock, leading to displacement that can be divided into three parts, as shown in Fig. 8. Before excavation reaches the monitoring point, an initial deformation, denoted as S0, has already occurred. After the excavation reaches the monitoring points but before the installation of monitoring instruments, an additional instantaneous deformation, referred to as S1, takes place. Once the monitoring points are installed and until the displacement eventually stabilizes, the measured deformation corresponds to the actual deformation of the tunnel observed on-site, identified as the S2 deformation segment. The relative displacement simulated by Flac3D is recorded starting from the moment of excavation, referred to as S1 + S2. Since the installation of on-site displacement monitoring points typically occurs after the completion of the initial support—usually lagging behind the tunnel face by half a day to a full day—determining S1 is difficult to determine. Therefore, this study omits the S1 deformation segment to maintain a consistent relationship between the relative deformation obtained from Flac3D simulations and the on-site measured displacement.

Establishment and calculation of numerical models

The horizontal diameter of the Zhaotong Tunnel’s main tunnel is 15.04 m, and the vertical diameter is 13.23 m. The numerical calculation model of the tunnel typically spans 3–5 times the tunnel’s span. Considering the presence of a parallel guide tunnel located 35 m to the left and 70 m ahead of the main tunnel, the model’s analysis scope is appropriately expanded. The model dimensions are 200 m in the horizontal direction, 160 m in the vertical direction, and 50 m in the axial direction. It is divided into 299,013 nodes and 289,600 elements, as shown in Fig. 9. The average burial depth of this section is 900 m. Based on on-site hydraulic fracturing stress testing and stress inversion analysis of the Zhaotong Tunnel, the maximum horizontal principal stress \({\sigma }_{H}\) is determined to be 35.9 MPa, the minimum horizontal principal stress \({\sigma }_{h}\) is 20.3 MPa, and the vertical principal stress \({\sigma }_{v}\) is 28.6 MPa. The displacement of the bottom surface of the model boundary is fixed in the x, y, and z directions, and the normal displacement is constrained around it. The top surface is a free surface with no displacement constraints.

The tunnel excavation employs a three-step excavation method, with excavation progressing in 2 m increments per cycle. The middle interface of the model is chosen for displacement monitoring to minimize boundary effects in the simulation. Cable elements are used for the anchoring, while shell elements are used for initial support. The material constitutive model is based on the Mohr–Coulomb criterion. The schematic diagram of the excavation and support is shown in Fig. 10.

The surrounding rock in this section is situated at the boundary between Grade IV and Grade V rock. Based on the "Code for Design of Railway Tunnels" (TB 1003-2016), as well as geological conditions, geological exploration data, and design data for the Zhaotong Tunnel, the range of mechanical parameters for the surrounding rock has been established. To simplify the simulation, the strengthening effects of steel mesh and steel arches on the initial support are incorporated into the initial support concrete42. The calculation formula is as follows.

where, \(E{\prime}\) is the elastic modulus of the converted initial support concrete, \({E}_{c}\) is the elastic modulus of the original initial support concrete, \({E}_{s}\) is the elastic modulus of the steel, \({A}_{s}\) is the cross-sectional area of the steel arch, and \({A}_{c}\) is the cross-sectional area of the concrete.

After calculation, the mechanical parameters of the surrounding rock, initial support, and bolt are presented in Table 3.

Learning sample construction

To develop a mature GPSO-BP neural network, it is essential to train and test the network with a certain number of learning samples. Based on the defined range of surrounding rock parameters, 5 levels are designed for each parameter. A total of 25 mechanical parameter schemes are constructed as training samples for the neural network using the L25 (54) orthogonal experimental design table. Additionally, 5 mechanical parameter schemes are created as test samples for the neural network using the U5 (54) uniform design table. These 30 sets of mechanical parameter schemes are imported into the Flac3D model for excavation calculations, generating the apparent displacement values of the tunnel for various mechanical parameters. The learning samples of neural network are presented in Table 4.

Back analysis of surrounding rock parameters

The performance of the regression prediction in the algorithm is significantly impacted by the number of nodes in the hidden layer. According to Formula (1), the number of hidden layer nodes is determined to be 10. The transfer function from the input layer to the hidden layer is set to the logsig function, while the transfer function from the hidden layer to the output layer is set to the purelin function. The grid training function is set to the improved L–M algorithm. The parameters of BP, PSO, and GA were selected through extensive exploratory experiments and grid search methods in the early stage. The learning rate (\(\alpha\)) for the neural network is set to 0.01, with a network error threshold of 0.0001. The learning factors for PSO parameters are set to \(c1=c2=1.49\), with a population size of 60 and 400 iterations. For the GA parameters, the crossover probability is set to 0.6, and the mutation probability is set to 0.2. All other parameters are maintained at their default values. The apparent displacement values are used as input samples for the neural network, while the rock mechanics parameters are used as output samples. The BP, PSO-BP, GA-BP and GPSO-BP neural networks are trained separately.

Performance evaluation of different neural network models

The fitness evolution curves of the GA-BP, PSO-BP, and GPSO-BP neural networks are shown in Fig. 11. It can be observed that the fitness curves are consistent with the numerical examples, with the GPSO-BP model converging the fastest and achieving the highest accuracy. Additionally, after 200 iterations, the fitness curves for all three models plateau, indicating no further reduction in error. This confirms that 400 iterations are sufficient for effective optimization.

To assess the performance of different models, seven widely used performance indices, namely Coefficient of determination (R2), Variance account for (VAF), Willmott’s Index of agreement (WI), Mean absolute error (MAE), Mean bias error (MBE), Root mean square error (RMSE), Mean absolute percentage error (MAPE) are calculated. Their mathematical expressions are provided in Eqs. (6) to (12). MAE, MBE, and RMSE are based on the error category, while R2, VAF, and WI are based on the accuracy category. The correlation between the predicted and expected values for the test set was computed and presented in Table 5 to evaluate the models. However, the overall assessment is based on Rank Analysis43,44. In this system, each model is assigned a score based on its accuracy ranking for each output parameter45,46. The model with the highest accuracy receives 4 points, while the least accurate receives 1 point. The overall performance of each model is determined by summing the scores for all parameters in the test set. The final ranking of each model is then calculated based on its total score.

where, \({y}_{i}\) and \({\widehat{y}}_{i}\) represent the \(i\)-th expected value and predicted value, respectively; \(n\) denotes the number of samples in the dataset.

As shown in the Table 5, the GPSO-BP model achieves the highest rank with a total score of 98 points, followed by the GA-BP and PSO-BP models. The BP model performs the worst, with only 45 points. When analyzing the scores for individual output parameters, GPSO-BP remains the best-performing model for the outputs \(E\), \(\mu\), and \(c\). However, it is noteworthy that for the \(\varphi\) output, the GA-BP model scores 27 points, outperforming GPSO-BP’s score of 20 points. Considering the overall performance of the models, GPSO-BP still demonstrates the best results.

In terms of the coefficient of determination (R2), all four models show good fitting performance for output parameters \(E\) and \(\varphi\), with values greater than 0.82. However, the R2 value for output \(c\) in the BP model is only 0.1619, which could be attributed to insufficient training samples. While the GA-BP and PSO-BP models improve the R2 for \(c\), it still remains below 0.5. In contrast, the GPSO-BP model achieves an R2 of 0.66 for \(c\), indicating that GPSO-BP has a better ability to handle complex models with small datasets compared to the other models. The value of VAF also well illustrates this viewpoint. Overall, the GPSO-BP model delivers the best fitting performance, followed by the GA-BP and PSO-BP networks, while the BP neural network exhibits the poorest fit.

To provide a more intuitive representation of the performance metrics, an accuracy matrix for the test set was constructed44, as shown in Fig. 12. Each value in the figure represents the accuracy achieved relative to its ideal value, expressed as a percentage. For example, the ideal values for R2 and MAE are 1 and 0, respectively. For the BP model, the R2 and MAE values for \(E\) output are 0.83 and 0.36, respectively. This can be interpreted as the model achieving 83% (\(0.83/1\times 100\%=83\%\)) accuracy for R2 and 64% (\((1-0.36)\times 100\text{\%}=64\text{\%}\)) accuracy for MAE. The accuracy for the other metrics follows Kardani’s definition44. It should be noted that for the \(\varphi\) output, the MAE and RMSE values for the four neural network models exceed 1, therefore, normalization should be performed before calculation. As shown in Fig. 12, except for a few instances where other models perform better, the GPSO-BP model consistently achieves the highest accuracy.

The comparison between the predicted and expected values for the four output parameters is shown in Fig. 13. It can be observed that among the four outputs, the GPSO-BP model deviates the least from the expected values. The mean absolute percentage errors (MAPE) of the GPSO-BP neural network for predicting the four rock parameters are 8.10%, 4.96%, 5.15%, and 28.55%, respectively, outperforming the other three models. For the first three parameters, the MAPE is below 10%, with the exception of the cohesion parameter. All three neural networks struggle to fit the cohesion parameter, primarily due to its expected range of 0.05 to 0.5 MPa. The minimum value is as low as 0.05, meaning that even small absolute errors lead to large relative errors. For example, if the predicted value is 0.1, the relative error for an expected value of 0.05 is 100%, significantly raising the overall MAPE. Despite this, the GPSO-BP model still performs the best, with a MAPE of 28.55%, compared to 66.67% for the BP network, 49.42% for the PSO-BP network, and 38.00% for the GA-BP network.

This study also uses the Developed Discrepancy Ratio (DDR), a metric proposed by Noori et al. in 2010. The formula for calculating DDR is provided in formula (13). While the standard error offers an average measure of error, it does not provide information about the error distribution. Therefore, DDR is used to assess the efficiency of the model during its development phase47,48,49. Figure 14 presents the DDR results for different models across the four output parameters. The deviation of the GPSO-BP model’s DDR curve is the smallest for all four outputs, staying closest to the zero line. This indicates that, based on the DDR metric, the GPSO-BP model demonstrates the highest efficiency.

Positive analysis and comparison based on inversion results

The measured displacement values on site were input into the trained neural network, and the back analysis results of the surrounding rock parameters are shown in Table 6.

The surrounding rock parameters obtained from different neural networks were input into Flac3D for forward calculation, yielding values for roof settlement and horizontal convergence. These results were compared with the on-site measured values, as shown in Fig. 15. The displacement predictions obtained from the forward calculations using the inversion parameters of the GPSO-BP neural network model exhibited the smallest errors when compared to the measured values. The relative errors for roof settlement and the convergence of the three steps were 1.11%, − 6.88%, − 0.41%, and − 8.97%, respectively, which are significantly better than those produced by BP, GA-BP and PSO-BP neural networks.

As shown in Fig. 8, the deformation S1 was disregarded. The displacement at the moment of exposing the monitoring points on the upper step is 0 and gradually increases as the excavation processes. The displacement change curve obtained from the forward calculation using the GPSO-BP neural network is compared with the on-site measured values, as shown in Fig. 16. The trends of the four curves are generally consistent. However, some discrepancies exist in the convergence curves of the upper and middle steps. These differences may be due to various factors affecting the excavation, where the upper, middle, and lower steps might not have advanced simultaneously as assumed. In contrast, the Flac3D simulations were conducted with all three steps advancing simultaneously, leading to some variation in the middle portion of the curve. Nonetheless, the final deformation from the numerical simulation closely matches the field measurements, indicating that the parameter identification and back analysis results of the proposed GPSO-BP neural network inversion model are reliable.

Conclusions and discussion

Conclusions

This study proposes a displacement back analysis model based on the GPSO-BP neural network, which integrates the advantages of orthogonal experimental design, uniform experimental design, GA, PSO, and BP neural network. This model has been successfully applied to the inversion of surrounding rock parameters in the Zhaotong Tunnel.

-

(1)

To address the issues of slow convergence and susceptibility to local optima in the original BP neural network, the selection, crossover, and mutation operations of GA were improved and embedded into each iteration of PSO, resulting in the proposed GPSO-BP neural network model. This model enhances global search capabilities while preserving the best particles. The fitness curves indicate that the GPSO-BP model achieves faster convergence and higher accuracy compared to PSO-BP and GA-BP.

-

(2)

Traditional BP, GA-BP, and PSO-BP neural network models perform well with small datasets and simple models (numerical examples), but struggle with more complex models such as the Zhaotong Tunnel. In the back-analysis of rock parameters for the Zhaotong Tunnel, the R2 for the parameter \(c\) using the BP model was only 0.1619. For the GA-BP and PSO-BP models, the R2 values were also less than 0.5, whereas the GPSO-BP achieved an R2 of 0.66. Additionally, the GPSO-BP model achieved R2 values above 0.8 for the other three output parameters, demonstrating superior performance when dealing with small datasets and complex models. This suggests that the GPSO-BP model is more suitable for application in complex rock engineering scenarios.

-

(3)

A rank analysis of the test sets for the BP, PSO-BP, GA-BP, and GPSO-BP models indicates that, although GA-BP performed best for the parameter \(\varphi\), GPSO-BP achieved the highest overall score when considering total performance, and was therefore identified as the best model among the four. The back-analysis method provided in this study is shown to be reliable.

-

(4)

The GPSO-BP model was successfully applied to the displacement back analysis of the Zhaotong Tunnel case. The rock parameters obtained from this model were used in forward analysis through numerical simulation, resulting in an average relative error of only 4.34% across four monitoring points. The predicted trends closely aligned with the observed displacement values, outperforming the BP model (17.10%), PSO-BP (15.92%), and GA-BP (14.78%). Since the Zhaotong Tunnel is a typical deep-buried, large-section, high-stress tunnel, it is indicated that the GPSO-BP model provides valuable insights for similar underground engineering projects.

Discussion

Training a neural network requires a large dataset, but generating such data through numerical simulation software is an extremely time-consuming and labor-intensive process. This is why only 30 samples were used in this study. Although the GPSO-BP model shows improvements over the other three methods in handling small datasets and complex models, its generalization ability remains limited. In future work, it is planned to replace the time-consuming numerical simulation process with a BP neural network, which will generate additional data based on the existing dataset. This will expand the training samples and improve the model’s generalization ability.

Additionally, a grid search method was used to determine the parameters for BP, PSO, and GA, which introduced a level of empiricism. This led to high computational costs and long training times to obtain optimal parameters, and the selected parameters may not represent the best combination. Future research will focus on conducting sensitivity analysis of hyperparameters and implementing algorithms that enable adaptive adjustment of neural network hyperparameters to enhance model stability.

Statement attesting

For the images and information presented in this manuscript, informed consent was obtained from all study participants and/or their legal guardians for the publication of identifying information/images in an online open-access publication. All patient names and other personal identifiers have been removed, in compliance with ethical standards.

Data availability

All data used in this study is available in the manuscript.

References

Sahu, A., Sinha, S. & Banka, H. Fuzzy inference system using genetic algorithm and pattern search for predicting roof fall rate in underground coal mines. Int. J. Coal Sci. Technol. 11, 1 (2024).

Makowski, P., Niedbalski, Z. & Balarabe, T. A statistical analysis of geomechanical data and its effect on rock mass numerical modeling: a case study. Int. J. Coal Sci. Technol. 8(2), 312–323 (2020).

Song, Z. Y., Amann, F., Dang, W. G. & Yang, Z. Mechanical responses and fracturing behaviors of coal under complex normal and shear stresses, Part II: Numerical study using DEM. Int. J. Coal Sci. Technol. 11, 57 (2024).

Cai, M. et al. Back-analysis of rock mass strength parameters using AE monitoring data. Int. J. Rock Mech. Min. Sci. 44(4), 538–549 (2007).

Deng, D., Wang, H., Xie, L., Wang, Z. & Song, J. Experimental study on the interrelation of multiple mechanical parameters in overburden rock caving process during coal mining in longwall panel. Int. J. Coal Sci. Technol. 10, 47 (2023).

Zhao, Y. et al. Influence analysis of complex crack geometric parameters on mechanical properties of soft rock. Int. J. Coal Sci. Technol. 10, 78 (2023).

Han, Z., Liu, K., Ma, J. & Li, D. Numerical simulation on the dynamic mechanical response and fracture mechanism of rocks containing a single hole. Int. J. Coal Sci. Technol. 11, 64 (2024).

Zhang, Y. et al. Research on coal-rock identification method and data augmentation algorithm of comprehensive working face based on FL-Segformer. Int. J. Coal Sci. Technol. 11, 48 (2024).

Ma, D. et al. Water–rock two-phase flow model for water inrush and instability of fault rocks during mine tunnelling. Int. J. Coal Sci. Technol. 10, 77 (2023).

Sun, G., Zheng, H., Huang, Y. & Li, C. Parameter inversion and deformation mechanism of Sanmendong landslide in the Three Gorges Reservoir region under the combined effect of reservoir water level fluctuation and rainfall. Eng. Geol. 205, 133–145 (2016).

Khawaja, L. et al. Development of machine learning models for forecasting the strength of resilient modulus of subgrade soil: genetic and artificial neural network approaches. Sci. Rep. 14(1), 18244 (2024).

Siddig, O., Ibrahim, A. F. & Elkatatny, S. Estimation of rocks’ failure parameters from drilling data by using artificial neural network. Sci. Rep. 13(1), 3146 (2023).

Sun, Y., Jiang, Q., Yin, T. & Zhou, C. A back-analysis method using an intelligent multi-objective optimization for predicting slope deformation induced by excavation. Eng. Geol. 239, 214–228 (2018).

Cividini, A. & Rossi, A. Z. The consolidation problem treated by a consistent (static) finite element approach. Int. J. Numer. Anal. Methods Geomech. 7(4), 435–455 (1983).

Gioda, G. & Sakurai, S. Back analysis procedure for the interpretation of field measurements in geomechanics. Int. J. Numer. Anal. Methods Geomech. 11(6), 555–583 (1987).

Sakurai, S. & Takeuchi, K. Back analysis of measured displacements of tunnels. Rock Mech. Rock Eng. 16(3), 173–180 (1983).

Gao, W., Chen, D., Dai, S. & Wang, X. Back analysis for mechanical parameters of surrounding rock for underground roadways based on new neural network. Eng. Comput. 34, 25–36 (2018).

Kaiser, P. K., Zou, D. & Lang, P. A. Stress determination by back-analysis of excavation-induced stress changes—a case study. Rock Mech. Rock Eng. 23(3), 185–200 (1990).

Kavanagh, K. T. & Clough, R. W. Finite element applications in the characterization of elastic solids. Int. J. Solids Struct. 7(1), 11–23 (1971).

Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by back propagating errors. Nature 323(6088), 533–536 (1986).

Zhao, H., Chen, B. & Li, S. Determination of geomaterial mechanical parameters based on back analysis and reduced-order model. Comput. Geotech. 132(4), 104013 (2021).

Finno, R. J. & Calvello, M. Supported excavations: observational method and inverse modeling. J. Geotech. Geoenviron. Eng. 131(7), 826–836 (2005).

Hashash, Y. M., Levasseur, S., Osouli, A., Finno, R. & Malecot, Y. Comparison of two inverse analysis techniques for learning deep excavation response. Comput. Geotech. 37(3), 323–333 (2010).

Hashash, Y. M. A., Marulanda, C., Ghaboussi, J. & Jung, S. Novel approach to integration of numerical modeling and field observations for deep excavations. J. Geotech. Geoenviron. Eng. 132(8), 1019–1031 (2006).

Samarajiva, P., Macari, E. J. & Wathugala, W. Genetic algorithms for the calibration of constitutive models for soils. Int. J. Geomech. 5(3), 206–217 (2005).

Kashani, A. R., Chiong, R., Mirjalili, S. & Gandomi, A. H. Particle Swarm optimization variants for solving geotechnical problems: review and comparative analysis. Arch. Comput. Methods. Eng. 28, 1871–1927 (2021).

Li, H., Chen, W., Tan, X. & Tan, X. Back analysis of geomechanical parameters for rock mass under complex geological conditions using a novel algorithm. Tunn. Undergr. Space Technol. 136, 105099 (2023).

Hu, D. et al. Prediction method of surface settlement of rectangular pipe jacking tunnel based on improved PSO-BP neural network. Sci. Rep. 13(1), 5512 (2023).

Qu, L. et al. Cloud inversion analysis of surrounding rock parameters for underground powerhouse based on PSO-BP optimized neural network and web technology. Sci. Rep. 14(1), 14399 (2024).

Wang, K., Luo, X., Shen, H. & Zhang, H. GSA-BP neural network model for back analysis of surrounding rock mechanical parameters and its application. Rock Soil Mech. 37(S1), 631–638 (2016).

Feng, X., Zhang, Z., Yang, C. & Lin, Y. Study on genetic-neural network method of displacement back analysis. Chin. J. Rock Mech. Eng. 18(5), 529–633 (1999).

Deng, J. & Lee, C. Displacement back analysis for a steep slope at the Three Gorges Project site. Int. J. Rock Mech. Min. Sci. 38(2), 259–268 (2001).

Zhuang, W. et al. Inversion analysis to determine the mechanical parameters of a high arch dam and its foundation based on a IAGA-BP algorithm. J. Tsinghua Univ. (Sci. Technol.). 62(8), 1302–1313 (2022).

Moreira, N. et al. Back analysis of geomechanical parameters in underground works using an Evolution Strategy algorithm. Tunn. Undergr. Space Technol. 33, 143–158 (2013).

Rechea, C., Levasseur, S. & Finno, R. Inverse analysis techniques for parameter identification in simulation of excavation support systems. Comput. Geotech. 35(3), 331–345 (2008).

Ma, H. S., Wang, H. L., Wang, R. B., Meng, Q. X. & Yang, L. L. Automatic back analysis of mechanical parameters using block discrete element method and PSO algorithm. Eur. J. Environ. Civil Eng. 27(7), 2576–2586 (2020).

Miranda, T., Dias, D., Eclaircy-Caudron, S., Correia, A. G. & Costa, L. Back analysis of geomechanical parameters by optimisation of a 3D model of an underground structure. Tunn. Undergr. Space Technol. 26(6), 659–673 (2011).

Tang, Y. G. & Kung, T. C. Application of nonlinear optimization technique to back analyses of deep excavation. Comput. Geotech. 36(1–2), 276–290 (2009).

He, B., Du, X., Bai, M., Yang, J. & Ma, D. Inverse analysis of geotechnical parameters using an improved version of non-dominated sorting genetic algorithm II. Comput. Geotech. 171, 106416 (2024).

Huang, F. et al. Slope stability prediction based on a long short-term memory neural network: comparisons with convolutional neural networks, support vector machines and random forest models. Int. J. Coal Sci. Technol. 10(1), 18 (2023).

Gong, W., Cai, Z. & Jiang, L. Enhancing the performance of differential evolution using orthogonal design method. Appl. Math. Comput. 206(1), 56–69 (2008).

Li, S., Zhu, W., Chen, W. & Li, S. Application of elasto-plastic large displacement finite element method to the study of deformation prediction of soft rock tunnel. Chin. J. Rock Mech. Eng. 21(4), 466–470 (2002).

Bardhan, A. et al. Hybrid ensemble soft computing approach for predicting penetration rate of tunnel boring machine in a rock environment. J. Rock Mech. Geotech. Eng. 13(6), 1398–1412 (2021).

Kardani, N., Bardhan, A., Kim, D., Samui, P. & Zhou, A. Modelling the energy performance of residential buildings using advanced computational frameworks based on RVM, GMDH, ANFIS-BBO and ANFIS-IPSO. J. Build. Eng. 35, 102105 (2021).

Kumar, V., Burman, A. & Kumar, M. Generic form of stability charts using slide software for rock slopes based on the Hoek–Brown failure criterion. Multiscale Multidiscip. Model. Exp. Des. 7(2), 975–989 (2024).

Pradeep, T., Samui, P., Kardani, N. & Asteris, P. G. Ensemble unit and AI techniques for prediction of rock strain. Front. Struct. Civ. Eng. 16(7), 858–870 (2022).

Noori, R., Khakpour, A., Omidvar, B. & Farokhnia, A. Comparison of ANN and principal component analysis-multivariate linear regression models for predicting the river flow based on developed discrepancy ratio statistic. Expert Syst. Appl. 37(8), 5856–5862 (2010).

Pradeep, T. & Samui, P. Prediction of rock strain using hybrid approach of ann and optimization algorithms. Geotech. Geol. Eng. 40(9), 4617–4643 (2022).

Thangavel, P. & Samui, P. Determination of the size of rock fragments using RVM, GPR, and MPMR. Soils Rocks. 45(4), e2022008122 (2022).

Qiu, G., Yin, L., Liu, C., Mei, P. & Wen, H. Meteorological Visibility Prediction Based on GA-PSO-BP Neural Network. Sci. Technol. Eng. 24(15), 06164–06208 (2024).

Standard Map Service Platform of the Ministry of Natural Resources of China. (Approval Number: GS(2019)1686) http://bzdt.ch.mnr.gov.cn/index.html (2019).

Acknowledgements

This research was supported by Yunnan Province Key Research and Development Program (No. 202303AA080004). And the authors are grateful for the data support from China Railway Eryuan Engineering Group Co., Ltd. In the end, the authors appreciate the editors and anonymous reviewers for their valuable comments and suggestions.

Author information

Authors and Affiliations

Contributions

S.S. and Y.M. wrote the main manuscript. C.D. and Q.Z. provided engineering data. Y.Z. made the figures and tables. C.L. reviewed and edited the manuscript. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Shi, S., Miao, Y., Di, C. et al. Back analysis of mechanical parameters based on GPSO-BP neural network and its application. Sci Rep 15, 11018 (2025). https://doi.org/10.1038/s41598-025-86989-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-86989-7

Keywords

This article is cited by

-

Deep Learning in Slope Stability Analysis: Evolution, Challenges, and Future Directions

Geotechnical and Geological Engineering (2025)