Abstract

With the wide access to data and advanced technologies, organizations and firms prefer to use data-based and interpretable analytics to deal with uncertain and cognitive decision-making problems. In this regard, this study considers quantitative data and qualitative variables, to propose a multi-dimensional decision framework based on the nested probabilistic linguistic term sets. Under the framework, XGBoost algorithm, one of the machine learning methods, is conducted to capture the importance of attributes by using the historical data, and further calculate the attribute weights. The constrained parametric approach is used to establish membership functions of linguistic variables, and then get the objective probabilities in the linguistic model, so that we can obtain a scientific decision matrix. A case study concerning the ranking of bank credit is applied to present the proposed decision framework, and the process of making a rational decision. According to the comparative analysis, the proposed framework is flexible and the result is stable. Managers and policymakers determine the attribute weights by real data and choose the suitable decision method for a certain application. The framework provides an opportunity for capturing, integrating, analyzing data, and interpreting linguistic variables in the model to consider uncertain and cognitive decision at the both theoretical and practical levels.

Similar content being viewed by others

Introduction

With the increasing complexity of decision-making problems, organizations and firms have been stuck in uncertainty and interpretability regarding the proper ways for translating prescriptive analytics use into organizational value1. On the one hand, organizations highlight the significance of making rational decisions depending on objective information with the wide access to data and affordable technologies2. On the other hand, managers and users prefer to deal with the descriptive models that provide meaningful and interpretive insights to support decision3. Therefore, decision-making using both quantitative data and qualitative variables has increased attention between the management science and operations research society to attack this type of problem4. Although a wealth of research achievements has been investigated considering machine learning, data analytics, and operation research methods5,6, there are still some challenges in balancing objective data with interpretability under a multi-dimensional decision environment:

How to determine a set of attribute weights objectively in the multi-dimensional decision framework? Practical decision-making problems gradually require accurate evaluations from the multiple perspectives in most cases, therefore researchers have established relevant models for this purpose. Inspired by probabilistic linguistic term set (PLTS)7, the nested probabilistic linguistic term set (NPLTS) is an improved linguistic model that is suitable to describe uncertain and nested information8,9. The model provides four decision-making scenarios based on the type of outer and inner linguistic variables, and has been widely applied in government investment10, communication technology11, and offshore energy option12. Attribute weight is an essential component of a decision-making framework. Ignoring rules or features of attributes would mislead the real weight, and impact the final decision to some extent13, Therefore, obtaining the attribute weight objectively is important to make a scientific decision, and exploring the feature attribute weights has become a popular trend14. Recent studies have been committed to developing this research direction, and proposed a series of methods to calculate the attribute weights. These include data-driven approaches such as the Analytic Hierarchy Process15, and consistency-driven techniques like personalized individual semantics16. Additionally, the probabilistic linguistic-Criteria Importance Through Intercriteria Correlation (CITIC) method17 and the extended entropy weight method for processing heterogeneous information in group decision-making 18 have been proposed. Other approaches, such as deviation maximization combined with TOPSIS for temporal preferences 19, as well as bidirectional projection and fuzzy entropy for handling unknown attribute weights 20, have further enriched the methodological landscape in this field. However, considering a multi-dimensional and cognitive decision-making problem, there is less research on determining the attribute weight based on historical data by using machine-learning methods, such as XGBoost algorithm, and decision tree algorithm, especially under the nested probabilistic linguistic decision environment. Table 1 presents some recent publications employing various attribute weighting methods.

How to portray the linguistic variables scientifically in the multi-dimensional decision framework? Linguistic model focused on the shape of a fuzzy set enhances the interpretability of the decision framework, while building the model that performs automatic reasoning is an increasingly important process as a necessary part of gathering data. Due to that any data collected from the real world comes with some degree of uncertainty, it is therefore crucial to design approaches that preserve the uncertainty in the modelling phase. Currently, fuzzy approaches have been popular to combine group of intervals representing the uncertainty, such as the interval approach21, the enhanced interval approach22, and the interval agreement approach23. Constrained parametric approach (CPA) is a novel method to aggregate data instances modelled in a way that preserves the shape used to model individual opinions, enhancing the interpretability of the produced models24. Considering that the CPA provides a way to choose the suitable shape, such triangular membership functions, to guarantee the representation of the acceptable instances, it has been applied to a case study involving combining data gathered from surveys. However, regarding the cognitive and uncertain decision-making problem, less literature considers interpretable membership functions to establish a linguistic model based on historical data, such as the CPA. It is a challenge to balance objective data with interpretable model under the nested probabilistic linguistic environment.

How to calculate the probabilities of cognitive information in the multi-dimensional decision framework? Probabilities in the linguistic model play an important part that affect the final decision directly, mainly representing confidence, ratio, weight, and proportion. If the evaluation information is provided by experts, the probabilities are relatively subjective. For example, a new-type smart city development evaluation problem is solved by using the probabilistic linguistic q-rung orthopair fuzzy set31. The selection of children English educational organization is considered using the two decision-making methods with probabilistic linguistic information32. Recent studies have preferred to use objective information to describe probabilities of linguistic variables. As for online information reviews, crawling comments and transforming to values is a popular way to obtain the probabilities, such as online learning platforms31, online doctor recommendation system33, and the prioritization of improvements in hotel services34. However, less study both considers the shape of the linguistic variable and the objective data, and it is still an open question to determine the probabilities in the linguistic model for multi-dimensional decision-making problems. In summary, Table 2 lists some recent publications using different models on the uncertain decision-making framework. As demonstrated, the Nested Probabilistic Linguistic Term Set (NPLTS) offers several key advantages over other fuzzy linguistic term sets, particularly in its ability to handle both quantitative and qualitative data, making it more versatile compared to models like Probabilistic Linguistic Term Set (PLTS) and Hesitant Fuzzy Linguistic Term Set with the Quality Function Deployment technique (HFLTS-QFD), which focus primarily on qualitative features. Additionally, NPLTS supports a multi-dimensional framework, a capability lacking in models such as PLTS and Double Hierarchy Hesitant Fuzzy Linguistic Term Set (DHHFLTS), allowing it to process multiple layers of information for more comprehensive decision-making. Moreover, NPLTS can incorporate weighting factors to reflect the varying importance of expert opinions or alternatives, a feature not available in simpler models like DHHFLTS and the Stimulus-Organism-Response framework (SOR). These strengths make NPLTS particularly well-suited for complex decision-making environments, such as ranking bank credit or evaluating multi-criteria scenarios, where nuanced data integration and expert consensus are crucial.

Aiming at these challenges both considering quantitative data and considering qualitative variables under the complex and uncertain environment, this study proposes a multi-dimensional decision framework combined with machine learning algorithm and constrained parametric approach, to calculate the attribute weights and probabilities in the linguistic model, respectively. Moreover, we apply the proposed framework to the ranking of bank credit, explore the robustness of the framework, and reveal the rules of attribute weights. To achieve these goals, the main contributions of this study are listed as follows:

(1) To address the first issue, we establish a multi-dimensional decision-making framework using the NPLTSs. Under the framework, we use the XGBoost algorithm to capture the importance of attributes by using the historical data, and further determine the attribute weights. This process obtains the appropriate and objective weights by leveraging large volumes of data to recognize the features and their importance degrees.

(2) To address the second issue, we conduct the constrained parametric approach (CPA) to construct triangular membership functions of linguistic variables in the linguistic model, also depending on the prior data. In this way, the model not only controls the uncertainty from objective data, but also guarantees the interpretability.

(3) To address the last issue, we calculate the probabilities in the linguistic model using the historical data and obtained membership functions. In addition, we apply the decision-making framework to the ranking of bank credit, and verify the robustness considering attribute weights and different decision-making methods by comparative analysis.

The remainder of this study is organized as follows: Section "Preliminaries" briefly reviews the relevant knowledge. Section "A multi-dimensional decision-making framework" proposes a multi-dimensional decision framework based on the XGBoost algorithm and the constrained parametric approach under the nested probabilistic linguistic environment. A case study considering the ranking of bank credit is presented in Section "Case study: the ranking of bank credit". In Section "Discussions", discussions and implications are made from theoretical and practical aspects. Section "Conclusions" ends the study with some conclusions.

Preliminaries

This section reviews the relevant knowledge of the nested probabilistic linguistic term set (NPLTS), and the Constrained Parametric Approach (CPA) for modelling uncertain data.

Nested probabilistic linguistic term set

To better describe multi-dimensional and uncertain information that conforms to human cognition, a nested probabilistic linguistic term set (NPLTS) was established by a set of nested, ordered, and continuous linguistic terms with the corresponding probabilities, which could represent the confidence coefficient, proportion, importance, weight or consistency of each linguistic term.

Let an outer linguistic term set be \(S_{O} = \left\{ {s_{\alpha } \left| {\alpha = - \tau , \cdots , - 1,0,1, \cdots ,\tau } \right.} \right\}\), and the corresponding inner linguistic term set be \(S_{I} = \left\{ {n_{\beta } \left| {\beta = - \varsigma , \cdots , - 1,0,1, \cdots ,\varsigma } \right.} \right\}\). The nested linguistic term set is defined as \(S_{N} = \left\{ {s_{\alpha } \left\{ {n_{\beta } } \right\}\left| {\alpha = - \tau , \cdots , - 1,0,1, \cdots ,\tau ;\beta = - \varsigma , \cdots , - 1,0,1, \cdots ,\varsigma } \right.} \right\}\), where \(s_{\alpha } \left\{ {n_{\beta } } \right\}\) is called the nested linguistic term9. A mapping function from a given set \(X\) to a subset of \(S_{N}\), the NPLTS is denoted as \(P_{{S_{N} }} = \left\{ { < x_{i} ,p_{{S_{N} }} \left( {x_{i} } \right) > \left| {x_{i} \in X} \right.} \right\}\), where \(p_{{S_{N} }} \left( {x_{i} } \right)\) is the set of several NPLTSs in \(S_{N}\), expressed as Wang, Xu, Wen & Li8:

where \(\# s_{\alpha } \left( {x_{i} } \right)\) is the number of the outer linguistic term elements, \(\# n_{\beta } \left( {x_{i} } \right)\) is the number of the inner linguistic term elements, and the probabilities satisfy \(\theta_{P} \left( \zeta \right)\).

According to the types of the linguistic variables in the outer linguistic term set and the inner linguistic term set, respectively, the NPLTS faces four scenarios for information fusion, and Wang et al.12 proposed the transformation functions as follows:

\(f^{(1)}\) in Case 1:

\(f^{(2)}\) in Case 2:

\(f^{(3)}\) in Case 3:

\(f^{(4)}\) in Case 4:

where \(len(s_{\alpha (k)} )\) and \(len(n_{\beta (l)} )\) represent the positive length of elements in the outer linguistic term set and the inner linguistic term set, respectively. In this paper, the application scenario of the case study conforms to Case 3.

Constrained parametric approach

Constrained Parametric Approach (CPA) was proposed by D’Alterio et al.24, to aggregate data instance modelled in a way that preserves the shape used to model individual opinions.

Let \(C = \left\{ {C_{1} ,C_{2} , \cdots ,C_{n} } \right\}\left( {n \ge 2} \right)\) be a set of attributes, in this study, we use the CPA to construct the triangular membership function of each attribute for the reason that the triangular membership function not only has good flexibility and effectively suppresses the noise, but also improves the control accuracy and reduces the calculation amount of the fuzzy control system, so as to the size of the fuzzy number can be adjusted according to the actual situation, and make the system more stable, accurate, and reliable. For each attribute \(C_{j}\), the triangular membership function has three parameters \(\left( {a,b,c} \right)\), which represents the start point, the peak point, and the end point, respectively. The constraints of each triangular membership function of \(C_{j}\) satisfy the following requirements (D'Alterio et al., 2022):

(1) \(P_{0}\): the membership function of \(C_{j}\) is in the following form to ensure that it is an actual triangle:

(2) \(P_{1}\): check that the start point is with the uncertainty ranges, represented as \(a \in \left[ {a_{\min } ,a_{\max } } \right]\);

(3) \(P_{2}\): check that the peak point is with the uncertainty ranges, represented as \(b \in \left[ {b_{\min } ,b_{\max } } \right]\);

(4) \(P_{3}\): check that the end point is with the uncertainty ranges, represented as \(c \in \left[ {c_{\min } ,c_{\max } } \right]\).

After calculating above four parameters, the triangular membership functions of attributes are modelled through parametric fuzzy sets according to the available data set. In this study, we use the CPA to complete the NPLTSs by obtaining the probabilities of the outer linguistic term sets, and the inner linguistic term sets.

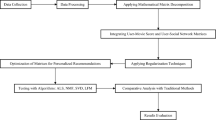

A multi-dimensional decision-making framework

To make a rational and scientific decision, we propose a novel decision-making framework with NPLTSs due to optimizing the attribute weights by the XGBoost algorithm and describing cognitive information by the CPA. The framework can be applied to various fields in practice. Figure 1 shows the flow chart of the NPLTSs-based multi-dimensional decision-making framework.

The decision-making framework under nested probabilistic linguistic environment

Let \(A=\left({A}_{1},{A}_{2},\cdots ,{A}_{m}\right)\left(m\ge 2\right)\) be a set of alternatives, \(C=\left({C}_{1},{C}_{2},\cdots ,{C}_{n}\right)\left(n\ge 2\right)\) be a set of attributes, \(w=\left({w}_{1},{w}_{2},\cdots ,{w}_{n}\right)\left(n\ge 2\right)\) be a set of weights with respect to attributes with \({w}_{j}\ge 0\) and \(\sum\nolimits_{j = 1}^{n} {w_{j} } = 1\). To comprehensively describe the alternatives \({A}_{i}\left(i=\text{1,2},\cdots ,m\right)\) with the attributes \({C}_{j}\left(j=\text{1,2},\cdots ,n\right)\) in a multi-dimensional and uncertain decision-making problem, nested probabilistic linguistic sets (NPLTSs) are used to evaluate the characteristics of alternatives by inner and outer linguistic terms, and the corresponding probability represents preferences or hesitations. According to the nested probabilistic linguistic model, \({S}_{O}=\left\{{s}_{\alpha }|\alpha =-\tau ,\cdots ,-\text{1,0},1,\cdots ,\tau \right\}\) is the outer linguistic term set, \({S}_{I}=\left\{{n}_{\beta }|\beta =-\varsigma ,\cdots ,-\text{1,0},1,\cdots ,\varsigma \right\}\) is the inner linguistic term set. NPLTSs are constructed to evaluate the alternatives \({A}_{i}\left(i=\text{1,2},\cdots ,m\right)\) with respect to the attributes \({C}_{j}\left(j=\text{1,2},\cdots ,n\right)\), and then the corresponding NPLTS decision matrix \({B}_{N}={P}_{{S}_{{N}_{ij}}}\left(i=\text{1,2},\cdots ,m;j=\text{1,2},\cdots ,n\right)\) is shown as follows:

where \({P}_{{S}_{{N}_{ij}}}\) represents the NPLTS of the \(i\)-th alternative with respect to the \(j\)-th attribute. In the following, we go on two procedures to obtain the attribute weights and probabilities of inner linguistic terms, and outer linguistic terms, respectively.

Determining attribute weights using the XGBoost algorithm

XGBoost (eXtreme Gradient Boosting), a scalable machine learning system for tree boosting, is widely applied to sales forecasting, network text classification, and risk prediction35,36. In the multi-dimensional decision-making framework, we use the algorithm to determine the attribute weights. For the test data of the alternative \(A=\left({A}_{1},{A}_{2},\cdots ,{A}_{m}\right)\left(m\ge 2\right)\), there are classification labels of all alternatives based on the attribute \(C=\left({C}_{1},{C}_{2},\cdots ,{C}_{n}\right)\left(n\ge 2\right)\). After the algorithm processing with the input data, including attributes information, the importance of each attribute that is a vector of weight \(w=\left({w}_{1},{w}_{2},\cdots ,{w}_{n}\right)\left(n\ge 2\right)\) will be obtained by the optimized distributed gradient enhancement library. To realize the process clearly in such a decision-making environment, the following describes the XGBoost algorithm:

Step1. Split data set. Splitting the data set into a train set and a test set, and then using the train set to train the model, and using the test set to evaluate the model’s performance on previously unseen data. Here, we split the train set and the test set in 4:1.

Step2. Apply Gridsearch to optimize the parameters. Grid search is an exhaustive search method that searches for the optimal hyperparameter by traversing all possible combinations of hyperparameters. We use the commonly used the GridSearchCV package to complete the grid search process and find the optimal parameter combination.

Step3. Calculate importance. Typically, the importance provides a score that indicates the usefulness or value of each feature in the enhanced decision tree within the built model. The more attributes that use the decision tree to make key decisions, the higher their relative importance. This importance is explicitly calculated for each attribute in the data set, allowing the ranking of attributes to be compared with each other. We calculate attribute importance using the functions of the xgboost package.

Obtaining NPLTSs using the constrained parametric approach

To complete NPLTSs scientifically, we obtain the outer probabilities and the inner probabilities in each NPLTS, respectively, using the data set and the triangular membership function of each attribute established by the CPA. For a decision-making problem, let a set of alternatives be \(A=\left({A}_{1},{A}_{2},\cdots ,{A}_{m}\right)\left(m\ge 2\right)\), and a set of attributes be \(C=\left({C}_{1},{C}_{2},\cdots ,{C}_{n}\right)\left(n\ge 2\right)\). According to the definition of NPLTS, the outer probabilistic linguistic term set is \({s}_{{\alpha }_{ij}}\left({p}_{{s}_{ij}}\right)=\left\{{s}_{{\alpha \left(k\right)}_{ij}}\left({p}_{{s\left(k\right)}_{ij}}\right)|i=\text{1,2},\cdots ,m;j=\text{1,2},\cdots ,n;k=\text{1,2},\cdots ,\#{s}_{\alpha }\right\}\) to describe the evaluation information with respect to attributes and outer linguistic terms. The inner probabilistic linguistic term set is \({n}_{{\beta }_{kl}}\left({p}_{{n}_{kl}}\right)=\left\{{n}_{{\beta \left(l\right)}_{k}}\left({p}_{{n\left(l\right)}_{k}}\right)|k=\text{1,2},\cdots ,\#{s}_{\alpha };l=\text{1,2},\cdots ,\#{n}_{\beta }\right\}\) used to describe the evaluation information considering both the outer linguistic terms and the inner linguistic terms.

For the element \({n}_{{\beta \left(l\right)}_{k}}\left({p}_{{n\left(l\right)}_{k}}\right)\) of the linguistic inner term set,

The first step is to calculate the outer linguistic probabilities. According to the historical data, we collect the prior data of each alternative \({A}_{i}\) with respect to the attribute \({C}_{j}\) in terms of the outer linguistic term \({s}_{\alpha \left(k\right)}\), represented as \({Data}_{ijk}=\left\{{g}_{ijk}|i=\text{1,2},\cdots ,m;j=\text{1,2},\cdots ,n;k=\text{1,2},\cdots ,\#{s}_{\alpha }\right\}\). At the same time, the feature is extracted denoted as \({feature}_{ijk}\left(i=\text{1,2},\cdots ,m;j=\text{1,2},\cdots ,n;k=\text{1,2},\cdots ,\#{s}_{\alpha }\right)\). Therefore, the probability \({p}_{{s\left(k\right)}_{ij}}\) in the outer probabilistic linguistic term set \({s}_{{\alpha }_{ij}}\left({p}_{{s}_{ij}}\right)\) is calculated in Eq. (9). Table 3 provides the framework of the outer-layer decision matrix \({R}_{O}={\left({s}_{{\alpha }_{ij}}\left({p}_{{s}_{ij}}\right)\right)}_{m\times n}\).

The second step is to calculate the inner linguistic probabilities. According to the CPA, we obtain the membership functions of each attribute with respect to the inner linguistic term, denoted as \({MF}_{jl}\). Put prior data \({Data}_{ijk}\) into the membership functions \({MF}_{jl}\), then we get the interval values \(\left[ {a_{j1} ,b_{j1} } \right],\left[ {a_{j2} ,b_{j2} } \right], \cdots ,\left[ {a_{{j\# n_{\beta } }} ,b_{{j\# n_{\beta } }} } \right]\), and determine the inner linguistic term \({n}_{{\beta }_{kl}}\). Let \({N}_{{n}_{{\beta }_{ijkl}}}\) be the number of the inner linguistic terms. Therefore, the probability \({p}_{{n\left(l\right)}_{ijk}}\) in the inner probabilistic linguistic term set \({n}_{{\beta }_{kl}}\left({p}_{{n}_{kl}}\right)\) is calculated in Eq. (10). Table 4 provides the framework of the inner-layer decision matrix\(R_{I} = \left( {n_{{\beta_{kl} }} \left( {p_{{n_{kl} }} } \right)} \right)_{{\# s_{\alpha } \times \# n_{\beta } }}\).

The last step is to combine with the outer-layer decision matrix and the inner-layer decision matrix, and get the NPLTS-based decision matrix \(R={\left({P}_{{S}_{{N}_{ij}}}\right)}_{m\times n}\), listed in Table 5. For \({P}_{{S}_{{N}_{ij}}}\),

Deriving alternative rankings via NPLTS-based TOPSIS

According to the definition of NPLTS, the outer probabilistic linguistic term set is\(s_{{\alpha_{ij} }} \left( {p_{{s_{ij} }} } \right) = \left\{ {s_{{\alpha \left( k \right)_{ij} }} \left( {p_{{s\left( k \right)_{ij} }} } \right)\left| {i = 1,2, \cdots ,m;j = 1,2, \cdots ,n;k = 1,2, \cdots ,\# s_{\alpha } } \right.} \right\}\). For each attribute \(C_{j}\), calculate the outer-layer positive ideal solution and negative ideal solution \({{s}_{\alpha }\left({p}_{s}\right)}^{+}\) and \({{s}_{\alpha }\left({p}_{s}\right)}^{-}\) as follows:

where \(\begin{array}{c}{S}_{{\alpha }_{j}}{\left({p}_{{S}_{j}}\right)}^{+}=\left\{{\left({S}_{{\alpha }_{j}}^{\left(k\right)}\right)}^{+}| k=\text{1,2},\dots ,\#{S}_{{\alpha }_{ij}}\left(P{s}_{ij}\right)\right\}\end{array}\), \(\begin{array}{*{20}c} {\left( {S_{{\alpha _{j} }}^{{\left( k \right)}} } \right)^{ + } = S_{{\left( k \right)}} \left( {{}_{i}^{{\max }} \left\{ {p_{{ij}}^{{\left( k \right)}} } \right\}} \right),j = 1,2, \cdots ,n\# } \\ \end{array}\), \({S}_{{\alpha }_{j}}{\left({p}_{{S}_{j}}\right)}^{-}=\left\{{\left({S}_{{\alpha }_{j}}^{\left(k\right)}\right)}^{-}| k=\text{1,2},\dots ,\#{S}_{{\alpha }_{ij}}\left(P{s}_{ij}\right)\right\}\), \(\left( {S_{{\alpha _{j} }}^{{\left( k \right)}} } \right)^{ - } = S_{{\left( k \right)}} \left( {{}_{i}^{{\min }} \left\{ {p_{{ij}}^{{\left( k \right)}} } \right\}} \right),j = 1,2, \cdots ,n\) .

Subsequently, incorporating the attribute weights \(w=\left({w}_{1},{w}_{2},\cdots ,{w}_{n}\right)\) derived from the XGBoost algorithm, the deviation between each alternative and the positive ideal solution is calculated:

and the deviation between each alternative and the negative ideal solution is

The smaller the deviation \(d\left({A}_{i},{{s}_{\alpha }\left({p}_{s}\right)}^{+}\right)\), the better the alternative \({A}_{i}\), and the larger the deviation \(d\left({A}_{i},{{s}_{\alpha }\left({p}_{s}\right)}^{-}\right)\), the better the alternative \({A}_{i}\). Let

be the smallest deviation between the alternative and the positive ideal solution,

be the largest deviation between the alternative and the negative ideal solution. According to the improved closeness coefficient method37, the inner weights are calculated as follows:

It is noted that the inner weight is a vector, that is \(\varepsilon_{i} = \left( {\varepsilon_{i\left( 1 \right)} ,\varepsilon_{i\left( 2 \right)} , \cdots ,\varepsilon_{{i\left( {\# n_{\beta } } \right)}} } \right)^{T}\). And the inner-layer score \(I{Z}_{i}\) is expressed as:

where \(i = \text{1,2}, ... ,m\).

Finally, the whole score \({F}_{w}\left({P}_{{S}_{{N}_{i}}}\right)\) of each alternative can be obtained with the inner weight \(\varepsilon_{i} = \left( {\varepsilon_{i\left( 1 \right)} ,\varepsilon_{i\left( 2 \right)} , \cdots ,\varepsilon_{{i\left( {\# n_{\beta } } \right)}} } \right)^{T}\) by the following steps:

The outer-layers’s score of \({s}_{\alpha }\left({p}_{s}\right)\) is

where \(\overline{\alpha } = \frac{{\mathop \sum \nolimits_{k = 1}^{{\# s_{\alpha } }} \alpha \left( k \right)p_{s\left( k \right)} }}{{\mathop \sum \nolimits_{k = 1}^{{\# s_{\alpha } }} p_{s\left( k \right)} }}\), and the inner-layer’s score of \({n}_{\beta }\left({p}_{n}\right)\) is

where \(\bar{p}_{{n\left( k \right)}}^{{\left( l \right)}} = \left( {\left\lceil {\bar{\alpha }} \right\rceil - \bar{\alpha }} \right) \times n_{{\left( {\left\lfloor {\bar{\alpha }} \right\rfloor } \right)}} \left( {p_{{n\left( k \right)}}^{{\left( l \right)}} } \right) + \left( {\bar{\alpha } - \left\lfloor {\bar{\alpha }} \right\rfloor } \right) \times n_{{\left( {\left\lceil {\bar{\alpha }} \right\rceil } \right)}} \left( {p_{{n\left( k \right)}}^{{\left( l \right)}} } \right)\),\(\left\lceil {\bar{\alpha }} \right\rceil\) means the smallest integer greater than \(\bar{\alpha }\), and \(\left\lfloor {\bar{\alpha }} \right\rfloor\) means the greatest integer less than \(\bar{\alpha }\). Then, the whole score of \({F}_{w}\left({P}_{{S}_{{N}_{i}}}\right)\) is

The comparison laws of any two NPLTS, \({\text{P}}_{{S}_{{N}_{1}}}\) and \({\text{P}}_{{S}_{{N}_{2}}}\), can be presented as follows:

-

(1)

If \({F}_{w}\left({\text{P}}_{{S}_{{N}_{1}}}\right)>{F}_{w}\left({\text{P}}_{{S}_{{N}_{2}}}\right)\), then \({P}_{{S}_{{N}_{1}}}\succ {P}_{{S}_{{N}_{2}}}\)

-

(2)

If \({F}_{w}\left({\text{P}}_{{S}_{{N}_{1}}}\right)={F}_{w}\left({\text{P}}_{{S}_{{N}_{2}}}\right)\), then \({P}_{{S}_{{N}_{1}}}\prec {P}_{{S}_{{N}_{2}}}\)

-

(3)

If \({F}_{w}\left({\text{P}}_{{S}_{{N}_{1}}}\right)={F}_{w}\left({\text{P}}_{{S}_{{N}_{2}}}\right)\), then further compared with deviation function. The deviation degree of NPLTS is

$$\begin{array}{c}\sigma \left({P}_{{S}_{N}}\right)={\left(\frac{{\sum }_{l=1}^{\#{n}_{\beta }}{\left({p}_{n\left(k\right)}^{\left(l\right)}-{F}_{w}\left({P}_{{S}_{N}}\right)\right)}^{2}}{\#{n}_{\beta }}\right)}^{1/2}\ \end{array}$$(22)

Therefore, \(\sigma \left({\text{P}}_{{S}_{{N}_{1}}}\right)>\sigma \left({\text{P}}_{{S}_{{N}_{2}}}\right)\), then \({P}_{{S}_{{N}_{1}}}\prec {P}_{{S}_{{N}_{2}}}\); if \(\sigma \left({\text{P}}_{{S}_{{N}_{1}}}\right)<\sigma \left({\text{P}}_{{S}_{{N}_{2}}}\right)\), then \({P}_{{S}_{{N}_{1}}}\succ {P}_{{S}_{{N}_{2}}}\), and if \(\sigma \left({\text{P}}_{{S}_{{N}_{1}}}\right)=\sigma \left({\text{P}}_{{S}_{{N}_{2}}}\right)\), then \({P}_{{S}_{{N}_{1}}}\sim {P}_{{S}_{{N}_{2}}}\).

Hence, we can rank the alternatives and select the best one. In particular, the comparation rule between the \({A}_{i}\) and the \({A}_{j}\) \((i,j = \text{1,2},...,m;i\ne j)\) is that.

-

(4)

If \({F}_{w}\left({\text{P}}_{{S}_{{N}_{i}}}\right)>{F}_{w}\left({\text{P}}_{{S}_{{N}_{j}}}\right)\), then \({A}_{i} >{A}_{j}\)

-

(5)

If \({F}_{w}\left({\text{P}}_{{S}_{{N}_{i}}}\right)<{F}_{w}\left({\text{P}}_{{S}_{{N}_{j}}}\right)\), then \({A}_{i} <{A}_{j}\)

-

(6)

If \({F}_{w}\left({\text{P}}_{{S}_{{N}_{i}}}\right)={F}_{w}\left({\text{P}}_{{S}_{{N}_{j}}}\right)\), further compare \(\sigma \left({\text{P}}_{{S}_{{N}_{i}}}\right)\) and \(\sigma \left({\text{P}}_{{S}_{{N}_{j}}}\right)\):

-

a)

\(\sigma \left({\text{P}}_{{S}_{{N}_{i}}}\right)>\sigma \left({\text{P}}_{{S}_{{N}_{j}}}\right)\), then \({A}_{i} <{A}_{j}\)

-

b)

\(\sigma \left({\text{P}}_{{S}_{{N}_{i}}}\right)<\sigma \left({\text{P}}_{{S}_{{N}_{j}}}\right)\), then \({A}_{i} >{A}_{j}\)

-

c)

\(\sigma \left({\text{P}}_{{S}_{{N}_{i}}}\right)=\sigma \left({\text{P}}_{{S}_{{N}_{j}}}\right)\), then \({A}_{i} \sim {A}_{j}\)

-

a)

Case study: the ranking of bank credit

In this section, a case study on ranking the bank credit is presented to choose the safest banking institution by the proposed multi-dimensional decision-making framework based on the XGBoost algorithm and constraint parametric approach.

Case description

Bank credit has become an essential part of the considerable factors for people to choose financial service institution, especially after the 2008 global financial crisis38. Modern payment systems provide a broad range of services to the users of the system, and it is one of the most advanced forms of payment39. However, bank credit still suffers from some fraud problems, including card-present and online fraud. Many fraud detection systems are prone to difficulties and challenges considering overlapping data, imbalanced data, and adaptability40. Accordingly, for individual or organizational level, choosing the optimal financial institution could decrease the risk of financial fraud, and security defense technologies are promising solutions for mid-term and long-term objectives to avoid financial fraud. In this study, we consider four alternative banks, denoted as \(A_{1} ,A_{2} ,A_{3} ,A_{4}\).

Numerous factors help determine whether a transaction involves credit card fraud. In this study, we focus on four key aspects: (1) The number of replacing the device (\(C_{1}\)), which can indicate attempts to access the account from different or unauthorized devices; (2) The number of payment failures (\(C_{2}\)), which may suggest unauthorized usage or testing of stolen card information; (3) The number of IP addresses changed (\(C_{3}\)), as frequent changes may signal an effort to disguise the user’s location; and (4) The number of IP country changed (\(C_{4}\)), as sudden cross-border shifts can be a red flag for potential fraud. These four variables are chosen because they capture crucial elements of suspicious network and device behavior, offering early indicators for detecting fraudulent activities. These four variables are chosen because they capture vital elements of suspicious network and device behavior, offering early indicators for detecting fraudulent activities. Financial institutions commonly use the location of online users in their fraud detection algorithms, and by analyzing the changes in IP addresses and countries41, we can enhance the effectiveness of detecting such fraudulent behavior. In general, financial systems evaluate the above-mentioned attributes from five aspects, i.e., food, reside, medical, education, and transportation. The outer linguistic term set is \(S_{0} = \{ s_{ - 2} = food,\;s_{ - 1} = reside,\;s_{0} = medical,\;s_{1} = education,\;s_{2} = \, transportation\}\) and the inner linguistic term set is given as \(S_{I} = \{ n_{ - 1} = low,n_{0} = medium,n_{1} = high \, \}\).

Solve the case

In the following, we solve the decision-making case by the proposed framework described in Section "A multi-dimensional decision-making framework".

Step 1. According to a set of publicly available data from a book Python Big Data Analysis and Machine Learning Business Case Practice42, we calculate the attribute weights as \(w = (0.333,0.238,0.143,0.286)\). Figure 2 shows feature importance of four attributes by using the XGBoost algorithm.

Sources: Authors’ own research.

Step 2. Calculate the outer probabilistic linguistic term sets by Eq. (8), and obtain the outer-layer decision matrix, listed in Table 6.

Step 3. Calculate the inner probabilistic linguistic term sets. First, we construct the triangular membership functions of four attributes by using CPA, formed as:

The universe of discourse (UOD) of each attribute is the interval [0,7], the interval [0,12], the interval [0,10], and the interval [0,5], respectively. Table 7 lists the parameters of triangular membership functions of each attribute.

Figure 3 shows the membership functions of each attribute. Note that the green area, the red area, and the blue area represent the linguistic information “low”, “medium”, and “high”, respectively. Light blue means the left border, yellow means the right border for each inner linguistic term, and pink area is the overlap region. Considering the number of payment failures \(C_{2}\), we take Fig. 3(b) as an example, two pink areas refer to the range of payment failures around between 3 and 5, and between 9 and 10, respectively. Specifically, when the number of payment failures is 3, the membership degree of linguistic term “low” is between 0.3 and 0.4, while the membership degree of linguistic term “medium” is between around 0.2 and 0.3, which both cover 0.3. It means that this situation can be described by two membership functions, and the similar logic is suitable for other points in the pink areas.

Sources: Authors’ own research.

Next, we calculate the probabilities in the inner linguistic term sets by Eq. (9), and obtain the inner-layer decision matrix, listed in Table 8.

Step 4. We use the NPLTS-based TOPSIS (Technique for Order Preference by Similarity to an Ideal Solution) method with two types of information fusion, as detailed in Section "Deriving alternative rankings via NPLTS-based TOPSIS" and outlined in Eqs. (12) to (22), to obtain the final ranking of bank credit, and Table 9 lists the final assessment values and results.

According to the final values, the rankings of these alternatives are \({A}_{3}\succ {A}_{4}\succ {A}_{1}\succ {A}_{5}\succ {A}_{2}\) and \({A}_{3}\succ {A}_{4}\succ {A}_{1}\succ {A}_{2}\succ {A}_{5}\), respectively, and the results indicate that \({A}_{3}\) is the best one considering the bank credit.

Comparative analysis

To show the effectiveness and stability of the proposed decision-making framework, we conduct the comparative analysis from two aspects that may affect the final ranking, and try to find rules of the weight factor under different situations.

The impact of attribute weights in the NPLTSs-based decision-making framework.

(1) In the case study, we obtain the attribute weights by using the XGBoost algorithm. In the following, we compare the ranking when the attribute weight is the average weight vector, i.e., \(w = (0.25,0.25,0.25,0.25)\). Choosing the same decision-making method with information fusion by expectation and variance, and transformation function, respectively, we get the rankings \({A}_{3}\succ {A}_{4}\succ {A}_{5}\succ {A}_{1}\succ {A}_{2}\) and \({A}_{3}\succ {A}_{4}\succ {A}_{1}\succ {A}_{2}\succ {A}_{5}\), as shown in Table 10. At this time, the best alternative is \({A}_{3}\) as well as the result in the case study. However, the assessed values of alternatives are changed by two ways, especially when using the method of expectation and variance, the rankings are different from the original one. Therefore, the attribute weights would effect on the rankings, and it is a key point to get the weights objectively.

(2) To explore whether the fluctuations of the attribute weights have effect on rankings, we consider the noise of the weight for each attribute. Let each attribute weight in \(w = (0.333,0.238,0.143,0.286)\) be with noise belonging to \(N\left( {0.25,0.05} \right)\), and the sum of all weights also equals to 1. After 1000 times, the final assessed values are calculated based on the NPLTSs-based TOPSIS method. Table 11 lists part of 1000 sets of weight.

Sources: Authors’ own research.

Figure 4 shows the assessed values of alternatives considering the noise obey the normal distribution by two ways. In Fig. 4a the results show that \(\sigma \left( {A_{3} } \right)\) fluctuates around between 0.154 and 0.156, and \(\sigma \left( {A_{4} } \right)\) fluctuates around between 0.156 and 0.158;\(\sigma \left( {A_{1} } \right)\) fluctuates around between 0.160 and 0.162; \(\sigma \left( {A_{5} } \right)\) fluctuates roughly between 0.158 and 0.164; \(\sigma \left( {A_{2} } \right)\) fluctuates around between 0.164 and 0.166; and thus the ranking relationship is \(A_{3} > A_{4} > A_{1} > A_{5} > A_{2}\) or \(A_{3} > A_{4} > A_{5} > A_{1} > A_{2}\). In Fig. 4b, the assessed values of alternative are more stable that fluctuate slightly, and the ranking is always \(A_{3} > A_{4} > A_{1} > A_{5} > A_{2}\). The results by two ways indicate that different fusions may cause a little variation of the final values. As a result, when the attribute weights change a little, the ranking in the case is relatively stable.

(3) To explore the relationship between the importance of each attribute and the ranking, we consider the impact of individual attribute weight. Let each attribute weight \(w_{j} (j = 1,2,3,4)\) randomly generate 1000 sets with step size 0.001, and belongs to [0,1] obeying to a standard normal distribution, and arranging in ascending order. At the same time, other three weights satisfy the standard normal distribution that the sum of all weights equals to 1. Through the same decision-making method, the rankings in the case study are conducted in terms of different importance degrees of each attribute. Table 12 lists part of the 100,100 sets of weights under the above situation.

Figure 5 shows the probability of being the best of each alternative when the importance of each attribute varies. In Fig. 5a, when the weight \(c_{1}\) increases from 0 to 1, the impacts of ranking on \(A_{3}\), \(A_{5}\) and \(A_{4}\) are bigger. To be specific, \(A_{3}\), \(A_{5}\) and \(A_{4}\) are the best alternative with a certain probability at the beginning, especially for \(A_{3}\). When the weight \(c_{1}\) increases to 0.2, \(A_{3}\) is the best alternative until the weight \(c_{1}\) increases to around 0.6. At this time, \(A_{4}\) is more likely to be the best one with the weight \(c_{1}\) continues to increase. The phenomenon indicates that the alternative \(A_{4}\) is very sensitive to the weight \(c_{1}\), i.e., the number of replacing the device.

In Fig. 5b, when the weight \(c_{2}\) increases from 0 to 1, there is little effect of ranking on \(A_{3}\), \(A_{5}\) and \(A_{4}\) as well. As we can see, the beginning trend is similar to the weight \(c_{1}\) varies that \(A_{3}\), \(A_{5}\) and \(A_{4}\) are possible to be the best alternative, and \(A_{3}\) is with the highest probability. When the weight \(c_{2}\) increases to around 0.25, \(A_{5}\) loses competitiveness, and \(A_{4}\) is the same situation when the weight \(c_{2}\) increases to around 0.4. When the weight \(c_{2}\) is larger than 0.4, \(A_{3}\) is always the best one no matter what other attribute weights vary. The result shows that the weight \(c_{2}\), i.e., the number of payment failures, is a critical factor for the alternative \(A_{3}\) that could enhance the competitiveness.

In Fig. 5c, when the weight \(c_{3}\) increases from 0 to 1, there are obvious impacts of the best alternative on \(A_{3}\), \(A_{5}\) and \(A_{4}\), especially for \(A_{3}\) and \(A_{5}\). At the beginning, \(A_{3}\) and \(A_{4}\) are likely to be the best alternative, and \(A_{3}\) is also the significant one. When the weight \(c_{3}\) increases to around 0.2, \(A_{3}\) holds the dominant space and \(A_{4}\) withdraws from being the best alternative. When the weight \(c_{3}\) increases to around 0.6, \(A_{5}\) starts to be the best one, and the probability is larger when the weight \(c_{3}\) continues to increase. When the weight \(c_{3}\) is more than 0.8, \(A_{5}\) is always the best alternative. This phenomenon indicates that the alternative \(A_{5}\) is very sensitive to the weight \(c_{3}\), i.e., the number of IP addresses changed.

In Fig. 5d, when the weight \(c_{4}\) increases from 0 to 1, the impacts of ranking on \(A_{3}\), \(A_{5}\) and \(A_{4}\) are more obvious than other alternatives. Specifically, \(A_{3}\), \(A_{5}\) and \(A_{4}\) have possible to be the first rank at the beginning, especially for \(A_{3}\). When the weight \(c_{4}\) increases to around 0.2, \(A_{3}\) is the best alternative until it increases to around 0.78. At this time, \(A_{4}\) is more likely to be the best one, and the probability becomes larger with the weight \(c_{4}\) continues to increase. The phenomenon indicates that the weight \(c_{4}\) i.e., the number of IP country changes, has a significant influence on the alternative \(A_{4}\), which is similar to the rules of the weight \(c_{1}\). The most difference is that \(A_{4}\) is the best alternative when the weight \(c_{4}\) reaches a larger value than the weight \(c_{1}\).

When each attribute weight increases gradually, we count the ranking times of each alternative to reveal the rule relationship between attribute weights and the ranking. Figure 6 presents the statistical ranking results of alternatives when the importance of each attribute varies.

Sources: Authors’ own research. When the weight \(c_{1}\) increases in Fig. 6a, \(A_{1}\) always ranks the third or the fourth place, and the frequency with the third ranking is highest. \(A_{2}\) almost ranks the fourth or the fifth place, among which the frequency with the fifth ranking is close to the frequency with the fourth ranking. \(A_{3}\) almost ranks the first or the second place that the first rank is higher one. \(A_{4}\) has three kinds of rankings with the larger proportion, where a second-place ranking is the highest, followed by the first-place, and the second-place. \(A_{5}\) appears in all situations, and the highest one is with a fifth-place ranking, followed by the fourth-place, the third-place, and the second-place.

When the weight \(c_{2}\) increases in Fig. 6b, \(A_{1}\) basically ranks the third or the fourth place, and the highest frequency belongs to a fourth-place ranking, which is similar to the rule of the weight \(c_{1}\). \(A_{2}\) almost ranks the fourth or the fifth place, which is far higher than the frequency of the fifth-place. \(A_{3}\) almost ranks the first or the second place that being the best alternative has an absolute advantage. \(A_{4}\) has all kinds of rankings, where the highest frequency is a second-place ranking. The ranking of \(A_{5}\) appears in all possible situations, but the frequency with the fourth ranking is significantly higher than other four rankings. Therefore, the simulations show that all rankings tend to be stable that is \(A_{3} > A_{4} > A_{1} > A_{5} > A_{2}\).

When the weight \(c_{3}\) increases in Fig. 6c, \(A_{1}\) almost ranks the third or the fourth place, and the highest frequency is with a third-place ranking, higher than that of the other ranking. \(A_{2}\) ranks the fourth or the fifth place with higher frequency. \(A_{3}\) has the first or the second ranking that the frequency with the first-place ranking is highest. \(A_{4}\) holds five rankings from the first to the last ranking, where the frequency with the second-place ranking is highest. \(A_{5}\) also has all possible rankings, and the highest frequency is with the second-place ranking, which has a little gap compared to the first-place.

When the weight \(c_{4}\) increases in Fig. 6d, \(A_{1}\) basically ranks t the third or the fourth place, and the highest frequency is with a fourth-place ranking. \(A_{2}\) almost ranks the fourth or the fifth place, among which the frequency with the fifth ranking is far higher than the fourth ranking. \(A_{3}\) almost ranks the first or the second place that being the first seems to be an obvious trend. The rankings of \(A_{4}\) and \(A_{5}\) appear in all possible situations, where the highest frequencies are the second-place ranking and the third-place ranking, respectively. The results indicate that all rankings tend to be stable that \(A_{3} > A_{4} > A_{5} > A_{1} > A_{2}\).

In general, the rankings of \(A_{1}\), \(A_{2}\) and \(A_{3}\) are relatively stable. No matter how attribute weights vary, \(A_{1}\) is either the third or the fourth one, \(A_{2}\) is either the fourth or the last one, and \(A_{3}\) is the first or the second one. In terms of each attribute, the weight \(c_{1}\), i.e., the number of replacing the device, mainly affects the fourth and the fifth order. The weight \(c_{2}\), i.e., the number of payment failures, has little impact on the rankings, and the stable ranking is \(A_{3} > A_{4} > A_{1} > A_{5} > A_{2}\). The weight \(c_{3}\), i.e., the number of IP addresses changed, mainly affects the second and the fourth order, and the weight \(c_{4}\), i.e., the number of IP country changed, does not impact the stable ranking that is \(A_{3} > A_{4} > A_{5} > A_{1} > A_{2}\).

The impact of decision-making methods in the NPLTSs-based decision-making framework. Under the proposed decision-making framework, we choose four kinds of decision-making methods to deal with the case study.

Table 13 lists the final values and rankings by using these methods. TOPSIS, TODIM (Interactive Multi-criterion Decision-making), and PROMETHEE (Preference Ranking Organization Method for Enrichment Evaluation) are classical decision-making methods to deal with selecting the most satisfied alternative with the specific characteristics. The results show that the ranking is \({A}_{3}\succ {A}_{4}\succ {A}_{1}\succ {A}_{2}\succ {A}_{5}\) by three methods with transformation function, while the ranking is \({A}_{3}\succ {A}_{4}\succ {A}_{1}\succ {A}_{5}\succ {A}_{2}\) by using TOPSIS with expectation and variance functions. In sum, the best alternative is \({A}_{3}\) by using these four methods, and the result indicates that the decision-making framework is flexible and reliable.

Discussions

Considering the uncertainty and the multi-dimensional structure in the complex and data-driven decision –making problems, this study proposes a cognitive and scientific decision-making framework combining the XGBoost algorithm with the constrained parametric approach (CPA) under the nested probabilistic linguistic environment. In the following, we discuss the framework that provides the objective and explicable information and implications for policy makers and managers.

The proposed decision-making framework provides a way to deal with complex problem based on historical data and interpretable model. On the one hand, linguistic terms are natural and efficient as the preference modeling tools in the elicitation of uncertain assessments28, since language is the closest form to express people’s cognitive information managed flexible linguistic expression43. On the other hand, data-driven techniques make up for the disadvantage of linguistic variables not being operational without detailed quantification, and have shown promising results in the analysis and reliable decision-making processes in various fields44,45. Recent studies have focused on establishing suitable linguistic models and using machine learning based on data to solve real problems applied in various fields, such as location planning of electric vehicle charging stations46, early detection of Alzheimer’s disease47, and risk assessment of Chinese enterprises’ overseas mergers and acquisitions48. Compared with other advanced decision-making framework, the proposed framework has the following characteristics:

(1) Under the nested probabilistic linguistic decision-making environment, the model is not limited to the traditional structure of a decision-making problem. Depending on the inner-layer and outer-layer with two types, ordinal or nominal linguistic terms, decision-making issues related to the preference degrees or features can be solved in the proposed framework, as well as discrimination, and optimization problems.

(2) In the proposed framework, depending on the data, a machine learning method, i.e., the XGBoost algorithm, is applied to determine the attribute weights, which provides a scientific way to indicate the importance of attributes for decision makers. Moreover, the probabilities in the linguistic model are calculated by the CPA, combined with fuzzy theory and data-based method. In this way, the established framework has features of objectiveness and interpretability.

(3) There is no specific decision-making method under the proposed framework. Each decision-making method has its own characteristics, thus, the framework is flexible and rational to adapt different problems, such as behavior decisions, cognitive decisions, and uncertain decisions. In addition, a data-based process is an essential part in the framework, which guarantees the objectivity principle. In this sense, the proposed framework not only contributes to the linguistic model with machine learning under uncertainty and interpretability, but also enriches the multi-dimensional cognitive decision-making.

(4) The proposed framework presents one of the decision-making problems, the ranking of bank credit. With the emergence of artificial intelligence, fraud detection is one of the utmost concerns for investors and financial authorities49, and related technologies have been deployed for the management of corporate financial risk50. In the case study, we explore the impact of attribute weights considering the noises and rules, and different decision-making methods on each alternative. After comparative analysis of attribute weights, the importance of each attribute for alternatives is portrayed and the effect is presented clearly. The phenomenon provides the basis that managers or decision makers could set a plan to adjust the impact of each attribute on alternatives according to the rules of attribute weights. In addition, the results indicate that the proposed framework has good stability and effectiveness.

Conclusions

At a genuine numeral network age with the driving of high and new technology, decision-making framework not only focuses on the most satisfied selection objectively driven by big data, but also the interpretability depending on the cognitive information and dealing with the uncertainty. Under such a complex environment, this study proposes a cognitive decision-making framework combining the XGBoost algorithm with the constrained parametric approach (CPA) using the nested probabilistic linguistic term sets (NPLTSs). We firstly provide a flexible linguistic model for decision makers to describe multi-dimensional and uncertain information that may belong to preference degree or nominal feature. And then, we obtain the complete nested probabilistic linguistic information combined with the CPA approach and the historical data. After that, the XGBoost algorithm is applied to determine the attribute weights, presenting the importance of each attribute scientifically. Finally, a case study concerning the ranking of bank credit is given to demonstrate the flexible applicability of the proposed decision-making framework. Besides, the comparative analysis explores the impact of each attribute weight on ranking, and reveals the rules between attributes and alternatives. The results of the case study show the good smoothness and the stability that the best one is constant by using two different fusion ways. However, it is important to acknowledge that the considered attributes are limited by data accessibility constraints, particularly the four selected attributes, which may not fully capture all relevant factors. In general, this work provides an objective and cognitive decision-making framework to deal with multi-dimensional and uncertain information, and presents the reliable process from the perspectives of theory and application, respectively. Policy makers and managers can choose the suitable decision-making method to solve a specific problem using the proposed decision-making framework. In the future, we will continue to focus on cognitive decision-making with NPLTSs in prescriptive analytics, artificial intelligence and big data analysis.

Data availability

The datasets generated and analyzed during the current study are based on publicly available data from the book Python Big Data Analysis and Machine Learning Business Case Practice.

References

Akter, S., Michael, K., Uddin, M. R., McCarthy, G. & Rahman, M. Transforming business using digital innovations: the application of AI, blockchain, cloud and data analytics. Ann. Oper. Res. 308, 7–39 (2022).

Chukwuma, U., Gebremedhin, K. G. & Uyeh, D. D. Imagining AI-driven decision making for managing farming in developing and emerging economies. Comput. Electron. Agric. 221, 108946 (2024).

Luckyardi, S., Rahayu, A., Adiwibowo, L. & Hurriyati, R. Information technology in evolutionary strategic management: decision support system in smart university. J. Eng. Sci. Technol. 18, 1561–1569 (2023).

Dias, L. C., Passeira, C., Malca, J. & Freire, F. Integrating life-cycle assessment and multi-criteria decision analysis to compare alternative biodiesel chains. Ann. Oper. Res. 312, 1359–1374 (2022).

Chae, B., Sheu, C. & Park, E. O. The value of data, machine learning, and deep learning in restaurant demand forecasting: Insights and lessons learned from a large restaurant chain. Decis. Support Syst. 184, 114291 (2024).

Ulhe, P. P. et al. Flexibility management and decision making in cyber-physical systems utilizing digital lean principles with Brain-inspired computing pattern recognition in Industry 4.0. Int. J. Comput. Integr. Manuf. 37, 708–725 (2024).

Pang, Q., Wang, H. & Xu, Z. Probabilistic linguistic linguistic term sets in multi-attribute group decision making. Inf. Sci. 369, 128–143 (2016).

Wang, X., Xu, Z., Wen, Q. & Li, H. A multidimensional decision with nested probabilistic linguistic term sets and its application in corporate investment. Econ. Res.-Ekon. Istraz. 34, 3382–3400 (2021).

Wang, X., Xu, Z. & Gou, X. Nested probabilistic-numerical linguistic term sets in two-stage multi-attribute group decision making. Appl. Intell. 49, 2582–2602 (2019).

Wang, X., Xu, Z. & Li, H. Evaluation of government investment using nested probabilistic linguistic preference relations based on graph theory. Technol. Econ. Dev. Econ. (2022).

Wang, X., Xu, Z., Gou, X. & Trajkovic, L. Tracking a maneuvering target by multiple sensors using extended kalman filter with nested probabilistic-numerical linguistic information. IEEE Trans. Fuzzy Syst. 28, 346–360 (2020).

Wang, X., Li, Y., Xu, Z. & Luo, Y. Nested information representation of multi-dimensional decision: An improved PROMETHEE method based on NPLTSs. Inf. Sci. 607, 1224–1244 (2022).

Gao, Y. et al. Mechanical equipment health management method based on improved intuitionistic fuzzy entropy and case reasoning technology. Eng. Appl. Artif. Intell. 116, 105372 (2022).

Zhu, H. et al. A structure optimization method for extended belief-rule-based classification system. Knowl Based Syst. 203, 106096 (2020).

Ren, P., Zhu, B., Ren, L. & Ding, N. Online choice decision support for consumers: Data-driven analytic hierarchy process based on reviews and feedback. J. Oper. Res. Soc. 74, 2227–2240 (2023).

Fan, S., Liang, H., Dong, Y. & Pedrycz, W. A personalized individual semantics-based multi-attribute group decision making approach with flexible linguistic expression. EXPERT Syst. Appl. 192, 116392 (2022).

Zhang, S., Wu, Z., Ma, Z., Liu, X. & Wu, J. Wasserstein distance-based probabilistic linguistic TODIM method with application to the evaluation of sustainable rural tourism potential. Econ. Res.-Ekon. Istraz. 35, 409–437 (2022).

Ye, J. et al. A Novel Diversified Attribute Group Decision-Making Method Over Multisource Heterogeneous Fuzzy Decision Systems With Its Application to Gout Diagnosis. IEEE Trans. Fuzzy Syst. 31, 1780–1794 (2023).

Chen, B., Tong, R., Gao, X. & Chen, Y. A novel dynamic decision-making method: Addressing the complexity of attribute weight and time weight. J. Comput. Sci. 77, 102228 (2024).

Fu, S. & Xiao, Y. Study on venture capital multi-attribute group decision-making based on improved Hamming-Hausdorff distance and weighted bidirectional projection. Biomed. Signal Process. Control 91, 105985 (2024).

Akyurt, I. Z., Pamucar, D., Deveci, M., Kalan, O. & Kuvvetli, Y. A flight base selection for flight academy using a rough macbeth and rafsi based decision-making analysis. IEEE Trans. Eng. Manag. 71, 258–273 (2024).

Li, C., Yi, J., Wang, H., Zhang, G. & Li, J. Interval data driven construction of shadowed sets with application to linguistic word modelling. Inf. Sci. 507, 503–521 (2020).

Deveci, M., Pekaslan, D. & Canitez, F. The assessment of smart city projects using zSlice type-2 fuzzy sets based interval agreement method. Sustain. Cities Soc. 53, 101889 (2020).

D’Alterio, P., Garibaldi, J. & Wagner, C. A Constrained Parametric Approach for Modeling Uncertain Data. IEEE Trans. FUZZY Syst. 30, 3967–3978 (2022).

Lin, M., Huang, C., Xu, Z. & Chen, R. Evaluating IoT Platforms Using Integrated Probabilistic Linguistic MCDM Method. IEEE Internet Things J. 7, 11195–11208 (2020).

Finger, G. S. W. & Lima-Junior, F. R. A hesitant fuzzy linguistic QFD approach for formulating sustainable supplier development programs. Int. J. Prod. Econ. 247, 108428 (2022).

Gou, X., Xu, Z. & Zhou, W. Interval consistency repairing method for double hierarchy hesitant fuzzy linguistic preference relation and application in the diagnosis of lung cancer. Econ. Res.-Ekon. Istraz. 34, 1–20 (2021).

Ma, X., Qin, J., Martinez, L. & Pedrycz, W. A linguistic information granulation model based on best-worst method in decision making problems. Inf. FUSION 89, 210–227 (2023).

Liu, F., Liu, S. & Jiang, G. Consumers’ decision-making process in redeeming and sharing behaviors toward app-based mobile coupons in social commerce. Int. J. Inf. Manag. 67, 102550 (2022).

Yuan, J., Lu, W., Ding, H., Liu, J. & Mahmoudi, A. A novel Z-number based real option (ZRO) model under uncertainty: application in public-private-partnership refinancing value evaluation. Expert Syst. Appl. 213, 118808 (2023).

Su, W., Luo, D., Zhang, C. & Zeng, S. Evaluation of online learning platforms based on probabilistic linguistic term sets with self-confidence multiple attribute group decision making method. EXPERT Syst. Appl. 208, 118153 (2022).

Lin, M., Chen, Z., Xu, Z., Gou, X. & Herrera, F. Score function based on concentration degree for probabilistic linguistic term sets: An application to TOPSIS and VIKOR. Inf. Sci. 551, 270–290 (2021).

Chen, X., Wang, H. & Li, X. Doctor recommendation under probabilistic linguistic environment considering patient’s risk preference. Ann. Oper. Res. https://doi.org/10.1007/s10479-022-04843-9 (2022).

Zhang, C., Xu, Z., Gou, X. & Chen, S. An online reviews-driven method for the prioritization of improvements in hotel services. Tour. Manag. 87, 104382 (2021).

Shi, X., Wong, Y. D., Li, M.Z.-F., Palanisamy, C. & Chai, C. A feature learning approach based on XGBoost for driving assessment and risk prediction. Accid. Anal. Prev. 129, 170–179 (2019).

Zhou, J. et al. Estimation of the TBM advance rate under hard rock conditions using XGBoost and Bayesian optimization. Undergr. SPACE 6, 506–515 (2021).

Zavadskas, E. K., Turskis, Z., Volvaciovas, R. & Kildiene, S. Multi-criteria assessment model of technologies. Stud. Inform. Control 22, 249–258 (2013).

Wellalage, N. H., Thrikawala, S. & Ghardallou, W. Political connections, family ownership and access to bank credit. FINANCE Res. Lett. https://doi.org/10.1016/j.frl.2022.103347 (2022).

Krivko, M. A hybrid model for plastic card fraud detection systems. Expert Syst. Appl. 37, 6070–6076 (2010).

Alonso-Robisco, A. & Carbó, J. M. Can machine learning models save capital for banks? Evidence from a Spanish credit portfolio. Int. Rev. Financ. Anal. 84, 102372 (2022).

Dan, O., Parikh, V. & Davison, B. D. Improving IP Geolocation using Query Logs. in Proceedings of the Ninth ACM International Conference on Web Search and Data Mining 347–356 (Association for Computing Machinery, New York, NY, USA, 2016). https://doi.org/10.1145/2835776.2835820.

Wang, Y. & Qian, Y. Python Big Data Analysis and Machine Learning Business Case Practice (China Machine Press, 2020).

Zhang, H. et al. Managing flexible linguistic expression and ordinal classification-based consensus in large-scale multi-attribute group decision making. Ann. Oper. Res. https://doi.org/10.1007/s10479-022-04687-3 (2022).

Ahmed, F. & Kim, K.-Y. Recursive approach to combine expert knowledge and data-driven RSW weldability certification decision making process. Robot. Comput.-Integr. Manuf. 79, 102428 (2023).

Yang, F. X., Li, Y., Li, X. & Yuan, J. The beauty premium of tour guides in the customer decision-making process: An AI-based big data analysis. Tour. Manag. 93, 104575 (2022).

Wei, G. et al. Algorithms for probabilistic uncertain linguistic multiple attribute group decision making based on the GRA and CRITIC method: application to location planning of electric vehicle charging stations. Econ. Res.-Ekon. Istraz. 33, 828–846 (2020).

Adhikari, S. et al. Exploiting linguistic information from Nepali transcripts for early detection of Alzheimer’s disease using natural language processing and machine learning techniques. Int. J. Hum.-Comput. Stud. 160, 102761 (2022).

Lan, J. et al. CODAS methods for multiple attribute group decision making with interval-valued bipolar uncertain linguistic information and their application to risk assessment of Chinese enterprises’ overseas mergers and acquisitions. Econ. Res.-Ekon. Istraz. 34, 3166–3182 (2021).

Bernard, P. et al. A financial fraud detection indicator for investors: an IDeA. Ann. Oper. Res. 313, 809–832 (2022).

Lin, K. & Gao, Y. Model interpretability of financial fraud detection by group SHAP. Expert Syst. Appl. 210, 118354 (2022).

Funding

This work was supported by the National Natural Science Foundation of China under Grant Nos. 72101168, 72271173; and China Postdoctoral Science Foundation under Grant No. 2021M692259.

Author information

Authors and Affiliations

Contributions

X.X W: Data curation; methodology; formal analysis; writing; B.B Z: Methodology; writing-review and editing; data curation; Z.S X: Formal analysis; writing-review and editing; M L: study design; writing-review and editing; investigation; M S: Interpretation; writing-review and editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, X., Zhang, B., Xu, Z. et al. A multi-dimensional decision framework based on the XGBoost algorithm and the constrained parametric approach. Sci Rep 15, 4315 (2025). https://doi.org/10.1038/s41598-025-87207-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-87207-0