Abstract

Adverse Drug Reactions (ADRs) are a pressing public health challenge and a critical aspect of drug discovery. Because clinical trials are limited in scale and duration, a drug cannot be evaluated comprehensively before its market release, so timely and accurate post-marketing detection of ADRs is imperative. A large number of tweets contain information about adverse drug reactions, but their short, sparse, and noisy content makes this information difficult to extract. To address this problem, we regard ADR detection as a question-answering (QA) problem and introduce a neural network framework with multiple GRU layers designed for extracting ADR-related information from tweets. The von Mises-Fisher distribution is applied to derive keyword vectors through tweet sampling. An attention mechanism is employed to enhance the interaction between these keyword vectors and the word sequences within tweets. The credibility of word sequences is evaluated based on the reliability of answer factors. To address concerns related to background information and training speed, we build the question-answering mechanism on a GRU network because of its simple structure and efficient training. Through training, word sequences are mapped to a low-dimensional vector space, generating corresponding answers. Experimental results on two Twitter ADR datasets show that our question-answering mechanism with a multi-GRU architecture significantly improves the accuracy of ADR detection in tweets. Our method achieved F1-scores of 81.3% and 73.3% on the two datasets, respectively, while consistently maintaining a higher recall.

Introduction

Twitter is often considered a microblogging platform1, but it is also frequently classified as a social network. Owing to the rapid development of the internet, people can conveniently post tweets through the Twitter web interface or from a variety of mobile devices such as smartphones. As a result, more than 50 million posts are published every day according to Twitter’s official reports. The rich, large-scale data obtained from Twitter provides opportunities for various research tasks, such as sentiment analysis, opinion mining, and Adverse Drug Reaction (ADR) detection2. ADRs are one of the major causes of mortality and morbidity in health care2. However, traditional post-market ADR surveillance systems face significant challenges, including substantial underreporting, incomplete data, and delayed reporting3. These issues arise from limitations in the scale and duration of clinical trials and the infrequent occurrence of ADRs. Thus, detecting ADRs in a timely and accurate manner is of paramount significance. Moreover, advances in natural language processing (NLP)4 and deep learning (DL)5 make it increasingly feasible to detect ADRs automatically from massive unstructured data.

Social media, such as Twitter, is a useful platform for sharing health-related information due to its popularity6. Moreover, real-time information about the ADRs of post-market drugs is often published on social media before it has been officially reported, making social media an effective means of public health surveillance, especially for ADRs. Therefore, more and more researchers focus on detecting ADRs from social media. Nikfarjam et al.6 mined adverse drug reaction mentions using sequence labeling with word embedding cluster features, and they collected the initial version of the PSB2016 data, which stimulated research interest in detecting ADRs from social media. Cocos et al.7 adopted a CNN and Rezaei et al.8 employed an attention mechanism to detect ADRs from Twitter and DailyStrength. However, it is difficult to detect ADRs from social media because social texts are colloquial and posts that describe ADRs or drugs are sparse, so methods that perform well on written biomedical texts such as PubMed cannot be directly applied to social texts. Traditional machine learning-based methods such as SVM use various features, such as word embeddings, position features and medical knowledge, to improve classification, but their detection performance mainly depends on time-consuming manual heuristic rules or on features designed for a particular task or dataset. Thus, researchers turned to deep learning-based methods, which have become dominant in NLP tasks, to automatically learn latent features and detect ADRs, achieving better detection and generalization performance. Zhao et al.9 proposed collocation and aggregated representation models with multi-head attention to identify ADRs, making full use of the collocation information in the training data to improve the representation of medical concepts and enhance performance. Gupta et al.10 employed a co-training method to extract ADR mentions from tweets, exploiting a large pool of unlabeled tweets to augment the limited supervised training data and enhance overall performance. Meanwhile, Li et al.11 integrated BERT and emotional features to detect ADRs, obtaining better results owing to externally matched drugs and learned emotional information.

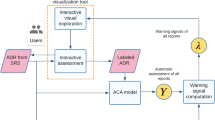

The overall architecture of the proposed QA framework, consisting of input layer, process layer and output layer. First, the original tweets are preprocessed using multiple rules to obtain more normal texts, then QA pairs are generated via matching drug and symptom datasets. Meanwhile, the vMF distribution is employed to focus on pivotal tweets and capture important semantic information. Second, word embeddings generated by a pre-trained word embedding set are fed into the multi-GRU layer and attention layer to extract deep semantic information. Finally, the outputs of the multi-GRU layer and attention layer are concatenated to predict the results.

Traditional machine learning- and deep learning-based methods achieve certain results, but these results are not yet satisfactory for practical needs. Noise in social texts and the incomplete semantic information expressed by short texts are likely reasons for the limited performance. Existing methods alleviate noise by, for example, preserving word stems and removing noisy content such as hyperlinks. Furthermore, introducing external resources such as SIDER12 and UMLS13 is an effective way to alleviate semantic deficiencies. Researchers also exploit extended source text and information-enhanced methods (e.g., interactive attention14) to supplement semantic information or enhance semantic expression. Recently, Li et al.15 employed a sequence labeling framework to extract document-level relations from biomedical texts. It achieved better results than other document-level relation extraction methods owing to capturing more complete semantic information of entities. Meanwhile, Chen et al.16 leveraged reading comprehension and prior knowledge for biomedical relation extraction. Their method outperformed other methods because of the novel framework and supplementary prior knowledge. Ramamoorthy et al.17 regarded ADE extraction as a Question-Answering problem and took inspiration from the Machine Reading Comprehension (MRC) literature, obtaining better accuracy. The aforementioned methods draw on techniques from different fields to make up for the shortcomings of existing methods. Although they have been applied only to written texts, their results indicate that introducing methods from other tasks may be helpful for distinguishing ADR from non-ADR posts.

Inspired by Ramamoorthy et al.17, who adopt an attention mechanism to capture important semantic information, and Li et al.15, who exploit the interactions between relations by constructing a sequence labeling-based framework, and targeting the problems of social texts, we regard ADR detection as a Question-Answering (QA) problem and propose a novel neural network framework with a multi-GRU layer to detect ADRs from social texts. Our framework consists of a QA generating layer that automatically constructs a question-answering pair for every tweet, a word embedding expression layer that generates tweet token representations, a multi-GRU fusion layer that extracts more effective information from tweets, an attention layer that enhances the semantic information of each tweet, and an output layer that yields the final prediction for each tweet, as shown in Fig. 1. The proposed model makes full use of the interaction between the question and answer generated from each tweet to alleviate semantic incompleteness, which in turn improves ADR detection performance.

The von Mises-Fisher (vMF) distribution18 is employed to model high-dimensional directional data (such as short social texts with topics) and to refine keywords through its probabilistic clustering. For the ADR detection task, drugs and co-occurring symptoms, and especially the semantic relation between them, are important for improving detection performance. In addition, tweets related to ADRs share similar topics, such as topics concerning drugs. Therefore, the vMF distribution is introduced into our model to refine keywords, thus enhancing the overall performance.

The proposed method was evaluated on the Twitter ADR datasets from PSB2016-Task119 and the Social Media Mining for Health Applications (SMM4H) Workshop & Shared Task 2018-Task320, in which ADR and non-ADR tweets are annotated. Experimental results demonstrate that our model achieves strong performance on both corpora.

The main contributions of our work can be summarized as follows.

- A question-answering framework is proposed to detect ADRs from social media. The framework harnesses multi-GRU to fuse different semantic information, thus improving the overall performance of our model. Moreover, an attention mechanism is incorporated to optimize the efficiency and accuracy of the proposed framework in extracting answers from tweets. Finally, the concatenation of the attention layer and multi-GRU layer outputs is fed into the softmax function to predict whether the content pertains to ADR or non-ADR.

- The vMF distribution is introduced into the proposed framework to extract the prominent vectors from tweets. The vMF distribution helps the proposed model focus on the key tweets, capturing the important semantic information. Consequently, it enhances the semantic expression of individual tweets, thus increasing the distinguishing ability of the proposed model.

- Two social media datasets pertaining to ADR are employed to verify the effectiveness of the proposed model. The experimental results highlight that extracting semantic information between questions and answers using multi-GRU proves beneficial in compensating for the inherent lack of semantics in social text. Additionally, the vMF distribution and attention mechanism can help our model capture the pivotal information for distinguishing between ADR and non-ADR instances.

Related work

Social media platforms are where people publish trivial matters of daily life, opinions on major events, and things that have happened to them. Thus, more and more researchers use texts collected from these platforms for opinion mining, product recommendation, depression detection, sentiment analysis, event extraction, etc. Although researchers detect ADRs and their relations from Electronic Health Records (EHR)21 and clinical notes using NLP techniques, social media offers timelier and broader opportunities to detect ADRs than traditional pharmacovigilance systems such as those of the FDA. Therefore, more and more researchers focus on detecting ADRs from social texts.

Filtering out posts that contain adverse reactions is regarded as the first step in discovering ADRs from social media; ADRs are then extracted from these posts. The first step is crucial and is typically treated as a text classification problem. Existing ADR detection methods can be roughly divided into two categories: traditional machine learning methods, which depend on designed rules and features, and neural network methods, which automatically learn parameters and high-dimensional, abstract features. ADRMine6, a hybrid of a lexicon and conditional random fields (CRF) with word embedding cluster features, was proposed for ADR concept extraction from Twitter and DailyStrength. Sarker et al.22 utilize rich features to classify ADR and non-ADR tweets, and their experimental results illustrate the benefits of incorporating various semantic features such as topics, concepts, sentiments, and polarities. Dai et al.19 explore several entity recognition features, feature conjunctions, and feature selection and analyze their characteristics and impacts on the recognition of ADRs, outperforming the partial-matching-based method by 12.2%. Wang et al.23 employ a variety of techniques for handling class imbalance and compare their performance on two large imbalanced datasets released for detecting ADR posts, achieving comparable or even better F-scores. Nikfarjam et al.24 use pattern recognition and Sampathkumar et al.25 propose a Hidden Markov Model (HMM) to classify whether a post contains drug side-effect information.

The performance of the aforementioned methods mainly relies on time-consuming manual heuristic rules or on features designed for a particular task or dataset, which is empirical and skill-dependent work26. Consequently, researchers turn to neural network methods, which generalize better, to automatically learn latent features and achieve better ADR detection results. Semi-supervised CNN-based27 and RNN-based2 models have been proposed to detect ADRs from social texts in a public Twitter ADR dataset28; these methods can leverage the unlabeled data that is abundant on social media and achieved state-of-the-art performance at the time. Researchers also employ transfer learning29, co-training10 and multi-task learning30 to extract ADRs, classify tweets mentioning ADRs and normalize ADR concepts. The above-mentioned methods generally perform better because they automatically learn deep semantic information. Nevertheless, the tokens in social text are mainly represented using pre-trained word embeddings, which can only learn a context-independent representation for each token. This is not enough to capture more complete semantics, which makes it difficult to improve performance further. Li et al.11 integrate rich emotional context information with Bidirectional Encoder Representations from Transformers (BERT)31 to detect tweets related to ADRs, obtaining the best results on two Twitter datasets. Hussain et al.32 and Rawat et al.33 also fine-tune BERT to detect ADRs, and their models yield better F-scores on four datasets. However, pre-trained models such as BERT31 and GPT34 place high demands on computing hardware such as GPUs.

In this paper, to reduce dependence on hardware resources, we adopt traditional word embeddings to express basic semantic information. To ensure that the overall performance is not compromised, we construct question-answering pairs of tweets to learn more complete semantic information, inspired by Li et al.15, and utilize the vMF distribution18 to select the important tweets and an attention mechanism to capture the key tokens, which enhances the distinguishing ability of the proposed model and improves its performance.

Methods

The purpose of this study is to classify whether tweets are related to ADRs. However, semantic information, especially more complete semantic information, is difficult to capture accurately because of the noise caused by colloquial expression and the incomplete expression caused by the length limit on input. Hence, we present a question-answering framework with multi-GRU and an attention mechanism to alleviate these limitations and improve detection performance. The proposed framework is composed of three parts, namely, the input layer, the process layer and the output layer. The input layer contains (i) the question-answering generating layer (Section “Question-answering generating layer”), which automatically constructs a question-answering pair for every tweet, and (ii) the word embedding expression layer (Section “Word representation”), which generates the representation of the question-answering pair. The process layer includes (iii) the multi-GRU fusion layer, which extracts more effective information from tweets (Section “Multi-GRU fusion layer”), and (iv) the attention layer, which focuses on important tokens and enhances semantic information (Section “Attention layer”). Finally, (v) the output layer yields the final prediction results (Section “Output layer”).

Question-answering generating layer

Social texts are usually limited to a certain length, so they often express semantic information insufficiently or are difficult to understand. Capturing more complete semantic information is key to detecting ADRs. Consequently, in this paper, question-answering pairs are generated to relieve the shortcoming of insufficient semantic expression. However, generating appropriate question-answer pairs may not be straightforward for tweets that contain metaphors, slang, or abbreviations, which can result in inaccurate question-answer generation. Such tweets have a high degree of contextual dependence and ambiguity, making it difficult to translate them directly into precise question-answering pairs. Therefore, to generate reasonable question-answering pairs, tweets are pre-processed by different rules, and we first pre-define a drug dictionary and collect the corresponding co-occurring symptoms, inspired by the published work of Li et al.11. The dictionary contains multiple expressions of each drug and each symptom. Then, drugs and symptoms are extracted from the tweets in the corpus. Last, question-answering pairs are obtained from question-answering templates, such as “will [tweet] contains [symptoms]?” and “will taking [drug] cause [symptoms]?”, and the answer is either “yes” or “no”, as shown in Fig 2.
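The dictionary-matching and template-filling steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two small dictionaries, the function names `match_entities` and `build_questions`, and the use of word-boundary matching are hypothetical and are not taken from the paper; only the two template strings come from the text.

```python
import re

# Hypothetical mini-dictionaries (canonical name -> surface forms).
# The drug and symptom lists actually used in the paper are not shown here.
DRUG_DICT = {"sertraline": ["sertraline", "zoloft"], "ibuprofen": ["ibuprofen", "advil"]}
SYMPTOM_DICT = {"nausea": ["nausea", "nauseous"], "headache": ["headache", "headaches"]}

# Template strings quoted from the text.
TWEET_TEMPLATE = "will [tweet] contains [symptoms]?"
DRUG_TEMPLATE = "will taking [drug] cause [symptoms]?"


def match_entities(tweet, dictionary):
    """Return canonical names whose surface forms occur as whole words in the tweet."""
    text = tweet.lower()
    return [
        name
        for name, forms in dictionary.items()
        if any(re.search(r"\b" + re.escape(form) + r"\b", text) for form in forms)
    ]


def build_questions(tweet):
    """Fill the question templates with the drugs and symptoms found in one tweet."""
    drugs = match_entities(tweet, DRUG_DICT)
    symptoms = match_entities(tweet, SYMPTOM_DICT)
    questions = []
    for symptom in symptoms:
        questions.append(
            TWEET_TEMPLATE.replace("[tweet]", tweet).replace("[symptoms]", symptom)
        )
        for drug in drugs:
            questions.append(
                DRUG_TEMPLATE.replace("[drug]", drug).replace("[symptoms]", symptom)
            )
    return questions
```

For a tweet such as "Zoloft makes me so nauseous", the sketch produces one tweet-level question and one drug-level question; the "yes"/"no" answers would come from the corpus annotation and are not modeled here.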

Word representation

Tweets naturally contain topic-level semantic information, especially tweets related to ADRs (for example, drug topics), so tweets may be regarded as directional data. Moreover, drugs and co-occurring symptoms are essential for improving ADR detection performance, so capturing the keywords is important. The vMF distribution is suitable for directional data and inherently possesses the characteristic of probabilistic clustering. Therefore, it is well suited to detecting ADRs from social media data.

Specifically, the mean direction parameter \(\mu\) of the vMF distribution can be interpreted as the center or dominant direction of the keyword vectors, while the concentration parameter \(\kappa\) reflects the degree of tightness or clustering of the vectors around this center. Then, the similarity between each keyword vector and the dominant direction \(\mu\) can be calculated. The detailed calculation process is described as follows.

Suppose \(w_i\) is the entity of interest (i.e., a drug or symptom) in the word sequence of the tweet. The word sequence \(w_i\) is mapped into a word embedding vector \(adr\) by looking up the word embedding table \(W_{adr}\). The word embedding table \(W_{adr}\) can be obtained by random initialization or from a pre-trained word embedding set.

Let \(s_q\) be the set of answer factors corresponding to the question Q in the word sequence \(s_{tweet}\). For the QA system, the probability distribution of the word sequence \(s_{tweet}\) in the tweet can be described by the Dirichlet distribution:

Where, \(\phi _q\) is the \(K_q\)-dimensional vector and \(K_q\) is the number of answer factors for question Q.

For the question Q, the trustworthiness of the k-th answer factor \(a_k\) can be described by a binary label \(t_{ak}\): if the answer factor \(a_k\) is the answer to the question Q, then \(t_{ak} = 1\); otherwise, \(t_{ak} = 0\). The probability distribution of the binarized label \(t_{ak}\) can be described by the Bernoulli distribution:

where \(\gamma _{ak}\) is the prior probability that the label is true, which determines how likely each answer factor \(a_k\) is to be a real answer. \(\gamma _{ak}\) follows the Beta distribution:

where \(\alpha\) and \(\beta\) are the hyperparameters of the Beta distribution.

To improve the accuracy of detecting ADRs from tweets, the reliability of word semantics and answer factors in the word sequence are employed to determine the label of answer keywords. Equations (1) and (2) are combined as follows:

where \(P\left( Z_{qm} = k|\phi _{qk},t_{qk}\right)\) is the key word factor of the answer, \(\phi _{qk}\) and \(t_{qk}\) denote the probability distribution of the word sequence and the binarized label, respectively.

After determining the label of the keyword factor of the answer, the word embedding vector \(adr^{qm}\) describing the semantics of the corresponding factor is obtained from the word embedding table \(W_{adr}\). The word embedding vector \(adr^{qm}\) is sampled according to Eq. (4), and the keyword vector \(s^{qm}\) corresponding to the word sequence \(s_{tweet}\) is obtained. The probability distribution of \(s^{qm}\) can then be described as \(s^{qm}\) \(\sim\) \(vMF\left( \mu _{qk}, \kappa _{qk}\right)\),

where \(\mu _{qk}\) is the mean direction parameter and \(\kappa _{qk}\) is the concentration parameter.

To better describe the semantic features of the answer factor in the word sequence, \(\mu _{qk}\) and \(\kappa _{qk}\) are subject to their joint distribution \(\mu _{qk}\), \(\kappa _{qk}\) \(\sim\) \(\Phi (\mu _{qk}, \kappa _{qk}:m_0, R_0, c)\), which is defined as follows:

where \(C_D(\kappa )\) \(=\) \(\frac{\kappa ^{\frac{D}{2} - 1}}{I_{\frac{D}{2} - 1}(\kappa )}\), \(I_{\frac{D}{2} - 1}(\cdot )\) is the modified Bessel function of the first kind, and \(m_0\), \(R_0\) and c are constants.
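To make the roles of the mean direction \(\mu\) and the concentration \(\kappa\) more concrete, the following Python sketch estimates both from a handful of keyword vectors and scores a vector by its cosine similarity to \(\mu\). It is a simplified illustration, not the Bayesian procedure with the joint prior described above: the function names are hypothetical, and \(\kappa\) is obtained with the closed-form approximation of Banerjee et al. (2005), which is swapped in here as an assumption.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so that it lies on the unit hypersphere."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def fit_vmf(vectors):
    """Estimate the mean direction mu and an approximate concentration kappa."""
    unit = [normalize(v) for v in vectors]
    dim = len(unit[0])
    resultant = [sum(u[i] for u in unit) for i in range(dim)]
    r_norm = math.sqrt(sum(x * x for x in resultant))
    mu = [x / r_norm for x in resultant]
    r_bar = r_norm / len(unit)  # mean resultant length, between 0 and 1
    if r_bar >= 1.0 - 1e-12:
        return mu, math.inf  # all vectors coincide: concentration is unbounded
    # Approximation from Banerjee et al. (2005); an assumption, not the paper's estimator.
    kappa = r_bar * (dim - r_bar ** 2) / (1.0 - r_bar ** 2)
    return mu, kappa


def similarity_to_mean(vector, mu):
    """Cosine similarity between a keyword vector and the mean direction mu."""
    return sum(a * b for a, b in zip(normalize(vector), mu))
```

Tightly clustered keyword vectors give a large \(\kappa\), while vectors spread over the sphere give a small one, which matches the interpretation of \(\kappa\) as the degree of clustering around \(\mu\).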

Multi-GRU fusion layer

Similar to the LSTM35, the GRU36 can adaptively reset or update its memory content. Each GRU has only a reset gate and an update gate, so its configuration is simpler than the LSTM’s. However, unlike the LSTM, the GRU fully exposes its memory content at each time step and balances the previous memory content and the new memory content strictly using leaky integration, albeit with an adaptive time constant controlled by the update gate \(z^j_t\). At time step t, the state \(h^j_t\) of the j-th GRU can be described as:

where \(h^j_{t-1}\) and \(\tilde{h}^j_t\) are the previous memory content and the new candidate memory content, respectively.

The update gate \(z^j_t\) controls how much the unit updates its state with new candidate content and how much of the previous content it retains. Based on the previous hidden state \(h^j_{t-1}\) and the current input \(s^{qm}_{current}\), the update gate is computed as follows:

Similar to the LSTM, the GRU takes a linear sum between the existing state and the newly computed state. However, the GRU does not have any mechanism to control the exposure of its state. Therefore, every time the GRU is computed, its state is completely exposed.

As in the traditional recurrent unit37, the candidate activation can be described as:

where \(r_t\) is a set of reset gates and \(\otimes\) denotes element-wise multiplication.

When the reset gate is off (\(r^j_t\) close to 0), it effectively makes the unit behave as if it were reading the first symbol of the input sequence, allowing it to forget the previously computed state. The reset gate is calculated similarly to the update gate:

To obtain sufficient global semantic information, the multi-GRU layer is built, consisting of the forward GRU, the current GRU, and the backward GRU.
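The gate computations described in this section can be illustrated with a dependency-free Python sketch of one GRU step in its standard form (update gate, reset gate, candidate activation, leaky integration). The weight names `W_*` and `U_*`, the omission of bias terms, and the helper names are assumptions for illustration. Only a forward and a backward pass are combined here; how the paper defines its third, "current" GRU is not specified in the text, so it is not modeled.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def gru_step(x, h_prev, p):
    """One GRU update; p maps hypothetical names W_* / U_* to weight matrices."""
    # Update gate z and reset gate r, both computed from the input and previous state.
    z = [sigmoid(a + b) for a, b in zip(matvec(p["W_z"], x), matvec(p["U_z"], h_prev))]
    r = [sigmoid(a + b) for a, b in zip(matvec(p["W_r"], x), matvec(p["U_r"], h_prev))]
    # Candidate activation uses the reset-gated previous state (element-wise product).
    gated = [ri * hi for ri, hi in zip(r, h_prev)]
    h_cand = [
        math.tanh(a + b) for a, b in zip(matvec(p["W_h"], x), matvec(p["U_h"], gated))
    ]
    # Leaky integration between the previous state and the candidate state.
    return [(1.0 - zi) * hp + zi * hc for zi, hp, hc in zip(z, h_prev, h_cand)]


def run_gru(sequence, p, hidden_size):
    """Run a GRU over a sequence of input vectors and return every hidden state."""
    h = [0.0] * hidden_size
    states = []
    for x in sequence:
        h = gru_step(x, h, p)
        states.append(h)
    return states


def forward_backward_states(sequence, p_fwd, p_bwd, hidden_size):
    """Concatenate forward and backward GRU states at each position."""
    fwd = run_gru(sequence, p_fwd, hidden_size)
    bwd = run_gru(sequence[::-1], p_bwd, hidden_size)[::-1]
    return [f + b for f, b in zip(fwd, bwd)]
```

With all weights set to zero, both gates equal 0.5 and the candidate is zero, so each step simply halves the previous state, which is a convenient sanity check of the leaky-integration formula.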

Attention layer

Attention mechanisms have demonstrated success in a wide range of tasks, such as relation classification38 and NER39, because they help a model focus on key information and improve overall performance. To capture the correlation between the word sequence \(s_{tweet}\) in the tweet and the keyword vector \(s^{qm}\) obtained after training through the GRU unit, an attention mechanism is introduced into our model. The attention mechanism of the proposed QA system is described as follows.

where \(score(\cdot ,\cdot )\) is the alignment function based on the Manhattan distance and cosine distance. The function \(score(\cdot ,\cdot )\) is given in Eq. (12).

where \(W_a\) is a weight matrix.

In our model, the answer factor \(a_k\) in the word sequence \(s^{current}_{tweet}\) and the attention score of the current word sequence are used to better evaluate the correctness and credibility of the answer. Therefore, the answer credibility value can be defined as:

where n is the number of words in the current word sequence \(s^{current}_{tweet}\), and \(P(t_{ak} = 1 | t_{ak}, \gamma _{ak})\) is the probability that the word in the current word sequence is the answer to the question Q.
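The general pattern of this attention layer (score each hidden state against the keyword vector, normalize the scores, and form a weighted sum) can be sketched as follows. This is a stand-in under explicit assumptions: the score here is plain cosine similarity, whereas the paper's alignment function combines Manhattan and cosine distance with a weight matrix \(W_a\) whose exact form is not reproduced; the function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def attention_weights(keyword, hidden_states):
    """Softmax over similarity scores between the keyword vector and each state."""
    scores = [cosine(keyword, h) for h in hidden_states]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def context_vector(weights, hidden_states):
    """Attention-weighted sum of the hidden states."""
    dim = len(hidden_states[0])
    return [sum(w * h[i] for w, h in zip(weights, hidden_states)) for i in range(dim)]
```

Hidden states that point in the same direction as the keyword vector receive the largest weights, so tokens related to the matched drug or symptom dominate the resulting context vector.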

Output layer

For the current word sequence \(s^{current}_{tweet}\), the final weighted vector \(g_{current}\) of the output layer of the QA system is given as follows:

The tanh operation is performed on the vector \(g_{current}\) to obtain the final output \(o_{current}\) of the proposed model, namely:

where \(W_o\) is a weight matrix.

To obtain a better global semantic context for the tweet, the multi-GRU layer is constructed, consisting of the forward GRU, the current GRU, and the backward GRU. Similar to Eq. (14), the multi-GRU can be described as:

The final weighted vector of the output layer based on the multi-GRU network is given as:

By performing a tanh operation on Eq. (17), the final output is described in Eq. (18):

where \(W^T_m\) is a weight matrix of dimension \((3 \cdot d) \times d\), and \(d\) is the dimension of the word vector.

Experiments

Datasets

In our experiments, two ADR-related corpora collected from Twitter are used to verify the effectiveness of our model. The first corpus is from PSB2016-Task1 (named PSB2016) and consists of 10,822 tweets in the original dataset. The other is from the Social Media Mining for Health Applications (SMM4H) Workshop & Shared Task 2018-Task3 (named SMM4H2018), which contains 25,678 annotated tweets. To protect user privacy, both corpora provide only tweet and user IDs and do not allow sharing of the raw tweet text. Therefore, we had to re-crawl the data using the tweet and user IDs through Twitter’s Service Streaming API; ultimately, only 6,529 (60.3%) and 17,809 (69.3%) tweets were still publicly available, respectively. Following Li et al.11, we split each dataset into 80% training, 10% validation and 10% test tweets.

From Table 1, we can see that, for the PSB2016 corpus, the Pos./Neg ratios are 12.9% and 12.3% on the original and re-crawled datasets, and the corresponding Pos./Total ratios are 11.4% and 11.0%. Meanwhile, for the SMM4H2018 corpus, the Pos./Neg ratios are 10.2% and 11.1%, and the Pos./Total ratios are 9.2% and 9.7%, on the original and re-crawled (ours) datasets, respectively. The changes in the ratios are therefore minimal, so the experimental results on the re-crawled datasets are highly comparable to those on the original datasets, and the potential bias introduced by re-crawling can be considered negligible.

Pre-processing

Raw tweets collected from Twitter generally contain grammatical errors, spelling mistakes and ad hoc abbreviations, owing to the casual way people use social networks, and these affect the performance of the model. Tweets also have special characteristics, such as hyperlinks, retweets, emoticons (e.g., a smiling or tear-stained face) and user mentions, which need dedicated pre-processing. Therefore, raw Twitter data has to be normalized to create a dataset that can be easily learned by various algorithms. The two corpora are pre-processed to label tweets. The specific steps are as follows:

- (I) General pre-processing. Four operations are applied: (i) converting the text to lower case; (ii) replacing two or more dots (.) with a space; (iii) removing spaces and quotes (“ and ’) from the ends of the tweet; and (iv) replacing two or more spaces or tabs with a single space.

- (II) User handle. Every Twitter user has a handle associated with them, and users often mention other users in their tweets by handle. We replace all user mentions with the special word USERHANDLE. The regular expression used to match a user mention is @[\S]+. For example, the raw text “@SoozSuze thanks and THANKS for the lozenge #lifesaver” becomes “USERHANDLE thanks and THANKS for the lozenge #lifesaver”.

- (III) Hyperlink. Users often share hyperlinks to other webpages in their tweets. A particular hyperlink is not important for text classification, as it would lead to very redundant features. Therefore, we replace all the hyperlinks in tweets with the word HYPERLINK. The regular expression used to match a hyperlink is ((www\.[\S]+)|(https?://[\S]+)|(http?://[\S]+)). For example, the raw text “#itsyourlife #blessed... https://www.instagram.com/p/BBdiniTLvH7/ Happy Tuesday IG!” becomes “URL Happy Tuesday IG!”

- (IV) Hashtag. A hashtag is a word or phrase without spaces, prefixed by a hash symbol (#), which is used to mark topics and current hot topics, such as #iPad, #News, #ibuprofen. All hashtags are replaced with the same words without the hash symbol, using the regular expression #(\S+). For example, the raw text “Gradual #smoking cessation may be possible with nicotine addiction pill.” becomes “Gradual smoking cessation may be possible with nicotine addiction pill.”

- (V) Retweet. Retweets are tweets that have already been sent by someone else and are shared by other users. Retweets begin with the letters RT. We remove RT from the tweets, as it is not an important feature for the extraction task, using the regular expression \brt\b.

- (VI) Repeat character. People often repeat characters in colloquial language, such as “I’m in a hurryyyy” or “We won, yaaayyyyyy!”. We replace characters that have been repeated more than twice with two characters, using a regular expression with the replacement \1\1.

- (VII) Word-level processing. After applying tweet-level pre-processing, we process the individual words of tweets as follows. (i) Strip any punctuation [‘”?!,.():;] from the word. (ii) Convert two or more letter repetitions to two letters. Some people send tweets like “I am sooooo happpppy”, adding multiple characters to emphasize certain words; this step handles such tweets by converting them to “I am soo happy”. (iii) Remove - and ’. This handles words like t-shirt and their’s by converting them to the more general forms tshirt and theirs. (iv) Check whether the word is valid and accept it only if it is. We define a valid word as one that begins with a letter, with the following characters being letters, digits, dots (.) or hyphens (-).
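The pre-processing steps (I) to (VII) map naturally onto a short chain of regular-expression substitutions. The sketch below is one possible arrangement using Python's standard `re` module; the function names, the order in which the steps are applied, and the exact patterns for dots, whitespace and repeated characters are assumptions rather than the authors' code. Placeholders follow the prose (USERHANDLE and HYPERLINK).

```python
import re


def preprocess_tweet(tweet):
    """Tweet-level normalisation, roughly following steps (I) to (VI)."""
    tweet = tweet.lower()                                             # (I-i) lower case
    tweet = re.sub(r"((www\.[\S]+)|(https?://[\S]+))", " HYPERLINK ", tweet)  # (III)
    tweet = re.sub(r"@[\S]+", "USERHANDLE", tweet)                    # (II) user mentions
    tweet = re.sub(r"#(\S+)", r"\1", tweet)                           # (IV) drop the hash symbol
    tweet = re.sub(r"\brt\b", "", tweet)                              # (V) retweet marker
    tweet = re.sub(r"\.{2,}", " ", tweet)                             # (I-ii) runs of dots
    tweet = re.sub(r"(.)\1{2,}", r"\1\1", tweet)                      # (VI) 3+ repeats -> 2
    tweet = re.sub(r"\s+", " ", tweet)                                # (I-iv) collapse whitespace
    return tweet.strip(" \"'")                                        # (I-iii) trim ends


def preprocess_word(word):
    """Word-level clean-up, following step (VII) (i) to (iii)."""
    word = word.strip("'\"?!,.():;")             # (i) strip punctuation at the ends
    word = re.sub(r"(.)\1+", r"\1\1", word)      # (ii) 2+ repetitions -> 2 letters
    return re.sub(r"[-']", "", word)             # (iii) remove - and '


def is_valid_word(word):
    """Step (VII)(iv): a letter followed by letters, digits, dots or hyphens."""
    return re.fullmatch(r"[a-zA-Z][a-zA-Z0-9.\-]*", word) is not None
```

Hyperlinks are replaced before runs of dots are collapsed so that URLs are matched intact, and whitespace is collapsed last because earlier substitutions can leave doubled spaces behind.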

Hyperparameters

Hyperparameters for the binarized label

For QA systems, the choice of the binarized label \(t_{ak}\) is an important step. Since the answer in our QA system is only “yes” or “no”, the confidence interval of the prior probability \(\gamma _{ak}\) in Eq. (2) can be set to 0.4 \(\sim\) 0.6. According to Eq. (3), the values of the parameters \(\alpha\) and \(\beta\) are tested, and the result is shown in Fig. 3. When \(\alpha = \beta\), as the values of \(\alpha\) and \(\beta\) increase, \(\gamma _{ak}\) becomes more concentrated within the range (0.4, 0.6). Therefore, in our experiments, both \(\alpha\) and \(\beta\) are set to 128.
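The effect of increasing \(\alpha = \beta\) can be checked numerically with the standard library alone. The sketch below draws samples from a symmetric Beta distribution and reports the share that falls inside (0.4, 0.6), alongside the closed-form standard deviation; the function names, sample count and seed are illustrative assumptions.

```python
import random


def fraction_in_range(alpha, beta, low=0.4, high=0.6, samples=20000, seed=0):
    """Monte Carlo estimate of P(low < gamma < high) for gamma ~ Beta(alpha, beta)."""
    rng = random.Random(seed)
    hits = sum(low < rng.betavariate(alpha, beta) < high for _ in range(samples))
    return hits / samples


def beta_std(alpha, beta):
    """Standard deviation of a Beta(alpha, beta) distribution."""
    total = alpha + beta
    return (alpha * beta / (total ** 2 * (total + 1))) ** 0.5
```

For \(\alpha = \beta = 128\) the standard deviation is about 0.031, so the interval (0.4, 0.6) spans roughly three standard deviations on each side of the mean 0.5 and contains almost all of the probability mass, whereas a small setting such as \(\alpha = \beta = 2\) leaves most of the mass outside it.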

Training details

The proposed models were implemented with the open-source deep learning package Keras running on top of TensorFlow 2.0 and Python 3.6. Table 2 shows the other hyperparameters used in our experiments. The 300-dimensional word embeddings are generated by GloVe from a large number of tweets that mention drugs, with the drug list taken from the literature11. The dropout rates are set to 0.1 and 0.15 for SMM4H2018 and PSB2016, respectively, because of their different sizes. The other hyperparameters are the same for the two datasets, including a hidden size of 64, a batch size of 10, 10 epochs, and a token size of 34. In addition, the tokens in tweets are mapped to the vector space. During training, the word vectors are treated as fixed constants.
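For reproducibility, the settings listed above can be collected in a small configuration object. The sketch below is one possible layout, not the authors' code: the class and field names are hypothetical, and reading the “token size of 34” as the maximum number of tokens per tweet is an interpretation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters reported in the text; all field names are hypothetical."""
    dataset: str
    dropout: float
    embedding_dim: int = 300            # GloVe vectors trained on drug-related tweets
    hidden_size: int = 64
    batch_size: int = 10
    epochs: int = 10
    max_tokens: int = 34                # "token size", read here as tokens per tweet
    trainable_embeddings: bool = False  # word vectors are kept fixed during training
    label_prior_alpha: float = 128.0    # alpha for the binarized-label prior
    label_prior_beta: float = 128.0     # beta for the binarized-label prior


CONFIGS = {
    "SMM4H2018": TrainConfig(dataset="SMM4H2018", dropout=0.10),
    "PSB2016": TrainConfig(dataset="PSB2016", dropout=0.15),
}

print(CONFIGS["PSB2016"])
```

Keeping the two datasets in one mapping makes it explicit that only the dropout rate differs between them.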

Results and discussion

Evaluation measures

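Detecting ADR and non-ADR tweets is a binary classification task; thus, precision (P), recall (R) and F1-score (F) are employed as the evaluation measures for comparing our model with other models, as shown in Eqs. 19–21.

\[P = \frac{TP_{ADR}}{TP_{ADR} + FP_{ADR}} \tag{19}\]

\[R = \frac{TP_{ADR}}{TP_{ADR} + FN_{ADR}} \tag{20}\]

\[F = \frac{2 \times P \times R}{P + R} \tag{21}\]

where \(TP_{ADR}\) is the number of ADR tweets correctly classified as ADR, \(FN_{ADR}\) is the number of ADR tweets incorrectly classified as non-ADR, and \(FP_{ADR}\) is the number of non-ADR tweets incorrectly classified as ADR.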
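These measures can be computed directly from the confusion counts defined above. The following sketch uses the standard definitions of precision, recall and F1-score; the function names are illustrative. As a consistency check, it also recomputes the F1-scores of the proposed model from the precision and recall values reported in this paper.

```python
def precision(tp, fp):
    """Fraction of tweets predicted as ADR that are truly ADR."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of true ADR tweets that the model recovers."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall (same scale as the inputs)."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Reported precision and recall of the proposed model, in percent.
print(round(f1_score(71.52, 75.15), 2))  # PSB2016   -> 73.29
print(round(f1_score(78.21, 84.65), 2))  # SMM4H2018 -> 81.3
```

Both values agree with the F1-scores reported for the proposed model (73.29% and 81.30%).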

Baseline methods

To illustrate the effectiveness of our proposed model, multiple baseline methods are selected to compare with our model.

- Lee et al.27 train convolutional neural networks on a variety of self-collected tweets, then adopt majority voting to detect ADR and non-ADR tweets.

- Chowdhury et al.40 propose a multi-task neural network model that jointly learns ADR classification, ADR labelling and ADR indication with different levels of supervision, exploiting the associations among these tasks for ADR monitoring.

- Chen et al.41 build \(\langle\)drug, ADR\(\rangle\) pairs by introducing external knowledge to generate binary features, then integrate the features with the output of BERT.

- Li et al.11 construct a variety of emotion features, including emotion words and emotion embeddings, and input them into BERT to learn abstract semantic information and the relation between ADR and emotion.

- Sakhovskiy et al.42 propose a multi-modal model with state-of-the-art BERT-based models for language understanding and molecular property prediction.

Performance comparison of our method and other existing methods

In this section, our method is compared with other existing methods. Table 3 illustrates the performance of the existing systems and our model. Lee et al.27 and Chowdhury et al.40 only provide the results on PSB2016. Meanwhile, Chen et al.41 and Sakhovskiy et al.42 only verify their model on SMM4H2018. However, our model and Li et al.11 perform experiments on both PSB2016 and SMM4H2018. Therefore, we compare the experimental results on different datasets separately.

Firstly, for PSB2016, Chowdhury et al.40 obtain the best precision of 72.88%, whereas Lee et al.27 achieve the lowest precision of 70.21%. The difference may be caused by the different methods: a voting method is adopted by Lee et al.27 and a multi-task model by Chowdhury et al.40. Compared with the existing models, the precision of our model is 1.3 and 0.7 points higher than that of Lee et al.27 and Li et al.11, respectively, but 1.3 points lower than that of Chowdhury et al.40. In terms of recall, our model exceeds all previously published methods, i.e., by 4.6 points over Chowdhury et al.40, 0.6 points over Li et al.11 and 15.5 points over Lee et al.27. Because ADRs involve human health, missing a true ADR is costly, so the higher recall makes our model more suitable than the other methods for ADR detection. Moreover, our model achieves an F1-score of 73.29%, higher than that of all baselines.

Secondly, for SMM4H2018, our model and Sakhovskiy et al.42 perform better than Chen et al.41 and Li et al.11, namely, 78.21% and 79.40% versus 62.89% and 63.73% in precision, 84.65% and 80.80% versus 69.16% and 66.28% in recall, and 81.30% and 79.90% versus 57.67% and 64.98% in F1-score. Sakhovskiy et al.42 outperform the proposed method in precision by 1.2 points, but the proposed method achieves a recall of 84.65% and an F1-score of 81.30%, surpassing Sakhovskiy et al.42 by 3.8 points and 0.4 points, respectively. In addition, all methods’ recall values are higher than their precision values. This may be because SMM4H2018 is larger than PSB2016.

The performance variance between the two datasets may stem from differences in data size, as more semantic features can be learned from a larger dataset during training, resulting in outcomes that align more closely with real-world results. The improved performance of the proposed model, when compared with other methods, may be attributed to several factors: (i) the inclusion of Question-Answer pairs generated from external drug and symptom sets; (ii) the identification of crucial tweets through filtering by the vMF distribution; (iii) the effective capture of prominent semantic features facilitated by the attention mechanism. These factors also contribute to the recall being higher than the precision.

Ablation research

To investigate the effect of each component, namely, vMF, Multi-GRU, the attention mechanism and QA, ablation research is performed on the PSB2016 and SMM4H2018 datasets, as shown in Table 4. The hyperparameters are fixed at the values that give the best performance of the full model. Then, each component is gradually introduced into the baseline model.

Firstly, only one component is introduced. Using Bi-GRU (our baseline), results similar to those of Zhao et al.9 are achieved, with F1-scores of 52.92% and 35.87% on PSB2016 and SMM4H2018, respectively. When vMF helps the model sift better data and generate higher-quality word embeddings, the precision on PSB2016 and SMM4H2018 increases markedly, improving the F1-scores by 1.2 and 4.7 points. The attention mechanism mainly improves the recall of the whole model, outperforming our baseline in recall by 1.1 points and 4.7 points on PSB2016 and SMM4H2018. Compared with Bi-GRU, the multi-GRU improves not only the precision (65.22% versus 61.58% on PSB2016, 49.22% versus 45.61% on SMM4H2018) but also the recall (51.83% versus 48.34% on PSB2016, 60.35% versus 36.46% on SMM4H2018). As a result, vMF+Multi-GRU obtains F1-scores of 57.76% and 54.22% on PSB2016 and SMM4H2018, respectively.

Secondly, two or more components are added to the model. Compared with using multi-GRU or the attention mechanism alone, the model with both multi-GRU and the attention mechanism achieves better results: a precision of 65.90%, a recall of 60.20% and an F1-score of 62.92% on PSB2016, surpassing vMF+Multi-GRU by 0.7, 8.4 and 5.2 points, respectively; and a precision of 68.84%, a recall of 67.50% and an F1-score of 68.16% on SMM4H2018, exceeding vMF+Multi-GRU by 19.6, 7.2 and 13.9 points, respectively. This indicates that multi-GRU and the attention mechanism are complementary to each other. The QA mechanism yields the largest gains, improving precision, recall and F1-score. The proposed model outperforms the model ‘vMF+Multi-GRU+Attention’ in F1-score by 10.37 and 13.14 points on PSB2016 and SMM4H2018, respectively. The possible reasons are that (i) the QA pairs are generated from the original tweets but retain only the crucial information extracted via the drug and symptom dictionary; and (ii) the QA pairs may be regarded as external knowledge. External knowledge is useful for extracting important information and improving the overall performance45,46,47.

In addition, the proposed model achieves different results on PSB2016 and SMM4H2018. Compared with vMF+Multi-GRU+Attention, the precision, recall and F1-score increase by 5.62, 14.95 and 10.37 points, respectively, on PSB2016, but by 9.37, 17.15 and 13.14 points, respectively, on SMM4H2018; that is, the improvement on SMM4H2018 is larger than that on PSB2016. The possible reasons are inferred as follows: (i) the positive-to-negative ratio in SMM4H2018 is smaller than that in PSB2016 according to Table 1, which likely has an impact on the performance, and (ii) SMM4H2018 is larger than PSB2016, which perhaps results in better convergence of the proposed model on SMM4H2018.

Performance comparison of different pre-trained BERTs and GloVe embeddings

To compare the performance of BERT models pre-trained on different resources with that of GloVe word embeddings, experiments using different pre-trained BERT models are performed, as shown in Table 5. These models are the normal BERT provided by Google31, BioBERT pre-trained by Lee et al.43 and Clinical BERT provided by Huang et al.44. We use the same hyperparameters as described in Section “Training details” and Table 2.

For PSB2016, our model and Clinical BERT achieve close results (73.29% vs. 73.12%), but for SMM4H2018, our model outperforms Clinical BERT by 1.2%. Moreover, among the three BERT models, Clinical BERT performs better than BioBERT, and BioBERT outperforms normal BERT.

The above results imply that BERT models pre-trained on resources that lean towards spoken language have an advantage in detecting ADRs from tweets. Furthermore, BERT models trained with domain-specific resources outperform those trained on general text.

The effect of vMF

To illustrate the effect of the vMF distribution on word embedding vector sampling, the data from one epoch are collected and visualized.

Figure 4 shows the keyword sampling distribution for the data of one epoch. In the figure, dark blue marks the ADR answer factors of the QA system in the tweets, and light blue marks the items that are not answer factors. It can be seen that vMF can effectively aggregate the answer factors of the QA system to form a keyword vector. According to Table 4, compared with the classic Bi-GRU, Bi-GRU+vMF increases the F1-score by 1.2 and 4.7 points on PSB2016 and SMM4H2018, respectively. Thus, vMF may help the proposed model extract the key information and improve the overall performance.
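The aggregation behaviour can be illustrated with the unnormalised von Mises-Fisher log-density, which for a unit vector \(x\), mean direction \(\mu\) and concentration \(\kappa\) is \(\kappa \mu ^{\top } x\). The sketch below is a toy illustration and not the sampling procedure used in the model: the three-dimensional vectors, the value of \(\kappa\) and all function names are hypothetical.

```python
import math


def unit(vector):
    """Scale a vector to unit length so that it lies on the hypersphere."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def mean_direction(vectors):
    """Estimate the vMF mean direction as the normalised sum of unit vectors."""
    units = [unit(v) for v in vectors]
    return unit([sum(column) for column in zip(*units)])


def vmf_log_kernel(x, mu, kappa):
    """Unnormalised vMF log-density, kappa * <mu, x>, for a unit vector x."""
    return kappa * sum(a * b for a, b in zip(unit(x), mu))


# Toy embeddings standing in for answer-factor word vectors (hypothetical values).
answer_factors = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [1.0, 0.0, 0.1]]
mu = mean_direction(answer_factors)

near = vmf_log_kernel([0.85, 0.15, 0.05], mu, kappa=20.0)  # close to the cluster
far = vmf_log_kernel([0.0, 0.2, 1.0], mu, kappa=20.0)      # pointing elsewhere
print(near > far)  # True
```

Vectors aligned with the mean direction receive a higher density than vectors pointing elsewhere, and a larger \(\kappa\) sharpens this preference. This is the sense in which a vMF model can gather answer-factor vectors around a common keyword direction.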

Error analysis

Our proposed model achieves a higher recall than precision (75.15% vs. 71.52% on PSB2016, 84.65% vs. 78.21% on SMM4H2018). This is helpful for safety, although a better balance between the two is also desirable. Therefore, in this section, we summarize the findings of the error analysis performed on the proposed model, as shown in Table 6, pointing out the critical challenges that we have found. The most common reason for false negatives is the free-form expression typical of social platforms, including the use of creative expressions (e.g., “This cipro is some surrrrrrious shit”). A few tweets are labelled incorrectly because the lack of context in length-limited tweets poses problems for annotators as well as for the systems, such as “How do guys ejaculate on paxil?? antidepressants” and “Cymbalta, you ‘re driving me insame”. In addition, the inadequate semantics expressed by length-limited posts may cause misclassification (e.g., “Trazodone is no joke. Slept through every alarm.”).

Therefore, the main challenges of detecting ADRs on social media are (i) noisy information and (ii) inadequate semantic information. Researchers should focus on two aspects. On the one hand, tweets should be filtered more finely so that more valid and clear tweets are used to train the model. On the other hand, future work should investigate whether incorporating surrounding tweets into the classification model improves the overall performance.

Conclusion

In this paper, a novel question-answer neural network framework with multi-GRU layers is proposed to detect ADRs from tweets. Users often express complete thoughts over multiple posts, resulting in a lack of context at the single-tweet level. Therefore, we generate question-answer pairs by pre-defining a drug dictionary and collecting the corresponding co-occurring symptoms, and regard the detection of ADRs as a question-answer problem to enhance semantic expression and improve the overall detection performance. Besides, some tweets play an important role in distinguishing ADR from non-ADR while others do not; that is, some tweets are redundant in the training step. Therefore, the vMF distribution is employed to obtain keyword vectors by sampling tweets. Moreover, to fuse the information extracted by GRUs at different stages, we utilize the previous GRU, the current GRU and the next GRU to obtain different semantic information. The semantic information extracted by the different GRUs is concatenated with the interaction semantic information captured by the attention mechanism, which is useful for learning key semantic information, and the concatenated information is used to classify ADR and non-ADR. Experimental analysis indicates that our approach can distinguish ADR tweets from non-ADR tweets well. Furthermore, our proposed model achieves state-of-the-art results compared with other existing methods. In addition, multi-GRU, vMF and the attention mechanism are complementary to each other. The error analysis indicates the key points of NLP tasks related to social text. In future work, we would like to develop a fine-grained filtering method for social texts to construct a larger and more valid training dataset, and to make full use of surrounding posts to alleviate the inadequate semantic information caused by length-limited posts.
Meanwhile, to alleviate the impact of metaphors, slang and abbreviations in tweets on detection performance, NER, sentiment analysis and semantic role annotation will be fused to enhance the pre-processing capability for tweets. Additionally, we will consider more experiments on cross-lingual datasets to enhance the robustness of our model.

Data availability

The datasets used in this study are available at https://github.com/jiaoh-luo2004/dataset.

References

Viviani, M. et al. Assessing vulnerability to psychological distress during the COVID-19 pandemic through the analysis of microblogging content. Future Gener. Comput. Syst. 125, 446–459 (2021).

Echeverria, V., Falcones, G., Castells, J., Granda, R. & Chiluiza, K. Semi-Supervised Recurrent Neural Network for Adverse Drug Reaction Mention Extraction. In CEUR Workshop Proceedings, Vol. 1828 94–98 (2017).

Sarker, A. et al. Utilizing social media data for pharmacovigilance: A review. J. Biomed. Inform. 54, 202–212 (2015).

Miftahutdinov, Z., Alimova, I. & Tutubalina, E. KFU NLP Team at SMM4H 2019 Tasks: Want to Extract Adverse Drugs Reactions from Tweets BERT to The Rescue 52–57 (2019).

Sidhom, J. W. et al. DeepTCR is a deep learning framework for revealing sequence concepts within T-cell repertoires. Nat. Commun. 12, 1–12 (2021).

Nikfarjam, A., Sarker, A., O’Connor, K., Ginn, R. & Gonzalez, G. Pharmacovigilance from social media: Mining adverse drug reaction mentions using sequence labeling with word embedding cluster features. J. Am. Med. Inform. Assoc. 22, 1–11. https://doi.org/10.1093/jamia/ocu041 (2015).

Cocos, A., Fiks, A. G. & Masino, A. J. Deep learning for pharmacovigilance: Recurrent neural network architectures for labeling adverse drug reactions in Twitter posts. J. Am. Med. Inform. Assoc. 24(4), 813–821 (2017).

Rezaei, Z., Ebrahimpour-Komleh, H., Eslami, B., Chavoshinejad, R. & Totonchi, M. Adverse drug reaction detection in social media by deep learning methods. Cell J. 22(3), 319–324 (2020).

Zhao, X., Yu, D. & Vydiswaran, V. G. V. Identifying adverse drug events mentions in tweets using attentive, collocated, and aggregated medical representation. In Proceedings of the 4th Social Media Mining for Health Applications (#SMM4H) Workshop & Shared Task 62–70 (2019).

Gupta, S., Gupta, M., Varma, V. & Pawar, S. Co-training for Extraction of Adverse Drug 1–6 (2018). arXiv:1802.05121

Li, Z., Lin, H. & Zheng, W. An effective emotional expression and knowledge-enhanced method for detecting adverse drug reactions. IEEE Access 8, 87083–87093 (2020).

Nguyen, D. A., Nguyen, C. H. & Mamitsuka, H. A survey on adverse drug reaction studies: Data, tasks and machine learning methods. Brief. Bioinform. 22(1), 164–177 (2021).

Mohan, S. & Li, D. MedMentions: A Large Biomedical Corpus Annotated with UMLS 1–13 (2019). arXiv:1902.09476.

Li, Z., Lin, H. & Zheng, W. An effective emotional expression and knowledge-enhanced method for detecting adverse drug reactions. IEEE Access 8, 87083–87093 (2020).

Li, Z. et al. Exploiting sequence labeling framework to extract document-level relations from biomedical texts. BMC Bioinform. 21(125), 1–14 (2020).

Chen, J., Hu, B., Peng, W., Chen, Q. & Tang, B. Biomedical relation extraction via knowledge-enhanced reading comprehension. BMC Bioinform. 23(1), 1–19 (2022).

Ramamoorthy, S. & Murugan, S. An Attentive Sequence Model for Adverse Drug Event Extraction from Biomedical Text (2018). arXiv:1801.00625.

Li, S. & Mandic, D. Von Mises–Fisher elliptical distribution. IEEE Trans. Neural Netw. Learn. Syst. 34(12), 11006–11012 (2022).

Dai, H. J., Touray, M., Jonnagaddala, J. & Syed-Abdul, S. Feature engineering for recognizing adverse drug reactions from twitter posts. Information 7(2), 1–20 (2016).

Weissenbacher, D. et al. Overview of the Fourth Social Media Mining for Health (#SMM4H) Shared Task at ACL 2019 21–30 (2019).

Wu, P. Y. et al. Omic and electronic health record big data analytics for precision medicine. IEEE Trans. Biomed. Eng. 64(2), 263–273 (2017).

Sarker, A. & Gonzalez, G. Portable automatic text classification for adverse drug reaction detection via multi-corpus training. J. Biomed. Inform. 53, 196–207 (2015).

Wang, C. Adverse drug reaction post classification with imbalanced classification techniques. In 2018 Conference on Technologies and Applications of Artificial Intelligence 5–9 (2018).

Nikfarjam, A. & Gonzalez, G.H. Pattern mining for extraction of mentions of adverse drug reactions from user comments. In AMIA Annual Symposium Proceedings 1019–1026 (2011).

Sampathkumar, H., Chen, X. & Luo, B. Mining adverse drug reactions from online healthcare forums using hidden Markov model. BMC Med. Inform. Decis. Mak. 14(91), 1–18 (2014).

Li, Z., Chen, H., Qi, R., Lin, H. & Chen, H. DocR-BERT: Document-level R-BERT for chemical-induced disease relation extraction via Gaussian probability distribution. IEEE J. Biomed. Health Inform. 26(3), 1341–1352 (2022).

Lee, K. et al. Adverse drug event detection in tweets with semi-supervised convolutional neural networks. In 2017 International World Wide Web Conference 705–714. https://doi.org/10.1145/3038912.3052671.

Sarker, A., Nikfarjam, A. & Gonzalez, G. Social media mining shared task workshop. In Pacific Symposium on Biocomputing, Vol. 21 581–592 (2016).

Dirkson, A. & Verberne, S. Transfer learning for health-related Twitter data. In Proceedings of the 4th Social Media Mining for Health Applications (#SMM4H) Workshop & Shared Task 89–92 (2019).

Gupta, S., Gupta, M., Varma, V. & Pawar, S. Multi-Task Learning for Extraction of Adverse Drug Reaction Mentions from Tweets. arXiv:1802.05130.

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (2018). arXiv:1810.04805.

Hussain, S., Afzal, H., Saeed, R., Iltaf, N. & Umair, M. Y. Pharmacovigilance with transformers: A framework to detect adverse drug reactions using BERT fine-tuned with FARM. Comput. Math. Methods Med. 2021, 1–12. https://doi.org/10.1155/2021/5589829 (2021).

Rawat, B. P. S., Jagannatha, A., Liu, F. & Yu, H. Inferring ADR causality by predicting the Naranjo score from clinical notes. In AMIA Annual Symposium Proceedings. AMIA Symposium 1041–1049 (2020).

Radford, A. & Salimans, T. Improving language understanding by generative pre-training. In EMNLP 1–12.

Lyu, C., Chen, B., Ren, Y. & Ji, D. Long short-term memory RNN for biomedical named entity recognition. BMC Bioinform. 18(462), 1–11 (2017).

Li, L., Wan, J., Zheng, J. & Wang, J. Biomedical event extraction based on GRU integrating attention mechanism. BMC Bioinform. 19(Suppl 9), 93–100 (2018).

Tutubalina, E. & Nikolenko, S. Combination of deep recurrent neural networks and conditional random fields for extracting adverse drug reactions from user reviews. J. Healthc. Eng. 2017, 9451342. https://doi.org/10.1155/2017/9451342 (2017).

Zhou, P. et al. Attention-based bidirectional long short-term memory networks for relation classification. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics 207–212 (2016).

Luo, L. et al. An attention-based BiLSTM-CRF approach to document-level chemical named entity recognition. Bioinformatics 34(8), 1381–1388 (2018).

Chowdhury, S. & Yu, P. S. Multi-task pharmacovigilance mining from social media posts. In Proceedings of the World Wide Web Conference World Wide Web (WWW) 117–126. https://doi.org/10.1145/3178876.3186053 (2018).

Chen, S., Huang, Y., Huang, X., Qin, H., Yan, J., & Tang, B. HITSZ-ICRC: A report for SMM4H shared task 2019-automatic classification and extraction of adverse drug reactions in tweets. In Proceedings 4th Social Media Mining Health Applications (SMM4H) Workshop Shared Task 47–51 (2019).

Sakhovskiy, A. & Tutubalina, E. Multimodal model with text and drug embeddings for adverse drug reaction classification. J. Biomed. Inform. 135, 104182–104192 (2022).

Lee, J. et al. BioBERT: A pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 36(4), 1234–1240 (2020).

Huang, K., Altosaar, J., & Ranganath, R. ClinicalBERT: Modeling clinical notes and predicting hospital readmission. Association for Computational Linguistics 1–19. arXiv:1904.05342 (2019).

Sun, C. et al. Chemical-protein interaction extraction via Gaussian probability distribution and external biomedical knowledge. Bioinformatics 36(15), 4323–4330 (2020).

Wu, M. Commonsense knowledge powered heterogeneous graph attention networks for semi-supervised short text classification. Expert Syst. Appl. 232, 120800–120817 (2023).

Zhang, Q., Cheng, X., Chen, Y. & Rao, Z. Quantifying the knowledge in a DNN to explain knowledge distillation for classification. IEEE Trans. Pattern Anal. Mach. Intell. 45(4), 5099–5113 (2023).

Acknowledgements

This work was supported by Minnan University of Science and Technology Intelligent Industrial Internet and Digital Management Technology Innovation Team (No.23XTD114).

Author information

Contributions

Jiao-huang Luo wrote the main manuscript text and Ai-hua Yang reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Luo, Jh., Yang, Ah. Exploiting question-answer framework with multi-GRU to detect adverse drug reaction on social media. Sci Rep 15, 4157 (2025). https://doi.org/10.1038/s41598-025-87724-y

DOI: https://doi.org/10.1038/s41598-025-87724-y
