Abstract

Raindrops on the windscreen significantly impair a driver’s visibility and therefore compromise driving safety. Maintaining a clear windscreen is crucial for mitigating accident risks in rainy conditions. This paper introduces a real-time rain detection system and a wiper control method based on machine vision and deep learning. An all-weather raindrop detection model is constructed using a convolutional neural network (CNN) architecture, utilising an improved YOLOv8 model. The all-weather model achieved a precision rate of 0.89, a recall rate of 0.82, and a detection speed of 63 fps, meeting the system’s real-time requirements. The raindrop area ratio is computed through target detection, which facilitates the assessment of the onset and cessation of rainfall, as well as intensity variations. When the raindrop area ratio exceeds the wiper activation threshold, the wiper starts, and when the area ratio approaches zero, the wiper stops. The wiper control method automatically adjusts the detection frequency and the wiper operating speed according to changes in rainfall intensity. The wiper activation threshold can be adjusted to bring the wiper operation more in line with the driver’s habits.

Introduction

Rain significantly impairs driving safety because poor visibility disturbs drivers’ attention. Manually adjusting wiper settings while driving is a further distraction and can increase the risk of traffic accidents. Statistical reports indicate that approximately 7% of worldwide traffic accidents on rainy days are caused by the manual operation of wipers1. An automatic wiper control system is therefore essential to address these concerns and mitigate risks. Intelligently detecting rain and autonomously adjusting the wiper speed reduces the need for driver intervention and minimises distractions.

Advanced Driving Assistance System (ADAS) is crucial in enhancing vehicle safety by mitigating driving hazards caused by human factors2. However, during rainy conditions, the adherence of raindrops to the windscreen poses a challenge, affecting the stability of other driving assistance systems and impeding the driver’s vision, thereby seriously compromising driving safety. To address these critical concerns and maintain the efficiency of ADAS, the integration of an automatic wiper control system becomes imperative.

Raindrop detection on windscreen involves two main modes: rain sensors and computer vision. These methods are pivotal in developing automatic wiper control systems, enhancing driving safety and convenience in adverse weather conditions.

The automotive rain sensor, commonly referred to as the raindrop sensor, utilises various methods for detecting raindrops3, including infrared light detection4, capacitive sensing5, resistive sensing6, and piezoelectric vibrator sensing7. Moreover, researchers have proposed various improved detection methods for rain sensors, combining infrared, audio, and capacitive sensors8. However, certain limitations persist among the adopted enhancement techniques. As noted in the literature4, although the detection range of raindrops on the windscreen has been expanded, it remains relatively small compared to the entire windscreen. Due to the rain sensor’s localised installation, it may not accurately represent the whole windscreen9.

In recent years, the continuous advancement of image processing technology has spurred a novel approach to raindrop detection, utilising image processing technology in conjunction with onboard cameras. Vehicle camera systems encompass multi-camera10 and single-camera11. Typically, a single-camera system is employed to reduce expenses.

In the initial investigation of raindrop detection12, Principal Component Analysis (PCA)13 was employed to extract features from training samples, resulting in a raindrop template called "feature drop." However, the authors noted that not all raindrop instances conform to this template, which limits the effectiveness of raindrop detection.

Target detection algorithms based on deep learning14,15,16,17 are primarily categorised into two branches: two-stage approaches, represented by R-CNN, and one-stage approaches, represented by YOLO and SSD. These algorithms possess distinct strengths and weaknesses. Typically, one-stage algorithms excel in speed, while two-stage algorithms excel in accuracy. The primary distinction between them is whether a region proposal step is used to estimate candidate target areas before detection. Using a two-stage approach, the authors of ref. 14 classified candidate regions, yielding commendable raindrop detection results. However, meeting real-time performance requirements remained challenging.

A visual classifier18,19 is implemented to tackle the rain and no rain classification challenge. However, due to varying driver preferences in wiper activation thresholds, recalibration of the threshold necessitates model retraining.

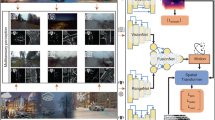

This paper presents a real-time raindrop detection and wiper control method based on a deep learning model to address the challenge of impaired driver visibility on rainy days. The proposed approach involves the creation of a comprehensive dataset comprising rainy driving videos captured under various weather conditions, including No Rain in Daytime (Fig. 1a), No Rain in Nighttime (Fig. 1b), Rain in Daytime (Fig. 1c) and Rain in Nighttime (Fig. 1d). A suitable Convolutional Neural Network (CNN) model, specifically YOLO, is then designed for rain detection, with the training data and validation dataset fed into the neural network for learning. The method optimises and adjusts the training parameters to develop distinct raindrop detection models suitable for different lighting conditions, encompassing daytime, nighttime, and all-weather scenarios. Rigorous testing and validation are conducted to assess the effectiveness of these models. Lastly, an automated wiper control system is developed. The raindrop area ratio is determined using deep learning-based detection of raindrop targets, which is utilised to evaluate variations in precipitation dynamics and intensity. By using cubic interpolation on the time series of the raindrop area ratio, the raindrop area ratio of the following detection can be predicted. When the raindrop area ratio exceeds the predefined wiper activation threshold, the system activates the wiper, and when the area ratio decreases to near zero, the wiper operation ceases. This wiper control method automatically adjusts the detection frequency and wiper operating speed in response to varying rainfall intensity. Furthermore, the adjustable activation threshold ensures the wiper’s operation can better align with individual driver preferences and habits, enhancing overall usability and driving experience.
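The cubic-interpolation prediction mentioned above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes equally spaced detections and fits a cubic exactly through the four most recent area ratios, for which the next value has the closed form 4r3 − 6r2 + 4r1 − r0. The function name, the four-sample window, and the clipping to [0, 1] are all assumptions.

```python
def predict_next_ratio(history):
    """Extrapolate the next raindrop area ratio from a non-empty history.

    Assumes equally spaced samples. With fewer than four samples the last
    value is returned unchanged (an assumed fallback).
    """
    if len(history) < 4:
        return history[-1]
    r0, r1, r2, r3 = history[-4:]
    # Value at the next step of the cubic through the last four samples
    # (follows from the fourth finite difference of a cubic being zero).
    predicted = 4 * r3 - 6 * r2 + 4 * r1 - r0
    # A ratio is a fraction of the image area, so clip it to [0, 1].
    return min(1.0, max(0.0, predicted))
```

For a steadily rising series such as 0.1, 0.2, 0.3, 0.4 the sketch continues the trend to roughly 0.5, which could then be compared against the activation threshold before the next frame arrives.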

Deep learning raindrop detection

The schematic representation of the raindrop detection and control system is illustrated in Fig. 2. The process begins with identifying raindrop targets in the captured images, accomplished using an image marking tool to establish the requisite training dataset. Subsequently, a well-suited convolutional neural network model is designed, and a deep learning environment is set up for model training. The modified YOLOv8 model is then deployed on the TX2 development board to accurately recognise rainy images acquired through the camera. Additionally, based on the gathered data concerning the number and size of raindrops, the system determines the intensity of rainfall and wiper frequency control.

Preliminary work for model training

In the initial phase of this research, a comprehensive raindrop dataset was meticulously prepared. The dataset was curated by capturing images of the car’s front windscreen during different weather conditions. These windscreen images were acquired through an onboard camera while traversing diverse weather and road conditions. Subsequently, the image annotation tool LabelImg was employed to accurately label the raindrop targets in each image.

The minimum enclosing rectangle encompassing each raindrop was utilised as the bounding box during the labelling process. This approach effectively minimised unnecessary background pixel information inside the bounding box, and corresponding XML files were generated. These XML files contain crucial label information, comprising the precise location details of each raindrop, the category name “rain”, and the associated coordinate information. The XML files thus serve as invaluable references for raindrop detection and further analysis within the proposed deep learning model.
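As an illustration of how such label files can be read back, the sketch below parses a Pascal VOC style annotation of the kind LabelImg writes. The sample XML content and the function name are hypothetical; only the element names (`object`, `name`, `bndbox`, `xmin`, `ymin`, `xmax`, `ymax`) follow the VOC layout.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation with a single labelled raindrop.
SAMPLE_XML = """
<annotation>
  <size><width>1280</width><height>720</height><depth>3</depth></size>
  <object>
    <name>rain</name>
    <bndbox><xmin>100</xmin><ymin>200</ymin><xmax>140</xmax><ymax>250</ymax></bndbox>
  </object>
</annotation>
"""

def read_boxes(xml_text):
    """Return a list of (label, xmin, ymin, xmax, ymax) tuples."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        label = obj.findtext("name")
        bndbox = obj.find("bndbox")
        coords = tuple(
            int(float(bndbox.findtext(tag)))
            for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((label, *coords))
    return boxes

print(read_boxes(SAMPLE_XML))
```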

The raindrop dataset was expanded using data augmentation techniques to enhance its diversity and improve the robustness of the deep-learning model under various conditions. To better understand the characteristics of rainy scenes, the HSV histograms of Fig. 1c were analysed, as shown in Fig. 3, revealing a distinct peak in the value (V) channel due to the sky’s brightness. This indicates that rain droplets in HSV space may exhibit similar brightness characteristics to the sky, potentially complicating their detection when relying solely on colour features. As a result, various rainfall scenarios were simulated by adjusting the HSV parameters of the sky region to reflect diverse lighting and atmospheric conditions. This was combined with geometric transformations, such as panning and zooming, to create more varied perspectives and scales in the training data. Additionally, truncation thresholding techniques were applied to the V-channel to minimise interference from the ground background, ensuring that the augmented data better emphasised raindrop features.
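The HSV adjustment and V-channel truncation described above can be sketched per pixel with the standard library alone. This is an illustrative assumption about the operations involved, not the authors' pipeline: the function name, the gain parameters, and the truncation level are hypothetical, and a real implementation would apply the same arithmetic to whole image regions with an image library.

```python
import colorsys

def adjust_pixel(rgb, h_shift=0.0, s_gain=1.0, v_gain=1.0, v_trunc=1.0):
    """Shift hue, scale saturation and value, then truncate the V channel.

    rgb is an (R, G, B) tuple of 0-255 integers. v_trunc caps V so that
    brighter pixels are set to the cap (truncation thresholding).
    """
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h = (h + h_shift) % 1.0
    s = min(1.0, s * s_gain)
    v = min(1.0, v * v_gain)
    v = min(v, v_trunc)  # values above the threshold are set to the threshold
    r, g, b = colorsys.hsv_to_rgb(h, s, v)
    return tuple(round(c * 255) for c in (r, g, b))
```

For example, a saturated white sky pixel `(255, 255, 255)` with `v_trunc=0.4` is darkened to a mid grey, while pixels already below the cap are left unchanged.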

The HSV histogram of Fig. 1c

As depicted in Fig. 4, the data augmentation process significantly expanded the dataset, addressing the issue of limited samples. This enhanced diversity enabled the deep learning model to better generalise across unseen rainy scenarios. Experimental results demonstrated that the augmented dataset improved the model’s training stability and performance, particularly in handling complex background conditions.

The dataset encompasses approximately 80,000 instances of “rain” samples, ensuring an ample number of samples during the training process. Fig. 5 illustrates the dataset’s category distribution and depicts the labels, centre coordinates, widths, and heights of the raindrop targets. Analysing the position and size of the labelled raindrop targets provides the basis for the subsequent rainfall assessment, which combines the size and number of raindrops. This analysis and the large dataset help refine the accuracy of raindrop detection and improve the reliability of the proposed method in real-world applications.

Model network architecture

The YOLOv8 real-time target detection model is used as the training model, ensuring prompt responsiveness. As illustrated in Fig. 6, when a raindrop image is input into the network, YOLOv8 divides the image into N × N grid regions. In cases where the original image size does not align with the N × N dimensions, a filling or scaling operation is conducted to adjust it to the appropriate N × N size, effectively avoiding distortion before feeding it into the network.
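The scale-then-fill step described above is commonly implemented as "letterboxing". The sketch below computes the scale factor and padding needed to fit an image into an N × N input without changing its aspect ratio. The function name and the 640-pixel target are assumptions for illustration; the source does not state the network input size.

```python
def letterbox_params(width, height, target):
    """Return (scale, new_w, new_h, pad_x, pad_y) for a target x target input.

    The image is scaled uniformly so its longer side equals the target,
    then the shorter side is padded equally on both sides.
    """
    scale = min(target / width, target / height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_x = (target - new_w) // 2
    pad_y = (target - new_h) // 2
    return scale, new_w, new_h, pad_x, pad_y

print(letterbox_params(1280, 720, 640))
```

A 1280 × 720 frame is halved to 640 × 360 and padded by 140 pixels at the top and bottom, so raindrop shapes are not stretched.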

Given the diversity of raindrop sizes and scales, the image is partitioned at three grid resolutions: 13 × 13, 26 × 26, and 52 × 52. Each resolution serves a specific purpose: the finer grids, whose cells are smaller, are responsible for detecting tiny raindrops, while the coarser grids, whose cells are larger, focus on larger raindrops. When the centre point of a target raindrop falls within a grid cell, that cell is responsible for predicting the raindrop. This approach supports raindrop detection across different sizes and improves the accuracy and efficiency of the wiper control system under varying rainy conditions. Each grid cell provides prediction information for the identified raindrop, including the target’s category, the confidence level, and the centre coordinates, width, and height of the bounding box.
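The cell-assignment rule described above can be illustrated with a few lines of code. This is a sketch under the assumption that box centres are expressed as fractions of the image width and height; the function name is hypothetical.

```python
def grid_cell(cx, cy, grid):
    """Return the (row, col) of the grid cell containing a box centre.

    cx and cy are normalised to [0, 1]; grid is the number of cells per side.
    """
    col = min(int(cx * grid), grid - 1)  # clamp so cx == 1.0 stays in range
    row = min(int(cy * grid), grid - 1)
    return row, col

# The same raindrop centre maps to a different cell at each resolution.
for n in (13, 26, 52):
    print(n, grid_cell(0.5, 0.25, n))
```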

Model establishment and validation

Daytime conditions prevail during most of a driver’s travel time, so separate training is initially conducted using daytime images to optimise raindrop detection precision. Daytime raindrops adhering to the car’s windscreen exhibit a relatively clear outline, and the daytime model therefore reaches the highest detection precision of the three models, 90%, as illustrated in Fig. 7. The model successfully identifies raindrops on the windscreen. Although some minuscule and inconspicuous raindrops may remain undetected, from a practical standpoint these tiny raindrops have negligible impact on the driver’s field of vision and pose no significant hindrance during driving.

Subsequently, a distinct network model tailored to nighttime driving conditions is devised. Trained on a nighttime dataset, the network achieves a detection precision of 86%. Raindrops on the windscreen during nighttime driving are more susceptible to unfavourable factors such as glare from lights, stains, and varying colours. These factors can weaken raindrop characteristics, reducing the nighttime model’s detection precision. Nonetheless, despite the decline in precision compared to the daytime model, the nighttime model still performs at a level suitable for raindrop detection. The model detects raindrops even under the influence of ambient lights, minimising false detections and resisting disturbances from objects with raindrop-like attributes in the sky area, such as street lights, as shown in Fig. 8.

In raindrop target detection, a significant challenge lies in switching seamlessly between the daytime and nighttime models. Situations such as sudden entry into a tunnel or early evening lighting changes can make it unclear which model to apply. An all-weather model suitable for both daytime and nighttime scenarios addresses this issue and ensures continuous and reliable raindrop detection. This model avoids toggling between the two models and achieves a precision of 89%. The model performs well regardless of whether the input image corresponds to daytime (Fig. 9a) or nighttime (Fig. 9b) conditions. Some false detections may persist in the nighttime results, but their impact on overall performance is minor.

In model design, various parameters play a crucial role in determining the final raindrop detection results, and it is imperative to ensure the rationality of parameter settings. Hence, the daytime, nighttime, and all-weather models are trained using identical parameters for a comprehensive performance analysis. This consistent parameter configuration allows a fair comparison of their respective detection capabilities. Specifically, the training images are standardised to 1280 × 720 pixels, while the batch size is set to 8 to ensure efficient training. The training process is iterated for 300 epochs to achieve model convergence and optimal performance.

Target detection evaluation metrics, namely precision, recall, Train-loss and Val-loss, are introduced to assess the raindrop detection performance of the models quantitatively. These evaluation indices are crucial in measuring the effectiveness of the target detection algorithm. The representation of the target detection evaluation index is presented in Table 1, providing valuable insights into the model’s performance.

Precision and recall are two fundamental metrics used to evaluate the performance of the raindrop detection models:

Precision refers to the proportion of true positive predictions among all predicted positive instances. In raindrop detection, precision signifies the probability of correctly identifying raindrops in the model’s prediction results. It is calculated as the ratio of true positive predictions (correctly detected raindrops) to the total number of predicted positive examples (all detected raindrops). A high precision value indicates that the model has a low false positive rate, meaning that most predicted raindrops are true.

Recall, also called sensitivity or the true positive rate, measures the proportion of true positive predictions among all the actual positive examples in the dataset. In the context of raindrop detection, recall indicates the ability of the model to capture all the true raindrop instances present in the dataset. It is calculated as the ratio of true positive predictions to the number of positive examples (ground truth raindrops). A high recall value implies that the model can successfully detect a large percentage of the raindrop targets.
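The two definitions above reduce to simple ratios of detection counts. The sketch below computes both from the numbers of true positives, false positives, and false negatives; the function name and the counts in the usage line are illustrative, not results from the paper.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from detection counts.

    tp: correctly detected raindrops
    fp: detections that are not raindrops
    fn: ground-truth raindrops that were missed
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Illustrative counts: 8 correct detections, 2 false alarms, 2 misses.
print(precision_recall(8, 2, 2))
```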

FPS (frames per second) measures the number of image frames a model can process per unit of time and reflects the model’s inference speed. It is calculated as the reciprocal of the total processing time per frame:

$$\mathrm{FPS} = \frac{1}{P + I + N}$$

where P, I, and N represent the per-frame time, in seconds, for image preprocessing, inference, and post-processing, respectively. If the times are measured in milliseconds, the numerator becomes 1000.
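A minimal sketch of this calculation follows, assuming the three stage times are reported in milliseconds per frame, so the reciprocal of their sum is scaled by 1000. The function name and the timing values are hypothetical and are not measurements from the paper.

```python
def frames_per_second(pre_ms, infer_ms, post_ms):
    """Return FPS from per-frame stage times given in milliseconds."""
    total_ms = pre_ms + infer_ms + post_ms
    return 1000.0 / total_ms

# Hypothetical timings: 2 ms preprocessing, 12 ms inference, 2 ms post-processing.
print(frames_per_second(2.0, 12.0, 2.0))
```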

Figure 10a,b demonstrate that after 100 epochs of training, the designed daytime, nighttime, and all-weather models achieve commendable detection precision and recall rates. Specifically, the daytime model attains a peak precision of 0.90, the nighttime model reaches 0.86, and the all-weather model reaches 0.89. Furthermore, the best recall values for the daytime, nighttime, and all-weather models are 0.83, 0.80, and 0.82, respectively. Concurrently, as depicted in Fig. 10c,d, both the training set loss and the validation set loss gradually decline during training. This observation indicates that the chosen parameter settings are appropriate, the network structure design is rational, and the network is learning and converging effectively.

A comprehensive comparison of precision, recall and FPS between the state-of-the-art and the proposed model is presented in Table 2. The precision rates of the designed daytime, nighttime, and all-weather rain detection models surpass the existing technology, reaching a higher level. Similarly, the recall rate of proposed models surpasses the average level of existing technologies. The FPS values of the daytime, nighttime, and all-weather models are 58, 59, and 63, respectively, demonstrating that these models also perform well in terms of real-time performance and can meet the demands for rapid detection in practical scenarios.

Feature extraction capability comparison

The essence of CNN is feature extraction; however, as the number of layers goes deeper, the connection between shallow and deep layers becomes more intricate. It is challenging to discern which regions contribute to raindrop recognition and accurately determine if the model has extracted key information or reached the optimal training state19. From the feature map, it can be intuitively observed that different feature maps extract different features. Some focus on raindrop edge features, some on background features, and others on overall features. The shallow layer’s feature map exhibits relatively comprehensive features, while the deep layer’s features are more subtle and abstract, enabling the extraction of finer raindrop features.

Images captured during transitional periods between day and night, such as dusk and dawn, were chosen to evaluate the model’s ability to extract features accurately under varying lighting conditions. The results in the section “Model establishment and validation” indicate that the all-weather model’s detection precision lies between those of the daytime and nighttime models. However, feature map analysis shows that the daytime and nighttime models extract features less effectively than the all-weather model during the transition between day and night. This further supports the superior generalisation ability of the all-weather model compared to the other two models. Because the all-weather model is trained on both daytime and nighttime samples, it is less affected by lighting changes, and the detection precision of all three models is high enough for raindrop detection. Consequently, using the all-weather model as the final raindrop detection scheme is more reasonable and avoids switching between the two models.

Rainfall determination and wiper control method

Rainfall information elements

By comparing the feature extraction capabilities of daytime, nighttime, and all-weather models, it was confirmed that the all-weather model is suitable for rainfall detection and was ultimately applied in the raindrop detection and wiper control system. During actual driving, the visual interference caused by raindrops can be quantified by the detection frame, where the frame’s area represents the size of the raindrop. The total interference can be evaluated by calculating the number of detection frames and their total area, as shown in Fig. 11.

Driver visibility through the windscreen is primarily influenced by the number and size of raindrops and the driving speed20. In image detection, the rain information is effectively represented by two variables: the total number of raindrops and their combined area. The number refers to the count of detected raindrops in the image, while the size represents the pixel area occupied by each detection frame. The total area is the sum of all detection frame areas.

Model deployment and testing

The YOLOv8-Ours model was deployed on the TX2 development board for real-time raindrop detection during actual driving scenarios, as shown in Fig. 12. The development board was tested using pre-recorded vehicle videos, and detection results, including the total number of raindrops and their combined pixel area, were visualised on the images for precise observation.

As shown in Fig. 9a, the upper left corner of each image displays the prediction category (e.g., “rain”), the total count of detected raindrops (e.g., “154”), and their cumulative area (e.g., “area: 745712”). The cumulative raindrop area obstructing the driver’s view is calculated by summing the detected raindrop areas across successive frames. This analysis identifies rainfall onset, cessation, and intensity variations. The raindrop area ratio, defined as the total obstructed area divided by the image area, determines whether the threshold for wiper activation has been reached, ensuring the driver’s visibility remains unobstructed.
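The count, total area, and area ratio described above can be computed directly from the detection boxes. The sketch below assumes boxes are given as pixel corner coordinates `(x1, y1, x2, y2)` and simply sums box areas, so overlapping boxes are counted twice; the function name and the example boxes are hypothetical.

```python
def raindrop_area_ratio(boxes, image_w, image_h):
    """Return (count, total_area, ratio) for a list of detection boxes.

    boxes: iterable of (x1, y1, x2, y2) pixel coordinates.
    ratio: total box area divided by the image area.
    """
    boxes = list(boxes)
    total_area = sum(
        max(0, x2 - x1) * max(0, y2 - y1) for x1, y1, x2, y2 in boxes
    )
    return len(boxes), total_area, total_area / (image_w * image_h)

# Two hypothetical 128 x 72 px detections in a 1280 x 720 frame.
print(raindrop_area_ratio([(0, 0, 128, 72), (100, 100, 228, 172)], 1280, 720))
```

The resulting ratio is the quantity compared against the wiper activation threshold.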

Wiper control method

The intelligent wiper control system based on machine vision dynamically adapts to varying rainfall conditions by integrating real-time image analysis and adaptive control logic. The detailed control logic (Fig. 13) is as follows:

No rain (clear weather)

In clear weather, the windscreen remains free of raindrops, and the wipers remain inactive. The image acquisition frequency is reduced to its lowest level while maintaining readiness to detect the onset of rain. The raindrop area ratio is not set to zero but to a small baseline value, E, considering factors like dust, smudges, or occasional false detections.

Light rain or drizzle

As light rain starts, raindrops appear sporadically on the windscreen, and the system detects the raindrop area ratio exceeding the activation threshold. The wipers activate at a low frequency while the image acquisition rate increases to monitor changes in rainfall intensity with higher precision.

Increasing rainfall

When rainfall intensifies, the number and area of detected raindrops rise sharply, indicating a significant decrease in driver visibility. If the rain intensity surpasses the predefined threshold (M) before the image acquisition reaches the rated cycle (60 frames), the system synchronously increases the wiper speed and image acquisition frequency. This ensures rapid and effective removal of raindrops to maintain clear visibility.

Decreasing rainfall

The system detects a reduction in the raindrop area ratio as rain diminishes. The system synchronously reduces the wiper speed and image acquisition frequency if the raindrop area ratio falls below the threshold (M) after the rated image acquisition cycle (60 frames). This prevents unnecessary energy consumption while ensuring visibility is not compromised.

Steady rainfall

Under steady rainfall conditions, the raindrop area ratio stabilises at the threshold (M) during the rated image acquisition cycle (60 frames). In this scenario, the wiper frequency and image acquisition rate remain constant. This avoids excessive adjustments, provides smooth operation, and maintains system efficiency.

This innovative approach enhances driving safety and comfort across variable rainfall scenarios, optimises energy use, and extends the wiper system’s lifespan. Integrating advanced image processing and real-time control algorithms ensures seamless functionality, even in unpredictable weather conditions.

This paper proposes a new wiper control method based on the control logic above. The method aims to achieve stepless frequency adjustment, activation, and deactivation of the windscreen wipers through machine vision while dynamically adjusting the wiper and target detection frequency according to the rainfall intensity. The specific control method is shown in Fig. 14. Here, E is the stop threshold, M is the activation threshold, Max is the image acquisition threshold, RN is the raindrop area ratio during the Nth detection, f is the image detection frequency (ranging from 10 to 60 fps), f0 is the standby detection frequency, and F is the wiper operating frequency (in sweeps per minute).

No rain (clear weather)

After the vehicle starts, the onboard camera continuously captures images of the windscreen area, and the raindrop detection model is used to calculate the raindrop area ratio RN. When RN is less than the stop threshold E, the system checks whether the image acquisition counter N has reached the threshold Max (60). If it has, this indicates that there is no rain or that the rainfall has stopped. If the wiper is still working, it is turned off, the image detection frequency is set to f0 (10 fps), and the image acquisition counter N is reset to 1.

Increasing rainfall or steady rainfall

When RN exceeds the activation threshold M, this indicates an increase in rainfall. The wiper operating frequency F and image detection frequency f are then set to f × Max/N, and the counter N is reset to 1. When the counter N equals the image acquisition threshold (Max), the wiper frequency and image acquisition rate remain constant.

Decreasing rainfall

When RN is greater than E but less than the activation threshold M, the system checks whether the counter N has reached the image acquisition threshold Max. If it has, this indicates a decrease in rainfall. The wiper operating frequency F and image detection frequency f are then set to f × RN/M, and the counter N is reset to 1. Otherwise, N is incremented by 1, and the previous operation is repeated.
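The three branches described in this section can be sketched as a single update step. This is a minimal interpretation, not the authors' code. Several points are assumptions: the stop threshold value `E=0.01` is hypothetical, frequencies are clamped to the stated 10–60 range, the counter is incremented whenever it has not yet reached Max, and the boundary cases RN = E and RN = M are treated as the middle branch. `M=0.11` follows the 11% threshold quoted for Fig. 15.

```python
from dataclasses import dataclass

F_MIN, F_MAX = 10.0, 60.0  # stated range for f (fps) and F (sweeps per minute)

@dataclass
class WiperState:
    f: float = 10.0  # image detection frequency; starts at standby f0
    F: float = 0.0   # wiper operating frequency; 0 means the wiper is off
    N: int = 1       # image acquisition counter

def clamp(value):
    return max(F_MIN, min(F_MAX, value))

def step(state, ratio, E=0.01, M=0.11, MAX=60, f0=10.0):
    """Return the next state given the raindrop area ratio of one detection."""
    if ratio > M:
        # Increasing or steady rain: the sooner the threshold is reached
        # (small N), the larger the speed-up. N == MAX leaves f unchanged.
        new_f = clamp(state.f * MAX / state.N)
        return WiperState(f=new_f, F=new_f, N=1)
    if state.N >= MAX:
        if ratio < E:
            # No rain for a full cycle: wiper off, standby detection rate.
            return WiperState(f=f0, F=0.0, N=1)
        # Decreasing rain: scale both frequencies down by ratio / M.
        new_f = clamp(state.f * ratio / M)
        return WiperState(f=new_f, F=new_f, N=1)
    # Cycle not yet complete: keep the settings and count this detection.
    return WiperState(f=state.f, F=state.F, N=state.N + 1)
```

For instance, starting from standby, a first detection with a ratio of 0.20 jumps both frequencies to the 60 ceiling, while a full 60-frame cycle at a ratio of 0.055 roughly halves them.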

With this control method, the wipers behave differently under different rain conditions. In light rain, the raindrop area ratio increases slowly, so a raindrop detection cycle is set to keep the windscreen from going unwiped for a long time while the ratio stays below the wiper activation threshold. The wipers are activated at most once within a detection cycle to remove the raindrops, and the wiper sweep frequency is at its lowest. In moderate rain, the raindrop area ratio may not reach the wiper activation threshold in one detection but may reach it in subsequent detections. In this case, the wiper sweep frequency changes with the amount of rainfall. In heavy rain, the raindrop area ratio reaches the wiper activation threshold in every detection, and the wiper sweep frequency is at its highest. When there is no rain, the raindrop area ratio is less than the detection error, and the wipers halt.

The image acquisition frequency of the wiper control system is 10–60 fps, while the wiper’s sweeping frequency is typically 10–60 times per minute. The image acquisition rate is therefore significantly higher than the wiper’s sweeping frequency, which lays the foundation for the system’s operation. Fig. 15 illustrates the variation in the raindrop area ratio on the windscreen when a vehicle is driving in the rain under this wiper control method. When the raindrop area ratio reaches the system’s set threshold of 11%, the wiper is activated once. At this point, the image acquisition frequency is about 24 fps, and the wiper operates at a speed of 2.5 seconds per cycle.
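As a consistency check on the figures quoted above, and assuming the control rule sets the wiper frequency F (in sweeps per minute) numerically equal to the detection frequency f (in fps), a detection frequency of 24 fps corresponds to F = 24 sweeps per minute. The sweep period T then follows from:

```latex
T = \frac{60\ \text{s/min}}{F} = \frac{60}{24} = 2.5\ \text{s per sweep}
```

This matches the 2.5 seconds per cycle reported for the example in Fig. 15.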

Conclusion

This paper introduces a novel automatic wiper control system based on machine vision and deep learning. The system determines the raindrop area ratio through deep learning-driven detection of raindrop targets, which is used to assess rainfall dynamics and intensity variations. The wiper operating frequency and the target detection frequency are dynamically adjusted based on the raindrop area ratio. Compared with traditional methods, this system effectively overcomes the limitations of conventional rain sensors, including restricted detection range and insufficient real-time performance.

The core of the detection model is based on a Convolutional Neural Network (CNN) architecture. The developed all-weather model can detect raindrops under daytime and nighttime conditions, delivering high performance regardless of image capture time. The all-weather model achieves a precision rate of 0.89, a recall rate of 0.82, and a processing speed of 63 fps. Compared with existing technologies, it demonstrates superior performance, thereby fully validating its effectiveness and real-time capability.

The automatic wiper control system, powered by machine vision, ensures optimal visibility across diverse weather conditions. Combining precise rain detection, energy-efficient operation, and adaptive responsiveness enhances driving safety and comfort. Moreover, the wiper control system offers extensive adaptability. Only minor scaling or adjustments are required to adapt to different types of vehicles.

Owing to the variability and complexity of the external environment, disturbances during driving may still cause false detections. Future work will expand the dataset to enhance the model’s generalisation capability and reduce false detections.

Data availability

The datasets analyzed during the current study are available from the corresponding author on reasonable request.

References

Chen, Y. Design and research of intelligent wiper for automobile. In 2018 17th International Symposium on Distributed Computing and Applications for Business Engineering and Science (DCABES) 169–172. (IEEE, 2018).

Okuda, R., Kajiwara, Y. & Terashima, K. A survey of technical trend of ADAS and autonomous driving. In Technical Papers of 2014 International Symposium on VLSI Design, Automation and Test 1–4. (IEEE, 2014).

Kim, Y. G., Lee, S. H. & Kim, B. S. Measurement of rainfall using sensor signal generated from vehicle rain sensor. KSCE J. Civ. Environ. Eng. Res. 38(2), 227–235 (2018).

Choi, K. N. Omni-directional rain sensor utilising scattered light reflection by water particle on automotive windshield glass. In SENSORS, 2011 IEEE 1728–1731. (IEEE, 2011).

Jarajreh, M., Nortcliffe, A. & Green, R. Fuzzy logic and equivalent circuit approach to rain measurement. Electron. Lett. 40(24), 1 (2004).

Joshi, M., Jogalekar, K., Sonawane, D., Sagare, V. & Joshi, M. A novel and cost effective resistive rain sensor for automatic wiper control: circuit modelling and implementation. In 2013 Seventh International Conference on Sensing Technology (ICST) 40–45. (IEEE, 2013).

Alazzawi, L. & Chakravarty, A. Design and implementation of a reconfigurable automatic rain sensitive windshield wiper. Int. J. Adv. Eng. Technol. 8(2), 73 (2015).

Cheok, K. C., Kobayashi, K., Scaccia, S. & Scaccia, G. A fuzzy logic-based smart automatic windshield wiper. IEEE Control Syst. Mag. 16(6), 28–34 (1996).

OpenRoad Auto Group, Audi Boundary. How rain-sensing wipers work. https://openroadaudi.com/blog/how-rain-sensing-wipers-work (accessed March 2021).

Yamashita, A., Kuramoto, M., Kaneko, T. & Miura, K. T. A virtual wiper-restoration of deteriorated images by using multiple cameras. In Proceedings 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No. 03CH37453), vol. 4, 3126–3131. (IEEE, 2003).

Yamashita, A., Kaneko, T. & Miura, K.T. A virtual wiper-restoration of deteriorated images by using a pan-tilt camera. In IEEE International Conference on Robotics and Automation, 2004. Proceedings. ICRA’04. 2004, vol. 5, 4724–4729. (IEEE, 2004).

Kurihata, H. et al. Rainy weather recognition from in-vehicle camera images for driver assistance. In IEEE Proceedings. Intelligent Vehicles Symposium 205–210. (IEEE, 2005).

Ringnér, M. What is principal component analysis?. Nat. Biotechnol. 26(3), 303–304 (2008).

Guo, T., Akcay, S., Adey, P.A. & Breckon, T.P. On the impact of varying region proposal strategies for raindrop detection and classification using convolutional neural networks. In 2018 25th IEEE International Conference on Image Processing (ICIP) 3413–3417. (IEEE, 2018).

Wu, Q., Zhang, W. & Kumar, B.V. Raindrop detection and removal using salient visual features. In 2012 19th IEEE International Conference on Image Processing 941–944. (IEEE, 2012).

Kurihata, H. et al. Detection of raindrops on a windshield from an in-vehicle video camera. Int. J. Innov. Comput. Inf. Control 3(6), 1583–1591 (2007).

Roser, M. & Geiger, A. Video-based raindrop detection for improved image registration. In 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops 570–577. (IEEE, 2009).

Li, C.-H.G., Chen, K.-W., Lai, C.-C. & Hwang, Y.-T. Real-time rain detection and wiper control employing embedded deep learning. IEEE Trans. Veh. Technol. 70(4), 3256–3266 (2021).

Wang, S.-H., Hsia, S.-C. & Zheng, M.-J. Deep learning-based raindrop quantity detection for real-time vehicle-safety application. IEEE Trans. Consum. Electron. 67(4), 266–274 (2021).

Vasile, A. et al. Rain sensor for automatic systems on vehicles. In Advanced Topics in Optoelectronics, Microelectronics, and Nanotechnologies vol. 7821, 509–514. (SPIE, 2010).

Acknowledgement

The authors thank the Jiangsu Science and Technology Department of the People’s Republic of China for supporting this research under Contract No. BY2020356.

Author information

Contributions

G.Z., G.W. and J.C. wrote the main manuscript text and prepared the figures. W.J. and T.D. performed the validation; X.H. performed the formal analysis. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, G., Wang, G., Chen, J. et al. An automatic control system based on machine vision and deep learning for car windscreen clean. Sci Rep 15, 4857 (2025). https://doi.org/10.1038/s41598-025-88688-9

DOI: https://doi.org/10.1038/s41598-025-88688-9