Abstract

Semantic segmentation of LIDAR point clouds is essential for autonomous driving. However, current methods often suffer from low segmentation accuracy and feature redundancy. To address these issues, this paper proposes a novel approach based on adaptive fusion of multi-scale sparse convolution and point convolution. First, addressing the drawbacks of redundant feature extraction with existing sparse 3D convolutions, we introduce an asymmetric importance of space locations (IoSL) sparse 3D convolution module. By prioritizing the importance of input feature positions, this module enhances the sparse learning performance of critical feature information. Additionally, it strengthens the extraction capability of intrinsic feature information in both vertical and horizontal directions. Second, to mitigate significant differences between single-type and single-scale features, we propose a multi-scale feature fusion cross-gating module. This module employs gating mechanisms to improve fusion accuracy between different scale receptive fields. It utilizes a cross self-attention mechanism to adapt to the unique propagation features of point features and voxels, enhancing feature fusion performance. Experimental comparisons and ablation studies conducted on the SemanticKITTI and nuScenes datasets validate the generality and effectiveness of the proposed approach. Compared with state-of-the-art methods, our approach significantly improves accuracy and robustness.

Similar content being viewed by others

Introduction

The rapid advancement of autonomous driving technology has thrust scene understanding into the forefront, garnering considerable attention1,2. Scene understanding involves swiftly and accurately providing precise location and classification details of environmental objects3. Usually, scene understanding is performed by a visual camera or 3D LIDAR. Unlike conventional visual cameras, 3D LIDAR has emerged as a favored sensor for scene comprehension due to its robustness and ability to capture highly precise environmental data4,5. The main task of semantic scene understanding based on 3D LIDAR is to assign predicted class information to the captured environmental point cloud6.

In recent years, with the continuous evolution of deep learning, research on LIDAR-based point cloud semantic segmentation methods has also progressed. Initially, in the early stages of point cloud semantic segmentation, a 3D point cloud was projected onto a 2D plane, followed by processing using 2D CNNs7. However, this approach inevitably led to the loss of structural geometry features of the point cloud. Simultaneously, some studies utilized 3D CNNs to directly process the unordered point cloud after converting it into regular voxels. While this voxel-based representation method captured superior spatial feature information and was more efficient, it also resulted in the loss of contextual feature information, significantly impacting the accuracy of point cloud segmentation8. Consequently, the research focus gradually shifted towards direct point processing. PointNet9 emerged as the pioneering method for directly processing and segmenting points. Despite its ability to retain structural geometry information to a significant extent and its enhanced capability to capture spatial location context feature information, the disorderly and sparse distribution of point clouds led to computationally intensive calculations10.

Due to the limitations of various methods, researchers have increasingly explored combining different approaches to enhance the extraction of complex geometric and potentially related features in point cloud semantic segmentation. Among these, the fusion method that combines point-based and voxel-based approaches has shown superior segmentation performance11. This method integrates the high-precision context features extracted by the point-based approach with the global structural features provided by the voxel-based approach12, leading to more accurate point cloud segmentation. However, while existing methods combining point and voxel-based approaches have achieved high segmentation accuracy, they still face significant challenges. Sparse 3D convolution methods, for instance, tend to expand all sparse features within a voxel, resulting in excessive redundant features. Additionally, while integrating point features with voxel features can leverage their complementary strengths, straightforward feature addition often leads to losing crucial information. To address these issues, this paper proposes an adaptive fusion point cloud semantic segmentation method using multi-scale sparse three-dimensional convolution and point convolution. The main contributions of this work are as follows:

-

To address the limitations of existing sparse 3D convolution methods, which tend to extract excessive redundant features while neglecting the structural features in each direction, we propose an asymmetric importance of space locations (IoSL) sparse 3D convolution module. This module enhances the sparse learning of voxel features by prioritizing the prediction of point positions. Additionally, it preserves the features of the point cloud along both the vertical and horizontal directions, leading to improved efficiency and accuracy in the segmentation process;

-

To address the limitations of existing point and voxel feature fusion methods, which typically fuse features directly at a single scale and overlook important contextual information across different scales, we propose a multi-scale feature fusion cross-gating module. This module enhances the fusion accuracy between different receptive fields and adapts to the distinct propagation features of points and voxels, thereby improving the overall feature fusion performance;

-

Extensive experiments validate the effectiveness of the proposed point cloud semantic segmentation method, demonstrating its superior performance compared to alternative methods.

The remaining sections of this paper are outlined below. The second section provides a comprehensive review of related research work. Following this, the third section elaborates on the components of the proposed method. Subsequently, the fourth part delves into the details of the experiment conducted. Finally, the fifth section summarizes the entire text.

Related work

In this section, we will briefly introduce existing research methods on point cloud semantic segmentation. Existing methods can be categorized into four main types based on their research approaches: projection-based, point-based, voxel-based, and fusion-based methods.

Projection-based segmentation

Projection-based methods offer an indirect approach to point cloud semantic segmentation by transforming 3D point clouds into 2D representations for processing. Alonso et al. introduced 3D-MiniNet13, a framework combining 3D and 2D learning layers to capture both local and global features. These features are fed into an FCNN for semantic segmentation. The resulting 2D labels are then re-projected into 3D space and refined with post-processing modules. Similarly, Wang et al. proposed SwinURNet14, which employs spherical projection to transform the point cloud into a distance image. Extracted 2D features are encoded and decoded using a non-square transformer, while a multi-dimensional information fusion module balances semantic differences between 2D feature maps and the 3D feature space. Massa et al. developed a Bayesian multi-projection fusion method15 that enhances robustness by weighting classification probabilities from a basic classifier and further refining results through KNN processing and multi-projection fusion. Yuan et al. proposed a segmentation method16 for large-scale point clouds using a transformer and slot attention mechanism. Their approach projects the point cloud into multi-channel images, where a transformer-based context feature aggregation module extracts global features. The slot attention mechanism then learns the relative relationships between multi-channel features, improving semantic understanding and segmentation accuracy. Although projection-based methods often achieve faster processing speeds, they have limitations. These include reduced capacity for preserving 3D spatial features and challenges such as distortion and occlusion during projection, which can hinder segmentation performance.

Point-based segmentation

Point-based methods focus on capturing feature information at the individual point. Recent advancements in applying neural networks to point clouds have shown significant promise in this domain. Qi et al. pioneered this approach with PointNet9, the first semantic segmentation method for directly processing raw point clouds. PointNet employs MLP and max-pooling layers to extract both point-specific and global features from irregular point sets. However, its ability to capture local feature information is limited. To address this, Li et al. proposed a semantic segmentation method17 using attention transfer learning, which enhances local feature extraction through an attention pooling module. Transfer learning is then applied to optimize segmentation performance with minimal data requirements. Zhan et al. introduced FA-ResNet18, which leverages a residual MLP to deeply aggregate local shape relationships and point features, significantly improving the accuracy of point feature extraction. Inspired by the success of convolution operators in image processing, methods based on point convolution have also emerged. Thomas et al. developed KPConv19, a kernel point convolution method that uses multiple local 3D filters for flexible and effective convolution operations on point clouds. This approach enables unrestricted feature description and learning capabilities. Building on this, Zhao et al. proposed a semantic segmentation network20 using dual-attention KPConv, which integrates channel and spatial attention blocks to refine features adaptively. The method employs multiple attention gates during the decoding process to fuse upsample features, yielding multi-scale perceptual insights. Compared to MLP-based methods, kernel convolution approaches demonstrate superior performance by effectively extracting point-wise features and neighborhood relationships, making them more adept at capturing local and global features in point clouds.

Voxel-based segmentation

Voxel-based methods represent 3D spaces by converting voxelized point clouds into a structured voxel grid. This approach enables the estimation of geometric and feature information within each voxel, providing a robust framework for semantic segmentation. Zhu et al. introduced a model21 leveraging 3D CNNs that utilizes a cylindrical partition representation of 3D point clouds. Their approach integrates asymmetric residual blocks and dimensional decomposition-based context modeling modules to extract higher-order contextual features while minimizing computational overhead. Similarly, Cheng et al. proposed the Bilateral Voxel Transformer (BVT)22, which employs a dual-branch structure to query voxel locations and dynamically update key features through weighted geometric sensing sampling. Bao et al. presented GLSNet + + 23, a context-dependent voxel segmentation method that combines global and local feature manifold streams to capture multi-scale context and structural information. The method employs spatial context-dependent feature fusion to accurately resolve class membership relationships near voxel boundaries. Building on these advancements, Yang et al. proposed V-SIM24, an approach designed to mitigate the challenges of point density imbalance. V-SIM enhances feature interactions through voxel slicing and uses an interactive attention mechanism to achieve adaptive, self-enhancing voxel feature extraction. While voxel-based methods excel in accuracy and precision, their efficiency is limited by the computational burden of processing empty voxels, which significantly hinders inference speed.

Fusion-based segmentation

To address the limitations of individual methods, researchers have explored fusion-based approaches that combine multiple techniques to enhance point cloud semantic segmentation. Dai et al. proposed PCE25, a fusion method that integrates 2D projection context-embedded features with 3D voxel features. By employing an embedding entanglement strategy, PCE effectively combines these features to improve prediction accuracy. Park et al. presented a fusion method12 that combines sparse 3D convolution and point convolution, using the latter for feature extraction and the former for efficient feature propagation. Their approach further optimizes discretization errors through cross-entropy loss and position-perception loss. Li et al. introduced a multi-scale fusion method26 that incorporates voxel and point data alongside a pyramid decoding module, enhancing feature representation across multi-scale. Similarly, Zhang et al. developed PVCFormer27, which processes voxel data at varying resolutions simultaneously to expand the receptive field. This method employs a cross-attention mechanism to fuse point and voxel features, significantly improving segmentation accuracy and computational efficiency. Xie et al. proposed a fusion method28 leveraging axial movement operations, capturing richer geometric features through upward axial movements around the voxel. This approach integrates point features using an attention mechanism to achieve a more robust feature representation. Fusion-based methods are highly effective at extracting diverse feature information in parallel and integrating it through specialized backbones29. Building on this, this paper proposes an adaptive fusion point cloud semantic segmentation method that leverages multi-scale sparse three-dimensional convolution and point convolution.

Methods

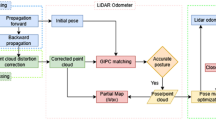

The paper introduces a novel point cloud semantic segmentation method designed for LIDAR, with the overall network architecture depicted in Fig. 1. Initially, the input LIDAR point cloud undergoes preprocessing to generate voxel data based on a multi-scale cylindrical grid partitioning approach. Subsequently, the original point cloud and multi-scale voxel data are fed into two independent feature encoder branches, allowing for parallel processing. In the point cloud processing branch, KPConv kernel point convolution is employed to capture fine-grained point features. Meanwhile, the voxel processing branch utilizes asymmetric IoSL sparse 3D convolution to extract voxel features, obtaining coarse-grained voxel representations at different scales. After feature extraction in both branches, a multi-scale feature fusion cross-gating module is applied. This module incorporates a voxel feature fusion gating mechanism and a cross self-attention fusion mechanism to combine features of varying types and scales adaptively. Finally, point-wise refinement using an MLP is performed to produce the final point cloud semantic segmentation results. The subsequent sections provide a detailed description of each module.

Data pre-processing

The performance of point cloud semantic segmentation tasks is closely related to the size of the receptive field30, where a larger receptive field can capture more extensive global contextual information31. Meanwhile, due to the sparse density distribution features of LIDAR point clouds, uniform point grid partitioning can lead to an uneven distribution of points. Therefore, inspired by the cylindrical voxel partitioning method21 we propose a multi-scale cylindrical coordinate point cloud partitioning pre-processing method, which obtains layered cylinder grid voxels by processing point clouds with different scale resolutions.

In cylindrical grid division, an increased mesh size is employed within the cylindrical coordinate system to encompass more distant point clouds while maintaining a uniform proportion of non-empty voxels21. This ensures a more balanced distribution of point clouds at varying distances. The multi-scale point cloud cylindrical partitioning process is illustrated in Fig. 2. Initially, we employ the farthest point sampling (FPS) algorithm32 three times on the input point cloud, resulting in three subsets of point clouds with different resolutions, containing \(\:{N}_{1}\), \(\:{N}_{2}\), and \(\:{N}_{3}\) points, respectively. Subsequently, the points in the three sets of point cloud subsets in Cartesian coordinates are transformed into cylindrical coordinates. This transformation entails converting coordinates \(\:(x,y,z)\) to \(\:(\rho\:,\theta\:,z)\), where \(\:\rho\:\) represents the radius, which is the distance from the origin to the point projected onto the \(\:xy\)-plane, and \(\:\theta\:\) denotes the azimuthal angle, indicating the angle between the \(\:x\)-axis and the projection of the point onto the \(\:xy\)-plane. Subsequently, the three-dimensional coordinate system is partitioned into cylindrical volumes. Notably, within the cylindrical coordinate system, the size of the segmented unit grid increases proportionally with the distance from the origin. Finally, we use mapping functions \(\:P\to\:V\) and \(\:V\to\:P\) to represent the mutual conversion between points and cylinders, respectively. Utilizing these mapping functions enables the relative transformation between features. Consequently, the extracted coarse-grained voxel features can complement the fine-grained point features, thereby mitigating feature loss and deviation.

Point processing

In the fine-grained point processing branch, we utilize the KPConv19 kernel convolution method to extract feature information from the input point cloud \(\:P\). Following the methodology established in prior research, we consider an input point \(\:{x}_{i}\in\:P\) with its corresponding input feature \(\:{f}_{i}\in\:{F}_{P}\). Subsequently, the convolution operation for a point \(\:x \in \:\mathbb{R}^{{N \times \:3}}\) at core point \(\:{\{x}_{k}\left|k<K\right\}\subset\:\mathbb{R}^{K\times\:3}\) is defined as:

Where \(\:k\in\:\mathbb{R}^{K\times\:3}\) is the neighborhood position of the central point, \(\:{W}_{k}\in\:\mathbb{R}^{{D}_{in}\times\:{D}_{out}}\) represents the correlation convolution weight mapping the input dimension of the central point to the output dimension. Here, \(\:h\left(\cdot\:\right)\) signifies the correlation between the input point \(\:{x}_{i}\) and its corresponding central point \(\:{x}_{k}\). Notably, as the central point approaches the input point, the output of the correlation function \(\:h\) increases. Consequently, the linear correlation function \(\:h\left(\cdot\:\right)\) can be expressed as follows:

Where \(\:\sigma\:\) represents the influence distance of the central point, which is selected based on the density of the input point cloud. In nuclear deformation learning, linear correlation is employed to alleviate the backpropagation of gradients.

Voxel processing

For the branch of coarse-grained cylindrical partition voxel processing, this paper employs a method of feature extraction based on three-dimensional sparse convolution. After pre-processing the point cloud, three different resolutions of cylindrical voxel partitioning are obtained, which are utilized for extracting voxel features at small, medium, and large scales, respectively. At each scale, an asymmetric IoSL sparse 3D convolution module is employed for feature extraction. Next, we will introduce the IoSL sparse 3D convolution, and asymmetric IoSL sparse 3D convolution module separately.

IoSL sparse 3D convolution

Sparse 3D convolution is the preferred method for voxel feature extraction. However, existing regular and submanifold sparse convolutions33 process all input sparse data, resulting in a significant increase in sparse features and generating redundant candidate features that blur valuable information34. These limitations can decrease the performance of the model. To address this issue, inspired by focal sparse 3D convolution35, this paper proposes the IoSL sparse 3D convolution based on the importance of space locations.

The IoSL Sparse 3D Convolution selects feature position inputs based on predicting the importance of sparse feature space locations36. The IoSL sparse 3D convolution process is shown in Fig. 3. It begins by computing the importance map \(\:{I}^{p}\), for all sparse features within the space. For a given input sparse feature \(\:{T}_{p}\), situated at position \(\:p\) in three-dimensional space, with \(\:{c}_{in}\) feature channels, the input and output feature spaces are denoted as \(\:{P}_{in}\) and \(\:{P}_{out}\) respectively. When \(\:{P}_{in}{=P}_{out}\), through nuclear weight \(\:w\in\:\mathbb{R}^{{K}^{d}\times\:{c}_{in}\times\:{c}_{out}}\) convolution of importance to deal with the features of the output mapping \(\:{I}^{p}\), the specific calculation process is as follows:

Where \(\:{I}^{p}\) signifies feature importance mapping; \(\:k\) denotes the discrete position within feature space \(\:{K}^{d}\); \(\:\overline{p} _{k} = p + k\) represents the corresponding position of the feature center \(\:p\), where \(\:k\) indicates the offset distance from \(\:p\). \(\:{K}^{d}(p,{P}_{in})\) refers to the subset of feature space \(\:{K}^{d}\) with vacant positions eliminated, contingent upon position \(\:p\) and input feature space \(\:{P}_{in}\).

After obtaining the feature importance mapping \(\:{I}^{p}\), the model selects significant input features based on the threshold value \(\tau\). The important input feature space \(\:{P}_{im}\) constitutes a subset of the input feature space \(\:{P}_{in}\), encompassing the positions of the most crucial input features, outlined as follows:

Where \(\:{I}_{0}^{p}\) denotes the center of the importance prediction map \(\:{I}^{p}\) at position \(\:p\); \(\tau\) represents the set threshold. When \(\tau\) equals 0 or 1, the formula respectively transforms into regular or submanifold sparse convolution.

The feature extends into a dynamic form within the significant input feature space \(\:{P}_{im}\), and the output close to the feature position \(\:p\) is determined by the dynamic output set \(\:{K}_{im}^{d}\left(p\right)\).

For the remaining unimportant features, their output positions will serve as fixed inputs, implying that the submanifold convolves the inputs. Deleting them outright or employing a fully dynamic approach without preserving them could render the training process unstable. In this scenario, the output feature space \(\:{P}_{out}\) can be established as the amalgamation of all significantly extended regions and other unimportant positions. The extent of the extended area can be dynamically and adaptively determined based on its input position.

After delineating the significant feature input space \(\:{P}_{im}\) and the feature output space \(\:{P}_{out}\), the explicit calculation formula for IoSL sparse 3D convolution is presented as follows:

Asymmetric IoSL sparse 3D convolution module

In the actual application process, the objects in the natural scene all show irregular shapes and contours, so it is still a big challenge to accurately segment the object boundary37,38. Therefore, to achieve the accurate segmentation of the upward boundary of all parties, we use the asymmetric upsample convolution blocks and downsample convolution blocks in the cylinder partition voxel processing branch, and extract as many geometric features of the upward structure of all parties as possible through the left and right asymmetric convolution operations. Figure 4 illustrates the network structure of the asymmetric IoSL sparse 3D convolution module. The AD and AU sections depict the structures of the downsample network and upsample network, respectively. Within the downsample network, voxel inputs from the cylinder partition bifurcate into two branches: one comprises a 3*1*3 convolution kernel and a 1*3*3 convolution kernel, while the other consists of a 1*3*3 convolution kernel and a 3*1*3 convolution kernel, followed by aggregation of their outputs. These aggregated results undergo processing through downsample IoSL sparse 3D convolution. In the upsample network, inputs initially undergo upsample IoSL sparse 3D convolution to ensure consistency in the number of feature channels between upsample and downsample layers. Subsequently, the output of the subsampled network, along with corresponding layers, is aggregated and fed into the residual branch for further processing.

The asymmetric IoSL sparse 3D convolution module preserves the feature information in each direction to the maximum extent by constructing different convolution branches in the horizontal and vertical directions, thus enhancing the feature extraction capability. In addition, compared with conventional convolution modules, the asymmetric IoSL sparse 3D convolution module also greatly saves computing costs and improves segmentation efficiency.

Multi-scale feature fusion cross-gating module

After point processing and voxel processing to obtain voxel features and point features at different scales, all features will be input into the multi-scale fusion module for feature fusion. The proposed multi-scale feature fusion cross-gating module in this paper consists of two main parts: the multi-scale voxel feature fusion gating mechanism and the cross self-attention fusion mechanism. The multi-scale voxel feature fusion gating mechanism processes voxel features at multi-scale for coarse-grained voxel feature fusion. And the cross self-attention fusion mechanism is responsible for fusing coarse-grained voxel features with fine-grained point features. Next, we will introduce these two modules separately.

Multi-scale voxel feature fusion gating mechanism

Figure 5 illustrates the network structure of the multi-scale voxel feature fusion gating mechanism. At each scale, the feature mapping \(\:{T}_{i}\) corresponds to the voxel feature \(\:{F}_{{V}_{i}}\). The calculation formula for feature mapping \(\:{T}_{i}\) is as follows:

Where \(\:{W}^{{T}_{i}}\) denotes the learning weight matrix computed by a linear function, and \(\:{T}_{i}\in\:\left[\text{0,1}\right]\) represents the acceptance or rejection of the transfer of voxel features at each scale. For voxels at scale \(\:I\), the fusion features are calculated as follows:

Where \(\:\widetilde{F}_{V}\) represents the fusion voxel feature, and \(\:\cdot\:\) represents element-wise multiplication, the probability weights of feature channels at each scale are calculated through softmax normalization. Subsequently, these weights are multiplied and accumulated with the corresponding feature channels to derive the final fusion voxel features.

Cross self-attention fusion mechanism

Fusing fine-grained point features with coarse-grained voxel features can enhance the model’s accuracy and robustness. However, direct fusion may result in erroneous feature fusion due to differing propagation features39.To address this, we employ a feature fusion mechanism based on cross self-attention40 to enhance both point and voxel feature information fusion.

Figure 6 illustrates the network structure of cross self-attention fusion mechanism. Initially, the matrices \(\:Q\), \(\:K\), and \(\:V\) are computed separately by employing a linear function on the fused feature \(\:{F}_{PV}\), where \(\:F_{{PV}} = F_{P} \oplus \:\widetilde{F}_{V}\). Subsequently, the \(\:R\) matrix is derived by embedding the local point coding features41. The calculation formula is as follows:

Where \(\:Q\), \(\:K\), and \(\:V\) represent the query matrix, key matrix, and value matrix, respectively, while \(\:R\) denotes the point coding matrix. \(\:{W}^{Q},{\:W}^{K},{\:W}^{V}\), and \(\:{W}^{R}\) denote the weights of their respective matrices, and \(\:{R}_{ec}\) represents the embedded local point coding feature.

Next, it is important to note that the score \(\:E\) is computed by multiplying the \(\:Q\) matrix with both the \(\:K\) matrix and the \(\:R\) matrix. Subsequently, the attention weight is determined using the softmax function and then multiplied with the \(\:V\) matrix to derive the final fused feature.

Where \(\:dt\) represents the number of eigen-dimensions of the key vector; And \(\:\widetilde{F}_{{PV}}\) represents the final fused feature between the point and voxel.

Point-wise refinement module

After obtaining the fused feature \(\:\widetilde{F}_{{PV}}\), it is projected onto the corresponding points using the voxel mapping function. At this stage, each point’s features encompass abundant point-specific details as well as extensive global context information. Subsequently, the MLP layer42 computes the probability for each category at every point based on the fused feature. The maximum probability value for each category is then utilized to classify individual points, thereby achieving semantic segmentation of the point cloud on a point-by-point basis.

Experiments results and analyses

In this section, we will evaluate the performance of our approach using different datasets. We begin by describing the experimental details and evaluation indexes. Next, we present a comparative analysis of the proposed method and other approaches using the SemanticKITTI and nuScenes datasets. Finally, we provide an in-depth analysis of the results, offering insights into the proposed method’s performance.

Implementation details and evaluation indexes

These experiments were conducted using an NVIDIA RTX 3090 GPU. For both the SemanticKITTI and nuScenes datasets, we segmented the input point cloud into a three-dimensional cylinder partition with dimensions of 480*360*32. During the training phase, the model was optimized using the Adams optimizer with default parameter settings. The maximum number of epochs was set to 150, and the initial learning rate was 0.001.

To evaluate the performance of the proposed method, we use the mean cross-association mIoU43 as the main evaluation metric. The specific calculation formula of mIoU is shown as follows:

Where \(\:C\) is the number of semantic categories; \(\:{TP}_{c}\), \(\:{FP}_{c}\), and \(\:{FN}_{c}\) represent the true positive examples, false positive examples, and false negative examples corresponding to semantic category \(\:C\), respectively. A higher mIoU value indicates better model performance.

Dataset

SemanticKITTI

The SemanticKITTI dataset, collected using the Velodyne HDL-64 LIDAR, is a large-scale driving scene dataset designed for point cloud segmentation. It is tailored for specific tasks such as semantic segmentation and panoramic segmentation. The dataset comprises 22 sequences and 19 semantic classes. Sequences 00 to 10 constitute the training set, sequence 08 form the validation set, and sequences 11 to 21 constitute the test set.

nuScenes

The nuScenes dataset is collected using the Velodyne HDL-32E LIDAR and is specifically designed for autonomous driving with sparse point cloud segmentation, which introduces additional challenges for LIDAR semantic segmentation tasks. The dataset comprises 1,000 scene sequences categorized into 16 standard semantic classes. Out of these, the first 700 scene sequences are designated for the training set, the next 150 scene sequences for the validation set, and the remaining 150 scene sequences for the test set.

Comparative experimental results

Comparison on SemanticKITTI

The comparative experimental results between our method and others on the SemanticKITTI dataset are presented in Table 1. The selected comparison methods include projection-based methods, point-based methods, voxel-based methods, and fusion-based methods. From the data in the table, it is evident that our method achieves the most accurate semantic segmentation results on the SemanticKITTI dataset, outperforming other methods in terms of the mIoU evaluation metric. Compared to projection-based methods such as 3D-MiniNet13 and SalsaNext45, our method demonstrates a significant improvement in mIoU values, showing increases of 9.8% and 6.1%, respectively. Similarly, compared to point-based methods like LG-Net46 and HCT-Kpconv47, our approach outperforms these models, achieving 9.3% and 1.5%, respectively. Furthermore, our method also surpasses voxel-based and fusion-based techniques, including MarS3D48, Memoryseg49, FusionNet50, and PCSCNet12, with mIoU values improving by 12.9%, 7.3%, 4.3%, and 2.9%, respectively. Particularly noteworthy is that our method demonstrates significantly better segmentation performance for dynamic objects such as cars and trucks compared to other methods.

To more intuitively reflect the point cloud semantic segmentation quality of our proposed method, we present a visual comparison diagram of segmentation results as shown in Fig. 7. The test sequence selected for visualizing the segmentation results is the validation sequences of the SemanticKITTI dataset. In Fig. 7, column a depicts the ground segmentation truth images of the point cloud. Subsequently, column b displays the segmentation results obtained using the FusionNet method, while column c showcases the segmentation visualization results of the PCSCNet method. Finally, column d presents the visualization images of point cloud segmentation generated by our method. As shown in Fig. 7, both FusionNet and PCSCNet exhibit poor segmentation performance, particularly when dealing with complex real-world environments, where significant errors in segmentation occur. These methods struggle to accurately segment visually similar objects, such as traffic poles and trees, leading to many missegmentation cases. This issue arises because traffic poles and trees often share similar characteristics in color and texture, making it challenging to distinguish between them. Additionally, dynamic objects, such as moving vehicles, are frequently misclassified as part of the static environment, further exacerbating segmentation errors. In contrast, the method presented in this paper demonstrates clear advantages in segmentation accuracy when applied to various targets in real-world environments. Specifically, for segmentation tasks involving moving vehicles, trees, roads, and other objects, the proposed method effectively segments and provides more precise results. Of particular note is its strong performance in segmenting dynamic targets, as it can accurately capture the motion and morphological characteristics of vehicles. This ability allows the method to minimize interference from the static background and significantly improve segmentation accuracy. These advantages enable the proposed method to reduce error rates and enhance overall performance when dealing with segmentation tasks in complex scenes.

Comparison on nuScenes

The comparative experimental results between the proposed method and others on the nuScenes dataset are presented in Table 2. It is evident from the data that the proposed method demonstrates superior performance in the nuScenes dataset, boasting the highest mIoU value. Our method’s mIoU value shows a notable increase of 7.5%, 2.0%, 1.0%, 0.2%, and 1.1%, respectively, compared to the following methods: RangeNet + + 51, PolarNet52, PCSCNet12, ACPNet53, and Salsanext45. Notably, the method presented in this paper excels at segmenting both dynamic and static targets, particularly those that are difficult for other methods to handle, such as motorcycles, buses, and bicycles. When compared to alternative approaches, the mIoU of these moving object categories shows a significant increase, further demonstrating the advantages and robustness of the proposed method in managing complex scenes. In addition, it is worth noting that the nuScenes dataset features a sparser point cloud distribution compared to the SemanticKITTI dataset. Despite this, the proposed method still achieves the best segmentation performance, outpacing other point cloud segmentation methods, which struggle in sparse point cloud environments. This result further demonstrates that the proposed method can maintain high semantic segmentation performance even under low point cloud density.

Efficiency studies

In this section, we analyze the computational complexity and runtime performance of the proposed method. Evaluating efficiency provides insights into the method’s ability to handle large-scale point cloud data and required computational resources for deployment54. Table 3 presents a comparison of the efficiency of our method against other approaches on the SemanticKITTI dataset.

Table 3 compares the parameters, multiply-accumulate operations (MACs), latency, and mIoU of various methods on the SemanticKITTI dataset under consistent evaluation criteria. The data highlights key trade-offs between model size, computational efficiency, and segmentation performance. Compared to small models with low latency, such as PolarNet52, SalsaNext45, and LG-Net46, the proposed method shows a significant improvement in mIoU, with increases of 11.3%, 6.1%, and 9.3%, respectively. Additionally, it uses significantly fewer parameters than larger models like SqueezeSegV355, which has 26.2 M parameters, thanks to the asymmetric IoSL sparse 3D convolution module. Furthermore, while the average MACs of other comparison methods is 164.4G, the proposed method only requires 92.7G, a reduction of 71.7G compared to the average. This demonstrates that the proposed method consumes fewer computing resources. Finally, while the proposed method is comparable to PCSCNet12 in terms of model size and runtime, it achieves a notable improvement in segmentation performance, with a mIoU increase of 2.9%.

The comparative analysis of operational efficiency demonstrates that the proposed method achieves the highest segmentation accuracy while maintaining low computational complexity. This makes it well-suited for deployment in resource-constrained environments, as it requires significantly fewer computational resources compared to other methods.

Ablation experimental results

In this section, we will conduct comprehensive ablation experiments on various components of the proposed method to investigate their impact on the overall method performance.

Asymmetric IoSL sparse 3D convolution module

To evaluate the impact of the proposed asymmetric IoSL sparse 3D convolution module on segmentation performance, we isolated this module while retaining other components of our method. We directly applied the traditional submanifold sparse 3D convolution module to extract voxel features from the cylinder grid partition, effectively removing the asymmetric IoSL sparse 3D convolution module. This modified method is denoted as M1. The results of the ablation experiment M1 on the SemanticKITTI dataset are presented in Table 4. Analysis of the comparative data in the table clearly shows that the integration of the asymmetric IoSL sparse 3D convolution module not only improves the mIoU evaluation index by 2.7%, but also reduces the number of parameters in the network model by 2.4 M. In addition, the table also contains three categories of data that are most affected by asymmetric IoSL sparse 3D convolution module, namely car, person, and pole. Compared with M1 without asymmetric IoSL sparse 3D convolution module, the IoU of the method proposed in this paper increases by 3.1%, 5.5%, and 5.3% respectively. It is further shown that the asymmetric IoSL sparse 3D convolution module can not only reduce the extraction of redundant features, but also enhance the extraction ability of key features.

To facilitate an intuitive comparison of ablation performance, we selected partial field point clouds from sequence 08 in the SemanticKITTI dataset for visual segmentation comparison, depicted in Fig. 8. The visual comparison clearly shows that M1, which excludes the asymmetric IoSL sparse 3D convolution module, exhibits limited segmentation capability for distant objects like trees and cars within the environment. Ablation experiment M1 underscored the effectiveness of the proposed asymmetric IoSL sparse 3D convolution module. This module enhances the characterization of target distributions by extracting asymmetric sparse features horizontally and vertically, prioritizing critical features through feature importance analysis to mitigate the redundancy of irrelevant features.

Multi-scale feature fusion cross-gating module

To better understand how the multi-scale feature fusion cross-gating module influences the overall segmentation performance of the method, we conducted ablation experiments on two specific mechanisms: M2, which focuses on the multi-scale voxel feature fusion gating mechanism, and M3, which explores the cross self-attention point and voxel feature fusion mechanism within the module.

In ablation experiment M2, we retained all other modules of the method while ablating the multi-scale voxel feature fusion gating mechanism. Instead, we directly fused the extracted voxel features of the cylinder mesh at three different scales. The results of this ablation are presented in Table 4. The ablation study results reveal the significant impact of the multi-scale voxel feature fusion gating mechanism on the method’s performance. Removing this mechanism led to a 2.9% reduction in mIoU, underscoring its importance. For dynamic objects with relatively distinct spatial structures, such as cars and pedestrians, the mechanism effectively aggregated key features across different scales, resulting in IoU improvements of 1.7% and 1.4%, respectively. For fixed objects with elongated spatial structures, such as bar-like classes, the mechanism maintained segmentation accuracy and integrity by enhancing the distinction between features of different classes, achieving a 2.4% increase in IoU. Additionally, the number of parameters was reduced by 1.3 M, demonstrating improved efficiency. These findings validate the effectiveness of the multi-scale voxel feature fusion gating mechanism. By addressing feature disparities across scale resolutions and selectively integrating critical multi-scale features, it significantly enhances the semantic segmentation performance of point clouds.

In ablation experiment M3, we utilized the fusion mechanism involving ablative cross self-attention points and voxel features. Specifically, we directly fused corresponding point features with voxel features, leaving other modules unchanged. The results of this ablation are detailed in Table 4. The detailed ablation results, presented in Table 4, demonstrate that the proposed fusion mechanism significantly enhances the aggregation accuracy of point and voxel features, leading to a 1.7% increase in mIoU. Notably, in three representative categories—cars, pedestrians, and poles—the mechanism achieved IoU improvements of 0.8%, 1.1%, and 1.6%, respectively. Despite these accuracy gains, the number of parameters remains largely unchanged. The findings from ablation experiment M3 further validate the effectiveness of the fusion mechanism. By addressing the unique propagation features of point and voxel features, the mechanism significantly improves integration accuracy while minimizing the risk of erroneous fusion. This highlights its contribution to the overall performance of the method.

To better compare the performance of the ablation experiments M2 and M3, selected partial field point clouds from sequence 08 in the SemanticKITTI dataset were visually compared for segmentation results, as depicted in Fig. 9. The comparison clearly illustrates that segmentation performance declines when the multi-scale feature fusion cross-gating module is omitted. This omission results in varying degrees of false segmentation, particularly affecting dynamic targets like cars and smaller targets such as street trees. Therefore, it can be concluded that the multi-scale feature fusion cross-gating module enhances the accuracy of feature fusion across different types and scales, thereby improving the overall accuracy and robustness of the method.

Experimental discussion and analysis

The superior performance of the proposed method is demonstrated through comparison and ablation results on the SemanticKITTI and nuScenes datasets. These results show that the proposed method outperforms other methods in both datasets. Firstly, on the SemanticKITTI dataset, the proposed method achieves high precision and robust segmentation, especially in complex environments. This performance improvement is largely attributed to the multi-scale feature fusion cross-gating module, which enhances the segmentation of dynamic objects with distinct spatial structures, such as cars and people. Furthermore, for static objects with elongated spatial structures, the gating mechanism plays a crucial role in effectively aggregating key semantic features, thereby preserving the structural integrity of the environment, particularly for objects like trees and poles. Additionally, experiments on the SemanticKITTI dataset demonstrate that the proposed method significantly improves LIDAR point cloud segmentation, even in complex scenes. Despite challenges such as illumination variations and dynamic targets, our method consistently outperforms current mainstream methods in terms of accuracy. Secondly, the comparative experimental results on the nuSenes dataset clearly demonstrate that the proposed method outperforms other methods in terms of high-precision segmentation, even in complex environments with sparse point cloud distributions. This is due to the asymmetric IoSL sparse 3D convolution module, which effectively solves the challenges brought by sparse point clouds. Specifically, the module effectively fuses global and local context features at different scales, significantly alleviating the feature loss problem common in sparse point clouds. Therefore, when applied to nuScenes dataset with sparse point cloud distribution and complex scenes, the method proposed in this paper still has high segmentation performance. Finally, the comparison of efficiency between the proposed method and other approaches shows that the proposed method achieves the highest segmentation accuracy while keeping computational complexity low. This advantage primarily arises from the Iosl sparse network, which identifies key features by pre-calculating their locations. This pre-selection process reduces the number of network parameters, further optimizing computational efficiency. As a result, the proposed method is easy to implement in complex environments and demonstrates greater robustness.

While the proposed method demonstrates excellent segmentation performance and robustness, it has some potential limitations. Specifically, it has only been tested on public datasets, so its performance in real-world scenarios has not been verified. Additionally, as segmentation accuracy improves, the complexity and computational cost of the network significantly increase. This presents challenges in real-world environments where computational resources may be limited, potentially affecting both efficiency and accuracy. Nevertheless, the experimental results of this paper indicate that the proposed method generalizes well to unknown data. When compared to the most advanced methods, our approach shows significant improvements in both accuracy and robustness.

Discussion

In this paper, we propose a LIDAR point cloud semantic segmentation method based on point convolution and sparse 3D convolution adaptive fusion. The method utilizes an asymmetric IoSL sparse 3D convolution module, which enhances the sparse learning performance of key voxel features by prioritizing the prediction of feature location importance. Following this, a multi-scale feature fusion cross-gating module is employed to adaptively fuse fine-grained point features with coarse-grained voxel features. This improves the accuracy of feature fusion across different types and scales, effectively extracting contextual semantic information. Experimental validation on the SemanticKITTI and nuScenes datasets demonstrates that, compared to other methods, the proposed model achieves state-of-the-art segmentation accuracy.

In the future, we will continue to explore more precise and reliable improvements, utilizing model compression and lightweight techniques to reduce computational complexity and enhance algorithm efficiency. Furthermore, we plan to extend the method from public datasets to real-world environments, ensuring optimal performance while significantly improving runtime. This will contribute to advancing the real-time processing applications of large-scale LIDAR point cloud semantic segmentation.

Data availability

The datasets analyzed in the paper are sourced from the SemanticKITTI dataset [http://www.semantic-kitti.org] and the nuScenes dataset [https://www.nuscenes.org].

References

He, Y. et al. Deep learning based 3D segmentation: A survey. arxiv preprint. arxiv:2103.05423 (2021).

Zhao, L. et al. SVASeg: sparse voxel-based attention for 3D LiDAR Point Cloud Semantic Segmentation. Remote Sens. 14, 4471 (2022).

Feng, D. et al. Deep multi-modal object detection and semantic segmentation for autonomous driving: datasets, methods, and challenges. IEEE Trans. Intell. Transp. Syst. 22, 1341–1360 (2020).

Li, Z. et al. A LiDAR-OpenStreetMap matching method for Vehicle Global position initialization based on Boundary Directional feature extraction. IEEE Trans. Intell. Veh. 1–13 (2024).

Liu, H. et al. A hybrid attention semantic segmentation network for unstructured terrain on Mars. Acta Astronaut. 204, 492–499 (2023).

Guo, Z. et al. A survey on deep learning based approaches for scene understanding in autonomous driving. Electronics 10, 471 (2021).

Chen, C. et al. GeoSegNet: Point Cloud Semantic Segmentation via Geometric Encoder-Decoder modeling. Arxiv preprint. (2022). arXiv:2207.06766.

Daniel, M., Sebastian, S. & Voxnet A 3d convolutional neural network for real-time object recognition. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 922–928, (2015). (2015).

Qi, C. R. et al. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 652–660, (2017). (2017).

Qi, C. R. et al. Pointnet++: deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 30, (2017).

Shi, S. et al. PV-RCNN: Point-Voxel Feature Set Abstraction for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 10526–10535, (2020). (2020).

Park, J., Kim, C., Kim, S., Jo, K. & PCSCNet Fast 3D semantic segmentation of LiDAR point cloud for autonomous car using point convolution and sparse convolution network. Expert Syst. Appl. 212, 118815 (2023).

Alonso, I., Riazuelo, L., Montesano, L. & Murillo, A. C. 3D-MiniNet: learning a 2d representation from point clouds for fast and efficient 3d lidar semantic segmentation. IEEE Robot Autom. Lett. 5, 5432–5439 (2020).

Wang, Z. et al. SwinURNet: Hybrid Transformer-CNN Architecture for Real-Time unstructured road segmentation. IEEE Trans. Instrum. Meas. 73, 1–16 (2024).

Massa, K. J. L. & Grobler, H. Adapting projection-based LiDAR semantic segmentation to natural domains. J. Vis. Commun. Image Represent. 100, 104111 (2024).

Yuan, T., Yu, Y. & Wang, X. Semantic segmentation of large-scale point clouds by integrating attention mechanisms and transformer models. Image Vis. Comput. 146, 105019 (2024).

Li, L. et al. Deep learning network for indoor point cloud semantic segmentation with transferability. Automat Constr. 168, 105806 (2024).

Zhan, L., Li, W. & Min, W. FA-ResNet: feature affine residual network for large-scale point cloud segmentation. Int. J. Appl. Earth Obs Geoinf. 118, 103259 (2023).

Thomas, H. et al. Kpconv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision 6411–6420, (2019). (2019).

Zhao, J., Zhou, H., Pan, F. A. & Dual Attention KPConv Network Combined with attention gates for semantic segmentation of ALS Point clouds. IEEE Trans. Geosci. Remote. 62, 1–14 (2024).

Zhu, X. et al. Cylindrical and asymmetrical 3d convolution networks for lidar segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 44, 6807–6822 (2021).

Cheng, T. et al. Learning accurate monocular 3D voxel representation via bilateral Voxel transformer. Image Vis. Comput. 150, 105237 (2024).

Bao, R. et al. GLSNet++: global and local-Stream Feature Fusion for LiDAR Point Cloud Semantic Segmentation using GNN Demixing Block. IEEE Sens. J. 24, 11610–11624 (2024).

Yang, J. et al. MsVFE and V-SIAM: attention-based multi-scale feature interaction and fusion for outdoor LiDAR semantic segmentation. Neurocomputing 584, 127576 (2024).

Dai, H. et al. Large-scale ALS Point Cloud Segmentation via Projection-based context embedding. IEEE Trans. Geosci. Remote. 62, 1–16 (2024).

Li, H. et al. MVPNet: a multi-scale voxel-point adaptive fusion network for point cloud semantic segmentation in urban scenes. Int. J. Appl. Earth Obs Geoinf. 122, 103391 (2023).

Zhang, S. et al. Point and voxel cross perception with lightweight cosformer for large-scale point cloud semantic segmentation. Int. J. Appl. Earth Obs. 131, 103951 (2024).

Xie, G. et al. Point and shifted Voxel MLP for 3D deep learning. Pattern Recogn. Lett. 185, 1–7 (2024).

Cheng, Z. et al. Fusion is Not Enough: Single Modal Attacks on Fusion Models for 3D Object Detection. arxiv preprint arXiv:2304.14614, (2023).

Yang, J. et al. Concrete crack segmentation based on UAV-enabled edge computing. Neurocomputing 485, 233–241 (2022).

Khan, S. D. & Othman, K. M. Indoor scene classification through Dual-Stream Deep Learning: a Framework for Improved Scene understanding in Robotics. Computers 13, 121 (2024).

Han, X. F., He, Z. Y., Chen, J. & Xiao, G. Q. 3CROSSNet: Cross-level Cross-scale Cross-attention Network for Point Cloud representation. IEEE Robot Autom. Lett. 7, 3718–3725 (2022).

Graham, B., Engelcke, M. & Maaten, L. v. d. 3D Semantic Segmentation with Submanifold Sparse Convolutional Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 9224–9232, (2018). (2018).

Wang, W., Liang, J. & Liu, D. Learning equivariant segmentation with instance-unique querying. Adv. Neural Inf. Process. syst. 35, 12826–12840 (2022).

Chen, Y. et al. Focal Sparse Convolutional Networks for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 5418–5427, (2022). (2022).

Wang, W. et al. Visual recognition with deep nearest centroids. arxiv preprint arXiv:2209.07383, (2022).

Yang, D. et al. LFRSNet: a robust light field semantic segmentation network combining contextual and geometric features. Front. Environ. Sci. 10, 996513 (2022).

Liu, D. et al. Densernet: Weakly supervised visual localization using multi-scale feature aggregation. In Proceedings of the AAAI conference on artificial intelligence 6101–6109, (2021).

Khan, S. D., Alarabi, L. & Basalamah, S. An encoder–decoder deep learning framework for building footprints extraction from aerial imagery. Arab. J. Sci. Eng. 48, 1273–1284 (2023).

Qin, Z. et al. Geometric Transformer for Fast and Robust Point Cloud Registration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 11133–11142, (2022). (2022).

Gu, X. et al. SiMaLSTM-SNP: novel semantic relatedness learning model preserving both siamese networks and membrane computing. J. Supercomput. 80, 3382–3411 (2024).

Shi, H., Dao, S. D., Cai, J. & LLMFormer Large Language Model for Open-Vocabulary Semantic Segmentation. Int. J. Comput. Vis. 1–18, (2024).

Behley, J. et al. Semantickitti: A dataset for semantic scene understanding of lidar sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision 9297–9307, (2019). (2019).

Caesar, H. et al. nuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 11618–11628, (2020). (2020).

Cortinhal, T., Tzelepis, G., Aksoy, E. E. & Salsanext Fast, uncertainty-aware semantic segmentation of lidar point clouds for autonomous driving. arxiv preprint. arXiv:2003.03653 (2024).

Zhao, Y. et al. A large-scale point cloud semantic segmentation network via local dual features and global correlations. Computers Graphics. 111, 133–144 (2023).

Wen, S., Li, P. & Zhang, H. Hybrid Cross-Transformer-KPConv for Point Cloud Segmentation. IEEE Signal. Process. Lett. 31, 126–130 (2023).

Liu, J. et al. Mars3d: A plug-and-play motion-aware model for semantic segmentation on multi-scan 3d point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 9372–9381, (2023). (2023).

Li, E., Casas, S. & Urtasun, R. Memoryseg: Online lidar semantic segmentation with a latent memory. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 745–754, (2023). (2023).

Zhang, F., Fang, J., Wah, B. & Torr, P. Deep FusionNet for Point Cloud Semantic Segmentation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference 644–663, (2020).

Milioto, A., Vizzo, I., Behley, J. & Stachniss, C. RangeNet ++: Fast and Accurate LiDAR Semantic Segmentation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 4213–4220, (2019). (2019).

Zhang, Y. et al. PolarNet: An Improved Grid Representation for Online LiDAR Point Clouds Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 9598–9607, (2020). (2020).

Sun, X. et al. LiDAR Point clouds Semantic Segmentation in Autonomous driving based on asymmetrical convolution. Electronics 24, 4926 (2023).

Liang, J. et al. Clusterfomer: clustering as a universal visual learner. Adv. Neural Inf. Proces s syst., 36, (2024).

Xu, C. et al. Squeeze-segv3: Spatially-adaptive convolution for efficient point-cloud segmentation. arxiv preprint. arXiv: 01803 (2020). (2004).

Acknowledgements

This work was supported by the Jilin Province Science and Technology Development Plan Project under Grant (No. 20210201021GX).

Author information

Authors and Affiliations

Contributions

Y.X.B.: conceptualization, methodology, first draft writing, software. P.L.: methodology, validation, writing, criticism and editing. T.Y.Z.: reviewing and editing. J.L.S.: reviewing and editing. C.X.W.: reviewing and editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Bi, Y., Liu, P., Zhang, T. et al. Multi-scale sparse convolution and point convolution adaptive fusion point cloud semantic segmentation method. Sci Rep 15, 4372 (2025). https://doi.org/10.1038/s41598-025-88905-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-88905-5