Abstract

In credit scoring, data often has class-imbalanced problems. However, traditional cost-sensitive learning methods rarely consider the varying costs among samples. Moreover, previous studies have limitations, such as the lack of fit to real-world business needs and limited model interpretability. To address these issues, this paper proposes a novel example-dependent cost-sensitive learning based selective deep ensemble (ECS-SDE) model for customer credit scoring, which integrates example-dependent cost-sensitive learning with the interpretable TabNet (attentive interpretable tabular learning) and GMDH (group method of data handling) deep neural networks. Specifically, we use TabNet, which excels in handling tabular data, as the base classifier and optimize its performance on imbalanced data with an example-dependent cost loss function. Next, we design a GMDH based on an example-dependent cost-sensitive symmetric criterion to selectively deep integrate the base classifiers. This approach reduces the redundancy of base models in traditional ensemble strategies and enhances classification performance. Experimental results show that the ECS-SDE model outperforms six cost-sensitive models and five advanced deep ensemble models in overall performance for credit scoring. It shows significant advantages in the BS+, Save, and AUC metrics on four datasets. Furthermore, the ECS-SDE model provides strong interpretability, and detailed analysis reveals the key roles of various features in credit scoring.

Similar content being viewed by others

Introduction

Global economic integration has created a more complex environment for financial institutions1. In particular, the rise in financial derivatives and consumer loans has increased risks for financial institutions2. Credit risk, arising from borrower defaults, is a primary concern for financial institutions3. While it is difficult to accurately predict whether a borrower will default in the future, effective credit risk scoring can significantly reduce potential default losses for financial institutions4. Thus, the identification of suitable measures to mitigate losses incurred by customer defaults has emerged as a critical concern in the financial industry.

Customer credit scoring is an effective tool for evaluating borrowers’ credit risk. Credit scoring is commonly regarded as a binary classification task5,6,7, which classifies borrowers into two categories: “good credit” or “poor credit.” Most of the currently widely used credit scoring models are based on cost-insensitive learning methods, which aim to minimize the number of misclassifications while assuming that the cost of all misclassifications is the same8. However, this assumption does not fully consider the actual business objectives of financial institutions, which are to minimize operating costs9. For financial institutions, reducing the potential costs associated with misclassification is often more important than merely improving classification accuracy. As a result, cost-sensitive learning has emerged, aiming to minimize total classification costs by balancing management expenses and loss expenses.

Currently, many studies have applied cost-sensitive learning methods to credit scoring10, but most methods assume that the classification cost for each class (e.g., good credit vs. poor credit) is constant, which is referred to as class-dependent cost-sensitive (CCS) learning11. However, the limitation of CCS is that it only focuses on the misclassification cost between different classes and primarily aims to improve the classification performance of the model, neglecting the need for cost minimization in the actual business operations of financial institutions12. In real-world customer credit scoring scenarios, the economic loss to financial institutions from lending to bad customers varies, because customers have different credit limits and economic conditions13. To address this issue, researchers have proposed example-dependent cost-sensitive (ECS) learning. Studies have shown that, compared to CCS, ECS methods demonstrate better performance in customer credit scoring14. This is because ECS methods account for cost differences between classes as well as between samples. In customer credit scoring, ECS models can accurately estimate the economic loss caused by misclassification, taking into account the varying credit conditions and economic situations of different customers. This helps better meet the needs of financial institutions and enhances the economic benefits of credit scoring.

Lenarcik and Piasta15 first introduced the concept of ECS while improving the probabilistic rough set al.gorithm. Based on the stage when costs are introduced, ECS methods can be divided into three categories: introducing example-dependent costs before, during, and after model training8,16. (1) Example-dependent costs introduced in pre-training methods involve adjusting sample weights according to their misclassification costs. Common methods include cost-proportionate rejection sampling (CPRS)17 and cost-proportionate over-sampling (CPOS)18. CPRS retains or rejects samples based on a probability proportional to their misclassification cost, while CPOS creates a new dataset by duplicating samples, with the frequency of duplication determined by their misclassification cost. (2) Example-dependent costs introduced during the training phase modify the loss function to directly optimize model performance. Typical models include ECS logistic regression (LR)19, ECS decision trees (DT)8,9, and ECS support vector machines20. (3) Example-dependent costs introduced after the training phase primarily employ a cost-sensitive Bayesian minimum risk approach21,22. This approach combines the predicted probabilities from base classifiers with the example-dependent costs to minimize the overall expected risk. However, before-training approaches, which rely on the prior distribution of the training data, may lead to data bias or reduced model generalization21. After-training methods, in turn, depend on the base classifiers, and if they fail to effectively capture cost-sensitive information during training, optimization may be limited. In contrast, by incorporating the ECS mechanism during training, the model can more directly optimize the cost-sensitive objective, thereby improving its focus on high-cost samples. Therefore, this paper studies the ECS method that introduces example-dependent costs during the training phase.

Most of the above studies focus on improving a single classification model. However, single models are prone to overfitting, which can affect the model’s generalization ability. To solve this problem, researchers have begun to enhance the performance of ECS models through ensemble learning. For example, Bahnsen et al.23 proposed an ECS classification framework that combines ECS decision trees (CSDT) using four different ensemble methods: random forest (RF), bagging, and their variants, random patches, and pasting. The results showed that the CSDT model with the RP ensemble method produced the best performance on five datasets across four applications, including credit card fraud detection, customer churn prediction, credit scoring, and marketing. Zelenkov24 used DT as base classifiers and introduced the ECS method into the AdaBoost model using three different methods: inside the exponent, outside the exponent, and both inside and outside the exponent, constructing an ECS AdaBoost ensemble model. Experiments showed that this model outperformed other ECS models on five datasets in banking marketing and insurance fraud domains. Bhargava et al.25 proposed an ECS stacking ensemble framework for predicting potential tax defaulters. This framework consisted of two stages: the first stage-trained multiple cost-insensitive classifiers, and the second stage used CSDT, RF, artificial neural networks (ANN), and a bagging ensemble classifier based on CSDT as meta-models. The outputs of the first-stage models were used as inputs to train the meta-models. Experimental results showed that this framework not only outperformed traditional ECS classifiers but also significantly reduced costs.

In recent years, deep neural networks (DNN)26,27,28,29,30,31 have demonstrated outstanding performance in various fields, showing significant potential in credit-scoring tasks. Mehta et al.32 proposed an ECS deep neural network (ECS-DNN) by modifying the loss function to incorporate ECS. Experimental results indicated that this model had significant advantages in terms of cost savings. However, traditional DNN models typically require extensive data preprocessing when dealing with complex tabular data. In contrast, the attentive interpretable tabular deep neural network (TabNet)33 is specifically designed for tabular data. It can be applied directly to raw data and demonstrates high prediction accuracy. As a result, researchers have attempted to introduce TabNet to credit-scoring tasks. For instance, Cai et al.34 proposed a deep ensemble model for credit card fraud detection, which used TabNet as the base classifier and XGBoost for the ensemble. Experimental results showed that the proposed model outperformed the comparative models across multiple evaluation metrics. Zhang et al.35 proposed a TabNet-based credit fraud detection model, which significantly outperformed traditional XGBoost and Naive Bayes algorithms. Lee et al.36 used various ensemble techniques such as LightGBM, XGBoost, RF, and CatBoos to integrate TabNets, and successfully applied it to credit card default prediction. Despite the significant success of TabNet in credit scoring tasks, most existing studies focus on performance enhancement and do not consider ECS. In addition, model interpretability is particularly important in financial credit scoring. Since TabNet combines the interpretability of tree-based models with the learning ability of DNNs, it has the potential to play a greater role in this field.

However, after careful analysis, we find that the existing studies still have the following four limitations: (1) Most cost-sensitive learning-based deep learning models still adopt CCS methods, and research on ECS techniques is relatively limited14. Only one study32 has applied ECS in single DNN modeling; (2) Existing ECS ensemble models for credit scoring integrate traditional machine learning-based base classifiers, and no studies have explored ECS deep ensemble models that use deep learning models as base classifiers. In addition, existing ensemble models typically combine the predictions of all base classifiers, which may lead to redundancy. Using deep learning models as base classifiers and selecting an appropriate model subset for the ensemble, i.e., selective deep ensemble, may improve model performance; (3) Existing models that introduce the ECS mechanism during training typically adjust the loss function to account for example-dependent cost. While this adjustment reduces misclassification costs, it may compromise performance on traditional accuracy-based metrics; (4) Current deep learning algorithms considering ECS in credit scoring are black-box models, with low transparency and poor interpretability, limiting their practical application.

To address the above limitations, this paper proposes an example-dependent cost-sensitive learning based selective deep ensemble (ECS-SDE) model for customer credit scoring. First, an example-dependent cost matrix is constructed for the raw data, and the processed dataset is randomly sampled several times to generate ECS training subsets. Second, we construct example-dependent cost-sensitive TabNet (ECS TabNet) base classifiers and train multiple differentiated base classifiers using the training subsets. Finally, we propose an example-dependent cost-sensitive GMDH (ECS GMDH) neural network that uses the selection mechanism of GMDH for the selective deep ensemble. To verify the performance of the proposed model, this paper introduces five evaluation metrics and conducts empirical analysis on four datasets. The experimental results show that, compared to three ECS models, three CCS models, and five advanced deep ensemble models, the ECS-SDE model demonstrates better overall performance in customer credit scoring and has stronger model interpretability.

The theoretical contributions of this paper are as follows: (1) We are the first to apply ECS techniques in constructing deep ensemble models for customer credit scoring by combining the interpretable TabNet and GMDH deep neural networks; (2) We introduce ECS technique to the TabNet model, proposing a new TabNet deep learning model. This model is trained by embedding an enhanced ECS-based loss function, which significantly improves its performance when dealing with imbalanced data; (3) We propose a novel example-dependent cost-sensitive symmetric criterion (ECS-SC) for the GMDH, which accounts for the cost differences between samples and aims to minimize the total cost. The ECS-SC overcomes the limitation of traditional criteria that assign equal misclassification costs to all samples, making it more feasible for the practical needs of credit scoring. Additionally, we develop an ECS-SC-based GMDH model for selective deep ensemble learning. This method resolves base model redundancy in traditional ensemble strategies, enhancing classification performance; (4) We conduct a comparative analysis using four credit-scoring datasets, comparing three ECS models, three CCS models, and five advanced deep ensemble models. The results show that the ECS-SDE model achieves superior overall performance in customer credit scoring and offers strong interpretability.

The remainder of this paper is structured as follows. Section 2 briefly reviews the relevant theoretical foundations. Section 3 provides a detailed description of the basic concept and modeling steps of the ECS-SDE model. In Sect. 4, we present the experimental design, including dataset information, experimental setup, and model evaluation metrics, and we analyze the experimental results. Finally, in Sect. 5, we present the conclusions of this paper and suggest possible future research directions.

Related works

Class dependent cost sensitive learning

In the real world, misclassification of different classes may have different consequences. In credit scoring, it is often observed that misclassifying a customer with poor credit as having good credit causes more severe economic losses than misclassifying a customer with good credit as poor credit. Therefore, many studies use CCS methods that assign different costs to the misclassification of each class. Classification costs are represented by a cost matrix, where the elements within the cost matrix are the same for all samples in the same class. Credit scoring can be represented as a binary classification problem, where samples are either in the negative class or in the positive class. To quantify the cost of misclassification, a cost matrix17 is used, as shown in Table 1:

where \({C_{TP}}\) is the cost of correctly classifying a positive sample as positive. \({C_{FP}}\) is the cost of incorrectly classifying a negative sample as positive. \({C_{FN}}\) is the cost of wrongly classifying a positive sample as negative. \({C_{TN}}\) is the cost of correctly classifying a negative sample as negative.

In recent years, CCS methods have become one of the main approaches to address class-imbalanced problems. Many researchers have combined CCS techniques with deep learning to solve the challenges of classification models on imbalanced datasets. For example, Yotsawat et al.10 proposed a class-dependent cost-sensitive neural network ensemble model (CSNNE). This model generated multiple differentiated cost-sensitive neural networks using different class weights and ensembled them through majority voting. Experiments showed that CSNNE was suitable for handling imbalanced datasets and demonstrated good performance on several credit-scoring datasets. Geng and Luo37 proposed an adaptive class-dependent cost-sensitive convolutional neural network ensemble model (CSCNN). This model adaptively updated the weights of misclassification costs based on the imbalance distribution of the entire training set and local training subsets. Experimental results showed that CSCNN performed well on all evaluation metrics. Similarly, the class-dependent cost-sensitive convolutional neural network model (CCS-CNN) proposed by Vimala et al.38 (2024) enhances the classification performance of minority-class samples by adjusting the classifier’s decision threshold, achieving good classification results on imbalanced datasets. Experimental results showed that the CCS-CNN method outperformed existing methods across multiple metrics.

TabNet deep neural network

TabNet33 is a deep neural network designed for tabular data, proposed by Google in 2021. It combines the interpretability of tree models with the high predictive accuracy of DNNs. TabNet uses an end-to-end learning approach to directly learn features from raw data, reducing preprocessing time. It also provides feature importance through a sequential attention mechanism, enhancing model interpretability. TabNet has been widely applied in fields such as healthcare, insurance, and environmental studies5,39,40,41.

TabNet constructs a sequential multi-step neural network architecture, which mainly consists of a feature transformer module and an attention transformer module. The input for each decision step is a d-dimensional feature matrix \(a \in {{\mathbb{R}}^d}\). First, the initial features pass through a batch normalization (BN) layer before entering the feature transformation module. This module is composed of a fully connected layer, a BN layer, and a gated linear unit layer, which are used sequentially to process the features into more useful representations. In addition, to accelerate network convergence and stabilize the training process, \(momentum\) is introduced as a hyperparameter in the BN layer. This ensures that the mean and variance in the BN layer update smoothly, thereby reducing instability caused by batch size data fluctuations. In each decision step j, the features \({a_{j - 1}}\) processed from the previous step are input into the current step. After processing through the feature transformation module \({f_j}( \cdot )\), the output is split into two parts, which can be represented as follows: \([{d_j},{a_j}]={f_j}({M_{j - 1}} \cdot {a_{j - 1}})\), where \({M_{j - 1}}\) is the mask obtained from the previous step, \({d_j} \in {{\mathbb{R}}^{{N_d}}}\) is the feature representation of the decision layer, which is output by the feature transformation module, \({N_d}\) is the dimension of the decision layer features, which are used to generate the final prediction result. On the other hand, \({a_j} \in {{\mathbb{R}}^{{N_a}}}\) is the feature representation used for feature selection in the feature attention module, where \({N_a}\) is the dimension of the attention layer features. The feature attention module is used to select important features. Let \({h_j}\) is the combination of a fully connected layer and a BN layer. This combination performs a linear transformation and normalization on the \({a_{j - 1}}\) to obtain the intermediate representation \({h_j}({a_{j - 1}})\). The attention module uses the prior weight \({P_{j - 1}}\) from the previous step and the current step \({h_j}({a_{j - 1}})\), to obtain a sparse mask \({M_j} \in {{\mathbb{R}}^d}\) through the \(Sparsemax\) activation function:

where \(Sparsemax\) is a sparse activation function used to select a small number of important features. The prior weights \({P_{j - 1}}\) control the frequency with which the model selects features. These weights are calculated using the previous masks and a relaxation factor gamma as follows: \({P_{j - 1}}=\prod\nolimits_{{k=1}}^{{j - 1}} {(gamma - {M_k})}\), where k is the step number \((k=1,2,\ldots,j - 1)\), and \(gamma\) is a hyperparameter that controls the flexibility of feature selection. When \(gamma=1\), the model enforces the use of a feature in each step. As \(gamma\) increases, the likelihood of reusing the same feature across multiple steps increases, reducing the constraints on feature selection at each step, and thereby enhancing the model’s flexibility. Then, the new mask \({M_j}\) and the new feature \({a_j}\) generated at the j-th step will be passed to the next decision step. This process is repeated until the preset number of steps \({N_{step}}\) is reached or a stopping condition is met.

Based on the feature masks at each step, the local importance score for each feature can be obtained. The local importance score \({S_{i,j}}\) for the i -th feature at the j -th step is expressed as: \({S_{i,j}}=\sum\nolimits_{{j=1}}^{{{N_{step}}}} {{\eta _j}{M_{i,j}}}\). where \({M_{i,j}}\) is the mask value for the i-th feature at the j -th step, and \({\eta _j}\) is the weight factor for the j -th step. Finally, by aggregating the masks and weight factors from all steps, the global importance score for the i-th feature is obtained:

at the same time, by aggregating the outputs of all decision layers, the final decision output \({d_{final}}\) is expressed as: \({d_{final}}=\sum\nolimits_{{j=1}}^{{{N_{step}}}} {ReLU({d_j})}\), where \(ReLU\) is the activation function used to process the decision layer outputs. Finally, the aggregated decision layer output \({d_{final}}\) is mapped to the model’s output space through a fully connected layer to generate the final prediction result. In binary classification problems, TabNet typically uses the binary cross-entropy loss function for training, which is expressed as:

where y is the true value, and \(\hat {y}\) is the predicted value.

GMDH neural network

GMDH neural network is a self-organizing inductive modeling technique42, commonly used for modeling and identifying complex systems. Let \(X=({x_1},{x_2},.,{x_n})\) and y represent the input and output variables, respectively. The modeling process of GMDH is as follows:

First, the input dataset \({D_{input}}\) is randomly divided into a learning set A and a selection set B. Typically, a discrete Kolmogorov-Gabor (K-G) polynomial is used to establish the general relationship between the input and output variables:

Where \({w_0}\), \({w_i}\), \({w_{ij}}\)\ldots. are the weights. Next, an initial input model set \(V=\{ {v_1}={x_1},{v_2}={x_2},.,{v_n}={x_n}\}\) is created. These initial models in V are then combined pairwise using a transfer function \(f( \cdot )\), generating the first layer of \({n_1}=C_{n}^{2}\) intermediate candidate models in total. Then, the ordinary least squares (OLS) method is used to estimate the parameters of candidate models on set A, and the external criterion values of candidate models are calculated on set B. The candidate models are ranked based on these criterion values, and the optimal \({F_1}( \leqslant C_{n}^{2})\) models are selected. To avoid losing important information too early, the initial models are included in the intermediate candidate model set for each layer43. That is, the selected candidate models are combined with the n initial models and once again pairwise combined using the transfer function, generating the second layer of \({F_2}=C_{{{F_1}+n}}^{2}\) candidate models. From this, the optimal \({F_2}\) models are selected. Finally, this process is repeated layer by layer to generate intermediate candidate models. The process continues until a termination criterion is met, i.e., the external criterion value initially decreases and then increases as the complexity of the candidate models increases44. When the external criterion value reaches its minimum, the optimal complexity model \({Y^*}\) with m layers is obtained. The structure of the GMDH network is shown in Fig. 1.

The most commonly used external criterion for GMDH is the symmetric regularity criterion (SRC). This criterion primarily evaluates the fitting accuracy of the model. Its mathematical expression is as follows:

where \({y_B}\) is the actual output of set B, and \({\hat {y}_B}(A)\) is the predicted output of set B by the model constructed on set A. Similarly, \({y_A}\) is the actual output of set A, and \({\hat {y}_A}(B)\) is the predicted output of set A by the model constructed on set B. \({\Delta ^2}(A)\) is the error on set B by the model constructed on set A, \({\Delta ^2}(B)\) is the error on set A by the model constructed on set B, and \({d^2}({D_{input}})\) is the total error on \({D_{input}}\).

However, in the SRC, all samples are assigned the same misclassification cost. In credit scoring, in contrast, different classes often have different misclassification costs. Therefore, in our previous research45, we combined CCS with SRC and proposed a class-dependent cost-sensitive symmetric regularity criterion (CS-SRC):

where \({n_{11}}\) and \({n_{12}}\) are the numbers of positive and negative samples in subset B, \({n_{21}}\) and \({n_{22}}\) are the numbers of positive and negative samples in set A, respectively. Assume that the misclassification cost for each negative sample is 1, while the misclassification cost for positive samples is \(\varepsilon\). \(\operatorname{Cost} (A)\) is the total misclassification cost of set B by the model constructed on set A, \(\operatorname{Cost} (B)\) is the total misclassification cost of set A by the model constructed on set B, \(\operatorname{Cost} ({D_{input}})\) is the total misclassification cost on set \({D_{input}}\).

Methods

Basic framework

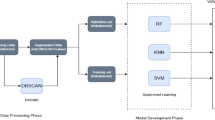

Existing credit scoring models often use traditional CCS techniques. However, these methods fail to account for cost differences between samples and rarely consider practical business needs or model interpretability. To address these issues, this paper proposes an ECS-SDE model for customer credit scoring.

Let \(D=\{ ({x_i},{y_i})\} _{{i=1}}^{N}\) be a dataset containing N samples, where \({x_i} \in {{\mathbb{R}}^n}\) is an n-dimensional vector and \({y_i} \in \{ 0,1\}\) is the class label of \({x_i}\). \({D_{maj}}\) and \({D_{min}}\) are the majority and minority class samples in D, respectively. The modeling process of the ECS-SDE model mainly consists of three phases:

Phase I: construction of the example-dependent cost matrix and ECS training subset

First, based on the example-dependent cost matrix in the credit scoring domain, this paper calculates the cost matrix \({C_i}\) for each sample \({x_i}\). Next, the cost matrix is added to the dataset D to create the new dataset \({D^\prime }=\{ ({x_i},{C_i},{y_i})\} _{{i=1}}^{N}\). Then, \({D^\prime }\) is randomly divided into a training set \({D_{train}}\) and a test set \({D_{test}}\). Finally, several random samplings are performed on \({D_{train}}\) to generate the ECS training subset \({D_{sub}}\).

Phase II: training of ECS TabNet base classifiers

First, this paper constructs the ECS TabNet base classifier by embedding a new loss function. Then, M differentiated ECS TabNet base classifiers, denoted as \(\{ {T_1},{T_2},\ldots,{T_M}\}\), are trained on the ECS training subset \({D_{sub}}\). The prediction result of the j-th base classifier \({T_j}\) on the j-th ECS training subset is denoted as \(\hat {y}_{j}^{{^{\prime }}}=\{ \hat {y}_{j}^{{^{\prime }}}\} _{{i=1}}^{N}\)\((j=1,2,\ldots,M)\). Thus, the prediction results of all base classifiers on the training subsets are \(\hat {y}_{1}^{{^{\prime }}}\), \(\hat {y}_{2}^{{^{\prime }}}\),\ldots,\(\hat {y}_{M}^{{^{\prime }}}\).

Phase III: design of an ECS GMDH for the selective deep ensemble

First, this paper proposes a new ECS-SC external criterion to construct the ECS GMDH neural network. Then, the ECS GMDH is used to perform a selective deep ensemble on the prediction results of the M ECS TabNet base classifiers, ultimately yielding the credit-scoring result. The framework of ECS-SDE is shown in Fig. 2.

Construction of the example dependent cost matrix and ECS training subset

In ECS learning, different samples correspond to different cost matrices. For customer credit scoring, this paper uses the example-dependent cost matrix proposed by Bahnsen et al.19 (Table 2) and applies the corresponding calculation formula (Eq. 9) to derive the cost matrix for all samples.

where \(\operatorname{Cost} ({y_i},{\hat {y}_i})={y_i}({\hat {y}_i}{C_{T{P_i}}}+(1 - {\hat {y}_i}){C_{F{N_i}}})+(1 - {y_i})({\hat {y}_i}{C_{F{P_i}}}+(1 - {\hat {y}_i}){C_{T{N_i}}})\), where \({y_i}\) is the actual output of a sample \({x_i}\), and \({\hat {y}_i}\) is the predicted output of a sample \({x_i}\), \({\text{Cost}}({D^\prime })\) is the total misclassification cost for all samples. When \({y_i}=1\), the cost is \({\hat {y}_i}{C_{T{P_i}}}+(1 - {\hat {y}_i}){C_{F{N_i}}}\). When \({y_i}=0\), the cost is \({\hat {y}_i}{C_{F{P_i}}}+(1 - {\hat {y}_i}){C_{T{N_i}}}\).

Next, the dataset \({D^\prime }\) is randomly split into a training set \({D_{train}}\) and a test set \({D_{test}}\). Finally, multiple random samplings are performed on \({D_{train}}\) to generate ECS training subsets \({D_{sub}}\).

Training of ECS TabNet base classifiers

Traditional TabNet deep neural networks treat all samples equally during training, which may lead to underestimating the importance of minority-class samples, especially in class-imbalanced problems. To address this, we replace the traditional loss function (Eq. 3) with an enhanced example-dependent cost function (Eq. 9), resulting in an improved loss function.

Specifically, to address class imbalance, this paper considers the importance of minority-class samples in credit scoring. According to Elkan18, in credit scoring, misclassification costs for minority-class samples could be up to 5 times higher than that for majority-class samples. Therefore, the new loss function calculates example-dependent costs separately for both classes, multiplying the cost for minority-class samples by 5 to place greater emphasis on them during training. The new loss function is as follows:

where \([C_{{_{{F{P_i}}}}}^{{maj}},C_{{_{{_{{F{N_i}}}}}}}^{{maj}},C_{{_{{_{{T{P_i}}}}}}}^{{maj}},C_{{_{{_{{T{N_i}}}}}}}^{{maj}}]\) is the cost matrix for majority class samples, and \({\operatorname{Cost} _{maj}}( \cdot )\) is the misclassification cost generated by majority class samples. Similarly, \([C_{{_{{F{P_i}}}}}^{{min}},C_{{_{{_{{F{N_i}}}}}}}^{{min}},C_{{_{{_{{T{P_i}}}}}}}^{{min}},C_{{_{{_{{T{N_i}}}}}}}^{{min}}]\) is the cost matrix for minority class samples, and \({\operatorname{Cost} _{min}}( \cdot )\) is the misclassification cost generated by minority class samples. \(Los{s_{cost}}( \cdot )\) is the total misclassification cost.

Next, we build the ECS TabNet classifier by embedding the new loss function. We train on M ECS training subsets \({D_{sub}}\) to generate M differentiated ECS TabNets, denoted as \(\{ {T_1},{T_2},\ldots,{T_M}\}\). Let the prediction results of the j-th base classifier \({T_j}\) on the j -th training subset be \(\hat {y}_{j}^{\prime }=\{ \hat {y}_{j}^{\prime }\} _{{i=1}}^{N}\)\((j=1,2,\ldots,M)\). Thus, the prediction results of all base classifiers on training subsets are denoted as \(\hat {y}_{1}^{\prime }\), \(\hat {y}_{2}^{\prime }\),\ldots,\(\hat {y}_{M}^{\prime }\).

Design of an ECS GMDH for selective deep ensemble

First, let the predicted outputs of the base classifiers be \({\hat {Y}^\prime }=(\hat {y}_{1}^{\prime },\hat {y}_{2}^{\prime },\ldots,\hat {y}_{M}^{\prime })\) and the actual outputs be y, which will serve as the input and output vectors for the ECS GMDH neural network, respectively. This forms a new input dataset \({D_{input}}=({\hat {Y}^\prime },y)\). Then, \({D_{input}}\) is randomly split into a model learning set A and a model selection set B. Next, an initial model set \(V=\{ {v_1}=\hat {y}_{1}^{\prime },{v_2}=\hat {y}_{2}^{\prime },\ldots,{v_n}=\hat {y}_{n}^{\prime }\}\) is created. All initial models in the set V are pairwise combined using the transfer function \(f({v_i},{v_j})={w_0}+{w_1}{v_i}+{w_2}{v_j}+{w_3}{v_i}{v_j}\) (for\(i,j=1,2,\ldots,M\) with \(i \ne j\)) to generate the first layer of intermediate candidate models. It is important to note that in the real world of credit scoring, due to operational cost constraints, companies can only manage a portion of customers that are most likely to reduce operational costs. Therefore, the question for companies is how much money can be saved with the help of the model.

To achieve this goal, inspired by previous research45, we introduce the example-dependent cost function (Eq. 9) into the external criteria of GMDH and propose a novel criterion, the example-dependent cost-sensitive symmetric criterion (ECS-SC). Traditional SRC criterion selects models by minimizing overall classification error, assuming equal misclassification costs for all samples. In contrast, ECS-SC accounts for cost differences between samples and optimizes total cost, better aligning with the practical needs of financial institutions. Specifically, ECS-SC calculates the example-dependent costs for majority and minority-class samples separately, assigning higher weights to minority-class samples (we still set the weight to 5) to emphasize their importance in model selection. The ECS-SC is defined as follows:

where \(\:{y}_{B}^{i}\) is the actual output of the i-th sample \({x_i}\) on set B, and \(\:{\widehat{y}}_{B}^{i}\left(A\right)\) is the predicted output of the i-th sample \({x_i}\) on set B by the model constructed on set A. Similarly, \(y_{A}^{i}\) is the actual output of the i-th sample \({x_i}\) on set A, and \(\hat {y}_{A}^{i}(B)\) is the predicted output of the i-th sample \({x_i}\) on set A by the model constructed on set B. \({A_{maj}}\)and \({A_{min}}\) are the majority and minority class samples in set A, respectively, and \({B_{maj}}\) and \({B_{min}}\) are the majority and minority class samples in set B, respectively. \({\operatorname{Cost} _{maj}}(A)\), \({\operatorname{Cost} _{min}}(A)\) and \(\operatorname{Cost} (A)\) are the misclassification costs of majority class samples, minority class samples, and the overall misclassification cost, respectively, when the model constructed in set A is applied to set B. \({\operatorname{Cost} _{maj}}(B)\), \({\operatorname{Cost} _{min}}(B)\) and \(\operatorname{Cost} (B)\) represent the misclassification costs of majority class samples, minority class samples, and the overall misclassification cost, respectively, when the model constructed in set B is applied to set A.

It should be noted that the traditional GMDH typically uses the OLS method to estimate the parameters of candidate models. However, as the number of layers in the GMDH network increases, the correlations between input variables also increase, which may lead to multicollinearity issues, thereby affecting the model performance40. To address this issue, we introduce an L2 regularization term, which compresses some of the highly correlated parameters to near zero, effectively suppressing model overfitting and mitigating the effects of multicollinearity. The expression is as follows:

where \({J_{LS}}(\hat {w})\) is the sum of squared errors of the model parameters estimated by the OLS method,\(\left\| {\hat {w}} \right\|_{2}^{2}\) is L2 norm, and \(\lambda\) is a constant used to adjust the relative strength between \(JLS(\hat {w})\) and \(\left\| {\hat {w}} \right\|_{2}^{2}\). Specifically, as \(\lambda\) increases, some of the less important model parameters are compressed towards zero, leading the model to produce sparser solutions, thereby reducing the model complexity.

Then, based on Eq. 13, the ECS-SC external criterion value for the first layer of intermediate candidate models is calculated and ranked. The top \({F_1}\) models with the best external criterion values are selected. Next, the selected \({F_1}\) candidate models, along with the initial models, are combined again using the transfer function \(f( \cdot )\) in pairs to generate the next layer of candidate models. Finally, the process is repeated until the ECS-SC external criterion value reaches its minimum, obtaining the optimal complexity model \({Y^*}\).

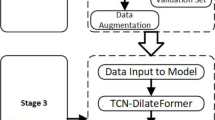

Modeling process

The detailed modeling process of the ECS-SDE model is as follows:

Phase I: construction of the example-dependent cost matrix and ECS training subset

Step 1: For each sample \(\:{x}_{i}\), we calculate its corresponding cost matrix \({C_i}=[{C_{F{P_i}}},{C_{F{N_i}}},{C_{T{P_i}}},{C_{T{N_i}}}]\)and expand the original dataset \(D=\{ ({x_i},{y_i})\} _{{i=1}}^{N}\) into a new dataset \({D^\prime }=\{ ({x_i},{C_i},{y_i})\} _{{i=1}}^{N}\). Then, we randomly divide \(\:{D}^{{\prime\:}}\) into a training set \({D_{train}}=\{ ({x_{train}},{C_{train}},{y_{train}})\}\) and a test set \({D_{test}}=\{ ({x_{test}},{C_{test}},{y_{test}})\}\);

Step 2: Multiple random samplings are performed on the training set \({D_{train}}\) to generate M ECS training subsets \({D_{sub}}\);

Phase II: training of ECS TabNet base classifiers

Step 3: ECS TabNet base classifiers are constructed, and M ECS training subsets \({D_{sub}}\) are used for training. This results in M differentiated ECS TabNets, denoted as \(\{ {T_1},{T_2},\ldots,{T_M}\}\);

Phase III: design of an ECS GMDH for selective deep ensemble

Step 4: ECS-SC external criterion is constructed, and the ECS GMDH neural network is built based on this criterion;

Step 5: Prediction results of the M ECS TabNets are taken as inputs for the ECS GMD. The ECS-SC external criterion values for each layer of candidate models are calculated based on Eq. 13;

Step 6: The process continues until the external criterion value reaches its minimum, obtaining the optimal complexity model \({Y^*}\) and achieving selective deep ensemble predictions.

The modeling flowchart of the ECS-SDE model is shown in Fig. 3.

Results and analysis

This section presents comparative experiments to evaluate the effectiveness of the proposed ECS-SDE model. Section 4.1 to 4.3 introduce the datasets, experimental settings, and evaluation metrics. In Sect. 4.4, the ECS-SDE model’s performance is compared with three ECS models, three CCS models, and five deep ensemble models. Section 4.5 compares the computation time of ECS-SDE with five deep ensemble models. Section 4.6 presents ablation experiments to assess the impact of ECS TabNet and ECS GMDH on model performance. Section 4.7 analyzes the interpretability of the ECS-SDE model, and Sect. 4.8 conducts sensitivity analysis on ECS TabNet parameters, the number of base classifiers, and ECS GMDH parameters.

Datasets

This paper evaluates the model using four credit-scoring datasets, including the IEEE-CIS Fraud Detection (IEEE) dataset from the Kaggle competition. This dataset, which aims to predict online transaction fraud, contains 151 features and 1 binary label. The data is divided into transaction and identity information, covering aspects such as transaction amount, payment card details, and digital signatures. The Give Me Some Credit (GMSC) dataset, also from Kaggle, is used to predict the likelihood of a customer experiencing financial distress within two years, helping determine loan issuance. It contains 10 features and 1 binary label, with key features including credit utilization rate, debt ratio, and monthly income et al. The Default of Credit Card Clients (DCCC) dataset, sourced from the UCI public database, records customer credit card payment history in Taiwan from April to September 2005. It contains 23 features and 1 binary label, with features related to credit limit, age, repayment history, and more. The 2009 Pacific-Asia Knowledge Discovery and Data Mining Conference (PAKDD) dataset includes credit data from a Brazilian financial institution, collected between 2003 and 2008. It contains 20 features and 1 binary label, with attributes such as customer age, personal net income, gender et al. Table 3 provides the basic information of the four credit-scoring datasets, where the imbalanced ratio (IR) is defined as the ratio of majority class (good credit) samples to minority class (bad credit) samples. A higher IR value indicates a greater imbalance in the class distribution. The datasets used in this paper were preprocessed as described in the literature14,47. The “Data availability” section at the end of the paper provides details on how to obtain these datasets, with clickable links for accessing specific acquisition information. The GMSC dataset is used as a case study, and a detailed feature description is included in Appendix A for a deeper analysis of the model’s interpretability.

Experimental setup

In this experiment, we used four credit-scoring datasets, and the following steps were performed for each dataset. First, the augmented dataset \({D^\prime }\) is randomly divided into a training set and a test set in a 6:4 ratio. In the training set, 90% of the samples are used to train the model, and the remaining 10% are used for hyperparameter optimization. To reduce the randomness of the results, we repeat the entire experiment 10 times and calculate the average of the results for subsequent analysis and model performance comparison. Additionally, the credit scoring example-dependent cost matrix is shown in Table 2, where the personal income \(In{c_i}\) can be directly obtained from the dataset, and the debt ratio \(deb{t_i}\) can be indirectly calculated based on information such as income and credit limit in the dataset. Other parameters, such as the market average credit limit \(\bar {C}l\), the average profit margin \(\bar {r}\), and the loan term \({l_i}\), are set based on the research by Bahnsen et al.19.

In the model comparison, this paper compares the proposed ECS-SDE model with other models that use cost-sensitive techniques, including three ECS models and three CCS models. Given that the ECS-SDE model is a deep ensemble framework based on ECS, a review of the literature reveals that the latest advancements in ECS-based models primarily focus on traditional ensemble models and deep learning models. Therefore, the three ECS models selected include: the example-dependent cost-sensitive AdaBoost model using the outside exponent method (ECS-AdaBoost) proposed by Zelenkov17, the example-dependent cost-sensitive deep neural network (ECSDNN) proposed by Mehta et al.32, and the example-dependent cost-sensitive stacking ensemble framework (ECS-Stacking) proposed by Bhargava et al.25. Next, the three CCS models are as follows: the class-dependent cost-sensitive neural network ensemble model (CSNNE) proposed by Yotsawat et al.10 and the class-dependent cost-sensitive convolutional neural network ensemble model (CSCNN) proposed by Geng and Luo37, and the class-dependent cost-sensitive convolutional neural network (CNN) model (CCS-CNN) proposed by Vimala et al.38.

To further evaluate the performance of the ECS-SDE model, this paper compares it with five advanced deep ensemble models: the deep ensemble model based on long short-term memory (LSTM) and gated recurrent unit (GRU) neural networks (LSTM-GRU-ANN) proposed by Forough and Momtazi49, the deep ensemble model based on deep recurrent neural networks (LSTM-GRU-MLP) proposed by Mienye and Sun50, the deep ensemble model based on CNNs and bidirectional long short-term memory (BiLSTM) networks (CNN-BLSTM) proposed by Haghighi and Omranpour51, as well as the deep ensemble models based on CNN and BiLSTM (BiLSTM-CNN), and on CNN, BiLSTM, and Transformer (BiLSTM-Trans-CNN), both proposed by Wang et al.52. The parameter settings for the comparative models are shown in Table 4.

In the parameter setting for the ECS-SDE model, first, for ECS TabNet, this paper refers primarily to the research by Arik and Pfister33. The Adam optimizer is used with a learning rate of 0.006, a batch size of 128, and 70 epochs. The ranges for some of the hyperparameters are shown in Table 5. To solve the data imbalance problem and achieve higher economic benefits, this paper employs a multi-objective optimization algorithm, with cost-saving (Save) and geometric mean indicators as optimization objectives. The optimization is performed using the default multi-objective algorithm from the Optuna library in Python. A sensitivity analysis of the optimal hyperparameter combinations is provided in Sect. 4.8. Then, for the parameter settings of the ECS GMDH neural network, this paper refers to the study by Lemke and Müller53. The maximum number of layers for the network is set to 20, and the data division method is set to random. The reference function form is \(~y={w_0}+{w_1}{x_1}+{w_2}{x_2}+{w_3}{x_1}{x_2}\), with the remaining parameters kept at their default values. Additionally, considering that the ECS GMDH model complexity parameter \(\lambda\) and the number of ECS TabNet base classifiers M have a significant impact on the performance of the ECS-SDE model, this paper conducts a sensitivity analysis of these important parameters in Sect. 4.8. All experiments are run on a Windows 10 × 64 system equipped with an Intel(R) Core(TM) i5 processor. The experiments are conducted in Python 3.7, and the coding implementation uses the deep learning framework PyTorch and the GmdhPy library.

Evaluation metrics

Traditional classification frameworks evaluate models based on statistical metrics, which typically aim to minimize misclassifications under the assumption of equal misclassification costs. However, cost-sensitive classification methods provide a comprehensive evaluation of the model performance, rather than simply aiming for the highest classification accuracy. Therefore, this paper employs two different types of metrics: precision-oriented metrics, which include AUC-PR54, AUC-ROC55, Brier Score− (BS−), and Brier Score+ (BS+)56; and a cost-oriented metric, namely cost savings (Save)19. These five metrics provide a comprehensive evaluation of the model’s performance. The confusion matrix for customer credit scoring is shown in Table 6.

TP represents the number of true positives, FN represents the number of false negatives, FP represents the number of false positives, and TN represents the number of true negatives.

(1) Save: In credit scoring, business needs are typically cost-driven. Therefore, this paper uses the Save metric to evaluate improvements in model performance from a cost-efficiency perspective. The Save metric19 is defined as the cost reduction achieved by using a model compared to not using any model. Specifically, Save assumes that all samples are predicted as the default class with the lowest cost (either 0 or 1), i.e., the baseline cost \({C_{base}}=\hbox{min} \{ C(y,0),C(y,1)\}\). It then calculates the total cost saved by the model’s classification compared to \({C_{base}}\). The formula is as follows:

when the model shows improvement in cost, the Save value lies between \([0,1]\), with the higher value indicating better performance.

(2) AUC-PR: The precision-recall (PR) curve shows the trade-off between precision and recall. Precision is the proportion of true positives among all samples predicted as positive, i.e., precision\(=TP/\left( {TP+FP} \right)\), while recall is the proportion of actual positives correctly identified, i.e., recall\(~=TP/\left( {TP+FN} \right)\). This paper uses the area under the precision-recall curve (AUC-PR)54 to assess the model’s ability to discriminate positive samples, with a higher AUC-PR indicating better performance.

(3) AUC-ROC: The receiver operating characteristic curve (ROC) curve plots the true positive rate (TPR) against the false positive rate (FPR), where the x-axis is the false positive rate FPR\(~=FP/\left( {FP+TN} \right)\), and the y-axis is the true positive rate TPR\(~=TP/\left( {TP+FN} \right)\). It evaluates performance under uncertain class distributions or misclassification costs. The area under the ROC curve (AUC-ROC)55 is used to assess performance, with higher values indicating better results.

(4) BS+: BS+ is defined as the mean squared error of the minority class (positive class) samples, reflecting the model’s calibration for the minority class. It is calculated as follows:

where \(\hat {y}_{i}^{{min}}\) is the predicted probability that sample i belongs to the minority class, \(y_{i}^{{min}}\) is the actual label of the minority-class sample, and \({N_{min}}\) is the number of minority-class samples. Lower BS+ values indicate better calibration for minority-class samples.

(5) BS−: BS− is defined as the mean squared error of the majority class (negative class) samples, indicating the model’s calibration for the majority class. It is calculated as follows:

where \(\hat {y}_{i}^{{maj}}\) is the predicted probability that sample i belongs to the majority class, \(y_{i}^{{maj}}\) is the actual label of the majority-class sample, and \({N_{maj}}\) is the number of majority-class samples. Lower BS− values indicate better calibration for the majority-class samples.

Comparison experiments

Comparison of different cost sensitive models

This section compares the ECS-SDE model with three ECS models and three CCS models in terms of credit scoring performance. Table 7 shows the performance of the ECS-SDE model and six comparative models in the four datasets. In the table, bold indicates the top-performing model in each row, and the number in brackets indicates the model’s ranking. The smaller the number, the better the model performance in credit scoring. In addition, the area in parentheses below the metric values represents the 95% confidence interval57, which reflects the stability of the model’s performance.

The results in Table 7 show that the ECS-SDE model consistently outperforms the other models, particularly excelling in the cost savings (Save) metric across all four datasets. Notably, the ECS-SDE model shows a 45.448% improvement in cost savings on the GMSC dataset and a 51.258% improvement on the IEEE dataset. This highlights the model’s effectiveness in enhancing cost efficiency, optimizing resource allocation, and minimizing financial losses by accurately identifying high-risk customers and reducing the over-management of low-risk ones.

To further assess statistically significant differences between the seven models on each metric, this paper applies non-parametric statistical tests recommended by Demšar58, namely the Friedman test59 and the Iman-Davenport test60. The null hypothesis for both tests is that the performance of the seven models is the same. For the 4 datasets and 7 models, we use a \({\chi ^2}\) distribution with 6 degrees of freedom and an F distribution with 6 and 18 (i.e., 6 × 3) degrees of freedom. The significance level is set at 0.05, with results presented in Table 8.

The test values exceed the corresponding distribution values, leading to the rejection of the null hypothesis at the 95% confidence level. This indicates significant performance differences between the seven models on each metric. Additionally, pairwise comparisons are conducted to further explore the performance differences among the models. First, we compute \(z=({R_i} - {R_j})\sqrt {k(k - 1)/(6*Num)}\), where \({R_i}\) and \({R_j}\) are the average rankings of the i-th and j-th models, respectively, k is the number of models being compared (7 in this case), and \(Num\) is the number of datasets (4 in this case). After calculating z, it is converted into a probability value, and the Benjamini-Hochberg multiple testing correction61 is applied to obtain the adjusted p-values. Table 9 shows the results of the test.

From Table 9, it can be concluded that the ECS-SDE model shows significant advantages on multiple key metrics: (1) For the AUC-ROC metric, ECS-SDE shows a significant difference compared to ECS-AdaBoost, ECSDNN, ECS-Stacking, CSNNE, and CCS-CNN, with no significant difference observed between ECS-SDE and CSCNN. This indicates that ECS-SDE has stronger discriminatory power in the ROC curve area, allowing it to more accurately distinguish between high-risk and low-risk customers. (2) For the AUC-PR metric, ECS-SDE significantly outperforms ECS-AdaBoost, ECSDNN, CSNNE, and CSCNN models. This indicates that the ECS-SDE model has higher classification accuracy in handling class imbalance, particularly in identifying the minority-class samples (i.e. high-risk customers). (3) For the BS+ metric, ECS-SDE significantly outperforms ECS-AdaBoost, ECS-Stacking, and CSNNE models. This highlights the efficacy of the ECS-SDE model in identifying positive samples and in detecting high-risk customers. (3) For the Save metric, ECS-SDE significantly outperforms ECS-AdaBoost, CSNNE, and CCS-CNN models, indicating superior performance in cost savings. (4) For the BS– metric, ECS-SDE shows a significant advantage over CSCNN, despite its relatively average performance in predicting the negative class (low-risk customers). However, customer credit evaluation places more emphasis on the prediction of positive class samples, as accurately identifying high-risk customers is crucial for reducing financial losses. (6) Among the six comparison models, ECS-AdaBoost, ECSDNN, ECS-Stacking, CSNNE, CSCNN, and CCS-CNN show no significant performance differences across most metrics, indicating that their overall performance is similar.

In conclusion, the ECS-SDE model outperforms the six comparison models, particularly in handling class imbalance and identifying high-risk customers, with superior classification accuracy. The performance differences among the other models are minimal across most metrics, indicating their overall similarity.

Comparison of deep ensemble models

This section compares the performance of the ECS-SDE model with five advanced deep ensemble models in credit scoring (Table 10). To ensure fairness, we used the SMOTE technique12 to generate new minority-class samples to balance the training set when training the deep ensemble comparative models. The area in parentheses below the metric values represents the 95% confidence interval57. In the table, bold text highlights the top-performing model in each row.

The results in Table 10 show that the ECS-SDE model achieves the best overall average ranking among all comparison models, indicating that it has the best performance in credit scoring. It also outperforms other models in the cost savings (Save) metric across all four datasets, indicating its ability to accurately identify high-risk customers and reduce financial losses from misclassification.

To further analyze whether there are statistically significant differences between the ECS-SDE model and the five deep ensemble models in each metric, this paper still uses the Friedman test59 and the Iman-Davenport test60. The null hypothesis for both tests is that the performance of the six models is the same. When the number of datasets is 4 and the number of models is 6, we use a \({\chi ^2}\) distribution with 5 degrees of freedom and an F distribution with 5 and 15 (5 × 3) degrees of freedom, with a significance level of 0.05. The test results are shown in Table 11.

As shown in Table 11, the test values are all greater than the corresponding distribution values. Therefore, at a 95% confidence level, we reject the null hypothesis and conclude that there are significant differences in the performance of the six models across each metric. To further understand the performance differences between the six models, we perform pairwise comparisons of the model performance. We also apply the Benjamini-Hochberg multiple testing correction61 to obtain the adjusted p-values. The results are shown in Table 12. In the table, bold values indicate that the adjusted p-value is less than 0.05.

According to Table 12, the ECS-SDE model shows significant advantages in most metrics: (1) For the Save metric, ECS-SDE significantly outperforms the LSTM-GRU-ANN, LSTM-GRU-MLP, BiLSTM-CNN, and BiLSTM-Trans-CNN models, but there is no significant difference when compared to CNN-BLSTM, indicating that ECS-SDE excels in cost savings. (2) ECS-SDE significantly outperforms the CNN-BLSTM, BiLSTM-CNN, and BiLSTM-Trans-CNN models, showing higher accuracy in identifying minority-class samples (high-risk customers). (3) For the AUC-ROC and AUC-PR metrics, ECS-SDE significantly outperforms the BiLSTM-CNN and BiLSTM-Trans-CNN models. (4) For the BS– metric, ECS-SDE significantly outperforms the LSTM-GRU-ANN and BiLSTM-Trans-CNN models. (6) For LSTM-GRU-ANN, LSTM-GRU-MLP, CNN-BLSTM, BiLSTM-CNN, and BiLSTM-Trans-CNN models, no significant differences are observed in most metrics, indicating that their performance is relatively similar.

In conclusion, the ECS-SDE model excels in key metrics, particularly outperforming most deep ensemble models in Save and BS+ metrics. Its superior ability to identify high-risk customers and reduce financial losses highlights its effectiveness in cost savings and minority-class prediction.

Computational time comparison of deep ensemble models

This section compares the computational time of the ECS-SDE model with five advanced deep ensemble models. Table 13 shows the time required by the six models to fit on the training set and make predictions on the test set. Bold text indicates the model with the shortest computation time in each row, with the number in brackets representing the model’s ranking, where a lower value indicates a shorter computation time. The last row presents the average ranking of total time for each model.

From Table 13, it can be seen that the average ranking of the ECS-SDE model is the same as that of the LSTM-GRU-ANN model, indicating that the computational time of the ECS-SDE model is at a moderate level among the six models. However, the computational time of the ECS-SDE model varies across different datasets. For instance, on the IEEE dataset, which has a large number of samples, high imbalance, and many features, the ECS-SDE model may require more complex processing, leading to increased computational time. On the other hand, on the PAKDD dataset, which has fewer samples and lower imbalance, the ECS-SDE model ranks 4th, with relatively shorter computational time compared to the LSTM-GRU-ANN and LSTM-GRU-MLP deep ensemble models. In contrast, the CNN-BLSTM model has the shortest overall computational time, with an average ranking of 1.75, indicating it completes computations faster across multiple datasets. The average rankings of the BiLSTM-CNN and BiLSTM-Trans-CNN models are 2.25 and 2.00, respectively, with slightly longer computational times than the CNN-BLSTM model. The LSTM-GRU-MLP model has an average ranking of 4.50, with moderate computational time.

Ablation experiment

To analyze the impact of the ECS TabNet training process and the ECS GMDH selective ensemble process on the performance of the ECS-SDE model, we conducted an ablation experiment (Table 14). The experiment compared the credit scoring performance of three models on four datasets. The three models are as follows: (1) ECSTabNet + SRCGMDH selective deep ensemble model, which uses ECS TabNet as the base classifier and applies SRC-based GMDH for selective ensemble; (2) TabNet + ECSGMDH selective deep ensemble model, which uses the traditional TabNet as the base classifier and applies ECS GMDH for selective ensemble; (3) The proposed ECS-SDE model. In the table, bold text highlights the top-performing model in each row.

Table 14 shows that the ECS-SDE model, which combines the two techniques, has the highest average ranking, indicating the best performance in credit scoring. To further analyze whether there are statistically significant differences in the performance of the three models, we used the non-parametric Wilcoxon rank-sum test62. The null hypothesis is that the credit scoring performance of the two models is the same. We define \({R^+}\) as the sum of the ranks where the first model is better than the second, and \({R^ - }\) as the sum of the ranks where the first model is worse than the second. In this study, we set the significance level to \(\alpha =0.05\). At a 95% confidence level, when the number of data sizes is 20, the corresponding critical value (CV) is 52. The results of the rank-sum test comparing the performance of the three models are shown in Table 15. If \(T=\hbox{min} \left( {{R^+},{R^ - }} \right)\) is less than or equal to 52, the null hypothesis is rejected, indicating a statistically significant difference between the two models. Specifically, if \(T={R^ - }\) is less than or equal to 52, it means that the performance of the first model is statistically significantly better than the second model. Conversely, if \(T={R^+}\) is less than or equal to 52, the situation is reversed.

The results in Table 15 show that, at the 95% confidence level, the ECS-SDE model, which uses these two techniques, has statistically significantly better performance than the other two models. However, there is no significant difference in performance between the models that only use the ECS TabNet or the ECS GMDH. This suggests that the combination of the ECS TabNet with the ECS GMDH technique is critical to maximize the performance of the ECS SDE model.

Analysis of model interpretability

In practical scenarios, it is crucial not only to focus on model performance but also to analyze the impact of features on outcomes, especially for real-world applications like credit scoring. For instance, when a loan application is rejected, explaining the reasons to both the customer and manager is important. This section explores the interpretability of the proposed model, including visualizing the ECS GMDH selective ensemble process and analyzing the feature importance of ECS TabNet.

To explain the selection process of base classifiers, this paper visualizes the ECS GMDH network structure. According to the ECS GMDH selective ensemble modeling principle in Sect. 2.3, the prediction results of 20 base classifiers \(\{ {T_1},{T_2},\ldots,{T_{20}}\}\) are used as the initial inputs \(\{ {v_1},{v_2},\ldots,{v_{20}}\}\). These inputs are then combined pairwise through a transfer function \(f(\cdot)\) to generate intermediate candidate models. The selection process follows the ECS-SC external criterion, where candidate models are chosen layer by layer based on the external criterion value. This process continues until the external criterion value reaches its minimum. The result is an optimal complexity model with a multilayer network structure. To visualize this, the selective ensemble process of ECS GMDH and the weight coefficients of each layer are presented.

This paper uses the GMSC dataset as an example. Due to the large number of inputs at each layer, direct explanation is challenging. To simplify the ECS GMDH selective ensemble process, only the combination results of the selected base classifiers are retained (Fig. 4), with the corresponding weights listed in Table 16. By calculating layer by layer from back to front, an embedded polynomial combination function is finally obtained to represent the relationships among the selected optimal base classifiers. For example, in Layer 1, the combination of \({v_4}\)and \({v_9}\) is represented as \({H_1}=f\left( {{v_4},{v_9}} \right){\text{ }}\)\(={w_0}+{w_1}{v_4}+{w_2}{v_9}+{w_3}{v_4}{v_9}\). It is worth noting that the initial inputs \(\{ {v_1},{v_2},\ldots,{v_{20}}\}\) are included in the candidate model set for each layer.

As shown in Fig. 4, on the GMSC dataset, the ECS GMDH model selects 10 optimal initial inputs \(\left( {{v_1},{v_2},{v_4},{v_8},{v_9},{v_{13}},{v_{17}},{v_{18}},{v_{19}},{v_{20}}} \right)\), corresponding to the optimal ECS TabNet base classifiers: \({T_1},{T_2},{T_4},{T_8},{T_9},{T_{13}},{T_{17}},{T_{18}},{T_{19}},{T_{20}}\). Table 16 shows the weights of each layer. Since the complexity of the polynomial functions and the large coefficients of individual terms compared to interaction terms, only the individual terms are retained, with interaction effects ignored. The simplified functional relationship between base classifiers and the prediction result on the GMSC dataset is as follows:

Based on the simplified function expression, we obtain the weights for the selected 10 base classifiers as \(\left\{ {{w_1}={\text{ }} - 1350.74,{w_2}={\text{ }}113.72,{\text{ }} \ldots ,{w_{10}}={\text{ }} - 473.24.74} \right\}\), and the influence of each base classifier on the prediction result: \({T_1}>{T_{20}}>{T_{18}}>{T_4}>{T_2}>{T_8}>\)\({T_{19}}>{T_{13}}>{T_9}>{T_{17}}\). According to the feature importance calculation method of TabNet described in Sect. 2.2, we calculate the importance score of each base classifier for each feature. Let \({S_k}(k=1,2,.,10) \in {{\mathbb{R}}^d}\) be the importance score of the k-th ECS TabNet base classifier for d features. The global importance of a feature reflects its contribution to the overall model performance33. The feature importance scores \(\{ {S_1},{S_2},.,{S_{10}}\}\) output by the 10 selected base classifiers are summed and averaged to obtain the final global importance score for each feature: \({S_{final}}=\sum\nolimits_{{k=1}}^{{10}} {{S_k}} /10\). Figure 5 presents the feature importance plot for the optimal ECS TabNet models selected by ECS GMDH on the GMSC dataset. Detailed feature descriptions are available in Appendix A. Feature importance plots and ECS GMDH selective ensemble results for the other three datasets can be found in Appendix B.

Figure 5 shows that, on the GMSC dataset, the top five most important features for the selected ECS TabNet classifiers \(\left( {{T_1},{T_2},{T_4},{T_8},{T_9},{T_{13}},{T_{17}},{T_{18}},{T_{19}},{T_{20}}} \right)\) are: A2 (Age), A7 (Number of Times 90 Days Late), A4 (Debt Ratio), A9 (Number Of Time 60-89Days Past Due Not Worse), and A3 (Number Of Time 30–59 Days Past Due Not Worse). These features play a significant role in credit scoring prediction, as detailed below:

-

Feature A2 (Age) is generally considered an important factor in credit assessment. Older borrowers typically have more career experience and greater financial stability, which positively impacts their ability to repay loans. Therefore, age has a positive effect on credit scoring, especially when assessing a borrower’s long-term repayment capacity.

-

Feature A4 (Debt Ratio) is a key indicator of a borrower’s level of debt, representing the ratio of debt to income. A higher debt ratio typically signifies that the borrower is under more financial stress and has a weaker ability to repay debt, which increases credit risk. Therefore, A4 is of significant reference value in credit assessment, particularly when evaluating whether a borrower has sufficient repayment capacity.

-

Credit History Features, including A3 (Number of Times 30–59 Days Past Due Not Worse), A7 (Number of Times 90 Days Late), and A9 (Number of Times 60–89 Days Past Due Not Worse). These features directly reflect the borrower’s past repayment behavior. Multiple overdue records are generally seen as a sign of credit risk, as they indicate that the borrower may have had instability in repaying debts in the past. As such, these features help financial institutions better predict the borrower’s future repayment behavior, influencing the approval of loan or credit card applications.

In summary, the five features mentioned above reflect key aspects of the borrower, such as repayment capacity, debt levels, and repayment history. Older age and lower debt ratios generally improve credit assessment, while overdue records highlight past repayment behavior and credit risk, making these features essential for loan or credit card approval decisions.

In contrast, features like A1 (Revolving Utilization of Unsecured Lines), A5 (Monthly Income), A6 (Number of Open Credit Lines and Loans), A8 (Number of Real Estate Loans or Lines), and A10 (Number of Dependents) have a smaller impact on the model’s predictions. While these features have limited influence, they still offer valuable insights into the borrower’s financial situation. Financial institutions should consider these features alongside critical indicators, such as overdue records and debt ratio, for a more comprehensive and accurate risk assessment.

Parameter sensitivity analysis

In this section, the parameter sensitivity analysis is performed to investigate the effect of the parameters \({N_a}\), \({N_d}\), \({N_{step}}\), \(gamma\), and \(momentum\) of ECS TabNet on the performance of the ECS-SDE model. Additionally, the influence of the number of ECS TabNet base classifiers, M, on the performance of the ECS-SDE model in credit scoring is investigated. The impact of the complexity control parameter \(\lambda\) of the ECS GMDH on the performance of the ECS-SDE model is also analyzed. The results of the parameter sensitivity analysis are presented in Appendix C.

Conclusion

This paper proposes the ECS-SDE model and applies it to customer credit scoring. The model constructs an example-dependent cost matrix to generate ECS training subsets. It then integrates the proposed ECS TabNet and ECS GMDH deep neural networks to perform selective deep ensemble modeling. The experimental results show that the ECS-SDE model outperforms other comparison models in terms of overall performance for credit scoring. Notably, the ECS-SDE model shows strong interpretability, which reveals the importance of each feature in credit scoring. This interpretability analysis offers valuable insights for financial institutions to identify and mitigate customer default risk, enabling more precise risk management. In summary, the study provides an effective credit-scoring tool and has practical implications for improving deep learning model interpretability, ultimately reducing economic losses from customer defaults.

This paper offers key insights for financial institution management, including:

-

(1)

It is important to focus on core financial features, taking into account both personal information and financial status. (1) In credit scoring, financial institutions should prioritize core indicators like debt ratio and repayment history, as they directly reflect a borrower’s repayment ability and credit risk. Higher debt ratios and overdue records should trigger closer scrutiny and prompt risk management measures, such as adjusting loan terms or conducting further risk assessments. (2) Institutions should adopt a comprehensive approach in borrower assessments, considering personal information (e.g., age, gender, occupation), financial status (e.g., income, debt ratio), and historical repayment records. This holistic evaluation enhances credit risk scoring and supports the development of more effective risk control strategies.

-

(2)

The improvement of the interpretability and transparency of the model is of great importance for the management of financial institutions. (1) Clear decision-making criteria enhance managers’ understanding of the model’s process, fostering greater trust in its results. (2) Interpretable models enable managers to identify and manage potential risks, facilitating timely actions to sustain operations. (3) A transparent decision-making process ensures regulatory compliance, builds client trust, and supports more accurate business strategies.

Despite the promising potential of the ECS-SDE model in customer credit scoring, certain limitations remain. Future research could focus on the following areas: (1) Enhancing model interpretability. This study relies on feature correlation analysis, which may lead to biased or inconsistent interpretations when applied to complex business logic. Future research should incorporate causal inference techniques to better identify intrinsic feature relationships, enhancing both the accuracy and transparency of model behavior. (2) Optimizing computational resources. Training TabNet and GMDH models on large datasets is computationally intensive. Future research could address this limitation by investigating model compression, quantization, and knowledge distillation to improve efficiency and reduce hardware demands. (3) Expanding to multi-class scenarios. This study is limited to binary classification within the ECS framework. Future research could extend the ECS model to multi-class scenarios, addressing more complex, real-world applications.

Data availability

The datasets analyzed in the current study are publicly available from various sources. The IEEE-CIS Fraud Detection dataset can be accessed from the Kaggle competition (https://www.kaggle.com/competitions/ieee-fraud-detection). The Give Me Some Credit dataset is available at http://www.kaggle.com/c/GiveMeSomeCredit/. The Default of Credit Card Clients dataset is available through the UCI Machine Learning Repository (https://archive.ics.uci.edu/ml/datasets/default+of+credit+card+clients). Additionally, the 2009 Pacific-Asia Knowledge Discovery and Data Mining dataset can be accessed via http://sede.neurotech.com.br:443/PAKDD2009/. The GMDH library is available at https://github.com/kvoyager/GmdhPy. The TabNet library is available at https://github.com/dreamquark-ai/tabnet.

References

Bressan, G., Đuranović, A., Monasterolo, I. & Battiston, S. Asset-level scoring of climate physical risk matters for adaptation finance. Nat. Commun. 15 (1), 5371 (2024).

Petrone, D., Rodosthenous, N. & Latora, V. An AI approach for managing financial systemic risk via bank bailouts by taxpayers. Nat. Commun. 13 (1), 6815 (2022).

Tang, Q., Tong, Z. & Yang, Y. Large portfolio losses in a turbulent market. Eur. J. Oper. Res. 292 (2), 755–769 (2021).

Berger, L. M. et al. Inequality in high-cost borrowing and unemployment insurance generosity in US states during the COVID-19 pandemic. Nat. Hum. Behav. 1–13. https://doi.org/10.1038/s41562-024-01922-8 (2024).

Wang, Y. et al. Hyperspectral estimation of soil copper concentration based on improved TabNet model in the Eastern Junggar Coalfield. IEEE Trans. Geosci. Remote Sens. 60, 1–20 (2022).

Xiao, J. et al. Black-box attack-based security evaluation framework for credit card fraud detection models. INFORMS J. Comput. 35 (5), 986–1001 (2023).

Xiao, J. et al. A novel deep ensemble model for imbalanced credit scoring in internet finance. Int. J. Forecast. 40 (1), 348–372 (2024).

Bahnsen, A. C., Aouada, D. & Ottersten, B. Example-dependent cost-sensitive decision trees. Expert Syst. Appl. 42 (19), 6609–6619 (2015).

Höppner, S., Baesens, B., Verbeke, W. & Verdonck, T. Instance-dependent cost-sensitive learning for detecting transfer fraud. Eur. J. Oper. Res. 297 (1), 291–300 (2022).

Yotsawat, W., Wattuya, P. & Srivihok, A. A novel method for credit scoring based on cost-sensitive neural network ensemble. IEEE Access. 9, 78521–78537 (2021).

Zhao, H. et al. An ensemble learning approach with gradient resampling for class-imbalance problems. INFORMS J. Comput. 35 (4), 747–763 (2023).

Almhaithawi, D., Jafar, A. & Aljnidi, M. Example-dependent cost-sensitive credit cards fraud detection using SMOTE and Bayes minimum risk. SN Appl. Sci. 2 (9), 1–12 (2020).

Janssens, B., Bogaert, M. & Bagué, A. & Van Den Poel, D. B2Boost: Instance-dependent profit-driven modelling of B2B churn. Ann. Oper. Res. 341, 1–27 (2022).

Vanderschueren, T., Verdonck, T., Baesens, B. & Verbeke, W. Predict-then-optimize or predict-and-optimize? An empirical evaluation of cost-sensitive learning strategies. Inf. Sci. 594, 400–415 (2022).

Lenarcik, A. & Piasta, Z. Rough classifiers sensitive to costs varying from object to object. Proc. Int. Conf. Rough Sets Curr. Trends Comput., 222–230 (1998).

Bahnsen, A. C., Aouada, D. & Ottersten, B. A novel cost-sensitive framework for customer churn predictive modeling. Decis. Anal. 2 (1), 1–15 (2015).

Zadrozny, B., Langford, J. & Abe, N. Cost-sensitive learning by cost-proportionate example weighting. Proc. 3rd IEEE Int. Conf. Data Min., 435–442 (2003).

Elkan, C. The foundations of cost-sensitive learning. Proc. Int. Joint Conf. Artif. Intell. 17, 973–978 (2001).

Bahnsen, A. C., Aouada, D. & Ottersten, B. Example-dependent cost-sensitive logistic regression for credit scoring. Proc. Int. Conf. Mach. Learn. Appl. (IEEE), 263–269 (2014).

González, P. et al. Multiclass support vector machines with example-dependent costs applied to plankton biomass estimation. IEEE Trans. Neural Netw. Learn. Syst. 24 (11), 1901–1905 (2013).

Bahnsen, A. C., Aouada, D. & Ottersten, B. Example-dependent cost-sensitive credit scoring using Bayes minimum risk. Proc. Int. Conf. Mach. Learn. Appl. (IEEE), 10 (2014).

Bahnsen, A. C., Stojanovic, A., Aouada, D. & Ottersten, B. Cost sensitive credit card fraud detection using Bayes minimum risk. Proc. 12th Int. Conf. Mach. Learn. Appl. (IEEE). 1, 333–338 (2013).

Bahnsen, A. C., Aouada, D. & Ottersten, B. Ensemble of example-dependent cost-sensitive decision trees. Preprint Submitted May 18, 6609 (2015). https://arxiv.org/abs/1505.04637

Zelenkov, Y. Example-dependent cost-sensitive adaptive boosting. Expert Syst. Appl. 135, 71–82 (2019).

Bhargava, S. et al. A novel example-dependent cost-sensitive stacking classifier to identify tax return defaulters. Proc. Bus. Inf. Syst., 343–353 (2021).

Bhuvaneshwari, K., Kannimuthu, S., Bhanu, D., Karthi, M. & Sagar, K. H. Effective radical driver support system using machine learning methods for connected vehicles. Turk. J. Physiother Rehabil. 32 (2), 1024–1031 (2020).

Saqr, A. E. S., Elshewey, A. M., Raju, S. K. & Eid, M. M. A comprehensive review on optimizing machine learning models for early detection and forecasting of monkeypox outbreaks. J. Artif. Intell. Metaheuristics. 8 (1), 9–20 (2024).

Zuo, C., Zhang, X., Yan, L. & Zhang, Z. G. U. G. E. N. Global user graph enhanced network for next POI recommendation. IEEE Trans. Mob. Comput. 23 (12), 14975–14986 (2024).

Zhu, C. Research on emotion recognition-based smart assistant system: emotional intelligence and personalized services. J. Syst. Manag Sci. 13 (5), 227–242 (2023).

Peng, Y. et al. Unveiling user identity across social media: a novel unsupervised gradient semantic model for accurate and efficient user alignment. Complex. Intell. Syst. 11 (1), 1–28 (2025).

Li, T., Li, Y., Zhang, M., Tarkoma, S. & Hui, P. You are how you use apps: user profiling based on spatiotemporal app usage behavior. ACM Trans. Intell. Syst. Technol. 14 (4), 1–21 (2023).

Mehta, P., Babu, C. S., Rao, S. K. V., Kumar, S. & DeepCatch Predicting return defaulters in taxation system using example-dependent cost-sensitive deep neural networks. Proc. IEEE Int. Conf. Big Data (IEEE), 4412–4419 (2020).

Arik, S.,, Ö. & Pfister (ed, T.) TabNet: attentive interpretable tabular learning. Proc. AAAI Conf. Artif. Intell. 35 6679–6687 (2021).

Cai, Q. & He, J. Credit payment fraud detection model based on TabNet and Xgboot. Proc. 2nd Int. Conf. Consum. Electron. Comput. Eng. (IEEE), 823–826 (2022).

Zhang, L., Ma, K., Yuan, F. & Fang, W. A TabNet based card fraud detection algorithm with feature engineering. Proc. 2nd Int. Conf. Consum. Electron. Comput. Eng. (IEEE), 911–914 (2022).