Abstract

In the context of modern agricultural intelligence, smart picking is becoming a crucial method for enhancing production efficiency. This paper proposes a tomato detection and localization system that integrates the YOLOv5 deep learning algorithm with the SGBM algorithm to improve detection accuracy and three-dimensional localization of tomatoes in complex environments. In a greenhouse setting, 640 tomato images were collected and categorized into three classes: unobstructed, leaf-covered, and branch-covered. Data augmentation techniques, including rotation, translation, and CutMix, were applied to the collected images, and the YOLOv5 model was trained using a warmup strategy. Through a comparative analysis of different object detection algorithms on the tomato dataset, the feasibility of using the YOLOv5 deep learning algorithm for tomato detection was validated. Stereo matching was used to obtain depth information from images, which was combined with the object detection algorithm to achieve both detection and localization of tomatoes. Experimental results show that the YOLOv5 algorithm achieved a detection accuracy of 94.1%, with a distance measurement error of approximately 3–5 mm.

Introduction

Smart agriculture is a key link in the modernization of agriculture: it brings the latest findings of modern science and technology together with agricultural production techniques and has become an important driver of productivity. The use of picking robots in the tomato growing industry can result in significant cost savings and increased productivity. At present, however, the level of automation and practicality of picking robots in China is not high, so picking robots are not widely applied in actual production and remain at the laboratory stage.

In developed regions such as Europe, the United States and Japan, picking robots have been researched and deployed over recent decades. Typical research projects on picking robots are described in refs. 1,2,3,4,5,6,7: AUFO for apple picking; CITRUS for citrus picking; AGROBOT for tomato picking; and the tomato and cucumber picking robots studied by N. Kondo and other researchers.

In China, research on picking robots started late and, although it has developed rapidly in recent years, is still at the laboratory stage. To improve the accuracy and efficiency of apple recognition, and thereby the efficiency of apple-picking robots, ref. 8 combined a k-means clustering algorithm, a genetic algorithm, and a neural network optimized using the least mean square (LMS) criterion for apple fruit recognition. However, the algorithm proposed in that paper is time-consuming and cannot fully meet the real-time requirements of picking robots. In ref. 9, Li Yuhua discussed key techniques for tomato target detection, including the selection of the color space model, the selection of the segmentation algorithm, and the post-processing applied after the tomatoes are segmented, and compared the advantages and disadvantages of the various methods.

Ref. 10 proposed detecting and classifying citrus targets using a deep convolutional neural network object detection algorithm, which classified the targets into three categories: unobstructed, branch-obscured, and leaf-obscured. The detected area is then finely segmented using an SVM, fruit obscured by leaves is restored by least-squares circle fitting, and the center point of the citrus is obtained. The binocular camera is calibrated, the disparity at the center of the citrus is calculated, and the 3D coordinates of the center point are computed and converted to the robot arm coordinate system. In this way, the position of the target is determined, and unreachable points are eliminated based on the coordinate values of the target.

With the development of neural networks, refs. 11,12,13,14,15,16,17 used convolutional neural networks such as LeNet and YOLO for target recognition, detection and classification. Training on targets in different scenes and under different illumination effectively reduces the effect of complex environments on target recognition and detection, and the neural networks are used to classify targets and eliminate obscured, unpickable targets. These methods achieved certain breakthroughs on occlusion and illumination problems. Ref. 18 proposed improving the speed and accuracy of tomato picking robot detection using an RC-YOLOv4 network, but the model is relatively large and difficult to deploy in practical application scenarios. The YOLOv5 algorithm is more easily applied in practical engineering, so the YOLOv5 model is chosen to detect tomatoes in this paper and is fused with the SGBM algorithm to achieve tomato detection and localization.

Due to the relatively complex and demanding working environment of a picking robot, the robot needs to accurately identify and locate randomly distributed targets from a complex background that includes sky, land, branches, and leaves. The key to solving this problem is the robot’s vision system, which can drive the robot’s end-effector to complete the picking accurately and quickly only if the robot’s vision system can accurately identify and locate the target in the complex environment. Therefore, an in-depth study of the vision system of picking robots is very urgent. This study focuses on the target identification and localization of tomato-picking robots in complex environments, and the key issues of this study are:

(1) The picking environment is complex and seriously affected by light and other factors.

(2) Tomato targets obscured by branches, leaves or other fruits.

(3) The problem of target recognition and localization efficiency of picking robots.

Materials and methods

Construction of tomato image sample library

Acquisition of tomato image samples

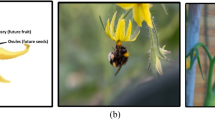

The tomatoes were photographed from multiple directions and angles in a tomato greenhouse, keeping the camera at a distance of about 0.7 m from the tomatoes to ensure that images could be captured at different angles, with different levels of light and different levels of shading. In this paper, the tomato images were labeled using LabelImg software. Some of the acquired tomato images are shown in Fig. 1.

The collected tomato dataset was analyzed and classified into the following three categories according to the degree of occlusion of the tomatoes: tomatoes occluded by branches, tomatoes occluded by leaves, and tomatoes without occlusion. The images of tomatoes with different degrees of occlusion are shown in Fig. 2.

Pre-processing of tomato image samples

To give the trained network better detection ability for images with uneven illumination, the brightness of 300 images in the dataset was increased by 50%, and the brightness of another 300 images was reduced by 30%. To give the model strong noise immunity, pepper noise was randomly added to 300 images in the dataset. The pre-processed tomato images are shown in Fig. 3.
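The brightness and noise pre-processing described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: the function names, the noise fraction of 2%, and the synthetic stand-in image are assumptions made for the example.

```python
import numpy as np

def adjust_brightness(image: np.ndarray, factor: float) -> np.ndarray:
    """Scale pixel intensities by `factor` and clip to the 8-bit range."""
    scaled = image.astype(np.float32) * factor
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)

def add_pepper_noise(image: np.ndarray, amount: float,
                     rng: np.random.Generator) -> np.ndarray:
    """Set a random fraction `amount` of the pixels to black (pepper noise)."""
    noisy = image.copy()
    mask = rng.random(image.shape[:2]) < amount  # one decision per pixel
    noisy[mask] = 0                              # all channels of chosen pixels
    return noisy

rng = np.random.default_rng(0)
# Synthetic uniform image standing in for a tomato photo (assumption).
image = np.full((64, 64, 3), 100, dtype=np.uint8)

brighter = adjust_brightness(image, 1.5)   # brightness increased by 50%
darker = adjust_brightness(image, 0.7)     # brightness reduced by 30%
noisy = add_pepper_noise(image, amount=0.02, rng=rng)  # 2% is an assumed value
```

Clipping after scaling matters for the brightened images: without it, bright regions would wrap around in 8-bit arithmetic instead of saturating.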

To address overlapping and occlusion of tomato targets in detection, this paper uses Cutout (ref. 19) to simulate occlusion and improve the generalization ability of the model. A square region is randomly selected and filled with zeros to simulate the occlusion effect. The Cutout approach to simulating occlusion is shown in Fig. 4.
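As a rough sketch of the Cutout idea described above, the following NumPy function zeroes a randomly placed square. The square size of 16 pixels and the synthetic input are illustrative assumptions; the paper does not state the size it used.

```python
import numpy as np

def cutout(image: np.ndarray, size: int, rng: np.random.Generator) -> np.ndarray:
    """Zero out a square of side `size` centred at a random pixel.

    The square is clipped at the image border, so the masked area can be
    smaller than size x size when the centre falls near an edge.
    """
    h, w = image.shape[:2]
    cy = int(rng.integers(0, h))
    cx = int(rng.integers(0, w))
    y1, y2 = max(0, cy - size // 2), min(h, cy + size // 2)
    x1, x2 = max(0, cx - size // 2), min(w, cx + size // 2)
    out = image.copy()
    out[y1:y2, x1:x2] = 0
    return out

rng = np.random.default_rng(1)
image = np.full((64, 64, 3), 255, dtype=np.uint8)  # synthetic stand-in image
occluded = cutout(image, size=16, rng=rng)         # size is an assumed value
```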

In the tomato detection task, YOLOv5 enhances its robustness through various strategies. First, data augmentation techniques such as rotation, scaling, color adjustment, and brightness modification are employed to simulate different lighting conditions and background variations. These augmentation methods increase the diversity of the training data, enabling the model to adapt to various environmental changes and enhancing its robustness in complex scenarios such as uneven lighting and cluttered backgrounds. Specifically, rotation and scaling help the model recognize tomatoes from different angles and at various scales, while color and brightness adjustments allow the model to maintain high detection accuracy under different lighting intensities. Multi-scale training enables the model to extract features from different resolutions, effectively handling objects at various scales and adapting to background changes. Additionally, YOLOv5 incorporates adaptive background processing by optimizing convolutional layers and introducing attention mechanisms to accurately distinguish targets from cluttered backgrounds. These combined strategies ensure efficient and stable detection performance in dynamic environments.

Tomato detection with Yolov5 deep learning algorithm

Yolov5 network structure

YOLOv4 divides the object detection network into three parts: backbone, neck, and head. In the YOLOv5 model used in this paper, the neck is distributed between the backbone and the head, and the overall network structure is shown in Fig. 5.

The backbone first uses the Focus layer to transform the image from (w × h × d) to (w/2 × h/2 × 4d), as shown in Fig. 6.
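The slicing step of the Focus layer can be illustrated with plain array indexing. The sketch below only shows the rearrangement of pixels into channels (the convolution that follows it in the network is omitted), and the function name is chosen for this example.

```python
import numpy as np

def focus_slice(x: np.ndarray) -> np.ndarray:
    """Rearrange an (h, w, d) array into (h/2, w/2, 4d).

    Every 2x2 block of pixels is split into four sub-images, which are
    stacked along the channel axis. No pixel values are lost.
    """
    return np.concatenate(
        [x[0::2, 0::2], x[1::2, 0::2], x[0::2, 1::2], x[1::2, 1::2]],
        axis=-1,
    )

x = np.arange(4 * 4 * 3).reshape(4, 4, 3)
y = focus_slice(x)
print(x.shape, "->", y.shape)  # (4, 4, 3) -> (2, 2, 12)
```

Because the operation only rearranges values, the spatial resolution is halved without discarding information, which is the point of using it in place of an ordinary strided downsampling.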

BottleneckCSP and SPP layers are used in the backbone of the network, as shown in Figs. 7 and 8, respectively.

Optimizer selection for Yolov5 training

This paper compares the SGD and Adam optimization methods. The training results are shown in Table 1, and the comparison of the loss functions is shown in Fig. 9. It can be seen that with the SGD optimization method the model converges faster, the training effect is better, and the training time is shorter, so the SGD optimization method is used in this paper.

Choice of data enhancement method at the input of Yolov5 algorithm

Mosaic data enhancement: The idea of Mosaic is to mix data from four images onto one image and allow the neural network to detect content outside the normal context of the image. In this paper, Mosaic data enhancement is used for tomato images, as shown in Fig. 10.
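A simplified version of the Mosaic idea can be sketched as follows. This is an assumption-laden illustration rather than the YOLOv5 implementation: it requires four images of equal size, tiles them in a 2 × 2 grid, and crops a random window that is guaranteed to overlap all four. Real Mosaic augmentation also rescales the images and shifts and clips the bounding boxes, which is omitted here.

```python
import numpy as np

def simple_mosaic(images, rng: np.random.Generator) -> np.ndarray:
    """Combine four equally sized images into one image of the same size."""
    assert len(images) == 4
    h, w = images[0].shape[:2]
    top = np.concatenate([images[0], images[1]], axis=1)
    bottom = np.concatenate([images[2], images[3]], axis=1)
    grid = np.concatenate([top, bottom], axis=0)       # shape (2h, 2w, c)
    # Window origin chosen so the crop straddles both grid seams.
    y0 = int(rng.integers(h // 4, 3 * h // 4 + 1))
    x0 = int(rng.integers(w // 4, 3 * w // 4 + 1))
    return grid[y0:y0 + h, x0:x0 + w]

rng = np.random.default_rng(2)
# Four synthetic images with distinct constant values (stand-ins for photos).
images = [np.full((32, 32, 3), v, dtype=np.uint8) for v in (1, 2, 3, 4)]
mosaic = simple_mosaic(images, rng)
```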

Mixup data enhancement: Mixup enhancement enables the model to identify two targets from a local view on one image and improves the efficiency of training. In this paper, Mixup data enhancement is used for tomato images, as shown in Fig. 11.
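For comparison, Mixup blends two whole images pixel by pixel. The sketch below assumes a fixed mixing weight `lam`; in practice the weight is usually drawn at random, and for detection the label sets of both images are kept. Both of these details are outside what the paper specifies.

```python
import numpy as np

def mixup(image_a: np.ndarray, image_b: np.ndarray, lam: float) -> np.ndarray:
    """Blend two equally sized images: lam * a + (1 - lam) * b."""
    mixed = (lam * image_a.astype(np.float32)
             + (1.0 - lam) * image_b.astype(np.float32))
    return np.rint(mixed).astype(np.uint8)

# Synthetic stand-in images (assumption).
image_a = np.full((32, 32, 3), 200, dtype=np.uint8)
image_b = np.full((32, 32, 3), 100, dtype=np.uint8)
blended = mixup(image_a, image_b, lam=0.5)
```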

Mosaic and Mixup selection: In this paper, Yolov5 was trained with Mosaic enhancement and Mixup enhancement respectively. The training results are shown in Table 2. From the results, the training results of Mosaic are better than Mixup, so the Mosaic data enhancement method is finally chosen to train the data in this paper.

Selection of training strategy for the Yolov5 algorithm

In the training of the YOLOv5 model, we adopted two key strategies to improve the model’s convergence speed and accuracy. First, a linear warmup strategy was employed, gradually increasing the learning rate during the initial stages of training. This strategy helps avoid instability caused by an excessively high initial learning rate. The warmup phase lasted for the first three epochs, starting with a low initial learning rate that gradually increased until it reached 0.01 at the end of the warmup phase. Second, we employed a cosine annealing learning rate decay strategy, where the learning rate gradually decreased during the latter stages of training. This decay strategy helps the model converge more stably and improves its accuracy.

As shown in Fig. 12, the learning rate undergoes a rapid increase at the beginning of training, reaching its peak (around 0.01), and then gradually decreases as training progresses. This warmup and decay strategy not only facilitates a quick performance boost in the early stages but also ensures stability in the later stages of training by progressively reducing the learning rate, preventing overfitting. The combination of these strategies effectively enhances the YOLOv5 model’s training speed, convergence, and stability, particularly showing good convergence and robustness when training on complex datasets.
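The shape of such a schedule can be written down directly. The sketch below uses the values given in the text (three warmup epochs, a peak of 0.01) and the 100 training epochs used in the experiments; the starting and final learning rates are not stated in the paper and are assumed here purely for illustration.

```python
import math

def learning_rate(epoch: float,
                  total_epochs: int = 100,
                  warmup_epochs: int = 3,
                  peak_lr: float = 0.01,
                  start_lr: float = 0.001,    # assumed, not from the paper
                  final_lr: float = 0.0001):  # assumed, not from the paper
    """Linear warmup to `peak_lr`, then cosine annealing down to `final_lr`."""
    if epoch < warmup_epochs:
        return start_lr + (peak_lr - start_lr) * epoch / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return final_lr + 0.5 * (peak_lr - final_lr) * (1.0 + math.cos(math.pi * progress))

for epoch in (0, 1, 3, 50, 100):
    print(epoch, round(learning_rate(epoch), 5))
```

The cosine term equals 1 at the end of warmup and -1 at the last epoch, so the curve starts its decay at the peak value and flattens out as it approaches the final learning rate.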

Positioning principle of tomato picking robot

After the tomato target has been identified, the robot needs the position of the target in order to work, i.e., the tomato must be localized. Camera calibration yields the internal and external parameters of the camera, which are the parameters needed to quantify the imaging model. To find the mapping between spatial points and the pixels of the tomato image, coordinate systems are established and the relationships between them are derived. The main coordinate systems involved in this study are the world coordinate system, the camera coordinate system, the image coordinate system, and the pixel coordinate system. The internal parameter (intrinsic) matrix of the camera describes the conversion from the camera coordinate system to the image coordinate system, and the external parameter (extrinsic) matrix describes the conversion from the world coordinate system to the camera coordinate system. The four coordinate systems and the relationship between them are shown in Fig. 13.

In Fig. 13, OwXwYwZw and OcXcYcZc are the world coordinate system and the camera coordinate system, respectively, and oxy and ouv are the image coordinate system and the pixel coordinate system, respectively. Oc is the optical center of the camera, and O is the image center. P(Xw, Yw, Zw) is the real coordinate point of the tomato, and p(x, y) is the projection of P in the image coordinate system.

The world coordinate system and the camera coordinate system are related by a rotation and a translation. (xw, yw, zw) denotes a point in the world coordinate system, and (xc, yc, zc) denotes the same point in the camera coordinate system. The conversion relationship between the world coordinate system and the camera coordinate system is shown in Eq. (1).

In Eq. (1), R denotes the rotation matrix and T denotes the translation vector.
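Written out with the symbols defined above, the rigid-body relation that Eq. (1) refers to takes the following standard homogeneous form. This is a reconstruction under the usual pinhole-camera conventions (R a 3 × 3 rotation, T a 3 × 1 translation), not a copy of the original typeset equation.

```latex
\begin{equation}
\begin{bmatrix} x_c \\ y_c \\ z_c \\ 1 \end{bmatrix}
=
\begin{bmatrix} R & T \\ \mathbf{0}^{\mathsf{T}} & 1 \end{bmatrix}
\begin{bmatrix} x_w \\ y_w \\ z_w \\ 1 \end{bmatrix}
\tag{1}
\end{equation}
```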

The relationship between the camera coordinate system and the image coordinate system follows from the similar-triangle principle in the imaging model, as shown in Fig. 14.

(xc, yc, zc) denotes a point in the camera coordinate system, and (x, y) denotes its projection in the image coordinate system. According to the principle of similar triangles, Eq. (2) is obtained.

In Eq. (2), f is the focal length. Rearranging Eq. (2) gives Eq. (3) and Eq. (4).

According to Eq. (3) and Eq. (4), the augmented form of the matrix can be obtained as:
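Under the standard pinhole model and with the symbols defined above, the similar-triangle relation and its rearrangements referred to as Eqs. (2) to (5) can be written as follows. The forms below are reconstructed from the surrounding definitions and should be read as the conventional statement of these relations rather than as the original typesetting.

```latex
\begin{align}
\frac{x}{x_c} &= \frac{y}{y_c} = \frac{f}{z_c} \tag{2} \\
x &= \frac{f\,x_c}{z_c} \tag{3} \\
y &= \frac{f\,y_c}{z_c} \tag{4} \\
z_c \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
&=
\begin{bmatrix} f & 0 & 0 & 0 \\ 0 & f & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}
\begin{bmatrix} x_c \\ y_c \\ z_c \\ 1 \end{bmatrix} \tag{5}
\end{align}
```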

The image coordinate system and the pixel coordinate system are in the same plane, and the image coordinate system is obtained by translating and scaling the pixel coordinate system. The image coordinate system and the pixel coordinate system are shown in Fig. 15.

The origin of the pixel coordinate system is located in the upper left corner of the image. (x, y) denotes a point in the image coordinate system, and (u, v) denotes the same point in the pixel coordinate system. (u0, v0) denotes the coordinates of the origin of the image coordinate system expressed in the pixel coordinate system. The transformation relationship between the pixel coordinate system and the image coordinate system is as follows:

In Eq. (6), dx denotes the physical size of the pixel point in the x-direction. In Eq. (7), dy represents the physical dimension of the pixel point in the y-direction. Equation (6) and Eq. (7) are written in matrix form to obtain Eq. (8).
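With dx, dy, and (u0, v0) as defined above, the conventional form of the relations referred to as Eqs. (6) to (8) is given below. It is a reconstruction consistent with the surrounding definitions, not a reproduction of the original equations.

```latex
\begin{align}
u &= \frac{x}{dx} + u_0 \tag{6} \\
v &= \frac{y}{dy} + v_0 \tag{7} \\
\begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
&=
\begin{bmatrix} \dfrac{1}{dx} & 0 & u_0 \\ 0 & \dfrac{1}{dy} & v_0 \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix} \tag{8}
\end{align}
```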

Finally, the transformation from the world coordinate system to the pixel coordinate system is obtained as:
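Chaining the pixel, projection, and rigid-body relations in that order gives the usual full projection equation. The expression below is reconstructed from the definitions in this section; the shorthand f_x = f/dx and f_y = f/dy is introduced here for compactness and is not notation from the paper.

```latex
\begin{equation}
z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
=
\begin{bmatrix} \dfrac{1}{dx} & 0 & u_0 \\ 0 & \dfrac{1}{dy} & v_0 \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} f & 0 & 0 & 0 \\ 0 & f & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}
\begin{bmatrix} R & T \\ \mathbf{0}^{\mathsf{T}} & 1 \end{bmatrix}
\begin{bmatrix} x_w \\ y_w \\ z_w \\ 1 \end{bmatrix}
=
\begin{bmatrix} f_x & 0 & u_0 & 0 \\ 0 & f_y & v_0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}
\begin{bmatrix} R & T \\ \mathbf{0}^{\mathsf{T}} & 1 \end{bmatrix}
\begin{bmatrix} x_w \\ y_w \\ z_w \\ 1 \end{bmatrix}
\tag{9}
\end{equation}
```

The first 3 × 4 matrix on the right-hand side collects the internal parameters, and the 4 × 4 matrix collects the external parameters.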

Experiments and results

Experiments with yolov5 training tomato dataset

The experimental platform in this paper uses an RTX 2060Ti GPU, the Windows 10 operating system, CUDA version 11.0, and the cuDNN acceleration library, version 11.0. The 7788 images of the tomato dataset were divided at a ratio of 6:2:2, as shown in Table 3.
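A 6:2:2 division of this kind can be sketched with the standard library alone. The sketch assumes the three parts are training, validation, and test sets and uses truncation for the first two counts; the exact counts used in the paper are those of Table 3 and may have been rounded differently.

```python
import random

def split_indices(n: int, ratios=(0.6, 0.2, 0.2), seed: int = 0):
    """Shuffle the indices 0..n-1 and split them according to `ratios`."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_train = int(n * ratios[0])
    n_val = int(n * ratios[1])
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder, so nothing is dropped
    return train, val, test

train, val, test = split_indices(7788)
print(len(train), len(val), len(test))
```

Assigning the remainder to the last subset guarantees that every image ends up in exactly one subset even when the ratios do not divide the dataset size evenly.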

The experiment used the SGD optimizer, Mosaic data enhancement, a batch size of 32, and 100 epochs. The training results are shown in Fig. 16.

To evaluate the quality of the model, this paper uses mAP and FPS, as defined in ref. 20, to measure the detection performance and to compare the training results. One fifth of the dataset is used as the test set to calculate the average recognition rate. The identification results on the test set and the ground-truth annotations of the test set are shown in Figs. 17 and 18.

The PR curve in Fig. 19 shows that the YOLOv5 model performs well in multi-class target detection tasks. The overall mAP@0.5 reaches 0.947, indicating that the model maintains a good balance between precision and recall. For individual categories, the mAP@0.5 for "unoccluded tomato," "leaf occluded," and “branch occluded” are 0.941, 0.953, and 0.946, respectively. This demonstrates that the model has high detection accuracy across different categories, reducing the risk of false detection and missed detection.
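To make the metric concrete, the sketch below computes the average precision (AP) of one class as the area under the precision-recall envelope and then averages per-class values into mAP. It assumes detections have already been matched to ground truth at an IoU threshold of 0.5; the small set of scores and matches is invented for illustration, while the three per-class values are the ones reported above.

```python
import numpy as np

def average_precision(scores, is_true_positive, num_ground_truth: int) -> float:
    """Area under the monotone precision-recall envelope for one class."""
    order = np.argsort(-np.asarray(scores, dtype=float))
    tp = np.asarray(is_true_positive, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / num_ground_truth
    precision = cum_tp / (cum_tp + cum_fp)
    # Pad the curve and make precision non-increasing from right to left.
    mrec = np.concatenate([[0.0], recall, [1.0]])
    mpre = np.concatenate([[0.0], precision, [0.0]])
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))

# Illustrative detections (assumed): three boxes, two ground-truth tomatoes.
ap_example = average_precision([0.9, 0.8, 0.7], [1, 0, 1], num_ground_truth=2)

# mAP@0.5 as the mean of the per-class values reported in the text.
map_50 = float(np.mean([0.941, 0.953, 0.946]))
print(round(ap_example, 3), round(map_50, 3))
```

Averaging the three reported per-class values reproduces the overall figure of 0.947 after rounding.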

This paper analyzes common object detection algorithms such as SSD, Faster R-CNN, and YOLOv5, and compares their performance in tomato detection tasks. SSD, although advantageous in terms of real-time performance, suffers from lower accuracy under complex backgrounds and lighting variations, especially when the target is small or the background is cluttered, leading to frequent false detections. Faster R-CNN performs better in terms of accuracy, but it has a large computational load, and its training and inference speeds are slower, making it unsuitable for real-time applications. Compared to YOLOv4, which achieves a balance between accuracy and speed, YOLOv5 is more efficient in structure optimization and training strategies, providing higher detection accuracy and faster inference speed under the same conditions. In comparison, YOLOv5 responds quickly while maintaining high accuracy. Its multi-scale feature extraction and adaptive background handling enable it to perform excellently even in challenging environments such as lighting changes, cluttered backgrounds, and shadow occlusions. Therefore, YOLOv5 performs the best in tomato detection tasks, especially in applications requiring both real-time performance and high accuracy, which is why YOLOv5 is chosen for tomato detection in this study. The experimental results are shown in Table 4.

Experiments with binocular positioning of tomatoes

Binocular calibration

In this paper, the Matlab calibration toolbox is used to calculate the focal length and principal point offset of the camera with Zhengyou Zhang’s calibration method (ref. 21), and the distortion coefficients of the camera are calculated using the Brown distortion model. The steps are as follows.

(1) Print an 11 × 8 checkerboard grid. Each square of the checkerboard measures 30 mm × 30 mm, and the surface of the checkerboard must be printed clearly. The checkerboard calibration board used in this paper is shown in Fig. 20.

(2) Fix the camera. Rotate and move the calibration plate to take images of the plate at different angles, ensuring that the whole calibration plate is in the field of view when shooting. In the end, 21 images were selected for camera calibration. The calibration images in this paper are shown in Fig. 21.

(3) Use Matlab’s calibration toolbox to calibrate the images. First, open the calibration toolbox and add the left and right camera images. Then click “Calibrate” to start the calibration. The calibration process is shown in Fig. 22.

(4) In Fig. 23, the histogram at the bottom left shows the error. Remove the images with larger errors according to this histogram.

(5) Finally, use "Export camera parameters" to export the parameters. The internal and external parameters of the camera obtained with the toolbox are shown in Table 5.

3D spatial positioning of tomatoes

First, monocular calibration and binocular stereo calibration are performed on the binocular camera to obtain the internal and external camera parameters and the position relationship matrix between the left and right cameras. Distortion correction and stereo rectification are then applied to reduce the pixel position changes caused by image distortion.

The coordinates of the tomato fruit centers in the left and right images are obtained by target detection, and stereo matching is used to find the region of the same tomato in the left and right images. Finally, the coordinates of the center point of the tomato fruit are obtained by triangulation.

A Lena CV USB3.0 binocular camera was used to capture the tomato images, as shown in Fig. 23.

The 2D coordinates of the tomato centroids calculated during YOLOv5 target detection are passed to the SGBM algorithm, which calculates the corresponding 3D coordinates and depth distance. The calculation results are shown in Table 6.
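The last step, turning the disparity at a detected tomato center into camera-frame coordinates, follows from the rectified stereo geometry Z = f·B/d. The sketch below is a minimal illustration with made-up calibration values (focal length 800 px, baseline 60 mm, principal point at 320, 240); they are not the values of Table 5. The disparity is assumed to be in pixels already; OpenCV's SGBM matcher, for example, returns fixed-point disparities that must first be divided by 16.

```python
import math

def pixel_to_camera_xyz(u: float, v: float, disparity_px: float,
                        focal_px: float, baseline_mm: float,
                        cx: float, cy: float):
    """Back-project pixel (u, v) with a known disparity to (X, Y, Z) in mm."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a valid match")
    z = focal_px * baseline_mm / disparity_px   # depth along the optical axis
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return x, y, z

# Hypothetical calibration and measurement values, for illustration only.
x, y, z = pixel_to_camera_xyz(u=400.0, v=240.0, disparity_px=160.0,
                              focal_px=800.0, baseline_mm=60.0,
                              cx=320.0, cy=240.0)
distance = math.sqrt(x * x + y * y + z * z)  # straight-line camera-to-tomato distance
print(x, y, z, round(distance, 1))
```

Because depth is inversely proportional to disparity, a fixed matching error of one pixel costs more depth accuracy at long range than at short range, which is one reason for working at distances of a few hundred millimetres.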

The table presents the three-dimensional coordinates of tomatoes obtained by integrating the SGBM algorithm with YOLOv5, along with the actual distances between the tomatoes and the camera, the detected distances, and detection times. These coordinates accurately represent the spatial position of the center of the tomato.

During the experiment, the distances between the camera and the two tomatoes were 300 mm and 270 mm, respectively. After calculating six groups of coordinate points, the measurement error was consistently around 5 mm, primarily influenced by variations in lighting conditions. This demonstrates that the algorithm can effectively estimate the distance between the tomato and the camera under different lighting environments, exhibiting strong robustness and accuracy. Moreover, the detection time data in the table highlights the computational efficiency of this method. The processing time fluctuates between 1.16 and 1.51 s, with an average of 1.27 s. This is particularly critical for agricultural automation, as such applications often require real-time decision-making capabilities. The experimental results confirm that the proposed algorithm meets the temporal requirements for real-time operations, making it suitable for applications such as automated tomato harvesting.

Conclusions

This paper constructs a tomato image dataset, compares and analyzes the detection performance of different object detection algorithms for tomato recognition, validates the feasibility of the YOLOv5 deep learning algorithm for tomato recognition, and selects appropriate training strategies for training. The YOLOv5 deep learning algorithm is integrated with the SGBM algorithm to design a detection and localization system for the tomato picking robot. However, the complexity of real-world tomato-picking environments continues to pose challenges to the system’s real-time performance and accuracy. Factors such as dense vegetation and cluttered backgrounds can significantly impact detection reliability. Future research should prioritize optimizing the robustness and adaptability of the algorithms, thereby enhancing the overall performance of tomato-picking robots in dynamic and unpredictable conditions.

Data availability

The data presented in this study are available on request from the corresponding author.

References

Buemi, F., Massa, M. & Sandini, G. Agrobot: a robotic system for greenhouse operations. In 4th Workshop on Robotics in Agriculture, IARP, Toulouse, 172–184 (1995).

Kondo, N. et al. Control method for 7DOF robot to harvest tomato. In Proceedings of Asian Control Conference, 1–4 (1994).

Kondo, N. et al. Visual feedback guided robotic cherry tomato harvesting. Trans. ASAE 39(6), 2331–2338 (1996).

Pla, F., Juste, F. & Ferri, F. Feature extraction of spherical objects in image analysis: An application to robotic citrus harvesting. Comput. Electron. Agricult. 8, 57–72 (1993).

Benady, M. & Miles, G. Locating melons for robotic harvesting using structured light. Pap. Am. Soc. Agric. Eng. 927021 (1992).

Arima, S. et al. Studies on cucumber harvesting robot (Part 1). J. Jpn. Soc. Agricult. Machin. 56, 55–64 (1994).

Kondo, N. et al. Basic studies on robot to work in vine yard. J. Jpn. Soc. Agricult. Machin. 55, 85–94 (1993).

Jia, W. K. et al. Apple recognition based on K-means and GA-RBF-LMS neural network applicated in harvesting robot. Trans. CSAE. 31, 175–183 (2015).

Li, Y. H. Tomato picking robot target detection and grasping key technology research. Trans. New Technol. New Products China 12, 55–57 (2018).

Hu, Y. C. Research on target recognition and location method of citrus picking robot in natural environment. Master Thesis, Chongqing University of Technology, Chongqing (2018).

Liu, Z. C., Zhu, Y. X., Wang, H., Tian, Li. & Feng, S. L. Multi-target real-time detection based on convolutional neural network. Trans. Comput. Eng. Des. 40, 1085–1090 (2019).

Ai, L. M. & Ye, X. N. Object detection and classification based on circular convolutional neural network. Trans. Comput. Technol. Dev. 28, 31–35 (2018).

Huang, X. H., Su, H., Peng, G. & Xiong, C. Object identification and pose detection based on convolutional neural network. Trans. Huazhong Univ. Sci. Tech. 45, 7–11 (2017).

Yin, X. & Yan, L. Image object detection based on deep convolutional neural network. Trans. Ind. Control Comput. 30, 96–97+100 (2017).

Cheng, H. F. Research on apple image recognition technology based on improved LeNet convolution neural network in natural scene. Trans. Food Machin. 35, 155–158 (2019).

Cai, S. P., Sun, Z. M., Liu, H., Wu, Y. X. & Zhuang, Z. Z. Real-time detection methodology for obstacles in orchards using improved YOLOv4. Trans. Chin. Soc. Agricul. Eng. 37, 36–43 (2021).

Liu, G. X., Nouaze, J. C., Touko, P. L. & Kim, J. H. YOLO-Tomato: a robust algorithm for tomato detection based on YOLO v3. Sensors 20, 1–20 (2020).

Zheng, T. X., Jiang, M. J., Li, Y. F. & Feng, M. C. Research on tomato detection in natural environment based on RC-YOLOv4. Comput. Electron. Agric. 198, 107029. https://doi.org/10.1016/j.compag.2022.107029 (2022).

DeVries, T. & Taylor, G. W. Improved regularization of convolutional neural networks with Cutout. arXiv preprint arXiv:1708.04552 (2017).

Everingham, M. et al. The pascal visual object classes (VOC) challenge. Int. J. Comput. Vision 88, 303–338 (2010).

Lu, Y., Wang, H. Q., Tong, W. & Li, J. J. Study on selection of typical city residential heating mode in hot summer and cold winter zone. Xi’an Univ. Arch. Tech. 46, 860–864+870 (2014).

Funding

This research was funded by General Project of Education of National Social Science Foundation of China, Research on Educational Robots and Their Teaching System, BIA200191.

Author information

Contributions

Conceptualization, Jianwei Zhao and Wei Bao; methodology, Jianwei Zhao and Leiyu Mo; software, Zhiting Li; validation, Yushuo Liu and Jiaqing Du; formal analysis, Jiaqing Du; investigation, Zhiting Li; resources, Jiaqing Du; data curation, Zhiting Li; writing—original draft preparation, Yushuo Liu; writing—review and editing, Jianwei Zhao and Yushuo Liu; visualization, Zhiting Li; supervision, Jiaqing Du; project administration, Jianwei Zhao; funding acquisition, Jianwei Zhao, Wei Bao and Leiyu Mo. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhao, J., Bao, W., Mo, L. et al. Design of tomato picking robot detection and localization system based on deep learning neural networks algorithm of Yolov5. Sci Rep 15, 6180 (2025). https://doi.org/10.1038/s41598-025-90080-6
