Abstract

Traditional spectral clustering algorithms are sensitive to the similarity matrix, which impacts their performance. To address this, a local adaptive fuzzy spectral clustering (FSC) method is introduced, incorporating a fuzzy index to reduce this sensitivity. FSC also simplifies the traditional process through a local adaptive framework, optimizing the similarity matrix’s use. Experimental results show that FSC outperforms traditional methods, particularly on high-dimensional datasets with complex structures.

Introduction

Cluster analysis is a key method in data mining that groups the samples of a dataset according to their similarity. Depending on the clustering rule, common clustering methods include hierarchical clustering [1–3], K-means clustering [4–7], fuzzy C-means clustering [8–12], spectral clustering [13–15], and multi-view clustering [16–19], among others.

Spectral clustering, based on graph theory, constructs undirected graphs where edge weights represent sample similarity. It involves two main steps: computing a low-dimensional embedding of the samples from the similarity matrix, and clustering the embedded samples with a partition-based algorithm (e.g., the K-means clustering method).

Traditional spectral clustering relies on K-means for the final partitioning step. To remove this dependence, Ref. [14] proposes a local adaptive clustering framework that learns an efficient similarity matrix and the dataset’s clustering structure simultaneously. This framework improves on traditional spectral clustering by eliminating the need for K-means, so that the learnt similarity matrix can be used directly for clustering. Experimental results demonstrate that this framework enhances clustering performance while simplifying the spectral clustering process.

Spectral clustering, a type of hard clustering [20], excels on linear or structurally clear datasets. However, it struggles with complex datasets, such as high-dimensional or nonlinear ones, where the constructed graph fails to reflect sample similarity accurately, which weakens clustering performance.

Fuzzy clustering methods [21–24] have shown better performance on complex datasets by using soft partitioning. Experimental results indicate that introducing fuzzy operators enhances clustering performance on complex datasets.

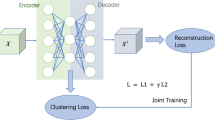

To reduce the impact of the similarity matrix on spectral clustering performance, this study introduces a local adaptive fuzzy spectral clustering (FSC) approach. FSC integrates a fuzzy index into the similarity matrix, mitigating its influence and improving clustering results. Additionally, FSC simplifies the spectral clustering process by allowing direct use of the similarity matrix for clustering. The main contributions of this study are summarized as follows:

1. By introducing the fuzzy index, the clustering performance of FSC has low sensitivity to the similarity matrix of the dataset; that is, FSC depends only weakly on the similarity matrix and therefore adapts well. Moreover, the fuzzy parameter can be tuned to fit different datasets, which further improves the adaptability of FSC.

2. Owing to the idea of “soft partition”, FSC can effectively handle datasets with more complex structures. Compared with other spectral clustering methods, FSC achieves better clustering performance on high-dimensional and nonlinear datasets, which improves its practicability.

3. FSC adopts a new spectral clustering framework that adaptively optimizes the similarity matrix and the clustering structure at the same time. Hence the similarity matrix can be used directly for clustering, which simplifies the spectral clustering process.

4. Experimental results show that FSC outperforms other methods in most cases on both linear and complex datasets.

Related works

Normalized cut

Normalized cut (NC) [13, 25] is a classical spectral clustering method that focuses on the global features of a dataset rather than on local consistency. Let \(G=\{{\varvec{V}},{\varvec{E}}\}\) denote a graph, where \({\varvec{V}}\) is the set of nodes and \({\varvec{E}}\) is the set of edges. Suppose \(G\) can be divided into two disjoint sets \({\varvec{A}}\) and \({\varvec{B}}\). The cost of this partition is denoted as \(cut({\varvec{A}},{\varvec{B}})={\sum }_{{\varvec{u}}\in {\varvec{A}},{\varvec{v}}\in {\varvec{B}}}w({\varvec{u}},{\varvec{v}})\), where \(w({\varvec{u}},{\varvec{v}})\) is the weight of the edge between node \({\varvec{u}}\) and node \({\varvec{v}}\). The objective function of NC is defined as follows:
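For reference, the normalized cut objective in its standard textbook form, which Eq. (1) is assumed to follow, can be written with the quantities defined above as:

```latex
\[
Ncut(\boldsymbol{A},\boldsymbol{B})
  = \frac{cut(\boldsymbol{A},\boldsymbol{B})}{assoc(\boldsymbol{A},\boldsymbol{V})}
  + \frac{cut(\boldsymbol{A},\boldsymbol{B})}{assoc(\boldsymbol{B},\boldsymbol{V})}
\]
```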

where \(assoc({\varvec{A}},{\varvec{V}})={\sum }_{u\in {\varvec{A}},t\in {\varvec{V}}}w(u,t)\) is the total weight of the edges connecting the nodes of \({\varvec{A}}\) to all nodes of the graph, and \(assoc({\varvec{B}},{\varvec{V}})\) is defined analogously. To represent the weight of each edge, let \({\varvec{z}}=\left[{z}_{1},{z}_{2},...,{z}_{N}\right]\in {R}^{1\times N}\) be an indicator vector whose element \({z}_{i}=1\) if the i-th node belongs to the set \({\varvec{A}}\) and \({z}_{i}=-1\) otherwise. The weight of each edge can then be defined by the similarity between its two nodes, i.e.,

Let \({\varvec{D}}=({d}_{1},{d}_{2},...,{d}_{N})\) be the degree vector, whose i-th element \({d}_{i}\) is the sum of the similarities between the i-th node and all other nodes. Then Eq. (1) can be transformed into:

Thus optimizing Eq. (1) is equivalent to optimizing Eq. (4), and we have

According to [13], Eq. (4) is equivalent to:

In Eq. (5), the numerator \({y}^{T}(D-S)y\) represents the cut of the graph, where \(S\) is the similarity matrix and \(D\) is the degree matrix. The denominator \({y}^{T}Dy\) is a normalization factor that prevents trivial solutions. The constraint \({y}^{T}D1=0\) makes the solution vector \(y\) orthogonal to the \(D\)-weighted all-one vector, which rules out the trivial constant solution and keeps the partition from collapsing into a single cluster. The set to which each \({y}_{i}\) belongs restricts the values that the entries of \(y\) may take; these values are determined by the degrees \({d}_{i}\) and the indicator vector \(z\), where \({z}_{i}\) encodes the membership of the i-th node. Because this discrete constraint makes the problem hard to solve exactly, \(y\) is relaxed to take real values in practice.

Eq. (5) can then be further rewritten as

where \({{\varvec{L}}}^{\prime}={{\varvec{D}}}^{-\frac{1}{2}}({\varvec{D}}-{\varvec{W}}){{\varvec{D}}}^{-\frac{1}{2}}\), with \({\varvec{W}}\) the matrix of edge weights, and \({\varvec{H}}={{\varvec{D}}}^{-\frac{1}{2}}y\).

According to [13], the optimal solution of Eq. (6) is \({\varvec{U}}\in {{\varvec{R}}}^{N\times k}\), whose columns are the eigenvectors corresponding to the \(k\) smallest eigenvalues of \({{\varvec{L}}}^{\prime}\); each row of \({\varvec{U}}\) is then normalized to unit length. Finally, the K-means clustering method is adopted to partition the rows of \({\varvec{U}}\) into \(k\) clusters.
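The embedding step described above can be sketched as follows. This is a minimal illustration assuming NumPy and a symmetric weight matrix in which every node has positive degree; the function name `normalized_cut_embedding` and the toy graph are hypothetical, and the final K-means step on the rows of `U` is left out.

```python
import numpy as np

def normalized_cut_embedding(W, k):
    """Return U (N x k): eigenvectors of the k smallest eigenvalues of
    L' = D^{-1/2} (D - W) D^{-1/2}, with every row scaled to unit length."""
    W = np.asarray(W, dtype=float)
    d = W.sum(axis=1)                         # degree of each node
    d_inv_sqrt = 1.0 / np.sqrt(d)             # assumes all degrees are positive
    L = np.diag(d) - W                        # unnormalized Laplacian D - W
    L_norm = d_inv_sqrt[:, None] * L * d_inv_sqrt[None, :]
    eigvals, eigvecs = np.linalg.eigh(L_norm)  # eigenvalues in ascending order
    U = eigvecs[:, :k]
    return U / np.linalg.norm(U, axis=1, keepdims=True)

# Hypothetical toy graph: two disconnected triangles.
W = np.array([
    [0, 1, 1, 0, 0, 0],
    [1, 0, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 1, 0, 1],
    [0, 0, 0, 1, 1, 0],
], dtype=float)
U = normalized_cut_embedding(W, k=2)
```

On this toy graph the rows of `U` coincide within each triangle and are orthogonal across triangles, which is why a simple partitioning method can separate them afterwards.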

From the above description, the only input of NC is the similarity matrix of the dataset. Applying NC yields a matrix (i.e., \({{\varvec{L}}}^{\prime}\)) from which the clustering structure (i.e., \({\varvec{U}}\)) is generated. Finally, K-means is applied to the clustering structure to obtain the clustering results.

However, the clustering performance of NC therefore depends heavily on the input similarity matrix.

Fuzzy C-means clustering method

Fuzzy C-means clustering (FCM) [8] is a frequently utilized fuzzy clustering technique. It improves upon K-means by incorporating a fuzzy index, which enhances its ability to handle uncertainty. The objective function of FCM is defined as follows:
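In its standard form, which the objective here is assumed to follow, FCM minimizes the membership-weighted squared distances below. The symbols \(n\) (number of samples) and \(c\) (number of clusters) are borrowed from the notation used later for FSC:

```latex
\[
\min_{u_{ij},\,\boldsymbol{c}_j}\;
  \sum_{i=1}^{n}\sum_{j=1}^{c} u_{ij}^{\,m}\,
  \lVert \boldsymbol{x}_i - \boldsymbol{c}_j \rVert_2^{2}
\qquad \text{s.t.}\quad \sum_{j=1}^{c} u_{ij} = 1,\quad u_{ij}\ge 0 .
\]
```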

FCM optimizes \({u}_{ij}\) and \({{\varvec{c}}}_{j}\) iteratively, and the update rules are:

where \({u}_{ij}\) denotes the degree to which the sample \({{\varvec{x}}}_{i}\) belongs to the j-th cluster: the larger \({u}_{ij}\), the more likely \({{\varvec{x}}}_{i}\) belongs to the j-th cluster. \({{\varvec{c}}}_{j}\) is the j-th cluster center, and \(m\) is the fuzzy index, whose value adjusts the influence of \({u}_{ij}\) on the clustering performance. Experimental results show that the clustering performance of FCM can be improved by introducing the fuzzy index.
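The alternating updates of \({u}_{ij}\) and \({{\varvec{c}}}_{j}\) can be sketched in plain Python. The sketch assumes the standard FCM rules (centers as means weighted by \(u_{ij}^{m}\), memberships proportional to \(d_{ij}^{-2/(m-1)}\) with \(m>1\)); the function name `fcm`, the random initialization, and the toy points are illustrative choices rather than the authors' implementation.

```python
import math
import random

def fcm(points, c, m=2.0, iters=100, seed=0):
    """Standard fuzzy C-means; returns (memberships u, cluster centers). Requires m > 1."""
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])
    # Random initial memberships, each row normalized to sum to 1.
    u = []
    for _ in range(n):
        row = [rng.random() + 1e-9 for _ in range(c)]
        total = sum(row)
        u.append([v / total for v in row])
    centers = []
    for _ in range(iters):
        # Center update: mean of the samples weighted by u_ij^m.
        centers = []
        for j in range(c):
            w = [u[i][j] ** m for i in range(n)]
            total = sum(w)
            centers.append([sum(w[i] * points[i][t] for i in range(n)) / total
                            for t in range(dim)])
        # Membership update: u_ij proportional to d_ij^(-2/(m-1)).
        for i in range(n):
            dist = [math.dist(points[i], centers[j]) for j in range(c)]
            if min(dist) == 0.0:
                # The sample coincides with a center: assign crisp membership.
                hits = [j for j in range(c) if dist[j] == 0.0]
                u[i] = [1.0 / len(hits) if j in hits else 0.0 for j in range(c)]
            else:
                inv = [d ** (-2.0 / (m - 1.0)) for d in dist]
                total = sum(inv)
                u[i] = [v / total for v in inv]
    return u, centers

# Hypothetical toy data: two well-separated groups of three points.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
u, centers = fcm(points, c=2, m=2.0)
labels = [max(range(2), key=lambda j: row[j]) for row in u]
```

A larger `m` flattens the memberships toward uniform values, while `m` close to 1 makes them nearly crisp, which is the sense in which the fuzzy index adjusts the influence of \({u}_{ij}\).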

We next explain how to introduce the fuzzy index into spectral clustering while simultaneously simplifying the spectral clustering process.

A local adaptive fuzzy spectral clustering method

Objective function

Assume that \({\varvec{X}}\in {{\varvec{R}}}^{n\times d}\) represents the training sample set, where \(n\) is the total number of training samples and \(d\) is the number of dimensions, and let \(c\) be the number of clusters. Let \({\varvec{S}}\in {{\varvec{R}}}^{n\times n}\) denote the similarity matrix, with each element \({s}_{ij}\ (i,j=1,2,...,n)\) representing the similarity between the i-th and j-th samples. Similarly, \({\varvec{D}}\in {{\varvec{R}}}^{n\times n}\) is the distance matrix of \({\varvec{X}}\), with \({d}_{ij}\) the Euclidean distance between the i-th and j-th samples, \({d}_{ij}={\Vert {{\varvec{x}}}_{i}-{{\varvec{x}}}_{j}\Vert }_{2}\). By incorporating the fuzzy index into the local adaptive spectral clustering framework and referring to the objective function of FCM, we define the objective function of FSC as follows:

Compared with the classical spectral clustering method, the optimized similarity matrix \({\varvec{S}}\) becomes sparser, so that it can be used directly for partitioning without the K-means clustering method. In addition, the fuzzy index reduces the impact of the similarity matrix \({\varvec{S}}\) on the clustering results and improves the adaptability of spectral clustering.
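The distance matrix \({\varvec{D}}\) that enters the objective, with \({d}_{ij}={\Vert {{\varvec{x}}}_{i}-{{\varvec{x}}}_{j}\Vert }_{2}\), can be computed as in the short sketch below. It assumes NumPy; the function name `pairwise_euclidean` and the three sample points are hypothetical.

```python
import numpy as np

def pairwise_euclidean(X):
    """Return the n x n matrix of Euclidean distances between the rows of X."""
    X = np.asarray(X, dtype=float)
    diff = X[:, None, :] - X[None, :, :]      # shape (n, n, d)
    return np.sqrt((diff ** 2).sum(axis=2))

# Hypothetical samples lying on a line, spaced 5 units apart.
X = np.array([[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]])
D = pairwise_euclidean(X)
```

The broadcasted difference uses memory proportional to \(n^{2}d\), so for large datasets a blockwise computation would be preferable.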

Although introducing the fuzzy index improves the sparsity of the similarity matrix \({\varvec{S}}\), it cannot guarantee that the dataset is partitioned into exactly \(c\) clusters, because the number of connected components of \({\varvec{S}}\) is not necessarily equal to \(c\). To ensure that the optimal similarity matrix \({\varvec{S}}\) can be divided into exactly \(c\) clusters, following [14], we impose the rank constraint \(rank({{\varvec{L}}}_{{\varvec{S}}})=n-c\) on the Laplacian matrix of \({\varvec{S}}\). The new objective function is as follows:

According to [14], with the rank constraint imposed on the Laplacian matrix of \({\varvec{S}}\), the optimized \({\varvec{S}}\) has exactly \(c\) connected components, which equals the number of clusters. Compared with the objective function in [14], introducing the fuzzy index further improves the sparsity of the similarity matrix and reduces the sensitivity of the spectral clustering method to it, which improves the adaptivity of FSC.
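The link between the rank constraint and the number of connected components can be checked numerically. The sketch below assumes NumPy and the Laplacian convention \({{\varvec{L}}}_{{\varvec{S}}}={{\varvec{D}}}_{{\varvec{S}}}-({\varvec{S}}+{{\varvec{S}}}^{T})/2\) common in adaptive-neighbor methods, which may differ in detail from the authors' definition; the helper names and the toy matrices are hypothetical.

```python
import numpy as np

def laplacian(S):
    """Laplacian of the symmetrized similarity matrix (assumed convention)."""
    S = np.asarray(S, dtype=float)
    A = (S + S.T) / 2.0
    return np.diag(A.sum(axis=1)) - A

def num_components_from_rank(S, tol=1e-10):
    """n - rank(L_S), which equals the number of connected components of the graph."""
    L = laplacian(S)
    return L.shape[0] - np.linalg.matrix_rank(L, tol=tol)

# Hypothetical similarity matrix with two blocks (sizes 2 and 3).
S_two = np.array([
    [0.0, 1.0, 0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.5, 0.5],
    [0.0, 0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.5, 0.5, 0.0],
])
# Adding one weak link between the blocks merges them into a single component.
S_one = S_two.copy()
S_one[1, 2] = 0.3

components_two = num_components_from_rank(S_two)
components_one = num_components_from_rank(S_one)
```

When \(rank({{\varvec{L}}}_{{\varvec{S}}})=n-c\) holds, the clusters can be read off as the connected components of the graph, with no K-means step.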

Optimizing objective function

To optimize Eq. (11), we first transform it. Let \({\sigma }_{i}({{\varvec{L}}}_{{\varvec{S}}})\) denote the i-th smallest eigenvalue of \({{\varvec{L}}}_{{\varvec{S}}}\). Since \({{\varvec{L}}}_{{\varvec{S}}}\) is a positive semidefinite matrix, we have \({\sigma }_{i}({{\varvec{L}}}_{{\varvec{S}}})\ge 0\). For a sufficiently large \(\lambda\), Eq. (11) can be transformed as follows:

When \(\lambda\) is sufficiently large, the second term of Eq. (12) tends to zero during the optimization, so the constraint \(rank({{\varvec{L}}}_{{\varvec{S}}})=n-c\) is satisfied. According to Ky Fan’s theorem [26], we have

where \({\varvec{F}}\) is formed by the \(c\) eigenvectors corresponding to the \(c\) smallest eigenvalues of \({{\varvec{L}}}_{{\varvec{S}}}\). Eq. (12) can then be further transformed into:

Compared with Eq. (12), Eq. (14) is easier to optimize. We therefore propose an alternating optimization method for Eq. (14), whose main process is summarized as follows:

When \({\varvec{S}}\) is fixed, we optimize \({\varvec{F}}\), and Eq. (14) becomes

The optimal solution of Eq. (15) is formed by the \(c\) eigenvectors corresponding to the \(c\) smallest eigenvalues of \({{\varvec{L}}}_{{\varvec{S}}}\).
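The \({\varvec{F}}\)-update and the Ky Fan identity it rests on, namely that the minimum of \(Tr({{\varvec{F}}}^{T}{{\varvec{L}}}_{{\varvec{S}}}{\varvec{F}})\) over matrices with orthonormal columns equals the sum of the \(c\) smallest eigenvalues, can be illustrated as follows. The sketch assumes NumPy; the function name `update_F` and the random test graph are hypothetical.

```python
import numpy as np

def update_F(L, c):
    """Return (F, eigenvalues): the eigenvectors of the c smallest eigenvalues of L."""
    eigvals, eigvecs = np.linalg.eigh(L)      # eigenvalues in ascending order
    return eigvecs[:, :c], eigvals[:c]

# Hypothetical symmetric similarity graph on 6 nodes.
rng = np.random.default_rng(0)
A = rng.random((6, 6))
A = (A + A.T) / 2.0
np.fill_diagonal(A, 0.0)
L = np.diag(A.sum(axis=1)) - A                # graph Laplacian

F, smallest = update_F(L, c=2)
trace_value = np.trace(F.T @ L @ F)           # equals smallest.sum() up to rounding
```

Any other matrix with orthonormal columns gives a trace at least as large, so a full eigendecomposition per iteration is sufficient for this step.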

When \({\varvec{F}}\) is fixed, we optimize \({\varvec{S}}\), and Eq. (14) becomes

Note that \({\sum }_{i,j=1}^{n}{\Vert {{\varvec{f}}}_{i}-{{\varvec{f}}}_{j}\Vert }_{2}^{2}{s}_{ij}=2Tr({{\varvec{F}}}^{T}{{\varvec{L}}}_{{\varvec{S}}}{\varvec{F}})\), where \({\varvec{F}}\in {{\varvec{R}}}^{n\times c}\) and \({{\varvec{f}}}_{i}\) denotes the i-th row of \({\varvec{F}}\). Then Eq. (16) becomes:
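The trace identity used in this step can be verified numerically on random data. The check below assumes NumPy and the Laplacian convention \({{\varvec{L}}}_{{\varvec{S}}}={{\varvec{D}}}_{{\varvec{S}}}-({\varvec{S}}+{{\varvec{S}}}^{T})/2\); the matrices are arbitrary illustrative values, with \({{\varvec{f}}}_{i}\) taken as the i-th row of \({\varvec{F}}\).

```python
import numpy as np

rng = np.random.default_rng(1)
n, c = 5, 2
S = rng.random((n, n))                 # arbitrary nonnegative similarities
F = rng.standard_normal((n, c))        # row i plays the role of f_i

A = (S + S.T) / 2.0                    # symmetrized similarity
L_S = np.diag(A.sum(axis=1)) - A       # assumed Laplacian convention

# Left-hand side: sum over all pairs of ||f_i - f_j||^2 * s_ij.
lhs = sum(S[i, j] * np.sum((F[i] - F[j]) ** 2)
          for i in range(n) for j in range(n))
# Right-hand side: 2 * Tr(F^T L_S F).
rhs = 2.0 * np.trace(F.T @ L_S @ F)
```

Because the pairwise form separates over the rows of \({\varvec{S}}\), each row can be updated independently once \({\varvec{F}}\) is fixed.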

For the optimization of Eq. (17), we adopt the Lagrange multiplier method to solve the problem, and the Lagrange function is as follows:

where \({\alpha }_{i}\) is the Lagrange multiplier. Setting \(\frac{\partial J}{\partial {s}_{ij}}=0\), we obtain

Then we have:

Substituting Eq. (20) into the constraint \({\sum }_{j=1}^{n}{s}_{ij}=1\) yields \({\alpha }_{i}\); substituting \({\alpha }_{i}\) back into Eq. (20), we have:

Time complexity

The time complexity of FSC is dominated by Step 1, Step 2.1, and Step 2.2 of Algorithm 1. Step 1 initializes the similarity matrix \({\mathbf{S}}\), which requires \({\rm O}(n^{2} dk)\). Step 2.1 computes the eigendecomposition of \({\mathbf{L}}_{{\mathbf{S}}}\), which requires \({\rm O}(n^{2} d + n^{3} )\). Evaluating Eq. (21) costs \({\rm O}(mn^{2} dk)\), so Step 2.2 requires \({\rm O}(mn^{2} dk)\). Therefore, the total time complexity of Algorithm 1 is \({\rm O}(n^{2} dk + t(n^{2} d + n^{3} + mn^{2} dk))\), where \(t\) is the number of iterations. The time complexity of FSC thus depends on \(n\), \(d\), and \(k\).

Experiments and results

Experimental setting

To evaluate the clustering performance of FSC, three experiments were conducted on different datasets. The first experiment used four simple 2D simulation datasets, the second involved 10 commonly used UCI datasets, and the third focused on high-dimensional or large-sample datasets with complex structures. FSC was compared with K-means, FCM, NC, and adaptive neighbors clustering (CAN) [14] to assess its effectiveness and applicability across these datasets.

To evaluate FSC’s performance on 2D datasets, we generated four simulation datasets with varied structures: clear structures (SD1, SD4), uncertainty (SD2, SD4), and large scales (SD1, SD2). Detailed information is provided in Fig. 1.

To assess FSC’s practicality, we selected 10 complex datasets from the UCI repository and five high-dimensional datasets, including images and fonts. These datasets, with their higher dimensions and complex structures, provide a robust test for evaluating FSC’s effectiveness.

For the K-means method, the parameter k is the number of clusters. In the FCM (Fuzzy C-Means) method, the parameter m is set to 2. For the NC method, the parameter σ is set to 1. In the CAN method, the parameter γ is set to the average distance between all samples. In the FSC method, the parameter m is selected from {1.5, 2, 2.5, 3, 3.5} and the parameter β from {50, 60, 70, 80, 100}.

Evaluation criterion

In our experiments, two commonly used clustering indexes, clustering accuracy (ACC) [27] and normalized mutual information (NMI) [28], are selected as evaluation criteria. They are calculated as follows:

where \(n\) is the total number of training samples, \({l}_{i}\) and \({m}_{i}\) are the true label and the predicted label of the i-th sample, respectively, and \(\delta\) is the comparison function, which equals 1 when its two arguments agree and 0 otherwise. \({\varvec{X}}\) represents the true labels of the dataset and \({\varvec{Z}}\) represents the predicted labels, \(I({\varvec{X}},{\varvec{Z}})\) is the mutual information between \({\varvec{X}}\) and \({\varvec{Z}}\), and \(H({\varvec{X}})\) and \(H({\varvec{Z}})\) are the entropies of \({\varvec{X}}\) and \({\varvec{Z}}\), respectively.
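Both criteria can be sketched with the Python standard library. The sketch assumes that ACC is the fraction of samples matched under the best one-to-one mapping from predicted clusters to true classes, and that NMI is normalized as \(I({\varvec{X}},{\varvec{Z}})/\sqrt{H({\varvec{X}})H({\varvec{Z}})}\), following the convention of [28]; the authors' exact formulas may differ. The mapping is found by brute force over permutations, which is only practical for a small number of clusters, and the label vectors are hypothetical.

```python
import math
from collections import Counter
from itertools import permutations

def clustering_accuracy(true_labels, pred_labels):
    """ACC under the best one-to-one mapping of predicted clusters to true classes."""
    true_ids = sorted(set(true_labels))
    pred_ids = sorted(set(pred_labels))
    # Pad with None so that surplus predicted clusters can stay unmatched.
    targets = true_ids + [None] * max(0, len(pred_ids) - len(true_ids))
    best = 0
    for perm in permutations(targets, len(pred_ids)):
        mapping = dict(zip(pred_ids, perm))
        hits = sum(mapping[p] == t for t, p in zip(true_labels, pred_labels))
        best = max(best, hits)
    return best / len(true_labels)

def entropy(labels):
    n = len(labels)
    return -sum((k / n) * math.log(k / n) for k in Counter(labels).values())

def mutual_information(a, b):
    n = len(a)
    count_a, count_b, count_ab = Counter(a), Counter(b), Counter(zip(a, b))
    return sum((nab / n) * math.log(nab * n / (count_a[x] * count_b[y]))
               for (x, y), nab in count_ab.items())

def nmi(a, b):
    """Mutual information normalized by the geometric mean of the entropies."""
    ha, hb = entropy(a), entropy(b)
    if ha == 0.0 or hb == 0.0:
        return 1.0 if ha == hb else 0.0
    return mutual_information(a, b) / math.sqrt(ha * hb)

# Hypothetical labels: cluster ids are permuted and one sample is split off.
true_labels = [0, 0, 0, 1, 1, 1]
pred_labels = [1, 1, 1, 0, 0, 2]
acc = clustering_accuracy(true_labels, pred_labels)
score = nmi(true_labels, pred_labels)
```

Neither measure depends on how the cluster ids are named, so a pure relabeling of a perfect clustering scores 1 on both.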

Experimental results and conclusion

On simulation datasets

To verify FSC’s effectiveness on simulation datasets, SD1–SD4 were used. K-means, FCM, and NC were run 20 times to report average results, while CAN and FSC were run once. For FSC, parameter values were optimized based on the highest NMI value. Results are shown in Fig. 2.

Figure 2 shows that FSC performs well in clustering. For clearly shaped datasets, CAN and FSC outperform the other methods. On datasets with uncertainty, however, FSC’s use of a fuzzy index mitigates the impact of the similarity matrix, leading to better performance than CAN. Overall, FSC demonstrates strong clustering performance on both clear and uncertain datasets.

On the real datasets and high dimensional datasets

To verify FSC’s practicality on real datasets, we selected 10 datasets from the UCI database. To further verify its applicability to high-dimensional data, five high-dimensional datasets are added in this experiment. Experimental results are presented in Table 1.

Table 1 shows that FSC outperforms other methods, including K-means, FCM, and NC, on real datasets due to its ability to effectively capture local information. FSC, by incorporating fuzzy ideas, reduces the impact of the similarity matrix and enhances performance, particularly on high-dimensional datasets, compared to CAN.

Running speed analysis

To evaluate the running speed of all methods, we compare the time of a single run of each method on the real datasets (UCI datasets and high-dimensional datasets) under the optimal parameters. The experimental results are shown in Table 2.

For large-scale datasets (e.g., Coil, Mnist, MSRA, Palm, USPS), spectral clustering methods take more time because of the similarity matrix calculations. CAN and FSC need the most running time because they are solved by alternating optimization. FSC is slower than CAN because the fuzzy index adds to the computation.

Statistical analysis

In this experiment, we use the Friedman test with the Holm post-hoc test [29] to compare FSC’s clustering performance with that of the other methods in terms of ACC on the real and high-dimensional datasets. The Friedman test assesses overall statistical significance, while the Holm post-hoc test compares FSC with each of the other methods. Results are reported in Tables 3 and 4.

Table 3 shows the ranking of the methods based on the Friedman test, with lower values indicating better performance. FSC has the lowest ranking value and hence the best clustering performance. The null hypothesis, which assumes no significant difference among the methods, is rejected because the p-value (0.000001) is below 0.005, confirming significant differences among the methods.
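The ranking underlying the Friedman test can be sketched as below. On each dataset the methods are ranked by ACC (rank 1 for the highest score, ties sharing the mean rank), the ranks are averaged over datasets, and the chi-square form of the Friedman statistic is computed from the average ranks. The score table is made up purely for illustration and is not taken from Table 1; the function names are hypothetical.

```python
def average_ranks(scores):
    """scores[i][j] is the ACC of method j on dataset i; rank 1 is the best score."""
    n_methods = len(scores[0])
    totals = [0.0] * n_methods
    for row in scores:
        order = sorted(range(n_methods), key=lambda j: -row[j])
        ranks = [0.0] * n_methods
        pos = 0
        while pos < n_methods:
            end = pos
            # Extend the group while the next method has the same score (a tie).
            while end + 1 < n_methods and row[order[end + 1]] == row[order[pos]]:
                end += 1
            mean_rank = (pos + end) / 2.0 + 1.0
            for t in range(pos, end + 1):
                ranks[order[t]] = mean_rank
            pos = end + 1
        for j in range(n_methods):
            totals[j] += ranks[j]
    return [t / len(scores) for t in totals]

def friedman_statistic(avg_ranks, n_datasets):
    """Chi-square form: 12N / (k(k+1)) * (sum_j R_j^2 - k(k+1)^2 / 4)."""
    k = len(avg_ranks)
    return (12.0 * n_datasets / (k * (k + 1))
            * (sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4.0))

# Made-up ACC values: 4 datasets (rows) by 3 methods (columns).
scores = [
    [0.90, 0.80, 0.70],
    [0.85, 0.80, 0.60],
    [0.95, 0.70, 0.75],
    [0.88, 0.82, 0.81],
]
ranks = average_ranks(scores)
statistic = friedman_statistic(ranks, n_datasets=len(scores))
```

The statistic is then compared with a chi-square distribution with \(k-1\) degrees of freedom to obtain the p-value, and a post-hoc procedure such as Holm's is applied only if the null hypothesis is rejected.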

Table 4 presents the p-values and test statistics comparing FSC with the other methods. The null hypothesis of no significant difference is rejected if the p-value is below 0.005. The results in Table 4 show statistically significant differences between FSC and all the comparative methods.

Conclusions

To address the high sensitivity of clustering results to the input similarity matrix, this study introduces a local adaptive fuzzy spectral clustering method (FSC). FSC reduces the impact of the similarity matrix by incorporating a fuzzy index and simplifies the spectral clustering process by directly using the learnt similarity matrix. The fuzzy index also enhances FSC’s ability to handle uncertainties, improving clustering performance on complex datasets. Experimental results demonstrate that FSC performs better on both simulation and real datasets, including high-dimensional datasets.

Data availability

The code presented in this study is available upon request from the corresponding author.

References

1. Johnson, S. C. Hierarchical clustering schemes. Psychometrika 32(3), 241–254 (1967).

2. Crnkić, A. & Jaćimović, V. Data clustering based on quantum synchronization. Nat. Comput. 18(4), 907–911 (2019).

3. Bulychev, Y. G., Nasenkov, I. G. & Chepel, E. N. Cluster variational-selective method of passive location for triangulation measuring systems. J. Comput. Syst. Sci. Int. 57, 179–196 (2018).

4. Hartigan, J. A. & Wong, M. A. Algorithm AS 136: A k-means clustering algorithm. J. R. Stat. Soc. Ser. C (Appl. Stat.) 28(1), 100–108 (1979).

5. Dandea, V., Grigoras, G. & Neagu, B. C. K-means clustering-based data mining methodology to discover the prosumers’ energy features. In 2021 12th International symposium on advanced topics in electrical engineering (ATEE), 1–5 (2021).

6. Shweta, S. D. & Barve, S. S. External feature based quality evaluation of Tomato using K-means clustering and support vector classification. In 2021 5th International conference on computing methodologies and communication (ICCMC), 192–200 (2021).

7. Niu, B., Duan, Q., Liu, J., Tan, L. & Liu, Y. A population-based clustering technique using particle swarm optimization and k-means. Nat. Comput. 16, 45–59 (2017).

8. Bezdek, J. C. Pattern recognition with fuzzy objective function algorithms (Springer, 2013).

9. Liu, Z., Bai, X., Liu, H. & Zhang, Y. Multiple-surface-approximation-based FCM with interval memberships for bias correction and segmentation of brain MRI. IEEE Trans. Fuzzy Syst. 28(9), 2093–2106 (2019).

10. Bai, X., Zhang, Y., Liu, H. & Chen, Z. Similarity measure-based possibilistic FCM with label information for brain MRI segmentation. IEEE Trans. Cybern. 49(7), 2618–2630 (2018).

11. Zhu, L. F., Wang, J. S. & Wang, H. Y. A novel clustering validity function of FCM clustering algorithm. IEEE Access 7, 152289–152315 (2019).

12. Utomo, V. & Marutho, D. Measuring hybrid SC-FCM clustering with cluster validity index. In 2018 International seminar on research of information technology and intelligent systems (ISRITI), 322–326 (2018).

13. Ng, A., Jordan, M. & Weiss, Y. On spectral clustering: Analysis and an algorithm. Adv. Neural Inf. Process. Syst. 14 (2001).

14. Wang, Q., Qin, Z., Nie, F. & Li, X. Spectral embedded adaptive neighbors clustering. IEEE Trans. Neural Netw. Learn. Syst. 30(4), 1265–1271 (2018).

15. Borzov, A. B., Labunets, L. V. & Steshenko, V. B. Noncanonical spectral model of multidimensional uniform random fields. J. Comput. Syst. Sci. Int. 57, 874–889 (2018).

16. Huang, Z. et al. Dual self-paced multi-view clustering. Neural Netw. 140, 184–192 (2021).

17. Xie, Y. et al. Joint deep multi-view learning for image clustering. IEEE Trans. Knowl. Data Eng. 33(11), 3594–3606 (2020).

18. Yao, X., Chen, X., Matveev, I. A., Xue, H. & Yu, L. Semi-paired multiview clustering based on nonnegative matrix factorization. J. Comput. Syst. Sci. Int. 58, 579–594 (2019).

19. Daneshfar, F. et al. Elastic deep multi-view autoencoder with diversity embedding. Inf. Sci. 689, 121482 (2025).

20. Baek, M. & Kim, C. A review on spectral clustering and stochastic block models. J. Korean Stat. Soc. 50, 818–831 (2021).

21. Talukdar, N. A. & Halder, A. Partially supervised kernel induced rough fuzzy clustering for brain tissue segmentation. Pattern Recognit. Image Anal. 31, 91–102 (2021).

22. Ayed, A. B., Halima, M. B. & Alimi, A. M. Adaptive fuzzy exponent cluster ensemble system based feature selection and spectral clustering. In 2017 IEEE international conference on fuzzy systems (FUZZ-IEEE), 1–6 (2017).

23. Azarkasb, S. O. & Khasteh, S. H. A new approach for mapping of soccer robot agents position to real filed based on multi-core fuzzy clustering. In 2021 26th International Computer Conference, Computer Society of Iran (CSICC), 1–5 (2021).

24. Parvathavarthini, S., Naveenkumar, R. V. & Chowdry, S. Fuzzy clustering using Hybrid CSO-PSO search based on Community mobility during COVID 19 lockdown. In 2021 5th International conference on computing methodologies and communication (ICCMC), 1515–1519 (2021).

25. Dutta, A., Engels, J. & Hahn, M. Segmentation of laser point clouds in urban areas by a modified normalized cut method. IEEE Trans. Pattern Anal. Mach. Intell. 41(12), 3034–3047 (2018).

26. Fan, K. On a theorem of Weyl concerning eigenvalues of linear transformations I. Proc. Natl. Acad. Sci. 35(11), 652–655 (1949).

27. Ding, S., Zhang, N., Zhang, J., Xu, X. & Shi, Z. Unsupervised extreme learning machine with representational features. Int. J. Mach. Learn. Cybern. 8(2), 587–595 (2017).

28. Strehl, A. & Ghosh, J. Cluster ensembles: A knowledge reuse framework for combining multiple partitions. J. Mach. Learn. Res. 3, 583–617 (2003).

29. Demšar, J. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 7, 1–30 (2006).

Acknowledgements

We are grateful for the funding of the following projects: Huzhou key research and development project, Grant/Award Number: 2021ZD2003; Huzhou public welfare project, Grant/Award Numbers: 2021GZ30, 2022GZ36; Natural Science Foundation of Zhejiang Province, Grant/Award Number: LGF22C160002; National Natural Science Foundation of China, Grant/Award Number: 62205104.

Author information

Contributions

Q.Y.: Conceptualization, Formal analysis, Writing—original draft. L.J.: Data curation, Funding acquisition. Y.S.: Data curation, Formal analysis. J.H.: Methodology, Software. J.W. & X.Y.: editing, Supervision. M.H. & Y.Y.: Literature search. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yu, Q., Jia, L., Shao, Y. et al. A local adaptive fuzzy spectral clustering method for robust and practical clustering. Sci Rep 15, 7833 (2025). https://doi.org/10.1038/s41598-025-91812-4

DOI: https://doi.org/10.1038/s41598-025-91812-4