Abstract

Prognostics and health management (PHM) technology analyzes and diagnoses the state of equipment from large amounts of data, predicts potential failures, and informs maintenance and repair strategies that enhance equipment reliability, reduce repair costs, and prevent production interruptions. Traditional algorithms struggle to capture dependencies in long sequences efficiently and to process such sequences in parallel. To address these issues, we propose a remaining useful life (RUL) prediction model based on Mamba that incorporates learnable parameters and a multi-head attention mechanism. First, min-max scaling and exponential smoothing are used to preprocess the feature data in order to prevent gradient explosion and speed up the convergence of the model. Second, a learnable scaling parameter is introduced into the Residual block to adjust its output, and a multi-head attention mechanism is integrated into the Mamba block to operate on the data processed by the convolutional layer, thereby enhancing the expressiveness and accuracy of the model. Finally, the model is compared with current state-of-the-art methods on aero-engine and lithium-ion battery datasets. The experimental results show that the model outperforms these methods in RUL prediction tasks and generalizes better, which suggests that it can be applied as a general RUL prediction method in other fields.

Introduction

In modern industry, where reliability and safety are critical, remaining useful life (RUL) prediction has become a core component of prognostics and health management (PHM). It is widely applied to aero-engines, lithium-ion batteries, rolling bearings, and mining machinery and equipment, among others1. For instance, precise RUL prediction for aero-engines helps to assure flight safety in the presence of material fatigue, operational wear, and natural aging. Similarly, accurate RUL prediction for lithium-ion batteries helps to ensure the safe operation of electric vehicles, energy storage systems, and other applications, and thereby to control costs.

Currently, RUL prediction methods fall into two major groups: model-driven and data-driven approaches. Model-driven methods rely heavily on physical properties and engineering principles. They employ mathematical models to forecast the future performance of aero-engines, or they simulate the degradation process of lithium-ion batteries by constructing physical failure models or electrochemical models. The advantage of this approach is that it describes the degradation process relatively precisely and provides a solid theoretical foundation for predictions. However, the numerous components and intricate structure of aero-engine systems, together with the complex physicochemical changes within lithium-ion batteries, make the modeling process challenging. Furthermore, this methodology requires a high level of expertise and high-quality data.

Data-driven prediction methods offer an attractive alternative because they extract useful information from historical operational data rather than relying on an in-depth understanding of the internal physical mechanisms. Traditional data-driven machine learning algorithms, including decision trees (DT)2,3, relevance vector machines (RVM)4, random forests (RF)5, and extreme learning machines (ELM)6, can uncover hidden patterns and trends in extensive sensor data for predictive purposes. However, they suffer from drawbacks such as high computational cost, susceptibility to overfitting, and relatively low robustness. In response to these issues, Xiang et al.7 proposed an aero-engine RUL prediction model based on multicellular long short-term memory (LSTM) deep learning, which eases the difficulty of learning long-term data dependencies caused by vanishing gradients. Hong et al.8 proposed an LSTM-GRU hybrid neural network that employs a similarity measurement method to reduce the dimensionality of the parameters input into the network, and verified the robustness of the method by predicting real lithium-ion battery data under four seasonal operating conditions. Lyu et al.9 presented a predictive framework that integrates multiple LSTM networks with a two-stage random forest regression model. In this framework, processed multi-value features, variation features, statistical features, and parameter features are fed into multiple LSTM networks as time series inputs, and a two-stage random forest mechanism integrates and optimizes the state of health (SOH) estimate. The importance of features is then investigated for interpretability from the perspectives of individual and categorical features. Hu et al.10 proposed an auto-expanding cascaded long short-term memory (ACLSTM) prediction model that incorporates the aging trend of ATE life. This model connects multiple LSTM modules sequentially, with the prediction error of the preceding module continuously set as the training target of the subsequent module, thereby reducing errors to a certain extent.

However, the serial nature of LSTM makes it slow, and its capacity is constrained by the design of its internal state and forget gates. The Transformer, by contrast, introduces a self-attention mechanism that processes all elements of a sequence in parallel, which significantly improves processing speed, and it efficiently captures the dependency between any two positions in the sequence. Luo et al.11 used electrochemical impedance spectroscopy (EIS), which directly reflects the aging state of lithium-ion batteries, as a feature for SOH prediction. Because the EIS carries high-dimensional information, the self-attention mechanism of the Transformer is used to make the model focus on the influential features. Xu et al.12 proposed a Transformer-based multi-channel patch model that splits sequence data into a series of patches for model input. This model not only captures the fine-grained local features of the data but also reduces the complexity of the model. Zhang et al.13 pruned the Transformer model to eliminate as many redundant parameters as possible and improve computational efficiency. They also introduced the ASP mechanism into aero-engine RUL prediction for the first time, so as to obtain an optimal lightweight network model.

Although all of the above methods exploit the powerful temporal feature extraction capability of the Transformer and have achieved good results, the computation of the self-attention mechanism grows quadratically with the length of the input sequence, which leads to high complexity and low computational efficiency. To overcome these shortcomings of the Transformer, Albert Gu and Tri Dao proposed the Mamba model14. The model makes two major contributions: (1) a selective scanning algorithm, which enables the model to adapt dynamically to different inputs by making the parameters of the State Space Model (SSM) (the matrices B and C and the step size \(\:\varDelta\:\)) depend on the inputs; (2) a hardware-aware algorithm, which reduces the number of data transfers from DRAM to SRAM and improves computational efficiency.

Based on the baseline Mamba model, this paper proposes an RUL prediction framework that incorporates a multi-head attention mechanism and learnable scaling parameters, termed Mamba-Attention. Mamba-Attention addresses, to some extent, the inability of traditional algorithms to process data in parallel. Traditional algorithms also tend to suffer from greater interference when dealing with noisy data. The improved Mamba algorithm not only has lower complexity and higher computational efficiency, but also filters out noisy information effectively and captures more subtle and useful feature differences, thus improving the prediction accuracy and robustness of the model. Experimental results demonstrate that the framework performs well in RUL prediction tasks on both aero-engine and lithium-ion battery datasets. The key contributions of this paper are as follows:

(1) A learnable scaling parameter is introduced in Mamba’s ResidualBlock. It adjusts the block output while controlling the flow of gradients, which helps to avoid gradient explosion. In addition, a multi-head attention mechanism is integrated into MambaBlock and acts on the data processed by the convolutional layer to improve the expressiveness and accuracy of the model.

(2) In data processing, min-max scaling is used to bring all features into the range 0 to 1 and thus resolve inconsistent feature scales. Exponential smoothing is used to compute a weighted average of the data points and thus reduce noise in the time series data.

(3) The proposed RUL prediction method performs well on RUL prediction for aero-engines and lithium-ion batteries, indicating its potential as a versatile approach that could also be applied to rolling bearings, mining machinery, and so forth.

When maintenance work is guided by the RUL predictions of Mamba-Attention, potential failure risks can be identified in advance to ensure safety. At the same time, a maintenance strategy based on RUL prediction can schedule the timing and content of maintenance more precisely. This turns outfield maintenance into base-level maintenance and unplanned maintenance into planned maintenance, further reducing the cost of maintenance support.

The rest of the paper is organized as follows: Section 2 reviews related work. Section 3 outlines the proposed method. Section 4 analyzes the experimental results. Section 5 concludes the study.

Related work

Mamba algorithm

The mainstream algorithms for RUL prediction have progressed from CNN and RNN to the Transformer. Although the Transformer solves the difficulties that CNN and RNN have with capturing dependencies in long sequences and parallelizing sequence processing, its efficiency diminishes as sequences lengthen. Therefore, this paper introduces, for the first time, the Mamba model, which handles long sequences more efficiently, into the RUL prediction task for aero-engines; the Mamba-Attention framework is also applied to the RUL prediction task for lithium-ion batteries. As shown in Fig. 1, the Mamba model processes input information selectively through a selection mechanism and computes and stores results efficiently via parallel scanning, kernel fusion, and recomputation.

Although the State Space Model (SSM) fully considers the internal state of the system, which enables it to predict the future behavior of the system more accurately, it has a limitation: its parameters are fixed, so it cannot adapt to different inputs during inference. To address this shortcoming, Mamba adds a selection mechanism to the SSM, forming the Selective State Space Model. As shown in Fig. 1, the selection mechanism maps \(\:{X}_{t}\) to B, \(\:\varDelta\:\), and C. A and B are then discretized using \(\:\varDelta\:\), which enables information to be selectively propagated or forgotten along the sequence length dimension. Next, the discretized B is multiplied with \(\:{X}_{t}\), and the discretized A is multiplied with the previous state \(\:{h}_{t-1}\). Finally, these two products are added together to obtain the new state \(\:{h}_{t}\), which is then multiplied with C to obtain the output \(\:{y}_{t}\).
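The recurrence described above can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions, not the implementation used in the paper: the state is a single scalar, the weights `a`, `w_delta`, `w_b`, and `w_c` are hypothetical stand-ins for the learned projections, a softplus keeps the step size positive, and the discretization uses an exponential for A and a simplified first-order step for B.

```python
import math


def selective_scan(xs, a=-1.0, w_delta=0.5, w_b=1.0, w_c=1.0):
    """Run a scalar selective state space recurrence over a sequence.

    All weights are hypothetical placeholders for learned projections
    that map the current input x_t to delta, B and C.
    """
    h = 0.0  # initial hidden state
    ys = []
    for x in xs:
        # Selection mechanism: delta, B and C depend on the current input.
        delta = math.log1p(math.exp(w_delta * x))  # softplus, so delta > 0
        b = w_b * x
        c = w_c * x
        # Discretize A and B with the step size delta.
        a_bar = math.exp(delta * a)  # in (0, 1) when a < 0: controls forgetting
        b_bar = delta * b            # simplified first-order approximation
        # New state = discretized A * previous state + discretized B * input.
        h = a_bar * h + b_bar * x
        # Output = C * new state.
        ys.append(c * h)
    return ys


outputs = selective_scan([1.0, 0.5, -0.2, 0.0])
```

Because `a_bar` and `b_bar` are recomputed at every step from the input, a large step size lets the current input overwrite the state, while a small one mostly preserves the previous state. This is the sense in which information is propagated or forgotten selectively.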

To make the SSM efficiently computable on GPUs, Mamba borrows from the Flash Attention technique15: data are cached in SRAM during the parallel scan, which decreases the number of reads from main memory (HBM). Kernel fusion further reduces the number of memory accesses and lowers memory bandwidth consumption by fusing multiple operations into a single kernel. Meanwhile, the integration of H3 (the foundation of most SSM architectures) and the MLP16 (multilayer perceptron) block allows Mamba to inherit the linear scaling in sequence length of the state space model while also attaining the modeling capability of the Transformer.

Traditional algorithms (CNN, Transformer) have difficulty parallelizing the RUL prediction task efficiently, because it requires processing a large number of data samples. Therefore, this paper applies the Mamba model to RUL prediction for aero-engines and lithium-ion batteries, capitalizing on its low complexity and high computational efficiency.

Multi-head attention mechanism

The attention mechanism originates from the study of human vision, which scans the global image quickly to obtain the target area that needs to be focused on, also known as the focus of attention, and then devotes more attention to this area in order to obtain more detailed information while suppressing other useless information.

However, a single attention mechanism tends to focus excessively on specific parts of the input, which limits its ability to capture a broad range of useful information. To address this shortcoming, Vaswani et al.17 proposed the multi-head attention mechanism shown in Fig. 2. The left side illustrates a single scaled dot-product attention, while the right side shows the multi-head attention mechanism, which runs multiple scaled dot-product attentions in parallel. With multiple attention “heads” working in parallel, different heads can focus on different parts of the input and capture more diverse features and patterns. The presence of multiple heads also makes the model more stable during learning and better able to capture dependencies and complex structural information robustly.

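In a multi-head attention mechanism, the inputs are first linearly transformed to generate query (Q), key (K), and value (V) vectors for each head. Each head computes its attention scores and weighted output independently. Finally, the outputs of all heads are concatenated and linearly transformed again to generate the final output. Following Vaswani et al.17, the output of each head and the output of the multi-head mechanism are given by Eq. (1) and Eq. (2), respectively:

\[ \text{head}_i = \text{Attention}\left(QW_i^{Q}, KW_i^{K}, VW_i^{V}\right) = \text{softmax}\left(\frac{QW_i^{Q}\left(KW_i^{K}\right)^{\top}}{\sqrt{d_k}}\right)VW_i^{V} \quad (1) \]

\[ \text{MultiHead}(Q, K, V) = \text{Concat}\left(\text{head}_1, \ldots, \text{head}_h\right)W^{O} \quad (2) \]

where \(W_i^{Q}\), \(W_i^{K}\), and \(W_i^{V}\) are the projection matrices of the \(i\)-th head, \(W^{O}\) is the output projection matrix, \(d_k\) is the dimension of the key vectors, and \(h\) is the number of heads.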

Building on the algorithms above, this paper applies the multi-head attention mechanism to the data processed by the convolutional layer in Mamba, so as to capture the dependencies in the input sequences more comprehensively, enhance the feature representations, and improve both the expressive ability of the model and its flexibility in dealing with complex tasks.

Proposed method

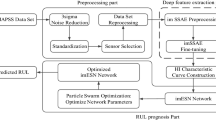

The Mamba model uses its selective state space mechanism to keep its time complexity at O(N) on long sequences, avoiding the computational cost that grows quadratically with sequence length in traditional models. However, it has drawbacks such as excessive focus on invalid features, interference from data outliers, and overfitting. To address these shortcomings, this paper proposes a fast and accurate RUL prediction method called Mamba-Attention, as shown in Fig. 3. To resolve inconsistent feature scales in datasets such as PRONOSTIA Bearing, Aero-engine, and Lithium-ion batteries, min-max scaling is used during data processing to bring all features into the range 0 to 1, which enables the model to treat each feature equally and eliminates differences in magnitude. To reduce the prediction interference caused by noise and fluctuations in the data, exponential smoothing is applied to the time series data. To further accelerate convergence and improve robustness, a learnable scaling parameter is introduced in the Residual block of the Mamba framework to adjust the weights of the residual connections dynamically. Finally, a multi-head attention mechanism is integrated into the Mamba block, where it acts on the data processed by the convolutional layer; this enhances the ability of the model to capture complex data relationships and improves prediction accuracy.

Learnable scaling parameters accelerate model convergence

The learnable scaling parameters mainly comprise the scaling factor \(\gamma\) and the shift factor \(\beta\). With \(Y\) denoting the rescaled output, they act on the normalized features as follows:

\[ Y = \gamma Z + \beta \]

where \(Z\) is the normalized feature value, the scaling factor \(\gamma\) adjusts the standard deviation of each feature, and the shift factor \(\beta\) adjusts the mean of each feature. In the rescaling phase, \(\gamma\) is multiplied with the normalized output data; in the shifting phase, an offset \(\beta\), which is learned during training, is added. With these two phases, the learnable scaling parameters can effectively reduce internal covariate shift, speed up the training process, and improve the generalization performance of the model.

The residual connection is the central part of a residual block: by connecting the input of the block directly to its output, it helps to alleviate vanishing or exploding gradients when training deep networks. In this paper, learnable scaling parameters are employed to let the network adjust the weight of the residual connection. Throughout training, the optimizer updates the parameter values according to the gradient of the loss function. When the parameter value exceeds 1, the weight of the residual connection is increased; when it falls below 1, the weight is decreased. This enhances the information flow and feature representation within the network.

To demonstrate more intuitively that the learnable scaling parameters accelerate convergence, this paper calculates and visualizes the loss values for the aero-engine dataset (see Sect. 4.2.2) and the lithium-ion battery dataset (see Sect. 4.3.2). In Fig. 4, the aero-engine dataset is shown on the left and the lithium-ion battery dataset on the right. The lower and upper borders of each box indicate the first quartile (lower quartile) and the third quartile (upper quartile) of the data, respectively, and the horizontal line within the box represents the median. The results indicate that the loss stabilizes after 60 epochs, suggesting that the model converges rapidly. This behavior is consistent with the scaling parameters stabilizing the gradients during backpropagation, which mitigates vanishing or exploding gradients, facilitates faster convergence to the optimal solution, and makes the training process more efficient.

In this paper, the learnable parameter “alpha” is employed to adjust the weights of the residual connections dynamically. As illustrated in Fig. 5, the data tensor input to the Mamba model is denoted as X, and the following four steps are executed:

Step 1: Normalize X to enhance data stability and eliminate magnitude discrepancies among different features, thereby facilitating the subsequent feature transformation process.

Step 2: The normalized data is fed through a mixer comprising multiple neural network layers (including convolutional, fully-connected, and activation layers) to extract useful features that enable the model to learn an effective representation from the original data.

Step 3: The output of the mixer is summed with the original input X to establish a residual connection. This connection allows the gradient to propagate directly to the shallow layers through the identity mapping, thereby accelerating the convergence of the model and enhancing its stability.

Step 4: The results of the residual connections undergo scaling by “alpha”, allowing the strength of the residual connections to be adjusted based on the task’s requirements. In turn, this aids the model in better balancing the contributions of the residual connections with those of the original inputs. By incorporating learnable scaling parameters, the ResidualBlock is able to capture more nuanced and useful feature distinctions, thereby enhancing the model’s predictive accuracy and robustness.
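The four steps can be illustrated with a small plain-Python sketch that operates on a single feature vector. It is a sketch under stated assumptions rather than the authors' code: the normalization is taken to be a simple layer normalization, `toy_mixer` is a hypothetical stand-in for the real mixer, and `alpha` is passed in as a plain number, whereas in a PyTorch model it would typically be registered as a trainable parameter (for example with `torch.nn.Parameter`).

```python
import math


def layer_norm(x, eps=1e-5):
    """Step 1: normalize a feature vector to zero mean and unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def toy_mixer(z):
    """Hypothetical stand-in for the mixer (conv, linear, activation layers)."""
    return [math.tanh(v) for v in z]


def residual_block(x, mixer=toy_mixer, alpha=1.0):
    z = layer_norm(x)                             # Step 1: normalize X
    m = mixer(z)                                  # Step 2: extract features
    summed = [mi + xi for mi, xi in zip(m, x)]    # Step 3: add the original input
    return [alpha * s for s in summed]            # Step 4: scale by alpha


x = [1.0, 2.0, 3.0]
weaker = residual_block(x, alpha=0.5)    # alpha < 1 shrinks the block output
stronger = residual_block(x, alpha=1.5)  # alpha > 1 amplifies it
```

With `alpha` fixed at 1 the function reduces to an ordinary pre-norm residual block, which makes the effect of the extra parameter easy to isolate in an ablation.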

Multi-head attention mechanism for enriching feature representation

The multi-head attention mechanism extends the attention mechanism by running multiple independent attention computations in parallel, each of which obtains an attention distribution in a different subspace of the input sequence. The input sequence is first passed through a linear transformation layer to produce three matrices: Query, Key, and Value. These matrices are then divided into multiple “heads”, each of which performs the attention computation independently. Within each head, scaled dot-product attention computes the similarity (attention scores) between the query and the key. The scores are normalized with a softmax function to obtain weights, which are then used to form a weighted sum of the values. This allows the model to focus on the important parts of the sequence. The outputs of all heads are concatenated into a wider matrix, and the final linear transformation integrates the features learned by the different heads into a richer and more comprehensive representation. In this paper, we use this capability to enrich the feature representation by applying multi-head attention to the output of the convolutional layer in MambaBlock.

We use four attention heads, each of which may focus on different features of the input data. For example, one head may focus on the data from the temperature sensor while another focuses on the data from the vibration sensor. The feature information extracted by the different heads is fused by concatenating the outputs of all heads into a wider matrix.
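To make the computation concrete, the following plain-Python sketch implements scaled dot-product attention with four heads on a small sequence. It illustrates the standard mechanism only; the sequence length, model width, and randomly initialized weight matrices are arbitrary assumptions, and a practical model would use a tensor library rather than nested lists.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention; returns (output, attention weights)."""
    d_k = len(q[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights


def split_heads(m, num_heads):
    """Split the feature dimension of m into num_heads equal slices."""
    d_head = len(m[0]) // num_heads
    return [[row[h * d_head:(h + 1) * d_head] for row in m] for h in range(num_heads)]


def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads=4):
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    heads = [
        attention(qh, kh, vh)[0]
        for qh, kh, vh in zip(
            split_heads(q, num_heads), split_heads(k, num_heads), split_heads(v, num_heads)
        )
    ]
    # Concatenate the head outputs position by position, then project.
    concat = [sum((head[t] for head in heads), []) for t in range(len(x))]
    return matmul(concat, w_o)


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
seq_len, d_model = 5, 8  # arbitrary sizes chosen for illustration
x = random_matrix(seq_len, d_model, rng)
w_q, w_k, w_v, w_o = (random_matrix(d_model, d_model, rng) for _ in range(4))
y = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads=4)
```

Each head here works on a slice of width 2, so the four heads together cover the full width of 8 before the output projection mixes them again.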

As shown in Fig. 6, this paper expands the data tensor X(B, L, D) to X(B, L, 2*ED) and then splits it into X(B, L, ED) and Z(B, L, ED). Next, X(B, L, ED) is convolved, and the result is fed to the multi-head attention mechanism to enrich the feature representation. Meanwhile, Z(B, L, ED) is left unprocessed and passed directly through the SiLU activation function. With this series of processing steps, the model captures more fine-grained feature information, which enhances its prediction accuracy and generalization ability.
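The two paths can be sketched for a single sequence (batch dimension omitted) as follows. This is a simplified illustration with several assumptions that go beyond the text: the convolution is causal and shares one hypothetical kernel across channels, the attention step is left as a pluggable function that defaults to the identity, the selective SSM that sits on the X path in Mamba is omitted, and the two paths are merged by elementwise gating as in the baseline Mamba block.

```python
import math


def silu(v):
    """SiLU activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


def causal_conv(x, kernel):
    """Causal 1-D convolution along the sequence; x has L rows of ED channels."""
    k = len(kernel)
    out = []
    for t in range(len(x)):
        row = []
        for c in range(len(x[0])):
            acc = 0.0
            for j in range(k):
                idx = t - (k - 1) + j  # only current and past positions
                if idx >= 0:
                    acc += kernel[j] * x[idx][c]
            row.append(acc)
        out.append(row)
    return out


def mamba_block_paths(xz, attention=lambda m: m, kernel=(0.25, 0.25, 0.5)):
    """xz has shape (L, 2*ED); returns the gated result of shape (L, ED)."""
    ed = len(xz[0]) // 2
    x = [row[:ed] for row in xz]                 # X(L, ED)
    z = [row[ed:] for row in xz]                 # Z(L, ED)
    x = attention(causal_conv(x, kernel))        # convolution, then attention
    z = [[silu(v) for v in row] for row in z]    # Z only passes through SiLU
    # Assumed merge step: elementwise gating of the X path by the Z path.
    return [[a * b for a, b in zip(xr, zr)] for xr, zr in zip(x, z)]


xz = [[1.0, 2.0, 0.5, -0.5], [0.0, 1.0, 1.0, 1.0], [2.0, 0.0, -1.0, 0.0]]
gated = mamba_block_paths(xz)
```

Passing a real attention function as the `attention` argument would place it exactly where the text locates it, directly after the convolution on the X path.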

A generalized method for data feature selection

This paper aims to propose a generalized RUL prediction methodology that performs rapid and accurate RUL prediction across various domains, including aero-engines, lithium-ion batteries, rolling bearings, and mining machinery and equipment. Although these datasets consist solely of data samples, inconsistent feature scales and outliers or noise in the samples can interfere with the subsequent prediction model. In response, this paper adopts min-max scaling to normalize all features in the dataset to the range 0–1. A smoothing process is then applied to the time series data to mitigate noise and fluctuations.

Specifically, as shown in Fig. 7, this paper presents a visualization of all the data and proceeds to eliminate the feature data that exhibit no discernible trend from the healthy stage to the failure stage. Subsequently, the data with invalid features removed is subjected to min-max scaling, unifying all feature scales to the range of 0–1, thereby eliminating dimensional discrepancies and enabling the model to treat each feature equitably. Furthermore, normalization accelerates model convergence and ensures more stable gradient values. Following the consistent feature scaling, an exponential smoothing treatment is applied. By iterating through all columns of the training and testing sets, columns meeting certain criteria (containing the character “s”) are identified as time-series data and subsequently subjected to smoothing. This smoothing process results in smoother transitions between data points, effectively reducing or eliminating random fluctuations and noise, thereby enhancing the visibility of long-term trends and periodic variations within the data.
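The scaling and smoothing steps can be sketched in plain Python as follows. The sketch makes several assumptions: the table is a dictionary of columns with hypothetical names, scaling statistics are computed per column on the data passed in, and smoothing uses the common recursion s_t = alpha * x_t + (1 - alpha) * s_{t-1}. How the smoothing parameter reported in the paper maps onto `alpha` in this recursion is not stated, so `alpha` is left as an explicit argument.

```python
def min_max_scale(values):
    """Rescale a column linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no trend; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def exponential_smoothing(values, alpha):
    """Simple exponential smoothing; alpha weights the newest observation."""
    if not values:
        return []
    smoothed = [values[0]]
    for v in values[1:]:
        smoothed.append(alpha * v + (1.0 - alpha) * smoothed[-1])
    return smoothed


def preprocess(table, alpha):
    """Scale every column, then smooth the columns whose name contains 's'."""
    result = {}
    for name, column in table.items():
        scaled = min_max_scale(column)
        result[name] = exponential_smoothing(scaled, alpha) if "s" in name else scaled
    return result


# Hypothetical column names: "s_2" is treated as a sensor series, "cycle" is not.
table = {"s_2": [10.0, 20.0, 30.0], "cycle": [1.0, 2.0, 3.0]}
processed = preprocess(table, alpha=0.5)
```

For this toy table the sensor column becomes `[0.0, 0.25, 0.625]` and the cycle column `[0.0, 0.5, 1.0]`: the smoothed series lags behind the scaled one, which is the price paid for suppressing short-term fluctuations.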

Experiments

Performance evaluation metrics

Experiments are run on a machine with an Intel(R) Xeon(R) Silver 4214R CPU @ 2.40 GHz × 48 and an NVIDIA GeForce RTX 3090 GPU, and are implemented in Python with PyTorch 2.0.1. The hyperparameters of the model are listed in Table 1. An Adam optimizer with a learning rate of 0.1 is used to optimize the model. The model is trained for 100 epochs with a weight decay of 0.00001, a representation dimension of 8, 2 layers, a smoothing parameter of 0.98, and 4 attention layers.
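For reproducibility, the settings listed above could be collected in a single configuration object. The sketch below is one possible layout; the class and field names are hypothetical, and only the values are taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters as reported in the text; field names are illustrative."""
    optimizer: str = "Adam"
    learning_rate: float = 0.1
    epochs: int = 100
    weight_decay: float = 1e-5
    representation_dim: int = 8
    num_layers: int = 2
    smoothing: float = 0.98
    attention_layers: int = 4  # reported as "attention layers" in the setup


config = TrainingConfig()
```

Freezing the dataclass prevents accidental changes to the settings during a run, so every logged result can be traced back to one immutable configuration.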

To evaluate both the fitting effect and the prediction performance of the model, the root mean squared error (RMSE) is selected as the primary evaluation metric. Its mathematical expression is:

\[ \text{RMSE} = \sqrt{\frac{1}{m}\sum_{i=1}^{m}\left(y_i - \hat{y}_i\right)^2} \]

where \(m\) is the total number of data samples in the training set, \(y_i\) is the true value, and \(\hat{y}_i\) is the predicted value. To further illustrate the accuracy of the model predictions, this paper also adopts the mean absolute error (MAE) as a second evaluation metric. The mathematical expression of MAE is:

\[ \text{MAE} = \frac{1}{m}\sum_{i=1}^{m}\left|y_i - \hat{y}_i\right| \]

Smaller RMSE and MAE values indicate more accurate predictions and a better fit. This, in turn, implies that the model can predict the RUL of various components and systems more accurately, such as aero-engines, lithium-ion batteries, rolling bearings, and mining machinery and equipment.
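Both metrics are simple to compute directly. The sketch below assumes the usual definitions of RMSE and MAE over paired true and predicted values; the RUL numbers are made up for illustration and do not come from the datasets used in this paper.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error over paired true and predicted values."""
    m = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / m)


def mae(y_true, y_pred):
    """Mean absolute error over paired true and predicted values."""
    m = len(y_true)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / m


y_true = [100.0, 50.0, 20.0]          # illustrative RUL values in cycles
spread_errors = [97.0, 54.0, 20.0]    # errors of 3, 4 and 0 cycles
single_outlier = [100.0, 50.0, 8.0]   # one error of 12 cycles

print(rmse(y_true, spread_errors), mae(y_true, spread_errors))
print(rmse(y_true, single_outlier), mae(y_true, single_outlier))
```

In the second case the MAE rises from about 2.33 to 4.0, while the RMSE rises from about 2.89 to about 6.93, which shows how the squared term makes RMSE more sensitive to a single large error.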

Aero-engine remaining useful life prediction experiment

Comparison with state-of-the-art baselines

To demonstrate the superiority of the proposed method, this paper compares its RMSE results with those obtained by a variety of state-of-the-art RUL prediction methods. The comparison methods comprise a CNN-based approach (1-DCNN-BiGRU18), a method driven by a self-attention mechanism (BiGRU-TSAM19), and a technique based on an MLP architecture (MLP-Mixer20).

1-DCNN-BiGRU

A novel parallel hybrid network is proposed, which comprises a one-dimensional convolutional neural network (1-DCNN) and a bidirectional gated recurrent unit (BiGRU), designed to extract spatial and temporal information from historical data, respectively.

BiGRU-TSAM

A bidirectional gated recurrent unit integrated with a temporal self-attention mechanism is proposed for RUL prediction. It addresses, to some extent, the discrepancy between the handling of reverse time series and the ability to reflect RUL prediction results at various moments.

MLP-Mixer

An architecture based entirely on Multilayer Perceptron (MLP) is capable of extracting multi-dimensional features from time series. In a comparative experiment, a 2-layer MLP-Mixer with 8 feature dimensions was utilized.

Mamba-Turbofan (ours)

In this paper, Mamba is used for the first time in RUL prediction, fully leveraging its capabilities to capture dependencies within long sequences and to process sequences in parallel.

Mamba-Attention (ours)

This paper builds upon Mamba-Turbofan by incorporating a multi-head attention mechanism and learnable scaling parameters, thereby improving prediction accuracy and generalization.

Description of the dataset

The dataset used in this experimental section is the aero-engine RUL prediction dataset published by NASA21, commonly referred to as C-MAPSS (Commercial Modular Aero-Propulsion System Simulation). The C-MAPSS dataset comprises four subsets (FD001 through FD004), which include engine IDs, the current cycle count for each engine, operational settings, sensor readings, and other pertinent information. It simulates an engine model with a thrust of 90,000 pounds under a diverse array of flight conditions, with altitudes ranging from sea level to 40,000 feet, Mach numbers from 0 to 0.90, and sea-level temperatures from −60 to 103 °F. The sample sizes of each subset are detailed in Table 2.

Comparative experimental results and analysis

A comparative experiment is used to evaluate the proposed methods on the four subsets. The RMSE values of the different methods are presented in Table 3 and visualized in Fig. 8. They show that, on the FD002 subset, the Mamba-Turbofan method proposed in this paper achieves better RMSE values than current mainstream methods. The Mamba-Attention method adds a learnable scaling parameter, which dynamically adjusts the weights of the residual connections, and a multi-head attention mechanism, which fuses the learned features into a richer and more comprehensive representation. Notably, the RMSE values of Mamba-Attention on the FD001, FD002, and FD004 subsets are markedly lower than those of current mainstream algorithms, with a maximum improvement of 35.7%. On the FD003 subset, which contains some outliers or noise, fitting them increased the model complexity to the point where random fluctuations in the data rather than the true signal were captured, and overfitting occurred. To mitigate the impact of outliers and noise, regularization techniques (L1 or L2) will be considered in future work; they limit model complexity by adding a penalty term related to the size of the model parameters to the loss function, which should further improve the generalization ability of the model.

Furthermore, this paper verifies the advantage of the proposed method in computational efficiency, which is particularly important for large-scale applications. In a quantitative benchmark, we compare the average time consumed by several methods in the RUL prediction task. The proposed method takes only 17.7 s on average, whereas the other three methods take 163.34, 176.44, and 170.55 s, respectively, which makes it roughly nine to ten times faster. This result demonstrates the ability of Mamba to process data in parallel, which significantly reduces the cost of model training and improves computational efficiency. If the proposed method is paired with more powerful hardware, the response time can be expected to shorten further, enabling fast and accurate RUL prediction within seconds and providing stronger support for real-time performance monitoring and prediction in large-scale applications.

While RMSE provides a precise measure of prediction accuracy, it tends to amplify the impact of outliers. Therefore, in this paper, we incorporate the MAE metric to supplement the evaluation of the model’s performance. RMSE and MAE complement each other, with RMSE highlighting the model’s sensitivity to extreme values and MAE emphasizing the model’s overall average performance. This complementary nature enables a more comprehensive assessment of the model’s strengths and weaknesses, facilitating more accurate judgments.

Table 4 presents the MAE values obtained under different methods, which are also visualized in Fig. 9. As the table shows, the presence of outliers or noise in the FD003 subset slightly diminishes the predictive capability of the model compared to current mainstream methods. Nevertheless, the MAE values of the proposed method on the FD001, FD002, and FD004 subsets are consistently lower than those of the state-of-the-art mainstream methods, further corroborating the model’s predictive performance. We also report accuracy and F1-score. In the same experimental setting, the average accuracy and F1-score over the four subsets are 80.79% and 82.84%, respectively, which reflects the stability and reliability of the model in recognizing samples of different categories.

Ablation experiments

This section validates and analyzes the contribution of each improved component on the aero-engine dataset through ablation experiments. Specifically, we compare the RMSE values obtained with Mamba-Turbofan, Mamba with learnable parameters, Mamba with a multi-head attention mechanism, and the final proposed method. Table 5 presents the ablation results for the four subsets when the number of epochs is set to 100.

The results in the table show that, consistent with the comparative analysis, for subsets FD001, FD002, and FD004 both the learnable scaling parameter and the multi-head attention mechanism contributed positively to RUL prediction, achieving an improvement of up to 10.5% relative to the baseline model. For subset FD003, however, the inclusion of the learnable scaling parameter reduced effectiveness under certain conditions. Analysis indicates that this addition increased the model complexity and led to overfitting.
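For reference, a relative improvement of this kind is commonly computed as the reduction in RMSE divided by the baseline RMSE. The sketch below assumes that definition; the function name and the RMSE values are hypothetical and are not taken from Table 5.

```python
def relative_improvement(baseline_rmse, new_rmse):
    """Percentage reduction in RMSE relative to the baseline.

    Positive values mean the new configuration is better (lower RMSE);
    negative values mean it is worse, as can happen under overfitting.
    """
    if baseline_rmse <= 0:
        raise ValueError("baseline_rmse must be positive")
    return 100.0 * (baseline_rmse - new_rmse) / baseline_rmse


# Hypothetical RMSE values for illustration only.
better = relative_improvement(20.0, 18.0)   # lower RMSE than the baseline
worse = relative_improvement(20.0, 21.0)    # higher RMSE than the baseline
print(better, worse)
```

A negative result corresponds to the FD003 situation described above, where an added component increases the error instead of reducing it.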

Lithium-ion battery remaining useful life prediction experiment

Comparison with state-of-the-art baselines

In this paper, the proposed Mamba-Attention model is compared with current state-of-the-art methods on the same lithium-ion battery dataset; the comparison methods are PINN22 and Mamba-Lithium23.

PINN

A model fusion scheme based on a physics-informed neural network (PINN) is proposed to simulate the degradation dynamics of lithium-ion batteries more efficiently.

Mamba-Lithium

The Mamba algorithm is used to capture the complex aging and charging states of lithium-ion batteries more effectively.

Description of the dataset

The dataset used for the experiments in this section is the dataset of 124 commercial LiFePO4/graphite batteries cycled under fast charging conditions, disclosed by Severson et al.24 in Nature Energy. A123 APR18650M1A cells with a nominal capacity of 1.1 Ah and a nominal voltage of 3.3 V were used, and all cells were tested in a four-point contact cylindrical fixture on a 48-channel Arbin LBT battery test cycler. In this paper, batteries 91, 100, and 124 at 30 °C were randomly selected as Case A, while batteries 101, 108, 116, and 120 were designated as Case B for RUL and SOH (State of Health) prediction. Additionally, the State of Charge (SOC) prediction was performed using the battery states recorded at 0, 10, 25, 30, 40, and 50 °C. The details of Case A and Case B are presented in (Table 6).

Comparative experimental results and analysis

In this section, a comparative experimental approach is again employed to compare the RMSE results obtained in the RUL prediction task on the Case A and Case B datasets, as shown in Table 7. Additionally, Table 8 compares the RMSE results of different methods on the SOC (State of Charge) prediction task using the datasets collected at temperatures of 0, 25, and 50 °C, which are visualized in Fig. 10.

As evident from the tables, the proposed method not only achieves lower RMSE values for multiple batteries at a constant temperature of 30 °C compared to current state-of-the-art methods, but also maintains strong performance across different temperatures. This demonstrates that Mamba-Attention is robust in this task and generalizes well across diverse tasks. Although the results at 25 °C are slightly inferior to those of the Mamba-Lithium method, further experiments indicate that the proposed model does not fully learn the features within 100 epochs. When the number of epochs is increased to 200, 500, and 1000, the corresponding SOC RMSE results improve to 0.0140, 0.0131, and 0.0114, respectively, all of which outperform the compared methods.

Ablation experiments

SOH is defined as the ratio of the current battery capacity to its initial (or rated) capacity, serving as a quantitative measure of the battery’s health status. As the battery is used and ages, SOH gradually declines. To examine the generalization capability of the proposed method, this paper compares the RMSE achieved on the SOH prediction task for both the Case A and Case B datasets by four model configurations: Mamba, Mamba with learnable parameters, Mamba augmented with a multi-head attention mechanism, and the proposed method. The contribution of each improved component is thus validated through ablation experiments on the lithium-ion battery dataset. Table 9 presents the results of the ablation experiments with the number of epochs set to 100.
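The SOH definition above can be written as a one-line computation. In the sketch below, the rated capacity of 1.1 Ah is the nominal capacity of the cells described in the dataset section; the function name and the measured capacities are hypothetical and only illustrate the declining trend.

```python
def state_of_health(current_capacity_ah, rated_capacity_ah):
    """SOH as the ratio of current capacity to initial (or rated) capacity."""
    if rated_capacity_ah <= 0:
        raise ValueError("rated_capacity_ah must be positive")
    return current_capacity_ah / rated_capacity_ah


RATED_CAPACITY_AH = 1.1  # nominal capacity of the cells in the dataset

# Hypothetical discharge capacities (Ah) measured at increasing cycle counts.
measured_ah = [1.1, 1.045, 0.99, 0.88]

soh_curve = [state_of_health(c, RATED_CAPACITY_AH) for c in measured_ah]
print([round(s, 3) for s in soh_curve])
```

An SOH of 1.0 corresponds to a fresh cell, and the value falls as capacity fades with cycling.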

The results in the table show that, for both Case A and Case B, adding the learnable scaling parameter and the multi-head attention mechanism reduced the RMSE, with a maximum performance improvement of 36.8%. This confirms the positive contribution of these mechanisms to the model’s performance.

Conclusion

In this paper, an RUL prediction framework termed Mamba-Attention is proposed. The framework is based on the Mamba model and incorporates a learnable scaling parameter and a multi-head attention mechanism. It leverages Mamba’s ability to capture dependencies in long sequences and to process these sequences in parallel, thereby reducing training costs and improving computational efficiency. In addition, the learnable scaling parameter and the multi-head attention mechanism enhance the model’s interpretability and prediction accuracy. In comparisons with current state-of-the-art baseline methods on two publicly available datasets, the proposed method demonstrates superior generalization and robustness. It can therefore serve as a general RUL prediction method for various practical applications, including rolling bearings and mining machinery and equipment, and has promising application prospects.

However, the inherently 1D nature of the selective scanning technique in the Mamba model makes its application to 2D or higher-dimensional data challenging. In future work, we will introduce a hierarchical scanning approach that divides the data into a hierarchical structure and applies the scanning technique at each level. We will also combine it with spatio-temporal scanning methods that account for changes in the data at different temporal and spatial scales, in order to capture the characteristics of high-dimensional data more comprehensively and to propose a new scanning scheme that can effectively handle high-dimensional non-causal data.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Eknath, K. G. & Diwakar, G. Prediction of remaining useful life of rolling bearing using hybrid DCNN-BiGRU model. J. Vib. Eng. Technol. 11, 997–1010 (2023).

Kundu, P., Darpe, A. K. & Kulkarni, M. S. An ensemble decision tree methodology for remaining useful life prediction of spur gears under natural pitting progression. Struct. Health Monit. 19, 854–872 (2020).

Bortnowski, P., Matla, J., Sierzputowski, G., Włostowski, R. & Wróbel, R. Prediction of toxic compounds emissions in exhaust gases based on engine vibration and bayesian optimized decision trees. Measurement 115018 (2024).

Li, L., Xu, J. & Li, J. Estimating remaining useful life of rotating machinery using relevance vector machine and deep learning network. Eng. Fail. Anal. 146, 107125 (2023).

Zhao, L., Zhu, Y. & Zhao, T. Deep learning-based remaining useful life prediction method with transformer module and random forest. Mathematics 10, 2921 (2022).

Berghout, T., Mouss, L., Kadri, O., Saïdi, L. & Benbouzid, M. Aircraft engines remaining useful life prediction with an adaptive denoising online sequential extreme learning machine. Eng. Appl. Artif. Intel. 96, 103936 (2020).

Xiang, S., Qin, Y., Luo, J., Pu, H. & Tang, B. Multicellular LSTM-based deep learning model for aero-engine remaining useful life prediction. Reliab. Eng. Syst. Safe 216, 107927 (2021).

Hong, J. et al. Multi-forword-step state of charge prediction for real-world electric vehicles battery systems using a novel LSTM-GRU hybrid neural network. Etransportation 20, 100322 (2024).

Lyu, G., Zhang, H. & Miao, Q. An adaptive and interpretable SOH estimation method for lithium-ion batteries based-on relaxation voltage cross-scale features and multi-LSTM-RFR2. Energy 132167 (2024).

Hu, L., He, X. & Yin, L. Remaining useful life prediction method combining the life variation laws of aero-turbofan engine and auto-expandable cascaded LSTM model. Appl. Soft Comput. 147, 110836 (2023).

Luo, K., Zheng, H. & Shi, Z. A simple feature extraction method for estimating the whole life cycle state of health of lithium-ion batteries using transformer-based neural network. J. Power Sour. 576, 233139 (2023).

Xu, G. et al. A transformer-based model for earthen ruins climate prediction. Tsinghua Sci. Technol. (2024).

Zhang, X. et al. PAOLTransformer: Pruning-adaptive optimal lightweight transformer model for aero-engine remaining useful life prediction. Reliab. Eng. Syst. Safe 240, 109605 (2023).

Gu, A. & Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. ArXiv 2312.00752 (2023).

Yang, Q., Bai, Y., Liu, F. & Zhang, W. Integrated visual transformer and flash attention for lip-to-speech generation GAN. Sci. Rep. 14, 4525 (2024).

Fan, C., Lin, H., Qiu, Y. & Yang, L. DAGM-fusion: A dual-path CT-MRI image fusion model based multi-axial gated MLP. Comput. Biol. Med. 155, 106620 (2023).

Vaswani, A. et al. Attention is all you need. Adv. Neural. Inf. Process. Syst. (2017).

Zhang, J. et al. A parallel hybrid neural network with integration of spatial and temporal features for remaining useful life prediction in prognostics. IEEE T Instrum. Meas. 72, 1–12 (2022).

Zhang, J. et al. Prediction of remaining useful life based on bidirectional gated recurrent unit with temporal self-attention mechanism. Reliab. Eng. Syst. Safe 221, 108297 (2022).

Tolstikhin, I. O. et al. Mlp-mixer: an all-mlp architecture for vision. Adv. Neural. Inf. Process. Syst. 34, 24261–24272 (2021).

Saxena, A., Goebel, K., Simon, D. & Eklund, N. Damage propagation modeling for aircraft engine run-to-failure simulation. 2008 International Conference on Prognostics and Health Management 1–9 (IEEE, 2008).

Wen, P. et al. Physics-informed neural networks for prognostics and health management of lithium-ion batteries. IEEE T Intell. Vehicl (2023).

Shi, Z. MambaLithium: Selective state space model for remaining-useful-life, state-of-health, and state-of-charge estimation of lithium-ion batteries. ArXiv 2403.05430 (2024).

Severson, K. A. et al. Data-driven prediction of battery cycle life before capacity degradation. Nat. Energy 4, 383–391 (2019).

Acknowledgements

This work has been partially supported by the Fundamental Research Fund for the Provincial Universities in Heilongjiang Province (Innovation Team for Coal Mine Informatization and Intelligence 2024-KYYWF-1102) and the Scientific and technological key project of “Revealing the List and Taking Command” in Heilongjiang Province (2021ZXJ02A02).

Author information

Contributions

Fugang Liu: Methodology, Writing – original draft, Funding acquisition. Shenyang Liu: Writing – review and editing, Software, Validation, Visualization. Yuan Chai: Conceptualization. Yongtao Zhu: Data curation.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, F., Liu, S., Chai, Y. et al. Enhanced Mamba model with multi-head attention mechanism and learnable scaling parameters for remaining useful life prediction. Sci Rep 15, 7178 (2025). https://doi.org/10.1038/s41598-025-91815-1
