Abstract

Sleep posture, a vital aspect of sleep wellness, has become a crucial focus in sleep medicine. Studies show that the supine posture is associated with a higher occurrence of obstructive sleep apnea, while the lateral posture may reduce it. For bedridden patients, frequent posture changes are essential to prevent ulcers and bedsores, highlighting the importance of monitoring sleep posture. This paper introduces CHMMConvScaleNet, a novel method for sleep posture recognition using pressure signals from a small number of piezoelectric ceramic sensors. It employs a Movement Artifact and Rollover Identification (MARI) module to detect critical rollover events and extracts multi-scale spatiotemporal features using six convolutional sub-networks that process adjacent segments of different lengths. To further improve performance, a Continuous Hidden Markov Model (CHMM) that incorporates rollover features is introduced. We collected continuous real sleep data from 22 participants, yielding a total of 8583 samples from a 32-sensor array. Experiments show that CHMMConvScaleNet achieves a recall of 92.91%, precision of 91.87%, and accuracy of 93.41%, comparable to state-of-the-art methods, which require ten times as many sensors to achieve a slightly higher accuracy of 96.90% on non-continuous datasets. CHMMConvScaleNet therefore shows potential for home sleep monitoring with portable devices.


Introduction

Sleep posture, closely related to sleep quality, has become a crucial focus in sleep medicine. Studies associate the supine posture with increased risk of obstructive sleep apnea (OSA), while a lateral posture may help reduce the severity of OSA. Additionally, frequent posture changes are essential for bedridden patients to prevent ulcers and bedsores1, highlighting the need for effective sleep posture monitoring. Although Polysomnography (PSG) is the gold standard for sleep posture assessment2, its high cost, time consumption, and need for professional oversight limit its practicality for continuous monitoring.

Home sleep test (HST) devices offer practical, cost-effective solutions for monitoring sleep conditions and assessing posture at home. HST devices can be categorized as wearable or non-wearable. Wearable devices, such as gyroscope-equipped chest straps3 and accelerometer-integrated smartbands4, detect sleep posture and monitor heart rate, but they may produce high false-positive rates and cause discomfort because they can only be placed on limited areas (the upper limb or thoracic region5). Non-wearable devices, including camera-based, radar-based, and pressure-based systems, are more suitable for continuous monitoring. Video systems using RGB or RGB-depth images capture detailed postures6,7 but are sensitive to lighting and raise privacy concerns. Radar-based devices, such as the BodyCompass system from MIT8, detect posture changes via signal reflections9,10. While effective during long-term monitoring, their performance is limited in short-term scenarios. Recent studies have explored various pressure-sensor-based techniques, such as the pressure-sensor-based smart mattress system11, that use pressure distribution maps to detect sleep postures12,13. These wear-free solutions are well suited to long-term monitoring and are increasingly favored for widespread use. However, challenges remain in handling motion artifacts and ensuring continuous posture detection.

Traditional pressure-sensor-based devices use dense sensor arrays embedded in mattresses to map body pressure distribution14,15,16, employing machine learning or deep learning to identify sleep postures. Although they achieve over 90% accuracy during resting states, they depend heavily on image stability and high resolution, requiring large mattresses with thousands of sensors. Accurate data can only be obtained in controlled laboratory settings with fixed postures, which limits practical applications. To address these challenges, we previously proposed the S3CNN network17, which integrates dynamic physiological features with static cardiopulmonary (CR) maps and achieved 92.9% accuracy using a \(58 \times 28\) cm mattress with 32 piezoelectric ceramic sensors. However, this method has two major limitations: it cannot handle motion artifacts and body movements, which leads to 13% data loss, and it cannot track long-term sleep posture patterns, so it does not reflect realistic sleep behavior. Thus, current technology still faces obstacles to reliable long-term monitoring in home environments.

In this study, we propose CHMMConvScaleNet, a novel method for detecting sleep postures in continuous scenarios. CHMMConvScaleNet integrates four components: the Movement Artifact and Rollover Identification (MARI) module, a multi-scale CNN module, a Squeeze-and-Excitation (SE) module, and a Continuous Hidden Markov Model (CHMM) module. To handle body movements and rollovers, MARI identifies these events as critical nodes that improve the continuity and accuracy of posture detection. The multi-scale CNN module consists of six sub-networks: three 1D convolutional networks for temporal features and three 2D convolutional networks for spatial features. Their outputs are aligned and stacked along the channel dimension, integrating spatiotemporal information to make recognition more continuous and robust. The SE module then reweights the channels of the multi-scale feature maps. To capture the long-term continuity of sleep patterns, the CHMM models posture stability and transitions between postures. It defines six hidden states, estimates its parameters with the Baum–Welch algorithm, and decodes the most likely state path with the Viterbi algorithm, using rollover events and the initial posture estimates from the CNN. In testing, CHMMConvScaleNet achieves an accuracy of 93.41%, comparable to state-of-the-art methods that use far more sensors. The main contributions of this article are as follows:

- The MARI module identifies motion artifacts and rollovers, providing critical node features to optimize posture detection.
- To address severe motion artifacts, we incorporate information from adjacent segments to enhance detection reliability.
- The multi-scale CNN module integrates temporal and spatial features using three Conv1D and three Conv2D sub-networks, enhancing long-term posture tracking.
- By integrating a CHMM, our method improves detection performance, particularly around rollovers on continuous data.

Hardware construction

We used piezoelectric ceramics in our monitoring mattress for their flat shape, high sensitivity, low cost, and excellent high-frequency response. Each sensor consists of a circular piezoelectric element attached to a brass plate (Fig. 1a). When exposed to changing forces, it generates a faint current due to the piezoelectric effect18. To ensure stable connectivity, each sensor is mounted on a 3 cm by 5 cm printed circuit board. The flexible array forms a \(4 \times 8\) matrix with 32 channels (Fig. 1b), covering an area of \(58 \times 28\) cm, which matches an adult’s chest width for detailed visualization of vibration distribution.

The readout circuit (Fig. 1c) processes the signals from this array and consists of an analog front end, a low-pass filter, an analog-to-digital converter, and a wireless module. Because the piezoelectric constant \(d_{33}\) is relatively small (<600 pC/N), the sensor generates only a weak micro-current under periodic pressure. The material responds quickly to high-frequency vibrations, producing a current proportional to the applied force. At lower frequencies or during non-periodic vibrations, the response slows due to hysteresis, causing nonlinear distortion between output voltage and pressure19. This design captures vital physiological signals, such as breathing and heartbeat, while suppressing interference from non-target activities such as body movements, which keeps signal quality high.

Methods

Signal processing

This study was approved by the Institutional Review Board (IRB) of Peking Union Medical College (Approval Number: JS-2089), and all participants provided written informed consent. Our device is only 1 cm thick and is placed under a 15 cm mattress, so it does not interfere with patient care or ongoing medical treatment. The collected raw signals are a mixture of various physiological activities, so the individual physiological components must be separated from the aggregate signal before cardiopulmonary features can be analyzed. During a resting state, a single respiratory cycle spans approximately 2–5 s, with a frequency range of 0.1–0.8 Hz. Cardiovascular pressure signals, known as ballistocardiograms (BCG), include two main parts: the envelope waveform and the IJKL waves. The envelope waveform period matches the heartbeat cycle (roughly 0.6–1.2 s). The IJKL waves, associated with heart contractions and blood flow, occur at approximately five times the heartbeat frequency20 (as discussed in our previous work17), placing BCG signals within the 0.8–15 Hz range21. We used a Chebyshev Type I finite impulse response (FIR) band-pass filter of order 999 to isolate these signals because of its steep transition band. The deviation signal was obtained by subtracting the respiratory and cardiac components from the composite signal. Details of the decomposition are provided in the Supplementary Materials.
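The band split described above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the 100 Hz sampling rate stated later in the Multi-scale CNN section, and because Chebyshev Type I is conventionally an IIR design, it substitutes a Dolph-Chebyshev-windowed FIR of order 999 (1000 taps) applied with zero-phase `filtfilt`. The 60 dB window attenuation and the helper names (`bandpass_fir`, `decompose`, `energy_share`) are hypothetical. The energy shares it prints can be compared with the roughly 90% / 5–8% / <3% split reported for resting signals.

```python
import numpy as np
from scipy.signal import filtfilt, firwin

FS = 100  # Hz, the raw sampling rate given in the Multi-scale CNN section


def bandpass_fir(low_hz, high_hz, fs=FS, numtaps=1000, attenuation_db=60):
    """Linear-phase FIR band-pass of order numtaps - 1 (999 by default).

    Assumption: a Dolph-Chebyshev window stands in for the paper's
    "Chebyshev Type I FIR" design; attenuation_db is hypothetical.
    """
    return firwin(numtaps, [low_hz, high_hz], pass_zero=False, fs=fs,
                  window=("chebwin", attenuation_db))


def decompose(raw, fs=FS):
    """Split one channel into respiration, BCG and deviation components."""
    raw = np.asarray(raw, dtype=float)
    # filtfilt runs the FIR forward and backward (zero phase delay); the input
    # must be longer than 3 * numtaps = 3000 samples, i.e. more than 30 s.
    resp = filtfilt(bandpass_fir(0.1, 0.8, fs), [1.0], raw)
    bcg = filtfilt(bandpass_fir(0.8, 15.0, fs), [1.0], raw)
    deviation = raw - resp - bcg  # residual after removing both bands
    return resp, bcg, deviation


def energy_share(component, raw):
    """Fraction of the raw signal's energy carried by one component."""
    return float(np.sum(np.square(component)) / np.sum(np.square(raw)))


# Usage on a synthetic one-minute channel: 0.3 Hz "breathing" + 5 Hz "BCG".
t = np.arange(60 * FS) / FS
raw = np.sin(2 * np.pi * 0.3 * t) + 0.3 * np.sin(2 * np.pi * 5.0 * t)
resp, bcg, dev = decompose(raw)
print([round(energy_share(c, raw), 3) for c in (resp, bcg, dev)])
```

Zero-phase filtering keeps peak positions aligned with the raw signal, which matters for the amplitude-interval features extracted later.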

The respiratory signal shows significant periodicity, accounting for about 90% of the original signal’s energy. The BCG signal, including both its envelope and subwaves, also exhibits stable periodicity and contributes 5–8% of the total signal energy. In contrast, the deviation signal lacks significant periodicity and accounts for less than 3% of the total energy. Unlike undisturbed signals, disturbed signals are primarily influenced by movements such as rollover events or other bodily activities. These movements mask the subtle physiological information and, due to their lack of periodicity, introduce substantial nonlinear distortion. Consequently, the energy distribution among signal components changes significantly compared to the resting state.

MARI module

Significant differences between undisturbed and disturbed signals make it necessary to identify them before feature extraction. Undisturbed signals exhibit stable periodicity aligned with physiological activity cycles, facilitating the extraction of temporal heartbeat amplitude-interval features and spatial features of respiratory and heartbeat strength. In contrast, severe nonlinear distortion in disturbed signals complicates accurate extraction of physiological information. To address this, we developed the MARI module to distinguish between undisturbed and disturbed signals, so that data affected by motion artifacts are analyzed rather than discarded. This approach preserves data integrity and continuity. Details are provided in the Supplementary Materials.

Drawing inspiration from the signal quality index method for ECG as presented in a previous study22, we extracted 16 evaluation parameters across four dimensions: energy stability, periodic stability, skewness, and kurtosis to differentiate between signal types. Energy stability was assessed using energy entropy, which measures signal complexity and helps identify disturbed signals with a uniform energy distribution caused by motion artifacts. Periodic stability was evaluated using Approximate Entropy (ApEn), as disturbed signals exhibit increased randomness and higher ApEn values. Skewness and kurtosis were analyzed to assess asymmetry and peakedness, as motion artifacts introduce irregular peaks and deviations. In the calculation of ApEn, Euclidean distance was chosen as the distance metric due to its simplicity and effectiveness in capturing subtle differences between time-series data. We then used a multilayer perceptron with 12 hidden units to classify signals as “Disturbed” or “Undisturbed” based on these features.
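The four feature families can be sketched per signal as below. This is an illustrative sketch under stated assumptions: energy entropy is computed over equal-width frequency bands (so broadband artifacts, whose energy is spread evenly, score high), ApEn uses Euclidean distance as the text specifies with the common defaults m = 2 and r = 0.2·std, and the 16-value vector is assumed, hypothetically, to be four features for each of four signals (raw, respiration, BCG, deviation). The paper does not state how its 16 parameters are composed.

```python
import numpy as np
from scipy.stats import kurtosis, skew


def energy_entropy(x, n_bands=16):
    """Shannon entropy of spectral energy spread over n_bands equal-width bands.

    Assumption: energy is measured per frequency band (hypothetical choice).
    """
    x = np.asarray(x, dtype=float)
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2
    band_energy = np.array([b.sum() for b in np.array_split(power, n_bands)])
    p = band_energy / band_energy.sum()
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


def approximate_entropy(x, m=2, r_factor=0.2):
    """Approximate entropy (ApEn) with Euclidean distance between embedding vectors.

    Memory grows with len(x)**2, so apply it to short or downsampled segments.
    """
    x = np.asarray(x, dtype=float)
    r = r_factor * np.std(x)  # tolerance; 0.2 * std is a common default, assumed here

    def phi(k):
        emb = np.lib.stride_tricks.sliding_window_view(x, k)  # (n - k + 1, k)
        dist = np.linalg.norm(emb[:, None, :] - emb[None, :, :], axis=2)
        c = np.mean(dist <= r, axis=1)  # includes the self-match, so c > 0
        return np.mean(np.log(c))

    return float(phi(m) - phi(m + 1))


def quality_features(x):
    """Four features for one signal: energy entropy, ApEn, skewness, kurtosis."""
    return np.array([energy_entropy(x), approximate_entropy(x), skew(x), kurtosis(x)])


def mari_feature_vector(raw, resp, bcg, deviation):
    """Hypothetical 16-value vector: 4 features for each of 4 signal components."""
    return np.concatenate([quality_features(c) for c in (raw, resp, bcg, deviation)])
```

Vectors like these could then be fed to a small classifier with 12 hidden units, for example scikit-learn's `MLPClassifier(hidden_layer_sizes=(12,))`, to label each channel as "Disturbed" or "Undisturbed".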

Observing patterns across multiple channels during rollover events revealed that significant motion artifacts frequently appear simultaneously in a large number of channels. To detect rollovers, we therefore determined the optimal threshold for the number of “Disturbed” channels. Raising the threshold initially improved detection, but increasing it beyond 13 significantly reduced recall. We therefore empirically set the threshold at 13 to balance detection precision against recall, as illustrated in Fig. 2. The MARI module provides critical information about signal quality and rollover events. Although it does not directly classify sleep postures, it flags periods of poor signal quality and rollover events, which both mitigates the effects of motion artifacts and improves the accuracy and consistency of subsequent posture detection.
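The channel-count rule and the threshold search behind Fig. 2 can be sketched as follows. It assumes a boolean matrix of per-channel "Disturbed" labels (one row per minute, 32 columns) and interprets the threshold as "at least 13 channels"; the `>=` reading and the function names are assumptions for illustration.

```python
import numpy as np

ROLLOVER_MIN_DISTURBED = 13  # empirical threshold from the text (out of 32 channels)


def detect_rollovers(disturbed, threshold=ROLLOVER_MIN_DISTURBED):
    """Flag minutes in which at least `threshold` channels are labeled "Disturbed".

    disturbed: bool array of shape (n_minutes, 32) from the per-channel classifier.
    Treating the threshold as ">=" is an assumption.
    """
    return np.asarray(disturbed, dtype=bool).sum(axis=1) >= threshold


def sweep_thresholds(disturbed, is_rollover, thresholds=range(1, 33)):
    """Precision and recall of rollover detection for each candidate threshold."""
    truth = np.asarray(is_rollover, dtype=bool)
    rows = []
    for th in thresholds:
        pred = detect_rollovers(disturbed, th)
        tp = np.sum(pred & truth)
        precision = tp / pred.sum() if pred.sum() else float("nan")
        recall = tp / truth.sum() if truth.sum() else float("nan")
        rows.append((th, precision, recall))
    return rows
```

Plotting the `(threshold, precision, recall)` rows from a labeled set reproduces the kind of trade-off curve used to pick the threshold.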

Spatial and temporal features extraction

In the resting state, signal acquisition maintains a linear relationship with the applied force, which facilitates the extraction of critical physiological information. Clinical research indicates that different sleep postures, influenced by pressure variations, alter the amplitude and intervals of cardiopulmonary signals. To analyze these temporal features, we extract amplitude and interval characteristics from respiratory and cardiac signals. Since respiratory signals are susceptible to disturbances, we use a smoothing window of size 5 to reduce incorrect peak detection. Peaks are then identified using a zero-crossing method, and the amplitude and interval of each peak are recorded. For cardiac signals, specifically BCG signals, the cycles correspond to the envelope rather than individual IJKL waves. Therefore, we apply envelope detection to BCG signals and use the same techniques to identify and record peak values of the cardiac cycles.
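A sketch of the amplitude-interval extraction described above follows. It uses the stated moving-average window of 5 for respiration and takes each peak as the maximum between an upward zero crossing and the next downward one, which is one reasonable reading of the "zero-crossing method". The Hilbert-magnitude envelope for BCG is an assumed envelope detector, and all function names are hypothetical.

```python
import numpy as np
from scipy.signal import hilbert


def smooth(x, window=5):
    """Moving-average smoothing with the window size stated in the text."""
    return np.convolve(x, np.ones(window) / window, mode="same")


def zero_crossing_peaks(x):
    """Index of the maximum between each upward zero crossing and the next downward one."""
    negative = np.signbit(x)
    up = np.flatnonzero(negative[:-1] & ~negative[1:]) + 1
    down = np.flatnonzero(~negative[:-1] & negative[1:]) + 1
    peaks = []
    for u in up:
        later = down[down > u]
        if later.size == 0:  # last half-cycle is incomplete
            break
        peaks.append(u + int(np.argmax(x[u:later[0]])))
    return np.array(peaks, dtype=int)


def amplitude_interval(x, fs=100):
    """Peak indices, peak amplitudes, and peak-to-peak intervals in seconds."""
    idx = zero_crossing_peaks(x)
    return idx, x[idx], np.diff(idx) / fs


def respiratory_features(resp, fs=100):
    return amplitude_interval(smooth(resp, 5), fs)


def cardiac_features(bcg, fs=100):
    # Assumption: the Hilbert magnitude serves as the BCG envelope detector;
    # removing its mean creates one zero-crossing pair per heartbeat.
    envelope = np.abs(hilbert(bcg))
    return amplitude_interval(envelope - envelope.mean(), fs)
```

Working on the envelope rather than the raw BCG ensures that one detected peak corresponds to one cardiac cycle instead of one IJKL sub-wave.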

Spatial features reflect the intensity of cardiopulmonary activities detected by the sensor array. These features are captured by combining the layout of the sensor matrix with each channel’s intensity. Since both respiratory and BCG signals exhibit strong periodicity and stable energy structures, we approximate them using a sum of sinusoids. The Fourier transform identifies the principal frequency components, which are used to construct sinusoids that approximate the signals. Goodness of fit is assessed using the coefficient of determination (\(R^2\)). If \(R^2\) is less than 0.7, additional sinusoids are added until \(R^2\) exceeds 0.7. Generally, a single sinusoid fits most respiratory signals with \(R^2\) above 0.80, whereas BCG signals require three to four sinusoids to achieve \(R^2\) over 0.75. After approximation (detailed in our previous work17), the root mean square (RMS) of the respiratory and cardiac signals is computed to measure amplitude, reflecting activity strength. The sensor matrix captures consistent frequencies across channels, yet variations in applied force cause significant spatial variability in recorded intensities. We then calculate the intensity for each channel, forming two \(4 \times 8\) matrices representing the distribution of respiratory and cardiac activity intensities. For visualization, these intensities are normalized to a scale from 1 to 255, corresponding to color depths, as shown in Fig. 3.
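The greedy sum-of-sinusoids fit and RMS intensity can be sketched as follows. Frequencies are taken from the strongest FFT bins and amplitudes and phases are fitted by linear least squares (a sine and a cosine per frequency). The `max_terms` cap, the use of the mean-removed fit for the RMS, and the row-major mapping of the 32 channels onto the \(4 \times 8\) grid are assumptions.

```python
import numpy as np


def fit_sinusoids(x, fs=100, r2_target=0.7, max_terms=6):
    """Least-squares sum-of-sinusoids fit, adding the next-strongest FFT frequency
    until R^2 exceeds r2_target (or max_terms is reached; max_terms is hypothetical)."""
    x = np.asarray(x, dtype=float)
    t = np.arange(len(x)) / fs
    spectrum = np.abs(np.fft.rfft(x - x.mean()))
    freqs = np.fft.rfftfreq(len(x), d=1 / fs)
    strongest = np.argsort(spectrum[1:])[::-1] + 1  # skip the DC bin
    ss_tot = np.sum((x - x.mean()) ** 2)
    for k in range(1, max_terms + 1):
        columns = [np.ones_like(t)]
        for f in freqs[strongest[:k]]:
            columns += [np.sin(2 * np.pi * f * t), np.cos(2 * np.pi * f * t)]
        design = np.column_stack(columns)
        coef, *_ = np.linalg.lstsq(design, x, rcond=None)
        fitted = design @ coef
        r2 = 1 - np.sum((x - fitted) ** 2) / ss_tot
        if r2 > r2_target:
            break
    return fitted, r2, k


def channel_intensity(x, fs=100):
    """RMS amplitude of the fitted (mean-removed) approximation."""
    fitted, _, _ = fit_sinusoids(x, fs)
    return float(np.sqrt(np.mean((fitted - fitted.mean()) ** 2)))


def intensity_map(channels, fs=100):
    """(32, n_samples) -> 4 x 8 intensity matrix; row-major channel order is assumed."""
    return np.array([channel_intensity(c, fs) for c in channels]).reshape(4, 8)
```

Computing the RMS on the fitted signal rather than on the raw channel discards aperiodic residue, so the map reflects periodic cardiopulmonary strength.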

However, several issues limit the usefulness of the original features. Because respiratory and cardiac rates vary, the number of amplitude-interval values per segment is inconsistent, which complicates analysis. Additionally, the \({4 \times 8}\) spatial feature array has low resolution and widely spaced sensors, which hinders model training. To address these challenges, we resampled the temporal and spatial features. For temporal features, we used feature-based interpolation, inserting data points between peaks to match the original signal length. For spatial features, the resolution was increased fourfold in each dimension, resulting in a \({16 \times 32}\) image. Figure 3 displays CR maps for different sleep postures, where lighter shades indicate higher intensity. In the supine posture, the chest’s wide contact with the sensor array produces the largest highlighted areas. Because tilting the body shifts its center of gravity, the centroids of heartbeat and respiratory activity shift left when lying on the right side and right when lying on the left side. Unlike high-resolution pressure maps that require dense sensor arrays, our approach monitors sleep postures by filtering out static pressure and disturbances, achieving high accuracy with a sparse sensor array. More detailed analysis is provided in the Supplementary Materials.
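The spatial resampling step can be sketched as follows: each \(4 \times 8\) intensity matrix is mapped onto the 1–255 display scale and upsampled by a factor of four per dimension to \(16 \times 32\). The text states only the target resolution, so bilinear interpolation (`scipy.ndimage.zoom` with `order=1`) and the order "normalize, then upsample" are assumptions.

```python
import numpy as np
from scipy.ndimage import zoom


def normalize_1_255(m):
    """Linearly map a CR intensity matrix onto the 1-255 display scale."""
    m = np.asarray(m, dtype=float)
    span = m.max() - m.min()
    if span == 0:
        return np.ones_like(m)
    return 1 + 254 * (m - m.min()) / span


def upsample_cr_map(m, factor=4):
    """4 x 8 -> 16 x 32 by bilinear interpolation (order=1); the interpolation
    method is an assumption, the text only states the target resolution."""
    return zoom(np.asarray(m, dtype=float), factor, order=1)


# Stack respiratory and cardiac maps into a 16 x 32 x 2 input for one minute.
rng = np.random.default_rng(0)
resp_map = upsample_cr_map(normalize_1_255(rng.random((4, 8))))
card_map = upsample_cr_map(normalize_1_255(rng.random((4, 8))))
n1 = np.stack([resp_map, card_map], axis=-1)
print(n1.shape)  # (16, 32, 2)
```

Bilinear interpolation only forms weighted averages of neighboring sensors, so it smooths the sparse grid without creating intensities outside the measured range.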

CHMMConvScaleNet model

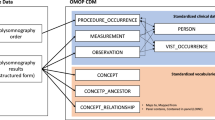

Clinical observations indicate that individuals generally maintain a posture for extended periods during sleep rather than changing positions frequently. Analysis of our dataset shows that 84.60% of 5-min segments have a consistent posture, while mixed-posture segments constitute only 15.40%. Incorporating data from adjacent three- and five-minute segments therefore enhances posture detection, especially when a one-minute segment lacks sufficient information. In PSG recordings, about 13% of one-minute samples are affected by body movements, highlighting the need for multi-scale segment processing. To address posture variability, CHMMConvScaleNet integrates three modules: a multi-scale CNN (MSCNN), an SE module, and a CHMM. As illustrated in Fig. 4, MSCNN employs six sub-networks to process cardio-respiratory data at one-, three-, and five-minute scales. Three networks extract temporal features, while the other three process cardio-respiratory maps. The SE module refines features by recalibrating channel-wise responses, increasing sensitivity to informative features. The CHMM module captures temporal relationships among postures, improving continuity and accuracy. Detailed parameters are provided in the Supplementary Materials.
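Assembling the one-, three-, and five-minute inputs for a given minute can be sketched as below. The same helper builds both the Conv1D inputs (per-minute CRAI features of shape \(4 \times 180\), concatenated in time) and the Conv2D inputs (per-minute maps of shape \(16 \times 32 \times 2\), concatenated along channels). Centering the window on the current minute and repeating the first or last minute at recording edges are assumptions. The text does not state how edges are handled.

```python
import numpy as np


def multiscale_window(per_minute, i, scale):
    """Concatenate `scale` consecutive minutes centred on minute i along the last axis.

    per_minute: array of shape (n_minutes, ...), e.g. (n, 4, 180) CRAI features
    or (n, 16, 32, 2) CR maps. Centring the window and repeating the first/last
    minute at recording edges are assumptions, not details from the text.
    """
    n = len(per_minute)
    half = scale // 2
    idx = np.clip(np.arange(i - half, i + half + 1), 0, n - 1)
    return np.concatenate(list(per_minute[idx]), axis=-1)


def multiscale_inputs(per_minute, i, scales=(1, 3, 5)):
    """Inputs for the three sub-networks of one branch (M^1..M^3 or N^1..N^3)."""
    return [multiscale_window(per_minute, i, s) for s in scales]
```

Concatenating along the last axis yields exactly the shapes listed for \(M^i\) (\(4 \times 180\), \(4 \times 540\), \(4 \times 900\)) and \(N^i\) (2, 6, and 10 channels).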

Multi-scale CNN

As shown in Fig. 5, the multi-scale CNN processes dynamic temporal and static spatial features. The model is trained across multiple time scales, incorporating features from the current 1-minute sample along with adjacent samples spanning 3 and 5 minutes. The dynamic temporal features include amplitude and interval characteristics of heartbeat and respiration, while the static spatial features are derived from CR intensity images. Because amplitude and interval variations occur on time scales of 0.5 to 5 s, the original 100 Hz data are downsampled to 3 Hz, reducing feature dimensions from \(4 \times 6000\) to \(4 \times 180\) per minute and substantially lowering computational cost. Temporal features are processed by three MS-Conv1D sub-networks, each focusing on a specific time scale: 1-minute, 3-minute, and 5-minute samples. The inputs, labeled \({M^1}\), \({M^2}\), and \({M^3}\), combine respiratory \({R_A^i}\) and heartbeat \({R_B^i}\) features as \([R_A^i, R_B^i]\). Similarly, spatial features are handled by three MS-Conv2D sub-networks corresponding to the same time scales. The inputs for MS-Conv2D, denoted \({N^1}\), \({N^2}\), and \({N^3}\), encapsulate respiratory \({H_A^{ij}}\) and cardiac \({H_B^{ij}}\) activity maps across channels j, enabling comprehensive feature analysis by the Conv1D and Conv2D networks.

The Conv1D inputs, labeled \({M^1}\), \({M^2}\), and \({M^3}\), correspond to 1-, 3-, and 5-minute segments with dimensions of \(4 \times 180\), \(4 \times 540\), and \(4 \times 900\). These inputs, referred to as Cardiopulmonary Amplitude-Interval (CRAI) features, include both the amplitude and interval of respiratory and cardiac signals. We apply feature-based interpolation to standardize the sample length to 6000, followed by resampling to the required length. This preserves amplitude and interval accuracy even after downsampling. \({M^1}\), \({M^2}\), and \({M^3}\) are then processed through three sets of layers, each consisting of one or two convolution operations followed by pooling layers. This design transforms the varying input dimensions into uniform feature maps \({F^i}\) of size \(32 \times 30\), facilitating feature fusion. The MS-Conv1D mapping function \({f_i}( \cdot )\) with parameters \({\theta ^i}\) governs this transformation.
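The interpolate-then-resample step for one minute of CRAI features can be sketched as follows. Per-peak values are spread over all 6000 sample positions by linear interpolation (`np.interp`, which holds the first and last values constant at the edges) and then resampled to 180 points (3 Hz). The row order (respiratory amplitude, respiratory interval, cardiac amplitude, cardiac interval) and attaching each interval to the later of its two peaks are hypothetical choices.

```python
import numpy as np


def feature_series(peak_idx, values, n_samples=6000):
    """Feature-based interpolation: spread per-peak values over every sample
    position by linear interpolation (constant before the first and after the
    last peak, which is np.interp's edge behaviour)."""
    return np.interp(np.arange(n_samples), peak_idx, values)


def resample_linear(series, n_out):
    """Resample a series to n_out evenly spaced points by linear interpolation."""
    old = np.linspace(0.0, 1.0, len(series))
    new = np.linspace(0.0, 1.0, n_out)
    return np.interp(new, old, series)


def crai_features(resp_peaks, resp_amp, bcg_peaks, bcg_amp,
                  n_samples=6000, n_out=180, fs=100):
    """4 x n_out CRAI input: respiratory amplitude/interval, cardiac amplitude/interval.
    Row order and attaching each interval to its later peak are assumptions."""
    rows = []
    for peaks, amps in ((resp_peaks, resp_amp), (bcg_peaks, bcg_amp)):
        peaks = np.asarray(peaks)
        intervals = np.diff(peaks) / fs  # seconds between consecutive peaks
        rows.append(feature_series(peaks, amps, n_samples))
        rows.append(feature_series(peaks[1:], intervals, n_samples))
    return np.stack([resample_linear(r, n_out) for r in rows])
```

Interpolating at full resolution before downsampling keeps each peak's value anchored at its true time, so the 3 Hz series still carries the correct amplitudes and intervals.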

For the Conv2D, the input is the Cardiopulmonary (CR) map, which represents the spatial distribution of respiratory and cardiac activity captured by the sensor array. The input features \({N^1}\), \({N^2}\), and \({N^3}\) correspond to different segment durations, with channel counts increasing as the segment duration extends. For a 1-minute segment, \({N^1}\) has dimensions of \(16 \times 32 \times 2\). For the longer 3-minute and 5-minute segments, \({N^2}\) and \({N^3}\) expand to \(16 \times 32 \times 6\) and \(16 \times 32 \times 10\), respectively. These features are processed by MS-Conv2D sub-networks, each comprising 2 to 3 sets of 2D convolutional layers with pooling layers, followed by an adaptive pooling layer sized at \(30 \times 1\) to standardize the output dimensions. The MS-Conv2D mapping function \({g_i}( \cdot )\) then transforms these inputs into uniform feature maps \({E^i}\) of \(32 \times 30\), with the transformation controlled by parameters \({\beta ^i}\). The calculation of intensity and its mapping to spatial features involves several complex processes, including circuit design and signal quantization. These steps are discussed in our previous work17.

Aligning and integrating temporal and spatial information is pivotal when fusing multi-modal features. The Conv1D network processes three input sequences of different time lengths, standardizing their temporal dimension to 30. Similarly, the Conv2D network reshapes spatial features into 32 channels, with the temporal dimension adjusted to 30 via adaptive pooling. As a result, outputs from both Conv1D and Conv2D are aligned with consistent dimensions: 30 in temporal length and 32 channels. Outputs \({F^i}\) from Conv1D and \({E^i}\) from Conv2D are stacked along the channel dimension, resulting in a combined feature set \({S = [{F^i};{E^i}]}\), effectively merging information from different temporal scales for further analysis.

Squeeze-and-excitation networks

The spatiotemporal features extracted by MSCNN are stacked into a \(192 \times 30\) feature matrix. The large number of channels (192) relative to the temporal length (30) suggests redundancy. To address this, SE-Net enhances crucial channels while suppressing irrelevant ones. SE-Net operates in two stages: “Squeeze” and “Excitation”. In the Squeeze stage, feature maps are compressed along the temporal dimension via global average pooling, generating channel-wise descriptors. Each feature map is reduced to a scalar representing its average activation:

\[ s_c = \frac{1}{L}\sum_{i=1}^{L} x_c(i) \]

where \({s_c}\) is the squeezed feature value for channel c, \({x_c}(i)\) is the activation at position i, and L denotes the temporal dimension.

In the Excitation stage, Excitation Layer 1, a fully connected layer with a reduction ratio of 16, compresses channels from 192 to 12, focusing on relevant features. Excitation Layer 2 then expands the compressed vector back to 192 channels, ensuring independent weight calculation for each channel. The final channel-wise weights \({f_c}\) are then applied to the original stacked feature set S to recalibrate the importance of each channel. This recalibration is followed by two fully connected (FC) layers and a Softmax layer, resulting in the initial sleep posture prediction \({\hat{Z}}\):

where \({f_c}\) is the weight for channel c, s is the descriptor vector from global average pooling, \({W_1}\) and \({W_2}\) are the weight matrices of Excitation Layers 1 and 2; \({b_1}\) and \({b_2}\) are bias terms, and \(\sigma\) is the Sigmoid activation function. S represents the stacked feature matrix, \(\odot\) denotes element-wise multiplication, \(W_3\) and \(W_4\) are the weight matrices of the FC layers, \(b_3\) and \(b_4\) are their corresponding bias terms, and \(\hat{Z}\) is the initial sleep posture prediction.
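A NumPy forward pass makes the tensor shapes of this stage concrete. The six \(32 \times 30\) sub-network outputs are stacked into \(S\) (\(192 \times 30\)), squeezed to a 192-vector, passed through 192 → 12 → 192 excitation layers (reduction ratio 16), and used to rescale every time step of each channel before the two-layer FC head and softmax. The weights are random placeholders, the ReLU between the excitation layers and in the FC head follows the original SE-Net design because the text does not name these activations, and the FC hidden width of 64 is hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def squeeze_excite(S, W1, b1, W2, b2):
    """SE recalibration of a (channels, L) feature matrix.

    Assumption: ReLU between the two excitation layers, as in the original SE-Net.
    """
    s = S.mean(axis=1)                                    # squeeze: s_c = mean_i x_c(i)
    f = sigmoid(W2 @ np.maximum(W1 @ s + b1, 0.0) + b2)   # excitation: 192 -> 12 -> 192
    return f[:, None] * S, f                              # scale every time step of channel c by f_c


def posture_head(S_recal, W3, b3, W4, b4):
    """Two FC layers + softmax -> initial posture probabilities (hidden ReLU assumed)."""
    h = np.maximum(W3 @ S_recal.ravel() + b3, 0.0)
    return softmax(W4 @ h + b4)


# Six 32 x 30 sub-network outputs (F^1..F^3, E^1..E^3) stacked into S (192 x 30).
rng = np.random.default_rng(0)
S = np.concatenate([rng.standard_normal((32, 30)) for _ in range(6)], axis=0)
W1, b1 = 0.1 * rng.standard_normal((12, 192)), np.zeros(12)   # reduction ratio 16
W2, b2 = 0.1 * rng.standard_normal((192, 12)), np.zeros(192)
S_recal, f = squeeze_excite(S, W1, b1, W2, b2)
hidden = 64  # hypothetical width of the first FC layer
W3, b3 = 0.01 * rng.standard_normal((hidden, 192 * 30)), np.zeros(hidden)
W4, b4 = 0.1 * rng.standard_normal((3, hidden)), np.zeros(3)
z_hat = posture_head(S_recal, W3, b3, W4, b4)  # probabilities for supine / left / right
```

Because the sigmoid keeps every \(f_c\) in (0, 1), the recalibration can only attenuate channels relative to one another, never flip their sign.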

Continuous HMM

Sleep patterns exhibit long-term continuity, often remaining unchanged for extended periods. Our dataset shows that 90.9% of patients changed their sleep posture fewer than 25 times overnight. Although MSCNN integrates features across different time scales, it struggles to capture long-term dependencies, and its predictions alone imply more than 40 posture changes per night. To improve temporal continuity, we applied a CHMM, which is well suited to modeling sequences with hidden state transitions.

Observations reveal that sleep postures remain stable unless triggered by a rollover event. To model this, we categorize sleep behavior based on posture type and whether a rollover event occurs, resulting in six unique hidden states: \(h_1\) (Supine, No Rollover), \(h_2\) (Left Lateral, No Rollover), \(h_3\) (Right Lateral, No Rollover), \(h_4\) (Supine, Rollover), \(h_5\) (Left Lateral, Rollover), and \(h_6\) (Right Lateral, Rollover). The observation states are initial posture estimates from MSCNN, used to refine the model and derive accurate symbol states. These symbol states represent the final outputs of the sleep posture recognition model: \(v_1\) (Supine), \(v_2\) (Left Lateral), and \(v_3\) (Right Lateral).

Figure 6a illustrates the state transition diagram, where transitions between the six hidden states are represented by probabilities \(a_{ij}\). The transition probability matrix \(A = \{a_{ij}\}\) defines the likelihood of transitioning from \(h_i\) to \(h_j\). Rare state transitions (<0.1%), such as maintaining the same posture after a rollover or frequent posture changes in consecutive samples, are ignored. The symbol output probability matrix \(B = \{b_j(v_k)\}\) specifies the probability that hidden state \(h_j\) produces symbol state \(v_k\), linking hidden physiological states to observed sleep postures. The time series spans L time points, with each hidden state sequence \((I_1,\ldots , I_L)\) corresponding to an observation sequence \((O_1,\ldots , O_L)\). The CHMM generates optimized predictions \((P_1, \ldots , P_L)\), where each \(P_i\) is the sleep posture estimate at time point i. Figure 6b illustrates the state transitions from the beginning (B) to the end (E) of the sequence. To optimize posture classification, the HMM parameters were initialized as follows:

where N represents the six possible combinations of sleep posture and rollover state, and \(\pi\) is the initial state distribution. Because optimizing the entire path at once is impractical for such long sequences, the data are segmented into 50-sample blocks with 20% overlap, which improves computational efficiency while preserving accurate posture detection.
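The block segmentation can be sketched as follows: 50-sample blocks with 20% overlap give a stride of 40 samples. Aligning a final block to the end of the recording and letting later blocks overwrite the overlapping samples are hypothetical choices. The text does not say how overlapping decodes are merged.

```python
import numpy as np


def block_starts(n, block=50, overlap=0.2):
    """Start indices of block-sample windows with the given fractional overlap.
    A final block is aligned to the end so every sample is covered (assumption)."""
    step = int(round(block * (1 - overlap)))  # 40 samples for 50 samples / 20 %
    if n <= block:
        return [0]
    starts = list(range(0, n - block + 1, step))
    if starts[-1] + block < n:
        starts.append(n - block)
    return starts


def decode_in_blocks(obs, decode, block=50, overlap=0.2):
    """Run `decode` (e.g. a Viterbi decoder) on each block and merge the results.
    Letting later blocks overwrite the overlapping samples is a hypothetical merge rule."""
    obs = np.asarray(obs)
    out = np.empty(len(obs), dtype=int)
    for s in block_starts(len(obs), block, overlap):
        out[s:s + block] = decode(obs[s:s + block])
    return out
```

The overlap gives each block some context from its predecessor, which reduces boundary artifacts where a posture change falls near a block edge.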

Next, we apply the Baum–Welch algorithm to iteratively optimize the model by maximizing the log-likelihood function and then use the Viterbi algorithm to determine the optimal path for each observation sequence, identifying the most likely sequence of hidden states and mapping it to the corresponding symbol states. The trained Hidden Markov Model (HMM), denoted as \(\Theta\), is used to classify observation sequences into one of the three possible sleep postures.

where \(\alpha _t(i)\) and \(\beta _t(i)\) are the forward and backward probabilities, representing the hidden state distribution at each time point t given the current parameter estimate \(\lambda\), and \(C_*(q)\) returns the most likely sequence of states q.
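The decoding step can be illustrated with a small log-space Viterbi decoder over the six hidden states. The transition structure mirrors the description above: a posture changes only by passing through a rollover state, and a rollover never keeps the previous posture. The numeric values (`p_stay`, `p_emit`, uniform start over non-rollover states) are hypothetical placeholders rather than Baum–Welch estimates, and the observations are the three MSCNN posture indices.

```python
import numpy as np

POSTURES = ("supine", "left lateral", "right lateral")  # v_1, v_2, v_3


def build_model(p_stay=0.98, p_emit=0.8):
    """Hypothetical parameters for the six hidden states.

    States 0-2 are h_1-h_3 (posture, no rollover); states 3-5 are h_4-h_6
    (posture reached by a rollover). The posture can change only by passing
    through a rollover state, and a rollover never keeps the same posture.
    """
    A = np.zeros((6, 6))
    for p in range(3):
        A[p, p] = p_stay
        for q in range(3):
            if q != p:
                A[p, 3 + q] = (1 - p_stay) / 2  # roll over into a different posture
        A[3 + p, p] = 1.0                       # settle into the new posture
    B = np.full((6, 3), (1 - p_emit) / 2)
    B[np.arange(6), np.arange(6) % 3] = p_emit  # h_j mostly emits its own posture
    pi = np.array([1 / 3, 1 / 3, 1 / 3, 0.0, 0.0, 0.0])
    return pi, A, B


def viterbi(obs, pi, A, B):
    """Most likely hidden-state path for integer observations, in log space."""
    tiny = 1e-12  # avoids log(0) for forbidden transitions
    log_pi, log_A, log_B = np.log(pi + tiny), np.log(A + tiny), np.log(B + tiny)
    T, N = len(obs), len(pi)
    delta = np.empty((T, N))
    back = np.zeros((T, N), dtype=int)
    delta[0] = log_pi + log_B[:, obs[0]]
    for t in range(1, T):
        scores = delta[t - 1][:, None] + log_A  # scores[i, j]: from state i to state j
        back[t] = scores.argmax(axis=0)
        delta[t] = scores.max(axis=0) + log_B[:, obs[t]]
    path = np.empty(T, dtype=int)
    path[-1] = int(delta[-1].argmax())
    for t in range(T - 1, 0, -1):
        path[t - 1] = back[t, path[t]]
    return path


def smooth_postures(obs, model=None):
    """Map MSCNN posture indices (0, 1, 2) to CHMM-smoothed posture indices."""
    pi, A, B = model if model is not None else build_model()
    return viterbi(np.asarray(obs, dtype=int), pi, A, B) % 3
```

With these placeholder values, an isolated one-sample flip in the MSCNN output is absorbed, because explaining it would require two rollovers, while a sustained change is decoded as a single rollover at the first changed sample.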

Results

Dataset

Our study, conducted at the Sleep Center of Peking Union Medical College Hospital, involved data from 22 patients with varying degrees of OSA, including 15 men and 7 women aged 30 to 68. Among the participants, 8 were overweight, 5 were lean, and the remainder had normal weight. To ensure the broad applicability of our study, we evaluated mattresses of different thicknesses, including a 15 cm standard spring mattress, a 3–5 cm cotton mattress, and a 1 cm travel pad. According to our previous studies, while mattress thickness significantly affects signal amplitude, its influence on heartbeat, respiratory cycles, and pressure distribution is limited17. Additionally, a 15 cm spring mattress effectively reduces signal interference caused by minor body movements and matches the standard mattress thickness used in most households and hospitals. Therefore, data collection devices were placed under a 15 cm thick mattress near the chest area, and pressure signals were recorded alongside PSG monitoring. Each sleep session lasted 5 to 8 hours, yielding 8583 minutes of data: 4232 minutes (49.31%) in the supine posture, 2934 minutes (34.18%) in the right lateral posture, and 1417 minutes (16.51%) in the left lateral posture, indicating an imbalance across sleep postures. To mitigate bias during model training, we employed stratified sampling to keep each batch balanced (a 1:1 ratio of positive to negative samples), so that training data remained evenly distributed across sleep postures.
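One way to realize class-balanced batches for the three imbalanced posture classes is sketched below. Each batch draws the same number of samples per class, with minority classes sampled with replacement. This is a hypothetical scheme, not necessarily the authors' exact stratification. With a batch size of 32 and three classes it yields 30 samples per batch (10 per class); the text does not say how any remainder is handled. The usage lines also recompute the class shares from the reported minute counts.

```python
import numpy as np


def balanced_batches(labels, batch_size=32, n_batches=10, seed=0):
    """Yield index batches containing the same number of samples from every class.

    Minority classes are drawn with replacement. This is one hypothetical way to
    realise the stratified, class-balanced batches described in the text.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    per_class = batch_size // len(classes)  # 10 per class for 32 and 3 classes
    pools = {c: np.flatnonzero(labels == c) for c in classes}
    for _ in range(n_batches):
        batch = np.concatenate(
            [rng.choice(pools[c], size=per_class, replace=True) for c in classes])
        rng.shuffle(batch)
        yield batch


# Class counts reported for the continuous dataset (supine, right, left).
counts = np.array([4232, 2934, 1417])
labels = np.repeat([0, 1, 2], counts)
print(np.round(100 * counts / counts.sum(), 2))  # [49.31 34.18 16.51]
```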

For the Movement Artifact and Rollover Identification module, 6600 one-minute signal segments were randomly selected from the most active channels, balanced across all patients. These samples were labeled by three experienced technicians according to established standards for signal quality and movement artifact identification, with discrepancies resolved through discussion. During training, we further applied stratified sampling to balance the ratio of artifact and non-artifact samples, thereby reducing bias toward the non-artifact category. Additionally, to address the missing prone posture data and evaluate the model’s ability to detect it, we collected 240 additional samples from six subjects for a four-class sleep posture classification experiment (supine, right lateral, left lateral, and prone). Given the small sample size, and to evaluate generalization to unseen subjects, we used a leave-one-subject-out cross-validation (LOSO-CV) strategy. In this approach, each participant’s data were held out in turn for testing, while the data from the remaining 21 participants formed the training set. This strategy prevents the evaluation from rewarding overfitting to individual characteristics and provides a more realistic estimate of performance on unseen subjects.
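The LOSO-CV folds can be generated from per-sample subject IDs as sketched below. scikit-learn's `LeaveOneGroupOut` produces equivalent splits; this dependency-free version is an illustration, and the function name is hypothetical.

```python
import numpy as np


def loso_splits(subject_ids):
    """Leave-one-subject-out folds: (held-out subject, train indices, test indices)."""
    subject_ids = np.asarray(subject_ids)
    for subject in np.unique(subject_ids):
        test = subject_ids == subject
        yield subject, np.flatnonzero(~test), np.flatnonzero(test)
```

With 22 participants this yields 22 folds, and no subject ever appears in both the training and test indices of the same fold.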

Detection performance

Our study focuses on sleep posture detection using the MARI and CHMMConvScaleNet models, with performance evaluated through F1 score, accuracy (Acc), recall (Rec), precision (Pre), and AUC values. Key hyperparameters were set to a learning rate of 0.001, a batch size of 32, and a maximum of 100 epochs, while other settings followed Keras defaults. To prevent overfitting, L1 regularization was applied. The MARI module used a dataset of 6600 one-minute signal segments, with 5000 for training and 1600 for testing. The CHMMConvScaleNet model was assessed using LOSO-CV on 8583 minutes of continuous sleep data, with a final confusion matrix created by summing the matrices from each fold. Additionally, to address the missing prone posture data, we collected 240 samples for a four-class sleep posture classification experiment (supine, right lateral, left lateral, and prone). These samples were expanded to 2400 samples based on the statistical distribution of sleep postures23. Because these additional samples lack continuous time series, they were processed using the Spatiotemporal CNN, which excludes the CHMM module. Stratified sampling was applied to address class imbalance in the input samples for the MARI, Spatiotemporal CNN, and CHMMConvScaleNet models.
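Pooling the per-fold confusion matrices and deriving the reported metrics can be sketched as follows. Rows are true classes and columns are predictions. Macro averaging of precision, recall, and F1 across postures is an assumption, since the averaging scheme is not stated, and a class that is never predicted would produce a zero denominator that real code must handle.

```python
import numpy as np


def confusion(y_true, y_pred, n_classes=3):
    """Confusion matrix with rows = true class, columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def pooled_metrics(fold_matrices):
    """Sum per-fold confusion matrices, then compute accuracy and macro-averaged
    precision, recall, and F1 (macro averaging is an assumption)."""
    cm = np.sum(fold_matrices, axis=0)
    tp = np.diag(cm).astype(float)
    precision = tp / cm.sum(axis=0)  # per predicted class
    recall = tp / cm.sum(axis=1)     # per true class
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": tp.sum() / cm.sum(), "precision": precision.mean(),
            "recall": recall.mean(), "f1": f1.mean()}
```

Summing counts before computing ratios weights every minute equally, so participants with longer recordings contribute proportionally more than they would under per-fold averaging.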

As illustrated in Fig. 7a, the MARI module has relatively low precision for rollover events (68.12%). However, its recall is high (94.67%), ensuring that most critical events are captured. Because the subsequent models can filter out misclassified samples, the lower precision has a limited impact on overall performance, and the MARI module meets the requirements of the subsequent processing stages. The confusion matrix in Fig. 7b presents the sleep posture detection results of the CHMMConvScaleNet model, which achieves an accuracy of 93.41%, recall of 92.91%, precision of 91.87%, and F1 score of 0.9235. Fig. 7c shows the loss curve for CHMMConvScaleNet, which decreases rapidly during the first 10 epochs and stabilizes around 0.15 without signs of overfitting or underfitting. This suggests that our deep learning approach is feasible even on a small dataset. Fig. 7d presents the results of an additional 4-class classification experiment, which used the spatiotemporal CNN component of our model and achieved an accuracy of 86.08% and a recall of 84.93%. This experiment shows that, despite limited prone-posture data, our method can distinguish between the four sleep postures.

Discussion

Performance comparison

Table 1 compares CHMMConvScaleNet with several leading sleep posture detection methods. Although our method's accuracy is 3–5% lower than that of the best-performing methods13,14,20,24,25,28, it offers substantial advantages in sensor count, device portability, and applicability to continuous monitoring. Compared with traditional methods12,13,14,15,16,24,25,26,29, our device uses only about 1–5% as many sensors and is less than one-fifth their size. This facilitates integration with embedded systems and reduces sensor cost and computational requirements. Our earlier S3CNN method improved practicality and achieved 92.9% accuracy on real datasets, but it struggled with motion artifacts and did not exploit correlations between samples. CHMMConvScaleNet addresses these limitations by using multi-scale information to predict compromised segments and by integrating the MARI and CHMM modules to link posture transitions to rollover events, thereby improving adaptability and reducing susceptibility to interference.

Furthermore, unlike many studies that rely on controlled, short-duration experiments, our dataset consists of continuous sleep recordings, which more closely reflect real-world sleep behavior. Traditional datasets often impose posture changes at fixed intervals, whereas our data capture spontaneous movements and posture transitions throughout an entire sleep session. This provides a more realistic assessment of sleep posture dynamics and makes the approach better suited to practical deployment in home-based sleep monitoring.

However, the current approach has several limitations that need to be addressed in future work. First, it requires manual signal processing, which increases workload and may introduce bias, particularly through the sine approximation used for spatial feature extraction. Second, the model currently recognizes only three sleep postures, which limits the granularity of classification. To address these limitations, future research could explore graph neural networks for automated feature extraction, using sensor adjacency matrices to capture spatial information and reduce manual bias. Further improvements will also require larger datasets and collaboration with medical experts to refine posture classification, enhancing the applicability and precision of sleep monitoring technology.
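As an illustration of the proposed future direction, the sketch below builds a sensor adjacency matrix and applies one step of the widely used symmetric-normalized graph convolution, ReLU(D^-1/2 (A + I) D^-1/2 H W). The 4 × 8 grid layout of the 32 sensors, the 4-neighbour connectivity, and the feature sizes are hypothetical assumptions; the weights are random and untrained, so only the shapes are meaningful.

```python
import numpy as np

def grid_adjacency(rows, cols):
    """4-neighbour adjacency for sensors on a rows x cols grid
    (a hypothetical layout, not the device's documented geometry)."""
    n = rows * cols
    A = np.zeros((n, n))
    for r in range(rows):
        for c in range(cols):
            i = r * cols + c
            if c + 1 < cols:
                A[i, i + 1] = A[i + 1, i] = 1.0
            if r + 1 < rows:
                A[i, i + cols] = A[i + cols, i] = 1.0
    return A

def gcn_layer(H, A, W):
    """One graph-convolution step with self-loops and symmetric normalization."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    A_norm = A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(A_norm @ H @ W, 0.0)

rng = np.random.default_rng(0)
A = grid_adjacency(4, 8)                # 32 sensors
H = rng.normal(size=(32, 16))           # 16 hypothetical features per sensor
W = rng.normal(size=(16, 8)) * 0.1      # untrained weights, for shape only
out = gcn_layer(H, A, W)
print(out.shape)  # (32, 8)
```

In such a design, each sensor's features are mixed with those of its physical neighbours, which is one way spatial structure could be learned instead of being hand-crafted.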

Ablation study

To validate the efficacy of each component of CHMMConvScaleNet, we conducted ablation studies on MS-Conv1D, MS-Conv2D, the CHMM module, and the fusion of features from different time scales, as detailed in Table 2. Models M1 to M3 use one or two CNN components without the CHMM module or multi-scale fusion, while model M(i+3) extends M(i) (i = 1, 2, 3) by adding the CHMM module. M7 and M8 further extend M3 and M6, respectively, with multi-scale feature fusion. All experiments were validated using 5-fold cross-validation. A more detailed comparison is given in the Supplementary Materials.

The ablation results demonstrate the contribution of each module. Models M1 to M3 show that combining spatial and temporal features improved accuracy from 70.81% to 87.46%, although frequent posture changes persisted, particularly near rollovers. Adding the CHMM module (M4 to M6) leveraged rollover events to improve continuity, raising accuracy to 78–91% and reducing the number of detected posture changes from 200–350 to 100–180. Further multi-scale integration (M7, M8) improved detection, with M8 achieving the highest accuracy and stability and closely matching the actual number of posture changes (98 detected versus 83 true). These results indicate that each module contributes to accuracy and continuity in complex scenarios.
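The posture-change counts compared above (for example, 98 detected versus 83 true) can be computed from a per-minute label sequence. The exact counting rule is not stated in this section; the sketch below assumes that every switch between consecutive labels counts as one change, and the sequences are invented.

```python
import numpy as np

def count_posture_changes(labels):
    """Number of minute-to-minute switches in a posture label sequence."""
    labels = np.asarray(labels)
    return int(np.count_nonzero(labels[1:] != labels[:-1]))

truth = [0, 0, 0, 2, 2, 2, 1, 1]
noisy = [0, 1, 0, 2, 2, 0, 1, 1]      # per-minute predictions with flicker
print(count_posture_changes(truth))   # 2
print(count_posture_changes(noisy))   # 5
```

Isolated misclassifications inflate the count by two each, which is why an excess of detected changes over true changes is a useful indicator of temporal instability.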

Conclusion

This study presented an automated sleep posture recognition model that uses 32 piezoelectric ceramic sensors to capture subtle physiological signals such as breathing and heart activity. By integrating multi-scale CNNs for spatiotemporal feature extraction with a CHMM module that models posture transitions, we substantially improved the accuracy and continuity of posture detection and mitigated information loss in motion-disturbed samples. These improvements could support reliable long-term sleep monitoring in home environments, with practical applications in sleep care and disease prevention. Future work will focus on graph-based automated feature extraction and on larger datasets refined in collaboration with medical experts.

Data availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Schecter, S. & Liu, J. REM predominance of OSA: Associated with supine position, but not with CPAP adherence. Sleep 44, A163–A163. https://doi.org/10.1093/sleep/zsab072.410 (2021).

Pan, Q., Brulin, D. & Campo, E. Evaluation of a wireless home sleep monitoring system compared to polysomnography. IRBM 44, 100735. https://doi.org/10.1016/j.irbm.2022.09.002 (2023).

Abdulsadig, R. S., Singh, S., Patel, Z. & Rodriguez-Villegas, E. Sleep posture detection using an accelerometer placed on the neck. In 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC). 2430–2433. https://doi.org/10.1109/embc48229.2022.9871300 (2022).

He, C. et al. A novel snore detection and suppression method for a flexible patch with MEMS microphone and accelerometer. IEEE Internet Things J. 9, 25791–25804. https://doi.org/10.1109/jiot.2022.3199085 (2022).

Walmsley, C. P. et al. Measurement of upper limb range of motion using wearable sensors: A systematic review. Sports Med. 4, 53. https://doi.org/10.1186/s40798-018-0167-7 (2018).

Deng, F. et al. Design and implementation of a noncontact sleep monitoring system using infrared cameras and motion sensor. IEEE Trans. Instrum. Meas. 67, 1555–1563. https://doi.org/10.1109/TIM.2017.2779358 (2018).

Li, Y.-Y., Wang, S.-J. & Hung, Y.-P. A vision-based system for in-sleep upper-body and head pose classification. Sensors 22. https://doi.org/10.3390/s22052014 (2022).

Yue, S., Yang, Y., Wang, H., Rahul, H. & Katabi, D. BodyCompass: Monitoring sleep posture with wireless signals. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 4, 66:1-66:25. https://doi.org/10.1145/3397311 (2020).

Luo, B., Yang, Z., Chu, P. & Zhou, J. Human sleep posture recognition method based on interactive learning of ultra-long short-term information. IEEE Sens. J. 23, 13399–13410. https://doi.org/10.1109/JSEN.2023.3273533 (2023).

Liu, J. et al. Monitoring vital signs and postures during sleep using WiFi signals. IEEE Internet Things J. 5, 2071–2084. https://doi.org/10.1109/JIOT.2018.2822818 (2018).

Kau, L.-J. & Wang, M.-Y. Pressure-sensor-based sleep status and quality evaluation system. IEEE Sens. J. 23, 9739–9754. https://doi.org/10.1109/JSEN.2023.3262747 (2023).

Hsia, C. et al. Analysis and comparison of sleeping posture classification methods using pressure sensitive bed system. In 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society. 6131–6134. https://doi.org/10.1109/IEMBS.2009.5334694 (2009).

Diao, H. & Chen, C. Deep residual networks for sleep posture recognition with unobtrusive miniature scale smart mat system. IEEE Trans. Biomed. Circuits Syst. 15, 111–121. https://doi.org/10.1109/tbcas.2021.3053602 (2021).

Matar, G. & Lina, J.-M. Artificial neural network for in-bed posture classification using bed-sheet pressure sensors. IEEE J. Biomed. Health Inform. 24, 101–110. https://doi.org/10.1109/jbhi.2019.2899070 (2020).

Hu, Q. & Tang, X. A real time patient specific sleeping posture recognition system using pressure sensitive conductive sheet and transfer learning. IEEE Sens. J. 21, 6869–6879. https://doi.org/10.1109/jsen.2020.3043416 (2020).

Mineharu & Kuwahara, A. A study of automatic classification of sleeping position by a pressure-sensitive sensor. In Proceedings of the 2015 International Conference on Informatics, Electronics & Vision (ICIEV). 1–5. https://doi.org/10.1109/ICIEV.2015.7334059 (2015).

Hu, D., Gao, W. & Ang, K. K. Smart sleep monitoring: Sparse sensor-based spatiotemporal CNN for sleep posture detection. Sensors 24. https://doi.org/10.3390/s24154833 (2024).

Li, Y., Liu, Y., Zhang, Y., Wang, K. & Webber, K. G. Piezoelectric ceramics with hierarchical macro- and micro-pore channels for sensing applications. Addit. Manuf. 79, 103915. https://doi.org/10.1016/j.addma.2023.103915 (2024).

Zhang, Y. et al. Enhanced linearity of KNNS-BNKZ ceramics by combining the controls of phase composition and microstructure. Ceram. Int. 44, 8380–8386. https://doi.org/10.1016/j.ceramint.2018.02.030 (2018).

Jiao, C. & Su, B.-Y. Multiple instance dictionary learning for beat-to-beat heart rate monitoring from ballistocardiograms. IEEE Trans. Biomed. Eng. 65, 2634–2648. https://doi.org/10.1109/TBME.2018.2812602 (2018).

Mai, Y. & Chen, Z. Non-contact heartbeat detection based on ballistocardiogram using UNET and bidirectional long short-term memory. IEEE J. Biomed. Health Inform. 26, 3720–3730. https://doi.org/10.1109/JBHI.2022.3162396 (2022).

Orphanidou, C. & Drobnjak, I. Quality assessment of ambulatory ECG using wavelet entropy of the HRV signal. IEEE J. Biomed. Health Inform. 21, 1216–1223. https://doi.org/10.1109/JBHI.2016.2615316 (2017).

Liu, S. et al. Simultaneously-collected multimodal lying pose dataset: Enabling in-bed human pose monitoring. IEEE Trans. Pattern Anal. Mach. Intell. 45, 1106–1118. https://doi.org/10.1109/TPAMI.2022.3192389 (2023).

Enokibori, Y. & Mase, K. Data augmentation to build high performance DNN for in-bed posture classification. J. Inf. Process. 26, 718–727. https://doi.org/10.2197/ipsjjip.26.718 (2018).

Heydarzadeh, M. & Nourani, M. In-bed posture classification using deep autoencoders. In Proceedings of the 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). 3839–3842 (2016).

Xu, X. & Lin, F. Body-earth mover’s distance: A matching-based approach for sleep posture recognition. IEEE Trans. Biomed. Circuits Syst. 10, 1023–1035. https://doi.org/10.1109/tbcas.2016.2543686 (2016).

Shin, S. & Yousefian, P. A unified approach to wearable ballistocardiogram gating and wave localization. IEEE Trans. Biomed. Eng. 68, 1115–1122. https://doi.org/10.1109/TBME.2020.3010864 (2021).

Georgios, G. & Papangelis, A. Recognition of sleep patterns using a bed pressure mat. In Proceedings of the 4th ACM International Conference on PErvasive Technologies Related to Assistive Environments. –5 (2011).

Chen, Z. & Wang, Y. Remote recognition of in-bed postures using a thermopile array sensor with machine learning. IEEE Sens. J. 21, 10428–10436. https://doi.org/10.1109/JSEN.2021.3059681 (2021).

Author information

Contributions

Conceptualization, W.G., R.H. and D.H.; Investigation, D.H. and W.G.; Methodology, D.H., W.G., K.K.A. and M.H.; Project administration, W.G. and R.H.; Software, D.H.; Supervision, W.G., K.K.A. and G.C.; Validation, D.H. and X.L.; Writing—original draft, D.H. and M.H.; Writing—review and editing, D.H., W.G., K.K.A., M.H. and X.L. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Hu, D., Gao, W., Ang, K.K. et al. CHMMConvScaleNet: a hybrid convolutional neural network and continuous hidden Markov model with multi-scale features for sleep posture detection. Sci Rep 15, 12206 (2025). https://doi.org/10.1038/s41598-025-93541-0
