Abstract

Sign language (SL) is an effective mode of communication, which uses visual–physical methods like hand signals, expressions, and body actions to communicate between the difficulty of hearing and the deaf community, produce opinions, and carry significant conversations. SL recognition (SLR), the procedure of automatically identifying and construing gestures of SL, has gotten considerable attention recently owing to its latent link to the lack of communication between the deaf and the hearing world. Hand gesture detection is its domain, in which computer vision (CV) and artificial intelligence (AI) help deliver non-verbal communication between computers and humans by classifying the significant movements of the human hands. The emergence and constant growth of DL approaches have delivered motivation and momentum for evolving SLR. Therefore, this manuscript presents an Innovative Sign Language Recognition using Hand Pose with Hybrid Metaheuristic Optimization Algorithms in Deep Learning (ISLRHP-HMOADL) technique for Hearing-Impaired Persons. The main objective of the ISLRHP-HMOADL technique focused on hand pose recognition to improve the efficiency and accuracy of sign interpretation for hearing-impaired persons. Initially, the ISLRHP-HMOADL model performs image pre-processing using a wiener filter (WF) to enhance image quality by reducing noise. Furthermore, the fusion of three models, ResNeXt101, VGG19, and vision transformer (ViT), is employed for feature extraction to capture diverse and intricate spatial and contextual details from the images. The bidirectional gated recurrent unit (BiGRU) classifier is implemented for hand pose recognition. To further optimize the performance of the model, the ISLRHP-HMOADL model implements the hybrid crow search-improved grey wolf optimization (CS-IGWO) model for parameter tuning, achieving a finely-tuned configuration that enhances classification accuracy and robustness. A comprehensive experimental study is accomplished under the ASL alphabet dataset to exhibit the improved performance of the ISLRHP-HMOADL model. The comparative results of the ISLRHP-HMOADL model illustrated a superior accuracy value of 99.57% over existing techniques.

Similar content being viewed by others

Introduction

People with difficulties or disabilities are usually separated from accessing education, good health, and other social communications1. Assistive technology can alter the life of a differently abled individual to a large extent by all means. Vocal impairment and deafness are one of the main disabilities handled by human beings for centuries2. To interact in society, they need a manual interpreter. SL regenerates natural language into hand gestures, eyebrow movements, and facial expressions. SL is not universal; it switches from area to area3. Hand gesture detection is a critical feature of CV and Human–Computer Interaction (HCI), specifically in applications that facilitate nonverbal communication and SLR between non-deaf societies and hard-to-hearing. Hand gestures, essential for everyday activities, transfer particular information through distinct movements, hand orientation, and posture. They symbolize objects, digits, or letters, often depending on hand orientation for meaning; some gestures have universal meanings, and others vary depending on context or culture4. SL is the only interaction medium among non-deaf communities and the hard of hearing. Still, the hard-of-hearing society handles ongoing tasks to meet their basic needs in the digital phase. Hand gesture detection is not only utilized to help communication among the deaf but also in several other applications5. It stimulates new technologies like smart television, gesture-based signalling methods, design recognition industries, and virtual reality in the CV. Various applications have made significant progress, like controlling robots, playing with virtual musical instruments, and SL detection6. The progress in identifying gestures by hand has also captivated a lot of attention from the industrial sector in manufacturing communications between self-driving cars and human robots. The SL recognition, recognition of sports, mainly SL, the precision of human activity, posture and stance, and physical monitoring of exercises are all innovative and novel applications of hand gesture detection7. In SL detection, classical machine learning (ML) approaches can play important roles8. These approaches are employed for SL modelling, classification, and feature extraction. Still, classical ML methods frequently face certain restrictions and have attained a bottleneck9. Furthermore, these approaches may struggle with high-dimensional and complex data, such as the spatio-temporal dast in SL gestures. In recent years, deep learning (DL) approaches have surpassed advanced ML methods in various fields, particularly CV and natural language processing. DL methods have taken significant developments in SL identification, leading to flow in study investigations published on DL-based SLR10.

This manuscript presents an Innovative Sign Language Recognition using Hand Pose with Hybrid Metaheuristic Optimization Algorithms in Deep Learning (ISLRHP-HMOADL) technique for Hearing-Impaired Persons. The main objective of the ISLRHP-HMOADL technique focused on hand pose recognition to improve the efficiency and accuracy of sign interpretation for hearing-impaired persons. Initially, the ISLRHP-HMOADL model performs image pre-processing using a wiener filter (WF) to enhance image quality by reducing noise. Furthermore, the fusion of three models, ResNeXt101, VGG19, and vision transformer (ViT), is employed for feature extraction to capture diverse and intricate spatial and contextual details from the images. The bidirectional gated recurrent unit (BiGRU) classifier is implemented for hand pose recognition. To further optimize the performance of the model, the ISLRHP-HMOADL model implements the hybrid crow search-improved grey wolf optimization (CS-IGWO) model for parameter tuning, achieving a finely-tuned configuration that enhances classification accuracy and robustness. A comprehensive experimental study is accomplished under the ASL alphabet dataset to exhibit the improved performance of the ISLRHP-HMOADL model. The major contribution of the ISLRHP-HMOADL model is as follows.

-

The ISLRHP-HMOADL method utilizes WF for noise reduction in image pre-processing, improving the quality of input images. This step assists in maintaining the clarity of crucial features and reducing distortions. It ensures that subsequent feature extraction processes are more accurate and reliable, enhancing overall model performance.

-

The ISLRHP-HMOADL approach integrates ResNeXt101, VGG19, and ViT, benefiting from each architecture’s unique merits and improving its capability for extracting diverse and rich features. This fusion enhances the model’s robustness in recognizing intrinsic patterns. The approach ensures a better understanding of the data, improving feature extraction performance.

-

The ISLRHP-HMOADL method employs the BiGRU classifier to effectually capture both forward and backward dependencies in hand pose sequences, improving the recognition of dynamic SL gestures. By utilizing its capability to process sequential data, it enhances the accuracy of hand pose recognition. This enables the model to adapt to the temporal discrepancies in SL, giving more reliable performance.

-

The ISLRHP-HMOADL model implements the hybrid CS-IGWO approach for optimizing the technique’s hyperparameters, enhancing its accuracy by efficiently searching for optimal solutions. This tuning process improves the recognition system’s robustness, resulting in more reliable and precise performance. The integration of both CS and IGWO techniques ensures superior convergence and optimization.

-

The novelty of the ISLRHP-HMOADL method is in incorporating three powerful models such as ResNeXt101, VGG19, and ViT, for feature extraction, each contributing unique strengths for more accurate and comprehensive representation. Furthermore, utilizing the hybrid CS-IGWO optimization method for hyperparameter tuning significantly improves the precision and efficiency of the model. This dual integration of advanced architectures and optimized tuning drives superior performance in hand pose recognition.

Literature works

Sonsare et al.11 developed a method by incorporating YOLO Neural Architecture Search (NAS) for real-world SL detection, which can precisely identify and localize SL gestures. The DL component utilizes transformer models or recurrent neural networks (RNNs) to convert identified characters into text. This invention offers a complete solution for the hard of hearing and deaf people and permits them to communicate with the hearing world perfectly. Even though this method influences the power of advanced DL, existing approaches have restrictions. YOLO NAS is utilized to make a precise SL and a more effective translation method. Joshi et al.12 proposed an advanced system or app to support people with hearing or visual impairments interacting utilizing American SL (ASL). The developed method processes and captures hand gestures using image processing and ML methods, allowing the optimum transliteration of SL numbers and alphabets into machine-readable English text. This method applies Random Forest or DL methodology to classify the gestures and map them to particular numbers or alphabets. Kumar et al.13 proposed an SL recognition and detection method. The major goal of this method is to detect SL gestures ideally, interpret them into written text, and then convert them into spoken language, enhancing the communicative flexibility of a method. The developed method uses DL and ML methods, like LSTM (Long Short-Term Memory) and MediaPipe Holistic, for sign recognition and feature extraction. The authors14 developed a deep TL method by utilizing sufficiently labelled data of isolated signs. A new TL structure is projected, and the past few layers of the pertained system are re-educated utilizing the limited count of labelled data sentences.

Saw et al.15 developed an innovative method in ML for detecting SL, merging the capabilities of CV with cutting-edge DL approaches. This approach is supported by advanced neural network structures, particularly convolutional neural networks (CNN) and RNNs, proficient at processing complex visual data in real-time. Using a diverse and strong dataset, the projected method showcases its efficiency in real-time SL recognition and effectiveness and demonstrates higher precision. Ahlawat et al.16 developed a new method for gesture-based SL recognition, using a two-step process including LSTM networks and keypoint detection. This paper builds an effective SL method by ideally incorporating these key points into an LSTM-based architecture. Sivaraman et al.17 developed an SL Recognition employing an Improved Seagull Optimization Algorithm with DL (SLR-ISOADL) method. In the SLR-ISOADL method, a Bilateral Filtering (BF) method is used to eliminate noise. The SLR-ISOADL method utilizes the AlexNet model to derive and learn intrinsic patterns. Also, the ISOA is employed to optimize the hyper-parameter election of the AlexNet model. Ultimately, the Multilayer Perceptron (MLP) approach is utilized to classify and identify the SLs. Chouvatut et al.18 projected a Chinese finger SL detection technique depending on Adam and ResNet optimization and additional image processing methods to gain high precision. The proposed method compares the detection results to other CNN methods, which are broadly applied by DL methods for detection. Okorie, Blamah, and Bibu19 present a DL-based support system for speech- and hearing-impaired individuals, utilizing TensorFlow and transfer learning (TL) to convert Nigerian SL (NSL) signs into audio and spoken words into text, enabling real-time communication. Al Farid et al.20 propose a DL-based methodology for hand gesture recognition by utilizing RGB and depth data from a Kinect sensor. The approach utilizes single shot detector-CNN (SSD-CNN) for hand tracking, deep dilation for improved gesture visibility, and support vector machine (SVM) for classification.

Leiva et al.21 present a cost-effective real-time system for Pakistan SL (PSL) recognition using a glove with flex sensors and an MPU-6050 device. Rezaee et al.22 propose a hand gesture recognition model with three steps: (1) EMG feature extraction using time–frequency and fractal dimension features, (2) feature selection through soft ensembling of t-tests, entropy, and wrapper methods, and (3) SVM classification optimized with the Gases Brownian Motion Optimization (GBMO) algorithm. Jeyanthi et al.23 introduce an SLR system that uses ML, CV, and Media Pipe Hands for real-time hand landmark detection and interpretation. Sucharitha et al.24 present a DL methodology for SLR, utilizing TL with pre-trained VGG16 weights. The model extracts feature from images of Indian SL signs, covering all English alphabets and digits. Arularasan et al.25 introduce the SSODL-ASLR model for individuals with speech and hearing impairments using CNNs and HCI. It detects SL using Mask RCNN and classifies it with an SSO approach and SM-SVM model. Zhou et al.26 developed an ultrasensitive, anti-interference, flexible ionic composite nanofiber membrane pressure sensor for SLR, addressing issues like poor mechanical stability and external signal interference by employing polymer-blending interactions and unique hydrogen bond networks. Snehalatha et al.27 developed a real-time SLR system utilizing CV. It uses the TL model with pre-trainedamely VGG-16 and ResNet to train a deep neural network (DNN) on a labelled SL dataset. Zhang, Wang, and Wang28 propose a gloss-free Transformer-based framework for the SL translation (GFTLS-SLT) approach. This model utilizes multimodal alignment and fusion through cross-attention. Gesture Lexical Awareness (GLA) and Global Semantic Awareness (GSA) modules capture lexical and semantic meanings, improving SLT accuracy without relying on gloss.

The existing studies for SLR still encounter diverse limitations, comprising challenges in real-time processing, accuracy, and adaptability to various SLs. While several DL methods, namely CNNs, RNNs, and transformers, have exhibited promise, they often face difficulty with noisy environments, dynamic gestures, and large-scale datasets. Furthermore, most existing approaches concentrate on isolated sign recognition, limiting their applicability in continuous SL translation. There is also a lack of research on systems that can efficiently incorporate multimodal data, such as hand movements, facial expressions, and voice input. Addressing these issues could significantly improve the performance and usability of SLR systems.

Proposed methodology

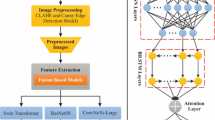

In this study, an ISLRHP-HMOADL technique for hearing-impaired persons is presented. The main objective of the ISLRHP-HMOADL technique focused on hand pose recognition to improve the efficiency and accuracy of sign interpretation for hearing-impaired persons. It involves various stages: image pre-processing, feature extraction, classification process, and hyperparameter tuning. Figure 1 represents the overall workflow procedure of the ISLRHP-HMOADL method.

Image pre-processing

Initially, the ISLRHP-HMOADL model performs the image pre-processing stage and employs WF to enhance image quality by reducing noise29. This model is chosen for its capability to effectually mitigate noise while preserving the crucial features of the input image. Unlike other noise reduction methods, WF adapts to local image statistics, enhancing noise filtering without blurring the edges or significant detail loss. This characteristic makes it highly appropriate for pre-processing images in tasks such as hand pose recognition, where edge sharpness and feature clarity are critical. The WF also gives a good balance between noise suppression and image quality, making it a reliable choice for improving the performance of subsequent feature extraction models. This method outperforms conventional filters by dynamically adjusting based on image variance, improving overall model accuracy. Figure 2 illustrates the structure of the WF model.

The WF is an effectual image pre-processing model that improves images’ excellence by decreasing blurring and noise, vital for precise hand pose detection in SL methods. Using WF, the noise is minimalized while upholding crucial features of the hand gestures, foremost to more defined and precise images. This pre-processing stage certifies that the following feature extraction techniques can function on higher-quality data, enhancing the complete performance of SLR methods. For deaf persons, improved image clarity enables real communication by permitting automatic methods to understand hand signs precisely. Eventually, the WF donates much to emerging efficient and robust SLR applications. This enhancement results in faster processing and more reliable interpretation of gestures. Ultimately, it bridges communication gaps, fostering inclusivity and enabling seamless interaction between the deaf and hearing communities.

Fusion of feature extraction

Then, the fusion of feature extraction using three models, ResNeXt101, VGG19, and ViT, is employed to capture diverse and intricate spatial and contextual details from the images30. This fusion technique is chosen to utilize each architecture’s merits in capturing local and global features. ResNeXt101 outperforms at handling complex spatial patterns, VGG19 is known for its deep network architecture that captures complex details, and ViT is effective at capturing long-range dependencies and contextual data in images. Integrating these models ensures a comprehensive feature representation, improving the technique’s capability to handle various image complexities. This multi-model fusion enhances the robustness of feature extraction, making it more adaptable to diverse SL gestures. This strategy gives a more robust and diverse feature set than single-model approaches, ultimately improving recognition accuracy.

ResNeXt101 model

ResNeXt101 is an advanced CNN framework well-known for its excellent capability to take composite spatial features. Embedded in the ResNeXt architecture, it presents the Block of ResNeXt that uses new split‐transform‐merge tactics significant to the Inception module. These tactical methods permit the combination of many transformations inside a solitary component, defining it excepting its precursors. The overview of a novel size recognized as cardinalities plays an essential part inside the ResNeXt framework, together with conventional sizes of width and depth. The factor of cardinality decides how transformations are structured and joined, considerably improving the system’s capability to take complex features and patterns.

ResNeXt has outshined in different CV tasks, overtaking other network structures such as Inception and ResNet on the ImageNet database. For example, a 101-layer ResNeXt attains greater precision than ResNet 200 while preserving only partial complexities. Moreover, it claims a more efficient design in comparison with Inception methods. Mainly, ResNeXt worked as the basis for the second-best ending in the classification task of ILSVRC 2016. It enables real-time recognition of dynamical physical gestures from live camera footage, using a hierarchical framework for the effective online process of offline-trained CNN frameworks. It contains a deep classifier and a lightweight detector, using the Levenshtein distance metric to assess one-time starts of identified movements. It attains sophisticated offline classification precision on NVIDIA and EgoGesture benchmarks, keeping near-offline performance in real-time classification and detection. The pre‐trained models and architecture codes are openly obtainable for additional utilization and exploration.

VGG19 model

VGG19, a new CNN structure, is an essential breakthrough in the CV area, demonstrating notable growth in image recognition. It includes a deep structural design, including 19 layers, with detailed planning of 3 fully connected (FC) layers and 16 convolutional layers. A differentiating representative of this method is its constant use of 3 × 3 convolutional filters through its convolutional layers, joined by a one-pixel stride. Furthermore, it uses 2 × 2 max-pooling layers using a two-pixel stride. Notably, the author’s study exposed that applying this reduced convolution filter (3 × 3) was more effective in information representation and feature extraction than more excellent filters with a strength of 5 × 5 or 7 × 7.

Its outstanding performance and powerful part in DL are authenticated by its training on the wide-ranging ImageNet database, which boasts a massive source of over 1 million images surrounding a thousand different class labels. The result of these training pieces was more and nothing less outstanding, as they achieved advanced outcomes in ImageNet’s localization and classification tasks. Additionally, its localization rate of error of 25.3% highlights its competence in precisely identifying objects inside the images, a crucial ability in real-world applications such as object recognition and detection.

ViT model

ViT signifies a paradigm change in CV by using the achievement of transformer structures in the tasks of natural language processing (NLP)31. Conventionally, CNNs are the mainstay of image classification methods, and then ViT presents a new process by handling an image as a patch sequence, similar to words in a sentence. This withdrawal from grid‐based handling allows it to take longer-term relationships and dependencies inside an image. The self-attention mechanism of the transformers permits them to concentrate on various portions of the input sequence and accomplish it extremely efficiently to capture contextual information globally.

During this field of ViT, this attention mechanism has been used to image patches, permitting the method to join various spatial areas concurrently. The ViT structure contains a first embedding layer. As the training develops, these previous layers are slowly released to permit essential alteration to the objective dataset. In the process of fine‐tuning, each layer is trained with a similar rate of learning. These decisions are based on the hyperparameter tuning outcomes gained using Bayesian optimization. Figure 3 represents the ViT model.

BiGRU-based classification process

For hand pose recognition, the BiGRU classifier is deployed. BiGRU is an RNN dedicated to handling a series of information32. This classifier is chosen due to its capability to capture sequential dependencies in time-series data, which is crucial for recognizing dynamic gestures in SL. Unlike conventional RNNs, BiGRU processes data in both forward and backward directions, allowing it to capture past and future context in the gesture sequence. This bidirectional approach improves the understanding of gesture transitions of the model and improves accuracy in classifying complex SL movements. Using BiGRU over other techniques like LSTMs or simple RNNs is advantageous for handling longer sequences with lesser computational resources. It also mitigates the risk of vanishing gradients, which can affect learning in deep architectures. This makes BiGRU an ideal choice for high-performance real-time SLR. Figure 4 specifies the BiGRU framework.

BiGRU techniques were employed in the framework to treat time-based data attained, which are mainly beneficial for recognizing and predicting. GRU is a structure of RNN. A GRU contains dual gates, such as a reset and an update. The bidirectional feature of BiGRU denotes the method that handles input data. A BiGRU contains dual GRUs, such as one manages the information from beginning to close (forward), whereas the other handles information from close to beginning (backwards). The last output for every time step is the progression of the backward and forward hidden layers (HLs). The working process of GRU was separated into four gates such as reset, update, candidate HL, and final HL, which are condensed by the below-mentioned calculations:

Here, \(z_{t}\) denotes an update gate at \(tth\) time, which defines how ample the previous data wants to be delivered. \(r_{t}\) refers to a reset gate at \(tth\) time that decides how much of the earlier data wish to be neglected. \(\tilde{h}_{t}\) indicates a candidate HL at \(tth\) time that covers the candidate data to move to the subsequent state. \(h_{t}\) specifies the last HL at \(tth\) time. \(h_{t - 1}\) is a HL at the preceding time-step \(t - 1\). \(x_{t}\) is an input at \(tth\) time. \(W_{Z} ,\;W_{r} ,\;W\) denote the weight matrices. \(b_{Z} ,\;b_{r} , \;and\; b\) refer to bias terms. \(\sigma\) indicates an activation function of the sigmoid. \(tanh\) is an activation function of Hyperbolic tangent. \(\odot\) indicates an element‐wise multiplication. \(\left[ , \right]\) is a concatenation.

In predicting and analyzing, BiGRU techniques were employed to sequence the feature vectors removed from every frame by \(the \;ResNet50\) method. BiGRU techniques are mainly appropriate for this task due to their ability to handle time-based series and take both future (backwards) and past (forward) data. The usage of BiGRU permits the technique to know patterns over time. This ability to recognize the tendency for long-term movement was vital in the prediction and identification.

Hyperparameter tuning process

To further enhance the model’s performance, the ISLRHP-HMOADL model implements a hybrid CS-IGWO model for parameter tuning, achieving a finely tuned configuration that boosts classification accuracy and robustness33,34. This model was selected for its effective optimization of hyperparameters, which incorporates the merits of CSA and IGWO. This hybrid technique balances exploration and exploitation, enabling faster convergence and enhanced accuracy over conventional methods. The population size plays a significant role in ensuring a diverse set of candidate solutions, averting premature convergence and improving the technique’s capability to explore a broader search space. The search learning rate controls the speed of convergence, ensuring that the algorithm adjusts its pace based on the complexity of the model. Furthermore, the mutation rate in CS-IGWO introduces diversity within the population, helping avoid local optima and enhancing global search capabilities. The convergence criteria of this approach ensure that the algorithm terminates when an optimal or near-optimal solution is found, improving the overall performance and efficiency of hyperparameter tuning. Compared to conventional optimization techniques like grid or random search, CS-IGWO gives a more computationally efficient solution while significantly improving model performance.

Recently, the GWO has been established as a metaheuristic optimizer technique. The hunting behaviours and leadership style of grey wolves are the basis of this optimizer method. Their social power hierarchy is highly designed, containing four different sub-groups, namely beta (\(\beta\)), alpha (\(\alpha\)), delta (\(\delta\)), and omega (\(\omega\)) wolves. In GWO, grey wolves show an exclusive social behaviour recognized as combined hunting, as shown in the mathematical formulation. Likewise, the Crow search algorithm (CSA) is a meta-heuristic technique that uses the crow’s intellectual hunt method to defend their foodstuff and evoke their hiding location.

The traditional GWO technique and CSA hold significant attention due to their simple implementation, restricted number of parameters, and strong convergence abilities. Compared to many other methods, CSA can accomplish vast populations and produce superior outcomes more quickly. Many researchers have enhanced GWO to progress the technique’s optimizer abilities by striking a superior balance between exploitation and exploration. The CS‐IGWO approach is presented, a novel hybrid type of GWO and CSA that enhances performance.

In GWO, the parameters ‘A’ and ‘a’ play a controlling role in balancing global and local searches. As an outcome, this work aims at an iterative non-linear exponential decay control method exemplified in Eq. (5) to enhance the optimum development of GWO by upgrading the parameter ‘a’.

A new type of GWO, named the weighted distance GWO (GWO‐WD), has been projected, where the locations of \(\omega\) wolves are assessed by utilizing the three leader wolves as the result of their fitness as in Eq. (6). The \(\alpha\) wolf contains the maximum value of weight, and \(\delta\) wolves correspondingly.

Here \(f_{\alpha } , \;f_{\beta }\), and \(f_{\delta }\) signify the fitness of \(\alpha ,\;\beta\), and \(\delta\) wolves, correspondingly. Moreover, wolves will study their positions per the Eq. (7).

Mutation strategy

In the GWO technique, the grey wolves slowly meet near the alpha wolf, resulting in a decreased range in local searching and a prompt convergence of the technique. The Cauchy‐Gaussian mutation strategy is used to enlarge the range of leader wolves and increase their capability for local search, with the main aim of improving populace range. Its mathematical formulation is expressed in Eqs. (8)–(12).

The location upgrading mechanism depends on CSA

In classical GWO, search agents (wolf) were upgraded depending upon \(\alpha ,\;\beta\), and \(\delta\) wolves in the optimizer procedure. The location upgrades method limits the ability of searching agents to discover the searching space well, leading to early convergence. To overwhelm these restrictions of classical GWO, a hybrid technique was utilized by uniting it with CSA for optimum trade‐off between exploration and exploitation. The CSA technique contains fight length ‘\(fl\)’ in its location upgrade. The mathematical formulation is expressed below:

Additionally, not every population member is upgraded in GWO-CSA by both \(\beta\) and \(\alpha\) wolves upgrading directions, then by \(\alpha\) wolf as in Eq. (37) to uphold the variability of the population.

To avoid premature convergence, a technique must idyllically be capable of discovering a larger searching space in the optimizer’s early phases. This states that a fixed balance probability among Eqs. (13) and (14) do not help get the essential ratio of exploration‐exploitation. The likelihood of the adaptive balance is calculated as in Eq. (15).

Here, \(t\) signifies the present iteration, and the \(T_{{{\text{ Max }}iter}}\) denotes the entire number of iterations. The pseudocode of the developed Hybrid CS‐IGWO was defined in Algorithm 1.

The hybrid CS-IGWO approach grows a fitness function (FF) to improve classifier solutions. It defines a positive value to suggest the good efficiency of candidate outcomes. This paper assumes that the minimalized classifier error ratio is FF.

Result analysis

The performance evaluation of the ISLRHP-HMOADL methodology is confirmed under the ASL alphabet dataset35. The database is a set of alphabets of images from the ASL, divided into 29 folders, which indicate the numerous class labels. The dataset details are shown in Table 1, and the image sample is illustrated in Fig. 5. The suggested technique is simulated by employing Python 3.6.5 tool on PC i5-8600k, 250 GB SSD, GeForce 1050Ti 4 GB, 16 GB RAM, and 1 TB HDD. The parameter settings are provided: learning rate: 0.01, activation: ReLU, epoch count: 50, dropout: 0.5, and batch size: 5.

Figure 6 shows the confusion matrix made by the ISLRHP-HMOADL approach under 70%TRPH. The results show that the ISLRHP-HMOADL model can recognize and identify 29 class labels.

Table 2 and Fig. 7 denote the classifier outcomes of the ISLRHP-HMOADL technique under 70%TRPH. The outcomes indicate that the ISLRHP-HMOADL technique correctly recognized all the samples. With 70%TRPH, the ISLRHP-HMOADL approach presents an average \(accu_{y}\), \(prec_{n}\), \(sens_{y}\), \(spec_{y}\), and \(F1_{measure}\) of 99.56%, 94.33%, 93.66%, 99.77%, and 93.81%, respectively.

Figure 8 provides the confusion matrix produced by the ISLRHP-HMOADL technique under 30%TSPH. The outcomes recognize that the ISLRHP-HMOADL methodology has proficient identification and detection of all class labels.

Table 3 and Fig. 9 represent the classifier results of the ISLRHP-HMOADL approach under 30%TSPH. The results imply that the ISLRHP-HMOADL model correctly established all the samples. With 30%TSPH, the ISLRHP-HMOADL model presents an average \(accu_{y}\), \(prec_{n}\), \(sens_{y}\), \(spec_{y}\), and \(F1_{measure}\) of 99.57%, 94.43%, 93.70%, 99.78%, and 93.89%, correspondingly.

Figure 10 shows the training (TRA) \(accu_{y}\) and validation (VAL) \(accu_{y}\) analysis of the ISLRHP-HMOADL method. The \(accu_{y}\) values are computed throughout 0–25 epochs. The outcome highlights that the TRA and VAL \(accu_{y}\) graphs display a rising tendency, which informed the capacity of the ISLRHP-HMOADL methodology and its superior performance over various iterations. Also, the TRA and VAL \(accu_{y}\) remain closer over the epochs, which specifies minimum overfitting and demonstrates higher efficiency of the ISLRHP-HMOADL methodology, guaranteeing consistent prediction on hidden models.

Figure 11 demonstrates the TRA loss (TRALOS) and VAL loss (VALLOS) values of the ISLRHP-HMOADL method. The loss values are computed over the range of 0–25 epochs. It is denoted that the TRALS and VALLS curves exemplify a reducing tendency, informing the ability of the ISLRHP-HMOADL technique to balance the trade-off between generalize and data fitting. The continuous decrease in loss values guarantees the ISLRHP-HMOADL technique’s maximal performance and tuning of the prediction outcomes over time.

In Fig. 12, the precision-recall (PR) graph examination of the ISLRHP-HMOADL methodology explains its performance by plotting Precision against Recall for class labels. The figure illustrates that the ISLRHP-HMOADL technique constantly achieves maximum PR analysis across dissimilar classes, signifying its capacity to maintain a significant part of true positive predictions between every positive prediction (precision), which likewise captures a considerable ratio of actual positives (recall). The steady rise in PR analysis between all classes depicts the proficiency of the ISLRHP-HMOADL technique in the classification procedure.

In Fig. 13, the ROC analysis of the ISLRHP-HMOADL methodology is investigated. The results imply that the ISLRHP-HMOADL technique achieves improved ROC curves over each class, demonstrating essential proficiency in discriminating the class labels. This dependable drift of higher ROC analysis over several class labels indicates the capable performance of the ISLRHP-HMOADL technique in predicting class labels, highlighting the robust nature of the classification process.

Table 4 compares the results of the ISLRHP-HMOADL technique with those of the existing methods36,37,38,39.

Figure 14 illustrates the comparative examination of ISLRHP-HMOADL methodology with existing \(accu_{y}\), \(prec_{n}\) and \(F1_{measure}\) techniques. The simulation result stated that the ISLRHP-HMOADL approach outperformed superior performances. Based on \(accu_{y}\), the ISLRHP-HMOADL approach has a better \(accu_{y}\) of 99.57%. In contrast, the DNN-Squeezenet, CNN, RBM, ASLAR-HPE, StackGAN, VGG19, InceptionResnet, SVM, MobileNetV2, hand-aware graph convolution network (HA-GCN), Transformer models have lower \(accu_{y}\) of 90.78%, 93.00%, 98.13%, 98.45%, 97.11%, 96.30%, 99.36%, 96.89%, and 93.58%, 98.93%, and 99.05%, correspondingly. Furthermore, dependent on \(prec_{n}\), the ISLRHP-HMOADL method has better \(prec_{n}\) of 94.43% where the DNN-Squeezenet, CNN, RBM, ASLAR-HPE, StackGAN, VGG19, InceptionResnet, SVM, MobileNetV2, HA-GCN, Transformer models have inferior \(prec_{n}\) of 91.46%, 91.39%, 93.29%, 91.90%, 92.60%, 93.51%, 93.12%, 93.76%, 91.89%, 93.82%, and 92.59%, respectively. Lastly, based on \(F1_{measure}\), the ISLRHP-HMOADL method has maximal \(F1_{measure}\) of 93.89% while the DNN-Squeezenet, CNN, RBM, ASLAR-HPE, StackGAN, VGG19, InceptionResnet, SVM, MobileNetV2, HA-GCN, Transformer models have minimal \(F1_{measure}\) of 92.53%, 92.65%, 92.65%, 93.04%, 92.88%, 92.25%, 91.99%, 92.60%, 93.22%, 93.35%, and 93.08%, correspondingly.

Figure 15 demonstrates the comparative results of the ISLRHP-HMOADL technique with existing methods in terms of \(sens_{y}\) and \(spec_{y}\). The simulation result stated that the ISLRHP-HMOADL technique outperformed enhanced performances. Based on \(sens_{y}\), the ISLRHP-HMOADL model has maximum \(sens_{y}\) of 93.70% where the DNN-Squeezenet, CNN, RBM, ASLAR-HPE, StackGAN, VGG19, InceptionResnet, SVM, MobileNetV2, HA-GCN, Transformer models have lower \(accu_{y}\) of 92.44%, 92.16%, 92.71%, 93.01%, 93.12%, 93.01%, 92.25%, 91.94%, 92.74%, 93.38%, and 93.03%, correspondingly. At the same time, based on \(spec_{y}\), the ISLRHP-HMOADL method has a maximum \(spec_{y}\) of 99.78%. In contrast, the DNN-Squeezenet, CNN, RBM, ASLAR-HPE, StackGAN, VGG19, InceptionResnet, SVM, MobileNetV2, HA-GCN, Transformer models have minimum \(spec_{y}\) of 97.65%, 97.76%, 98.30%, 96.61%, 96.69%, 96.85%, 97.07%, 97.89%, 98.28%, 98.86%, and 97.26%, correspondingly.

In Table 5 and Fig. 16, the comparative analysis of the ISLRHP-HMOADL methodology is definite in processing time (PT). The outcomes indicate that the ISLRHP-HMOADL methodology gets superior performance. Dependent on PT, the ISLRHP-HMOADL technique presents lower PT of 4.64 min where the DNN-Squeezenet, CNN, RBM, ASLAR-HPE, StackGAN, VGG19, InceptionResnet, SVM, MobileNetV2, HA-GCN, Transformer approaches achieve greater PT values of 9.74 min, 8.30 min, 7.48 min, 9.68 min, 8.06 min, 8.27 min, 9.22 min, 7.92 min, 8.54 min, 9.13 min, and 6.98 min, respectively.

Table 6 and Fig. 17 illustrate the ablation study of the ISLRHP-HMOADL technique with existing methods. Each demonstrated robust accuracy and precision, starting with ViT, ResNetXt101, and VGG19 models. Still, integrating the CS-IGWO optimization improved \(accu_{y}\), \(prec_{n}\), and \(F1_{measure}\), emphasizing the significance of hyperparameter tuning. The BiGRU model, which added sequential learning capability, additionally improved the ability of the model to capture dynamic hand gestures, enhancing all metrics significantly. Finally, the ISLRHP-HMOADL model, integrating all components, attained the highest performance across all evaluation metrics, comprising \(accu_{y}\), \(prec_{n}\), \(sens_{y}\), \(spec_{y}\), and \(F1_{measure}\), illustrating that the integration of these techniques results in superior hand gesture recognition.

Conclusion

In this study, an ISLRHP-HMOADL technique for hearing-impaired persons is presented. The main objective of the ISLRHP-HMOADL technique focused on hand pose recognition to improve the efficiency and accuracy of sign interpretation for hearing-impaired persons. Initially, the ISLRHP-HMOADL model takes place in the image pre-processing stage and employs WF to enhance image quality by reducing noise. Next, the fusion of feature extraction using three models such as ResNeXt101, VGG19, and ViT, is employed to capture diverse and intricate spatial and contextual details from the images. For hand pose recognition, the BiGRU classifier is deployed. To further optimize the model’s performance, the ISLRHP-HMOADL model implements a hybrid CS-IGWO approach for parameter tuning, achieving a finely tuned configuration that boosts classification accuracy and robustness. A comprehensive experimental study is accomplished under the ASL alphabet dataset to exhibit the improved performance of the ISLRHP-HMOADL model. The comparative results of the ISLRHP-HMOADL model illustrated a superior accuracy value of 99.57% over existing techniques. The limitations of the ISLRHP-HMOADL model comprise the reliance on a limited dataset, which restricts the generalizability of the model to diverse real-world SL gestures. Moreover, the performance of the model may degrade under varying lighting conditions and backgrounds, affecting recognition accuracy. The model also encounters challenges with hand occlusions and complex gestures, restricting its efficiency in dynamic environments. Furthermore, real-time processing capabilities could be impacted in devices with lower computational power. Future work could concentrate on expanding the dataset to comprise a broader range of SLs and environmental variations. Exploring advanced TL techniques and integrating multimodal data could improve robustness. Furthermore, optimizing the model for mobile and edge devices could facilitate real-world deployment.

Data availability

The data supporting this study’s findings are openly available in the Kaggle repository at https://www.kaggle.com/datasets/grassknoted/asl-alphabet, reference number35.

References

Miah, S. M., Hasan, M. A. M., Shin, J., Okuyama, Y. & Tomioka, Y. Multistage spatial attention-based neural network for hand gesture recognition. Computers 12(1), 13 (2023).

Egawa, R., Miah, A. S. M., Hirooka, K., Tomioka, Y. & Shin, J. Dynamic fall detection using graph-based spatial temporal convolution and attention network. Electronics 12(15), 3234 (2023).

Khari, M., Garg, A. K., Crespo, R. G. & Verdú, E. Gesture recognition of RGB and RGB-D static images using convolutional neural networks. Int. J. Interact. Multim. Artif. Intell. 5, 22–27 (2019).

Obi, Y., Claudio, K. S., Budiman, V. M., Achmad, S. & Kurniawan, A. Sign language recognition system for communicating to people with disabilities. Proc. Comput. Sci. 216, 13–20 (2023).

Rahim, M. A., Miah, A. S. M., Sayeed, A. & Shin, J. Hand gesture recognition based on optimal segmentation in human-computer interaction. In Proc. 3rd IEEE Int. Conf. Knowl. Innov. Invention (ICKII), 163–166 (2020).

Murray, J., Snoddon, K., Meulder, M. & Underwood, K. Intersectional inclusion for deaf learners: Moving beyond general comment no. 4 on article 24 of the united nations convention on the rights of persons with disabilities. Int. J. Inclusive Educ. 24, 691–705 (2018).

Roux, I. et al. CHD7 variants associated with hearing loss and enlargement of the vestibular aqueduct. Hum. Genet. 142, 1499–1517 (2023).

Barbhuiya, A. A., Karsh, R. K. & Jain, R. CNN based feature extraction and classification for sign language. Multimedia Tools Appl. 802, 3051–3069 (2021).

Aly, S. & Aly, W. DeepArSLR: A novel signer-independent deep learning framework for isolated Arabic sign language gestures recognition. IEEE Access 8, 83199–83212 (2020).

Mohamed, L., Soliman, G. & Nasser, A. A survey on sentiment analysis algorithms and techniques for arabic textual data. Fusion Pract. Appl. 2(2), 74–87 (2020).

Sonsare, P., Gupta, D., Sayyed, J., Nandha, P. & Laira, S. Sign language to text conversion using deep learning techniques. In 2024 2nd International Conference on Computer, Communication and Control (IC4) 1–6 (IEEE, 2024).

Joshi, S., Sharma, B., Tripathi, D. & Shah, A. Hand sign language detection using deep learning and computer vision. In 2023 International Conference on Ambient Intelligence, Knowledge Informatics and Industrial Electronics (AIKIIE) 1–4 (IEEE, 2023).

Kumar, M., Bhatt, C., Manori, S., Khati, R., Singh, T. & Taranath, N. L. Sign language recognition by LSTM-driven speech synthesis. In 2024 Second International Conference on Advances in Information Technology (ICAIT), Vol. 1, 1–6 (IEEE, 2024).

Sharma, S., Gupta, R. & Kumar, A. Continuous sign language recognition using isolated signs data and deep transfer learning. J. Ambient Intell. Human. Comput., 1–12 (2023).

Saw, P. K., Gupta, S., Raj, A., Chauhan, S. & Agrawal, K. Gesture recognition in sign language translation: a deep learning approach. In 2024 International Conference on Integrated Circuits, Communication, and Computing Systems (ICIC3S), Vol. 1, 1–7 (IEEE, 2024).

Ahlawat, R., Adlakha, S., Malik, M. & Jatana, N. Action and gesture recognition using deep learning and computer vision for deaf and dumb people. In Proceedings of the 5th International Conference on Information Management and Machine Intelligence 1–8 (2023).

Sivaraman, R., Santiago, S., Chinnathambi, K., Sarkar, S., Sangeethaa, S. N. & Srimathi, S. Sign language recognition using improved seagull optimization algorithm with deep learning model. In 2024 Second International Conference on Intelligent Cyber Physical Systems and Internet of Things (ICoICI) 1566–1571 (IEEE, 2024).

Chouvatut, V., Panyangam, B. & Huang, J. Chinese finger sign language recognition method with ResNet transfer learning. In 2023 15th International Conference on Knowledge and Smart Technology (KST) 1–6 (IEEE, 2023).

Okorie, E., Blamah, N. V. & Bibu, G. D. An intelligent support system for speech and hearing-impaired persons using deep learning for Nigerian sign language. Available at SSRN 5088026.

Al Farid, F. et al. Single shot detector CNN and deep dilated masks for vision-based hand gesture recognition from video sequences. IEEE Access 12, 28564–28574 (2024).

Leiva, V., Rahman, M. Z. U., Akbar, M. A., Castro, C., Huerta, M. & Riaz, M. T. A real-time intelligent system based on machine-learning methods for improving communication in sign language. IEEE Access (2025).

Rezaee, K., Ghaderi, F., Taheri Gorji, H. & Haddadnia, J. Hand gesture and movement recognition based on electromyogram signals using soft ensembling feature selection and optimized classifier. Iran. J. Biomed. Eng. 14(3), 195–208 (2020).

Jeyanthi, P., Ajees, A., Kumar, A. P., Revathy, S. & Gladence, M. Interactive hand gesture recognition with audio response. In Multidisciplinary Applications of AI and Quantum Networking 195–212 (IGI Global, 2025).

Sucharitha, G., Rufus, D. C. B., Reddy, C. M. & Sekhar, G. C. Unlocking sign language interpretation: Leveraging transfer learning in deep learning models. In Artificial Intelligence Technologies for Engineering Applications 79–92 (CRC Press, 2025).

Arularasan, R., Balaji, D., Garugu, S., Jallepalli, V. R., Nithyanandh, S. & Singaram, G. Enhancing sign language recognition for hearing-impaired individuals using deep learning. In 2024 International Conference on Data Science and Network Security (ICDSNS) 1–6 (IEEE, 2024).

Zhou, Y., Guo, S., Zhou, Y., Zhao, L., Wang, T., Yan, X., Liu, F., Ravi, S. K., Sun, P., Tan, S. C. & Lu, G. Ionic composite nanofiber membrane‐based ultra‐sensitive and anti‐interference flexible pressure sensors for intelligent sign language recognition. Adv. Funct. Mater., 2425586 (2025).

Snehalatha, N., Kumar, S. M., Kavitha, H., Nisha, S., Gowda, P. & Prasad, S. K. Sign language detection using action recognition LSTM deep learning model. In 2024 Second International Conference on Networks, Multimedia and Information Technology (NMITCON) 1–6 (IEEE, 2024).

Zhang, J., Wang, Q. & Wang, Q. GFTLS-SLT: Gloss-free transformer based lexical and semantic awareness framework for multimodal sign language translation. In IEEE Transactions on Multimedia (2025).

Xie, H. W., Guo, H., Zhou, G. Q., Nguyen, N. Q. & Prager, R. W. Improved ultrasound image quality with pixel-based beamforming using a Wiener-filter and a SNR-dependent coherence factor. Ultrasonics 119, 106594 (2022).

Josef, A. & Kusuma, G. P. Alphabet recognition in sign language using deep learning algorithm with Bayesian optimization. Revue d’Intelligence Artificielle 38(3) (2024).

Kumarappan, J., Rajasekar, E., Vairavasundaram, S., Kotecha, K. & Kulkarni, A. Siamese graph convolutional split-attention network with NLP based social sentimental data for enhanced stock price predictions. J. Big Data 11(1), 154 (2024).

Wan, Q., Zhang, Z., Jiang, L., Wang, Z. & Zhou, Y. Image anomaly detection and prediction scheme based on SSA optimized ResNet50-BiGRU model. arXiv preprint arXiv:2406.13987 (2024).

Syama, S., Ramprabhakar, J., Anand, R. & Guerrero, J. M. An integrated binary metaheuristic approach in dynamic unit commitment and economic emission dispatch for hybrid energy systems. Sci. Rep. 14(1), 23964 (2024).

Rajasekar, E., Chandra, H., Pears, N., Vairavasundaram, S. & Kotecha, K. Lung image quality assessment and diagnosis using generative autoencoders in unsupervised ensemble learning. Biomed. Signal Process. Control 102, 107268 (2025).

Shin, J., Matsuoka, A., Hasan, M. A. M. & Srizon, A. Y. American sign language alphabet recognition by extracting feature from hand pose estimation. Sensors 21(17), 5856 (2021).

Natarajan, B. et al. Development of an end-to-end deep learning framework for sign language recognition, translation, and video generation. IEEE Access 10, 104358–104374 (2022).

Miah, A. S. M., Hasan, M. A. M., Tomioka, Y. & Shin, J. Hand gesture recognition for multi-culture sign language using graph and general deep learning network. IEEE Open J. Comput. Soc. (2024).

Song, J. et al. Hand-aware graph convolution network for skeleton-based sign language recognition. J. Inf. Intell. 3(1), 36–50 (2025).

Acknowledgements

The authors thank the King Salman Center for Disability Research for funding this work through Research Group no KSRG-2024-238.

Author information

Authors and Affiliations

Contributions

Conceptualization: Bayan Alabduallah, Reham Al Dayil, Data curation and Formal analysis: Bayan Alabduallah, Investigation and Methodology: Bayan Alabduallah, Reham Al Dayil, Project administration and Resources: Supervision; Bayan Alabduallah, Writing—original draft: Bayan Alabduallah, Reham Al Dayil, Abdulwhab Alkharashi, Amani A Alneil, Validation and Visualization: Bayan Alabduallah, Reham Al Dayil, Abdulwhab Alkharashi, Amani A Alneil, Writing—review and editing, Bayan Alabduallah, Reham Al Dayil, Abdulwhab Alkharashi, Amani A Alneil, All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Alabduallah, B., Al Dayil, R., Alkharashi, A. et al. Innovative hand pose based sign language recognition using hybrid metaheuristic optimization algorithms with deep learning model for hearing impaired persons. Sci Rep 15, 9320 (2025). https://doi.org/10.1038/s41598-025-93559-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-93559-4

Keywords

This article is cited by

-

An automated framework for qur’anic education of the hearing-impaired using body pose classification and Arabic sign language integration

Scientific Reports (2026)

-

Enhanced feature fusion with hand gesture recognition system for sign language accessibility to aid hearing and speech impaired individuals

Scientific Reports (2026)

-

Ppent: a pose embedding refinement framework aligning estimated and motion-captured skeletons for real-time word-level sign language recognition

International Journal of Information Technology (2025)