Abstract

Current black-box adversarial attacks have proven highly effective at crafting adversarial texts against natural language processing models, exposing potential robustness vulnerabilities of these models. However, existing attack techniques are inefficient because they do not account for the number of queries required to generate adversarial texts, which creates a gap between existing methods and practical adversarial attack scenarios. To this end, this work proposes a query-efficient hard-label attack method called QEAttack, which leverages the genetic algorithm to produce persuasive and semantically equivalent adversarial texts while observing only the final label predicted by the victim model. To reduce query counts, a dual-gradient fusion strategy and a locality sensitive hashing based sentence-level semantic clustering strategy are proposed and applied to the crossover and mutation steps, respectively. Extensive experiments and ablation studies are conducted on three victim models with varying architectures across five benchmark datasets. The results demonstrate that QEAttack consistently achieves high attack success rates with significantly fewer queries, while maintaining or even enhancing the imperceptibility and quality of the generated adversarial texts.


Introduction

Natural Language Processing (NLP) has advanced significantly thanks to rapid progress in computing hardware and the large-scale collection of data. These advances have transformed various domains, including sentiment analysis1,2, machine translation3,4, and question answering5,6,7 systems, which are widely used in real-world applications such as information retrieval, healthcare, and e-commerce8,9,10,11,12. Recently, the emergence of large language models such as GPT-4 (ref. 13) has pushed the NLP field to new heights.

However, the growing dependence on NLP models has raised concerns about their vulnerability to adversarial attacks, which poses a significant challenge to the robustness and reliability of NLP systems. Recent studies14,15 have demonstrated that attackers can manipulate or deceive NLP models with adversarial examples crafted by adding tiny perturbations to the original input. To humans, such adversarial examples are almost indistinguishable from the original ones. More concretely, the vulnerability analysis in the work16 showed that even ChatGPT, a widely used large language model known for its state-of-the-art performance on various NLP tasks, remains significantly susceptible to adversarial attacks.

To improve the reliability of Deep Neural Networks (DNNs) in real-world NLP applications, researchers study their vulnerability by developing stronger adversarial attacks on text. Unlike attacks in the computer vision field, adversarial attacks in NLP face stricter constraints17. Text is discrete and carries semantics: altering a single word can entirely change its meaning or be easily noticed by humans. Hence, the generated adversarial texts must be syntactically correct and semantically consistent with the original ones.

Previous studies have progressively made textual adversarial attacks more realistic, moving from white-box attacks18,19,20,21 to black-box ones22,23,24,25,26,27. White-box attacks require knowledge of the victim model's parameters, network architecture, or gradients to produce adversarial texts, whereas black-box attacks only require the victim model's outputs for given input texts. Early black-box approaches were mostly soft-label (also known as score-based) attacks, which require the victim model to provide probability values for each category22,23,24,25. However, these approaches are of limited practicality, because DNNs are typically deployed behind application programming interfaces in real-world scenarios, which restricts users' access to the probability distribution over all categories. Consequently, researchers have advanced black-box textual adversarial attacks in the hard-label (decision-based) setting, which relies solely on the final label, i.e., the one with the highest likelihood, predicted by the victim model26,27,28. Despite such progress, a gap remains between prevalent methodologies and realistic adversarial attack scenarios.

In practice, deployed DNN systems can often detect and limit the number of queries a user makes. Hence, minimizing the number of queries to the victim model is imperative for executing hard-label attacks effectively. The work26 first randomly replaced words with their synonyms to find examples that meet the adversarial criterion, and then employed the genetic algorithm (GA) to move the adversarial examples toward the decision boundary, thereby improving the semantic similarity between adversarial and original examples. However, this approach is inefficient because GA requires numerous queries to move adversarial texts close to the decision boundary of the victim model. To address this challenge, TextHoaxer27 formulated the hard-label adversarial attack as an optimization problem in the word embedding space and solved it with a gradient-based strategy. TextDecepter28 first identified the important sentences and words, and then selected appropriate words to perturb. Although these approaches reduce the query count to some extent, optimizing a single candidate greatly increases the risk of converging to suboptimal solutions. For example, TextDecepter reduces the number of queries but suffers a significant drop in attack success rate. Therefore, developing query-efficient optimization approaches for hard-label adversarial attacks remains a significant open challenge that warrants further investigation.

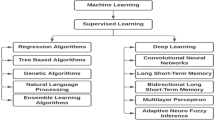

Figure 1. Overview of the proposed QEAttack. Given a text x, \(x_0^{\prime }\) and \(x_1^{\prime }\) are the adversarial texts obtained after random initialization and search space reduction, respectively. Step (a) mutates \(x_1^{\prime }\) to generate candidates with higher semantic similarity. Step (b) performs crossover to obtain a better adversarial text \(x_2^{\prime }\), and step (c) then mutates it. Steps (b) and (c) are executed alternately several times to find the optimal adversarial text \(x^*\).

This work focuses on query efficiency in hard-label black-box attacks on NLP tasks. The proposed QEAttack exploits the GA's effectiveness in solving combinatorial optimization problems and its direct applicability to discrete input spaces to maximize the semantic similarity between the original text and the adversarial one, as shown in Figure 1. More importantly, this work proposes a dual-gradient fusion strategy and a locality sensitive hashing (LSH) based sentence-level semantic clustering strategy, applied to the GA's crossover and mutation steps respectively, to further reduce the number of queries required by the GA. The main contributions of this work are as follows:

- This research proposes a novel GA-based hard-label attack method called QEAttack, which generates natural and semantically similar adversarial texts for text classification tasks in hard-label black-box attack scenarios.

- This research proposes a dual-gradient fusion strategy which, inspired by the Adam optimization method, generates a child by combining the gradient information of two parents, improving the attack ability and quality of adversarial examples while decreasing the number of queries in the GA's crossover operation.

- This research proposes a locality sensitive hashing based sentence-level semantic clustering strategy, in which LSH maps candidates with similar sentence embeddings to the same bucket, substantially reducing the number of queries in the GA's mutation operation.

- Extensive experiments demonstrate that QEAttack requires fewer queries than most state-of-the-art methods while achieving comparable attack success rates and enhancing the imperceptibility and quality of the generated adversarial texts.

Related work

DNNs, exemplified by pre-trained models such as BERT and GPT, have recently achieved remarkable capabilities across a range of NLP tasks. Thus, the potential threat of adversarial attacks on these DNNs has drawn researchers' attention, and various textual adversarial attacks have been proposed to investigate and strengthen their resilience against adversarial threats. While early studies were dominated by white-box attacks, which require knowledge of the victim model's internals, such as its parameters and architecture, recent studies have shifted toward black-box scenarios, where attackers only need the victim's outputs for input texts.

Since DNN models produce two types of output (probability scores and predicted labels), black-box adversarial attack methods can be categorized as soft-label attacks and hard-label attacks. The former obtain the probability scores of all categories from the victim model, which provides more information for crafting adversarial examples, while the latter only observe the final label predicted by the victim model. For instance, when a three-class sentiment classification model takes a text as input, an attacker in the soft-label setting obtains the confidence scores \(p=[p_1, p_2, p_3]\) for all three categories \(c=[c_1, c_2, c_3]\), whereas an attacker in the hard-label setting only learns that the model classifies the text as “positive”.
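The difference between the two settings can be illustrated with a small wrapper. This is a minimal sketch that assumes a hypothetical scoring function returning raw logits per class; `soft` returns the probability vector a soft-label attacker would see, `hard` returns only the top-1 label, and both count queries. The class and method names are illustrative and not part of any library.

```python
import math
from typing import Callable, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class VictimOracle:
    """Hypothetical wrapper around a victim model that counts every query."""

    def __init__(self, score_fn: Callable[[str], Sequence[float]], labels: Sequence[str]):
        self.score_fn = score_fn      # assumed: text -> raw logits, one per class
        self.labels = list(labels)
        self.queries = 0

    def soft(self, text: str) -> List[float]:
        """Soft-label view: the full probability vector p = [p_1, ..., p_C]."""
        self.queries += 1
        return softmax(self.score_fn(text))

    def hard(self, text: str) -> str:
        """Hard-label view: only the predicted (arg-max) label."""
        self.queries += 1
        logits = self.score_fn(text)
        return self.labels[max(range(len(logits)), key=lambda k: logits[k])]


def toy_scores(text: str) -> List[float]:
    """Toy three-class sentiment 'model', for illustration only."""
    return [0.2, 0.1, 2.0] if "great" in text else [2.0, 0.1, 0.2]


oracle = VictimOracle(toy_scores, ["negative", "neutral", "positive"])
probs = oracle.soft("a great movie")   # three probabilities summing to 1
label = oracle.hard("a great movie")   # "positive"
```

Both views cost one query each; a hard-label attack such as QEAttack must make do with the second.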

In the early stage, researchers proposed black-box adversarial attack methods for the soft-label setting, in which the probability scores are leveraged to guide the generation of adversarial texts. The work22 introduced Probabilistic Weighted Word Saliency (PWWS), a synonym substitution approach. PWWS combined word saliency and classification probability to determine the order in which words are replaced: the former measures how much the original word affects the classification probability, while the latter measures how effective a substitution is in terms of attack performance. PWWS was highly effective at minimizing the number of replacements. TextFooler23 involves two steps. It first used the victim model's output to calculate the probability change, word saliency, and classification probability. It then adopted three strategies, namely synonym extraction, part-of-speech checking, and semantic similarity checking, to identify and replace words with the most semantically similar and grammatically correct substitutes. Unlike the approaches mentioned above, the work29 (denoted as GA-A) used GA to randomly select words from the input text and then performed synonym substitution on the chosen words to maximize the probability that the victim model outputs a wrong result. The work24 (denoted as PSO-A) employed the particle swarm optimization (PSO) algorithm30 to search the sememe space for the words to be altered. It showed that substitutes identified using word embeddings or language models vary in their semantic consistency with the original word and in their appropriateness within the given context. Moreover, the work25 (denoted as LSHA) computed the importance score of each input word through the attention mechanism and locality sensitive hashing, and then modified the input words in descending order of importance.

Recently, owing to the impracticality of soft-label attack methods in real-world scenarios, researchers have shifted their focus to the more challenging hard-label attacks, where several works have made notable and successful initial progress. The work26 (denoted as HLBB) first generated an initial adversarial example through random initialization and then used GA to enhance the semantic similarity between the original and adversarial texts. Nevertheless, because a single candidate provides too limited a search space for GA to produce high-quality adversarial examples, GA-based optimization must first generate a substantial population of adversarial candidates, which requires a considerable number of queries to the DNN system and makes it unsuitable for real-world applications. To tackle this issue, TextHoaxer27 formulated the optimization of adversarial perturbations as a gradient-based optimization problem, explicitly targeting the perturbation matrix in the word embedding space. It maintained a single candidate and leveraged the gradient of the objective function with respect to the perturbation matrix to craft adversarial texts. LeapAttack31 used the difference between word vectors to represent the semantic deviation between two words, and employed gradient estimation in the continuous word vector space to optimize the semantics of the generated adversarial texts. SSPAttack32 first crafted an adversarial example via initialization and eliminated superfluous replacement words. It then determined the replacement order and searched for an anchor synonym, thereby avoiding the need to examine all synonyms. Finally, it moved the substitute words closer to the original ones until an optimal adversarial example was obtained. PAT33 used the distance from each candidate word to the estimated adversarial and non-adversarial prototypes to evaluate semantic change; following this geometric interpretation, it derived each candidate word's semantic deviation from its distances to the estimated prototypes. These approaches maintain a single candidate to minimize query consumption, but this significantly increases the likelihood of obtaining suboptimal adversarial texts.

Methodology

Problem formulation

This section begins by defining the hard-label textual adversarial attack in NLP tasks. The primary symbols used in this work are listed in Table 1. Let the original text be an ordered word sequence \(x=\left[ w_1, w_2, \cdots , w_N\right]\) with ground-truth label y, where N is the number of words in x and \(w_i\) denotes the i-th word in x, \(1 \le i \le N\). The victim model f correctly classifies x, i.e., \(y=f(x)\). The attacker aims to construct an optimal adversarial text \(x^*\) that humans perceive as semantically similar to x but that f classifies differently, i.e., \(f(x^*) \ne f(x)\). Such an \(x^*\) with minimal perturbation can be obtained by Equation (1):

\[ x^* = \mathop{\arg\max}_{x^{\prime }} \left[ \operatorname {SEM}\left( x, x^{\prime }\right) + \delta \left( \operatorname {ADV}(f(x^{\prime })) \right) \right] \tag{1} \]

where \(x^{\prime }\) is a noised text corresponding to x, and \(\operatorname {ADV}(f(x^{\prime }))\) is the adversarial criterion, which equals 1 if \(x^{\prime }\) is misclassified by f and 0 otherwise. When \(\operatorname {ADV}(f(x^{\prime }))=1\), \(x^{\prime }\) is called an adversarial text. The function \(\operatorname {SEM}\left( x, x^{\prime }\right)\) quantifies the semantic similarity between x and \(x^{\prime }\). The function \(\delta (\cdot )\) equals 0 when \(\operatorname {ADV}(f(x^{\prime }))=1\) and \(-\infty\) otherwise, so any non-adversarial \(x^{\prime }\) is excluded from the maximization.
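Equation (1) is a constrained maximization in which δ turns the adversarial requirement into a hard constraint. The sketch below scores candidates accordingly over a finite set; `predict` stands in for the hard-label victim f and `jaccard` is a toy word-overlap stand-in for SEM (the paper uses USE). Both helpers are assumptions for illustration, not the authors' code.

```python
import math
from typing import Callable, Sequence


def objective(x: str, x_prime: str, y: int,
              predict: Callable[[str], int],
              sem: Callable[[str, str], float]) -> float:
    """SEM(x, x') + delta(ADV(f(x'))), following Equation (1)."""
    adv = 1 if predict(x_prime) != y else 0      # ADV: 1 iff f misclassifies x'
    delta = 0.0 if adv == 1 else -math.inf       # delta: 0 or -infinity
    return sem(x, x_prime) + delta


def jaccard(a: str, b: str) -> float:
    """Toy stand-in for SEM: overlap of the two word sets."""
    sa, sb = set(a.split()), set(b.split())
    return len(sa & sb) / len(sa | sb)


def best_adversarial(x: str, y: int, candidates: Sequence[str], predict, sem) -> str:
    """Arg-max of Equation (1) restricted to a finite candidate set."""
    return max(candidates, key=lambda c: objective(x, c, y, predict, sem))


def toy_predict(text: str) -> int:
    """Toy victim: label 0 (positive) iff the word 'good' appears."""
    return 0 if "good" in text.split() else 1


x = "a good film"
cands = ["a fine film", "a good movie", "a nice movie"]
best = best_adversarial(x, 0, cands, toy_predict, jaccard)   # "a fine film"
```

Here "a good movie" is not adversarial and scores \(-\infty\), so the most similar adversarial candidate wins.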

Proposed method

This work focuses on word substitution-based adversarial attacks in the hard-label black-box scenario. To produce highly effective adversarial texts, this work first randomly substitutes several words in the original text with their synonyms. The semantic similarity between the noised and original texts is then optimized by querying the victim model and observing its final predictions. The overall optimization procedure of QEAttack is shown in Algorithm 1 and follows a three-step strategy: random initialization, search space reduction, and population-based optimization.

For the population-based optimization step, this work adopts the GA to optimize the semantic similarity of the adversarial texts, because the GA is particularly suitable for complex optimization problems with large solution spaces, applies more naturally to discrete spaces, and is more accessible than other evolutionary algorithms such as PSO30. The GA includes four key steps: initialization, selection, crossover, and mutation. Because GA-based optimization needs a substantial number of queries to the victim model, a dual-gradient fusion strategy and a locality sensitive hashing based sentence-level semantic clustering strategy are proposed and introduced into the GA's crossover and mutation steps, respectively.

Note that the initialization step of the GA uses the same operation as mutation. The selection step includes best-candidate selection and parent selection. In best-candidate selection, the candidate in the population P that is most semantically similar to x is chosen and recorded as \(x_{best}\). In parent selection, a candidate pair (the parents) is sampled from P with probability proportional to \(\phi\), defined in Equation (2), where K is the hyperparameter indicating the population size of P and \(exp(\cdot )\) is the exponential function. The selection, crossover, and mutation steps are iterated at most \(\rho\) times, and each word in a candidate text can be mutated at most \(\lambda\) times.
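Selection can be sketched as follows. Because Equation (2) is not reproduced in this text, the sketch assumes that φ is a softmax (using exp(·)) over the K candidates' semantic similarities to x; parents are drawn with replacement via `random.Random.choices`. The function names and similarity values are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def selection_probs(sims: Sequence[float]) -> List[float]:
    """Assumed form of phi: softmax over the K similarity scores."""
    m = max(sims)
    exps = [math.exp(s - m) for s in sims]   # subtract the max for numerical stability
    z = sum(exps)
    return [e / z for e in exps]


def select_best(population: Sequence[str], sims: Sequence[float]) -> str:
    """Best-candidate selection: the member most similar to x (x_best)."""
    return population[max(range(len(population)), key=lambda k: sims[k])]


def select_parents(population: Sequence[str], sims: Sequence[float],
                   rng: random.Random) -> Tuple[str, str]:
    """Parent selection: draw two members with probability proportional to phi."""
    p1, p2 = rng.choices(population, weights=selection_probs(sims), k=2)
    return p1, p2


pop = ["cand_a", "cand_b", "cand_c"]
sims = [0.91, 0.88, 0.75]                 # illustrative SEM(x, x'_k) values
probs = selection_probs(sims)
x_best = select_best(pop, sims)           # "cand_a"
parents = select_parents(pop, sims, random.Random(0))
```

Sampling with replacement can return the same candidate twice; an implementation could simply resample in that case.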

Random initialization and search space reduction

Given an input \(x=\left[ w_1, w_2, \cdots , w_N\right]\), the random initialization step iteratively generates an initial adversarial text \(x_0^{\prime }\) that satisfies \(\operatorname {ADV}(f(x_0^{\prime }))=1\), as shown in lines 4-10 of Algorithm 2. Specifically, 30% of the words in x are first selected at random without repetition. In the first iteration, the first selected word \(w_i\) is substituted with one of its top 50 synonyms \(syns(w_i)\) in the counter-fitted embedding space34; in the second iteration, the second selected word is substituted in the same way; and so on. After each substitution, if the resulting text is misclassified by f, the process stops, and the resulting text \(x_0^{\prime }=[w_1, w_2, \cdots , w_i^{\prime }, \cdots , w_N]\), in which \(w_i^{\prime }\) is the synonym chosen to replace \(w_i\), becomes the optimization target of the subsequent search space reduction. If f still classifies the text correctly after all of the selected 30% of words have been replaced, another round of random replacements is performed. At most three rounds are performed; if the attack is still unsuccessful, the process terminates.
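A minimal sketch of this initialization loop follows, assuming a hypothetical hard-label oracle `predict(words) -> label` and a precomputed synonym table `syns` standing in for the counter-fitted neighbours. It further assumes that each round restarts from the original text and that a synonym is drawn uniformly from the top 50; the text does not pin down these details.

```python
import random
from typing import Callable, Dict, List, Optional, Sequence


def random_init(words: Sequence[str], y: int,
                predict: Callable[[List[str]], int],
                syns: Dict[str, List[str]],
                rng: random.Random,
                ratio: float = 0.3, top_k: int = 50,
                max_rounds: int = 3) -> Optional[List[str]]:
    """Return an adversarial word list x'_0, or None if every round fails."""
    if not words:
        return None
    n_pick = max(1, int(ratio * len(words)))
    for _ in range(max_rounds):
        x = list(words)                                   # assumed: restart from x
        for i in rng.sample(range(len(words)), n_pick):   # selection without repetition
            options = syns.get(words[i], [])[:top_k]
            if not options:
                continue                                  # nothing to substitute here
            x[i] = rng.choice(options)
            if predict(x) != y:                           # one victim query per substitution
                return x
    return None


words = "the plot was good".split()
syns = {w: [w + "_syn"] for w in words}


def flip(ws: List[str]) -> int:
    """Toy victim: any change to the text flips its label."""
    return 0 if ws == words else 1


x0 = random_init(words, 0, flip, syns, random.Random(1))
```

Substitutions accumulate within a round, so each query tests the text with all replacements made so far.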

Because the search space grows exponentially with the number of substituted words in \(x_0^{\prime }\), unnecessary substitutions are reverted to their original words. This procedure involves two steps: ranking semantic similarities (Algorithm 2, lines 19-26) and reverting substitutions (Algorithm 2, lines 27-34). In the first step, three operations are performed for each substituted word \(w_i^{\prime }\) in \(x_0^{\prime }\): first, \(w_i^{\prime }\) is replaced with its original word \(w_i\), yielding a candidate text \(x_i^{tmp}\); next, the semantic similarity \(sim_i=\operatorname {SEM}(x, x_i^{tmp})\) is computed using the Universal Sentence Encoder35 (USE); finally, if \(x_i^{tmp}\) still fools the victim model, the pair \((sim_i, w_i)\) is stored in a list called scores. After all \(w_i^{\prime }\) have been processed, the entries of scores are sorted in descending order of semantic similarity. In the second step, \(x_1^{\prime }\) is initialized to \(x_0^{\prime }\), and the following two operations are executed for each \(w_i\) in scores, in order: first, \(w_i^{\prime }\) in \(x_1^{\prime }\) is replaced by \(w_i\) to produce a new candidate text \(x_i^{tmp}\); then, f is queried to determine whether \(x_i^{tmp}\) meets the adversarial criterion. If it does, \(x_i^{tmp}\) becomes the new \(x_1^{\prime }\) and the process continues with the next \(w_i\); otherwise, the process terminates. The resulting \(x_1^{\prime }\) serves as the optimization target for the subsequent steps.
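The two-step reduction can be sketched as below. `predict` and `overlap` are hypothetical stand-ins for the hard-label victim and USE, and texts are represented as word lists of equal length.

```python
from typing import Callable, List, Sequence


def reduce_search_space(x: Sequence[str], x0: Sequence[str], y: int,
                        predict: Callable[[List[str]], int],
                        sem: Callable[[Sequence[str], Sequence[str]], float]) -> List[str]:
    """Revert unnecessary substitutions in x'_0 and return x'_1."""
    changed = [i for i in range(len(x)) if x[i] != x0[i]]
    # Step 1: score every single revert that keeps the text adversarial.
    scores = []
    for i in changed:
        tmp = list(x0)
        tmp[i] = x[i]
        if predict(tmp) != y:
            scores.append((sem(x, tmp), i))
    scores.sort(key=lambda s: s[0], reverse=True)    # most similar first
    # Step 2: revert greedily in that order; stop at the first failure.
    x1 = list(x0)
    for _, i in scores:
        tmp = list(x1)
        tmp[i] = x[i]
        if predict(tmp) == y:
            break
        x1 = tmp
    return x1


def overlap(a: Sequence[str], b: Sequence[str]) -> float:
    """Toy similarity: fraction of aligned positions that agree."""
    return sum(p == q for p, q in zip(a, b)) / len(a)


def toy_victim(ws: List[str]) -> int:
    """Toy victim: the text stays adversarial while 'C' is present."""
    return 1 if "C" in ws else 0


x1 = reduce_search_space(["a", "b", "c"], ["A", "B", "C"], 0, toy_victim, overlap)
# x1 == ["a", "b", "C"]: only the substitution that carries the attack remains
```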

Mutation with locality sensitive hashing

Given an adversarial text \(x^{\prime }\), this step aims to choose a more suitable substitute for each \(w_{i}^{\prime }\) so that the resulting text \(x^{\prime \prime }\) both satisfies the adversarial criterion and has higher semantic similarity to x. The process is detailed in Algorithm 3. Note that a better substitute for \(w_{i}^{\prime }\) is searched for only if its current replacement count is below the maximum threshold \(\lambda\).

For a given word \(w_{i}^{\prime }\), if reverting it to \(w_i\) yields a text that does not meet the adversarial criterion, \(w_{i}^{\prime }\) is instead replaced with each synonym of \(w_i\), yielding a set \(X = \{x^{\prime (1)}, \cdots , x^{\prime (j)}, \cdots , x^{\prime (J)}\}\) of J noised texts, where \(x^{\prime (j)}\) is produced by replacing \(w_{i}^{\prime }\) in \(x^{\prime }\) with the j-th synonym in \(syns(w_i)\). To select the noised text that is most semantically consistent with x while meeting the adversarial criterion, this work proposes an LSH based sentence-level semantic clustering strategy that retains only the most promising candidates for querying, greatly reducing query consumption (Algorithm 3, lines 8-12). LSH is a technique for approximate nearest-neighbor search in high-dimensional spaces: it maps an input vector e to a hash value such that similar vectors receive the same hash value with high probability.

Specifically, each \(x^{\prime (j)}\) in X is first encoded by the sentence encoder USE into a d-dimensional vector \(e_j\), giving \(E=\{e_1, \cdots , e_j, \cdots , e_J\}\), where \(e_j\) is the sentence embedding of \(x^{\prime (j)}\). Then, the hash value of each \(e_j\) is computed by the Random Projection Method (RPM)36, an LSH scheme. RPM first generates a set of spherically symmetric random vectors \(R=\{r_0, \cdots , r_q, \cdots , r_Q\}\), where each \(r_q\) is a unit-length vector in the d-dimensional space, and then constructs a family of hash functions, each of which is defined in Equation (3):

\[ h_{r_q}(e) = \begin{cases} 1, & r_q \cdot e \ge 0 \\ 0, & r_q \cdot e < 0 \end{cases} \tag{3} \]

The final hash value \(h_j\) of \(e_j\) is obtained by concatenating all \(h_{r_q}(e_j)\). This process is efficient because the hash codes of all candidates can be computed with a single product between two matrices.
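The RPM hash can be sketched in pure Python as follows. Random unit vectors are obtained by normalising Gaussian samples, a standard way to draw spherically symmetric directions, and each bit follows the sign rule of random-projection LSH (1 if r·e ≥ 0, else 0). The number of projections, the dimensionality, and the function names are illustrative assumptions.

```python
import random
from typing import List, Sequence


def random_unit_vectors(num: int, dim: int, rng: random.Random) -> List[List[float]]:
    """Draw `num` spherically symmetric unit vectors in `dim` dimensions."""
    vectors = []
    for _ in range(num):
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]   # isotropic Gaussian sample
        norm = sum(c * c for c in v) ** 0.5
        vectors.append([c / norm for c in v])           # project onto the unit sphere
    return vectors


def rpm_hash(e: Sequence[float], R: Sequence[Sequence[float]]) -> str:
    """Concatenate one sign bit per random vector: '1' if r . e >= 0, else '0'."""
    return "".join("1" if sum(r_k * e_k for r_k, e_k in zip(r, e)) >= 0 else "0"
                   for r in R)


R = random_unit_vectors(5, 8, random.Random(0))
e = [float(i) for i in range(1, 9)]      # stand-in for a sentence embedding
h = rpm_hash(e, R)                       # a 5-character bit string
```

Only the sign of each projection matters, so rescaling an embedding leaves its hash unchanged, and embeddings separated by a small angle tend to agree on most bits.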

Subsequently, according to the obtained \(H=\{h_1, \cdots , h_j, \cdots , h_J\}\), texts with the same hash value are mapped to the same bucket in the hash table, resulting in \(B=\{b_1, \cdots , b_k, \cdots , b_I\}\) with I buckets. Then, one candidate text \(x_k\) is randomly sampled from each bucket \(b_k\); among the sampled texts, the one with the highest semantic similarity to x is denoted \(x_k^*\), and the bucket containing it is denoted \(b_k^*\). The candidates in \(b_k^*\) are then used to query f, and those that do not satisfy the adversarial criterion are removed. Among the remaining texts, the one with the largest improvement in semantic similarity is denoted \(x^{\prime \prime }\), and its i-th word is chosen as the new substitute for \(w_i\).
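Bucket selection can be sketched as below, assuming hash strings such as those RPM produces, precomputed similarities `sims` from the sentence encoder (which cost no victim queries), and a hypothetical hard-label check `is_adv`. Only members of the chosen bucket are sent to the victim model; picking the highest similarity is used here as a proxy for the largest improvement.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, List, Optional, Sequence


def lsh_select(cands: Sequence[str], hashes: Sequence[str], sims: Sequence[float],
               is_adv: Callable[[str], bool], rng: random.Random) -> Optional[str]:
    """Pick x'' from the most promising bucket, querying only that bucket."""
    buckets: Dict[str, List[int]] = defaultdict(list)
    for j, h in enumerate(hashes):
        buckets[h].append(j)                     # same hash -> same bucket
    # One random representative per bucket, compared by similarity only.
    reps = {h: rng.choice(idx) for h, idx in buckets.items()}
    best_bucket = max(reps, key=lambda h: sims[reps[h]])
    # Query the victim only for the members of the selected bucket.
    survivors = [j for j in buckets[best_bucket] if is_adv(cands[j])]
    if not survivors:
        return None
    return cands[max(survivors, key=lambda j: sims[j])]


queried: List[str] = []


def toy_is_adv(text: str) -> bool:
    queried.append(text)          # record every simulated victim query
    return text != "c0"


choice = lsh_select(["c0", "c1", "c2", "c3"], ["01", "01", "10", "10"],
                    [0.90, 0.85, 0.40, 0.30], toy_is_adv, random.Random(0))
# choice == "c1"; only the two members of bucket "01" were queried
```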

In this way, LSH reduces the query count from being proportional to the number of synonyms of each word to the number of texts in the selected bucket. Unlike the work25, which used LSH to calculate importance scores for all words in a soft-label attack, QEAttack uses LSH to choose a better replacement for a word in a hard-label attack.

Crossover with dual-gradient fusion

Given two parent texts \(x^{\prime (1)}\) and \(x^{\prime (2)}\), this step aims to craft a new candidate that meets the adversarial criterion and improves semantic similarity. Previous work26 randomly chooses the word at each position from one of the two parents, which is inefficient and requires a large number of queries. Therefore, this work proposes a dual-gradient fusion strategy that leverages the gradient information provided by both parents, as illustrated in Algorithm 4.

This work first encodes x, \(x^{\prime (1)}\) and \(x^{\prime (2)}\) into \(N \times L\) matrices \(V=[v_1, \cdots , v_i, \cdots , v_N]\), \(V^{(1)}=[v_1^{(1)}, \cdots , v_i^{(1)}, \cdots , v_N^{(1)}]\) and \(V^{(2)}=[v_1^{(2)}, \cdots , v_i^{(2)}, \cdots , v_N^{(2)}]\), where \(v_i\), \(v_i^{(1)}\) and \(v_i^{(2)}\) are the L-dimensional embeddings of the i-th word in x, \(x^{\prime (1)}\) and \(x^{\prime (2)}\), respectively, in the counter-fitted embedding space34. The perturbation matrices are thus \(T^{(1)}=V^{(1)} - V\) and \(T^{(2)}=V^{(2)} - V\).

To estimate the gradient at \(T^{(1)}\), this work randomly samples an \(N \times L\) perturbation \(U^{(1)}=[u_1^{(1)}, \cdots , u_i^{(1)}, \cdots , u_N^{(1)}]\) from a zero-mean Gaussian distribution and obtains a neighbor \(T^{\prime (1)}\) of \(T^{(1)}\) through Equation (4), where \(\beta\) is a hyperparameter controlling the perturbation strength and \(v_i^{\prime (1)}=v_i^{(1)}-v_i +u_i^{(1)}\).
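Building the perturbation matrix and sampling a neighbour can be sketched as follows. Since Equation (4) is not reproduced in this text, the sketch assumes the neighbour is T' = T + β·U with U drawn element-wise from a standard Gaussian (equivalently, Gaussian noise with standard deviation β added directly). Matrices are plain nested lists to keep the example dependency-free, and the function names are hypothetical.

```python
import random
from typing import List, Sequence

Matrix = List[List[float]]


def perturbation_matrix(V_adv: Sequence[Sequence[float]],
                        V: Sequence[Sequence[float]]) -> Matrix:
    """T = V' - V: row i is the shift of word i in the embedding space."""
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(V_adv, V)]


def sample_neighbor(T: Sequence[Sequence[float]], beta: float,
                    rng: random.Random) -> Matrix:
    """Assumed Equation (4): T' = T + beta * U, with U ~ N(0, I) element-wise."""
    return [[t + beta * rng.gauss(0.0, 1.0) for t in row] for row in T]


V = [[0.0, 1.0], [1.0, 1.0]]        # embeddings of x (N = 2, L = 2)
V1 = [[1.0, 2.0], [3.0, 4.0]]       # embeddings of x'(1)
T1 = perturbation_matrix(V1, V)     # [[1.0, 1.0], [2.0, 3.0]]
T1_nb = sample_neighbor(T1, beta=0.5, rng=random.Random(0))
```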

Since the positions of the substituted synonyms in \(x_1^{\prime }\) are recorded in a set pos, each \(w_i\) in x whose position is in pos is replaced with the synonym \(w_i^* \in syns(w_i)\) whose embedding is closest, in \(L_2\) distance, to \(v_i + v_i^{\prime (1)}\). This produces a new text \(x^{\prime \prime (1)}=[w_1, \cdots , w_i^*, \cdots , w_N]\). Given \(x^{\prime (1)}\) and \(x^{\prime \prime (1)}\), the gradient direction of the i-th perturbation is obtained by Equation (5), which yields the gradient \(g^{(1)}\). The gradient \(g^{(2)}\) is obtained in the same way from \(x^{\prime (2)}\).
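The nearest-synonym mapping can be sketched as below: for each position in `pos`, it picks the synonym whose embedding has the smallest L2 distance to v_i plus the corresponding perturbation row. The embedding table `emb` and synonym table are hypothetical stand-ins for the counter-fitted space, and `math.dist` (Python 3.8+) computes the Euclidean distance. Equation (5) itself is not sketched here.

```python
import math
from typing import Dict, List, Sequence


def project_to_synonyms(words: Sequence[str],
                        V: Sequence[Sequence[float]],
                        T: Sequence[Sequence[float]],
                        pos: Sequence[int],
                        syns: Dict[str, List[str]],
                        emb: Dict[str, Sequence[float]]) -> List[str]:
    """Replace each w_i (i in pos) by the synonym closest in L2 to v_i + t_i."""
    out = list(words)
    for i in pos:
        target = [v + t for v, t in zip(V[i], T[i])]
        options = syns.get(words[i], [])
        if options:
            out[i] = min(options, key=lambda s: math.dist(emb[s], target))
    return out


emb = {"good": [0.0, 0.0], "fine": [1.0, 0.0], "nice": [0.0, 1.0], "film": [5.0, 5.0]}
words = ["good", "film"]
V = [emb["good"], emb["film"]]
T = [[0.9, 0.1], [0.0, 0.0]]
new_words = project_to_synonyms(words, V, T, [0], {"good": ["fine", "nice"]}, emb)
# new_words == ["fine", "film"]
```

The same mapping can be reused later to turn the fused perturbation into the discrete candidate text.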

To generate the new perturbation, this work draws on the Adam optimization method37 and treats \(g^{(1)}\) and \((g^{(2)})^2\) as the bias-corrected first-moment and second-moment estimates, respectively. The new perturbation matrix is then obtained by Equation (6), where the hyperparameter \(\eta\) is the learning step size and \(\epsilon\) is a small constant that prevents division by zero.
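Equation (6) is not reproduced in this text, so the following is only a sketch of an Adam-style update under stated assumptions. With g^(1) as the first moment and (g^(2))² as the second moment, the element-wise step is η·g^(1)/(√((g^(2))²) + ε) = η·g^(1)/(|g^(2)| + ε). Which matrix the step is applied to (`T_base`) and its sign (added here, i.e. assuming the gradients already point toward higher similarity) are assumptions; the defaults for η and ε follow the detailed settings.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def fuse_gradients(T_base: Sequence[Sequence[float]],
                   g1: Sequence[Sequence[float]],
                   g2: Sequence[Sequence[float]],
                   eta: float = 0.001, eps: float = 1e-8) -> Matrix:
    """Adam-style fusion: m_hat = g1, v_hat = g2**2, step = eta * m_hat / (sqrt(v_hat) + eps)."""
    return [[t + eta * m / (math.sqrt(v * v) + eps)
             for t, m, v in zip(rt, rm, rv)]
            for rt, rm, rv in zip(T_base, g1, g2)]


T_new = fuse_gradients([[0.0, 0.0]], [[1.0, -1.0]], [[2.0, 0.5]], eta=0.1, eps=0.0)
# T_new is approximately [[0.05, -0.2]]
```

As in Adam, dividing by the second-moment term shrinks the step along coordinates where the second parent's gradient is large and enlarges it where that gradient is small.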

Finally, \(T_{new}\) is added to the embeddings of x to generate the new candidate. Concretely, \(T_{new}\) can be denoted as \([t_1, \cdots , t_i, \cdots , t_N]\), where \(t_i\) is the new perturbation vector to be added to \(v_i\) in V. The same nearest-synonym mapping used in the gradient calculation step is then applied to obtain the new candidate \(x_{new}\). If \(x_{new}\) satisfies the adversarial criterion and improves semantic similarity over the current best candidate, it is retained for subsequent optimization; otherwise, it is discarded.

Experiments

Comprehensive experiments are conducted on five text classification datasets and five victim models to evaluate the proposed attack method. The experimental settings are described first, followed by an analysis of the experimental results.

Experimental settings

Datasets and victim models

This work uses five text classification datasets: two sentence-level datasets, AG's News38 (AG) and Movie Review39 (MR), and three document-level datasets, Yelp Reviews38 (Yelp), Yahoo Answers38 (Yahoo), and Internet Movie Database40 (IMDB). For each dataset, 1,000 examples randomly sampled from the test set are used to generate adversarial texts.

This work adopts three typical victim models with different neural network architectures: BERT41, WordCNN42, and WordLSTM43, configured following the previous work26. WordCNN uses convolutional layers with window sizes of 3, 4, and 5, each with 150 filters. WordLSTM uses a single bidirectional LSTM layer with 150 hidden units. Both WordCNN and WordLSTM use a dropout rate of 0.3 and 200-dimensional GloVe word embeddings44. Table 2 shows the details of the selected 1,000 examples, as well as the original prediction accuracy of each victim model on them.

In addition, this work uses GPT-3.5 and Claude as victim models to evaluate the proposed method in a scenario closer to practical applications. When attacking GPT-3.5 and Claude, 100 samples randomly selected from the test set of the MR dataset are used.

Baseline attacks

Eight black-box adversarial attack methods are used as baselines: four soft-label attacks (TextFooler23, GA-A29, PSO-A24, and LSHA25) and four hard-label attacks (HLBB26, TextHoaxer27, LeapAttack31, and SSPAttack32). All baselines are described in the “Related work” section. Among the hard-label methods, TextHoaxer, LeapAttack, and SSPAttack all adopt the two-step strategy proposed by HLBB, which first finds initial adversarial texts and then optimizes their semantics.

Evaluation metrics

QEAttack is compared with the baseline attacks in three aspects: the imperceptibility of the generated adversarial texts, their quality, and attack performance.

The imperceptibility of the generated adversarial texts is assessed by three metrics: semantic similarity (Sim), perturbation rate (Pert), and Recall-Oriented Understudy for Gisting Evaluation (ROUGE). Semantic similarity is the cosine similarity between the two embeddings obtained by feeding the original and adversarial texts into Sentence-BERT45. The perturbation rate is the proportion of altered words relative to the total word count of an adversarial text. ROUGE measures the n-gram overlap between the original and adversarial texts; this work adopts ROUGE-L, the ratio of the length of the longest common subsequence between an original text and its adversarial counterpart to the length of the original text. A lower perturbation rate, a higher semantic similarity, and a higher ROUGE score all indicate that the adversarial texts are more similar to the original texts.
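For word-substitution attacks, where the original and adversarial texts have the same length, the perturbation rate and ROUGE-L as defined here (LCS length divided by the original length) can be computed as in the sketch below. Whitespace tokenization is an assumption, and Sim is omitted because it requires Sentence-BERT.

```python
from typing import Sequence


def perturbation_rate(orig: Sequence[str], adv: Sequence[str]) -> float:
    """Share of words in the adversarial text that differ from the original."""
    changed = sum(1 for a, b in zip(orig, adv) if a != b)
    return changed / len(adv)


def lcs_length(a: Sequence[str], b: Sequence[str]) -> int:
    """Length of the longest common subsequence (dynamic programming, O(|a||b|))."""
    prev = [0] * (len(b) + 1)
    for ai in a:
        cur = [0]
        for j, bj in enumerate(b, start=1):
            cur.append(prev[j - 1] + 1 if ai == bj else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l(orig: Sequence[str], adv: Sequence[str]) -> float:
    """ROUGE-L in the recall form used here: LCS length over the original length."""
    return lcs_length(orig, adv) / len(orig)


orig = "the movie was really good".split()
adv = "the film was really fine".split()
pert = perturbation_rate(orig, adv)   # 2 / 5 = 0.4
rl = rouge_l(orig, adv)               # LCS "the was really" -> 3 / 5 = 0.6
```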

The quality of the adversarial texts is evaluated by two metrics: grammar error count (GErr) and perplexity (PPL). The former is the number of grammatical errors introduced into the adversarial text by the attack, as counted by LanguageTool. The latter assesses the fluency of the adversarial texts and is computed with GPT-2 Large, which has a vocabulary of about 50k tokens. Lower grammar error counts and lower perplexity indicate higher-quality adversarial texts.

The evaluation of attack ability involves two metrics: attack success rate (Suc) and number of queries (Qrs). The former is the percentage of adversarial texts that successfully change the victim model's prediction; the latter is the number of queries to the victim model required by the attack method. A higher attack success rate and a lower query count indicate a stronger attack.

Detailed settings

For a fair comparison with existing black-box attacks, this work follows the practice of HLBB26. Stop words are filtered out using NLTK, and spaCy is used for POS tagging. USE35 is used in the crossover process and semantic similarity evaluation. For the GA-based optimization procedure, the population size K is set to 30, the maximum iteration count \(\rho\) and the maximum replacement count \(\lambda\) are set to 100 and 25, respectively, and the LSH hyperparameter Q is set to 5. The hyperparameters \(\beta\), \(\eta\) and \(\epsilon\) are set to 0.5, 0.001 and 1e-8, respectively. For the LSHA attack, the attention model pre-trained on IMDB is used for attacks on the other four datasets, and the attention model pre-trained on Yelp is used for attacks on IMDB.
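For reference, the reported settings can be gathered into one configuration object. The sketch also shows random-hyperplane hashing (SimHash), one common LSH family, grouping sentence embeddings into buckets. It assumes, for illustration only, that Q is the number of hash bits (random hyperplanes). The names are hypothetical, and \(\beta\), \(\eta\), and \(\epsilon\) are only stored as reported, because their roles are defined in the method description rather than in this section.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class QEAttackSettings:
    population_size: int = 30   # K
    max_iterations: int = 100   # rho
    max_replacements: int = 25  # lambda
    lsh_q: int = 5              # Q (assumed here: number of hash bits / hyperplanes)
    beta: float = 0.5           # stored as reported; role defined in the method
    eta: float = 0.001          # stored as reported; role defined in the method
    epsilon: float = 1e-8       # stored as reported; role defined in the method


def random_hyperplanes(q: int, dim: int, seed: int = 0) -> List[List[float]]:
    """Draw q Gaussian hyperplane normals; a fixed seed keeps buckets reproducible."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(q)]


def simhash(vec: Sequence[float], planes: List[List[float]]) -> int:
    """Encode which side of each hyperplane vec falls on as a q-bit integer."""
    code = 0
    for plane in planes:
        side = sum(v * p for v, p in zip(vec, plane)) >= 0.0
        code = (code << 1) | int(side)
    return code


def lsh_buckets(embeddings: Sequence[Sequence[float]], q: int, seed: int = 0) -> Dict[int, List[int]]:
    """Group embedding indices by SimHash code; nearby vectors tend to share a bucket."""
    planes = random_hyperplanes(q, len(embeddings[0]), seed)
    buckets: Dict[int, List[int]] = {}
    for idx, vec in enumerate(embeddings):
        buckets.setdefault(simhash(vec, planes), []).append(idx)
    return buckets
```

Each bit depends only on the sign of a dot product, so positively scaled copies of an embedding always share a bucket. Two embeddings separated by angle θ agree on each bit with probability 1 - θ/π, so semantically close sentences tend to collide.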

Experimental results

Imperceptibility performances

Table 3 shows the semantic similarity between the original texts and the adversarial texts generated by the nine attack methods. Compared to the baseline attacks, QEAttack generates adversarial texts with higher semantic similarity. On average, the semantic similarity of the adversarial texts generated by QEAttack is 92.28%. This is 0.54%, 1.39%, 2.65% and 0.57% higher than that of HLBB, TextHoaxer, LeapAttack and SSPAttack, respectively, and 0.05% higher than that of LSHA, which achieves the highest semantic similarity among the soft-label attacks.

Among hard-label attacks, QEAttack achieves higher semantic similarity than all hard-label baselines on the three document-level datasets (Yelp, Yahoo, and IMDB). On the two sentence-level datasets (AG and MR), the semantic similarity of adversarial texts generated by QEAttack is still higher than that of HLBB and SSPAttack. QEAttack’s semantic similarity is slightly lower than that of TextHoaxer and SSPAttack when attacking BERT on AG and MR. Even so, its average semantic similarity on AG and MR is 88.68%, which is 1.44% and 0.08% higher than that of TextHoaxer and SSPAttack, respectively.

Compared to soft-label attacks, QEAttack achieves on average 0.51% higher semantic similarity on the Yelp and IMDB datasets than LSHA, the best-performing of the four soft-label attacks. These results suggest that QEAttack is particularly good at preserving semantic similarity for relatively long texts.

Table 4 shows the perturbation rates of adversarial texts generated by QEAttack and the eight baseline attacks. QEAttack generates adversarial texts with lower perturbation rates: its average perturbation rate is 8.19%, which is lower than that of every baseline attack. Among the soft-label methods, PSO-A achieves the lowest average perturbation rate, 8.99%, which is still 0.8% higher than that of QEAttack.

On the three document-level datasets (Yelp, Yahoo, and IMDB), the perturbation rates of adversarial texts produced by QEAttack are lower than those of all baseline methods. This indicates that QEAttack effectively limits perturbations on longer texts. On the two sentence-level datasets (AG and MR), QEAttack has higher perturbation rates than PSO-A, TextHoaxer, and SSPAttack in some settings. However, it consistently achieves lower perturbation rates than TextFooler, GA-A, LSHA, HLBB, and LeapAttack. This shows that, on relatively short texts, QEAttack also keeps perturbation rates lower than several baseline methods.

Table 5 shows the ROUGE scores of adversarial texts generated by QEAttack and the eight baseline attacks. Overall, QEAttack achieves higher ROUGE than the baseline attacks in most settings. It also achieves the highest average ROUGE, 91.01%, among all soft-label and hard-label attacks. Compared to soft-label attacks, the ROUGE of QEAttack is higher than that of TextFooler, GA-A, and LSHA. The ROUGE of QEAttack is 2.82% and 1.77% lower than that of PSO-A when attacking BERT and WordCNN on the MR dataset. Nevertheless, its average ROUGE across all datasets is 1.58% higher than that of PSO-A. Compared to hard-label attacks, QEAttack achieves higher ROUGE than TextHoaxer and LeapAttack in all settings. HLBB and SSPAttack achieve higher ROUGE than QEAttack in some cases, but their average ROUGE values across all datasets, 90.69% and 90.81%, are still lower than that of QEAttack. These results demonstrate that QEAttack generates adversarial texts with higher ROUGE.

The experimental results in Tables 3, 4, and 5 reveal that the proposed QEAttack generates adversarial texts with higher semantic similarity, lower perturbation rate, and higher ROUGE scores compared to baseline attacks.

Quality of adversarial texts

Table 6 presents the grammar error counts of adversarial texts generated by QEAttack and the eight baseline attacks. Overall, adversarial texts produced by soft-label attacks tend to have fewer grammar errors than those generated by hard-label attacks. Among hard-label attacks, QEAttack introduces an average of 0.58 grammar errors into the generated adversarial texts, which is lower than the average error count of every hard-label baseline. QEAttack introduces fewer grammar errors than HLBB and LeapAttack in all settings. Its grammar error count is slightly higher than that of TextHoaxer and SSPAttack in a few settings. However, its average is still 0.10 and 0.03 lower than those of TextHoaxer and SSPAttack, respectively. These results indicate that QEAttack introduces fewer grammar errors into the generated adversarial texts than most baseline methods.

Table 7 presents the perplexities of adversarial texts generated by QEAttack and the eight baseline attacks. Overall, adversarial texts produced by soft-label methods exhibit lower perplexity than those generated by hard-label methods, as soft-label methods benefit from the additional information provided by the output probabilities. Among hard-label attacks, on the MR and IMDB datasets, the perplexity of adversarial texts generated by QEAttack is lower than that of all four hard-label baselines. On AG, Yelp, and Yahoo, its perplexity is consistently lower than that of LeapAttack across all settings. In certain settings, however, it is slightly higher than that of HLBB, TextHoaxer, and SSPAttack. On average, the perplexity of adversarial texts generated by QEAttack is 185.40. This is 173.14, 249.08, 166.00, and 62.09 lower than that of HLBB, TextHoaxer, LeapAttack, and SSPAttack, respectively.

The experimental results in Tables 6 and 7 reveal that QEAttack generates adversarial texts with fewer grammar errors and lower perplexity than most baseline attacks.

Attack capabilities

Table 8 compares the attack success rates of QEAttack and the eight baseline attacks. QEAttack achieves a success rate above 97% in most scenarios. It outperforms three soft-label attacks (GA-A, PSO-A, and LSHA) across data domains and victim model architectures.

Compared with soft-label attacks, QEAttack achieves a success rate comparable to that of TextFooler, the most competitive soft-label method, whose success rate exceeds 93%. For instance, when attacking BERT on the AG and MR datasets, QEAttack achieves attack success rates of 96.99% and 98.82%, which are 3.66% and 5.29% higher than those of TextFooler, respectively. Combined with the results in Tables 3 and 4, this shows that adversarial texts generated by QEAttack have lower perturbation rates and similar or higher semantic similarity than those of TextFooler, at a comparable attack success rate. Compared to GA-A, PSO-A, and LSHA, adversarial texts produced by QEAttack have similar or better perturbation rates and semantic similarity, and they achieve higher attack success rates.

Compared with hard-label attacks, the attack success rate of QEAttack is comparable to those of the four hard-label baseline methods, with a maximum difference of only 0.81%. On average, QEAttack achieves an attack success rate of 98.10%. This is 0.05%, 0.01%, and 0.07% higher than that of HLBB, TextHoaxer, and SSPAttack, respectively, and 0.04% lower than that of LeapAttack.

The experimental results indicate that QEAttack finds effective adversarial perturbations at least as reliably as the hard-label baseline attacks and most soft-label attacks.

Queries to victim models

Table 9 presents the number of queries consumed by QEAttack and the eight baseline methods to generate the above adversarial texts. Compared with the soft-label methods, QEAttack requires approximately three to four times as many queries as TextFooler and LSHA. However, it requires one to two orders of magnitude fewer queries than GA-A and PSO-A, respectively. Among hard-label attacks, QEAttack requires fewer queries than all four hard-label baselines in every setting. On average, QEAttack requires about 10% of the queries of HLBB, 70% of those of TextHoaxer, and 15% of those of LeapAttack and SSPAttack. These results demonstrate that QEAttack substantially improves query efficiency while maintaining or even enhancing the quality and semantic similarity of the generated adversarial texts.

Queries for texts of different lengths

To analyze how text length affects the number of queries required by QEAttack, this work divides the 1,000 test texts from each benchmark dataset into groups by text length. Figure 2 presents the average number of queries needed by QEAttack and HLBB to generate adversarial texts for each group.

Specifically, the average text lengths of the sentence-level and document-level datasets differ substantially. This work therefore extracts texts of 1 to 50 words from the two sentence-level datasets (MR and AG) and divides each into five groups by text length, each group spanning 10 words. For instance, the group labelled “10” includes texts of 1 to 10 words, the group labelled “20” includes texts of 11 to 20 words, and so forth. AG contains no examples of 1 to 10 words; hence, the value for “10” in Figure 2(b) is “0”. Similarly, texts of 1 to 350 words are extracted from the three document-level datasets (Yelp, Yahoo, and IMDB) and divided into seven groups with an interval of 50 words. For example, the group labelled “50” contains texts of 1 to 50 words, the group labelled “100” contains texts of 51 to 100 words, and so forth.
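The grouping rule above maps a text of n words to the bin labelled by the smallest multiple of the interval width that is at least n. A minimal sketch follows. It assumes that words are counted by whitespace tokenization (the tokenizer used in this work is not stated), and the function names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List


def length_group(num_words: int, width: int) -> int:
    """Bin label: 1..width -> width, width+1..2*width -> 2*width, and so on."""
    if num_words < 1:
        raise ValueError("texts must contain at least one word")
    return math.ceil(num_words / width) * width


def group_by_length(texts: List[str], width: int, max_len: int) -> Dict[int, List[str]]:
    """Bucket texts of 1..max_len words into labelled groups; longer texts are skipped."""
    groups: Dict[int, List[str]] = defaultdict(list)
    for text in texts:
        n = len(text.split())
        if 1 <= n <= max_len:
            groups[length_group(n, width)].append(text)
    return dict(groups)
```

Calling `group_by_length(texts, width=10, max_len=50)` corresponds to the sentence-level setting, and `width=50, max_len=350` to the document-level one.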

Figure 2 shows that, compared to HLBB, QEAttack requires fewer queries to generate adversarial examples for texts of all lengths, which is consistent with the conclusions of the previous sections. Furthermore, although the number of queries needed by QEAttack grows with text length, it grows much more slowly than that of HLBB. For instance, when the text length increases from 1~10 to 41~50 words on the MR dataset, the query count of HLBB increases by approximately 3,000. Over the same range, the query count of QEAttack increases by only about 500, one-sixth of HLBB’s increase. On a document-level dataset such as Yelp, when the text length increases from 1~50 to 301~350 words, the query counts of HLBB and QEAttack increase by more than 15,000 and roughly 1,500, respectively. HLBB’s increase is thus about ten times that of QEAttack. Overall, these findings demonstrate that QEAttack generates adversarial texts with fewer queries than HLBB.

Attacking models with a defense mechanism

This work evaluates the attack performance of QEAttack and the four hard-label baseline attacks against a WordLSTM model that uses adversarial training as its defense mechanism. Specifically, following the practice in the work46, this work adopts HotFlip47 as the adversarial policy and retrains WordLSTM with adversarial training. The resulting WordLSTM achieves original prediction accuracies of 93.2% and 89.5% on the AG and IMDB datasets, respectively. Table 10 reports the semantic similarity, perturbation rate, and attack success rate of the generated adversarial texts. The results indicate that QEAttack produces more effective adversarial texts even when the victim model employs a defense mechanism.

Attacking large language models

This work compares the attack performance of QEAttack and the four hard-label baseline attacks against two large language models, GPT-3.5 and Claude, as shown in Table 11. The experiment randomly selects 100 examples from the 1,000 selected test examples of the MR dataset. The original accuracies of GPT-3.5 and Claude on these 100 examples are about 93% and 90%, respectively. When attacking these two models, the maximum number of queries is set to 350. In Table 11, the metric “Suc Counts” is the total number of adversarial texts that successfully attack the victim model. The results indicate that most adversarial texts successfully fool both GPT-3.5 and Claude. Under the same query budget, adversarial texts generated by QEAttack also have higher semantic consistency and text quality than those of the baseline attacks.

Ablation study

To investigate how the proposed sentence-level semantic clustering strategy and dual-gradient fusion strategy contribute to query efficiency, this work compares the query consumption of four methods: HLBB, with CDGF, with MLSH, and QEAttack, as illustrated in Figure 3. HLBB is the hard-label attack proposed in the work26, which uses random strategies for both the crossover and mutation steps of the genetic algorithm. The with CDGF variant adopts the proposed dual-gradient fusion strategy in the crossover step but keeps HLBB’s random mutation strategy. Conversely, the with MLSH variant employs the proposed sentence-level semantic clustering strategy in the mutation step but keeps HLBB’s random crossover strategy. Figure 3 shows that, compared with HLBB, both strategies effectively improve query efficiency.

Furthermore, Table 12 compares the adversarial texts produced by these four methods on the AG and IMDB datasets when attacking BERT. Overall, with CDGF and with MLSH each reduce the number of queries to about 20%~30% of that of HLBB. Combining the two strategies reduces it to nearly 10% of that of HLBB. On the AG dataset, adversarial texts generated by with CDGF perform best in semantic similarity, perturbation rate, ROUGE, perplexity, and grammar error count. In contrast, on the IMDB dataset, adversarial texts generated by with MLSH perform best in perturbation rate, ROUGE, perplexity, and grammar error count, while with CDGF performs better than with MLSH in semantic similarity. These results indicate that the two strategies are complementary and optimize adversarial texts in different respects for texts of different lengths. Used jointly, they greatly improve query efficiency while maintaining or even improving the text quality and semantic consistency of the generated adversarial texts.

Ethical concerns

Adversarial attacks are an effective method for exposing models’ vulnerabilities to malicious inputs, thereby prompting researchers to enhance the robustness and security of these models. The proposed QEAttack produces adversarial texts that are semantically consistent with the original texts while using queries efficiently. This capability can be used to evaluate the robustness of existing models in real-world application scenarios. Moreover, the generated adversarial texts can serve as training data for adversarial training, a widely recognized and effective defense strategy.

To mitigate the risk of adversarial attack technologies being maliciously exploited to cause potential harm, appropriate preventive measures must be implemented. On the one hand, preventing the misuse of adversarial technologies is an effective strategy to mitigate such effects. Therefore, laws and regulations must be established to govern the use and development of adversarial attacks; researchers and developers must ensure that adversarial attacks are only used for lawful purposes and do not harm individuals or society. In this regard, all experiments in this work use public data, and the experimental process does not produce any harm. On the other hand, strengthening the model’s adversarial robustness is the fundamental way to avoid such dangers. Therefore, researchers should focus on constructing more robust models, investigating more effective adversarial attack defense mechanisms, and regularly evaluating and updating the model to cope with newly discovered vulnerabilities.

Conclusion

Existing hard-label adversarial attacks typically require a large number of queries to victim models in the black-box setting. This makes them impractical for investigating the potential adversarial vulnerabilities of current NLP models. To address this challenge, this work proposes a query-efficient hard-label adversarial attack method called QEAttack. To reduce the number of queries needed to optimize the semantics of adversarial texts, this work proposes a dual-gradient fusion strategy and a locality sensitive hashing based sentence-level semantic clustering strategy. These strategies are incorporated into the crossover and mutation steps of the genetic algorithm, respectively. Experimental results demonstrate that QEAttack effectively reduces the number of queries required to generate adversarial texts. At the same time, it preserves the attack efficacy, imperceptibility, and quality of the generated adversarial texts, particularly for document-level texts. Nonetheless, a limitation remains: for lengthy texts, QEAttack still requires a considerable total number of queries. In future work, we therefore plan to further refine the method to reduce query counts and to conduct more experiments against real-world models that perform complex language processing tasks.

Data availability

Data is available from the corresponding author on reasonable request.

References

Feldman, R. Techniques and applications for sentiment analysis. Communications of the ACM 56, 82–89 (2013).

Wankhade, M., Rao, A. C. S. & Kulkarni, C. A survey on sentiment analysis methods, applications, and challenges. Artificial Intelligence Review 55, 5731–5780 (2022).

Wang, H., Wu, H., He, Z., Huang, L. & Church, K. W. Progress in machine translation. Engineering (2021).

Fan, A. et al. Beyond English-centric multilingual machine translation. The Journal of Machine Learning Research 22, 4839–4886 (2021).

Kwiatkowski, T. et al. Natural questions: a benchmark for question answering research. Transactions of the Association for Computational Linguistics 7, 453–466 (2019).

Karpukhin, V. et al. Dense passage retrieval for open-domain question answering. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), 6769–6781 (2020).

Zhu, F. et al. Retrieving and reading: A comprehensive survey on open-domain question answering. arXiv preprint, arXiv:2101.00774 (2021).

Guo, W. et al. Deep natural language processing for search and recommender systems. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 3199–3200 (2019).

Wen, A. et al. Desiderata for delivering NLP to accelerate healthcare AI advancement and a Mayo Clinic NLP-as-a-service implementation. NPJ Digital Medicine 2, 130 (2019).

Lin, X. Sentiment analysis of e-commerce customer reviews based on natural language processing. In Proceedings of the 2020 2nd International Conference on Big Data and Artificial Intelligence, 32–36 (2020).

Nakano, R. et al. WebGPT: Browser-assisted question-answering with human feedback. arXiv preprint, arXiv:2112.09332 (2021).

Zhou, B., Yang, G., Shi, Z. & Ma, S. Natural language processing for smart healthcare. IEEE Reviews in Biomedical Engineering (2022).

Achiam, J. et al. GPT-4 technical report. arXiv preprint, arXiv:2303.08774 (2023).

Szegedy, C. et al. Intriguing properties of neural networks. arXiv preprint, arXiv:1312.6199 (2013).

Papernot, N., McDaniel, P., Swami, A. & Harang, R. Crafting adversarial input sequences for recurrent neural networks. In MILCOM 2016-2016 IEEE Military Communications Conference, 49–54 (IEEE, 2016).

Wang, J. et al. On the robustness of ChatGPT: An adversarial and out-of-distribution perspective. In ICLR 2023 Workshop on Trustworthy and Reliable Large-Scale Machine Learning Models (2023).

Qiu, S., Liu, Q., Zhou, S. & Huang, W. Adversarial attack and defense technologies in natural language processing: A survey. Neurocomputing 492, 278–307 (2022).

Ebrahimi, J., Lowd, D. & Dou, D. On adversarial examples for character-level neural machine translation. In Proceedings of the 27th International Conference on Computational Linguistics, 653–663 (Association for Computational Linguistics, Santa Fe, New Mexico, USA, 2018).

Cheng, Y., Jiang, L. & Macherey, W. Robust neural machine translation with doubly adversarial inputs. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, 4324–4333 (2019).

Cheng, M., Yi, J., Chen, P.-Y., Zhang, H. & Hsieh, C.-J. Seq2Sick: Evaluating the robustness of sequence-to-sequence models with adversarial examples. In AAAI, 3601–3608 (2020).

Atanasova, P., Wright, D. & Augenstein, I. Generating label cohesive and well-formed adversarial claims. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), 3168–3177 (2020).

Ren, S., Deng, Y., He, K. & Che, W. Generating natural language adversarial examples through probability weighted word saliency. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, 1085–1097 (2019).

Jin, D., Jin, Z., Zhou, J. T. & Szolovits, P. Is BERT really robust? A strong baseline for natural language attack on text classification and entailment. In Proceedings of the AAAI Conference on Artificial Intelligence 34, 8018–8025 (2020).

Zang, Y. et al. Word-level textual adversarial attacking as combinatorial optimization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, 6066–6080 (2020).

Maheshwary, R., Maheshwary, S. & Pudi, V. A strong baseline for query efficient attacks in a black box setting. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, 8396–8409 (2021).

Maheshwary, R., Maheshwary, S. & Pudi, V. Generating natural language attacks in a hard label black box setting. In Proceedings of the AAAI Conference on Artificial Intelligence 35, 13525–13533 (2021).

Ye, M., Miao, C., Wang, T. & Ma, F. TextHoaxer: Budgeted hard-label adversarial attacks on text. In Proceedings of the AAAI Conference on Artificial Intelligence 36, 3877–3884 (2022).

Saxena, S. TextDecepter: Hard label black box attack on text classification. arXiv preprint, arXiv:2008.06860 (2020).

Alzantot, M. et al. Generating natural language adversarial examples. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, 2890–2896 (2018).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95-International Conference on Neural Networks, vol. 4, 1942–1948 (IEEE, 1995).

Ye, M., Chen, J., Miao, C., Wang, T. & Ma, F. LeapAttack: Hard-label adversarial attack on text via gradient-based optimization. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2307–2315 (2022).

Liu, H. et al. SSPAttack: A simple and sweet paradigm for black-box hard-label textual adversarial attack. In Proceedings of the AAAI Conference on Artificial Intelligence 37, 13228–13235 (2023).

Ye, M. et al. PAT: Geometry-aware hard-label black-box adversarial attacks on text. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 3093–3104 (2023).

Mrkšić, N. et al. Counter-fitting word vectors to linguistic constraints. arXiv preprint, arXiv:1603.00892 (2016).

Cer, D. et al. Universal sentence encoder. arXiv preprint, arXiv:1803.11175 (2018).

Charikar, M. S. Similarity estimation techniques from rounding algorithms. In Proceedings of the Thirty-Fourth Annual ACM Symposium on Theory of Computing, 380–388 (2002).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint, arXiv:1412.6980 (2014).

Zhang, X., Zhao, J. & LeCun, Y. Character-level convolutional networks for text classification. Advances in Neural Information Processing Systems 28 (2015).

Pang, B. & Lee, L. Seeing stars: Exploiting class relationships for sentiment categorization with respect to rating scales. In Proceedings of the 43rd Annual Meeting of the Association for Computational Linguistics (ACL’05), 115–124 (2005).

Maas, A. et al. Learning word vectors for sentiment analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, 142–150 (2011).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint, arXiv:1810.04805 (2018).

Kim, Y. Convolutional neural networks for sentence classification. arXiv preprint, arXiv:1408.5882 (2014).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Computation 9, 1735–1780 (1997).

Pennington, J., Socher, R. & Manning, C. D. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), 1532–1543 (2014).

Reimers, N. & Gurevych, I. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing (Association for Computational Linguistics, 2019).

Zhou, Y., Zheng, X., Hsieh, C.-J., Chang, K.-W. & Huang, X. Defense against synonym substitution-based adversarial attacks via Dirichlet neighborhood ensemble. In Association for Computational Linguistics (ACL) (2021).

Ebrahimi, J., Rao, A., Lowd, D. & Dou, D. HotFlip: White-box adversarial examples for text classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), 31–36 (2018).

Acknowledgements

We acknowledge the financial support from the National Natural Science Foundation of China (NSFC) under Grant 62272089.

Author information

Contributions

S.Q. designed the approach, conceived the experiments, and wrote the article; Q.L. and S.Z. directed and reviewed the article; M.G. conducted the experiments; Y.Z. analyzed the results; Z.Z. and Z.W. searched and analyzed related research.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Qiu, S., Liu, Q., Zhou, S. et al. Hard label adversarial attack with high query efficiency against NLP models. Sci Rep 15, 9378 (2025). https://doi.org/10.1038/s41598-025-93566-5

DOI: https://doi.org/10.1038/s41598-025-93566-5
