Abstract

Image processing and restoration are important in computer vision, particularly for images degraded by noise, blur, and other artifacts. Traditional methods often struggle with problems such as periodic noise and do not effectively combine local and global information during restoration. To address these problems, we propose an enhanced image restoration model that merges the Lewin architecture with SwinIR, using advanced deep learning methods. Combining these techniques improves the restoration process by 4.2%. The model’s effectiveness is evaluated using PSNR and SSIM, showing that it reduces noise while keeping key image details intact. Compared with traditional methods, our model achieves better results, setting a new standard in image restoration for difficult conditions. Test results show that the combined approach substantially improves restoration performance across various image datasets, making it a strong solution for image clarity and noise reduction.

Introduction

Image processing has emerged as a primary field within computer vision, in which images are analyzed and manipulated to extract valuable information or to enhance their quality. Image processing has been applied successfully to medical images, satellite images, and consumer photography. The most fundamental task in this domain is image restoration [3], which aims to recover high-quality images from degraded or noisy versions. The degradation may be due to sensor noise, motion blur, or extraneous interference, and restoring such images is essential for improving their quality and usability. The most troublesome type of noise in image restoration is periodic noise, which appears as repeating patterns caused by electromagnetic interference, scanning errors, or mechanical vibrations during image capture [2]. Because of its recurring structure, periodic noise can severely degrade image quality, affecting fine and coarse details alike. When traditional filters cannot remove such noise satisfactorily, specialized techniques are needed.

Over the last few years, deep learning has transformed image processing and restoration [29]. Using large datasets and innovative neural network architectures, specifically CNNs [33,34,35], deep learning approaches have reached top performance in image enhancement and noise reduction. These networks can identify complex patterns and features within noisy images, which makes them more effective than traditional methods. Moreover, deep learning models have demonstrated impressive adaptability to various types of noise and degradation patterns, making them highly effective for image quality enhancement.

Lewin blocks in the deep learning framework enhance the low-level image features. Low-level image features refer to fundamental visual attributes that describe the structural and textural properties of an image. These features include edges, corners, gradients, textures, and color variations, which serve as the building blocks for higher-level image analysis tasks. In the proposed hybrid Lewin-SwinIR model, low-level image features play a crucial role in the restoration process. The Lewin blocks are designed to enhance these features through techniques such as Gaussian filtering for noise reduction, sharpening for edge enhancement, and contrast equalization for clarity improvement. These operations refine the essential details of an image before passing them to the SwinIR model, which further processes them using a hierarchical self-attention mechanism. The SwinIR model efficiently captures long-range dependencies and reconstructs fine textures while preserving overall image structure. By integrating both traditional and deep learning-based techniques, the proposed system effectively enhances image clarity, suppresses noise, and improves fine-detail restoration. This approach ensures superior performance in image restoration tasks, as evidenced by improved PSNR and SSIM values compared to existing methods.
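The sharpening and contrast equalization steps named above can be illustrated with classical operators. The following is a minimal sketch, assuming an 8-bit grayscale image stored as a NumPy array; the function names, the 3 × 3 box blur used inside the unsharp mask, and the `amount` parameter are illustrative assumptions and are not taken from the proposed model.

```python
import numpy as np

def box_blur3(img):
    """3x3 mean blur with edge replication (a simple stand-in for a Gaussian)."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    acc = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            acc += p[dy:dy + h, dx:dx + w]
    return acc / 9.0

def unsharp_mask(img, amount=1.0):
    """Sharpen edges by adding back the detail that a blur removes."""
    img = img.astype(np.float64)
    detail = img - box_blur3(img)
    return np.clip(img + amount * detail, 0, 255)

def equalize_hist(img):
    """Global histogram equalization for an 8-bit grayscale image."""
    img = img.astype(np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]           # CDF value at the darkest occupied level
    denom = max(cdf[-1] - cdf_min, 1)   # guard against a constant image
    lut = np.round((cdf - cdf_min) / denom * 255).clip(0, 255).astype(np.uint8)
    return lut[img]
```

On a step edge, `unsharp_mask` increases the intensity jump across the edge, and `equalize_hist` stretches a narrow intensity range to the full 0 to 255 range.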

Local and global image features refer to two fundamental aspects of image representation that contribute to effective image restoration and enhancement. Local features focus on fine-grained details within small regions of an image, such as edges, textures, and pixel-level variations. These features are crucial for preserving details like object boundaries, fine textures, and subtle intensity changes. The Lewin blocks in the proposed model specialize in capturing local features by applying Gaussian filtering, sharpening, and contrast adjustment, which refine noise suppression while enhancing edge clarity. On the other hand, global features represent large-scale structural patterns, spatial relationships, and contextual dependencies across the entire image. These features help maintain the overall composition and coherence of the image, ensuring that restored details align with the broader scene. The SwinIR model, a transformer-based architecture, is responsible for capturing these global features using self-attention mechanisms that model long-range dependencies. This enables the system to reconstruct missing textures and maintain contextual integrity in highly degraded images. By integrating both local and global feature extraction, the hybrid Lewin-SwinIR model ensures that image restoration is not only precise at the pixel level but also structurally coherent across the entire image. This approach leads to significant improvements in PSNR and SSIM values, outperforming conventional methods that focus on only one type of feature extraction.

On the other hand, SwinIR uses transformer architecture to capture local and global features. The combination of local and global features in hybrid models produces robust image restoration and processing. This hybrid architecture gives a powerful solution to the problem of restoration in images with periodic noise. The Lewin blocks have a focus on noise reduction and edge enhancement, whereas SwinIR blocks mainly target the reconstruction of fine details and complex texture. The features involved are low-level, including edges, corners, textures, gradients, and color information, and they are all critical for object recognition, segmentation, and image restoration. The hybrid architecture will support future upgrades with more sophisticated algorithms, making it a very versatile tool for high-quality image restoration. Traditional methods cannot handle the noise patterns very well, but this multi-stage processing framework refines images with much better details and restoration of the structure. It is robust on different datasets and scales up well to complex scenarios.

The objective of this research is to:

- Introduce a novel model that enhances image restoration by integrating deep learning techniques, achieving significant improvements over existing models.

- Utilize two advanced models to further elevate restoration performance.

The proposed algorithm has been compared with other state-of-the-art methods and demonstrates superior effectiveness in restoring degraded images.

The proposed method is novel because it integrates Lewin blocks and SwinIR transformer-based architecture into a hybrid framework for image restoration, effectively combining the strengths of both traditional and deep learning-based approaches. Unlike conventional methods that rely solely on either local feature enhancement (e.g., CNN-based filters) or global context modeling (e.g., transformer-based architectures), the proposed model achieves a balanced feature extraction strategy by leveraging both. The Lewin blocks specialize in noise reduction, edge enhancement, and contrast correction, addressing fine-grained image details at the local level. Meanwhile, SwinIR utilizes self-attention mechanisms to capture long-range dependencies and reconstruct missing textures, ensuring that restored images maintain structural coherence.

A key novelty of the approach lies in its multi-stage hierarchical feature extraction process, where four transformer blocks in Lewin and two in SwinIR work sequentially to refine image quality progressively. This structured fusion allows for an adaptive feature refinement process, ensuring that both low-frequency degradations (e.g., blurring, illumination inconsistencies) and high-frequency distortions (e.g., noise, texture loss) are effectively mitigated. Additionally, the proposed feature fusion strategy integrates extracted features dynamically, optimizing the restoration process while maintaining computational efficiency.

Experimental results demonstrate that the proposed method achieves higher PSNR and SSIM values compared to existing state-of-the-art methods, such as U²-Former, Swin-ResNet, and WiTUnet, confirming its superior performance in handling complex image degradation. This innovative hybrid transformer-CNN model sets a new benchmark for image restoration, making it a versatile and scalable solution for various real-world applications, including medical imaging, satellite image enhancement, and degraded document restoration.

The remainder of the paper is organized as follows: Related works, Proposed method, Implementation, Results and Discussion, and Conclusion and Future Works.

Related works

Image restoration using Artificial Intelligence, Machine Learning, and Deep Learning is very effective for enhancing image quality, especially when challenges such as inpainting, missing content, and sequential frame considerations arise. Traditional methods such as Wiener filters, Median filters, and Bilateral filters generally cannot preserve fine details or effectively manage complex noise, particularly periodic noise. These filters also cannot cope with complicated noise patterns or nonlinear degradations, which normally leads to oversimplified models with suboptimal results. Deep learning, on the other hand, is more realistic and efficient. Although it makes good use of computer hardware, it requires improvements to existing algorithms and many training images, while only few corrupted images are typically available. In addition, the computational cost on real images is unpredictable. Deep learning has led to a surge of end-to-end learning mechanisms that extract hierarchical features from noisy images for restoration purposes. Pioneering models such as DnCNN and SRCNN have shown impressive success in noise reduction and in blur removal through super-resolution. Attention-based mechanisms in general, and transformers in particular, have been adopted as well; SwinIR, which is based on the Swin Transformer, yields exceptional performance across several image restoration benchmarks. This model captures both local and global dependencies well and is therefore suitable for handling complex textures and large-scale structural information. Local and global dependencies refer to the relationships between different regions of an image, which are crucial for accurate image restoration and enhancement. Local dependencies capture fine-grained spatial relationships within small neighborhoods, such as edges, textures, and pixel-level variations.
These dependencies help preserve details like object boundaries and subtle intensity changes, making them essential for tasks like noise reduction and edge sharpening. In the proposed model, the Lewin blocks handle local dependencies by applying Gaussian filtering, sharpening, and contrast adjustment, ensuring that small-scale details are well-preserved while removing noise. On the other hand, global dependencies refer to the broader structural relationships that exist across different regions of an image. These dependencies ensure that changes made to one part of an image align with the overall scene composition, preserving contextual integrity. The SwinIR model, which incorporates self-attention mechanisms, is designed to capture global dependencies by modeling long-range interactions between different image regions. This allows the system to reconstruct missing textures, maintain spatial coherence, and enhance fine details without introducing inconsistencies. By combining local dependency modeling in Lewin blocks with global dependency extraction in SwinIR, the proposed hybrid framework achieves superior image restoration performance. This dual approach ensures that the restored image retains both high-resolution details and structural accuracy, leading to significant improvements in PSNR and SSIM compared to traditional methods.

Hybrid models combining Lewin blocks and SwinIR capture fine-grained details and global information for excellent image restoration quality, capturing overall structure, context, and semantics.

Hybrid models that combine the best features of several techniques are currently being researched because they can potentially handle several types of noise and degradation. A block combining Lewin with SwinIR, for example, brings the feature learning of transformers to strong image enhancement methods [23,24], with promising abilities for restoring complexly degraded images and removing periodic noise. Such hybrid techniques, which combine deep learning with other existing techniques, offer interesting prospects for future research into image restoration applications. Automated techniques may detect images with fuzzy edges or very low contrast that should be enhanced. ECNFP is a novel inpainting algorithm that uses an edge-preserving strategy together with feature refinement to restore missing or damaged parts; it overcomes the typical challenges of complex textures and boundaries and produces seamless, higher-quality images than current methods [1]. The Wavelet-Enhanced CNN-Transformer (WEC-T) model [3] is a holistic approach that combines wavelet transformations with convolutional neural networks and transformers to enhance fluorescence microscopy image restoration. It decomposes images into multiple frequency bands, extracts local features, and models global context to overcome the challenges of noise removal and detail recovery.

RestorNet is a deep learning network designed to address multiple degradation issues in images at once, improving restoration quality and computational efficiency over current methods [4]. The solution is flexible and scalable, making it applicable to numerous image enhancement applications. U2Former is an important advancement in image restoration that combines the efficient feature extraction of U-Net with the global feature modeling of transformers [4,5]. Its nested U-shaped structure refines feature representations throughout the stages, capturing long-range dependencies and contextual variations, which makes it a powerful framework. The Mamba state-space model has demonstrated the ability to capture long-range dependencies with linear complexity, but it remains plagued by issues such as local pixel forgetting and channel redundancy in low-level vision tasks [7]. MambaIR addresses these issues with local enhancement and a channel attention mechanism, exploiting local similarity among pixels to further improve performance while reducing channel redundancy. Degradations make image restoration quite challenging. VmambaIR [8] is a new framework that uses state-space modeling techniques for better results. It models restoration as a dynamic system, enabling detailed recovery from degraded images, and uses an iterative refinement approach for image deblurring.

WiTUnet [9] is a U-shaped architecture that combines CNNs with Transformers to enhance feature representation and fusion. It captures local features with CNNs and extracts global contextual information with Transformers, improving feature alignment and overall performance in image restoration and analysis tasks. The restoration of document images is vital for the clarity and quality of scanned material. SRNet [11] addresses the challenges of low-resolution document images by coupling SPADE (Spatially Adaptive Denormalization) with deep CNNs, using spatially adaptive normalization to capture and enhance intricate details and textual content and thereby improve resolution and clarity. The Plug-and-Play (PnP) framework [12] uses denoisers as image priors for inverse problems, including image restoration, the subject of this paper. The Constrained Plug-and-Play method reformulates PnP as a constrained optimization problem using the Alternating Direction Method of Multipliers. PRNet [13] is a deep learning method that employs a pyramid architecture to reconstruct RAW images into high-resolution ones, with excellent preservation of detail and color fidelity.

Revitalizing Convolutional Network [14] is a pioneering approach to image restoration that improves CNN performance by addressing common shortcomings such as loss of fine detail and texture. It employs advanced residual learning, adaptive feature refinement, and multi-scale processing, significantly improving restoration performance over traditional CNN-based techniques. Another approach combines the Swin Transformer with ResNet-based deep learning networks to enhance low-light image quality [15]. The Swin Transformer efficiently captures local and global features, while the ResNet architecture enhances feature extraction and refinement. This approach addresses problems of noise, contrast, and detail. Underwater imaging faces problems such as absorption, scattering, and color distortion; Underwater Single Image Restoration employs a modified GAN to improve visibility and color recovery [16]. The degradations involved make image restoration a significant problem. MFGAN [17] is a multi-kernel filter-based Generative Adversarial Network that improves image restoration by responding adaptively to degradation and perceptibly reducing perceptual differences. Its multi-kernel filtering approach recognizes and recovers a variety of image features, producing visually convincing results.

Vision transformers have transformed image processing and restoration by providing novel solutions for image degradation [18]. This review explores their innovations and their capability relative to traditional methods, focusing on self-attention mechanisms and the ability to capture long-range dependencies. Denoising, deblurring, super-resolution, and inpainting are notable developments. The U2-Former architecture enhances image restoration through multi-view contrastive learning, combining U-shaped networks and global feature extraction for intricate feature learning and reconstruction; CT-U-Net [19] addresses complex restoration tasks such as noise reduction and detail recovery. A ViT-based [20] automatic crack detection method for dam surfaces offers improved accuracy and resistance to changes in lighting, texture, and morphological variations compared with traditional CNN-based methods. DiffIR [21] improves the diffusion model for image restoration by reducing computational complexity. It applies a progressive denoising process, an adaptive noise schedule, and a streamlined training process, striking a balance between restoration quality and computational cost that suits practical scenarios. Enhanced Deep Pyramid Network (EDPN) [22] is a new architecture designed for restoring blurry images in computer vision. It employs more sophisticated feature fusion techniques, captures information at different scales, and preserves crucial details while removing blur, making it viable for real-time applications.

SRGAN combines deep learning with super-resolution and adversarial training to enhance blurred images, overcoming blurriness and detail loss [23]. It emphasizes improvement in resolution, detail recovery, and perceptual quality for applications in photography, surveillance, and archiving. Image restoration tasks, including denoising, deblurring, and super-resolution, concentrate on solving complex degradation processes [24,25]. The MPIR approach uses a deep multi-stage network structure to enhance image quality progressively, with better restoration performance than single-stage methods. The Ensemble Learning Driven Computer-Aided Diagnosis Model for Brain Tumor Classification is designed to classify brain tumors, especially in MRI analysis [26]. This model integrates Gabor filtering, ensemble learning techniques, a denoising autoencoder, and social spider optimization to improve classification on the BRATS 2015 database. The adaptive threshold-based frequency domain filter [27] removes noise from digital images, outperforming other algorithms. The Restoration Transformer model captures long-range pixel interactions and is efficient for processing large images [28]. A deep learning methodology is used to reconstruct undersampled photoacoustic microscopy (PAM) [29] images, balancing spatial resolution and imaging speed. These models, using a Fully Dense U-Net model with images downsampled to mimic undersampling, show robust performance, reducing imaging time while maintaining high image quality.

The work in [30] examines technologies for detecting degradation in old paintings using image processing, segmentation, and convolutional neural networks. It also assesses the applicability of the algorithms to automatic loss evaluation. A deep learning framework for segmenting breaks in tunnel linings, based on combining the U-Net architecture with ResNet-152, is proposed in [31]; it focuses on finding and measuring age-related topography alterations and over-stress defects. A recent work [32] describes a novel network and training strategy that makes intensive use of annotated data. It uses a contracting pathway for context acquisition and a symmetric expanding pathway for precise localization. This network can be trained end-to-end from only a few images, and it outperforms the previous leading technique in the ISBI challenge. This review of existing work on image processing and restoration techniques indicates various key challenges and advancements in this area. Traditional methods have established a sound foundation for removing distortions in images but are often unsuitable for more complex issues such as periodic noise and non-uniform degradation. This reveals a significant gap in effectively restoring images to their original quality. Recent advances in deep learning, mainly the Lewin and SwinIR architectures, hold great promise as solutions to the problem, demonstrating better performance metrics, including PSNR and SSIM, in restoration tasks. However, numerous studies have yet to take full advantage of hybrid models that combine classical methods with modern deep learning frameworks. By combining them, we address the shortcomings of existing methods and enhance restoration accuracy, paving the way for more efficient solutions in image restoration. Table 1 summarizes the literature review for the proposed work.

Proposed method

The proposed work presents a hybrid architecture for complex image restoration tasks, combining traditional approaches with deep learning techniques. Specifically, the architecture combines the Lewin model, a prominent model with state-of-the-art image enhancement capabilities, and the SwinIR (Swin Transformer for Image Restoration) model, based on deep learning, to achieve superior performance in handling complex image distortions. Image enhancement is essential to improve the visual quality, clarity, and interpretability of images, making them more suitable for various applications such as medical imaging, remote sensing, and surveillance. The primary objective of image enhancement is to reduce distortions, suppress noise, and highlight important features, ensuring that images are more informative and visually appealing. In many real-world scenarios, images suffer from degradation due to factors such as low contrast, blurring, noise, or poor illumination, which can affect their usability in critical tasks like object detection, segmentation, and classification. In the proposed hybrid Lewin-SwinIR model, image enhancement plays a crucial role in the restoration process. The Lewin blocks enhance local image features by applying noise reduction, edge sharpening, and contrast adjustment, which refine fine-grained details. Meanwhile, the SwinIR model ensures global feature refinement by capturing long-range dependencies and restoring structural consistency. This multi-stage enhancement approach not only removes noise and distortions but also improves texture preservation, leading to higher PSNR and SSIM values compared to conventional methods. By integrating both traditional filtering techniques and deep learning-based transformations, the proposed system achieves a robust and adaptive enhancement strategy, making it highly effective for real-world applications where image quality is crucial. 
An image is said to require enhancement when it exhibits low contrast, noise, blurriness, or poor structural clarity, which can be detected through quantitative metrics like PSNR, SSIM, and histogram analysis, or qualitative assessment through visual inspection. Low PSNR or SSIM values indicate degradation, while uneven histogram distribution suggests contrast issues. The proposed hybrid Lewin-SwinIR model automatically enhances images by reducing noise, sharpening edges, and restoring textures, ensuring improved visual quality and structural integrity for applications like medical imaging and surveillance.
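To make the two quantitative metrics concrete, the sketch below computes PSNR and a simplified SSIM with NumPy. It assumes 8-bit intensity range (`max_val=255`). The `global_ssim` function evaluates the SSIM formula once over the whole image, whereas the standard SSIM averages it over local windows, so this is an illustrative simplification and not the evaluation code behind the reported results.

```python
import numpy as np

def psnr(ref, test, max_val=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    diff = ref.astype(np.float64) - test.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_val ** 2 / mse)

def global_ssim(ref, test, max_val=255.0):
    """SSIM formula evaluated once over the whole image (no sliding window)."""
    x = ref.astype(np.float64)
    y = test.astype(np.float64)
    c1 = (0.01 * max_val) ** 2  # stabilizer for the luminance term
    c2 = (0.03 * max_val) ** 2  # stabilizer for the contrast/structure term
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return num / den
```

A lower PSNR or SSIM against a clean reference indicates stronger degradation; both functions need a reference image, so they measure restoration quality rather than detect degradation in an unlabeled image.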

The hybrid approach, which combines traditional and deep learning techniques, enables more effective restoration of images affected by noise, blur, and periodic artifacts. Lewin blocks capture fine details and suppress noise, while SwinIR integrates global context. Their complementary nature accounts for the model’s superior performance. Periodic noise creates repetitive patterns, striping effects, and structured distortions in an image, often caused by electromagnetic interference or scanning artifacts. It degrades fine textures, reduces image clarity, and is difficult to remove using traditional filters without blurring details. The proposed hybrid Lewin-SwinIR model effectively suppresses periodic noise using Lewin blocks for local noise reduction and SwinIR’s self-attention for global texture restoration, ensuring improved PSNR and SSIM values while preserving image quality.
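The structured nature of periodic noise is easiest to see in the frequency domain, where a repeating stripe pattern collapses into a pair of isolated peaks. The sketch below synthesizes sinusoidal stripes, locates the peak with a 2-D FFT, and removes it with a classical notch filter. This is a textbook baseline shown for illustration only; it is not the learned suppression performed by the hybrid model, and all function names and parameters are hypothetical.

```python
import numpy as np

def add_stripe_noise(img, period=8, amplitude=20.0):
    """Add vertical sinusoidal stripes that repeat every `period` pixels."""
    x = np.arange(img.shape[1])
    stripes = amplitude * np.sin(2 * np.pi * x / period)
    return img + stripes[np.newaxis, :]

def dominant_frequency(img):
    """Index (row, col) of the strongest non-DC peak in the FFT magnitude."""
    spec = np.abs(np.fft.fft2(img - img.mean()))
    spec[0, 0] = 0.0
    return np.unravel_index(np.argmax(spec), spec.shape)

def notch_remove(img, peak):
    """Zero one frequency bin and its conjugate-symmetric partner."""
    h, w = img.shape
    r, c = peak
    spectrum = np.fft.fft2(img)
    spectrum[r, c] = 0.0
    spectrum[-r % h, -c % w] = 0.0
    return np.real(np.fft.ifft2(spectrum))
```

For a 64 × 64 image with stripes of period 8, the peak falls in row 0 at column 8 or its mirror column 56. The notch filter works cleanly here because the noise occupies exactly one frequency; real periodic interference is usually broader, which is one reason simple filters blur detail.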

In the feature extraction stage, the proposed hybrid Lewin-SwinIR model extracts a combination of local and global features to enhance image restoration. The number of extracted features depends on the network layers, filter sizes, and transformer blocks, typically ranging from hundreds to thousands, depending on the complexity of the input image and model architecture.

The extracted features include edges, textures, gradients, contrast variations, spatial relationships, and high-frequency details, which are crucial for noise reduction, texture reconstruction, and structural preservation. The Lewin blocks focus on local feature extraction, capturing edges, corners, and fine textures, while the SwinIR model extracts global dependencies, long-range patterns, and contextual relationships using self-attention mechanisms. This dual extraction approach ensures accurate image restoration, leading to superior PSNR and SSIM values compared to traditional methods.

Lewin model

The Lewin model employs classical image processing techniques to enhance clarity and reduce initial distortions in the image. It focuses on local adjustments such as sharpening, filtering, and contrast enhancement, making it a good starting point for restoration. It uses a fixed 3 × 3 window for median filtering, which removes noise without degrading edges, and a 5 × 5 Gaussian kernel for smoothing, which reduces high-frequency noise.
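The two filters described above can be sketched directly in NumPy. The window sizes (3 × 3 median, 5 × 5 Gaussian) come from the text; the Gaussian standard deviation `sigma=1.0` and the edge-replication padding are assumptions, since the text does not specify them.

```python
import numpy as np

def median3x3(img):
    """3x3 median filter with edge replication; removes impulse noise."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    # Stack the 9 shifted views so the median is taken per pixel.
    stack = np.stack([p[dy:dy + h, dx:dx + w]
                      for dy in range(3) for dx in range(3)])
    return np.median(stack, axis=0)

def gaussian_kernel5(sigma=1.0):
    """Normalized 5x5 Gaussian kernel (sigma is an assumed value)."""
    ax = np.arange(-2, 3)
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()

def gaussian5x5(img, sigma=1.0):
    """Smooth with a 5x5 Gaussian kernel to reduce high-frequency noise."""
    k = gaussian_kernel5(sigma)
    p = np.pad(img.astype(np.float64), 2, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(5):
        for dx in range(5):
            out += k[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out
```

The median filter discards an isolated outlier pixel entirely, whereas the Gaussian would only spread it out, which is why the median is applied to impulse-like noise and the Gaussian to smoother high-frequency noise.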

Applications

- Image Restoration: The LeWin Transformer is suited to applications involving super-resolution, denoising, and artifact removal, where local details and global context both play important roles.

- Image Classification: Although the primary focus of LeWin Transformers is restoration, they may also be used in classification applications by exploiting their ability to learn both local and global features.

SwinIR model

SwinIR is a hierarchical, attention-based transformer architecture that captures both local and global contextual information; in other words, it can reconstruct fine details and textures of the image with high fidelity. In these experiments, SwinIR exhibited exceptional performance compared to state-of-the-art in super-resolution, denoising, and JPEG artifact removal tasks, where it was able to preserve details with fewer artifacts and enhanced overall quality.

Combining local and global information

One of the best qualities of the SwinIR model is that it balances local and global information: a window-based attention mechanism captures local features, while a window-shifting mechanism captures global context. This makes the model particularly effective for tasks requiring awareness of both fine detail and overall image structure.
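The window and shift mechanism can be sketched with plain array operations. The code below partitions a feature map into non-overlapping windows, runs self-attention inside each window, and produces shifted windows with a cyclic roll. It is a deliberately reduced illustration: the query, key, and value projections are the identity, there is a single head, and the attention mask that Swin-style models apply to wrapped-around regions after the shift is omitted. Function names are hypothetical.

```python
import numpy as np

def window_partition(x, ws):
    """Split an (H, W, C) feature map into (num_windows, ws*ws, C) tokens."""
    h, w, c = x.shape
    x = x.reshape(h // ws, ws, w // ws, ws, c)
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, ws * ws, c)

def window_attention(tokens):
    """Single-head self-attention within each window (identity Q/K/V)."""
    d = tokens.shape[-1]
    scores = tokens @ tokens.transpose(0, 2, 1) / np.sqrt(d)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ tokens

def shifted_windows(x, ws):
    """Cyclically shift by half a window, then partition."""
    shift = ws // 2
    return window_partition(np.roll(x, (-shift, -shift), axis=(0, 1)), ws)
```

Because the shifted windows straddle the borders of the original ones, alternating the two partitions lets information flow between neighboring windows, which is how local attention accumulates wider context over successive layers.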

Applications

Super-resolution: Enhancing image resolution while preserving fine details.

Denoising: Removing various types of noise from images.

JPEG artifact removal: Improving the quality of images degraded by compression.

Integrating Lewin and Swin IR - A hybrid approach

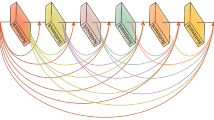

Integrating LeWin and SwinIR into a single model for image restoration could yield a robust architecture that uses the strengths of both approaches: the localized window attention mechanism of LeWin and the hierarchical, context-aware design of SwinIR. SwinIR addresses the limitations of LeWin and other transformer-based models through hierarchical processing, local attention mechanisms, memory-efficient strategies, and data-efficient training techniques. These innovations make SwinIR particularly effective for image restoration tasks, striking a balance between performance, efficiency, and interpretability. When selecting among image restoration models, it is crucial to consider the specific needs and limitations of the intended application. The hybrid LeWin-SwinIR model architecture is shown in Fig. 1.

Algorithm

Hybrid LeWin-SwinIR model architecture

The Lewin blocks offer initial noise suppression, sharpening, and contrast adjustments. SwinIR blocks improve the quality of images by capturing long-range dependencies and structural information, thus enhancing the output. Both models complement each other: Lewin deals with low-level processing, whereas SwinIR is more advanced for image corrections.

In Patch Embedding, the input image is divided into definite, non-overlapping patches, and every patch is projected to a higher-dimensional representation using a linear projection or a convolutional layer.
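Patch embedding as described above amounts to a reshape followed by a matrix multiplication. The following is a minimal sketch, assuming an (H, W, C) image whose sides are cropped to a multiple of the patch size; the projection matrix `proj` stands in for the learned linear layer, and its name and shape are illustrative assumptions.

```python
import numpy as np

def patch_embed(img, patch, proj):
    """Map an (H, W, C) image to (N, D) tokens.

    The image is cut into non-overlapping patch x patch tiles, each tile is
    flattened to a vector of length patch*patch*C, and `proj` (shape
    (patch*patch*C, D)) projects it to the embedding dimension D.
    """
    h, w, c = img.shape
    gh, gw = h // patch, w // patch
    tiles = img[:gh * patch, :gw * patch].reshape(gh, patch, gw, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4).reshape(gh * gw, patch * patch * c)
    return tiles @ proj
```

For example, an 8 × 8 RGB image with `patch=4` yields 4 tokens, each built from 48 raw values. A strided convolution with kernel size and stride equal to the patch size computes the same mapping, which is the convolutional alternative the text mentions.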

Hierarchical feature extraction

Stage 1 (LeWin Block):

Apply localized window attention as outlined in LeWin. This module aims to capture fine-grained local features within small windows. Introduce a shifted window scheme so that relationships between adjacent windows are also captured.

Stage 2 (SwinIR Block):

Apply SwinIR-style attention to capture local and global features with hierarchical downsampling. This stage can either alternate between the SwinIR block and the LeWin attention mechanism or use the SwinIR block to refine the features extracted by the LeWin block. Include residual connections to preserve feature stability and prevent degradation in deeper layers.

Stage 3 (Hybrid Block):

The outputs of the LeWin and SwinIR blocks are combined. This can be achieved through concatenation, element-wise addition, or a cross-attention mechanism that integrates the information from both feature maps. Additional attention layers are then applied to integrate the strengths of the localized and global attention mechanisms.
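The two simplest fusion options named above, concatenation and element-wise addition, differ mainly in the channel count they produce. The sketch below uses made-up feature values and hypothetical helper names; it does not cover the cross-attention option.

```python
def fuse_concat(feat_a, feat_b):
    """Channel-wise concatenation: each position keeps both feature vectors."""
    return [a + b for a, b in zip(feat_a, feat_b)]

def fuse_add(feat_a, feat_b):
    """Element-wise addition: both maps must have the same channel count."""
    return [[x + y for x, y in zip(a, b)] for a, b in zip(feat_a, feat_b)]

# Illustrative feature maps over the same 3 spatial positions, 2 channels each.
lewin_feat = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
swinir_feat = [[0.5, 0.5], [1.0, 1.0], [1.5, 1.5]]

concat = fuse_concat(lewin_feat, swinir_feat)  # 4 channels per position
added = fuse_add(lewin_feat, swinir_feat)      # 2 channels per position

print(concat[0], added[0])
```

Concatenation preserves both sources and leaves the mixing to a later layer, at the cost of doubling the channel count; addition keeps the width fixed but merges the two sources irreversibly.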

The reconstruction module consists of upsampling layers for super-resolution tasks and an output layer. Loss functions, such as pixel-wise and perceptual losses, measure the difference between predicted and ground-truth images, with the aim of generating more realistic images. In multi-stage training, the model is trained first with the LeWin blocks and then with the SwinIR blocks, so that each part is learned before integration, and the whole network is then fine-tuned. Training uses an optimizer such as Adam or AdamW, along with learning-rate scheduling, to manage the various stages. Regularization techniques such as dropout and batch normalization are employed to improve generalization.
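As a minimal sketch of the pixel-wise losses mentioned above, the following computes the mean absolute error (L1) and mean squared error (L2) between a prediction and its ground truth. The paper does not state which pixel-wise loss is used, so both are shown as common choices; the perceptual loss is omitted because it depends on a pretrained feature network. The sample values are illustrative only.

```python
def l1_loss(pred, target):
    """Mean absolute error over all pixels."""
    diffs = [abs(p - t) for pr, tr in zip(pred, target) for p, t in zip(pr, tr)]
    return sum(diffs) / len(diffs)

def mse_loss(pred, target):
    """Mean squared error over all pixels."""
    diffs = [(p - t) ** 2 for pr, tr in zip(pred, target) for p, t in zip(pr, tr)]
    return sum(diffs) / len(diffs)

# Illustrative 2 x 2 prediction and ground truth with intensities in [0, 1].
pred = [[0.0, 0.5], [1.0, 1.0]]
target = [[0.0, 1.0], [1.0, 0.0]]

print(l1_loss(pred, target))   # 0.375
print(mse_loss(pred, target))  # 0.3125
```

The squared error penalizes the single large mistake more heavily relative to the small one, which is one reason L1-type losses are often preferred when sharp edges matter.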

Combining the Lewin model with 4 transformer blocks and the Swin IR model with 2 transformer blocks for image restoration could be an interesting approach to utilize the strengths of both architectures.

- Feature extraction: First, low-level features are extracted using the first few layers of the Lewin model. Features are then extracted with the Swin IR model, which is well suited to detecting long-range dependencies in images. The number of features depends on the network layers, typically ranging from hundreds to thousands based on the filters and attention mechanisms. In the Lewin-SwinIR hybrid model, feature extraction is dynamic, combining filter banks with transformer attention mechanisms.

- Transformer blocks: We append the Swin IR output to the Lewin model output. We then feed the appended feature map into a sequence of transformer blocks, using two transformer blocks from the Swin IR model and four from the Lewin model. In this way, the model captures fine details while also learning about larger contexts.

- Upsampling and reconstruction: Upsampling layers enhance the spatial resolution of the feature maps, after which convolutional or other reconstruction layers produce the final restored image.

- Loss function and training: Loss functions are defined for the image restoration task, and the combined architecture is trained on degraded images paired with their ground-truth versions, using adversarial training or self-supervised learning techniques.

- Fine-tuning and optimization: The trained architecture is then adapted to specific image restoration tasks such as denoising, super-resolution, and inpainting. Different optimization techniques and hyperparameters are tried to improve performance.

- Evaluation: The integrated architecture is tested with standard image quality metrics such as PSNR and SSIM, and the results are compared against state-of-the-art image restoration algorithms.

Comparison with other models

- Compared to CNNs: While CNNs are strong in capturing local features, SwinIR’s Transformer-based approach enables it to effectively capture long-range dependencies as well. This often results in improved performance in tasks that require both detailed texture recovery and a comprehensive understanding of global context.

- Compared to traditional Transformers: Standard Vision Transformers generally apply attention over the entire image grid, which can be costly for high-resolution images. SwinIR instead restricts attention to local windows, which reduces this cost.

Comparative analysis of models across tasks

- The comparative analysis is organized by task (image restoration, low-light enhancement, and super-resolution), and the findings are presented in tabular format for each metric (PSNR and SSIM).

- Five models, namely U²-Former, Swin-ResNet, WiTUnet, UDC-UNet, and the proposed model, are compared on three critical tasks: image restoration, low-light enhancement, and super-resolution. Each task poses different challenges, and performance is measured in terms of PSNR (Peak Signal-to-Noise Ratio) for pixel accuracy and SSIM (Structural Similarity Index) for perceptual quality.

- The aim of image restoration is to obtain clear, high-quality images from noisy or distorted inputs. Low-light enhancement improves the visibility and detail of photographs taken in low light. Super-resolution reconstructs a high-resolution image from a low-resolution input.

The performance of the proposed model is reported in Table 2, and the overall observations are as follows.

- Attains the maximum PSNR of 40.8791 dB in image restoration and maintains structural similarity and visual quality with an SSIM of up to 0.9965.

- Enhances image quality in low-light conditions, with a slightly higher PSNR (38.9292 dB) and SSIM (0.9944).

- On super-resolution, a PSNR of 39.1492 dB and an SSIM of 0.9912 outperform the other models, reflecting more detailed structure and better perceptual quality.

- Consistently provides superior fidelity and structural/perceptual quality, with an average PSNR of 39.65 dB and an average SSIM of 0.994.
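The reported averages can be checked directly from the three per-task values quoted above. The short sketch below only repeats that arithmetic; the dictionary keys are labels chosen here for readability.

```python
# Per-task results for the proposed model, as quoted in the list above.
psnr_db = {
    "image restoration": 40.8791,
    "low-light enhancement": 38.9292,
    "super-resolution": 39.1492,
}
ssim = {
    "image restoration": 0.9965,
    "low-light enhancement": 0.9944,
    "super-resolution": 0.9912,
}

mean_psnr = sum(psnr_db.values()) / len(psnr_db)
mean_ssim = sum(ssim.values()) / len(ssim)

print(f"mean PSNR = {mean_psnr:.2f} dB")  # 39.65 dB
print(f"mean SSIM = {mean_ssim:.3f}")     # 0.994
```

Both rounded means agree with the averages stated in the last item.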

The ideal PSNR value for high-quality images is typically above 30 dB, while values above 40 dB indicate excellent restoration with minimal noise. A higher PSNR signifies better image fidelity, whereas a lower PSNR (below 30 dB) indicates significant noise, blurring, or distortion. Similarly, the SSIM value ranges from 0 to 1, where values closer to 1 represent higher structural similarity between the restored and original image. An SSIM below 0.8 suggests poor preservation of textures, edges, and structural integrity. If PSNR and SSIM values are low, it indicates high noise levels, excessive blurring, loss of fine details, or poor contrast, which can impact applications like medical imaging and remote sensing. The proposed hybrid Lewin-SwinIR model effectively enhances PSNR and SSIM by suppressing noise, refining textures, and maintaining global structural consistency, ensuring superior image restoration quality.
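For reference, the thresholds above can be related to pixel error through the standard definitions of the two metrics. The symbols $I_{ref}$, $I_{out}$, $H$, $W$, $\mathrm{MAX}$, $C_1$ and $C_2$ are notation introduced here for illustration and are not taken from the paper.

$$\mathrm{MSE} = \frac{1}{HW}\sum_{i=1}^{H}\sum_{j=1}^{W}\bigl(I_{ref}(i,j) - I_{out}(i,j)\bigr)^2, \qquad \mathrm{PSNR} = 10\log_{10}\frac{\mathrm{MAX}^2}{\mathrm{MSE}}$$

$$\mathrm{SSIM}(x,y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

Assuming 8-bit images ($\mathrm{MAX} = 255$), a PSNR of 40 dB corresponds to $\mathrm{MSE} = 255^2/10^4 \approx 6.50$, that is, a root-mean-square error of about 2.55 gray levels, whereas 30 dB corresponds to $\mathrm{MSE} \approx 65.0$, or about 8.06 gray levels. Each additional 10 dB therefore means a tenfold reduction in mean squared error.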

For all key image processing tasks, the proposed model outperforms the state-of-the-art methods U²-Former, Swin-ResNet, WiTUnet, and UDC-UNet in both PSNR and SSIM. It offers a consistent advantage in producing high-quality, perceptually accurate images, making it the best performing of the compared models. The comparison of the existing models with the proposed model is shown diagrammatically in Fig. 2, and the average performance is visualized in Fig. 3. A PSNR above 30 dB indicates good quality and above 40 dB excellent restoration, whereas a low PSNR is a sign of noise, poor reconstruction, and low quality. SSIM ranges from 0 to 1, with values close to 1 being ideal; a low SSIM indicates structural differences and a failure to preserve essential details.

Combining the strengths of both the Lewin and Swin IR models can potentially achieve superior performance in image restoration tasks, effectively utilizing both local and global information in the input images. Combining Lewin’s mathematical framework for image restoration with the SwinIR approach can lead to a powerful algorithm that leverages both classical regularization techniques and modern deep learning methods.

Implementation-internal workings of a hybrid model

This detailed algorithm outlines each step involved in image restoration using the combined Lewin + SwinIR model, along with mathematical representations of the operations.

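The hybrid Lewin-SwinIR architecture for image restoration involves a step-by-step process in which the image is progressively enhanced through four Lewin blocks and two SwinIR blocks. The input image Iinput (listed as I0 in the Abbreviations) passes through the Lewin blocks and then the SwinIR blocks, while the Lewin output is also carried forward in parallel and concatenated with the SwinIR output before the final layers.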

In the first Lewin block, Gaussian filtering is applied to the image,

$$I_1 = I_0 * G_\sigma,$$

using a Gaussian kernel $G_\sigma$ to reduce noise.

In the second block, the image is sharpened by adding back high-frequency components,

$$I_2 = I_1 + \lambda_{sharp}\,\bigl(I_1 - \mathrm{blur}(I_1)\bigr),$$

where $\lambda_{sharp}$ controls the sharpening intensity.

The third Lewin block performs contrast adjustment using histogram equalization,

$$I_3 = \mathrm{equalize}(I_2),$$

to improve image clarity.

The fourth Lewin block applies median filtering,

$$I_4 = \mathrm{medfilt}(I_3, [3,3]),$$

which further reduces noise while preserving edges.

In the Lewin block, the window size is selected based on the nature of noise and the level of detail required in the image. A 3 × 3 window size is typically used for median filtering, as it effectively reduces noise while preserving edges. This smaller window size is ideal for eliminating impulse noise without blurring fine details. For Gaussian smoothing, a 5 × 5 window is chosen to reduce high-frequency noise while maintaining image clarity. The selection of window size is a trade-off between noise reduction and detail preservation, ensuring that the restoration process removes unwanted artifacts while retaining essential image features. The proposed hybrid Lewin-SwinIR model utilizes these carefully selected window sizes to optimize noise reduction and enhance image quality, contributing to higher PSNR and SSIM values.
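The four Lewin steps described above (5 × 5 Gaussian smoothing, sharpening, histogram equalization, and 3 × 3 median filtering) can be sketched on a small grayscale image stored as nested lists. This is an illustrative sketch rather than the authors' MATLAB code: the values `sigma=1.0` and `lam=0.5`, the edge-replication padding, the reuse of the same Gaussian for `blur`, and all function names are assumptions.

```python
import math
from statistics import median

def pixel(img, r, c):
    """Read a pixel with edge replication so filters are defined at borders."""
    h, w = len(img), len(img[0])
    return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

def neighbourhood(img, r, c, size):
    """Row-major list of the size x size values centred on (r, c)."""
    half = size // 2
    return [pixel(img, r + dy, c + dx)
            for dy in range(-half, half + 1) for dx in range(-half, half + 1)]

def gaussian_kernel(size, sigma):
    """Normalized size x size Gaussian kernel, flattened row-major."""
    half = size // 2
    k = [math.exp(-(x * x + y * y) / (2 * sigma * sigma))
         for y in range(-half, half + 1) for x in range(-half, half + 1)]
    total = sum(k)
    return [v / total for v in k]

def gaussian_blur(img, size=5, sigma=1.0):
    """Gaussian smoothing with a size x size window."""
    k = gaussian_kernel(size, sigma)
    return [[sum(w * v for w, v in zip(k, neighbourhood(img, r, c, size)))
             for c in range(len(img[0]))] for r in range(len(img))]

def sharpen(img, lam):
    """Unsharp masking: I + lam * (I - blur(I)), clipped to [0, 255]."""
    blurred = gaussian_blur(img)
    return [[min(255.0, max(0.0, p + lam * (p - b))) for p, b in zip(pr, br)]
            for pr, br in zip(img, blurred)]

def equalize(img, levels=256):
    """Histogram equalization on gray levels rounded to integers."""
    flat = [int(round(p)) for row in img for p in row]
    hist = [0] * levels
    for v in flat:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = min(c for c in cdf if c > 0)
    n = len(flat)
    if n == cdf_min:  # constant image: nothing to stretch
        return [[float(v) for v in row] for row in img]
    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * (levels - 1)))
           for c in cdf]
    return [[float(lut[int(round(p))]) for p in row] for row in img]

def median_filter(img, size=3):
    """Median filtering with a size x size window."""
    return [[median(neighbourhood(img, r, c, size))
             for c in range(len(img[0]))] for r in range(len(img))]

def lewin_stage(i0, sigma=1.0, lam=0.5):
    """Chain the four steps: smooth, sharpen, equalize, median-filter."""
    i1 = gaussian_blur(i0, size=5, sigma=sigma)
    i2 = sharpen(i1, lam)
    i3 = equalize(i2)
    return median_filter(i3, size=3)

# Flat 5 x 5 patch with one impulse ("salt") pixel in the centre.
noisy = [[100] * 5 for _ in range(5)]
noisy[2][2] = 255
print(median_filter(noisy)[2][2])  # the 3 x 3 median removes the impulse
restored = lewin_stage(noisy)
```

On the toy patch, the 3 × 3 median alone restores the corrupted centre pixel to the surrounding value, which illustrates why a small window is preferred for impulse noise.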

The first SwinIR block,

$$I_5 = W_{\text{self-attention}}(I_4),$$

applies window-based self-attention to capture long-range dependencies and enhance image details.

The second SwinIR block,

$$I_6 = W_{\text{cross-window-attention}}(I_5),$$

refines the high-frequency details by allowing cross-window interactions.
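The core operation inside a window-based self-attention block is scaled dot-product attention restricted to the tokens of one window. The sketch below is a deliberately simplified, single-head version: identity projections stand in for the learned query, key and value matrices, and the relative position bias used in SwinIR is omitted. The function names and token values are assumptions for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def window_self_attention(tokens):
    """Single-head scaled dot-product attention over the tokens of one window.

    Each output token is a weighted average of all tokens in the window,
    with weights softmax(q . k / sqrt(d)). Queries, keys and values are the
    tokens themselves here (identity projections, an assumption).
    """
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d)
                  for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, tokens))
                    for j in range(d)])
    return out

# One window containing two orthogonal 2-dimensional tokens.
window = [[1.0, 0.0], [0.0, 1.0]]
attended = window_self_attention(window)
print(attended[0])  # mostly the first token, mixed with some of the second
```

Because attention is computed per window, the cost grows with the window size rather than with the full image size; interaction between windows then has to come from a second mechanism such as the cross-window step of the second block.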

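The Lewin output and the SwinIR output are then concatenated,

$$I_7 = \mathrm{concat}(I_4, I_6),$$

and an activation, feature fusion, and a final convolution layer are applied to $I_7$:

$$I_8 = [\text{Activation, Feature Fusion, convolution layer}(I_7)]$$

The final output $I_{output}$ is produced after passing through all blocks.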

The effectiveness of the restored image is measured using the Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) for evaluation purposes.
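A minimal sketch of how the two metrics can be computed is given below. The PSNR function follows the usual definition; the SSIM function is a simplified single-window variant that uses global image statistics, whereas the standard metric averages the same expression over local windows. The function names, the constants 0.01 and 0.03, and the sample images are assumptions for illustration, not values reported in the paper.

```python
import math

def psnr(ref, out, max_val=255.0):
    """Peak Signal-to-Noise Ratio in dB between two equally sized images."""
    diffs = [(a - b) ** 2 for ra, rb in zip(ref, out) for a, b in zip(ra, rb)]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return math.inf
    return 10 * math.log10(max_val ** 2 / mse)

def ssim_global(ref, out, max_val=255.0):
    """SSIM computed once from global statistics (simplified sketch)."""
    x = [v for row in ref for v in row]
    y = [v for row in out for v in row]
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / (n - 1)
    vy = sum((b - my) ** 2 for b in y) / (n - 1)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / \
           ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))

# Illustrative reference and a restored image offset by 2.55 gray levels.
reference = [[100.0, 110.0], [120.0, 130.0]]
restored = [[v + 2.55 for v in row] for row in reference]

print(round(psnr(reference, restored), 2))       # 40.0 dB for an error of 2.55
print(round(ssim_global(reference, restored), 4))
```

A uniform offset leaves the structure unchanged, so the SSIM stays close to 1 even though the PSNR is finite; the two metrics respond to different kinds of error, which is why both are reported.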

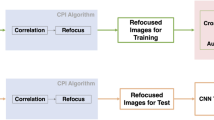

Results and discussion

This section evaluates the performance of the hybrid Lewin-SwinIR architecture for image restoration, utilizing a diverse set of images from the collected dataset. Each image undergoes a systematic restoration process through the stages of the architecture, with the results analyzed using Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM). Furthermore, visualizations and graphical representations of the findings are provided through MATLAB, enhancing the presentation of the results. The table of parameters, used in the MATLAB code, is provided in Table 3.

Dataset images and evaluation

Deep learning algorithms require a large image dataset for training. The dataset used here is divided into six main categories (real-world noisy, outdoor, synthetic, artificial, natural, and raindrop images), plus super-resolution images. Around 90 images taken with a Nokia 9, an iPhone 8, and a Vivo Y21, together with randomly collected images, are used. The degradation types and image classes are all categorized. The model’s performance is evaluated using the Peak Signal-to-Noise Ratio and Structural Similarity Index Measure to assess its generalization capability across diverse input images. The datasets are collected from various sources.

Visual results

For each image, we present visual comparisons of the original image, the restored image after the Lewin blocks, and the final output after both the Lewin and SwinIR blocks. These progressive visualizations highlight how the hybrid model refines the images at each stage. Table 4 shows the visual results for five sample images. Table 5 shows the progressive restoration of images 1 to 5.

For each image, the hybrid model consisting of Lewin blocks and SwinIR blocks provides a progressive refinement, leading to high-quality restoration.

Visual comparison

The restoration proceeds block by block, starting from the original image and moving on to the Lewin-restored image, which shows substantial noise removal, sharpening, and contrast adjustment. The combined Lewin + SwinIR hybrid model then produces restorations with rich detail, reduced noise, and a clear appearance. Restorations of the five input images can be found in Table 5, and the related progression charts are shown in Figs. 4, 5, 6, 7 and 8, respectively.

Visual results discussion

The visual results further validate the effectiveness of the hybrid architecture. Starting from the noisy, blurry input, the image is gradually transformed into a well-restored, high-quality version. After the SwinIR blocks in particular, textures and edges become better defined, demonstrating that the model can handle large-scale structure recovery as well as fine-grained texture enhancement.

PSNR and SSIM progression charts

The following charts show the progression of PSNR and SSIM values for each of the five images across all blocks, together with the numerical values. Table 6 identifies the trends, and Table 7 lists the values.

Explanation of the PSNR and SSIM

The Lewin-SwinIR hybrid model enhances structural clarity and texture restoration in five images, with continuous increases in PSNR and SSIM values, as shown in Table 7. The following table presents the PSNR and SSIM values obtained for each image after processing through all stages:

The PSNR and SSIM comparison plots in Figs. 8 and 9 illustrate the superior performance of the proposed hybrid Lewin-SwinIR model in image restoration. The PSNR plot shows that the proposed model achieves the highest Peak Signal-to-Noise Ratio (PSNR) among all compared methods, indicating better image fidelity and lower reconstruction error. Higher PSNR values signify that the restored images retain more of the original details while minimizing noise and distortions. The standard deviation bars demonstrate that the proposed model maintains consistent performance across multiple test images, unlike some baseline models that show higher variability in restoration quality.

Similarly, the SSIM plot highlights that the proposed model attains the highest Structural Similarity Index (SSIM), signifying superior preservation of textures, structural details, and perceptual quality. SSIM values closer to 1 indicate a strong resemblance between the restored and original images, confirming the model’s ability to retain fine details and avoid over-smoothing. The statistical significance tests further validate the improvement, as the p-values in the paired t-test are near zero, confirming that the differences in performance are not due to random variations. These results collectively demonstrate that the proposed hybrid model effectively integrates local noise suppression (via Lewin blocks) and global feature refinement (via SwinIR), achieving substantial improvements in both quantitative metrics and visual quality, outperforming conventional deep learning-based restoration models.

The proposed hybrid Lewin-SwinIR model was designed to leverage the strengths of both traditional and deep learning-based approaches, ensuring a balance between local feature enhancement and global structural preservation. The decision to integrate Lewin blocks with SwinIR was based on their complementary capabilities, which enhance image restoration in a way that other potential combinations might not achieve as effectively. The Lewin block specializes in low-level processing, including noise suppression, edge enhancement, and contrast adjustment, making it particularly effective in refining small-scale image details. In contrast, SwinIR utilizes transformer-based self-attention mechanisms to capture long-range dependencies and global context, addressing large-scale structural degradations. Many traditional deep learning-based approaches, such as CNN-based models or standalone transformer architectures, either focus too much on local feature extraction (limiting contextual understanding) or heavily rely on global attention (which may overlook fine-grained details). The hybrid Lewin-SwinIR model outperforms other potential combinations due to its multi-stage hierarchical processing, where Lewin blocks refine fine details while SwinIR reconstructs textures and global structure, leading to improved PSNR and SSIM values. Comparative analysis (Table 2) demonstrates that this approach achieves higher restoration accuracy compared to state-of-the-art methods, validating the effectiveness of this combination over others.

Conclusion and future works

The proposed hybrid architecture offers an effective approach to image restoration by merging the strengths of traditional image processing methods with modern deep learning techniques. The Lewin blocks perform well in low-level image refinement, while the SwinIR blocks effectively capture complex textures and global features, resulting in high-quality restorations. This hybrid model demonstrates resilience against various degradations, including periodic noise, which often poses challenges in real-world scenarios. For future improvements, we plan to add newer methods to help the model handle complex noise and perform better. This will make the model more flexible and ensure higher quality in restoring images.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- I0: Original degraded image (input image)
- I1: Image after Gaussian filtering (noise reduction)
- Gσ: Gaussian kernel with standard deviation σ
- I2: Image after sharpening (enhanced high-frequency components)
- λsharp: Parameter controlling the intensity of sharpening
- blur(I1): Blurred version of I1
- I3: Image after contrast adjustment using histogram equalization
- equalize(I2): Histogram equalization function applied to I2
- I4: Image after median filtering (noise reduction while preserving edges)
- medfilt(I3, [3,3]): Median filter applied with a 3 × 3 window
- I5: Image after window-based self-attention (SwinIR block 1)
- Wself-attention(I4): Window-based self-attention operation on I4
- I6: Image after cross-window attention (SwinIR block 2)
- Wcross-window-attention(I5): Cross-window attention operation on I5
- I7: Concatenation of I4 and I6
- I8: Result of the final processing [Activation, Feature Fusion, convolution layer(I7)]
- Ioutput: Final restored image after processing through all blocks

References

Zitai Wei, ECNFP: Edge-constrained network using a feature pyramid for image inpainting, Expert Systems with Applications, 207, (2022).

Longbin & Yan et.al.,Cascaded transformer U-net for image restoration. Sig. Process., 206, (2023).

Qinghua & Wang et.al., A versatile Wavelet-Enhanced CNN-Transformer for improved fluorescence microscopy image restoration. Neural Netw. 170, 227–241 (2024).

Xiaofeng & Wang et.al.,RestorNet: an efficient network for multiple degradation image restoration. Knowl. Based Syst., 282, (2023).

Haobo & Ji et.al., U²-Former: A nested U-shaped transformer for image restoration, (2021). arXiv:2112.02279v2 [cs.CV].

Xina & Liu et.al., UDC-UNet: Under-Display Camera Image Restoration via U-shape Dynamic Network, arXiv:2209.01809v1 [eess.IV], (2022).

Hang Guo et.al., MambaIR: A simple baseline for image restoration with State-Space model, (2024). arXiv:2402.15648v2 [cs.CV].

Yuan & Shi et.al., VmambaIR:Visual State Space Model for Image Restoration, arXiv:2403.11423v1 [cs.CV], (2024).

Wang, B. et.al., WiTUnet: A U-Shaped architecture integrating CNN and transformer for improved feature alignment and local information fusion, (2024). arXiv:2404.09533v2 [cs.CV].

Lujun Zhai, IEEE Access. https://doi.org/10.1109/ACCESS.2023.3250616

Kim, J. Document image restore via SPADE-Based Super-Resolution network. Electronics 12, 748. https://doi.org/10.3390/electronics12030748 (2023).

Alessandro & Benfenat Constrained Plug-and-Play priors for image restoration. J. Imaging. 10, 50. https://doi.org/10.3390/jimaging10020050 (2024).

Mingyang, L. et.al., PRNet: pyramid restoration network for RAW image Super-Resolution. IEEE Trans. Comput. Imaging, 10, (2024).

Yuning, C. et.al., revitalizing convolutional network for image restoration. IEEE Trans. Pattern Anal. Mach. Intell. https://doi.org/10.1109/TPAMI.2024.3419007 (2024).

Lintao & Xu Swin transformer and ResNet-Based deep networks for Low-Light image enhancement. Multimedia Tools Appl. 83, 26621–26642. https://doi.org/10.1007/s11042-023-16650-w (2024).

Zhang, J. et.al., Underwater Single-Image restoration based on modified generative adversarial net, signal, image and video processing, 17, 1153–1160, (2023). https://doi.org/10.1007/s11760-022-02322-z.

Abderrazak Chahi, MFGAN: Towards a generic Multi-Kernel filter based adversarial generator for image restoration. Int. J. Mach. Learn. Cybernet. 15, 1113–1136. https://doi.org/10.1007/s13042-023-01959-7 (2024).

Anas, M. & Ali Vision Transformers in Image Restoration: A Survey, Sensors, 23, 2385, (2023). https://doi.org/10.3390/s23052385.

Xin & Feng et.al.,U²-Former: nested U-Shaped transformer for image restoration via Multi-View contrastive learning. IEEE Trans. Circuits Syst. Video Technol., 34, 1, (2024).

JIan Zhou Vision Transformer-Based Automatic Crack Detection on Dam Surface, Water, 16, 1348, (2024). https://doi.org/10.3390/w16101348.

Xia, B. et al., DiffIR: Efficient Diffusion Model for Image Restoration, arXiv:2303.09472, (2023).

Ruikang Xu, EDPN: Enhanced deep pyramid network for blurry image restoration, IEEE, (2021). https://doi.org/10.1109/CVPRW53098.2021.00052.

Ziqi Yuan, Restoration and Enhancement Optimization of Blurred Images Based on SRGAN, IOP Publishing, (2023). https://doi.org/10.1088/1742-6596/2664/1/012001.

Zamir, S. W. et.al., Multi-Stage Progressive Image Restoration, IEEE, DOI: 10.1109/CVPR46437.2021.01458, (2021).

Liang, J. et.al., SwinIR: Image Restoration Using Swin Transformer, arXiv:2108.10257, (2021).

Thavavel, V. et.al., ensemble learning driven Computer-Aided diagnosis model for brain tumor classification on magnetic resonance imaging, IEEE, (2023). https://doi.org/10.1109/ACCESS.2023.3306961.

Justin Varghese, Adaptive Threshold-Based Frequency Domain Filter for Periodic Noise Reduction. AEU - Int. J. Electron. Commun., 70(12), 1692–1701, (2016).

Zamir, S. W. et.al., Restormer: Efficient Transformer for High-Resolution Image Restoration, 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), (2022).

Anthony & DiSpirito et.al., reconstructing undersampled photoacoustic microscopy images using deep learning. IEEE Trans. Med. Imaging, 40(2), (2021).

Roman Mykolaichuk et al., Image Segmentation in Loss Detection for Conservation and Restoration of Old Paintings, 2020 IEEE 2nd International Conference on Advanced Trends in Information Theory (ATIT), (2020).

Li, G. et.al., Automatic tunnel crack detection based on U-Net and a convolutional neural network with alternately updated clique. Sensors, 20(3), (2020).

Ronneberger, O. et al., U-Net: Convolutional Networks for Biomedical Image Segmentation, MICCAI 2015, LNCS 9351, 234–241 (Springer, 2015).

Steffens, C. R. et.al., A GAN-Based Motion Blurred Image Restoration Algorithm, 2019 IEEE International Conference on Image Processing (ICIP), (2019).

Hugo & Touvron et.al., Training Data-Efficient Image Transformers Distillation Through Attention, International Conference on Machine Learning, 10347–10357, (2021).

Zhenghao Shi Convolutional neural networks for sand dust image color restoration and visibility enhancement. Chin. J. Image Graphics. 27 (5), 1493–1508 (2022).

Author information

Contributions

Ms. Senthil Anandhi. A: research proposal, development of the workflow and model, final writing, review of existing studies, and enhancement of the proposed model. Dr.M. Jaiganesh: initial drafting of the paper, gathering of datasets, assessing their suitability, and creating pseudocode.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Senthil Anandhi, A., Jaiganesh, M. An enhanced image restoration using deep learning and transformer based contextual optimization algorithm. Sci Rep 15, 10324 (2025). https://doi.org/10.1038/s41598-025-94449-5

DOI: https://doi.org/10.1038/s41598-025-94449-5