Abstract

As AI is expected to take on the role of providing workplace feedback to employees in the future, understanding how AI and humans can effectively complement each other in this context is crucial. This study explores this question through a randomized controlled experiment that tests the effectiveness of feedback from two GPT-4o-based AI chatbots: one that delivers positive feedback focused on individual strengths (n = 54) and one that provides negative feedback aimed at areas for improvement (n = 48). Participants were Japanese office workers, including freelancers. We used mixed-effects models to assess whether the effects of these AI chatbots on occupational self-efficacy are moderated by the level of social support from supervisors and colleagues. Positive feedback from the AI chatbots significantly enhanced occupational self-efficacy. Negative feedback also boosted self-efficacy, but only when employees perceived a high level of emotional support from their supervisors and colleagues. These findings point to effective ways of integrating AI into the workplace, in which humans and AI assume complementary roles in employee development.


Introduction

How can AI and humans jointly foster member growth in the workplace? This question highlights a pivotal challenge for modern organizations as AI technology fundamentally reshapes how we nurture talent. Preliminary observations suggest that AI can facilitate data-driven, proactive human resource management, assessing employee turnover risks and skill levels to recommend tailored interventions1. Although it remains unclear how extensively AI can influence workplace behaviors, there is growing evidence that AI tools may complement, or even redefine, conventional talent development approaches.

Of particular note is the feasibility of AI-based workplace feedback. AI-powered workplace coaching has already demonstrated results comparable to human-led coaching2. Such technological innovations hold the potential to democratize access to effective work feedback. AI is expected to broaden opportunities for individuals who previously could not afford coaching, and the same principle can be applied to workplace feedback as well3. Incorporating AI into workplace talent management can be especially significant for small and medium-sized enterprises. By addressing challenges such as managers’ limited time and lack of feedback skills, AI-based feedback is expected to compensate for these resource constraints and enable a more systematic approach to member development1.

In light of recent advancements in AI technology, future workplaces are poised to integrate AI into their organizational environments, with AI and humans working synergistically to support employee development. However, the combined effects of AI-driven and human-provided support remain largely unexplored. This point becomes especially significant now that large language model (LLM)–based AI—beyond traditional rule-based systems—has begun to be used for conversational interventions such as coaching3. As more natural human-like conversations with AI become feasible, the possibilities for integrating AI into workplace feedback continue to expand.

Based on this perspective of future workplaces, the present study examines how AI-driven feedback and human social support within the workplace combine to influence employee development, particularly their occupational self-efficacy—the belief in one’s ability to successfully perform job-related tasks.

Occupational self-efficacy

The concept of self-efficacy, a core element of Bandura’s Social Cognitive Theory, is defined as an individual’s belief in their ability to perform specific tasks4. Enhanced self-efficacy leads to increased intrinsic motivation, higher goal-setting, greater effort, and persistence in the face of challenges5. Consequently, a positive correlation exists between self-efficacy and job performance6. Recognizing the importance of context, Bandura emphasized the need to measure self-efficacy within specific domains4, leading to the development of the concept of occupational self-efficacy, which pertains to an individual’s confidence in performing work-related tasks7,8. The current study investigates how AI-driven feedback contributes to the enhancement of employees’ occupational self-efficacy.

Positive/negative feedback

Feedback in the workplace can enhance occupational self-efficacy. Feedback research has extensively examined how different feedback styles affect workplace performance and development. A comprehensive meta-analysis spanning 30 years demonstrated that positive feedback generally yields larger effect sizes than negative (constructive) feedback or combinations of both approaches9. However, the effectiveness of feedback styles is contingent on specific boundary conditions. Research indicates that negative feedback can be particularly effective when recipients are experts rather than novices10, or when the focus is on information recognition rather than commitment enhancement11,12. Cultural differences also play a significant role; studies suggest that individuals in Japan and Chile, compared with those in North America, are more inclined to focus on negative self-related information, making negative feedback a more powerful motivator for self-improvement in these cultures13,14,15.

As AI increasingly assumes the role of feedback provider in workplaces, it remains unclear whether these established findings on human-to-human feedback will hold true for AI-delivered feedback. This uncertainty raises critical questions about the boundary conditions under which different types of AI feedback can effectively contribute to employee skill development and performance improvement.

Workplace social support

As AI systems increasingly provide workplace feedback, understanding the optimal integration of human support becomes crucial. Social support research offers valuable theoretical insights for examining human-AI interaction, as it illuminates how different types of human support could complement and enhance the effectiveness of AI-driven feedback. In workplace settings, social support—comprising assistance from supervisors, colleagues, and other sources—plays a pivotal role in stress management and employee development16.

Social support operates through two mechanisms: a buffering effect that mitigates the impact of stress, and a direct effect that enhances overall well-being16; this study focuses on the buffering effect. Buffering is particularly important in high-stress situations such as receiving negative feedback, where support can make employees more receptive to the feedback and more willing to act on it. AI feedback can be designed to provide constructive criticism by identifying areas for improvement. This approach is feasible because AI, unlike human feedback providers, can readily switch between positive and negative feedback styles through prompt engineering. Based on objective data, AI can provide detailed, specific feedback about areas for improvement. However, when such improvement-focused (negative) feedback is delivered, a supportive workplace environment becomes essential. Indeed, research grounded in Organizational Support Theory has demonstrated that when employees perceive high levels of organizational support (a belief that their organization values their contributions and cares about their well-being), the stress and psychological burden associated with negative feedback can be significantly reduced17. Consequently, employees are better equipped to respond to negative information constructively16.

Importantly, social support is recognized as a multidimensional construct, with emotional support identified as one of its key dimensions18. In this study, we analyze three dimensions of social support: informational support (advice and guidance), instrumental support (practical assistance), and emotional support (empathy and encouragement)19. This three-dimensional analysis is particularly relevant for our Japanese dataset, because it allows us to examine whether culturally specific patterns of social support effectiveness reported in prior work also appear here. A comparative experience sampling study of Japan and the United States revealed significant cultural variations in social support effects20. For Japanese participants, informational and instrumental support sometimes triggered feelings of incompetence and stress, whereas emotional support was perceived as natural and empathetic, fostering a sense of calm and competence20.

Based on these insights, we examine whether emotional support demonstrates similarly pronounced effects in our Japanese dataset when AI and humans jointly support team members.

Generative AI chatbot

Over the past few decades, AI technology has advanced significantly. Initially, AI systems were primarily based on rule-based algorithms and basic statistical models, with limited capabilities in processing natural language. Since then, the field has seen substantial progress, particularly with the advent of machine learning and deep learning technologies, which have enabled AI to tackle increasingly complex tasks21. A pivotal moment in this evolution was the introduction of transformer models, which greatly enhanced the precision and efficiency of natural language processing22. The rise of generative AI has brought a revolutionary change in AI capabilities. These large language models (LLMs) can now generate sophisticated and contextually relevant dialogues. Trained on extensive datasets, generative AI systems can simulate natural, human-like conversations23. This leap in technology has transformed AI from a mere supportive tool into a potential feedback provider or coach within actual work environments3. By 2022, studies had already suggested that AI-based coaching can be as effective as human coaching2.

Current study

This study’s findings primarily offer value to two key stakeholder groups. The first consists of practitioners seeking to enhance management functions through AI. In rapidly changing workplaces, learning how best to leverage “interactive support” such as AI-driven workplace feedback is a pressing concern for them. The second group comprises researchers in fields such as feedback studies and social support within organizational contexts. By connecting traditional theories to the AI domain and empirically examining their applicability in future workplaces, this research has the potential to broaden the scope of existing feedback and social support theories.

Accordingly, this study is expected to make a significant theoretical contribution as well. Traditional feedback research generally presupposes human-led interventions, leaving it unclear whether similar—and potentially new—effects might emerge when AI replaces the human as the feedback provider. To address this, the present research involves creating a chatbot capable of delivering two types of AI feedback (positive and negative) and testing their efficacy via a randomized controlled trial design. In addition, it investigates how varying degrees of social support from participants’ workplace relationships may amplify or diminish the effects of AI feedback. Through these examinations, the study aims to (a) extend the concept of who or what can serve as the agent in feedback theory, (b) expand the domain in which social support research can be applied, and (c) offer new insights on how to implement collaborative support for employees in real-world organizations where AI adoption is advancing. Ultimately, it will contribute to clarifying how future workplaces—where AI and human support become intricately interwoven—can balance employee development with organizational outcomes.

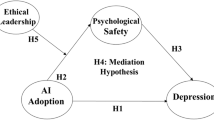

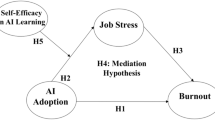

Hypotheses

In this study we propose two hypotheses.

First, we consider the effect of positive feedback through the lens of Bandura’s self-efficacy theory. Bandura identified four key sources of self-efficacy: mastery experiences, vicarious experiences, verbal persuasion, and physiological/affective states4,24. In the context of AI-provided feedback, receiving input that highlights accomplishments and emphasizes personal strengths serves two key functions. First, it constitutes verbal persuasion: explicit affirmations (e.g., “You showed strong analytical skills”) reinforce the message “you are capable.” Second, it reinforces mastery experiences: by referencing past successes (e.g., meeting challenging goals), the AI prompts users to reflect on their achievements, strengthening the self-perception that “I can do this.” Through these mechanisms, AI chatbots can engage the same theoretical processes as human feedback in fostering occupational self-efficacy.

One distinction between AI and human feedback lies in flexibility and consistency. AI is highly flexible, switching between positive and negative styles through prompt modifications, and once configured it is highly consistent, unaffected by daily moods or by the recipient’s reactions during the session. Humans, in contrast, are less flexible because they are predisposed toward certain feedback styles shaped by training and habit, and less consistent because their feedback can fluctuate with situational factors such as their mood or the session content. Despite these differences in how readily a feedback style can be changed and maintained, once the feedback takes on a clearly positive or negative orientation, both AI and human feedback are likely to be perceived simply as positive or negative information within a natural dialogue, so differences in how they are received should be relatively small. Therefore, we hypothesize that positive AI-generated feedback will enhance occupational self-efficacy just as effectively as positive feedback from human providers.

Hypothesis 1

Positive feedback from AI chatbots will enhance occupational self-efficacy.

Next, we examine how negative feedback from AI chatbots interacts with workplace social support. The buffering effect of social support has been well-documented in reducing stress and mitigating negative psychological impacts16. When individuals perceive strong emotional support from colleagues and supervisors, they are better equipped to process and utilize negative feedback constructively. In such supportive environments, negative feedback from AI is more likely to be viewed as valuable information for improvement rather than as a threat. Consequently, individuals can focus on identifying the next steps for success, ultimately leading to an increase in occupational self-efficacy, despite the negative nature of the feedback.

Importantly, among the three types of social support—informational, instrumental, and emotional—we hypothesize that only emotional support demonstrates a buffering effect. We base this hypothesis on previous findings in cultural psychology, which suggest that in Japanese contexts, while informational or instrumental support can sometimes leave recipients feeling incompetent, emotional support is generally perceived as natural and warm-hearted, thus producing positive outcomes20.

Hypothesis 2

Negative feedback from AI chatbots will enhance occupational self-efficacy only when there is a high level of emotional support from workplace supervisors and colleagues.

Methods

Participants

The experiment was conducted online in May 2024 using a randomized controlled trial format. The flowchart of the randomized controlled trial is shown in Fig. 1. Participants were recruited through Lancers, a Japanese crowdsourcing service. Initially, 340 individuals completed a pre-survey. From this pool, the study focused on 125 office workers aged 25 to 59 with at least a junior college education, as this demographic was deemed more likely to experience early adoption of AI feedback in their work environment. These 125 participants were stratified by gender and age group, then randomly assigned within each stratum to one of two chatbot groups using an R script. All 125 individuals were invited to participate in the main survey, and 102 completed it (54 in the positive feedback group and 48 in the negative feedback group), a response rate of 81.6%.
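The stratified randomization described above can be sketched as follows. The authors used an R script whose details are not reported; this Python version only illustrates one common approach (shuffle each gender × age-group stratum, then alternate arms), and the field names, age bands, and seed are hypothetical.

```python
import random
from collections import defaultdict

def stratified_assign(participants, arms=("positive", "negative"), seed=2024):
    """Assign participants to arms within gender x age-group strata.

    participants: list of dicts with hypothetical keys 'id', 'gender', 'age_group'.
    Returns {participant_id: arm}. Within every stratum the arm sizes differ by at most 1.
    """
    rng = random.Random(seed)  # fixed seed so the allocation is reproducible
    strata = defaultdict(list)
    for p in participants:
        strata[(p["gender"], p["age_group"])].append(p["id"])

    assignment = {}
    for key in sorted(strata):          # deterministic stratum order
        ids = strata[key][:]
        rng.shuffle(ids)                # random order within the stratum
        offset = rng.randrange(len(arms))  # randomize which arm gets the extra person in odd strata
        for i, pid in enumerate(ids):
            assignment[pid] = arms[(i + offset) % len(arms)]
    return assignment

# Usage with synthetic participants (not the study's data)
people = [{"id": i, "gender": "F" if i % 2 else "M",
           "age_group": ("25-34", "35-44", "45-59")[i % 3]} for i in range(125)]
groups = stratified_assign(people)
print(sum(arm == "positive" for arm in groups.values()), "assigned to positive feedback")
```

Alternating within shuffled strata keeps each stratum balanced by construction, which simple coin-flip assignment would not guarantee in a sample of 125.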

Table 1 presents the demographic and professional characteristics of participants in each feedback group. The positive and negative feedback groups showed similar age distributions, with mean ages of 41.2 and 42.2 years, respectively. In both groups, over half of the participants held staff-level positions. Regarding employment status, while the majority were full-time employees, approximately 30% were self-employed in each group. The detailed characteristics of self-employed workers and their implications for the analysis are presented in Appendix 3 of the Supplementary Materials. The average household income was slightly higher in the negative feedback group (6.9 million yen) compared to the positive feedback group (5.8 million yen). Similarly, the negative feedback group reported higher average weekly working hours (40.6 h) than the positive feedback group (35.5 h), both approximating typical full-time schedules. Notably, experience with AI chatbots was limited across both groups, with over 60% of participants in each group reporting either “never using” or “using only a few times a year.” Overall, while some minor differences exist, the two groups appear largely comparable in terms of key demographic and professional characteristics.

To assess the equivalence of the two groups across key variables, we conducted statistical comparisons. Categorical variables (i.e., gender, job position, employment status, and AI chatbot usage) were analyzed using chi-square tests, while continuous variables (i.e., age, household income, and weekly working hours) were compared using independent samples t-tests. No significant differences were observed between the groups on any of these variables (all p > .05). These results suggest that the randomization process was effective in creating comparable groups, thus minimizing potential confounding effects in subsequent analyses. With group equivalence established, we proceeded to examine the effects of the experimental manipulation.

Sample size and power analysis

The sample size was determined using G*Power (3.1.9.7) for a multiple regression analysis with seven explanatory variables. We calculated the required number of respondents to achieve 80% power at a 5% error probability, assuming a medium effect size (f2 = 0.15), which indicated that 103 respondents were necessary. This closely aligns with the actual number of participants in the experiment, which was 102. Although meta-analyses have often reported large effect sizes for feedback9, we judged that our conservative assumption of a medium effect size was reasonable, considering that the feedback provider in this study was an AI chatbot rather than a human.
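The power calculation above can be written out as follows, assuming G*Power’s “Linear multiple regression: Fixed model, R² deviation from zero” test (the text does not name the exact test family). N is the smallest sample size for which the noncentral F distribution F′ exceeds the critical central F value with probability at least 0.80.

```latex
\[
f^2 = \frac{R^2}{1 - R^2} = 0.15 \quad\Longrightarrow\quad R^2 = \frac{0.15}{1.15} \approx 0.13
\]
\[
\lambda = f^2 N, \qquad df_1 = u = 7, \qquad df_2 = N - u - 1
\]
\[
1 - \beta = \Pr\!\left( F'_{df_1,\,df_2,\,\lambda} > F^{\mathrm{crit}}_{1-\alpha;\,df_1,\,df_2} \right) \ge 0.80, \qquad \alpha = .05
\]
```

At the reported N = 103, this gives λ = 0.15 × 103 = 15.45 and df₂ = 95.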

Measures

Occupational self-efficacy

Participants’ occupational self-efficacy was measured in both the pre- and post-surveys using the Occupational Self-Efficacy Scale7. This scale includes items such as “I can remain calm when facing difficulties in my job because I can rely on my abilities” and “Whatever comes my way in my job, I can usually handle it.” The Japanese translation was verified through back-translation, which was carried out without the original translator’s involvement. The translation was then adjusted until a native English speaker confirmed that it conveyed the same meaning as the original. Participants responded on a 5-point Likert scale ranging from 1 (Not at all true) to 5 (Very true). The scale demonstrated high internal consistency in both the pre- and post-surveys, with Cronbach’s alpha coefficients of 0.85 and 0.90, respectively.
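Cronbach’s alpha, reported above for the occupational self-efficacy scale (and below for the two-item support subscales), follows the standard formula alpha = k/(k - 1) × (1 - sum of item variances / variance of the total score). A minimal Python sketch, assuming complete responses (no missing values) and sample variances; the toy scores are made up.

```python
from statistics import variance

def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of item-score columns, one list per item, with respondents aligned
    across columns. Assumes complete data and non-constant total scores.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]      # each respondent's scale total
    item_var = sum(variance(col) for col in items)        # sum of item sample variances
    return k / (k - 1) * (1 - item_var / variance(totals))

# Toy example: two items, three respondents
print(round(cronbach_alpha([[1, 2, 3], [1, 3, 2]]), 3))  # 0.667
```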

Workplace social support

The short version of the Workplace Social Support Scale19 was employed in the pre-survey to assess three dimensions of social support in the workplace: informational, instrumental, and emotional support. Each dimension was measured using two items. Participants were prompted with the question, “To what extent do you actually receive the following types of support from your supervisors and colleagues at work?” followed by support-related items. Responses were recorded on a 5-point Likert scale, ranging from 1 (Hardly any) to 5 (Very much). Representative items for each dimension include: “They give you advice to solve problems” (informational support), “They help you handle tasks together” (instrumental support), and “They understand and validate you” (emotional support). The scale demonstrated high internal consistency across all three dimensions, with Cronbach’s alpha coefficients of 0.94, 0.86, and 0.86 for informational, instrumental, and emotional support, respectively.

AI chatbot

Two distinct AI chatbots were developed using OpenAI’s GPT-4o to provide contrasting feedback styles. The positive feedback chatbot was designed to emphasize participants’ strengths and accomplishments, framing feedback and guiding conversations in an affirming manner. Conversely, the negative feedback chatbot was designed to focus on participants’ challenges and areas for improvement, structuring feedback and steering dialogues toward growth opportunities. Each chatbot was configured with its own prompts reflecting its designated feedback style and was implemented on a separate webpage using OpenAI’s API. All prompts used in this study are documented in Appendix 1 of the Supplementary Materials.
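How a single model can serve two feedback conditions through prompts alone can be illustrated with a request body in the message format of OpenAI’s Chat Completions API. This is a sketch under assumptions: the study does not say which endpoint it called, and the system prompts below are hypothetical placeholders, not the study’s prompts (those are in Appendix 1).

```python
import json

# Hypothetical placeholder prompts; NOT the prompts used in the study.
SYSTEM_PROMPTS = {
    "positive": ("You are a workplace feedback coach. Ask about a task the user completed "
                 "today or yesterday, then focus on their strengths and accomplishments."),
    "negative": ("You are a workplace feedback coach. Ask about a task the user completed "
                 "today or yesterday, then focus on their challenges and areas for improvement."),
}

def build_chat_request(condition, history, model="gpt-4o"):
    """Return a Chat Completions-style request body for one feedback condition.

    history: list of {"role": "user" | "assistant", "content": str} turns so far.
    Only the system message differs between conditions.
    """
    if condition not in SYSTEM_PROMPTS:
        raise ValueError(f"unknown condition: {condition!r}")
    messages = [{"role": "system", "content": SYSTEM_PROMPTS[condition]}]
    messages.extend(history)
    return {"model": model, "messages": messages}

body = build_chat_request("negative", [{"role": "user", "content": "I finished a sales report today."}])
print(json.dumps(body, ensure_ascii=False, indent=2))
```

Such a body could be sent to the Chat Completions endpoint or passed as keyword arguments to the official client’s `chat.completions.create`; keeping the condition in the system message alone is what makes the style switch a configuration change rather than a code change.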

Experiment procedure

The study protocol was conducted online following procedures pre-approved by the ethics review board of Kyoto University Psychological Unit (approval number: 6-P-1). The protocol included pre- and post-surveys and AI-mediated feedback sessions. Participants were initially presented with the study description and consent form. In this instruction sheet, respondents were informed that the survey would be conducted with complete anonymity and that they would not face any disadvantages if they chose to discontinue participation or skip questions they did not wish to answer. Upon providing informed consent, they completed a 15-minute pre-survey. Eligible participants received an invitation to the feedback session the day after completing the pre-survey and were given a four-day window to engage in a 15- to 30-minute AI-mediated feedback session. All methods were performed in accordance with the relevant guidelines and regulations, including the Declaration of Helsinki.

During this session, an AI chatbot first inquired about specific work tasks the participant had completed on the same day or the day prior, before proceeding with the feedback protocol. Immediately following the feedback session, participants were directed to a webpage to complete a 10-minute post-survey questionnaire. Compensation was structured in two parts: 100 JPY was provided for completing the pre-survey, with an additional 900 JPY (approximately 6 USD) for those who completed both the feedback session and the post-survey.

Analytical procedure

The analysis proceeded as follows. First, a series of preliminary analyses was performed to confirm the quality of the data before the main analysis: Little’s Missing Completely At Random (MCAR) test, a Common Method Variance (CMV) assessment, and convergent and discriminant validity tests to ensure that the indicators of each variable converge while the variables remain sufficiently distinct from one another. These procedures help ensure the reliability of the main analysis.

Next, a manipulation check was conducted. Because the two types of feedback session took place directly between participants and the AI chatbots, we analyzed participants’ ratings of the session content in the post-experiment survey to confirm that the intended type of session had actually been delivered.

Following this, descriptive statistics, including means, standard deviations, and correlations, were examined before the main analysis. All analyses were conducted in R (version 4.3.3). The main analysis employed mixed-effects models fitted with the lmer function from the lme4 package. The models reflected the actual response structure, in which Level 1 responses (pre- and post-measurements) were nested within Level 2 units (individuals); individual differences were modeled as random intercepts, separately from the fixed effects. Results from alternative analytical approaches, such as ANOVA, are reported in Appendix 4 as robustness checks for the main analysis.
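The nesting described above (two measurements per participant) implies a long-format dataset with one row per measurement occasion. A minimal Python sketch of that reshaping, which also applies the grand-mean centering of social support described for the main models; all field names are hypothetical.

```python
from statistics import mean

def to_long(rows):
    """Reshape one-row-per-participant data into one row per measurement occasion.

    rows: dicts with hypothetical keys 'id', 'chat' (1 = positive, 0 = negative),
    'support', 'ose_pre', 'ose_post'.
    """
    grand_mean = mean(r["support"] for r in rows)  # person-level (Level 2) grand mean
    long_rows = []
    for r in rows:
        for time, col in ((0, "ose_pre"), (1, "ose_post")):
            long_rows.append({
                "id": r["id"],                           # Level 2 cluster (random intercept)
                "time": time,                            # 0 = before chat, 1 = after chat
                "chat": r["chat"],                       # constant within a participant
                "support_c": r["support"] - grand_mean,  # grand-mean-centered support
                "ose": r[col],                           # Level 1 outcome
            })
    return long_rows

wide = [{"id": 1, "chat": 1, "support": 4.0, "ose_pre": 3.0, "ose_post": 3.5},
        {"id": 2, "chat": 0, "support": 2.0, "ose_pre": 3.2, "ose_post": 3.1}]
for row in to_long(wide):
    print(row)
```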

To analyze the moderating effects of the three types of social support, we set up three separate models, one for each type. Including all three types in a single model would be technically possible, but, as the descriptive statistics and preliminary analyses show, the three types are correlated strongly enough to raise concerns about multicollinearity, even though convergent and discriminant validity were largely supported. We therefore analyzed three separate models and interpreted the results accordingly. Appendix 5 explains the rationale for this choice and briefly reports the results of a single combined model for comparison.
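One standard way to quantify the multicollinearity concern is the variance inflation factor (VIF): for standardized predictors, the VIFs are the diagonal entries of the inverse of the predictors’ correlation matrix. A minimal Python sketch using Gauss-Jordan inversion; only the informational/instrumental correlation (r = .82, Table 2) is used here, because a full three-predictor example would need correlations not quoted in the text.

```python
def invert(matrix):
    """Invert a square matrix with Gauss-Jordan elimination and partial pivoting."""
    n = len(matrix)
    a = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [x / p for x in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [x - f * y for x, y in zip(a[r], a[col])]
    return [row[n:] for row in a]

def vifs(corr):
    """VIF of each predictor = corresponding diagonal element of the inverse correlation matrix."""
    inv = invert(corr)
    return [inv[i][i] for i in range(len(corr))]

# Informational vs. instrumental support, r = .82 (two-predictor case)
print([round(v, 2) for v in vifs([[1.0, 0.82], [0.82, 1.0]])])  # [3.05, 3.05]
```

In the two-predictor case this reduces to 1 / (1 - r²); interaction terms in the actual models would change the picture, so this is only a rough gauge.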

Results

Preliminary analyses

To ensure the appropriateness of the data for the main analysis, we conducted a series of preliminary analyses. First, we checked whether the missing data patterns were random by performing Little’s MCAR (Missing Completely At Random) test. The results indicated that the missing data could be considered completely random (χ2(120) = 135.0, p = .16). Next, we examined the potential for Common Method Variance (CMV) by conducting Harman’s single-factor test. The results showed that the first factor explained 29.96% of the total variance, well below the 50% threshold, indicating that CMV was not a significant concern in this dataset.
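Harman’s single-factor test is often operationalized as the share of total variance captured by the first unrotated principal component of all items. The sketch below assumes that PCA variant (the text does not state whether PCA or an unrotated factor analysis was used). Because the trace of a correlation matrix equals the number of items k, the share is the largest eigenvalue divided by k, found here by power iteration.

```python
import math

def pearson(x, y):
    """Pearson correlation; assumes neither column is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def first_component_share(items, iterations=500):
    """Share of total variance explained by the first principal component.

    items: list of item-score columns (one list per item, respondents aligned).
    """
    k = len(items)
    corr = [[pearson(items[i], items[j]) for j in range(k)] for i in range(k)]
    # Non-uniform start so we do not begin orthogonal to the leading eigenvector
    # (e.g., with a negatively correlated item pair).
    v = [1.0 + 0.1 * i for i in range(k)]
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient of the converged unit vector = largest eigenvalue
    eig = sum(v[i] * sum(corr[i][j] * v[j] for j in range(k)) for i in range(k))
    return eig / k

# Two toy items correlated at r = .8: eigenvalues 1.8 and 0.2, so the share is 0.9
print(round(first_component_share([[1, 2, 3, 4, 5], [2, 1, 4, 3, 5]]), 3))  # 0.9
```

Applied to all questionnaire items, a share below 0.50 is the conventional signal that a single common-method factor does not dominate.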

Next, we assessed the convergent and discriminant validity of the variables used in our analysis. Because occupational self-efficacy was measured twice (before and after the intervention) while social support was measured only before, we based our validation on the pre-intervention data. To evaluate convergent validity, we performed a confirmatory factor analysis, examining the factor loadings of each item, Composite Reliability (CR), and Average Variance Extracted (AVE). For occupational self-efficacy, although some factor loadings were slightly below the ideal threshold of 0.70 (ranging from 0.54 to 0.83), the CR was 0.85 and the AVE was 0.50. Because these values met the conventional criteria (CR ≥ 0.70, AVE ≥ 0.50), we retained all original items for the main analysis. Informational support had high factor loadings of 0.95 for both items, with a CR of 0.95 and an AVE of 0.90. Instrumental support showed strong factor loadings of 0.87 to 0.89, with a CR of 0.87 and an AVE of 0.77. Emotional support also had high factor loadings of 0.83 to 0.91, with a CR of 0.86 and an AVE of 0.76. Based on these results, we concluded that convergent validity was generally well supported for the variables used in our analysis.
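The CR and AVE values above can be reproduced from the reported standardized loadings with the usual formulas, CR = (Σλ)² / ((Σλ)² + Σ(1 - λ²)) and AVE = Σλ² / n. A minimal Python sketch, assuming standardized loadings and uncorrelated errors; for the two-item support scales, the reported loading ranges give both loadings.

```python
def composite_reliability(loadings):
    """CR from standardized loadings: (sum of loadings)^2 / ((sum)^2 + sum of error variances)."""
    s = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)  # error variance of each standardized item
    return s ** 2 / (s ** 2 + error)

def average_variance_extracted(loadings):
    """AVE: mean squared standardized loading."""
    return sum(l ** 2 for l in loadings) / len(loadings)

# Loadings for the two-item social support scales as reported in the text
for name, lam in [("informational", [0.95, 0.95]),
                  ("instrumental", [0.87, 0.89]),
                  ("emotional", [0.83, 0.91])]:
    print(name, f"CR={composite_reliability(lam):.2f}", f"AVE={average_variance_extracted(lam):.2f}")
# informational CR=0.95 AVE=0.90
# instrumental CR=0.87 AVE=0.77
# emotional CR=0.86 AVE=0.76
```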

We assessed discriminant validity using the Fornell–Larcker criterion, which compares the square root of each construct’s AVE with its correlations with the other constructs. Discriminant validity was confirmed for all constructs except for the pair of informational and instrumental support. The square root of the AVE for instrumental support did not exceed its correlation with informational support, indicating a potential discriminant validity issue between these two forms of support. The high correlation of 0.82 between informational and instrumental support further highlights their close relationship. However, the square root of the AVE for informational support did exceed its correlation with instrumental support, suggesting that, while closely related, they are not identical constructs. Moreover, discriminant validity was confirmed for emotional support, which is central to our hypothesis. Given these findings, we retained the three-category classification of social support (informational, instrumental, and emotional) used in previous research, while explicitly acknowledging the potential limitation in discriminant validity between informational and instrumental support. This approach maintains consistency with the existing literature while transparently addressing the complexities in our data.
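The Fornell–Larcker comparison can be expressed as a small check: for each pair of constructs, test whether each construct’s √AVE exceeds the absolute inter-construct correlation. Because √AVE increases with AVE, whenever the check fails for one construct in a pair, it also fails for a partner with a smaller AVE. A minimal Python sketch; the values in the usage line are made up for illustration.

```python
import math

def fornell_larcker(ave, correlations):
    """Pairwise Fornell-Larcker check.

    ave: {construct: AVE}; correlations: {(a, b): correlation between a and b}.
    Returns {(a, b): (sqrt(AVE_a) > |r|, sqrt(AVE_b) > |r|)}.
    """
    return {
        (a, b): (math.sqrt(ave[a]) > abs(r), math.sqrt(ave[b]) > abs(r))
        for (a, b), r in correlations.items()
    }

# Illustrative, made-up values (not the study's estimates)
print(fornell_larcker({"A": 0.81, "B": 0.49}, {("A", "B"): 0.80}))
# {('A', 'B'): (True, False)}
```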

Finally, to check that the assumptions of the mixed-effects models were satisfied, we examined the residuals of the models used in the main analysis for normality, homoscedasticity, and linearity. The Shapiro-Wilk test indicated that the residuals of Model 2 (instrumental support) and Model 3 (emotional support) were consistent with normality (Model 2: W = 0.99, p = .094; Model 3: W = 0.99, p = .051), whereas those of Model 1 (informational support) were not (W = 0.98, p = .029). To examine Model 1’s residuals further, we inspected its Q-Q plot. While the central points closely followed a straight line, deviations were observed at the tails, so the results of Model 1 should be interpreted with caution. Nevertheless, because the primary hypothesis is tested with Model 3 (emotional support), we concluded that this deviation does not substantially affect the overall interpretation of the analysis. We also plotted residuals against predicted values to assess homoscedasticity and linearity. Although minor bias was detected in all three models, no patterns emerged that would critically undermine the validity of the results. Q-Q plots for all models are presented in Figure S1 (Supplementary Materials, Appendix 2).

As a result of the preliminary analyses, while there was an issue regarding the normality of residuals in Model 1, we conclude that the assumptions required for the main analysis were satisfied.

Manipulation check

To verify the effectiveness of our experimental manipulation—focusing either on challenges and areas for improvement or on strengths and accomplishments—we conducted a manipulation check. In the post-survey, participants were asked to evaluate the session content on a scale ranging from “1 = Mainly discussed my issues and areas to improve” to “7 = Mainly discussed my strengths and what I did well.” Fig. 2 illustrates the distribution of participants’ responses.

The manipulation check results confirmed the success of our experimental design. The positive feedback group reported an average score of 5.43 (SD = 1.46), indicating that those participants perceived the session as primarily focusing on their strengths and accomplishments. Conversely, the negative feedback group reported an average score of 2.65 (SD = 1.77), suggesting a perceived focus on knowledge gaps and challenges. A t-test revealed a statistically significant difference between the two groups (t(91) = − 8.59, p < .001), with a large effect size (Cohen’s d = − 1.73, 95% CI [− 2.19, − 1.26]). These results provide strong evidence that our manipulation effectively created distinct feedback experiences for the two groups, as intended.
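The manipulation-check statistics can be checked against the group summaries. A minimal Python sketch, assuming group sizes of 48 (negative) and 54 (positive) from the Participants section. The text reports only “a t-test”, but t = -8.59 with df ≈ 91 is what Welch’s unequal-variance t-test yields for these summaries. Cohen’s d here uses the pooled SD and comes out near -1.72, close to the reported -1.73; the small gap plausibly reflects rounding of the reported means and SDs or a different pooling choice.

```python
import math

def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom from summary stats."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

def cohens_d(m1, s1, n1, m2, s2, n2):
    """Cohen's d using the pooled standard deviation."""
    pooled = math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (m1 - m2) / pooled

# Negative group first, so negative values mean "lower (more improvement-focused) than positive"
neg = (2.65, 1.77, 48)
pos = (5.43, 1.46, 54)
t, df = welch_t(*neg, *pos)
d = cohens_d(*neg, *pos)
print(f"t({df:.0f}) = {t:.2f}, d = {d:.2f}")  # t(91) = -8.59, d = -1.72
```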

Mean/SD/correlation

Table 2 presents the descriptive statistics and correlation coefficients for the main variables used in this study. A strong positive correlation (r = .82) was observed between informational and instrumental support. Additionally, emotional support showed a moderate positive correlation with occupational self-efficacy (pre: r = .35, post: r = .46).

Enhancement of occupational self-efficacy

We conducted mixed-effects model analyses to examine how social support and AI chatbot feedback jointly affected occupational self-efficacy. Social support, chat type, and time point (pre-/post-chat) were included as fixed effects, with random intercepts for participants to account for individual differences. Time point was dummy-coded as 0 (before the chat) and 1 (after the chat), and chat type as 0 (negative feedback) and 1 (positive feedback). The three types of social support (informational, instrumental, and emotional) were analyzed in separate models, and each social support variable was grand-mean centered. Table 3 presents the results.
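Written out, one plausible form of each model is given below. It assumes the full factorial fixed-effects structure implied by the two- and three-way interactions reported in this section. The notation is ours, not the authors':

- $Y_{ti}$ is the occupational self-efficacy of participant $i$ at time point $t$;
- $\text{Time}_{ti} \in \{0,1\}$ codes pre/post;
- $\text{Chat}_i \in \{0,1\}$ codes negative/positive feedback;
- $\text{SS}^{c}_i$ is the grand-mean-centered score for the model's type of social support;
- $u_i$ is the participant-level random intercept.

```latex
\begin{aligned}
Y_{ti} ={}& \beta_0 + \beta_1\,\text{Time}_{ti} + \beta_2\,\text{Chat}_i + \beta_3\,\text{SS}^{c}_i
  + \beta_4\,\text{Time}_{ti}\,\text{Chat}_i + \beta_5\,\text{Time}_{ti}\,\text{SS}^{c}_i \\
 &+ \beta_6\,\text{Chat}_i\,\text{SS}^{c}_i + \beta_7\,\text{Time}_{ti}\,\text{Chat}_i\,\text{SS}^{c}_i + u_i + \varepsilon_{ti},\\
u_i \sim{}& \mathcal{N}(0, \sigma_u^2), \qquad \varepsilon_{ti} \sim \mathcal{N}(0, \sigma_\varepsilon^2), \qquad
\text{SS}^{c}_i = \text{SS}_i - \overline{\text{SS}}.
\end{aligned}
```

Under this form, the 0/1 coding and centering give the time coefficients a direct reading. $\beta_1$ is the pre-to-post change for the negative-feedback group at average support. $\beta_1 + \beta_4$ is the corresponding change for the positive-feedback group.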

Our analysis revealed significant interactions between time point and chat type in all three social support models (Model 1: informational support, B = 0.24, p = .02; Model 2: instrumental support, B = 0.25, p = .01; Model 3: emotional support, B = 0.21, p = .03). To explore how the pre-to-post change differed by feedback style, we conducted simple slope analyses of the interaction between time point (pre/post) and feedback type in each model. Figure 3 illustrates these results.

Fig. 3 presents the simple slopes for the three models. In each panel, the x-axis represents the measurement time point (Pre vs. Post) and the y-axis shows the predicted occupational self-efficacy score. Solid lines indicate positive feedback and dashed lines indicate negative feedback. An upward slope indicates an increase in occupational self-efficacy from pre- to post-intervention, whereas a flat or downward slope indicates no improvement.

A similar pattern was observed in all three models. For participants who received positive feedback, occupational self-efficacy increased significantly in the informational (B = 0.29, p < .001), instrumental (B = 0.30, p < .001), and emotional support models (B = 0.29, p < .001). In contrast, for participants who received negative feedback, occupational self-efficacy did not increase significantly in any model: informational (B = 0.05, p = .48), instrumental (B = 0.05, p = .49), or emotional support (B = 0.08, p = .27). These results support Hypothesis 1, which proposed that positive feedback from AI would enhance occupational self-efficacy.
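Under the 0/1 coding described above, with support centered at its mean, the simple slopes follow directly from two coefficients:

- for the negative-feedback group, the simple slope of time is the time coefficient itself;
- for the positive-feedback group, it is that coefficient plus the time × chat-type interaction.

The sketch below checks this arithmetic against the values reported in the text. It assumes that the slopes are evaluated at mean support, so that the reported negative-feedback slopes equal the time main effects. The function name `time_slope` is ours and purely illustrative.

```python
# Values reported in the text, per social-support model: the simple slope
# for negative feedback (Chat = 0), the Time x Chat interaction, and the
# simple slope for positive feedback (Chat = 1).
reported = {
    "informational": {"neg_slope": 0.05, "time_x_chat": 0.24, "pos_slope": 0.29},
    "instrumental": {"neg_slope": 0.05, "time_x_chat": 0.25, "pos_slope": 0.30},
    "emotional": {"neg_slope": 0.08, "time_x_chat": 0.21, "pos_slope": 0.29},
}

def time_slope(b_time, b_time_x_chat, chat):
    """Pre-to-post change for a chat type (0 = negative, 1 = positive)
    at mean social support, assuming 0/1 dummy coding and centered support."""
    return b_time + b_time_x_chat * chat

for model, v in reported.items():
    implied = time_slope(v["neg_slope"], v["time_x_chat"], chat=1)
    print(f"{model:13s} implied positive slope = {implied:.2f} "
          f"(reported {v['pos_slope']:.2f})")
```

In all three models, the negative-feedback slope plus the interaction coefficient reproduces the reported positive-feedback slope.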

Next, we analyzed how AI-driven feedback interacts with social support from supervisors and colleagues. The analysis revealed a significant three-way interaction between time point, feedback type, and emotional support (B = −0.24, p = .03). To examine this interaction, we conducted a simple slope analysis for each combination of three factors:

- time point (coded 0 and 1);
- feedback type (coded 0 and 1);
- level of emotional support (high and low, defined as ±1 SD from the mean).

Figure 4 presents the results, with separate panels for low (left) and high (right) emotional support. Axes and lines are interpreted as in Fig. 3.
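For reference, the simple slopes of time in this analysis can be written out as below. The formulas assume a model that contains, in addition to the main effects, three interaction terms: Time × Chat ($\beta_4$), Time × SS ($\beta_5$), and Time × Chat × SS ($\beta_7$). $\beta_1$ is the Time main effect, Time and Chat are coded 0/1, $\text{SS}^{c}$ is the grand-mean-centered emotional support score, and $s$ is one standard deviation of support ("+" for high, "−" for low). The notation is ours, not the authors'.

```latex
\begin{aligned}
\text{slope}_{\text{Time}}(\text{Chat}, \text{SS}^{c})
  &= \beta_1 + \beta_4\,\text{Chat} + (\beta_5 + \beta_7\,\text{Chat})\,\text{SS}^{c},\\
\text{slope}_{\text{Time}}(0, \pm s) &= \beta_1 \pm \beta_5\, s,\\
\text{slope}_{\text{Time}}(1, \pm s) &= (\beta_1 + \beta_4) \pm (\beta_5 + \beta_7)\, s.
\end{aligned}
```

The reported three-way coefficient is negative ($\beta_7 = -0.24$). Under this form, the low-to-high support difference in the time slope is therefore smaller for positive feedback, $2(\beta_5 + \beta_7)s$, than for negative feedback, $2\beta_5 s$. This is consistent with support mattering for negative feedback but hardly at all for positive feedback.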

The simple slope analysis revealed distinct patterns depending on the level of emotional support from supervisors and colleagues. Under high emotional support (+1 SD), both positive feedback (B = 0.27, p < .001) and negative feedback (B = 0.27, p = .01) significantly increased occupational self-efficacy from pre- to post-measurement. In contrast, under low emotional support (−1 SD), only positive feedback significantly increased occupational self-efficacy (B = 0.31, p < .001), whereas negative feedback showed no significant effect (B = −0.12, p = .19). These findings align with Hypothesis 2, which posits that negative feedback from AI chatbots enhances occupational self-efficacy only when emotional support from supervisors and colleagues is high.

Finally, although not addressed by our initial hypotheses, a comparison of the three types of social support (informational, instrumental, and emotional) showed that only emotional support (Model 3) had a significant positive main effect on occupational self-efficacy (B = 0.23, p = .05). This suggests that the association between social support and occupational self-efficacy depends on the type of support, with emotional support in particular linked to higher occupational self-efficacy. This aligns with Cohen and Wills’ social support theory16, which proposes that social support can exert a direct (main) effect on well-being in addition to its buffering effect.

Discussion

This study aims to elucidate the dynamics of AI-human interactions in the workplace and their impact on occupational self-efficacy. Specifically, it investigates how positive and negative feedback from AI chatbots interacts with social support from supervisors and colleagues to influence occupational self-efficacy.

Our findings suggest that positive feedback from AI chatbots significantly enhances occupational self-efficacy. Previous research on feedback between humans has generally shown that positive feedback is more effective than negative feedback, constructive feedback, or even combinations of these. The present study suggests that these findings may also hold when the feedback provider is AI rather than a human, thereby extending this theoretical framework to the AI domain. The results also suggest that the pathway for increasing occupational self-efficacy described by Bandura’s Social Cognitive Theory may remain valid when the feedback provider shifts from human to AI. In other words, feedback from well-designed AI chatbots that recognize employees’ strengths and achievements can serve as verbal persuasion and as acknowledgment of mastery experiences. Such feedback can thus provide psychological benefits similar to those of human feedback.

Regarding negative feedback, our results revealed a more complex effect moderated by emotional support from supervisors and colleagues. When emotional support is low, negative feedback has no significant effect, suggesting that under these conditions it does not serve as useful information. Conversely, when emotional support is high, positive and negative feedback improve occupational self-efficacy to a similar degree. This finding aligns with Cohen & Wills’ buffering hypothesis16, which posits that social support can mitigate the negative effects of stressors. In our context, high emotional support appears to buffer the potentially detrimental effects of negative feedback, allowing it to contribute to occupational self-efficacy much as positive feedback does. These findings indicate that social support theories remain informative for future workplaces in which AI becomes a primary source of feedback. They also offer guidance on the role humans should play in such settings.

Additionally, the finding that negative feedback from AI can enhance occupational self-efficacy is particularly intriguing from the perspective of Bandura’s theory. This is because negative feedback in our study theoretically functioned as verbal persuasion from AI, that is, suggestions or exhortations intended to increase occupational self-efficacy4. Bandura notes two limits of this source:

- the effects of verbal persuasion are weaker than those of mastery experiences;
- merely telling people they can accomplish something has limited power to enhance self-efficacy4.

Indeed, Bandura cites earlier laboratory experiments in which verbal persuasion showed minimal effectiveness, and suggests that this could be attributed to issues of credibility4. Within Bandura’s framework, the fact that verbal persuasion from AI enhanced self-efficacy in our study suggests that participants may have perceived the AI as at least as credible as the human sources in those earlier experiments. The precise origin of this credibility lies beyond the scope of the present investigation. Possible factors include the absence of personal biases (such as “liking” or “disliking” the individual) and a general trust in large language models developed through extensive training. Future research is needed to test these possibilities.

Beyond credibility, other characteristics of AI are also important for understanding its impact in this field of research. Simply by altering prompts, AI can shift its feedback orientation from positive to negative. Moreover, once oriented in a particular direction, AI can consistently maintain either positivity or negativity, unlike humans, whose tone may shift with the emotional context or the flow of conversation. This study suggests that, with sufficient human support, even negatively oriented AI feedback can be harnessed as useful information that fosters individual growth, rather than being perceived as irrelevant or potentially stressful. This finding resonates with Edmondson’s concept of psychological safety in organizations25, which suggests that in supportive environments, failures and criticism are viewed as opportunities for growth rather than as threats. Such an environment may, in turn, enable recipients to respond constructively to negative feedback and turn potential setbacks into opportunities for professional development.

Our findings also bear on discussions of cultural differences in the effectiveness of various forms of social support. We examined three types of support: informational, instrumental, and emotional. We analyzed these types separately because previous cultural psychology research in the Japanese context indicates that emotional support generally has a positive effect. In contrast, informational or instrumental support can sometimes leave recipients with negative impressions, such as doubts about their own abilities. Notably, of the three support types, only emotional support showed both effects examined here:

- a significant main effect on occupational self-efficacy;
- a moderating effect on the relationship between AI feedback and occupational self-efficacy.

This highlights the pivotal role of emotional support in the workplace. The main effect of emotional support on occupational self-efficacy was not anticipated when the hypotheses were developed. It may be explained by an improvement in “physiological/affective states,” one of the four sources of self-efficacy identified by Bandura, brought about by receiving emotional support. That only emotional support had these effects suggests that it may contribute substantially to fostering psychological safety in the Japanese workplace context.

This study’s findings offer insights into the synergistic relationship between AI support and human support in the workplace. Because this study used a Japanese sample, our findings suggest an arrangement that may be effective, at least in Japan: supervisors and colleagues take primary responsibility for providing emotional support, while AI chatbots deliver objective, data-driven evaluations. This combination draws on the distinct strengths of human and artificial intelligence. AI chatbots could provide comprehensive feedback, covering both strengths and areas for improvement, based on quantitative analysis of work data. Humans, meanwhile, excel at offering the empathy and emotional support that form the foundation of a supportive work environment. Organizations can strategically integrate these complementary elements: human empathy and emotional support alongside AI’s capacity for objective, quantitative analysis. Doing so may help them cultivate an environment that optimizes employees’ occupational self-efficacy.

Our results point to a practical role for AI chatbots in complementing workplace managers. This role may matter most where providing sufficient feedback to team members is difficult because of limited managerial skills or resources. The ability of AI chatbots to operate continuously and deliver effective feedback frequently may represent a significant advance in management practice. It could change how organizations approach employee development and performance management. Nevertheless, to maintain a balanced perspective, it is important to consider the risks of greater reliance on AI technologies. As scholars have cautioned, overreliance on AI may, over the medium to long term, diminish individuals’ communication abilities26. Moreover, excessive dependence on AI-provided evaluations could reduce direct interpersonal interaction among coworkers, potentially undermining trust within teams. Recognizing these risks, organizations should use AI in ways that preserve human-centered communication skills and foster healthy workplace relationships.

Limitations

This research offers valuable insights into the reception of workplace feedback from AI chatbots in management contexts, an area expected to see rapid advancement due to progress in generative AI technology. It illuminates the interactions between human and AI support activities in these emerging scenarios. However, the study has several limitations, underscoring the need for continued research in this field.

A primary limitation of this study lies in its experimental design, which was based on a single feedback session on one task. This approach differs markedly from real workplaces, which are characterized by continuous feedback processes. Where AI is integrated into ongoing feedback systems, the long-term effects may differ substantially from those observed in this short-term experiment. Longitudinal studies are therefore needed to evaluate users’ perceptions of AI and the sustained impact and effectiveness of AI-driven feedback in workplace settings. The design of our experimental manipulation is a further limitation. We experimentally manipulated the AI feedback style (positive/negative) but did not manipulate workplace social support. Drawing causal conclusions about the effects of social support will therefore require further research in which social support conditions are actively manipulated.

In addition to these design limitations, another significant constraint concerns the positioning of the AI chatbot in this study. The AI chatbot was not part of the participants’ actual organizations, which may have influenced how the feedback was perceived. For instance, participants may not have fully engaged with the feedback or taken it seriously if they saw the chatbot as irrelevant to their performance evaluations or disconnected from their organizational context. Further research in real workplace environments is therefore needed to understand how feedback from AI systems is integrated into everyday operations.

Furthermore, the generalizability of the present findings may be limited because the experiment was conducted with a Japanese sample, and the results may depend on cultural and social conditions specific to Japan. Indeed, the effect of emotional support, one type of social support, was particularly pronounced in the present results, which may reflect the particular importance of emotional support in Japan. Previous cultural psychology research has indicated cross-cultural differences in the effects of social support20. Different outcomes may therefore emerge in samples outside Japan (e.g., North America), where social support might be effective regardless of its specific type. Conversely, regions culturally similar to Japan may yield results comparable to ours.

The emphasis on emotional support likely stems from distinctive features of Japanese workplaces. Job mobility is low, and relationships with colleagues and business partners remain stable over long periods. This fosters organizational cultures akin to clan cultures, which prioritize positive relationships and a sense of belonging27. This pattern relates theoretically to interdependence and collectivism. Indeed, clan culture has been shown to positively affect employee well-being in Portugal28, which is also considered a highly interdependent culture. Cultures with similarly high levels of interdependence may therefore produce results comparable to those observed here. Future research should replicate the present findings across diverse samples to examine their generalizability, spanning different cultures, industries, occupations, and work styles.

Building on these limitations, the division of roles between AI and humans also warrants further investigation. This research demonstrated that combining emotional support from humans with positive or negative feedback from AI chatbots can enhance occupational self-efficacy. However, it did not explore other possible combinations of AI and human roles. As AI technology advances, there have been reports that AI can sometimes be perceived as more compassionate than humans29. This suggests that AI could also provide emotional support. In that case, alternative and potentially more effective combinations of human and AI support might emerge. Moreover, research on AI-based emotional care remains limited, and many uncertainties remain, such as how its effectiveness might evolve over the long term. Continued investigation that takes ongoing advances in AI technology into account is therefore necessary.

Taken together, these limitations highlight the need for future research to explore a wider array of AI-human collaborations, utilizing longitudinal designs and diverse organizational data. Doing so will provide a more comprehensive understanding of how AI feedback systems function in complex workplace contexts and how they can be optimally integrated with human support.

Conclusion

Notwithstanding these limitations, our study provides valuable insights into how AI-driven feedback, in conjunction with human emotional support, can enhance occupational self-efficacy. As AI capabilities continue to advance at an unprecedented pace, the range and nature of its applications are anticipated to expand and transform dramatically. In this rapidly changing technological landscape, sustained research is essential to adapt to these emerging challenges and opportunities. Such efforts will be critical in understanding and managing the complex interactions between AI and human factors within organizational environments.

Data availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

1. Liu, B., Sun, M. & Wang, Z. Exploring the optimisation of enterprise performance management in the context of artificial intelligence. J. Educ. Human. Soc. Sci. 27, 39–45 (2024).

2. Terblanche, N., Molyn, J., de Haan, E. & Nilsson, V. O. Comparing artificial intelligence and human coaching goal attainment efficacy. PLoS ONE 17, e0270255 (2022).

3. DiGirolamo, J. A. The potential for artificial intelligence in coaching. In The Digital and AI Coaches’ Handbook (eds Passmore, J. et al.) 276–285 (Routledge, 2024). https://doi.org/10.4324/9781003383741-28.

4. Bandura, A. Self-efficacy: toward a unifying theory of behavioral change. Psychol. Rev. 84, 191–215 (1977).

5. Bandura, A. & Schunk, D. H. Cultivating competence, self-efficacy, and intrinsic interest through proximal self-motivation. J. Pers. Soc. Psychol. 41, 586–598 (1981).

6. Stajkovic, A. D. & Luthans, F. Self-efficacy and work-related performance: A meta-analysis. Psychol. Bull. 124, 240–261 (1998).

7. Rigotti, T., Schyns, B. & Mohr, G. A short version of the occupational self-efficacy scale: structural and construct validity across five countries. J. Career Assess. 16, 238–255 (2008).

8. Schyns, B. & von Collani, G. A new occupational self-efficacy scale and its relation to personality constructs and organizational variables. Eur. J. Work Organ. Psychol. 11, 219–241 (2002).

9. Sleiman, A. A., Sigurjonsdottir, S., Elnes, A., Gage, N. A. & Gravina, N. E. A quantitative review of performance feedback in organizational settings (1998–2018). J. Organ. Behav. Manag. 40, 303–332 (2020).

10. Finkelstein, S. R. & Fishbach, A. Tell me what I did wrong: experts seek and respond to negative feedback. J. Consum. Res. 39, 22–38 (2011).

11. Fishbach, A., Eyal, T. & Finkelstein, S. R. How positive and negative feedback motivate goal pursuit. Soc. Pers. Psychol. Compass 4, 517–530 (2010).

12. Eskreis-Winkler, L. & Fishbach, A. When praise—versus criticism—motivates goal pursuit. In Psychological Perspectives on Praise 47–54 (Routledge, 2020). https://doi.org/10.4324/9780429327667-8.

13. Heine, S. J. & Raineri, A. Self-improving motivations and collectivism: the case of Chileans. J. Cross Cult. Psychol. 40, 158–163 (2009).

14. Heine, S. J., Kitayama, S. & Lehman, D. R. Cultural differences in self-evaluation: Japanese readily accept negative self-relevant information. J. Cross Cult. Psychol. 32, 434–443 (2001).

15. Norasakkunkit, V. & Uchida, Y. Psychological consequences of postindustrial anomie on self and motivation among Japanese youth. J. Soc. Issues 67, 774–786 (2011).

16. Cohen, S. & Wills, T. A. Stress, social support, and the buffering hypothesis. Psychol. Bull. 98, 310–357 (1985).

17. Eisenberger, R., Huntington, R., Hutchison, S. & Sowa, D. Perceived organizational support. J. Appl. Psychol. 71, 500–507 (1986).

18. Uchino, B. N., Cacioppo, J. T. & Kiecolt-Glaser, J. K. The relationship between social support and physiological processes: A review with emphasis on underlying mechanisms and implications for health. Psychol. Bull. 119, 488–531 (1996).

19. Mori, K. & Miura, K. Development of the brief scale of social support for workers and its validity and reliability. Annu. Bull. Inst. Psychol. Stud. 9, 74–88 (2007).

20. Morling, B., Uchida, Y. & Frentrup, S. Social support in two cultures: everyday transactions in the US and empathic assurance in Japan. PLoS ONE 10, e0127737 (2015).

21. LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

22. Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017).

23. Brown, T. B. et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 33, 1877–1901 (2020).

24. Bandura, A. & Watts, R. Self-efficacy in changing societies. J. Cogn. Psychother. 10, 313–315 (1996).

25. Edmondson, A. Psychological safety and learning behavior in work teams. Adm. Sci. Q. 44, 350–383 (1999).

26. Turkle, S. Alone Together: Why We Expect More from Technology and Less from Each Other (Basic Books, 2011).

27. Watanabe, Y. et al. Person-organization fit in Japan: A longitudinal study of the effects of clan culture and interdependence on employee well-being. Curr. Psychol. 43, 15445–15458 (2023).

28. Rego, A. & Cunha, M. P. How individualism–collectivism orientations predict happiness in a collectivistic context. J. Happiness Stud. 10, 19–35 (2009).

29. Ovsyannikova, D., de Mello, V. O. & Inzlicht, M. Third-party evaluators perceive AI as more compassionate than expert humans. Commun. Psychol. 3, 4 (2025).

Author information

Contributions

Y.W. conceptualized the study, conducted the experiments, performed formal analysis, coordinated the overall research process, and wrote the original draft of the manuscript. M.N. contributed to the experimental design, provided specific input on statistical approaches, and critically revised the manuscript. K.T. contributed to the analysis design, offered insights into the implications of the results, and provided editorial feedback on the manuscript. Y.U. provided theoretical perspectives, supervised the literature review, and critically reviewed and revised the manuscript. All authors reviewed and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Watanabe, Y., Nakayama, M., Takemura, K. et al. AI feedback and workplace social support in enhancing occupational self-efficacy: a randomized controlled trial in Japan. Sci Rep 15, 11301 (2025). https://doi.org/10.1038/s41598-025-94985-0
