Abstract

In recent years, fine-grained image search has been an area of interest within the computer vision community. Many current works follow deep feature learning paradigms, which generally exploit the activations of pre-trained convolutional layers as representations and learn a low-dimensional embedding. This embedding is usually learned by defining loss functions based on local structure, such as triplet loss. However, triplet loss requires an expensive sampling strategy. Softmax-based loss (when the problem is treated as a classification task) trains faster than triplet loss but suffers from early saturation. To address both issues, a novel approach is proposed that enhances fine-grained representation learning by injecting noise into both the input and the features. At the input, noise is added to the image, and the goal is to reduce the distance between the L2-normalized features of the input image and its noisy version in the embedding space, relative to other instances. Concurrently, noise injection in the features acts as regularization, facilitating the acquisition of generalized features and mitigating model overfitting. The proposed approach is tested on three public datasets, Oxford Flowers-17, Cub-200-2011 and Cars-196, and achieves better retrieval results than existing methods. In addition, we tested our approach in the zero-shot setting and obtained favorable results compared to prior methods on Cars-196 and Cub-200-2011.

Introduction

Image retrieval has been studied for decades and has yielded significant results, yet it remains a challenging topic. The challenge is to obtain images that are visually related to the query sample by analyzing its visual characteristics, either through low-level semantics (such as shape, texture, and color) or through higher-level semantics (such as bag of visual words and neural codes)1. Prior content-based image retrieval (CBIR) methods work well for databases with large inter-class variance but less well for databases with small inter-class variance (see Fig. 1). However, real-life scenarios require fine-grained search, that is, locating images that correspond to the exact sub-category of the query. For instance, when a user queries an image (say, of a bike or a flower), the user needs to retrieve images in the same fine-level category as the query (i.e., images of the same bike model or the same flower species)2. In such a setting, retrieval becomes a complex and challenging task because it is arduous to distinguish between various models of cars or bikes, various species of flowers, or different breeds of dogs. The reason is that these categories share visual appearance at the global level and can only be distinguished by focusing on the critical parts of the object, such as the texture of a bird’s feathers, the color of a dog’s body, or the shape of a bike’s headlight. Therefore, the major challenge is to produce strong representations that capture these subtle details and separate nearly identical categories. Fine-grained search can be used for various purposes, including but not limited to surveillance, evaluation of climate change, intelligent retail, monitoring of biodiversity and ecosystems, and intelligent transportation.

Comparison of image database. {Dataset Source: corel_images [https://www.kaggle.com/datasets/elkamel/corel-images], Oxford Flowers-1714; https://www.robots.ox.ac.uk/~vgg/data/flowers/17/index.html and Cars-19615; https://www.kaggle.com/datasets/jessicali9530/stanford-cars-dataset?datasetId=30084&sortBy=dateCreated&select=cars_test}.

Learning effective descriptors plays an important role in the fine-grained image retrieval (FGIR) domain. When good features are exploited, a retrieval algorithm places similar images at the beginning of the ranked list and dissimilar ones at the end. Since the work of Xie et al.2, FGIR has drawn growing research attention in the computer vision community. Despite recent progress, FGIR is still an open problem for commercial and cataloging applications. With recent developments in deep learning3,4,5, methods built upon convolutional neural network (CNN) features have become the mainstream of fine-grained search. However, these features are learned from the coarse domain, and exploiting them directly is not effective because they cannot capture the fine details of the object. Instead, low-dimensional features are learned on top of the CNN features using the so-called deep metric learning (DML) approach, which aims to learn a low-dimensional metric space (or embedding space) in which similar items are close and dissimilar items are distant. Much work has been done in this area using contrastive loss6, triplet loss7,8, and quadruplet loss9,10, and most of it follows triplet loss. However, triplet loss relies on mining strategies7,8,11,12,13 to converge quickly, which requires extra computation. Softmax, on the other hand, generally converges faster than triplet loss but suffers from early saturation, converging to a poor local minimum. Furthermore, learning embeddings with larger networks risks overfitting on small datasets. In this paper, we aim to overcome these issues by proposing a noise-invariant feature learning approach. In this approach, the model is trained using auxiliary noise injected at two positions: the input layer and the final layer of the deep network. By introducing noise at the input layer, the model learns noise-invariant features by maximizing the similarity between an image instance and its corresponding noisy version.
Meanwhile, the noise added at the final layer, in conjunction with the softmax cross-entropy loss function, serves as a form of regularization by generating augmented features within the embedding space. In the former case, we employ a contrastive learning approach, where positives are formed by injecting noise into images, while other samples serve as negatives. In the latter case, the induced noise prevents softmax from suffering early saturation and allows for the continued propagation of gradients computed on noise-augmented features, thereby helping to reduce overfitting on small datasets.

The following are our key contributions:

1) We propose a Noise-invariant feature embedding learning method by optimizing it using softmax. This minimizes the costly sampling process in training DML, which is the main limitation of triplet loss. This also alleviates the problem of early saturation of softmax-based learning.

2) This is done by adding noise into both the input layer and the last layer of the deep network during the training process. The primary objective, grounded in contrastive learning, aims to maximize the similarity between an image instance and its corresponding noisy version. The secondary objective, relying on softmax cross-entropy, addresses augmented features generated within the embedding space, serving as a form of regularization.

3) Analysis on three fine-grained datasets illustrates that our approach achieves better results than state-of-the-art.

The rest of the paper is structured as follows: existing related works are explored in Section “Related Work”. The proposed approach is detailed in Section “Methodology”. Section “Experiments” discusses the experimental settings and analyzes the outcome results. Section “Conclusion” concludes the paper.

Related Work

Following the success of CNNs3, deep learning techniques have also driven research in image retrieval1. For instance, Babenko et al.16 employed a pretrained CNN, fine-tuned it on the target images, and used its responses for image representation and retrieval. In17, a feature aggregation method was presented that exploits sum pooling on deep features to generate compact descriptors. Further, Mohedano et al.18 exploited a bag-of-words model with CNN features, whereas in19, CNN features with VLAD were exploited for image search. Reference20 employed sum pooling in an aggregation method over convolutional features weighted across channels and spatial locations. In addition, Yang et al.21 presented an image retrieval technique based on a cross-batch reference feature learning strategy. Tolias et al.22 presented an approach that generates compact features by encoding multiple image regions with the activations of convolutional layers. Shakarami et al.23 presented a fusion-based descriptor for image retrieval that combines LBP, HOG, and CNN features. Although these methods work well at the coarse level, fine-grained images require fine-grained localization as an initial step. Some efforts have also been made on fine-grained image tasks using the deep learning paradigm. For instance, reference24 utilized convolutional kernels for both selecting and representing object parts. Watkins et al.25 suggested a two-stage learning scheme (localization learning followed by classification using the detected location) for fine-grained classification by exploring ResNet architectures. Zhou et al.26 explored the label hierarchy through rich relationships modeled as a bipartite graph with VGG-net4 for fine-grained classification. In27, the authors deployed a pre-trained VGG-164 for object localization and selected its deep descriptors by removing noise or background. Zheng et al.28 suggested the centralized ranking loss and trained the CNN with weakly supervised object localization.
Then they employed a CNN response map with the contours to precisely extract the features. Kumar et al.29 explored ResNet185 for the FGIR task, fine-tuning it on the target dataset and using its activations for retrieval. Yingying et al.30 proposed a relation-based convolutional descriptor that encodes subtle local features for FGIR. Further, some efforts have been made in the direction of embedding learning. For instance, reference6 used the pair-wise loss and reference7 used the triplet loss for learning image embeddings with a CNN as the backbone. Subsequently, Song et al.31 exploited every pair in the minibatch to obtain hard negatives. Sohn et al.32 extended the triplet loss7,8 into the N-pairs loss, which uses softmax cross-entropy loss on pair-wise similarity values within the batch. Song et al.33 presented the clustering loss for embedding learning by considering the global structure of the embedding space. Huang et al.10 exploited quadruplets and mined hard examples in an end-to-end network with a PDDM block for similarity evaluation. Zheng et al.34 proposed a softmax loss for FGIR with a normalize-scale layer. The Ranked List loss35 accounts for both positive and negative data within a batch, aiming to clearly differentiate between the positive and negative sets. Reinforcement-learning-based sampling was proposed in36. Roth et al.13 also explored policy-adapted sampling via reinforcement learning for triplet losses. Further, Zheng et al.37 explored hard negative mining via a generative approach. Duan et al.38 proposed a multilevel similarity-based metric loss that explores global, local and channel-level similarity. Sanakoyeu et al.39 explored a divide-and-conquer approach in which they iteratively divide the embedding to learn different features.

However, most of these methods rely on sampling strategies that make model training more computationally expensive. In contrast to the methods above, we adopt a simple strategy for learning fine-grained features via a noise-assisted learning approach, which strengthens the feature representation potential of the base network without requiring any sampling strategy.

Methodology

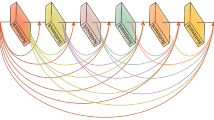

An outline of the proposed method is depicted in Fig. 2. First, a minibatch of images is randomly sampled and noise is added to each image. Pairs of noisy and natural images are then fed to a Siamese network, and a minibatch of natural images is fed to two standalone networks. The Siamese network is responsible for making the features noise-invariant, while the other two networks are responsible for learning class-discriminating features. All networks are jointly trained with the common goal of feature representation learning for fine-grained image retrieval.

Consider the training images \(\left\{ {x_{1} ,x_{2} , \ldots ,x_{m} } \right\} \in X\) with associated labels \(y_{i} \in Y\) in the minibatch. Let \(f_{p}\) and \(f_{n}\) be the L2-normalized feature embeddings of a positive instance \(x_{p}\) and a negative instance \(x_{n}\) with respect to instance \(x_{i}\), such that \(y_{i} = y_{p}\) and \(y_{i} \ne y_{n}\). These positives and negatives are selected from the minibatch during training. Let \(\left( { \cdot , \odot , \cdot } \right)\) denote the cosine similarity function, with \(\odot\) as the dot product. To enforce compactness among same-class instances and separation among different-class instances in the embedding space, the class discrimination loss inspired by40 can be given as:

where P(i) is the set of positive indices for the ith instance and N(i) is the set of negative indices for the ith instance.
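To make the roles of P(i) and N(i) concrete, the following is a minimal sketch of a class discrimination loss over a minibatch. It assumes one common supervised contrastive form, in which each positive pair is scored by a softmax over all other instances in the batch with a temperature \(\tau\); this normalization, the default `tau=0.1`, and all function names are illustrative assumptions rather than the exact formulation used in this work.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    """Dot product; equals cosine similarity for unit-norm vectors."""
    return sum(x * y for x, y in zip(a, b))


def class_discrimination_loss(features, labels, tau=0.1):
    """Assumed form: mean over i of
    -(1/|P(i)|) * sum_{p in P(i)} log(exp(f_i.f_p/tau) / sum_{k != i} exp(f_i.f_k/tau)).
    """
    feats = [l2_normalize(f) for f in features]
    m = len(feats)
    total, counted = 0.0, 0
    for i in range(m):
        # P(i): indices of other instances that share the label of instance i.
        positives = [p for p in range(m) if p != i and labels[p] == labels[i]]
        if not positives:
            continue  # an instance without positives contributes nothing
        # The denominator runs over P(i) and N(i) together.
        denom = sum(math.exp(dot(feats[i], feats[k]) / tau)
                    for k in range(m) if k != i)
        loss_i = 0.0
        for p in positives:
            loss_i -= math.log(math.exp(dot(feats[i], feats[p]) / tau) / denom)
        total += loss_i / len(positives)
        counted += 1
    return total / counted if counted else 0.0
```

Under this assumed form, the loss is small when same-class embeddings point in the same direction and grows when a negative is closer to the anchor than its positives.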

Noise-invariant feature learning for FGIR

To improve the feature representation capability of a network, noise can help the deep CNN learn better representations for fine-grained images. The noisy labels used in prior publications41,42 for feature learning require a large dataset with noisy labels for network training. Instead of using noisy labels, we optimize the network by injecting noise at the input layer and at a higher layer of the CNN. Specifically, at each training iteration, noise is sampled from a zero-mean Gaussian distribution and injected into the input images as well as into the activations of the last layer of the deep CNN (the output of the average pooling layer in our case; refer to Fig. 2).

Let \(\xi_{i}^{I} \in N\left( {0,\delta_{i}^{2} } \right)\) be the noise sampled from the zero mean Gaussian distribution. The noise is injected to each sample selected for minibatch as \(\tilde{x}_{i} = x_{i} + \xi_{i}^{I}\). Let \({\text{f}}_{\text{i}}\) and \({\widetilde{\text{f}}}_{\text{i}}\) be the L2 normalized feature embedding of \(x_{i}\) and \(\tilde{x}_{i}\). For all instances \(x_{i} \in X\), the objective is to maximize \(\left( {{\text{f}}_{{\text{i}}} \odot {\tilde{\text{f}}}_{{\text{i}}} } \right)\).

Given a Siamese network, we compute the probability of the noisy sample \(\tilde{x}_{i}\) being classified as the ith image as:

The loss40 associated with (2) is given as:

The Siamese network in this approach excels at learning embeddings for fine-grained representation by comparing and distinguishing pairs of inputs. In our approach, it is used to create a meaningful embedding space that brings similar images closer together. Here, the loss \(L_{N}\) reduces the distance between the \(\left( {f_{i} ,\tilde{f}_{i} } \right)\) pairs, which makes the features noise-invariant. It also minimizes \(\text{exp}\left({\text{f}}_{\text{j}}\odot {\widetilde{\text{f}}}_{\text{i}}\right)\) for all other instances, separating each noisy sample from the clean versions of the other instances.
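The noise-invariant objective can be illustrated with a short sketch. It assumes that the probability of a noisy sample being classified as its own clean instance is a softmax over the cosine similarities between the noisy embedding and every clean embedding in the minibatch, scaled by a temperature \(\tau\), and that the loss is the mean negative log of that probability. The temperature value, the noise scale, and the helper names are hypothetical choices for illustration only.

```python
import math
import random


def l2_normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    """Dot product; equals cosine similarity for unit-norm vectors."""
    return sum(x * y for x, y in zip(a, b))


def add_gaussian_noise(x, sigma, rng):
    """Return x + xi with xi drawn elementwise from N(0, sigma^2)."""
    return [xi + rng.gauss(0.0, sigma) for xi in x]


def noise_invariant_loss(clean_feats, noisy_feats, tau=0.1):
    """Mean over i of -log softmax_j(f_j . f~_i / tau) evaluated at j = i."""
    clean = [l2_normalize(f) for f in clean_feats]
    noisy = [l2_normalize(f) for f in noisy_feats]
    m = len(clean)
    total = 0.0
    for i in range(m):
        logits = [dot(clean[j], noisy[i]) / tau for j in range(m)]
        # Numerically stable log-sum-exp over all clean instances.
        mx = max(logits)
        log_denom = mx + math.log(sum(math.exp(l - mx) for l in logits))
        total += log_denom - logits[i]
    return total / m
```

In a real pipeline the noise would be added to the images and both versions passed through the shared network; here `add_gaussian_noise` can be applied directly to toy vectors to see that the loss stays near zero when each noisy embedding remains closest to its own clean embedding.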

We also adopt a multi-class classification task to further optimize the network. However, softmax suffers from early saturation due to overfitting on smaller datasets. To overcome this, we inject Gaussian noise into the output of the final layer of the network (average pooling in our case), so that the loss penalizes the noisy feature each time it yields a low score for the correct class.

Let \(\xi_{i}^{F} \in N\left( {0,\delta_{i}^{2} } \right)\) be the noise and let \(Z_{i}\) represent the normalized43 activations of the deep CNN’s last layer for input image i. The noisy response is then \(\tilde{Z}_{i} = Z_{i} + \xi_{i}^{F}\). Now, with a K-way softmax through the fully connected layer \(FZ = w_{z} \tilde{Z}_{i} + b_{z}\), the probability distribution of a model parameterized by \(\phi\) over m classes is given as:

With the goal of maximizing this probability (Eq. 4), the loss to minimize is:

The total loss is given as:

Minimizing \(L\) means minimizing all three losses \(L_{D}\), \(L_{N}\) and \(L_{S}\). First, Eq. (1) can be reformulated as

Now, examining \(L_{D}\), minimizing Eq. (7) requires maximizing \(\text{exp}\left({\text{f}}_{\text{i}}\odot {\text{f}}_{\text{p}}/\tau \right)\) and minimizing \(\text{exp}\left({\text{f}}_{\text{i}}\odot {\text{f}}_{\text{n}}/\tau \right)\). Given that the features are L2-normalized, maximizing \(\text{exp}\left({\text{f}}_{\text{i}}\odot {\text{f}}_{\text{p}}/\tau \right)\) amounts to maximizing the cosine similarity between \({\text{f}}_{\text{i}}\) and \({\text{f}}_{\text{p}}\), which aligns the features of the original sample with those of its positive counterparts. Similarly, minimizing \(\text{exp}\left({\text{f}}_{\text{i}}\odot {\text{f}}_{\text{n}}/\tau \right)\) amounts to decreasing the cosine similarity between \({\text{f}}_{\text{i}}\) and \({\text{f}}_{\text{n}}\), which separates the features of the original sample from those of its negative counterparts. This results in compactness of similar samples and separation of dissimilar samples in the embedding space. Turning to \(L_{N}\), minimizing it requires maximizing \(\text{exp}\left({\text{f}}_{\text{i}}\odot {\widetilde{\text{f}}}_{\text{i}}/\tau \right)\) and minimizing \(\text{exp}\left({\text{f}}_{\text{j}}\odot {\widetilde{\text{f}}}_{\text{i}}/\tau \right)\). Maximizing \(\text{exp}\left({\text{f}}_{\text{i}}\odot {\widetilde{\text{f}}}_{\text{i}}/\tau \right)\) aligns the features of the original sample \({\text{f}}_{\text{i}}\) with those of its noisy counterpart \({\widetilde{\text{f}}}_{\text{i}}\); the outcome is a noise-invariant feature embedding. Similarly, minimizing \(\text{exp}\left({\text{f}}_{\text{j}}\odot {\widetilde{\text{f}}}_{\text{i}}/\tau \right)\) separates \({\widetilde{\text{f}}}_{\text{i}}\) from the features of the other instances \({\text{f}}_{\text{j}}\), which further ensures separation of dissimilar samples in the embedding space. Finally, minimizing \(L_{S}\) further enhances the noise-invariance property and class separability.
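The classification branch behind \(L_{S}\) can be sketched in the same spirit: Gaussian noise is added to the pooled feature, the noisy feature passes through a fully connected layer, and the negative log of the softmax probability of the true class is taken as the loss. The sketch below assumes plain elementwise Gaussian noise and a single-sample loss; the function names and the toy weights used to exercise it are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    mx = max(logits)
    exps = [math.exp(l - mx) for l in logits]
    s = sum(exps)
    return [e / s for e in exps]


def linear(weights, bias, z):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * x for w, x in zip(row, z)) + b
            for row, b in zip(weights, bias)]


def noisy_softmax_loss(z, label, weights, bias, sigma, rng):
    """Cross-entropy on a noise-augmented feature z~ = z + xi, xi ~ N(0, sigma^2)."""
    z_noisy = [zi + rng.gauss(0.0, sigma) for zi in z]
    probs = softmax(linear(weights, bias, z_noisy))
    return -math.log(probs[label])
```

Because a fresh noise vector is drawn on every call, the same feature yields slightly different losses across iterations, which is the mechanism the text relies on to keep gradients flowing after the clean features are already classified confidently.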

The overall steps of our approach are summarized in Algorithm 1.

Training details

We used ResNet18 (R18)5 as the backbone. To get a good start, we initialize R18’s parameters with weights trained on ImageNet48. The dense layers’ weights are initialized as in5. The embedding size is set to 256, and Adam with a weight decay of \(10^{-4}\) is used for network training. The learning rate and mini-batch size are set to \(10^{-4}\) and 64, respectively. We first sample 8 classes randomly and then sample 8 instances per class. For each sample, a noisy sample is created for the Siamese network. We apply the following data augmentation operations: after randomly sampling a mini-batch of training images, each image is first resized so that its shorter side is 256 while preserving the aspect ratio, which maintains the original shape of the object. It is then cropped to 224 × 224 at a random location within the image. Next, it is rotated by an angle in the range (-15, 15) degrees (followed by a center crop to maintain the same spatial size). Finally, color augmentation is applied with probability 0.5, followed by horizontal flipping with probability 0.5. For color augmentation, we employ the method of44, which generates realistic-looking synthetic images. Using44, we randomly select one of the 10 generated images for each image of the minibatch. For \(L_{S}\) (Eq. 5), we use label smoothing for the target probabilities within the cross-entropy to better tackle overfitting. This entails setting the probability of the correct class to 1 – φ with φ = 0.1, while assigning φ/(cl-1) as the probability of every other class, where cl is the number of classes. In addition, the sampled noise is L2-normalized before being added to the feature. For inference, we first rescale the image so that its shorter side is 224 and sample 3 crops of the network input size (a center crop and a crop from each of the two shorter sides) from the image before feeding them to the network. The feature vectors of all crops are then averaged to produce the feature representation of the image.
For matching, we employ cosine similarity between the L2-normalized features of the gallery set and the query.
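Two of the training details above lend themselves to a short illustration: the class-balanced minibatch (8 classes with 8 instances each) and the label-smoothed target distribution. The sketch below assumes sampling without replacement within a class and skips classes that have too few images; both choices, and the function names, are assumptions made for illustration.

```python
import random


def sample_balanced_batch(labels, classes_per_batch=8, instances_per_class=8, rng=None):
    """Return dataset indices for one minibatch of classes_per_batch x instances_per_class."""
    rng = rng or random.Random()
    by_class = {}
    for idx, lab in enumerate(labels):
        by_class.setdefault(lab, []).append(idx)
    # Assumption: only classes with enough images are eligible.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= instances_per_class]
    chosen = rng.sample(eligible, classes_per_batch)
    batch = []
    for c in chosen:
        batch.extend(rng.sample(by_class[c], instances_per_class))
    return batch


def smoothed_targets(label, num_classes, phi=0.1):
    """Target distribution: 1 - phi for the true class, phi / (num_classes - 1) elsewhere."""
    off = phi / (num_classes - 1)
    return [1.0 - phi if k == label else off for k in range(num_classes)]
```

With the defaults, `sample_balanced_batch` returns 64 indices, matching the stated mini-batch size, and `smoothed_targets` always sums to one, so it can be used directly as the target of a cross-entropy loss.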

Experiments

This section first discusses the dataset settings and evaluation measures. We then report the FGIR results and analyze the effect of noise injection on retrieval performance. Finally, we test our approach in the context of zero-shot learning.

Datasets and evaluation setting

The experiments are conducted on two datasets, Oxford Flowers-1714 and Cars-19615. Oxford Flowers-17 consists of 17 fine-grained categories with 1360 flower images. Cars-196 consists of 196 fine-grained classes of car models with 16,185 images. Since Oxford Flowers-17 is a small dataset that contains 80 images per category, we conduct the experiment on five randomly selected splits of the dataset, and each split consists of three sets: training, gallery and query, as depicted in Table 1. As a result, there are 680, 425 and 255 images in the training, gallery and query sets, respectively. For the Cars-196 dataset, we conduct the experiment on the standard training/testing split, i.e., 8,144/8,041 images for training/testing. Note that retrieval is performed on the testing set by treating every image as a query, and the retrieved images are evaluated after excluding the query image itself. MATLAB and an NVIDIA Tesla K40c GPU are used to perform the experiments. To assess retrieval performance, we use Mean Average Precision (mAP) as described in27.
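The evaluation protocol described above, in which every test image acts as a query and is excluded from its own ranked list, can be sketched as follows. The sketch assumes the standard definition of average precision (mean of the precision values at the ranks of relevant items) and ranking by cosine similarity; the exact mAP variant of27 may differ, and the function names are illustrative.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    """Dot product; equals cosine similarity for unit-norm vectors."""
    return sum(x * y for x, y in zip(a, b))


def average_precision(ranked_labels, query_label):
    """Mean of precision@rank taken at each rank holding a relevant item."""
    hits, precision_sum = 0, 0.0
    for rank, lab in enumerate(ranked_labels, start=1):
        if lab == query_label:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(features, labels):
    """Treat every item as a query against all other items, then average the APs."""
    feats = [l2_normalize(f) for f in features]
    aps = []
    for q in range(len(feats)):
        # Exclude the query itself from the ranked list.
        candidates = [g for g in range(len(feats)) if g != q]
        candidates.sort(key=lambda g: dot(feats[q], feats[g]), reverse=True)
        aps.append(average_precision([labels[g] for g in candidates], labels[q]))
    return sum(aps) / len(aps)
```

For the Flowers-17 splits, where query and gallery sets are disjoint, the same `average_precision` helper applies with the gallery ranked for each query instead of the leave-one-out loop.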

Results and analysis

Results on Oxford Flowers-17 under FGIR setting

A comparative analysis of the proposed method against state-of-the-art methods is reported (as mAP) in Table 2. Handcrafted features perform poorly, with mAPs of 0.101 (LBP59) and 0.112 (HOG58), because they are not designed with subtle details in mind and therefore cannot distinguish the subtle differences in fine-grained images. Deep CNN descriptors, however, show a great improvement over handcrafted ones. For instance, pre-trained ResNet18 descriptors reach 0.513 mAP, an improvement of around +0.4 mAP over handcrafted features. With fine-tuning on the target dataset, performance is further enhanced, with mAPs of 0.877 (Yang et al.21) and 0.928 (Kumar et al.29). With 0.946 mAP, the proposed approach achieves better results than the others, which confirms the importance of noise insertion when training the network on small datasets. Further, mAP@K is depicted in Fig. 3, where our method gradually improves over fine-tuned R1829 as K increases.

Top k mAP comparison between29 and our approach.

Moreover, Tables 3 and 4 depict the category-wise performance on Flowers-17, with a comparative analysis against state-of-the-art methods. From the results, we can observe that the methods of45,46 and47 perform much better than HOG and LBP, and that29 further improves over these methods in 13 classes. Our method outperforms29 in thirteen classes.

Results on Cars-196 under FGIR setting

Further, we compare our method with the SOTA on Cars-196; the results are reported in Tables 5 and 6. Compared with the baselines in Table 5, our method achieves 80.2% mAP, which is 3.7 percentage points higher than the 76.5% of Kumar et al.29 and far ahead of LBP and HOG. This is mainly owing to the effective learning of image representations through intensive augmentation in the form of noise. Along with LBP (0.007 mAP) and HOG (0.010 mAP), the responses of the pretrained ResNet18 perform poorly, with an mAP of 0.041. This implies that, for a larger number of fine-grained classes (compared to Flowers-17), the pretrained ResNet18 is unable to distinguish them. The reason is that, through the ImageNet dataset48, it has learned to focus on the global relationships of the object rather than on its subtle details. Furthermore, in terms of top-1 and top-5 mAP, Table 6 shows that our method consistently outperforms SPOC17, CroW20, RMAC22, Wei et al.27 and Kumar et al.29, with 86.14% top-1 mAP and 81.62% top-5 mAP.

Ablation study

Effect of noise induced on retrieval performance

We conduct experiments on Cars-196 to assess the impact of injected noise on retrieval performance. The findings, presented as mAP at Top-k, are shown in Table 7, where our full method performs well compared to the other settings, e.g., 86.14% (with all losses) vs. 84.98% \(({\text{with}}\;L_{N} \;{\text{and}}\;L_{D} )\) vs. 84.12% \(({\text{with}}\;L_{N} \;{\text{and}}\;L_{S} )\) and 82.61% \(({\text{with}}\;L_{D} )\) for Top-1 mAP. This indicates that including noise at both ends benefits the learning of generalizable features. Figure 4 further visualizes the performance under different settings.

Fine-grained recognition

In this ablation study, we analyze the effect of our approach on recognition accuracy. For this, we use the Cars-196 dataset and the standard protocol for training and testing. We set the minibatch size to 64, the learning rate to 0.0001, and the data augmentation as discussed in Section “Training details”. The results in terms of recognition accuracy are reported in Table 8, where we can see a boost in accuracy with our approach.

Zero shot learning

Next, we test the generalization of our method in the zero-shot setting, namely whether the proposed method helps to find discriminative features even for images of unseen classes. Following the settings in34, we conduct the experiment on the Cars-196 and Cub-200-201149 datasets, where the first half of the classes is used to train the network and the remaining half is used for testing. We conduct the zero-shot learning experiments using PyTorch with a maximum of 40 epochs. We implement our method on two base networks: ResNet18 (R18) and ResNet50 (R50). First, we analyze the effectiveness of the proposed method on Cub-200-2011 and Cars-196 using the following experimental setting: R18, embedding size = 512, learning rate = 0.002, gamma = 0.1 every 15 epochs, and batch_size = 240 with 12 samples per class. The results are reported in Table 9, where we can see that including \(L_{N}\) and \(L_{S}\) tends to increase retrieval performance. This supports the view that the noise in \(L_{N}\) helps to incorporate intra-class variance and that the noise in \(L_{S}\) serves as a form of regularization.
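The zero-shot protocol and the learning-rate schedule quoted above can be made concrete with a small sketch. It assumes that "first half of the classes" refers to the ordering of class identifiers and that the decay is a step schedule applied at epochs 15 and 30, in the manner of a step scheduler; both are assumptions, and the function names are illustrative.

```python
def zero_shot_split(labels):
    """Split sample indices so that train and test share no classes.

    Assumption: classes are ordered by their identifier and the first half trains.
    """
    classes = sorted(set(labels))
    half = len(classes) // 2
    train_classes = set(classes[:half])
    train_idx = [i for i, y in enumerate(labels) if y in train_classes]
    test_idx = [i for i, y in enumerate(labels) if y not in train_classes]
    return train_idx, test_idx


def step_lr(epoch, base_lr=0.002, gamma=0.1, step_size=15):
    """Learning rate at a given epoch under step decay: base_lr * gamma^(epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)
```

Over 40 epochs this schedule takes three values: the base rate for epochs 0 to 14, one tenth of it for epochs 15 to 29, and one hundredth of it from epoch 30 onward.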

Further, we analyze the effect of embedding size on retrieval performance (recall@k) which is depicted in Fig. 5, and effect of noise in LS on Cub-200-2011 with our approach is shown in Fig. 6. In Figs. 7 and 8, we additionally depict the retrieval results for a randomly picked query from each dataset.

Findings on Cars-196 dataset. The retrieved instance is indicated correctly by a green boundary box, and incorrectly by a red boundary box. Dataset Source: https://www.kaggle.com/datasets/jessicali9530/stanford-cars-dataset?datasetId=30084&sortBy=dateCreated&select=cars_test.

Findings on Cub-200-2011 dataset. The retrieved instance is indicated correctly by a green boundary box, and incorrectly by a red boundary box. Dataset Source: https://www.vision.caltech.edu/datasets/cub_200_2011/.

In Table 10, we can also see that our method achieves better results than baseline methods such as EPSHN50 and NormSoftmax51 (where EPSHN50 is based on a contrastive learning approach and NormSoftmax51 is based on a classification approach). For ResNet50 and ResNet101, we set the batch size to 144 with 24 samples per class. As per Table 10, our method consistently achieves better results than the SOTA on the Cars-196 and Cub-200-2011 datasets in terms of recall@k. However, a few methods perform better than the proposed method on Cub-200-2011, which can be seen as a limitation of our method on this small dataset. For the SOP31 dataset, our model consistently achieves better results than the others in Table 11. We can also see that, compared to the baseline methods50,51, the proposed method improves performance on all three datasets. This study confirms that our approach is able to generalize over unseen classes. We also show that, with the ResNet101 model, the proposed method improves even further.
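For reference, the recall@k measure used in these comparisons can be sketched as follows. The sketch assumes the definition that is common in deep metric learning: a query counts as a hit at k if at least one of its k nearest neighbours (by cosine similarity, query excluded) shares its class. The particular values of k are illustrative, as are the function names.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    """Dot product; equals cosine similarity for unit-norm vectors."""
    return sum(x * y for x, y in zip(a, b))


def recall_at_k(features, labels, ks=(1, 2, 4, 8)):
    """Fraction of queries with at least one same-class item among the top k neighbours."""
    feats = [l2_normalize(f) for f in features]
    n = len(feats)
    hits = {k: 0 for k in ks}
    for q in range(n):
        # Rank every other item by cosine similarity to the query.
        ranked = sorted((g for g in range(n) if g != q),
                        key=lambda g: dot(feats[q], feats[g]), reverse=True)
        for k in ks:
            if any(labels[g] == labels[q] for g in ranked[:k]):
                hits[k] += 1
    return {k: hits[k] / n for k in ks}
```

Unlike mAP, this measure ignores how many relevant items are retrieved and where they fall beyond the first hit, which is why it is typically reported at several values of k.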

Conclusion

In this paper, a noise-assisted feature learning approach for FGIR is proposed, which alleviates the expensive sampling process in triplet learning and the early saturation problem in softmax-based learning. The deep CNN is jointly trained with a multi-loss objective that covers class-discriminative learning as well as noise-invariant learning. The Oxford Flowers-17 and Cars-196 datasets are used to validate our approach, where it achieves significant gains over existing schemes. Under the zero-shot setting, we achieved competitive results on the Cars-196, Cub-200-2011 and SOP datasets. The proposed approach exhibits great potential and can be explored in various industrial applications such as clothing retrieval, face retrieval, biomedical image retrieval, and landmark retrieval. The main limitation of this work may be its training time compared to normal CNN training, which needs to be explored with larger networks. A second limitation might be that the loss of the proposed method primarily emphasizes a global perspective. This could be addressed by incorporating local attention mechanisms to capture subtle features more effectively. In subsequent work, we plan to leverage various deep variants of CNNs and vision transformers to extend our approach to larger datasets. The applicability of these techniques can also be evaluated in the medical field, using both supervised and unsupervised learning techniques.

Data availability

All images used in Figures 1, 7, and 8 are sourced from publicly available datasets intended for research purposes. Therefore, permission for their use is not required. The data that support the findings of this study and publicly available datasets are available at https://www.robots.ox.ac.uk/~vgg/data/flowers/17/index.html; https://www.vision.caltech.edu/datasets/cub_200_2011/; https://www.kaggle.com/datasets/jessicali9530/stanford-cars-dataset?datasetId=30084&sortBy=dateCreated&select=cars_test.

References

Zhou, W., Li, H. & Tian, Q. Recent advance in content-based image retrieval: A literature survey (2017).

Xie, L., Wang, J., Zhang, B. & Tian, Q. Fine-grained image search. IEEE Trans. Multimed. 17, 636–647 (2015).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. In Adv. Neural Inf. Process. Syst. 1097–1105 (2012).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. In 3rd Int. Conf. Learn. Represent. ICLR 2015 - Conf. Track Proc. (2015).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 770–778 (2016).

Bell, S. & Bala, K. Learning visual similarity for product design with convolutional neural networks. In ACM Trans. Graph. (2015).

Wang, J., Song, Y., Leung, T., Rosenberg, C., Wang, J., Philbin, J., Chen, B. & Wu, Y. Learning fine-grained image similarity with deep ranking. In Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 1386–1393 (2014).

Schroff, F., Kalenichenko, D. & Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 815–823 (2015).

Chen, W., Chen, X., Zhang, J. & Huang, K. Beyond triplet loss: A deep quadruplet network for person re-identification. In Proc. - 30th IEEE Conf. Comput. Vis. Pattern Recognition 403–412 (CVPR, 2017).

Huang, C., Loy, C. C. & Tang, X. Local similarity-aware deep feature embedding. In Adv. Neural Inf. Process. Syst. 1270–1278 (2016).

Manmatha, R., Wu, C. Y., Smola, A. J. & Krahenbuhl, P. Sampling matters in deep embedding learning. In Proc. IEEE Int. Conf. Comput. Vis. 2840–2848 (2017).

Ge, W., Huang, W., Dong, D. & Scott, M. R. Deep metric learning with hierarchical triplet loss. In Proceedings of the European Conference on Computer Vision (ECCV) 269–285 (2018).

Roth, K., Milbich, T. & Ommer, B. PADS: Policy-adapted sampling for visual similarity learning. In Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 6567–6576 (2020).

Nilsback, M. E. & Zisserman, A. A visual vocabulary for flower classification. In Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 1447–1454 (2006).

Krause, J., Stark, M., Deng, J. & Fei-Fei, L. 3D object representations for fine-grained categorization. In Proc. IEEE Int. Conf. Comput. Vis. 554–561 (2013).

Babenko, A., Slesarev, A., Chigorin, A. & Lempitsky, V. Neural codes for image retrieval. In European Conference on Computer Vision 584–599 (2014).

Babenko, A. & Lempitsky, V. Aggregating local deep features for image retrieval. In Proc. IEEE Int. Conf. Comput. Vis. 1269–1277 (2015).

Mohedano, E., Mcguinness, K., O’Connor, N. E., Salvador, A., Marqués, F. & Giró-I-nieto, X. Bags of local convolutional features for scalable instance search. In ICMR 2016 - Proc. 2016 ACM Int. Conf. Multimed. Retr. 327–331 (2016).

Ng, J. Y. H., Yang, F. & Davis, L. S. Exploiting local features from deep networks for image retrieval. In IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. Work. 53–61 (2015).

Kalantidis, Y., Mellina, C. & Osindero, S. Cross-dimensional weighting for aggregated deep convolutional features. In European Conference on Computer Vision 685–701 (2016).

Yang, H. F., Lin, K. & Chen, C. S. Cross-batch reference learning for deep classification and retrieval. In MM 2016 - Proc. 2016 ACM Multimed. Conf. 1237–1246 (2016).

Tolias, G., Sicre, R. & Jégou, H. Particular object retrieval with integral max-pooling of CNN activations. In 4th Int. Conf. Learn. Represent. ICLR 2016 - Conf. Track Proc. (2016).

Shakarami, A. & Tarrah, H. An efficient image descriptor for image classification and CBIR. Optik 214, 164833 (2020).

Zhang, X., Xiong, H., Zhou, W., Lin, W. & Tian, Q. Picking deep filter responses for fine-grained image recognition. In Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 1134–1142 (2016).

Watkins, R., Pears, N. & Manandhar, S. Vehicle classification using ResNets, localisation and spatially-weighted pooling (2018).

Zhou, F. & Lin, Y. Fine-grained image classification by exploring bipartite-graph labels. In Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 1124–1133 (2016).

Wei, X. S., Luo, J. H., Wu, J. & Zhou, Z. H. Selective convolutional descriptor aggregation for fine-grained image retrieval. IEEE Trans. Image Process. 26, 2868–2881 (2017).

Zheng, X., Ji, R., Sun, X., Wu, Y., Huang, F. & Yang, Y. Centralized ranking loss with weakly supervised localization for fine-grained object retrieval. In IJCAI Int. Jt. Conf. Artif. Intell. 1226–1233 (2018).

Kumar, V., Tripathi, V. & Pant, B. Content based fine-grained image retrieval using convolutional neural network. In 2020 7th Int. Conf. Signal Process. Integr. Networks 1120–1125 (SPIN, 2020).

Zhu, Y., Cao, G., Yang, Z. & Lu, X. Learning relation-based features for fine-grained image retrieval. Pattern Recogn. 140, 109543 (2023).

Song, H. O., Xiang, Y., Jegelka, S. & Savarese, S. Deep metric learning via lifted structured feature embedding. In Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 4004–4012 (2016).

Sohn, K. Improved deep metric learning with multi-class N-pair loss objective. In Adv. Neural Inf. Process. Syst. 1857–1865 (2016).

Song, H. O., Jegelka, S., Rathod, V. & Murphy, K. Deep metric learning via facility location. In Proc. - 30th IEEE Conf. Comput. Vis. Pattern Recognition 2206–2214 (CVPR, 2017).

Zheng, X., Ji, R., Sun, X., Zhang, B., Wu, Y. & Huang, F. Towards optimal fine grained retrieval via decorrelated centralized loss with normalize-scale layer. In Proc. AAAI Conf. Artif. Intell. Vol. 33, 9291–9298 (2019).

Wang, X., Hua, Y., Kodirov, E., Hu, G., Garnier, R. & Robertson, N. M. Ranked list loss for deep metric learning. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 5207–5216 (2019).

Duan, Y., Chen, L., Lu, J. & Zhou, J. Deep embedding learning with discriminative sampling policy. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. 4964–4973 (2019).

Zheng, W., Lu, J. & Zhou, J. Hardness-aware deep metric learning. IEEE Trans. Pattern Anal. Mach. Intell. 43, 3214–3228. https://doi.org/10.1109/TPAMI.2020.2980231 (2021).

Duan, C. et al. Multilevel similarity-aware deep metric learning for fine-grained image retrieval. IEEE Trans. Industr. Inf. 19(8), 9173–9182. https://doi.org/10.1109/TII.2022.3227721 (2023).

Sanakoyeu, A., Ma, P., Tschernezki, V. & Ommer, B. Improving deep metric learning by divide and conquer. IEEE Trans. Pattern Anal. Mach. Intell. 44(11), 8306–8320 (2022).

Chen, T., Kornblith, S., Norouzi, M. & Hinton, G. A simple framework for contrastive learning of visual representations. In International Conference on Machine Learning 1597–1607 (PMLR, 2020).

Rodner, E., Simon, M., Fisher, R. B. & Denzler, J. Fine-grained recognition in the noisy wild: Sensitivity analysis of convolutional neural networks approaches. In Br. Mach. Vis. Conf. 2016 60.1–60.13 (BMVC, 2016).

Krause, J., Sapp, B., Howard, A., Zhou, H., Toshev, A., Duerig, T., Philbin, J. & Fei-Fei, L. The unreasonable effectiveness of noisy data for fine-grained recognition. In Lect. Notes Comput. Sci. (Including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics) 301–320 (2016).

Ba, J. L., Kiros, J. R. & Hinton, G. E. Layer normalization. arXiv preprint arXiv:1607.06450 (2016).

Afifi, M. & Brown, M. What else can fool deep learning? Addressing color constancy errors on deep neural network performance. In Proc. IEEE Int. Conf. Comput. Vis. 243–252 (2019).

Yang, J., Yu, K., Gong, Y. & Huang, T. Linear spatial pyramid matching using sparse coding for image classification. In Proc. IEEE Int. Conf. Comput. Vis. 1794–1801 (2009).

Gao, S., Tsang, I. W. H. & Ma, Y. Learning category-specific dictionary and shared dictionary for fine-grained image categorization. IEEE Trans. Image Process. 23, 623–634 (2014).

Ahmed, K. T., Ummesafi, S. & Iqbal, A. Content based image retrieval using image features information fusion. Inf. Fusion 51, 76–99 (2019).

Russakovsky, O. et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 115, 211–252 (2015).

Wah, C., Branson, S., Welinder, P., Perona, P. & Belongie, S. The caltech-ucsd birds-200-2011 dataset (2011).

Xuan, H., Stylianou, A. & Pless, R. Improved embeddings with easy positive triplet mining. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass, CO, USA 2474–2482 (2020).

Zhai, A. & Wu, H.-Y. Classification is a strong baseline for deep metric learning. arXiv [cs.CV]. http://arxiv.org/abs/1811.1264 (2018).

Zhao, J.-M. & Lian, Q.-S. Multi-centers SoftMax reciprocal average precision loss for deep metric learning. Neural Comput. Appl. 35(16), 11989–11999 (2023).

Yan, J., Luo, L., Deng, C. & Huang, H. Adaptive hierarchical similarity metric learning with noisy labels. IEEE Trans. Image Process. 32, 1245–1256 (2023).

Yang, B., Sun, H., Li, F. W., Chen, Z., Cai, J. & Song, C. HSE: Hybrid species embedding for deep metric learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision 11047–11057 (2023).

Chan, P. P., Li, S., Deng, J. & Yeung, D. S. Multi-proxy based deep metric learning. Inf. Sci. 643, 119120 (2023).

Jiang, X., Yao, Y., Dai, X., Shen, F., Nie, L. & Shen, H. T. Anti-collapse loss for deep metric learning. IEEE Trans. Multimed. (2024).

Yang, L., Wang, P. & Zhang, Y. Stop-gradient softmax loss for deep metric learning. In Proceedings of the AAAI Conference on Artificial Intelligence Vol. 37, 3164–3172 (2023).

Dalal, N. & Triggs, B. Histograms of oriented gradients for human detection. In Proc. - 2005 IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognition 886–893 (CVPR, 2005).

Ojala, T., Pietikäinen, M. & Mäenpää, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 24, 971–987 (2002).

Author information

Contributions

V.K.: writing of the original draft; V.T. and B.P.: supervision; P.S. and M.D.: writing, review and editing; A.B.: validation and analysis.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kumar, V., Tripathi, V., Pant, B. et al. Learning optimal image representations through noise injection for fine-grained search. Sci Rep 15, 15560 (2025). https://doi.org/10.1038/s41598-025-97528-9

DOI: https://doi.org/10.1038/s41598-025-97528-9