Abstract

The present study uses the least squares residual power series (LSRPS) method to obtain approximate solutions to the nonlinear fractional-order Kawahara and Rosenau-Hyman equations. The method combines the residual power series (RPS) technique with the least squares approach, and fractional derivatives are taken in the Caputo sense. The well-known RPS method is first used to generate approximate solutions. The linear independence of the resulting functions is then verified using the fractional Wronskian determinant. Next, a system of algebraic equations is generated and solved using the least squares approach, which yields approximate solutions with fewer expansion terms than the classical RPS method. The test problems show that the proposed method converges faster than the RPS method, and numerical results demonstrate its efficiency, accuracy, and rapid convergence.


Introduction

Fractional calculus has grown in popularity over the last few decades. It is applied in many fields of science and engineering, including fluid mechanics, diffusive transport, electrical networks, electromagnetic theory, various branches of physics, and the biological sciences1,2,3,4,5. For modeling physical processes, fractional derivatives can offer advantages over integer-order derivatives. They incorporate memory effects and nonlocal interactions, which makes the resulting models better suited to complex physical systems. The Caputo derivative is among the most widely used fractional derivatives because it allows initial conditions to be imposed in the same way as for classical differential equations, which is crucial in physics and engineering1. Its power-law kernel also captures the memory of the underlying process. The Caputo derivative has been widely studied and applied in engineering, physics, and control systems. By contrast, the Atangana-Baleanu-Caputo (ABC) derivative is still relatively new, so few validated numerical methods, tools, and practical applications exist for it. Mathematical models of physical systems frequently lead to nonlinear differential equations, and finding analytical solutions to these problems is typically very challenging. The Rosenau-Hyman equation was introduced as a simplified model for studying the role of nonlinear dispersion in pattern formation in liquid droplets, and it has since been used to model various problems in physics and engineering6. The fractional Kawahara equation extends the classical Kawahara equation, a higher-order nonlinear partial differential equation that describes wave propagation in dispersive media. It also models the propagation of long waves in shallow water, plasma waves, and other fluid-dynamical systems.
The fractional Rosenau-Hyman equation has been studied extensively using a variety of methods; see7,8,9,10. The Kawahara equation is important for describing plasma waves, capillary-gravity water waves, surface tension water waves, shallow water waves, and other wave types11,12,13,14. The fractional Rosenau-Hyman (RH) equation generalizes the classical Rosenau-Hyman equation, which models nonlinear wave phenomena such as localized waves that do not dissipate over time15,16,17,18. It also has physical applications to shallow water waves and to plasma and optical pulse propagation. Several approaches have been investigated for fractional differential equations, including:
- the double (G’/G, 1/G)-expansion technique19,20;
- Lie group analysis and Lie’s invariant analysis21,22;
- the Laguerre wavelet collocation method23;
- the fractional reduced differential transform method24;
- the Hirota bilinear method, the variational iteration method, and the Kudryashov method25,26,27,28,29;
- the Adomian decomposition method30;
- the homotopy analysis method31;
- the RPS method32,33,34;
- other efficient techniques35,36,37,38,39,40,41,42,43,44.

These are powerful tools for finding solutions of nonlinear partial differential equations (NPDEs). The main objective of this study is to develop and validate semi-analytical solutions to the time-fractional Kawahara and Rosenau-Hyman equations arising in fluid dynamics. We use the LSRPS method with the Caputo definition of the time-fractional derivative. The study also aims to demonstrate the advantages of the LSRPS method in handling nonlinear terms without restrictive assumptions, and to illustrate the memory effects introduced by the fractional order. The LSRPS method is computationally efficient and robust for solving both linear and nonlinear equations.
By utilizing least squares minimization and iterative projection, this method offers a powerful alternative to traditional direct and iterative methods, especially in cases involving unconditional or large-scale problems.

The paper is organized as follows. Section 2 introduces the Caputo fractional derivative and the fractional Wronskian. Section 3 presents the LSRPS approach. Section 4 applies the LSRPS method to two test problems. Section 5 concludes the paper.

Preliminaries

This section introduces the basic concepts and properties of fractional calculus used in this work, together with the fractional partial Wronskian.

Riemann-Liouville fractional integral

The Riemann-Liouville fractional integral of order \(\alpha > 0\) of a function \(f: R^{+} \to R\) is defined as:

where

Hence, we have:
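For reference, the standard form of this definition is given below, together with the gamma function and the action of the integral on power functions. It assumes lower terminal 0, and the symbol \(J^{\alpha}\) for the integral operator is chosen here.

```latex
J^{\alpha} f(x) = \frac{1}{\Gamma(\alpha)} \int_{0}^{x} (x - \tau)^{\alpha - 1} f(\tau)\, d\tau, \quad \alpha > 0,\ x > 0, \qquad J^{0} f(x) = f(x), \\
\Gamma(z) = \int_{0}^{\infty} e^{-s} s^{z - 1}\, ds, \qquad
J^{\alpha} x^{\gamma} = \frac{\Gamma(\gamma + 1)}{\Gamma(\gamma + \alpha + 1)}\, x^{\gamma + \alpha}, \quad \gamma > -1.
```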

Riemann-Liouville fractional derivative

The Riemann-Liouville fractional derivative of order \(\alpha\) of a continuous function \(f: R^{+} \to R\) is defined by:

where \(m - 1 < \alpha \le m\), \(m \in N\).
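In the standard formulation, this derivative is the \(m\)th ordinary derivative of a fractional integral of order \(m - \alpha\). Here the lower terminal is taken as 0, and \(J^{\alpha}\) denotes the Riemann-Liouville integral (assumed notation).

```latex
D^{\alpha} f(x) = \frac{d^{m}}{dx^{m}} J^{m - \alpha} f(x)
= \frac{1}{\Gamma(m - \alpha)} \frac{d^{m}}{dx^{m}} \int_{0}^{x} (x - \tau)^{m - \alpha - 1} f(\tau)\, d\tau, \quad m - 1 < \alpha < m, \qquad
D^{m} f(x) = f^{(m)}(x).
```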

The Caputo fractional derivative

The Caputo fractional derivative of a continuous function \(f: R^{+} \to R\) may be represented as follows:

where \(m - 1 < \alpha \le m\), \(m \in N\), \(x > 0\).
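In the form usually adopted, the Caputo derivative applies the ordinary derivative before the fractional integral:
\(D_{*}^{\alpha} f(x) = \frac{1}{\Gamma(m - \alpha)} \int_{0}^{x} (x - \tau)^{m - \alpha - 1} f^{(m)}(\tau)\, d\tau\) (assumed notation).

For \(0 < \alpha < 1\), a common way to evaluate it numerically is the L1 scheme, sketched below in Python. The L1 scheme and the function name `caputo_l1` are our own illustrative choices. They are not part of the LSRPS method.

```python
import math


def caputo_l1(f, t, alpha, n=1000):
    """Approximate the Caputo derivative of order 0 < alpha < 1 of f at t > 0.

    L1 scheme on a uniform grid with n steps: f is replaced by its piecewise
    linear interpolant, whose Caputo derivative is then computed exactly.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("this L1 sketch assumes 0 < alpha < 1")
    h = t / n
    total = 0.0
    for k in range(n):
        # b_k weights the increment of f on the sub-interval k steps back from t
        b_k = (k + 1) ** (1 - alpha) - k ** (1 - alpha)
        total += b_k * (f((n - k) * h) - f((n - k - 1) * h))
    return total / (h ** alpha * math.gamma(2 - alpha))


# The L1 scheme is exact for linear f: D^alpha t = t^(1 - alpha) / Gamma(2 - alpha).
print(caputo_l1(lambda s: s, 1.0, 0.5), 1.0 / math.gamma(1.5))
```

The scheme is exact for linear functions, so the two printed numbers agree up to rounding. For twice-differentiable \(f\), the error decreases like \(h^{2-\alpha}\).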

Some fundamental properties of fractional derivatives and integrals, for \(\alpha, \beta \in R^{+}\), are as follows:
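The identities usually listed at this point are the following standard ones. The selection is ours, and it assumes sufficiently regular \(f\), with \(J^{\alpha}\) the Riemann-Liouville integral and \(D_{*}^{\alpha}\) the Caputo derivative (assumed symbols).

```latex
J^{\alpha} J^{\beta} f(x) = J^{\alpha + \beta} f(x) = J^{\beta} J^{\alpha} f(x), \\
D_{*}^{\alpha} J^{\alpha} f(x) = f(x), \qquad
J^{\alpha} D_{*}^{\alpha} f(x) = f(x) - \sum_{k=0}^{m-1} f^{(k)}(0^{+}) \frac{x^{k}}{k!}, \quad m - 1 < \alpha \le m.
```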

Caputo fractional derivative characteristics

If \(m - 1 < \alpha \le m\), \(m \in N\), and \(\mu \ge -1\), the following hold:

where \(\xi\), \(\theta\), and \(C\) are real constants.
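In their standard form these characteristics read as follows, with \(\xi\), \(\theta\), and \(C\) as above and \(D_{*}^{\alpha}\) denoting the Caputo derivative (assumed notation). The power rule is stated under the usual condition \(\mu > m - 1\).

```latex
D_{*}^{\alpha} C = 0, \qquad
D_{*}^{\alpha}\left(\xi f(x) + \theta g(x)\right) = \xi\, D_{*}^{\alpha} f(x) + \theta\, D_{*}^{\alpha} g(x), \\
D_{*}^{\alpha} x^{\mu} = \frac{\Gamma(\mu + 1)}{\Gamma(\mu + 1 - \alpha)}\, x^{\mu - \alpha} \ (\mu > m - 1), \qquad
D_{*}^{\alpha} x^{k} = 0 \ (k = 0, 1, \dots, m - 1).
```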

The fractional partial Wronskian (see45)

Let \(\varphi_1, \varphi_2, \dots, \varphi_n\) be functions of the variables \(x\) and \(t\) defined on the domain \(\Omega\). The fractional partial Wronskian of \(\varphi_1, \varphi_2, \dots, \varphi_n\) takes the form:

where \(0 < \alpha \le 1\) and \(D^{\alpha}(\varphi_i) = \left(\frac{\partial}{\partial x} + \frac{\partial^{\alpha}}{\partial t^{\alpha}}\right)(\varphi_i)\), \(i = 1, 2, 3, \dots, n\).

Here \(D^{n\alpha} = D^{\alpha} D^{\alpha} \cdots D^{\alpha}\) (\(n\) times). The functions \(\varphi_1(x,t), \varphi_2(x,t), \dots, \varphi_n(x,t)\) are linearly independent if their fractional partial Wronskian is nonzero at some point of the domain \(\Omega = [a,b] \times [a,b]\). To see why, suppose \(\sum_i c_i \varphi_i \equiv 0\). Applying the linear operator \(D^{j\alpha}\) shows that the columns of the Wronskian matrix are dependent at every point, so the Wronskian vanishes identically. The converse need not hold.
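The criterion can also be checked numerically. The Python sketch below is our own illustration, not the authors' code. It makes three simplifying choices:
- It assumes, hypothetically, that each function is a generalized polynomial \(\sum c\, x^{a} t^{k\alpha}\), which is the shape of a truncated fractional power series with polynomial coefficients.
- It applies \(D^{\alpha} = \partial_x + \partial_t^{\alpha}\) term by term, using the Caputo power rule \(\partial_t^{\alpha} t^{k\alpha} = \frac{\Gamma(k\alpha + 1)}{\Gamma((k-1)\alpha + 1)} t^{(k-1)\alpha}\).
- It evaluates the determinant at a single point.

```python
import math

# Hypothetical representation: a dict {(a, k): coeff} stands for
# sum(coeff * x**a * t**(k * alpha)).


def apply_d_alpha(poly, alpha):
    """Apply D^alpha = d/dx + Caputo d^alpha/dt^alpha term by term."""
    out = {}
    for (a, k), c in poly.items():
        if a > 0:  # d/dx x^a = a x^(a-1)
            out[(a - 1, k)] = out.get((a - 1, k), 0.0) + a * c
        if k > 0:  # Caputo power rule; a t^0 factor is constant in t and drops out
            ratio = math.gamma(k * alpha + 1) / math.gamma((k - 1) * alpha + 1)
            out[(a, k - 1)] = out.get((a, k - 1), 0.0) + ratio * c
    return out


def evaluate(poly, x, t, alpha):
    """Evaluate the generalized polynomial at the point (x, t)."""
    return sum(c * x ** a * t ** (k * alpha) for (a, k), c in poly.items())


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    m = [row[:] for row in matrix]
    n, det = len(m), 1.0
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[piv][col] == 0.0:
            return 0.0
        if piv != col:
            m[col], m[piv] = m[piv], m[col]
            det = -det
        det *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return det


def fractional_wronskian(funcs, x, t, alpha):
    """Row i holds D^(i*alpha) applied to every function, evaluated at (x, t)."""
    rows, current = [], list(funcs)
    for _ in range(len(funcs)):
        rows.append([evaluate(p, x, t, alpha) for p in current])
        current = [apply_d_alpha(p, alpha) for p in current]
    return determinant(rows)


# Hypothetical basis 1, t^alpha, t^(2 alpha): the matrix is upper triangular.
basis = [{(0, 0): 1.0}, {(0, 1): 1.0}, {(0, 2): 1.0}]
print(fractional_wronskian(basis, x=0.0, t=0.5, alpha=1.0))  # 2.0
```

For the basis \(1, t^{\alpha}, t^{2\alpha}\), the diagonal entries are \(1\), \(\Gamma(\alpha + 1)\), and \(\Gamma(2\alpha + 1)\). The determinant is therefore \(\Gamma(\alpha + 1)\,\Gamma(2\alpha + 1)\), which equals 2 at \(\alpha = 1\).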

Theorem (Convergence theorem) (see46,47,48)

Suppose that \(u(x,t) \in C([r, t_0] \times [r, t_0 + r])\) and \(D_t^{i\alpha} u(x,t) \in C([r, t_0] \times [r, t_0 + r])\). Suppose further that these functions can be differentiated \(m - 1\) times with respect to \(t\) on \((t_0, t_0 + r)\). Then

where

and \(r\) is the radius of convergence. Moreover, the error term \(r_N(x,t)\) has the form

Proof (see48).
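For orientation, this result is usually quoted in the RPS literature in the form below; the symbol \(\eta\) for the intermediate point is our notation. If \(u\) admits a fractional power series expansion about \(t_0\), then its coefficients and the remainder of the \(N\)-term truncation are given by:

```latex
u(x,t) = \sum_{n=0}^{\infty} f_{n}(x)\, (t - t_{0})^{n\alpha}, \qquad
f_{n}(x) = \frac{D_{t}^{n\alpha} u(x, t_{0})}{\Gamma(n\alpha + 1)}, \\
r_{N}(x,t) = \frac{D_{t}^{(N+1)\alpha} u(x, \eta)}{\Gamma((N+1)\alpha + 1)}\, (t - t_{0})^{(N+1)\alpha}, \qquad t_{0} \le \eta \le t.
```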

Methodology

In this section, we outline the general procedure of the LSRPS technique for solving time-fractional differential equations, as described in46,47. The technique combines the classical RPS method with the least-squares method.

Residual power series method (RPSM)

Considering the following time-fractional differential equation:

where \(l^{\alpha}\) is a linear fractional operator, \(N\) is a nonlinear operator, \(u(x,t)\) is the unknown function, and \(I\) denotes the initial condition. Following the classical RPS technique described in references32,33,34, the algorithm proceeds as follows.

To obtain a reasonable approximation for Eq. (9), we introduce the kth truncated series \(u_k(x,t)\) of \(u(x,t)\), defined as follows:

The zeroth-order RPS approximation of \(u(x,t)\) is:
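In the usual RPS notation, assumed here with expansion point \(t = 0\), the truncated series and its zeroth term read:

```latex
u_{k}(x,t) = \sum_{n=0}^{k} f_{n}(x)\, \frac{t^{n\alpha}}{\Gamma(n\alpha + 1)}, \qquad
u_{0}(x,t) = f_{0}(x) = u(x,0).
```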

Equation (9) can be written as:

We define the residual function for Eq. (9) as follows:

Taking the initial condition \(I(u) = 0\) into account, we define the kth residual function \(Res_{u,k}\) as follows:

To obtain \(f_n(x)\), \(n \in N^{*}\), we solve the following equation:

where \(N^{*} = \{1, 2, 3, \dots, n\}\).
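The sketch below makes the coefficient condition concrete. It applies the standard RPS requirement \(D_t^{(k-1)\alpha} Res_{u,k}(x,0) = 0\) to a hypothetical scalar toy problem, \(D_t^{\alpha} u = u^{2}\), \(u(0) = 1\), which is not one of the equations studied here.

Take \(u_k = \sum_{n=0}^{k} f_n t^{n\alpha}/\Gamma(n\alpha + 1)\). Applying \(D_t^{(k-1)\alpha}\) and setting \(t = 0\) keeps only the coefficient of \(t^{(k-1)\alpha}\) in the residual. Each step therefore gives \(f_k\) explicitly:
\(f_k = \Gamma((k-1)\alpha + 1) \sum_{i=0}^{k-1} \frac{f_i f_{k-1-i}}{\Gamma(i\alpha + 1)\,\Gamma((k-1-i)\alpha + 1)}\).

The function names are illustrative.

```python
import math


def rps_coefficients(k_max, alpha, u0=1.0):
    """RPS coefficients f_0..f_k_max for the toy problem D_t^alpha u = u^2, u(0) = u0.

    D_t^{(k-1)alpha} Res_k(0) = 0 keeps only the t^{(k-1)alpha} coefficient of
    Res_k = D_t^alpha u_k - u_k^2, which gives f_k in closed form.
    """
    def g(n):
        return math.gamma(n * alpha + 1)

    f = [u0]
    for k in range(1, k_max + 1):
        # t^{(k-1)alpha} coefficient of u_k^2; only f_0..f_{k-1} contribute
        square = sum(f[i] * f[k - 1 - i] / (g(i) * g(k - 1 - i)) for i in range(k))
        f.append(g(k - 1) * square)
    return f


def rps_solution(f, t, alpha):
    """Evaluate u_k(t) = sum_n f_n t^(n alpha) / Gamma(n alpha + 1)."""
    return sum(fn * t ** (n * alpha) / math.gamma(n * alpha + 1) for n, fn in enumerate(f))


# For alpha = 1 the exact solution is 1/(1 - t), so f_n should equal n!.
print(rps_coefficients(5, 1.0))
print(rps_solution(rps_coefficients(20, 1.0), 0.5, 1.0))
```

For \(\alpha = 1\), the printed coefficients should reproduce \(n!\), and the partial sums at \(t = 0.5\) should approach \(1/(1 - 0.5) = 2\).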

To determine \(f_1(x), f_2(x), f_3(x), \dots\), we take \(k = 1, 2, 3, \dots\) in Eq. (10). Substituting the series expansion into Eq. (9) then yields an approximate solution of Eq. (1). The standard residual power series approach gives the kth-order approximate solution:

where,

Least-Squares residual power series technique (LSRPS)

This section presents the methodology for the LSRPS technique and introduces key definitions necessary for its implementation.

Let the remainder \(\widetilde{Res}\) for Eq. (1) be:

with \(I(\widetilde{u}) = 0\), where \(\widetilde{u}\) is the approximate solution of Eq. (2).

Note that if

where the sequence \(\{s^{i\alpha}(x,t)\}_{i \in N^{*}}\) converges to the solution of Eq. (1).

We call \(\widetilde{u}\) an \(\epsilon\)-approximate RPS solution of Eq. (1) on the domain \(\Omega\) if:

and \(\widetilde{u}\) also satisfies the initial condition \(I(u) = 0\).

We call \(\widetilde{u}\) a weak -approximate RPS solution of Eq. (1) on the domain \(\Omega\) if:

and \(\widetilde{u}\) also satisfies the initial condition \(I(u) = 0\).
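In the least-squares literature these two notions are usually formalised as follows. Here \(\epsilon\) is the tolerance above, and \(\delta\) denotes the (assumed) tolerance of the weak variant.

```latex
\left|\widetilde{Res}(x,t,\widetilde{u})\right| < \epsilon \quad \forall (x,t) \in \Omega, \qquad
\iint_{\Omega} \widetilde{Res}^{\,2}(x,t,\widetilde{u})\, dx\, dt \le \delta .
```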

To implement the least-squares residual power series approach, we propose the following procedure:

1st step

We adopt the classical residual power series approach to approximate the solution. The truncated series \(u_k(x,t)\) can be written as follows:

and the kth residual function \(Res_{u,k}\) takes the form:

The coefficients \(f_n(x)\) are then obtained by solving:

where \(N^{*} = \{1, 2, 3, \dots, n\}\).

The RPS technique then provides the kth-order approximate solution:

where \(\varphi_0, \varphi_1, \varphi_2\) can be computed from Eq. (4).

2nd step

The linear independence of these functions is verified using the fractional Wronskian:

where \(D^{\alpha}(\varphi_i) = \left(\frac{\partial}{\partial x} + \frac{\partial^{\alpha}}{\partial t^{\alpha}}\right)(\varphi_i)\). Here \(s_k = \{\varphi_0, \varphi_1, \dots, \varphi_n\}\) is the set of functions, in the vector space of continuous functions defined on \(R\), whose linear independence is to be checked.

If no point can be found at which \(\omega^{\alpha}[\varphi_1, \varphi_2, \dots, \varphi_n]\) is nonzero, the test cannot confirm linear independence, and the set of functions \(s_k\) is treated as linearly dependent.

3rd step

We assume that:

Substituting the approximate solution \(\widetilde{u}_k\) of Eq. (1) into Eq. (5) gives:
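A typical form of the assumed expansion and of the resulting least-squares functional is sketched below. We take \(c_0 = 1\), as in the applications, so that the initial condition carried by \(\varphi_0\) is preserved. Integration over \(\Omega\) is an assumption.

```latex
\widetilde{u}_{k}(x,t) = \varphi_{0}(x,t) + \sum_{i=1}^{k} c_{i}\, \varphi_{i}(x,t), \qquad
J(c_{1}, \dots, c_{k}) = \iint_{\Omega} \widetilde{Res}^{\,2}(x,t; c_{1}, \dots, c_{k})\, dx\, dt .
```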

4th step

We associate the following functional with the residual:

and obtain the constants \(c_n\) by solving the algebraic system \(\frac{\partial J}{\partial c_n} = 0\), \(n = 1, 2, \dots, k\).
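The four steps can be illustrated on a hypothetical linear toy problem, \(D_t^{\alpha} u + u = 0\), \(u(0) = 1\), chosen for two reasons:
- Its RPS basis is simple: \(\varphi_n = t^{n\alpha}/\Gamma(n\alpha + 1)\), with \(D_t^{\alpha}\varphi_n = \varphi_{n-1}\).
- Its residual is affine in the \(c_n\), so \(\partial J/\partial c_n = 0\) reduces to linear normal equations.

For the nonlinear equations of this paper, the algebraic system is generally nonlinear and would need an iterative solver instead. The Python sketch below is our own illustration, not the authors' implementation. It approximates \(J = \int_0^T \widetilde{Res}^{2}\, dt\) by the midpoint rule. It then compares the two-term LSRPS and RPS approximations with the exact solution \(e^{-t}\) at \(\alpha = 1\).

```python
import math


def phi(n, t, alpha):
    """Basis term t^(n alpha) / Gamma(n alpha + 1); D_t^alpha phi_n = phi_(n-1)."""
    return t ** (n * alpha) / math.gamma(n * alpha + 1)


def solve_linear(a, b):
    """Solve the small dense system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lsrps_coefficients(k, alpha, T=1.0, nodes=400):
    """Least-squares coefficients c_0..c_k for D^alpha u + u = 0, u(0) = 1.

    Trial function u~ = phi_0 + sum_{n=1..k} c_n phi_n (c_0 = 1 keeps u~(0) = 1).
    Res = 1 + sum_n c_n (phi_(n-1) + phi_n) is affine in c, so dJ/dc_n = 0
    becomes the normal equations G c = -r; J uses the midpoint rule on [0, T].
    """
    h = T / nodes
    ts = [(i + 0.5) * h for i in range(nodes)]
    g = [[phi(n - 1, t, alpha) + phi(n, t, alpha) for t in ts] for n in range(1, k + 1)]
    gram = [[h * sum(p * q for p, q in zip(g[i], g[j])) for j in range(k)] for i in range(k)]
    rhs = [-h * sum(g[i]) for i in range(k)]  # -<1, g_i>
    return [1.0] + solve_linear(gram, rhs)


def evaluate(coeffs, t, alpha):
    """Evaluate u~(t) = sum_n c_n phi_n(t)."""
    return sum(c * phi(n, t, alpha) for n, c in enumerate(coeffs))


alpha = 1.0
ls = lsrps_coefficients(2, alpha)
rps = [1.0, -1.0, 1.0]  # classical RPS coefficients (-1)^n, same truncation
grid = [i / 100 for i in range(101)]
for name, coeffs in (("RPS", rps), ("LSRPS", ls)):
    print(name, max(abs(evaluate(coeffs, t, alpha) - math.exp(-t)) for t in grid))
```

With the same number of terms, the least-squares coefficients in this toy case reduce the maximum error on \([0, 1]\) from about 0.13 to about 0.005.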

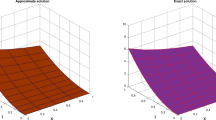

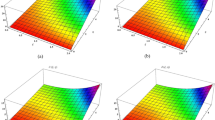

Application of the least-squares residual power series method

This section applies the LSRPS method to two test problems. The fractional RPS technique is used first to generate the basis functions. The unknown coefficients are then determined by the least-squares method. Graphs and tables are used to assess the accuracy of the approximate solutions, providing both a visual and a numerical analysis.

Problem 1

Considering the time-fractional Rosenau-Hyman equation:

where \(t > 0\), \(x \in R\), \(0 < \alpha \le 1\).

Subject to the initial condition:

.

The exact solution at α=1 is

.
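The Python sketch below illustrates how such an exact solution can be checked, under an assumed form of the equation. This form is an assumption and is not read from the displays of Problem 1:
- Equation: \(D_t^{\alpha} u = u u_{xxx} + u u_x + 3 u_x u_{xx}\). This form is common in the fractional Rosenau-Hyman literature and follows from the K(2,2) compacton equation \(v_t + (v^2)_x + (v^2)_{xxx} = 0\) by setting \(v = -u/2\).
- Solution at \(\alpha = 1\): \(u = -\frac{8c}{3}\cos^{2}\left(\frac{x - ct}{4}\right)\).

The sketch checks this pair with central finite differences for \(c = 1\), the value used in the Wronskian check below. The step size is also our choice.

```python
import math


def u_exact(x, t, c=1.0):
    """Assumed alpha = 1 solution u = -(8c/3) cos^2((x - c t)/4)."""
    return -(8.0 * c / 3.0) * math.cos((x - c * t) / 4.0) ** 2


def rh_residual(x, t, c=1.0, h=2e-3):
    """Residual u_t - (u u_xxx + u u_x + 3 u_x u_xx) from central differences."""
    def u(xx, tt):
        return u_exact(xx, tt, c)

    u0 = u(x, t)
    u_t = (u(x, t + h) - u(x, t - h)) / (2 * h)
    u_x = (u(x + h, t) - u(x - h, t)) / (2 * h)
    u_xx = (u(x + h, t) - 2 * u0 + u(x - h, t)) / h ** 2
    u_xxx = (u(x + 2 * h, t) - 2 * u(x + h, t) + 2 * u(x - h, t) - u(x - 2 * h, t)) / (2 * h ** 3)
    return u_t - (u0 * u_xxx + u0 * u_x + 3 * u_x * u_xx)


for x, t in [(-2.0, 0.1), (0.0, 0.5), (1.5, 0.3)]:
    print(f"x={x:5.2f} t={t:4.2f} residual={rh_residual(x, t):.2e}")
```

Residuals at the level of finite-difference error (far below the size of the individual terms) indicate that the assumed equation and solution are consistent.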

Applying the well-known RPS method34 to the fractional Rosenau-Hyman equation gives the solution:

where

The linear independence of the functions can be verified using:

where the Wronskian is evaluated at \(\alpha = 1\), \(t = 0.5\), \(x = 0\), and \(c = 1\), giving \(\omega^{1}[\varphi_0, \varphi_1, \varphi_2] \ne 0\).

Hence, the functions \(\varphi_0, \varphi_1, \varphi_2\) are linearly independent. They are defined as:

Consequently, we can obtain an approximation that can be formulated as follows:

The residual function can be obtained by:

With the initial condition:

Using \(\widetilde{u}_0\) and setting \(c_0 = 1\), \(\widetilde{u}\) can be written as:

Substituting \(\widetilde{u}\) into \(\widetilde{Res}(x,t,\widetilde{u})\) gives \(\widetilde{Res}\), and the functional \(J\) can then be expressed as:

We have two algebraic equations:

Solving these equations for \(\alpha = 1\) gives the unknown coefficients of Eq. (43):

The absolute error between the exact and approximate solutions obtained with the proposed technique is given by:

Problem 2

We now examine the fractional Kawahara equation:

where \(t > 0\), \(x \in R\), \(0 < \alpha \le 1\).

Subject to the initial condition:

For \(\alpha = 1\), the exact solution is:
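Similarly, assume the standard test form \(D_t^{\alpha} u + u u_x + u_{xxx} - u_{xxxxx} = 0\), which is widely used for the time-fractional Kawahara equation. This is an assumption, not read from the displays of Problem 2. Substituting \(u = A\,\mathrm{sech}^{4}(K(x - Vt))\) into the \(\alpha = 1\) equation and matching powers of sech gives:
- \(K^{2} = 1/52\);
- \(A = 1680K^{4} = 105/169\);
- \(V = 16K^{2} - 256K^{4} = 36/169\).

This is the solitary wave \(u = \frac{105}{169}\mathrm{sech}^{4}\left(\frac{x - 36t/169}{2\sqrt{13}}\right)\). The Python sketch checks it with central finite differences; the step size is our choice.

```python
import math

A = 105.0 / 169.0  # amplitude
K = 1.0 / (2.0 * math.sqrt(13.0))  # inverse width
V = 36.0 / 169.0  # wave speed


def u_exact(x, t):
    """Assumed alpha = 1 solitary wave A sech^4(K (x - V t))."""
    return A / math.cosh(K * (x - V * t)) ** 4


def kawahara_residual(x, t, h=0.02):
    """Residual u_t + u u_x + u_xxx - u_xxxxx from central differences."""
    def f(s):  # samples along x at offset s * h
        return u_exact(x + s * h, t)

    u_t = (u_exact(x, t + h) - u_exact(x, t - h)) / (2 * h)
    u_x = (f(1) - f(-1)) / (2 * h)
    u_xxx = (f(2) - 2 * f(1) + 2 * f(-1) - f(-2)) / (2 * h ** 3)
    u_xxxxx = (f(3) - 4 * f(2) + 5 * f(1) - 5 * f(-1) + 4 * f(-2) - f(-3)) / (2 * h ** 5)
    return u_t + f(0) * u_x + u_xxx - u_xxxxx


for x, t in [(-10.0, 0.5), (0.0, 0.5), (5.0, 1.0)]:
    print(f"x={x:6.2f} t={t:4.2f} residual={kawahara_residual(x, t):.2e}")
```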

Applying the classical residual power series method as in Problem 1, we obtain:

The series representation of the solution is provided as follows:

To verify the linear independence of the functions, we can employ the following procedure:

Evaluating at \(\alpha = 1\), \(t = 0.5\), and \(x = 0\) gives \(\omega^{1}[\varphi_0, \varphi_1, \varphi_2, \varphi_3, \varphi_4] \ne 0\). Consequently, the functions \(\varphi_0, \varphi_1, \varphi_2, \varphi_3, \varphi_4\) are linearly independent. These functions are defined as:

Therefore, the approximate solution can be expressed as follows:

The residual function can be obtained as:

with the initial condition:

Using \(\widetilde{u}_0\) and setting \(c_0 = 1\), \(\widetilde{u}\) can be written as:

Substituting \(\widetilde{u}\) into \(\widetilde{Res}(x,t,\widetilde{u})\) gives \(\widetilde{Res}\), and the functional \(J\) can then be expressed as:

Minimizing the functional \(J\) leads to four algebraic equations:

The unknown coefficients \((c_1, c_2, c_3, c_4)\) for \(\alpha = 1\) are then:

The formula for absolute error is:

Conclusion

The least squares residual power series method (LSRPSM) is an improved version of the residual power series method (RPSM) that incorporates the least squares technique to improve accuracy and convergence. This paper presents a comparative analysis of LSRPSM and RPSM, highlighting the gains in accuracy and convergence speed obtained by incorporating the least squares approach. The accuracy of the results is shown in Tables 1 and 2 and visually in Figs. 1, 2, 3, 4, 5, 6, 7 and 8, which demonstrate the effectiveness of the approach. The LSRPSM often converges more quickly than the RPSM, especially for problems with complex boundary conditions. It is suitable for problems where the standard RPSM cannot achieve sufficient accuracy within a limited number of terms. It therefore offers a useful alternative approach for solving fractional NPDEs. In future work, the least squares technique could be combined with other analytical methods to obtain optimal results, and other definitions of the fractional derivative could also be used.

LSRPS solution of Eq. (46) for different values of \(\alpha\).

Data availability

Data is provided within the manuscript or supplementary information files.

References

Podlubny, I. Fractional Differential Equations (Academic Press, 1999).

Wang, K. J. An effective computational approach to the local fractional low-pass electrical transmission lines model. Alexandria Eng. J. 110, 629–635 (2025).

Liang, Y. H. & Wang, K. J. Bifurcation analysis, chaotic phenomena, variational principal, hamiltonian, solitary and periodic wave solutions of the fractional Benjamin ono equation. Fractals 33 (1), 2550016 (2025).

Wang, K. J. & Liu, J. H. On the zero state-response of the ℑ-order R-C circuit within the local fractional calculus. COMPEL-The Int. J. Comput. Math. Electr. Electron. Eng. 42 (6), 1641–1653 (2023).

Rida, S., Arafa, A., Abedl-Rady, A. & Abdl-Rahaim, H. Fractional physical differential equations via natural transform. Chin. J. Phys. 55 (4), 1569–1575 (2017).

Rosenau, P. & Hyman, J. M. Compactons: solitons with finite wavelength. Phys. Rev. Lett. 70 (5), 564–567 (1993).

Molliq, R. Y. & Noorani, M. S. M. Solving the fractional Rosenau-Hyman equation via variational iteration method and homotopy perturbation method. Int. J. Differ. Equ. 2012, 472030 (2012).

Iyiola, O. S., Ojo, G. O. & Mmaduabuchi, O. The fractional Rosenau–Hyman model and its approximate solution. Alexandria Eng. J. 55, 1655–1659 (2016).

Akgül, A., Aliyu, A. I., Inc, M., Yusuf, A. & Baleanu, D. Approximate solutions to the conformable Rosenau-Hyman equation using the two-step Adomian decomposition method with Padé approximation. Math. Methods Appl. Sci. 43 (13), 7632–7639 (2020).

Senol, M., Tasbozan, O. & Kurt, A. Comparison of two reliable methods to solve fractional Rosenau Hyman equation. Math. Methods Appl. Sci. 44 (10), 7904–7914 (2021).

Berloff, N. G. & Howard, L. N. Solitary and periodic solutions to nonlinear nonintegrable equations. Stud. Appl. Math. 99, 1–24 (1997).

Bridges, T. & Derks, G. Linear instability of solitary wave solutions of the Kawahara equation and its generalizations. SIAM J. Math. Anal. 33, 1356–1378 (2002).

Hunter, J. K. & Scheurle, J. Existence of perturbed solitary wave solutions to a model equation for water waves. Phys. D. 32, 253–268 (1988).

Kawahara, T. Oscillatory solitary waves in dispersive media. J. Phys. Soc. Japan. 33, 260–264 (1972).

Benbachir, M. & Saadi, A. The Kawahara equation with time and space-fractional derivatives solved by the Adomian method. J. Interdisciplinary Math. 17 (3), 243–253 (2014).

Sontakke, B. R. & Shaikh, A. Approximate solutions of time fractional Kawahara and modified Kawahara equations by fractional complex transform. Commun. Numer. Anal. 2016 (2), 218–229 (2016).

Saldır, O., Sakar, M. G. & Erdogan, F. Numerical solution of time-fractional Kawahara equation using reproducing kernel method with error estimate. Comput. Appl. Math. 38, 198 (2019).

Çulha Ünal, S. Approximate solutions of time fractional Kawahara equations by utilizing the residual power series method. Int. J. Appl. Comput. Math. 8, 78 (2022).

Uddin, M. H., Khatun, M. A., Arefin, M. A. & Akbar, M. A. Abundant new exact solutions to the fractional nonlinear evolution equation via Riemann-Liouville derivative. Alexandria Eng. J. 60 (6), 5183–5191 (2021).

Uddin, M. H., Akbar, M. A., Khan, M. A. & Haque, M. A. New exact solitary wave solutions to the space-time fractional differential equations with conformable derivative. AIMS Math. 4 (2), 199–214 (2019).

San, S. Invariant analysis of nonlinear time fractional Qiao equation. Nonlinear Dyn. 85 (4), 2127–2132 (2016).

San, S. Lie symmetry analysis and conservation laws of nonlinear time fractional WKI equation. Celal Bayar Univ. J. Sci. 13 (1), 55–61 (2017).

Srinivasa, K. & Rezazadeh, H. Numerical solution for the fractional-order one-dimensional telegraph equation via wavelet technique. Int. J. Nonlinear Sci. Numer. Simul. 22 (6), 767–780 (2021).

Jena, R. M., Chakraverty, S., Rezazadeh, H. & Ganji, D. D. On the solution of time-fractional dynamical model of brusselator reaction‐diffusion system arising in chemical reactions. Math. Methods Appl. Sci. 43 (7), 3903–3913 (2020).

Wang, K. J., Zou, B. R., Zhu, H. W., Li, S. & Li, G. Phase portrait, bifurcation and chaotic analysis, variational principle, hamiltonian, novel solitary, and periodic wave solutions of the new extended Korteweg–de Vries–Type equation. Math. Methods Appl. Sci. https://doi.org/10.1002/mma.10852 (2025).

Wang, K. J. et al. Localized wave and other special wave solutions to the (3 + 1)-dimensional Kudryashov–Sinelshchikov equation. Math. Methods Appl. Sci. https://doi.org/10.1002/mma.10764 (2025).

Wang, K. J., Liu, X. L., Wang, W. D., Li, S. & Zhu, H. W. Novel singular and non-singular complexiton, interaction wave and the complex multi-soliton solutions to the generalized nonlinear evolution equation. Mod. Phys. Lett. B 2550135 (2025).

Wang, K. J. et al. Lump wave, breather wave and other abundant wave solutions to the (2 + 1)-dimensional Sawada–Kotera–Kadomtsev Petviashvili equation of fluid mechanics. Pramana J. Phys. 99 (1), 40 (2025).

Liu, J. H., Yang, Y. N., Wang, K. J. & Zhu, H. W. On the variational principles of the Burgers-Korteweg-de Vries equation in fluid mechanics. Europhys. Lett. 149, 52001 (2025).

El-Sayed, A. M. A., Rida, S. Z. & Arafa, A. A. M. On the solutions of the generalized reaction-diffusion model for bacterial colony. Acta Applicandae Math. 110 (3), 1501–1511 (2010).

Arafa, A. A. M. & Hagag, A. M. S. A new analytic solution of fractional coupled Ramani equation. Chin. J. Phys. 60, 388–406 (2019).

El-Ajou, A., Arqub, O. A., Zhour, Z. & Momani, S. New results on fractional power series: theories and applications. Entropy 15 (12), 5305–5323 (2013).

El-Ajou, A., Arqub, O. A. & Momani, S. Approximate analytical solution of the nonlinear fractional KdV-Burgers equation: a new iterative algorithm. J. Comput. Phys. 293, 81–95 (2014).

Ismail, J. G. M., Abdl-Rahim, H. R., Ahmad, H. & Chu, Y. Fractional residual power series method for the analytical and approximate studies of fractional physical phenomena. Open Phys. 18, 799–805 (2020).

Sahu, I. & Jena, S. R. An efficient technique for time fractional Klein-Gordon equation based on modified Laplace Adomian decomposition technique via hybridized Newton-Raphson scheme arises in relativistic fractional quantum mechanics. Partial Differ. Equations Appl. Math. 10, 100744 (2024).

Jena, S. R. & Sahu, I. A reliable method for voltage of telegraph equation in one and two space variables in electrical transmission: approximate and analytical approach. Phys. Scr. 98 (10), 105216 (2023).

Sahu, I. & Jena, S. R. The kink-antikink single waves in dispersion systems by generalized PHI-four equation in mathematical physics. Phys. Scr. 99 (5), 055258 (2024).

Sahu, I. & Jena, S. R. SDIQR mathematical modelling for COVID-19 of Odisha associated with influx of migrants based on Laplace Adomian decomposition technique. Model. Earth Syst. Environ. 9 (4), 4031–4040 (2023).

Jena, S. R. & Senapati, A. An improvised cubic B-spline collocation of fourth order and Crank–Nicolson technique for numerical soliton of Klein–Gordon and Sine–Gordon equations. Iran. J. Sci. 49, 383–407 (2025).

Jena, S. R. & Senapati, A. One-dimensional heat and advection-diffusion equation based on improvised cubic B-spline collocation, finite element method and Crank-Nicolson technique. Int. Commun. Heat Mass Transfer. 147, 106958 (2023).

Jena, S. R. & Senapati, A. Stability, convergence and error analysis of B-spline collocation with Crank–Nicolson method and finite element methods for numerical solution of schrodinger equation arises in quantum mechanics. Phys. Scr. 98 (11), 115232 (2023).

Jena, S. R. & Senapati, A. Explicit and implicit numerical investigations of one-dimensional heat equation based on spline collocation and Thomas algorithm. Soft. Comput. 28 (20), 12227–12248 (2024).

Jena, S. R. & Sahu, I. A novel approach for numerical treatment of traveling wave solution of ion acoustic waves as a fractional nonlinear evolution equation on Shehu transform environment. Phys. Scr. 98 (8), 085231 (2023).

Jena, S. R. & Gebremedhin, G. S. Computational algorithm for MRLW equation using B-spline with BFRK scheme. Soft. Comput. 27 (16), 11715–11730 (2023).

Kumar, R. & Koundal, R. Generalized least square homotopy perturbations for system of fractional partial differential equations. Preprint at http://arxiv.org/abs/1805.06650 (2018).

Zhang, J., Wei, Z., Li, L. & Zhou, C. Least-squares residual power series method for the time-fractional differential equations. Complexity 2019, 6159024 (2019).

Hassan, A., Arafa, A. A. M., Rida, S. Z., Dagher, M. A. & El Sherbiny, H. M. Adapting semi-analytical treatments to the time-fractional derivative Gardner and Cahn-Hilliard equations. Alexandria Eng. J. 87, 389–397 (2024).

Kumar, S., Kumar, A. & Baleanu, D. Two analytical methods for time-fractional nonlinear coupled Boussinesq–Burger’s equations arise in propagation of shallow water waves. Nonlinear Dyn. 85, 699–715 (2016).

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information


Contributions

A. Hassan wrote the main manuscript text, and A. A. M. Arafa and S. Z. Rida prepared Figures 1-6. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hassan, A., Arafa, A.A.M., Rida, S.Z. et al. Least squares residual power series solutions for Kawahara and Rosenau-Hyman nonlinear wave interactions with applications in fluid dynamics. Sci Rep 15, 14929 (2025). https://doi.org/10.1038/s41598-025-97639-3
