Abstract

Polyethylene glycol (PEG), a synthetic polymer made up of repeating ethylene oxide units, is widely recognized for its broad utility and adaptable properties. Precise estimation of CO2 solubility in PEG plays a vital role in enhancing processes such as supercritical fluid extraction, carbon capture, and polymer modification, where CO2 serves as a solvent or transport medium. This study focuses on building advanced predictive models using machine-learning approaches, namely random forest (RF), decision tree (DT), adaptive boosting (AdaBoost), k-nearest neighbors (KNN), and ensemble learning (EL), to forecast CO2 solubility in PEG across a wide range of conditions. The data used for model development are sourced from previously published literature, and an outlier detection method is applied beforehand to identify suspicious data points. Additionally, sensitivity analysis is performed to evaluate the relative influence of each input parameter on the output variable. The results show that the DT model is the best-performing method for estimating CO2 solubility in PEG, since it achieves the highest R-squared (i.e., 0.801 and 0.991 for test and train, respectively) and the lowest error metrics (MSE: 0.0009 and AARE%: 22.58 for test data points). In addition, pressure and PEG molar mass were found to have a direct effect on solubility, whereas temperature has an inverse relationship with it. The developed DT model can be regarded as an accurate, robust, and user-friendly tool for estimating CO2 solubility in PEG without experimental workflows, which are known to be time-consuming, expensive, and tedious.


Introduction

Composed of repeating ethylene oxide units, the synthetic polymer polyethylene glycol (PEG) is known for its versatile properties and wide range of applications. It is produced through the polymerization of ethylene oxide, resulting in a linear, water-soluble polyether. One of the defining features of polyethylene glycol is its availability in a broad range of molar masses, typically from as low as 200 g/mol to over 35,000 g/mol1,2,3,4. This diversity in molecular weight significantly influences its physical properties, such as viscosity, solubility, and melting point. PEGs with lower molar masses are liquids or viscous fluids at room temperature, whereas higher-molecular-weight PEGs are waxy solids. PEG is non-toxic, biocompatible, and non-immunogenic, making it suitable for applications in pharmaceuticals, cosmetics, and food products5,6,7,8.

The diverse range of molar masses allows polyethylene glycol to serve various roles across industries. In the pharmaceutical sector, PEGs with lower molecular weights are often used as solvents, excipients, and laxatives, while higher-molecular-weight PEGs function as binders, drug carriers, or controlled-release agents9,10. In cosmetics, PEG acts as a moisturizer, emulsifier, and thickener due to its hydrophilic nature. Furthermore, PEG is employed in industrial processes as a lubricant, plasticizer, and anti-foaming agent. Its ability to conjugate with proteins and other molecules has also expanded its use in medical disciplines like tissue engineering and drug delivery systems11,12. This versatility, driven by the broad spectrum of molar masses, has solidified polyethylene glycol as a critical material in numerous scientific and commercial fields13.

Supercritical fluids such as supercritical carbon dioxide (SC-CO2)14 have proven useful in many fields, including essential oil extraction15,16,17,18,19,20,21, seed oil extraction22,23,24, solubility measurement25,26,27, particle formation (RESS, RESSAS, US-RESS, US-RESOLV, …)28,29,30,31,32,33,34,35,36, impregnation37,38, process optimization and mathematical modeling20,39, and polymer synthesis40. The mixture of polyethylene glycol (PEG) and carbon dioxide (CO2) is important in various industrial and scientific applications because of their complementary properties41,42,43,44,45. PEG, being hydrophilic and non-toxic, can act as a stabilizer, solvent, or carrier, while CO2, particularly in its supercritical state, serves as an environmentally friendly solvent and processing medium46,47,48,49. This combination is particularly valuable in green chemistry and sustainable processes such as supercritical fluid extraction, polymer processing, and nanoparticle synthesis50,51. The PEG-CO2 mixture enables efficient, low-toxicity drug delivery systems, in which CO2 can act as a carrier to encapsulate or release active pharmaceutical ingredients stabilized by PEG. Additionally, in advanced material production, the mixture provides enhanced solubility, reduced viscosity, and tunable processing conditions, supporting the development of innovative and sustainable technologies. The solubility of CO2 in PEG is also vital for gas separation and capture technologies, as PEG absorbs CO2 efficiently owing to its strong affinity for the gas. This makes it valuable in carbon capture applications that address environmental challenges such as greenhouse gas emissions52,53. In pharmaceutical and biomedical applications, the solubility of CO2 in PEG facilitates the creation of drug delivery systems and foamed materials, where CO2 serves as a blowing or delivery agent while PEG stabilizes or encapsulates the target substances54,55,56. Thus, the high solubility of CO2 in PEG enables innovation in sustainable manufacturing, gas absorption processes, and advanced material design, enhancing their economic and environmental viability.

Accurate estimation of CO2 solubility in polyethylene glycol (PEG) is crucial for optimizing processes such as supercritical fluid extraction, carbon capture, and polymer modification, where CO2 acts as a solvent or carrier. Precise solubility data ensure efficient process design, improve energy savings, and support the development of sustainable, environmentally friendly technologies. They also enable better control over material properties in applications such as drug delivery and advanced manufacturing. On the other hand, experimental measurements are costly, tedious, and time-consuming. Given the availability of laboratory data on CO2 solubility in PEG in the published literature, this study seeks to construct rigorous intelligent models based on several machine learning methods, namely random forest (RF), decision tree (DT), adaptive boosting (AdaBoost), k-nearest neighbors (KNN), and ensemble learning (EL), to estimate CO2 solubility in PEG over a wide range of conditions. Prior to model development, an outlier detection algorithm is applied to identify potentially suspect data points, and a sensitivity analysis is performed to determine the relative impact of each input parameter. The performance of the constructed models is assessed through several evaluation metrics as well as graphical approaches.

Machine learning models

Decision tree

Decision tree (DT) is a widely used machine learning and statistical tool for both regression and classification. The method recursively partitions the data into subsets based on feature values, creating a tree-like structure of decisions. At each internal node, a test on a specific feature determines the optimal split and directs the data points to the child nodes57. This procedure continues until the data in a node are sufficiently homogeneous or a stopping criterion is met, and the resulting leaf nodes represent the estimated outcomes or class labels. The simplicity of the decision tree model, along with its intuitive, hierarchical structure, makes it highly interpretable and suitable for various practical applications, including finance, healthcare, and engineering58,59,60.
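The recursive partitioning described above can be sketched in plain Python. This is a minimal illustration, not the implementation used in this study: it assumes a CART-style regression tree whose splits minimize the summed squared error of the two child nodes, and the names `build_tree` and `predict_tree` are hypothetical. The `max_depth` argument plays the role of the max-depth hyperparameter.

```python
from statistics import fmean


def sse(values):
    """Sum of squared deviations from the mean (0 for an empty list)."""
    if not values:
        return 0.0
    m = fmean(values)
    return sum((v - m) ** 2 for v in values)


def build_tree(X, y, depth=0, max_depth=6, min_samples_split=2):
    """Return a nested dict (internal node) or a float (leaf estimate)."""
    # Stopping criteria: depth limit, too few samples, or a pure node.
    if depth >= max_depth or len(y) < min_samples_split or len(set(y)) == 1:
        return fmean(y)
    best = None  # (error, feature index, threshold)
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            err = sse(left) + sse(right)
            if best is None or err < best[0]:
                best = (err, j, t)
    if best is None:  # every feature is constant in this node
        return fmean(y)
    _, j, t = best
    left_idx = [i for i, row in enumerate(X) if row[j] <= t]
    right_idx = [i for i, row in enumerate(X) if row[j] > t]
    return {
        "feature": j,
        "threshold": t,
        "left": build_tree([X[i] for i in left_idx], [y[i] for i in left_idx],
                           depth + 1, max_depth, min_samples_split),
        "right": build_tree([X[i] for i in right_idx], [y[i] for i in right_idx],
                            depth + 1, max_depth, min_samples_split),
    }


def predict_tree(node, x):
    """Apply the node tests from the root down to a leaf value."""
    while isinstance(node, dict):
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node
```

For example, with X = [[1], [2], [3], [4]] and y = [1.0, 1.0, 5.0, 5.0], the root split falls at 2.5 and the two leaves estimate 1.0 and 5.0.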

Interpretability is one of the primary advantages of decision trees. Unlike more complex models such as neural networks, decision trees represent the decision-making process visually, enabling users to understand how estimates are made. This makes them particularly valuable in domains requiring transparency and accountability, such as medical diagnosis, where understanding the reasoning behind a classification is as important as the outcome itself. This combination of flexibility, interpretability, and efficiency makes decision trees a strong tool for a wide range of classification and regression problems61.

Adaptive boosting

Adaptive Boosting (AdaBoost), introduced by Yoav Freund and Robert Schapire, is a powerful ensemble learning technique that combines weak learners into a robust and more accurate model. AdaBoost works iteratively: each subsequent weak learner (commonly a decision stump, i.e., a simple decision tree with one split) focuses on the mistakes made by the previous learners. It assigns higher weights to misclassified (or, in regression, poorly predicted) data points, making them more influential in the next iteration. By shifting the model’s focus to harder-to-predict samples, AdaBoost progressively reduces errors and improves the accuracy of its estimates. This iterative process continues until a specified number of weak learners has been trained or no further improvement can be achieved62.
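Because CO2 solubility is a continuous target, the regression variant of AdaBoost is the relevant one. The sketch below assumes the AdaBoost.R2 scheme of Drucker (1997) for illustration: each round fits a weighted regression stump, scales absolute errors into a linear loss in [0, 1], down-weights well-predicted points through beta = avg_loss / (1 - avg_loss), and combines the learners with a weighted median. Unlike some library implementations, it fits each stump on the weights directly instead of resampling; all function names are hypothetical.

```python
import math


def _wmean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def fit_stump(X, y, w):
    """Weighted regression stump: (feature, threshold, left value, right value)."""
    m = _wmean(y, w)
    best_err = sum(wi * (yi - m) ** 2 for yi, wi in zip(y, w))
    best = (0, math.inf, m, m)  # constant fallback if no split helps
    for j in range(len(X[0])):
        vals = sorted(set(row[j] for row in X))
        for lo, hi in zip(vals, vals[1:]):
            t = (lo + hi) / 2
            left = [(yi, wi) for row, yi, wi in zip(X, y, w) if row[j] <= t]
            right = [(yi, wi) for row, yi, wi in zip(X, y, w) if row[j] > t]
            lv, rv = _wmean(*zip(*left)), _wmean(*zip(*right))
            err = (sum(wi * (yi - lv) ** 2 for yi, wi in left)
                   + sum(wi * (yi - rv) ** 2 for yi, wi in right))
            if err < best_err:
                best_err, best = err, (j, t, lv, rv)
    return best


def predict_stump(stump, x):
    j, t, lv, rv = stump
    return lv if x[j] <= t else rv


def fit_adaboost_r2(X, y, n_estimators=12):
    n = len(y)
    w = [1.0 / n] * n
    stumps, alphas = [], []
    for _ in range(n_estimators):
        stump = fit_stump(X, y, w)
        err = [abs(yi - predict_stump(stump, xi)) for xi, yi in zip(X, y)]
        d = max(err)
        if d == 0.0:  # perfect fit on the training data: keep it and stop
            stumps.append(stump)
            alphas.append(1.0)
            break
        loss = [e / d for e in err]  # linear loss in [0, 1]
        avg_loss = sum(wi * li for wi, li in zip(w, loss))
        if avg_loss >= 0.5:  # learner too weak: stop boosting
            if not stumps:  # fallback so the model is never empty
                stumps.append(stump)
                alphas.append(1.0)
            break
        beta = avg_loss / (1.0 - avg_loss)
        stumps.append(stump)
        alphas.append(math.log(1.0 / beta))  # learner confidence
        # Small-loss (well-predicted) points are down-weighted the most.
        w = [wi * beta ** (1.0 - li) for wi, li in zip(w, loss)]
        total = sum(w)
        w = [wi / total for wi in w]
    return stumps, alphas


def predict_adaboost_r2(model, x):
    """Weighted median of the stump estimates (weights = log(1/beta))."""
    stumps, alphas = model
    pairs = sorted(zip((predict_stump(s, x) for s in stumps), alphas))
    half = 0.5 * sum(alphas)
    cumulative = 0.0
    for pred, alpha in pairs:
        cumulative += alpha
        if cumulative >= half:
            return pred
    return pairs[-1][0]
```

The weighted median (rather than a weighted mean) is the combination rule of AdaBoost.R2; it makes the ensemble less sensitive to a single badly fitted learner.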

One of the main advantages of AdaBoost is its ability to boost the performance of weak learners while maintaining simplicity. Because it combines multiple weak learners rather than building a single complex model, AdaBoost can resist overfitting when appropriately tuned. Its capacity to focus on the hardest-to-classify samples allows it to achieve high accuracy on both binary and multiclass classification tasks63,64,65.

K-nearest neighbors

The K-Nearest Neighbors (KNN) algorithm is an intuitive and effective non-parametric approach frequently applied to classification and regression problems. It operates on the principle that data points with similar features are usually located near each other in the feature space. To generate an estimate, the algorithm measures the distance between the query point and all other points in the dataset, commonly using the Euclidean distance, and identifies the k closest neighbors. In classification tasks, the output is the most frequent class among the k nearest neighbors, whereas in regression it is the average value of these neighbors. Because KNN requires no explicit training phase and makes estimates directly from the stored data, it is referred to as a “lazy learning” algorithm66,67,68.
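The KNN regression rule above (Euclidean distance, average of the k nearest targets) can be sketched as follows. `knn_predict` is a hypothetical helper, and ties in distance are broken by sort order, which is an arbitrary choice.

```python
import math


def knn_predict(X_train, y_train, x, k=2):
    """Average the targets of the k training points closest to x (Euclidean)."""
    dists = sorted((math.dist(row, x), yi) for row, yi in zip(X_train, y_train))
    nearest = dists[:k]
    return sum(yi for _, yi in nearest) / len(nearest)
```

Since nothing is fitted in advance, the whole training set is scanned for every query, which is cheap for a dataset of a few hundred points.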

A key advantage of KNN lies in its simplicity and straightforward implementation. Unlike many other methods, it does not require any assumptions about the data distribution, which makes it highly flexible and well-suited for managing complex, non-linear patterns within the data. KNN can work effectively on both numerical and categorical datasets, and its performance improves with larger datasets and appropriately chosen k. Another key benefit is that KNN adapts well to multi-class classification problems without requiring extensive modifications. Its intuitive nature makes it widely used in real-world applications such as recommendation systems, anomaly detection, and pattern recognition tasks like image classification69,70.

Random forest

Random forest (RF) is a machine learning ensemble technique that improves estimation reliability and accuracy by aggregating the results of multiple decision trees. Developed by Leo Breiman, it works by constructing a “forest” of multiple decision trees, each trained on a random subset of the data using a technique called bagging (Bootstrap Aggregating). At each node where a split occurs in the tree, a randomly selected subset of features is evaluated, which introduces additional diversity among the trees. The final output is obtained by combining the estimations of all individual trees, using majority voting for classification tasks or averaging for regression. This ensemble strategy minimizes the likelihood of overfitting, a frequent problem with single decision trees, and improves the model’s ability to generalize to new, unseen data71,72,73.
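A compact random-forest regressor combining the two sources of randomness described above, a bootstrap sample per tree and a random feature subset at each split, might look like this. It is a sketch under stated assumptions: trees use squared-error splits, tree outputs are averaged, and the defaults (11 trees, two candidate features per split, depth 6) are illustrative; the function names are hypothetical.

```python
import random
from statistics import fmean


def _sse(v):
    if not v:
        return 0.0
    m = fmean(v)
    return sum((a - m) ** 2 for a in v)


def _grow(X, y, rng, max_features, depth, max_depth):
    """Grow one tree; internal nodes are (feature, threshold, left, right)."""
    if depth >= max_depth or len(set(y)) == 1:
        return fmean(y)
    n_feat = len(X[0])
    # Random subset of candidate features, redrawn at every node.
    feats = rng.sample(range(n_feat), min(max_features, n_feat))
    best = None
    for j in feats:
        vals = sorted(set(r[j] for r in X))
        for lo, hi in zip(vals, vals[1:]):
            t = (lo + hi) / 2
            left = [yi for r, yi in zip(X, y) if r[j] <= t]
            right = [yi for r, yi in zip(X, y) if r[j] > t]
            err = _sse(left) + _sse(right)
            if best is None or err < best[0]:
                best = (err, j, t)
    if best is None:
        return fmean(y)
    _, j, t = best
    L = [i for i, r in enumerate(X) if r[j] <= t]
    R = [i for i, r in enumerate(X) if r[j] > t]
    return (j, t,
            _grow([X[i] for i in L], [y[i] for i in L], rng, max_features, depth + 1, max_depth),
            _grow([X[i] for i in R], [y[i] for i in R], rng, max_features, depth + 1, max_depth))


def _predict(node, x):
    while isinstance(node, tuple):
        j, t, left, right = node
        node = left if x[j] <= t else right
    return node


def fit_forest(X, y, n_trees=11, max_features=2, max_depth=6, seed=0):
    rng = random.Random(seed)
    n = len(y)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap: sample with replacement
        forest.append(_grow([X[i] for i in idx], [y[i] for i in idx],
                            rng, max_features, 0, max_depth))
    return forest


def predict_forest(forest, x):
    """Regression output: mean of the individual tree estimates."""
    return fmean(_predict(tree, x) for tree in forest)
```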

One of the principal advantages of Random Forest is its capability to deliver high accuracy while maintaining robustness. By combining estimates from multiple trees, the model mitigates the impact of noisy data, outliers, or overfitting that could compromise the performance of a single tree. Additionally, Random Forest is highly versatile, capable of handling both classification and regression tasks, as well as mixed datasets with numerical and categorical features. Moreover, it exhibits excellent generalization capability, making it widely used in diverse fields such as finance (credit risk estimation), healthcare (disease diagnosis), and environmental sciences (climate modeling). By combining decision trees with ensemble learning techniques, Random Forest strikes a favorable balance between accuracy, robustness, and computational efficiency, making it a reliable tool for solving complex real-world problems74,75.

Ensemble learning

Ensemble learning (EL) is a methodology in machine learning that integrates several individual models, often called “base learners” or “weak learners,” to produce a more accurate and reliable predictive model. The central idea is that while a single model may struggle with certain aspects of a dataset, combining the forecasts of numerous models can diminish errors and enhance generalization. Ensemble methods achieve this by leveraging the diversity among base models to minimize bias, variance, and noise. The primary techniques used in ensemble learning are boosting (like AdaBoost), bagging (such as Random Forest), and stacking, where multiple models contribute to the final estimation through averaging, majority voting, or a meta-model76,77.
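The averaging form of ensemble learning can be sketched as a function that accepts any callables mapping an input vector to an estimate (for instance, the KNN, DT, RF, and AdaBoost regressors). Whether this study's EL model used plain or weighted averaging is not stated, so both are shown as an assumption; `ensemble_predict` is a hypothetical name.

```python
from statistics import fmean
from typing import Callable, Optional, Sequence

Predictor = Callable[[Sequence[float]], float]


def ensemble_predict(models: Sequence[Predictor], x: Sequence[float],
                     weights: Optional[Sequence[float]] = None) -> float:
    """Combine base-learner estimates by plain or weighted averaging."""
    preds = [m(x) for m in models]
    if weights is None:
        return fmean(preds)
    return sum(w * p for w, p in zip(weights, preds)) / sum(weights)
```

For example, three base models returning 1, 2, and 6 give 3.0 with plain averaging and 3.75 with weights [1, 1, 2].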

One of the main advantages of ensemble learning is its ability to improve accuracy and robustness significantly over single models. By combining outputs from multiple learners, ensemble techniques reduce the risk of overfitting, particularly on noisy or complex datasets. For example, bagging (Bootstrap Aggregating) trains models independently on randomly sampled subsets of the data, which reduces variance and enhances stability78,79.

Data analysis and models’ evaluation metrics

To construct the intelligent models in this study, data were sourced from previously published research that experimentally measured CO2 solubility in PEGs of different molar masses at various temperatures and pressures80,81,82,83,84,85. The resulting dataset consists of 164 data points; its statistical parameters (minimum, maximum, median, mode, kurtosis, standard deviation, skewness, and mean) are presented in Table 1.

To assess the estimation performance of each developed model, various indices have been computed for comparison. These indices include the mean squared error (MSE), the coefficient of determination (R2), the relative error percent (RE%), the absolute relative error percent (ARE%), and the average absolute relative error percent (AARE%); their definitions are given in86,87,88.
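For concreteness, the sketch below uses the conventional definitions, which are assumed here to match those in the cited references: MSE is the mean squared residual, R2 = 1 - SS_res/SS_tot, RE% = 100 (estimated - experimental)/experimental, ARE% = |RE%|, and AARE% is the mean of ARE% over all data points. The function name `evaluation_metrics` is hypothetical.

```python
from statistics import fmean


def evaluation_metrics(y_true, y_pred):
    """MSE, R2, RE% and AARE% for paired experimental/estimated values."""
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mse = fmean(r * r for r in residuals)
    mean_true = fmean(y_true)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - sum(r * r for r in residuals) / ss_tot
    re_pct = [100.0 * (p - t) / t for t, p in zip(y_true, y_pred)]  # RE%, needs t != 0
    are_pct = [abs(e) for e in re_pct]                               # ARE%
    aare_pct = fmean(are_pct)                                        # AARE%
    return {"MSE": mse, "R2": r2, "RE%": re_pct, "AARE%": aare_pct}
```

Because RE% divides by the experimental value, AARE% can become large for very small solubilities even when MSE is tiny, which is worth keeping in mind when the two metrics are read together.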

For the development of predictive models, the input features are PEG molecular weight, pressure, and temperature, whereas CO2 solubility in PEG serves as the output variable. The dataset of 164 data points is divided such that 90% is used for training and validation through five-fold cross-validation, and the remaining 10% is reserved for testing the models’ performance. To minimize the effect of differing scales among the variables, all input factors and the output variable are normalized beforehand according to the following simple equation89:

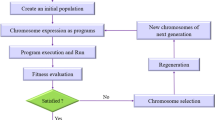

Here, n is the actual data point, n_min and n_max are the minimum and maximum values in the dataset, respectively, and n_norm is the normalized data point. K-fold cross-validation is a methodology that uses the entire training dataset for repeated evaluations to obtain a more reliable estimate of model performance. The dataset is divided into K equal segments (folds); each segment is used once as the validation set, while the remaining K − 1 segments are used for training. The outcomes of all K validation iterations are combined into a single performance estimate, reducing the bias associated with a single random data split. This approach also helps to limit overfitting during the training of data-driven models. A visual representation of the K-fold cross-validation algorithm90,91 is shown in Fig. 1. In this work, five-fold cross-validation was performed during the training phase of each machine learning model.
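The preprocessing pipeline described above (min-max normalization, a 90/10 hold-out split, then five-fold cross-validation on the training portion) can be sketched with the standard library. The random shuffling, the seed, and rounding 10% of 164 points to 16 test points are assumptions for illustration; the function names are hypothetical. When the training-set size is not divisible by five, the interleaved folds differ in size by at most one.

```python
import random


def min_max_normalize(values):
    """n_norm = (n - n_min) / (n_max - n_min), applied to one column."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def train_test_split_indices(n, test_fraction=0.1, seed=0):
    """Shuffle 0..n-1 and hold out a fraction for final testing."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_fraction)
    return idx[n_test:], idx[:n_test]


def k_fold_indices(indices, k=5):
    """Yield (train, validation) lists; every index validates exactly once."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f_i, fold in enumerate(folds) if f_i != i for j in fold]
        yield train, folds[i]
```

With 164 points this gives 148 training/validation indices and 16 test indices; the test indices never enter the cross-validation loop.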

Results and discussion

Outlier detection

The performance and reliability of the developed models depend strongly on the quality of the data used to build them. To assess the reliability of the data, the Leverage approach is used. In this method, the Hat matrix is expressed as92,93:

$$H = X\left(X^{\mathsf{T}}X\right)^{-1}X^{\mathsf{T}}$$

Here, X is the m × n design matrix, where m is the total number of data points and n is the number of input variables, which equals 3 in this study. The Williams plot displays the standardized residuals against the Hat values, i.e., the diagonal elements of the Hat matrix. This plot is particularly useful for identifying outliers, and the warning leverage is calculated using88,94:

$$H^{*} = \frac{3(n+1)}{m}$$

In this work, the warning leverage is approximately 0.073, and the acceptable range of standardized residuals is set to −3 to +3. The Williams plot in Fig. 2 highlights suspected outliers: the vertical line marks the warning leverage, while the two horizontal lines mark the standardized residual limits. Data points falling within these boundaries are regarded as valid and trustworthy. As illustrated in Fig. 2, 12 data points are flagged as suspected. However, to improve the generalizability of the developed models, all data points are retained in model development.
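The leverage computation can be sketched as below: it obtains the diagonal of the Hat matrix without forming the full m × m matrix, computes the warning leverage 3(n + 1)/m, and standardizes residuals. Defining standardized residuals as residuals centred on their mean and divided by their sample standard deviation is an assumption, since several variants exist; the helper names are hypothetical. With m = 164 and n = 3, the warning leverage is 12/164 ≈ 0.073, consistent with the value reported above.

```python
def _inverse(A):
    """Gauss-Jordan inverse with partial pivoting (small dense matrices)."""
    n = len(A)
    M = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if abs(M[p][c]) < 1e-12:
            raise ValueError("X^T X is singular")
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [v / piv for v in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [a - f * b for a, b in zip(M[r], M[c])]
    return [row[n:] for row in M]


def hat_diagonal(X):
    """h_ii = x_i^T (X^T X)^-1 x_i for every row x_i of the m x n matrix X."""
    n = len(X[0])
    XtX = [[sum(row[a] * row[b] for row in X) for b in range(n)] for a in range(n)]
    inv = _inverse(XtX)
    return [sum(row[a] * inv[a][b] * row[b] for a in range(n) for b in range(n))
            for row in X]


def warning_leverage(m, n):
    return 3.0 * (n + 1) / m


def standardized_residuals(y_true, y_pred):
    r = [t - p for t, p in zip(y_true, y_pred)]
    mean = sum(r) / len(r)
    sd = (sum((v - mean) ** 2 for v in r) / (len(r) - 1)) ** 0.5
    return [(v - mean) / sd for v in r]
```

A point would then be flagged as suspected when its Hat value exceeds the warning leverage or its standardized residual falls outside the −3 to +3 band.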

Sensitivity study

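In this section, the effect of each input parameter (temperature, pressure, and PEG molecular weight) on the output variable, CO2 solubility in PEG, is analyzed. Sensitivity analysis is used to quantify the individual contribution and influence of these parameters88,95,96,97,98. This approach entails determining a relevancy index for every feature, computed using the following equation:

$$r(I_k, Y) = \frac{\sum_{i=1}^{m}\left(I_{k,i} - \bar{I}_k\right)\left(Y_i - \bar{Y}\right)}{\sqrt{\sum_{i=1}^{m}\left(I_{k,i} - \bar{I}_k\right)^{2}\sum_{i=1}^{m}\left(Y_i - \bar{Y}\right)^{2}}}$$

where $I_{k,i}$ is the i-th value of the k-th input parameter, $\bar{I}_k$ is its mean, $Y_i$ and $\bar{Y}$ are the i-th value and the mean of the output, and m is the number of data points.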

The relevancy factor ranges from −1 to 1, and two points should be noted. First, positive values indicate a direct relationship with the output variable, whereas negative values indicate an inverse relationship. Second, the larger the absolute value of the index, the stronger the parameter's effect on the output. Figure 3 presents the relevancy indices calculated for each input parameter. Both pressure and PEG molecular weight have positive relevancy factors, suggesting a direct relationship with CO2 solubility in PEG. On the other hand, temperature has a negative relevancy factor, indicating an inverse relationship with solubility. Among the parameters, pressure has the highest influence, whereas temperature has the least influence on CO2 solubility in PEG.
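Assuming the relevancy factor takes the usual Pearson-type form (the covariance between one input column and the output, normalized by both spreads), it can be computed as follows; `relevancy_factor` is a hypothetical name.

```python
from math import sqrt
from statistics import fmean


def relevancy_factor(x, y):
    """Pearson-type relevancy factor between one input column x and output y."""
    mx, my = fmean(x), fmean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return num / den
```

A perfectly increasing relationship returns 1 and a perfectly decreasing one returns −1, which matches the sign interpretation used for pressure, PEG molar mass, and temperature.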

Obtaining models’ hyperparameters

The training and validation subsets are used to identify the optimal parameters and hyperparameters of each algorithm. For the DT model, the optimal max-depth hyperparameter is 6, as shown in Fig. 4. For the AdaBoost model, the optimal number of estimators is 12, as shown in Fig. 5. Similarly, Fig. 6 indicates that the optimal number of estimators for the RF model is 11. For the KNN model, the value of K is determined to be 2, as illustrated in Fig. 7. It should be noted that the KNN, DT, RF, and AdaBoost models, with their respective optimized hyperparameters, serve as the components of the EL framework.
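As an illustration of how one hyperparameter can be chosen from cross-validated error, the sketch below selects K for a KNN regressor by minimizing the mean five-fold validation MSE. The interleaved fold assignment, the candidate range 1 to 10, and the function names are assumptions; the same loop structure would apply to max-depth or the number of estimators.

```python
import math
from statistics import fmean


def knn_predict(X_train, y_train, x, k):
    nearest = sorted((math.dist(r, x), t) for r, t in zip(X_train, y_train))[:k]
    return fmean(t for _, t in nearest)


def cv_mse_knn(X, y, k, n_folds=5):
    """Mean validation MSE over interleaved folds (each index validates once)."""
    n = len(y)
    fold_errors = []
    for f in range(n_folds):
        val = set(range(f, n, n_folds))
        X_tr = [X[i] for i in range(n) if i not in val]
        y_tr = [y[i] for i in range(n) if i not in val]
        sq = [(y[i] - knn_predict(X_tr, y_tr, X[i], k)) ** 2 for i in sorted(val)]
        fold_errors.append(fmean(sq))
    return fmean(fold_errors)


def select_k(X, y, candidates=range(1, 11), n_folds=5):
    scores = {k: cv_mse_knn(X, y, k, n_folds) for k in candidates}
    return min(scores, key=scores.get), scores
```

On toy data X = [[i] for i in range(20)] with y equal to i, this selects K = 2, because averaging the two immediate neighbours reproduces the linear trend exactly at interior points.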

Performance of the developed models

Table 2 presents the evaluation metrics for the training, test, and total datasets, showing the predictive performance of the data-driven models for CO2 solubility in PEG. To measure the effectiveness of the proposed soft computing techniques, three evaluation metrics are employed: R-squared, mean squared error (MSE), and average absolute relative error percent (AARE%). According to these metrics, the DT model estimates solubility most accurately, as it yields the lowest MSE and AARE% and the highest R-squared on the unseen test data. Note that AdaBoost and EL show some degree of overfitting, indicated by the considerable difference between their training and test MSE values, even though k-fold cross-validation was used during training. Therefore, these two models are considered unreliable, although their training and test indicators appear impressive.

This research employs two visualization methods, namely crossplots and relative error plots, to evaluate the accuracy of the machine learning algorithms. Figure 8 compares the actual and estimated solubility values for the models applied here. For the DT model, the points cluster closely around the bisector line, indicating a strong correlation between the observed and estimated results. The equations of the lines fitted to these data points are nearly identical to the y = x line, which confirms the model’s effectiveness in estimating solubility. Furthermore, the scatter plots of relative errors in Fig. 9 show that the errors of the DT model are tightly concentrated around the x-axis, emphasizing its high accuracy. These visualizations provide strong evidence of close agreement between the actual solubility values and the estimates generated by the DT model. The main limitation of the developed DT model is that it is applicable only within the ranges of the input parameters from which it was developed.
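The fitted lines on the crossplots can be reproduced with an ordinary least-squares fit of estimated against actual values; a slope near 1 and an intercept near 0 indicate agreement with the y = x line. A minimal sketch, with a hypothetical function name:

```python
from statistics import fmean


def fit_line(actual, estimated):
    """Least-squares slope and intercept of estimated = slope * actual + intercept."""
    mx, my = fmean(actual), fmean(estimated)
    sxx = sum((a - mx) ** 2 for a in actual)
    sxy = sum((a - mx) * (e - my) for a, e in zip(actual, estimated))
    slope = sxy / sxx
    return slope, my - slope * mx
```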

Conclusions

This study aimed to develop predictive models using several machine-learning techniques, namely DT, RF, KNN, AdaBoost, and EL, to estimate CO2 solubility in PEG under a variety of laboratory conditions. The data used to train and validate the models were collected from the existing literature, and an outlier detection approach was applied beforehand to identify irregular data points. Furthermore, sensitivity analysis was carried out to assess the relative contribution of each input parameter to the output variable. The findings demonstrated that the DT model outperforms the other approaches, attaining the highest R-squared (i.e., 0.801 and 0.991 for test and train, respectively) and the smallest error metrics (MSE: 0.0009 and AARE%: 22.58 for test data points). Within the range of conditions covered by its training data, the DT model is an accurate, reliable, and user-friendly tool for estimating CO2 solubility in PEG, eliminating the need for experimental procedures, which are typically costly, labor-intensive, and time-consuming. Furthermore, pressure and PEG molar mass were observed to have a direct effect on solubility, whereas temperature exhibits an inverse relationship with it.

Data availability

Data is accessible in the supplementary material.

References

D’souza, A. A. & Shegokar, R. Polyethylene glycol (PEG): A versatile polymer for pharmaceutical applications. Expert Opin. Drug Deliv. 13(9), 1257–1275. https://doi.org/10.1080/17425247.2016.1182485 (2016).

Chen, J., Spear, S. K., Huddleston, J. G. & Rogers, R. D. Polyethylene glycol and solutions of polyethylene glycol as green reaction media. Green Chem. 7(2), 64–82. https://doi.org/10.1039/b413546f (2005).

Zhang, T. et al. Ultrahigh-performance fiber-supported iron-based ionic liquid for synthesizing 3,4-dihydropyrimidin-2-(1H)-ones in a cleaner manner. Langmuir 40(18), 9579–9591. https://doi.org/10.1021/acs.langmuir.4c00332 (2024).

Wang, L., Hu, H. & Ko, C.-C. Osteoclast-driven polydopamine-to-dopamine release: An upgrade patch for polydopamine-functionalized tissue engineering scaffolds. J. Funct. Biomater. 15(8), 211. https://doi.org/10.3390/jfb15080211 (2024).

Arakawa, T. & Timasheff, S. N. Mechanism of polyethylene glycol interaction with proteins. Biochemistry 24(24), 6756–6762. https://doi.org/10.1021/bi00345a005 (1985).

Harris, J. M. Laboratory synthesis of polyethylene glycol derivatives. J. Macromol. Sci. Rev. Macromol. Chem. Phys. 25(3), 325–373. https://doi.org/10.1080/07366578508081960 (1985).

Wolosewick, J. J. The application of polyethylene glycol (PEG) to electron microscopy. J. Cell Biol. 86(2), 675–761. https://doi.org/10.1083/jcb.86.2.675 (1980).

Wu, H. et al. pH-sensitive phosphorescence in penicillamine-coated Au22 nanoclusters: Theoretical and experimental insights. Chem. Eng. J. 495, 153608. https://doi.org/10.1016/j.cej.2024.153608 (2024).

Hutanu, D., Frishberg, M. D., Guo, L. & Darie, C. C. Recent applications of polyethylene glycols (PEGs) and PEG derivatives. Mod. Chem. Appl. 2(2), 1–6. https://doi.org/10.4172/2329-6798.1000132 (2014).

Parveen, S. & Sahoo, S. K. Nanomedicine: Clinical applications of polyethylene glycol conjugated proteins and drugs. Clin. Pharmacokinet. 45, 965–988. https://doi.org/10.2165/00003088-200645100-00002 (2006).

Peng, Z., Ji, C., Zhou, Y., Zhao, T. & Leblanc, R. M. Polyethylene glycol (PEG) derived carbon dots: Preparation and applications. Appl. Mater. Today 20, 100677. https://doi.org/10.1016/j.apmt.2020.100677 (2020).

Gaballa, S. A., Naguib, Y., Mady, F. M. & Khaled, K. A. Polyethylene glycol: Properties, applications, and challenges. J. Adv. Biomed. Pharm. Sci. 7, 26–36. https://doi.org/10.21608/jabps.2023.241685.1205 (2023).

Hoffmann, M. M. Polyethylene glycol as a green chemical solvent. Curr. Opin. Colloid Interface Sci. 57, 101537. https://doi.org/10.1016/j.cocis.2021.101537 (2022).

Sodeifian, G., Razmimanesh, F. & Sajadian, S. A. Solubility measurement of a chemotherapeutic agent (Imatinib mesylate) in supercritical carbon dioxide: Assessment of new empirical model. J. Supercrit. Fluids 146, 89–99. https://doi.org/10.1016/j.supflu.2019.01.006 (2019).

Sodeifian, G. & Sajadian, S. A. Investigation of essential oil extraction and antioxidant activity of Echinophora platyloba DC. using supercritical carbon dioxide. J. Supercrit. Fluids 121, 52–62. https://doi.org/10.1016/j.supflu.2016.11.014 (2017).

Sodeifian, G. H., Azizi, J. & Ghoreishi, S. M. Response surface optimization of Smyrnium cordifolium Boiss (SCB) oil extraction via supercritical carbon dioxide. J. Supercrit. Fluids 95, 1–7. https://doi.org/10.1016/j.supflu.2014.07.023 (2014).

Sodeifian, G., Ardestani, N. S., Sajadian, S. A. & Ghorbandoost, S. Application of supercritical carbon dioxide to extract essential oil from Cleome coluteoides Boiss: Experimental, response surface and grey wolf optimization methodology. J. Supercrit. Fluids 114, 55–63. https://doi.org/10.1016/j.supflu.2016.04.006 (2016).

Sodeifian, G. & Ansari, K. Optimization of Ferulago Angulata oil extraction with supercritical carbon dioxide. J. Supercrit. Fluids 57(1), 38–43. https://doi.org/10.1016/j.supflu.2011.02.002 (2011).

Sodeifian, G., Sajadian, S. A. & Ardestani, N. S. Optimization of essential oil extraction from Launaea acanthodes Boiss: Utilization of supercritical carbon dioxide and cosolvent. J. Supercrit. Fluids 116, 46–56. https://doi.org/10.1016/j.supflu.2016.05.015 (2016).

Sodeifian, G., Sajadian, S. A. & Ardestani, N. S. Experimental optimization and mathematical modeling of the supercritical fluid extraction of essential oil from Eryngium billardieri: Application of simulated annealing (SA) algorithm. J. Supercrit. Fluids 127, 146–157. https://doi.org/10.1016/j.supflu.2017.04.007 (2017).

Sodeifian, G., Sajadian, S. A. & Ardestani, N. S. Evaluation of the response surface and hybrid artificial neural network-genetic algorithm methodologies to determine extraction yield of Ferulago angulata through supercritical fluid. J. Taiwan Inst. Chem. Eng. 60, 165–173. https://doi.org/10.1016/j.jtice.2015.11.003 (2016).

Sodeifian, G., Sajadian, S. A. & Ardestani, N. S. Supercritical fluid extraction of omega-3 from Dracocephalum kotschyi seed oil: Process optimization and oil properties. J. Supercrit. Fluids 119, 139–149. https://doi.org/10.1016/j.supflu.2016.08.019 (2017).

Sodeifian, G., Ghorbandoost, S., Sajadian, S. A. & Ardestani, N. S. Extraction of oil from Pistacia khinjuk using supercritical carbon dioxide: Experimental and modeling. J. Supercrit. Fluids 110, 265–274. https://doi.org/10.1016/j.supflu.2015.12.004 (2016).

Sodeifian, G., Ardestani, N. S., Sajadian, S. A. & Moghadamian, K. Properties of Portulaca oleracea seed oil via supercritical fluid extraction: Experimental and optimization. J. Supercrit. Fluids 135, 34–44. https://doi.org/10.1016/j.supflu.2017.12.026 (2018).

Sodeifian, G. & Usefi, M. M. B. Solubility, extraction, and nanoparticles production in supercritical carbon dioxide: A mini-review. ChemBioEng Rev. 10(2), 133–166. https://doi.org/10.1002/cben.202200020 (2023).

Sodeifian, G., Nateghi, H. & Razmimanesh, F. Measurement and modeling of dapagliflozin propanediol monohydrate (an anti-diabetes medicine) solubility in supercritical CO2: Evaluation of new model. J. CO2 Util. 80, 102687. https://doi.org/10.1016/j.jcou.2024.102687 (2024).

Sodeifian, G., Sajadian, S. A., Razmimanesh, F. & Ardestani, N. S. A comprehensive comparison among four different approaches for predicting the solubility of pharmaceutical solid compounds in supercritical carbon dioxide. Korean J. Chem. Eng. 35, 2097–2116. https://doi.org/10.1007/s11814-018-0125-6 (2018).

Sodeifian, G. & Sajadian, S. A. Solubility measurement and preparation of nanoparticles of an anticancer drug (Letrozole) using rapid expansion of supercritical solutions with solid cosolvent (RESS-SC). J. Supercrit. Fluids 133, 239–252. https://doi.org/10.1016/j.supflu.2017.10.015 (2018).

Ardestani, N. S., Sodeifian, G. & Sajadian, S. A. Preparation of phthalocyanine green nano pigment using supercritical CO2 gas antisolvent (GAS): experimental and modeling. Heliyon 6(9), e04947. https://doi.org/10.1016/j.heliyon.2020.e04947 (2020).

Hazaveie, S. M., Sodeifian, G. & Ardestani, N. S. Micro and nanosizing of Tamsulosin drug via supercritical CO2 antisolvent (SAS) process. J. CO2 Util. 84, 102847. https://doi.org/10.1016/j.jcou.2024.102847 (2024).

Razmimanesh, F., Sodeifian, G. & Sajadian, S. A. An investigation into Sunitinib malate nanoparticle production by US-RESOLV method: Effect of type of polymer on dissolution rate and particle size distribution. J. Supercrit. Fluids 170, 105163. https://doi.org/10.1016/j.supflu.2021.105163 (2021).

Sodeifian, G., Sajadian, S. A. & Derakhsheshpour, R. CO2 utilization as a supercritical solvent and supercritical antisolvent in production of sertraline hydrochloride nanoparticles. J. CO2 Util. 55, 101799. https://doi.org/10.1016/j.jcou.2021.101799 (2022).

Sodeifian, G., Sajadian, S. A., Ardestani, N. S. & Razmimanesh, F. Production of loratadine drug nanoparticles using ultrasonic-assisted rapid expansion of supercritical solution into aqueous solution (US-RESSAS). J. Supercrit. Fluids 147, 241–253. https://doi.org/10.1016/j.supflu.2018.11.007 (2019).

Sodeifian, G. & Sajadian, S. A. Utilization of ultrasonic-assisted RESOLV (US-RESOLV) with polymeric stabilizers for production of amiodarone hydrochloride nanoparticles: Optimization of the process parameters. Chem. Eng. Res. Des. 142, 268–284. https://doi.org/10.1016/j.cherd.2018.12.020 (2019).

Sodeifian, G., Ardestani, N. S., Sajadian, S. A. & Panah, H. S. Experimental measurements and thermodynamic modeling of Coumarin-7 solid solubility in supercritical carbon dioxide: Production of nanoparticles via RESS method. Fluid Phase Equilib. 483, 122–143. https://doi.org/10.1016/j.fluid.2018.11.006 (2019).

Sodeifian, G., Sajadian, S. A. & Daneshyan, S. Preparation of Aprepitant nanoparticles (efficient drug for coping with the effects of cancer treatment) by rapid expansion of supercritical solution with solid cosolvent (RESS-SC). J. Supercrit. Fluids 140, 72–84. https://doi.org/10.1016/j.supflu.2018.06.009 (2018).

Ameri, A., Sodeifian, G. & Sajadian, S. A. Lansoprazole loading of polymers by supercritical carbon dioxide impregnation: Impacts of process parameters. J. Supercrit. Fluids 164, 104892. https://doi.org/10.1016/j.supflu.2020.104892 (2020).

Fathi, M., Sodeifian, G. & Sajadian, S. A. Experimental study of ketoconazole impregnation into polyvinyl pyrrolidone and hydroxyl propyl methyl cellulose using supercritical carbon dioxide: Process optimization. J. Supercrit. Fluids 188, 105674. https://doi.org/10.1016/j.supflu.2022.105674 (2022).

Sodeifian, G., Sajadian, S. A. & Honarvar, B. Mathematical modelling for extraction of oil from Dracocephalum kotschyi seeds in supercritical carbon dioxide. Nat. Prod. Res. 32(7), 795–803. https://doi.org/10.1080/14786419.2017.1361954 (2018).

Daneshyan, S. & Sodeifian, G. Synthesis of cyclic polystyrene in supercritical carbon dioxide green solvent. J. Supercrit. Fluids 188, 105679. https://doi.org/10.1016/j.supflu.2022.105679 (2022).

Weidner, E., Wiesmet, V., Knez, Ž. & Škerget, M. Phase equilibrium (solid-liquid-gas) in polyethyleneglycol-carbon dioxide systems. J. Supercrit. Fluids 10(3), 139–147. https://doi.org/10.1016/s0896-8446(97)00016-8 (1997).

Daneshvar, M., Kim, S. & Gulari, E. High-pressure phase equilibria of polyethylene glycol-carbon dioxide systems. J. Phys. Chem. 94(5), 2124–2128. https://doi.org/10.1021/j100368a071 (1990).

Zhao, Y. et al. Understanding the positive role of ionic liquids in CO2 capture by poly(ethylenimine). J. Phys. Chem. B 128(4), 1079–1090. https://doi.org/10.1021/acs.jpcb.3c06510 (2024).

Pan, X.-R. et al. The influence of carbon dioxide on fermentation products, microbial community, and functional gene in food waste fermentation with uncontrol pH. Environ. Res. 267, 120645. https://doi.org/10.1016/j.envres.2024.120645 (2025).

Zhao, H., Zhang, T., Chen, S. & Zhao, G. Hierarchical fibrous metal-organic framework/ionic liquid membranes for efficient CO2/N2 separation. Nano Lett. https://doi.org/10.1021/acs.nanolett.4c05232 (2025).

Henni, A., Tontiwachwuthikul, P. & Chakma, A. Solubilities of carbon dioxide in polyethylene glycol ethers. Can. J. Chem. Eng. 83(2), 358–361. https://doi.org/10.1002/cjce.5450830224 (2005).

Wang, Y. et al. Synthesis of 4H-pyrazolo[3,4-d]pyrimidin-4-one hydrazine derivatives as a potential inhibitor for the self-assembly of TMV particles. J. Agric. Food Chem. 72(6), 2879–2887. https://doi.org/10.1021/acs.jafc.3c05334 (2024).

Zhang, Y. et al. Bagasse-based porous flower-like MoS2/carbon composites for efficient microwave absorption. Carbon Lett. https://doi.org/10.1007/s42823-024-00832-z (2024).

Yang, Z. Q. & Zhu, H. L. Study on the effect of carbon nanotubes on the microstructure and anti-carbonation properties of cement-based materials. J. Funct. Mater. 54(08), 8217–8227. https://doi.org/10.1016/j.conbuildmat.2020.120452 (2023).

Vafaeezadeh, M. & Hashemi, M. M. Polyethylene glycol (PEG) as a green solvent for carbon–carbon bond formation reactions. J. Mol. Liq. 207, 73–79. https://doi.org/10.1016/j.molliq.2015.03.003 (2015).

Shiue, A., Yin, M.-J., Tsai, M.-H., Chang, S.-M. & Leggett, G. Carbon dioxide separation by polyethylene glycol and glutamic acid/polyvinyl alcohol composite membrane. Sustainability 13(23), 13367. https://doi.org/10.3390/su132313367 (2021).

Yang, Z.-Z., Song, Q.-W. & He, L.-N. Capture and Utilization of Carbon Dioxide with Polyethylene Glycol (Springer, Berlin, 2012).

Kealy, J. Thermophysical properties of the green solvent polyethylene glycol (2023).

Ramachandran, J. P., Antony, A., Ramakrishnan, R. M., Wallen, S. L. & Raveendran, P. CO2-solvated liquefaction of polyethylene glycol (PEG): A novel, green process for the preparation of drug-excipient composites at low temperatures. J. CO2 Util. 59, 101971. https://doi.org/10.1016/j.jcou.2022.101971 (2022).

Kwon, K.-T., Uddin, M. S., Jung, G.-W. & Chun, B.-S. Preparation of micro particles of functional pigments by gas-saturated solution process using supercritical carbon dioxide and polyethylene glycol. Korean J. Chem. Eng. 28, 2044–2049. https://doi.org/10.1007/s11814-011-0088-3 (2011).

Al Rafea, K. Solubility Measurement of Polyethylene Glycol Polymers in Supercritical Carbon Dioxide at High. MS thesis, Ryerson University (2008).

Ghorbani, H., Krasnikova, A., Ghorbani, P., Ghorbani, S., Hovhannisyan, H. S., Minasyan, A. et al. Prediction of heart disease based on robust artificial intelligence techniques. IEEE 000167–000174. https://doi.org/10.1109/cando-epe60507.2023.10417981

Suthaharan, S. Decision tree learning. In: Machine Learning Models and Algorithms for Big Data Classification: Thinking with Examples for Effective Learning 237–269. https://doi.org/10.1007/978-1-4899-7641-3_10 (2016).

Guan, D. et al. S2Match: Self-paced sampling for data-limited semi-supervised learning. Pattern Recogn. 159, 111121. https://doi.org/10.1016/j.patcog.2024.111121 (2025).

Zhu, Y. et al. Scaling graph neural networks for large-scale power systems analysis: Empirical laws for emergent abilities. IEEE Trans. Power Syst. https://doi.org/10.1109/tpwrs.2024.3437651 (2024).

Podgorelec, V., Kokol, P., Stiglic, B. & Rozman, I. Decision trees: an overview and their use in medicine. J. Med. Syst. 26, 445–463. https://doi.org/10.1023/a:1016409317640 (2002).

Sai, M. J. et al. An ensemble of light gradient boosting machine and adaptive boosting for prediction of type-2 diabetes. Int. J. Comput. Intell. Syst. 16(1), 14. https://doi.org/10.1007/s44196-023-00184-y (2023).

Ferreira, A. J. & Figueiredo, M. A. T. Boosting algorithms: A review of methods, theory, and applications. In: Ensemble Machine Learning: Methods and Applications 35–85 (2012).

Zhu, B. et al. (100)-Textured KNN-based thick film with enhanced piezoelectric property for intravascular ultrasound imaging. Appl. Phys. Lett. 106(17), 173504. https://doi.org/10.1063/1.4919387 (2015).

Sui, X., Wu, Q., Liu, J., Chen, Q. & Gu, G. A review of optical neural networks. IEEE Access 8, 70773–70783. https://doi.org/10.1109/access.2020.2987333 (2020).

Peterson, L. E. K-nearest neighbor. Scholarpedia 4(2), 1883. https://doi.org/10.4249/scholarpedia.1883 (2009).

He, Y. et al. A systematic resilience assessment framework for multi-state systems based on physics-informed neural network. Reliab. Eng. Syst. Saf. 257, 110866. https://doi.org/10.1016/j.ress.2025.110866 (2025).

Zhu, B., Wei, W., Li, Y., Yang, X., Zhou, Q. & Shung, K. K. KNN-based single crystal high frequency transducer for intravascular photoacoustic imaging. In: IEEE 1–4. https://doi.org/10.1109/ultsym.2017.8092368

Bezdek, J. C., Chuah, S. K. & Leep, D. Generalized k-nearest neighbor rules. Fuzzy Sets Syst. 18(3), 237–256. https://doi.org/10.1016/0165-0114(86)90004-7 (1986).

Shi, X., Zhang, Y., Yu, M. & Zhang, L. Revolutionizing market surveillance: customer relationship management with machine learning. PeerJ Comput. Sci. 10, e2583. https://doi.org/10.7717/peerj-cs.2583 (2024).

Breiman, L. Random forests. Mach. Learn. 45, 5–32. https://doi.org/10.1023/a:1010933404324 (2001).

Wang, L., Liu, G., Wang, G. & Zhang, K. M-PINN: A mesh-based physics-informed neural network for linear elastic problems in solid mechanics. Int. J. Numer. Methods Eng. 125(9), e7444. https://doi.org/10.1002/nme.7444 (2024).

Li, X., Hu, C., Liu, H., Shi, X. & Peng, J. Data-driven pressure estimation and optimal sensor selection for noisy turbine flow with blocked clustering strategy. Phys Fluids 36(12), 125188. https://doi.org/10.1063/5.0239759 (2024).

Cutler, A., Cutler, D. R. & Stevens, J. R. Random forests. In: Ensemble Machine Learning: Methods and Applications 157–175. https://doi.org/10.1007/978-1-4419-9326-7_5 (2012).

Wang, Z. et al. Towards cognitive intelligence-enabled product design: The evolution, state-of-the-art, and future of AI-enabled product design. J. Ind. Inf. Integr. 43, 100759. https://doi.org/10.1016/j.jii.2024.100759 (2024).

Dietterich, T. G. Ensemble learning. Handb. Brain Theory Neural Netw. 2(1), 110–125 (2002).

Deng, J., Liu, G., Wang, L., Liu, G. & Wu, X. Intelligent optimization design of squeeze casting process parameters based on neural network and improved sparrow search algorithm. J. Ind. Inf. Integr. 39, 100600. https://doi.org/10.1016/j.jii.2024.100600 (2024).

Hastie, T., Tibshirani, R. & Friedman, J. Ensemble learning. In: The Elements of Statistical Learning: Data Mining, Inference, and Prediction 605–624. https://doi.org/10.1007/978-0-387-84858-7_16 (2009).

Deng, J., Liu, G., Wang, L., Liang, J. & Dai, B. An efficient extraction method of journal-article table data for data-driven applications. Inf. Process. Manage. 62(3), 104006. https://doi.org/10.1016/j.ipm.2024.104006 (2025).

Chen, Y., Ma, C., Ji, X., Yang, Z. & Lu, X. Thermodynamic study on aqueous polyethylene glycol 200 solution and performance assessment for CO2 separation. Fluid Phase Equilib. 504, 112336. https://doi.org/10.1016/j.fluid.2019.112336 (2020).

Canci, H. High pressure solubilities and diffusion coefficients of carbon dioxide in polyethylene glycol 400 (2018). https://doi.org/10.1016/s0378-3812(99)00217-4

Huang, H. Experimental study of CO2 solubility in ionic liquids and polyethylene glycols (2015). https://doi.org/10.22541/au.159986533.36221265

Aionicesei, E., Škerget, M. & Knez, Ž. Measurement and modeling of the CO2 solubility in poly(ethylene glycol) of different molecular weights. J. Chem. Eng. Data 53(1), 185–188. https://doi.org/10.1021/je700467p (2008).

Li, J., Ye, Y., Chen, L. & Qi, Z. Solubilities of CO2 in Poly(ethylene glycols) from (303.15 to 333.15) K. J. Chem. Eng. Data 57(2), 610–616. https://doi.org/10.1021/je201197m (2012).

da Ponte, M. High pressure phase equilibria for poly(ethylene glycol)s + CO2: Experimental results and modelling. Phys. Chem. Chem. Phys. 1(23), 5369–5375. https://doi.org/10.1039/a906927e (1999).

Hasanzadeh, M. & Madani, M. Deterministic tools to predict gas assisted gravity drainage recovery factor. Energy Geosci. 5(3), 100267. https://doi.org/10.1016/j.engeos.2023.100267 (2024).

Madani, M. & Alipour, M. Gas-oil gravity drainage mechanism in fractured oil reservoirs: Surrogate model development and sensitivity analysis. Comput. Geosci. 26(5), 1323–1343. https://doi.org/10.1007/s10596-022-10161-7 (2022).

Bemani, A., Madani, M. & Kazemi, A. Machine learning-based estimation of nano-lubricants viscosity in different operating conditions. Fuel 352, 129102. https://doi.org/10.1016/j.fuel.2023.129102 (2023).

Abbasi, P., Aghdam, S. K.-Y. & Madani, M. Modeling subcritical multi-phase flow through surface chokes with new production parameters. Flow Meas. Instrum. 89, 102293. https://doi.org/10.1016/j.flowmeasinst.2022.102293 (2023).

Prusty, S., Patnaik, S. & Dash, S. K. SKCV: Stratified K-fold cross-validation on ML classifiers for predicting cervical cancer. Front. Nanotechnol. 4, 972421 (2022).

Yan, T., Shen, S.-L., Zhou, A. & Chen, X. Prediction of geological characteristics from shield operational parameters by integrating grid search and K-fold cross validation into stacking classification algorithm. J. Rock Mech. Geotech. Eng. 14(4), 1292–1303. https://doi.org/10.1016/j.jrmge.2022.03.002 (2022).

Rousseeuw, P. J. & Leroy, A. M. Robust Regression and Outlier Detection (Wiley, Hoboken, 2005).

Madani, M., Moraveji, M. K. & Sharifi, M. Modeling apparent viscosity of waxy crude oils doped with polymeric wax inhibitors. J. Petrol. Sci. Eng. 196, 108076. https://doi.org/10.1016/j.petrol.2020.108076 (2021).

Bassir, S. M. & Madani, M. Predicting asphaltene precipitation during titration of diluted crude oil with paraffin using artificial neural network (ANN). Pet. Sci. Technol. 37(24), 2397–2403. https://doi.org/10.1080/10916466.2019.1570261 (2019).

Zhang, D. et al. A multi-source dynamic temporal point process model for train delay prediction. IEEE Trans. Intell. Transp. Syst. https://doi.org/10.1109/tits.2024.3430031 (2024).

Dong, J. F., Guan, Z. W., Chai, H. K. & Wang, Q. Y. High temperature behaviour of basalt fibre-steel tube reinforced concrete columns with recycled aggregates under monotonous and fatigue loading. Constr. Build. Mater. 389, 131737. https://doi.org/10.1016/j.conbuildmat.2023.131737 (2023).

Dong, J. et al. Mechanical behavior and impact resistance of rubberized concrete enhanced by basalt fiber-epoxy resin composite. Constr. Build. Mater. 435, 136836. https://doi.org/10.1016/j.conbuildmat.2024.136836 (2024).

Bassir, S. M. & Madani, M. A new model for predicting asphaltene precipitation of diluted crude oil by implementing LSSVM-CSA algorithm. Pet. Sci. Technol. 37(22), 2252–2259. https://doi.org/10.1080/10916466.2019.1632896 (2019).

Acknowledgements

The authors are thankful to the Deanship of Research and Graduate Studies, King Khalid University, Abha, Saudi Arabia, for financially supporting this work through the Large Research Group Project under Grant no. R.G.P.2/152/46.

Author information

Contributions

F. S. and D. S. carried out the formal study. A. Y. and S. B. carried out the conceptual study. A. S. and T. K. wrote the required code. S. V., F. Y. and I. A. performed the visualization and validation. S. S. supervised the entire project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sead, F.F., Sur, D., Yadav, A. et al. Carbon dioxide solubility in polyethylene glycol polymer: an accurate intelligent estimation framework. Sci Rep 15, 13949 (2025). https://doi.org/10.1038/s41598-025-98512-z

DOI: https://doi.org/10.1038/s41598-025-98512-z