Abstract

Patients undergoing open surgical repair of abdominal aortic aneurysm (AAA) have a high risk of post-operative complications. However, there are no widely used tools to predict surgical risk in this population. We used machine learning (ML) techniques to develop automated algorithms that predict 30-day outcomes following open AAA repair. The National Surgical Quality Improvement Program targeted vascular database was used to identify patients who underwent elective, non-ruptured open AAA repair between 2011 and 2021. Input features included 35 pre-operative demographic/clinical variables. The primary outcome was 30-day major adverse cardiovascular event (MACE; composite of myocardial infarction, stroke, or death). We split our data into training (70%) and test (30%) sets. Using 10-fold cross-validation, 6 ML models were trained using pre-operative features with logistic regression as the baseline comparator. Overall, 3,620 patients were included. Thirty-day MACE occurred in 311 (8.6%) patients. The best performing prediction model was XGBoost, achieving an AUROC (95% CI) of 0.90 (0.89–0.91). Comparatively, logistic regression had an AUROC (95% CI) of 0.66 (0.64–0.68). The calibration plot showed good agreement between predicted and observed event probabilities with a Brier score of 0.03. Our automated ML algorithm can help guide risk-mitigation strategies for patients being considered for open AAA repair to improve outcomes.


Introduction

Abdominal aortic aneurysm (AAA) is a dilation of the abdominal aorta to a diameter greater than 3 cm; rupture is life-threatening, with a mortality rate of over 80%1,2. Open surgical repair significantly reduces AAA rupture risk; however, the procedure itself carries a high rate of complications3. Menard and colleagues demonstrated that adverse events occur in over 25% of patients undergoing open repair of non-ruptured, infrarenal AAA3. As a result, the Society for Vascular Surgery (SVS) AAA guidelines recommend careful assessment of surgical risk when considering patients for intervention4.

Currently, there are no standardized tools to predict adverse outcomes following open AAA repair. A systematic review of 13 risk prediction models showed significant methodological limitations and variable performance across different populations5. Additionally, tools such as the American College of Surgeons (ACS) National Surgical Quality Improvement Program (NSQIP) surgical risk calculator6 use modelling techniques that require manual input of clinical variables, deterring routine use in busy medical environments7. The ability of clinicians to predict post-operative outcomes using clinical judgment alone is suboptimal: a systematic review of 27 studies8 reported area under the receiver operating characteristic curve (AUROC) values ranging from 0.51 to 0.75. Therefore, there is an important need to develop better and more practical risk prediction tools for patients being considered for open AAA repair.

Machine learning (ML) is an evolving technology that enables computers to learn from large datasets and make accurate predictions9. This field has been driven by the explosion of electronic information combined with increasing computational power10. Previously, our group used the Vascular Quality Initiative (VQI) database to develop an ML algorithm that accurately predicts in-hospital major adverse cardiovascular events (MACE) following open AAA repair11. The primary outcome of that model was in-hospital MACE because longer-term myocardial infarction and stroke were not well captured in the VQI open AAA database11. Furthermore, VQI primarily comprises data from North American centres12. In contrast, the NSQIP database contains data from patients across ~15 countries worldwide and captures 30-day outcomes13. Therefore, an NSQIP algorithm may have advantages over a VQI model, including the ability to capture MACE beyond the initial hospitalization and greater potential for generalizability across countries13. In this study, we applied ML to the NSQIP database to predict 30-day MACE and other outcomes following elective, non-ruptured open AAA repair using pre-operative data.

Methods

Ethics

ACS NSQIP approved the experimental protocol and provided the blinded dataset. The Unity Health Toronto Research Ethics Board deemed that this study was exempt from review as the data came from a large, deidentified registry. Due to the retrospective nature of the study involving data originating from an anonymized registry, the Unity Health Toronto Research Ethics Board waived the need for obtaining informed consent. All methods were performed in accordance with the relevant guidelines and regulations, including the Declaration of Helsinki14.

Design

We conducted an ML-based prognostic study, and the findings were reported according to the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis + Artificial Intelligence (TRIPOD + AI) statement (eTable 1)15. ML methods were based on our previous work11.

Dataset

Created in 2004, the ACS NSQIP database contains demographic, clinical, and 30-day outcomes data on surgical patients across over 700 hospitals in approximately 15 countries worldwide13. Trained and certified clinical reviewers prospectively collect the information from electronic health records, and ACS regularly audits it for accuracy16. In 2011, vascular surgeons developed targeted NSQIP registries for vascular operations, which contain additional procedure-specific variables and outcomes17.

Cohort

All patients who underwent open AAA repair between 2011 and 2021 in the ACS NSQIP targeted database were included. This information was merged with the main ACS NSQIP database for a complete set of generic and procedure-specific variables and outcomes. Patients treated for ruptured or symptomatic AAA, thoracoabdominal aortic aneurysm, thromboembolic disease, dissection, and graft infection were excluded.

Features

Thirty-five pre-operative variables were used as input features for our ML models. Because ML models can handle many input features, all available variables in the NSQIP database were used as input features to maximize predictive performance. Demographic variables included age, sex, body mass index (BMI), race, ethnicity, and origin status. Comorbidities included hypertension, diabetes, smoking status, congestive heart failure (CHF), chronic obstructive pulmonary disease (COPD), end stage renal disease (ESRD) requiring dialysis, functional status, and American Society of Anesthesiologists (ASA) class. Previous procedures included open or endovascular AAA repair or open abdominal surgery. Pre-operative laboratory investigations included serum sodium, blood urea nitrogen (BUN), serum creatinine, albumin, white blood cell count, hematocrit, platelet count, international normalized ratio (INR), and partial thromboplastin time (PTT). Anatomic characteristics included AAA diameter, surgical approach, and proximal/distal AAA extent. Concomitant procedures included renal, visceral, and lower extremity revascularization. To account for the impact of advancements in surgical/anesthesia techniques over time on outcomes, the year of operation was included as an input variable. A complete list of features and definitions can be found in eTable 2.

Outcomes

The primary outcome was 30-day post-procedural MACE, defined as a composite of myocardial infarction (MI), stroke, or death. MI was defined as electrocardiogram changes indicative of acute MI (ST elevation > 1 mm in two or more contiguous leads, new left bundle branch block, or new q-wave in two or more contiguous leads), new elevation in troponin greater than 3 times the upper limit of the reference range in the setting of suspected myocardial ischemia, or physician/advanced provider diagnosis of MI. Stroke was defined as motor, sensory, or cognitive dysfunction persisting for 24 h in the setting of a suspected stroke. Death was defined as all-cause mortality.
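Because MACE is a composite, a patient with several component events (for example, MI followed by death) contributes one MACE event. This is why, in the Results, the component counts (154 MI, 28 strokes, 170 deaths) sum to more than the 311 MACE events. The following minimal sketch illustrates the "any component" rule; the class and field names are hypothetical and are not NSQIP variable names.

```python
from dataclasses import dataclass


@dataclass
class Outcomes30Day:
    """Hypothetical 30-day outcome flags for one patient (illustrative names, not NSQIP variables)."""
    myocardial_infarction: bool
    stroke: bool
    death: bool


def has_mace(o: Outcomes30Day) -> bool:
    """Composite MACE is positive if at least one component event occurred."""
    return o.myocardial_infarction or o.stroke or o.death


cohort = [
    Outcomes30Day(myocardial_infarction=False, stroke=False, death=False),
    Outcomes30Day(myocardial_infarction=True, stroke=False, death=True),  # two components, one MACE
    Outcomes30Day(myocardial_infarction=False, stroke=True, death=False),
]

# Booleans add as 0/1, so this counts every component event separately.
component_total = sum(o.myocardial_infarction + o.stroke + o.death for o in cohort)
mace_total = sum(has_mace(o) for o in cohort)
print(component_total, mace_total)  # 3 2
```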

Thirty-day post-procedural secondary outcomes included individual components of the primary outcome, any re-intervention, other morbidity, non-home discharge, and unplanned readmission. Other morbidity was defined as a composite of ischemic colitis, lower extremity ischemia requiring intervention, secondary AAA rupture, surgical site infection (SSI), wound dehiscence, pneumonia, unplanned reintubation, pulmonary embolism (PE), failure to wean from ventilator (cumulative time of ventilator-assisted respirations > 48 h), acute kidney injury (AKI; rise in creatinine of > 2 mg/dL from pre-operative value or requirement of dialysis in a patient who did not require dialysis pre-operatively), urinary tract infection (UTI), cardiac arrest, deep vein thrombosis (DVT) requiring therapy, Clostridium difficile infection, sepsis, or septic shock. Non-home discharge was defined as discharge to rehabilitation, skilled care, or other facility. These outcomes are defined by the ACS NSQIP data dictionary18.

Model development

Six ML models were trained to predict 30-day primary and secondary outcomes: Extreme Gradient Boosting (XGBoost), random forest, Naïve Bayes classifier, radial basis function (RBF) support vector machine (SVM), multilayer perceptron (MLP) artificial neural network (ANN), and logistic regression. The MLP ANN had a single hidden layer, a sigmoid activation function, and a cross-entropy loss function. We chose these models because they have previously been shown to achieve excellent performance for predicting surgical outcomes19,20,21. The baseline comparator was logistic regression, which is the most common modelling technique used in traditional risk prediction tools22.
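To make the MLP configuration concrete (one hidden layer, sigmoid activation, cross-entropy loss), the plain-Python sketch below computes a forward pass and the loss for a single patient. The weights are random placeholders and training by backpropagation is omitted. The study used the R nnet package, so this illustrates the architecture only and is not the authors' fitted model; the feature values are hypothetical.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def mlp_forward(x, w_hidden, b_hidden, w_out, b_out):
    """One hidden layer of sigmoid units feeding a single sigmoid output (event probability)."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(w_row, x)) + b)
              for w_row, b in zip(w_hidden, b_hidden)]
    return sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)


def binary_cross_entropy(y_true: int, p: float, eps: float = 1e-12) -> float:
    """Cross-entropy loss for a 0/1 label; p is clipped to avoid log(0)."""
    p = min(max(p, eps), 1 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))


random.seed(0)
n_features, n_hidden = 4, 3
w_hidden = [[random.gauss(0, 0.5) for _ in range(n_features)] for _ in range(n_hidden)]
b_hidden = [0.0] * n_hidden
w_out = [random.gauss(0, 0.5) for _ in range(n_hidden)]
b_out = 0.0

x = [0.2, -1.0, 0.5, 1.3]  # hypothetical standardized pre-operative features
p = mlp_forward(x, w_hidden, b_hidden, w_out, b_out)
loss = binary_cross_entropy(1, p)  # loss if this patient had the event
```

Training would adjust the weights to minimize the average of this loss over the training set.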

Our data were split into training and test sets in a 70:30 ratio23. We then performed 10-fold cross-validation and grid search on the training set to find optimal hyperparameters for each ML model24,25. Preliminary analysis of our data demonstrated that the primary outcome was uncommon, occurring in 311 of 3,620 (8.6%) patients in our cohort. Class balance was improved using Random Over-Sampling Examples (ROSE), a technique that uses smoothed bootstrapping to draw new samples from the feature space neighbourhood around the minority class and is a commonly used method to support predictive modelling of rare events26. The models were then evaluated on test set data and ranked based on the primary discriminatory metric of AUROC. Our best-performing model was XGBoost, with the following hyperparameters optimized for our dataset: number of rounds = 150, maximum tree depth = 3, learning rate = 0.3, gamma = 0, column sample by tree = 0.6, minimum child weight = 1, subsample = 1. eTable 3 details the process for selecting these hyperparameters. Once we identified the best-performing ML model for the primary outcome, the algorithm was further trained to predict secondary outcomes.
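The data-splitting and rebalancing steps described above can be sketched in plain Python. This is a hedged illustration, not the authors' R pipeline: `stratified_split`, `kfold`, and `rose_like_sample` are hypothetical helpers. Stratifying the 70:30 split by outcome, using a fixed Gaussian bandwidth, and drawing each class with equal probability are assumptions of this sketch (the ROSE R package estimates bandwidths from the data). The grid search itself, which refits a model for every candidate hyperparameter setting across the ten folds, is omitted.

```python
import random


def stratified_split(labels, train_frac=0.7, seed=42):
    """Split row indices into train/test sets, keeping the event rate similar in both."""
    rng = random.Random(seed)
    train, test = [], []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        cut = round(train_frac * len(idx))
        train.extend(idx[:cut])
        test.extend(idx[cut:])
    return sorted(train), sorted(test)


def kfold(indices, k=10, seed=42):
    """Yield (fit, validate) index lists for k-fold cross-validation on the training set."""
    rng = random.Random(seed)
    shuffled = list(indices)
    rng.shuffle(shuffled)
    folds = [shuffled[f::k] for f in range(k)]
    for f in range(k):
        fit = [i for g, fold in enumerate(folds) if g != f for i in fold]
        yield fit, folds[f]


def rose_like_sample(X, y, n_new, bandwidth=0.1, seed=42):
    """Simplified ROSE-style smoothed bootstrap (fixed Gaussian bandwidth is an assumption).

    Each synthetic row picks a class with equal probability, copies a random
    row of that class, and perturbs every feature with Gaussian noise.
    """
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(X, y):
        by_class.setdefault(label, []).append(row)
    classes = sorted(by_class)
    X_new, y_new = [], []
    for _ in range(n_new):
        label = rng.choice(classes)
        row = rng.choice(by_class[label])
        X_new.append([v + rng.gauss(0.0, bandwidth) for v in row])
        y_new.append(label)
    return X_new, y_new


# Cohort-sized example: 311 events among 3,620 patients.
labels = [1] * 311 + [0] * (3620 - 311)
train_idx, test_idx = stratified_split(labels)
cv_folds = list(kfold(train_idx, k=10))
```

In this sketch, synthetic rows would be generated only from the fitting rows of each fold, so the held-out data keep their original event rate.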

Statistical analysis

Baseline demographic and clinical characteristics for patients with and without 30-day MACE were summarized as mean (standard deviation) or number (proportion). Differences in characteristics between groups were assessed using independent t-tests for continuous variables and chi-square tests for categorical variables. Statistical significance was set at two-tailed p < 0.05.
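As a minimal sketch of the categorical comparison, the Pearson chi-square statistic for a 2×2 table (for example, MACE vs. no MACE by presence of a risk factor) can be computed directly. With 1 degree of freedom the statistic is the square of a standard normal variable, so the p-value follows from the complementary error function. The counts below are hypothetical. R's `chisq.test` applies Yates' continuity correction to 2×2 tables by default, so its p-values will be somewhat larger than this uncorrected version.

```python
import math


def pearson_chi_square_2x2(table):
    """Pearson chi-square test (no continuity correction) for a 2x2 table [[a, b], [c, d]]."""
    (a, b), (c, d) = table
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, chi2 = Z^2, so P(chi2 > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts: rows = MACE yes/no, columns = risk factor present/absent.
chi2, p = pearson_chi_square_2x2([[10, 20], [30, 40]])
```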

The primary metric for assessing model performance was AUROC (95% CI), a validated method to assess discriminatory ability that considers both sensitivity and specificity27. Secondary performance metrics were accuracy, sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV). To further assess model performance, we plotted a calibration curve and calculated the Brier score, the mean squared difference between predicted probabilities and observed outcomes (lower values indicate closer agreement)28. In the final model, feature importance was determined by ranking the top 10 predictors based on the variable importance score (gain), which measures the relative contribution of each covariate to the overall prediction29. To assess model robustness across populations, we performed subgroup analysis of predictive performance based on age (under vs. over 70 years), sex (male vs. female), race (White vs. non-White), ethnicity (Hispanic vs. non-Hispanic), proximal AAA extent (infrarenal vs. juxta/para/suprarenal), presence of prior open or endovascular AAA repair, and need for concomitant renal/visceral/lower extremity revascularization.
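The performance metrics above can be sketched in plain Python. This illustrates the definitions and is not the pROC/caret code the authors used. AUROC is computed through its Mann-Whitney interpretation, the Brier score as a mean squared error, and the threshold metrics at a cut-off of 0.5, which is an assumption because the classification threshold is not stated. The 95% CI for AUROC (pROC's default is the DeLong method) is not computed here, and the toy labels and probabilities are made up.

```python
def auroc(y_true, scores):
    """AUROC as the probability that a random event case outranks a random non-event."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5  # ties count as half
    return wins / (len(pos) * len(neg))


def brier_score(y_true, probs):
    """Mean squared difference between predicted probability and observed 0/1 outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, y_true)) / len(y_true)


def _ratio(num, den):
    return num / den if den else float("nan")


def threshold_metrics(y_true, probs, threshold=0.5):
    """Accuracy, sensitivity, specificity, PPV and NPV at a chosen probability cut-off."""
    tp = sum(1 for p, y in zip(probs, y_true) if p >= threshold and y == 1)
    fp = sum(1 for p, y in zip(probs, y_true) if p >= threshold and y == 0)
    fn = sum(1 for p, y in zip(probs, y_true) if p < threshold and y == 1)
    tn = sum(1 for p, y in zip(probs, y_true) if p < threshold and y == 0)
    return {
        "accuracy": _ratio(tp + tn, len(y_true)),
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
        "ppv": _ratio(tp, tp + fp),
        "npv": _ratio(tn, tn + fn),
    }


y = [0, 0, 1, 1, 0, 1]
prob = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9]
print(auroc(y, prob), brier_score(y, prob), threshold_metrics(y, prob))
```

Running the same functions separately within each subgroup (for example, patients under vs. over 70 years) gives the subgroup AUROCs.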

Based on a validated sample size calculator for clinical prediction models, to achieve a minimum AUROC of 0.8 with an outcome rate of ~8% and 35 input features, the minimum sample size required is 3,213 patients with 258 events30,31. Our cohort of 3,620 patients with 311 primary events meets this requirement. There was less than 5% missing data for variables of interest; therefore, complete-case analysis was applied, whereby only non-missing covariates for each patient were considered32. This has been demonstrated to be a valid analytical method for datasets with small amounts of missing data (< 5%) and reflects predictive modelling of real-world data, which inherently includes missing information33,34. Given the small percentage of missing data, the use of various imputation methods, including multiple imputation by chained equations and mean/mode imputation, did not change model performance. All analyses were performed in R35 (version 4.2.1) with the following packages: caret36, xgboost37, ranger38, naivebayes39, e1071 (ref. 40), nnet41, and pROC42.
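A minimal sketch of the sample size arithmetic follows, assuming the calculator cited above applies the three criteria of Riley and colleagues for binary-outcome models (as implemented, for example, in the R package pmsampsize): (i) expected shrinkage of at least 0.9, (ii) optimism in Nagelkerke R² of at most 0.05, and (iii) the overall risk estimated within ±0.05. The anticipated Cox-Snell R² is not reported. The value 0.0929 below is our own back-calculation so that criterion (i) returns the reported 3,213 patients, and should be read as illustrative only.

```python
import math


def min_sample_size(n_params, prevalence, r2_cs, shrinkage=0.9, delta=0.05, margin=0.05):
    """Minimum n and events for a binary-outcome prediction model (three-criterion sketch)."""
    # (i) expected uniform shrinkage of predictor effects of at least `shrinkage`
    n1 = n_params / ((shrinkage - 1) * math.log(1 - r2_cs / shrinkage))

    # (ii) small optimism: apparent vs adjusted Nagelkerke R^2 differ by at most `delta`
    ln_lik_null = prevalence * math.log(prevalence) + (1 - prevalence) * math.log(1 - prevalence)
    max_r2_cs = 1 - math.exp(2 * ln_lik_null)
    s_vh = r2_cs / (r2_cs + delta * max_r2_cs)
    n2 = n_params / ((s_vh - 1) * math.log(1 - r2_cs / s_vh))

    # (iii) overall outcome risk estimated within +/- `margin` (95% confidence)
    n3 = (1.96 / margin) ** 2 * prevalence * (1 - prevalence)

    n = math.ceil(max(n1, n2, n3))
    events = math.ceil(n * prevalence)
    return n, events, (math.ceil(n1), math.ceil(n2), math.ceil(n3))


# 35 candidate predictors, ~8% outcome rate; r2_cs is an illustrative, back-calculated value.
n, events, per_criterion = min_sample_size(n_params=35, prevalence=0.08, r2_cs=0.0929)
print(n, events, per_criterion)
```

Under these assumptions criterion (i) is binding, and the required number of events follows directly from the required number of patients multiplied by the 8% outcome rate.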

Conference presentation

Presented at the Society for Vascular Surgery 2024 Vascular Annual Meeting in Chicago, Illinois, United States (June 19–22, 2024).

Results

Patients and events

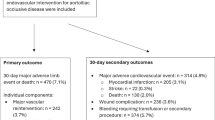

From a cohort of 5,784 patients who underwent open AAA repair in the NSQIP targeted database between 2011 and 2021, we excluded 2,164 patients for the following reasons: treatment for ruptured AAA (n = 1,421), symptomatic AAA (n = 401), type IV thoracoabdominal aortic aneurysm (n = 103), thromboembolic disease (n = 124), dissection (n = 90), and graft infection (n = 25). Overall, we included 3,620 patients. The primary outcome of 30-day MACE occurred in 311 (8.6%) patients. The 30-day secondary outcomes occurred in the following distribution: MI (n = 154 [4.3%]), stroke (n = 28 [0.8%]), death (n = 170 [4.7%]), re-intervention (n = 344 [9.5%]), other morbidity (n = 947 [26.2%]; composite of ischemic colitis (n = 120), lower extremity ischemia requiring intervention (n = 83), secondary AAA rupture (n = 11), SSI (n = 118), dehiscence (n = 48), pneumonia (n = 226), unplanned reintubation (n = 223), PE (n = 21), failure to wean from ventilator (n = 274), AKI (n = 209), UTI (n = 76), cardiac arrest (n = 81), DVT (n = 46), sepsis (n = 54), septic shock (n = 88), Clostridium difficile infection (n = 28)), non-home discharge (n = 905 [25.0%]), and unplanned readmission (n = 214 [5.9%]).
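The cohort flow and outcome rates above can be reconciled with simple arithmetic. This short sketch re-derives the included cohort from the reported exclusions and recomputes each reported percentage from the counts in the text; no new data are introduced.

```python
screened = 5784
exclusions = {
    "ruptured AAA": 1421,
    "symptomatic AAA": 401,
    "type IV thoracoabdominal aneurysm": 103,
    "thromboembolic disease": 124,
    "dissection": 90,
    "graft infection": 25,
}
included = screened - sum(exclusions.values())

outcome_counts = {
    "MACE": 311, "MI": 154, "stroke": 28, "death": 170,
    "re-intervention": 344, "other morbidity": 947,
    "non-home discharge": 905, "unplanned readmission": 214,
}
# Percentages of the included cohort, rounded to one decimal as in the text.
rates = {name: f"{100 * count / included:.1f}%" for name, count in outcome_counts.items()}
print(included)  # 3620
```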

Pre-operative demographic and clinical characteristics

Compared to patients without a primary outcome, those who developed 30-day MACE were older and more likely to have been transferred from another hospital or to reside in a nursing home. They were also more likely to have hypertension, insulin-dependent diabetes, CHF, COPD, or a previous endovascular AAA repair, to be current smokers, and to be ASA class 4 or higher. Notable differences in laboratory investigations included higher creatinine and BUN levels among patients with a primary outcome. Anatomically, patients with 30-day MACE had a larger mean AAA diameter, with a greater proportion having suprarenal, pararenal, or juxtarenal aneurysms. They were also more likely to require concomitant renal, visceral, and lower extremity revascularization (Table 1).

Model performance

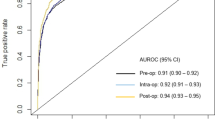

Of the 6 ML models evaluated on test set data for predicting 30-day MACE following open AAA repair, XGBoost had the best performance with an AUROC (95% CI) of 0.90 (0.89–0.91) compared to random forest [0.88 (0.87–0.89)], RBF SVM [0.86 (0.85–0.88)], Naïve Bayes [0.82 (0.81–0.83)], MLP ANN [0.77 (0.75–0.79)], and logistic regression [0.66 (0.64–0.68)]. The other performance metrics of XGBoost were the following: accuracy 0.81 (95% CI 0.80–0.82), sensitivity 0.81, specificity 0.81, PPV 0.82, and NPV 0.80 (Table 2).

For 30-day secondary outcomes, XGBoost achieved the following AUROCs (95% CI): MI [0.87 (0.86–0.88)], stroke [0.88 (0.87–0.89)], death [0.91 (0.90–0.92)], re-intervention [0.81 (0.80–0.83)], other morbidity [0.85 (0.84–0.87)], non-home discharge [0.90 (0.89–0.91)], and unplanned readmission [0.83 (0.81–0.84)] (Table 3).

The ROC curve for prediction of 30-day MACE using XGBoost is shown in Fig. 1. Our model achieved good calibration, with a Brier score of 0.03 indicating excellent agreement between predicted and observed event probabilities (Fig. 2). The top 10 predictors of 30-day MACE in our XGBoost model were the following: (1) prior endovascular AAA repair, (2) concomitant renal revascularization, (3) CHF, (4) ASA class, (5) concomitant visceral revascularization, (6) age, (7) proximal AAA extent, (8) transfer from another hospital, (9) COPD, and (10) pre-operative creatinine (Fig. 3).
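A calibration curve like the one in Fig. 2 is typically built by grouping predictions into probability bins and comparing the mean predicted probability with the observed event rate in each bin; points near the diagonal indicate good calibration. The sketch below assumes equal-width bins (the number and type of bins used for Fig. 2 are not reported), and `calibration_bins` is a hypothetical helper with made-up example data.

```python
def calibration_bins(y_true, probs, n_bins=10):
    """Return (mean predicted, observed event rate, count) for each non-empty equal-width bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, y_true):
        k = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls into the last bin
        bins[k].append((p, y))
    points = []
    for b in bins:
        if b:
            mean_pred = sum(p for p, _ in b) / len(b)
            observed = sum(y for _, y in b) / len(b)
            points.append((mean_pred, observed, len(b)))
    return points


y = [0, 0, 1, 1, 0, 1]
prob = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9]
curve = calibration_bins(y, prob, n_bins=2)
```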

Variable importance scores (gain) for the top 10 predictors of 30-day major adverse cardiovascular events (MACE) following open abdominal aortic aneurysm repair in the Extreme Gradient Boosting (XGBoost) model. Abbreviations: EVAR (endovascular aneurysm repair), CHF (congestive heart failure), AAA (abdominal aortic aneurysm), COPD (chronic obstructive pulmonary disease). Explanation of figure: patients who underwent a prior EVAR, required concomitant renal/visceral revascularization, had a more proximal AAA extent, or were transferred from another hospital likely underwent a more anatomically/technically challenging repair and were therefore more likely to suffer 30-day MACE. Furthermore, patients who were older or had more comorbidities (including CHF, COPD, or chronic kidney disease as reflected by pre-operative creatinine), which contribute to a higher ASA class, were more medically complex and therefore more likely to suffer 30-day MACE.

Subgroup analysis

Our XGBoost model performance for predicting 30-day MACE remained excellent on subgroup analysis of specific demographic and clinical populations, with the following AUROCs (95% CI): age < 70 [0.90 (0.88–0.92)] and age > 70 [0.88 (0.87–0.90)] (eFigure 1), males [0.89 (0.88–0.91)] and females [0.91 (0.89–0.92)] (eFigure 2), White patients [0.89 (0.88–0.91)] and non-White patients [0.90 (0.88–0.91)] (eFigure 3), Hispanic patients [0.89 (0.87–0.92)] and non-Hispanic patients [0.90 (0.89–0.91)] (eFigure 4), infrarenal AAA [0.90 (0.89–0.92)] and juxta/para/suprarenal AAA [0.89 (0.87–0.91)] (eFigure 5), patients with prior AAA repair [0.90 (0.88–0.91)] and without prior AAA repair [0.90 (0.89–0.91)] (eFigure 6), and patients requiring concomitant renal/visceral/lower extremity revascularization [0.89 (0.88–0.91)] and those who did not [0.89 (0.88–0.91)] (eFigure 7).

Discussion

Summary of findings

In this study, we used ACS NSQIP targeted AAA data from 2011 to 2021, comprising 3,620 patients who underwent open AAA repair, to develop ML models that accurately predict 30-day MACE with an AUROC of 0.90. Our algorithms also predicted 30-day MI, stroke, death, re-intervention, other morbidity, non-home discharge, and unplanned readmission with AUROCs ranging from 0.81 to 0.91. We showed several other key findings. First, patients who develop 30-day MACE following open AAA repair represent a high-risk population with several predictive factors at the pre-operative stage. Notably, they are older with more comorbidities, have more complex aneurysms, and are more likely to require concomitant revascularization procedures. Second, we trained 6 ML models to predict 30-day MACE using pre-operative features and showed that XGBoost achieved the best performance. Our model was well calibrated and achieved a Brier score of 0.03. On subgroup analysis based on age, sex, race, ethnicity, proximal AAA extent, prior AAA repair, and need for concomitant revascularization, our algorithms maintained robust performance. Finally, we identified the top 10 predictors of 30-day MACE in our ML models. These features can be used by clinicians to identify factors that contribute to risk predictions, thereby guiding patient selection and pre-operative optimization. For example, patients with multiple comorbidities could be further assessed and optimized through pre-operative consultations with cardiologists or internal medicine specialists to mitigate adverse events43,44. Overall, we have developed robust ML-based prognostic models with excellent predictive ability for perioperative outcomes following open AAA repair, which may help guide clinical decision-making to improve outcomes and reduce costs from complications, reinterventions, and readmissions.

Comparison to existing literature

A systematic review of 13 outcome prediction models for AAA repair was published by Lijftogt et al. (2017)5. The authors showed that most models had significant methodological and clinical limitations, including lack of calibration measures, heterogeneous datasets, and large numbers of variables requiring manual input5. After assessing multiple widely studied models, including the Cambridge POSSUM (Physiological and Operative Severity Score for the enUmeration of Mortality and morbidity), Glasgow Aneurysm Score (GAS), and Vascular Study Group of New England (VSGNE) model, the authors concluded that the best-performing algorithms were the British Aneurysm Repair (BAR) score (AUROC 0.83) and the Vascular Biochemistry and Hematology Outcome Model (VBHOM; AUROC 0.85)5. Both models were geographically limited to United Kingdom datasets5. VBHOM was trained on patients undergoing either elective or ruptured AAA repair; however, when applied to an elective open AAA repair cohort, its performance declined significantly (AUROC 0.68)5. We applied novel ML techniques to a multi-national NSQIP cohort consisting specifically of patients undergoing open repair for non-ruptured, non-symptomatic AAA and achieved an AUROC of 0.90 for the primary outcome of 30-day MACE. Our ML model also has the advantages of excellent calibration (Brier score 0.03) and automated input of variables. Additionally, most existing models focus on mortality prediction, whereas our algorithm's ability to predict secondary outcomes, including non-home discharge and unplanned readmission, allows for potential impact on both patient outcomes and health care costs45. Overall, in comparison to existing tools, our ML algorithms are methodologically robust, perform better, and consider a greater number of clinically relevant outcomes.

Using NSQIP data, Bonde et al. (2021)46 trained ML algorithms on a cohort of patients undergoing over 2,900 different procedures to predict peri-operative complications, achieving AUROCs of 0.85–0.88. Given that patients undergoing open AAA repair represent a unique population, often with a high number of vascular comorbidities, the applicability of general surgical risk prediction tools may be limited47. Elsewhere, Eslami and colleagues (2017)48 used logistic regression to develop a mortality risk prediction model for elective endovascular and open AAA repair using NSQIP data, achieving an AUROC of 0.75. By applying more advanced ML techniques to an updated NSQIP cohort and developing algorithms specific to patients undergoing open AAA repair, we achieved an AUROC of 0.90. Therefore, we demonstrate the value of building procedure-specific ML models, which can improve predictive performance. In comparison to our previously described VQI open AAA repair model11, the NSQIP algorithm predicts longer-term MACE (30-day vs. in-hospital) and achieved similar predictive performance (AUROCs ≥ 0.90). Given that NSQIP data capture information from ~15 countries13, compared to 3 for VQI12, the NSQIP model may be more generalizable across countries. This demonstrates the value of using NSQIP data to build ML models. This algorithm complements our previously described ML model for predicting 1-year mortality following endovascular AAA repair49.

Explanation of findings

There are several explanations for our findings. First, patients who develop adverse events following open AAA repair represent a high-risk group with multiple cardiovascular and anatomic risk factors, which is corroborated by previous literature50. The SVS AAA guidelines provide several recommendations regarding careful surgical risk assessment, appropriate patient/procedure selection, and pre-operative optimization for patients being considered for AAA repair4. In particular, the guidelines strongly recommend smoking cessation at least 2 weeks before aneurysm repair4, yet over 50% of patients who developed 30-day MACE in our cohort were current smokers at the time of intervention. Therefore, there are important opportunities to improve care by understanding patients' surgical risk and medically optimizing them prior to surgery51. Additionally, we demonstrate the contribution of anatomic complexity to adverse outcomes, reflected by proximal aneurysm extent, prior endovascular AAA repair, and need for concomitant renal/visceral/lower extremity revascularization, which is corroborated by previous literature52. These patients may therefore benefit from multidisciplinary vascular assessment to ensure appropriate patient/procedure selection, including consideration of surveillance and advanced endovascular therapies53. Second, our ML models likely performed better than logistic regression for several reasons. Compared to logistic regression, advanced ML techniques can better model complex, non-linear relationships between inputs and outputs54,55. This is especially important in health care data, where patient outcomes can be influenced by many factors56. Our best-performing algorithm was XGBoost, which has unique advantages, including regularization that reduces overfitting and fast computation while maintaining precision57,58,59.
Furthermore, XGBoost works well with structured (tabular) data, which may explain why it outperformed more complex algorithms such as the MLP ANN on the NSQIP database60. While advanced ML models may outperform logistic regression in predictive performance, it is important to consider their limitations, including overfitting, reliance on large datasets, and potential lack of mechanistic insight61,62. Traditional statistical techniques are better designed to characterize specific relationships between variables and outcomes, whereas ML focuses on developing high-performing prediction models, potentially at the expense of explainability63,64. Third, our model performance remained robust on subgroup analysis of specific demographic and clinical populations. This is an important finding given that algorithmic bias is a significant issue in ML studies65. We were likely able to avoid these biases due to the excellent capture of sociodemographic data by ACS NSQIP, a multi-national database that includes diverse patient populations66,67. Fourth, prior endovascular AAA repair being the most important predictor of 30-day MACE in our model suggests the importance of following device manufacturers' instructions for use to reduce the risk of complications requiring open re-intervention, such as endoleak, graft migration, and occlusion68.
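XGBoost's resistance to overfitting comes largely from the regularization built into its training objective. For reference, the objective and split-gain formulas as given in the XGBoost documentation are reproduced below in standard notation. Here ℓ is the training loss (log loss for a binary outcome), f_k is the k-th tree, T its number of leaves, w_j its leaf weights, η the learning rate, and G and H the sums of first- and second-order loss gradients over the patients falling into a candidate left (L) or right (R) child node.

```latex
\begin{aligned}
\mathcal{L} &= \sum_{i=1}^{n} \ell(y_i, \hat{y}_i) + \sum_{k=1}^{K} \Omega(f_k),
  \qquad \Omega(f) = \gamma T + \tfrac{1}{2}\lambda \sum_{j=1}^{T} w_j^{2} \\
\hat{y}_i^{(t)} &= \hat{y}_i^{(t-1)} + \eta\, f_t(\mathbf{x}_i) \\
\text{Gain} &= \tfrac{1}{2}\left[\frac{G_L^{2}}{H_L + \lambda} + \frac{G_R^{2}}{H_R + \lambda}
  - \frac{(G_L + G_R)^{2}}{H_L + H_R + \lambda}\right] - \gamma
\end{aligned}
```

The −γ term means a split must reduce the loss by more than γ to be worthwhile, and λ shrinks leaf weights toward zero. In the final model γ = 0, so complexity was controlled mainly by λ (not reported; the xgboost default is 1), the maximum depth of 3, the learning rate of 0.3, and column subsampling of 0.6; minimum child weight bounds the H of each child node. The gain-based importance shown in Fig. 3 aggregates this split gain over all splits on each feature (the R package reports it as a fraction of the total).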

Implications

Our ML models can be used to guide clinical decision-making in several ways. Pre-operatively, a patient predicted to be at high risk of an adverse outcome should be further assessed in terms of modifiable and non-modifiable factors69. Patients with significant non-modifiable risks may benefit from surveillance alone or consideration of endovascular therapy70. Specifically, those with anatomically complex aneurysms may benefit from multidisciplinary vascular assessment to optimize patient selection and procedure planning53. Those with modifiable risks, such as multiple cardiovascular comorbidities, should be referred to cardiologists or internal medicine specialists for further evaluation43,44. Pre-operative anesthesiology consultation may also be helpful for high-risk patients if not already a part of routine care71. Additionally, patients at high risk of non-home discharge or readmission should receive early support from allied health professionals to optimize safe discharge planning72. These peri-operative decisions guided by our ML models have the potential to improve outcomes and reduce costs by mitigating adverse events.

The programming code used for the development and evaluation of our ML models is publicly available through GitHub. These tools can be used by clinicians involved in the peri-operative management of patients being considered for open AAA repair. At the systems level, our models can be readily implemented by the > 700 centres globally that currently participate in ACS NSQIP. They also have potential for use at non-NSQIP sites, as the input features are commonly captured variables in the routine care of vascular surgery patients73. Given the challenges of deploying prediction models into clinical practice, consideration of implementation science principles is critical74. A major limitation of existing tools is the need for manual input of variables by clinicians, which is time-consuming and deters routine use7. Our ML models can automatically draw on a patient's prospectively collected NSQIP data to make surgical risk predictions, thereby improving practicality in busy clinical settings6. Because our models are designed to draw many features automatically from the NSQIP database, and model performance declined significantly when the number of features was reduced, we opted not to develop a simplified model with fewer input variables. Rather than a web-based application for clinician use, which is limited by the need to manually input variables, our recommended deployment plan involves integration with hospital electronic health record (EHR) systems and a user-friendly decision-support tool. Significant efforts have been made to integrate clinical registry data with EHR systems75,76,77. For example, clinical notes may be automatically extracted into structured NSQIP variables through natural language processing techniques75,76,77.
Through such integration, our open-source models could be deployed in hospital EHR systems with the support of institutional data analytics teams to provide automated risk predictions that support clinical decision-making. We advocate for dedicated health care data analytics teams at the institution level, as their significant benefits have been previously demonstrated and they can facilitate model implementation78. Through this study, we have also provided a framework for the development of robust ML models that predict open AAA repair outcomes, which individual centres can apply to their specific patient populations.

Limitations

Our study has several limitations. First, our models were developed using ACS NSQIP data. Future studies should assess whether performance generalizes to institutions that do not participate in ACS NSQIP or do not record the pre-operative features used in our models. Validation of the models using an external dataset would strengthen generalizability. However, this was not feasible for this study given differences in the definitions of variables and outcomes between databases such as NSQIP and VQI12,18. With the recent creation of the ACS/SVS Vascular Verification Program, there may be an opportunity to perform external validation of our models on a unified NSQIP/VQI dataset in the future79. Furthermore, prospective validation of our models on a real-world cohort to assess predictive performance and impact on patient outcomes would further strengthen their potential clinical utility. Second, the ACS NSQIP database captures only 30-day outcomes. Evaluation of ML algorithms on other data sources with longer follow-up would augment our understanding of long-term surgical risk. Third, we evaluated 6 ML models; however, other ML models exist. We chose these 6 ML models because they have been demonstrated to achieve the best performance for predicting surgical outcomes using structured data19. We achieved excellent performance; however, ongoing evaluation of novel ML techniques would be prudent. Fourth, surgeon experience and hospital volumes were not recorded in our ACS NSQIP dataset, which may have reduced the predictive performance of our models. Given the potential explanatory power of these variables on surgical outcomes, it would be prudent to build future ML models on datasets that capture these variables.

Conclusions

In this study, we used the ACS NSQIP database to develop robust ML models that pre-operatively predict 30-day MACE following open AAA repair with excellent performance (AUROC 0.90). Our algorithms also predicted MI, stroke, death, re-intervention, other morbidity, non-home discharge, and readmission, with AUROCs of 0.81–0.91. Notably, our models remained robust across demographic and clinical subpopulations and outperformed both existing prediction tools and logistic regression. They therefore have potential utility in the peri-operative management of patients being considered for open AAA repair, helping to mitigate adverse outcomes. Prospective validation of our ML algorithms is warranted.

Data availability

The data used for this study comes from the American College of Surgeons National Surgical Quality Improvement Program database. Access to and use of the data requires approval through an application process available at https://www.facs.org/quality-programs/data-and-registries/acs-nsqip/participant-use-data-file/. The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

Code availability

The complete code used for model development and evaluation in this project is publicly available on GitHub: https://github.com/benli12345/AAA-ML-NSQIP.

References

Shaw, P. M., Loree, J. & Gibbons, R. C. Abdominal Aortic Aneurysm. In StatPearls (StatPearls Publishing, 2022).

Reimerink, J. J., van der Laan, M. J., Koelemay, M. J., Balm, R. & Legemate, D. A. Systematic review and meta-analysis of population-based mortality from ruptured abdominal aortic aneurysm. Br. J. Surg. 100, 1405–1413 (2013).

Menard, M. T. et al. Outcome in patients at high risk after open surgical repair of abdominal aortic aneurysm. J. Vasc Surg. 37, 285–292 (2003).

Chaikof, E. L. et al. The society for vascular surgery practice guidelines on the care of patients with an abdominal aortic aneurysm. J. Vasc Surg. 67, 2–77e2 (2018).

Lijftogt, N. et al. Systematic review of mortality risk prediction models in the era of endovascular abdominal aortic aneurysm surgery. Br. J. Surg. 104, 964–976 (2017).

Bilimoria, K. Y. et al. Development and evaluation of the universal ACS NSQIP surgical risk calculator: A decision aid and informed consent tool for patients and surgeons. J. Am. Coll. Surg. 217, 833–842 (2013).

Sharma, V. et al. Adoption of clinical risk prediction tools is limited by a lack of integration with electronic health records. BMJ Health Care Inf. 28, e100253 (2021).

Dilaver, N. M., Gwilym, B. L., Preece, R., Twine, C. P. & Bosanquet, D. C. Systematic review and narrative synthesis of surgeons’ perception of postoperative outcomes and risk. BJS Open. 4, 16–26 (2020).

Baştanlar, Y. & Özuysal, M. Introduction to machine learning. Methods Mol. Biol. 1107, 105–128 (2014).

Shah, P. et al. Artificial intelligence and machine learning in clinical development: a translational perspective. NPJ Digit. Med. 2, 69 (2019).

Li, B. et al. Using machine learning to predict outcomes following open abdominal aortic aneurysm repair. J. Vasc Surg. (2023). https://doi.org/10.1016/j.jvs.2023.08.121

Society for Vascular Surgery Vascular Quality Initiative (VQI). https://www.vqi.org/

ACS NSQIP. ACS https://www.facs.org/quality-programs/data-and-registries/acs-nsqip/

World Medical Association. World medical association declaration of Helsinki: ethical principles for medical research involving human subjects. JAMA 310, 2191–2194 (2013).

Collins, G. S. et al. TRIPOD+AI statement: updated guidance for reporting clinical prediction models that use regression or machine learning methods. BMJ 385, e078378 (2024).

Shiloach, M. et al. Toward robust information: data quality and inter-rater reliability in the American college of surgeons National surgical quality improvement program. J. Am. Coll. Surg. 210, 6–16 (2010).

Cohen, M. E. et al. Optimizing ACS NSQIP modeling for evaluation of surgical quality and risk: patient risk adjustment, procedure mix adjustment, shrinkage adjustment, and surgical focus. J. Am. Coll. Surg. 217, 336–346e1 (2013).

ACS NSQIP Participant Use Data File. ACS https://www.facs.org/quality-programs/data-and-registries/acs-nsqip/participant-use-data-file/

Elfanagely, O. et al. Machine learning and surgical outcomes prediction: A systematic review. J. Surg. Res. 264, 346–361 (2021).

Bektaş, M. et al. Machine learning algorithms for predicting surgical outcomes after colorectal surgery: A systematic review. World J. Surg. https://doi.org/10.1007/s00268-022-06728-1 (2022).

Senders, J. T. et al. Machine learning and neurosurgical outcome prediction: A systematic review. World Neurosurg. 109, 476–486e1 (2018).

Shipe, M. E., Deppen, S. A., Farjah, F. & Grogan, E. L. Developing prediction models for clinical use using logistic regression: an overview. J. Thorac. Dis. 11, S574–S584 (2019).

Dobbin, K. K. & Simon, R. M. Optimally splitting cases for training and testing high dimensional classifiers. BMC Med. Genomics. 4, 31 (2011).

Jung, Y. & Hu, J. A K-fold averaging Cross-validation procedure. J. Nonparametric Stat. 27, 167–179 (2015).

Adnan, M., Alarood, A. A. S., Uddin, M. I. & Ur Rehman, I. Utilizing grid search cross-validation with adaptive boosting for augmenting performance of machine learning models. PeerJ Comput. Sci. 8, e803 (2022).

Wibowo, P. & Fatichah, C. Pruning-based oversampling technique with smoothed bootstrap resampling for imbalanced clinical dataset of Covid-19. J King Saud Univ. - Comput Inf. Sci. 34, 7830–7839 (2022).

Hajian-Tilaki, K. Receiver operating characteristic (ROC) curve analysis for medical diagnostic test evaluation. Casp. J. Intern. Med. 4, 627–635 (2013).

Redelmeier, D. A., Bloch, D. A. & Hickam, D. H. Assessing predictive accuracy: how to compare Brier scores. J. Clin. Epidemiol. 44, 1141–1146 (1991).

Loh, W. Y. & Zhou, P. Variable importance scores. J. Data Sci. 19, 569–592 (2021).

Riley, R. D. et al. Calculating the sample size required for developing a clinical prediction model. BMJ (2020). https://doi.org/10.1136/bmj.m441

Ensor, J., Martin, E. C. & Riley, R. D. pmsampsize: Calculates the Minimum Sample Size Required for Developing a Multivariable Prediction Model. (2022).

Schafer, J. L. Multiple imputation: a primer. Stat. Methods Med. Res. 8, 3–15 (1999).

Ross, R. K., Breskin, A. & Westreich, D. When is a complete-case approach to missing data valid? The importance of effect-measure modification. Am. J. Epidemiol. 189, 1583–1589 (2020).

Hughes, R. A., Heron, J., Sterne, J. A. C. & Tilling, K. Accounting for missing data in statistical analyses: multiple imputation is not always the answer. Int. J. Epidemiol. 48, 1294–1304 (2019).

R Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. (2022). https://www.R-project.org/

Kuhn, M. et al. caret: Classification and Regression Training. (2022).

Chen, T. & Guestrin, C. XGBoost: A Scalable Tree Boosting System. in Proc. 22nd ACM SIGKDD Int. Conf. Knowl. Discov. Data Min. - KDD ’16 785–794 (2016).

Wright, M. N., Wager, S. & Probst, P. ranger: A Fast Implementation of Random Forests. (2022).

naivebayes: High Performance Implementation of the Naive Bayes Algorithm, version 0.9.7 (CRAN). https://rdrr.io/cran/naivebayes/

svm function (e1071 package, version 1.7-11). RDocumentation. https://www.rdocumentation.org/packages/e1071/versions/1.7-11/topics/svm

Ripley, B. & Venables, W. nnet: Feed-Forward Neural Networks and Multinomial Log-Linear Models. (2022).

Robin, X. et al. pROC: an open-source package for R and S+ to analyze and compare ROC curves. BMC Bioinform. 12, 77 (2011).

Davis, F. M. et al. The clinical impact of cardiology consultation prior to major vascular surgery. Ann. Surg. 267, 189–195 (2018).

Rivera, R. A. et al. Preoperative medical consultation: maximizing its benefits. Am. J. Surg. 204, 787–797 (2012).

Friedman, B. & Basu, J. The rate and cost of hospital readmissions for preventable conditions. Med. Care Res. Rev. MCRR. 61, 225–240 (2004).

Bonde, A. et al. Assessing the utility of deep neural networks in predicting postoperative surgical complications: a retrospective study. Lancet Digit. Health. 3, e471–e485 (2021).

Hers, T. M. et al. Inaccurate risk assessment by the ACS NSQIP risk calculator in aortic surgery. J. Clin. Med. 10, 5426 (2021).

Eslami, M. H., Rybin, D. V., Doros, G. & Farber, A. Description of a risk predictive model of 30-day postoperative mortality after elective abdominal aortic aneurysm repair. J. Vasc Surg. 65, 65–74e2 (2017).

Li, B. et al. Machine learning to predict outcomes following endovascular abdominal aortic aneurysm repair. Br. J. Surg. (2023). https://doi.org/10.1093/bjs/znad287

Kessler, V., Klopf, J., Eilenberg, W., Neumayer, C. & Brostjan, C. AAA revisited: A comprehensive review of risk factors, management, and hallmarks of pathogenesis. Biomedicines 10, 94 (2022).

Saratzis, A. et al. Multi-Centre study on cardiovascular risk management on patients undergoing AAA surveillance. Eur. J. Vasc Endovasc Surg. Off J. Eur. Soc. Vasc Surg. 54, 116–122 (2017).

Young, Z. Z. et al. Aortic anatomic severity grade correlates with midterm mortality in patients undergoing abdominal aortic aneurysm repair. Vasc Endovascular Surg. 53, 292–296 (2019).

Drayton, D. J. et al. Multidisciplinary team decisions in management of abdominal aortic aneurysm: A service and quality evaluation. EJVES Vasc Forum. 54, 49–53 (2022).

Stoltzfus, J. C. Logistic regression: a brief primer. Acad. Emerg. Med. Off J. Soc. Acad. Emerg. Med. 18, 1099–1104 (2011).

Kia, B. et al. Nonlinear dynamics based machine learning: utilizing dynamics-based flexibility of nonlinear circuits to implement different functions. PloS One. 15, e0228534 (2020).

Chatterjee, P., Cymberknop, L. J. & Armentano, R. L. Nonlinear systems in healthcare towards intelligent disease prediction. Nonlinear Syst. - Theor Asp Recent. Appl. 1, e88163 (2019).

Ravaut, M. et al. Predicting adverse outcomes due to diabetes complications with machine learning using administrative health data. Npj Digit. Med. 4, 1–12 (2021).

Wang, R., Zhang, J., Shan, B., He, M. & Xu, J. XGBoost machine learning algorithm for prediction of outcome in aneurysmal subarachnoid hemorrhage. Neuropsychiatr Dis. Treat. 18, 659–667 (2022).

Fang, Z. G., Yang, S. Q., Lv, C. X., An, S. Y. & Wu, W. Application of a data-driven XGBoost model for the prediction of COVID-19 in the USA: a time-series study. BMJ Open. 12, e056685 (2022).

Viljanen, M., Meijerink, L., Zwakhals, L. & van de Kassteele, J. A machine learning approach to small area estimation: predicting the health, housing and well-being of the population of Netherlands. Int. J. Health Geogr. 21, 4 (2022).

Charilaou, P. & Battat, R. Machine learning models and over-fitting considerations. World J. Gastroenterol. 28, 605–607 (2022).

Sharma, A., Lysenko, A., Jia, S., Boroevich, K. A. & Tsunoda, T. Advances in AI and machine learning for predictive medicine. J. Hum. Genet. 69, 487–497 (2024).

Rajula, H. S. R., Verlato, G., Manchia, M., Antonucci, N. & Fanos, V. Comparison of conventional statistical methods with machine learning in medicine: diagnosis, drug development, and treatment. Medicina (Kaunas) 56, 455 (2020).

Lu, S. C., Swisher, C. L., Chung, C., Jaffray, D. & Sidey-Gibbons, C. On the importance of interpretable machine learning predictions to inform clinical decision making in oncology. Front. Oncol. 13, 1129380 (2023).

Gianfrancesco, M. A., Tamang, S., Yazdany, J. & Schmajuk, G. Potential biases in machine learning algorithms using electronic health record data. JAMA Intern. Med. 178, 1544–1547 (2018).

Mazmudar, A., Vitello, D., Chapman, M., Tomlinson, J. S. & Bentrem, D. J. Gender as a risk factor for adverse intraoperative and postoperative outcomes of elective pancreatectomy. J. Surg. Oncol. 115, 131–136 (2017).

Halsey, J. N., Asti, L. & Kirschner, R. E. The impact of race and ethnicity on surgical risk and outcomes following palatoplasty: an analysis of the NSQIP pediatric database. Cleft Palate-Craniofacial J. Off Publ Am. Cleft Palate-Craniofacial Assoc. 10556656221078154 (2022). https://doi.org/10.1177/10556656221078154

Hahl, T. et al. Long-term outcomes of endovascular aneurysm repair according to instructions for use adherence status. J. Vasc Surg. 76, 699–706e2 (2022).

Shaydakov, M. E. & Tuma, F. Operative risk. In StatPearls (StatPearls Publishing, 2022).

Lim, S. et al. Outcomes of endovascular abdominal aortic aneurysm repair in high-risk patients. J. Vasc Surg. 61, 862–868 (2015).

O’Connor, D. B. et al. An anaesthetic pre-operative assessment clinic reduces pre-operative inpatient stay in patients requiring major vascular surgery. Ir. J. Med. Sci. 180, 649–653 (2011).

Patel, P. R. & Bechmann, S. Discharge planning. In StatPearls (StatPearls Publishing, 2022).

Nguyen, L. L. & Barshes, N. R. Analysis of large databases in vascular surgery. J. Vasc Surg. 52, 768–774 (2010).

Northridge, M. E. & Metcalf, S. S. Enhancing implementation science by applying best principles of systems science. Health Res. Policy Syst. 14, 74 (2016).

Mao, J. et al. Combining electronic health records data from a clinical research network with registry data to examine long-term outcomes of interventions and devices: an observational cohort study. BMJ Open. 14, e085806 (2024).

Ehrenstein, V., Kharrazi, H., Lehmann, H. & Taylor, C. O. Obtaining Data From Electronic Health Records. in Tools and Technologies for Registry Interoperability, Registries for Evaluating Patient Outcomes: A User’s Guide, 3rd Edition, Addendum 2 [Internet] (Agency for Healthcare Research and Quality (US), 2019).

Williams, A., Goedicke, W., Tissera, K. A. & Mankarious, L. A. Leveraging existing tools in electronic health record systems to automate clinical registry compilation. Otolaryngol. Head Neck Surg. Off J. Am. Acad. Otolaryngol. Head Neck Surg. 162, 408–409 (2020).

Batko, K. & Ślęzak, A. The use of big data analytics in healthcare. J. Big Data. 9, 3 (2022).

ACS and SVS Launch National Quality Verification Program for Vascular Care. ACS https://www.facs.org/for-medical-professionals/news-publications/news-and-articles/press-releases/2023/acs-and-svs-launch-national-quality-verification-program-for-vascular-care/

Acknowledgements

The American College of Surgeons National Surgical Quality Improvement Program (ACS NSQIP) and the hospitals participating in the ACS NSQIP are the source of the data used herein; they have not verified, and are not responsible for, the statistical validity of the data analysis or the conclusions derived by the authors.

Funding

This research was partially funded by the Canadian Institutes of Health Research, Ontario Ministry of Health, PSI Foundation, and Schwartz Reisman Institute for Technology and Society at the University of Toronto (BL). The funding sources did not play a role in any of the following areas: design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication.

Author information

Contributions

All authors meet all four criteria: (1) Substantial contributions to the conception or design of the work or the acquisition, analysis, or interpretation of the data, (2) Drafting the work or revising it critically for important intellectual content, (3) Final approval of the completed version, and (4) Accountability for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. BL: concept, design, acquisition of data, analysis and interpretation of data, drafting the article, final approval of article. BA: concept, design, analysis and interpretation of data, revising article critically for important intellectual content, final approval of article. DB (data scientist): analysis and interpretation of data, support for model development, revising article critically for important intellectual content, final approval of article. LAO: analysis and interpretation of data, revising article critically for important intellectual content, final approval of article. MAH: analysis and interpretation of data, revising article critically for important intellectual content, final approval of article. DSL: analysis and interpretation of data, revising article critically for important intellectual content, final approval of article. DNW: analysis and interpretation of data, revising article critically for important intellectual content, final approval of article. ODR: analysis and interpretation of data, revising article critically for important intellectual content, final approval of article. CdeM: concept, design, analysis and interpretation of data, revising article critically for important intellectual content, final approval of article. MM: concept, design, analysis and interpretation of data, revising article critically for important intellectual content, final approval of article. MAO: concept, design, acquisition of data, analysis and interpretation of data, revising article critically for important intellectual content, final approval of article.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, B., Aljabri, B., Beaton, D. et al. Predicting outcomes following open abdominal aortic aneurysm repair using machine learning. Sci Rep 15, 14362 (2025). https://doi.org/10.1038/s41598-025-98573-0

DOI: https://doi.org/10.1038/s41598-025-98573-0