Abstract

There is growing support and interest in postsecondary interdisciplinary environmental education, which integrates concepts and disciplines in addition to providing varied perspectives. There is a need to assess student learning in these programs as well as rigorous evaluation of educational practices, especially of complex synthesis concepts. This work tests a text classification machine learning model as a tool to assess student systems thinking capabilities using two questions anchored by the Food-Energy-Water (FEW) Nexus phenomena by answering two questions (1) Can machine learning models be used to identify instructor-determined important concepts in student responses? (2) What do college students know about the interconnections between food, energy, and water, and how have students assimilated systems thinking into their constructed responses about FEW? Reported here is a broad range of model performances across 26 text classification models associated with two different assessment items, with model accuracy ranging from 0.755 to 0.992. Expert-like responses were infrequent in our dataset compared to responses providing simpler, incomplete explanations of the systems presented in the question. For those students moving from describing individual effects to multiple effects, their reasoning about the mechanism behind the system indicates advanced systems thinking ability. Specifically, students exhibit higher expertise in explaining changing water usage than discussing trade-offs for such changing usage. This research represents one of the first attempts to assess the links between foundational, discipline-specific concepts and systems thinking ability. These text classification approaches to scoring student FEW Nexus Constructed Responses (CR) indicate how these approaches can be used, in addition to several future research priorities for interdisciplinary, practice-based education research. Development of further complex question items using machine learning would allow evaluation of the relationship between foundational concept understanding and integration of those concepts as well as a more nuanced understanding of student comprehension of complex interdisciplinary concepts.

Similar content being viewed by others

Introduction

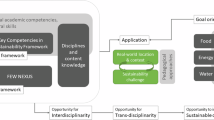

Many global problems are considered “wicked” in that they integrate complex systems that are often studied in distinct disciplines (Balint et al., 2011). To solve these 21st-century socio-ecological problems, students must instead learn cross-cutting concepts across disciplines within interdisciplinary programs. The Next Generation Science Standards (NGSS) identify crosscutting concepts as a framework to link different science disciplines, providing a means for students to link knowledge across fields to establish a cogent, scientifically-based way of interpreting the world (National Research Council, 2012; NGSS Lead States, 2013). In environmental programs within higher education, recent efforts are defining the key disciplinary ideas, concepts, practices, and skills embedded in complex meaningful learning and implementing new curricula with interdisciplinary frameworks (Global Council for Science and the Environment, n.d.; Vincent et al., 2013). Frameworks that link concepts across disciplines can include the Sustainable Development Goals (SDGs; Education for Sustainable Development), Resilience Thinking, the United Nations Principles for Responsible Management (UN-PRME), and the Food-Energy-Water Nexus (FEW Nexus) (Leah Filho et al., 2001; Martins et al., 2022). Interdisciplinary approaches to curricula and course design rely on content mastery and skill development to understand systems interactions and higher-order thinking. With this shift toward higher-level learning across interdisciplinary environmental and sustainability (IES) programs, we need new assessments that elicit complex student thinking and can be used to identify and categorize different levels of understanding, not just memorization of facts (Laverty et al., 2016; J. W. Pellegrino et al., 2013; Underwood et al., 2018).

Assessing crosscutting, interdisciplinary learning is challenging, and often constructed responses (CR) (i.e., open-ended questions) are used for assessing interdisciplinary connections because student thinking and reasoning are more explicit compared to multiple choice type questions; however, these CR assessment items are challenging and time-consuming to design and grade. One rapidly developing tool with the potential to support this kind of assessment is text classification models, which are machine learning (ML) algorithms and statistical models that learn from and analyze data patterns. Due to the challenges of assessing interdisciplinary learning, IES programs provide a useful context for education research on the application of these types of ML for studying complex CR assessment items. Further, technology such as ML may help us evaluate these complex formative assessments and provide an opportunity to improve science teaching and learning (Harrison et al., 2023). Often in science assessment, each individual model is specifically developed for each question and response set in an iterative process using human coding and model development and selection methods, making this process potentially very time-intensive (Brew and Leacock, 2013). However, once a model is constructed, it can be used to score many responses very quickly, thus addressing the labor and time-intensive aspects of evaluated CR questions to allow for big data research using those specific questions and associated models. While this trade-off between model development and model use is an important consideration, the process of model development itself can be aided by several considerations, which may speed development time and improve the validity of the final model (Rupp, 2018). Thus a well designed process for developing and evaluating both questions and models is essential, although iterations throughout the process will always be necessary.

Here, we report on a process of using human-scored responses to construct ML-based text classification models for assessing CR questions focused on the food–energy–water (FEW) Nexus. As part of this focus on the model development process, we address two research questions (1) Can machine learning models be used to identify instructor-determined important concepts in student responses? (2) What do our students know about the interconnections between food, energy, and water, and how have students assimilated “systems thinking” into their constructed responses about FEW?

Systems thinking as an example of cross-cutting concepts

Systems thinking involves understanding the interdisciplinary connections and relationships between associated components within a system, rather than simply focusing on discrete concepts (Meadows, 2008). The Global Council for Science and the Environment’s (GCSE) draft proposal for key competencies in sustainability higher education identifies systems thinking as a core skill and includes increasing complexity across scales in their definition as the foundation for strategic solution development and future thinking (Brundiers et al., 2023). This level of understanding typically falls on the higher levels of Bloom’s Taxonomy of knowledge that include categories such as “apply”, “analyze” and “evaluate” (Bloom and Krathwohl, 1956; Krathwohl, 2002). Systems thinking is a key competency in STEM education, both in discipline-specific and interdisciplinary reasoning (Blatti et al., 2019; Hmelo-Silver et al., 2007; Mambrey et al., 2020; Momsen et al., 2022; Ravi et al., 2021; Redman et al., 2021; Redman and Wiek, 2021), and is recognized as a core competency by the National Science Foundation (NSF), the National Academies of Sciences, Engineering, and Medicine (National Science Foundation, 2020), and the US Next Generation Science Standards for K12 education (NGSS Lead States, 2013). Systems thinking was recently identified as a key competency by IES educators in higher education (Vincent et al., 2013), where understanding complex natural and social systems is applied and evaluated using systems thinking (Clark and Wallace, 2015; Varela-Losada et al., 2016).

While fostering systems thinking remains challenging, many potential strategies exist to help anchor student learning. Assessment of systems thinking is challenging and typically is approached from the context of the subject matter (see Randle and Stroink, 2018; Grohs et al., 2018; Gray et al., 2019; Bustamante et al., 2021; Liu, 2023; Dugan et al., 2022 and references within), which means there is not one agreed upon definition or assessment for systems thinking. For example, Soltis and McNeil (2022) have developed a systems thinking concept inventory specific to Earth Science, but valid and reliable approaches for measuring learning gains associated with systems thinking more broadly or in other applications are currently lacking. However, within the field of interdisciplinary environmental programs, there is a widely accepted definition of systems thinking from Wiek et al. (2016) for complex problem-solving for sustainability and commonly accepted concepts associated with systems thinking from Redman and Wiek (2021) (Box 1) and the 2021 NAS report on Strengthening Sustainability Education. Assessing systems thinking can be thus understood in the context of how it is integrated within a particular concept or set of concepts.

The FEW Nexus provides a concrete concept integration framework for developing the skill of systems thinking that applies across many interdisciplinary environmental programs as it connects complex environmental processes, management, policy, and socioeconomics of FEW resources (Smajgl et al., 2016). The FEW Nexus is a coupled systems approach to research and global development that accounts for synergies and trade-offs across FEW resource systems (D’Odorico et al., 2018; Leck et al., 2015; Simpson and Jewitt, 2019). For teaching and learning contexts, the FEW Nexus provides a scaffold for incorporating systems thinking and sustainability concepts into courses and across curricula. With global resource consumption outpacing supply, the FEW Nexus is a global priority area for research (Katz et al., 2020; Simpson and Jewitt, 2019). Understanding the FEW Nexus and the global focus on FEW research and decision-making makes it an ideal concept for exploring complex systems content in introductory IES courses, as FEW resource systems are visible to learners in their daily lives. Students need to develop their systems thinking to fully grasp the importance of the FEW Nexus and how it is impacted and impacts other systems, e.g., climate change, resource scarcity (Brandstädter et al., 2012).

The need for tools to assess interdisciplinary systems thinking

Given the complexity of the relationships within the FEW Nexus and the relatively recent expansion of college-level IESs that incorporate FEW Nexus concepts, assessments that target these more advanced systems-level relationships are lacking. Assessing student conceptual understanding typically requires constructing valid and reliable tests, such as concept inventories (CIs) (Hestenes et al., 1992; Libarkin and Anderson, 2005; Libarkin and Geraghty Ward, 2011; Soltis and McNeal, 2022; Stone et al., 2003; Tornabee et al., 2016). Disciplinary CIs are traditionally used to assess learning using close-response questions (i.e., multiple choice). Existing CIs are inappropriate to assess complex skill development in IESs for two reasons: (1) IESs are interdisciplinary, and existing CIs do not capture the range of concepts typically covered in IES curricula, and (2) Close-ended questions (multiple choice) limit the ability to dissect higher level learning, such as systems thinking. An interdisciplinary, open-ended environmental CI could address these challenges; however, CR or open-ended assessments are labor-intensive to evaluate and can be very subjective for instructors to score. Artificial intelligence (AI) attempts to mimic human intelligent actions, including understanding language via Natural language processing (NLP) and classifying artifacts via ML. In the case of CIs, these approaches (NLP and ML) have been used to classify student written assessments and show promise for use with the first interdisciplinary environmental CI that enables assessment of deeper skill development (i.e., systems thinking, cause and effect, tradeoffs) while alleviating the burden of scoring CR questions. Few studies report on the use of interdisciplinary assessments in STEM (Gao et al., 2020), and this dearth of assessment tools also leads to little research about AI-based applications for such assessments (Zhai et al., 2020a, 2020b). The work presented here is a start towards developing assessments (like CIs) that use CR for more complex concepts, such as systems thinking and connecting concepts across disciplines. Here, we focus on FEW as it is a system that incorporates concept integration that connects environmental processes, management, policy, and socioeconomics of FEW resources. There is a need for education research and collaboration in the FEW Nexus, as evidenced by the recently funded National Collaborative for Research on Food, Energy, and Water Education (NC-FEW), of which author Romulo is a member. FEW concepts are commonly covered in introductory environmental courses (Horne et al., 2023), and this project will focus on IES introductory courses for this process of development.

Text classification: using machine learning processes for interdisciplinary assessment

AI has been part of computer science for a number of decades, with the goal of having computers mimic human intelligence in performing complex tasks. AI utilizes approaches from several different computational subfields in computer science depending on the intended use or task performed. NLP is a branch of computer science that is interested in how computers can identify, understand, and support human language. NLP has become foundational for many AI applications, including speech recognition, language translation, and chatbots. NLP has been incorporated into education contexts in a variety of ways, including scoring of student texts, in both summative and formative uses (McNamara and Graesser, 2011; Shermis and Burstein, 2013), intelligent agents for interactive feedback (Chi et al., 2011), and customization of curricula materials and assessments (Mitkov et al., 2006). NLP has been applied in science assessment in a variety of ways. For example, NLP coupled with ML techniques has been used to develop predictive scoring models (Nehm et al., 2012), as an approach to explore sets of student responses (Zehner et al, 2015), and to assist in developing coding rubrics (Sripathi et al., 2023). Here, we focus on using NLP as part of text classification approaches to categorize student CR to assessment items (Dogra et al., 2022). Specifically, these text based CRs are short in length but rich in disciplinary content and common in STEM assessment practices (Liu et al., 2014). Using approaches from AI, these CRs can be automatically categorized according to coding rubrics that are developed with assessment items (Zhai et al., 2021a).

Machine learning has been described as a “computer program that improves its performance at some task through experience” (Mitchell, 1997). “Experience” here refers to some information (e.g., outcomes, labels) available to the program from which it can “learn.” Much of the recent work on automated scoring of student CR has utilized supervised ML approaches, which use text representations from NLP along with assigned human codes as input for text classification models (Zhai et al., 2020b). Generally, in supervised ML, these data are used to “train” ML algorithms in order to develop a scoring (or classification) model. Once the scoring model is developed, the model can be “tested” by comparing the consistency of human and machine-assigned codes on subsets of the same (or new) data (Jordan and Mitchell, 2015; Williamson et al., 2012). Various ML scoring approaches have been used to evaluate student CRs in science; these reports cover a range of grade levels and disciplinary topics (Jescovitch et al., 2021; Liu et al., 2014; Nehm et al., 2012; Wilson et al., 2023), such as the water cycle in secondary science (Lee et al., 2021). These studies and others have identified important considerations when designing assessment items, rubrics, and text classification models for evaluating responses to science CR assessments. Using these ML approaches in automated assessment scoring, important student ideas can be recognized by machines from authentic student work, as opposed to predefined answers. This is important to identify these key ideas as actually expressed by students. Thus a collection of student responses are necessary to train the ML model and to represent the range of possible answers (Shiroda et al., 2022; Suresh and Guttag, 2021).

Methods

Overall, we follow a modified question development cycle (Urban-Lurain et al., 2015) (Fig. 1) that integrates question, rubric, and text classification model scoring as part of an integrative formative assessment development and validation process. Broadly, this approach uses linguistic feature-based NLP methods (Deane, 2006) to extract linguistic features from writing and then uses those extracted features as variables in supervised ML models that predict human raters’ scores of student writing.

Adapted from Urban—Lurain et al. (2015), each box represents a stage of the process beginning with Question Design with outputs from one stage being used in subsequent stages, as indicated by solid arrows. A predictive model in the top, right corner is the ultimate goal of the cycle, in which a machine learning model can accurately predict classifications of new responses. A dashed arrow represents possible iteration(s) of the cycle depending on the outcomes of previous stages.

In the first stage of the cycle, we begin with Question Design (top) to target student thinking about important interdisciplinary constructs. Data Collection is typically done by administering the questions online to a wide range of students within appropriate courses and levels to collect a diverse range of responses. Exploratory analysis combines automated qualitative and quantitative approaches to the student-supplied text, including NLP, to explore the data corpus. For example, we use text analysis software to extract key terms and disciplinary concepts from the responses and look for patterns and themes among ideas. These terms, concepts and themes are used to assist Rubric Development. We use rubrics, both analytic and holistic, to code for key disciplinary ideas or emergent ideas in responses. These coding rubrics are subsequently used during the Human Coding of student responses in which one or more experts assign codes or scores to student responses. During Confirmatory Analysis, we develop text classification models by extracting text features from student responses using NLP approaches. These text features are subsequently used as independent variables in statistical classification and/or ML algorithms to predict expert human coding of responses, as part of supervised ML. In this stage, the performance of the ML model is measured by comparing the machine-assigned score to the human-assigned score. Once benchmarks for sufficient performance are achieved (Williamson et al., 2012), the model is saved and used as a Predictive Model. These Predictive Models can be used to completely automate the scoring of a new set of responses, predicting how experts would categorize or score the data. Often, results from one or more stages of the cycle are used to refine the assessment question (dashed arrow), rubrics, and/or human coding. The overall process is highly iterative, with feedback from each stage informing the refinement of other components. Further, the iterative cycle allows considerations for automated scoring to be addressed throughout the cycle, providing opportunities to collect and examine valid evidence (Rupp, 2018).

Concept identification

In previous work, we performed content analysis on IES course materials collected from 30 institutions to identify shared learning objectives across IES courses and programs (Horne et al., 2023). We also conducted ~100 semi-structured interviews with undergraduates enrolled in the 10 IES programs used for data collection in this study. From these interviews, we found that students have a broad range of knowledge regarding FEW concepts (Horne et al., 2024; Manzanares et al. in review). We, therefore, sought to create assessment prompts that allowed us to explore a spectrum of student responses about the FEW Nexus. Informed by the previous results of the content analysis (Horne et al. 2023) and student interviews (Manzanares et al. in review), we identified two focal areas for assessment item development related to systems thinking (Box 1): (1) Identifying sources and Explaining Connections between FEW systems, and (2) Evaluating outcomes and Comparing Trade-offs between FEW systems (e.g., water used for food is water not used to create energy). We note that these assessment item topics align with NGSS standards of Systems & System Models (NGSS Lead States, 2013), since students must identify multiple boundaries, components, and connections between components, and they must predict outcomes from alterations in components or connections. We incorporated Bloom’s Taxonomy, a classification system for identifying skills that we intend our students to learn (Krathwohl, 2002), to help us scaffold our questions. For example, we recognize that students first must be able to identify sources of FEW and make connections to their environment (Table 1: Sources of FEW and connections: reservoir) before they can understand the trade-offs of gaining a local energy source while losing land for crops (Table 2: Trade-offs systems: biomass energy production). As such we have created questions that align with varying levels of student knowledge regarding the FEW Nexus.

Assessment Items

We developed multiple assessment items targeting comprehension of Identifying Sources of FEW and Connections and Trade-offs of FEW Systems using different phenomena (e.g., dams, biomass energy) commonly encountered in IES courses (Table 1). Items about important phenomena in IES courses were presented in relevant disciplinary context and broadly focused on one of the three main foci identified previously. For example, the assessment item about reservoirs is designed to have students identify sources of water and energy usage, then explain how these usages may be connected (Identifying sources of FEW, Connections between FEW systems). Items were structured to contain several sub-parts or prompts to better elicit student thinking, each of which was designed to assess a specific construct. For example, in Table 1, parts A and B of the “Sources of FEW & Connections: Reservoir” item was designed to assess student's ability to identify relevant sources of energy and water resources, while the last sub-question assesses how students understand connections between these sources. Thus, many of these items are multi-dimensional, as they require students to integrate disciplinary knowledge and crosscutting concepts.

Data collection

Higher education institutions were invited to participate in this research from the existing connections of the PIs and via an email to the Association of Environmental Studies and Sciences Listserv. Ten institutions were purposefully selected to represent the three primary categories of 4-year colleges according to the Carnegie Classifications of Institutions of Higher Education (Carnegie Foundation for the Advancement of Teaching, 2011) and the three approaches to IES curriculum design outlined by a representative survey of higher education institutions (see Vincent et al., 2013 for further description of the three curricular designs in IES program). The IES program curriculum research conducted by the NCSE found statistical alignment of all undergraduate degree programs in a large, nationally representative sample with one of the three broad approaches to curriculum design (Vincent et al., 2013). Our sample includes representation from baccalaureate colleges (4), master’s college and universities (3), and doctoral/research universities (4) and programs/tracks representative of the three approaches to curriculum design—emphasis on natural systems (7), emphasis on societal systems (6), and emphasis on solutions development (4). By selecting programs that represent different types of four-year institutions and the three empirically determined curriculum design approaches, we ensured the inclusion of course materials representative of the diversity of the IES field. We focus on four-year programs for the development of the NGCI due to resource constraints and the lack of equivalent research on community college IES curriculum design that would allow us to select representative programs. Additionally, community college IES degree programs are designed to either articulate with 4-year degree programs or to prepare students for immediate employment (Vincent et al., 2013).

Student responses (n = 698) were collected from introductory IES courses during Fall and Spring semesters from Spring 2022 through Spring 2023 by having students complete the assessment questions pre- and post-course discussion of the FEW Nexus. Demographic information revealed 57.45% identified as female, 4% as non-binary, and the remaining 38.55% as male. Racial and ethnic identities reported were 73.67% white, 5.3% Asian, 4.7% Hispanic/Latino/latinX, 1.78% black or african american, 1.38% american indian or Alaskan native and a majority choosing more than one identity (11.79%). We then added the items in a Qualtrics survey and administered the survey to over 400 IES undergraduates from seven post-secondary institutions across the United States to collect student responses (UNCO IRB#158867-1). Responses were then de-identified for coding to create training and testing data for machine learning.

We surveyed the eight IES instructors who had surveyed their students about the pilot items to collect content validity evidence and feedback on question structure. During this survey instructors were asked to both respond to the question item as if they were a student completing the assignment and then, in a separate survey, instructors were asked questions about the question items in the context of their courses. Instructors indicated that assessment phenomena (e.g., food vs. energy production, and energy flows) were typically covered in their introductory IES courses and the multi-part question structure was accessible to student learners.

Rubric development

Rubric development began by reviewing examples of previously published rubrics that were used in similar assessments and intended for use with automatic scoring (Jescovitch et al., 2021; Sripathi et al., 2023). We agreed upon a scale that would best represent the students’ varying levels of knowledge (Table 2). We created each rubric by first analyzing the range of student answers we had received from the different participating institutions. During this initial review, we used an inductive approach and read student answers to identify common themes that revealed student knowledge regarding food, energy, and water systems and their relationships to each other, to other natural and to human systems. During this process we also re-examined our assessment items and the intended goal(s) of the item and also reviewed instructor responses as examples of “expert responses” and alignment with what types of responses students were providing. This was to ensure that students understood the questions the way we intended and to determine if our questions and, therefore, rubrics would need further alterations. To fully capture students’ knowledge, the majority of the NGCI questions needed to have separate rubrics for each sub-question, i.e., sub-questions A–C would each have their own rubrics. At this stage in rubric development, we relied on the previously acquired instructor responses to define an expert-level answer. Instructor responses were similar, and where there were divergences we identified commonalities across responses. We then compared instructor responses to student responses to create a range of scores reflecting novice to expert knowledge.

We designed dichotomous, analytic rubrics with parallel structures for each node of the FEW Nexus. Each response is categorized based on the ideas it contains, with each response receiving a zero or one score for each code based on the presence or absence of the targeted ideas. We provide an example rubric for parts of the Trade-offs of FEW Systems: Biomass Energy Production question item (hereafter referred to as “Biomass question item”) in Table 2, and the other rubrics are available in the Supplemental Methods file.

To determine the level of expertise a student displayed in their response, we defined a certain combination of bins to receive a holistic score of one through four (Table 3). For example, the following student response to Biomass Part A is considered an expert level response (coded as 4) because it contains the following ideas: water usage will increase (bin A) and there will be changes to the local river (bin D). The student therefore makes two connections between energy and water (water usage increasing, impacts to the river).

While the turn to biomass is a more sustainable option, the use of fresh water is going to increase drastically to be able to sustain such a change to the energy source. More than likely the local river will have drastic impacts from such dependency upon it especially if it is a dry season for rain.

Human coding

After the development of the initial rubrics, we iteratively refined the rubrics over several rounds of human coding. During each iteration, two or three researchers separately assigned scores to a set of 30 randomly selected student responses. Each student response received a 0 or 1 for each bin in the rubric for the absence or presence of the corresponding theme in the response. After scoring the set of 30 responses separately, the researchers compared assigned scores and calculated percent agreement. A percent agreement of at least 85% per bin was considered the acceptable level of agreement between human coders to move forward with coding the rest of the dataset independently (80% agreement is acceptable per Hartmann, 1977). The scorers met to discuss agreement for each code; in cases of high percent disagreement, the rubric was revised to improve clarity on those codes. During these discussions, decisions about removing or revising codes with very low agreement or low frequency in the dataset were also made. For example, a reservoir code, “Energy needed for food production or irrigation” originally lacked clarification. It was then further described for coders with the addition of, “Irrigation minimum: POWERING the transport/pumping of water, but not implied movement of water without tying to energy. When to code with machinery: machinery + either harvest, produce, or process food.” Specificities like this helped improve coder agreement.

After revising the rubric, a new sample of 30 student responses was compiled, which were independently scored by two to three researchers against the bins with previously high disagreement. This iteration of separate scoring, calculating percent agreement, and revising the rubric continued until scorers either reached 85% agreement for each code in the rubric or resolved remaining disagreements through discussion until consensus was reached (5 iterations for the Biomass question item and 4 iterations for the Reservoir question item). After reaching a consensus for the rubric, all student responses were divided between two members of the research team and were scored independently. A total of 346 responses were scored for the Reservoir question item and 483 responses for the Biomass question item (Supplemental Tables 5 and 6, respectively).

Text classification model development

We employed a supervised ML text classification approach to assign student written responses a score. During our ML process, each individual student response was treated as a document and the bins in each scoring rubric were treated as classes (Aggarwal and Zhai, 2012). The predicted output of a ML model is a dichotomous outcome of whether a response would be categorized in each rubric bin or not. We decided to combine student responses for both parts of the Sources of FEW & Connections: Reservoir question item (hereafter referred to as “Reservoir question item”) into a single text response for text classification model development for two reasons. First, the final coding rubric for each part of the question was identical, although certain ideas/bins were expected to be more frequent in one part than the other. Second, the human coding team adopted a similar approach when assigning codes: regardless of in which response part the student included the idea, the human coders marked the code as “present” for the response as a whole. For the Biomass question item, student responses to each part of the question were kept separated during model development since different parts have different coding rubrics (see Table 2).

Text features (single or strings of words) were extracted as n-grams from each response using NLP methods. We used a default set of extraction settings and processing, including stemming, stop word removal, and number removal, to generate a set of text n-grams. The computerized scoring system then generated predictions on whether each given document was a member of each class (i.e., rubric bin) using the extracted n-grams in a bag-of-words approach as input variables in a series of ML classification algorithms. To generate these predictions, we used an ensemble of eight individual machine-learning algorithms (Jurka et al., 2013) to score responses to each question. The predictions of the set of individual algorithms are then combined to produce a single class membership prediction for each response and rubric bin. The text classification, including the ensemble ML model, was generated using a 10-fold cross-validation approach using the Constructed Response Classifier (CRC) tool (Noyes et al., 2020). The CRC has been used previously to score short, concept-based CR even in complex disciplinary contexts and is described in more detail elsewhere (Jescovitch et al., 2021). For evaluation, we compared the machine-predicted score from the ensemble for each response in each rubric category to the human-assigned score for each response.

For each of the models developed in this study, we optimized model performance based on the training set by starting with a default set of extraction parameters, then adjusted several other common model parameters (e.g., n-gram length, digit removal) and retrained classification models to evaluate model performance. This is what we describe as exploratory, basic feature engineering, and we applied a similar approach to every model for each rubric bin. We used the human-coded data for Reservoir and Biomass questions, and we removed several responses with a missing value for a human-assigned score. We used 345 coded student responses for the Reservoir question item, 480 for the Biomass question item Part A, and 466 for Part B as our initial training and testing sets. During this and further iterative rounds, we used common benchmarks of Cohen’s kappa as our targets (kappa > 0.6 as substantial; kappa > 0.8 as “almost perfect” (Nehm et al., 2012). Cohen’s kappa is a measure of agreement between raters (in this case, human and machine) that takes into account chance agreement and is frequently reported in evaluating the overall performance of ML applications to science assessments (Zhai et al., 2021b). We further considered evaluation metrics of accuracy, sensitivity, specificity, F1 score and Cohen’s kappa to guide iterations of model development and to evaluate the overall performance of models once a benchmark was achieved (Rupp, 2018). It is noted that while Cohen’s kappa serves as the primary metric for reporting our model’s overall performance, we also routinely consider other evaluation metrics during model building and evaluation. The assessment of these metrics should not be construed as an all-encompassing validation, as their effectiveness is contingent upon the distribution of scores assigned by humans and the quality of those human scores (Williamson et al., 2012). In our specific context, we encountered a challenge with the disproportionate representation of certain score points, particularly in some specific analytic rubric bins where cases scored as 1 were significantly fewer than 0. Such low cases of positive occurrences in the training set led to decreased sensitivity metrics for those rubric bins. In some cases (e.g. Reservoir B3), the overall model still exhibited an acceptable overall performance metric and an acceptable F1 score.

Analysis of model outputs and iterative model development

After performing the initial model development and examining the basic feature turning settings, we examined the outputs of the model for low-performing rubric bins, including model evaluation metrics and groups of responses that showed disagreement between human and machine-assigned scores. We hoped to find possible ways to adjust the model parameters and/or training set of data to improve model performance in subsequent iterations. For example, we collected responses with disagreement in assigned human and machine scores. We examined the false negative and false positive predicted responses (compared to the human coding) in a rubric bin and performed conventional content analysis to try to identify words, phrases, or ideas that were common among these misscored responses (Hsieh and Shannon, 2005). We also reexamined the criteria of coding rubrics with low-performing models to ensure the criteria clearly identify important disciplinary ideas and to confirm the original assigned human codes to responses (Sripathi et al., 2023). The coding team met to discuss the results of miscode analysis and changes to target during iterative cycles, including possible changes to the rubric, best approaches to tuning model parameters consistent with assessment items and student ideas, and/or adjusting training sets.

The insufficiency of educational data (Crossley et al., 2016; Wang and Troia, 2023), which often suffers from limited availability of data for training ML models as compared to other sectors, and the observed lack of diversity in undergraduates’ CR (Jescovitch et al., 2021) have long posed challenges for educational researchers. These issues present difficulties for ML algorithms in discerning patterns effectively and reliably identifying a broad range of student ideas. To address these challenges, we have adopted a set of extended model tuning strategies, which have been both theoretically and empirically validated (Bonthu et al., 2023; Jescovitch et al., 2021; Romero et al., 2008). We employed these extended strategies beyond our exploratory, basic parameter tuning (described above). The extended strategies we employed are:

Additional feature engineering

In certain instances, we implemented two advanced feature engineering techniques, often arising to address patterns identified during our miscode analysis. These techniques encompassed (1) substituting specific words with synonyms and (2) extending N-gram analysis to more complex levels, including trigrams (three words combined into one feature) and quadgrams (four words combined into one feature).

Data rebalancing

Training sets that heavily represent only certain types of responses can impede model training; therefore, we applied data rebalancing strategies to address situations where the dichotomous coding significantly favored one category (over three times). When our dataset exhibited such imbalances, we implemented data rebalancing techniques by removing responses associated with the most frequently occurring codes to achieve a more equal distribution of the dichotomous codes. In our data set, cases coded as 0 often outnumbered those coded as 1. Since cases coded as 0 sometimes failed to provide meaningful patterns for ML algorithms to learn from, we selectively removed excess cases coded as 0 to equalize or enhance the distribution (i.e., reducing the ratio to equal to or less than two times difference).

Dummy responses

For datasets characterized by a balanced distribution of dichotomous scoring codes, yet still yielding low performance metrics, another extended strategy was devised. In this strategy, we initially ensured dataset balance, saved cases with human rater scores, and ML-predicted scores and outputs of the CRC tool after the initial round of analysis. Subsequently, we filtered out responses that were incorrectly classified, identified by a misalignment between human and ML predicted scores. These misclassified cases underwent further qualitative examination, with notes indicating which phrases and segments included in (or absent from) the response were indicative of the critical concept targeted by the rubric. We then generated new cases (i.e., dummy responses), which only replaced the identified segments of responses with new words or phrases, without altering the sentence’s underlying meaning. This procedure offers advantages, including mitigating overfitting concerns in which the model is only effective on responses very similar to the training set and augmenting the training dataset’s size. The dummy responses were integrated into the overall dataset solely for model training purposes. To derive the final performance metrics of the classifier model, the dummy responses were subsequently removed for model evaluation calculations.

Merging rubric bins

In some instances, despite the explicit indication in the original rubric descriptors that certain ideas are intended to be scored separately as they are designed as mutually exclusive during rubric development, some machine models faced challenges in effectively identifying these subtle textual patterns. Collaborative discussions with expert raters led to a consensus among researchers to combine these rubric bins. This decision was informed by empirical investigation revealing overlapping content, and the re-coding of these bins to a single code / score to enhance the model’s performance, aligning with practical considerations in the procedure.

It is important to note that these strategies can be combined or used consecutively as needed. Nevertheless, the initial round of analysis consistently adhered to the default and basic settings of the CRC tool, utilizing the parameter options provided therein. Further details on the application of these approaches to individual items and rubric bins, along with illustrative examples of dummy response creation, can be found in the supplementary materials.

Results

Here, we report on the use of ML-based text classification models to assess CR questions focused on the FEW Nexus. This section is organized by the research question, beginning by describing the successes and challenges in applying ML to score student CR to questions about sources of FEW resources and trade-offs associated with biomass energy production. We then examine the two questions related to reservoirs and biomass to describe FEW connections in student CR and co-occurrences across responses to understand student system thinking capacities. Co-occurrence suggests evidence of systems thinking as multiple FEW systems are interacting simultaneously in student responses.

Research Question (1): can natural language processing be used to identify instructor-determined important concepts in student responses?

We developed a total of 11 text classification models for the Reservoir item, one each for the 11 “bins” contained in the coding rubric (Table 1). These eleven models had a range of overall performance metrics (Table 4), ranging from Cohen’s kappa of 0 to 0.957 and accuracies ranging from 0.892 to 0.992. Only one model (D2) failed to detect positive cases, which resulted in an overall Cohen’s kappa = 0.000. This was due to a severe data imbalance in the human-assigned codes in this rubric bin, meaning that there were very few positive codes to responses assigned by humans in this bin. All other ten models met acceptable performance levels as measured by Cohen’s Kappa values (kappa > 0.6 as substantial; kappa > 0.8 as “almost perfect” (Nehm et al., 2012)), with many models exhibiting “almost perfect” agreement with human assigned codes. We note that most models were tuned to this performance using only basic feature engineering manipulations, as described in the methods. There were also a few bins that met our target threshold of 0.6 only after employing extended strategies (e.g., employing dummy responses for A2), and for one bin (B4), we employed data rebalancing in tuning the model. The model for D2 showed high accuracy but decreased performance on other model metrics due to a severe imbalance of human code occurrence.

One result that emerged from discussions during iterative model development for the Reservoir question item was the similarity of codes A2 (producing hydropower) and B4 (energy transformations). Although we were successful in developing text classification for each code separately, the two models did require slightly different tuning strategies. When examining miscoded responses, the coding team noticed similar patterns in the groups of correctly and miscoded responses for each bin. Human coders reflected that during the coding of the responses, students expressed these ideas similarly, and it was, therefore, sometimes difficult to distinguish when students were explaining hydropower versus describing transformations of energy (e.g., moving water turning turbines). Thus, these two codes (A2, B4), which were initially intended to capture a specific understanding of hydropower and a more general description of energy transformations, ended up being more similar than intended in the context of this item. One potential way forward for text classification is to combine the A2 and B4 codes into a single code and redevelop a text classification model to recognize the single code.

We developed 15 text classification models (eight models for Part A; seven models for Part B) to detect student ideas in response to the Biomass question item (Table 5). Overall, for this item, models demonstrated lower performance metrics than models for the Reservoir question item. For the Biomass question item, no model achieved a level of almost perfect agreement (as measured by Cohen’s kappa value of >0.8), although the majority still achieved acceptable agreement with human scores. Due to the reduced maximal performance, these fifteen models had a narrower range of overall performance metrics than models for the Reservoir question item, ranging from Cohen’s kappa of 0–0.674 and accuracies ranging from 0.755 to 0.991. Correspondingly, these Biomass models had a much broader range of sensitivity, specificity, and F1 score metrics too. This reduction in performance metrics is likely due in part to the target of the Biomass item: trade-offs around FEW. Although the item still centers on the FEW Nexus, this item allows students to respond in numerous ways about any number of possible trade-offs between any of the vertices. Thus, this item allows for a much wider possible answer space. As a result, a few models failed to reach the benchmark performance metrics (e.g., B2 in Part A), despite having frequent occurrences of both codes. This also suggests that the text complexity of expressing these ideas or the range of possible ideas in these responses is difficult for these text classification models to reliably identify. Although we attempted extended strategies on models for many of the Biomass models, we report on a few of the bins and attempts as exemplars of this work, or findings that were similar between different bins. We provide more detail on applied strategies for each model in the Supplemental Materials.

The model for B3 code in Biomass question item Part A showed very low-performance metrics despite having a fair number of positive cases. The poor model performance is likely reflective of the range of student ideas covered by this rubric bin: a decrease in water availability for other uses (here, “other uses” means outside the context of bean and corn agriculture given in the question). As such, there is a wide range of possible other uses students could suggest, such as drinking water, home water use, and water for other crops. The broad range of acceptable answers was easy for humans to code, but difficult for the model to detect the underlying similarity. Although we tried some extended strategies for model iterations, these had little effect on overall model performance. During iterative rounds of model development, we decided to merge two codes, B3 and B4, since they both identified similar ideas, about less water available for other things and changes in human behavior due to less water. During our review of miscoded responses by the model, we noticed a number of miscoded responses were somewhat borderline cases of human code assignment between the two bins B3 and B4, with responses often implying or vaguely mentioning effects on community usage of water, without being explicit the change in use or behavior. For example, the response, “Since this is a place of limited rainfall, and the source of water is coming from the river I would expect that water use for the community may need to be diverted more towards the crops, and less towards other measures such as household use.” was coded positive for B3 by human coders but miscoded as missing B3 by the model. After merging these two codes into a single code and model, the performance of the overall model for the merged code was significantly improved for B3 and slightly decreased for B4 (see Table 5). After merging these bins into a single model, borderline responses, such as the example, were correctly classified by the model.

Similarly, the initial classification model for C1 in Biomass question item Part B failed to meet performance benchmarks even though student responses were nearly equally distributed between positive and negative cases, and we tried several extended strategies to improve model performance. However, the re-examination of coding rubrics for C1 and C2 presented an opportunity to recombine coding criteria as part of the iterative process of using model outputs to iterate on items and rubrics. The rubric was originally designed to identify student ideas about the production of energy (C1), but not when used in conjunction with trade-offs with other energy sources or energy return on investment (C2). After several rounds of model iteration and discussion with the coding team, we decided to recode the original dichotomous rubric bins C1 and C2 as a single, multi-class code (i.e., a holistic coding rubric, with levels as 0, 1, or 2). This preserved the exclusivity of these two codes (C1 and C2 were intended to be mutually exclusive) while encoding the exclusive classes in the model training set. Making this a single, multi-class prediction increased the overall performance of the model, above the performance for the separate, binary models made for the original rubrics.

Research Question (2): what do our students know about the interconnections between food, energy and water, and how have students assimilated “systems thinking” into their constructed responses about FEW?

Here we apply two different strategies for defining and evaluating student responses as novice to expert. To evaluate student knowledge about interconnections and how they have assimilated “systems thinking” into their constructed responses about FEW, we calculated the co-occurrence of codes. Level of expertise for the Reservoir question item is approximated by co-occurrence of the codes, and level of expertise for the Biomass question item is calculated by the code combination provided in Table 3.

Sources of FEW and connections: reservoir question item

We examined the predicted codes for each response to the Reservoir item to look for co-occurrence of codes in student responses. This can help identify connections students are making between FEW vertices, since the item prompts students to make these connections. For this analysis, we collapsed individual bins in Table 6 for the Reservoir rubric by grouping letter codes (e.g., A1 and A2 together as A bin), since these groupings indicate similar themes (A codes refer to hydroelectricity, B codes refer to energy production, C codes refer to use of energy; Table 1 in Supplemental Methods).

Responses frequently included ideas from A codes with ideas from C codes, indicating the same response connected generating hydropower to uses of energy for agriculture or infrastructure. The C codes also commonly occurred with the B codes, showing students explained connections between types of energy and uses of energy in agriculture or community resource use. D codes (uses of water) were the least frequently coded; however, when D was coded, these responses were very frequently connected to hydropower (A codes). Co-occurrence within A codes and D codes suggests that students understand that hydropower is powered by water and is needed to create electricity. A–C codes were the most likely to occur together when students were making connections between FEW systems (59 responses). Only 12 students made connections between A–D codes, suggesting that water use beyond hydropower is not as commonly associated with energy use and production in this scenario despite water providing the primary source of energy in the reservoir.

Co-occurrence is how we can approximate the level of understanding of the respondee from novice to expert for the Reservoir question item. The assumption is that the quantity of co-occurrences indicates students have an understanding that there is some sort of connection between Food, Energy, and Water. For example, student responses could be coded in a number of bins regarding the type of energy, and what the energy is used for, e.g., irrigation or powering homes. We assume that students’ answers indicating a greater understanding of the relationships between Food, Energy, and Water will include bin codes for hydroelectricity, irrigation for food, energy for machinery, and energy for housing/farm bins (Table 7). Novice responses show they know that the dam is used to create hydropower, but they do not have any further knowledge about how this energy can be used and how it relates to food (Table 7).

Trade-offs systems: biomass energy production question item

Overall, students perform at a higher level for explaining changing water usage (Part A) than discussing trade-offs (Part B) (Table 8). The large majority of students discuss at least one trade-off in their response for Part B and, therefore, are placed in level 1 or higher (see Table 9 for an example of student responses). Due to the ML model performance for this question item, we have also included the number of responses for each Novice to Expert Level as Supplemental Methods Table 9.

For the Biomass question item Part A, slightly over half of the responses scored a level 3, with over 20% as Level 4 and about 23% as Level 1, and no level 2 responses (example responses provided in Table 9). For Part B, about half of the responses were grouped in level 2 and roughly 20% in level 1; both of these levels) had similar numbers of responses in those levels by ML and human-assigned codes. A small percentage of responses (~11%) were placed in level 3 by the ML model, while human codes had slightly more responses (13.5%) in that level. There were no student responses predicted for level 4 by the ML model and only one response in that level based on human-assigned codes.

The lack of level 2 responses for Part A is due to having only one positive ML predicted for the C component. However, this response was scored to level 3 response because the student response also included one of the other codes. Since this was a poor-performing ML model for the C code (meaning that the model did not recognize any responses for this code), we explored using human scores for this code; even so, only three responses from the data set end up at level 2. Most responses in the dataset which are categorized in code C end up at levels 3 and 4, since these responses tend to incorporate water price increase as an effect of increased water use or water scarcity within their explanation (Table 9 provides an example student response).

For Biomass Part B, we found no level 4 responses in our data set, which was driven by the lack of ML predictions for category A2, which is a requirement for obtaining this level. A2 is an infrequent category in the dataset with only 4 positive cases assigned by human coders. Even when we explored using human scores in place of ML-predicted scores for this specific rubric bin, we observed only a single response in Level 4. About one-third of the student responses score at Level 2, which demonstrates an ability to connect at least two FEW vertices when discussing trade-offs. The largest group of students (~40%) end up at Level 1, which is a trade-off focused on a single vertice of the nexus (food, energy, or water).

Of the 138 responses that do not fit the other patterns in Part A and the 77 responses categorized as Level 0 in Part B, most were a combination of derivations of “I don’t know” or trivial responses such as “it will go up” or “You need all food, energy, and water in this situation.” However, there were also responses that the ML model did not predict any expertise level, but would be considered one of the expertise levels by human coders. For example, this student's response that was not predicted to achieve an expertise level includes concepts that occurred infrequently and, as such, was not provided a code—reduced water availability means that water would need to come from someplace else, and require more labor and cost for transportation:

“A shift from agriculture to biomass production means the community will need to pay for excess water. If there is very minimal rainfall during a year, the community will need to gain a water supply from the surrounding neighborhoods. Buying water, transporting it, and ensuring the corn is watered requires extra labor, which requires extra pay.”

Discussion

The application of ML for assessing interdisciplinary learning involves both the development of the process as well as using that process to understand student thinking and learning. The ML process here shows promise for use in evaluating complex constructed responses for systems thinking, especially as part of formative assessment practice, and we also report on the evaluation itself. Here we discuss findings in the context of our research questions and results, including limitations pertaining to each topic within each section.

Use of ML to uncover student understanding of FEW Nexus

Considerations for future assessments of student CRs, particularly in the context of science-related items, demand significant attention. Despite the relative success of current applications, there are remaining challenges to using ML approaches to score a broader range of assessment constructs and response types (Zhai et al., 2020a). These challenges can be characterized by limitations such as insufficient data (or specific types of responses/ideas in CR), subjectivity, imbalances, and the prevalence of noise, and these all present substantial obstacles within the iterative ML training process (Maestrales et al., 2021). These challenges, if not effectively addressed, have the potential to compromise the achievement of optimal model accuracy, thereby raising questions about the validity and reliability of ML applications in educational evaluation settings (Suresh and Guttag, 2021). Another challenge is the complexity of the assessment target (i.e., what you are trying to measure), and the complexity of expected student responses can pose challenges to such AI-based evaluation (Zhai et al., 2020a). Others have suggested that features of the assessment item itself, such as the subject domain or scenarios used in the assessment, might impact the accuracy of ML models (Lottridge et al., 2018; Zhai et al., 2021b). To address these challenges, we utilized automated scoring approaches for text classification, which examine complex systems integration. In this study, our technical strategies have introduced a practical solution through data augmentation to help address insufficient data and data imbalance, yielding promising implications. This approach involves generating dummy responses that are subsequently revised with identified synonym sets, thus facilitating the measurement of responses with similar structures and content while preserving the overall meaning and essence. Notably, we found that this approach effectively improved model performance, particularly when dealing with specific descriptors in Reservoir and Biomass question items.

One complexity of assessing complex CRs in postsecondary education is that there are varying disciplinary requirements and usages of student literacy compared to the more consistent expectations of K-12 education. As such, student responses considered holistically consist of a range of literacy abilities, which can impact the “understanding” of natural language processing and text classification models. For this research, student responses were collected from across the United States at different institution types (baccalaureate colleges, master’s colleges, and doctoral universities) to provide a wider range of student responses from which to develop the ML models. The resulting models are thus trained on the many ways that people may write about the question item concepts. High variation in the responses, which can be the result of variation in literacy, language, and understanding, result in more complexity, and are thus more difficult items for model development. Some of this difficulty may be addressed with a larger sample size, but if student responses are too varied or certain types of responses are too infrequent in the sample, then accurate ML models may not be easily achievable. Further, although we refer to the scoring of responses into rubric bins, we posit another important outcome of this work is characterizing students' thinking about FEW concepts. The inclusion of automated text scoring systems into formative assessment evaluation isn’t only for “scoring” but provides a way for instructors to use open-response items and identify complex student ideas, or potential barriers to student learning (Harris et al., 2023). This is a critical aspect of formative assessment practice, allowing instructors a richer, more nuanced view of how students’ think about complex systems like the FEW nexus.

Defining criteria for developing text classification models

During the course of our iterative process, models exhibited superior performance in certain rubric categories characterized by well-defined criteria and a robust explanatory framework outlining the expected content under each rubric category. This finding aligns with prior research that underscored the efficacy of ML algorithms in successfully discerning the quality of student responses using fine-grained analytic scoring methodologies (Ariely et al., 2023). Conversely, challenges become apparent in scenarios where substantial overlap exists between rubric categories, leading to redundancy and a lack of clarity (Liu et al., 2014). In such instances, the Kappa value frequently falls short of the desired threshold (Zhai et al., 2021). These insightful observations underscore the imperative need for the refinement of rubric definitions within future assessments. This refinement should be guided by a comprehensive and quantitative delineation of assessment criteria, aimed at mitigating the issues of overlap and ambiguity that our study and prior research have duly highlighted. For example, we revised closely related yet exclusive rubric bins to a single, multi-class prediction after attempting multiple model improvement strategies, yet failing to meet threshold performance metrics. Changing the structure of the rubric maintained the coding criteria of individual bins, now as “levels”, but provided additional information about exclusivity which resulted in better overall model performance. Alternatively, other coding bins with overlapping criteria or developed with too fine-grained of categories than needed to differentiate student ideas, can be merged into a single code. Conversely, other rubric codes that are too broad initially may need to be split or have better-defined coding criteria to better categorize cases (Sripathi et al., 2023).

We also note different levels of successful performance metrics for text classification models for the Reservoir versus Biomass question items. Indeed, most models for the Reservoir question item rubric bins achieved very good performance (i.e., “almost perfect” Cohen’s kappa measures), but most models for the Biomass question item rubric bins achieved only “acceptable” performance. This is despite both assessment items being in the Environmental Science domain, being centered on the FEW Nexus as context, and undergoing similar iterations in ML development. We interpret these findings to provide further evidence that the underlying construct of the assessment items and/or the expected complexity in student response can influence ML model performance, as noted by others (Haudek and Zhai, 2023; Lottridge et al., 2018). Thus, a practical implication of this work is that more complex assessment targets (e.g., trade-offs in socio-ecological systems), or assessment items that encompass larger systems will need additional feature engineering or more advanced ML techniques for accurate response evaluation (Wiley et al., 2017; Zhai et al., 2020a). Further, this highlights the need for an iterative approach in these research efforts. Although we lay out our approach as a “cycle” (see Fig. 1), in practice, it is highly iterative, with results from all stages informing the work of other stages, often in feedback loops. To improve final model outcomes, all stages of item development, data collection, and rubric alignment should be revisited, not only tuning specific model features. Following principled item design procedures (e.g. Harris et al., 2019) and incorporating automated scoring systems into the methodological pipeline (Rupp, 2018) are important considerations. Nevertheless, successful item/rubric/model development often takes multiple iterative rounds, which we continue to do, and models should be updated and expanded.

Such challenges to using NLP for short answer scoring are well reported and exist for assessments across science domains (Shermis, 2015; Liu et al., 2016). This leads to a broad range of scoring model performances (see Zhai et al., 2021b). These iterative cycles of revision do require an investment of human effort with an outcome of having automated classification models that can predict categories for any number of new responses and for any number of new users. Further, researchers also learn about student thinking about the targeted key concepts (see section “Student understanding of systems thinking in the FEW nexus” below) as they work to design items, rubrics, and models (e.g. Sripathi et al., 2023).

Scoring novice to expert levels

Scoring through levels [Level 1–Level 4] allows us to see the real distribution of knowledge for students in introductory courses. Level 4 responses were least frequent, most likely due to this level’s creation being based on an instructor’s expert response. Although, level 4 responses were seldom seen in students, it allows us to set a growth goal and see students who have previous knowledge at the expert level. As seen previously, only one student was able to achieve that level, which suggests that the task at hand is indicative of student ability. The level 4 response level is a baseline for exemplary understanding, it can also be used in the future to see if senior level or graduate students are performing at the expected level or to evaluate different strategies for achieving higher learning outcomes. Another We did have one student be within the level, which suggests that it is possible that students can strive to that level at a beginning level course. In addition, this supports student learning and growth, as we can expect as learning improves the FEW system understanding and could be a good baseline for growth as more students learn better. Additionally, no students fell within the pre-established Level 2 for responses that only included C codes (responses that only addressed a change in water prices), however students who were rated in Level 3 and Level 4 did include that content in their response. This particular content seems to be closely connected with the higher level responses rather than being a piece of information distinct from the other content and future research may delve into the content mapping of student responses.

We report on using the computer predicted scores to place student response in expertise levels. Overall, the computer placement may slightly underpredict student performance on these items as compared to human assignment, especially in the mid-level. This is more notable in the Biomass item, especially for Part A, indicating that the difficulty of this item may affect level assignment. However, although the classifications result from individual models with varying degrees of accuracy, the overall distribution of responses across all levels approximates the distribution from human assigned placements (see Table 9 of the Supplemental Methods for human assigned placements as comparison with the ML predictions of Table 8). This supports the use of these automated classification models to evaluate group or large class performance as part of formative assessment practice, even though individual response placement in specific levels may vary. That is, a reasonable approximation of the distribution of a large number of responses collected in an introductory course can be generated in seconds to minutes using the developed classification models, as opposed to the effort of human reading and assigning levels to all collected responses.

Future prospects of generative AI

The recent advancements in generative AI have raised additional considerations about assessment in education, among a host of many different possible applications (Kasneci et al., 2023). Although many of these issues are common to uses of classroom assessment in many contexts, some issues are particularly overlapping with the process of assessment development and automated scoring presented here. Recent explorations in using large language models for automated scoring of essays and short responses show great promise (e.g. Cochran et al., 2023; Mizumoto and Eguchi, 2023; Latif and Zhai, 2024). Using such an approach would simplify and expedite the automated scoring process, thus permitting automated scoring for different assessment prompts (Weegar and Idestam-Almquist, 2024) and could contribute to generalizability of models (Mayfield and Black, 2020). One promising application of generative AI is to do pattern finding,contextualized representation of information, and clustering of collections of student responses to open-ended tasks in support of formative assessment practice (Wang et al., in review; Wulff et al., 2022). This may assist instructors to easily find patterns and capture token-level representations in student responses based on the linguistic context, thus allowing them to attend to student ideas and thinking as exhibited in their classroom, without reading and sorting individual responses.

On the other hand, the use of generative AI in education raises many concerns about academic integrity and students easily finding or asking AI to generate answers to assessments (Chan, 2023). Some studies have found that generative AI models still perform less well for producing more complex assessment tasks and tend to do better on quantitative tasks as compared to explanatory (Nguyen Thanh et al., 2023). Additionally, regarding the language attributes, current AI-generated responses, when compared to human-authored counterparts, typically manifest a discernible deficiency in cohesive and coherent elements, accompanied by a writing style characterized by uniformity and repetition (Wang et al., 2023). It is very likely these shortcomings of these AI models will not last long. Instead, educators should re-evaluate the purposes of assessment (Chan, 2023), including how and what content and practices are necessary for students to be “skilled” in a discipline. Therefore, focusing teaching and learning on foundational principles within the discipline, which allows students to see science across contexts and define problem boundaries, like systems and systems models, maybe one such approach. Educators should also consider the purpose learning activities that students engage with, both in the classroom and outside of the classroom. The application of generative AI represents a frontier in the use of technology in support of formative assessment in the classroom (Harris et al., 2023).

Student understanding of systems thinking in the FEW Nexus

Systems thinking involves understanding the interdisciplinary connections and relationships between associated components within a system, rather than simply focusing on discrete concepts (Meadows, 2008). For teaching and learning contexts, the FEW Nexus provides a scaffold for incorporating systems thinking and sustainability concepts into courses and across curricula. A primary advantage of the NGCI is the potential to capture a student’s understanding of relationships within the FEW Nexus. While the analytic rubrics were developed to score student understanding of FEW isolated discrete parts of the systems in the scenario presented by each item, by examining the constellation of scores a student response achieved across criteria, we quantified student patterns of explanations about these systems.

What our students know about the Food–Energy–Water Nexus

Both the Reservoir and the Biomass question items present students with scenarios about the FEW Nexus relationship centering water with connections to energy production and agriculture. The codes described in the analytic rubric represent the most common concepts students included when presented with these scenarios. The frequency of these concepts may indicate that these ideas are foundational as introductory students construct knowledge about FEW systems. Many of the most common codes could be classified as demonstrating basic knowledge, which is the simplest cognitive task presented in Bloom’s taxonomy model (Bloom and Krathwohl, 1956; Krathwohl, 2002). For example, in the Reservoir question item rubric, the A codes were the most commonly found in our dataset (Table 6), and indicated responses identifying that a dam could be related to hydropower. While this type of statement is reasonable for introductory level courses where students are developing new understanding and aligns with the content presented in introductory IES courses (Horne et al., 2023), knowledge statements alone do not achieve the competency goals for IES students (Wiek et al., 2011). More complex student responses in our study contained combinations of codes, however exceptionally creative explanations or concepts were not always frequent enough to be included in the analytic rubric or be captured reliably in the ML models.

The Biomass item presents students with an opportunity to consider directionality within trade-offs, and directionality concepts are thus frequent in the associated rubric. In the Biomass sub-questions, students often included at least one statement about directionality of the quantity of food, energy, or water, but responses including predictions across these three ideas were infrequent. Making a statement about change or directionality, such as describing the quantity of food or water decreasing, is a relatively simple task in systems thinking, but is foundational to more complex tasks that consider changes over time (Sweeney and Sterman, 2007). Students who described trade-offs in their responses to this question sometimes went beyond discussing the cause-and-effect components of the system and discussed concepts not immediately asked by the question, such as the impact of this scenario on water pricing. However, these types of responses did not always register in the ML models, and some were too infrequent to be included in the rubric. The frequency of simpler codes describing FEW concepts in comparison to codes describing FEW consequences presents a challenge, given that IES curricula prioritizes FEW in relation to socio-environmental topics (Horne et al., 2023). The emergence of these concepts in student responses provides insight into what students will need to do with these ideas after the classroom and how students may move from identifying FEW concepts to applying predictions about FEW impacts on people, land, and communities.

How students assimilate systems thinking into their constructed responses