Abstract

Unnecessary preoperative testing poses a risk to patient safety, causes surgical delays, and increases healthcare costs. We describe the effects of implementing a fully EHR-integrated closed-loop clinical decision support system (CDSS) for placing automatic preprocedural test orders at two teaching hospitals in Madrid, Spain. Interrupted time series analysis was performed to evaluate changes in rates of preoperative testing after CDSS implementation, which took place from September 2019 to December 2019. We included 228,671 surgical procedures during a 69-month period from January 1st, 2018, to October 1st, 2023, of which 78,388 were from the preintervention period and 150,283 from the postimplementation period. We observed a significant reduction (p < 0.001) of 83% (−83.4% to −83.1%) for chest X-ray orders, 54% (−54.7% to −54.2%) for ECG orders, 50% (−50.5% to −50.1%) for blood type testing, and 29% (−29.5% to −29.0%) for preoperative blood test order sets, leading to overall cost savings of €1,013,666. No increase in postoperative adverse events or same-day cancellations was observed. Our results demonstrate that an EHR-embedded closed-loop CDSS can reduce avoidable preoperative testing without increasing surgical cancellations, postoperative adverse events, or early reinterventions, thus improving the quality of care and reducing healthcare expenditure.


Introduction

The increasing prevalence of chronic conditions and the aging population have led to a growing number of surgical procedures1, making sustainable surgery a worldwide priority for healthcare systems2. High-quality preoperative care is a pillar of sustainable surgery3 with important implications for public health, healthcare expenditure, and the environment. However, research shows that around 45% of preoperative tests for intermediate to low-risk procedures are unnecessary4. These unnecessary tests pose a risk to patient safety, cause surgical delays, worsen surgical backlogs, increase healthcare costs, and contribute to greenhouse gas emissions5,6,7. Eliminating excessive preoperative testing is an important step towards ensuring sustainable preoperative care.

Electronic health record (EHR)-embedded clinical decision support systems (CDSS) have shown high potential for multiple applications in healthcare and are becoming increasingly common8. One of the main strengths of CDSS is their ability to aid clinicians in adhering to current practice guidelines9,10,11,12, and several studies report that CDSS can be effective in enhancing guideline adherence for preoperative testing, leading to important cost savings13,14,15,16. A recent study17 describes a completely automated (“closed-loop”) CDSS18 for placing automatic test orders based on patients’ presenting complaints and clinical characteristics. However, the large-scale implementation of a closed-loop CDSS for automatic preoperative test orders and its effects on patient safety, resource allocation, and healthcare expenditure have not yet been reported.

We present an interrupted time series analysis describing the effects of a CDSS algorithm for optimizing testing prior to surgical procedures at two major academic hospitals in Madrid, Spain, during a 69-month period.

Results

During the study period, 228,671 surgical procedures took place (corresponding to 170,460 unique patients). The total number of surgical procedures for the pre-intervention period was 78,388 and 150,283 for the post-intervention period. Demographic and clinical characteristics of patients undergoing surgery during the study period are summarized in Table 1. Specific information on the most frequently performed surgical procedures is presented in Supplementary Table 1. During the preintervention period, a total of 20,708 chest X-rays (26.4% of procedures), 28,486 ECGs (36.3% of procedures), 42,116 preoperative blood tests (53.7% of procedures), and 5184 blood type tests were ordered (6.6% of procedures), compared with 9720 chest X-rays (6.5% of procedures), 32,162 ECGs (21.4% of procedures), 88,037 preoperative blood tests (58.5% of procedures), and 12,955 blood type tests in the postintervention period (8.6% of procedures).

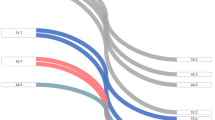

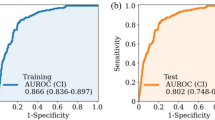

The observed time series for the primary endpoints (changes in the percentage of patients undergoing chest X-rays, ECGs, preoperative blood tests, and blood typing) are presented in Fig. 1, including fitted preintervention and postintervention trends, and extrapolated preintervention trends used to calculate counterfactual differences. Table 2 presents the estimated coefficients from the time series analysis. Statistically significant changes in both level and slope were observed for all primary endpoints.

This figure presents changes in rates of preoperative orders for blood test order sets, chest X-rays, electrocardiograms, and blood type testing during the study period. By comparing our actual data (continuous line) with the counterfactual predictions (dashed line) for the postimplementation period, we estimated a reduction of 83.2% (−83.4% to −83.1%) for chest X-ray orders, 54.5% for ECG orders (−54.7% to −54.2%), 50.3% (−50.5% to −50.1%) for blood type testing, and 29.3% (−29.5% to −29.0%) for preoperative blood test order sets. The boxed area marked with a dashed line represents the implementation period (September 2019–December 2019).

Table 3 presents the overall effect of the intervention on each primary endpoint (absolute and relative change from the predicted percentage of test orders for patients scheduled for surgical procedures during the postintervention period), expressed as counterfactual differences calculated by combining intercept and slope changes. Statistically significant reductions were observed for all four preoperative test orders. By comparing our actual data with the counterfactual predictions for the postimplementation period, we estimated a reduction of 83.2% (−83.4% to −83.1%) for chest X-ray orders, 54.5% for ECG orders (−54.7% to −54.2%), 50.3% (−50.5% to −50.1%) for blood type testing, and 29.3% (−29.5% to −29.0%) for preoperative blood test order sets. Considering current costs for each test, an overall reduction in preoperative testing led to an estimated cost-savings of €1,013,666.63 at 45 months post-implementation.

Regarding secondary endpoints, the percentage of patients with a test order for albumin, liver function panel, or chloride levels decreased from 14,349 (34.07%) in the pre-intervention period to 6,286 (7.14%) in the post-intervention period (OR 0.15, CI: 0.14–0.15, p < 0.001). A slight, significant reduction in early reinterventions (<30 days from the initial surgery) was observed (3.16% vs 2.54%, p < 0.001). No significant changes were observed in rates of surgical complications included in the analysis except for postoperative sepsis, which decreased from 0.2% to 0.08% (p = 0.007) (Table 4). Finally, a slight reduction in same-day cancellations was also observed in the post-intervention period (2.92% vs 2.08%, p = 0.001), which was also reported for same-day cancellations due to incomplete preoperative testing (0.65% vs 0.53%, p = 0.001).

Discussion

This study reports the effect of a closed-loop CDSS for automatic placing of preoperative test orders on rates of preoperative testing at two academic hospitals in Madrid, Spain. A significant reduction in chest X-rays, ECGs, preoperative blood test order sets, and blood typing orders was observed. No increase in postoperative adverse events or same-day cancellations due to incomplete testing was observed during the post-intervention period.

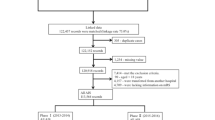

CDSSs have been reported as part of several quality improvement initiatives to optimize preoperative care. A single-center study15 implemented a templated EHR tool to improve standardization of preoperative ordering as part of a wider initiative, with an improvement observed in patients receiving care at a dedicated preoperative clinic but failing to demonstrate significant improvement in the entire study population. Another study demonstrated a reduction in unnecessary testing but also an increase in missed necessary testing14, which could potentially lead to increased same-day surgical cancellations. Due to the widespread use of EHRs and the growing importance of data-driven initiatives to improve healthcare19,20, implementing CDSS-based initiatives to optimize preoperative testing is feasible for an increasing number of healthcare providers worldwide. Although we believe that our initiative is both broad and straightforward enough to be implemented in other settings, we caution that the algorithm’s rules should be adapted to each specific context. Regarding implementation in other centers, although excessive preoperative testing has been identified as a worldwide public health challenge, it is important to consider that guidelines for preoperative testing vary across countries, and so algorithms must draw on appropriate local guidelines. For fellow healthcare professionals, hospital managers, and healthcare information technology specialists seeking to implement a similar program, we suggest following the steps presented in Fig. 2.

This diagram presents the authors’ seven-step proposal for implementing a closed-loop decision support system for reducing unnecessary preoperative testing, loosely based on the “Plan-Do-Study-Act” methodology. In the initial phase (Steps 1–3), a multidisciplinary group should be formed to define rules for each group of procedures, achieve consensus with clinical stakeholders, and inform and educate healthcare professionals. After developing and embedding the algorithm in the network EHR (Step 4), an initial testing period should be followed by system-wide roll-out, initial troubleshooting, and feedback from end users (Steps 5–6). Finally, the initiative should be reevaluated on a periodic basis (Step 7).

Strategies to reduce unnecessary preoperative tests are in line with patient safety initiatives such as the UK’s Choosing Wisely21,22. Our project was successful in reducing risks associated with over-testing, without an apparent increase in early surgical reinterventions, which have been shown to be associated with higher patient mortality, longer hospital stays, and higher healthcare costs in other studies23,24,25. Same-day surgical cancellations are an important source of avoidable healthcare expenditure and contribute to backlogs for elective surgery26, and avoiding cancellations has been described as one of the reasons why surgeons over-order certain tests27. However, we did not find an increase in same-day cancellations in the post-implementation period; in fact, slight reductions in surgical cancellations due to inadequate testing were observed, suggesting that the algorithm not only reduced avoidable test orders but also ensured that necessary tests were ordered. This finding highlights the potential of closed-loop CDSS for healthcare process optimization.

This study has several strengths. From a methodological perspective, time series analysis has been described as one of the most robust quasi-experimental models for quality improvement initiatives28. The dataset used to predict the counterfactual test order rates included all preoperative test orders and all surgical procedures performed during the pre-implementation period, thus reducing the risk of reporting bias. Also, to the best of our knowledge, this is the largest series in the literature to describe a multicenter initiative for sustainable preoperative care through the implementation of an EHR-embedded CDSS, demonstrating a reduction in unnecessary preoperative testing without increasing same-day surgical cancellation rates or early reinterventions. Improvements were found for both hospitals despite existing differences found in other aspects of preoperative care (for example, in the General Villalba University Hospital, most preoperative evaluations are carried out remotely, while for the Fundación Jiménez Díaz Hospital, patients receive in-person preoperative evaluation).

Limitations of the study include the lack of randomization or a control group, as the algorithm was designed to include all surgical procedures carried out at both centers. Also, the algorithm underwent minor adjustments for a few (<10) procedures during the implementation period, and this was not considered when performing the analysis. However, we estimate that the effect of these adjustments on the overall result was negligible. Regarding the distribution of surgical procedures by surgical specialty, our data show significant differences between the pre- and post-intervention periods, although the differences are slight and probably irrelevant with regard to the interpretation of our findings, except in the case of ophthalmological surgery, which rose from 9.1% of total procedures in the pre-intervention period to 17.7% of total procedures in the post-intervention period. This rise in ophthalmological procedures is probably due to factors such as the aging population and increasing demand for cataract surgery. As the interrupted time series did not correct for variations in the distribution of surgical procedures, it may have underestimated the algorithm’s effectiveness in reducing unnecessary tests, as ophthalmological procedures present notoriously high rates of unnecessary preoperative testing. However, we consider that the overall effect of changes in the volume of operations per specialty on the results of our analysis is negligible. Regarding the AHRQ PSI indicators used to estimate differences in the prevalence of adverse events during the pre- and post-implementation periods, we acknowledge that a limitation of this study is that the calculations were performed using a subset of the total sample due to the intrinsic nature of the indicators, which could lead to selection bias when estimating the effect of the algorithm on patient safety. 
However, the methodology used was the same for both periods, so we consider that the indicators are valid for the subset of patients included in the calculations. Also, to the best of our knowledge, no standardized, exhaustive categorization of surgical procedures by risk of adverse events exists in the literature29. In fact, the 2016 NICE guidelines for preoperative testing provide indications for different testing based on surgical complexity and risk (minor, intermediate, and major), but the interpretation of the procedures that fall into each category is left to the reader30. Examples of surgical procedures that are commonly cited as belonging to one of the three risk categories include excision of skin lesions or cataract surgery as minor or low risk; primary repair of inguinal hernia or knee arthroscopy as intermediate risk; and total joint replacement, colonic resection, or cardiac surgery as major or high-risk surgery4,31. However, other hospital networks may offer different procedures from those included in our centers’ catalogs. Therefore, although valid for our study population, our classification cannot be generalized to other centers. Although rates of same-day cancellations, early reinterventions, and postoperative sepsis showed a significant decrease in the post-intervention period, we advise that these findings be interpreted with caution, as the lack of a control group makes us unable to rule out other factors contributing to improved patient safety in the post-intervention period. Finally, our estimation for cost-saving may not be applicable to other health systems, as test-related costs vary greatly between countries.

In conclusion, reducing excessive preoperative testing is of crucial importance to achieving sustainable preoperative care. CDSS has demonstrated potential for helping clinicians adhere to preoperative testing guidelines. We observed that a closed-loop CDSS featuring an EHR-embedded algorithm for the automatic placing of preoperative test orders led to significant reductions in preoperative testing without increasing surgical reinterventions or same-day surgical cancellations due to incomplete testing, thus demonstrating reductions in healthcare expenditure and improvements in patient safety due to reducing risks associated with unnecessary testing.

Methods

Intervention

We developed a closed-loop CDSS that integrated a rule-based algorithm into the network EHR, in close collaboration with anesthesiologists, surgeons, the hospital information technology and systems team, and hospital management. All surgical procedures were reviewed and classified according to surgical risk (Supplementary Note 2). Investigators (L.E.M.A. and F.M.L.) reviewed the catalog of surgical procedures offered by participating centers, classifying procedures by estimated surgical risk for complications based on the complexity of procedures and a priori (that is, without considering patient factors) risk of adverse events over a six-month period. We based our categorization on our knowledge of surgical procedures (more than 45 years of combined experience) and on the available literature, especially recent guidelines for preoperative antithrombotic treatment, which perform an exhaustive categorization of surgical procedures according to the risk of postoperative bleeding. A rule-based, deterministic algorithm was then developed to deploy automatic test orders based on current guidelines5,32,33 and expert opinion.

The algorithm considered inputs such as type of surgical procedure and clinical characteristics including age, sex, American Society of Anesthesiologists (ASA) physical status classification34, presence of heart disease, COPD, cancer, etc. The algorithm would place automatic orders for chest X-rays for patients undergoing high-risk surgery and presenting BMI > 40, a history of lung disease, or cancer, or those classified as heavy smokers (≥20 cigarettes per day). ECGs were ordered for patients >45 years old undergoing high-risk surgery, or those undergoing intermediate or low-risk surgery with a history of heart disease, cardiovascular risk factors, or lung disease. We designed a specific preoperative blood test order set, including a complete blood count, sodium and potassium, creatinine, and a basic coagulation panel (prothrombin time and activated partial thromboplastin time), which was automatically placed for patients undergoing intermediate to high-risk surgery, and for selected low-risk procedures based on patients’ clinical and demographic characteristics. When designing the test order set, we decided to focus on avoiding orders for those tests that we had observed as consistently over-ordered (blood chloride, albumin, and liver function). The algorithm would only order these tests for patients scheduled for intermediate and high-risk surgery with a history of cancer, liver disease, or chronic kidney disease. We also included rules for ordering blood typing, classifying surgical procedures according to bleeding risk32, and automatically ordering blood group typing (ABO, Rh, and irregular antibody screening) only for those surgeries with a high risk of blood loss.
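The rules described above can be sketched as a small deterministic function. This is a minimal illustration rather than the deployed algorithm: the `Referral` fields, the order names, and the function `automatic_orders` are hypothetical, the chest X-ray rule is read as "high-risk surgery plus at least one listed patient factor", and the criteria for selected low-risk procedures are not specified in the text and are therefore not modeled.

```python
from dataclasses import dataclass


@dataclass
class Referral:
    """Hypothetical inputs; field names are illustrative, not the EHR schema."""
    surgical_risk: str               # "low", "intermediate" or "high"
    high_bleeding_risk: bool
    age: int
    bmi: float
    cigarettes_per_day: int = 0
    lung_disease: bool = False
    heart_disease: bool = False
    cardiovascular_risk_factors: bool = False
    cancer: bool = False
    liver_disease: bool = False
    chronic_kidney_disease: bool = False


def automatic_orders(r: Referral) -> set[str]:
    """Return the set of test orders placed under the rules as read from the text."""
    orders: set[str] = set()
    high = r.surgical_risk == "high"
    intermediate_or_high = r.surgical_risk in ("intermediate", "high")

    # Chest X-ray: assumed to require high-risk surgery AND one patient factor.
    if high and (r.bmi > 40 or r.lung_disease or r.cancer
                 or r.cigarettes_per_day >= 20):
        orders.add("chest_xray")

    # ECG: >45 years with high-risk surgery, or lower-risk surgery with
    # heart disease, cardiovascular risk factors, or lung disease.
    cardiopulmonary = (r.heart_disease or r.cardiovascular_risk_factors
                       or r.lung_disease)
    if (high and r.age > 45) or (not high and cardiopulmonary):
        orders.add("ecg")

    # Basic blood test order set (low-risk exceptions are not modeled here).
    if intermediate_or_high:
        orders.add("basic_blood_set")

    # Chloride, albumin and liver function only for selected comorbidities.
    if intermediate_or_high and (r.cancer or r.liver_disease
                                 or r.chronic_kidney_disease):
        orders.add("extended_chemistry")

    # Blood typing only when the procedure carries a high bleeding risk.
    if r.high_bleeding_risk:
        orders.add("blood_typing")
    return orders


example = Referral("high", False, age=60, bmi=42.0)
print(sorted(automatic_orders(example)))
```

Keeping each rule as a separate, commented condition mirrors the deterministic design described in the text and makes it straightforward to adapt individual rules to local guidelines.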

We embedded the algorithm into the network’s custom EHR, Cassiopea®. Surgeons referring patients for preoperative evaluation respond to EHR prompts (mandatory yes/no questions regarding clinical conditions such as the presence of heart disease, COPD, smoking status, cancer, etc.). Other inputs are automatically gleaned from EHR data, such as the type of surgical procedure, age, and sex. When surgeons complete the referral process in the EHR, preoperative test orders (outputs) are placed automatically, although manual override is possible (for example, surgeons can review the placed orders and delete any orders for tests that have recently been ordered by other physicians). Test results are automatically uploaded to the EHR for review by anesthesiologists during preoperative evaluation appointments.

We planned for an initial implementation period from September 2019 to December 2019, to account for staff onboarding, initial algorithm deployment, and technological troubleshooting. The implementation team, featuring members of the anesthesiology, surgery, and information technologies and systems departments, undertook staff education for both anesthesiology and surgery staff through written information and group workshops.

Regarding changes in preoperative testing during the COVID-19 period, apart from the automated test orders performed by the algorithm, the only additional protocol carried out during 2020–2021 was a required SARS-CoV-2 PCR test (nasal swab) for all patients admitted for elective or emergent surgery. SARS-CoV-2 PCR tests were ordered manually and were not included in our analysis. It is also important to note that, although the COVID-19 period served as a catalyst for the pre-existing “virtual pre-anesthesia clinic” in one of the participating centers (General Villalba Hospital), in which anesthesiologists review preoperative test results, clinical notes, and photos of the patient’s airway taken during an in-person appointment with a nurse practitioner, this did not affect the number of preoperative tests performed.

Study population

All patients undergoing surgical procedures from the thirteen surgical specialties (general surgery, maxillofacial surgery, pediatric surgery, plastic surgery, thoracic surgery, vascular surgery, dermatology, gynecology and obstetrics, neurosurgery, ophthalmology, ENT, traumatology and orthopedic surgery, and urology) at two academic hospitals in Madrid, Spain (Fundación Jiménez Díaz University Hospital and General Villalba University Hospital) from January 1st, 2018, to October 1st, 2023, were included in the analysis. We collected variables recorded as part of routine clinical care, such as age and sex. Where recorded, ASA class data were extracted from standardized fields from the EHR. The EHR database was queried for the number of chest X-rays, ECGs, and laboratory tests, including complete blood counts, coagulation studies, liver function, kidney function, and electrolytes. We also collected data on same-day surgery cancellation rates, which are recorded as part of routine hospital management, and surgical reintervention rates in the 30 days after initial surgery. Data for postoperative adverse events were recorded by the Coding and Clinical Audit Department as part of internal audits using the Agency for Healthcare Research and Quality Patient Safety Indicators (AHRQ PSIs), and included rates of postoperative bleeding, respiratory failure, pulmonary thromboembolism, deep vein thrombosis, sepsis, and wound dehiscence. AHRQ PSIs were calculated by the Coding and Clinical Audit Department, according to AHRQ Technical Specifications (Supplementary Note 1) using coding data from discharge reports to identify adverse events. The total number of patients included in the calculations differed according to indicator-specific criteria. 
Some common exclusion criteria were: patients younger than 18 years; patients with missing data for gender, age, date of surgery, or principal diagnosis; obstetric patients; patients undergoing same-day discharge; and indicator-specific preexisting conditions (such as pre-existing coagulation disorders for postoperative bleeding). For indicators such as wound dehiscence, only certain surgical procedures were included in the calculations as per AHRQ Technical Specifications. Data for surgical site infection were collected by the Infection Control Department as part of the Madrid health service’s yearly audit data collection and included rates of surgical site infection after colon surgery and after hip replacement surgery.

The primary endpoint of our study was the change, after implementation of the algorithm, in the trend of the percentage of patients undergoing chest X-rays, ECGs, preoperative blood work, and blood typing as part of pre-procedural workup. Our secondary endpoints were early surgical reintervention (<30 days) rates, same-day cancellation rates, and the rate of same-day cancellations due to insufficient preoperative testing, compared before and after the project’s implementation.

Analysis

We chose an interrupted time series (ITS) as the methodology for analyzing the primary endpoint. Time series analysis has been defined as one of the most robust quasi-experimental models for quality improvement projects, since randomization is often unfeasible, and blinding is usually impossible28,35. Briefly, an ITS design collects data from multiple time points both before and after an intervention (“interruption”). Pre-interruption data are modeled to allow estimation of the underlying secular trend, which is then extrapolated into the post-interruption time period, yielding a “counterfactual” estimation of what would have occurred in the absence of the intervention. Autocorrelation (the tendency of data points collected close together in time to be more similar than data points further apart) was accounted for in the statistical analysis using the AR_SELECT_ORDER function, which automatically selects the number of autoregressive terms to improve goodness of fit.
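To make the notion of autocorrelation concrete, the sketch below computes the sample autocorrelation of a series at a given lag using only the standard library. It is an illustration of the concept, not the procedure used in the study; the function name `autocorrelation` and the toy series are hypothetical. In StatsModels, lag selection of this kind is provided by `ar_select_order` in `statsmodels.tsa.ar_model`, which we assume is the function referred to above.

```python
def autocorrelation(series: list[float], lag: int) -> float:
    """Sample autocorrelation at the given lag (biased estimator)."""
    n = len(series)
    mean = sum(series) / n
    deviations = [x - mean for x in series]
    # Denominator: total sum of squared deviations from the mean.
    denominator = sum(d * d for d in deviations)
    # Numerator: products of deviations that are `lag` steps apart.
    numerator = sum(deviations[i] * deviations[i + lag] for i in range(n - lag))
    return numerator / denominator


# A steadily rising series has positive lag-1 autocorrelation ...
trending = [1.0, 2.0, 3.0, 4.0, 5.0]
# ... whereas an alternating series has negative lag-1 autocorrelation.
alternating = [1.0, -1.0, 1.0, -1.0]

print(autocorrelation(trending, 1))
print(autocorrelation(alternating, 1))
```

Monthly test-ordering rates typically resemble the first case, which is why ordinary regression standard errors would be too optimistic if autocorrelation were ignored.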

The interrupted time series analysis was carried out using an autoregressive model35. To analyze the effect of the algorithm we set the interruption on January 1st, 2020, coinciding with the end of the implementation period. The full dataset included a total of 69 time points (24 preintervention and 45 postintervention). Each ITS model included a linear monthly trend term for the preintervention period (‘Time’), a binary indicator variable for the months after algorithm implementation (‘Intervention’), capturing any level change in the outcome postintervention, and a linear monthly trend term only for the post-implementation period (‘Time-After’). These three terms allowed us to evaluate changes in the level and slope of the outcome after intervention. The model was estimated through conditional maximum likelihood estimation using the ARIMA function from the package ITS-Toolkit v0.0.1 and StatsModels v0.14.02036, and p-values and 95% confidence intervals were calculated using the same package. Automatic selection of the number of autocorrelation parameters was carried out using the AR_SELECT_ORDER function to optimize goodness of fit using the Akaike Information Criterion, Bayesian Information Criterion, and Hannan–Quinn Information Criterion scores. Visual inspection of residuals around predicted regression lines (standardized residual, histogram plus estimated density, and normal Q–Q) was also carried out.
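The three model terms can be illustrated by building the regressors for the 69 monthly time points. This is a sketch under stated assumptions: the class `ItsRow` and the function `build_design` are hypothetical names, 'Time' is assumed to run over the whole series as in a standard segmented regression, and 'Time-After' is assumed to count 1 to 45 from the first postintervention month. The exact coding used in the study is not given in the text.

```python
from dataclasses import dataclass

N_MONTHS = 69  # January 2018 to September 2023
N_PRE = 24     # months before the interruption on January 1st, 2020


@dataclass(frozen=True)
class ItsRow:
    time: int          # 'Time': 1, 2, ..., 69 (assumed to span the full series)
    intervention: int  # 'Intervention': 0 before the interruption, 1 after
    time_after: int    # 'Time-After': 0 before, then 1, 2, ..., 45


def build_design(n_months: int = N_MONTHS, n_pre: int = N_PRE) -> list[ItsRow]:
    """Build one row of regressors per monthly time point."""
    rows = []
    for t in range(1, n_months + 1):
        post = 1 if t > n_pre else 0
        rows.append(ItsRow(time=t, intervention=post,
                           time_after=(t - n_pre) * post))
    return rows


design = build_design()
print(design[23])  # last preintervention month (December 2019)
print(design[24])  # first postintervention month (January 2020)
```

With this coding, the coefficient on 'Intervention' estimates the immediate level change and the coefficient on 'Time-After' estimates the change in slope relative to the preintervention trend.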

We evaluated the absolute and relative reduction in the number of unnecessary preoperative tests at 45 months post-implementation by comparing fitted postimplementation rates and the projected rates using preintervention data (counterfactual effect). We calculated 95% CIs using the bootstrapping method described by Zhang et al.37. The attributable reduction in preoperative test-related costs at 45 months post-implementation was estimated using counterfactual test ordering rates and current test costs.
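The counterfactual comparison can be sketched as follows, assuming a segmented model with an intercept, a baseline slope, a level change, and a slope change. The function names and the coefficient values are hypothetical and chosen only so the arithmetic is easy to follow; they are not the estimates reported in Table 2, and the bootstrapped confidence intervals are not reproduced here.

```python
def predicted_rate(intercept: float, slope: float, level_change: float,
                   slope_change: float, t: int, n_pre: int,
                   counterfactual: bool = False) -> float:
    """Predicted test-order rate (%) at month t from a segmented model."""
    baseline = intercept + slope * t
    if counterfactual or t <= n_pre:
        # Counterfactual: the preintervention trend simply continues.
        return baseline
    return baseline + level_change + slope_change * (t - n_pre)


def counterfactual_difference(coefs: tuple[float, float, float, float],
                              t: int, n_pre: int = 24) -> tuple[float, float]:
    """Absolute (percentage points) and relative (%) change at month t."""
    fitted = predicted_rate(*coefs, t=t, n_pre=n_pre)
    expected = predicted_rate(*coefs, t=t, n_pre=n_pre, counterfactual=True)
    absolute = fitted - expected
    relative = 100.0 * absolute / expected
    return absolute, relative


# Hypothetical coefficients: flat 30% baseline, 15-point drop in level,
# then a further 0.2-point decline per month after the intervention.
coefs = (30.0, 0.0, -15.0, -0.2)
abs_change, rel_change = counterfactual_difference(coefs, t=69)
print(f"{abs_change:.1f} percentage points, {rel_change:.1f}% relative change")
```

Combining the level and slope terms in this way is what allows a single overall effect to be reported at 45 months, rather than two separate coefficients.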

Secondary endpoints included changes in the number of patients undergoing testing for liver function, albumin, or chloride. We also compared early surgical reintervention (<30 days) rates and same-day cancellation rates before and after implementation. Results were reported as mean (standard deviation) or absolute frequency (percentage), depending on the nature of the variables. Differences in proportions were compared with a two-sided Chi-square test and differences in means or medians with a Mann–Whitney U-test. A p-value <0.05 was considered statistically significant. Analyses were performed with Python 3.11.5.
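As a worked example of the comparison of proportions, the sketch below computes an odds ratio with a Woolf (log-normal) confidence interval for the albumin, liver function, or chloride orders reported in the Results. Two points are assumptions: the denominators are taken to be the numbers of preoperative blood test order sets in each period (42,116 and 88,037), because these reproduce the reported percentages, and the Woolf interval is a common choice that may differ from the method actually used. The function name `odds_ratio_with_ci` is hypothetical.

```python
import math


def odds_ratio_with_ci(events_post: int, total_post: int,
                       events_pre: int, total_pre: int,
                       z: float = 1.96) -> tuple[float, float, float]:
    """Odds ratio (post vs pre) with a Woolf log-normal confidence interval."""
    a, b = events_post, total_post - events_post
    c, d = events_pre, total_pre - events_pre
    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) from the four cell counts.
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper


# Counts from the Results; denominators are an inference (see lead-in).
or_, ci_low, ci_high = odds_ratio_with_ci(6286, 88037, 14349, 42116)
print(f"OR {or_:.2f} (95% CI {ci_low:.2f} to {ci_high:.2f})")
```

Under these assumptions the calculation is consistent with the reported odds ratio of 0.15 (0.14–0.15).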

The study protocol was approved by the Fundación Jiménez Díaz University Hospital Institutional Ethics Committee (EO084-24). The need for informed consent was waived due to the retrospective nature of the study and the fact that only pseudonymized data were included. The SQUIRE 2.0 reporting guidelines38 were followed when writing the report.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Code availability

The underlying code for this study is not publicly available but may be made available to qualified researchers on reasonable request from the corresponding author.

References

Jablonski, S. G. & Urman, R. D. The growing challenge of the older surgical population. Anesthesiol. Clin. 37, 401–409 (2019).

Nepogodiev, D. & Bhangu, A. Sustainable surgery: roadmap for the next 5 years. Br. J. Surg. 109, 790–791 (2022).

Aronson, S., Sangvai, D. & McClellan, M. B. Why a proactive perioperative medicine policy is crucial for a sustainable population health strategy. Anesth. Analg. 126, 710–712 (2018).

Dossett, L. A., Edelman, A. L., Wilkinson, G. & Ruzycki, S. M. Reducing unnecessary preoperative testing. BMJ 379, e070118 (2022).

Mellin-Olsen, J., Staender, S., Whitaker, D. K. & Smith, A. F. The Helsinki Declaration on patient safety in anaesthesiology. Eur. J. Anaesthesiol. 27, 592–597 (2010).

Bernstein, J., Roberts, F. O., Wiesel, B. B. & Ahn, J. Preoperative testing for hip fracture patients delays surgery, prolongs hospital stays, and rarely dictates care. J. Orthop. Trauma 30, 78–80 (2016).

Wang, E. Y. et al. Environmental emissions reduction of a preoperative evaluation center utilizing telehealth screening and standardized preoperative testing guidelines. Resour. Conserv. Recycl. 171, 105652 (2021).

Sutton, R. T. et al. An overview of clinical decision support systems: benefits, risks, and strategies for success. npj Digital Med. 3, 1–10 (2020).

Ennis, J. et al. Clinical decision support improves physician guideline adherence for laboratory monitoring of chronic kidney disease: a matched cohort study. BMC Nephrol. 16, 1–11 (2015).

Karlsson, L. O. et al. A clinical decision support tool for improving adherence to guidelines on anticoagulant therapy in patients with atrial fibrillation at risk of stroke: a cluster-randomized trial in a Swedish primary care setting (the CDS-AF study). PLoS Med. 15, e1002528 (2018).

Magrath, M. et al. Impact of a clinical decision support system on guideline adherence of surveillance recommendations for colonoscopy after polypectomy. J. Natl. Compr. Cancer Netw. 16, 1321–1328 (2018).

Shalom, E., Shahar, Y., Parmet, Y. & Lunenfeld, E. A multiple-scenario assessment of the effect of a continuous-care, guideline-based decision support system on clinicians’ compliance to clinical guidelines. Int J. Med. Inf. 84, 248–262 (2015).

Sim, E. Y., Tan, D. J. A. & Abdullah, H. R. The use of computerized physician order entry with clinical decision support reduces practice variance in ordering preoperative investigations: a retrospective cohort study. Int J. Med. Inf. 108, 29–35 (2017).

Flamm, M. et al. Quality improvement in preoperative assessment by implementation of an electronic decision support tool. J. Am. Med. Inform. Assoc. 20, e91–e96 (2013).

Matulis, J., Liu, S., Mecchella, J., North, F. & Holmes, A. Choosing wisely: a quality improvement initiative to decrease unnecessary preoperative testing. BMJ Open Qual. 6, bmjqir.u216281.w6691 (2017).

Smetana, G. W. The conundrum of unnecessary preoperative testing. JAMA Intern Med. 175, 1359–1361 (2015).

Álvaro de la Parra, J. A. et al. Effect of an algorithm for automatic placing of standardised test order sets on low-value appointments and attendance rates at four Spanish teaching hospitals: an interrupted time series analysis. BMJ Open 14, e081158 (2024).

Morris, A. H. et al. Computer clinical decision support that automates personalized clinical care: a challenging but needed healthcare delivery strategy. J. Am. Med. Inform. Assoc. 30, 178–194 (2022).

Colicchio, T. K., Cimino, J. J. & Del Fiol, G. Unintended consequences of nationwide electronic health record adoption: challenges and opportunities in the post-meaningful use era. J. Med. Internet Res. 21, e13313 (2019).

Teisberg, E., Wallace, S. & O’Hara, S. Defining and implementing value-based health care: a strategic framework. Acad. Med. 95, 682 (2020).

Cassel, C. K. & Guest, J. A. Choosing wisely: helping physicians and patients make smart decisions about their care. JAMA 307, 1801–1802 (2012).

Sorenson, C., Japinga, M., Crook, H. & McClellan, M. Building a better health care system post-Covid-19: steps for reducing low-value and wasteful care. NEJM Catal. Innov. Care Deliv. 1, 1–10 (2020).

Dillström, M., Bjerså, K. & Engström, M. Patients’ experience of acute unplanned surgical reoperation. J. Surg. Res. 209, 199–205 (2017).

Dorobantu, D. M. et al. Factors associated with unplanned reinterventions and their relation to early mortality after pediatric cardiac surgery. J. Thorac. Cardiovasc. Surg. 161, 1155–1166.e9 (2021).

Deery, S. E. et al. Early reintervention after open and endovascular abdominal aortic aneurysm repair is associated with high mortality. J. Vasc. Surg. 67, 433–440.e1 (2018).

Wong, D. J. N. et al. Cancelled operations: a 7 day cohort study of planned adult inpatient surgery in 245 UK National Health Service hospitals. Br. J. Anaesth. 121, 730–738 (2018).

Brown, S. R. & Brown, J. Why do physicians order unnecessary preoperative tests? A qualitative study. Fam. Med. 43, 338–343 (2011).

Fan, E., Laupacis, A., Pronovost, P. J., Guyatt, G. H. & Needham, D. M. How to use an article about quality improvement. JAMA 304, 2279–2287 (2010).

Newsome, K., McKenny, M. & Elkbuli, A. Major and minor surgery: terms used for hundreds of years that have yet to be defined. Ann. Med. Surg. 66, 102409 (2021).

Routine preoperative tests for elective surgery: © NICE (2016) Routine preoperative tests for elective surgery. BJU Int. 121, 12–16 (2018).

Dell-Kuster, S. et al. Prospective validation of classification of intraoperative adverse events (ClassIntra): international, multicentre cohort study. BMJ 370, 2917 (2020).

Vivas, D. et al. Manejo perioperatorio y periprocedimiento del tratamiento antitrombótico: documento de consenso de SEC, SEDAR, SEACV, SECTCV, AEC, SECPRE, SEPD, SEGO, SEHH, SETH, SEMERGEN, SEMFYC, SEMG, SEMICYUC, SEMI, SEMES, SEPAR, SENEC, SEO, SEPA, SERVEI, SECOT y AEU. Rev. Esp. Cardiol. 71, 553–564 (2018).

Halvorsen, S. et al. ESC guidelines on cardiovascular assessment and management of patients undergoing non-cardiac surgery: developed by the task force for cardiovascular assessment and management of patients undergoing non-cardiac surgery of the European Society of Cardiology (ESC) Endorsed by the European Society of Anaesthesiology and Intensive Care (ESAIC). Eur. Heart J. 43, 3826–3924 (2022).

Mayhew, D., Mendonca, V. & Murthy, B. V. S. A review of ASA physical status – historical perspectives and modern developments. Anaesthesia 74, 373–379 (2019).

Penfold, R. B. & Zhang, F. Use of interrupted time series analysis in evaluating health care quality improvements. Acad. Pediatr. 13, S38–S44 (2013).

Seabold, S. & Perktold, J. Statsmodels: econometric and statistical modeling with python. In Proceedings of the 9th Python in Science Conference 57–61 (SCIRP, Austin, 2010).

Zhang, F., Wagner, A. K., Soumerai, S. B. & Ross-Degnan, D. Methods for estimating confidence intervals in interrupted time series analyses of health interventions. J. Clin. Epidemiol. 62, 143–148 (2009).

Ogrinc, G. et al. SQUIRE 2.0 (Standards for QUality Improvement Reporting Excellence): revised publication guidelines from a detailed consensus process. BMJ Qual. Saf. 25, 986–992 (2016).

Acknowledgements

The authors would like to thank Juan José Serrano from the Red 4-H BI Department for his help with the acquisition of the data. We also thank the members of the Coding and Clinical Audit Department of the Fundación Jiménez Díaz University Hospital, in particular Dr. José Miguel Arce, Dr. Blanca Rodríguez Alonso, and Dr. Catalina Martín Cleary, for their help with the acquisition of safety data, as well as Dr. María Dolores Martín Ríos and Dr. Laura del Nido Varo of the Infection Control Departments for their contributions to this work. We are grateful to the members of the Anesthesiology Department and Surgical Departments of the Fundación Jiménez Díaz University Hospital and General Villalba University Hospital for their support. This work did not receive any funding.

Author information

Contributions

L.E.M.A., J.L.G.M., M.d.O.R., J.S.A., J.A.A.d.l.P., C.C.S., F.M.L., and J.A.C. designed and executed the quality improvement intervention. B.P. drafted the manuscript. B.P., M.d.O.R., and J.S.A. were responsible for coordinating data extraction. M.A.M.C. performed data extraction. M.A.V.G. performed data analysis. All authors read and approved the final draft of the manuscript.

Ethics declarations

Competing interests

J.L.G.M., L.E.M.A., B.P., M.A.M.C., and F.M.L. are employees of Quirónsalud Healthcare Network, and M.d.O.R., J.S.A., J.A.A.d.l.P., J.A.C., and C.C.S. form part of the Quirónsalud management team. The remaining authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Gracia Martínez, J.L., Pfang, B., Morales Coca, M.Á. et al. Implementing a closed loop clinical decision support system for sustainable preoperative care. npj Digit. Med. 8, 6 (2025). https://doi.org/10.1038/s41746-024-01371-7
