Abstract

Nasal endoscopy is crucial for the early detection of nasopharyngeal carcinoma (NPC), but its accuracy relies heavily on the clinician’s expertise, posing challenges for primary healthcare providers. Here, we retrospectively analysed 39,340 nasal endoscopic white-light images from three high-incidence NPC centres, utilising eight advanced deep learning models to develop an Internet-enabled smartphone application, “Nose-Keeper”, that can be used for early detection of NPC and five prevalent nasal diseases and assessment of healthy individuals. Our app demonstrated a remarkable overall accuracy of 92.27% (95% Confidence Interval (CI): 90.66%–93.61%). Notably, its sensitivity and specificity in NPC detection achieved 96.39% and 99.91%, respectively, outperforming nine experienced otolaryngologists. Explainable artificial intelligence was employed to highlight key lesion areas, improving Nose-Keeper’s decision-making accuracy and safety. Nose-Keeper can assist primary healthcare providers in diagnosing NPC and common nasal diseases efficiently, offering a valuable resource for people in high-incidence NPC regions to manage nasal cavity health effectively.

Similar content being viewed by others

Introduction

Nasopharyngeal carcinoma (NPC) is one of the most common malignancy of the head and neck, particularly in East and Southeast Asia1,2. Nonspecific early symptoms of NPC often lead to a delayed diagnosis, resulting in a poorer prognosis3,4. The 10-year survival rate of advanced cases typically ranges from 50% and 70%. In contrast, the 5-year survival rate for early NPC cases can approach 94%, highlighting the importance of early detection5,6,7,8,9. Thus, devising a technique for early detection of NPC during clinical examinations is the primary aim of this study.

Nasal endoscopy plays a crucial role in the early detection of NPC5,10. However, the accuracy of this examination relies heavily on the medical experience and expertise of the operators. Non-otolaryngology specialists, such as primary care doctors, emergency doctors, general practitioners, and paediatricians, may encounter difficulties in interpreting endoscopic images owing to professional obstacles and inadequate expertise. They often overlook the early signs of NPC and confuse them with those of nasal or nasopharyngeal diseases. This negligence frequently leads to missed diagnosis, misdiagnosis, and delayed referrals, resulting in patients missing critical treatment windows11,12,13. This issue is particularly pronounced in low- and middle-income countries where healthcare resources are limited and disease awareness is inadequate. Moreover, patients frequently overlook early NPC symptoms such as headaches and nasal congestion14,15. Concurrently, financial constraints also lead to delayed medical consultations, increasing the risk of missing crucial early diagnosis and treatment9,16. Consequently, to improve early detection rates and patient prognosis, it is essential to develop a novel, easy-to-use, and affordable method for the early detection of NPC using endoscopic images.

Intelligent diagnostic solutions based on smartphones have enormous potential in the medical field, especially given the rapid growth of smartphone capabilities and the widespread application of deep learning algorithms17. By analysing medical images immediately, these mobile health applications have demonstrated exceptional accuracy and efficiency in early disease identification and are becoming an emerging trend in healthcare18. For example, advances in the early diagnosis of disorders such as keratitis, biliary atresia, ear infections, skin cancer, and lupus have been made, with some applications outperforming human expert performance19,20,21,22,23,24. However, the creation of deep-learning smartphone applications for NPC remains an untapped research topic. Given the importance and complexity of identifying this malignancy, this gap highlights the importance and considerable potential of such applications. Therefore, exploring smartphone-based intelligent diagnostic methods for NPC promises to provide unique solutions for improving diagnostic accuracy and accessibility with significant scientific and practical significance.

In this study, we retrospectively collected 39,340 endoscopic white light images of 2134 NPC patients and 11,824 non-NPC patients without NPC from three centres in high-incidence areas of NPC and developed eight advanced deep learning models with different architectures. Through validation, testing, and comparison with nine experienced otolaryngologists, we ultimately developed a smartphone application based on the Swin Transformer model called Nose-Keeper to improve the accuracy and efficiency of healthcare workers (especially primary healthcare providers) in diagnosing NPC, raise public’s awareness of NPC, and refer patients to professional medical institutions in a timely manner.

Results

Internal evaluation of various deep learning models

Table 1 presents the average overall accuracy, standard deviation, and corresponding 95% CI of the eight models for the internal dataset. The results indicate that the eight models developed achieved encouraging results in diagnosing seven types of nasal endoscopic images using transfer learning strategies and a large-scale dataset. The average overall accuracy of all the models exceeded 0.92. SwinT performed the best among the eight models, with an average overall accuracy of 0.9515. ResNet had the lowest average overall accuracy among the eight models, reaching 0.9221. From the standard deviation perspective, the most stable model was MaxViT, followed by SwinT. In addition, PoolF exhibited the highest standard deviation and the worst stability. In addition, Supplementary Table 1 reports the time required for different models during the experimental process.

Table 2 reports the precision, sensitivity, specificity, and f1-score of eight models for diagnosing NPC. The experimental results showed that the developed models almost exceeded 0.9900 for all four indicators of NPC, except for the precision of ResNet. For sensitivity, SwinT achieved the best results, reaching 0.9984 (±0.0023) (0.9939–1.0000). For precision, specificity, and F1-score, PoolF achieved the best results, reaching 0.9959 (±0.0034) (0.9892–1.0000), 0.9992 (±0.0006) (0.9980–1.0000), and 0.9969 (±0.0012) (0.9945–0.9993), respectively. Table 3 reports the performance of the eight models developed in diagnosing five non-NPC diseases and normal samples. Based on the results of evaluation metrics and the potential impact of model architecture on the performance of external testing, we chose SwinT, PoolF, Xception, and ConvNeXt as candidate models for the smartphone application.

We initialised each candidate model parameter using the best weight from the training set and then used the corresponding internal validation set to determine the optimal temperature for each candidate model when using a temperature scaling strategy. Figure 1 shows the result changes of calibration metrics (Brier-score and Log-Loss) for each model on the internal test set. The experimental results indicate that the pre- and post-calibration results (Fig. 1a, b) of SwinT were the best among the candidate models. In comparison, the results of the remaining candidate models were obviously inferior to SwinT (Fig. 1c–h).

The result changes of Brier-Score and Log-Loss of each model for each category were plotted. a Brier-Score of SwinT. b Log-Loss of SwinT. c Brier-Score of Xception. d Log-Loss of Xception. e Brier-Score of PoolF. f Log-Loss of PoolF. g Brier-Score of ConvNeXt. h Log-Loss of ConvNeXt. Particularly, “ALL” in X-axis means the calibration performances of the entire external test set.

External evaluation of each candidate model

Four candidate models were tested using the external test set from LZH to further evaluate their performances in real-world clinical settings. Figure 2 shows the overall accuracy and confusion matrix of the four candidate models on the external test set. All the predicted results were shown after calibration. The confusion matrix was used to analyse the sensitivity and specificity of each model for a specific category. The experimental results indicate that SwinT (Fig. 2a) achieved a state-of-the-art performance, far superior to Xception (Fig. 2b), PoolF (Fig. 2c) and ConvNeXt (Fig. 2d). Concretely, it achieved the highest overall accuracy, reaching 92.27% (95% CI: 90.66%–93.61%). For NPC, the sensitivity and specificity of SwinT reached 96.39% (95% CI: 92.74%–98.24%) and 99.91% (95% CI: 99.47%–99.98%), respectively (Fig. 2a). For non-NPC categories, the sensitivity and specificity of the SwinT exceeded 86.00% and 95.00%, respectively. Figure 3 shows the ROC curves of the four candidate models. In terms of the ROC curve, the SwinT was also found to be the best model (Fig. 3a). SwinT’s AUCs for all seven categories were greater than 0.9900. For NPC, SwinT’s AUC reached 0.9999 (95% CI: 0.9996–1.0000). In contrast, the AUCs of Xception (Fig. 3b), PoolF (Fig. 3c), and ConvNeXt (Fig. 3d) for NPC were 0.9994 (95% CI: 0.9985–0.9999), 0.9916 (95% CI: 0.9856–0.9958), and 0.9989 (95% CI: 0.9976–0.9997), respectively,

However, compared to the internal test set, the performances of the four candidate models decreased on the external test set. We initially extrapolated that this phenomenon might be caused by the different imaging equipment and image acquisition process in the external test set. Figure 3 also presents the optimum Youden index results for each model for the different categories to compare the performance of the different models further. Experimental verification showed that SwinT was the best model for diagnosing NPC, with a Youden index of 0.992. SwinT also demonstrated excellent performance in diagnosing AH, AR, and CRP with Youden indices of 0.991, 0.925, and 0.924, respectively. The results fully demonstrated the application potential of SwinT in diagnosing different categories. Overall, the experimental results for the external test set indicated that SwinT performed the best among the four candidate models. Therefore, SwinT was chosen to deploy the smartphone application.

Robustness analysis of the SwinT

Figure 4 reports the results of the robustness analysis of the SwinT. Figure 4a illustrates examples from the external test set using various transformation strategies. Figure 4b–f details the performance metrics (overall accuracy, sensitivity, precision, specificity, and f1-score) of SwinT across the 12 enhanced datasets. The SwinT showed good robustness to rotation changes, likely benefiting from the training of the model with random rotation augmentations. For Gaussian blur transformation, the performance of the model decreased with an increase in the blur level, but the overall performance remained relatively stable. When using a slight decrease (Brightness I) or increase (Brightness II) in brightness, the accuracy of the model was minimally affected by interference, and its overall performance remained relatively steady. However, an excessive increase in brightness caused a significant decrease in model performance, affecting the sensitivity (AH, CRP, NPC, and RHI), precision (AH), F1-score (AH, CRP, and NPC), and specificity for AH. Similarly, when applying slight decreases (Saturation I) and increases (Saturation II) in the saturation, the accuracy of the model was not significantly affected. However, significant saturation enhancement (Saturation III) led to notable deviations in accuracy, particularly affecting the sensitivity for AH, precision for CRP and RHI, F1-score for AH and CRP, and specificity for CRP and RHI.

Heatmap and performance comparison between the SwinT and otolaryngologists

Figure 5 visually explains the model’s internal decision-making mechanism and represents the results of the human-machine comparison experiment. Figure 5a shows the heat maps generated by SwinT using the Grad-CAM algorithm for seven types of nasal endoscopic images. Experimental results indicated that SwinT could effectively focus on the key areas of each type of image. Visually, the colour distribution of the heat map conformed to the professional insights of otolaryngologists. For NPC, Grad-CAM effectively helped SwinT to highlight the lesion area.

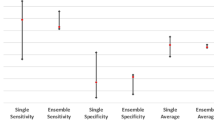

Figure 5b shows the performance of SwinT and the nine otolaryngologists in diagnosing different diseases. In Fig. 5b, the closer the colour was to green or yellow, the more accurate the doctor or model was in diagnosing the disease. The average sensitivity of the nine physicians for NPC was 0.8927. Among them, the best doctor to diagnose NPC was a doctor with eight years of clinical experience, with a sensitivity of 0.9433, and the worst doctor was a doctor with three years of clinical experience, with a sensitivity of 0.8247. Obviously, SwinT outperformed all experts in diagnosing NPC. Furthermore, for AR, SwinT outperformed all experts. Because SwinT was prone to misjudge CRP as an AH, its performance was not as good as that of experts. For AH, the SwinT was superior to most other physicians. The SwinT was superior to some doctors in terms of the DNS, NOR, and RHI scores. Figure 5c and Supplementary Fig. 1 show the performance differences between SwinT and the nine otolaryngologists in diagnosing various types of endoscopic images in more detail. Based on the optimum Youden index results of SwinT and otolaryngologists, we concluded that SwinT outperformed all otolaryngologists in diagnosing AH, AR, NPC, and RHI. When diagnosing the remaining three types of endoscopic images, SwinT was slightly inferior to some otolaryngologists (clinical experience: from five years to nine years).

Display of the smartphone application

The main function of Nose-Keeper (Fig. 6) is to read real-time images captured by a nasal endoscope connected to an Android phone with a Micro USB interface or to load local nasal endoscope images (for example, the user can capture images from other endoscope devices, and then the image is uploaded to the smartphone album) (Fig. 6a). Subsequently, by clicking the one-click detection button (Fig. 6b) on the application, the user can obtain the diagnosis results of the image using the AI model. In addition, we listed a heat map corresponding to the original image on the results page to enhance the security of the application and to remind users to pay attention to the diseased area actively (Fig. 6c). Nose-Keeper can also read multiple endoscopic images simultaneously and use a voting mechanism to further improve prediction accuracy (Supplementary Note 6). In particular, we listed reference images of various diseases and some common medical sense to improve the users’ understanding of diseases and medical procedures. Such an intelligent application will potentially help the majority of primary care providers who lack clinical experience and professional knowledge in diagnosing nasal diseases, and the public residing in high-risk areas will primarily judge whether the captured nasal endoscopic image contains NPC, common nasal cavities, and nasopharynx diseases. We tested the running speed of Nose-Keeper using four different Android smartphones (i.e. Xiaomi 14, Xiaomi 12S Pro, HUAWEI nova 12, and HUAWEI mate 60) at a network speed of 100 Mb/S. The results show that the time consumption is ~0.5 s to 1.1 s. For more detailed feature introductions and user pages of Nose-Keeper, please refer to Supplementary Fig. 2 and Supplementary Video 1.

Discussion

To the best of our knowledge, this study is the first to develop a smartphone application based on a deep learning model named Nose-Keeper to diagnose NPC and non-NPC effectively. To ensure the practicality of Nose-Keeper, we retrospectively collected 6,014 NPC white-light endoscopic images and 33,326 white-light endoscopic images of common diseases of the nasal cavity, nasopharynx, and normal nasal cavities from three hospitals and trained eight different deep learning models. In this study, to shorten the training time of the model and improve the generalisation of the model as much as possible, we used a popular transfer learning strategy. Through extensive evaluation and testing (including model metric comparison, model calibration, robustness analysis, and human-machine comparison), we found that the developed SwinT reached state-of-the-art application potential and encapsulated it into Nose-Keeper. Compared to nine otolaryngologists with different diagnostic experiences, Nose-Keeper outperformed all otolaryngologists in diagnosing NPC. For diseases other than NPC, the diagnostic performance of the model was comparable to that of most physicians. In addition, we used the Grad-CAM algorithm in the application to visually display the areas in the image that affect the decision-making results of the model, which effectively reminds users to pay attention to the lesion area. Because the Nose-Keeper is deployed on a cloud server, its operation is not affected by the hardware. Users only need to use the Internet to obtain diagnostic results from the Nose-Keeper in real-time.

Previously, researchers in the field of computer vision used CNNs to process various images. Researchers have recently begun to focus on the potential of ViTs in computer vision. Initially, ViTs were applied to natural language processing systems such as the recently popular large language models. Compared with traditional CNNs, ViTs rely on a self-attention mechanism to achieve better results in image processing. Considering that ViTs are attracting more and more attention and may be more suitable for processing endoscopic images, we tentatively trained three ViTs and three CNNs as well as two hybrid models in this work to obtain the best model. The experimental results demonstrated that most of the selected ViTs were better than convolutional neural networks for diagnosing nasal endoscopic images. Notably, we found that SwinT achieved state-of-the-art performance on both the internal and external test sets. From the perspective of its internal modelling mechanism, SwinT is essentially a hierarchical Transformer that uses shifted windows. SwinT constructs a hierarchical representation by starting from small-sized patches and gradually merging neighbouring patches in deeper Transformer layers25. By employing the shifted window-based self-attention, it only calculates self-attention within a local window, which greatly reduces the computational complexity. Meanwhile, in consecutive SwinT blocks, the shifted windowing scheme allows for cross-window connection, i.e. the model can shift the windows in a certain pattern to ensure that the lesion feature information flows between different windows. The unique mechanism of the SwinT enables it to maintain the advantages of the ViTs in modelling long-range dependencies while effectively capturing local information in nasal endoscopy images, thereby improving the accuracy in diagnosing NPC and non-NPC diseases. These findings provide practical guidance for nasal endoscopy researchers.

The World Health Organization (WHO) Global Observatory for eHealth (GOe) defines mHealth as medical and public health practice supported by mobile devices26. mHealth has the potential to change healthcare and support public health and primary healthcare27. With the booming development of smartphones in our daily lives, the combination of advanced medical technology and mHealth to manage diseases has become an unstoppable trend28. Nowadays, smartphones have become an indispensable part of daily life, and mHealth applications have found a place in healthcare systems29. On a global scale, the penetration rate of smartphones reached 68% in 202230. Especially in developing countries, the number of smartphone owners is constantly increasing, leading to significant social and economic changes31. In 2022, nearly nine in 10 internet users in Southeast Asia located in the high incidence area of NPC will use smartphones this year32. All these facts prompted us to develop a smartphone application called Nose-Keeper which utilise artificial intelligence and may drive the development of the primary healthcare industry. With the support of the Internet, especially in developing countries and areas with high incidences of NPC, Nose-Keeper can be used to improve the care of nasal health and reduce medical costs. It can be predicted that with the further increase of the penetration rate of smartphone devices and the Internet in the future, the availability of Nose Keeper will be greatly improved, and it can effectively provide as many patients with fast, convenient and non-professional primary diagnosis services.

NPC is a severe public health problem in the underdeveloped Southeast Asian countries. In Indonesia, 13,000 new cases of NPC are reported every year33. NPC is the fifth most common cancer and ninth most common cancer in Malaysia and Vietnam34,35. Unfortunately, NPC is characterised by its high invasiveness and early metastasis. Owing to the insufficient number of experts in these countries, lack of sufficient experience among most primary care providers, and lack of medical awareness and adequate financial income among patients themselves, misdiagnosis and delayed diagnosis often occur, seriously threatening the lives of patients. Many patients with NPC are at an advanced stage when they first seek treatment36. Clinically, NPC is considered to be the result of the interaction between Epstein–Barr virus (EBV) infection and genetic and environmental factors (such as drinking, smoking, and eating salted fish)37. Therefore, during the actual medical consultation process, general practitioners or doctors in primary care institutions can comprehensively consider the diagnostic results, risk factors, and EB antibody results of the Nose-Keeper, thereby further enhancing the reliability of the diagnostic results and improving timely referrals of patients with NPC. The general public can use Nose-Keeper as a daily nasal health management tool. Specifically, the public can purchase high-resolution electronic nasal endoscopes on the market, learn about and use the endoscope under the instructions and professionals, and regularly upload endoscopic images to Nose-Keeper. In addition, our experiments show that Nose-Keeper is even more sensitive to NPC than a clinician with nine years of experience; therefore, it may also be used as an auxiliary tool to reduce the work stress of experts. Overall, AI-based Nose-Keeper has the following significant advantages in early detection of NPC in clinical practice: Firstly, unlike human otolaryngologists, AI models do not suffer from fatigue or variability in performance, which can usually be caused by human factors like physical and mental stress. AI models can consistently implement the same standards across all cases, which is especially beneficial in mass screening scenarios where consistency is critical. Secondly, AI models can analyze large datasets more efficiently and fastly than humans. The large-scale dataset provides the models with rich learning materials, enabling them to learn how to effectively detect and diagnose ENT diseases in a short period of time. In contrast, human clinicians require extensive learning, training, and long-term experience accumulation. Thirdly, AI models, particularly deep learning models, are adept at recognising complex patterns in endoscopic images, such as subtle variations and early characteristics of ENT diseases that experienced otolaryngologists might ignore. This capability is crucial in the early detection of diseases from medical images, where early signs may be easily overlooked. Fourthly, AI can significantly reduce the diagnosis time, which is critical in managing a large number of patients. AI can process and analyze data much faster than human experts, enabling quicker decision-making that can be vital in clinical settings where time is of the essence, such as in emergency care.

Recently, several deep-learning studies on NPC have been published. In 2018, Li et al.38 used 28,966 white-light endoscopic images to develop a deep learning model for detecting the normal nasopharynx, NPC, and other nasopharyngeal malignant tumours. Their model performance surpassed that of experts, with an overall accuracy of 88.7%. In 2022, Xu et al.39 developed a deep learning model using 4,783 nasopharyngoscopy images to identify NPC and non-NPC (inflammation and hyperplasia). In 2023, He et al.40 developed a deep learning model using 2,429 nasal endoscopy video frames and an algorithm named You Only Look Once (YOLO) for real-time detection of NPC in endoscopy videos. The sensitivity of their system for the detection of NPC was 74.3%. Compared with these studies, our work has the following highlights. First, our dataset consisted of 39,340 white-light endoscopic images containing both NPC and six categories of non-NPCs. The scale of our dataset is the largest ever, and the images were from three hospitals in areas with a high incidence of NPC, indicating that our dataset is more representative of real-world data. Second, by verifying many deep learning models with different architectures, we found that the Vision Transformers using the transfer learning strategy were better than the convolutional neural network using the transfer learning strategy for diagnosing NPC, providing model development guidance for subsequent researchers. The third and most important point is that we developed the Nose-Keeper. This is the first smartphone-based cloud application for NPC diagnosis in the world and is convenient to operate. To ensure the safety of the Nose-Keeper, we used the Grad-CAM algorithm to explain the decision-making process of the Nose-Keeper model visually and compared it with that of nine otolaryngologists. In addition, Nose-Keeper can identify five common diseases in daily life that are similar in appearance and clinical manifestations to NPC. These category settings make Nose-Keeper more reasonable and reliable. In fact, in areas with a high incidence of NPC, the number of non-NPC patients is far greater than the number of NPC patients. Therefore, Nose-Keeper can also provide convenient primary diagnostic services for numerous non-NPC patients and reduce their concerns about NPC, thereby alleviating the burden on the local medical system. Likewise, people in low-incidence areas of NPC can use Nose-Keeper as a nasal health management tool in their daily lives.

The application of the Nose-Keeper in healthcare in developing countries is expected to have a significant positive impact. Its primary advantage is that it significantly improves the early diagnosis of NPC and other diseases. Given that developing countries may lack skilled medical professionals and advanced medical facilities, the preliminary screening features of smartphone applications can significantly enhance early detection rates. This is especially important for decreasing misdiagnoses or missed diagnoses, which helps save medical resources and reduces reliance on more expensive and sophisticated treatment plans. In addition, the Nose-Keeper can be used as an educational tool to raise public knowledge of NPC and other frequent nasopharyngeal disorders, particularly in places with limited medical education resources. Primary healthcare professionals (PHCPs) play a significant role in developing countries. A Nose-Keeper is an essential auxiliary tool for accurate and effective disease diagnosis. In the future, the Nose-Keeper will incorporate advanced artificial intelligence algorithms, improve its ability to recognise a broader spectrum of nasal and ENT ailments and serve as a comprehensive diagnostic tool for various nasal health issues. Simultaneously, artificial intelligence will be employed to deliver personalised health advice based on user-specific data, such as lifestyle and environmental changes, to lower the risk of illness. By analysing anonymised aggregate data from users, Nose-Keeper can discover trends and patterns in NPC and other ENT disorders, providing helpful information for public health policies and resource allocation, particularly in areas with high disease incidence. Finally, recognising the value of education in health management, the Nose-Keeper will undertake an education campaign on NPC and general nasal health to raise public awareness and early detection rates. The adoption of these functions and goals will allow Nose-Keeper to play an increasingly crucial role in the healthcare systems of developing countries.

We acknowledge several limitations in our study. First, the absence of prospective testing due to dataset constraints may influence the confidence healthcare professionals and common users have in the Nose-Keeper. Second, Nose-Keeper currently lacks an image quality control function, which can result in unreliable outputs when processing images of substandard quality, such as those with blur, uneven lighting, or improper angles. Third, the datasets used were collected with professional equipment in medical settings. Therefore, biases inherent in our dataset, such as variations in image quality, imaging protocol, and imaging view, might limit the applicability of Nose-Keeper in non-clinical environments. There is a need to validate the performance of our model on images captured by household endoscopes, which have not yet been included in our dataset. Fourth, Nose-Keeper requires internet connection to link up with the cloud-based AI model for lesions detection. For some developing countries that have not yet popularize the Internet, Nose-Keeper’s availability is relatively limited. Therefore, there is a need to develop lightweight AI models to eliminate the need for high-performance devices in our next-generation Nose-Keeper, so as to achieve both cloud and local deployment simultaneously. Fifth, all images used in this study were obtained from Chinese hospitals. For safety reasons, it is crucial to use endoscopic data from people living in other high-risk areas (Such as Vietnam, Indonesia and Malaysia) to test Nose-Keeper. Future work will focus on prospective testing, developing an independent image quality control system, collecting images of different people using household nasal endoscopes and constructing a larger dataset including various image qualities. Additionally, recognising that NPC diagnosis must also consider a patient’s clinical information like gender, age, dietary habits, and genetic factors, future studies will aim to develop multimodal deep learning models that integrate these variables.

The Transformer model has been widely applied in multimodal learning tasks and has achieved great success, becoming the backbone of multimodal models41. At the same time, using multimodal clinical information for medical diagnosis has become a common practice in modern medicine42. Especially, our work has fully validated that the Transformer model can effectively diagnose diseases such as NPC. Based on these insights, we have developed a strategic roadmap for integrating multimodal data (Fig. 7, Supplementary Note 4) to enhance the practicality of Nose-Keeper in the future. We believe that with the enrichment and improvement of multimodal endoscopic datasets, along with advancements in smartphones and deep learning technologies, Transformer-based multimodal models will be able to diagnose nasal and nasopharyngeal lesions in patients. More specifically, the future version of Nose-Keeper will use Transformer-based multimodal models to integrate clinical symptoms and risk factors (text input) of patients with endoscopic images (image input) from different regions of the nasal cavity (such as inferior turbinate, middle turbinate and nasopharynx), effectively detecting whether patients have NPC and other nasal diseases. Especially, the fused multimodal features can be further utilised to generate image captions, which can help PHCPs and the public better understand various lesions43. In addition, another key point of the future Nose-Keeper is to utilise model compression technology (Such as knowledge distillation, model pruning and model quantization) to reduce the device performance requirements of applications, thereby achieving local deployment and efficient cloud deployment.

In summary, this study represents a significant advancement in NPC diagnostics through developing Nose-Keeper, a smartphone cloud application based on cutting-edge Swin Transformer technology. Our findings demonstrate that Nose-Keeper surpasses the diagnostic sensitivity of nine professional otolaryngologists in diagnosing NPC. This was achieved by analysing a diverse and extensive nasal endoscopic dataset from multiple centres, supported by testing eight different deep learning models. Nose-Keeper’s user-friendly interface enables both medical professionals and the general public to upload endoscopic images and receive real-time AI-based preliminary screening results. Nose-Keeper is especially crucial in regions with limited access to specialized nasal endoscopy services since it not only improves primary diagnosis accuracy but also enhances awareness of NPC among primary healthcare providers and residents in high-risk areas. Furthermore, the study lays groundwork for future research into mobile healthcare and cancer detection, expanding the potential impact of DL-based smartphone app across other medical fields.

Methods

This study was divided into three main parts: collecting datasets, constructing deep learning models, and developing mobile applications. Figure 8 illustrates this workflow. The details of the study were comprehensively presented in the following subsections.

Construction of the multi-centre dataset

In this study, we reviewed and constructed a dataset from three hospitals located in high-risk areas of NPC. We retrospectively collected numerous white-light nasal endoscopic images of patients with NPC from the Department of Otolaryngology of the Second Affiliated Hospital of Shenzhen University (SZH) and the Department of Otolaryngology of Foshan Sanshui District People’s Hospital (FSH) between 1 January 2014 and 31 January 2023. Given that the early clinical symptoms of NPC (such as headache, cervical lymph node enlargement, nasal congestion, and nosebleeds) are similar to those of common diseases of the nasal cavity and nasopharynx44, and rhinosinusitis, allergic rhinitis, and chronic sinusitis may be risk factors for NPC45,46,47, we collected white-light nasal endoscopic images of non-NPC patients visiting SZH and FSH from the same period to develop deep learning models. In addition, Leizhou People’s Hospital (LZH) provided nasal endoscopic images of patients who visited the Department of Otolaryngology between 1 January 2015 and 31 April 2022. From an application perspective, including the images of non-NPC patients in the dataset can effectively improve the comprehensiveness and accuracy of the results of the deep learning model for diagnosing nasal endoscopic images. The collected images were divided into seven categories (Fig. 9): NPC (Fig. 9a), adenoidal hypertrophy (AH) (Fig. 9b), allergic rhinitis (AR) (Fig. 9c), chronic rhinosinusitis with nasal polyps (CRP) (Fig. 9d), deviated nasal septum (DNS) (Fig. 9e), normal nasal cavity and nasopharynx (NOR) (Fig. 9f) and rhinosinusitis (RHI) (Fig. 9g). Table 4 presents the detailed characteristics of the dataset.

These endoscopic images are given from different angles and parts for each disease type. a Nasopharyngeal carcinoma (NPC). b Adenoidal hypertrophy (AH). c Allergic rhinitis (AR). d Chronic rhinosinusitis with nasal polyps (CRP). e Deviated nasal septum (DNS). f Normal nasal cavity and nasopharynx (NOR). g Rhinosinusitis (RHI).

This study was approved by the Ethics Committee of the Second Affiliated Hospital of Shenzhen University, the Institutional Review Board of Leizhou People’s Hospital and the Ethics Committee of Foshan Sanshui District People’s Hospital (reference numbers: ‘BY-EC-SOP-006-01.0-A01’, ‘BYL20220531’ and SRY-KY-2023045’) and adhered to the principles of the Declaration of Helsinki. Due to the retrospective nature of the study and the use of unidentified data, the Institutional Review Boards of SZH, FSH and LZH exempted informed consent. Supplementary Note 5 presents more detailed ethics declarations and procedures.

Diagnostic criteria of the nasal endoscopic images

In this study, to ensure the accuracy of the endoscopic image labels, three otolaryngologists with over 15 years of clinical experience set the diagnostic criteria based on practical clinical diagnostic processes and reference literature. Specifically, the expert combined each patient’s endoscopic examination results with the corresponding medical history, record of clinical manifestations, computed tomography results, allergen testing (skin prick tests or serum-specific IgE tests), lateral cephalograms, histopathological examination results, and laboratory test results (such as nasal smear examination) to further review and confirm the diagnostic results of the existing nasal endoscopic images of each patient. A diagnosis based on the aforementioned medical records was considered the reference standard for this study. Our otolaryngologists independently reviewed all data in detail before any analysis and validated that each endoscopic image was correctly matched to a specific patient. Patients with insufficient diagnostic medical records were excluded. During the review process, when an expert doubted the diagnostic results of a particular patient, the three experts jointly made decisions on the patient’s medical records and various examination results to determine whether to include the patient in this study. The standard diagnosis for seven types of nasal endoscopic images in the dataset was as follows: (1) NPC: providing the standard diagnostic label for patient images directly based on histopathological examination results48,49; (2) Rhinosinusitis: further combining the patient’s medical history, clinical manifestations, and computed tomography examination50; (3) Chronic rhinosinusitis with nasal polyps: further combining the patient’s medical history, clinical manifestations, computed tomography results, and pathological tissue biopsy results51,52; (4) Allergic rhinitis: further combining the patient’s medical history, clinical manifestations, and allergen testing or laboratory methods53,54,55; (5) Deviation of nasal septum: further combine the patient’s medical history and clinical manifestations and secondary analyse and evaluate the shape of the nasal septum56. (6) Adenoid hypertrophy: further combine the patient’s medical history, clinical manifestations, or lateral cephalograms57,58. (7) Normal nasal cavity and nasopharynx: further combination of the patient’s medical history and clinical manifestations. The nasal mucosa of a normal nasal cavity should be light red, and its surface should be smooth, moist, and glossy. The nasal cavity and nasopharyngeal mucosa show no congestion, edema, dryness, ulcers, bleeding, vasodilation, neovascularization, or purulent secretions. Table 5 details the distribution of image categories across hospitals.

Deep transfer learning models

Transfer learning aims to improve the model performance on new tasks by leveraging pre-learned knowledge of similar tasks59. It has significantly contributed to medical image analysis, as it overcomes the data scarcity problem and saves time and hardware resources.

In this study, we effectively combined deep learning models, which are popular in artificial intelligence, with this powerful strategy. To build an optimal NPC diagnostic model, we studied Vision Transformers (ViTs), convolutional neural networks (CNNs), and hybrid models based on the latest advances in deep learning. Among them were (1) ViTs: Swin Transformer (SwinT)25, Multi-Axis Vision Transformer (MaxViT)60, and Class Attention in Image Transformers (CaiT)61. These models were selected for their ability to model long-range dependencies and their adaptability to various image resolutions, which are crucial for medical image analysis. These models represent the latest shifts in deep learning from convolutional to attention-based mechanisms, providing a fresh perspective on feature extraction. (2) CNNs: ResNet62, DenseNet63, and Xception64. CNNs have gradually become the mainstream algorithm for image classification since 2012, and have shown very competitive performance in medical image analysis tasks65. ResNet and DenseNet excel in addressing the vanishing gradient problem and strengthening feature propagation. Xception achieves a good trade-off between parameter efficiency and feature extraction capability using depth-wise separable convolutions. (3) Hybrid Models: PoolFormer (PoolF)66 and ConvNeXt67. PoolFormer enhances feature extraction by leveraging spatial pooling operations, while ConvNeXt incorporates ViT-inspired design concepts into CNNs to improve model performance, particularly in capturing global context through enhanced architectures. These architectures help improve model performance in downstream tasks by effectively utilising the advantages of CNNs and ViTs.

We then initialised the eight architectures using pretrained weights obtained by classifying the large natural image dataset ImageNet68. Because the original number of nodes of the classifiers of these networks was 1000, we reset the number of nodes of their classifiers to seven to fit our dataset. After completing initialization and adjusting the classifier, we did not choose to fine-tune some layers but instead performed comprehensive training on the entire model from scratch. Moreover, we performed probability thresholding based on Softmax.

Explainable artificial intelligence in medical image

In medical imaging, Explainable Artificial Intelligence (XAI) is critical because it fosters trust and understanding among medical practitioners and facilitates accurate diagnosis and treatment by elucidating the rationale behind AI-driven image analysis. In this study, we used Gradient-weighted Class Activation Mapping (Grad-CAM)69 to generate a corresponding heatmap. Red indicates high relevance, yellow indicates medium relevance, and blue indicates low relevance. Grad-CAM helps to visualise the regions of an image that are important for a particular classification. This is crucial in medical image classification, as it helps people understand which parts of the image contribute to model decision-making and validates whether the model focuses on disease-related features. By providing visual explanations through heat maps, Grad-CAM can help build trust among medical practitioners and the public regarding the decisions made by AI systems. However, Grad-CAM depends on the model’s architecture and may not provide more detailed insights. Besides, in real-time applications or scenarios requiring quick analysis, the computational demands of Grad-CAM might hinder its practicality.

Development process of models and smartphone applications

All the nasal endoscopic images were divided into two parts. The first part contained 38,073 images from SZH and FSH, which were used as the development dataset for training and validating the performance of the model. The development dataset was further divided into three parts in a 7:1:2 ratio, i.e. internal training, internal validation, and internal test sets. The second part contained 1267 images from the LZH, which were used as an external test set to test the performance of the model in real-world settings and verify the robustness of the model.

Before training the various networks, we resized all images to 224 × 224 × 3. Subsequently, the images were normalised and standardised using the mean [0.2394,0.2421,0.2381] and standard deviation [0.1849, 0.28, 0.2698] of the three channels. To avoid degradation of model performance when transitioning from the development set to the external test set, we used online data augmentation and early stopping strategies as well as model calibration techniques. Concretely, we first utilised the Transformers Library provided by Pytorch to automatically transform (RandomRotation, RandomAffine, GaussianBlur and Color Jitter) the image inputs during training to improve the robustness and generalisation ability of the models. During the training process, the loss functions of all models uniformly used the cross-entropy loss function, and we employed the AdamW optimiser with a 0.001 initial learning rate, β1 of 0.9, β2 of 0.999, and weight-decay of 0.0001 to optimise eight models’ parameters. We set the number of epochs to 150 and used a batch size of 64 for each model training. The we adopted an early stopping strategy, which meant that the model training will be stopped automatically stopped when its accuracy (patience and min_delta were set to 10 and 0.001, respectively) on the internal validation set no longer significantly improved for some time, thereby preventing overfitting. We calibrated each model using an internal validation set with temperature scaling, a method for calibrating deep learning models and assessed the calibration performance using the Brier-Score and Log-Loss. During the validation and inference stages, the model’s image preprocessing process was consistent with the training stage, but automatic image transformation was no longer implemented. We used the PyTorch framework (version 2.1), a computer with the Ubuntu 20.04 system and an NVIDIA GeForce RTX 4090 to complete the entire experiment. The weights of all models were saved in ‘Pth’ format.

In this study, we developed a responsive and user-friendly Android application that prioritises maintainability and scalability. Various software engineering principles and practices (e.g. separation of concerns and dependency Inversion) were followed to ensure that the Nose-keeper application maintain consistent performance and reliability across a diverse range of Android devices with varying hardware capabilities. Additionally, we adopted a responsive layout and conducted multiple user tests and iterative feedback to ensure that Nose-Keeper’s user interface is simple and easy to use and can adapt to various screen sizes, different screen orientations and device states. Utilising Java for native Android development, we embraced the MVVM design pattern for application modularisation, incorporating bidirectional data binding for seamless UI and data synchronisation. Our tech stack included Retrofit for network requests alongside third-party libraries like ButterKnife, Gson, Glide, EventBus, and MPAndroidChart for enhanced functionality and user experience, complemented by custom animations and NDK for hardware interaction. At the backend, we leveraged SSM (Spring + SpringMVC + MyBatis), Nginx, and MySQL for a high-performance architecture. For database, we used MySQL to manage data and adopted Redis for caching. The backend of the application and deep learning model were deployed on a high-performance Cloud Server (Manufacturer: Tencent; Equipment Type: Standard Type S6; Operating System: Centos 7.6; CPU: Intel® Xeon® Ice Lake; Memory: DDR4) with Nginx load balancing to optimise server resource utilisation (See Supplementary Note 1 for details). To ensure the security of applications and personal privacy data, we used encryption protocols and algorithms and toolkits that comply with industry standards (See Supplementary Note 2 for details). When utilising Nose-Keeper, all input images must go through an image preprocessing pipeline consistent with the model inference stage.

Model evaluation and statistical analysis

For the development datasets (SZH and FSH), eight models were evaluated using five standard metrics: overall accuracy (Eq. (1), precision (Eq. (2), sensitivity (Eq. (3), specificity (Eq. (4), and f1-score (Eq. (5). The definitions of these five metrics were as follows (See Supplementary Note 3 for details).

To avoid performance uncertainty caused by random splitting of the development dataset, we used a fivefold cross-validation strategy to evaluate the potential of various models on the development dataset and then selected four more excellent models from the eight models that can be used for the smartphone application based on the quality of the metric results. After selecting the four candidate models, we used a confusion matrix and Receiver Operating Characteristic (ROC) curve to further evaluate the performance of the candidate models in an external test set (LZH). A larger area under the ROC curve (AUC) indicated better performance. We used the best model to develop a smartphone application. Statistical analyses were performed using Python 3.9. Owing to the large sample size of the internal dataset and the use of fivefold cross-validation, we used the normal approximation to calculate the 95% confidence intervals (CI) of overall accuracy, precision, sensitivity, specificity, and f1-score. In the external test set, we used an Empirical Bootstrap with 1000 replicates to calculate the 95% CI of the AUC. The 95% CIs of overall accuracy, sensitivity and specificity were calculated using the Wilson Score approach in the Statsmodels package (version 0.14.0).

Analysing the robustness of the deep learning models via data augmentation

The use of images with different data augmentations to test the model can reveal its adaptability to input changes and analyse its robustness70. In particular, data augmentation simulates possible image transformations in practical applications, thereby testing the stability and performance of a model when faced with unseen or changing images. This strategy helps developers identify the potential weaknesses of the model, guide subsequent improvements, and enhance the application reliability of the model in complex and ever-changing environments. We used an external test set to analyse the prediction result changes of the model under Gaussian blur, Saturation changes, Image rotation and Brightness changes. Prior to testing the model, we augmented the external test set using Pillow (version 9.3.0). For each transformation, we assigned different parameter values to the built-in functions of Pillow, resulting in 12 enhanced datasets from the external datasets.

Human-machine comparison experiment

The representativeness of the external test set is crucial for fully comparing the performance differences between AI and human experts. Therefore, when retrospectively collecting endoscopic images, in addition to ensuring the accuracy of image labels, our expert team also fully considered the severity of lesions, different stages of disease, and differences in appearance in each endoscopic image. Meanwhile, the expert group also ensured as much as possible the age difference and gender balance of the entire dataset. Especially, the time span of external test set has reached five years. We recruited nine otolaryngologists with different clinical experiences from three institutions, i.e. one year (two otolaryngologists), three years (one otolaryngologist), four years (one otolaryngologist), five years (one otolaryngologist), six years (one otolaryngologist), eight years (two otolaryngologists), and nine years (one otolaryngologist). Before each expert independently evaluated the external test set, we shuffled dataset and renamed each image as “test_xxxx. jpg” and distributed it to all experts. We required experts to independently evaluate each endoscope within a specified time frame to simulate the physical and mental stress faced by experts in actual clinical settings, which further reflects the efficiency of AI. Notably, we prohibited experts from consulting diagnostic guidelines and mutual communication. All expert evaluation results were anonymized and automatically verified through a python program. Finally, we plotted a diagnostic performance heatmap, confusion matrix, ROC curve, and optimal Youden-index to comprehensively and intuitively demonstrate the performance differences between AI and clinicians in diagnosing different diseases.

Data availability

A subsample of the internal test set with 110 images per diagnostic class is available upon reasonable request from the authors.

Code availability

The custom codes and model weights for Nose-Keeper development and evaluation in this study are accessible on GitHub (https://github.com/YubiaoYue/Nose-Keeper).

References

Siak, P. Y., Khoo, A. S.-B., Leong, C. O., Hoh, B.-P. & Cheah, S.-C. Current status and future perspectives about molecular biomarkers of nasopharyngeal carcinoma. Cancers 13, 3490 (2021).

Tian, Y. et al. MiRNAs in radiotherapy resistance of nasopharyngeal carcinoma. J. Cancer 11, 3976–3985 (2020).

Tang, L.-L. et al. The Chinese Society of Clinical Oncology (CSCO) clinical guidelines for the diagnosis and treatment of nasopharyngeal carcinoma. Cancer Commun. 41, 1195–1227 (2021).

Sung, H. et al. Global Cancer Statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA A Cancer J. Clin. 71, 209–249 (2021).

Tabuchi, K., Nakayama, M., Nishimura, B., Hayashi, K. & Hara, A. Early detection of nasopharyngeal carcinoma. Int. J. Otolaryngol. 2011, e638058 (2011).

Liang, H. et al. Survival impact of waiting time for radical radiotherapy in nasopharyngeal carcinoma: A large institution-based cohort study from an endemic area. Eur. J. Cancer 73, 48–60 (2017).

Yi, J. et al. Nasopharyngeal carcinoma treated by radical radiotherapy alone: Ten-year experience of a single institution. Int. J. Radiat. Oncol.*Biol.*Phys. 65, 161–168 (2006).

Su, S.-F. et al. Treatment outcomes for different subgroups of nasopharyngeal carcinoma patients treated with intensity-modulated radiation therapy. Chin. J. Cancer 30, 565–573 (2011).

Wu, Z.-X., Xiang, L., Rong, J.-F., He, H.-L. & Li, D. Nasopharyngeal carcinoma with headaches as the main symptom: a potential diagnostic pitfall. J. Cancer Res. Ther. 12, 209 (2016).

Abdullah, B., Alias, A. & Hassan, S. Challenges in the management of nasopharyngeal carcinoma: a review. Malays. J. Med Sci. 16, 50–54 (2009).

Siti-Azrin, A. H., Norsa’adah, B. & Naing, N. N. Prognostic factors of nasopharyngeal carcinoma patients in a tertiary referral hospital: a retrospective cohort study. BMC Res. Notes 10, 705 (2017).

Balachandran, R. et al. Exploring the knowledge of nasopharyngeal carcinoma among medical doctors at primary health care level in Perak state, Malaysia. Eur. Arch. Otorhinolaryngol. 269, 649–658 (2012).

Fles, R., Wildeman, M. A., Sulistiono, B., Haryana, S. M. & Tan, I. B. Knowledge of general practitioners about nasopharyngeal cancer at the Puskesmas in Yogyakarta, Indonesia. BMC Med. Educ. 10, 81 (2010).

Adham, M. et al. Current status of cancer care for young patients with nasopharyngeal carcinoma in Jakarta, Indonesia. PLoS ONE 9, e102353 (2014).

Qu, L. G., Brand, N. R., Chao, A. & Ilbawi, A. M. Interventions addressing barriers to delayed cancer diagnosis in low‐ and middle‐income countries: a systematic review. Oncologist 25, e1382–e1395 (2020).

Fles, R. et al. The role of Indonesian patients’ health behaviors in delaying the diagnosis of nasopharyngeal carcinoma. BMC Public Health 17, 510 (2017).

Luxton, D. D., McCann, R. A., Bush, N. E., Mishkind, M. C. & Reger, G. M. mHealth for mental health: Integrating smartphone technology in behavioral healthcare. Prof. Psychol. Res. Pract. 42, 505–512 (2011).

Göçeri, E. Impact of deep learning and smartphone technologies in dermatology: automated diagnosis. In Proc. 2020 Tenth International Conference on Image Processing Theory, Tools and Applications (IPTA) 1–6 (IEEE, 2020). https://doi.org/10.1109/IPTA50016.2020.9286706.

Li, Z. et al. Preventing corneal blindness caused by keratitis using artificial intelligence. Nat. Commun. 12, 3738 (2021).

Zhou, W. et al. Ensembled deep learning model outperforms human experts in diagnosing biliary atresia from sonographic gallbladder images. Nat. Commun. 12, 1259 (2021).

Wu, Z. et al. Deep learning for classification of pediatric otitis media. Laryngoscope 131, E2344–E2351 (2021).

Chen, Y.-C. et al. Smartphone-based artificial intelligence using a transfer learning algorithm for the detection and diagnosis of middle ear diseases: a retrospective deep learning study. eClinicalMedicine 51, 101543 (2022).

Oztel, I., Oztel, G. Y. & Sahin, V. H. Deep learning-based skin diseases classification using smartphones. Adv. Intell. Syst. https://doi.org/10.1002/aisy.202300211 (2023).

Wu, H. et al. A deep learning-based smartphone platform for cutaneous lupus erythematosus classification assistance: simplifying the diagnosis of complicated diseases. J. Am. Acad. Dermatol. 85, 792–793 (2021).

Liu, Z. et al. Swin transformer: hierarchical vision transformer using shifted windows. In Proc. 2021 IEEE/CVF International Conference on Computer Vision (ICCV) 9992–10002 (IEEE, 2021). https://doi.org/10.1109/ICCV48922.2021.00986.

Rowland, S. P., Fitzgerald, J. E., Holme, T., Powell, J. & McGregor, A. What is the clinical value of mHealth for patients? NPJ Digit Med. 3, 4 (2020).

Aboye, G. T., Vande Walle, M., Simegn, G. L. & Aerts, J.-M. Current evidence on the use of mHealth approaches in Sub-Saharan Africa: a scoping review. Health Policy Technol. 12, 100806 (2023).

Li, R.-Q. et al. mHealth: A smartphone-controlled, wearable platform for tumour treatment. Mater. Today 40, 91–100 (2020).

Zakerabasali, S., Ayyoubzadeh, S. M., Baniasadi, T., Yazdani, A. & Abhari, S. Mobile health technology and healthcare providers: systemic barriers to adoption. Health. Inf. Res. 27, 267–278 (2021).

Global smartphone penetration 2016-2022. Statista https://www.statista.com/statistics/203734/global-smartphone-penetration-per-capita-since-2005/.

Messner, E.-M., Probst, T., O’Rourke, T., Stoyanov, S. & Baumeister, H. mHealth Applications: Potentials, Limitations, Current Quality and Future Directions. In Proc. Digital Phenotyping and Mobile Sensing: New Developments in Psychoinformatics (eds. Baumeister, H. & Montag, C.) 235–248 (Springer International Publishing, Cham, 2019). https://doi.org/10.1007/978-3-030-31620-4_15.

Rising smartphone usage paves way for ecommerce opportunities in Southeast Asia. EMARKETER https://www.emarketer.com/content/rising-smartphone-usage-paves-way-ecommerce-opportunities-southeast-asia.

Adham, M. et al. Nasopharyngeal carcinoma in Indonesia: epidemiology, incidence, signs, and symptoms at presentation. Chin. J. Cancer 31, 185–196 (2012).

Dung, T. N. et al. Epstein–Barr virus-encoded RNA expression and its relationship with the clinicopathological parameters of Vietnamese patients with nasopharyngeal carcinoma. Biomed. Res. Ther. 10, 5924–5933 (2023).

Linton, R. E. et al. Nasopharyngeal carcinoma among the Bidayuh of Sarawak, Malaysia: history and risk factors (Review). Oncol. Lett. 22, 1–8 (2021).

Long, Z. et al. Trend of nasopharyngeal carcinoma mortality and years of life lost in China and its provinces from 2005 to 2020. Int. J. Cancer 151, 684–691 (2022).

Lang, J., Hu, C., Lu, T., Pan, J. & Lin, T. Chinese expert consensus on diagnosis and treatment of nasopharyngeal carcinoma: evidence from current practice and future perspectives. Cancer Manag. Res. 11, 6365–6376 (2019).

Li, C. et al. Development and validation of an endoscopic images-based deep learning model for detection with nasopharyngeal malignancies. Cancer Commun. 38, 59 (2018).

Xu, J. et al. Deep learning for nasopharyngeal carcinoma identification using both white light and narrow-band imaging endoscopy. Laryngoscope 132, 999–1007 (2022).

He, Z. et al. Deep learning for real-time detection of nasopharyngeal carcinoma during nasopharyngeal endoscopy. iScience 26, 107463 (2023).

Xu, P., Zhu, X. & Clifton, D. A. Multimodal learning with transformers: a survey. IEEE Trans. Pattern Anal. Mach. Intell. 45, 12113–12132 (2023).

Zhou, H.-Y. et al. A transformer-based representation-learning model with unified processing of multimodal input for clinical diagnostics. Nat. Biomed. Eng. 7, 743–755 (2023).

Ayesha, H. et al. Automatic medical image interpretation: state of the art and future directions. Pattern Recognit. 114, 107856 (2021).

Lee, H. M., Okuda, K. S., González, F. E. & Patel, V. Current Perspectives on Nasopharyngeal Carcinoma. In Proc. Human Cell Transformation: Advances in Cell Models for the Study of Cancer and Aging (eds. Rhim, J. S., Dritschilo, A. & Kremer, R.) 11–34 (Springer International Publishing, Cham, 2019). https://doi.org/10.1007/978-3-030-22254-3_2.

Chung, S.-D., Wu, C.-S., Lin, H.-C. & Hung, S.-H. Association between allergic rhinitis and nasopharyngeal carcinoma: a population-based study. Laryngoscope 124, 1744–1749 (2014).

Hung, S.-H., Chen, P.-Y., Lin, H.-C., Ting, J. & Chung, S.-D. Association of rhinosinusitis with nasopharyngeal carcinoma: a population-based study. Laryngoscope 124, 1515–1520 (2014).

Huang, P.-W., Chiou, Y.-R., Wu, S.-L., Liu, J.-C. & Chiou, K.-R. Risk of nasopharyngeal carcinoma in patients with chronic rhinosinusitis: a nationwide propensity score matched study in Taiwan. Asia-Pac. J. Clin. Oncol. 17, 442–447 (2021).

Irekeola, A. A. & Yean Yean, C. Diagnostic and prognostic indications of nasopharyngeal carcinoma. Diagnostics 10, 611 (2020).

Yuan, Y. et al. Early screening of nasopharyngeal carcinoma. Head. Neck 45, 2700–2709 (2023).

Dass, K. & Peters, A. T. Diagnosis and management of rhinosinusitis: highlights from the 2015 practice parameter. Curr. Allergy Asthma Rep. 16, 29 (2016).

Bhattacharyya, N. & Fried, M. P. The accuracy of computed tomography in the diagnosis of chronic rhinosinusitis. Laryngoscope 113, 125–129 (2003).

Bhattacharyya, N. & Lee, L. N. Evaluating the diagnosis of chronic rhinosinusitis based on clinical guidelines and endoscopy. Otolaryngol. Head. Neck Surg. 143, 147–151 (2010).

Nevis, I. F., Binkley, K. & Kabali, C. Diagnostic accuracy of skin-prick testing for allergic rhinitis: a systematic review and meta-analysis. Allergy Asthma Clin. Immunol. 12, 20 (2016).

M., S., Gopal, S., P.M., R., C.R.K., B. & N., R. A study on the significance of nasal smear eosinophil count and blood absolute eosinophil count in patients with allergic rhinitis of varied severity of symptoms. Indian J. Otolaryngol. Head Neck Surg. 75, 3449–3452 (2023).

Testera-Montes, A., Jurado, R., Salas, M., Eguiluz-Gracia, I. & Mayorga, C. Diagnostic tools in allergic rhinitis. Front. Allergy 2, 721851 (2021).

Janovic, N., Janovic, A., Milicic, B. & Djuric, M. Is computed tomography imaging of deviated nasal septum justified for obstruction confirmation? Ear Nose Throat J. 100, NP131–NP136 (2021).

Saedi, B., Sadeghi, M., Mojtahed, M. & Mahboubi, H. Diagnostic efficacy of different methods in the assessment of adenoid hypertrophy. Am. J. Otolaryngol. 32, 147–151 (2011).

Pereira, L. et al. Prevalence of adenoid hypertrophy: a systematic review and meta-analysis. Sleep. Med. Rev. 38, 101–112 (2018).

Kim, H. E. et al. Transfer learning for medical image classification: a literature review. BMC Med Imaging 22, 69 (2022).

Tu, Z. et al. MaxViT: Multi-axis Vision Transformer. In Proc. Computer Vision – ECCV 2022 (eds. Avidan, S., Brostow, G., Cissé, M., Farinella, G. M. & Hassner, T.) 459–479 (Springer Nature Switzerland, Cham, 2022). https://doi.org/10.1007/978-3-031-20053-3_27.

Touvron, H., Cord, M., Sablayrolles, A., Synnaeve, G. & Jégou, H. Going deeper with Image Transformers. In Proc. 2021 IEEE/CVF International Conference on Computer Vision (ICCV) 32–42 (IEEE, 2021). https://doi.org/10.1109/ICCV48922.2021.00010.

He, K., Zhang, X., Ren, S. & Sun, J. Deep Residual Learning for Image Recognition. In Proc. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 770–778 (IEEE, 2016). https://doi.org/10.1109/CVPR.2016.90.

Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks. In Proc. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2261–2269 (IEEE, 2017). https://doi.org/10.1109/CVPR.2017.243.

Chollet, F. Xception: deep learning with depthwise separable convolutions. In Proc. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 1800–1807 (IEEE, 2017). https://doi.org/10.1109/CVPR.2017.195.

Dai, Y., Gao, Y. & Liu, F. TransMed: transformers advance multi-modal medical image classification. Diagnostics 11, 1384 (2021).

Yu, W. et al. MetaFormer is actually what you need for vision. In Proc. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 10809–10819 (IEEE, 2022). https://doi.org/10.1109/CVPR52688.2022.01055.

Liu, Z. et al. A ConvNet for the 2020s. In Proc. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 11966–11976 (IEEE, 2022). https://doi.org/10.1109/CVPR52688.2022.01167.

Deng, J. et al. ImageNet: a large-scale hierarchical image database. In Proc. 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009). https://doi.org/10.1109/CVPR.2009.5206848.

Selvaraju, R. R. et al. Grad-CAM: visual explanations from deep networks via gradient-based localization. In Proc. 2017 IEEE International Conference on Computer Vision (ICCV) 618–626 (IEEE, 2017). https://doi.org/10.1109/ICCV.2017.74.

Young, A. T. et al. Stress testing reveals gaps in clinic readiness of image-based diagnostic artificial intelligence models. npj Digit. Med. 4, 1–8 (2021).

Acknowledgements

The author is supported by the NSF of Guangdong Province (No.2022A1515011044, No.2023A1515010885), and the project of promoting research capabilities for key constructed disciplines in Guangdong Province (No.2021ZDJS028).

Author information

Authors and Affiliations

Contributions

Conception and design: Y.Y., X.Z., H.L., and Z.L. Funding obtainment: Z.L. Provision of study data: X.Z., F.Z., K.Z., and L.L. Collection and assembly of data: X.Z., F.Z., J.X., and L.L. Data analysis and interpretation: Y.Y., X.Z., and Z.L. Manuscript writing: Y.Y., X.Z., H.L., and Z.L. All authors have read and approved the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yue, Y., Zeng, X., Lin, H. et al. A deep learning based smartphone application for early detection of nasopharyngeal carcinoma using endoscopic images. npj Digit. Med. 7, 384 (2024). https://doi.org/10.1038/s41746-024-01403-2

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41746-024-01403-2

This article is cited by

-

A Hierarchical-approximation-based Interpretable Multi-view TSK Fuzzy Classification for Diverse Aggregates of Fuzzy Rules

International Journal of Control, Automation and Systems (2025)