Abstract

Early screening and evaluation of infant motor development are crucial for detecting motor deficits and enabling timely interventions. Traditional clinical assessments are often subjective, without fully capturing infants’ “real-world” behavior. This has sparked interest in portable, low-cost technologies to objectively and precisely measure infant motion at home, with a goal of enhancing ecological validity. In this systematic review, we explored the current landscape of portable, technology-based solutions to assess early motor development (within the first year), outlining the prevailing challenges and future directions. We reviewed 66 publications, which utilized video, sensors, or a combination of technologies. There were three key applications of these technologies: (1) automating clinical assessments, (2) illuminating new measures of motor development, and (3) predicting developmental outcomes. There was a promising trend toward earlier and more accurate detection using portable technologies. Additional research and demographic diversity are needed to develop fully automated, robust, and user-friendly tools. Registration & Protocol OSF Registries https://doi.org/10.17605/OSF.IO/R6JAE.

Introduction

An estimated 1 in 6 children in the United States grapple with motor deficits that can profoundly affect their mobility, development, and quality of life1. For many children, motor deficits (such as from developmental delay or underlying disorder) are not detected until two years of age or older2,3,4,5. This extended timeline underscores the difficulty of early detection using current healthcare practices. One barrier to early detection is that motor deficits in very young children can be subtle and easily overlooked, especially for mild symptoms. Motor deficits may not be evident until the child has missed critical motor milestones or demonstrates abnormal movement patterns later in life. Other barriers may include a lack of caregiver education and limited access to healthcare resources. Detecting motor deficits earlier (i.e., within the first year of life) would improve our ability to deliver targeted interventions during the period of maximal neuroplasticity for the developing brain6, thereby improving lifelong outcomes7,8.

Current gold standard assessments of motor development, such as the General Movements Assessment (GMA) or the Hammersmith Infant Neurological Examination (HINE), though widely used, present several critical challenges9,10,11. These assessments require specialized training12 and can be highly subjective, relying on the expertise and experience of the examiner13. They also are administered at specific times of development; for example, though GMA can be performed before 5 months, the GMA is most predictive when it is administered within the narrow timeframe between 3-5 months of age12. Other tools for detection and diagnosis, such as magnetic resonance imaging (MRI) or additional long-term clinical testing, are costly and may be difficult to schedule. Due to limited clinical and financial resources, clinical tests are often not administered for infants at low risk or with mild symptoms14,15. However, these infants may still be diagnosed with neurologic dysfunctions later in life. Thus, there is a critical need for easy-to-use, quantitative tools to assess infant motor development, which would transform our capacity for early detection both in the clinic and at home.

To address this need, researchers have been pursuing portable, low-cost technological alternatives, such as wearable sensors or video recordings, to objectively measure and evaluate various facets of infant motor development16,17,18,19,20,21,22. These technologies are less obtrusive, more affordable, and more user-friendly, making them feasible to implement across the clinic, home, and community settings. Data from these technologies can be collected at a high frequency (providing continuous, high-resolution information), and due to their digital, quantitative nature they can be analyzed computationally and objectively (not relying on manual rating), thereby reducing the need for specialized clinical training and mitigating interrater variability13,23,24,25,26. As such, portable technologies may offer an accessible, accurate, and digital approach to evaluate motor development, facilitating early detection and intervention for a broader population of infants.

While a growing number of studies have attempted to develop early detection strategies using portable technologies, the landscape of these technologies and their relative effectiveness have not been sufficiently evaluated. Previous reviews have predominantly focused on automatically scoring the GMA or predicting the presence of cerebral palsy (CP), neglecting the potential applications of portable technologies to evaluate other neurodevelopmental disorders. Moreover, the differences in study design, data collection procedures, analysis techniques, and demographic cohorts have not been systematically evaluated17,18,20,21,22. To better understand the state-of-the-art in this field, we conducted a systematic review of portable technologies to assess early infant motor development. We included publications using portable technologies to evaluate infant motor development across three specific domains: automatically scoring standardized pediatric assessments (Automate), providing illuminative new measures to quantify or track underlying motor deficits (Illuminate), and/or predicting developmental outcomes for infants at risk of developmental disabilities or disorders (Predict)27. Our objectives were twofold: (1) to summarize the state of science of portable technologies to assess motor development in infants younger than 1 year old, and (2) to identify the limitations, challenges, and future directions of these technologies for clinical translation. Specifically, we focused on technologies that could reasonably be deployed into the home or community settings. We only considered publications that tested the performance of these technologies on infants with motor delay or deficit.

Results

A total of 3722 publications were identified from the initial literature search. After removing duplicated or ineligible records based on the title and abstract, 159 full manuscripts were screened. After full text review, 93 publications were excluded for not adhering to criteria related to the publication type, study design, population, and/or outcome. The remaining 66 publications, published between 2009 and 2024, were included for systematic review and data extraction (Fig. 1). These publications and their extracted data are summarized in Tables 1–3.

Over the last decade, there has been a steady increase in publications using portable technologies to evaluate infant motor development, especially video-based analysis (Fig. 2a)17,18. The number of publications using wearable sensors as their primary data collection method has remained relatively steady over the years28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44, and only two publications incorporated a sensorized pressure mat37,41.

a The 66 publications by year, categorized by the type of portable technology investigated. b Percentage of publications implementing different combinations of three approaches to assess motor development using portable technologies (automate, illuminate, and/or predict outcomes). c Geographic distribution of publications based on all authors’ institutional affiliations. d Cumulative distribution of participant ages across publications, assuming a normal distribution centered on the midpoint of the age range reported in the text. e Target risk groups and diagnoses across publications, categorized by the approach used to assess motor development. PMA Postmenstrual age, ASD Autism spectrum disorder, NDDs Neurodevelopmental disorders.

We identified three key applications of these technologies to assess infant motor development: (1) automating clinical assessments, (2) illuminating new measures of motor deficits, and (3) predicting future outcomes/diagnoses. Of the 66 publications included for analysis, more than half attempted to automate scoring of standard clinical assessments (primarily the GMA), with the goal of streamlining and enhancing the assessment process16,34,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68 (Fig. 2b). Eighteen investigated illuminative measures that could distinguish atypical and typical movement patterns54,65,69,70,71,72,73,74,75. Nineteen utilized methods to predict either the current or future developmental outcomes, at ages ranging from 1 to 7 years, with the goal of improving early diagnosis and guiding early intervention34,47,76,77,78,79,80,81,82,83,84,85,86,87. Eight publications pursued multiple applications34,47,54,65,70.

Participant characteristics

Most publications originated from North America, Europe, and Asia, with the highest number of publications coming from the United States (Fig. 2c). None of the publications included information about race, ethnicity, or socioeconomic status of their participants.

Participant ages spanned from preterm infants to approximately 2 years old. Most participants were enrolled between 3 and 5 months of age, aligning with the observational period of fidgety movements in the GMA12, which was the primary clinical assessment employed in these publications. The next most common enrollment age was term age. Most publications focused on assessing infants at risk for or diagnosed with CP (Fig. 2e), followed by other neurodevelopmental delays and disorders (e.g., spinal muscular atrophy).

Technology characteristics

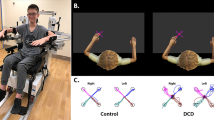

Of the 17 publications that deployed sensors, 12 measured motion via wearable accelerometers, either alone or combined with gyroscopes and magnetometers as part of an inertial measurement unit (IMU/IMMU)43,44 (Fig. 3a). Other sensors measured muscle activity via electromyography (EMG) sensors, measured motion through magnetometers33,35,36,42, and measured center of pressure through a pressure mat34,37,41. Sampling frequencies ranged from 19 to 500 Hz, with most publications recording data at or near 20 Hz for motion-based sensors. Recording duration was usually less than one hour and occurred in controlled laboratory or clinical settings. The number of sensors deployed ranged from 2 to 13, and sensors were commonly placed at the wrists, ankles, and chest (Fig. 3b). Alternative placements included the upper arms, upper legs, head, neck, back, shoulders, and stomach.

Of the 52 publications that deployed video, 46 used standard RGB cameras (Fig. 3c). Others explored depth cameras or cameras capturing both RGB and depth to obtain three-dimensional information53,54,56,62,64,88. Most employed a single camera, with two publications using multiple portable cameras62,67. Frame rates ranged from 25 to 120 fps, with 25 or 30 fps as the most common. Resolutions varied from 250 × 350 pixels to 1980 × 1080 pixels, with 1920 × 1080 pixels as the most common. Cameras were typically set up for an overhead view, similar to the method for GMA. However, two papers used front and side views for automating the HINE68,89.

Video-based approaches for infant assessment often relied on pose estimation to track body posture and movements. Pose estimation techniques included OpenPose (13 publications)48,59,63,69,83,85,90, optical flow (6 publications)47,82, and DeepLabCut (3 publications)65,81,86 (Fig. 3d). Recent work has relied more heavily on deep learning-based motion tracking algorithms such as OpenPose (trained on a human skeleton model)91 and DeepLabCut (a pre-trained neural network that relies on additional keypoint annotations and transfer learning)92.

Clinical assessments and diagnoses

A wide variety of clinical and diagnostic assessments were used as ground truth indicators of motor development across publications (Table 1). For example, publications attempting to automate the GMA typically utilized clinician ratings of GMA from preterm to 5 months of age16,90. On the other hand, publications attempting to illuminate or predict developmental outcomes utilized clinician diagnosis or caregiver reports obtained at 1–7 years of age, though often without a description of the diagnostic process34,38,65,71,80,82,83,87. Other assessments, such as the Bayley Scales of Infant and Toddler Development, HINE, or Autism Diagnostic Observation Schedule, were also conducted at various ages41,47,73,75,79,84,85,88,93.

Algorithms

When building models to assess developmental outcomes, statistical methods (such as regression and parametric/nonparametric techniques) have remained popular for early-stage exploration and decision-making over the past 14 years (Fig. 4a)16,68,69,75,76,79,80,82,84,85,87,90,94,95,96. Other methods have included correlation analysis34,35,37,54,71,74,75,90,96, frequency analysis (Fourier transform and wavelet analysis)40,49,77,90, probabilistic analysis (Hidden Markov Model, Erlang-Coxian Distribution, and Dynamic Bayesian Network)68,85, performance analysis (Break Point Detection Algorithm)89, network analysis (Small-World Network)54, dimensionality reduction (PCA)49,85, and geometrical modeling67.

Regression methods (linear mixed effect model, logistic regression, multivariable regression model, partial least squares regression), Parametric methods (ANOVA, independent sample t-test, Fisher’s test, z-score, Hierarchical Linear Modeling, Generalized linear model), Non-parametric methods (Mann–Whitney U test, Kruskal–Wallis test, Wilcoxon signed-rank test, Newman–Keuls test, Kolmogorov–Smirnov test), Probabilistic methods (hidden Markov model, Erlang-Coxian distribution, dynamic Bayesian network, naive Bayes), Frequency analysis (fast Fourier transform, wavelet analysis), Correlation (Correlation coefficient, autocorrelation), Other methods (break point detection algorithm, network analysis, AUC-ROC), Dimensionality reduction (principal component analysis, singular value decomposition), SVM Support vector machine, Tree-based methods (Decision trees, random forest, XGBoost, Adaboost), NCC Nearest centroid classifier, Clustering Gaussian mixture network, LDA Linear discriminant analysis, GCN Graph convolution network, RNN Recurrent neural network (Long short-term memory network, Spatio-temporal attention-based model, convolutional recurrent neural network), FNN Feedforward neural networks (shallow multilayer neural network, multi-layered perceptron), Deep learning ensemble methods (Part-Based Modeling, Weakly supervised online action detection setting), CNN Convolutional neural network. a Number of publications using various statistical, machine learning (ML), or deep learning methods to automate or predict motor-related outcomes using portable technology, and their chronological year of publication from 2009–2024; b Performance of technology-based methods to automate the GMA; c Performance of technology-based methods to predict future developmental outcomes.

Alternative methods have utilized machine learning models, dominated by support vector machines29,31,43,51,58,77,78,86, tree-based approaches (Decision Trees; Random Forest; XGBoost; Adaboost)47, and ensemble methods49,53,59,61,64,66,90. However, recent years have witnessed a fast-growing adoption of deep learning techniques such as Graph Convolutional Networks and Convolutional Neural Networks65,71,83,88. Model performance was usually determined via leave-one-out cross-validation, or 5- or 10-fold cross-validation.
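As a rough illustration of leave-one-out cross-validation, the sketch below holds out one infant at a time, fits a toy nearest centroid classifier on the remaining infants, and scores the held-out prediction. The single movement feature, the labels, and the function names are hypothetical and are not drawn from any reviewed publication.

```python
from statistics import mean


def nearest_centroid_predict(train, query):
    """Predict the label whose class mean is closest to the query feature."""
    centroids = {}
    for label in {lab for _, lab in train}:
        centroids[label] = mean(x for x, lab in train if lab == label)
    return min(centroids, key=lambda lab: abs(centroids[lab] - query))


def leave_one_out(samples):
    """Hold out each infant once, train on the rest, return the predictions."""
    predictions = []
    for i, (feature, _) in enumerate(samples):
        train = samples[:i] + samples[i + 1:]  # every infant except infant i
        predictions.append(nearest_centroid_predict(train, feature))
    return predictions


# Hypothetical data: one (movement feature, label) pair per infant.
samples = [(0.9, "typical"), (1.1, "typical"), (1.0, "typical"),
           (0.3, "atypical"), (0.4, "atypical"), (0.2, "atypical")]
predicted = leave_one_out(samples)
correct = sum(p == lab for p, (_, lab) in zip(predicted, samples))
print(f"{correct}/{len(samples)} held-out infants classified correctly")  # 6/6
```

When an infant contributes several recordings, the held-out unit would presumably need to be the infant rather than the recording, so that data from the same child never appears on both sides of the split.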

Performance

For publications aiming to automate the GMA, we extracted the sensitivity and specificity for the best-performing algorithms from 22 publications16,34,49,51,53,54,56,57,76,90 (Fig. 4b). Of these, four publications employed sensors, achieving sensitivities between 49–85% and specificities between 57–83% to classify the typicality of GMA34. However, three of the four publications developed their models with a limited sample of 10 infants, which may limit their generalizability28,29,30. Other publications used video analysis and were able to achieve sensitivities and specificities greater than 80%, with a notably wider range of samples spanning 12–1127 infants46,49,53,54,56,76,90. One publication achieved perfect sensitivity and specificity with only 12 infants56. Additionally, we observed that the performance of algorithms targeting writhing movements was generally lower than that of algorithms focused on fidgety movements (Supplementary Fig. 1). This may be attributed to the lower sensitivity of GMA during the writhing period, which presents a greater challenge for distinguishing motor abnormalities early on.

In addition to GMA, one publication attempted to automate the CHOP INTEND (which assesses motor skills for infants with neuromuscular disorders, with a maximal score of 64) and achieved a model with a mean error of 5.495 points on that 64-point scale88. Three publications attempted to automate the HINE (which assesses neurological development and motor skills in infants and young children, with a maximal score of 78), with the best model achieving 80% sensitivity and 89% specificity67,68,89.

For publications exploring new sensor-based features to illuminate differences between infants with typical development and infants with or at risk of motor deficits39,40, promising measures to date have included posture adjustment patterns, tonic response, motion complexity, barcoding, and sample entropy38,40,41,74. Abrishami et al. found that full-day sensor recordings exhibited more pronounced group differences than brief, 5-min recordings, particularly for measures related to movement duration and acceleration39. For publications using video-based features to illuminate group differences65,75,94,97, promising measures have included jerkiness, periodicity, cosine similarity, autocorrelation, and variability of movement41,94.
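To make one of these measures concrete, the following is a minimal sketch of sample entropy under common textbook conventions: template length m, an absolute tolerance r, Chebyshev distance, and self-matches excluded. The parameter defaults and the two test signals are assumptions for illustration; the reviewed publications may have used different settings, for example a tolerance scaled to the signal's standard deviation.

```python
import math


def sample_entropy(signal, m=2, r=0.2):
    """Return ln(B / A), i.e. -ln(A / B), where B counts pairs of length-m
    templates within tolerance r and A counts the same for length m + 1."""
    n = len(signal)

    def count_matches(length):
        # Use the same n - m template start points for both template lengths.
        templates = [signal[i:i + length] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):  # j > i excludes self-matches
                distance = max(abs(a - b) for a, b in zip(templates[i], templates[j]))
                if distance <= r:
                    count += 1
        return count

    b = count_matches(m)
    a = count_matches(m + 1)
    if a == 0 or b == 0:
        return float("inf")  # no matches found: entropy is undefined here
    return math.log(b / a)


# Hypothetical signals: a strictly alternating one and an irregular one
# generated by the logistic map (a standard chaotic toy system).
regular = [0.0, 1.0] * 20
irregular = []
x = 0.4
for _ in range(60):
    x = 3.9 * x * (1.0 - x)
    irregular.append(x)

print(sample_entropy(regular))    # 0.0: every length-2 match extends to length 3
print(sample_entropy(irregular))  # larger: matches often fail to extend
```

Lower values indicate more repetitive, predictable movement traces, which is the intuition behind using the measure to contrast groups.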

For publications aiming to predict developmental outcomes, we extracted or calculated the sensitivity and specificity for the best-performing algorithms from 13 publications42,45,47,86,93 (Fig. 4c). Of these, 10 targeted the prediction of a CP diagnosis at 1–5 years of age42,45,47,82,83 (44–93% sensitivity, 63–99% specificity), one targeted the prediction of neuromotor delay (79% sensitivity, 78% specificity)86, and two targeted the risk of ASD (57–71% sensitivity, 97% specificity)84,93. The sample sizes across these publications exhibited a wide range spanning from 30 to 557 infants. When predicting CP, the best-performing method to date was by Ihlen et al., who presented the Computer-based Infant Movement Assessment (CIMA) model to assess CP risk80. They used video data from 377 high-risk infants and achieved 93% sensitivity and 82% specificity. This method performed nearly as well as the GMA to predict CP, which is estimated to have 97% sensitivity and 89% specificity at 9–20 weeks corrected age based on a recent review report98.

Two publications included in this review predicted infant neuromotor deficits using wearable sensors42,43. Fitter et al. found that adding acceleration data improved outcome prediction by over 10% compared to using clinical scores from the Alberta Infant Motor Scale (AIMS) alone43. Rahmati et al. highlighted the informative frequency range of 25–35 Hz for data acquisition and achieved 86% sensitivity and 88% specificity with an unbalanced sample (14 with CP and 64 without CP)42.

Four publications investigated the prediction of different developmental disabilities. Doi et al. predicted tendencies towards ASD at 18 months of age with 71.4% sensitivity and 97.1% specificity as the best performance93. Garello et al. predicted the likelihood of infants developing neuromotor disorders with 78.6% sensitivity and 77.8% specificity86. Taleb et al. predicted infants with spinal muscular atrophy with 85.5% accuracy87.

Discussion

Over the last 15 years, there have been substantial advancements in portable technologies used to assess infant motor development17,18,25,99. Recent approaches have nearly achieved parity with gold standard clinical assessments for their respective sample cohorts49,56,61,80,83.

Since the 1970s, wearable sensor systems have gained attention in the monitoring of infant physiological behaviors due to their portable size, high temporal resolution, minimal privacy invasion, extended battery life and low cost100. Common limitations for wearable sensors include low spatial resolution, data loss, drifting, and synchronization issues101,102. A common practice is to place these sensors on the wrists and ankles, likely owing to the heightened activity and ease of placement in these anatomical locations.

The publications reviewed here demonstrated varied data recording and acquisition techniques. Most publications only recorded infant behavior for less than 1 h in lab or clinic settings, despite previous publications advocating full-day or 2-day recordings for an accurate representation of infant behavior39,103,104. While there is concern that the weight of wearable sensors may interfere with infant movements, a previous study showed that wearable sensors did not change the frequency of leg movements for infants under one year of age105,106. However, heavy and bulky sensors would likely affect the movement of small or very young infants, such as those in a Neonatal Intensive Care Unit (NICU), which could be addressed by using lightweight, miniaturized sensors107. During data collection, caregiver handling may also introduce significant noise to sensor data, constituting approximately 15% of the overall recording108,109. This may not be an issue for GMA automation since there is no handling during the recording. However, it will significantly affect the other assessments that require clinician-infant interactions. One group designed a wearable jumpsuit embedded with sensors to estimate an infant’s posture and, subsequently, motor development levels110. This approach may offer a more robust solution to community monitoring, by selectively applying algorithms to the most meaningful or relevant infant activities. Future research should also consider distinguishing infant movements from passive carrying and enhancing caregiver education to improve data quality (e.g., sensor positioning and wear compliance).

Moreover, the integration of sensor fusion approaches can significantly enhance the accuracy and scalability of infant monitoring systems31,41. By combining data from accelerometers, pressure sensors, video recordings, or other data streams, researchers can achieve a more holistic understanding of infant behavior and movement patterns. For instance, fusing accelerometer data with video data allows for precise motion tracking, compensating for the low spatial resolution of wearables with the spatial information of video while retaining the high temporal resolution of the sensors. Future research should explore these sensor fusion approaches to enhance the scalability and applicability of infant behavior monitoring in diverse environments.

Video-based methods offer a straightforward means of designing customized measures of motor development, by drawing on traditional clinical observations and expert knowledge and aligning these with objective, automated motion tracking data from video. Compared to marker-based motion capture systems in controlled lab settings111, video-based (markerless) motion capture utilizing standard cameras is more cost-effective, flexible for various environments, and easy to set up, which makes it more practical for deployment to the home.

Most publications opted for 2D cameras due to affordability and high spatial resolution but faced limitations in temporal resolution. In contrast, newer 3D cameras offer depth information and higher temporal resolution but require substantial computational power and storage17. Generally, video-based methods may struggle with performance if parts of the infant’s body are occluded or if movements occur perpendicularly to the camera’s view. These issues can be addressed through additional development of infant-specific 3D pose estimation algorithms, sensor integration for parallel data acquisition, or the use of depth cameras. For example, Yin et al. adopted a novel method using three portable cameras to estimate the 3D pose without the need for prior knowledge of the 3D skeleton or camera calibration62.

Video-based methods are also susceptible to variations in environmental conditions, including lighting, skin tone, attire, and background noise, which can impact assessment quality and reliability17. Future investigations should maintain consistency in data collection approaches and prioritize algorithm development that accounts for diverse populations and environments. For instance, Schroeder et al. introduced a Skinned Multi-Infant Linear Model, enabling the creation of de-identified 3D model videos while mitigating the influence of skin color and background noise112.

Mobile solutions, such as smartphone apps, offer a promising avenue for scaling these methods for home environments. These apps could allow for real-time data collection, analysis, and visualization, enabling the monitoring of infant behavior outside of clinical settings25,65. In addition, smartphone cameras and embedded sensors could be used to capture video and accelerometry data concurrently, eliminating the need for specialized equipment. Given the ubiquity of smartphones, video-based methods should prioritize handheld smartphone videos that can be easily recorded by caregivers, which would require accommodating a range of video qualities. Previous work has demonstrated the feasibility of analyzing caregiver-recorded smartphone videos for GMA scoring and markerless motion tracking25,65,113. Future work should explore the feasibility of analyzing smartphone videos locally on smartphone applications.

A wide variety of statistical and machine learning models have been employed to automate, illuminate, and predict infant motor development and outcomes, with an increased adoption of deep learning methods over the past few years.

Video-based techniques have implemented image differencing or pose estimation models. Image differencing methods, such as optical flow, track pixel changes across frames to detect infant body parts and movement patterns114, but they can be significantly affected by lighting conditions, background patterns, and skin color. To reduce the influence of background factors, many groups have adopted skeleton-based pose estimation models such as OpenPose91 to track infant body poses during a recording and then analyze motion from the pose estimates. OpenPose was the most prevalent model among the reviewed publications. However, this model was originally designed to recognize poses from adult skeleton structures91. Infants differ substantially from adults in their anatomical proportions and distribution of poses, which can drastically affect performance. Retraining these models using infant poses and domain adaptation techniques has been shown to reduce pose estimation error by 60%55. Future work should continue validating and refining these tools for infant populations. In contrast, algorithms that do not rely on skeleton models, such as DeepLabCut92, may utilize transfer learning or other techniques to train an infant-specific pose tracking model, but these often necessitate a substantial amount of high-quality, manually labeled data for training. To alleviate manual labeling efforts, future research should consider utilizing weakly-supervised or self-supervised models to reduce annotation labor59,60. For example, Luo employed a weakly supervised online action detection approach to detect fidgety movements, achieving comparable results with only 20% of the video duration compared to utilizing the entire video59.
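The sensitivity of image differencing to lighting and background can be seen in a deliberately simple sketch. The code below is plain frame differencing, not optical flow and not any reviewed publication's pipeline: it reports the fraction of pixels whose grayscale value changes between consecutive frames. The frames, the threshold, and the function names are hypothetical.

```python
def quantity_of_motion(prev_frame, next_frame, threshold=10):
    """Fraction of pixels whose grayscale intensity changed by more than
    `threshold` between two consecutive frames."""
    changed = 0
    total = 0
    for prev_row, next_row in zip(prev_frame, next_frame):
        for p, q in zip(prev_row, next_row):
            total += 1
            if abs(p - q) > threshold:
                changed += 1
    return changed / total if total else 0.0


def motion_series(frames, threshold=10):
    """Quantity of motion for each pair of consecutive frames."""
    return [quantity_of_motion(a, b, threshold) for a, b in zip(frames, frames[1:])]


# Hypothetical 4 x 4 grayscale frames: a bright 2 x 2 patch shifts one pixel right.
frame0 = [[0, 0, 0, 0],
          [200, 200, 0, 0],
          [200, 200, 0, 0],
          [0, 0, 0, 0]]
frame1 = [[0, 0, 0, 0],
          [0, 200, 200, 0],
          [0, 200, 200, 0],
          [0, 0, 0, 0]]
print(motion_series([frame0, frame1, frame1]))  # [0.25, 0.0]
```

Because every changed pixel counts as motion, a flicker in room lighting or a moving blanket pattern would raise the score just as an arm movement does, which is one reason skeleton-based tracking is attractive.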

A significant proportion of the examined publications primarily concentrated on quantitative kinematic or black box features in individual body parts when incorporating machine learning algorithms. However, qualitative information, such as infant behavioral state and environmental setup, is also considered by clinicians during traditional developmental assessments. For the GMA specifically, which assesses a gestalt perception of an infant’s spontaneous movements, features may relate to movement complexity, variation, fluidity, and smoothness, characterizing the overall patterns and qualities rather than isolated components11. Future studies should consider incorporating features related to this qualitative clinical knowledge, including behavioral states, and quantifying the gestalt patterns115.

Many publications suffer from small or unbalanced sample sizes, limiting their generalizability to new cohorts. For instance, Zhang et al. achieved perfect scores (1.0) on all metrics using the MINI-RGBD dataset with only 12 synthetic infant videos56. However, their algorithm showed decreased performance when exposed to introduced noise, suggesting potential overfitting. Rahmati et al. also cautioned against excessive features with a limited subject pool, which could yield suboptimal algorithm performance, and decided to generate separate algorithms for video and sensor data42. Longer or multiple recordings may enhance performance, as demonstrated by Adde et al., who found that using two videos for CP prediction outperformed a single video45. Previous studies using sensors also demonstrated that at least two days of recordings better represented infants’ typical behaviors103,104. Future studies should test their algorithms on a population sample with participants from diverse backgrounds and opt for long recordings if feasible.

Some publications only reported a single performance metric, such as accuracy28,31,32,50,58,81, which can be problematic if there is an imbalanced distribution of positive and negative cases that a model is attempting to predict. Given the typically low prevalence rates of developmental disabilities, a model could exhibit high accuracy by always assuming the negative case. To enhance the robustness of future investigations and facilitate inter-study comparisons, future publications should report the confusion matrix and provide, at minimum, sensitivity, specificity, and the F1 score for a more comprehensive and comparable assessment of outcomes.
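The point about accuracy under class imbalance can be checked with a small worked example. The sketch below computes the recommended metrics from a confusion matrix for a hypothetical classifier that labels every infant as negative; the 14 positive and 64 negative cases mirror the group sizes reported above for one unbalanced cohort and are used here only to illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # infants with the condition, flagged by the model
    fn: int  # infants with the condition, missed by the model
    fp: int  # infants without the condition, flagged by the model
    tn: int  # infants without the condition, correctly cleared

    def accuracy(self) -> float:
        total = self.tp + self.fn + self.fp + self.tn
        return (self.tp + self.tn) / total

    def sensitivity(self) -> float:
        positives = self.tp + self.fn
        return self.tp / positives if positives else 0.0

    def specificity(self) -> float:
        negatives = self.tn + self.fp
        return self.tn / negatives if negatives else 0.0

    def f1(self) -> float:
        denom = 2 * self.tp + self.fp + self.fn
        return 2 * self.tp / denom if denom else 0.0


# Hypothetical model that always predicts the negative case.
always_negative = ConfusionMatrix(tp=0, fn=14, fp=0, tn=64)
print(f"accuracy    = {always_negative.accuracy():.2f}")     # 0.82
print(f"sensitivity = {always_negative.sensitivity():.2f}")  # 0.00
print(f"specificity = {always_negative.specificity():.2f}")  # 1.00
print(f"F1 score    = {always_negative.f1():.2f}")           # 0.00
```

An accuracy of 0.82 looks respectable in isolation, yet the model detects none of the affected infants, which only the sensitivity and F1 score reveal.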

A consistent issue that emerged from our analysis is the potential for demographic disparities. Most publications to date are from developed countries, and no publication included cohorts from Africa or Central and South America. Moreover, no publication disclosed the racial distribution or socioeconomic status of its participants, factors which have been reported to influence motor and cognitive developmental trajectories116. Though GMA was not influenced by most demographic factors, standardized assessments such as the Bayley show significant differences across countries and genders117,118. Thus, it is not clear whether these models could be generalizable or scalable to infants outside their immediate geographical location, which limits our ability to interpret their usability.

Another aspect that can be improved concerning participants’ characteristics is their age and conditions. Most infants within the included publications were at risk of CP, at ages that align with the sensitive periods for evaluating writhing movements (term age) and fidgety movements (3–5 months corrected age) using the GMA12. For predicting CP diagnosis, it is important to explore algorithms that focus on ages beyond the sensitive period of GMA since many infants may not undergo the screening process until 6 months or older, thereby surpassing the narrow GMA window. A limited number of publications in this review targeted other neurodevelopmental disorders such as ASD. Future work should consider broadening participant cohorts to encompass individuals with diverse developmental disabilities, characterized by distinct atypical movement patterns. Because few clinical tools are available for many developmental disorders at many ages, data-driven methods could help fill this gap. Furthermore, given the association between motor, language and cognitive development, it is advisable to explore the predictive capabilities of these technologies in other developmental domains119,120.

For data collection, despite the long-term vision and advantage of developing portable technologies to improve access to screening, only 27% of included publications collected data in a home setting43,44,50,52,60,62,63,65,70,73,75,85,87. Portable technologies have the advantage of collecting data within diverse home settings, whereas traditional motion tracking is confined to laboratory settings104. The home and community may offer a more accurate representation of an infant’s everyday movement behaviors and abilities than a brief clinic visit, and thus better capture whether persistent motor deficits truly exist. This is especially likely for children with mild or difficult-to-observe symptoms. However, algorithms developed from data in a laboratory or clinical setting may not be valid for data collected in the home, since the algorithms may not be trained to account for movement differences between these environments121. Moreover, the variable and dynamically changing home environment could pose another challenge when using video analysis. To improve ecological validity, future research should focus on adapting algorithms to sensor and video recordings from various environments.

There was no standardized approach for cross-validating the technologies against clinical outcomes in the included publications. Diagnostic times ranged from 1 to 7 years of age. Relying on a pre-specified temporal endpoint for obtaining a clinical outcome may inadvertently introduce bias into the algorithm, such as for mild cases of CP that may not be conclusively diagnosed at 1 year old. Some publications adopted Bayley scores as a determinant of CP outcomes47,81,85,86, although the Bayley is not inherently a diagnostic tool. Alternatively, some publications used information from caregiver reports as their target outcomes39,43,45. In general, practices that are heavily contingent upon whether the infant underwent routine screening can potentially introduce bias against infants and families with reduced access to healthcare. Future studies should use internationally recognized diagnostic protocols at appropriate ages to ensure the accuracy and reliability of diagnostic conclusions.

There is a promising trend with the emergence of new algorithms that have nearly achieved parity with current gold standard clinical assessments. This achievement is an exciting initial stride toward establishing a fully automated tool for assessing infant motor development. However, there is still no scalable technique that is ready for deployment and integration into clinical practice.

When developing and selecting the best models for potential clinical use, it is crucial to determine an expectation for what the model should accomplish in clinical practice (such as for early screening and outcome prediction). Algorithms with high sensitivity would reduce the risk of overlooking children with motor deficits (true positives), thereby enabling earlier provisions for additional clinical assessments, caregiver resources, or interventions to maximize infant outcomes. However, they may also incorrectly flag infants without deficits as at risk (more false positives). Conversely, algorithms with high specificity would minimize unnecessary caregiver distress and unwarranted medical procedures and treatments for children without motor deficits (true negatives), though they may fail to detect infants with more nuanced symptoms (more false negatives). Models with both high sensitivity and specificity are certainly preferred, but selecting the best model for implementation often requires evaluating the tradeoff between these two metrics.
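The tradeoff between sensitivity and specificity can be illustrated with a minimal sketch. The scores and labels below are invented for illustration and are not drawn from any reviewed publication, and `sens_spec_at` is a hypothetical helper name. The sketch assumes a model that outputs a risk score per infant, with infants flagged as at risk when the score meets a chosen threshold.

```python
def sens_spec_at(scores, labels, threshold):
    """Return (sensitivity, specificity) when scores >= threshold are flagged as at risk.

    labels: 1 = confirmed motor deficit, 0 = no deficit (clinical ground truth).
    """
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if y == 1 and s >= threshold)  # deficits detected
    fn = sum(1 for s, y in pairs if y == 1 and s < threshold)   # deficits missed
    tn = sum(1 for s, y in pairs if y == 0 and s < threshold)   # correctly cleared
    fp = sum(1 for s, y in pairs if y == 0 and s >= threshold)  # incorrectly flagged
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical risk scores for 4 infants with deficits and 6 without.
scores = [0.95, 0.80, 0.62, 0.40, 0.70, 0.45, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

for threshold in (0.35, 0.50, 0.75):
    sens, spec = sens_spec_at(scores, labels, threshold)
    print(f"threshold={threshold:.2f}  sensitivity={sens:.2f}  specificity={spec:.2f}")
```

With these invented data, lowering the threshold raises sensitivity at the cost of specificity, and raising it does the reverse. A screening application might favor the lower threshold, whereas a confirmatory application might favor the higher one.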

There are some limitations to this review. First, the exclusion of non-English language publications may have resulted in the omission of pertinent research from developing nations. Further review would be needed to determine whether additional work has been done to assess infant motor development with portable technologies in these nations. Second, the heterogeneity of model performance metrics among the included publications restricts our ability to directly compare all publications in each area of focus. Despite the limitations of sensitivity and specificity as comprehensive metrics for assessing algorithm performance, we selected these as the primary metrics for comparison because they were reported in the majority of included publications. Third, the limited availability of publications addressing neurodevelopmental delays or disorders other than CP prevented additional performance comparisons between infant risk groups or diagnoses. Lastly, there was no single clinical outcome reported consistently across publications, so for the purpose of comparability we treated the reported outcomes as equally valid. Future research should consider adopting standardized designs and analytical methods to facilitate more robust and comparative reporting of research findings against existing literature.

Overall, the field of portable technologies for evaluating infant motor development is experiencing rapid growth. There is significant interest in developing tools to assess infants throughout the first year of life, with applications for different medical conditions and outcomes. However, no method has yet achieved the capability to perform as an off-the-shelf early assessment tool for the clinic or community. Additional performance improvement and validation across diverse cohorts are needed before these methods can reliably inform clinical care for infants with motor deficits. Algorithms should be robust, scalable, user-friendly, and able to integrate seamlessly into clinical practice or home environments to effectively address the complexity and variability of infant movements, with customizable features to suit specific clinical evaluation needs.

Methods

Search strategy

We searched four databases (SCOPUS, PubMed, MEDLINE [Ovid], and Web of Science) for publications through July 2024. Keywords were selected based on the relevant populations, technologies, outcomes, and diagnoses (Fig. 5). The detailed search strings used to generate results are provided in Table 4.

Inclusion criteria

Inclusion criteria for the systematic review were based on a modification of the PICO guidelines122. Publications were eligible for the review if they included a cohort of infants aged 1 year or younger who were at risk for atypical or delayed motor development (Population). Portable technology had to be used to record motor behavioral data (Intervention: Technology). We considered portable technologies to be devices or systems that could be practically deployed in the home and community settings. Publications were required to use automated/computerized measures from these technologies rather than human annotation or interpretation. Included publications were required to report a group comparison or classification. Publications were also required to validate the technology-based outcomes against standardized clinical assessments or confirmed diagnoses, or to compare outcomes between different risk cohorts (Comparison). The outcome of interest was the performance of portable technologies at assessing motor deficits relative to the clinical ground truth (Outcome).

Exclusion criteria

Publications were ineligible if they were review papers or book chapters, were written in languages other than English, or included fewer than five participants in the study sample.
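As an illustration only, the inclusion and exclusion criteria above could be encoded as a screening check. This is a minimal sketch, not the procedure used in this review: the `Record` fields and the `exclusion_reasons` helper are hypothetical names that paraphrase the stated criteria, and in practice each field would be filled in by a human reviewer.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Record:
    # All field names are hypothetical; they paraphrase the criteria in the text.
    publication_type: str              # e.g. "article", "review", "book chapter"
    language: str
    n_participants: int
    infants_one_year_or_younger: bool  # Population (age)
    at_risk_cohort: bool               # Population (risk of atypical/delayed motor development)
    portable_technology: bool          # Intervention: Technology
    automated_measures: bool           # computerized measures, not human annotation
    validated_or_compared: bool        # Comparison


def exclusion_reasons(r: Record) -> List[str]:
    """Return the list of unmet criteria; an empty list means the record is eligible."""
    reasons = []
    if r.publication_type in {"review", "book chapter"}:
        reasons.append("review paper or book chapter")
    if r.language != "English":
        reasons.append("not written in English")
    if r.n_participants < 5:
        reasons.append("fewer than five participants")
    if not (r.infants_one_year_or_younger and r.at_risk_cohort):
        reasons.append("population criterion not met")
    if not r.portable_technology:
        reasons.append("no portable technology")
    if not r.automated_measures:
        reasons.append("no automated measures")
    if not r.validated_or_compared:
        reasons.append("no validation or group comparison")
    return reasons


# Two invented records: one eligible, one excluded on two grounds.
eligible = Record("article", "English", 40, True, True, True, True, True)
excluded = Record("review", "English", 3, True, True, True, True, True)
print(exclusion_reasons(eligible))
print(exclusion_reasons(excluded))
```

Returning the reasons rather than a single yes/no value keeps a record of why each publication was excluded, which is useful when two reviewers compare their decisions.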

Review process

Four reviewers (WD, RA, EJ, RR) participated in the review process, including screening, full text review, and data extraction. Each publication was reviewed by two reviewers independently. Disagreements were resolved through discussion between the reviewers.

After the initial literature search, publications were screened based on title and abstract. Publications that passed screening were reviewed using the inclusion and exclusion criteria. For eligible publications, data were extracted about the study design, sample size, participant demographics, settings, and comparison to diagnostic standards. Data were also extracted about the portable technologies, including their type and placement, data acquisition methods, analysis, and performance. Each publication was categorized as Automate, Illuminate, or Predict based on the objectives, methodology, and potential applications described in the study. Specifically, publications were categorized according to whether they sought to automatically score a standardized pediatric assessment (Automate), obtain a quantitative measure related to movement characteristics or motor deficits (Illuminate), and/or predict the developmental outcomes of at-risk infants (Predict). Publications could belong to more than one category. Data extraction and visualization were performed by two reviewers independently using Python and Google Sheets. Some performance metrics (sensitivity and specificity) were calculated from confusion matrices, when provided. No estimation or manipulation of missing data was performed during data synthesis. Disagreements were resolved through discussion between the reviewers.
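The calculation of sensitivity and specificity from a reported confusion matrix can be sketched as follows. The function name `metrics_from_confusion` and the counts are hypothetical and serve only as an example; the sketch assumes a binary confusion matrix and returns `None` rather than an estimate when a metric cannot be computed, in keeping with the statement that no missing data were estimated.

```python
from typing import Dict, Optional


def metrics_from_confusion(tp: int, fn: int, fp: int, tn: int) -> Dict[str, Optional[float]]:
    """Compute sensitivity and specificity from binary confusion-matrix counts.

    tp: at-risk infants correctly identified    fn: at-risk infants missed
    fp: unaffected infants incorrectly flagged  tn: unaffected infants correctly cleared
    """
    positives = tp + fn  # all infants with the clinical outcome
    negatives = tn + fp  # all infants without the clinical outcome
    return {
        # Sensitivity = TP / (TP + FN); undefined if there are no positive cases.
        "sensitivity": tp / positives if positives > 0 else None,
        # Specificity = TN / (TN + FP); undefined if there are no negative cases.
        "specificity": tn / negatives if negatives > 0 else None,
    }


# Hypothetical counts, for illustration only.
example = metrics_from_confusion(tp=18, fn=2, fp=5, tn=45)
print(example)
```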

Data availability

The authors declare that all data supporting the findings of this study are available within the paper and its Supplementary Information files.

References

Zablotsky, B. et al. Prevalence and Trends of Developmental Disabilities among Children in the United States: 2009-2017. Pediatrics 144, e20190811 (2019).

Lord, C. et al. Autism from 2 to 9 years of age. Arch. Gen. Psychiatry 63, 694–701 (2006).

te Velde, A., Morgan, C., Novak, I., Tantsis, E. & Badawi, N. Early Diagnosis and Classification of Cerebral Palsy: An Historical Perspective and Barriers to an Early Diagnosis. J. Clin. Med. 8, 1599 (2019).

Granild-Jensen, J. B., Rackauskaite, G., Flachs, E. M. & Uldall, P. Predictors for early diagnosis of cerebral palsy from national registry data. Dev. Med. Child Neurol. 57, 931–935 (2015).

Boychuck, Z. et al. Current Referral Practices for Diagnosis and Intervention for Children with Cerebral Palsy: A National Environmental Scan. J. Pediatr. 216, 173–180.e1 (2020).

Novak, I. et al. Early, accurate diagnosis and early intervention in cerebral palsy: Advances in diagnosis and treatment. JAMA Pediatr. 171, 897–907 (2017).

Morgan, C. et al. Effectiveness of motor interventions in infants with cerebral palsy: a systematic review. Dev. Med. Child Neurol. 58, 900–909 (2016).

Spittle, A., Orton, J., Anderson, P. J., Boyd, R. & Doyle, L. W. Early developmental intervention programmes provided post hospital discharge to prevent motor and cognitive impairment in preterm infants. Cochrane Database Syst. Rev. 2015, CD005495 (2015).

Hadders-Algra, M., Klip-Van den Nieuwendijk, A., Martijn, A. & van Eykern, L. A. Assessment of general movements: towards a better understanding of a sensitive method to evaluate brain function in young infants. Dev. Med. Child Neurol. 39, 88–98 (1997).

Haataja, L. et al. Optimality score for the neurologic examination of the infant at 12 and 18 months of age. J. Pediatr. 135, 153–161 (1999).

Prechtl, H. F. et al. An early marker for neurological deficits after perinatal brain lesions. Lancet 349, 1361–1363 (1997).

Einspieler, C. & Prechtl, H. F. Prechtl’s assessment of general movements: a diagnostic tool for the functional assessment of the young nervous system. Ment. Retard. Dev. Disabil. Res. Rev. 11, 61–67 (2005).

Peyton, C. et al. Inter-observer reliability using the General Movement Assessment is influenced by rater experience. Early Hum. Dev. 161, 105436 (2021).

Bright, M. A., Zubler, J., Boothby, C. & Whitaker, T. M. Improving Developmental Screening, Discussion, and Referral in Pediatric Practice. Clin. Pediatr. 58, 941–948 (2019).

Bailey, D. B. J., Skinner, D. & Warren, S. F. Newborn screening for developmental disabilities: reframing presumptive benefit. Am. J. Public Health 95, 1889–1893 (2005).

Adde, L., Helbostad, J. L., Jensenius, A. R., Taraldsen, G. & Støen, R. Using computer-based video analysis in the study of fidgety movements. Early Hum. Dev. 85, 541–547 (2009).

Silva, N. et al. The future of General Movement Assessment: The role of computer vision and machine learning - A scoping review. Res. Dev. Disabil. 110, 103854 (2021).

Irshad, M. T., Nisar, M. A., Gouverneur, P., Rapp, M. & Grzegorzek, M. AI Approaches Towards Prechtl’s Assessment of General Movements: A Systematic Literature Review. Sensors 20, 5321 (2020).

Marschik, P. B. et al. A Novel Way to Measure and Predict Development: A Heuristic Approach to Facilitate the Early Detection of Neurodevelopmental Disorders. Curr. Neurol Neurosci. Rep. 17, 43 (2017).

Marcroft, C., Khan, A., Embleton, N. D., Trenell, M. & Plötz, T. Movement recognition technology as a method of assessing spontaneous general movements in high risk infants. Front. Neurol. 5, 284 (2014).

Redd, C. B., Karunanithi, M., Boyd, R. N. & Barber, L. A. Technology-assisted quantification of movement to predict infants at high risk of motor disability: A systematic review. Res. Dev. Disabil. 118, 104071 (2021).

Raghuram, K. et al. Automated movement recognition to predict motor impairment in high-risk infants: a systematic review of diagnostic test accuracy and meta-analysis. Dev. Med. Child Neurol. 63, 637–648 (2021).

Valencia, A., Viñals, C., Alvarado, E., Balderas, M. & Provasi, J. Prechtl’s method to assess general movements: Inter-rater reliability during the preterm period. PLoS One 19, e0301934 (2024).

Bosanquet, M., Copeland, L., Ware, R. & Boyd, R. A systematic review of tests to predict cerebral palsy in young children. Dev. Med. Child Neurol. 55, 418–426 (2013).

Marschik, P. B. et al. Mobile Solutions for Clinical Surveillance and Evaluation in Infancy-General Movement Apps. J. Clin. Med. 12, (2023).

Örtqvist, M. et al. Reliability of the Motor Optimality Score-Revised: A study of infants at elevated likelihood for adverse neurological outcomes. Acta Paediatr. 112, 1259–1265 (2023).

O’Brien, M. K., Hohl, K., Lieber, R. L. & Jayaraman, A. Automate, Illuminate, Predict: A Universal Framework for Integrating Wearable Sensors in Healthcare. Digit. Biomark. 8, 149–158 (2024).

Singh, M. & Patterson, D. J. Involuntary gesture recognition for predicting cerebral palsy in high-risk infants. In International Symposium on Wearable Computers (ISWC) 2010 1–8 (IEEE, 2010).

Gravem, D. et al. Assessment of Infant Movement With a Compact Wireless Accelerometer System. J. Med. Device. 6, 021013 (2012).

Fan, M., Gravem, D., Cooper, D. M. & Patterson, D. J. Augmenting Gesture Recognition with Erlang-Cox Models to Identify Neurological Disorders in Premature Babies. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing 411–420 (Association for Computing Machinery, 2012).

Machireddy, A. et al. A video/IMU hybrid system for movement estimation in infants. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. IEEE Eng. Med. Biol. Soc. Annu. Int. Conf. 2017, 730–733 (2017).

Bao, B. et al. Intelligence Sparse Sensor Network for Automatic Early Evaluation of General Movements in Infants. Adv. Sci. 11, e2306025 (2024).

Hamer, E. G., Dijkstra, L. J., Hooijsma, S. J., Zijdewind, I. & Hadders-Algra, M. Knee jerk responses in infants at high risk for cerebral palsy: an observational EMG study. Pediatr. Res. 80, 363–370 (2016).

Celik, H. I. et al. Using the center of pressure movement analysis in evaluating spontaneous movements in infants: a comparative study with general movements assessment. Ital. J. Pediatr. 49, 165 (2023).

Boxum, A. G. et al. Postural adjustments in infants at very high risk for cerebral palsy before and after developing the ability to sit independently. Early Hum. Dev. 90, 435–441 (2014).

Hamer, E. G. et al. The tonic response to the infant knee jerk as an early sign of cerebral palsy. Early Hum. Dev. 119, 38–44 (2018).

Rihar, A. et al. CareToy: Stimulation and Assessment of Preterm Infant’s Activity Using a Novel Sensorized System. Ann. Biomed. Eng. 44, 3593–3605 (2016).

Deng, W., Marmelat, V., Vanderbilt, D. L., Gennaro, F. & Smith, B. A. Barcoding, linear and nonlinear analysis of full-day leg movements in infants with typical development and infants at risk of developmental disabilities: Cross-sectional study. Infancy 28, 650–666 (2023).

Abrishami, M. S. et al. Identification of developmental delay in infants using wearable sensors: full-day leg movement statistical feature analysis. IEEE J. Transl. Eng. Heal. Med. 7, 2800207 (2019).

Wilson, R. B., Vangala, S., Elashoff, D., Safari, T. & Smith, B. A. Using wearable sensor technology to measure motion complexity in infants at high familial risk for autism spectrum disorder. Sensors 21, 616 (2021).

Iverson, J. M. et al. Reaching While Learning to Sit: Capturing the Kinematics of Co-Developing Skills at Home. Dev. Psychobiol. 66, e22527 (2024).

Rahmati, H. et al. Frequency Analysis and Feature Reduction Method for Prediction of Cerebral Palsy in Young Infants. IEEE Trans. Neural Syst. Rehabil. Eng. Publ. IEEE Eng. Med. Biol. Soc. 24, 1225–1234 (2016).

Fitter, N. T., Funke, R., Pulido, J. C., Mataric, M. J. & Smith, B. A. Toward Predicting Infant Developmental Outcomes From Day-Long Inertial Motion Recordings. IEEE Trans. Neural Syst. Rehabil. Eng. Publ. IEEE Eng. Med. Biol. Soc. 28, 2305–2314 (2020).

Franchi De’ Cavalieri, M. et al. Wearable accelerometers for measuring and monitoring the motor behaviour of infants with brain damage during CareToy-Revised training. J. Neuroeng. Rehabil. 20, 62 (2023).

Adde, L., Helbostad, J., Jensenius, A. R., Langaas, M. & Støen, R. Identification of fidgety movements and prediction of CP by the use of computer-based video analysis is more accurate when based on two video recordings. Physiother. Theory Pract. 29, 469–475 (2013).

Støen, R. et al. Computer-based video analysis identifies infants with absence of fidgety movements. Pediatr. Res. 82, 665–670 (2017).

Orlandi, S. et al. Detection of Atypical and Typical Infant Movements using Computer-based Video Analysis. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. IEEE Eng. Med. Biol. Soc. Annu. Int. Conf. 2018, 3598–3601 (2018).

McCay, K. D., Ho, E. S. L., Marcroft, C. & Embleton, N. D. Establishing Pose Based Features Using Histograms for the Detection of Abnormal Infant Movements. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. IEEE Eng. Med. Biol. Soc. Annu. Int. Conf. 2019, 5469–5472 (2019).

Dai, X., Wang, S., Li, H., Yue, H. & Min, J. Image-Assisted Discrimination Method for Neurodevelopmental Disorders in Infants Based on Multi-feature Fusion and Ensemble Learning. In Brain Informatics (eds. Liang, P., Goel, V. & Shan, C.) 105–114 (Springer International Publishing, 2019).

Tsuji, T. et al. Markerless Measurement and Evaluation of General Movements in Infants. Sci. Rep. 10, 1422 (2020).

Doroniewicz, I. et al. Writhing Movement Detection in Newborns on the Second and Third Day of Life Using Pose-Based Feature Machine Learning Classification. Sensors 20, 5986 (2020).

Nguyen-Thai, B. et al. A Spatio-Temporal Attention-Based Model for Infant Movement Assessment From Videos. IEEE J. Biomed. Heal. Inform. 25, 3911–3920 (2021).

Sakkos, D. et al. Identification of Abnormal Movements in Infants: A Deep Neural Network for Body Part-Based Prediction of Cerebral Palsy. IEEE Access 9, 94281–94292 (2021).

Wu, Q., Xu, G., Wei, F., Chen, L. & Zhang, S. RGB-D Videos-Based Early Prediction of Infant Cerebral Palsy via General Movements Complexity. IEEE Access 9, 42314–42324 (2021).

Hashimoto, Y. et al. Automated Classification of General Movements in Infants Using Two-Stream Spatiotemporal Fusion Network. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2022 (eds. Wang, L., Dou, Q., Fletcher, P. T., Speidel, S. & Li, S.) 753–762 (Springer Nature Switzerland, 2022).

Zhang, H., Shum, H. P. H. & Ho, E. S. L. Cerebral Palsy Prediction with Frequency Attention Informed Graph Convolutional Networks. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. IEEE Eng. Med. Biol. Soc. Annu. Int. Conf. 2022, 1619–1625 (2022).

Tong, W., Yang, C., Li, X., Shi, F. & Zhai, G. Cost-Effective Video-Based Poor Repertoire Detection for Preterm Infant General Movement Analysis. In Proceedings of the 2022 5th International Conference on Image and Graphics Processing 51–58 (Association for Computing Machinery, 2022).

Gong, X. et al. Preterm infant general movements assessment via representation learning. Displays 75, 102308 (2022).

Luo, T. et al. Weakly Supervised Online Action Detection for Infant General Movements. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2022 (eds. Wang, L., Dou, Q., Fletcher, P. T., Speidel, S. & Li, S.) 721–731 (Springer Nature Switzerland, 2022).

Ni, H. et al. Semi-supervised body parsing and pose estimation for enhancing infant general movement assessment. Med. Image Anal. 83, 102654 (2023).

Gao, Q. et al. Automating General Movements Assessment with quantitative deep learning to facilitate early screening of cerebral palsy. Nat. Commun. 14, 8294 (2023).

Yin, W. et al. A self-supervised spatio-temporal attention network for video-based 3D infant pose estimation. Med. Image Anal. 96, 103208 (2024).

Morais, R. et al. Robust and Interpretable General Movement Assessment Using Fidgety Movement Detection. IEEE J. Biomed. Heal. Inform. 27, 5042–5053 (2023).

Soualmi, A., Alata, O., Ducottet, C., Patural, H. & Giraud, A. Mean 3D Dispersion for Automatic General Movement Assessment of Preterm Infants. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. IEEE Eng. Med. Biol. Soc. Annu. Int. Conf. 2023, 1–5 (2023).

Passmore, E. et al. Automated identification of abnormal infant movements from smart phone videos. PLOS Digit. Heal. 3, e0000432 (2024).

Huang, X. et al. Automatic quantitative intelligent assessment of neonatal general movements with video tracking. Displays 82, 102658 (2024).

Dogra, D. P. et al. Toward automating Hammersmith pulled-to-sit examination of infants using feature point based video object tracking. IEEE Trans. Neural Syst. Rehabil. Eng. Publ. IEEE Eng. Med. Biol. Soc. 20, 38–47 (2012).

Dey, P., Dogra, D. P., Roy, P. P. & Bhaskar, H. Autonomous vision-guided approach for the analysis and grading of vertical suspension tests during Hammersmith Infant Neurological Examination (HINE). Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. IEEE Eng. Med. Biol. Soc. Annu. Int. Conf. 2016, 863–866 (2016).

Marchi, V. et al. Automated pose estimation captures key aspects of General Movements at eight to 17 weeks from conventional videos. Acta Paediatr. 108, 1817–1824 (2019).

Tacchino, C. et al. Spontaneous movements in the newborns: a tool of quantitative video analysis of preterm babies. Comput. Methods Prog. Biomed. 199, 105838 (2021).

Letzkus, L., Pulido, J. V., Adeyemo, A., Baek, S. & Zanelli, S. Machine learning approaches to evaluate infants’ general movements in the writhing stage-a pilot study. Sci. Rep. 14, 4522 (2024).

Adde, L. et al. Early motor repertoire in very low birth weight infants in India is associated with motor development at one year. Eur. J. Paediatr. Neurol. EJPN J. Eur. Paediatr. Neurol. Soc. 20, 918–924 (2016).

Caruso, A. et al. Early Motor Development Predicts Clinical Outcomes of Siblings at High-Risk for Autism: Insight from an Innovative Motion-Tracking Technology. Brain Sci. 10, 379 (2020).

Shin, H. I. et al. Deep learning-based quantitative analyses of spontaneous movements and their association with early neurological development in preterm infants. Sci. Rep. 12, 3138 (2022).

Park, M. W. et al. Reduction in limb-movement complexity at term-equivalent age is associated with motor developmental delay in very-preterm or very-low-birth-weight infants. Sci. Rep. 14, 8432 (2024).

Adde, L. et al. Early prediction of cerebral palsy by computer-based video analysis of general movements: a feasibility study. Dev. Med. Child Neurol. 52, 773–778 (2010).

Stahl, A. et al. An optical flow-based method to predict infantile cerebral palsy. IEEE Trans. Neural Syst. Rehabil. Eng. Publ. IEEE Eng. Med. Biol. Soc. 20, 605–614 (2012).

Rahmati, H., Aamo, O. M., Stavdahl, Ø., Dragon, R. & Adde, L. Video-based early cerebral palsy prediction using motion segmentation. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. IEEE Eng. Med. Biol. Soc. Annu. Int. Conf. 2014, 3779–3783 (2014).

Raghuram, K. et al. Automated movement analysis to predict motor impairment in preterm infants: a retrospective study. J. Perinatol. J. Calif. Perinat. Assoc. 39, 1362–1369 (2019).

Ihlen, E. A. F. et al. Machine Learning of Infant Spontaneous Movements for the Early Prediction of Cerebral Palsy: A Multi-Site Cohort Study. J. Clin. Med. 9, 5 (2019).

Moro, M. et al. A markerless pipeline to analyze spontaneous movements of preterm infants. Comput. Methods Prog. Biomed. 226, 107119 (2022).

Raghuram, K. et al. Automated Movement Analysis to Predict Cerebral Palsy in Very Preterm Infants: An Ambispective Cohort Study. Children 9, 843 (2022).

Groos, D. et al. Development and Validation of a Deep Learning Method to Predict Cerebral Palsy From Spontaneous Movements in Infants at High Risk. JAMA Netw. Open 5, e2221325 (2022).

Doi, H. et al. Spatiotemporal patterns of spontaneous movement in neonates are significantly linked to risk of autism spectrum disorders at 18 months old. Sci. Rep. 13, 13869 (2023).

Chambers, C. et al. Computer Vision to Automatically Assess Infant Neuromotor Risk. IEEE Trans. Neural Syst. Rehabil. Eng. Publ. IEEE Eng. Med. Biol. Soc. 28, 2431–2442 (2020).

Garello, L. et al. A Study of At-term and Preterm Infants’ Motion Based on Markerless Video Analysis. In 2021 29th European Signal Processing Conference (EUSIPCO) 1196–1200 (EUSIPCO, 2021).

Taleb, A. et al. Improve Pose Estimation Model Performance with Unlabeled Data. In 2023 Congress in Computer Science, Computer Engineering, & Applied Computing (CSCE) 1316–1321 (CSCE, 2023).

Soran, B., Lowes, L. & Steele, K. M. Evaluation of Infants with Spinal Muscular Atrophy Type-I Using Convolutional Neural Networks. In Computer Vision – ECCV 2016 Workshops (eds. Hua, G. & Jégou, H.) 495–507 (Springer International Publishing, 2016).

Dogra, D. P. et al. Video analysis of Hammersmith lateral tilting examination using Kalman filter guided multi-path tracking. Med. Biol. Eng. Comput. 52, 759–772 (2014).

McCay, K. D. et al. A Pose-Based Feature Fusion and Classification Framework for the Early Prediction of Cerebral Palsy in Infants. IEEE Trans. Neural Syst. Rehabil. Eng. Publ. IEEE Eng. Med. Biol. Soc. 30, 8–19 (2022).

Cao, Z., Hidalgo, G., Simon, T., Wei, S.-E. & Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 43, 172–186 (2021).

Mathis, A. et al. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci. 21, 1281–1289 (2018).

Doi, H. et al. Prediction of autistic tendencies at 18 months of age via markerless video analysis of spontaneous body movements in 4-month-old infants. Sci. Rep. 12, 18045 (2022).

Kanemaru, N. et al. Jerky spontaneous movements at term age in preterm infants who later developed cerebral palsy. Early Hum. Dev. 90, 387–392 (2014).

Abrishami, M. S. et al. Identification of developmental delay in infants using wearable sensors: Full-day leg movement statistical feature analysis. IEEE J. Transl. Eng. Heal. Med. 7, 1–7 (2019).

Deng, W. et al. Protocol for a randomized controlled trial to evaluate a year-long (NICU-to-home) evidence-based, high dose physical therapy intervention in infants at risk of neuromotor delay. PLoS One 18, e0291408 (2023).

Marchi, V. et al. Movement analysis in early infancy: Towards a motion biomarker of age. Early Hum. Dev. 142, 104942 (2020).

Kwong, A. K. L., Fitzgerald, T. L., Doyle, L. W., Cheong, J. L. Y. & Spittle, A. J. Predictive validity of spontaneous early infant movement for later cerebral palsy: a systematic review. Dev. Med. Child Neurol. 60, 480–489 (2018).

Spittle, A. J. et al. The Baby Moves prospective cohort study protocol: using a smartphone application with the General Movements Assessment to predict neurodevelopmental outcomes at age 2 years for extremely preterm or extremely low birthweight infants. BMJ Open 6, e013446 (2016).

Zhu, Z., Liu, T., Li, G., Li, T. & Inoue, Y. Wearable sensor systems for infants. Sensors 15, 3721–3749 (2015).

Chen, H., Xue, M., Mei, Z., Bambang Oetomo, S. & Chen, W. A Review of Wearable Sensor Systems for Monitoring Body Movements of Neonates. Sensors 16, 2134 (2016).

Samuelsson, O., Lindblom, E. U., Björk, A. & Carlsson, B. To calibrate or not to calibrate, that is the question. Water Res. 229, 119338 (2023).

Deng, W., Nishiyori, R., Vanderbilt, D. L. & Smith, B. A. How many days are necessary to represent typical daily leg movement behavior for infants at risk of developmental disabilities? Sensors 20, 5344 (2020).

Deng, W., Trujillo-Priego, I. A. & Smith, B. A. How many days are necessary to represent an infant’s typical daily leg movement behavior using wearable sensors? Phys. Ther. 99, 730–738 (2019).

Jiang, C. et al. Determining if wearable sensors affect infant leg movement frequency. Dev. Neurorehabil. 00, 1–4 (2017).

Dibiasi, J. & Einspieler, C. Load perturbation does not influence spontaneous movements in 3-month-old infants. Early Hum. Dev. 77, 37–46 (2004).

Jeong, H. et al. Miniaturized wireless, skin-integrated sensor networks for quantifying full-body movement behaviors and vital signs in infants. Proc. Natl. Acad. Sci. USA 118, 1–10 (2021).

Zhou, J., Schaefer, S. Y. & Smith, B. A. Quantifying Caregiver Movement when Measuring Infant Movement across a Full Day: A Case Report. Sensors 19, 2886 (2019).

Worobey, J., Vetrini, N. R. & Rozo, E. M. Mechanical measurement of infant activity: a cautionary note. Infant Behav. Dev. 32, 167–172 (2009).

Airaksinen, M. et al. Automatic Posture and Movement Tracking of Infants with Wearable Movement Sensors. Sci. Rep. 10, 169 (2020).

Tsushima, H., Morris, M. E. & McGinley, J. Test-retest reliability and inter-tester reliability of kinematic data from a three-dimensional gait analysis system. J. Jpn. Phys. Ther. Assoc. Rigaku ryoho 6, 9–17 (2003).

Schroeder, A. S. et al. General Movement Assessment from videos of computed 3D infant body models is equally effective compared to conventional RGB video rating. Early Hum. Dev. 144, 104967 (2020).

Adde, L. et al. In-Motion-App for remote General Movement Assessment: a multi-site observational study. BMJ Open 11, e042147 (2021).

Horn, B. K. P. & Schunck, B. G. Determining optical flow. Artif. Intell. 17, 185–203 (1981).

Jäkel, F., Singh, M., Wichmann, F. A. & Herzog, M. H. An overview of quantitative approaches in Gestalt perception. Vis. Res. 126, 3–8 (2016).

Karasik, L. B., Adolph, K. E., Fernandes, S. N., Robinson, S. R. & Tamis-LeMonda, C. S. Gahvora cradling in Tajikistan: Cultural practices and associations with motor development. Child Dev. 94, 1049–1067 (2023).

Hua, J. et al. The reliability and validity of Bayley-III cognitive scale in China’s male and female children. Early Hum. Dev. 129, 71–78 (2019).

Kosmann, P. et al. Make Bayley III Scores Comparable between United States and German Norms-Development of Conversion Equations. Neuropediatrics 54, 147–152 (2023).

Einspieler, C., Bos, A. F., Libertus, M. E. & Marschik, P. B. The General Movement Assessment Helps Us to Identify Preterm Infants at Risk for Cognitive Dysfunction. Front. Psychol. 7, 406 (2016).

Salavati, S. et al. The association between the early motor repertoire and language development in term children born after normal pregnancy. Early Hum. Dev. 111, 30–35 (2017).

O’Brien, M. K. et al. Activity Recognition for Persons With Stroke Using Mobile Phone Technology: Toward Improved Performance in a Home Setting. J. Med. Internet Res. 19, e184 (2017).

Richardson, W. S., Wilson, M. C., Nishikawa, J. & Hayward, R. S. The well-built clinical question: a key to evidence-based decisions. ACP J. Club 123, A12–A13 (1995).

Acknowledgements

This work was supported by the Max Nader Center for Rehabilitation Technologies and Outcomes Research.

Author information

Contributions

All authors have read and approved the manuscript. W.D.: Conceptualization and design, literature search and screening, data extraction and analysis, and manuscript writing; M.O.: Conceptualization and design, manuscript writing, supervision and project administration; R.A.: Literature search and screening, data extraction and analysis, and manuscript writing; R.R.: Literature screening, data extraction and analysis, and manuscript writing; E.J.: Literature screening, data extraction and analysis, and manuscript writing; A.J.: Conceptualization and design, manuscript writing, supervision and project administration, funding acquisition.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Deng, W., O’Brien, M.K., Andersen, R.A. et al. A systematic review of portable technologies for the early assessment of motor development in infants. npj Digit. Med. 8, 63 (2025). https://doi.org/10.1038/s41746-025-01450-3

DOI: https://doi.org/10.1038/s41746-025-01450-3
