Abstract

Cochlear implants (CIs) have transformed the lives of over one million individuals with hearing impairment, including children as young as nine months. This systematic review critically examines the current literature on the application of machine learning (ML) techniques for predicting CI outcomes. A comprehensive search identified 20 relevant studies. Imaging-based studies demonstrated high predictive accuracy for language and speech perception outcomes. Neural function measures provided a feasible way to assess the functional status of the auditory nerve, while clinical and audiological predictors were extensively explored through data mining techniques. Additionally, ML-based speech enhancement algorithms showed promise in improving speech recognition in noisy environments, a major challenge for CI users. Despite these advancements, a significant gap remains in developing models that can be directly integrated into CI programming. Integrating ML into CIs— in areas like signal processing and device programming—holds immense potential to support personalized patient care for hearing-impaired individuals.

Similar content being viewed by others

Introduction

Cochlear implants (CIs) are surgically implanted neural prosthesis for individuals with deafness. More than one million hearing-impaired adults and children are able to hear and understand spoken language due to the technological success of CIs. In fact, it is now common for hearing-impaired infants to receive CIs prior to their first birthday, at around 9 months1. CIs have transformed the lives of individuals with moderate to profound sensorineural hearing loss by directly stimulating the auditory nerve, leading to significant improvements in speech perception for both children and adults, and near normal language acquisition in children2,3,4. However, outcomes in speech perception vary widely among CI recipients5,6. Several factors contribute to this variability, including age at implantation, the electrode-neuron interface, the etiology of hearing loss, and neurocognitive functions7,8,9.

Despite the knowledge about the factors, predicting individual CI outcomes remains challenging10,11. For example, age at implantation is a well-known variable, but it alone does not guarantee successful outcomes with CIs. This is largely due to the inherent heterogeneity of patient data and the complex, multifactorial nature of human performance with CIs. Predicting individual outcomes in CI recipients is crucial for both children and adults for several reasons. It facilitates personalized rehabilitation, allowing clinicians to tailor post-implantation therapy for children to enhance communication abilities and develop spoken language. This also helps families set realistic expectations about potential improvements in hearing and speech perception. For adults, understanding individual variability in outcomes is equally important. It allows clinicians to identify CI recipients needing additional services, such as group training or counseling. The predictive capability also plays an important role in pre-operative assessments, guiding clinical decision-making about who is a suitable candidate for CI. Overall, predicting outcomes enhances patient care, family expectations and appropriate planning for resource allocation in the clinic, ensuring that each CI recipient—whether a child or an adult—receives the best possible personalized intervention.

Currently, many important decisions regarding CI often rely on guesswork and a trial-and-error approach by CI teams. This is particularly evident in programming CI devices for infants, where precise mapping is essential. As the age for CI surgery decreases—often around 9 months—it is important to establish objective parameters for programming these devices. Optimal mapping not only enhances the effectiveness of the CIs but also reduces logistics strain on the family and the clinic. By incorporating predictive models, clinicians can make more informed decisions that cater to the unique rehabilitation needs of each infant with hearing loss.

Although linear models are commonly employed to integrate the predictive factors, their capacity to explain the variance in CI outcomes is limited. A major reason could be that non-linear behavior commonly occurs within human systems that are difficult to capture using traditional statistical methods12. Traditional statistical methods often fail to model these complexities, as they are not well-suited to capture the hidden, nonlinear relationships among variables. Also, they are restricted by several underlying assumptions related to the data structure, such as normality, homogeneity, multicollinearity, etc. Practically, linear models do not provide information about prediction accuracy for new patients. More importantly, significant statistical variables may not have strong predictivity, and strong predictive variables could fail to be statistically significant13. There has been a longstanding effort to develop robust decision-making tools that combine multiple predictive factors to support clinicians in determining CI outcomes. Machine learning (ML) offers a promising approach to address this challenge. ML algorithms can analyze large datasets, identify patterns, and make data-driven predictions without relying on predefined assumptions. For example, compared to general linear models (GLMs), ML can handle diverse data types and explore nonlinear relationships, providing a more comprehensive understanding of the factors contributing to CI outcomes12,13,14,15 ML can be used to holistically predict outcomes from several variables, where individual variables may have disparate effects but produce cohesive effects in combination16. Recent studies show the potential of ML to predict speech perception outcomes in CI recipients. Studies have applied various ML techniques, including decision trees, random forests, support vector machines, and neural networks, to analyze patient data and identify important predictor variables17.

In principle, ML can also significantly improve the programming of CIs. By analyzing large datasets from previous CI recipients, ML algorithms can identify patterns and correlations that traditional methods might miss. This enables understanding of programming parameters that lead to optimal outcomes for different patient profiles. Predictive modeling allows clinicians to model individual responses to various settings based on factors such as age and type of hearing loss, facilitating accurate CI mapping, especially for infants. Additionally, ML can automate adjustments to CI parameters based on real-time feedback, leading to optimal programming of CI devices. As more data is collected, these models can continually improve, potentially enhancing speech-in-noise recognition, which continues to be a significant challenge for CI recipients (Table 1).

The goal of this study was to conduct a systematic review of existing literature on the application of ML techniques to predict CI outcomes. Specifically, the study aimed to (1) assess the performance of different ML algorithms in predicting speech perception and production outcomes, (2) determine the patient characteristics and CI-related factors that are associated with CI outcomes as identified by ML models, and (3) identify the relationship between neural or brain region activity and ML-predicted outcomes, providing insights into the underlying mechanisms of CI performance. By addressing these objectives, this review contributes to a state-of-the-art understanding of the factors influencing CI outcomes that can inform the development of more effective CI outcome prediction models (Table 2).

Results

A comprehensive search was conducted across all relevant databases to identify studies examining the application of ML to predict CI outcomes. A total of 1580 articles were retrieved. After removing duplicates, 1537 abstracts were screened for eligibility based on pre-defined criteria. Following the abstract screening, 31 full-text articles were selected for a detailed evaluation. Ultimately, 20 articles were deemed pertinent for information extraction based on their relevance to the research question. Two investigators reviewed the methodology of the selected articles in detail using the Newcastle Ottawa Scale adapted for cross-sectional studies for quality assessment18. The studies were categorized into four distinct groups based on the variables used to predict outcomes after CI surgery: (i) Brain imaging variables (studies: pediatric = 3, adult = 2); (ii) Neural function measures (studies: pediatric = 1, both age groups = 1); (iii) Clinical, audiological, and speech perception/production variables (studies: pediatric = 3, adult = 6); and (iv) Algorithms for speech enhancement (studies: adult = 2, simulation study=1); all adult CI studies included participants with post-lingual hearing loss. Additionally, the performance of various ML algorithms used within the selected studies was compared to evaluate their effectiveness in predicting CI outcomes (Table 3).

Prediction based on studies of brain imaging measures in pediatric CI users

Several studies highlight the growing potential of combining neuroimaging techniques with ML models to predict CI outcomes in children16,19,20. These studies show the role of early auditory brain development and the preservation of specific brain regions in predicting CI outcomes, demonstrating the potential of neuroimaging and ML techniques in personalizing CI candidacy and post-surgical expectations (Table 4).

Tan et al.16 used functional magnetic resonance imaging (fMRI) to predict language skills in children with congenital sensorineural hearing loss (SNHL) before the CI surgery (n = 23, mean age: 20 months, range = 8–67 months). Children with normal hearing (NH) served as controls (n = 21, mean age: 12.1 months, range = 8–17 months). Language skills were assessed two years after surgery using the Clinical Evaluation of Language Fundamentals-Preschool, Second Edition (CELF-P2) for the 16 children who completed follow-up; seven children were unavailable for follow-up. Prediction accuracy was compared between supervised and semi-supervised support vector machine (SVM) classifiers. Input feature vectors for the models were generated using contrast maps of fMRI data, processed with a general linear model and a Bag-of-Words (BoW) approach. Results revealed that cortical activation patterns observed during fMRI in infancy correlated with language performance two years post-CI. The semi-supervised SVM model, using the BoW feature extraction approach, outperformed the supervised model, achieving a classification accuracy of 93.8% and an area under the curve (AUC) of 0.92, compared to the supervised model’s accuracy of 68.8% and AUC of 0.71. The study identified the left temporal gyri (superior and middle) as the most predictive brain region for distinguishing effective from ineffective CI users. However, regression analysis—controlling for age at implantation and preoperative hearing levels—showed that this region alone could not fully separate the two groups. When a second feature region, located in the right cerebellum, was included, the model successfully classified effective versus ineffective users. Remarkably, despite the small sample size, the study demonstrated that just two features derived from fMRI contrast maps (speech versus silence) were sufficient to classify the groups using a semi-supervised learning approach. This underscores the potential of fMRI-based biomarkers to predict CI outcomes early in development.

Feng et al.19 applied SVM based on neural morphological data from MRI to predict speech perception abilities in individual children with CIs. In order to identify the brain structures impacted or unaffected by auditory deprivation, they compared neuroanatomical differences using voxel-based morphometry and multivoxel pattern similarity between bilateral SNHL (n = 37, mean age at implantation = 17.9 months, range = 8–38 months) and NH children (n = 40; mean age = 18 months, range = 8–38 months). Speech perception was assessed using the speech reception in quiet test, conducted before surgery and six months after CI activation. A linear SVM classifier was applied to distinguish CI candidates from NH participants. The classifier achieved high accuracy, with grey matter multivoxel pattern similarity and density reaching 95.9% and 97.3%, respectively, and white matter multivoxel pattern similarity and density achieving 97.3% and 91.9%, respectively. The study identified that brain regions unaffected by auditory deprivation, particularly those involved in auditory association and cognitive functions, were the most reliable predictors for classification. Additionally, the dorsal auditory network, a critical component for speech perception, emerged as the most robust predictor of future speech perception outcomes in children with CIs. These findings indicate the importance of preserved auditory and cognitive brain regions in predicting CI outcomes.

Song et al.20 examined the utility of functional connections derived from fMRI combined with ML models to predict CI outcomes in children and to identify SNHL. The sample included 68 children with SNHL (mean age = 46.24 months, SD = 24.38 months) and 34 NH children (mean age = 45.62 months, SD = 27.63 months). Additionally, 52 children with SNHL who underwent CI were analyzed to build a model predicting postoperative auditory performance, measured by the categories of auditory performance score. They used kernel principal component analysis for dimensionality reduction of functional connections and three algorithms for classification: SVM, logistic regression, and k-nearest neighbor (kNN). A voting ensemble method, which averaged the predicted probabilities of the three classifiers, achieved an AUC of 0.84 for distinguishing SNHL from NH. For predicting categories of auditory performance scores after CI surgery, a multiple logistic regression model achieved an accuracy of 82.7%. Although the multiple regression model had a higher AUC compared to the individual classifiers, the differences were not statistically significant (p > 0.05, DeLong test). Similarly, the voting ensemble method achieved a higher AUC than individual classifiers, but this improvement was also not significant (p > 0.05). These findings indicate that fMRI-based functional connections combined with ML algorithms hold promise for the prediction of CI outcomes in children. However, the model did not support a clinical diagnosis of SNHL.

Prediction based on studies of brain imaging measures in adult CI users

Sun et al.21 explored how accurately voxel-based morphometry could predict word recognition scores in adult CI users (n = 47) by analyzing gray matter density and structural changes in cortical regions. Adults with unilateral SNHL (n = 35) served as controls. They applied random forest (RF) and linear SVM regression models to MRI brain scans. The region of interest (ROI)-based method produced a higher mean absolute error (MAE) of 15.9. In contrast, the cluster-based approach, which combines clinical features with imaging data, yielded a more accurate prediction with a relatively lower MAE of 14.25 (a lower MAE indicates better model prediction). This finding highlights the advantage of integrating diverse data sources for improving word recognition score predictions. The study also showed that the right medial temporal cortex and right thalamus are key brain regions for predicting word recognition scores in CI users. Among clinical features, the duration of deafness had the strongest influence on predictions, followed by the age at CI surgery. Additionally, the RF model consistently outperformed the SVM model across both the ROI-based and cluster-based approaches. This study highlights the importance of combining brain structural imaging with clinical data for word recognition score predictions and demonstrates the higher accuracy of RF models over SVMs for outcome predictions in adults.

Kyong et al.22 examined the role of cortical cross-modal plasticity changes in predicting CI outcomes in adult CI users. They used electroencephalography (EEG) measures such as cortical auditory evoked potentials, cortical somatosensory evoked potentials, and cortical visual evoked potentials as biomarkers to predict CI outcomes. They evaluated CI outcomes in terms of features like sensor level (latency and amplitude), source level (current source density), and a combination of both. An SVM was applied to 13 datasets from 3 patients. Prediction accuracy differed between modalities; interestingly, tactile stimuli showed the highest accuracy (auditory: 82.71%, tactile: 98.88%, visual: 93.55%). Classification accuracy was generally higher when combining sensor and source features, compared to using sensor or source features alone—except for the tactile modality, where source-level features alone achieved comparable accuracy. These findings suggest that features from auditory, tactile, and visual stimulation at both the sensor and source levels, or a combination, can serve as inputs for ML models to predict CI outcomes. Additionally, the study supports the idea that cross-modal brain plasticity resulting from deafness may provide a basis for predicting CI outcomes.

Prediction based on neural function measures

There were two studies in this category: one focused on pediatric populations23 and one on CI users from both age groups24. Lu et al.23 used a SVM classifier to predict postoperative outcomes in children with anatomically normal cochlea but cochlear nerve deficiency. A total of 70 children with CIs, with a mean age of 27.31 months (SD = 13.92 months), were included in the study. Multiple data types such as demographic, radiographic, audiologic, and speech assessments, were included to build the model. The outcome measures included categories of auditory performance, speech intelligibility rating, and infant/toddler meaningful auditory integration scale after two years of CI. Post-operative hearing and speech rehabilitation outcomes were classified using the SVM algorithm. They reported that children with a higher number of nerve bundles and a larger vestibulocochlear nerve area showed better CI outcomes in terms of both hearing and speech rehabilitation measures. A significant positive correlation was observed between categories of auditory performance scores and speech intelligibility rating at two years post-CI surgery, as well as the number of identifiable nerve bundles and the area of the vestibulocochlear nerve. The model predicted postoperative hearing with an accuracy of 71% and speech rehabilitation with an accuracy of 93%, suggesting that a relatively functional cochlear nerve tends to result in better CI outcomes.

Skidmore et al.24 compared linear regression, SVM, and logistic regression models to predict auditory nerve function in bilateral CI users. The input variables were derived from electrically evoked compound action potentials (eCAP) refractory recovery and input/output (I/O) functions. Study participants were children with cochlear nerve deficiency (n = 23, mean age = 3.42 years), children with normal-sized cochlear nerves (n = 29, mean age = 3.18 years), and adults (n = 20, mean age = 69.22 years) with normal-sized cochlear nerves. The three models predicted two distinct distributions of cochlear nerve indices for cochlear nerve deficiency and normal-sized cochlear nerves, with classification accuracy of 0.93 for the linear model, 0.91 for SVM, and 0.95 for logistic regression. In adult CI users, although the models varied slightly, cochlear nerve indices were found to correlate with Consonant-Nucleus-Consonant word and AzBio sentence scores in quiet. These findings suggest that machine learning models can accurately predict auditory nerve function in bilateral CI users, with cochlear nerve indices correlating with speech recognition performance.

Prediction based on clinical, audiological, speech perception, and production data studies in pediatric CI users

Few studies have explored ML models to predict developmental and speech outcomes in children with CIs25,26,27. By integrating clinical and audiological data into the ML models, these studies offer additional insights into the known factors that influence CI success.

Abousetta et al.25 examined a scoring system to improve CI candidacy selection. Data from 100 children, with a mean age of 78.28 months (S.D = 31.63), were collected from three rehabilitation centers following CI surgery. Statistical and ML approaches were applied to analyze the data. They used metrics related to language, phonological, and social deficits to quantify developmental delays (in months) in these areas. The classification predictive models achieved superior validation accuracy compared to linear regression in predicting phonological deficits (88.11%). However, the accuracy for language and social deficits was moderate (56.66% and 40.46%, respectively). In the regression analysis, the RF model outperformed the evaluated models in predicting language age and phonological deficit. The MAE for the RF model was 8.95 and 5.51 months for language and phonological deficits, respectively. The linear regression model had an MAE of 8.29 months for the social deficit. The average duration of auditory deprivation and family support emerged as significant factors affecting language, phonological, and social deficits following CI. These variables each contributed more than 17% to the overall model weight in predicting the outcomes. Thus, the scoring system incorporating statistical and ML approaches effectively predicted developmental deficits in children post-CI, with the RF model showing superior accuracy in predicting phonological and language deficits. At the same time, factors like auditory deprivation and family support played significant roles in the outcomes.

Byeon26 used the RF model to investigate the factors affecting articulation accuracy in children with CI. The study involved 82 children (4 to 8 years, mean age = 6.3 years, SD = 3.1 years) who were using a CI for at least one year but less than five years. Articulation accuracy was measured using a nine-sentence speech intelligibility test and was rated by two undergraduate students. The variables used were age, family income, gender, duration of CI use, vocabulary level, and corrected hearing. The model achieved a classification accuracy of 78.80% in predicting speech intelligibility. The analysis revealed that duration of CI use, vocabulary skills, household income, age, and gender significantly influenced speech intelligibility outcomes.

In a follow-up study, Byeon27 used ML models to predict the intelligibility of speech produced by children with CIs. Their study included 91 children with CIs, and 80 college students evaluated their speech samples (speaking and reading). The RF model had the lowest MAE of 0.81 and the lowest root mean squared error (RMSE) of 0.108, indicating that it made the most accurate predictions among the methods tested (multiple regression analysis, SVM regression, and RF). In addition, duration of CI use, auditory training, corrected hearing, and age were some of the variables that influenced speech intelligibility, including pitch, loudness, and speech quality.

Prediction based on clinical, audiological, speech perception, and production data studies in adult CI users

Several studies have utilized ML techniques to predict CI outcomes based on a combination of clinical, audiological, and speech perception data15,17,28,29,30,31. These studies show reasonable accuracy of ML algorithms in predicting CI outcomes, with RF models consistently outperforming other methods, and substantiate the importance of preoperative clinical and audiological factors in determining CI success.

Ramos-Miguel et al.28 used data mining techniques, including the kNN model for classification and linear regression for estimating influential variables, to develop algorithms predicting the performance of adult CI users (n = 60) on disyllabic word tests. Input factors were categorized into demographics, hearing aid use, CI use, audiological data, and quality of life. The kNN algorithm achieved a 90.83% success rate in predicting test performance, while linear regression showed a strong positive correlation between predicted and actual scores (R² = 0.96), with a mean error of 5.99% (SD = 4.25%). Key predictors included disyllabic word scores in the first implanted ear, the time between CI surgeries, the type of hearing loss (prelingual or post-lingual), and residual hearing in the non-implanted ear. Their study demonstrated that data mining techniques could predict word test performance in adult CI users and identified relevant predictors. Similarly, Guerra-Jiménez et al.29 applied a data mining technique (kNN algorithm) to predict the benefits of CI in terms of speech recognition and quality of life in 29 adult CI users. The Glasgow benefit inventory32 and the specific questionnaire33 were used to evaluate the benefits and their association with quality of life. The kNN method achieved 80.7% accuracy for predicting speech recognition and quality of life, while decision tree analysis of Glasgow benefit factors reached 81% accuracy. Linear regression yielded 85% accuracy for speech recognition, 68% for Glasgow benefit inventory, and 71% for specific questionnaires. They identified factors such as age, duration of deafness, prior hearing aid use, and preoperative residual hearing as influencing speech recognition and quality of life.

Kim et al.15 compared the predictive accuracy of the GLMs and RF model for postoperative CI outcomes in 120 adults. The preoperative factors, such as duration of deafness, age at implantation, and duration of hearing aid use, were input as predictors for word recognition scores. The RF model significantly outperformed the GLM (p < 0.00001), achieving a correlation coefficient of 0.96 and an MAE of 6.10 (SD = 4.70), compared to the GLM’s coefficient of 0.70 and MAE of 15.60 (SD = 9.50). Adding principal component analysis to the RF improved the prediction with a coefficient of 0.97 and an MAE of 4.80 (SD = 4.40). The cross-validation of the RF model with a new dataset resulted in a higher MAE of 17.10. This discrepancy likely stemmed from differences in how word recognition scores were measured across the datasets. To address this, the authors assumed a linear bias and applied a post-hoc GLM correction, incorporating the test site as a covariate to combine data from all three sites. This adjustment reduced the MAE of the RF model to 9.60 (SD = 5.20) for the test cohort. Duration of deafness was the strongest preoperative predictor of postoperative word recognition scores, followed by hearing aid use duration and age at CI surgery. In contrast, preoperative hearing ability and word recognition thresholds showed weaker correlations with postoperative word recognition scores. Their findings suggest RF as a robust model for predicting CI outcomes and emphasize the effect of dataset variability on prediction accuracy.

Crowson et al.30 predicted the postoperative outcome in adult CI users using various categorical (e.g., hearing loss cause) and numerical variables (e.g., pure tone average). A total of 282 preoperative variables were included in the ML model. The outcome variable was the hearing in Noise Test (HINT) score after one year of CI. The ML algorithms included neural networks and an XGBoost gradient-boosted tree algorithm. The numerical variables were given as input for the neural networks, and the prediction of HINT scores resulted in an RMSE of 57.0% (a lower RMSE is better) and a classification accuracy of 95.40%. Adding the categorical variables to the model reduced the RMSE to 25% (better) and classification accuracy to 73.30% (reduced). When the XGBoost algorithm was applied with only numerical variables, the HINT score RMSE prediction performance was 25.30%. The most crucial preoperative variable found was the HINT sentence score, followed by age at surgery. The XGBoost ensemble decision tree model could predict the association between the various preoperative measures and HINT scores. They also found the effect of subjective factors like quality of life and vestibular function in predicting postoperative performances.

Shafieibavani et al.17 assessed seven different ML models to predict postoperative word recognition scores in adult CI users and examined how well the outcomes from these models can be generalized to new datasets. Input to models considered various factors such as demographics, hearing test results, medical history, and causes of hearing loss. They used the RF model, extreme gradient boosting with linear models (XGB-Lin), extreme gradient boosting with random forest (XGB-RF), artificial neural networks (ANN), and three more baseline models (linear and RF models) to predict word recognition scores. Additionally, they examined the influence of sample size on the model’s accuracy. XGB-RF with all features achieved the best predictive performance (median MAE: 20.81), while the RF performed similarly (median MAE: 20.76). The performance of the XGB-RF model on the new datasets slightly varied across the three new datasets, and the MAE ranged from 17.90 to 21.80, depending on the dataset. Doubling the sample size improved the model performance by 3%. The XGB-RF achieved the best predictive performance among seven different ML models (XGB-RF, RF, ANN, XGB-Lin model, model A, model B, model C) that were compared, and it was statistically significant. Overall, this study found that the XGB-RF model provided the best predictive performance for postoperative word recognition scores in adult CI users, with slight variations across new datasets and improved accuracy with larger sample sizes.

Zeitler et al.31 examined supervised machine learning classifiers to predict postoperative acoustic hearing preservation in 761 adult CI users, utilizing variables such as standard pure tone average (SPTA), low-frequency pure tone average (LFPTA), hearing preservation pure tone average (HPPTA). Their analysis involved two phases: statistical analysis using multivariate logistic regression to identify associations between covariates and outcomes, followed by feature engineering and evaluation of different supervised learning classifiers. They compared the relative performance of gradient boosting machine (GBM), AdaBoost, RF, SVM, kNN, and Gaussian naive bayes (GNB) in predicting change in hearing thresholds before and one month after CI surgery. The RF model was found to be the superior classifier based on mean performance across validation cycles, and it achieved the highest average accuracy in predicting each variable on the validation set. For SPTA, the RF had a mean MCC (Mathews Correlation Coefficient) of 0.52 (SD = 0.11) and a mean AUC of 0.83 (SD = 0.05). Similar trends were observed for LFPTA (MCC mean = 0.42, SD = 0.12; AUC mean = 0.73, SD = 0.07) and HPPTA (MCC mean = 0.38, SD = 0.10; AUC mean = 0.76, SD = 0.02). GNB showed lower classification performance compared to other algorithms (GBM, SVM, RF, kNN, and AdaBoost classifiers) and was statistically significant (p < 0.001). The preoperative LFPTA and standard PTA were found to be important predictors of hearing preservation. A significant negative association was observed between the predictor variables, such as sudden hearing loss, noise exposure, aural fullness, and abnormal ear anatomy, and the response variable, one-month change in the lowest quartile of the SPTA. In contrast, a significant positive association was found between preoperative LFPTA and the one-month change in the lowest quartile of LFPTA. This study demonstrated the RF model outperformed other ML classifiers in predicting postoperative acoustic hearing preservation in CI recipients, with preoperative LFPTA and standard PTA as important predictors of hearing outcomes.

Together, these studies underline the growing importance of ML for adult CI applications, especially in predicting speech recognition scores and other critical outcomes. The consistent performance of RF models, in particular, suggests that they are among the most reliable methods for predicting CI outcomes across different datasets and clinical settings. These findings support the notion that a combination of preoperative clinical data, audiological assessments, and speech perception measures can be effectively used in predictive modeling to optimize CI outcomes and guide clinical decision-making.

ML-based speech enhancement algorithm

ML techniques can be applied to the design of CI speech processors. To date, studies on speech enhancement algorithms based on ML have been conducted exclusively in adult CI users. Few studies have explored the integration of ML algorithms with CI strategies to address challenges faced by CI users, such as recognizing speech in noise and minimizing interference from multi-talker environments34,35,36,37. Together, these studies emphasize the promising role of ML techniques in optimizing speech processing for CI users to improve communication in noisy environments.

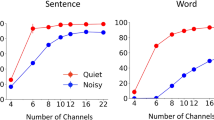

Goehring et al.34 compared speech-in-noise recognition using the Advanced Combination Encoder (ACE) strategy with and without a Neural Network Speech Enhancement (NNSE) algorithm. The NNSE algorithm was designed to improve speech intelligibility in noise by attenuating noise-dominated channels and preserving speech-dominated channels. They evaluated the algorithm measuring speech recognition threshold in 14 CI adult users and employing three types of background noise: speech-weighted noise, multi-talker babble, and International Collegium of Rehabilitative Audiology (ICRA) noise. The NNSE algorithm integrated into the ACE strategy consisted of a feature extraction step and a neural network implementation. The features were extracted from the noisy speech signals and passed through a feedforward neural network. The SRT results showed that the NNSE-enhanced ACE strategy outperformed the unprocessed ACE for all noise types, with improvements ranging from 1.4 to 6.4 dB at different signal-to-noise ratios. They implemented a speaker-dependent and a speaker-independent version of the NNSE algorithm. The speaker-dependent NNSE provided higher gains (up to 6.4 dB in ICRA noise), while the speaker-independent version showed improvements in 2 out of 3 noise types, but to a lower extent. Thus, this study demonstrated that incorporating an NNSE algorithm into the ACE strategy could significantly improve speech-in-noise recognition for CI users, with the speaker-dependent version yielding the most substantial improvements across various noise conditions.

In a simulation study, Grimm et al.35 compared the potential negative impact of channel interaction on speech perception between congenitally deaf and post-lingually deaf adults with CIs. They approximated the speech stimulus to simulate how it stimulates the normal cochlea versus CI. The neural networks were provided with high-resolution (32 channels), like intact cochlea, and low-resolution (16) linearly combined channels similar to CI-delivered speech. The low-resolution networks were designed to mimic the limitations of CI, specifically by introducing channel interaction into the speech data. The models were initially trained on high-resolution speech and then tested on modified, low-resolution speech with channel interaction. Channel interaction in low-resolution speech significantly influenced the performance of the networks. This suggests that spectral degradation due to channel interaction in CIs may impede auditory learning in post-lingual CI users. Overall, these findings provide further evidence for the effect of channel interaction on speech perception in CI users, emphasizing the challenges post-lingual users may face in learning.

Lai et al.36 developed a deep neural network method that combined auditory and visual cues (lip movements) to enhance speech perception for individuals with CIs in noisy environments. Their proposed model, self-supervised learning-based audio-visual speech enhancement (SSL-AVSE), combines visual and auditory signals from the target speaker. The features of the AV-HuBERT model were extracted from the combined audio and visual data, which were then processed using a bidirectional long short-term memory model. The study included 80 participants, with 20 individuals allocated to each noise or sound condition to minimize cross-referencing bias. The SSL-AVSE method significantly improved speech enhancement performance, as measured by the perceptual evaluation of speech quality and short-time objective intelligibility tests. Additionally, the method was evaluated using a CI vocoder to verify its intelligibility. The SSL-AVSE method showed significant improvements in the presence of dynamic noise at different signal-to-noise ratio conditions, and the normal correlation matrix scores improved from 26.5% to 87.2% when compared to the baseline model. Thus, combining auditory and visual cues through the SSL-AVSE model significantly enhances speech perception in noisy environments for CI users.

Borjigin et al.37 investigated the effectiveness of deep neural networks, specifically a recurrent neural network and SepFormer, in reducing multi-talker noise interference for CI users. The study used a custom data set consisting of clean target speech and different noise types mixed at signal-to-noise ratios ranging from 1 to 10 dB datasets. The recurrent neural network architecture consisted of an input layer of 512 units, two hidden long short-term memory layers of 256 units each, and a projection layer of 128 units, while the SepFormer used a single-layer convolutional network as an encoder to learn 2-dimensional features. The scale-invariant source-to-distortion ratio, short-time objective intelligibility, and perceptual evaluation of speech quality validated the performance of the models. The algorithms were tested on 13 adult CI users and showed that both deep neural network models significantly improved speech intelligibility in stationary and non-stationary noise conditions. These results highlight the potential of advanced neural network architectures, such as recurrent neural networks and SepFormer, to enhance auditory experiences for CI users in noisy environments.

Discussion

This systematic review examined the application of ML for CI outcome prediction. Specifically, it critically analyzed the efficacy, limitations, and generalizability of various ML models in predicting CI outcomes across a spectrum of neural markers and clinical features. Among the 20 studies analyzed, various ML models were employed to predict speech intelligibility and perception, with inputs ranging from imaging data to audiological and clinical variables. Additionally, it found the challenges of small sample sizes, overfitting, and the need for further validation studies. The review findings offer insights for personalized CI intervention, particularly emphasizing the role of brain plasticity and its impact on long-term auditory and speech performance.

Numerous clinical variables recognized by ML studies as predictors of CI outcomes have already been identified in the existing literature, including studies that did not use ML approaches38,39,40,41,42,43,44,45,46. For instance, ML models consistently revealed age at the time of surgery as a critical variable for predicting outcomes15,26,30. Similarly, other variables that are known to influence performance in varying degrees included the duration of deafness15,29, the length of hearing aid use prior to implantation15,28,29, pure-tone thresholds31, preoperative residual hearing29, preoperative sentence list scores30, duration of auditory deprivation, family support25, duration of CI usage, auditory training, wear time of the implant, vocabulary skills, and socioeconomic factors such as household income26,27. These findings provide broad external validation for the ML models, as they align with previously identified predictors in non-ML studies.

In addition to these established clinical predictors, ML-based studies identified some novel predictors of CI outcomes. For example, Tan et al.16 applied imaging techniques to reveal that the superior and middle temporal gyri and the right cerebellum are critical regions influencing speech outcomes. Similarly, Feng et al.19 highlighted the significance of auditory association and cognitive networks for effective speech perception. Other important brain regions include the right medial temporal cortex and the right thalamus21, both of which contribute to the development of speech and word recognition abilities. Furthermore, Skidmore et al.24 demonstrated that eCAP metrics differed in cases of normal versus deficient cochlear nerves. The total number of nerve bundles and the overall area of the vestibulocochlear nerve were also found to influence outcome measures. These findings suggest that integrating imaging-based neural features reflecting speech-brain correlates into ML models provides a robust data source for training and deploying more accurate predictors of CI outcomes.

Beyond outcome prediction, this review also highlighted the application of ML algorithms for improving speech processing within CIs. For instance, Goehring et al.34 demonstrated that integrating NNSE within the ACE strategy improved SRTs by 1.4 to 6.4 dB across various background noise types. In a simulation study, Grimm et al.35 demonstrated how channel interaction adversely affects speech perception, particularly for post-lingually deafened adults. This suggests that the spectral degradation inherent in CI technology could impede auditory learning and slow rehabilitation. Complementing this, Lai et al.36 introduced an SSL-AVSE model, which effectively combines auditory and visual cues to enhance speech intelligibility in noisy conditions. Similarly, Borjigin et al.37 confirmed that deep neural networks could reduce multi-talker noise interference, improving speech intelligibility for CI users in both stationary and non-stationary environments. Collectively, these studies highlight the potential of integrating ML techniques into speech enhancement frameworks for designing effective speech-processing algorithms.

The studies reviewed present some limitations that need to be addressed in future work on predicting CI outcomes using ML algorithms. The issue of small sample sizes and the risk of overfitting remains prevalent across studies. A standard problem in using small datasets is that it reduces the robustness and predictive power of ML models16,21,24. For instance, Feng et al.19 identified the need for larger datasets to test the predictive power of their model. However, the limited size of training datasets is often due to inadequate publicly available data repositories. Strategies such as data augmentation should be explored as a potential solution to enhance dataset size, quality, and diversity47. In addition to addressing data limitations, choosing the appropriate classification or ML approach is highly relevant to making robust predictions. While some models, like RF, have shown better performance than traditional regression models15,27 there is considerable variability in prediction accuracy and MAE across different datasets. This variability raises concerns about the generalizability of these models and may limit practical applications. In many cases, ML models performed well on training data but showed poorer performance when tested on new, independent datasets15,17. This issue was exacerbated by the lack of uniformity in clinical data, as clinics use varied protocols, materials, and testing conditions. These differences, along with language-specific factors, challenge standardization and undermine the reliability of models validated with a single dataset. A few studies, such as Shafieibavani et al.17 have addressed this limitation by implementing external validation. They collected large datasets from three independent clinics, trained the model using two datasets, and evaluated its performance on the third. Future studies should incorporate external validation datasets to evaluate prediction robustness and employ standardized evaluation metrics to ensure consistency and comparability across models. This would help to address the overfitting issues and improve the generalizability of ML algorithms.

A notable limitation in many studies was the lack of statistical comparison between different ML models. While studies such as Sun et al.21 reported that RF outperformed linear models in predicting CI outcomes, many others did not use statistical tests to validate these claims. This absence of statistical comparisons makes it difficult to confidently recommend one model over another. Comparing the performance of multiple models becomes even more challenging when different types of data and loss functions are used to train the models. Even when performance metrics provide a quantitative way to assess models, determining the optimal indicator is crucial. For classification tasks, metrics such as accuracy, precision, recall, F1 score, and AUC are commonly used, while MSE and MAE are preferred for regression problems. It is also important to consider whether CI outcome prediction should focus on classification or regression models. Additionally, the lack of standardized metrics across studies complicates cross-study comparisons. For example, different studies have used different outcome measures, such as word recognition scores or other speech perception tests, making it challenging to assess the performance of different models across different studies. This issue will likely persist in clinical datasets, where outcome measures may not always be standardized. To address these challenges, statistical approaches such as cross-validation should become the norm when comparing model performance, helping to ensure more reliable and meaningful evaluations in future research.

Moreover, focusing solely on speech perception tests—while useful—may oversimplify the multifaceted nature of CI outcomes. For example, Kim et al.15 and Crowson et al.30 found a strong association between the duration of deafness and sentence recognition scores, yet these outcome measures may not fully capture the broader spectrum of cognitive, auditory, and emotional factors that influence CI success. To improve the predictive accuracy of ML models, future studies should incorporate a broader set of features, including demographic variables (e.g., ethnicity/race), socioeconomic status, electrode-neural interface, and cognitive factors to develop the model. This approach could enhance predictions by better reflecting the individual variability seen in CI recipients. In this context, gradient boosting decision tree algorithms should be explored, as they effectively integrate data from multiple sources, perform well with small datasets, and handle categorical variables. Unlike deep learning approaches, which require large datasets and primarily work with numerical features, or require one-hot encoding to handle categorical variables. Notably, CatBoost—an open-source algorithm for gradient boosting on decision trees—could be a valuable tool for predicting CI outcomes as it can incorporate diverse clinical, imaging, and demographic data48,49 without extensive preprocessing, making it well-suited for capturing the multifaceted nature of CI success.

ML models, particularly in the context of predicting CI outcomes, are often referred to as “black box” models. While these models can deliver accurate predictions, the specific variables that contribute to those predictions are typically not transparent or easily interpretable. In clinical practice, this lack of interpretability can create significant challenges. For clinicians, understanding which variables most influence an outcome prediction is crucial for making informed decisions about patient care. For instance, if an ML model predicts that a particular patient will have a favorable outcome with a CI, clinicians need to know which factors—such as age, duration of deafness, or post-operative cognitive function—are driving that prediction. Without this understanding, clinicians might be hesitant to fully use model recommendations, potentially limiting its adoption in real-world clinical settings. One effective way to address this issue is by applying explainable AI (XAI) techniques, such as SHAP (Shapley Additive Explanations) analysis, to improve model transparency50. Calculating the Shapley values for each feature can identify each input variable’s contribution to a specific prediction, providing insights into how different factors interact within the model. For example, in the context of CI outcomes, SHAP can help identify which variables—such as the age at implantation, the duration of auditory deprivation, or neuroimaging features—are most important in predicting speech recognition performance post-implantation. By understanding the relative importance of each variable, clinicians can better assess the unique profile of each patient and adjust CI programming accordingly. For example, suppose the model indicates that preoperative speech perception scores are highly influential in predicting postoperative outcomes for a specific patient. In that case, clinicians may prioritize targeted auditory training to optimize performance in that area. Furthermore, the transparency of SHAP allows for continuous refinement of ML models. If a specific variable is consistently found to have minimal impact on predictions, it could be excluded in future iterations of the model, improving both its efficiency and interpretability. This ability to both explain and improve ML predictions will be essential as the application of ML in CI programming continues to evolve. While SHAP has been applied in the hearing sciences literature to interpret ML models51, only a few studies, such as Crowson et al.30, utilized Shapley analysis to assess the contributions of individual variables to predictions of CI outcomes. For applicability and transparency, future studies should apply XAI techniques to determine the variables that drive predictions in CI outcomes.

An important finding of this review is the lack of studies exploring the application of ML algorithms in the programming of CIs, particularly for pediatric populations. While the reviewed studies predominantly focused on predicting postoperative outcomes, there is a noticeable absence of research aimed at optimizing the CI fitting process, especially for infants and young children who cannot provide verbal feedback. Clinicians often rely on indirect means, or trial and error to adjust CI settings, which can be time-consuming and less precise.

To provide reliable and functional electrical hearing, CIs must be tailored to meet the individual needs of each patient52. This process is dynamic, as intracochlear conditions change over time. For this reason, exploring the use of ML algorithms to determine behavioral thresholds (T-levels) and maximum comfortable hearing levels (M-levels) for each electrode could improve the CI fitting processes, especially for pediatric users who cannot provide verbal feedback. To enable real-time CI adjustments, it is essential to leverage postoperative data effectively. Modern CIs allow for the monitoring of voltage changes within the cochlear electrode interface53, providing data such as intracochlear impedance measurements and eCAPs54. These electrode-nerve interface markers should be explored as input features to train ML models capable of making real-time fitting adjustments. Future research should focus on integrating ML-driven approaches into practical clinical applications, ensuring that real-time CI adjustments become a viable tool for clinicians.

Incorporating ML into the CI programming process could reduce the reliance on educated guesses and provide a more data-driven approach to optimizing device settings. Most models discussed here primarily focus on predictive analytics, which analyzes data to predict trends. Predictive approaches are valuable but cannot provide specific guidance on decision-making. In contrast, prescriptive analytics determines the best actions to take by recommending optimal solutions for anticipated scenarios. That is, what should be done to achieve the best possible outcome? This approach is useful in scenarios with large and diverse datasets, where it can maximize outcomes and streamline decision-making processes. Prescriptive analytics is successfully applied in areas like resource allocation and risk management in business55. However, its application in CI programming remains unexplored.

The most formidable challenge remains making predictions at the individual case level. To improve the accuracy of these predictions, several clinical strategies can be employed. One effective approach would be to implement a personalized, data-driven framework that incorporates not only traditional clinical measures but also cognitive assessments, neuroimaging data, and insights gleaned from CI data logging56. For example, brain plasticity, as reflected in specific brain regions such as the superior and middle temporal gyri, has been shown to correlate with improved CI outcomes16,21. Combining these neuroanatomical insights with patient-specific variables—such as age at implantation, duration of auditory deprivation, and cognitive function, could allow ML models to provide more tailored predictions. Additionally, continuous post-implantation monitoring, incorporating both auditory performance data and patient-reported outcomes, could provide valuable feedback to the ML models. This feedback could allow for real-time updates to predictions, enabling online adjustments to CI settings and optimizing device performance over time.

In conclusion, this systematic review underscores the promise and challenges of using ML techniques for predicting CI outcomes. While ML has demonstrated the potential to revolutionize CI outcome prediction, its clinical application requires overcoming several challenges, including small sample sizes, model overfitting, and limited generalizability. Future research should prioritize larger, more diverse datasets, incorporate advanced ML techniques such as ensembles. The reduced availability of data can be improved by promoting transparency and the creation of public repositories of a broad range of predictive features, such as image-based neural features and sociological and physiological variables, to capture the complexity of CI outcomes. Addressing these limitations would have a clinical impact, as it would lead to more personalized interventions, ultimately improving the long-term performance of CI users. Ultimately, integrating ML into clinical practice can empower CI teams to make more informed, objective decisions, improving patient care and quality of life for all CI recipients—including hearing-impaired infants who undergo implantation within the first year of life.

Methods

Protocol and registration

The review protocol followed the Preferred Reporting Items for Systematic Review and Meta-Analysis (PRISMA), Fig. 1. The review included studies with both children and adult CI users.

Eligibility criteria

This systematic review included studies that applied ML methods to predict the outcome following CI. It included studies with children and adult populations.

Data sources and search strategy

Five scientific databases, PubMed, Embase, Scopus, CINAHL, and CENTRAL, were searched to identify articles published between 2000 and 2024 that investigated the application of ML to predict outcomes after CI. The search was carried out between 03/01/2024 to 04/01/2024. The keywords used were artificial intelligence, artificial neural network, cochlear implantation, cochlear implants, cognition, deep learning, language outcome, machine learning, speech outcome, speech perception, speech intelligibility, and speech production.

Study selection

Duplicates were removed after merging the results from the five databases (PubMed, Embase, Scopus, CINAHL, and CENTRAL). Two authors then independently screened titles and abstracts to identify relevant studies.

Data extraction and management

A structured data collection form was developed to capture study characteristics, including author(s), publication year, study methods, outcome variables, specific machine learning algorithms employed, results obtained, and main findings.

Data availability

The data used in this systematic review are derived from publicly available peer-reviewed journal articles. All studies included in the review can be accessed through the following databases: PubMed, Embase, Scopus, CINAHL, and CENTRAL. The full list of included studies and their respective references is already reported. For transparency and reproducibility, all search strategies and inclusion/exclusion criteria are provided in the Methods section.

References

Deep,N. L. et al. Cochlear Implantation in Infants: Evidence of Safety. Trends Hear 25, https://doi.org/10.1177/23312165211014695 (2021).

Välimaa, T. T. et al. Spoken language skills in children with bilateral hearing aids or bilateral cochlear implants at the age of three years. Ear Hear. 43, 220–233 (2022).

Culbertson, S. R. et al. Younger age at cochlear implant activation results in improved auditory skill development for children with congenital deafness. J. Speech, Lang., hearing Res.: JSLHR 65, 3539–3547 (2022).

Boisvert, I. Cochlear implantation outcomes in adults: A scoping review. PLoS One 15, e0232421 (2020).

Tropitzsch, A. et al. Variability in cochlear implantation outcomes in a large German cohort with a genetic etiology of hearing loss”. Ear Hear 44, 1464–1484 (2023).

Lazard, D. S. et al. pre-, per- and postoperative factors affecting performance of post linguistically deaf adults using cochlear implants: a new conceptual model overtime. PLoSOne 7, e48739 (2012).

Hasan, Z. et al. Systematic review of intracochlear measurements and effect on postoperative auditory outcomes after cochlear implant surgery. Otol. Neurotol. 45, e1–e17 (2024).

Kraaijenga, V. J. et al. Factors that influence outcomes in cochlear implantation in adults, based on patient-related characteristics - a retrospective study. Clin. Otolaryngol. 41, 585–592 (2016).

Beckers, L. et al. Exploring neurocognitive factors and brain activation in adult cochlear implant recipients associated with speech perception outcomes-A scoping review, Front. Neurosci. 17, 1046669 (2023).

Nikki, P. et al. Variability in clinicians’ prediction accuracy for outcomes of adult cochlear implant users. Int J. Audio. 63, 613–621 (2024).

Moberly, A. C. The enigma of poor performance by adults with cochlear implants. Otol. Neurotol. 37, 1522–1528 (2016).

Ngiam, K. Y. & Khor, I. W. Big data and machine learning algorithms for health-care delivery. Lancet Oncol. 20, e262–e273 (2019).

Azzolina, D. et al. Machine learning in clinical and epidemiological research: Isn’t it time for biostatisticians to work on it? Epidemiol. Biostat. Public Health, 16, https://doi.org/10.2427/13245 (2019).

Verlato, G. et al. Short-term and long-term risk factors in gastric cancer. World J. Gastroenterol. 21, 6434–6443 (2015).

Kim, H. et al. Cochlear implantation in post lingually deaf adults is time-sensitive towards positive outcome: prediction using advanced machine learning techniques. Sci. Rep. 8, 18004 (2018).

Tan, L. et al. A semi-supervised Support Vector Machine model for predicting the language outcomes following cochlear implantation based on pre-implant brain fMRI imaging. Brain Behav. 5, e00391 (2015).

Shafieibavani, E. et al. Predictive models for cochlear implant outcomes: Performance, generalizability, and the impact of cohort size. Trends Hear 25, 23312165211066174 (2021).

Wells, G. A. et al. The Newcastle-Ottawa Scale (NOS) for assessing the quality of nonrandomised studies in meta-analyses. http://www.ohri.ca/programs/clinical_epidemiology/oxford.asp (2000).

Feng, G. et al. Neural preservation underlies speech improvement from auditory deprivation in young cochlear implant recipients. Proc. Natl Acad. Sci. USA 115, E1022–E1031 (2018).

Song, Q. et al. Functional brain connections identify sensorineural hearing loss and predict the outcome of cochlear implantation. Front. Comput. Neurosci. 16, 825160 (2022).

Sun, Z. et al. Cortical reorganization following auditory deprivation predicts cochlear implant performance in postlingually deaf adults. Hum. Brain Mapp. 42, 233–244 (2021).

Kyong, J. et al. Cross-modal cortical activity in the brain can predict cochlear implantation outcoe in adults: A machine learning study. J. Int. Adv. Otol. 17, 380–386 (2021).

Lu, S. et al. Machine learning-based prediction of the outcomes of cochlear implantation in patients with cochlear nerve deficiency and normal cochlea: a 2-year follow-up of 70 children. Front Neurosci. 16, 895560 (2022).

Skidmore, J. et al. Prediction of the functional status of the cochlear nerve in individual cochlear implant users using machine learning and electrophysiological measures. Ear Hear 42, 180–192 (2021).

Abousetta, A. et al. A scoring system for cochlear implant candidate selection using artificial intelligence. Hear. Balance Commun. 21, 114–121 (2023).

Byeon, H., Developing a model for predicting the speech intelligibility of South Korean children with cochlear implantation using a random forest algorithm, Int. J. Adv. Comput. Sci. Appl. 9, https://doi.org/10.14569/IJACSA.2018.091113 (2018).

Byeon, H. Evaluating the accuracy of models for predicting the speech acceptability for children with cochlear implants, Int. J. Adv. Comput. Sci. Appl. 12, https://doi.org/10.14569/IJACSA.2021.0120203 (2021).

Ramos-Miguel, A. et al. Use of data mining to predict significant factors and benefits of bilateral cochlear implantation. Eur. Arch. Otorhinolaryngol. 272, 3157–3162 (2015).

Guerra-Jiménez, G. Cochlear implant evaluation: prognosis estimation by data mining system. J. Int. Adv. Otol. 12, 1–7 (2016).

Crowson, G. et al. Predicting postoperative cochlear implant performance using supervised machine learning. Otol. Neurotol. 41, e1013–e1023 (2020).

Zeitler et al. Predicting acoustic hearing preservation following cochlear implant surgery using machine learning. Laryngoscope 134, 926–936 (2024).

Robinson, K. et al. Measuring patient benefit from otorhinolaryngological surgery and therapy. Ann. Otol., Rhinol., Laryngol. 105, 415–422 (1996).

Faber, C. E. & Grontved, A. M. Cochlear implantation and change in quality of life. Acta Otolaryngol Suppl 543, 151–153 (2000).

Goehring, T. et al. Speech enhancement based on neural networks improves speech intelligibility in noise for cochlear implant users. Hear. Res. 344, 183–194 (2017).

Grimm, R. et al. Simulating speech processing with cochlear implants: How does channel interaction affect learning in neural networks?. PloS One 14, e0212134 (2019).

Lai, Richard Lee, et al. Audio-visual speech enhancement using self-supervised learning to improve speech intelligibility in cochlear implant simulations. arXiv preprint arXiv:2307.07748 (2023).

Borjigin, A. et al. Deep learning restores speech intelligibility in multi-Talker interference for Cochlear implant users. bioRxiv (Cold Spring Harbor Laboratory). https://doi.org/10.1101/2022.08.25.504678 (2022).

Pisoni, D. B. et al. Three challenges for future research on cochlear implants. World J. Otorhinolaryngol. Head. Neck Surg. 3, 240–254 (2017).

Bartholomew, R. A. et al. On the difficulty predicting word recognition performance after cochlear implantation. Otol. Neurotol. 45, e393–e399 (2024).

Higgins, J. P. Nonlinear systems in medicine. Yale J. Biol. Med. 75, 247–260 (2003).

Lo, A. et al. Why significant variables aren’t automatically good predictors. Proc. Natl Acad. Sci. 112, 13892–13897 (2015).

Crowson, M. G. Machine learning and cochlear implantation-a structured review of opportunities and challenges. Otol. Neurotol. 41, e36–e45 (2020).

Bernhard, N. et al. Duration of deafness impacts auditory performance after cochlear implantation: A meta-analysis. Laryngosc. Investig. Otolaryngol. 6, 291–301 (2021).

Tobey, E. A. et al. Influence of implantation age on school-age language performance in pediatric cochlear implant users. Int J. Audio. 52, 219–229 (2013).

Wick, C. C. et al. Hearing and quality-of-life outcomes after cochlear implantation in adult hearing aid users 65 years or older: a secondary analysis of a nonrandomized clinical trial. JAMA Otolaryngol. Head. Neck Surg. 146, 925–932 (2020).

Pisoni, D. B. Cognitive factors and cochlear implants: some thoughts on perception, learning, and memory in speech perception. Ear Hear 21, 70–78 (2000).

Mumuni, A. & Mumuni, F. Data augmentation: A comprehensive survey of modern approaches. Array 16, 100258 (2022).

Prokhorenkova, L., Gusev, G., Vorobev, A., Dorogush, A. V. & Gulin, A. CatBoost: Unbiased boosting with categorical features. NeurIPS, (2018).

Dorogush, A. V., Ershov, V. & Gulin, A. CatBoost: Gradient boosting with categorical features support. Workshop on ML Systems at NIPS, (2017).

Lundberg, S. M. & Lee, S. I. A Unified Approach to Interpreting Model Predictions. In Advances in Neural Information Processing Systems, (2017).

Balan, J. R., Rodrigo, H., Saxena, U. & Mishra, S. K. Explainable machine learning reveals the relationship between hearing thresholds and speech-in-noise recognition in listeners with normal audiograms. J. Acoust. Soc. Am. 154, 2278–2288 (2023).

Zeng, F. G., Rebscher, S., Harrison, W., Sun, X. & Feng, H. Cochlear Implants: System Design, Integration, and Evaluation. IEEE Rev. Biomed. Eng. 1, 115–142 (2008).

Tykocinski, M., Cohen, L. T. & Cowan, R. S. Measurement and analysis of access resistance and polarization impedance in cochlear implant recipients. Otol. Neurotol. 26, 948–956 (2005).

de Vos, J. J. et al. Use of electrically evoked compound action potentials for cochlear implant fitting: A systematic review. Ear. Hear. 39, 401–411 (2018).

Lepenioti, K., Bousdekis, A., Apostolou, D. & Mentzas, G. Prescriptive analytics: Literature review and research challenges. Int. J. Inf. Manag. 50, 57–70 (2020).

Lindquist, N. R. et al. Early datalogging predicts cochlear implant performance: building a recommendation for daily device usage. Otol. Neurotol. 44, e479–e485 (2023).

Acknowledgements

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Authors and Affiliations

Contributions

A.N., and S.M. conceptualized and designed the study; A.N., and P.A. coordinated the data selection and extraction. A.N., S.M., and P.A. drafted the manuscript; and all authors contributed to critical revisions of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Nair, A.P.S., Mishra, S.K. & Alba Diaz, P.A. A systematic review of machine learning approaches in cochlear implant outcomes. npj Digit. Med. 8, 411 (2025). https://doi.org/10.1038/s41746-025-01733-9

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41746-025-01733-9